Integrating AI Literacy with the TPB-TAM Framework to Explore Chinese University Students’ Adoption of Generative AI

Abstract

1. Introduction

2. Literature Review

2.1. Theoretical Model Evolution

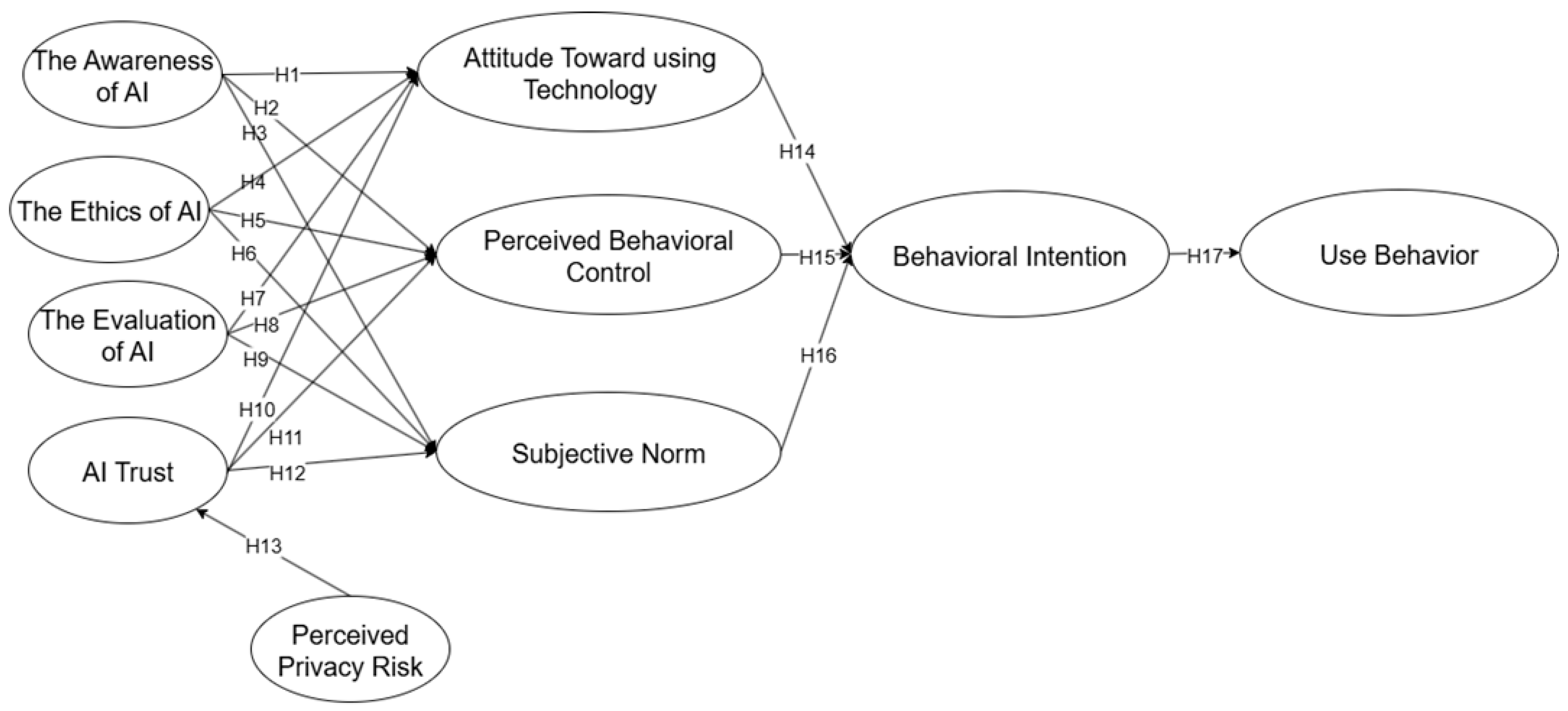

2.2. Model Development

2.2.1. AI Literacy

2.2.2. AI Trust and Perceived Privacy Risk

2.2.3. Behavioral Motivation-Attitude, Support, and Subjective Norms

2.2.4. Behavioral Intentions and Use Behavior

3. Methodology

3.1. Survey Design

3.2. Sampling and Data Collection Procedures

3.3. Data Analysis

4. Results

4.1. Validity and Reliability Tests

4.2. Structural Model Evaluation

5. Discussion

5.1. TPB

5.2. The Influence of AI Literacy on GenAI Adoption

5.3. TAM

5.4. Group Difference Analysis

6. Theoretical Contributions and Practical Implications

6.1. Theoretical Contributions

6.2. Practical Implications

7. Limitations and Future Prospects

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Achmadi, H., Samuel, S., & Patria, D. (2025). The influence of AI literacy, subjective norm, attitude toward using AI, perceived usefulness of AI and confidence of learning AI toward behavior intention. International Journal of Educational Research and Development, 7(2), 32–39.

- Ajzen, I. (1985). From intentions to actions: A theory of planned behavior. In J. Kuhl, & J. Beckmann (Eds.), Action Control (pp. 11–39). Springer.

- Ajzen, I. (1991). The theory of planned behavior. Organizational Behavior and Human Decision Processes, 50(2), 179–211.

- Ajzen, I. (2002). Perceived behavioral control, self-efficacy, locus of control, and the theory of planned behavior. Journal of Applied Social Psychology, 32(4), 665–683.

- Al-Emran, M., Abu-Hijleh, B., & Alsewari, A. A. (2024). Exploring the effect of Generative AI on social sustainability through integrating AI attributes, TPB, and T-EESST: A deep learning-based hybrid SEM-ANN approach. IEEE Transactions on Engineering Management, 71, 14512–14524.

- Al-Emran, M., Al-Maroof, R., Al-Sharafi, M. A., & Arpaci, I. (2022). What impacts learning with wearables? An integrated theoretical model. Interactive Learning Environments, 30(10), 1897–1917.

- Antonakis, J., Bendahan, S., Jacquart, P., & Lalive, R. (2010). On making causal claims: A review and recommendations. The Leadership Quarterly, 21(6), 1086–1120.

- Beldad, A., De Jong, M., & Steehouder, M. (2010). How shall I trust the faceless and the intangible? A literature review on the antecedents of online trust. Computers in Human Behavior, 26(5), 857–869.

- Brewer, P. R., Bingaman, J., Paintsil, A., Wilson, D. C., & Dawson, W. (2022). Media use, interpersonal communication, and attitudes toward artificial intelligence. Science Communication, 44(5), 559–592.

- Calvani, A., Fini, A., & Ranieri, M. (2009). Assessing digital competence in secondary education—Issues, models and instruments. USA. Available online: https://flore.unifi.it/handle/2158/328911?mode=complete (accessed on 13 August 2025).

- Chai, C. S., Wang, X., & Xu, C. (2020). An extended theory of planned behavior for the modelling of Chinese secondary school students’ intention to learn artificial intelligence. Mathematics, 8(11), 2089.

- Chan, C. K. Y., & Hu, W. (2023). Students’ voices on generative AI: Perceptions, benefits, and challenges in higher education. International Journal of Educational Technology in Higher Education, 20(1), 43.

- Chen, Y., & Esmaeilzadeh, P. (2024). Generative AI in medical practice: In-depth exploration of privacy and security challenges. Journal of Medical Internet Research, 26, e53008.

- Cheon, J., Lee, S., Crooks, S. M., & Song, J. (2012). An investigation of mobile learning readiness in higher education based on the theory of planned behavior. Computers & Education, 59(3), 1054–1064.

- Choudhury, A., & Shamszare, H. (2023). Investigating the impact of user trust on the adoption and use of ChatGPT: Survey analysis. Journal of Medical Internet Research, 25, e47184.

- Colquitt, J. A., Scott, B. A., & LePine, J. A. (2007). Trust, trustworthiness, and trust propensity: A meta-analytic test of their unique relationships with risk taking and job performance. Journal of Applied Psychology, 92(4), 909–927.

- Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319.

- Dong, W., Pan, D., & Kim, S. (2024). Exploring the integration of IoT and generative AI in English language education: Smart tools for personalized learning experiences. Journal of Computational Science, 82, 102397.

- Dong, Y., Hou, J., Zhang, N., & Zhang, M. (2020). Research on how human intelligence, consciousness, and cognitive computing affect the development of artificial intelligence. Complexity, 2020, 1680845.

- Dwivedi, Y. K., Hughes, L., Ismagilova, E., Aarts, G., Coombs, C., Crick, T., Duan, Y., Dwivedi, R., Edwards, J., Eirug, A., Galanos, V., Ilavarasan, P. V., Janssen, M., Jones, P., Kar, A. K., Kizgin, H., Kronemann, B., Lal, B., Lucini, B., & Williams, M. D. (2021). Artificial Intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. International Journal of Information Management, 57, 101994.

- Fishbein, M., & Ajzen, I. (2010). Predicting and changing behavior (1st ed.). Psychology Press.

- Floridi, L., Cowls, J., Beltrametti, M., Chatila, R., Chazerand, P., Dignum, V., Luetge, C., Madelin, R., Pagallo, U., Rossi, F., Schafer, B., Valcke, P., & Vayena, E. (2018). AI4People—An ethical framework for a good AI society: Opportunities, risks, principles, and recommendations. Minds and Machines, 28(4), 689–707.

- Fong, L., & Law, R. (2013). Hair, J. F. Jr., Hult, G. T. M., Ringle, C. M., Sarstedt, M. (2014). A primer on partial least squares structural equation modeling (PLS-SEM). Sage Publications. ISBN: 978-1-4522-1744-4. 307 pp. European Journal of Tourism Research, 6(2), 211–213.

- Glikson, E., & Woolley, A. W. (2020). Human trust in artificial intelligence: Review of empirical research. Academy of Management Annals, 14(2), 627–660.

- Gold, A. H., Malhotra, A., & Segars, A. H. (2001). Knowledge management: An organizational capabilities perspective. Journal of Management Information Systems, 18(1), 185–214.

- Grassini, S. (2023). Development and validation of the AI attitude scale (AIAS-4): A brief measure of general attitude toward artificial intelligence. Frontiers in Psychology, 14, 1191628.

- Gumusel, E. (2025). A literature review of user privacy concerns in conversational chatbots: A social informatics approach: An annual review of information science and technology (ARIST) paper. Journal of the Association for Information Science and Technology, 76(1), 121–154.

- Hair, J. F., Howard, M. C., & Nitzl, C. (2020). Assessing measurement model quality in PLS-SEM using confirmatory composite analysis. Journal of Business Research, 109, 101–110.

- Hair, J. F., Ringle, C. M., & Sarstedt, M. (2011). PLS-SEM: Indeed a silver bullet. Journal of Marketing Theory and Practice, 19(2), 139–152.

- Hair, J. F., Risher, J. J., Sarstedt, M., & Ringle, C. M. (2019). When to use and how to report the results of PLS-SEM. European Business Review, 31(1), 2–24.

- Hair, J. F., Jr., & Sarstedt, M. (2019). Factors versus composites: Guidelines for choosing the right structural equation modeling method. Project Management Journal, 50(6), 619–624.

- Henseler, J., Ringle, C. M., & Sarstedt, M. (2015). A new criterion for assessing discriminant validity in variance-based structural equation modeling. Journal of the Academy of Marketing Science, 43(1), 115–135.

- Ivanov, S., Soliman, M., Tuomi, A., Alkathiri, N. A., & Al-Alawi, A. N. (2024). Drivers of generative AI adoption in higher education through the lens of the Theory of Planned Behaviour. Technology in Society, 77, 102521.

- Katsantonis, A., & Katsantonis, I. G. (2024). University students’ attitudes toward artificial intelligence: An exploratory study of the cognitive, emotional, and behavioural dimensions of AI attitudes. Education Sciences, 14(9), 988.

- Knox, J. (2020). Artificial intelligence and education in China. Learning, Media and Technology, 45(3), 298–311.

- Lo, C. K., Hew, K. F., & Jong, M. S. (2024). The influence of ChatGPT on student engagement: A systematic review and future research agenda. Computers & Education, 219, 105100.

- Ma, D., Akram, H., & Chen, H. (2024). Artificial intelligence in higher education: A cross-cultural examination of students’ behavioral intentions and attitudes. The International Review of Research in Open and Distributed Learning, 25(3), 134–157.

- Ministry of Education of the People’s Republic of China. (2025, May 16). China’s smart education white paper. Ministry of Education of the People’s Republic of China. Available online: http://www.moe.gov.cn (accessed on 9 June 2025).

- Mittelstadt, B. D., Allo, P., Taddeo, M., Wachter, S., & Floridi, L. (2016). The ethics of algorithms: Mapping the debate. Big Data & Society, 3(2), 2053951716679679.

- Moravec, V., Hynek, N., Gavurova, B., & Kubak, M. (2024). Everyday artificial intelligence unveiled: Societal awareness of technological transformation. Oeconomia Copernicana, 15(2), 367–406.

- Morris, M. G., & Venkatesh, V. (2000). Age differences in technology adoption decisions: Implications for a changing work force. Personnel Psychology, 53(2), 375–403.

- Nazaretsky, T., Mejia-Domenzain, P., Swamy, V., Frej, J., & Käser, T. (2025). The critical role of trust in adopting AI-powered educational technology for learning: An instrument for measuring student perceptions. Computers and Education: Artificial Intelligence, 8, 100368.

- Ng, D. T. K., Leung, J. K. L., Chu, K. W. S., & Qiao, M. S. (2021). AI literacy: Definition, teaching, evaluation and ethical issues. Proceedings of the Association for Information Science and Technology, 58(1), 504–509.

- Oliveira, T., Thomas, M., Baptista, G., & Campos, F. (2016). Mobile payment: Understanding the determinants of customer adoption and intention to recommend the technology. Computers in Human Behavior, 61, 404–414.

- Pavlou, P. A. (2003). Consumer acceptance of electronic commerce: Integrating trust and risk with the technology acceptance model. International Journal of Electronic Commerce, 7(3), 101–134.

- Regmi, P. R., Waithaka, E., Paudyal, A., Simkhada, P., & van Teijlingen, E. (2016). Guide to the design and application of online questionnaire surveys. Nepal Journal of Epidemiology, 6(4), 640–644.

- Ribeiro, M. T., Singh, S., & Guestrin, C. (2016, August 13–17). “Why should I trust you?”: Explaining the predictions of any classifier. The 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (pp. 1135–1144), San Francisco, CA, USA.

- Sarstedt, M., Ringle, C. M., Smith, D., Reams, R., & Hair, J. F. (2014). Partial least squares structural equation modeling (PLS-SEM): A useful tool for family business researchers. Journal of Family Business Strategy, 5(1), 105–115.

- Sergeeva, O. V., Zheltukhina, M. R., Shoustikova, T., Tukhvatullina, L. R., Dobrokhotov, D. A., & Kondrashev, S. V. (2025). Understanding higher education students’ adoption of generative AI technologies: An empirical investigation using UTAUT2. Contemporary Educational Technology, 17(2), ep571.

- Shang, X. (2025). Legal orientation of digital behavioral traces and the protection of rights and interests: Addressing the personal information protection challenges for AI platforms such as DeepSeek. Jinan Journal (Philosophy & Social Sciences), 47(2), 70–91.

- Shin, D. (2020). User perceptions of algorithmic decisions in the personalized AI system: Perceptual evaluation of fairness, accountability, transparency, and explainability. Journal of Broadcasting & Electronic Media, 64(4), 541–565.

- Shin, D. (2021). The effects of explainability and causability on perception, trust, and acceptance: Implications for explainable AI. International Journal of Human-Computer Studies, 146, 102551.

- Shin, D., & Park, Y. J. (2019). Role of fairness, accountability, and transparency in algorithmic affordance. Computers in Human Behavior, 98, 277–284.

- Singh, A. K., Kiriti, M. K., Singh, H., & Shrivastava, A. (2025). Education AI: Exploring the impact of artificial intelligence on education in the digital age. International Journal of System Assurance Engineering and Management, 16(4), 1424–1437.

- Stein, J.-P., Messingschlager, T., Gnambs, T., Hutmacher, F., & Appel, M. (2024). Attitudes towards AI: Measurement and associations with personality. Scientific Reports, 14(1), 2909.

- Streukens, S., & Leroi-Werelds, S. (2016). Bootstrapping and PLS-SEM: A step-by-step guide to get more out of your bootstrap results. European Management Journal, 34(6), 618–632.

- Taylor, S., & Todd, P. A. (1995). Understanding information technology usage: A test of competing models. Information Systems Research, 6(2), 144–176.

- Teo, T. (2016). Modelling Facebook usage among university students in Thailand: The role of emotional attachment in an extended technology acceptance model. Interactive Learning Environments, 24(4), 745–757.

- Teo, T. S. H., Srivastava, S. C., & Jiang, L. (2008). Trust and electronic government success: An empirical study. Journal of Management Information Systems, 25(3), 99–132.

- U.S. Department of Education, Office of Educational Technology. (2023). Artificial intelligence and the future of teaching and learning: Insights and recommendations. U.S. Department of Education. Available online: https://tech.ed.gov (accessed on 15 September 2025).

- Van Den Berg, G., & Du Plessis, E. (2023). ChatGPT and Generative AI: Possibilities for its contribution to lesson planning, critical thinking and openness in teacher education. Education Sciences, 13(10), 998.

- Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425–478.

- Venkatesh, V., Thong, J. Y. L., & Xu, X. (2012). Consumer acceptance and use of information technology: Extending the unified theory of acceptance and use of technology. MIS Quarterly, 36(1), 157.

- Vorm, E. S., & Combs, D. J. Y. (2022). Integrating transparency, trust, and acceptance: The intelligent systems technology acceptance model (ISTAM). International Journal of Human–Computer Interaction, 38(18–20), 1828–1845.

- Wang, B., Rau, P.-L. P., & Yuan, T. (2023). Measuring user competence in using artificial intelligence: Validity and reliability of artificial intelligence literacy scale. Behaviour & Information Technology, 42(9), 1324–1337.

- Wang, C., Wang, H., Li, Y., Dai, J., Gu, X., & Yu, T. (2025). Factors influencing university students’ behavioral intention to use generative artificial intelligence: Integrating the theory of planned behavior and AI literacy. International Journal of Human–Computer Interaction, 41(11), 6649–6671.

- Wei, J., Jia, K., Zeng, R., He, Z., Qiu, L., Yu, W., Tang, M., Huang, H., Zeng, X., Zhang, H., Zheng, L., Zhang, H., Zhang, X., Zhao, J., Fu, H., & Jiang, Y. (2025). Artificial intelligence innovation development and governance transformation under the DeepSeek breakthrough effect. E-Government, 3, 2–39.

- Wold, S., Trygg, J., Berglund, A., & Antti, H. (2001). Some recent developments in PLS modeling. Chemometrics and Intelligent Laboratory Systems, 58(2), 131–150.

- Wright, K. B. (2005). Researching internet-based populations: Advantages and disadvantages of online survey research, online questionnaire authoring software packages, and web survey services. Journal of Computer-Mediated Communication, 10(3), JCMC1034.

- Yasmin Khairani Zakaria, N., Hashim, H., & Azhar Jamaludin, K. (2025). Exploring the impact of AI on critical thinking development in ESL: A systematic literature review. Arab World English Journal, 1, 330–347.

- Zhang, B., & Dafoe, A. (2019). Artificial intelligence: American attitudes and trends. SSRN Electronic Journal.

- Zhang, C., Hu, M., Wu, W., Kamran, F., & Wang, X. (2025). Unpacking perceived risks and AI trust influences pre-service teachers’ AI acceptance: A structural equation modeling-based multi-group analysis. Education and Information Technologies, 30(2), 2645–2672.

- Zhang, Y., Wu, M., Tian, G. Y., Zhang, G., & Lu, J. (2021). Ethics and privacy of artificial intelligence: Understandings from bibliometrics. Knowledge-Based Systems, 222, 106994.

| Construct | Code | Question | Source |
|---|---|---|---|
| AIT | AIT1 | The functionality of GenAI is reliable. | (Al-Emran et al., 2022) |
| | AIT2 | GenAI can be trusted. | |
| | AIT3 | I believe it is feasible to use GenAI in learning activities. | |
| ATT | ATT1 | GenAI makes learning and working more interesting. | (T. Teo, 2016; Venkatesh et al., 2003) |
| | ATT2 | I can accept the idea of using GenAI. | |
| | ATT3 | Using GenAI is a good idea. | |
| AWA | AWA1 | I can distinguish between GenAI systems and non-GenAI systems. | (Calvani et al., 2009; C. Wang et al., 2025) |
| | AWA2 | I know how GenAI systems can help me. | |
| | AWA3 | I can identify the GenAI technologies adopted in the applications and products I use. | |
| BI | BI1 | In the future, I plan to continue using GenAI. | (Taylor & Todd, 1995; Venkatesh et al., 2003) |
| | BI2 | I will keep trying to use GenAI in my daily life. | |
| | BI3 | I plan to continue using GenAI frequently. | |
| ETH | ETH1 | I always follow ethical principles when using GenAI. | (Calvani et al., 2009; C. Wang et al., 2025) |
| | ETH2 | I am very concerned about privacy and information security issues when using GenAI. | |
| | ETH3 | I try not to abuse GenAI. | |
| EVA | EVA1 | I can evaluate the functions and limitations of GenAI systems after using them for a period of time. | |
| | EVA2 | I can choose the appropriate solutions from the various solutions provided by GenAI systems. | |
| | EVA3 | I can select the most suitable GenAI system for a specific task from various GenAI systems. | |
| PBC | PBC1 | I have control over using GenAI. | (Morris & Venkatesh, 2000) |
| | PBC2 | I have the resources necessary to use GenAI. | |
| | PBC3 | I have the knowledge necessary to use GenAI. | |
| PPR | PPR1 | During my personal learning process and in future teaching, I am worried that GenAI will collect too much of my personal information. | (C. Zhang et al., 2025) |
| | PPR2 | During my personal learning process and in future teaching, GenAI will use my personal information for other purposes without my authorization. | |
| | PPR3 | During my personal learning process and in future teaching, GenAI will share my personal information without my authorization. | |
| SN | SN1 | My parents support me in learning how to use GenAI. | (Venkatesh et al., 2012; C. Wang et al., 2025) |
| | SN2 | My teacher believes it is necessary to learn how to use GenAI. | |
| | SN3 | My classmates think it is necessary to learn how to use GenAI. | |
| | SN4 | Most of the people I know think I should know how to use GenAI. | |

| Attribute | Category | Frequency | Percentage (%) |
|---|---|---|---|
| Gender | Male | 242 | 24.06 |
| | Female | 764 | 75.94 |
| Age | 18–23 years old | 909 | 90.36 |
| | 24–29 years old | 86 | 8.55 |
| | 30–35 years old | 6 | 0.60 |
| | ≥36 years old | 5 | 0.50 |
| Major | Liberal Arts | 671 | 66.70 |
| | Science | 106 | 10.54 |
| | Engineering | 159 | 15.81 |
| | Others | 70 | 6.96 |
| Education | Associate’s degree | 217 | 21.57 |
| | Bachelor’s degree | 673 | 66.90 |
| | Master’s degree | 96 | 9.54 |
| | Doctoral degree | 23 | 2.29 |
| Region | West China | 114 | 11.33 |
| | East China | 168 | 16.70 |
| | Central China | 686 | 68.19 |
| | South China | 17 | 1.69 |
| | North China | 21 | 2.09 |

| Item | AIT | ATT | AWA | BI | ETH | EVA | PBC | PPR | SN |
|---|---|---|---|---|---|---|---|---|---|
| AIT1 | 0.858 | 0.375 | 0.429 | 0.364 | 0.346 | 0.412 | 0.431 | 0.301 | 0.438 |
| AIT2 | 0.822 | 0.293 | 0.357 | 0.269 | 0.259 | 0.374 | 0.346 | 0.269 | 0.394 |
| AIT3 | 0.832 | 0.527 | 0.373 | 0.448 | 0.481 | 0.512 | 0.471 | 0.318 | 0.584 |
| ATT1 | 0.452 | 0.922 | 0.507 | 0.512 | 0.376 | 0.456 | 0.520 | 0.209 | 0.439 |
| ATT2 | 0.456 | 0.916 | 0.437 | 0.519 | 0.427 | 0.436 | 0.520 | 0.211 | 0.515 |
| ATT3 | 0.494 | 1.000 | 0.515 | 0.561 | 0.436 | 0.485 | 0.566 | 0.228 | 0.518 |
| AWA1 | 0.299 | 0.283 | 0.745 | 0.220 | 0.161 | 0.343 | 0.451 | 0.181 | 0.293 |
| AWA2 | 0.457 | 0.551 | 0.849 | 0.476 | 0.385 | 0.557 | 0.654 | 0.215 | 0.453 |
| AWA3 | 0.330 | 0.354 | 0.820 | 0.321 | 0.201 | 0.455 | 0.538 | 0.234 | 0.339 |
| BI1 | 0.433 | 0.562 | 0.425 | 0.939 | 0.415 | 0.474 | 0.510 | 0.231 | 0.477 |
| BI2 | 0.403 | 0.494 | 0.408 | 0.942 | 0.349 | 0.441 | 0.490 | 0.216 | 0.421 |
| BI3 | 0.443 | 0.558 | 0.442 | 1.000 | 0.403 | 0.484 | 0.530 | 0.237 | 0.474 |
| ETH1 | 0.429 | 0.439 | 0.311 | 0.419 | 0.906 | 0.413 | 0.395 | 0.228 | 0.463 |
| ETH2 | 0.373 | 0.362 | 0.305 | 0.341 | 0.882 | 0.405 | 0.355 | 0.223 | 0.411 |
| ETH3 | 0.372 | 0.335 | 0.243 | 0.293 | 0.829 | 0.381 | 0.351 | 0.279 | 0.401 |
| EVA1 | 0.458 | 0.391 | 0.492 | 0.389 | 0.344 | 0.839 | 0.489 | 0.313 | 0.422 |
| EVA2 | 0.458 | 0.454 | 0.474 | 0.445 | 0.402 | 0.869 | 0.521 | 0.287 | 0.471 |
| EVA3 | 0.429 | 0.389 | 0.504 | 0.401 | 0.417 | 0.838 | 0.546 | 0.277 | 0.477 |
| PBC1 | 0.387 | 0.495 | 0.570 | 0.454 | 0.398 | 0.529 | 0.810 | 0.215 | 0.441 |
| PBC2 | 0.471 | 0.487 | 0.587 | 0.460 | 0.321 | 0.509 | 0.869 | 0.243 | 0.470 |
| PBC3 | 0.406 | 0.432 | 0.579 | 0.414 | 0.335 | 0.492 | 0.822 | 0.256 | 0.437 |
| PPR1 | 0.330 | 0.223 | 0.183 | 0.232 | 0.368 | 0.321 | 0.265 | 0.803 | 0.345 |
| PPR2 | 0.288 | 0.172 | 0.243 | 0.174 | 0.151 | 0.264 | 0.218 | 0.861 | 0.261 |
| PPR3 | 0.251 | 0.161 | 0.225 | 0.173 | 0.132 | 0.254 | 0.212 | 0.804 | 0.292 |
| SN1 | 0.488 | 0.419 | 0.385 | 0.343 | 0.363 | 0.392 | 0.427 | 0.329 | 0.752 |
| SN2 | 0.466 | 0.391 | 0.352 | 0.363 | 0.398 | 0.436 | 0.430 | 0.297 | 0.848 |
| SN3 | 0.489 | 0.472 | 0.378 | 0.430 | 0.464 | 0.473 | 0.473 | 0.304 | 0.886 |
| SN4 | 0.498 | 0.451 | 0.429 | 0.451 | 0.408 | 0.497 | 0.473 | 0.303 | 0.859 |

| Construct | Item | Outer Loading | Cronbach’s Alpha (α > 0.7) | Rho-A (>0.7) | Composite Reliability (>0.7) | AVE (>0.5) |
|---|---|---|---|---|---|---|
| AIT | AIT1 | 0.858 | 0.792 | 0.811 | 0.876 | 0.701 |
| | AIT2 | 0.822 | | | | |
| | AIT3 | 0.832 | | | | |
| ATT | ATT1 | 0.922 | 0.941 | 0.945 | 0.963 | 0.896 |
| | ATT2 | 0.916 | | | | |
| | ATT3 | 1.000 | | | | |
| AWA | AWA1 | 0.745 | 0.736 | 0.772 | 0.847 | 0.649 |
| | AWA2 | 0.849 | | | | |
| | AWA3 | 0.820 | | | | |
| BI | BI1 | 0.939 | 0.958 | 0.960 | 0.973 | 0.923 |
| | BI2 | 0.942 | | | | |
| | BI3 | 1.000 | | | | |
| ETH | ETH1 | 0.906 | 0.844 | 0.853 | 0.906 | 0.762 |
| | ETH2 | 0.882 | | | | |
| | ETH3 | 0.829 | | | | |
| EVA | EVA1 | 0.839 | 0.806 | 0.808 | 0.886 | 0.721 |
| | EVA2 | 0.869 | | | | |
| | EVA3 | 0.838 | | | | |
| PBC | PBC1 | 0.810 | 0.781 | 0.781 | 0.873 | 0.696 |
| | PBC2 | 0.869 | | | | |
| | PBC3 | 0.822 | | | | |
| PPR | PPR1 | 0.803 | 0.764 | 0.771 | 0.863 | 0.678 |
| | PPR2 | 0.861 | | | | |
| | PPR3 | 0.804 | | | | |
| SN | SN1 | 0.752 | 0.857 | 0.863 | 0.904 | 0.702 |
| | SN2 | 0.848 | | | | |
| | SN3 | 0.886 | | | | |
| | SN4 | 0.859 | | | | |
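The AVE and composite reliability columns can be reproduced from the outer loadings alone. The sketch below assumes the standard formulas for standardized loadings (AVE as the mean squared loading, composite reliability as the squared sum of loadings over that quantity plus the summed error variances); the function names are illustrative and are not taken from the authors' software.

```python
# Outer loadings copied from the reliability table (three constructs shown).
loadings = {
    "AIT": [0.858, 0.822, 0.832],
    "AWA": [0.745, 0.849, 0.820],
    "PBC": [0.810, 0.869, 0.822],
}


def ave(lams):
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(l * l for l in lams) / len(lams)


def composite_reliability(lams):
    """Composite reliability: (sum of loadings)^2 over itself plus error variance."""
    total = sum(lams) ** 2
    error = sum(1 - l * l for l in lams)
    return total / (total + error)


for name, lams in loadings.items():
    print(name, round(ave(lams), 3), round(composite_reliability(lams), 3))
```

For these three constructs the rounded results agree with the tabulated AVE and composite reliability values, which suggests the table was computed with the same formulas.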

| Construct | AIT | ATT | AWA | BI | ETH | EVA | PBC | PPR | SN | UB |
|---|---|---|---|---|---|---|---|---|---|---|
| AIT | | | | | | | | | | |
| ATT | 0.549 | | | | | | | | | |
| AWA | 0.586 | 0.588 | | | | | | | | |
| BI | 0.492 | 0.590 | 0.499 | | | | | | | |
| ETH | 0.526 | 0.487 | 0.389 | 0.447 | | | | | | |
| EVA | 0.645 | 0.556 | 0.726 | 0.551 | 0.554 | | | | | |
| PBC | 0.629 | 0.660 | 0.893 | 0.614 | 0.518 | 0.771 | | | | |
| PPR | 0.447 | 0.265 | 0.350 | 0.273 | 0.330 | 0.433 | 0.364 | | | |
| SN | 0.684 | 0.577 | 0.563 | 0.523 | 0.572 | 0.645 | 0.658 | 0.450 | | |
| UB | 0.117 | 0.118 | 0.165 | 0.185 | 0.028 | 0.095 | 0.109 | 0.042 | 0.123 | |
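A short check of the heterotrait-monotrait (HTMT) ratios against the two cutoffs commonly cited from Henseler et al. (2015), 0.85 (conservative) and 0.90 (liberal), is sketched below. Which cutoff the authors applied is not stated in this section, so both are shown; only the five largest ratios from the table are included, and the helper name `pairs_above` is hypothetical.

```python
# Five largest HTMT ratios copied from the table above.
htmt = {
    ("AWA", "PBC"): 0.893,
    ("EVA", "PBC"): 0.771,
    ("AWA", "EVA"): 0.726,
    ("AIT", "SN"): 0.684,
    ("ATT", "PBC"): 0.660,
}

HTMT_CONSERVATIVE = 0.85  # stricter cutoff
HTMT_LIBERAL = 0.90       # more lenient cutoff


def pairs_above(ratios, threshold):
    """Return the construct pairs whose HTMT ratio exceeds the threshold."""
    return sorted(pair for pair, value in ratios.items() if value > threshold)


print(pairs_above(htmt, HTMT_CONSERVATIVE))
print(pairs_above(htmt, HTMT_LIBERAL))
```

Under these assumptions only the AWA–PBC pair (0.893) exceeds the conservative cutoff, and no pair exceeds the liberal one.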

| Predictor | AIT | ATT | AWA | BI | ETH | EVA | PBC | PPR | SN | UB |
|---|---|---|---|---|---|---|---|---|---|---|
| AIT | | 1.567 | | | | | 1.567 | | 1.567 | |
| ATT | | | | 1.623 | | | | | | |
| AWA | | 1.580 | | | | | 1.580 | | 1.580 | |
| BI | | | | | | | | | | 1.000 |
| ETH | | 1.370 | | | | | 1.370 | | 1.370 | |
| EVA | | 1.838 | | | | | 1.838 | | 1.838 | |
| PBC | | | | 1.697 | | | | | | |
| PPR | 1.000 | | | | | | | | | |
| SN | | | | 1.573 | | | | | | |
| UB | | | | | | | | | | |

| Hypothesis | Path Coefficient (β) (Bootstrap Mean) | Effect Size (f²) | T Statistics (\|O/STDEV\|) | p Values | Conclusion |
|---|---|---|---|---|---|
| AIT → ATT | 0.212 | 0.047 | 6.535 | 0.000 | Supported |
| AIT → PBC | 0.121 | 0.023 | 4.245 | 0.000 | Supported |
| AIT → SN | 0.318 | 0.118 | 9.532 | 0.000 | Supported |
| ATT → BI | 0.327 | 0.110 | 8.869 | 0.000 | Supported |
| AWA → ATT | 0.281 | 0.083 | 7.857 | 0.000 | Supported |
| AWA → PBC | 0.490 | 0.378 | 15.734 | 0.000 | Supported |
| AWA → SN | 0.130 | 0.020 | 3.741 | 0.000 | Unsupported |
| BI → UB | 0.181 | 0.034 | 5.306 | 0.000 | Supported |
| ETH → ATT | 0.193 | 0.045 | 5.507 | 0.000 | Supported |
| ETH → PBC | 0.111 | 0.023 | 3.791 | 0.000 | Supported |
| ETH → SN | 0.212 | 0.060 | 7.195 | 0.000 | Supported |
| EVA → ATT | 0.123 | 0.014 | 3.245 | 0.001 | Unsupported |
| EVA → PBC | 0.223 | 0.067 | 6.508 | 0.000 | Supported |
| EVA → SN | 0.199 | 0.040 | 5.468 | 0.000 | Supported |
| PBC → BI | 0.263 | 0.068 | 7.188 | 0.000 | Supported |
| PPR → AIT | 0.356 | 0.146 | 9.186 | 0.000 | Supported |
| SN → BI | 0.162 | 0.028 | 4.653 | 0.000 | Supported |
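To show how the t statistics, p values, and f² column relate, the sketch below converts a bootstrap t statistic to a two-tailed p value and labels f² using Cohen's conventional 0.02 / 0.15 / 0.35 cutoffs. Two assumptions are made here that the section does not confirm: the p value uses a standard normal approximation to the bootstrap t distribution, and the f² labels follow Cohen's guidelines rather than any rule stated by the authors. The function names are illustrative.

```python
import math


def two_tailed_p(t):
    """Two-tailed p value for a t statistic under a standard normal approximation."""
    return math.erfc(abs(t) / math.sqrt(2))


def f2_label(f2):
    """Label an f-squared effect size with Cohen's conventional cutoffs."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "below small"


# (t statistic, f-squared) pairs copied from three rows of the table.
paths = {
    "AWA -> PBC": (15.734, 0.378),
    "EVA -> ATT": (3.245, 0.014),
    "SN -> BI": (4.653, 0.028),
}

for name, (t, f2) in paths.items():
    print(name, round(two_tailed_p(t), 3), f2_label(f2))
```

With this approximation the EVA → ATT path rounds to p = 0.001, matching the table, while its f² of 0.014 falls below the conventional threshold for a small effect.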


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, X.; Hu, X.; Sun, Y.; Li, L.; Deng, S.; Chen, X. Integrating AI Literacy with the TPB-TAM Framework to Explore Chinese University Students’ Adoption of Generative AI. Behav. Sci. 2025, 15, 1398. https://doi.org/10.3390/bs15101398
