Discovering Engagement Personas in a Digital Diabetes Prevention Program

Abstract

1. Introduction

2. Materials and Methods

2.1. Program Overview

2.2. Participants and Recruitment

2.3. Measures

2.3.1. Demographics

2.3.2. Weight Loss

2.3.3. Engagement Metrics

2.3.4. Creation of Engagement Time Series Variables

2.4. Statistical Analyses

2.4.1. Unsupervised Discovery of Engagement Personas

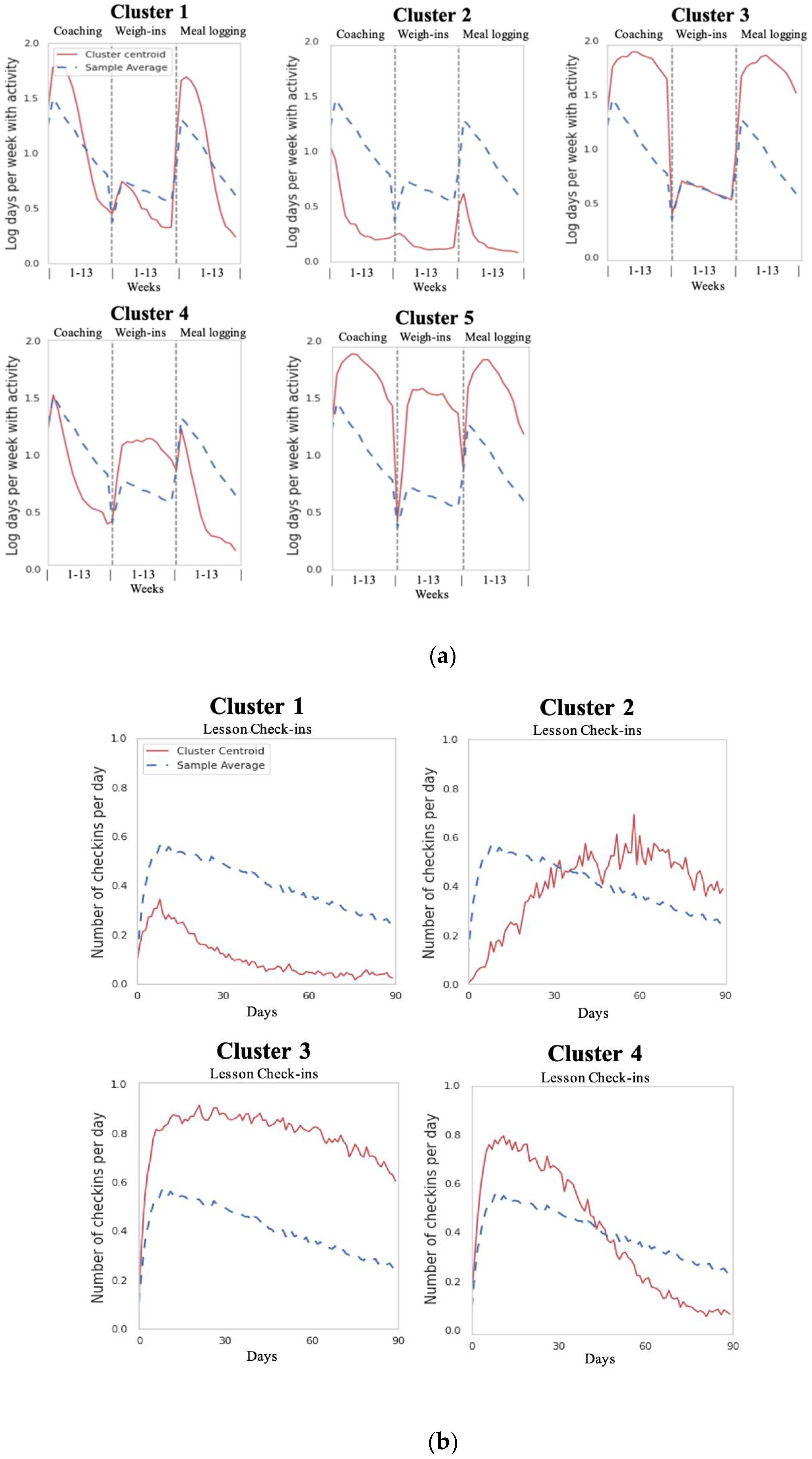

2.4.2. Univariate Cluster Analyses

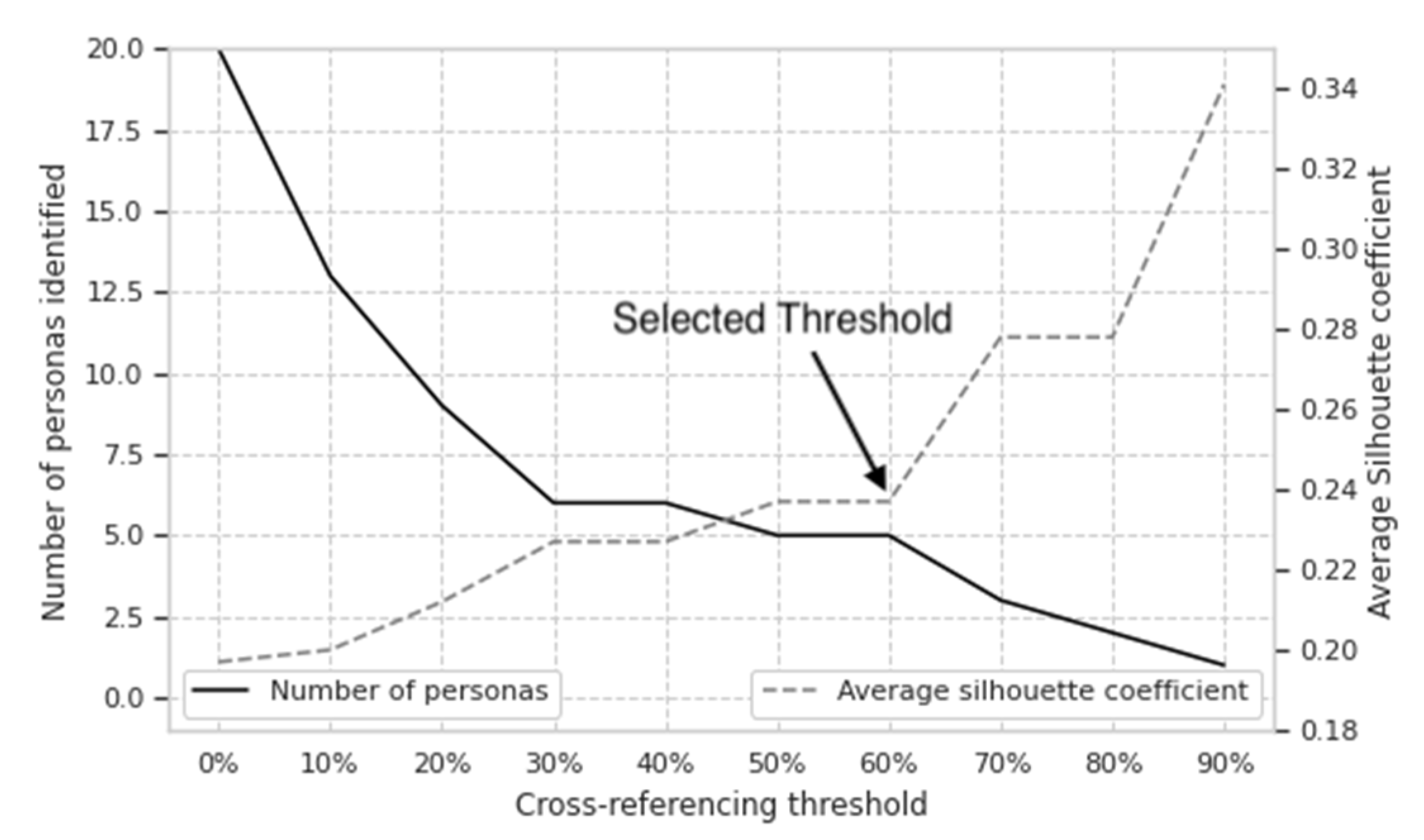

2.4.3. Bivariate Clustering Method to Determine Engagement Personas

2.4.4. Demographics and Characteristics of Engagement Personas

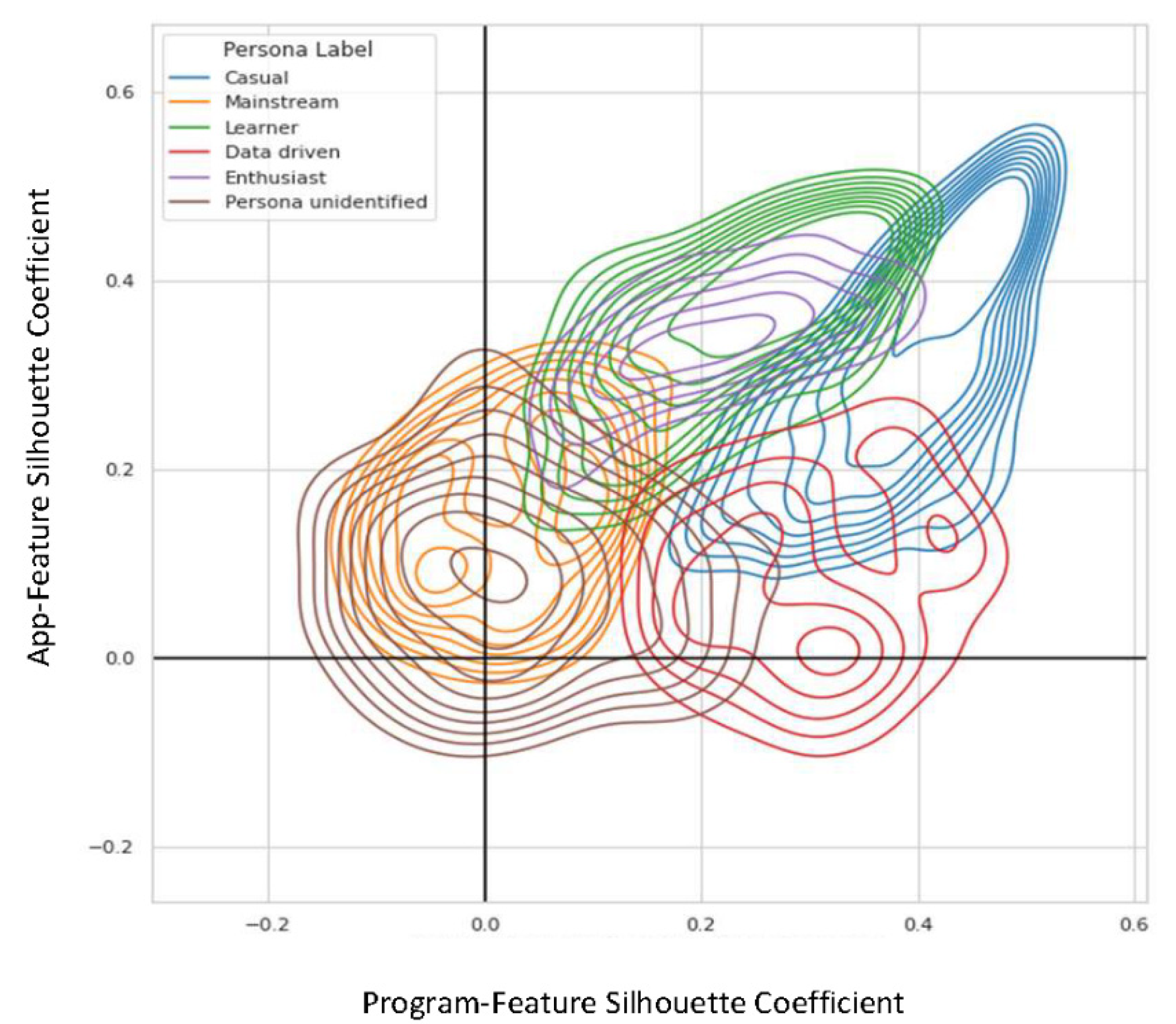

2.4.5. Statistical Validation of Engagement Personas

3. Results

3.1. Univariate Clusters

3.2. Bivariate Clusters: Identification of Engagement Personas

3.3. Demographics and Characteristics of Engagement Personas

3.4. Statistical Validation of Engagement Personas

4. Discussion

4.1. Utility of the Engagement Personas

4.2. Future Directions

4.3. Strengths and Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Results of the Statistical Validation: Hierarchical Clustering

| Persona | % of Members Persisting |
|---|---|
| Not Identified | 71% |
| Casual Members | 38% |
| Mainstream Members | 69% |
| Learners | 82% |
| Data-Driven | 57% |
| Enthusiasts | 79% |

Appendix A.2. Results of the Statistical Validation: Independent Test Set


| App-Feature Cluster Label | Program-Feature Cluster Label 1 | Program-Feature Cluster Label 2 | Program-Feature Cluster Label 3 | Program-Feature Cluster Label 4 | Total |
|---|---|---|---|---|---|
| 1 | 79 | 24 | 12 | 242 | 357 |
| 2 | 303 | 14 | 1 | 4 | 322 |
| 3 | 7 | 74 | 306 | 42 | 429 |
| 4 | 222 | 17 | 2 | 27 | 268 |
| 5 | 4 | 33 | 146 | 54 | 237 |
| Total | 615 | 162 | 467 | 369 | 1613 |
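The marginal totals in the cross-tabulation above can be checked directly from the cell counts. The following is a minimal sketch in plain Python, not the authors' analysis code: the variable names are illustrative, and ranking the cells and cutting off at five is an assumption made only to show how the counts relate to one another.

```python
# Cell counts transcribed from the cross-tabulation above.
# Keys: app-feature cluster labels 1-5.
# List positions: program-feature cluster labels 1-4.
counts = {
    1: [79, 24, 12, 242],
    2: [303, 14, 1, 4],
    3: [7, 74, 306, 42],
    4: [222, 17, 2, 27],
    5: [4, 33, 146, 54],
}

# Row totals (per app-feature cluster) and column totals (per program-feature cluster).
row_totals = {app: sum(cells) for app, cells in counts.items()}
col_totals = [sum(cells[j] for cells in counts.values()) for j in range(4)]
grand_total = sum(row_totals.values())

# Rank every (app, program) cell by size. The cut-off of five cells is an
# assumption for illustration, not a rule stated in the source.
ranked_cells = sorted(
    ((n, app, prog + 1) for app, cells in counts.items() for prog, n in enumerate(cells)),
    reverse=True,
)
top_five = ranked_cells[:5]
remaining = grand_total - sum(n for n, _, _ in top_five)

print("Row totals:", row_totals)
print("Column totals:", col_totals)
print("Grand total:", grand_total)
for n, app, prog in top_five:
    print(f"app cluster {app} x program cluster {prog}: n = {n}")
print("Members outside the five largest cells:", remaining)
```

Under these assumptions, the five largest cells have the same sizes as the five named personas in the characteristics table (306, 303, 242, 222, 146), and the remainder equals the "Persona Not Identified" count of 394. Which cell corresponds to which persona is inferred here from matching counts only.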

| Characteristic | Persona Not Identified 1 (n = 394) | Casual Members 2 (n = 303) | Mainstream Members 3 (n = 242) | Learners 4 (n = 306) | Data-Driven Members 5 (n = 222) | Enthusiasts 6 (n = 146) | All (n = 1613) |
|---|---|---|---|---|---|---|---|
| Mean (SE) % of n if <100 | | | | | | | |
| Age (years) | 50.9 (0.5) 2,4,5 | 46.3 (0.6) 1,3,4,6 | 49.8 (0.7) 2,4,6 | 53.2 (0.5) 1,2,3,5 | 47.4 (0.6) 1,4,6 | 53.5 (0.8) 1,2,3,5 | 50.1 (0.3) |
| Body mass index (kg/m²) | 37.3 (0.4) 2 82% | 39.5 (0.5) 1,3,4,5,6 84% | 37.4 (0.5) 2 95% | 37.2 (0.4) 2 95% | 37.5 (0.4) 2 93% | 35.7 (0.6) 2 97% | 37.5 (0.2) 90% |
| % weight loss at 4 months | 2.7 (0.2) 6 65% | 1.6 (0.2) 5,6 29% | 2.0 (0.2) 6 52% | 2.6 (0.2) 6 82% | 3.1 (0.3) 2,6 80% | 4.5 (0.3) 1,2,3,4,5 95% | 2.8 (0.1) 64% |
| # of weigh-ins | 29.0 (1.4) 2,3,4,5,6 | 6.4 (0.5) 1,3,4,5,6 | 16.8 (0.9) 1,2,5,6 | 19.7 (0.7) 1,2,5,6 | 39.4 (2.1) 1,2,3,4,6 | 70.9 (2.9) 1,2,3,4,5 | 26.4 (0.7) |
| # of meals logged | 96.2 (3.5) 2,4,5,6 | 16.1 (0.8) 1,3,4,6 | 87.8 (3.0) 2,4,5,6 | 189.8 (4.8) 1,2,3,5 | 26.6 (1.2) 1,3,4,6 | 202.7 (8.9) 1,2,3,5 | 97.7 (2.3) |
| # of coaching exchanges | 115.6 (3.0) 2,4,5,6 | 31.9 (1.0) 1,3,4,6 | 114.7 (3.3) 2,4,5,6 | 219.0 (5.4) 1,2,3,5,6 | 47.9 (1.5) 1,3,4,6 | 246.7 (12.5) 1,2,3,4,5 | 121.9 (2.6) |
| # of check-ins | 34.9 (0.8) 2,4,5,6 | 6.7 (0.3) 1,3,4,5,6 | 35.7 (0.6) 2,4,5,6 | 71.2 (0.7) 1,2,3,5 | 11.6 (0.4) 1,2,3,4,6 | 68.9 (1.1) 1,2,3,5 | 36.5 (0.7) |
| % n % of n if <100 | | | | | | | |
| Gender (% female) | 70% 2 | 60% 1,3 | 76% 2,4,6 | 61% 3 | 67% | 63% 3 | 66% |
| Race (% white) | 72% 96% | 68% 96% | 74% 95% | 72% 92% | 70% 97% | 77% 94% | 72% 95% |
| Ethnicity (% Hispanic or Latino) | 10% 96% | 12% 96% | 10% 95% | 13% 92% | 8% 97% | 9% 94% | 10% 95% |
| % in Facebook Group | 46% 2,5,6 | 20% 1,3,4,5,6 | 42% 2,5,6 | 44% 2,5,6 | 29% 1,2,3,4,6 | 65% 1,2,3,4,5 | 39% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hori, J.H.; Sia, E.X.; Lockwood, K.G.; Auster-Gussman, L.A.; Rapoport, S.; Branch, O.H.; Graham, S.A. Discovering Engagement Personas in a Digital Diabetes Prevention Program. Behav. Sci. 2022, 12, 159. https://doi.org/10.3390/bs12060159
