Development of an Ecologically Valid Assessment for Social Cognition Based on Real Interaction: Preliminary Results

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

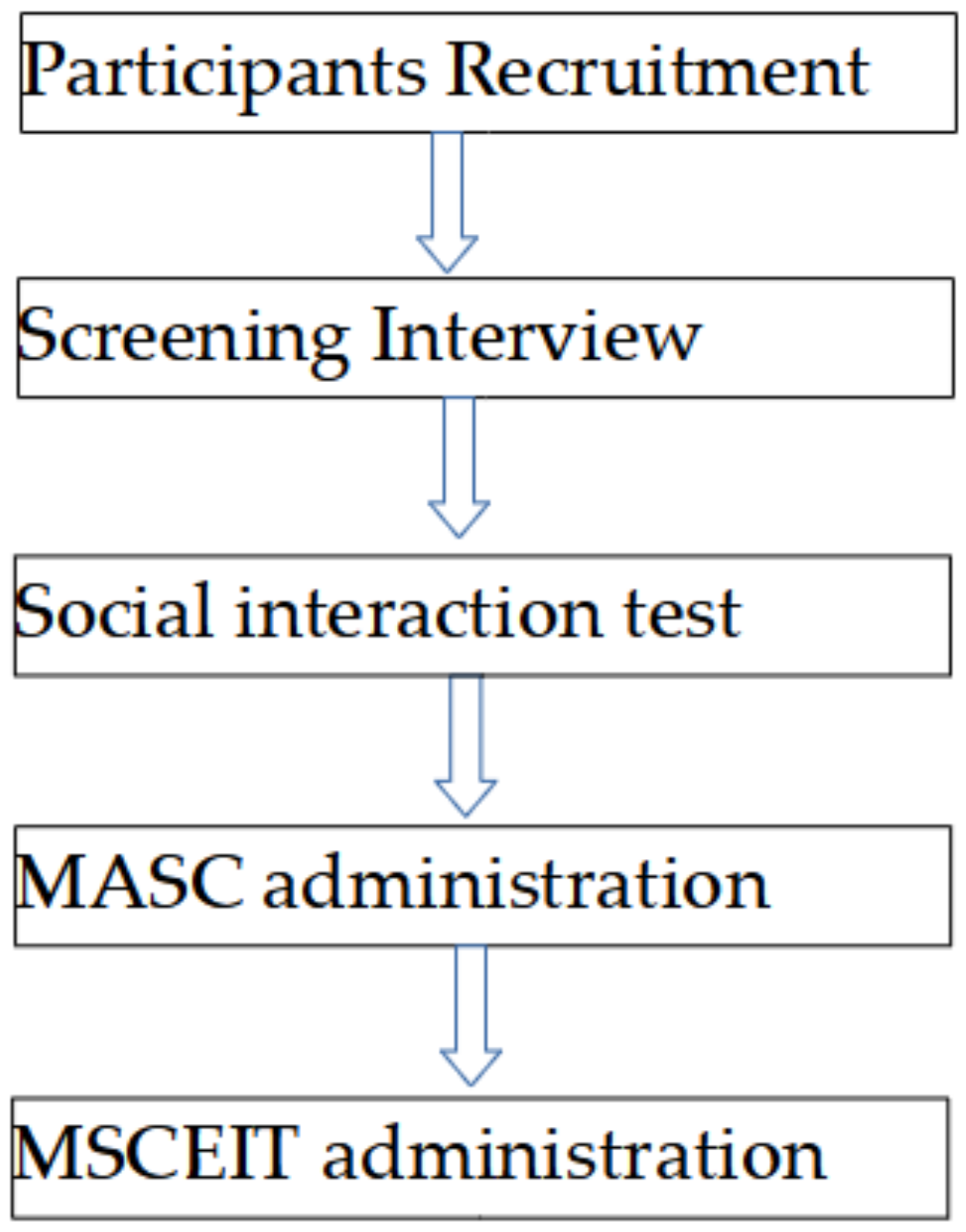

2.2. Research Design

2.2.1. Social Interaction Assessment Development

- Natural feel: The actor’s performance must be accurate to the presented situation.

- Credibility: The performance must be credible overall and, most importantly, in the expression of emotions; the performance for each item must be appropriate in intensity and type.

- Rhythm: There must be additional natural interaction between items; otherwise, the resulting assessment could be perceived as too fast, “cold”, or hardly believable.

- Pertinence: Whether the item should be included in this test (yes or no); all judges must agree for the item to be kept in the test.

- Relevance: The degree to which the item assesses the cognitive domain that it was intended to assess (apparent validity), rated on a 5-point Likert scale.

- Apparent discrimination: The degree to which the item is useful for distinguishing subjects with high and low cognitive capabilities in each domain, rated on a 5-point Likert scale.

- All six judges consider the item to be pertinent.

- The average relevance and apparent discrimination ratings are 4 or higher.

- The inter-judge deviation quotient on relevance and apparent discrimination is less than 0.25.
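The three retention criteria above can be sketched as a small filter. This is only an illustration under stated assumptions: the text does not define the "deviation quotient", so the sketch assumes it is the sample standard deviation divided by the mean of the judges' ratings, and the function names and example ratings are hypothetical.

```python
from statistics import mean, stdev


def deviation_quotient(ratings):
    """Assumed definition: sample standard deviation divided by the mean."""
    return stdev(ratings) / mean(ratings)


def keep_item(pertinent, relevance, discrimination,
              n_judges=6, min_mean=4.0, max_quotient=0.25):
    """Apply the three retention criteria to one candidate item.

    pertinent: list of bool, one yes/no vote per judge.
    relevance, discrimination: lists of 5-point Likert ratings, one per judge.
    """
    # Criterion 1: every judge considers the item pertinent.
    if len(pertinent) != n_judges or not all(pertinent):
        return False
    for ratings in (relevance, discrimination):
        # Criterion 2: the average rating is 4 or higher.
        if mean(ratings) < min_mean:
            return False
        # Criterion 3: the judges agree closely enough (quotient below 0.25).
        if deviation_quotient(ratings) >= max_quotient:
            return False
    return True


# Hypothetical ratings, for illustration only.
all_yes = [True] * 6
agreed = [5, 4, 5, 4, 4, 5]      # mean 4.5, low spread
split = [5, 5, 5, 5, 5, 2]       # mean 4.5, but one judge disagrees strongly

print(keep_item(all_yes, agreed, agreed))   # retained
print(keep_item(all_yes, split, agreed))    # rejected on the deviation quotient
```

The second example shows why the third criterion matters: an item can reach a mean rating of 4 or higher while the judges still disagree about it.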

2.2.2. Procedure

2.3. Measures

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Total (n = 50) | Women (n = 28) | Men (n = 22) |
|---|---|---|---|
| Mean | 10.02 | 8.96 | 11.36 |
| Standard Deviation | 3.47 | 3.49 | 3.03 |
| Minimum | 0 | 0 | 6 |
| Maximum | 18 | 15 | 18 |

F = 6.521 (significance = 0.014); KS = 0.854 (significance = 0.460); Cronbach’s alpha = 0.701
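As a consistency check, the reported F value can be approximately recovered from the group sizes, means, and standard deviations in the table. The sketch below assumes that F comes from a one-way ANOVA comparing women and men, which the table itself does not state; the function name is hypothetical.

```python
def f_from_summary(groups):
    """One-way ANOVA F statistic from (n, mean, sd) summaries of each group."""
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    # Within-group sum of squares, rebuilt from each sample standard deviation.
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# Values taken from the table: women (n = 28) and men (n = 22).
f_value = f_from_summary([(28, 8.96, 3.49), (22, 11.36, 3.03)])
print(round(f_value, 2))
```

This yields roughly 6.53, close to the reported 6.521; the small gap is what one would expect from working with means and standard deviations rounded to two decimals.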

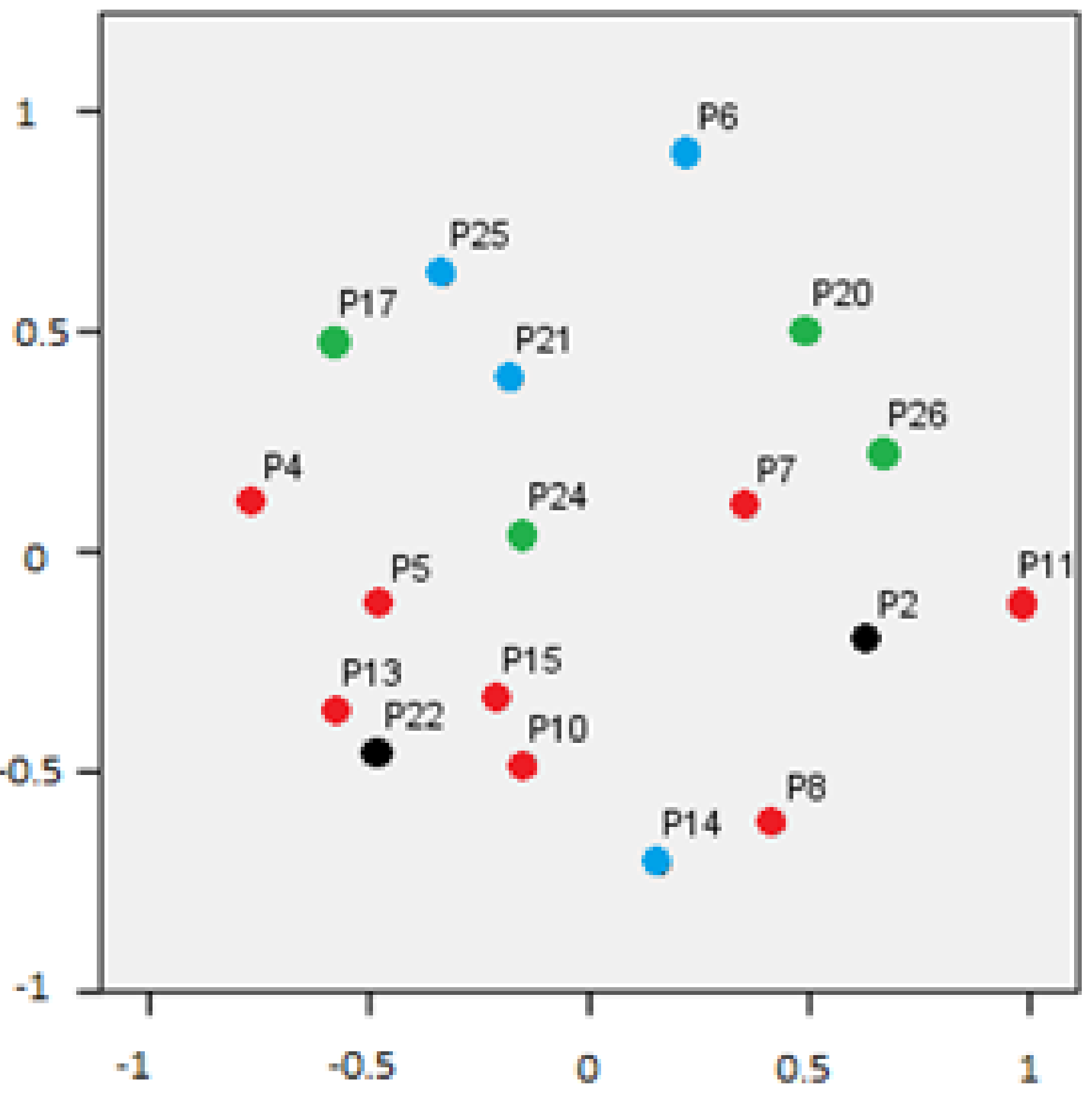

| Measure | Value |
|---|---|
| Raw Standardized Stress | 0.067 |
| Stress-I | 0.259 (*) |
| Stress-II | 0.667 (*) |
| S-Stress | 0.165 (**) |
| Dispersion accounted for | 0.932 |
| Tucker’s Congruence Coefficient | 0.965 |
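Several of these fit measures are related to one another. The sketch below assumes the table follows the conventions commonly used in multidimensional scaling output, where dispersion accounted for is one minus the normalized raw stress, Tucker's coefficient is the square root of dispersion accounted for, and Stress-I is the square root of the normalized raw stress at the optimal scaling factor. The source does not say which software or definitions were used, so these relations are an assumption.

```python
import math

# Value taken from the table.
normalized_raw_stress = 0.067

# Assumed relations between the fit measures (see the note above).
stress_1 = math.sqrt(normalized_raw_stress)
daf = 1 - normalized_raw_stress
tucker = math.sqrt(daf)

print(f"Stress-I ~ {stress_1:.3f}")
print(f"Dispersion accounted for ~ {daf:.3f}")
print(f"Tucker's coefficient ~ {tucker:.3f}")
```

Under these assumptions the derived values agree with the reported 0.259, 0.932, and 0.965 to within about 0.001, which is consistent with rounding of the tabulated stress value.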

| | MSCEIT Emotional Management | MSCEIT Social Management | MSCEIT Emotional Management Branch | MASC Total Score | Social Interaction Test Score |
|---|---|---|---|---|---|
| MSCEIT Emotional Management | 1 | | | | |
| MSCEIT Social Management | 0.304 (*) | 1 | | | |
| MSCEIT Emotional Management Branch | 0.694 (**) | 0.882 (**) | 1 | | |
| MASC Total Score | 0.055 | 0.159 | 0.137 | 1 | |
| Social Interaction Test Score | 0.218 | 0.008 | 0.106 | 0.465 (**) | 1 |
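The markers in the correlation matrix can be checked with the usual t test for a Pearson correlation. The sketch below rests on two assumptions not stated in the table: that every correlation uses the full sample of n = 50, and that (*) and (**) denote two-tailed significance at the 0.05 and 0.01 levels. The critical values are approximate t quantiles for 48 degrees of freedom, and the function names are hypothetical.

```python
import math

N = 50             # assumed sample size for every correlation
T_CRIT_05 = 2.011  # approximate two-tailed critical t, alpha = 0.05, df = 48
T_CRIT_01 = 2.682  # approximate two-tailed critical t, alpha = 0.01, df = 48


def t_statistic(r, n=N):
    """t statistic for testing that a Pearson correlation differs from zero."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


def stars(r, n=N):
    """Return the significance marker implied by the assumed thresholds."""
    t = abs(t_statistic(r, n))
    if t > T_CRIT_01:
        return "**"
    if t > T_CRIT_05:
        return "*"
    return ""


# Correlations taken from the table.
for label, r in [("MASC vs. Social Interaction Test", 0.465),
                 ("MSCEIT Emotional vs. Social Management", 0.304),
                 ("MSCEIT Emotional Management vs. Social Interaction Test", 0.218)]:
    print(f"{label}: r = {r}, t = {t_statistic(r):.2f} {stars(r)}")
```

Under these assumptions the markers produced by the sketch match those in the table: the correlation between the MASC total score and the Social Interaction Test score (0.465) clears the stricter threshold, while the correlations between the Social Interaction Test and the MSCEIT scores do not reach significance.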

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Benito-Ruiz, G.; Luzón-Collado, C.; Arrillaga-González, J.; Lahera, G. Development of an Ecologically Valid Assessment for Social Cognition Based on Real Interaction: Preliminary Results. Behav. Sci. 2022, 12, 54. https://doi.org/10.3390/bs12020054
