What Are US Undergraduates Taught and What Have They Learned About US Continental Crust and Its Sedimentary Basins?

Abstract

1. Introduction

- (a) from what they have learned before starting the class;

- (b) from what they hear and see in lecture and lab, and from each other while studying;

- (c) from reading assigned texts and other course materials;

- (d) from self-motivated learning using various sources, such as Wikipedia, videos, web searches, and social media.

2. Materials and Methods

2.1. Textbook Assessment

2.2. Student Assessment

- Depressions of the Earth’s crust in which a thick sequence of sediments has been deposited

- Craters left by a meteorite impact and filled with igneous rock

- A place where Earth’s surface has collapsed into a sinkhole

- A low-lying region carved out by glaciers

- Which of the four topics do students feel most confident about?

- Which of the four topics do students feel least confident about?

- Which, if any, of these topics can students explain clearly?

3. Results

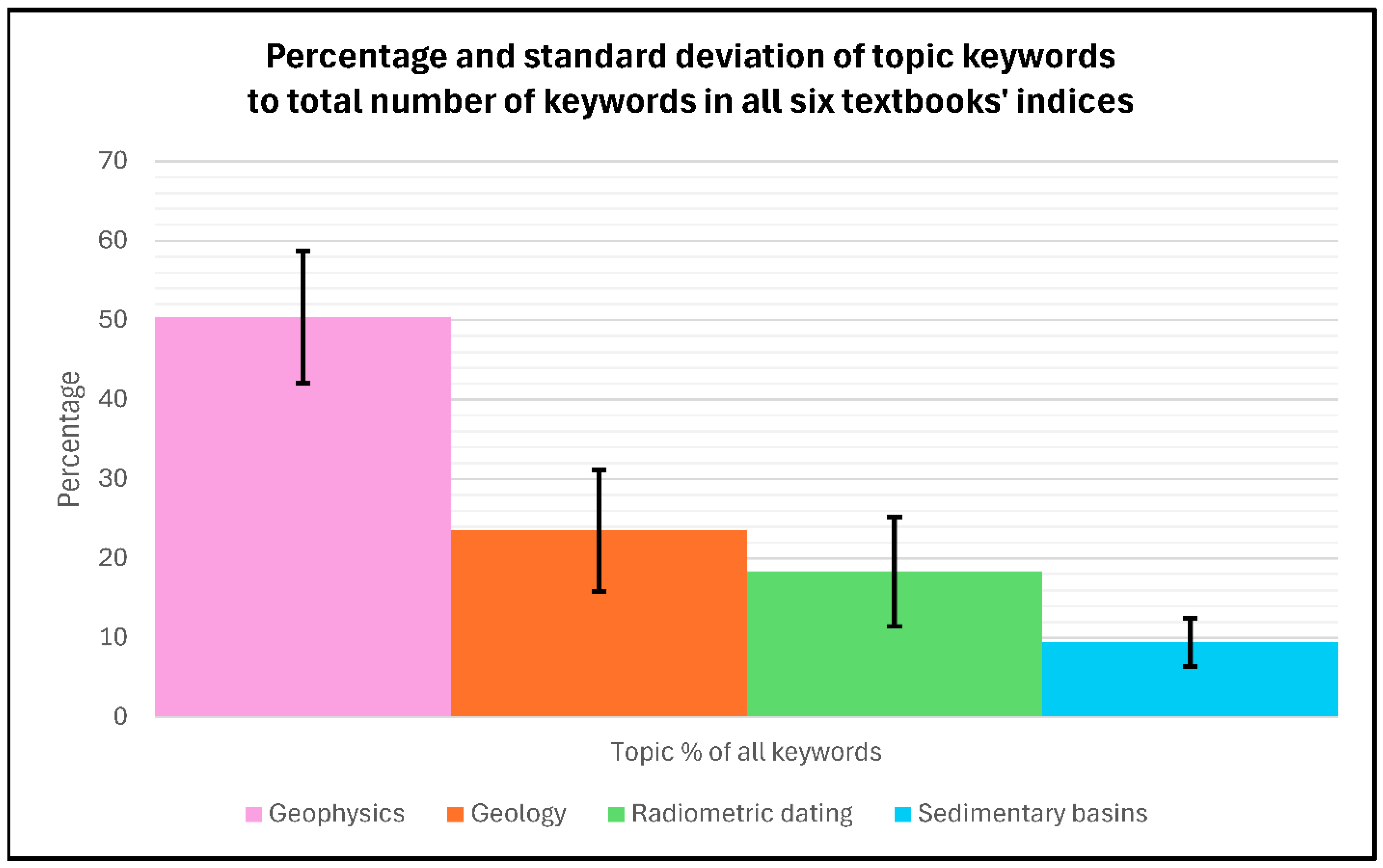

3.1. Textbook Index Review

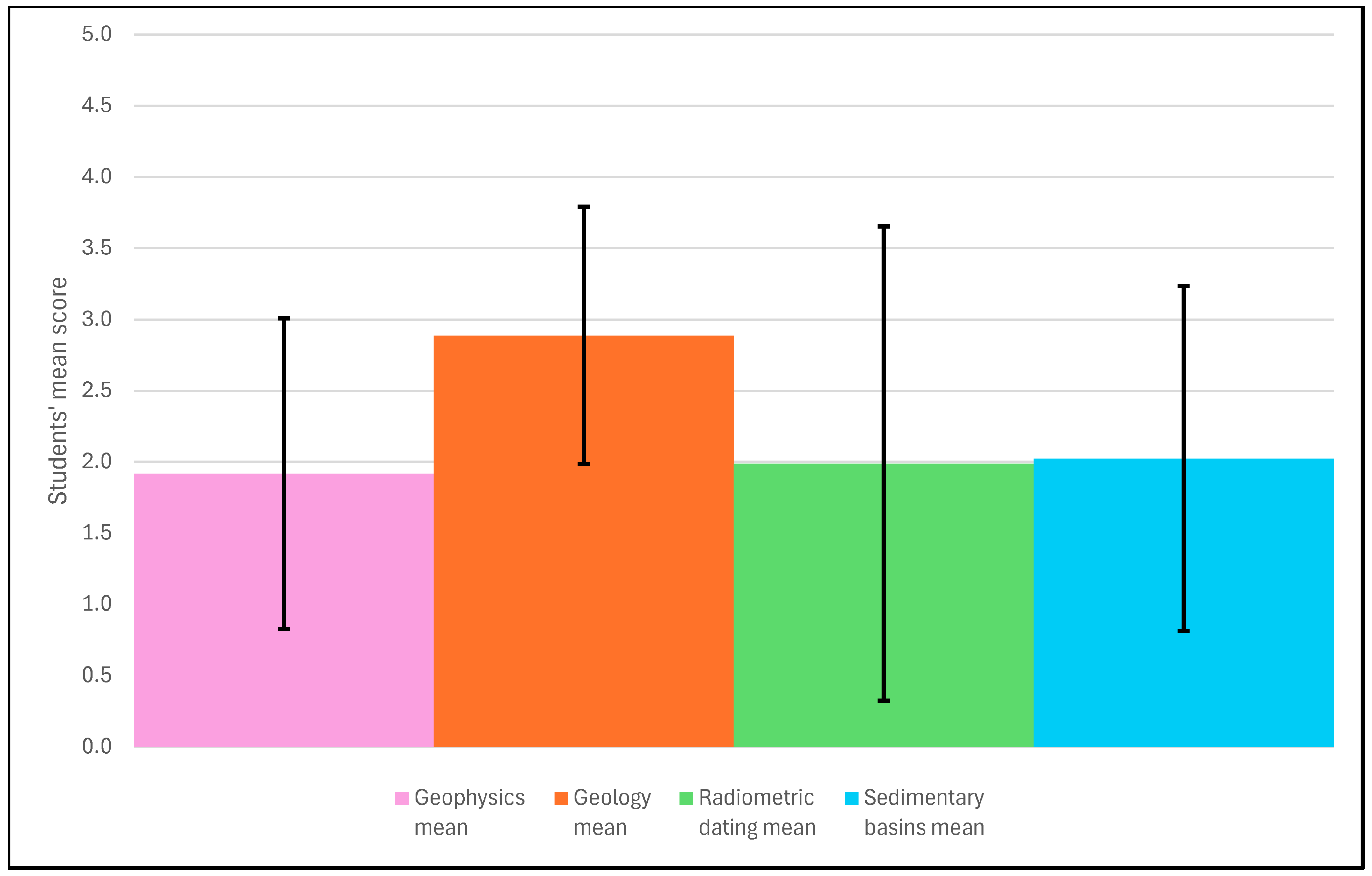

3.2. Student Interviews

- (1) Keyword presence is a simple count of all words related to the topic.

- (2) Keyword usage scores how well each keyword is used in the answer. A keyword used in a sentence, such as “geologists find resources,” scores higher than the word “resources” appearing in a list of words.

- (3) Examples awards points for relevant examples. “Geologists find resources like groundwater” is an example that would receive a high score.

- (4) Clarity/vagueness awards higher scores to complete sentences and full explanations and lower scores to lists and incoherent statements. A list of a dozen geophysics terms would receive a score of 5 for keyword presence but a 1 for clarity/vagueness.

“I don’t think I have ever heard of one. But just going based off the two words together I think it could be an area where multiple sedimentary rocks sit. I don’t know why they would be important.”
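The keyword presence criterion can be sketched as a phrase count. This is a minimal Python illustration, not the authors' scoring procedure: it assumes the sedimentary basin keywords from the keyword table serve as the topic word list, matches them case-insensitively as whole phrases, and uses a hypothetical `presence_score` mapping that clips the raw count onto the 1 to 5 rubric scale (consistent with a dozen terms earning a 5, but otherwise invented for illustration).

```python
import re

# Assumption: the sedimentary basin keywords from the keyword table are used
# as the topic word list; the study's raters may have credited other words.
SED_BASIN_KEYWORDS = [
    "continental rift basin", "passive margin", "foreland basin",
    "forearc basin", "intracratonic basin", "back-arc basin",
    "transtensional basin", "hydrocarbons", "subsidence",
]


def keyword_presence(answer: str, keywords: list[str]) -> int:
    """Count occurrences of any keyword phrase in the answer (case-insensitive)."""
    text = answer.lower()
    count = 0
    for kw in keywords:
        # Word boundaries keep e.g. "subsidence" from matching inside longer words.
        pattern = r"\b" + re.escape(kw.lower()) + r"\b"
        count += len(re.findall(pattern, text))
    return count


def presence_score(count: int) -> int:
    """Hypothetical mapping of a raw count onto the 1-5 rubric scale (clipped)."""
    return max(1, min(5, count))


answer = "A foreland basin forms by subsidence next to a mountain belt."
n = keyword_presence(answer, SED_BASIN_KEYWORDS)
print(n, presence_score(n))
```

Applied to the student response quoted above, this sketch finds none of the listed phrases and returns a count of 0, which the hypothetical mapping places at the lowest score. A fixed word list is easy to automate but cannot judge usage, examples, or clarity, which is why those criteria are scored separately.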

4. Discussion

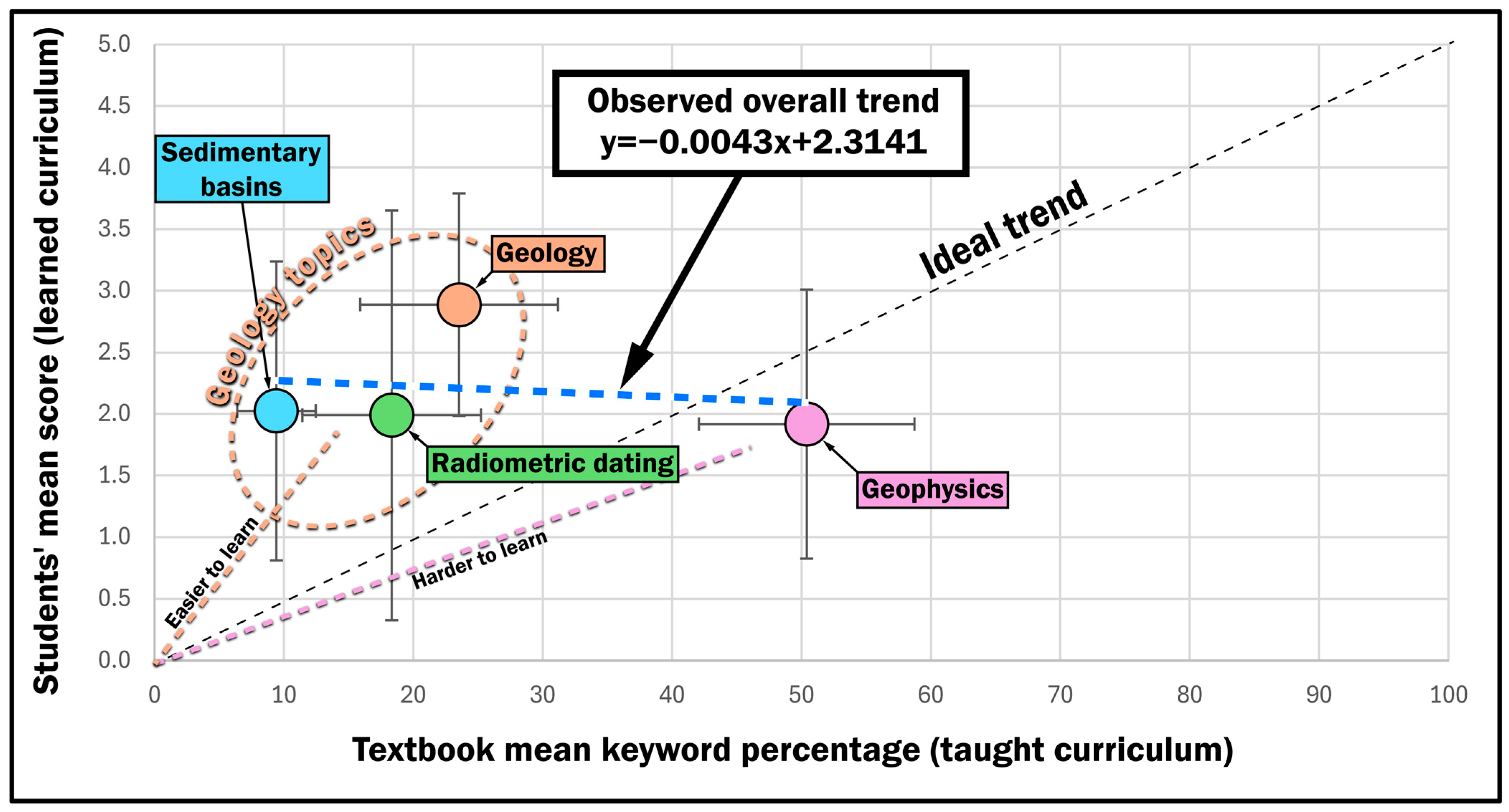

4.1. Taught Curriculum

4.2. Learned Curriculum

4.3. Comparing Taught and Learned Curriculum

4.4. Designing a Better Student Survey

4.5. Lessons for Producing the Videos

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Title | Author(s) | Publisher | Year | Chapters | Pages | Figures | Tables |
|---|---|---|---|---|---|---|---|
| Understanding Earth | Grotzinger, Jordan | W.H. Freeman, New York, NY, USA | 2020 | 23 | 784 | 621 | 29 |
| Essentials of Geology | Marshak | W.W. Norton & Company, New York, NY, USA | 2022 | 25 | 720 | 857 | 13 |
| Physical Geology | Plummer, Carlson, Hammersley | McGraw-Hill Education, New York, NY, USA | 2022 | 23 | 672 | 747 | 35 |
| Exploring Geology | Reynolds, Johnson, Morin, Carter | McGraw-Hill LLC, New York, NY, USA | 2019 | 19 | 704 | 2137 | 10 |
| Earth: An Introduction to Physical Geology | Tarbuck, Lutgens | Pearson Education, Inc., Hoboken, NJ, USA | 2020 | 24 | 784 | 961 | 9 |
| Physical Geology: Investigating Earth | Wicander, Monroe | Cengage Learning, Inc., Independence, KY, USA | 2023 | 18 | 528 | 496 | 24 |

| Crustal Geophysics | Crustal Geology | Radiometric Dating | Sedimentary Basins |
|---|---|---|---|
| Moho | Laurentia | Absolute age | Continental rift basin |
| Seismic refraction | Crustal province | Uranium-lead | Passive margin |
| Crustal composition | Proterozoic | Isotopes | Foreland basin |
| Continental crust | Archean | Zircon | Forearc basin |
| Gravity | Granite | Radiometric dating | Intracratonic basin |
| Earthquakes | Phanerozoic | Radioactive | Back-arc basin |
| Magnetic | Paleozoic | Geochronology | Transtensional basin |
| Seismology | Mesozoic | Half-life | Hydrocarbons |
| Seismic reflection | Cenozoic | | Subsidence |

| Topic | Grotzinger, Jordan | Marshak | Plummer, Carlson, Hammersley | Reynolds, Johnson, Morin, Carter | Tarbuck, Lutgens | Wicander, Monroe | Mean | Std. Dev. |
|---|---|---|---|---|---|---|---|---|
| Geophysics (%) | 50 | 53 | 60 | 40 | 42 | 63 | 50 | 8.3 |
| Geology (%) | 19 | 32 | 15 | 29 | 22 | 10 | 24 | 7.6 |
| Radiometric dating (%) | 24 | 5 | 20 | 15 | 27 | 18 | 18 | 6.9 |
| Sedimentary basins (%) | 7 | 10 | 6 | 15 | 9 | 9 | 9 | 3.0 |
| Total keywords (n) | 352 | 425 | 302 | 249 | 320 | 118 | 294 | 95.1 |
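The Mean and Std. Dev. columns can be checked against the six per-textbook values. The minimal Python sketch below does this for the total keyword count row, assuming the Mean is an unweighted average over the six textbooks. It shows that the tabulated 95.1 corresponds to the population standard deviation (dividing by n), not the sample standard deviation (dividing by n − 1).

```python
from statistics import mean, pstdev, stdev

# Total keyword counts per textbook, in the table's column order.
TOTAL_KEYWORDS = [352, 425, 302, 249, 320, 118]

avg = mean(TOTAL_KEYWORDS)          # unweighted mean over six textbooks
pop_sd = pstdev(TOTAL_KEYWORDS)     # population SD: divides by n
sample_sd = stdev(TOTAL_KEYWORDS)   # sample SD: divides by n - 1

print(f"mean = {avg:.0f}")
print(f"population SD = {pop_sd:.1f}")
print(f"sample SD = {sample_sd:.1f}")
```

With only six textbooks, the choice matters: the sample estimate is roughly 104.2, about 10% larger than the population value, so stating which convention is used helps readers compare the spread across rows.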

| Category | Geophysics Mean ± 1 Std. Dev. | Geology Mean ± 1 Std. Dev. | Radiometric Dating Mean ± 1 Std. Dev. | Sedimentary Basins Mean ± 1 Std. Dev. | Category Mean Score |
|---|---|---|---|---|---|
| Keyword presence | 2.4 ± 1.21 | 3.4 ± 0.85 | 2.0 ± 1.70 | 2.3 ± 1.44 | 2.53 |
| Keyword usage | 2.1 ± 1.24 | 3.0 ± 0.87 | 2.0 ± 1.67 | 2.2 ± 1.38 | 2.33 |
| Examples | 1.7 ± 1.13 | 2.8 ± 1.16 | 2.0 ± 1.65 | 2.0 ± 1.43 | 2.13 |
| Clarity/vagueness | 1.5 ± 1.12 | 2.4 ± 1.09 | 2.0 ± 1.67 | 1.7 ± 1.17 | 1.90 |
| Topic mean score | 1.9 ± 1.09 | 2.9 ± 0.90 | 2.0 ± 1.66 | 2.0 ± 1.21 | |
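In the interview score table, each value in the Category Mean Score column appears to equal the unweighted mean of the four topic means in that row, rounded half-up to two decimals. The Python sketch below checks this under that assumption. It uses the standard `decimal` module rather than floats on purpose: binary floating point can round a value such as 2.525 down to 2.52, whereas `ROUND_HALF_UP` on exact decimals reproduces the tabulated 2.53.

```python
from decimal import Decimal, ROUND_HALF_UP

# Topic means copied from the table, in column order:
# geophysics, geology, radiometric dating, sedimentary basins.
TOPIC_MEANS = {
    "Keyword presence": ["2.4", "3.4", "2.0", "2.3"],
    "Keyword usage": ["2.1", "3.0", "2.0", "2.2"],
    "Examples": ["1.7", "2.8", "2.0", "2.0"],
    "Clarity/vagueness": ["1.5", "2.4", "2.0", "1.7"],
}


def category_mean(values: list[str]) -> Decimal:
    """Unweighted mean of the topic means, rounded half-up to two decimals."""
    total = sum(Decimal(v) for v in values)
    return (total / len(values)).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


for category, values in TOPIC_MEANS.items():
    print(f"{category}: {category_mean(values)}")
```

The same shortcut does not necessarily reproduce the Topic mean score row, which may have been computed from individual student scores rather than from the rounded category means.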

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Crowley, C.W.; Stern, R.J. What Are US Undergraduates Taught and What Have They Learned About US Continental Crust and Its Sedimentary Basins? Geosciences 2025, 15, 296. https://doi.org/10.3390/geosciences15080296
