1. Introduction

Subsurface modeling is essential for characterizing underground resources such as hydrocarbon reservoirs [1], mineral accumulations [2], groundwater aquifers [3], and carbon sequestration sites [4]. Multiple realizations of subsurface models are calculated using a set of geostatistical methodologies (e.g., variogram-based, object-based, multipoint-based, and rules-based), constrained by input parameters and conditioning data [1]. Model parameters for subsurface models include histograms, variograms, correlation coefficients, and training images, while conditioning data include both hard data (e.g., boreholes) and soft data (e.g., geophysical data). Checking the ability of a subsurface model to match both input model parameters and hard data is well established, with standard workflows and diagnostic plots, including scatter plots of truth vs. predicted values, residual histograms and location maps, and global summary statistics such as the mean squared error (MSE). Model parameter checks involve comparing input histograms and variograms with experimental histograms and variograms [5], as well as assessing multiple-point patterns from training images averaged over realizations [6].

Soft data checking, or assessing the ability of a subsurface model to honor less precise conditioning data, is critical to ensure good estimation and uncertainty models away from hard data locations, which is often essential for optimal decision-making [1,7]. This process requires multiscale analysis, which integrates fine-scale heterogeneities with broader geological trends. Soft data, if unchecked or improperly used, can introduce bias, reduce the accuracy of predictions, or lead to poor decisions [8]. The challenge of soft data checking can be addressed by framing it as an image similarity evaluation problem, where the goal is to quantify how well images informed by soft data, such as 2D seismic slices, align with 2D slices of the subsurface model.

A simple metric for assessing image similarity is the by-pixel mean squared error (MSE) [9], which calculates the average of the squared differences in pixel intensities between the ground truth image and another image, Equation (1),

$$\mathrm{MSE} = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \left( x_{ij} - y_{ij} \right)^2 \qquad (1)$$

where $x_{ij}$ and $y_{ij}$ represent the intensity values of corresponding pixels in the two images being compared at position $(i, j)$. The dimensions of the images are denoted by $M$ (number of rows) and $N$ (number of columns). While the by-pixel MSE is appealing due to its mathematical simplicity and ease of implementation, it has been shown to perform poorly for pattern recognition tasks [9,10,11]. The by-pixel MSE treats all pixel differences equally and independently, without considering spatial relationships or the structural patterns that are critical in geological contexts. As a result, the by-pixel MSE is sensitive to translation, rotation and flipping image transformations.
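To make the sensitivity to translation and flipping concrete, the following Python/NumPy sketch evaluates the by-pixel MSE on a hypothetical 8 x 8 slice containing a single facies boundary. The function name `mse` and the toy images are illustrative assumptions, not part of the proposed workflow.

```python
import numpy as np


def mse(x: np.ndarray, y: np.ndarray) -> float:
    """By-pixel mean squared error: average of (x_ij - y_ij)^2 over all M x N pixels."""
    if x.shape != y.shape:
        raise ValueError("images must have the same shape")
    diff = x.astype(float) - y.astype(float)
    return float(np.mean(diff ** 2))


# Hypothetical 8 x 8 slice with one vertical boundary between two facies (0 and 1).
img = np.zeros((8, 8))
img[:, 4:] = 1.0

# Same boundary moved one pixel to the right (a pure translation, no wrap-around).
shifted = np.zeros((8, 8))
shifted[:, 5:] = 1.0

# Same pattern mirrored left-right.
flipped = img[:, ::-1]

print(mse(img, img))      # 0.0
print(mse(img, shifted))  # 0.125: one column of 8 pixels differs out of 64
print(mse(img, flipped))  # 1.0: every pixel differs
```

Geologically, all three images contain the same single boundary, yet the MSE ranges from zero to its maximum, which is the behavior described above.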

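The Structural Similarity Index Measure (SSIM) is a widely applied metric for assessing the similarity between two images by comparing their structural content, offering a perceptual evaluation that correlates well with human visual perception. The SSIM works by breaking down the comparison into three main components: luminance, contrast, and structure [9,12]. The SSIM between two images $x$ and $y$ is

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x \mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}$$

where $\mu_x$ and $\mu_y$ are pixel averages, $\sigma_x^2$ and $\sigma_y^2$ are pixel variances, $\sigma_{xy}$ is the covariance, and $C_1 = (K_1 L)^2$ and $C_2 = (K_2 L)^2$, where $L$ is the dynamic range of pixel values (e.g., 255 for 8-bit images) and $K_1$ and $K_2$ are small constants, typically $K_1 = 0.01$ and $K_2 = 0.03$. This formula integrates luminance, which measures brightness similarity using the means,

$$l(x, y) = \frac{2\mu_x \mu_y + C_1}{\mu_x^2 + \mu_y^2 + C_1},$$

contrast, which evaluates differences using the standard deviations,

$$c(x, y) = \frac{2\sigma_x \sigma_y + C_2}{\sigma_x^2 + \sigma_y^2 + C_2},$$

and structure, which assesses correlation through the covariance,

$$s(x, y) = \frac{\sigma_{xy} + C_3}{\sigma_x \sigma_y + C_3},$$

where $C_3 = C_2 / 2$. With this choice, $\mathrm{SSIM}(x, y) = l(x, y)\, c(x, y)\, s(x, y)$.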

This combination of luminance, contrast, and structure allows the SSIM to evaluate image similarity more comprehensively than traditional by-pixel metrics such as MSE, which measure pixel-level intensity differences and may overlook perceptually significant variations [12]. Yet SSIM is still sensitive to translation, rotation and flipping image transformations through its covariance (structure) component.
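The sketch below evaluates the SSIM formula once over the whole image. This is a simplification: common implementations compute SSIM in local (often Gaussian-weighted) windows and average the results. On a hypothetical 8 x 8 slice with one facies boundary, a one-pixel shift lowers the score and a left-right flip drives it negative through the covariance term. `global_ssim` and the toy images are illustrative assumptions.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """SSIM evaluated once over the whole image (no sliding window).

    data_range plays the role of L, and k1, k2 the roles of K1, K2.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    # Luminance term times the combined contrast-structure term (C3 = C2 / 2).
    luminance_term = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    contrast_structure_term = (2 * cov_xy + c2) / (var_x + var_y + c2)
    return float(luminance_term * contrast_structure_term)


# Hypothetical slice with one vertical facies boundary, plus two transformed copies.
img = np.zeros((8, 8))
img[:, 4:] = 1.0
shifted = np.zeros((8, 8))
shifted[:, 5:] = 1.0
flipped = img[:, ::-1]

print(global_ssim(img, img))      # 1.0 for identical images
print(global_ssim(img, shifted))  # below 1 after a one-pixel shift
print(global_ssim(img, flipped))  # negative: the covariance term changes sign
```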

The sensitivity of the above image comparison methods to translation, rotation and flipping poses challenges for soft data checking, as soft data such as seismic images often represent subsurface structures that may not align perfectly with the large-scale transitions in the subsurface models, e.g., the boundaries of facies regions or fault blocks. Even slight differences in the angle or scale of these boundaries between geological features in the soft data and the subsurface models can lead SSIM to assign a significantly lower similarity score, despite the similarity of the underlying geological framework.

The Feature Similarity Indexing Method (FSIM) is a metric designed to assess image similarity by focusing on key visual features that are perceptually important, such as edges and textures. FSIM evaluates these features by comparing the phase congruency (PC) and gradient magnitude (GM) between images, elements that are critical in defining human visual perception of structure [13]. Phase congruency identifies locations where local frequency components are maximally in phase, indicating structural features like edges, while gradient magnitude measures the intensity variations around these edges. By combining these factors, FSIM provides an image similarity metric that aligns well with how human visual perception prioritizes edges and patterns. For example, distortions in high-phase-congruency regions, such as edges, are more noticeable than distortions in flat areas. This makes FSIM particularly effective in scenarios where edges, corners, and textures play a critical role in image quality assessment, leading to a robust and reliable evaluation of image similarity [13].

While FSIM effectively captures edges, it may fail to fully account for higher-level perceptual aspects beyond structural and contrast changes. Additionally, FSIM relies on two constants, $T_1$ and $T_2$: $T_1$ stabilizes the PC similarity and $T_2$ stabilizes the GM similarity. These constants are derived from the dynamic ranges of PC and GM values in standard benchmark datasets such as LIVE and TID13 [14]. This reliance on fixed parameters, tuned to specific benchmark datasets, can reduce adaptability to other datasets or to unseen or non-standard forms of image degradation, such as noise, blurring, or compression artifacts [13].
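The sketch below shows only how FSIM combines its two feature maps: PC similarity and GM similarity are computed pixel by pixel, each stabilized by one of the two constants (here `t1` and `t2`), multiplied, and pooled using the larger of the two PC maps as weights. Computing phase congruency itself is involved and is not shown; the PC map here is random placeholder data, and the gradient magnitude uses NumPy central differences rather than the Scharr operator of the original FSIM formulation. The defaults `t1 = 0.85` and `t2 = 160` are assumed to match the values reported for 8-bit images in [13] and should be verified there.

```python
import numpy as np


def gradient_magnitude(img):
    """Gradient magnitude from central differences (a stand-in for the Scharr operator)."""
    gy, gx = np.gradient(np.asarray(img, dtype=float))
    return np.hypot(gx, gy)


def fsim_from_maps(pc1, pc2, g1, g2, t1=0.85, t2=160.0):
    """Pool PC and GM similarity maps into a single FSIM-style score.

    pc1, pc2: phase congruency maps (assumed precomputed, values in [0, 1]).
    g1, g2: gradient magnitude maps of the two images.
    t1, t2: stabilizing constants for the PC and GM similarity terms.
    """
    s_pc = (2 * pc1 * pc2 + t1) / (pc1 ** 2 + pc2 ** 2 + t1)  # PC similarity
    s_g = (2 * g1 * g2 + t2) / (g1 ** 2 + g2 ** 2 + t2)        # GM similarity
    s_l = s_pc * s_g                                         # combined local similarity
    weight = np.maximum(pc1, pc2)                            # emphasize high-PC (edge-like) pixels
    return float(np.sum(s_l * weight) / np.sum(weight))


rng = np.random.default_rng(0)
img_a = rng.uniform(0, 255, (32, 32))
img_b = img_a + rng.normal(0, 20, (32, 32))  # hypothetical noisy copy
pc = rng.uniform(0.1, 1.0, (32, 32))          # hypothetical PC map shared by both images

g_a, g_b = gradient_magnitude(img_a), gradient_magnitude(img_b)
print(fsim_from_maps(pc, pc, g_a, g_a))  # identical inputs give 1.0 (up to rounding)
print(fsim_from_maps(pc, pc, g_a, g_b))  # below 1 once gradients differ
```

Because `t1` and `t2` enter additively, their scale relative to typical PC and GM values controls how strongly differences are penalized, which is why fixed benchmark-derived values may transfer poorly to other data.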

Efforts to develop effective similarity indices for soft data such as seismic data are a subject of considerable research. For instance, the method of seismic adaptive curves employs the adaptive curvelet transform to evaluate structural similarities between seismic images by decomposing them into components at various scales and orientations. This enables the method to capture detailed structural features, allowing comparisons that are sensitive to directional variations and multiple scales [15]. However, this approach is primarily effective for matching seismic images that contain a single geological feature, such as faults or domes, which limits its use in more complex scenarios with overlapping geological formations or variations in formation processes, as seen in subsurface models.

A structural similarity model (SDSS) inspired by the human visual system was developed to evaluate seismic sections [16]. This methodology allows for the quantitative comparison and analysis of two seismic sections based on energy intensity, contrast, and seismic reflection configuration similarity measures. The framework was applied to compare seismic sections before and after processing. However, this approach has notable limitations when applied to soft data checking. The SDSS model does not perform multiscale analysis, as it primarily focuses on localized features, and may therefore fail to capture the larger-scale patterns that are critical in subsurface modeling.

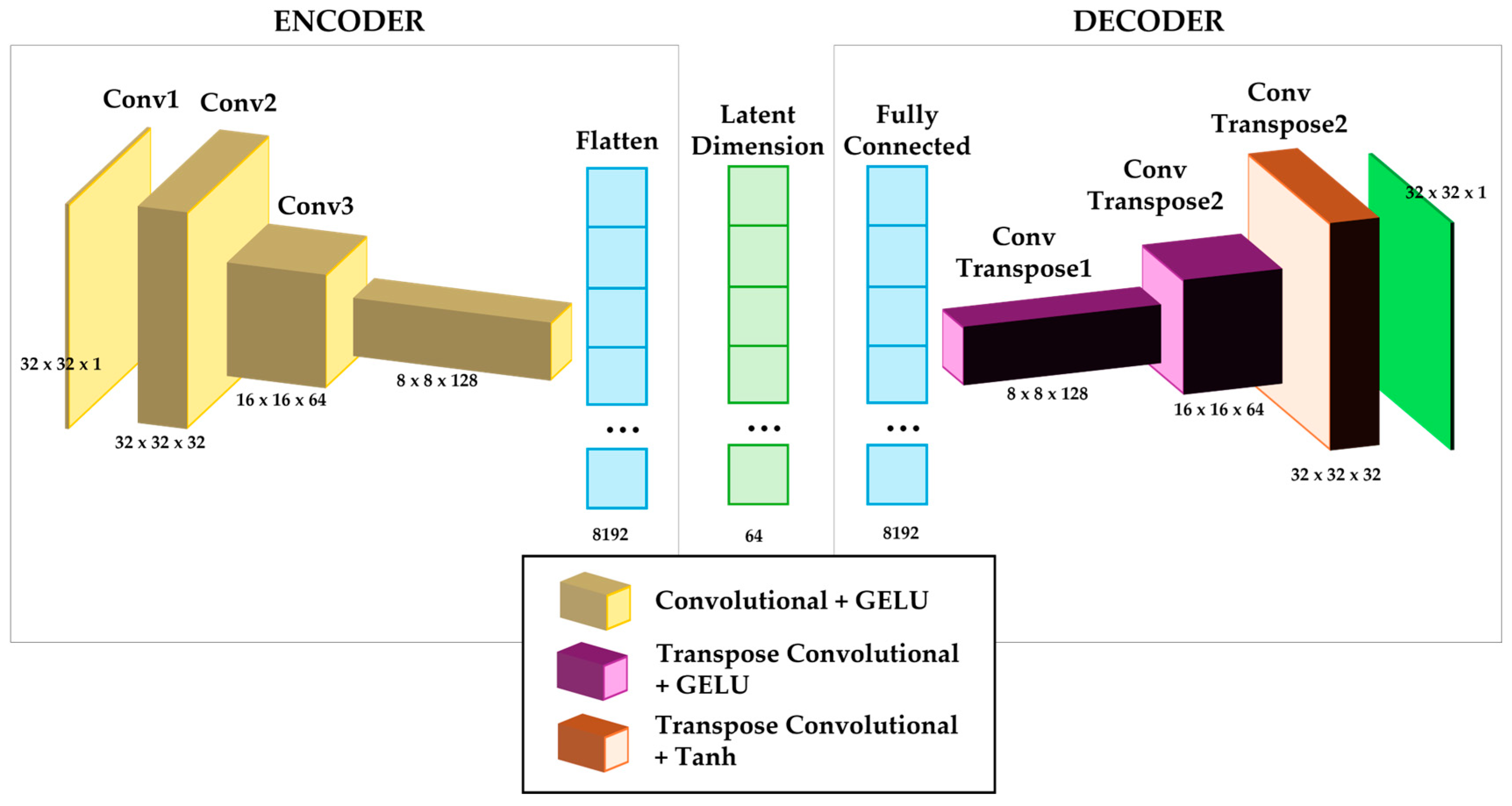

Advances in machine learning, particularly in generative AI (GenAI), offer new opportunities in subsurface modeling applications. For example, autoencoders (AE) are a type of artificial neural network designed for unsupervised learning and are particularly useful for feature extraction and dimensionality reduction [17,18]. An AE consists of an encoder that compresses input data into a lower-dimensional representation, known as an embedding or latent representation, and a decoder that reconstructs the original data from this compressed form. During training, the AE learns to minimize the difference between the input and the reconstructed output. The quality of the reconstruction depends largely on the quality of the learned embedding, which serves as a feature-rich summary of the original data. The space in which these embeddings exist is referred to as the latent space, a structured, continuous domain where similar inputs are mapped closer together based on their essential features. By learning a compact, feature-rich representation, an AE captures the most salient structural and spatial characteristics of images while filtering out redundant or noisy information [19,20].
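A minimal autoencoder sketch follows, assuming flattened image slices and using only NumPy: a dense tanh encoder, a linear decoder, and full-batch gradient descent on the mean squared reconstruction error. It illustrates the encoder, decoder, and embedding structure described above; a practical model for seismic slices would more likely be convolutional and built in a deep learning framework. The class name, layer sizes, learning rate, and random placeholder data are all assumptions.

```python
import numpy as np


class TinyAutoencoder:
    """One-hidden-layer autoencoder: tanh encoder, linear decoder, full-batch gradient descent."""

    def __init__(self, n_inputs, n_latent, seed=0):
        rng = np.random.default_rng(seed)
        self.w_enc = rng.normal(0.0, 0.1, (n_inputs, n_latent))
        self.b_enc = np.zeros(n_latent)
        self.w_dec = rng.normal(0.0, 0.1, (n_latent, n_inputs))
        self.b_dec = np.zeros(n_inputs)

    def encode(self, x):
        """Map flattened images (n_samples, n_inputs) to embeddings (n_samples, n_latent)."""
        return np.tanh(x @ self.w_enc + self.b_enc)

    def decode(self, z):
        """Reconstruct flattened images from embeddings."""
        return z @ self.w_dec + self.b_dec

    def fit(self, x, epochs=300, lr=0.5):
        """Minimize the mean squared reconstruction error; returns the loss per epoch."""
        losses = []
        for _ in range(epochs):
            z = self.encode(x)
            err = self.decode(z) - x
            losses.append(float(np.mean(err ** 2)))
            # Backpropagate d(loss)/d(reconstruction) through the decoder and encoder.
            d_out = 2.0 * err / err.size
            d_pre = (d_out @ self.w_dec.T) * (1.0 - z ** 2)  # tanh'(a) = 1 - tanh(a)^2
            self.w_dec -= lr * (z.T @ d_out)
            self.b_dec -= lr * d_out.sum(axis=0)
            self.w_enc -= lr * (x.T @ d_pre)
            self.b_enc -= lr * d_pre.sum(axis=0)
        return losses


rng = np.random.default_rng(1)
slices = rng.random((64, 8, 8))      # placeholder for 64 soft data slices of 8 x 8 pixels
x = slices.reshape(len(slices), -1)  # flatten each slice to a 64-element vector

ae = TinyAutoencoder(n_inputs=x.shape[1], n_latent=4)
losses = ae.fit(x)
embeddings = ae.encode(x)
print(embeddings.shape)  # (64, 4)
print(losses[0], losses[-1])
```

Only the encoder is needed after training: its output is the embedding that the later workflow steps compare.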

We propose a GenAI-supported workflow that uses an AE for quantitative soft data checking. This method does not rely on by-pixel statistics and is robust for comparing high-level structures when subsurface models contain geological patterns of varying scale and location. Our workflow transforms 2D slices from soft data and subsurface model realizations into latent representations, enabling their comparison in latent space. By computing the principal components of these embeddings, we can visualize the relative positioning of soft data and subsurface model realizations. A distance metric is computed in this low-dimensional space to provide a quantitative measure of the similarity between subsurface models and soft data, complementing the visual analysis.

In the next section, we provide a detailed explanation of our proposed workflow. Following this, we present a case study in which soft data (3D seismic data) is compared to different subsurface models to demonstrate soft data checking with visualization and a distance metric.

2. Methodology

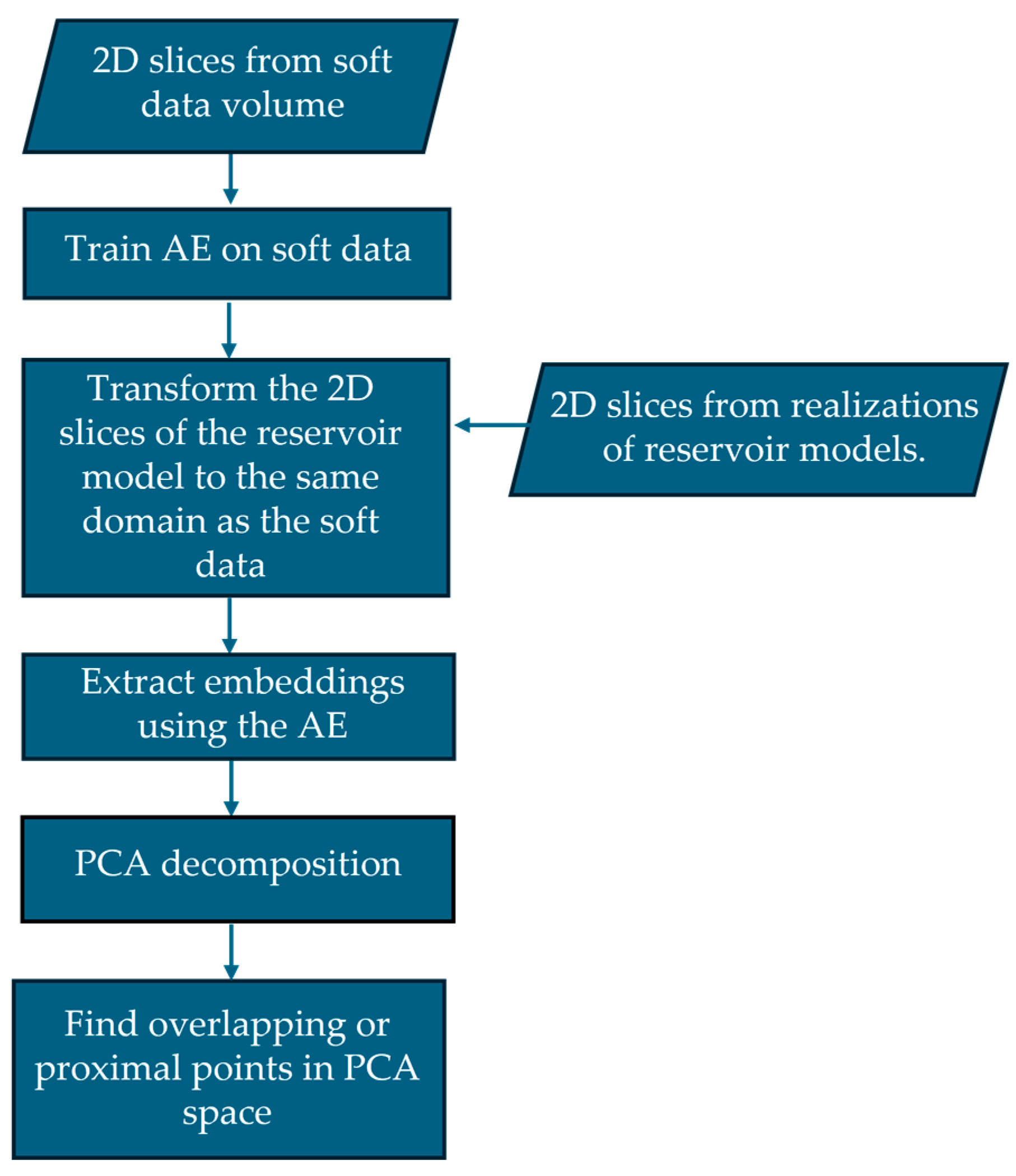

In this section, we describe our proposed workflow and its steps (Figure 1):

Extract 2D slices from the soft data 3D volume. If the data are already 2D, this step is not necessary;

Train the AE on the 2D soft data slices to calculate the mapping from soft data to latent space;

Extract 2D slices from subsurface model realizations;

Transform the 2D slices of the subsurface model to the same domain as the soft data, e.g., post-stack seismic, distribution transformations, etc.;

Apply the AE encoder to calculate latent space embeddings of both the soft data and the subsurface model;

Perform PCA decomposition on the latent space embeddings of both the soft data and the subsurface model realizations;

Find overlapping or proximal points in PCA space.

Depending on the source, soft data may already be available in a 2D format, for example, 2D seismic data, 2D gravimetric data, geological interpretations such as facies maps, remote sensing images, or outcrop photographs, which we refer to as 2D soft data slices. Multiple 2D soft data slices are required for effective AE training; therefore, image augmentation or additional analog images may be included.

If the soft data is provided in a 3D format, the volume is segmented into 2D soft data slices for effective AE training. It is advisable to orient these slices along the direction exhibiting the greatest heterogeneity, thereby enabling the AE to learn from the most informative variations. Additionally, the slicing should be dense and systematic to maximize the number of available training images.
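Slicing a 3D volume is a simple array operation. The sketch below, assuming the volume is a NumPy array, moves the chosen axis to the front and takes every `step`-th slice. The `most_heterogeneous_axis` helper is a hypothetical heuristic for the orientation advice above (it scores each axis by the mean within-slice variance); it is not part of the described workflow, and other heterogeneity measures may be more appropriate.

```python
import numpy as np


def extract_slices(volume, axis, step=1):
    """Return 2D slices of a 3D array, one every `step` indices along `axis`."""
    volume = np.asarray(volume)
    if volume.ndim != 3:
        raise ValueError("expected a 3D volume")
    return np.moveaxis(volume, axis, 0)[::step]


def most_heterogeneous_axis(volume):
    """Hypothetical heuristic: slicing axis whose slices have the largest mean within-slice variance."""
    volume = np.asarray(volume, dtype=float)
    scores = [np.moveaxis(volume, axis, 0).var(axis=(1, 2)).mean() for axis in range(3)]
    return int(np.argmax(scores))


# Hypothetical layered volume (depth, inline, crossline): values change only with depth.
vol = np.arange(20, dtype=float)[:, None, None] * np.ones((20, 30, 40))

axis = most_heterogeneous_axis(vol)  # vertical sections (axis 1 or 2), not depth slices
slices = extract_slices(vol, axis, step=2)
print(axis, slices.shape)
```

For this layered toy volume, depth slices are constant and carry no information, whereas vertical sections capture the full layering, so the heuristic avoids axis 0.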

The AE is then trained on the 2D soft data slices to learn an efficient latent space representation. Training the AE on 2D slices instead of the full 3D soft data volume offers three key advantages. First, it dramatically reduces the computational and storage cost of training the AE model. Second, it increases the flexibility of the workflow, as soft data may already exist in a 2D format. Third, the AE requires a large number of training images to learn meaningful lower-dimensional embeddings; if the soft data consists of only a single 3D volume, there are too few training samples to properly train the AE. Slicing the volume into 2D images is a form of image augmentation, which improves the model's ability to capture patterns and generalize effectively. Additionally, the AE is trained only on the soft data, and not on the subsurface model realizations, to ensure that the generated latent space is tailored to the characteristics of the soft data. This allows the model to learn feature representations that accurately reflect the patterns and structures present in the soft data.

After training the AE, the 2D soft data slices used in training are encoded into a lower-dimensional latent representation. Each embedding is an x-dimensional vector (i.e., a vector comprising x rows), where x is the predefined latent space dimension. This dimension is selected to balance capturing sufficient geological features against retaining redundant information and overfitting: a smaller latent space may lose critical details, while a larger one may retain unnecessary noise. The size of the latent space is tuned using the reconstruction error for the original soft data and an inspection of the structure of the latent space.
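One way to sketch the reconstruction-error part of this tuning is to sweep candidate latent sizes and keep the smallest one whose error is close to the best. Retraining the AE for every size can be expensive, so the example below swaps in truncated SVD (the optimal linear encoder/decoder under squared error) as a cheap proxy. This substitution is an assumption for illustration; in the workflow, the errors would come from the trained AE itself. The tolerance `tol`, the helper names, and the synthetic data are hypothetical.

```python
import numpy as np


def reconstruction_error_curve(x, dims):
    """Mean squared reconstruction error of a rank-k linear encoder/decoder for each k in dims."""
    x = np.asarray(x, dtype=float)
    xc = x - x.mean(axis=0)
    _, _, vt = np.linalg.svd(xc, full_matrices=False)
    errors = {}
    for k in dims:
        basis = vt[:k]                  # top-k directions act as encoder and decoder weights
        x_hat = (xc @ basis.T) @ basis  # encode to k numbers, then decode
        errors[k] = float(np.mean((xc - x_hat) ** 2))
    return errors


def smallest_adequate_dim(errors, tol):
    """Smallest latent size whose error is within `tol` of the best error observed."""
    best = min(errors.values())
    return min(k for k, e in errors.items() if e <= best + tol)


# Hypothetical data: 200 flattened slices (64 pixels) driven by 3 underlying factors plus noise.
rng = np.random.default_rng(0)
x = rng.normal(size=(200, 3)) @ rng.normal(size=(3, 64)) + 0.01 * rng.normal(size=(200, 64))

errors = reconstruction_error_curve(x, dims=[1, 2, 3, 4, 8, 16])
print(errors)
print(smallest_adequate_dim(errors, tol=1e-3))  # expected to be 3 for this synthetic data
```

The error drops sharply until the latent size matches the number of underlying factors and then flattens; sizes beyond that point mostly encode noise, which mirrors the trade-off described above.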

Inspecting the structure of the latent space involves evaluating the model's ability to reconstruct the original data while preserving meaningful relationships among latent features, ensuring that similar soft data points remain close together. We recommend a feedback or iterative process to refine the selection of the latent space dimension. This approach also helps optimize computational efficiency by preventing the selection of an unnecessarily large latent space.

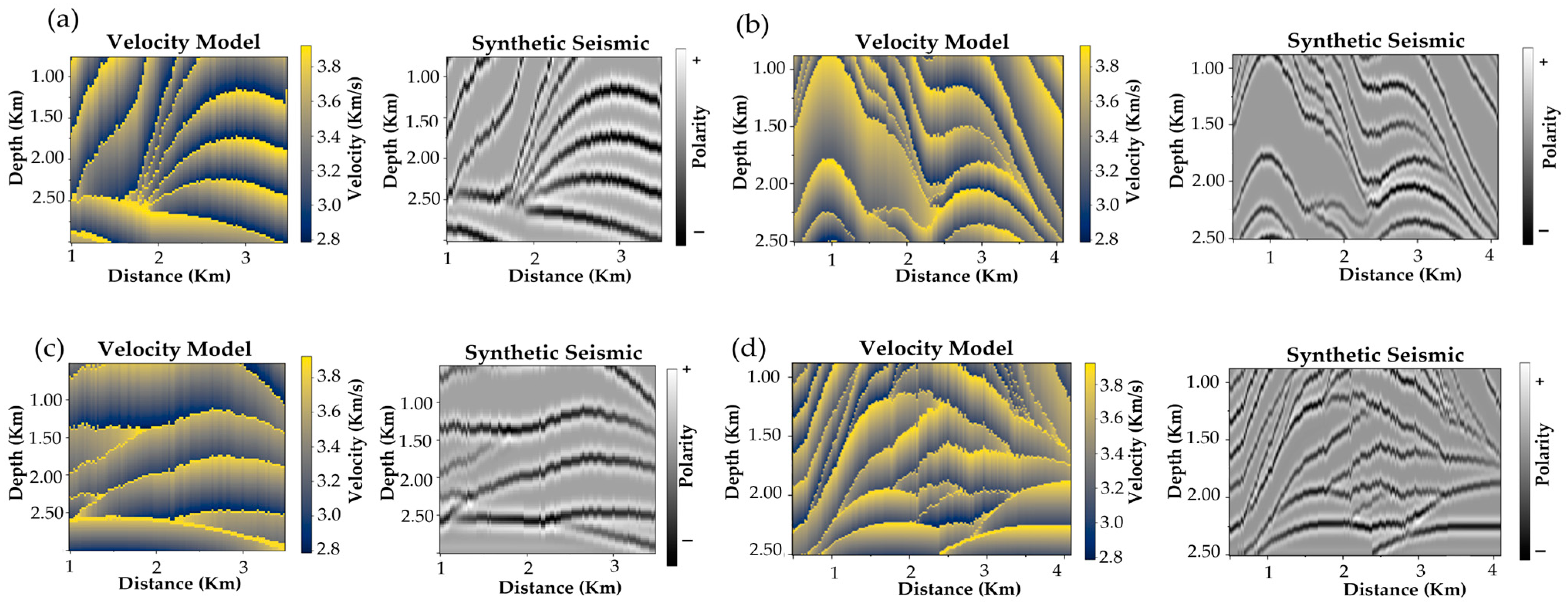

The 2D slices of the subsurface model realizations are transformed to the same domain as the soft data (referred to as 2D transformed slices). This ensures that the subsurface model data reside in the same domain as the soft data, facilitating effective encoding into the latent space of the autoencoder, and enhances consistency between the soft data and the subsurface models. For example, the image distribution may be transformed to the soft data distribution, or a physics-based forward model may be applied, such as a forward seismic transform in the case of seismic soft data.
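Two example domain transforms are sketched below under stated assumptions: a 1D convolutional forward model (normal-incidence reflection coefficients from an impedance section, convolved trace by trace with a Ricker wavelet) and a quantile mapping that imposes the soft data amplitude distribution on a model slice. Neither is claimed to be the transform used in this study; the function names, wavelet frequency, sample interval, and impedance and amplitude values are hypothetical.

```python
import numpy as np


def ricker(freq_hz, dt_s, half_length_s=0.064):
    """Zero-phase Ricker wavelet sampled every dt_s seconds."""
    t = np.arange(-half_length_s, half_length_s + dt_s / 2, dt_s)
    a = (np.pi * freq_hz * t) ** 2
    return (1.0 - 2.0 * a) * np.exp(-a)


def convolutional_seismic(impedance, wavelet):
    """1D convolutional forward model applied trace by trace (rows = time samples).

    Needs more time samples than wavelet samples so that mode="same" keeps the trace length.
    """
    z = np.asarray(impedance, dtype=float)
    refl = (z[1:] - z[:-1]) / (z[1:] + z[:-1])  # normal-incidence reflection coefficients
    traces = [np.convolve(refl[:, j], wavelet, mode="same") for j in range(z.shape[1])]
    return np.stack(traces, axis=1)


def match_distribution(source, reference):
    """Quantile mapping: give `source` the value distribution of `reference`, keeping rank order.

    Tied source values are ranked arbitrarily.
    """
    src = np.asarray(source, dtype=float)
    ranks = np.argsort(np.argsort(src, axis=None)).reshape(src.shape)
    quantiles = (ranks + 0.5) / src.size
    return np.quantile(np.asarray(reference, dtype=float), quantiles)


# Hypothetical impedance section: 200 time samples x 10 traces with one interface at sample 100.
impedance = np.full((200, 10), 5000.0)
impedance[100:] = 7000.0

synthetic = convolutional_seismic(impedance, ricker(freq_hz=25.0, dt_s=0.002))
print(synthetic.shape)   # (199, 10)
print(synthetic[99, 0])  # wavelet peak scaled by the reflection coefficient 1/6

rng = np.random.default_rng(0)
soft_amplitudes = rng.normal(0.0, 0.05, 5000)  # hypothetical soft data amplitude sample
mapped = match_distribution(synthetic, soft_amplitudes)
print(mapped.shape)      # (199, 10)
```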

The trained AE is applied to the 2D transformed slices of the subsurface models. The embeddings extracted in this step summarize the most significant attributes of the 2D slices from the subsurface model realizations in the latent space of the soft data.

The dimensionality of the latent space is further reduced with Principal Component Analysis (PCA) to facilitate the comparison of the latent space representations of the 2D soft data slices and the 2D transformed slices. PCA is a dimensionality reduction technique that applies an orthogonal transformation to project high-dimensional data onto a smaller number of dimensions while preserving as much of the original variance as possible. The AE-generated embeddings are reduced to two dimensions using PCA, which allows for clear visualization and an intuitive representation of the latent space. An additional advantage of PCA is that it allows new model realizations to be added dynamically. Once the autoencoder and PCA projection are trained, embeddings from any new model slices can be extracted and projected into the same PCA space without retraining or recomputing the entire dataset. This flexibility makes PCA particularly well suited for workflows that require iterative model evaluation. In contrast, other techniques such as Multidimensional Scaling (MDS) rely on pairwise similarity matrices that must be recalculated when new data are introduced, limiting their adaptability in this context.
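The fit-once, project-later property can be sketched with a small SVD-based PCA class: `fit` stores the mean and the two leading principal directions, and `transform` only centers and projects, so new realizations reuse the existing fit. The class name and the random embeddings are assumptions; scikit-learn's `sklearn.decomposition.PCA` offers the same `fit`/`transform` split.

```python
import numpy as np


class PCAProjector:
    """PCA via SVD with separate fit/transform so new realizations can be projected later."""

    def __init__(self, n_components=2):
        self.n_components = n_components

    def fit(self, embeddings):
        x = np.asarray(embeddings, dtype=float)
        self.mean_ = x.mean(axis=0)
        _, s, vt = np.linalg.svd(x - self.mean_, full_matrices=False)
        self.components_ = vt[: self.n_components]  # orthonormal principal directions
        variance = s ** 2 / (x.shape[0] - 1)
        self.explained_variance_ratio_ = variance[: self.n_components] / variance.sum()
        return self

    def transform(self, embeddings):
        """Project embeddings onto the fitted components; no refitting happens here."""
        return (np.asarray(embeddings, dtype=float) - self.mean_) @ self.components_.T


rng = np.random.default_rng(0)
soft_embeddings = rng.normal(size=(50, 16))    # hypothetical AE embeddings of soft data slices
model_embeddings = rng.normal(size=(300, 16))  # hypothetical embeddings of transformed model slices

pca = PCAProjector(n_components=2).fit(np.vstack([soft_embeddings, model_embeddings]))
soft_points = pca.transform(soft_embeddings)
model_points = pca.transform(model_embeddings)

# A new realization is projected with the existing fit, without recomputing anything else.
new_points = pca.transform(rng.normal(size=(40, 16)))
print(soft_points.shape, model_points.shape, new_points.shape)  # (50, 2) (300, 2) (40, 2)
```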

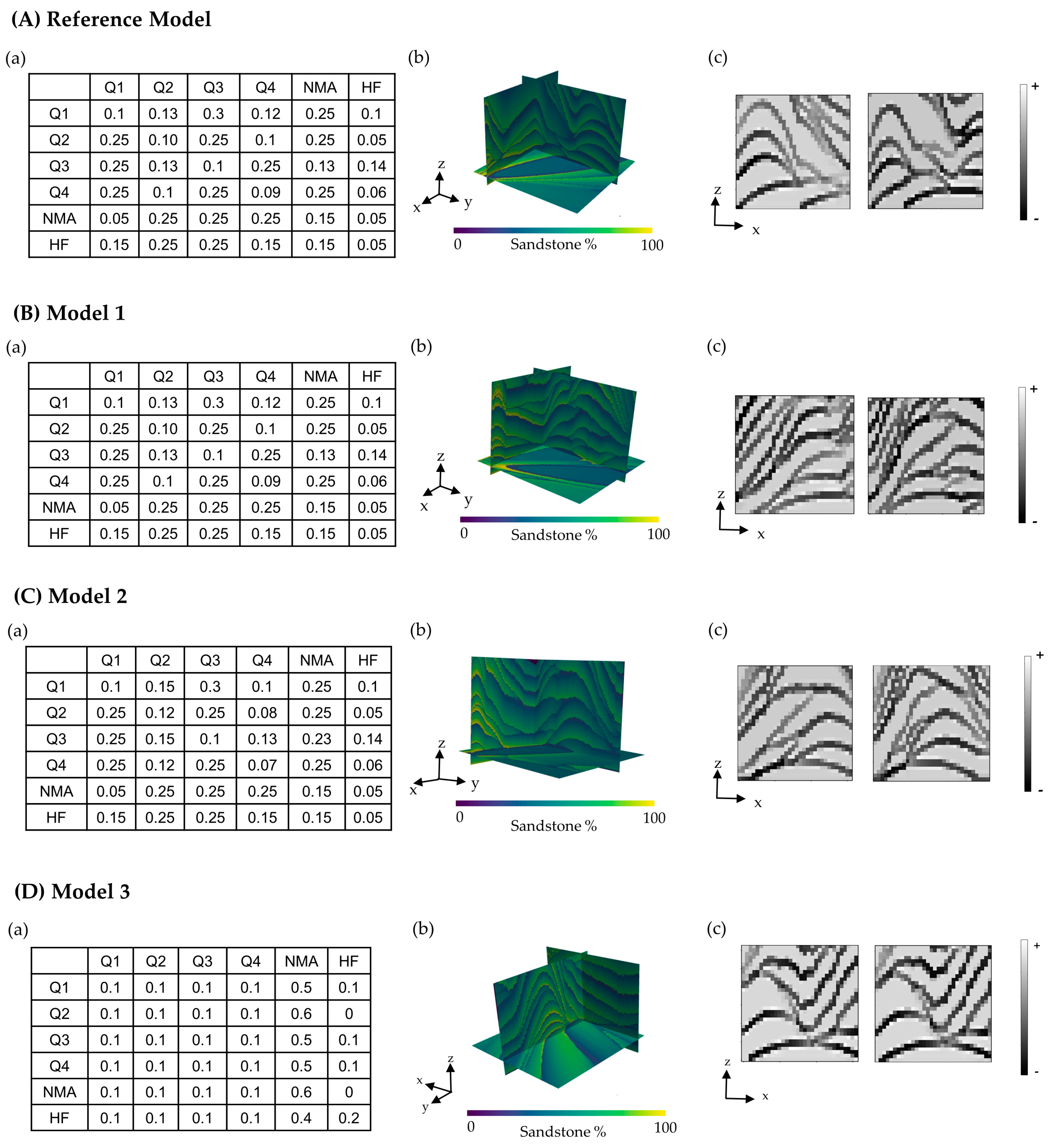

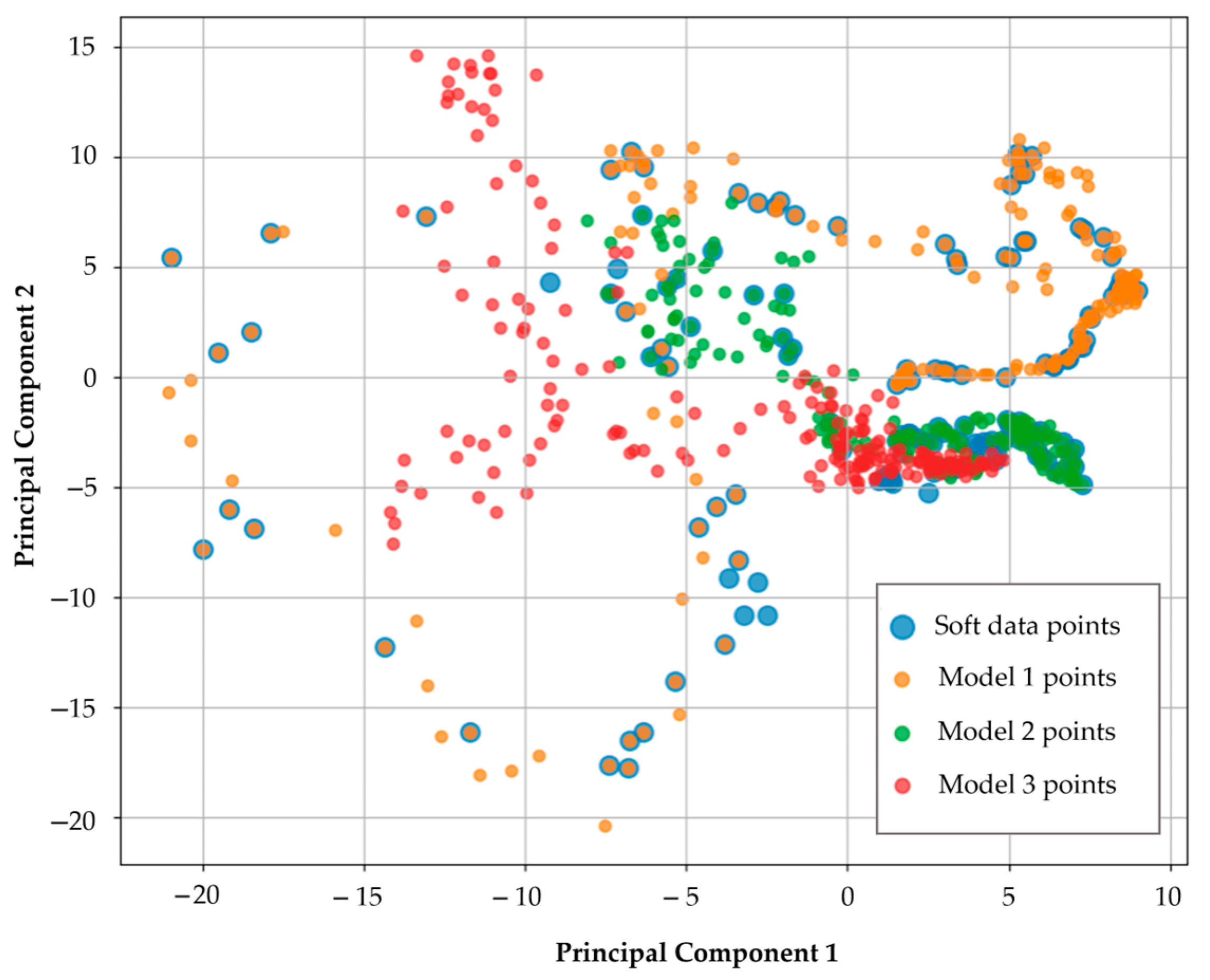

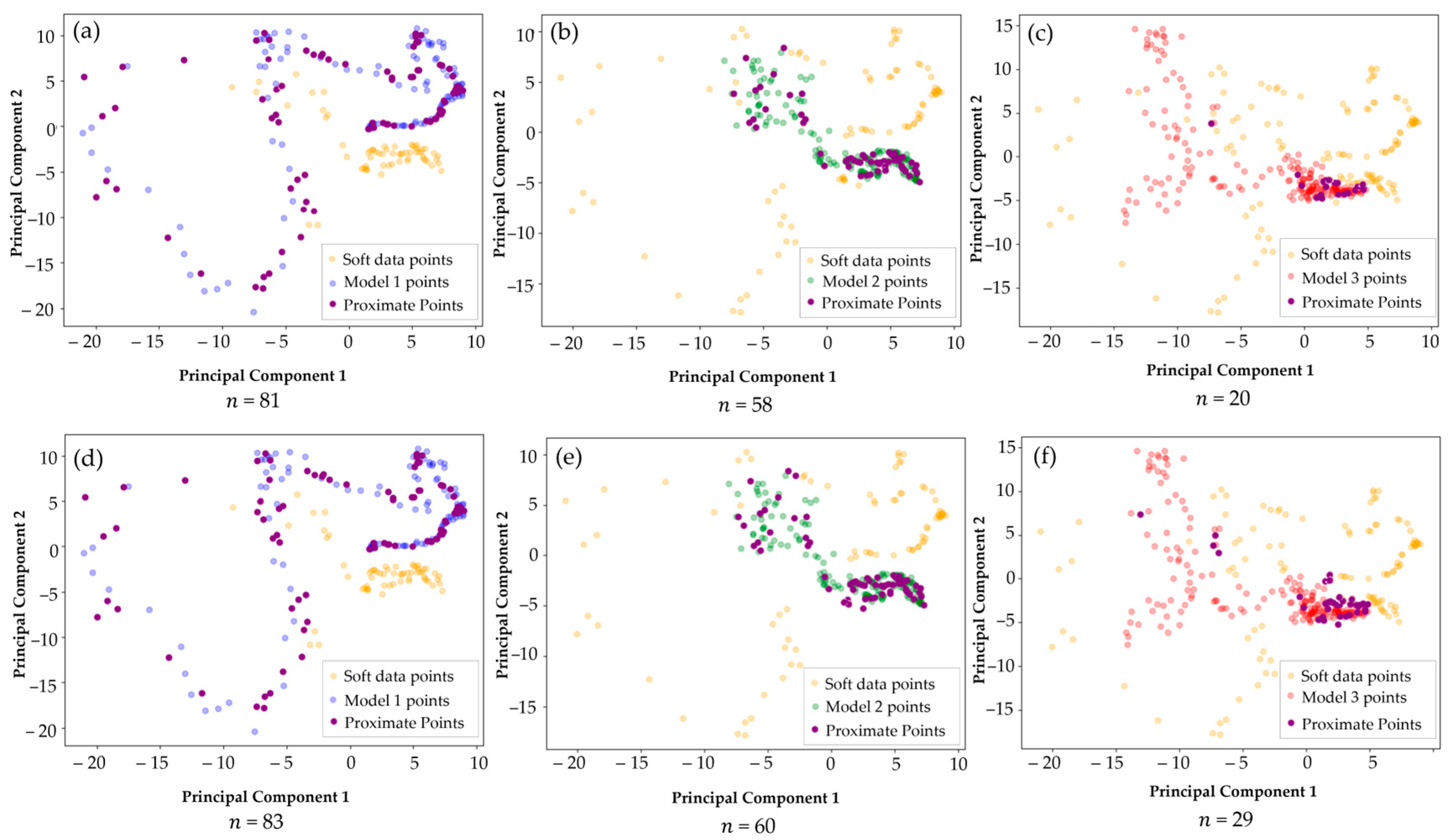

The resulting two-dimensional projections of latent space embeddings are plotted to visualize the relative positions of the embeddings. For clarity, the projections of the 2D soft data slices are referred to as soft data points, while the projections of the 2D transformed slices corresponding to the subsurface models are referred to as model points. Soft data points are expected to lie close to or among the model points that better honor the soft data. This spatial proximity reflects greater similarity in their latent representations and helps assess how well a model aligns with the seismic data, as PCA organizes data points based on shared variance and underlying patterns.

The visual analysis of the latent space serves as an initial step in soft data checking, providing an intuitive way to assess the relationship between soft data slices and subsurface model slices. However, soft data volumes may consist of hundreds of slices, and subsurface models may include hundreds of realizations, each similarly divided into hundreds of slices. The resulting number of data points can cause visual clutter in the 2D latent space, making it difficult to assess the proximity and alignment between the soft data and the models and to draw clear conclusions.

To identify overlapping or proximal points in the PCA-transformed latent space using a quantitative method, the Euclidean distance between soft data points and model points for each subsurface model realization is calculated. This approach quantifies the similarity between the seismic and synthetic seismic embeddings by measuring their spatial proximity in the 2D PCA-transformed latent space.

The soft data points are denoted as $\mathbf{s}_i$ for $i = 1, \dots, n_s$, and the model points as $\mathbf{m}_j$ for $j = 1, \dots, n_m$. The Euclidean distance is computed for each pair of points,

$$d_{ij} = \lVert \mathbf{s}_i - \mathbf{m}_j \rVert_2 = \sqrt{(s_{i,1} - m_{j,1})^2 + (s_{i,2} - m_{j,2})^2}$$

This distance calculation is applied across all soft data-model pairs. In addition, an indicator transform is employed to identify pairs of points that satisfy a predefined proximity criterion, defined by an indicator function $I(d_{ij})$ defined as

$$I(d_{ij}) = \begin{cases} 1, & d_{ij} \le \delta \\ 0, & d_{ij} > \delta \end{cases}$$

where $\delta$ is the proximity threshold, and 1 indicates proximate and 0 not. For each subsurface model realization, the number of proximate or overlapping points, denoted as $N_p$, is determined by counting all soft data-model point pairs that satisfy the proximity condition (i.e., where $I(d_{ij}) = 1$):

$$N_p = \sum_{i=1}^{n_s} \sum_{j=1}^{n_m} I(d_{ij})$$

This count serves as a quantitative measure of similarity; a higher $N_p$ indicates a greater alignment between the soft data and the subsurface models, since more soft data points are in close proximity to the model points in the PCA-transformed latent space. This analysis enables both visual and quantitative evaluation of the degree to which subsurface models honor the soft data.
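The distance and indicator computation can be sketched with NumPy broadcasting, assuming the PCA-projected points are stored as arrays of shape (n, 2). The threshold comparison is taken as inclusive (distance <= threshold), which is an assumption, and the function names and toy points are hypothetical.

```python
import numpy as np


def proximity_count(soft_points, model_points, threshold):
    """Count soft data-model point pairs whose Euclidean distance is <= threshold."""
    s = np.asarray(soft_points, dtype=float)[:, None, :]   # (n_soft, 1, 2)
    m = np.asarray(model_points, dtype=float)[None, :, :]  # (1, n_model, 2)
    distances = np.sqrt(np.sum((s - m) ** 2, axis=-1))     # (n_soft, n_model) pairwise distances
    indicator = distances <= threshold                     # True = proximate, False = not
    return int(np.count_nonzero(indicator))


def rank_realizations(soft_points, model_points_by_realization, threshold):
    """Sort realizations from most to fewest proximate pairs."""
    counts = {
        name: proximity_count(soft_points, points, threshold)
        for name, points in model_points_by_realization.items()
    }
    return sorted(counts.items(), key=lambda item: item[1], reverse=True)


soft = np.array([[0.0, 0.0], [1.0, 0.0]])
realizations = {
    "realization_A": np.array([[0.0, 0.1], [1.0, 0.2], [5.0, 5.0]]),
    "realization_B": np.array([[3.0, 3.0], [4.0, 4.0]]),
}
print(rank_realizations(soft, realizations, threshold=0.5))
# [('realization_A', 2), ('realization_B', 0)]
```

The full distance matrix has n_soft x n_model entries, which is manageable for hundreds of slices per realization; for much larger sets, a spatial index could replace the dense matrix.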

4. Conclusions

We introduced a novel workflow for soft data checking that quantifies the similarity between soft data and subsurface model realizations within a low-dimensional latent space. By training an autoencoder on soft data, the workflow generates embeddings that capture the essential structural and visual characteristics of the soft data. These embeddings form the basis for quantitatively comparing subsurface models to soft data using techniques such as PCA for dimensionality reduction and Euclidean distance for proximity analysis.

The results demonstrate the effectiveness of this workflow in assessing the ability of subsurface models to honor soft data, with models better conditioned to the seismic data scoring higher via quantification of proximity in the latent space. This approach not only fulfills the need for quantitative soft data checking but also provides a robust framework for evaluating the consistency between soft data and subsurface models, for example, geophysical data and subsurface models for hydrocarbons, gravity surveys and ore bodies, and land use maps and groundwater aquifers.

The proposed workflow is limited by its reliance on large datasets (e.g., more than 100 training images), which requires slicing 3D data into 2D images or applying image augmentation, for example, generating additional consistent images through simulations or generative AI, to train the autoencoder effectively. Future work could extend the proposed workflow to situations with limited data, for example, a single 2D seismic slice or a few geologic profiles.