DAEF-YOLO Model for Individual and Behavior Recognition of Sanhua Geese in Precision Farming Applications

Simple Summary

Abstract

1. Introduction

- (1)

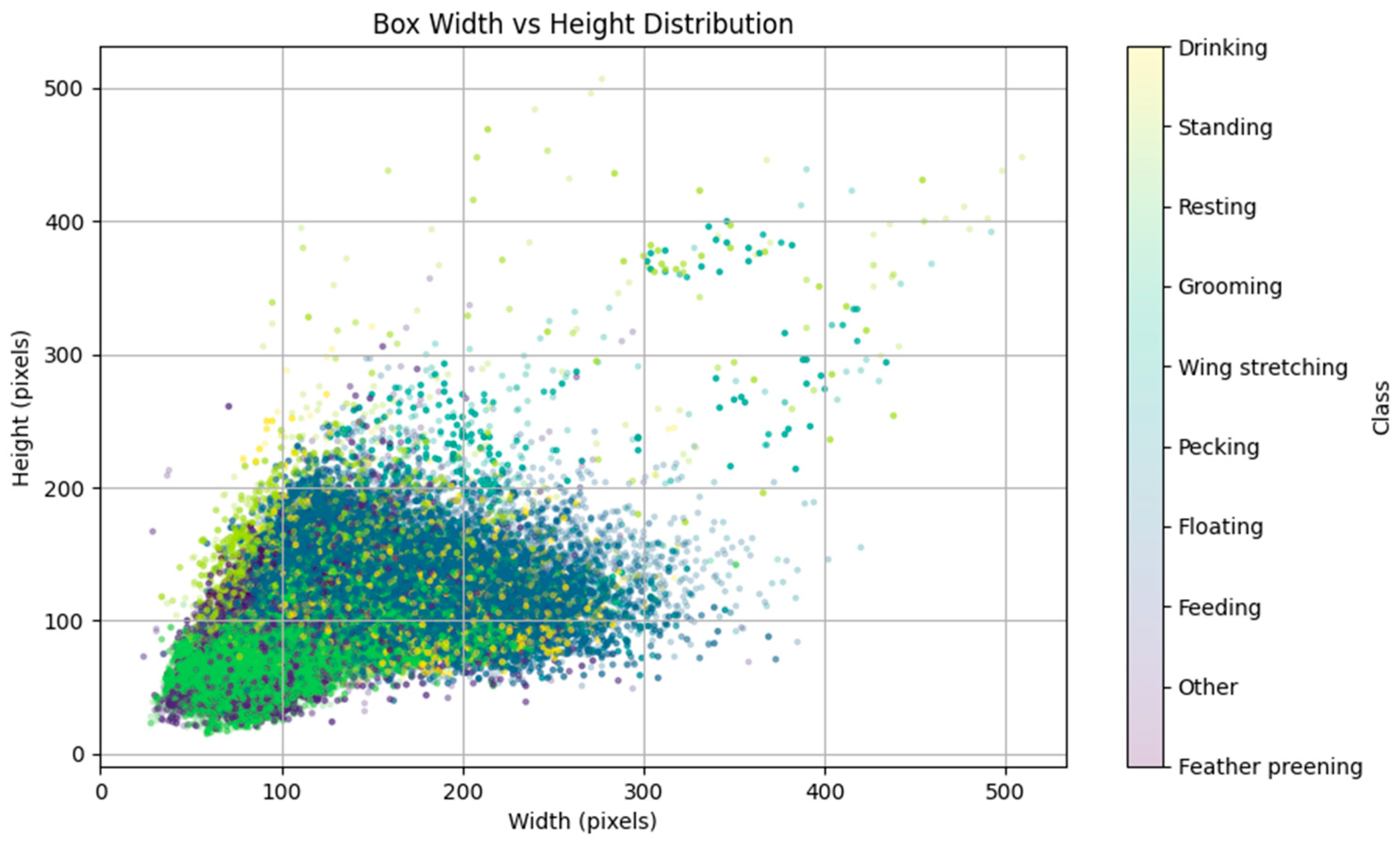

- Dedicated goose dataset: We constructed a high-quality dataset comprising multi-scale images of Sanhua goose individuals and behaviors captured under realistic and complex farming conditions. The dataset includes ten behavior categories—Drinking, Feather Preening, Feeding, Floating, Grooming, Pecking, Resting, Standing, Wing Stretching, and Other—serving as a robust foundation for multi-task model training and extending the behavioral taxonomy of geese in current research.

- (2) Multi-task recognition strategy: We propose a generalizable classification framework that introduces an “Other” category as a complementary class within a clearly defined multi-behavior system. This ensures that ambiguous or undefined behaviors are properly categorized, allowing for complete and simultaneous recognition of all individuals and behaviors. The strategy maintains individual recognition performance comparable to single-class detection while providing strong transferability and scalability for other species and scenarios, thereby supporting intelligent livestock management.

- (3)

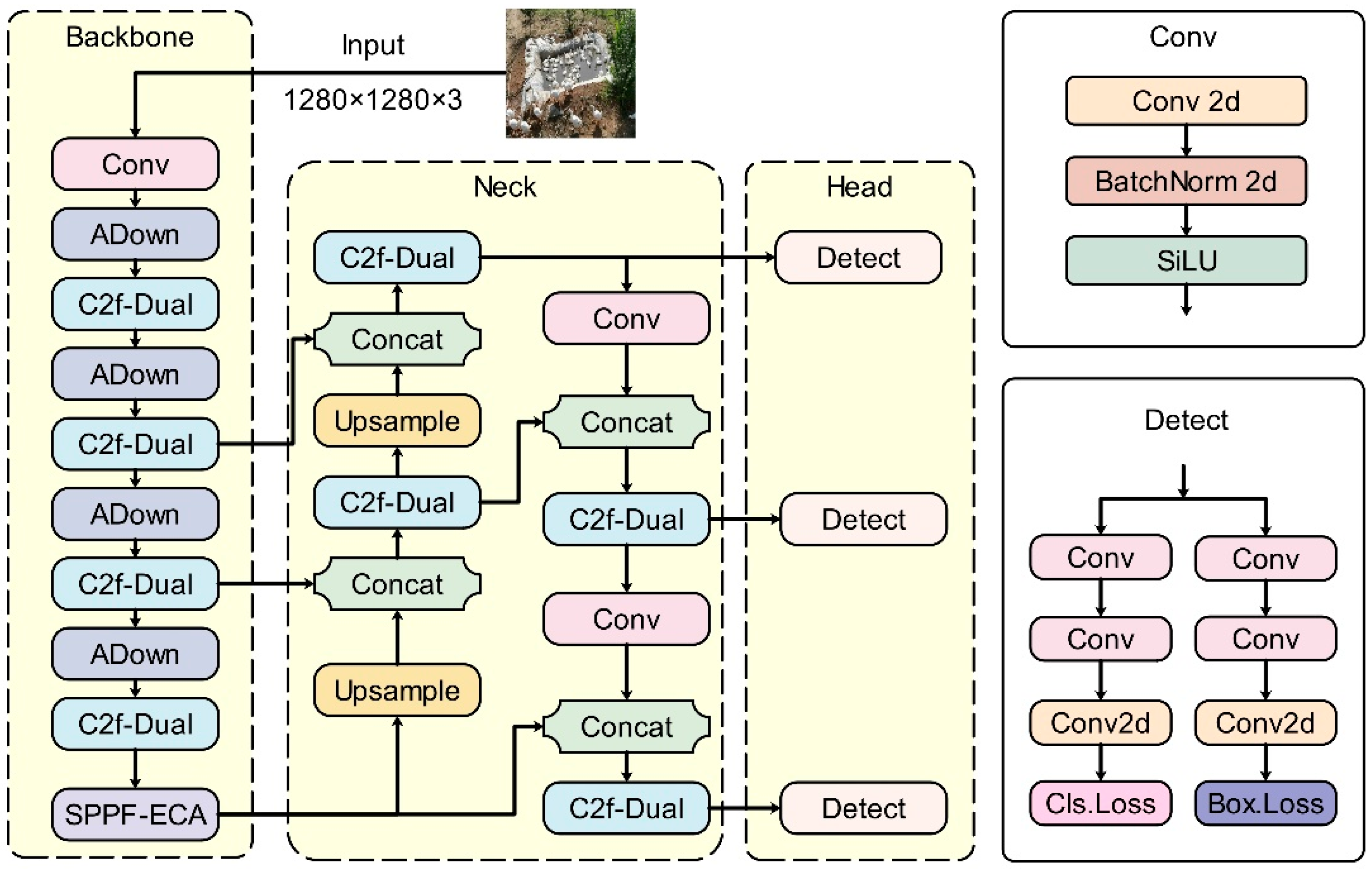

- Improved DAEF-YOLO architecture: Based on the YOLOv8s backbone, we implement targeted structural optimizations for multi-task scenarios. The DualConv-enhanced C2f module improves multi-scale feature extraction [36]; ECA within the SPPF module enhances channel interaction with minimal parameter cost [37]; the ADown module preserves information during downsampling [30], and the FocalerIoU loss improves bounding-box regression accuracy under complex backgrounds [38]. This integrated architecture achieves significant accuracy gains while retaining lightweight and real-time performance characteristics.
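To illustrate why ECA adds so few parameters, the following is a minimal sketch of the adaptive kernel-size rule from the ECA-Net paper [37], with the usual defaults `gamma = 2` and `b = 1`. The channel counts and the squeeze-and-excitation comparison (`se_params`, reduction ratio 16) are illustrative assumptions and are not taken from this study.

```python
import math


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Kernel size of ECA's 1D convolution: truncate (log2(C) + b) / gamma,
    then bump even values up to the next odd number."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca_params(channels: int) -> int:
    # ECA uses a single bias-free 1D convolution shared by all channels,
    # so its parameter count equals the kernel size.
    return eca_kernel_size(channels)


def se_params(channels: int, reduction: int = 16) -> int:
    # Assumed comparison point: two bias-free fully connected layers
    # (C -> C/r -> C), as in a squeeze-and-excitation block.
    return 2 * channels * (channels // reduction)


if __name__ == "__main__":
    for c in (128, 256, 512):  # hypothetical channel widths
        print(c, eca_kernel_size(c), eca_params(c), se_params(c))
```

Under these assumptions the attention branch costs only a handful of weights regardless of channel width, which is consistent with the "minimal parameter cost" described above.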

2. Materials and Methods

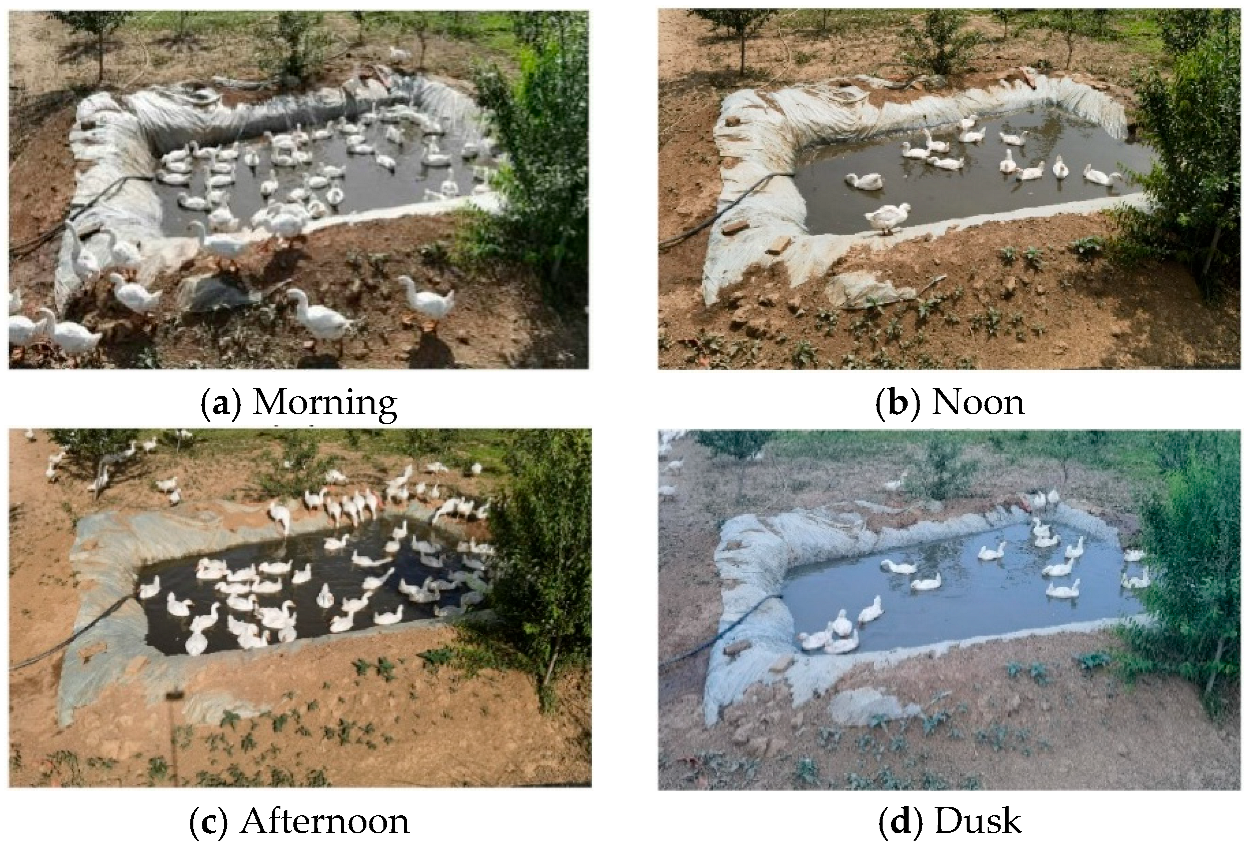

2.1. Image Acquisition and Dataset Construction

2.2. Data Augmentation

2.3. Ablation Protocol on the “Other” Complement Class

2.4. YOLOv8 Model and Performance Comparison

2.5. Construction of the Proposed DAEF-YOLO Model

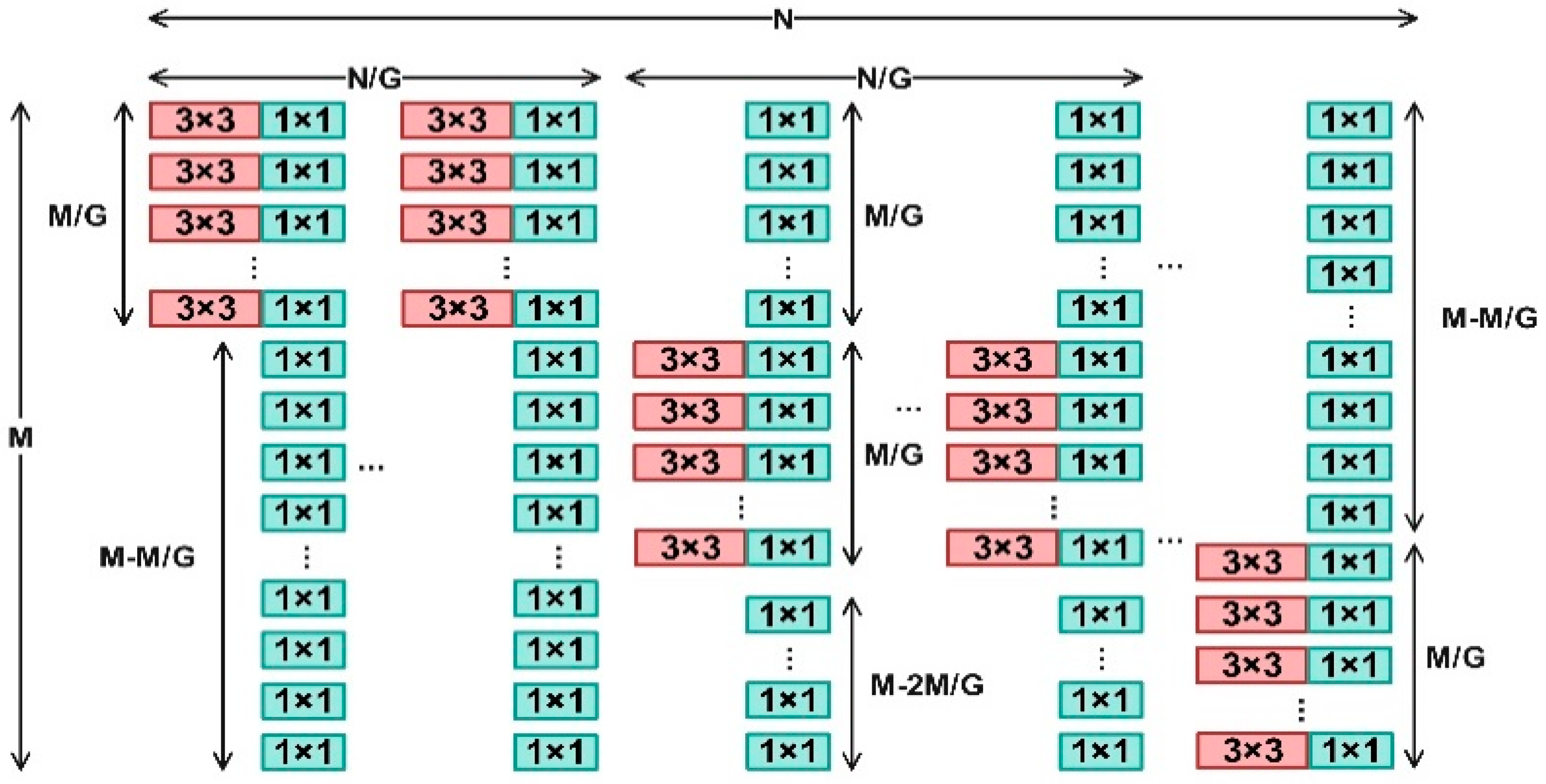

2.5.1. C2f-Dual Module Based on DualConv

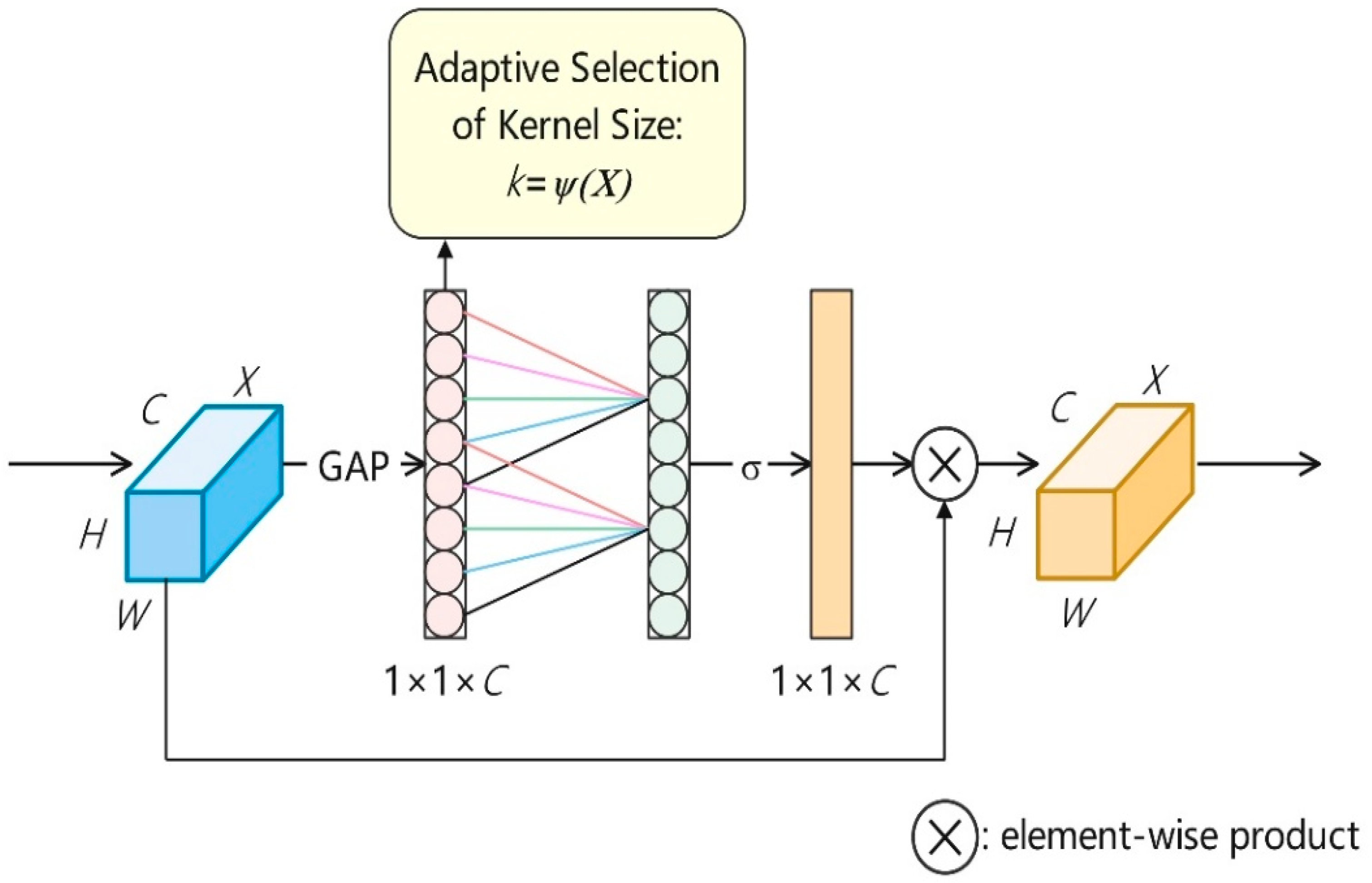

2.5.2. Improved SPPF Module with ECA

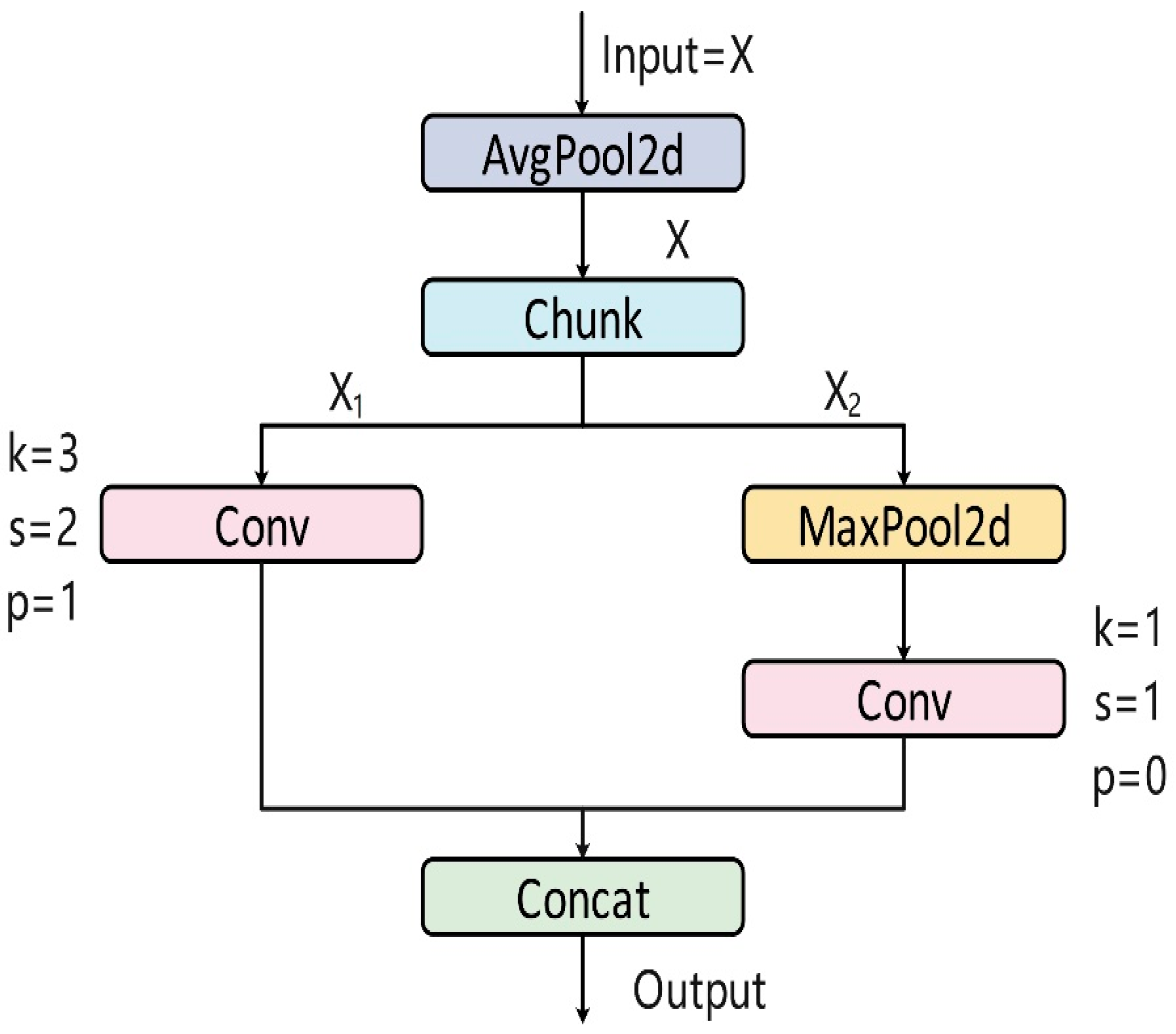

2.5.3. ADown Module for Downsampling

2.5.4. FocalerIoU Loss Function

3. Results and Analysis

3.1. Experimental Platform

3.2. Evaluation Indicators

3.3. Ablation Study on the Model’s Performance

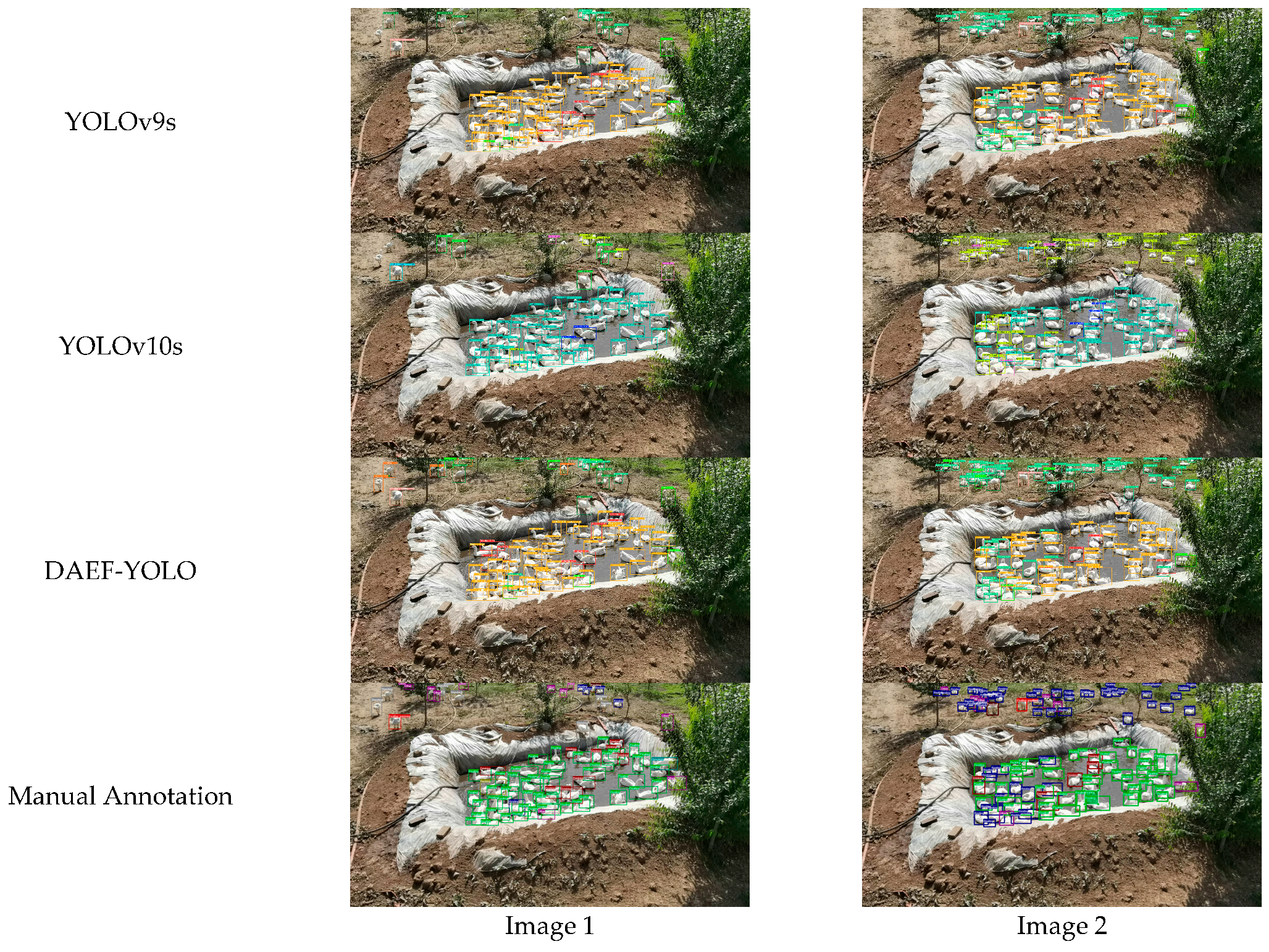

3.4. Comparative Experiments Between Different Models

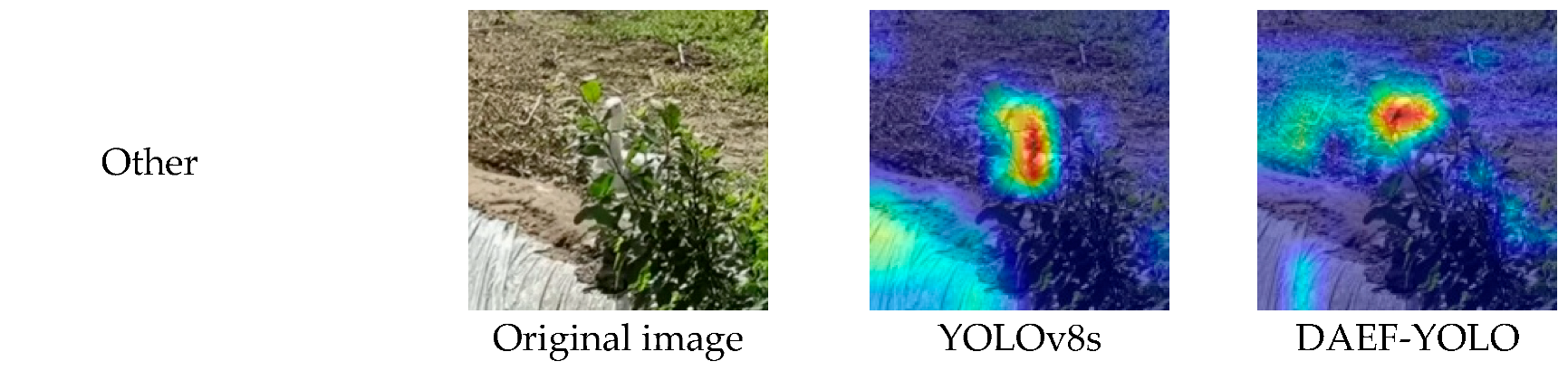

3.5. Heat Map Visualization Analysis

3.6. Ablation Study on the Effect of the “Other” Class

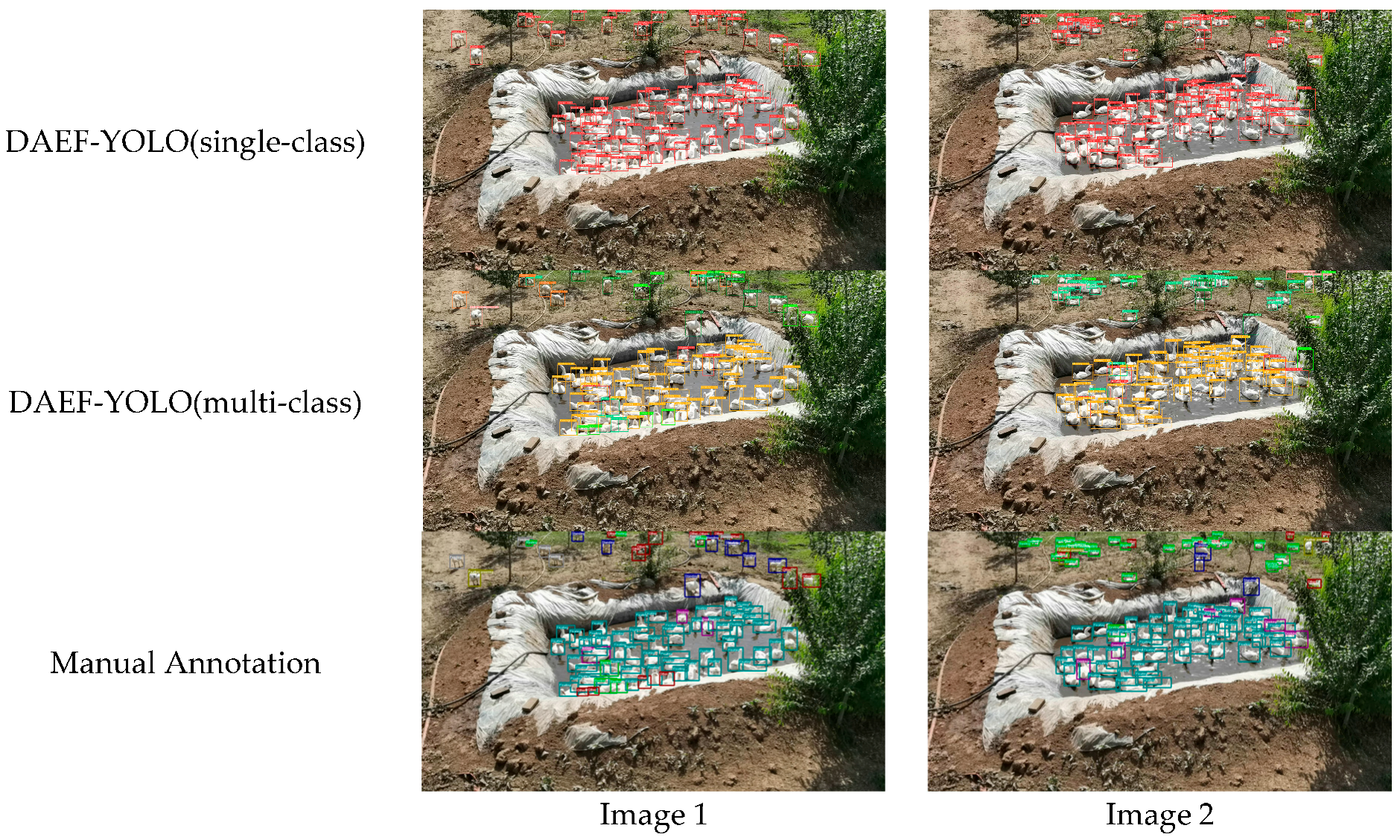

3.7. Multi-Task Capability: Individual Recognition Performance Under Different Annotation Strategies

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Berckmans, D. Precision livestock farming (PLF). Comput. Electron. Agric. 2008, 62, 1–7.

2. Bos, J.M.; Bovenkerk, B.; Feindt, P.H.; Van Dam, Y.K. The quantified animal: Precision livestock farming and the ethical implications of objectification. Food Ethics 2018, 2, 77–92.

3. Xiao, D.; Mao, Y.; Liu, Y.; Zhao, S.; Yan, Z.; Wang, W.; Xie, Q. Development and trend of industrialized poultry culture in China. J. South China Agric. Univ. 2023, 44, 1–12.

4. Chuang, C.; Chiang, C.; Chen, Y.; Lin, C.; Tsai, Y. Goose surface temperature monitoring system based on deep learning using visible and infrared thermal image integration. IEEE Access 2021, 9, 131203–131213.

5. Xiao, D.; Zeng, R.; Zhou, M.; Huang, Y.; Wang, W. Monitoring the vital behavior of Magang geese raised in flocks based on DH-YOLOX. Trans. Chin. Soc. Agric. Eng. 2023, 39, 142–149.

6. Liu, Y.; Cao, X.; Guo, B.; Chen, H.; Dai, Z.; Gong, C. Research on detection algorithm about the posture of meat goose in complex scene based on improved YOLO v5. J. Nanjing Agric. Univ. 2023, 46, 606–614.

7. Chen, C.; Zhu, W.; Norton, T. Behaviour recognition of pigs and cattle: Journey from computer vision to deep learning. Comput. Electron. Agric. 2021, 187, 106255.

8. Cheng, W.; Min, L.; Shuo, C. Individual identification of cattle using deep learning-based object detection in video footage. Comput. Electron. Agric. 2021, 187, 106258.

9. Aydin, A. Development of an early detection system for lameness of broilers using computer vision. Comput. Electron. Agric. 2017, 136, 140–146.

10. Li, Y.; Hao, Z.; Wang, J. Deep learning-based object detection for livestock monitoring: A review. Comput. Electron. Agric. 2021, 182, 105991.

11. Gao, Y.; Yan, K.; Dai, B.; Sun, H.; Yin, Y.; Liu, R.; Shen, W. Recognition of aggressive behavior of group-housed pigs based on CNN-GRU hybrid model with spatio-temporal attention mechanism. Comput. Electron. Agric. 2023, 205, 107606.

12. Fang, J.; Hu, Y.; Dai, B.; Wu, Z. Detection of group-housed pigs based on improved CenterNet model. Trans. Chin. Soc. Agric. Eng. 2021, 37, 136–144.

13. Cao, L.; Xiao, Z.; Liao, X.; Yao, Y.; Wu, K.; Mu, J.; Li, J.; Pu, H. Automated chicken counting in surveillance camera environments based on the point-supervision algorithm: LC-DenseFCN. Agriculture 2021, 11, 493.

14. Jiang, Y.; Li, L.; Zhang, F.; Zhang, W.; Yu, Q. Free range laying hens monitoring system based on improved MobileNet lightweight network. In Proceedings of the 2021 IEEE International Conference on Artificial Intelligence and Computer Applications (ICAICA), Dalian, China, 28–30 June 2021; pp. 487–494.

15. So-In, C.; Poolsanguan, S.; Rujirakul, K. A hybrid mobile environmental and population density management system for smart poultry farms. Comput. Electron. Agric. 2014, 109, 287–301.

16. Jin, Y. Research on Pig Quantity Detection Method Based on Machine Vision. Master’s Thesis, Jiangxi Agricultural University, Nanchang, China, 2021.

17. Huang, X. Individual Information Perception and Body Condition Score Assessment for Dairy Cow Based on Multi-Sensor. Master’s Thesis, University of Science and Technology of China, Hefei, China, 2020.

18. Shi, W.; Dai, B.; Shen, W.; Sun, Y.; Zhao, K.; Zhang, Y. Automatic estimation of dairy cow body condition score based on attention-guided 3D point cloud feature extraction. Comput. Electron. Agric. 2023, 206, 107666.

19. Chen, Z.; Zhang, X.; Fang, Y. Automatic counting of pigs in a pen using convolutional neural networks. Biosyst. Eng. 2020, 197, 105–116.

20. Meunier, B.; Pradel, P.; Sloth, K.H.; Cirié, C.; Delval, E.; Mialon, M.M.; Veissier, I. Image analysis to refine measurements of dairy cow behaviour from a real-time location system. Biosyst. Eng. 2017, 173, 32–44.

21. Yin, X.; Wu, D.; Shang, Y.; Jiang, B.; Song, H. Using an EfficientNet-LSTM for the recognition of single cow’s motion behaviours in a complicated environment. Comput. Electron. Agric. 2020, 177, 105707.

22. Yu, R.; Wei, X.; Liu, Y.; Yang, F.; Shen, W.; Gu, Z. Research on automatic recognition of dairy cow daily behaviors based on deep learning. Animals 2024, 14, 458.

23. Zheng, C.; Zhu, X.; Yang, X.; Wang, L.; Tu, S.; Xue, Y. Automatic recognition of lactating sow postures from depth images by deep learning detector. Comput. Electron. Agric. 2018, 147, 51–63.

24. Fernández, A.P.; Norton, T.; Tullo, E.; Hertem, T.v.; Youssef, A.; Exadaktylos, V.; Vranken, E.; Guarino, M.; Berckmans, D. Real-time monitoring of broiler flock’s welfare status using camera-based technology. Biosyst. Eng. 2018, 173, 103–114.

25. Kashiha, M.; Pluk, A.; Bahr, C.; Vranken, E.; Berckmans, D. Development of an early warning system for a broiler house using computer vision. Biosyst. Eng. 2013, 116, 36–45.

26. Ye, C.; Yu, Z.; Kang, R.; Yousaf, K.; Qi, C.; Chen, K.; Huang, Y. An experimental study of stunned state detection for broiler chickens using an improved convolution neural network algorithm. Comput. Electron. Agric. 2020, 170, 105284.

27. Li, J.; Su, H.; Li, J.; Xie, T.; Chen, Y.; Yuan, J.; Jiang, K.; Duan, X. SDSCNet: An instance segmentation network for efficient monitoring of goose breeding conditions. Appl. Intell. 2023, 53, 25435–25449.

28. Giannone, C.; Sahraeibelverdy, M.; Lamanna, M.; Cavallini, D.; Formigoni, A.; Tassinari, P.; Torreggiani, D.; Bovo, M. Automated dairy cow identification and feeding behaviour analysis using a computer vision model based on YOLOv8. Smart Agric. Technol. 2025, 12, 101304.

29. Terven, J.; Córdova-Esparza, D.M.; Romero-González, J.A. A comprehensive review of YOLO architectures in computer vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

30. Wang, C.; Yeh, I.; Liao, H. YOLOv9: Learning what you want to learn using programmable gradient information. arXiv 2024, arXiv:2402.13616.

31. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458.

32. Ni, J.; Shen, K.; Chen, Y.; Yang, S.X. An improved SSD-like deep network-based object detection method for indoor scenes. IEEE Trans. Instrum. Meas. 2023, 72, 582–591.

33. Chen, W.; Yu, Z.; Yang, C.; Lu, Y. Abnormal behavior recognition based on 3D dense connections. Int. J. Neural Syst. 2024, 34, 2450049.

34. Ni, J.; Wang, Y.; Tang, G.; Cao, W.; Yang, S.X. A lightweight GRU-based gesture recognition model for skeleton dynamic graphs. Multimed. Tools Appl. 2024, 83, 70545–70570.

35. Huo, R.; Chen, J.; Zhang, Y.; Gao, Q. 3D skeleton aware driver behavior recognition framework for autonomous driving system. Neurocomputing 2025, 613, 128743.

36. Zhong, J.; Chen, J.; Mian, A. DualConv: Dual convolutional kernels for lightweight deep neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 9528–9535.

37. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

38. Zhang, H.; Zhang, S. Focaler-IoU: More focused intersection over union loss. arXiv 2024, arXiv:2401.10525.

39. Everingham, M.; Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

40. Yu, J.; Jiang, Y.; Wang, Z.; Cao, Z.; Huang, T. UnitBox: An advanced object detection network. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 516–520.

41. Rezatofighi, S.H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.D.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

42. Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. Proc. AAAI Conf. Artif. Intell. 2020, 34, 12993–13000.

43. Qiu, Z.; Zhao, Z.; Chen, S.; Zeng, J.; Huang, Y.; Xiang, B. Application of an improved YOLOv5 algorithm in real-time detection of foreign objects by ground-penetrating radar. Remote Sens. 2022, 14, 1895.

44. Gevorgyan, Z. SIoU loss: More powerful learning for bounding box regression. arXiv 2022, arXiv:2205.12740.

45. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal loss for dense object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

46. Romero, A.E.; Campos, L.M.d. A probabilistic methodology for multilabel classification. Intell. Data Anal. 2013, 17, 687–706.

47. Abramovich, F.; Pensky, M. Classification with many classes: Challenges and pluses. J. Multivar. Anal. 2019, 174, 104536.

| Class | Definition | Instance |
|---|---|---|
| Drinking | Geese immerse their beaks in water to drink. |  |
| Feather preening | Geese contact their bodies with their beaks, often with their necks curved. |  |
| Feeding | Geese lower their heads to forage for food. |  |
| Floating | Geese float in water with their bodies relaxed. |  |
| Grooming | Geese immerse their necks in water and move them back and forth to clean themselves. |  |
| Pecking | One goose pecks at another goose with its beak. |  |
| Resting | Geese lie on the ground or float on water, with their necks resting on their backs. |  |
| Standing | Geese maintain a standing posture or are walking. |  |
| Wing stretching | Geese spread their wings, either to maintain balance or stretch their muscles. |  |
| Other | Postures that cannot be clearly classified into the above nine behaviors, serving as their complement. |  |

| Class | Train set | Val set | Test set | Total |
|---|---|---|---|---|
| Drinking | 2701 | 902 | 953 | 4556 |
| Feather preening | 1360 | 445 | 479 | 2284 |
| Feeding | 1018 | 313 | 293 | 1624 |
| Floating | 24,893 | 8571 | 8580 | 42,044 |
| Grooming | 673 | 226 | 229 | 1128 |
| Pecking | 1172 | 396 | 392 | 1960 |
| Resting | 24,478 | 8355 | 8715 | 41,548 |
| Standing | 2535 | 866 | 875 | 4276 |
| Wing stretching | 570 | 162 | 184 | 916 |
| Other | 5682 | 1888 | 1982 | 9552 |
| All | 65,082 | 22,124 | 22,682 | 109,888 |
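The split proportions and the degree of class imbalance implied by this table can be checked with a few lines of arithmetic. This is a minimal sketch that uses only the "Total" column and the "All" row; the variable names are illustrative.

```python
# Per-class totals and overall split sizes copied from the table above.
class_totals = {
    "Drinking": 4556, "Feather preening": 2284, "Feeding": 1624,
    "Floating": 42044, "Grooming": 1128, "Pecking": 1960,
    "Resting": 41548, "Standing": 4276, "Wing stretching": 916,
    "Other": 9552,
}
split_sizes = {"train": 65082, "val": 22124, "test": 22682}

n_total = sum(split_sizes.values())

# Fraction of all annotations that falls into each split.
split_fractions = {name: size / n_total for name, size in split_sizes.items()}

# Ratio between the most and least frequent behavior classes.
imbalance_ratio = max(class_totals.values()) / min(class_totals.values())

if __name__ == "__main__":
    print(n_total, sum(class_totals.values()))
    for name, frac in split_fractions.items():
        print(f"{name}: {frac:.3f}")
    print(f"largest/smallest class ratio: {imbalance_ratio:.1f}")
```

The totals agree across rows and columns, the split is close to 6:2:2, and the most frequent class (Floating) has roughly 46 times as many entries as the rarest (Wing stretching).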

| Model | Depth | Width | mAP@0.5 (%) | Model Size (MB) | Parameters | FPS (frames/s) |
|---|---|---|---|---|---|---|
| YOLOv8n | 0.33 | 0.25 | 86.01 | 6.4 | 3,012,798 | 97.9 |
| YOLOv8s | 0.33 | 0.50 | 91.51 | 22.7 | 11,139,454 | 74.3 |
| YOLOv8m | 0.67 | 0.75 | 95.12 | 52.2 | 25,862,110 | 28.6 |
| YOLOv8l | 1.00 | 1.00 | 96.04 | 87.8 | 43,637,550 | 26.9 |
| YOLOv8x | 1.00 | 1.25 | 96.33 | 136.0 | 68,162,238 | 22.2 |
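The depth and width multipliers in this table control how a shared base architecture is shrunk or enlarged. The following is a minimal sketch, assuming the scaling rule commonly used in Ultralytics-style YOLOv8 model parsing (repeat counts rounded after multiplying by depth, channel counts rounded up to a multiple of 8 after multiplying by width). The base layer of 256 channels with 6 repeats is hypothetical, and the per-scale channel cap applied by the real parser is omitted.

```python
import math


def make_divisible(x: float, divisor: int = 8) -> int:
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


def scale_layer(channels: int, repeats: int, depth: float, width: float):
    """Return (scaled_channels, scaled_repeats) for one block.

    Assumption: blocks with a single repeat are left unchanged, and
    repeated blocks keep at least one repeat after scaling.
    """
    scaled_repeats = max(round(repeats * depth), 1) if repeats > 1 else repeats
    scaled_channels = make_divisible(channels * width)
    return scaled_channels, scaled_repeats


if __name__ == "__main__":
    # Hypothetical base block: 256 output channels, 6 repeats.
    print("n:", scale_layer(256, 6, depth=0.33, width=0.25))
    print("s:", scale_layer(256, 6, depth=0.33, width=0.50))
    print("x:", scale_layer(256, 6, depth=1.00, width=1.25))
```

Under these assumptions the "s" variant keeps the shallow depth of "n" but doubles the channel width, which matches the jump in parameters and accuracy between the first two rows.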

| Module Variant | P (%) | R (%) | F1 (%) | mAP@0.5 (%) | Model Size (MB) | FLOPs (G) | Notes |
|---|---|---|---|---|---|---|---|
| Vanilla C2f (YOLOv8s) | 90.09 | 85.80 | 87.89 | 91.51 | 22.7 | 28.7 | Baseline |
| C2f-Dual (Position 1) | 90.91 | 85.76 | 88.26 | 92.04 | 24.8 | 31.5 | Replace C2f in backbone |
| C2f-Dual (Position 2) | 91.23 | 86.23 | 88.66 | 92.40 | 25.2 | 31.5 | Replace C2f in neck |
| C2f-Dual (Pos. 1 + 2) | 91.61 | 87.11 | 89.30 | 92.94 | 27.4 | 34.3 | Replace both |

| Loss Function | P (%) | R (%) | F1 (%) | mAP@0.5 (%) |
|---|---|---|---|---|
| CIoU (YOLOv8s) | 90.09 | 85.80 | 87.89 | 91.51 |
| DIoU | 91.71 | 85.42 | 88.45 | 92.10 |
| SIoU | 91.46 | 85.92 | 88.60 | 92.35 |
| GIoU | 91.00 | 86.29 | 88.58 | 92.42 |
| FocalerIoU | 91.54 | 87.13 | 89.28 | 93.26 |
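For readers unfamiliar with Focaler-IoU [38], the following minimal sketch shows its core idea: the plain IoU is linearly remapped onto an interval `[d, u]` so that the regression loss concentrates on a chosen range of sample difficulty. The thresholds used here (`d = 0.25`, `u = 0.75`) and the example boxes are illustrative assumptions, not the settings of this study, and the sketch uses the basic form `1 - IoU_focaler` rather than any combination with CIoU or other penalty terms.

```python
def iou_xyxy(box_a, box_b) -> float:
    """Plain IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def focaler_iou(iou: float, d: float, u: float) -> float:
    """Linear remap of IoU: 0 below d, 1 above u, (iou - d) / (u - d) between."""
    return min(1.0, max(0.0, (iou - d) / (u - d)))


def focaler_iou_loss(pred, target, d: float = 0.25, u: float = 0.75) -> float:
    # Basic Focaler-IoU loss; d and u are illustrative values.
    return 1.0 - focaler_iou(iou_xyxy(pred, target), d, u)


if __name__ == "__main__":
    pred, target = (0, 0, 10, 10), (0, 0, 10, 5)  # hypothetical boxes
    print(iou_xyxy(pred, target), focaler_iou_loss(pred, target))
```

Boxes whose IoU already exceeds `u` contribute zero loss, while boxes below `d` all receive the maximum loss, so the choice of interval determines which samples drive the gradient.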

| Configuration Item | Value |
|---|---|
| Input image size | 1280 × 1280 × 3 pixels |
| CPU | AMD EPYC 7542, 32-core processor |
| GPU | 2 × NVIDIA GeForce RTX 4090 (24 GB memory per GPU) |
| RAM | 128 GB |
| Operating system | Ubuntu 20.04.6 |
| Programming language | Python 3.11 |
| Framework | PyTorch 2.2.1 |
| CUDA version | 12.1.1 |
| Optimizer | Stochastic Gradient Descent (SGD) |
| Initial learning rate | 0.01 |
| Momentum | 0.937 |
| Weight decay | 0.0005 |
| Batch size | 8 |
| Epochs | 100 |
| LR schedule | Step schedule with linear warmup (3 epochs) |
| EMA | Not used |
| Early stopping | Not used (patience = 100) |
| Mixed precision | Disabled (FP32 training) |
| Training time per epoch | 1.42 min (DAEF-YOLO) |
| Throughput | 9.73 img/s (DAEF-YOLO) |

| Model | C2f-Dual | SPPF-ECA | FocalerIoU | ADown | P (%) | R (%) | F1 (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Model Size (MB) | FLOPs (G) | FPS (frames/s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv8s | × | × | × | × | 90.09 | 85.80 | 87.89 | 91.51 | 69.91 | 22.7 | 28.7 | 74.3 |
| A | √ | × | × | × | 91.61 | 87.11 | 89.30 | 92.94 | 71.16 | 27.4 | 34.3 | 74.9 |
| B | × | √ | × | × | 92.26 | 87.17 | 89.64 | 92.95 | 71.03 | 27.9 | 30.8 | 87.7 |
| C | × | × | √ | × | 91.54 | 87.13 | 89.28 | 93.26 | 71.32 | 22.7 | 28.7 | 89.2 |
| D | √ | √ | × | × | 92.35 | 86.62 | 89.39 | 92.95 | 71.40 | 32.6 | 36.5 | 69.9 |
| E | √ | × | √ | × | 90.73 | 88.96 | 89.84 | 93.80 | 71.79 | 27.4 | 34.4 | 78.7 |
| F | × | √ | √ | × | 92.86 | 87.83 | 90.27 | 93.62 | 71.33 | 27.9 | 38.8 | 86.2 |
| G | × | √ | √ | √ | 93.50 | 89.24 | 91.32 | 94.39 | 72.68 | 25.7 | 28.0 | 67.5 |
| H | √ | √ | √ | √ | 94.65 | 92.17 | 93.39 | 96.10 | 71.50 | 30.4 | 33.8 | 82.9 |
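The F1 column in the ablation table follows from the precision and recall columns as their harmonic mean. A minimal sketch, with the two rows below copied from the table and the function name chosen for illustration:

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (P, R) in percent for the baseline and the full model (row H).
rows = {
    "YOLOv8s": (90.09, 85.80),
    "H (DAEF-YOLO)": (94.65, 92.17),
}

if __name__ == "__main__":
    for name, (p, r) in rows.items():
        print(f"{name}: F1 = {f1_score(p, r):.2f}%")
```

Recomputing the column this way is a quick consistency check on reported ablation results.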

| Class | P (%) | R (%) | AP@0.5 (%) | AP@0.5:0.95 (%) |
|---|---|---|---|---|
| Drinking | 84.99 | 88.53 | 96.12 | 73.03 |
| Feather preening | 88.59 | 84.89 | 97.68 | 74.32 |
| Feeding | 85.83 | 84.67 | 94.73 | 66.41 |
| Floating | 97.38 | 93.49 | 99.08 | 78.08 |
| Grooming | 95.14 | 94.70 | 99.34 | 79.03 |
| Other | 82.98 | 85.26 | 83.69 | 46.16 |
| Pecking | 91.83 | 93.14 | 97.74 | 74.75 |
| Resting | 96.85 | 93.84 | 97.47 | 66.51 |
| Standing | 90.26 | 86.81 | 95.72 | 65.26 |
| Wing stretching | 95.99 | 96.44 | 99.38 | 76.35 |
| Overall (mean) | 94.65 | 92.17 | 96.10 | 69.82 |
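The overall mAP@0.5 is the unweighted mean of the per-class AP@0.5 values. The following minimal sketch recomputes it from the rows above; the dictionary name is illustrative.

```python
# Per-class AP@0.5 (%) copied from the table above.
ap50_per_class = {
    "Drinking": 96.12, "Feather preening": 97.68, "Feeding": 94.73,
    "Floating": 99.08, "Grooming": 99.34, "Other": 83.69,
    "Pecking": 97.74, "Resting": 97.47, "Standing": 95.72,
    "Wing stretching": 99.38,
}

# mAP is the arithmetic mean over classes, so every class counts equally
# regardless of how many instances it has.
map50 = sum(ap50_per_class.values()) / len(ap50_per_class)

# The weakest class shows where most of the remaining error sits.
weakest = min(ap50_per_class, key=ap50_per_class.get)

if __name__ == "__main__":
    print(f"mAP@0.5 = {map50:.3f}%")
    print(f"lowest AP@0.5: {weakest} ({ap50_per_class[weakest]}%)")
```

The recomputed mean agrees with the reported 96.10% to within rounding, and the "Other" complement class is clearly the hardest category.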

| Model | P (%) | R (%) | F1 (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Model Size (MB) | FLOPs (G) | FPS (frames/s) |
|---|---|---|---|---|---|---|---|---|
| YOLOv5s | 72.31 | 66.05 | 69.04 | 73.68 | 50.00 | 15.1 | 16.0 | 78.6 |
| YOLOv7-Tiny | 76.17 | 69.63 | 72.75 | 77.21 | 48.47 | 12.6 | 13.3 | 144.9 |
| YOLOv7 | 91.27 | 84.58 | 87.80 | 91.61 | 65.14 | 75.1 | 103.3 | 68.4 |
| YOLOv8s | 90.09 | 85.80 | 87.89 | 91.51 | 69.91 | 22.7 | 28.7 | 74.3 |
| YOLOv9s | 87.18 | 82.34 | 84.69 | 88.90 | 66.96 | 19.5 | 39.6 | 74.6 |
| YOLOv10s | 84.00 | 80.46 | 82.19 | 87.23 | 65.80 | 16.7 | 24.8 | 111.1 |
| DAEF-YOLO | 94.65 | 92.17 | 93.39 | 96.10 | 69.82 | 30.4 | 33.8 | 82.9 |

| Class | YOLOv5s | YOLOv7 | YOLOv9s | YOLOv10s | DAEF-YOLO | Manual Annotation |
|---|---|---|---|---|---|---|
| All | 57 | 74 | 70 | 61 | 84 | 83 |
| Drinking | 0 | 7 | 5 | 2 | 8 | 9 |
| Feather preening | 1 | 1 | 1 | 1 | 1 | 1 |
| Feeding | 1 | 0 | 0 | 0 | 5 | 1 |
| Floating | 48 | 48 | 49 | 46 | 48 | 46 |
| Grooming | 0 | 0 | 0 | 0 | 0 | 1 |
| Pecking | 0 | 0 | 0 | 0 | 0 | 1 |
| Resting | 4 | 5 | 5 | 5 | 5 | 5 |
| Standing | 1 | 8 | 6 | 5 | 10 | 8 |
| Wing stretching | 0 | 0 | 0 | 0 | 0 | 0 |
| Other | 2 | 5 | 4 | 2 | 7 | 11 |

| Class | YOLOv5s | YOLOv7 | YOLOv9s | YOLOv10s | DAEF-YOLO | Manual Annotation |
|---|---|---|---|---|---|---|
| All | 93 | 107 | 101 | 94 | 117 | 117 |
| Drinking | 0 | 5 | 5 | 3 | 5 | 5 |
| Feather preening | 0 | 1 | 1 | 1 | 1 | 1 |
| Feeding | 0 | 1 | 0 | 0 | 0 | 1 |
| Floating | 41 | 40 | 40 | 40 | 44 | 41 |
| Grooming | 0 | 0 | 0 | 0 | 0 | 0 |
| Pecking | 0 | 0 | 0 | 0 | 0 | 0 |
| Resting | 51 | 56 | 52 | 46 | 60 | 60 |
| Standing | 0 | 0 | 0 | 0 | 1 | 0 |
| Wing stretching | 0 | 0 | 0 | 0 | 0 | 0 |
| Other | 1 | 4 | 3 | 4 | 6 | 9 |

| Model | Classes | P (%) | R (%) | F1 (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|---|---|
| YOLOv8s | 9 (without Other) | 92.61 | 88.84 | 90.65 | 94.36 | 73.68 |
| YOLOv8s | 10 (with Other) | 90.09 | 85.80 | 87.86 | 91.51 | 69.91 |
| DAEF-YOLO | 9 (without Other) | 94.50 | 91.00 | 92.75 | 96.08 | 75.68 |
| DAEF-YOLO | 10 (with Other) | 94.65 | 92.17 | 93.34 | 96.10 | 69.82 |

| Model | P (%) | R (%) | F1 (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|---|
| YOLOv8s (single-class) | 97.20 | 94.82 | 96.00 | 97.82 | 75.26 |
| YOLOv8s (multi-class) | 90.09 | 85.80 | 87.89 | 91.51 | 69.91 |
| DAEF-YOLO (single-class) | 97.89 | 96.00 | 96.94 | 98.57 | 73.87 |
| DAEF-YOLO (multi-class) | 94.65 | 92.17 | 93.39 | 96.10 | 71.50 |

| Model | Item | Value |
|---|---|---|
| DAEF-YOLO | a (both correct) | 117 |
| DAEF-YOLO | b (1-class only correct) | 29 |
| DAEF-YOLO | c (10-class only correct) | 22 |
| DAEF-YOLO | d (both wrong) | 176 |
| DAEF-YOLO | b + c | 51 |
| DAEF-YOLO | Method | Chi-square approximation |
| DAEF-YOLO | χ² statistic | 0.961 |
| DAEF-YOLO | p-value | 0.327 |
| DAEF-YOLO | Accuracy (1-class) | 0.424 |
| DAEF-YOLO | Accuracy (10-class) | 0.404 |
| DAEF-YOLO | Conclusion | Not significant (p > 0.05) |
| YOLOv8s | a (both correct) | 93 |
| YOLOv8s | b (1-class only correct) | 13 |
| YOLOv8s | c (10-class only correct) | 6 |
| YOLOv8s | d (both wrong) | 232 |
| YOLOv8s | b + c | 19 |
| YOLOv8s | Method | Exact binomial McNemar test |
| YOLOv8s | χ²/exact statistic | – |
| YOLOv8s | p-value | 0.167 |
| YOLOv8s | Accuracy (1-class) | 0.308 |
| YOLOv8s | Accuracy (10-class) | 0.288 |
| YOLOv8s | Conclusion | Not significant (p > 0.05) |
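The statistics in this table can be reproduced from the discordant counts `b` and `c` alone. The following is a minimal sketch of McNemar's test using only the Python standard library. It assumes the chi-square form without continuity correction, which reproduces the reported 0.961, and it switches to the exact binomial form when `b + c < 25`. That threshold is a common rule of thumb and an assumption here; the table only states which method was applied to each model.

```python
import math


def mcnemar(b: int, c: int, exact_below: int = 25):
    """Return (statistic, p_value) for McNemar's test on discordant counts.

    b: cases only the first strategy got right
    c: cases only the second strategy got right
    The statistic is None when the exact binomial form is used.
    """
    n = b + c
    if n < exact_below:
        # Exact two-sided binomial test with success probability 0.5.
        k = min(b, c)
        tail = sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n
        return None, min(1.0, 2 * tail)
    # Chi-square approximation, 1 degree of freedom, no continuity correction.
    stat = (b - c) ** 2 / n
    # Survival function of chi-square with 1 df: erfc(sqrt(x / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))


def paired_accuracy(a: int, b: int, c: int, d: int):
    """Accuracy of each strategy from the 2x2 paired contingency counts."""
    n = a + b + c + d
    return (a + b) / n, (a + c) / n


if __name__ == "__main__":
    print("DAEF-YOLO:", mcnemar(29, 22), paired_accuracy(117, 29, 22, 176))
    print("YOLOv8s:  ", mcnemar(13, 6), paired_accuracy(93, 13, 6, 232))
```

Both p-values exceed 0.05, in line with the table's conclusion that the single-class and ten-class annotation strategies do not differ significantly in individual recognition.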

| Model | Input Modality | Application Domain | Reported Performance | Notes on Applicability to Farming Scenarios |
|---|---|---|---|---|
| GRU-based skeleton dynamic graph [34] | Skeleton sequences | Human gesture recognition | Accuracy ≈ 94% | Requires skeleton joint data; not feasible for large-scale goose flocks |
| 3D skeleton-aware driver behavior recognition [35] | 3D skeleton + temporal data | Driver monitoring | Accuracy ≈ 95% | Relies on motion capture or skeleton extraction; limited transferability |
| DAEF-YOLO (this study) | RGB images | Goose individual and behavior recognition | P = 94.65%, R = 92.17%, mAP@0.5 = 96.10% | Operates directly on raw farm video; deployable on embedded devices |

| Livestock | Methods | Categories | mAP (%) | Accuracy (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|---|---|
| White Roman goose [4] | Mask R-CNN (instance segmentation) + visible camera + infrared thermal camera integration on Jetson Xavier NX | Single class: goose (individual detection for surface temperature monitoring) | 97.1 | 95.1 |  |  |
| Sichuan white goose [27] | SDSCNet: instance segmentation network with depthwise separable convolution encoder–decoder, INT8 quantization for embedded deployment | Single class: goose (instance segmentation of ~80 individuals, no behavior categories) | 93.3 |  |  |  |
| Magang goose [5] | DH-YOLOX: improved YOLOX with dual-head structure and attention mechanism for key behavior detection | Multi-class: drinking, foraging, other non-feeding and drinking, cluster rest, cluster stress | 98.98 |  |  |  |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, T.; Zhang, S.; Ren, R.; Li, J.; Xia, Y. DAEF-YOLO Model for Individual and Behavior Recognition of Sanhua Geese in Precision Farming Applications. Animals 2025, 15, 3058. https://doi.org/10.3390/ani15203058
