Foundations of Livestock Behavioral Recognition: Ethogram Analysis of Behavioral Definitions and Its Practices in Multimodal Large Language Models

Abstract

Simple Summary

1. Introduction

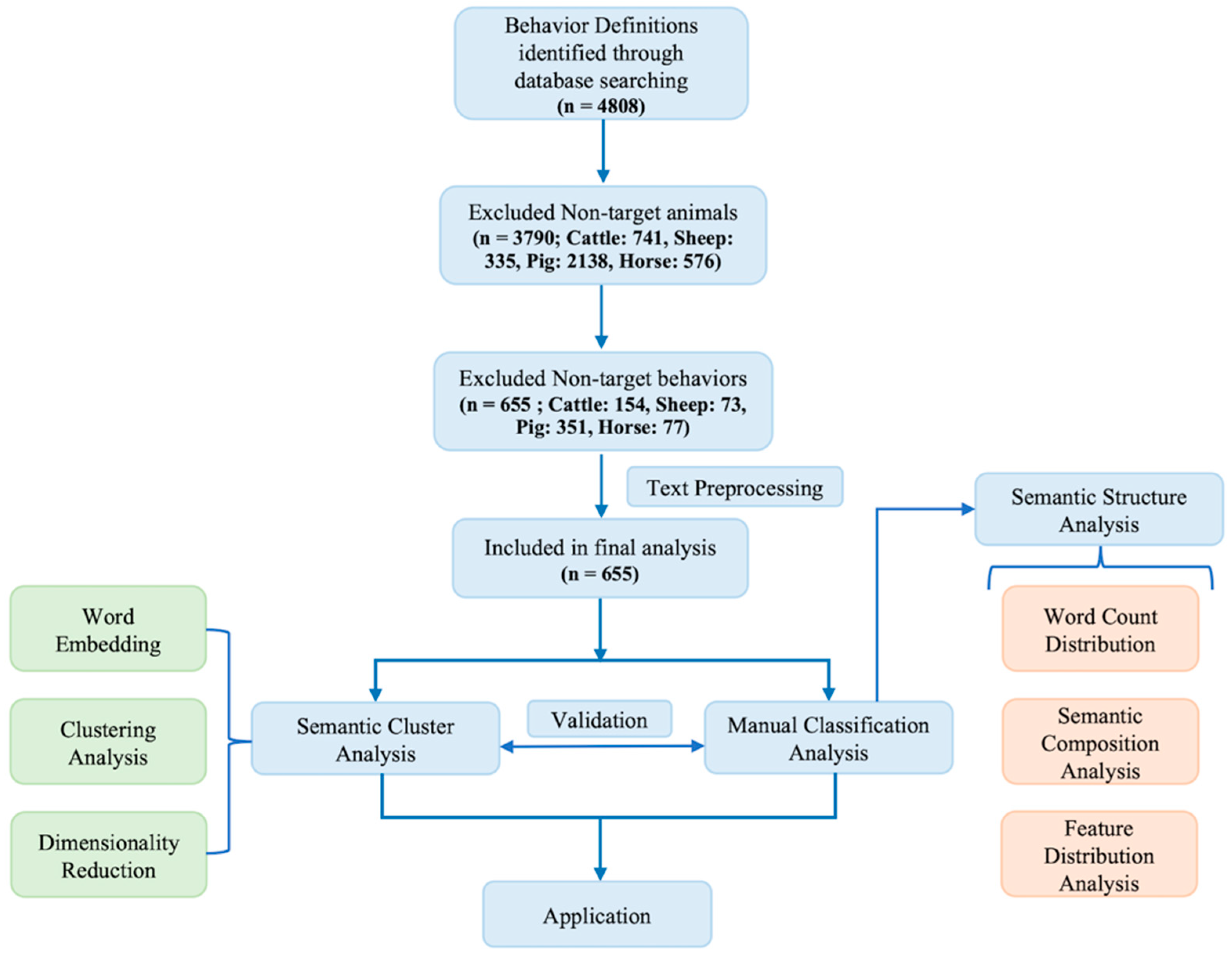

2. Methods

2.1. Data Collection

2.2. Data Preprocessing

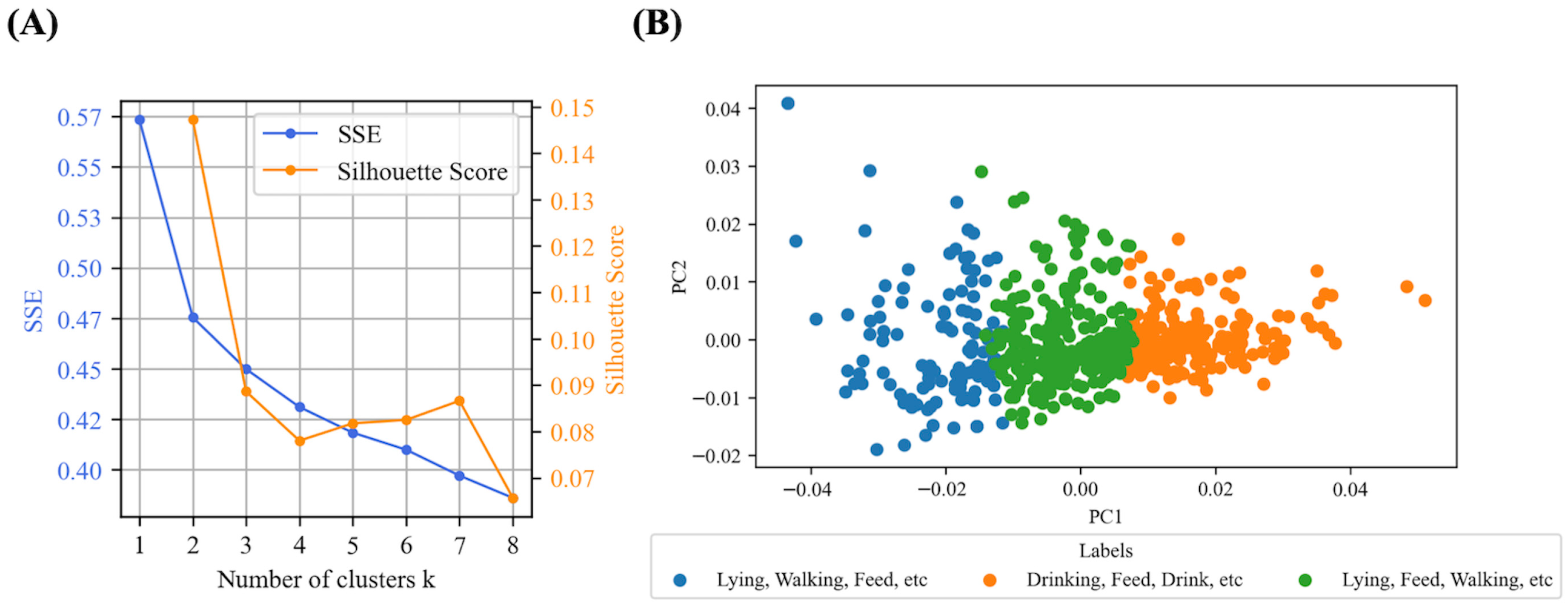

2.3. Semantic Cluster Analysis of Behavior Definitions

2.4. Validation of Cluster Results Through Classification Analysis

2.5. Semantic Structure Analysis and Validation of Behavioral Categories

3. Results and Discussion

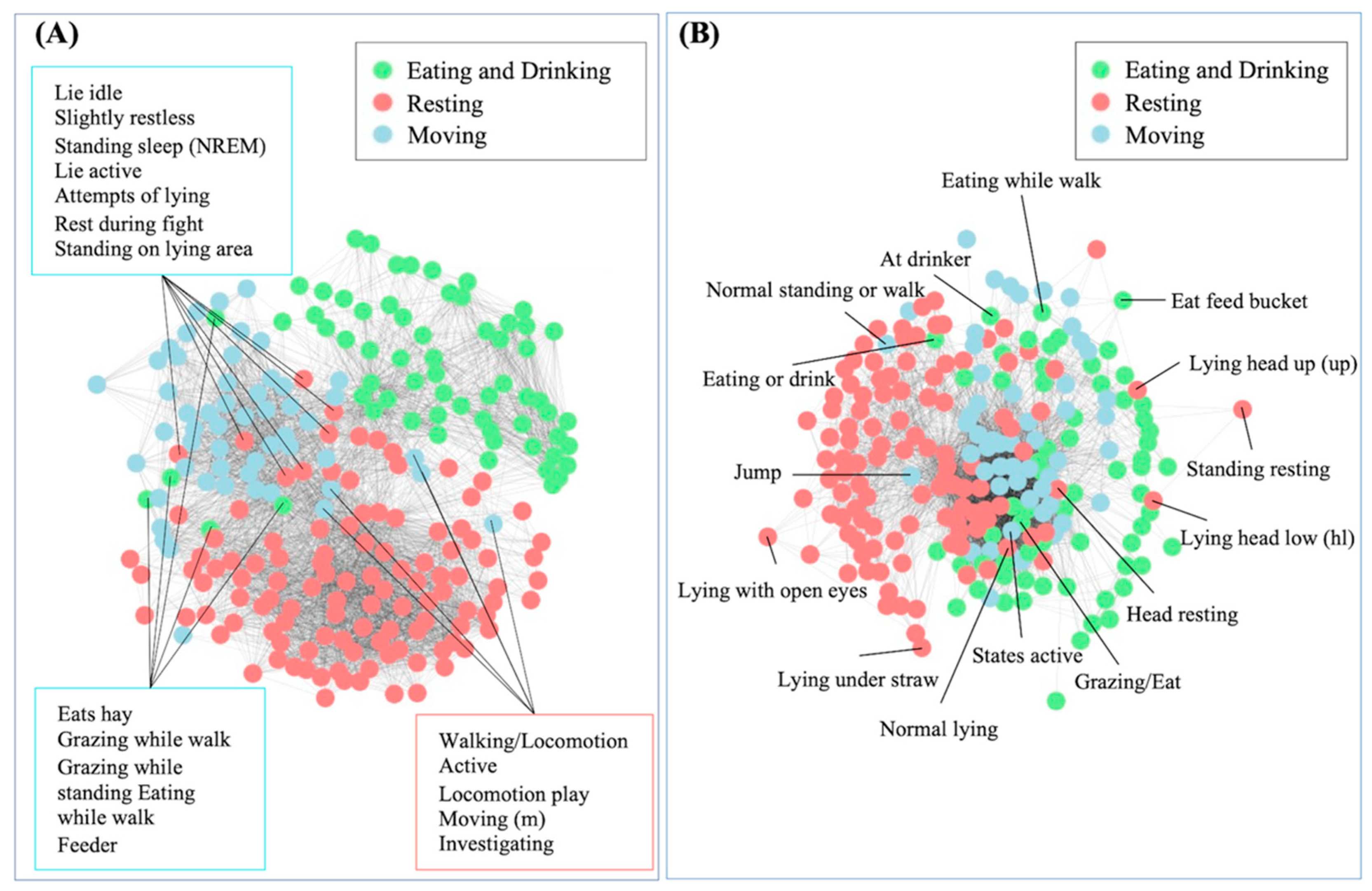

3.1. Semantic Cluster Analysis

3.2. Semantic Structure Analysis

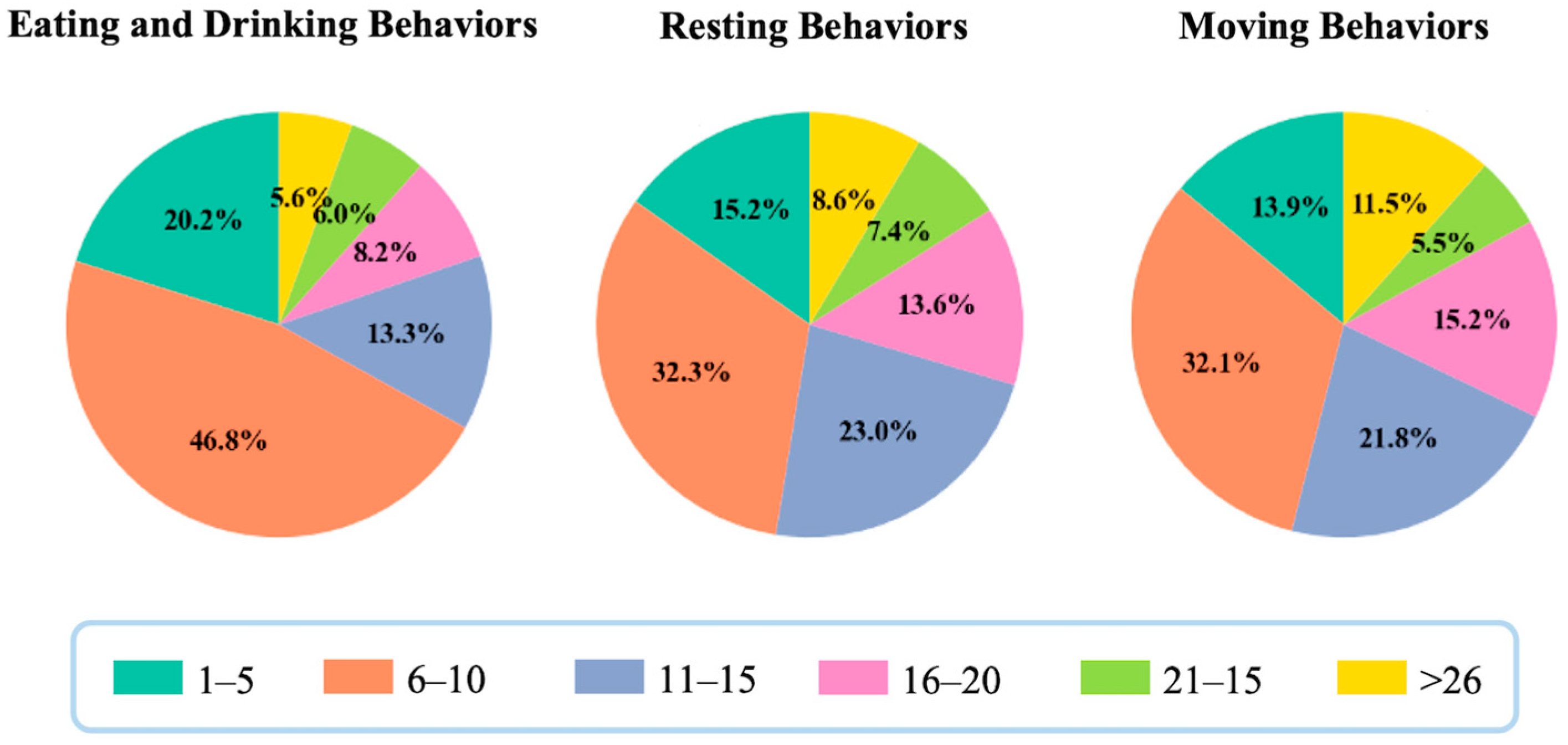

3.2.1. Word Count Distribution

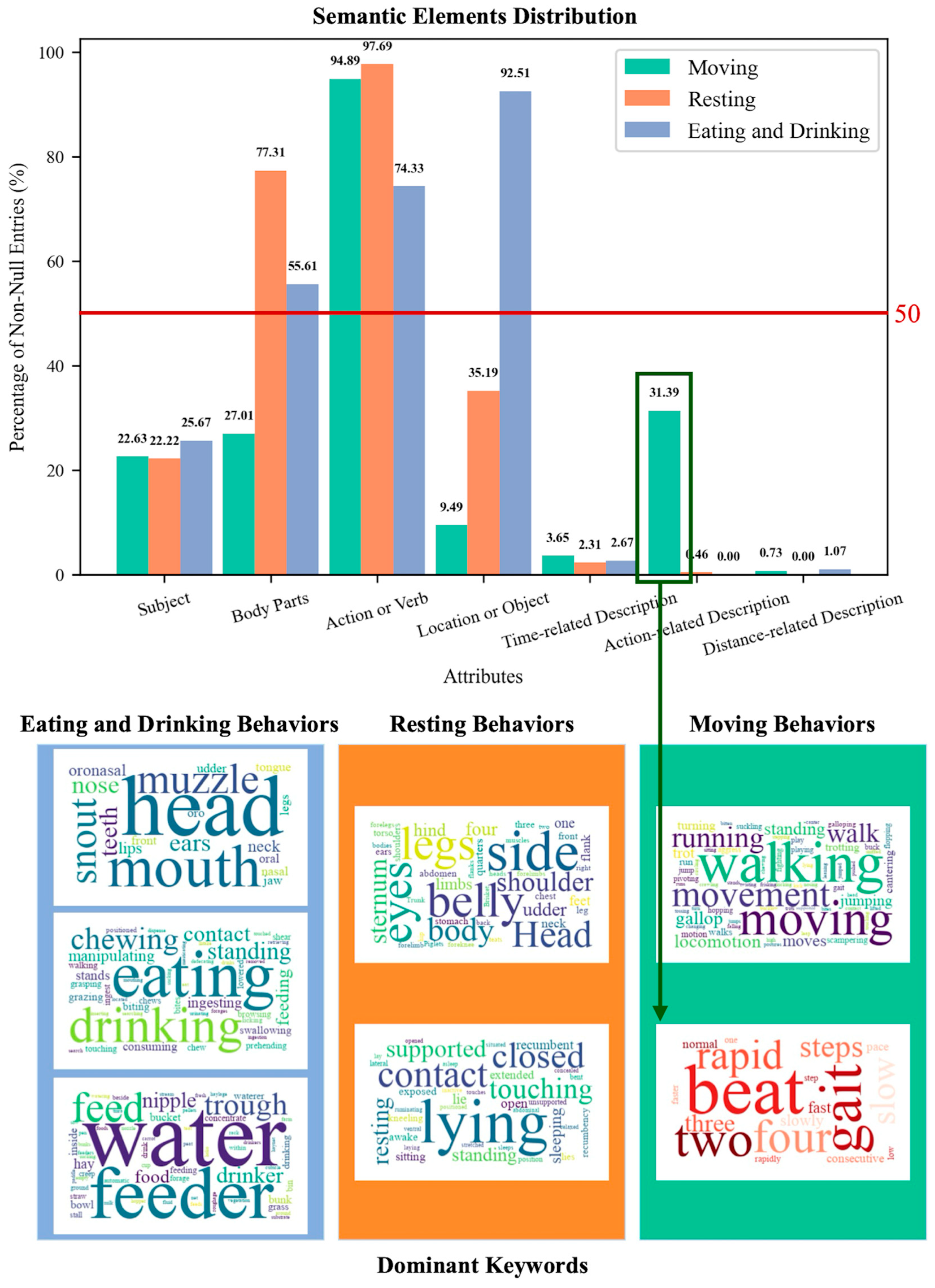

3.2.2. Semantic Structure Composition and Keyword Extraction

3.3. Consistency Between Manual Classification and Semantic Clustering

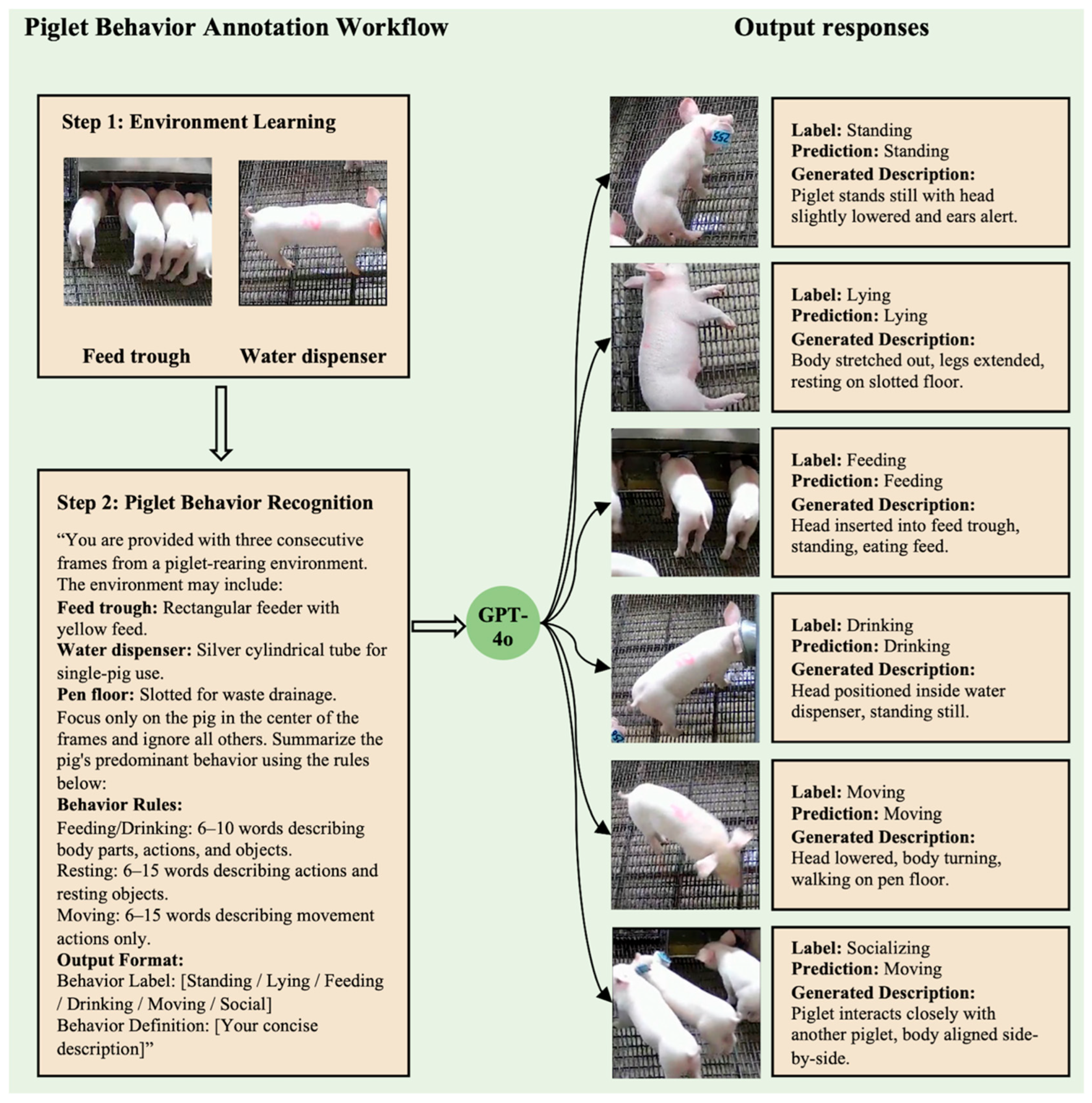

3.4. Practical Example of Image-Based Annotation Using Structured Behavior Definitions in LLMs

4. Limitations and Future Work

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Behavior Category | Description |
|---|---|
| Feeding and Drinking Behavior | Behaviors related to eating or drinking, such as “Eat”, “Drink”, “Feed”, “Graze”, “Forage”, etc. |
| Resting Behavior | Behaviors indicating rest or inactivity, such as “Lie”, “Rest”, “Sleep”, “Inactive”, etc. |
| Moving Behavior | Behaviors involving movement or activity, such as “Walk”, “Run”, “Turn”, “Jump”, “Trot”, “Canter”, “Move”, “Play”, “Active”, “Locomotion”, etc. |
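
The keyword lists in the table above can be read as a simple lookup rule. The following is a minimal sketch of that idea, not the authors' procedure: the keywords are copied from the table, while the function name `categorize` and the prefix-matching rule are assumptions made for illustration.

```python
# Keywords copied from the table above. The prefix-matching rule and the
# function name are illustrative assumptions, not the authors' method.
CATEGORY_KEYWORDS = {
    "Feeding and Drinking Behavior": ["eat", "drink", "feed", "graze", "forage"],
    "Resting Behavior": ["lie", "rest", "sleep", "inactive"],
    "Moving Behavior": ["walk", "run", "turn", "jump", "trot", "canter",
                        "move", "play", "active", "locomotion"],
}


def categorize(behavior_name):
    """Return the first category whose keyword prefixes a token of the name.

    Returns None when no keyword matches.
    """
    tokens = behavior_name.lower().replace("-", " ").split()
    for category, keywords in CATEGORY_KEYWORDS.items():
        for token in tokens:
            if any(token.startswith(keyword) for keyword in keywords):
                return category
    return None


if __name__ == "__main__":
    for name in ["Eating", "Inactive", "Walking", "Nursing"]:
        print(name, "->", categorize(name))
```

Prefix matching is deliberately crude: it catches "Eating" and "Walking" but misses irregular forms such as "Lying" or "Moving", which would need lemmatization or manual review.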

| Semantic Category | Description |
|---|---|
| Subject | Includes animal nouns within the definition, such as “pigs”, “piglets”, “horse”, “sheep”, “cattle”, etc. |
| Body Parts | Refers to nouns describing parts of the animal’s body, such as “feet”, “mouth”, “belly”, etc. |
| Action or Verb | Includes verbs that indicate actions performed by the animal, such as “walk”, “run”, “graze”, etc. |
| Location or Object | Specifies where the action occurs or the object involved, such as “ground”, “in feeder”, “in trough”, etc. |
| Time-related Description | Refers to time-related details specifying the duration of the action, such as “3 s”, “5 s”, etc. |
| Action-related Description | Describes the intensity of the action, such as “slow”, “rapid”, etc. |
| Distance-related Description | Indicates the range of movement, such as “5 cm”, “15 cm”, etc. |
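
As a rough illustration of how the semantic categories above could be applied to a single behavior definition, the sketch below tags words and numeric phrases by category. The tiny lexicon holds only the example words from the table, the regular expressions for durations and distances are assumptions, and `tag_definition` is a hypothetical helper rather than anything taken from the paper.

```python
import re

# Example words from the table above; a real lexicon would be far larger.
# "in feeder" and "in trough" are reduced to single words here (assumption).
LEXICON = {
    "Subject": {"pigs", "piglets", "horse", "sheep", "cattle"},
    "Body Parts": {"feet", "mouth", "belly"},
    "Action or Verb": {"walk", "run", "graze"},
    "Location or Object": {"ground", "feeder", "trough"},
    "Action-related Description": {"slow", "rapid"},
}

# Assumed patterns for phrases such as "3 s" and "15 cm".
PATTERNS = {
    "Time-related Description": re.compile(r"\b\d+(?:\.\d+)?\s*s\b"),
    "Distance-related Description": re.compile(r"\b\d+(?:\.\d+)?\s*cm\b"),
}


def tag_definition(definition):
    """Map each semantic category to the matching words or phrases found."""
    text = definition.lower()
    found = {}
    # Numeric phrases first: durations and distances.
    for category, pattern in PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            found[category] = matches
    # Then plain words looked up in the lexicon.
    words = re.findall(r"[a-z]+", text)
    for category, vocabulary in LEXICON.items():
        hits = [word for word in words if word in vocabulary]
        if hits:
            found[category] = hits
    return found


if __name__ == "__main__":
    example = "Piglets walk on the ground for more than 3 s, moving at least 15 cm"
    for category, items in tag_definition(example).items():
        print(f"{category}: {items}")
```

A definition that fills several categories (subject, action, location, duration, distance) is more explicit than one that names only an action, which is the kind of contrast such a tagging step makes visible.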

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, S.; Li, W.; Zhou, M.; Dilger, R.N.; Condotta, I.C.F.S.; Wu, Z.; Tang, X.; Wu, Y.; Wang, T.; Li, J. Foundations of Livestock Behavioral Recognition: Ethogram Analysis of Behavioral Definitions and Its Practices in Multimodal Large Language Models. Animals 2025, 15, 3030. https://doi.org/10.3390/ani15203030
