1. Introduction

Pigs constitute the primary global source of meat products, and increasing societal development has heightened focus on pork quality [1]. Animal behavior serves as a critical indicator of health status and environmental welfare, with abnormal behaviors often signaling disease onset. Consequently, behavioral monitoring provides early health warnings and enables preventive veterinary interventions [2], establishing its fundamental significance in livestock management [3].

Automated behavior recognition is becoming increasingly significant for enhancing efficiency in smart livestock management [4]. Traditional methods based on manual observation, by contrast, are limited by inherent subjectivity, high labor costs, and the inability to provide continuous 24-h monitoring [5]. Although radio frequency identification (RFID)-based systems, such as that of Marcon et al. [6], can detect feeding behavior via ear tag signals, they are associated with high costs and may induce animal stress. Deep learning technology, on the other hand, enables non-contact, high-precision behavior recognition, effectively overcoming these limitations [7]. For instance, Alameer et al. [8] employed a GoogLeNet network to achieve non-nutritive visiting (NNV) detection with an accuracy of 99.4% without the need for individual tracking. Li et al. [9] proposed the improved YOLOv5-KCB model, increasing the recognition accuracy for pig head-neck regions and pig faces to 98.4% and 95.1%, respectively. Zhang et al. [10] proposed an LSR-YOLO model for accurate sheep face recognition, achieving a mAP@50 of 97.8%. Hu et al. [11] introduced a spatio-temporal feature-integrated Transformer model, PB-STR, for simultaneously recognizing seven types of pig behaviors in intensive farming environments. Zhao et al. [12] proposed an improved lightweight model based on YOLOv11, termed ECA–YOLO. Their study constructed a high-quality dataset based on hormone detection labels and validated the feasibility of ocular features in estrus recognition, with a mAP@50 of 93.2%, outperforming most existing methods. However, the model’s performance degrades to some extent under extreme lighting conditions (e.g., strong light or nighttime) or when animal posture changes significantly. Additionally, due to limited breed diversity in the training dataset, its generalization capability remains to be improved. Moreover, the model’s large parameter size is not conducive to on-site deployment. Although the aforementioned deep learning methods address the limitations of manual observation and the instability of traditional image processing techniques [13], they still face several challenges: difficulty in detecting small and occluded targets in complex backgrounds, limited generalization across varying lighting and breeding environment configurations, and high computational costs that hinder on-site deployment.

YOLOv8, a state-of-the-art iteration released by Ultralytics in 2023, achieves a favorable balance between accuracy and inference speed. Building upon its predecessors, it incorporates novel optimizations that enhance its versatility for a broad spectrum of object detection applications [14].

Given this favorable balance, we select the lightweight YOLOv8n version as our baseline model to address the challenges of on-site deployment. To further enhance its performance for our specific task, this study proposes a novel multi-scene lightweight framework for recognizing four key pig behaviors: standing, prone lying (on the belly), lateral lying (on the side), and feeding. The main contributions of this research are (1) integrating the SPD-Conv module to preserve spatial details during downsampling, thereby enhancing small target detection performance in low-resolution images [15]; (2) incorporating the LSKBlock attention mechanism to improve cross-layer feature fusion; and (3) designing a dedicated small target detection head to achieve multi-granularity behavior recognition.

2. Materials and Methods

2.1. Dataset Construction

This study utilizes two publicly available datasets, with representative examples illustrated in Table 1. The camera configurations and acquisition parameters are detailed in Table 1. The composite dataset encompasses four distinct scenarios containing pigs exhibiting varying target sizes (large, medium, small), as systematically categorized in Table 1 and Figure 1.

Image acquisition spanned three daily intervals: 10:00–13:00, 16:00–18:00, and supplementary evening periods. This temporal distribution captures behavioral variations between feeding and non-feeding phases. The dataset further incorporates diverse housing configurations—including ground pens and elevated cages—with varied feeding equipment, enhancing image heterogeneity to improve model generalization, robustness, and practical applicability.

A time-conscious data collection strategy is fundamental to our goal of reliably identifying the four target behaviors: standing, feeding, prone lying, and lateral lying. These behaviors are not randomly distributed but follow predictable diurnal rhythms. Concentrating monitoring on peak activity windows (e.g., 09:00–11:00 and 15:00–17:00) ensures a high probability of capturing standing and feeding, while also sampling resting postures (prone and lateral lying) within the same periods and across the day [16,17]. This approach ensures that our model is trained on, and evaluated against, a behaviorally complete dataset, thereby increasing the robustness of anomaly detection, such as identifying lethargy (excessive lying) during normally active times [18].

We therefore focus on these four behaviorally validated health indicators (feeding, prone lying, lateral lying, standing) [19]. Classification criteria and representative examples are detailed in Table 2 and Figure 2. All 1820 images were manually annotated using LabelImg [20], with bounding boxes stored in TXT format.

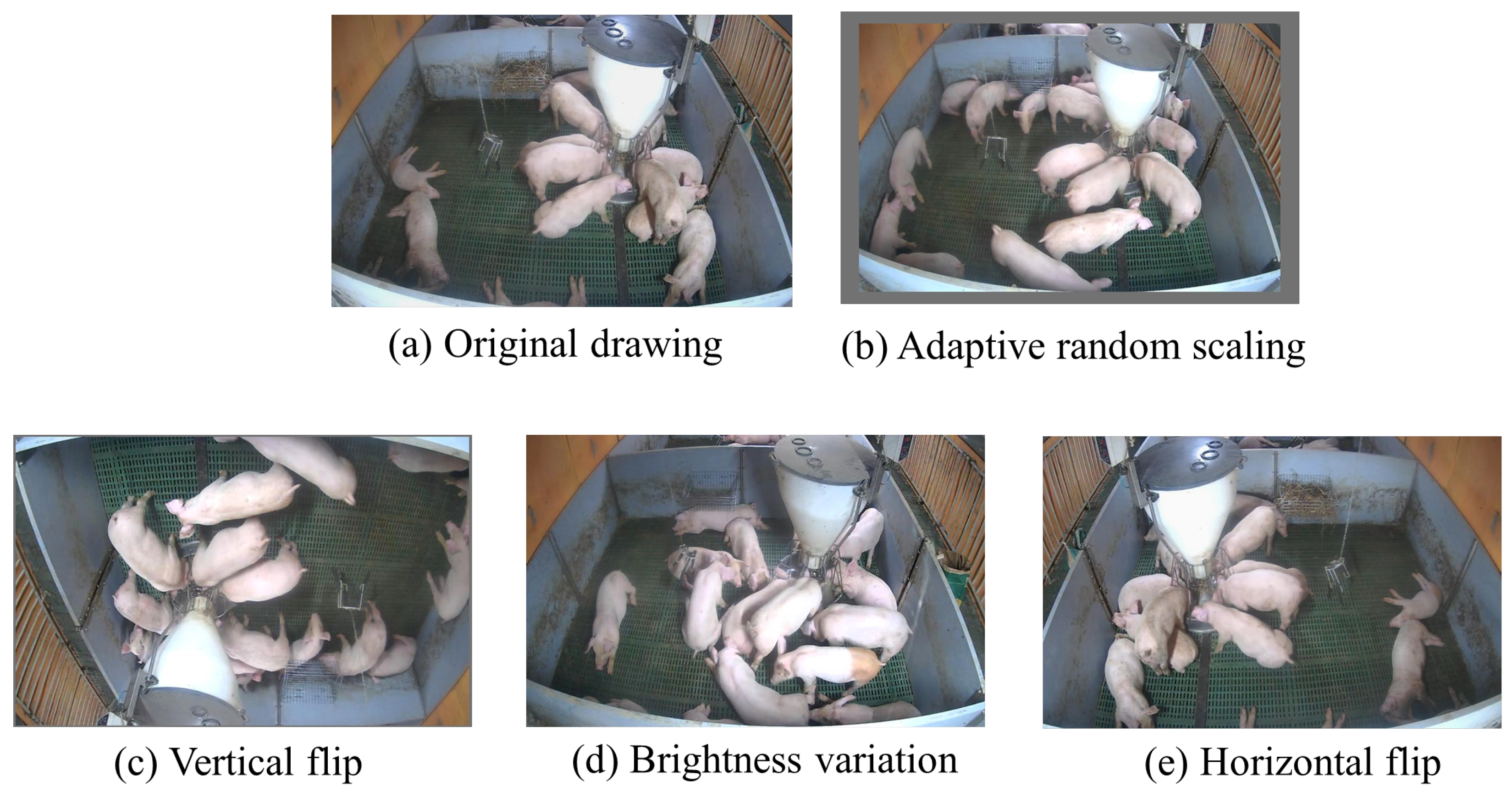

2.2. Data Augmentation

To enhance model generalization and robustness and to mitigate overfitting [21], we implemented four augmentation techniques: random HSV adjustment, adaptive scaling, horizontal flipping, and vertical flipping (Figure 3).
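As a minimal sketch of how the flipping augmentations interact with the annotations, the snippet below mirrors bounding boxes to match a flipped image. It assumes the TXT labels follow the normalized YOLO layout (class, x_center, y_center, width, height), which the text does not state explicitly; the name `flip_boxes` and the sample label are illustrative only.

```python
from typing import List, Tuple

# One label row: (class_id, x_center, y_center, width, height).
# Coordinates are assumed to be normalized to [0, 1] (YOLO TXT layout).
Box = Tuple[int, float, float, float, float]


def flip_boxes(boxes: List[Box], horizontal: bool = True) -> List[Box]:
    """Mirror normalized boxes so they match a flipped image."""
    flipped = []
    for cls, xc, yc, w, h in boxes:
        if horizontal:
            xc = 1.0 - xc  # left/right mirror moves the centre, not the size
        else:
            yc = 1.0 - yc  # top/bottom mirror
        flipped.append((cls, xc, yc, w, h))
    return flipped


# Illustrative label, not taken from the dataset.
labels = [(0, 0.25, 0.40, 0.10, 0.20)]
print(flip_boxes(labels, horizontal=True))
print(flip_boxes(labels, horizontal=False))
```

Box width and height are unchanged by either flip, so only the centre coordinate along the mirrored axis needs updating.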

This pipeline generated 3640 augmented images, expanding the dataset to include 11,258 feeding instances, 11,530 lateral lying occurrences, 13,877 prone lying cases, and 14,599 standing postures. The augmented data was partitioned using stratified random sampling into training (2548 images), validation (728 images), and test sets (364 images) at a 7:2:1 ratio. The overall workflow is shown in Figure 4.
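The partition sizes can be checked with a few lines of arithmetic. The sketch below uses only the image counts reported above and reduces them to their smallest integer ratio; the variable names are illustrative.

```python
from functools import reduce
from math import gcd

# Image counts per subset, as reported in the text.
split = {"train": 2548, "val": 728, "test": 364}

total = sum(split.values())
unit = reduce(gcd, split.values())  # largest common divisor of the counts
ratio = {name: count // unit for name, count in split.items()}

print(total)  # 3640
print(ratio)  # {'train': 7, 'val': 2, 'test': 1}
```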

2.3. YOLOv8n Network Structure

We evaluated all five YOLOv8 variants (YOLOv8n, YOLOv8s, YOLOv8m, YOLOv8l, YOLOv8x) on our pig behavior dataset, with comparative metrics detailed in Table 3.

Although YOLOv8l and YOLOv8x marginally surpassed YOLOv8n in average precision (AP) and mAP@0.5 (by 0.050 and 0.031, respectively), this improvement came at substantial computational cost—the parameter counts increased to 43.6 M and 67.1 M, representing 14.5× and 22.3× expansions relative to YOLOv8n. In contrast, YOLOv8n maintained competitive accuracy (AP = 0.849, mAP@0.5 = 0.896) with only 3.01 M parameters, establishing significant advantages for resource-constrained deployment environments.
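The parameter expansion factors quoted above follow directly from the reported parameter counts. A small sketch, using only the figures given in the text (in millions of parameters):

```python
# Parameter counts in millions, as reported in the text.
params_m = {"YOLOv8n": 3.01, "YOLOv8l": 43.6, "YOLOv8x": 67.1}

base = params_m["YOLOv8n"]
expansion = {name: round(count / base, 1) for name, count in params_m.items()}

for name, factor in expansion.items():
    print(f"{name}: {factor}x the YOLOv8n parameter count")
```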

Furthermore, YOLOv8n’s lightweight architecture confers significant advantages in inference speed [22], satisfying the stringent real-time requirements of practical deployment scenarios. Balancing detection accuracy, computational efficiency, and application constraints [23], we selected YOLOv8n as the foundational framework for this investigation. The architectural configuration is presented in Figure 5.

The YOLOv8n architecture consists of four core components: Input: receives preprocessed pig images; Backbone: performs feature extraction using Conv layers for downsampling, C2f modules for multi-scale feature optimization, and SPPF for contextual feature fusion; Neck: fuses deep and shallow features through upsampling and concatenation; Head: generates detection outputs from the resulting feature pyramid.

The processing pipeline begins with Conv-based downsampling [24], followed by the backbone’s C2f module, which enhances multi-scale representations through concatenated feature optimization. Subsequent feature fusion via the SPPF module enriches contextual information. Higher-resolution feature maps are then recovered through upsampling, enabling hierarchical feature integration in which deep semantic features merge with shallow spatial details via concatenation [25]. Finally, detection heads produce 80 × 80, 40 × 40, and 20 × 20 feature maps specialized for small, medium, and large targets, respectively [26].
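The three feature-map sizes follow from dividing the input resolution by the stride of each detection level. The sketch below assumes a 640 × 640 input (the size stated in Section 2.7) and the usual YOLOv8 strides of 8, 16, and 32; the helper name `grid_sizes` is illustrative.

```python
from typing import List, Sequence


def grid_sizes(input_size: int, strides: Sequence[int] = (8, 16, 32)) -> List[int]:
    """Side length of the square feature map produced at each stride."""
    return [input_size // stride for stride in strides]


print(grid_sizes(640))  # [80, 40, 20]
```

Smaller strides give finer grids, which is why the 80 × 80 map is the one assigned to small targets.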

2.4. Improvement of YOLOv8n Pig Daily Behavior Recognition Model

To resolve the persistent challenge of small target loss in pig detection, we integrate the SPD-Conv module, which mitigates information loss during downsampling by preserving local detail features while reducing spatial dimensions. To address occlusion and overlapping among pigs, the LSKBlock module [27] is incorporated within the neck section. This component captures long-range dependencies through large-kernel convolutions, dynamically recalibrates feature maps, enhances critical feature propagation pathways, and strengthens spatial perception in complex backgrounds, collectively improving robustness for detecting occluded, overlapping, and small targets [28]. Additionally, a dedicated small-target detection head preserves fine-grained pixel-level details, mitigates information degradation during downsampling, and enhances sensitivity to minute features. These architectural modifications are summarized in Figure 6.

2.5. SPD-Conv Spatial Depth Conversion Convolution

The mixed dataset reflects complex pig farming environments and presents several challenges: crowded and overlapping pigs, obstructions from facilities such as feeding troughs, blurry images, and small pig targets in image corners. Low image resolution, neglect of small targets during training and testing, and the information loss caused by traditional downsampling all lead to poor model training performance [29]. Therefore, an SPD-Conv (space-to-depth convolution) module is added after the original Conv layer [30].

SPD-Conv combines a space-to-depth layer with a non-strided convolution layer. The space-to-depth layer converts spatial information into depth (channel) information, increasing the depth of the feature map without discarding information and thereby enhancing the network’s perception of targets at different scales. By preserving more spatial and fine-grained information when processing low-resolution images and small targets, the algorithm can reduce missed detections and improve recognition accuracy [31].

The SPD-Conv module consists of two parts: a space-to-depth layer and a non-strided convolution (Conv) layer. The structure and principle of SPD-Conv for scale = 2 are shown in Figure 7.

First, an input feature map of size S × S × C1 is sampled with a stride of 2 at each of the four pixel offsets, yielding four sub-feature maps of size S/2 × S/2 × C1 that together retain every value of the input.

Next, the four sub-feature maps are concatenated along the channel dimension, so that the spatial dimensions are halved and the channel dimension becomes four times the original (S/2 × S/2 × 4C1). This converts the spatial dimension of the input into the depth dimension and avoids the information loss caused by traditional strided convolution and pooling operations. Finally, a non-strided convolution (stride of 1) is applied to obtain a feature map of size S/2 × S/2 × C2.

The non-strided convolution extracts features while keeping the S/2 × S/2 spatial size and reducing the number of channels from 4C1 to C2, capturing key information about pig behavior in low-resolution images. It fuses the information moved from the spatial dimension into the channel dimension, reducing information loss while limiting model parameters, computational complexity, and memory usage.
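The space-to-depth rearrangement for scale = 2 can be illustrated with a short NumPy sketch. This is not the authors' implementation: the stride-1 convolution is simplified here to a 1 × 1 kernel (a per-pixel channel mix) with random weights, and the names `space_to_depth` and `pointwise_conv` as well as the example sizes (S = 8, C1 = 3, C2 = 16) are assumptions made for illustration.

```python
import numpy as np


def space_to_depth(x: np.ndarray, scale: int = 2) -> np.ndarray:
    """Rearrange an (S, S, C) map into (S/scale, S/scale, scale*scale*C)."""
    height, width, _ = x.shape
    assert height % scale == 0 and width % scale == 0
    # One strided slice per pixel offset; together they cover every input value.
    subs = [x[i::scale, j::scale, :] for i in range(scale) for j in range(scale)]
    return np.concatenate(subs, axis=-1)


def pointwise_conv(x: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """Stride-1 convolution with a 1x1 kernel (simplifying assumption)."""
    return x @ weight  # (H, W, C_in) @ (C_in, C_out) -> (H, W, C_out)


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8, 3))   # S = 8, C1 = 3
y = space_to_depth(x)                # spatial size halved, channels x4
w = rng.standard_normal((12, 16))    # 4*C1 = 12 input channels, C2 = 16
z = pointwise_conv(y, w)
print(x.shape, y.shape, z.shape)
```

Because the rearrangement only moves values, `y` has exactly as many elements as `x`; all downsampling happens without discarding pixels, in contrast to a stride-2 convolution or pooling layer.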

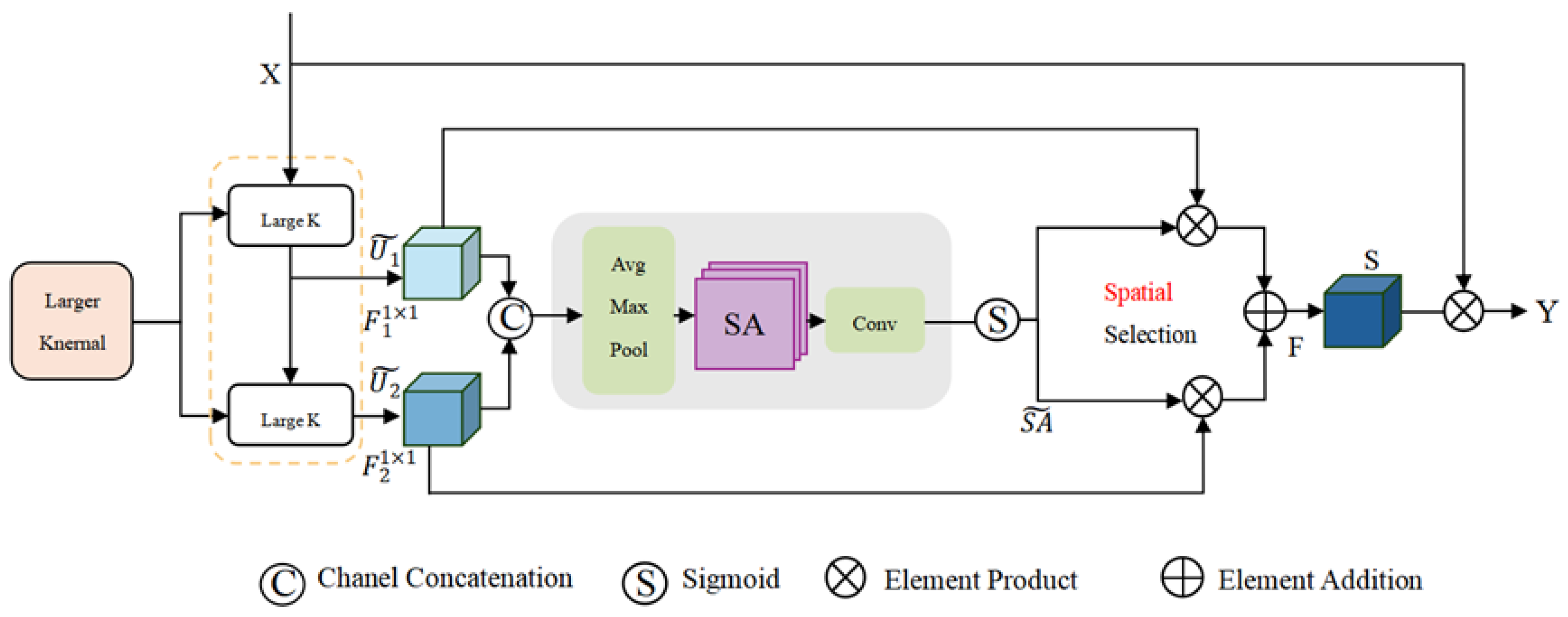

2.6. LSKBlock Large-Kernel Spatial Attention Module

For overlapping, occluded, and small pig targets in the dataset, large-kernel convolution covers a larger area and enhances the model’s understanding of the overall structure of the target. Spatial attention then focuses on the regions where the pigs are located, reducing background interference [32]. Therefore, LSKBlocks were inserted after detection heads at different scales to optimize the feature expression at each level of the model [33].

LSKBlock (Large Kernel Spatial Attention Block) is a neural network module that combines large convolutional kernels with a spatial attention mechanism. It aims to enhance the model’s perception of key regions and is especially suited to small object detection and complex scenes. Its structure is shown in Figure 8.

Given the intermediate input feature $X$, the LSK module derives an attention map $S$, and the entire attention process can be summarized as

$$Y = X \otimes S$$

Here, $\otimes$ represents element-wise multiplication. The LSK module is implemented as follows. First, $N$ depthwise convolutional layers of different scales are applied to the input feature $X$ to obtain the output of each layer, $U_i$. This expands the receptive field and enhances the network’s ability to focus on relevant spatial contextual regions when detecting targets. Then, each $U_i$ is sent to a standard convolutional layer for further processing, and the results are concatenated to form $\tilde{U}$:

$$U_0 = X, \qquad U_i = F_i^{dw}(U_{i-1}), \qquad i = 1, \ldots, N$$

$$\tilde{U}_i = F_i^{1 \times 1}(U_i), \qquad \tilde{U} = [\tilde{U}_1; \ldots; \tilde{U}_N]$$

Here, $F_i^{dw}$ represents depthwise separable convolutions with different large convolution kernels, and $F_i^{1 \times 1}$ represents standard convolutions with a kernel size of $1 \times 1$. Applying average pooling and max pooling to $\tilde{U}$ along the channel axis yields two different spatial context descriptors, $SA_{avg}$ and $SA_{max}$, which describe the average-pooled and max-pooled features across channels, respectively. To extract spatial relationships effectively and to exchange information between the two descriptors, $SA_{avg}$ and $SA_{max}$ are concatenated and sent into a convolutional layer to obtain $N$ spatial attention maps. The sigmoid activation function is applied to each spatial attention map to obtain independent spatial attention masks $\widetilde{SA}_i$, which are multiplied element-wise with the corresponding $\tilde{U}_i$, summed, and sent to a standard convolutional layer $F$ for fusion to generate the attention map $S$:

$$SA_{avg} = P_{avg}(\tilde{U}), \qquad SA_{max} = P_{max}(\tilde{U})$$

$$\widehat{SA} = F^{2 \to N}([SA_{avg}; SA_{max}])$$

$$\widetilde{SA}_i = \sigma(\widehat{SA}_i)$$

$$S = F\left(\sum_{i=1}^{N} \widetilde{SA}_i \otimes \tilde{U}_i\right)$$

Here, $\sigma$ represents the sigmoid function, and $F^{2 \to N}$ represents the standard convolution with 2 input channels and $N$ output channels. The LSK attention mechanism enables the model to highlight important features and suppress unimportant ones, which is the key role of the attention mechanism in enhancing network learning ability.
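The spatial selection step described above (channel-wise average and max pooling, a 2-to-N mapping, sigmoid masks, and a weighted sum of the branch features) can be sketched in NumPy as follows. This is a simplified illustration rather than the authors' implementation: the branch features are passed in directly instead of being computed by large-kernel depthwise convolutions, the 2-to-N convolution is reduced to a 1 × 1 channel mix with hypothetical weights `mix`, and the final fusion convolution is omitted.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def lsk_spatial_selection(x, branches, mix):
    """
    x:        (C, H, W) input feature.
    branches: list of N arrays of shape (C, H, W), standing in for the
              branch features after their 1x1 convolutions.
    mix:      (N, 2) weights, a 1x1 stand-in for the 2-to-N convolution.
    Returns the re-weighted feature and the N spatial masks.
    """
    stacked = np.concatenate(branches, axis=0)        # (N*C, H, W)
    sa_avg = stacked.mean(axis=0)                     # pooling over channels
    sa_max = stacked.max(axis=0)
    descriptors = np.stack([sa_avg, sa_max], axis=0)  # (2, H, W)
    sa_hat = np.einsum("nk,khw->nhw", mix, descriptors)
    masks = sigmoid(sa_hat)                           # one mask per branch
    # Weight each branch by its mask and sum (fusion conv omitted here).
    attention = sum(m[None, :, :] * b for m, b in zip(masks, branches))
    return x * attention, masks


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 5, 5))
branches = [rng.standard_normal((4, 5, 5)) for _ in range(2)]
mix = rng.standard_normal((2, 2))
out, masks = lsk_spatial_selection(x, branches, mix)
print(out.shape, masks.shape)
```

Each mask has one value per spatial location, so the module can favor a different receptive-field branch at different positions in the image.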

2.7. Multi-Scale Feature Detection

The baseline YOLOv8n architecture employs three detection heads operating at 80 × 80, 40 × 40, and 20 × 20 pixel scales to detect small, medium, and large targets, respectively, from 640 × 640 input images [34]. However, progressive resolution reduction through deeper convolutional layers degrades geometric information, which is particularly detrimental for small pig targets, whose critical spatial features may be lost entirely [35]. To address this limitation, we introduce an additional small-target detection head that processes higher-resolution features (Figure 9). This enhancement recovers high-resolution features through successive upsampling, followed by cross-layer concatenation that fuses shallow backbone features with neck upsampling outputs. Subsequent channel compression and feature optimization enable the generation of a dedicated 10 × 10 detection head. The resulting four-scale feature pyramid (80 × 80, 40 × 40, 20 × 20, 10 × 10) [36], combined with LSKBlock’s attention mechanism, dynamically focuses on small-target regions while suppressing background interference.

2.8. Experimental Parameter Settings

All computational experiments were conducted on a 64-bit Windows 10 system using Python-based frameworks. The hardware configuration comprised an Intel Core i5-8300H CPU (2.30 GHz) (Intel Corporation, Santa Clara, CA, USA) paired with an NVIDIA GeForce RTX 4090 GPU (8 GB VRAM) (NVIDIA Corporation, Santa Clara, CA, USA). The software environment comprised a Conda-managed Python 3.10.16 installation with PyTorch 2.0.1 and CUDA 11.8. The detailed training hyperparameters are provided in Table 4.

The evaluation metrics comprise precision (P), measuring the proportion of correct target recognitions; recall (R), indicating detection completeness; and mean average precision (mAP), quantifying the overall accuracy across categories [37]. The model’s performance was objectively assessed through parameter efficiency and these established metrics across four critical porcine behaviors: standing, feeding, prone lying, and lateral lying.
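For reference, the metrics can be written out in a few lines. The sketch below uses the standard definitions, P = TP / (TP + FP) and R = TP / (TP + FN), and takes mAP as the mean of per-class average precision. All counts and AP values in the example are made-up placeholders chosen for easy arithmetic, not results from this study.

```python
from typing import Dict


def precision(tp: int, fp: int) -> float:
    """Share of predicted boxes that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of ground-truth boxes that were detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """mAP as the unweighted mean of per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Placeholder numbers for illustration only.
print(precision(tp=90, fp=10))  # 0.9
print(recall(tp=90, fn=30))     # 0.75
placeholder_ap = {"standing": 1.0, "feeding": 0.5,
                  "prone lying": 0.75, "lateral lying": 0.75}
print(mean_average_precision(placeholder_ap))  # 0.75
```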

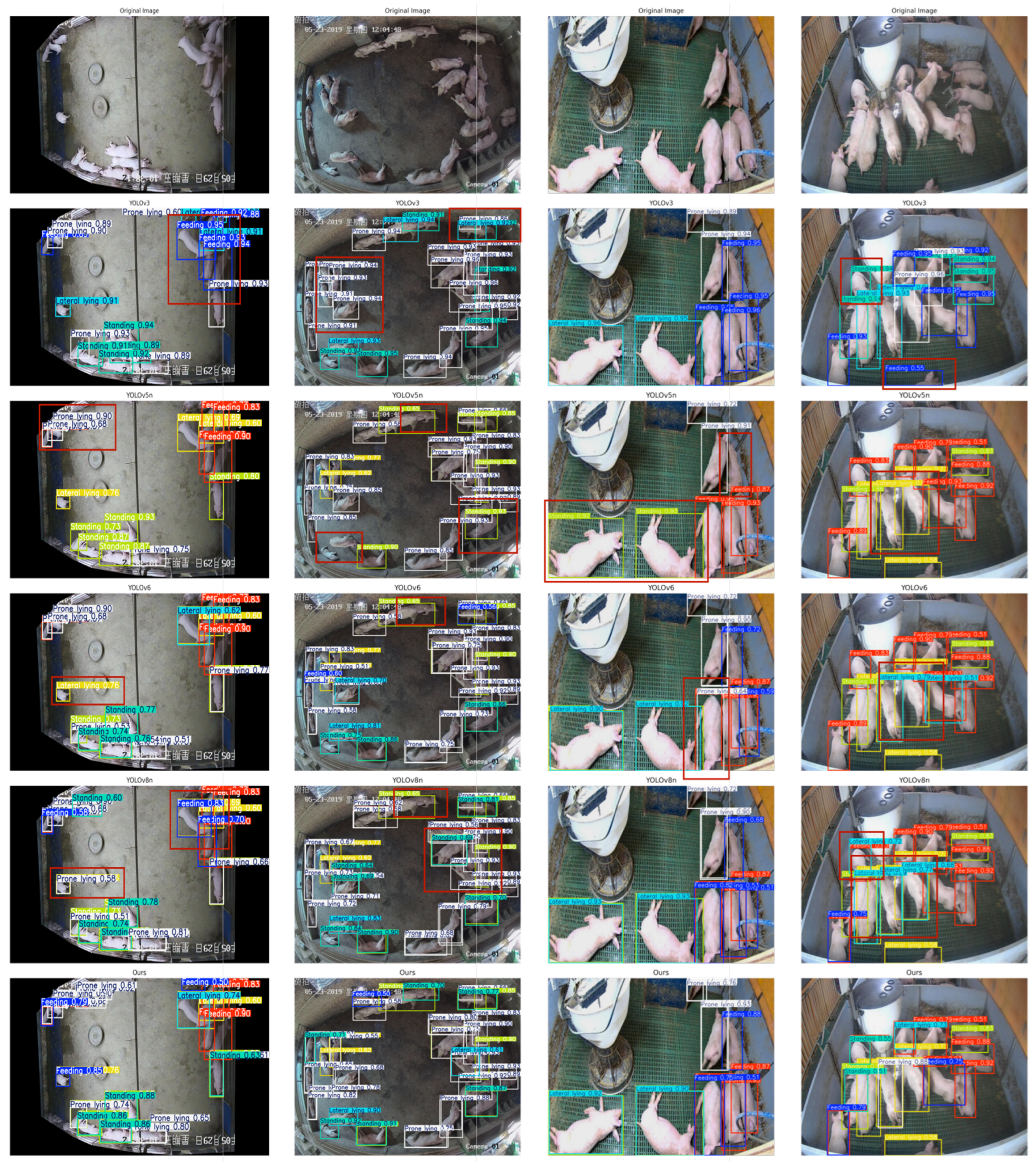

4. Discussion

The proposed lightweight framework, building upon YOLOv8n, achieved a competitive mAP@50 of 92.4% for multi-behavior recognition, with a particularly high accuracy of 94.9% in identifying standing posture. This performance demonstrates the effectiveness of our architectural modifications in addressing key challenges in complex farming environments.

The integration of the SPD-Conv module was essential for preserving fine-grained spatial details. Its spatial-to-depth transformation effectively reduced the limb edge blurring typically associated with standard downsampling. This improvement is crucial for distinguishing subtle posture differences in pigs, such as between prone and lateral lying. The model’s strong performance in detecting small-target behaviors provides quantitative support for this enhancement.

Furthermore, the introduction of the LSKBlock attention mechanism improved feature discrimination across different scales. Its adaptive receptive field capability helped suppress background interference and enhance the focus on distinctive animal contours, leading to robust recognition under varying scene complexities. The high recognition accuracy across all four behaviors indicates that the combined use of SPD-Conv and LSKBlock successfully balanced detailed feature preservation and contextual feature extraction.

When compared with existing literature, the performance of our proposed framework is competitive with, and in some aspects surpasses, that of more complex architectures reported in prior studies. For example, the ECA–YOLO model by Zhao et al. achieved a mAP@50 of 93.2% for sow estrus detection, but their model faced challenges regarding computational complexity and deployment feasibility. Similarly, although the PB-STR Transformer model by Hu et al. can recognize seven behaviors simultaneously, its high computational demand may limit its practical application in resource-constrained environments. In contrast, our model achieves a compelling balance between accuracy and efficiency, obtaining a competitive mAP@50 of 92.4% while being based on a much lighter architecture, thereby showing greater potential for on-site deployment.

While the results are satisfactory, certain limitations of the model cannot be denied. As mentioned earlier, under extreme lighting conditions or severe occlusion, the model’s performance may degrade. This indicates that, although our current feature extraction approach has been improved, it is not yet immune to such disturbances. In addition, the generalization ability of the model across different pig breeds requires further validation with more extensive datasets.

In summary, this study demonstrates that a strategically modified lightweight architecture can achieve high-precision pig behavior recognition. The successful integration of SPD-Conv, LSKBlock, and the small-object detection head provides a valuable reference for detecting pigs in agricultural settings. Future work will focus on enhancing the model’s robustness to environmental variations and exploring its real-time deployment on edge computing devices.

The model achieves a recognition accuracy of 91.2% for pig feeding behavior, enabling real-time monitoring of appetite variations and systematic logging of feeding patterns. When feeding duration decreases by more than 15%, the system alerts managers to potential disease risks. This behavioral precision feeding approach simultaneously optimizes feed utilization. Furthermore, empirical evidence indicates that the ratio of lateral to prone lying positions reflects environmental comfort levels within the pigsty. During elevated temperatures, pigs exhibit a preference for the prone position to facilitate abdominal heat dissipation, while under cooler conditions, they favor lateral recumbency to minimize body surface contact with the ground [42]. Consequently, the model’s continuous detection and statistical analysis of lateral and prone posture proportions provide valuable data for informing environmental control decisions within the pigsty.
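The two monitoring rules described above can be sketched as simple functions. The 15% threshold comes from the text; everything else is an assumption for illustration, including the function names, the use of minutes per day as the unit, the choice of baseline, and the example numbers.

```python
def feeding_alert(baseline_min: float, current_min: float,
                  threshold: float = 0.15) -> bool:
    """True when feeding duration has dropped by more than `threshold`."""
    if baseline_min <= 0:
        raise ValueError("baseline feeding duration must be positive")
    drop = (baseline_min - current_min) / baseline_min
    return drop > threshold


def lateral_share(lateral_count: int, prone_count: int) -> float:
    """Fraction of lying detections that are lateral rather than prone."""
    total = lateral_count + prone_count
    return lateral_count / total if total else 0.0


# Illustrative values only.
print(feeding_alert(baseline_min=100, current_min=80))  # True  (20% drop)
print(feeding_alert(baseline_min=100, current_min=90))  # False (10% drop)
print(lateral_share(lateral_count=30, prone_count=90))  # 0.25
```

In practice the baseline would need to be defined per pen or per animal, for example as a rolling average over preceding days, which the text leaves open.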

The model’s varying performance under different lighting conditions offers important insights into its strengths and limitations. While it performs very well under warm and strong light, where the LSKBlock and SPD-Conv work effectively together, its performance drops by about 1.2% in low-light situations. This highlights a significant challenge for real-world use. We believe that this decline is not only due to limited data but is also related to the model’s design. In low light, the large kernels in the LSKBlock may combine noisy areas, creating false features. At the same time, the SPD-Conv module, which normally preserves important details, can also amplify sensor noise and heat signatures from groups of animals. Additionally, the consistently lower detection rate for feeding behavior across all lighting conditions suggests that this activity may involve finer details that are not fully captured by our current features. The higher missed detection rate for darker-skinned pigs in low light further indicates a need for more diverse datasets to improve fairness and generalization. Therefore, future work should focus not only on adding more data but also on designing better models that can adapt to different lighting and reduce the impact of noise.

Under strong light, the contours of standing pigs become more distinct, leading to a clear improvement in detection performance. In contrast, feeding behavior is more susceptible to factors such as reflections, motion blur, loss of mouth detail, and glare from metal troughs. Therefore, it is recommended that future studies employ local enhancement techniques or multi-modal fusion (e.g., with infrared imaging) to address these challenges.

The limitations identified in this study, particularly under low-light conditions and with complex behavioral sequences, point the way for future research. To overcome these constraints, subsequent work will focus on three main directions. First, building on the real-time capability of our lightweight framework, we will expand the range of detectable behaviors to cover more welfare indicators. Second, and most importantly, the performance drop in low light strongly supports the integration of infrared imaging. This will help create a detection module that works regardless of lighting, directly addressing a key weakness found in our experiments. Finally, to go beyond static posture recognition, we plan to incorporate Transformer-based models to analyze behavioral sequences over time, such as feeding and excretion. This step from single-frame detection to sequence analysis is essential for progressing to predictive anomaly detection and smarter management systems.

Our model can accurately identify pig postures such as standing and lying, making automated behavior monitoring more practical. For example, it reliably detects standing behavior with 94.9% accuracy, which can help managers identify health issues early and intervene promptly. If a pig’s standing pattern changes, the system can alert managers to potential problems such as lameness. The model also distinguishes between lateral and prone lying postures, providing an objective way to assess animal comfort and welfare. Although full integration with veterinary knowledge for computer-aided diagnosis remains a future goal, our model offers a robust tool for collecting high-quality, long-term behavioral data. This data is essential for linking specific behavioral changes to diseases. Overall, this study not only provides an effective recognition model but also supports the transition toward data-driven management strategies that can improve both animal welfare and farm profitability.