1. Introduction

Aquaculture is a major producer of aquatic products and contributes significantly to global food and nutrition security. It is expected to expand further owing to the growing global population and rising affluence [1]. Information on fish size and mass is crucial in aquaculture because it supports precise feeding, optimization of stocking density, and growth prediction [2,3,4]. Traditional methods measure fish size and mass through direct human handling. These methods are time-consuming and can induce stress responses that reduce growth rate and compromise fish health [5,6]. Non-contact methods for measuring fish size and mass are therefore urgently needed. Such methods can replace contact-based approaches and reduce human intervention, thereby improving aquaculture efficiency.

Machine learning and deep learning have been widely applied across numerous domains to address many core problems [7,8,9,10]. In aquaculture, these techniques have been employed to extract fish body features and to enable non-contact monitoring of size and mass [11,12,13,14]. From a computer-vision perspective, non-contact size and mass estimation methods fall into two categories: monocular-vision approaches [15,16] and stereo-vision approaches [17,18].

Monocular cameras offer a simple structure and low cost. Monocular methods typically constrain the swimming direction of the fish or place a reference object of known size beside the fish so that pixel measurements can be mapped to physical dimensions [19,20,21]. For example, the length of European sea bass was measured using ArUco fiducial markers attached to polypropylene plates [22]. In another study, the Principal Component Analysis–Calibration Factor (PCA–CF) method was applied to extract image features, and a Backpropagation Neural Network (BPNN) was employed to estimate fish mass [11]. Other studies extracted fish length, mass, and color information from images and modeled the length–mass relationship mathematically [23]. Although monocular methods produce useful results, they generally require fixing the fish on a specific plane or using reference objects. These requirements restrict such methods to single-fish measurements and limit their applicability in real-world environments. To address this limitation, stereo-vision techniques have been adopted to estimate the size and mass of freely swimming fish underwater.
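As a rough illustration of the reference-object approach to mapping pixel measurements to physical dimensions, the sketch below converts a pixel length into centimetres using a marker of known size. It assumes that the marker and the fish lie in the same plane, parallel to the image plane, and that lens distortion has already been corrected. The function names and numbers are hypothetical and are not taken from the cited studies.

```python
# Minimal sketch of monocular pixel-to-physical scaling with a reference object.
# Assumption: marker and fish are coplanar and parallel to the image plane,
# and lens distortion has already been removed.

def scale_cm_per_pixel(reference_length_cm: float, reference_length_px: float) -> float:
    """Physical length represented by one pixel, derived from the reference object."""
    if reference_length_px <= 0:
        raise ValueError("reference length in pixels must be positive")
    return reference_length_cm / reference_length_px


def pixel_to_cm(length_px: float, cm_per_px: float) -> float:
    """Convert a pixel measurement to centimetres using the scale factor."""
    return length_px * cm_per_px


# Hypothetical example: a 10 cm marker spans 200 px and the fish spans 560 px.
cm_per_px = scale_cm_per_pixel(10.0, 200.0)  # 0.05 cm per pixel
fish_length_cm = pixel_to_cm(560.0, cm_per_px)
print(f"{fish_length_cm:.1f} cm")  # 28.0 cm
```

The coplanarity assumption explains why these methods constrain the swimming direction or fix the fish in place. A fish that is closer to or farther from the camera than the marker would be scaled incorrectly.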

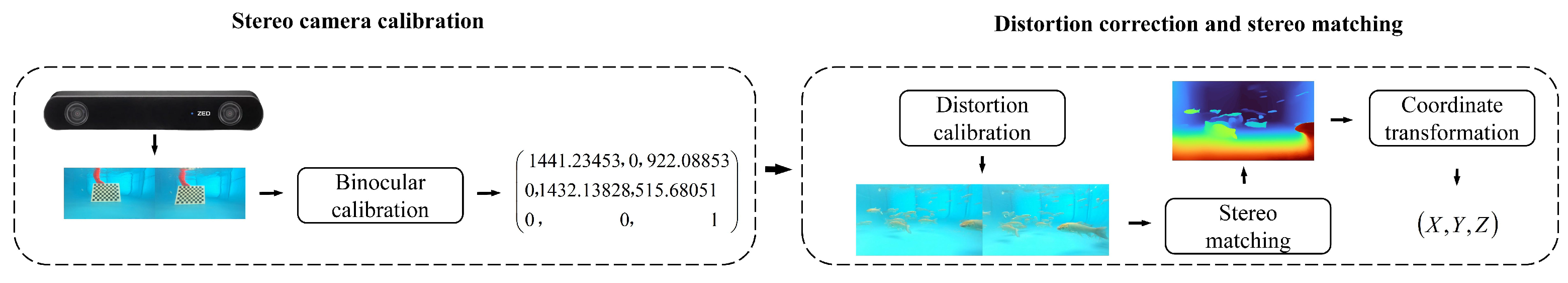

Compared with two-dimensional vision, stereo vision can recover the three-dimensional spatial information of objects more completely and accurately, which enables the extraction of higher-dimensional features [24,25,26]. In aquaculture, binocular stereo vision captures fish images with left and right cameras and then uses stereo matching algorithms to calculate the depth of the fish body. Three-dimensional fish body data are thus acquired without placing reference objects or fixing the position of the fish, which allows fish size and mass to be estimated precisely. This technology supports non-contact, dynamic measurement of the size and mass of free-swimming fish in modern intelligent aquaculture systems [27,28,29].
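The depth calculation described above can be sketched for an ideal rectified stereo pair. In that case depth follows from the horizontal disparity d between matched pixels as Z = fB/d, and X and Y follow from the pinhole model. This is a minimal sketch that assumes a calibrated, rectified pinhole rig with focal length f in pixels and baseline B in metres. It ignores refraction at the underwater housing, which real systems usually have to calibrate for. All names and values are illustrative.

```python
import numpy as np


def triangulate_rectified(u_left, v_left, u_right, f_px, baseline_m, cx, cy):
    """Back-project a matched pixel pair from a rectified stereo rig.

    Returns (X, Y, Z) in metres in the left-camera frame. Assumes square
    pixels (same focal length in x and y) and no refraction correction.
    """
    disparity = u_left - u_right  # horizontal shift between the two views (px)
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    z = f_px * baseline_m / disparity  # depth from disparity: Z = f * B / d
    x = (u_left - cx) * z / f_px       # pinhole back-projection
    y = (v_left - cy) * z / f_px
    return np.array([x, y, z])


# Illustrative rig: f = 1000 px, baseline = 0.12 m, principal point (640, 360).
point = triangulate_rectified(740.0, 400.0, 680.0,
                              f_px=1000.0, baseline_m=0.12, cx=640.0, cy=360.0)
print(point)  # X = 0.2 m, Y = 0.08 m, Z = 2.0 m (up to float rounding)
```

Differentiating Z = fB/d shows that a disparity error Δd causes a depth error of about Z²/(fB)·Δd. The error therefore grows roughly with the square of the distance, so fish far from the rig are measured less accurately.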

The CamTrawl system processes fish images via background subtraction and combines binocular stereo vision with a triangulation model to estimate fish length [30]. In another study, fish body images were processed with local threshold segmentation and geometric model fitting, and tuna length was estimated by combining a deformable ventral contour model with binocular stereo vision [31]. A LabVIEW-based underwater method estimates the mass of free-swimming fish without contact by integrating binocular stereo vision with a linear model of fish area [32]. These methods can extract fish features quickly and accurately. However, complex underwater environments introduce diverse fish postures and light refraction. Relying solely on mathematical fitting may therefore reduce feature extraction accuracy and increase the errors in estimated fish size and mass.
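A linear area model of the kind used in [32] can be fitted by ordinary least squares. The sketch below assumes the model has the form mass = k · area + b. The calibration values are invented for illustration (they lie exactly on mass = 1.8 · area − 20) and are not data from the cited work.

```python
import numpy as np

# Hypothetical calibration pairs: projected body area (cm^2) and weighed mass (g).
area_cm2 = np.array([40.0, 55.0, 70.0, 85.0, 100.0])
mass_g = np.array([52.0, 79.0, 106.0, 133.0, 160.0])

# Ordinary least-squares fit of mass = k * area + b (degree-1 polynomial).
k, b = np.polyfit(area_cm2, mass_g, deg=1)


def predict_mass(area):
    """Estimate mass (g) from projected area (cm^2) using the fitted line."""
    return k * area + b


print(f"k = {k:.2f} g/cm^2, b = {b:.2f} g")
print(f"predicted mass at 60 cm^2: {predict_mass(60.0):.1f} g")
```

Under roughly isometric growth, mass scales with volume (about length cubed), whereas projected area scales with length squared. A linear area model is therefore best treated as an approximation that holds only over the calibrated size range. This is one reason why pure fitting approaches lose accuracy when fish sizes and postures vary.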

To further improve the accuracy of fish feature extraction, deep learning has been integrated with binocular vision technology to estimate fish size and mass. The key points of fish bodies were extracted by utilizing the key point detection network key points R-CNN. Three-dimensional information of these key points was then obtained via 3D reconstruction of the fish body. Finally, fish body length was calculated using the three-dimensional Euclidean distance [

33]. Deep stereo matching based on Neural Radiance Fields (NeRFs) has been applied for the accurate depth estimation of fish surfaces. When coupled with instance segmentation, this approach yields edge key points for bass, which are used to compute perimeters and estimate mass [

34]. An SE-D-KP-RCNN network has been proposed for fish key point detection, with integration with CREStereo deep stereo matching, enabling the fitting of spatial planar curves to derive fish dimensions [

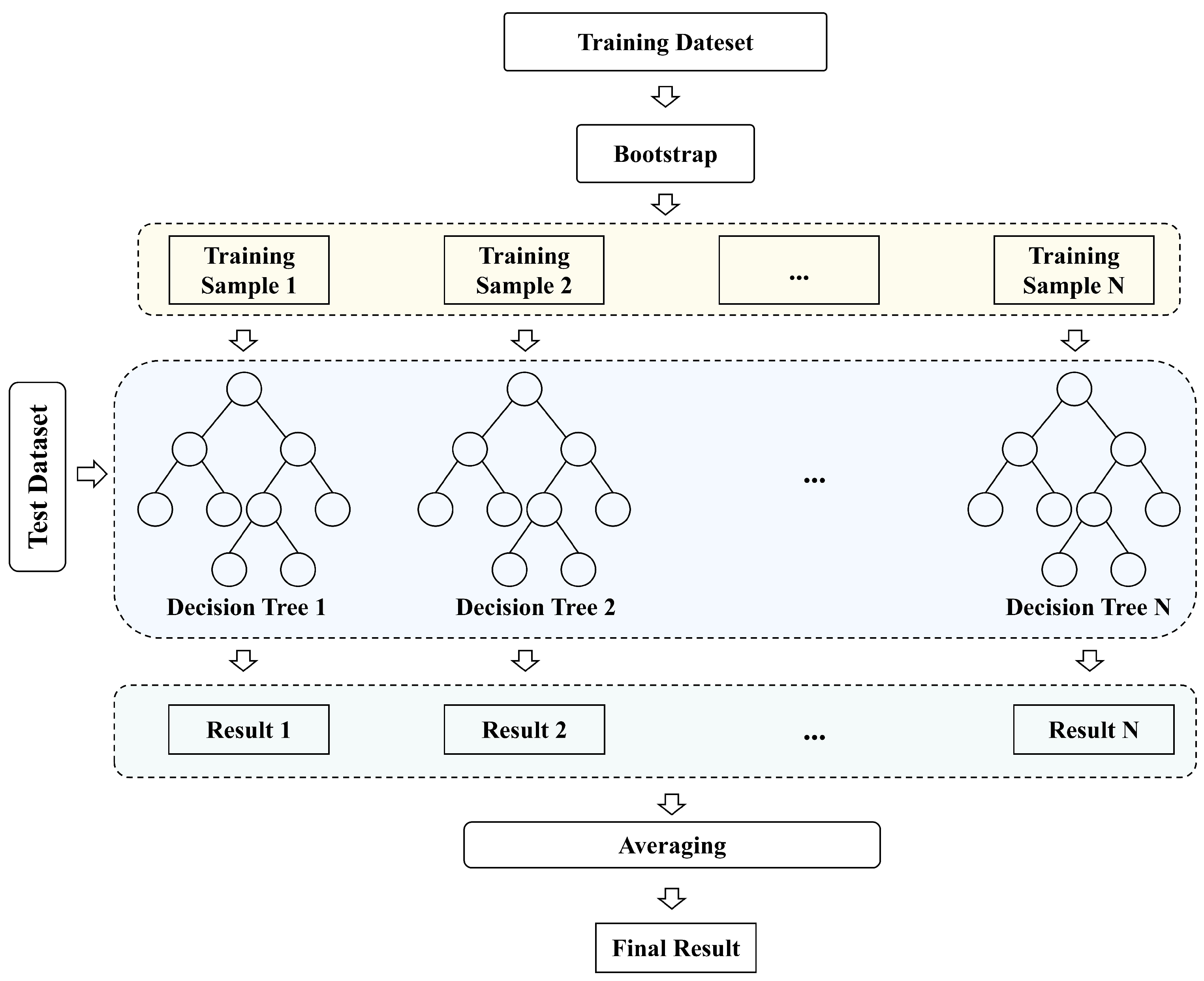

35]. Instance-segmentation methods have been used to extract fish contours; Principal Component Analysis (PCA) then corrects the orientation to obtain key points, and point-cloud processing combined with XGBoost is applied to estimate fish mass [

36]. Multi-task networks capable of concurrently executing key point detection, segmentation, and stereo matching have been developed to extract morphological features. Subsequently, a segmented fitting strategy was employed for body length measurement [

37]. Although these deep learning methods have improved the accuracy of fish feature extraction, factors such as fish body overlap, occlusion, body curvature, and adhesion affect feature extraction in dense aquaculture environments [

38]. Moreover, in practical aquaculture scenarios, the data often contains a great deal of uncertainty [

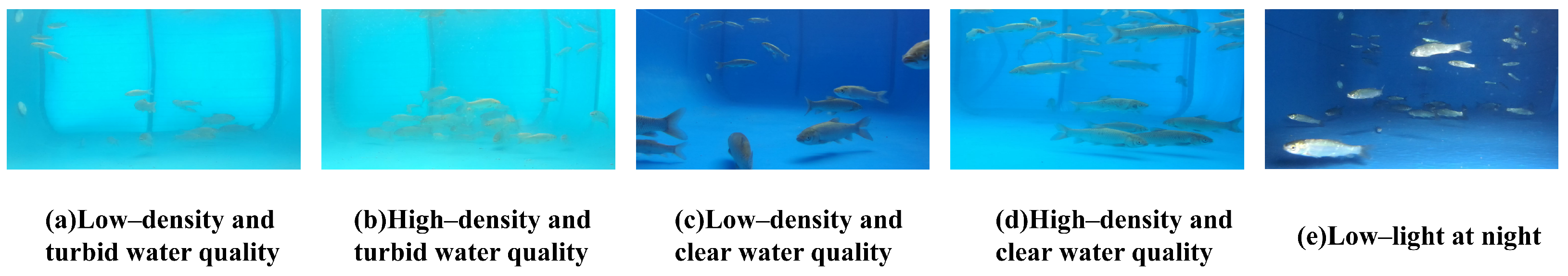

39]. Rapid changes in illumination and increased water turbidity—frequently caused by suspended white flocculent matter—degrade the quality of captured fish images and hinder reliable feature extraction [

40,

41]. These problems affect the accuracy and stability of feature extraction, further influencing the estimation accuracy of fish body size and mass.
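Once key points have been triangulated, body length can be computed in two ways. It can be the straight-line (Euclidean) distance between the snout and tail points, as in [33]. Alternatively, it can be the sum of segment lengths along a chain of key points, in the spirit of segmented fitting [37]. The sketch below contrasts the two measures on a hypothetical bent fish. The key-point coordinates are invented, and the polyline sum is a simplification rather than the fitting procedure of the cited work.

```python
import numpy as np


def straight_length(head, tail):
    """Euclidean distance between two 3D key points."""
    return float(np.linalg.norm(np.asarray(tail, dtype=float) - np.asarray(head, dtype=float)))


def polyline_length(points):
    """Sum of segment lengths along an ordered chain of 3D key points."""
    pts = np.asarray(points, dtype=float)
    return float(np.linalg.norm(np.diff(pts, axis=0), axis=1).sum())


# Hypothetical key points (cm): snout, mid-body, tail fork of a bent fish.
keypoints = [(0.0, 0.0, 100.0), (12.0, 5.0, 100.0), (24.0, 0.0, 100.0)]
print(straight_length(keypoints[0], keypoints[-1]))  # 24.0
print(polyline_length(keypoints))                    # 26.0
```

On a curved body, the straight-line distance underestimates length (24 cm versus 26 cm in this example). This illustrates why body curvature affects size estimates.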

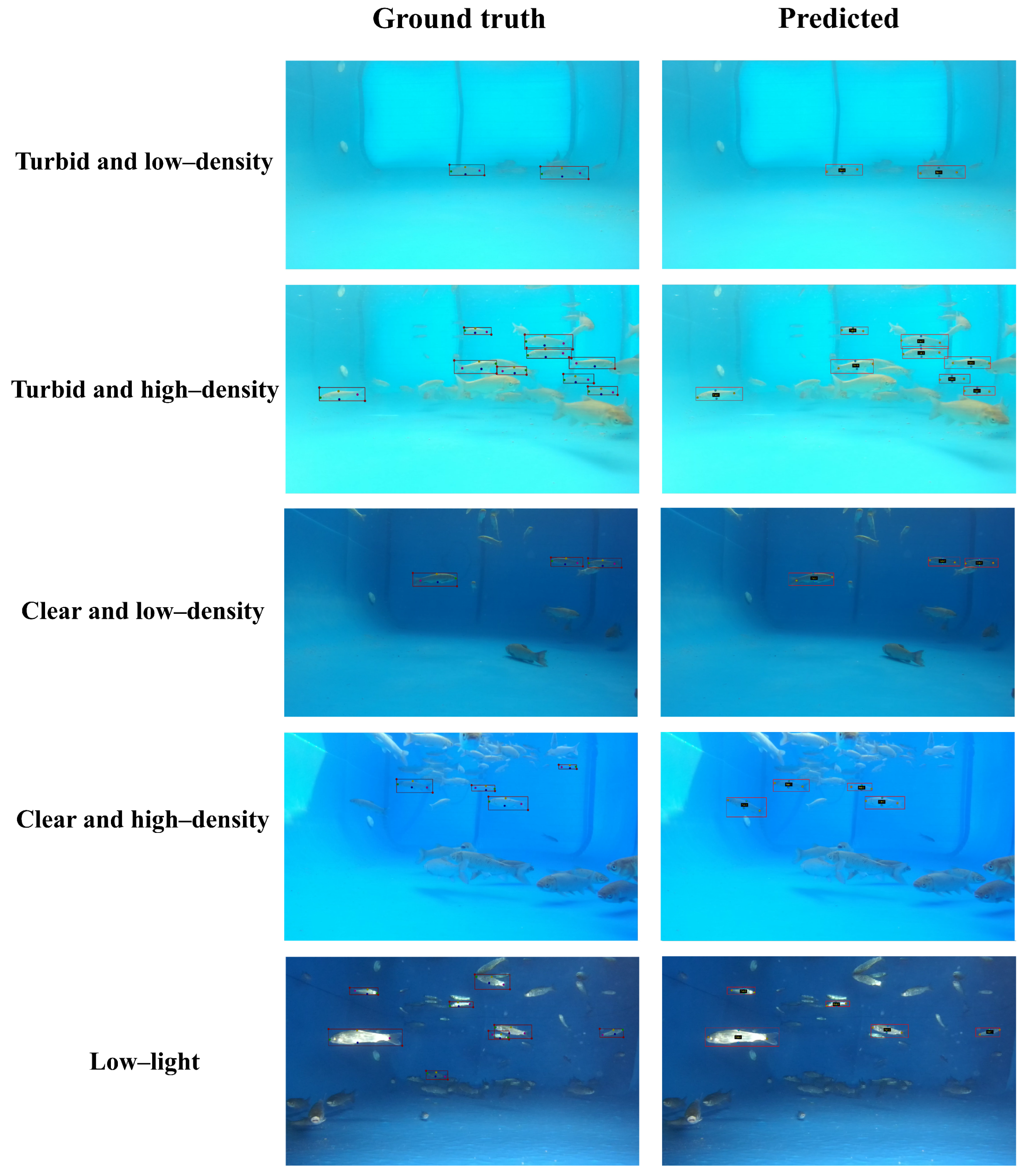

Previous studies were predominantly conducted under ideal conditions characterized by low stocking density, stable lighting, and clear water. Consequently, these methods are ill-equipped to handle the complex challenges of real-world aquaculture settings, such as high stocking densities, abrupt lighting fluctuations, and turbid water. Some approaches have estimated fish length from surface images. However, surface-based methods cannot meet the requirements for real-time underwater measurement of fish length and mass in deep-sea aquaculture. To overcome these limitations, this paper proposes FishKP-YOLOv11, a novel framework for estimating fish length and mass. The framework is trained on datasets spanning diverse water conditions, illumination levels, and stocking densities. It enables automatic, accurate, and non-contact estimation of the size and mass of free-swimming fish in complex, dynamic aquaculture environments. The main contributions of this paper are as follows:

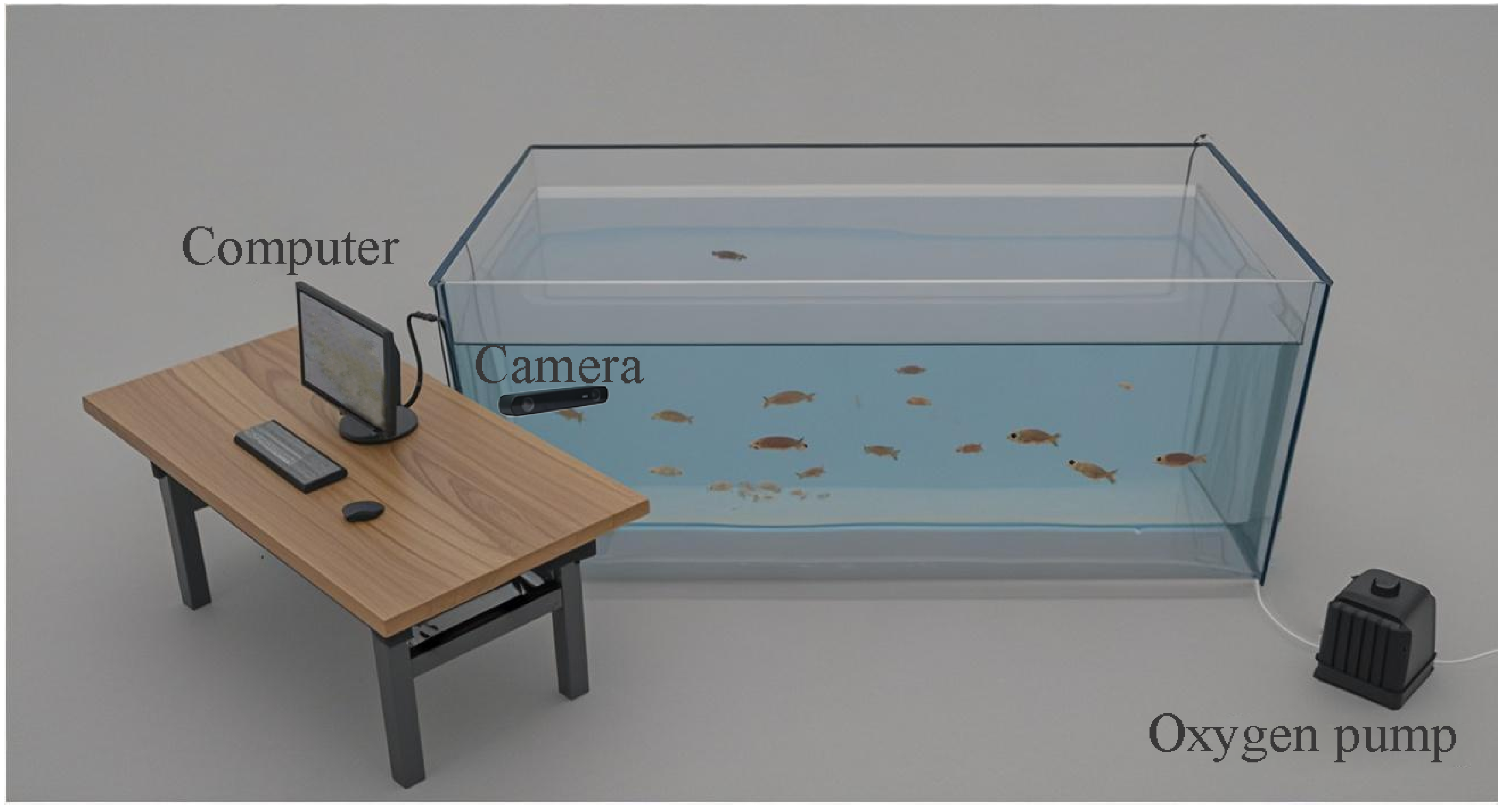

(1) A grass carp dataset was collected with a binocular camera under different water, illumination, and stocking-density conditions. It contains 2674 images with corresponding annotations. This self-built dataset addresses the scarcity of data for this task.

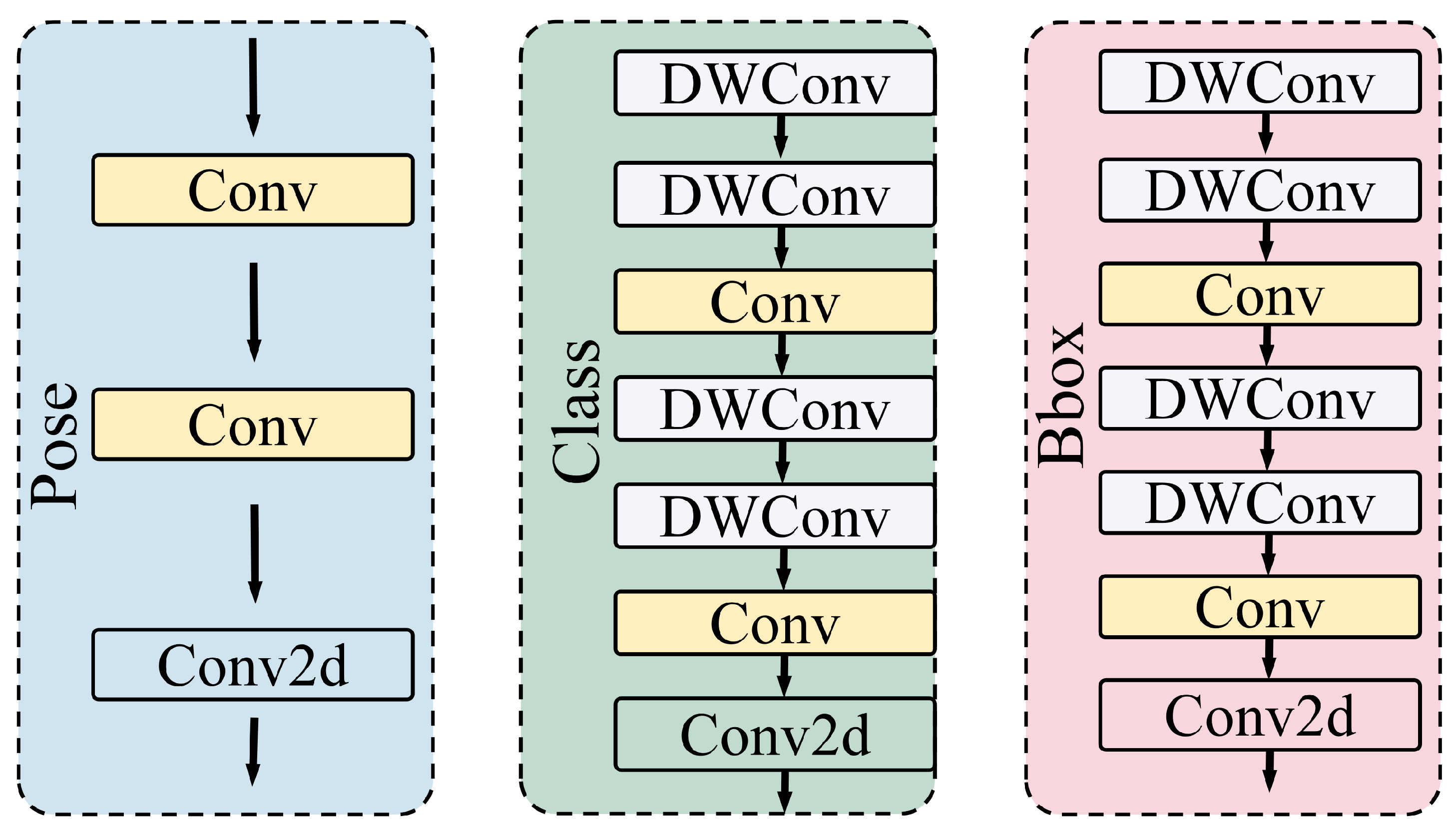

(2) A feature extraction model, HGCBlock (composed of HCEBlock and GDCBlock), was designed to capture discriminative fish features under challenging conditions. A Feature Pyramid Network (FPN) was incorporated to capture fish edges and textures while reducing channel-wise redundancy and computation. The Head network was redesigned to improve both localization accuracy and classification performance.

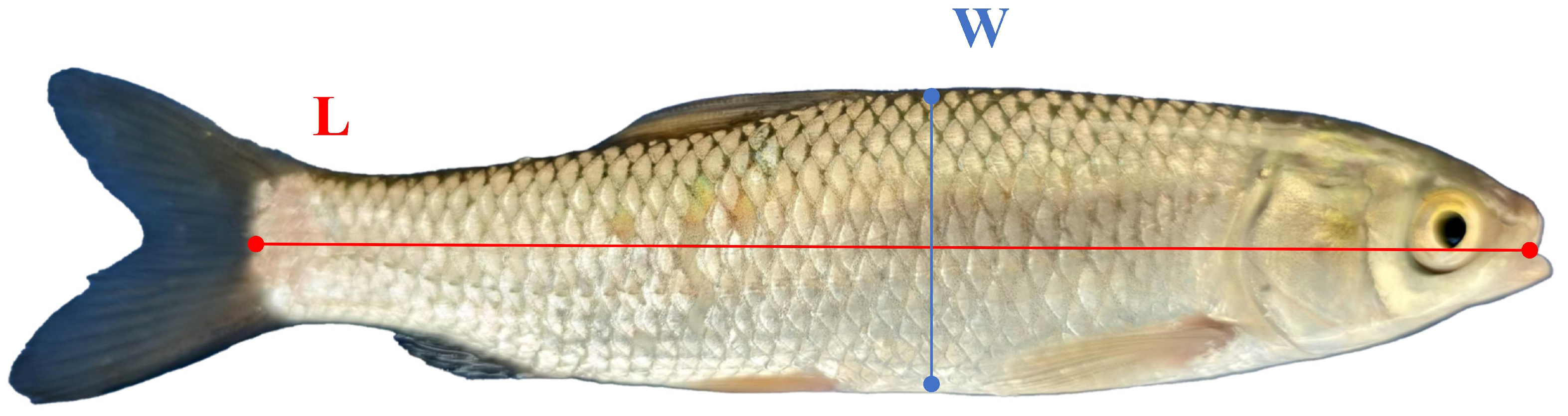

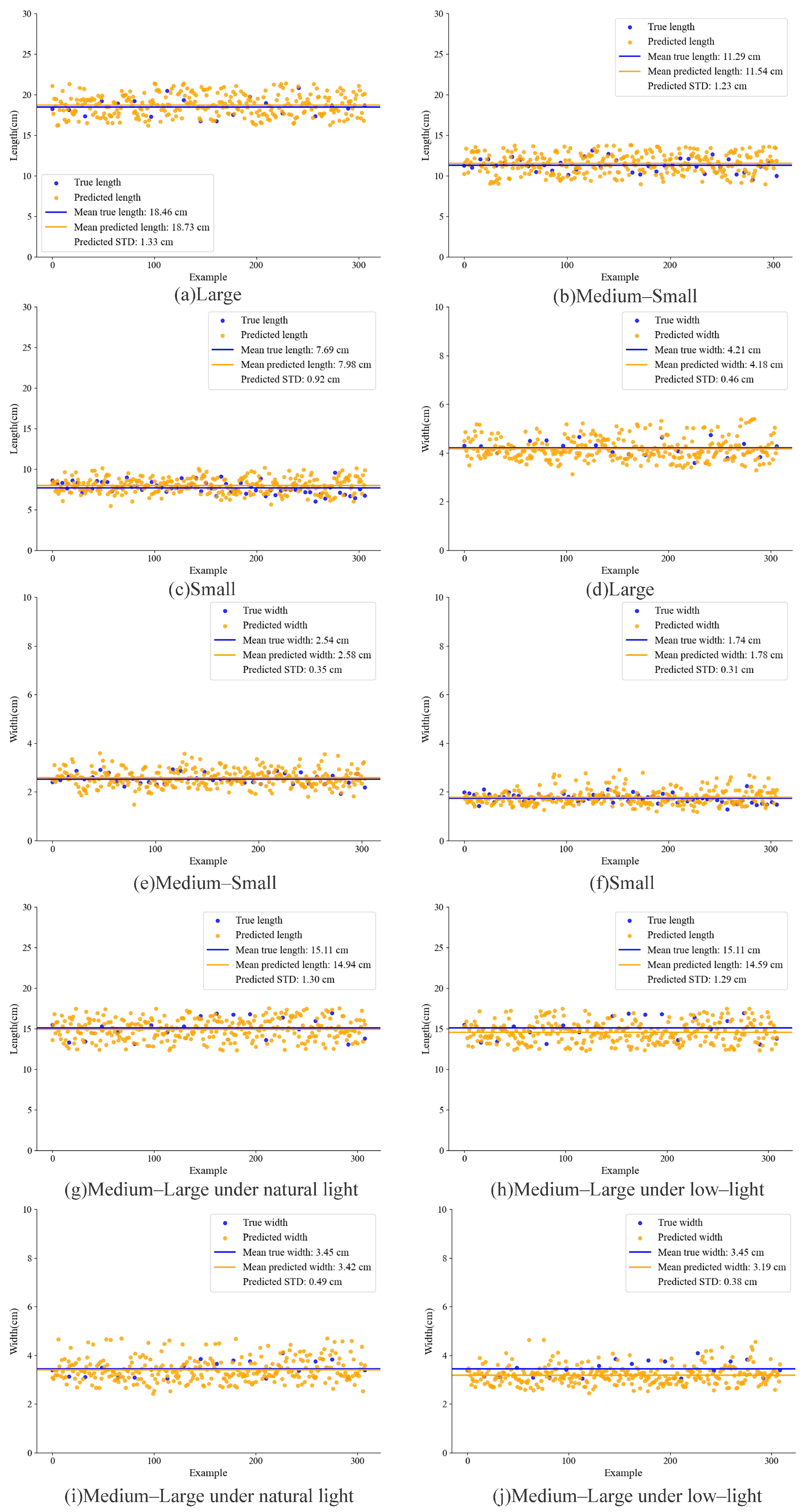

(3) A framework for estimating fish size and mass, called FishKP-YOLOv11, is proposed based on the improved YOLOv11. The detected key points are fused with binocular stereo vision to recover their three-dimensional coordinates. A Random Forest regression model is introduced to map the computed size features to fish mass. The proposed framework achieves accurate, non-contact estimation of fish size and mass in complex aquaculture environments.
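Contribution (3) maps computed size features to mass with Random Forest regression. A minimal scikit-learn sketch of that step follows. Several elements are assumptions made for illustration, not the paper's dataset or configuration: the feature set (length and width), the hyperparameters, and the synthetic data generated from an assumed cubic length–mass rule.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(seed=0)

# Synthetic size features (cm); illustrative only, not the grass carp dataset.
n_fish = 300
length_cm = rng.uniform(10.0, 30.0, n_fish)
width_cm = 0.25 * length_cm + rng.normal(0.0, 0.3, n_fish)

# Assumed cubic length-mass rule plus measurement noise (hypothetical).
mass_g = 0.015 * length_cm**3 + rng.normal(0.0, 5.0, n_fish)

X = np.column_stack([length_cm, width_cm])
X_train, X_test, y_train, y_test = train_test_split(
    X, mass_g, test_size=0.25, random_state=0
)

# Random Forest regression from (length, width) to mass.
model = RandomForestRegressor(n_estimators=200, random_state=0)
model.fit(X_train, y_train)

y_pred = model.predict(X_test)
mae_g = mean_absolute_error(y_test, y_pred)
r2 = model.score(X_test, y_test)
print(f"test MAE: {mae_g:.1f} g, R^2: {r2:.3f}")
```

A tree ensemble does not require the length–mass relationship to be specified in advance. However, it cannot extrapolate beyond the range of sizes seen during training, so the training data should span the expected size range.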

5. Conclusions

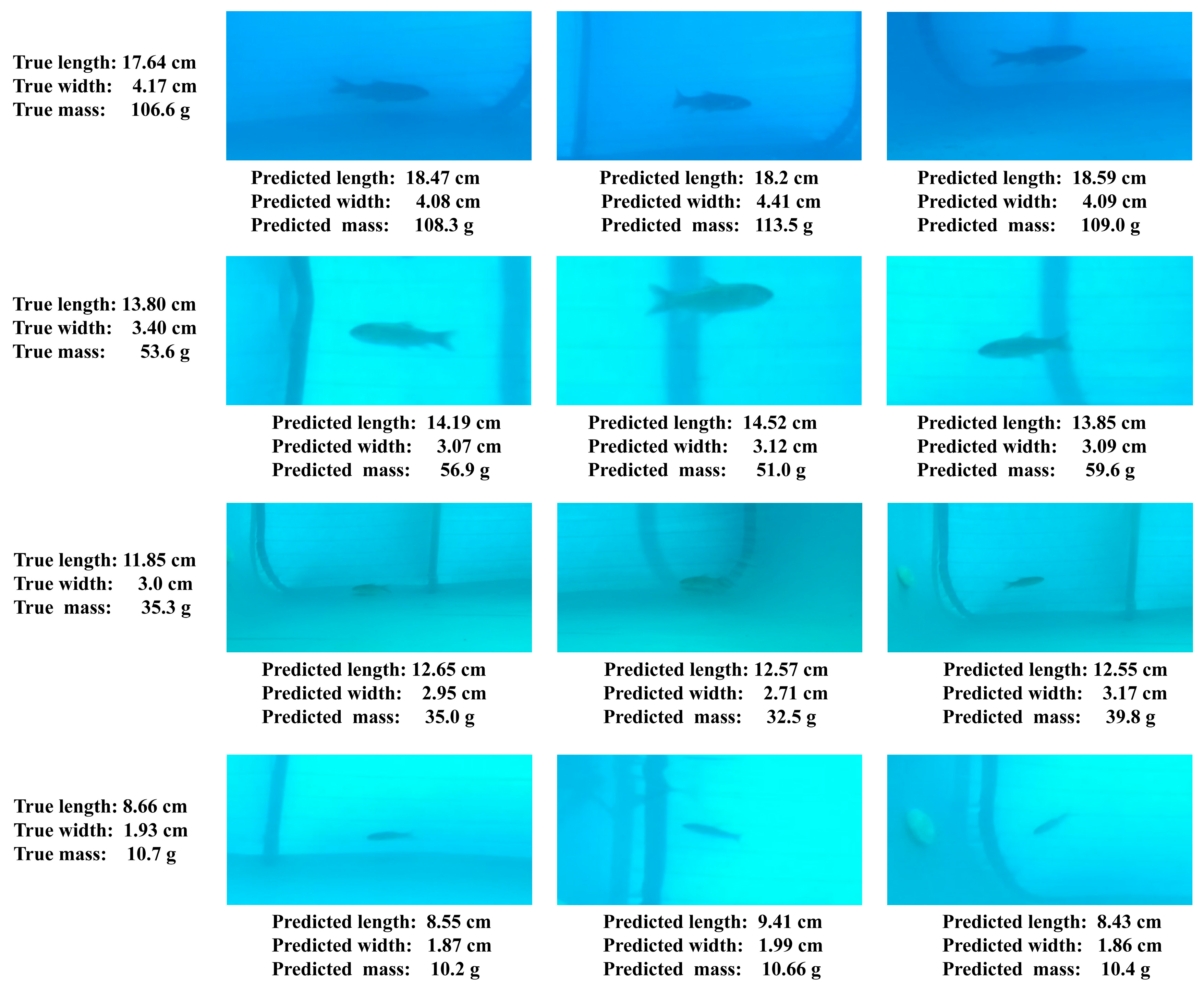

Estimating fish size and mass in actual aquaculture is challenged by complex water quality, variable illumination, and diverse fish postures. The proposed method, FishKP-YOLOv11, is designed for such high-density aquaculture environments. A grass carp dataset was constructed that covers multiple water qualities, lighting conditions, and stocking densities. To address the difficulty of extracting fish body features in complex underwater scenes, a key point detection model was proposed whose core feature extractor is composed of HCEBlock and GDCBlock, and a Feature Pyramid Network (FPN) was incorporated. In addition, the classification and bounding box regression branches of the Head network, which support key point detection, were redesigned. Compared with the baseline YOLOv11 model, FishKP-YOLOv11 improved object detection mAP50 by 4.3% and key point detection mAP50 by 4.2%, reaching 91.8% and 91.7%, respectively. The model also outperformed multiple versions of YOLOv5, YOLOv6, YOLOv8, YOLOv9, YOLOv10, YOLOv11, and YOLOv12. A size computation method combined the detected key points with stereo vision to estimate body length and width. On the grass carp dataset, the mean absolute error (MAE) between estimated and measured body length was 0.35 cm, and the MAE for body width was 0.1 cm. Average sizes were also estimated for groups of different sizes and for groups under varying illumination, and the estimated means closely matched the measured means. A Random Forest-based mass prediction model was then developed. In practical mass prediction tests, the MAE between predicted and actual mass was 2.7 g. The predicted mean masses for groups of different sizes and under different lighting conditions were close to the corresponding measured means.
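The per-fish errors reported above are mean absolute errors, MAE = (1/n) Σ |ŷᵢ − yᵢ|. A short sketch of the computation follows. The length values are hypothetical.

```python
def mean_absolute_error(predicted, measured):
    """Mean of |predicted - measured| over paired samples."""
    if len(predicted) != len(measured) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - m) for p, m in zip(predicted, measured)) / len(predicted)


# Hypothetical per-fish body lengths (cm).
estimated = [25.3, 27.9, 30.2]
measured = [25.0, 28.3, 30.0]
print(round(mean_absolute_error(estimated, measured), 2))  # 0.3
```

MAE averages per-fish errors, so it is a stricter criterion than comparing group means, where over- and underestimates can cancel each other.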
In summary, the proposed method for fish size and mass prediction demonstrated strong performance and robustness in complex tank environments and shows potential for practical application in aquaculture, providing an accurate approach for size and mass monitoring.

To improve the applicability of the fish size and mass estimation framework in practical aquaculture, future research will focus on several directions. Firstly, full-angle key point annotation methods will be investigated in combination with image enhancement techniques. This approach aims to reduce size estimation errors caused by curved fish postures and to strengthen the model’s generalization to complex body poses. Secondly, the framework will be extended to multi-species scenarios. In this process, physiological and structural differences among fish species will be incorporated to establish more universal size and mass prediction models. Moreover, although strong correlations exist between fish length or width and mass, these relationships tend to weaken as fish grow. Therefore, future work will integrate additional morphological features, such as the fish’s girth, to improve estimation accuracy and robustness. Finally, the framework will be validated through large-scale trials and long-term verification in multiple aquaculture systems, ensuring stability and supporting future industrial applications.