1. Introduction

Broiler farming occupies a central position in the global poultry industry. It supplies large quantities of lean meat protein and creates employment for millions of people worldwide [1,2]. Modern intensive broiler production relies on high stocking densities within controlled-environment houses [3], which maximizes output but limits the birds’ thermoregulatory capacity and behavioral options. In recent years, increasingly frequent extreme high-temperature events driven by climate change have seriously threatened the stable development of this industry [4]. Because broilers lack sweat glands, they dissipate heat mainly through respiratory evaporation (manifested as open-mouth breathing) and behavioral adjustments such as wing spreading and reduced activity [5,6,7]. Exposure to heat stress triggers a cascade of physiological responses, including hyperventilation, elevated core body temperature, reduced feed intake, and oxidative stress [8,9]. Among observable behavioral indicators, open-mouth breathing is one of the most prominent and readily identifiable visual signatures of heat stress [10]. Transient gaping is an initial adaptive response, but its persistent detection by monitoring systems may indicate escalating stress that can compromise welfare [11]. Monitoring open-mouth behavior therefore provides an effective visual metric for assessing heat stress severity in broilers.

With the continuous growth in global meat consumption, the scale and output of broiler production are also increasing [12], which makes intelligent management technologies particularly important [13]. At the same time, consumers are increasingly sensitive to animal welfare and actively seek products clearly labeled by housing system, such as ‘free-range’ [14,15]. This shift highlights a key challenge: conventional cage systems offer production efficiency but are often criticized for limited space, restricted movement, and the associated animal stress [16]. Behavior monitoring has consequently emerged as a vital component of precision poultry farming. Innovative technologies in this field are no longer developed solely for productivity gains; they increasingly target continuous monitoring of animal behavior to detect welfare or health issues early, which is especially important in high-density production systems [17]. Such monitoring helps optimize the rearing environment, improve animal welfare and production efficiency, and reduce mortality caused by heat stress and disease [18]. Among the behaviors monitored, automatic recognition of open-mouth behavior has become a significant research focus. Automated detection improves monitoring efficiency, reduces observer subjectivity, and provides reliable, continuous data for smart farming systems [19].

Traditional behavior monitoring methods, such as manual observation or video playback, are labor-intensive, inefficient, and impractical for large-scale real-time surveillance [20,21], and therefore fail to meet the needs of modern intensive farming [19]. With rapid advances in artificial intelligence, deep learning-based behavior recognition has become a core technology of smart farming [22,23,24,25,26]. The YOLO series [27], known for fast inference and a lightweight structure, is widely used in poultry behavior recognition, density estimation, and health assessment [24,28,29]. For instance, Broiler-Net by Zarrat Ehsan and Mohtavipour identifies abnormal behaviors [23]. However, accurately detecting subtle movements such as open-mouth behavior remains challenging, particularly under high-density caged conditions, where frequent occlusion and lighting variations markedly reduce recognition accuracy for small targets such as the beak [21,22,25,30]. This matters because high-frequency open-mouth behavior interferes with essential activities such as feeding and resting, leading to dehydration, reduced growth, and mortality [11]. Automated detection of this behavior therefore provides essential data for welfare intervention.

To address these detection limitations, recent studies employ multi-scale feature fusion, attention mechanisms, and behavior-specific priors [28,29]. Chan et al.’s YOLO-Behavior framework supports multi-label recognition across species [24], while Elmessery et al. fused YOLOv8 with infrared imaging for robust multimodal detection [28]. Hybrid CNN-Transformer architectures also show promise [29]. Despite these advances, robust real-time detection of open-mouth behavior in high-density caged environments, which are characterized by occlusion, lighting variations, and small target size, remains challenging. Solutions such as YOLO-Behavior are generic, while Elmessery’s approach requires costly infrared imaging [28]; both limitations hinder practical deployment in commercial broiler houses.

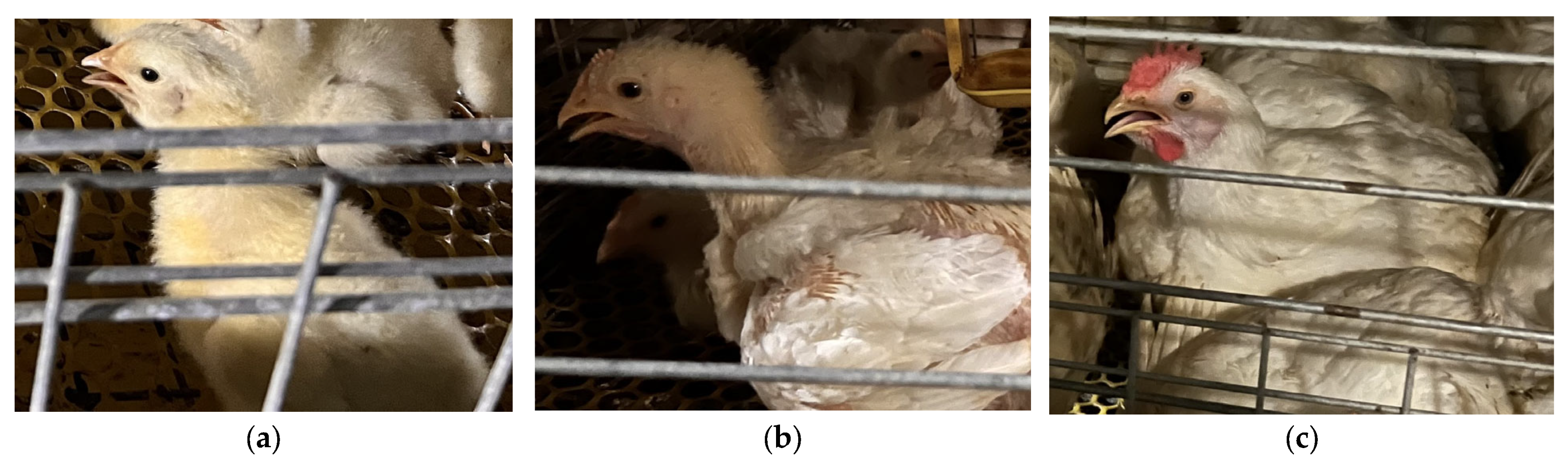

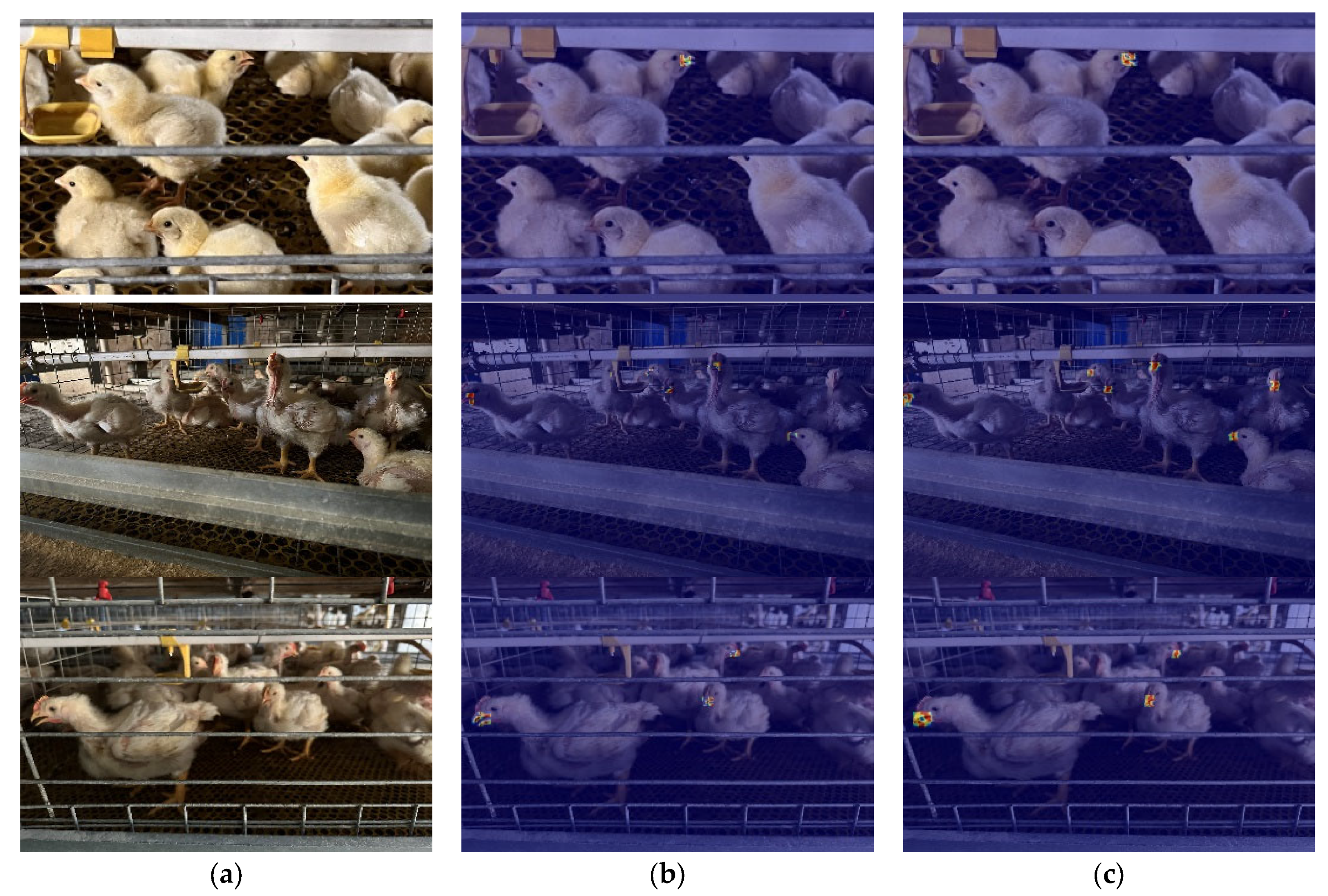

Furthermore, morphological changes during the broiler growth cycle compound the recognition difficulty. As shown in Figure 1, beak characteristics vary significantly across growth stages: in the brooding stage (0–14 days), the beak is short and lightly colored (Figure 1a); in the growing stage (15–28 days), it is of moderate length and darkening in color (Figure 1b); and in the finishing stage (≥29 days), it is fully mature with a deep yellow hue (Figure 1c). To support recognition across the whole growth cycle, this study collected data over the full cycle, from placement to market.

Most poultry climate control systems rely solely on ambient parameters such as temperature, humidity, and airflow [31] and lack real-time animal-based feedback [32]. This disconnect can lead to suboptimal environmental adjustments. Automated detection of open-mouth behavior can bridge this gap: metrics such as gaping frequency correlate with environmental temperature [33], enabling behavior-driven precision climate control.

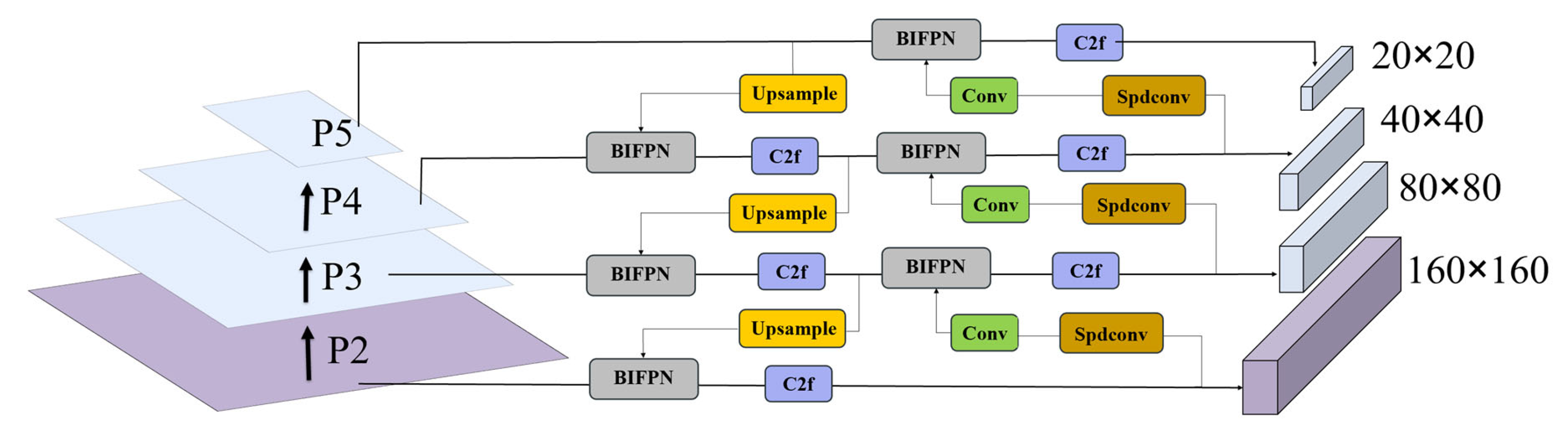

To overcome these challenges, we present OM-YOLO, an enhanced YOLOv8n method tailored for open-mouth behavior detection in commercial cage environments. The method integrates four key innovations:

- (1)

A P2 detection head to improve small-object (beak) detection in dense cages.

- (2)

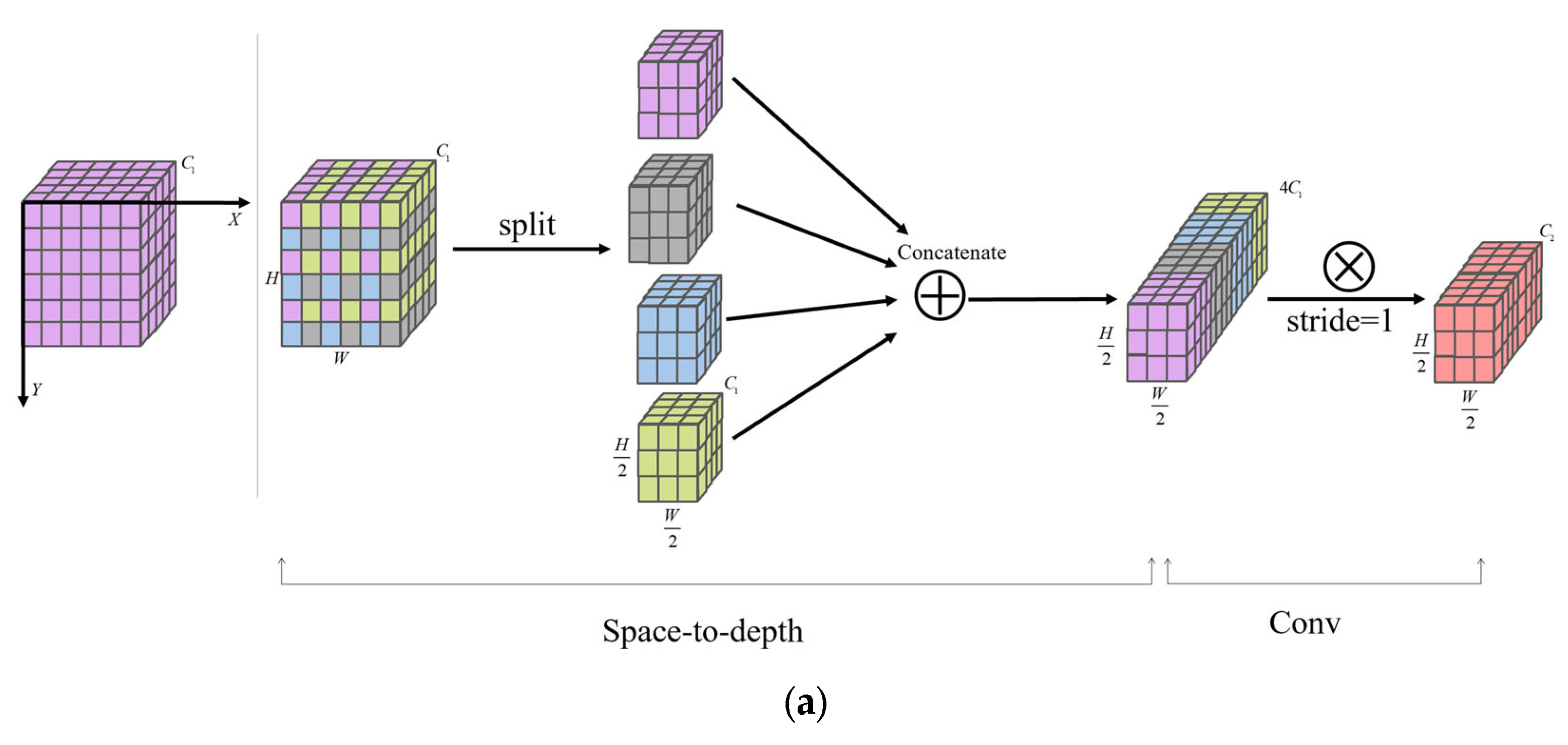

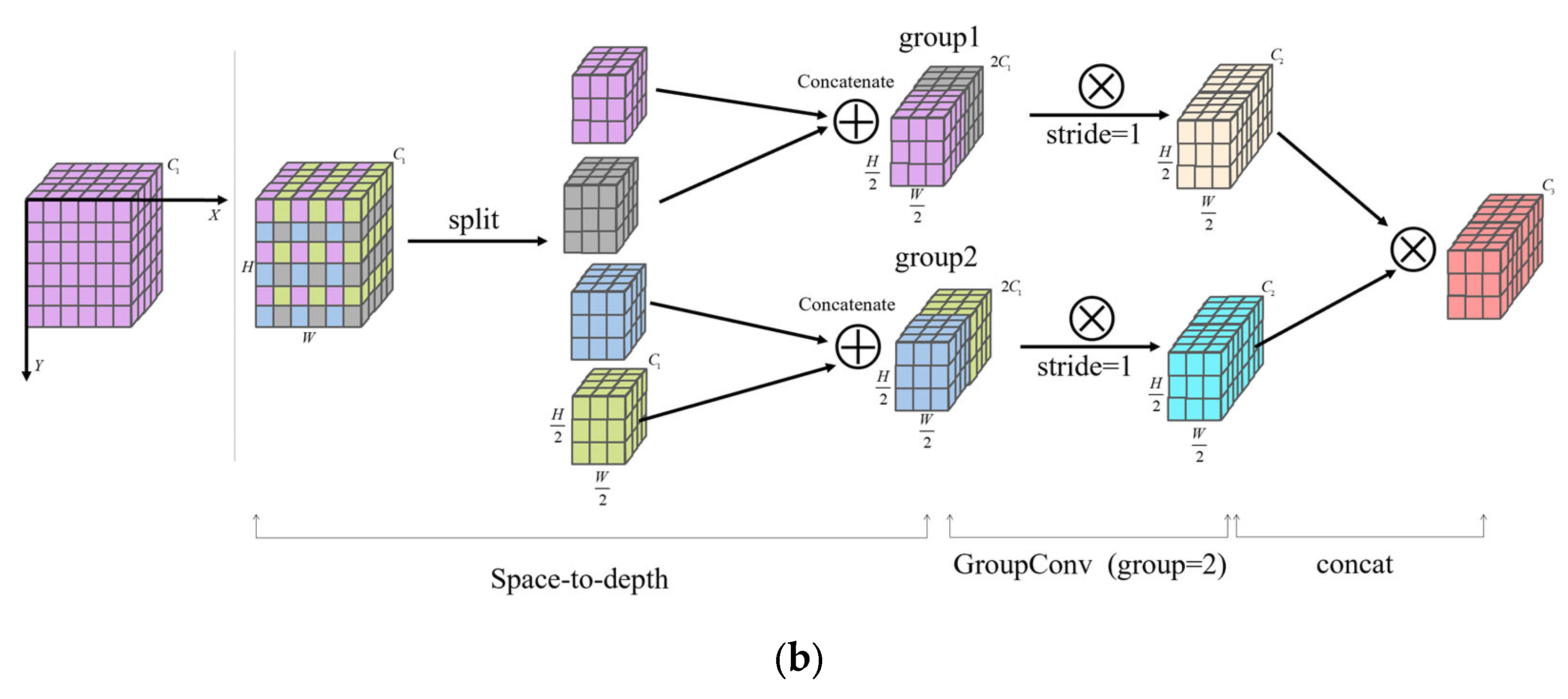

An SGConv module combining space-to-depth transformation with grouped convolution to capture essential local texture/edge features.

- (3)

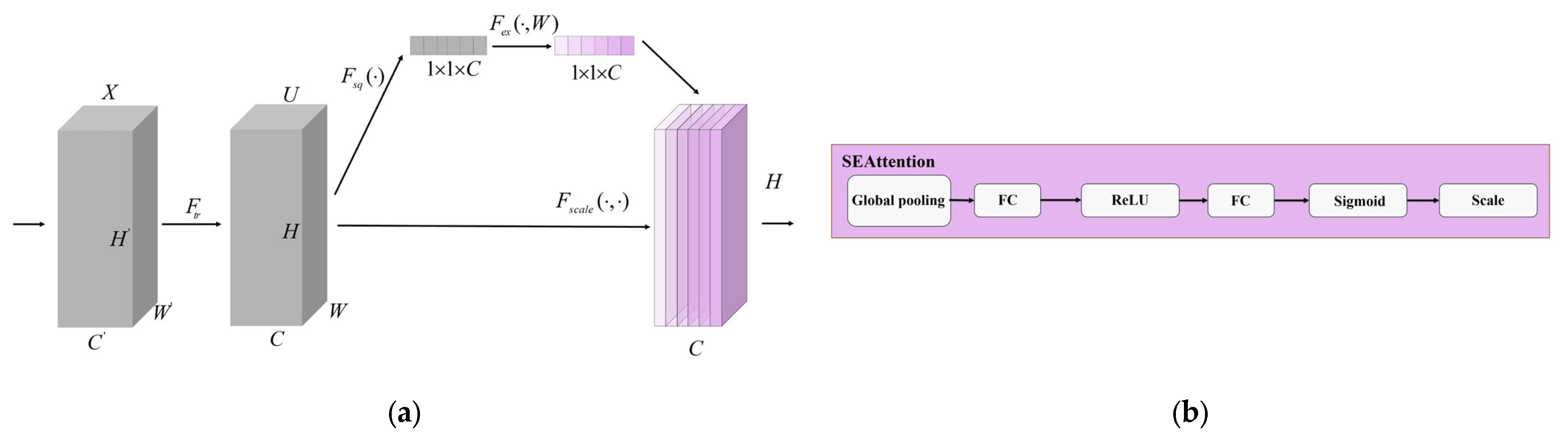

A BIFPN structure to efficiently merge semantic and low-level features.

- (4)

An SE channel attention mechanism to emphasize mouth-related features and suppress background noise.

The primary objective of this study is to develop and evaluate OM-YOLO for robust, real-time open-mouth detection under challenging commercial conditions. We hypothesize these architectural enhancements will achieve superior accuracy and speed over baseline models, providing a vision-based solution for intelligent broiler house management.

3. Results

To ensure the reliability and comparability of the experimental results, all deep learning training parameters were standardized under a strict controlled-variable approach. Batch size, initial learning rate, optimizer, and weight decay coefficient were set identically for all models; the specific settings are listed in Table 4. This standardization minimizes deviations in the results caused by differences in hyperparameters, random fluctuations, or environmental configurations, and provides a reliable basis for the subsequent comparison of model performance.
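To make the controlled-variable setup concrete, a minimal sketch follows in which a single frozen configuration object is shared by every model variant, so that only the model definition differs between runs. The field names mirror Ultralytics training arguments (`batch`, `lr0`, `optimizer`, `weight_decay`, `seed`), but the values are placeholders rather than the Table 4 settings, and the model file names are hypothetical; only the seed of 42 comes from Section 3.5.1.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters shared by every run (placeholder values, not Table 4)."""
    batch: int = 16
    lr0: float = 0.01          # initial learning rate
    optimizer: str = "SGD"
    weight_decay: float = 0.0005
    seed: int = 42             # seed value reported in Section 3.5.1


SHARED = TrainConfig()


def run_kwargs(model_cfg: str, shared: TrainConfig = SHARED) -> dict:
    """Build keyword arguments for one run; only the model definition varies."""
    return {"model": model_cfg, **asdict(shared)}


# Hypothetical model definition files for the baseline and two variants.
variants = ["yolov8n.yaml", "yolov8n-bifpn.yaml", "om-yolo.yaml"]
runs = [run_kwargs(v) for v in variants]

for r in runs:
    print(r)
```

Freezing the dataclass means an accidental per-model override raises an error instead of silently changing a controlled variable.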

3.1. Ablation Experiments

To assess the individual and combined contributions of each structural enhancement, we conducted ablation experiments on the YOLOv8n baseline model, evaluating four modules: SEAttention, BiFPN, the P2 small-object detection head, and SGConv. Note that the ablation studies in Section 3.1 and the model comparison in Section 3.3 were conducted under our initial experimental setup, which was later found to carry a potential risk of data leakage. These results guided our architectural design choices and show clear performance trends, but the definitive validation of OM-YOLO’s advantage over the YOLOv8n baseline rests on the leakage-free experiments in Section 3.5. The results in Section 3.1 and Section 3.3 are therefore presented as supporting evidence for our design process and for completeness. The experiments followed three principles: controlled-variable comparison, independent module evaluation, and multi-dimensional performance analysis.

3.1.1. Individual Module Experiments

In this phase, each module was integrated independently into the baseline YOLOv8n model to examine its isolated effect on detection performance. The evaluation results, including Precision, Recall, mAP@50, mAP@50–95, parameter count, and computational cost, are summarized in Table 5.

In the task of detecting open-mouth behavior in broilers, the method needs to be sensitive to subtle actions such as slight mouth movements and perform reliably in crowded environments where broilers may overlap. The ablation study demonstrates that introducing different modules into the baseline YOLOv8n architecture affects performance in distinct ways, with each module offering particular advantages and trade-offs in terms of accuracy, recall, computational cost, and complexity.

Adding the BiFPN module moderately improved both precision and recall, to 0.806 and 0.853, respectively, and raised mAP@50 and mAP@50–95 to 0.887 and 0.439. These gains suggest that BiFPN improves multi-scale feature representation through weighted fusion across layers. The parameter count and FLOPs also fell, from 3.005 M to 1.991 M and from 8.1 G to 7.1 G, respectively, indicating better computational efficiency. Nevertheless, recall remained slightly below that of the P2 head (0.855), suggesting that BiFPN may still miss some challenging cases.
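The weighted fusion mentioned above can be illustrated with the fast normalized fusion rule introduced with BiFPN in EfficientDet: each input receives a non-negative weight, and the weights are normalized to sum to about one. The NumPy sketch below uses fixed weights (they are learned in a real network) and assumes the inputs were already projected to the same channel count; the feature-map shapes are hypothetical, and OM-YOLO’s exact BiFPN configuration may differ.

```python
import numpy as np


def fast_normalized_fusion(features, raw_weights, eps=1e-4):
    """Fuse same-shape feature maps with non-negative weights normalized to sum to ~1."""
    w = np.maximum(np.asarray(raw_weights, dtype=np.float64), 0.0)  # ReLU on the weights
    w = w / (w.sum() + eps)                                        # fast normalization
    stacked = np.stack(features)                                   # (num_inputs, C, H, W)
    return np.tensordot(w, stacked, axes=1)                        # sum_i w_i * feature_i


def upsample2x(x):
    """Nearest-neighbour 2x upsampling over the last two (H, W) axes."""
    return x.repeat(2, axis=-2).repeat(2, axis=-1)


rng = np.random.default_rng(0)
p4 = rng.standard_normal((64, 40, 40))  # hypothetical P4 feature map
p5 = rng.standard_normal((64, 20, 20))  # hypothetical P5 map, already projected to 64 channels
p4_td = fast_normalized_fusion([p4, upsample2x(p5)], [1.0, 1.0])
print(p4_td.shape)  # (64, 40, 40)
```

The ReLU-plus-normalization form avoids a softmax over the fusion weights, which is the efficiency argument EfficientDet gives for it.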

With the SGConv module, the model achieved the highest precision among the single-module variants (0.835), indicating an improved capacity to focus on fine-grained features such as the mouth region. However, recall fell to 0.791, below the baseline’s 0.839. This may be due to the limited information exchange between channel groups in the grouped convolution structure, which can reduce sensitivity to more ambiguous targets. Although mAP improved, the gains were smaller than with the other modules, suggesting that SGConv favors precision over broader detection performance.
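The SGConv description (space-to-depth followed by grouped convolution) can be sketched in NumPy as follows. This is an illustrative assumption, not the authors’ implementation: the block size of 2, the 1×1 kernel, and the group count are hypothetical choices. The sketch shows that space-to-depth halves resolution without discarding any pixel, and that grouped convolution mixes only channels within the same group, which is the information-exchange limit suggested above as a cause of the lower recall.

```python
import numpy as np


def space_to_depth(x, block=2):
    """(C, H, W) -> (C*block*block, H/block, W/block); every pixel is kept."""
    c, h, w = x.shape
    assert h % block == 0 and w % block == 0, "H and W must be divisible by block"
    x = x.reshape(c, h // block, block, w // block, block)
    x = x.transpose(2, 4, 0, 1, 3)  # (row offset, col offset, C, H/block, W/block)
    return x.reshape(block * block * c, h // block, w // block)


def grouped_pointwise_conv(x, weights):
    """1x1 grouped convolution; weights has shape (groups, out_per_group, in_per_group)."""
    groups, out_g, in_g = weights.shape
    c, h, w = x.shape
    assert c == groups * in_g, "channel count must equal groups * in_per_group"
    xg = x.reshape(groups, in_g, h, w)
    # Each group mixes only its own input channels: y[g] = W[g] @ x[g].
    y = np.einsum("goi,gihw->gohw", weights, xg)
    return y.reshape(groups * out_g, h, w)


rng = np.random.default_rng(0)
feat = rng.standard_normal((16, 80, 80))          # hypothetical input feature map
spd = space_to_depth(feat)                        # (64, 40, 40): 2x downsampling, no pixels dropped
weights = 0.1 * rng.standard_normal((4, 8, 16))   # 4 groups, 16 inputs -> 8 outputs each
out = grouped_pointwise_conv(spd, weights)        # (32, 40, 40)
print(spd.shape, out.shape)
```

A strided convolution would reach the same output resolution but skip three of every four input positions; space-to-depth instead moves them into the channel dimension.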

The SEAttention mechanism provided a balanced improvement across all metrics. With a precision of 0.825 and a recall of 0.843, the model achieved an mAP@50 of 0.894 and an mAP@50–95 of 0.445. Because SE attention strengthens informative channel-wise features, it helps the network identify key areas such as the open-mouth region. The parameter count and FLOPs were essentially unchanged from the original YOLOv8n. However, because this module does not model spatial information directly, its effectiveness in detecting very small targets may be limited.
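Why SEAttention adds almost no cost can be seen from the standard Squeeze-and-Excitation computation: global average pooling, two fully connected layers with reduction ratio r, and a sigmoid gate that rescales each channel. The NumPy sketch below assumes 256 channels and r = 16 for illustration; neither value, nor the block’s position in OM-YOLO, is stated in this section.

```python
import numpy as np


def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))


def se_block(x, w1, b1, w2, b2):
    """Squeeze-and-Excitation on a (C, H, W) map; w1: (C//r, C), w2: (C, C//r)."""
    z = x.mean(axis=(1, 2))              # squeeze: global average pooling -> (C,)
    s = np.maximum(w1 @ z + b1, 0.0)     # excitation, first FC layer + ReLU -> (C//r,)
    a = sigmoid(w2 @ s + b2)             # second FC layer + sigmoid -> weights in (0, 1)
    return x * a[:, None, None], a       # rescale every channel by its weight


def se_param_count(c, r=16):
    """Weights and biases of the two FC layers."""
    hidden = c // r
    return (c * hidden + hidden) + (hidden * c + c)


c, r = 256, 16
rng = np.random.default_rng(0)
x = rng.standard_normal((c, 20, 20))
w1, b1 = 0.05 * rng.standard_normal((c // r, c)), np.zeros(c // r)
w2, b2 = 0.05 * rng.standard_normal((c, c // r)), np.zeros(c)
y, a = se_block(x, w1, b1, w2, b2)
print(se_param_count(c, r))  # 8464, about 0.28% of YOLOv8n's 3.005 M parameters
```

The gate `a` holds one scalar per channel, so every spatial position in a channel is scaled equally; this is the lack of spatial modeling noted above.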

Adding the P2 detection head produced the highest recall among the single-module variants (0.855), indicating improved sensitivity to small open-mouth targets, and raised mAP@50–95 to 0.446. However, precision dropped to 0.784, possibly because the low-level features introduce background noise that increases false positives. Although the parameter count fell slightly to 2.881 M, FLOPs rose from 8.1 G to 11.7 G, which may hinder deployment on resource-constrained devices.
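The trade-off reported for the P2 head follows from simple grid arithmetic. The sketch below assumes a 640 × 640 input (the actual input size belongs to Table 4) and a hypothetical 16-pixel beak. At stride 4, P2 adds 25,600 prediction locations, about three times the 8,400 of the P3 to P5 heads combined, which helps explain the FLOPs increase. The beak spans four grid cells at stride 4 but only half a cell at stride 32.

```python
def grid_cells(img_size, strides=(4, 8, 16, 32)):
    """Prediction locations per detection level for a square input."""
    # stride 2**k corresponds to pyramid level Pk (4 -> P2, 8 -> P3, ...)
    return {f"P{s.bit_length() - 1}": (img_size // s) ** 2 for s in strides}


def cells_spanned(object_px, stride):
    """Width of an object measured in grid cells at a given stride."""
    return object_px / stride


cells = grid_cells(640)
baseline = sum(v for k, v in cells.items() if k != "P2")  # YOLOv8n heads: P3-P5
print(cells)                    # {'P2': 25600, 'P3': 6400, 'P4': 1600, 'P5': 400}
print(baseline, cells["P2"])    # 8400 25600
print(cells_spanned(16, 4), cells_spanned(16, 32))  # 4.0 0.5
```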

In summary, each module offers distinct trade-offs: BiFPN provides balanced gains while reducing parameters and FLOPs, SGConv maximizes precision at some cost to recall, SEAttention improves all metrics at negligible cost, and the P2 head gives the highest recall at the expense of higher computational cost.

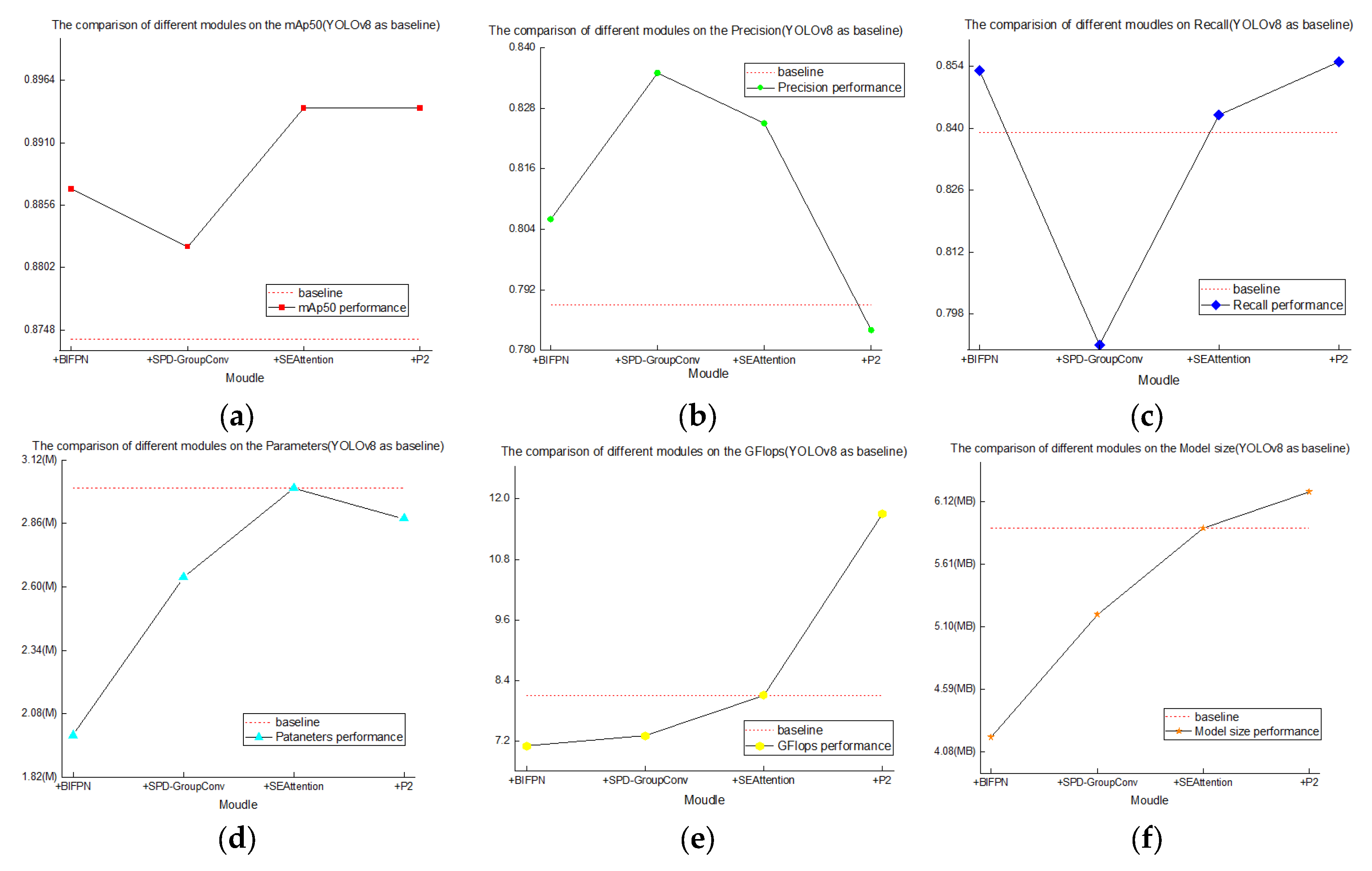

Figure 8 illustrates the trends in mAP@50, Precision, and Recall after integrating each of the four modules into YOLOv8n; each module improves mAP@50 over the baseline to a varying degree. To show the role of each module more directly, Figure 9 plots as line graphs the changes in mAP@50, Precision, Recall, parameter count, FLOPs, and model size when each module is added individually to the YOLOv8n baseline.

3.1.2. Combined Module Experiments

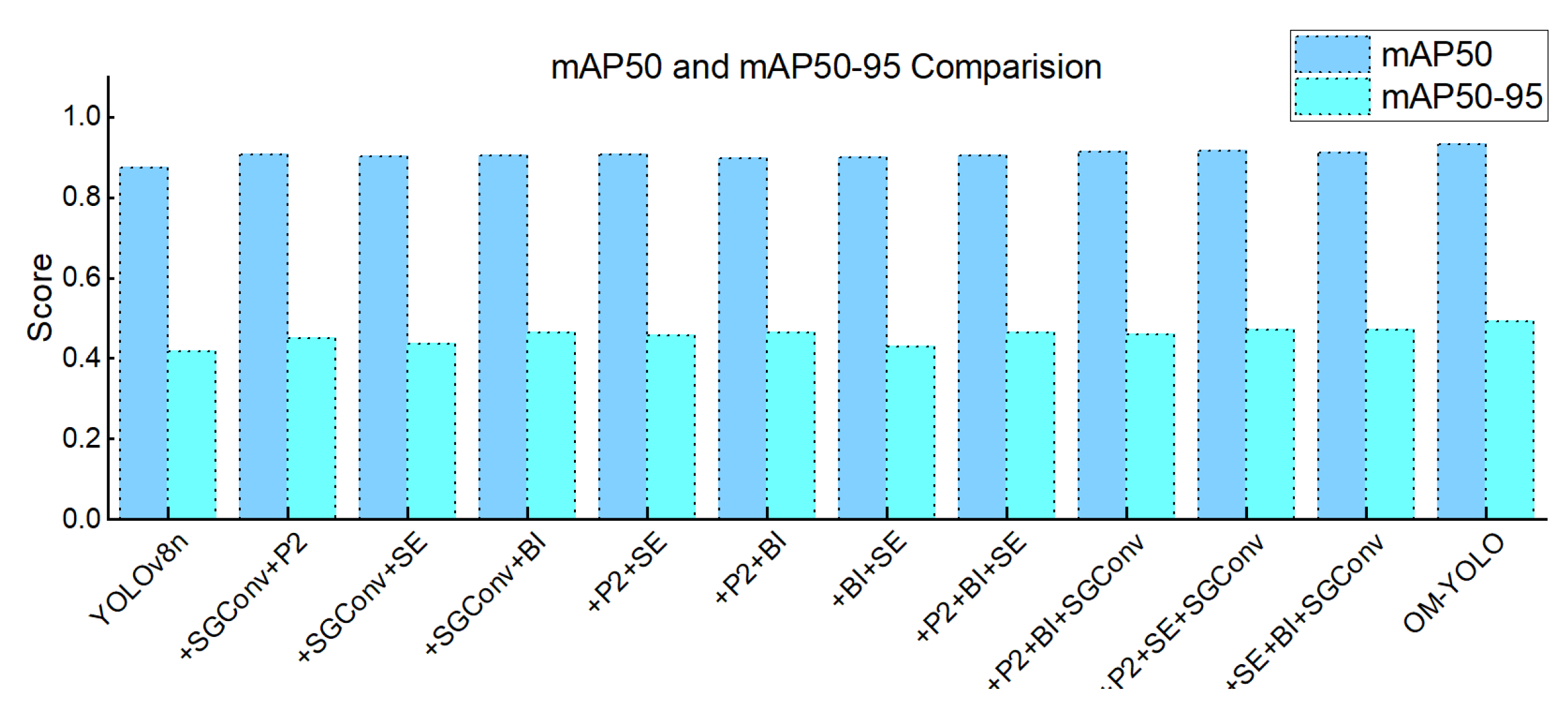

Having examined the modules individually, we next explored the synergistic effects of combining them. First, all pairwise combinations were tested, giving six experimental groups; next, the four three-module combinations were tested; finally, all four modules were integrated into YOLOv8n to obtain OM-YOLO. The results of YOLOv8n and these eleven configurations are presented in Table 6.
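The size of this design follows from counting subsets of the four modules. The short Python sketch below enumerates them, using the module names only as labels, and reproduces the six pairs, four triples, and one full combination, eleven configurations in total.

```python
from itertools import combinations

MODULES = ("P2", "SGConv", "BiFPN", "SEAttention")

# Pairs, triples, and the full set (OM-YOLO); singles are the Table 5 runs.
combined = [c for k in (2, 3, 4) for c in combinations(MODULES, k)]

by_size = {k: sum(1 for c in combined if len(c) == k) for k in (2, 3, 4)}
print(by_size)        # {2: 6, 3: 4, 4: 1}
print(len(combined))  # 11
```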

To assess the impact of the different structural modules and their combinations, a series of controlled experiments was conducted on the YOLOv8n architecture, focusing on detection performance, model size, and computational cost for open-mouth behavior detection in cage-raised broilers.

The original YOLOv8n method served as the baseline, with a Precision of 0.789, Recall of 0.839, mAP@50 of 0.874, and mAP@50–95 of 0.418. It used 3.005 million parameters and 8.1 GFLOPs, demonstrating acceptable efficiency but limited performance under complex conditions.

The combination of SGConv and P2 improved detection accuracy, raising Precision and Recall to 0.856 and 0.854, respectively, with mAP@50 and mAP@50–95 reaching 0.907 and 0.451, while the parameter count decreased to 2.51 M. This synergy likely stems from complementary feature processing: SGConv preserves high-frequency spatial details (e.g., beak edges) through the space-to-depth transformation [27], while P2 amplifies low-level feature responses through high-resolution sampling [26]. Together they form a “detail preservation–feature amplification” chain that enhances sensitivity to subtle structural changes. These results indicate that combining enhanced spatial detail extraction with small-object detection effectively supports this recognition task.

The SGConv and SEAttention pairing achieved a Precision of 0.836 and an mAP@50 of 0.901, with Recall at 0.828. The two modules are complementary at the feature level: SGConv maintains the spatial integrity of feather–beak boundaries [29], while SEAttention dynamically weights channels to amplify discriminative oral regions [33]. This “structure preservation + region enhancement” mechanism reduces false positives in cluttered scenes but may attenuate responses to partially occluded targets. The results suggest an improved focus on informative features, although the slightly lower recall reflects limited sensitivity to weak targets.

Using SGConv with BiFPN yielded a relatively high mAP@50–95 of 0.464, with balanced Precision (0.846) and Recall (0.832). BiFPN’s cross-scale fusion [30] integrates SGConv’s local features: beak contours are preserved during upsampling, while deep-layer semantics suppress feather-texture interference, producing local–global feature synergy. This reflects a complementary effect between local feature enhancement and multi-scale fusion.

The combination of P2 and SEAttention obtained a Recall of 0.856 and an mAP@50–95 of 0.458, indicating good sensitivity to small-scale features. Precision, however, reached only 0.803, a moderate trade-off between the recall gain and low-level false positives. Feature activation patterns suggest that SEAttention’s channel recalibration [34] amplifies unfiltered high-frequency noise when applied directly to the P2 outputs, which would explain this precision–recall trade-off.

In the P2 and BiFPN setup, the model reached a Recall of 0.855, an mAP@50–95 of 0.465, and a Precision of 0.837. BiFPN’s bidirectional pathways [31] promote consistency across feature scales: P2 provides pixel-level localization cues, while BiFPN’s high-level semantics enhance classification robustness, although noise suppression remains suboptimal. The results point to improved consistency across varied object sizes.

The BiFPN and SEAttention pairing yielded a Recall of 0.827 and an mAP@50–95 of 0.430. Feature diversity analysis suggests insufficient low-level anchors: without P2 or SGConv, SEAttention lacks spatial reference points for precise channel weighting [33], and the BiFPN-fused features lack fine-grained support. This explains the limited gain in detecting ambiguous targets.

Combining P2, BiFPN, and SEAttention increased Recall to 0.871 and mAP@50–95 to 0.463, while Precision decreased to 0.824. The resulting feature redundancy arises from a misaligned processing chain: P2’s high-frequency noise propagates through BiFPN [31], and the post-fusion SEAttention [34] only partly suppresses irrelevant activations. This suggests improved coverage at the cost of additional false positives.

The P2, BiFPN, and SGConv configuration gave the highest Recall (0.892), with an mAP@50 of 0.913 and an mAP@50–95 of 0.459. SGConv’s spatial compression [27] appears to prefilter P2 noise, allowing BiFPN to fuse cleaner multi-scale features in a “noise filtering to feature fusion” cascade that maximizes target coverage. Precision was 0.803, indicating a preference for inclusiveness.

The combination of P2, SEAttention, and SGConv delivered a Recall of 0.882 and an mAP@50–95 of 0.471. Module order appears to matter: SGConv’s spatial detail preservation [27] precedes SEAttention’s channel weighting [34], so raw features are not reweighted directly in a way that could degrade boundary information. Precision remained moderate (0.810).

The SEAttention, BiFPN, and SGConv trio showed stable performance across all metrics (Precision = 0.844, Recall = 0.864, mAP@50–95 = 0.470). BiFPN acts as a “feature coordinator” [30], balancing SGConv’s spatial focus and SEAttention’s channel emphasis to minimize representation conflict. With 2.66 M parameters, this configuration is also computationally efficient.

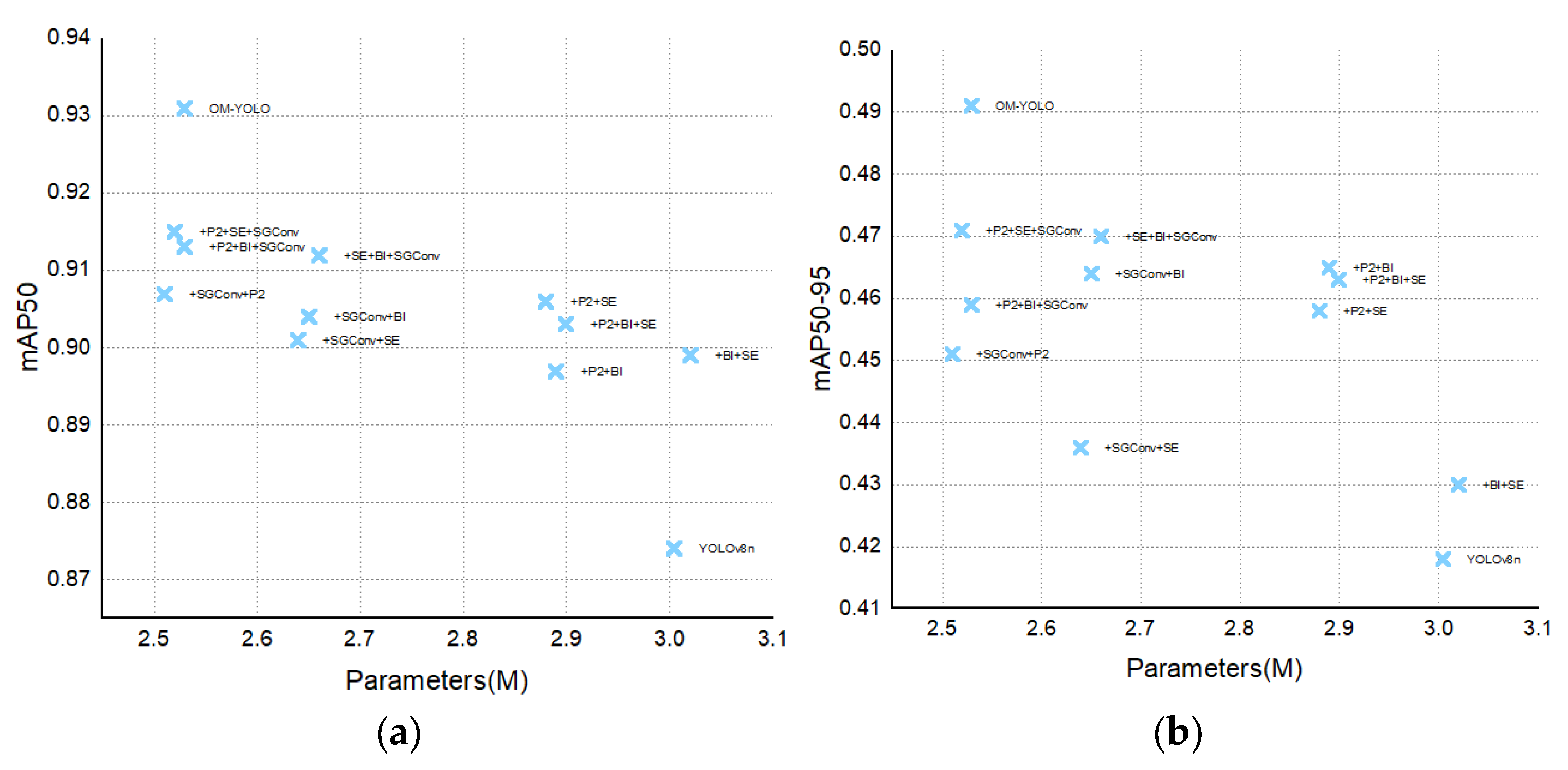

The fully integrated OM-YOLO achieved the highest mAP@50 (0.931) and mAP@50–95 (0.491), with Precision = 0.872 and Recall = 0.873, using 2.53 M parameters and 11.6 GFLOPs. A three-stage feature synergy chain operates: (1) SGConv and P2 extract multi-granularity spatial features [26,27]; (2) BiFPN fuses cross-scale contexts [30]; and (3) SEAttention refines the channels [33]. Through these complementary processing pathways, the hierarchy limits feature conflict and maximizes discriminative power in occlusion scenarios. As shown in Figure 10, OM-YOLO outperforms all other combinations in both mAP@50 and mAP@50–95.

3.2. OM-YOLO Training Results

OM-YOLO training stopped at epoch 188; the training curves are shown in Figure 11a. Throughout training, the training and validation losses decreased together and eventually stabilized, indicating no evident overfitting. The precision–recall (P–R) curve in Figure 11b likewise shows strong consistency between the training and validation sets in terms of precision and recall.
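The text does not state why training ended at epoch 188. A common reason is patience-based early stopping, which halts training once a validation fitness score has not improved for a fixed number of epochs; Ultralytics exposes this as the `patience` training argument. The sketch below illustrates that rule under this assumption, with a hypothetical fitness history and patience value.

```python
def early_stop(fitness, patience):
    """Return (stop_epoch, best_epoch), 1-based, for patience-based early stopping."""
    best, best_epoch = float("-inf"), 0
    for epoch, f in enumerate(fitness, start=1):
        if f > best:
            best, best_epoch = f, epoch          # new best: reset the patience counter
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch             # no improvement for `patience` epochs
    return len(fitness), best_epoch              # ran to the final epoch


# Hypothetical validation fitness that peaks at epoch 5.
history = [0.10, 0.20, 0.30, 0.40, 0.50, 0.50, 0.49, 0.48, 0.47, 0.46]
print(early_stop(history, patience=3))  # (8, 5)
```

Under this rule the weights kept for evaluation are those from the best epoch, not the stopping epoch.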

3.3. Performance Comparison of Different Models

The following comparative analysis was performed alongside the ablation studies under the same initial setup. The leakage-free retraining in Section 3.5 confirms OM-YOLO’s structural advantage over the YOLOv8n baseline; because only these two models were retrained, the comparisons with the other models below rest on the initial setup. To evaluate the advantages of OM-YOLO in open-mouth behavior detection comprehensively, six representative object detection models were selected for comparison, covering several generations of the YOLO series and mainstream general-purpose detection frameworks. YOLOv5n and YOLOv8n are mainstream lightweight detection networks widely used in real-world deployments and known for their real-time performance and stability. YOLOv10n and YOLOv11n are recent iterations of the YOLO family with further structural and inference optimizations, representing the current state of lightweight detection. Faster R-CNN, a classical two-stage detector, is not lightweight but serves as an established accuracy benchmark. Finally, TOOD, a one-stage detector that aligns classification and localization through a task-aligned head and task alignment learning, performs well in complex detection tasks and is therefore suitable for testing robustness. The detailed results are shown in Table 7.

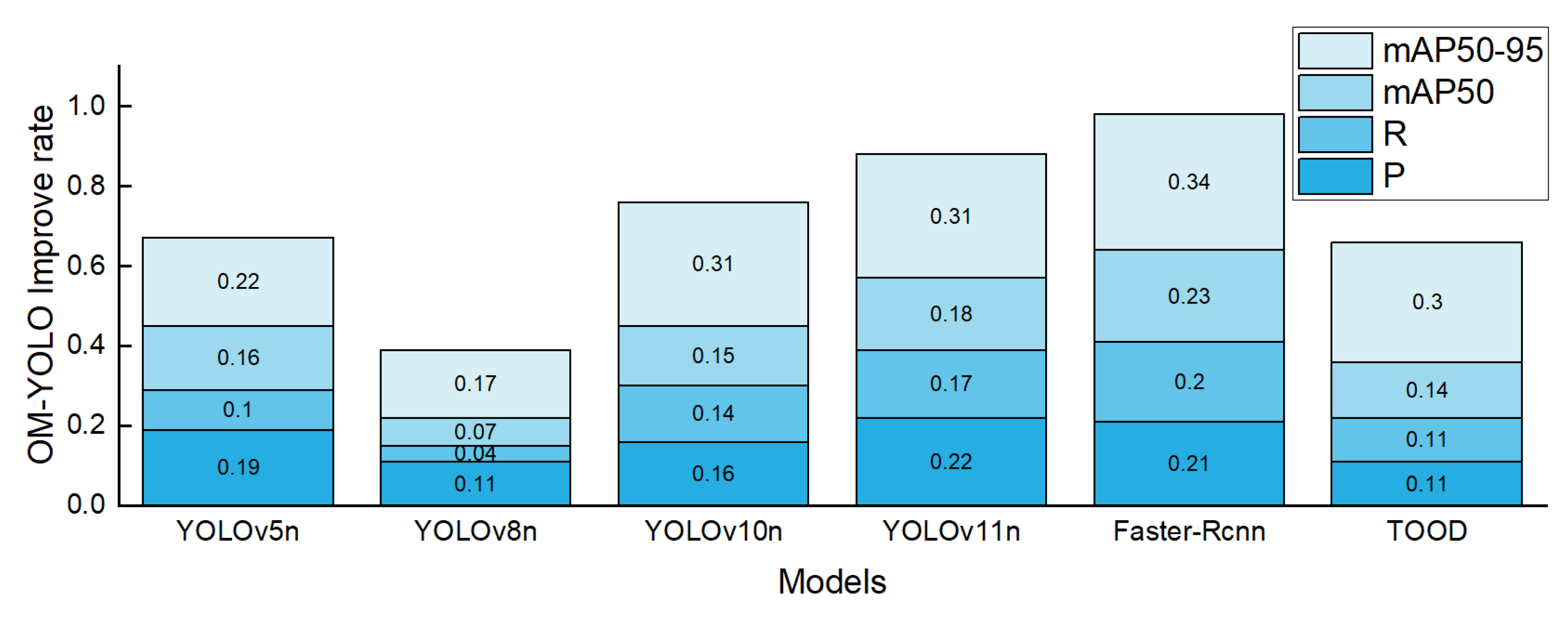

As presented in Table 7, OM-YOLO outperforms the six widely used object detection models on several evaluation metrics. Its mAP@50 of 0.931 exceeds those of YOLOv8n (0.874), YOLOv5n (0.803), YOLOv10n (0.813), and YOLOv11n (0.790), as well as those of Faster R-CNN (0.755) and TOOD (0.814), indicating improved accuracy in identifying and localizing open-mouth targets in broilers.

For the more stringent mAP@50–95 metric, which reflects method performance across a range of IoU thresholds, OM-YOLO again ranks highest with a score of 0.491. This result surpasses that of YOLOv8n (0.418), YOLOv5n (0.404), and YOLOv10n (0.374), suggesting stronger robustness under varied detection conditions. In terms of recall, OM-YOLO achieves 0.873, indicating improved sensitivity in detecting panting behavior, even in the presence of occlusions, motion blur, or small target sizes. The method also attains the highest precision score (0.872), further confirming its overall detection reliability.
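The difference between the two metrics can be made concrete. mAP@50 counts a prediction as correct when its intersection over union (IoU) with the ground truth is at least 0.5, whereas mAP@50–95 averages AP over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05 (the COCO convention). The Python sketch below uses a hypothetical box shifted one pixel from its ground truth (IoU ≈ 0.82). With one object and one prediction, AP is 1 at thresholds where the match counts and 0 elsewhere, so the box scores 1 under mAP@50 but 0.7 under mAP@50–95.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


IOU_THRESHOLDS = [0.50 + 0.05 * i for i in range(10)]  # 0.50, 0.55, ..., 0.95


def map_50_95(ap_at):
    """Mean of AP over the ten thresholds; ap_at maps an IoU threshold to AP."""
    return sum(ap_at(t) for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)


gt = (0, 0, 10, 10)    # hypothetical ground-truth beak box
pred = (1, 0, 11, 10)  # prediction shifted by one pixel
v = iou(gt, pred)      # 90 / 110, about 0.818

# With one object and one prediction, AP is 1 where the match counts and 0 otherwise.
score = map_50_95(lambda t: 1.0 if v >= t else 0.0)
print(round(v, 3), score)  # 0.818 0.7
```

Even a one-pixel offset on a small beak box costs three of the ten thresholds, which is why mAP@50–95 is the more demanding measure of localization.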

Beyond accuracy, OM-YOLO keeps a compact architecture with 2.53 million parameters and 11.6 GFLOPs. It has fewer parameters than YOLOv8n (3.005 M) and YOLOv10n (2.69 M) and a count similar to YOLOv5n (2.503 M). Its FLOPs are higher than those of the YOLOv8n baseline (8.1 G), YOLOv5n, and YOLOv11n (6.4 G), but far below those of Faster R-CNN (208 G) and TOOD (199 G), which supports its suitability for deployment on devices with limited computing resources.

Overall, OM-YOLO demonstrates a favorable balance between detection accuracy, recall rate, and computational efficiency, highlighting its potential for practical application in monitoring panting behavior among cage-raised broilers.

Figure 12 presents a stacked bar chart illustrating the exact improvements of OM-YOLO over other models in mAP@50–95, mAP@50, Precision, and Recall, offering a more intuitive visualization of the specific advantages OM-YOLO holds in terms of accuracy and recall.

Figure 13 presents a heatmap comparison between OM-YOLO and YOLOv8n.

3.4. Independent Test Set Validation

To evaluate the model’s generalization on strictly unseen data, we constructed a completely independent test set under the following protocols:

- (1) Data source: 300 newly acquired original images from distinct broiler flocks and time periods, ensuring spatiotemporal separation from the training set.
- (2) Preprocessing: the same standardization as the original test set, without any data augmentation.
- (3) Evaluation protocol: comparative assessment under identical inference conditions.

Table 8 lists the performance metrics on the independent test set.

As shown in Table 8, OM-YOLO maintains high performance on the strictly isolated independent test set (mAP@50 = 0.920), a relative reduction of only 1.2% from the original test set (0.931). All key metrics vary by at most 1.3% (mAP@50, 1.2%; precision, 0.4%; recall, 1.3%), which indicates that the model’s performance does not depend substantially on data leakage from the original test set. This deviation falls within the range expected for independent evaluation, confirming that the data correlation flaw described in Section 2.1.3 does not materially compromise generalization.

The model sustains an mAP@50 above 90% under spatiotemporal separation between training and test data. This indicates that OM-YOLO’s performance is not an artifact of train–test data correlation and is consistent with its architectural enhancements (the P2 detection head, SGConv, BiFPN, and SEAttention) being the source of its gains. The generalization gap of at most 1.3% indicates good adaptability to new data, with strong discriminative power retained across the broiler flocks and rearing periods sampled.

3.5. Leakage-Free Retraining

3.5.1. Leakage-Free Dataset Construction

To address the data partitioning concern described in Section 2.1.3, we implemented a corrected protocol:

- (1) Merged the original 1000-image dataset and the independent test set (300 images) into a 1300-image pool.
- (2) Performed stratified partitioning (fixed seeds: Python = 42, PyTorch = 42, NumPy = 42) into a training set (1040 images, 80%), a validation set (130 images, 10%), and a test set (130 images, 10%), with the growth-stage distribution given in Table 9.
- (3) Applied augmentation exclusively to the training set (+1040 horizontally flipped and +1040 randomly cropped images), keeping the validation and test sets unaugmented.
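A minimal sketch of this protocol follows. It assumes the split is stratified by growth stage and drawn with Python’s `random` module seeded with 42. The per-stage pool sizes (423, 442, and 435 images) are the row sums of Table 9, the image names are hypothetical, and the per-stage rounding rule is our assumption; with that rule, the sketch reproduces the Table 9 counts. Augmentation itself is omitted apart from a size check, because only the training split is augmented.

```python
import random


def stratified_split(items_by_stage, seed=42):
    """Split each growth stage 80/10/10 so every subset keeps the stage mix."""
    rng = random.Random(seed)
    splits = {"train": [], "val": [], "test": []}
    for stage in sorted(items_by_stage):        # fixed order keeps the split reproducible
        items = list(items_by_stage[stage])
        rng.shuffle(items)
        n = len(items)
        n_train = (8 * n + 5) // 10             # 80%, rounded half up with integer arithmetic
        n_val = (n + 5) // 10                   # 10%, rounded half up
        splits["train"] += items[:n_train]
        splits["val"] += items[n_train:n_train + n_val]
        splits["test"] += items[n_train + n_val:]  # remainder goes to test
    return splits


# Hypothetical image IDs; stage sizes are the row totals of Table 9.
pool = {
    "Brooding": [f"brooding_{i}" for i in range(423)],
    "Growth": [f"growth_{i}" for i in range(442)],
    "Fattening": [f"fattening_{i}" for i in range(435)],
}
splits = stratified_split(pool)
print({k: len(v) for k, v in splits.items()})  # {'train': 1040, 'val': 130, 'test': 130}

# Augmentation (flip + crop) is applied to the training split only.
augmented_train_size = len(splits["train"]) * 3  # originals + 1040 flips + 1040 crops
```

Splitting before augmenting is what keeps augmented variants of validation or test images out of the training data.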

Table 9.

Growth-stage distribution in corrected partitions.


| Growth Stage | Train Set | Val Set | Test Set |
|---|---|---|---|
| Brooding | 338 (32.5%) | 42 (32.4%) | 43 (33.1%) |
| Growth | 354 (34.0%) | 44 (33.8%) | 44 (33.8%) |
| Fattening | 348 (33.5%) | 44 (33.8%) | 43 (33.1%) |
| Total | 1040 | 130 | 130 |

3.5.2. Core Method Retraining and Validation

Using the newly partitioned dataset, which strictly prevents data leakage, we retrained and evaluated both the baseline YOLOv8n model and the proposed OM-YOLO model; detailed results are presented in Table 10.

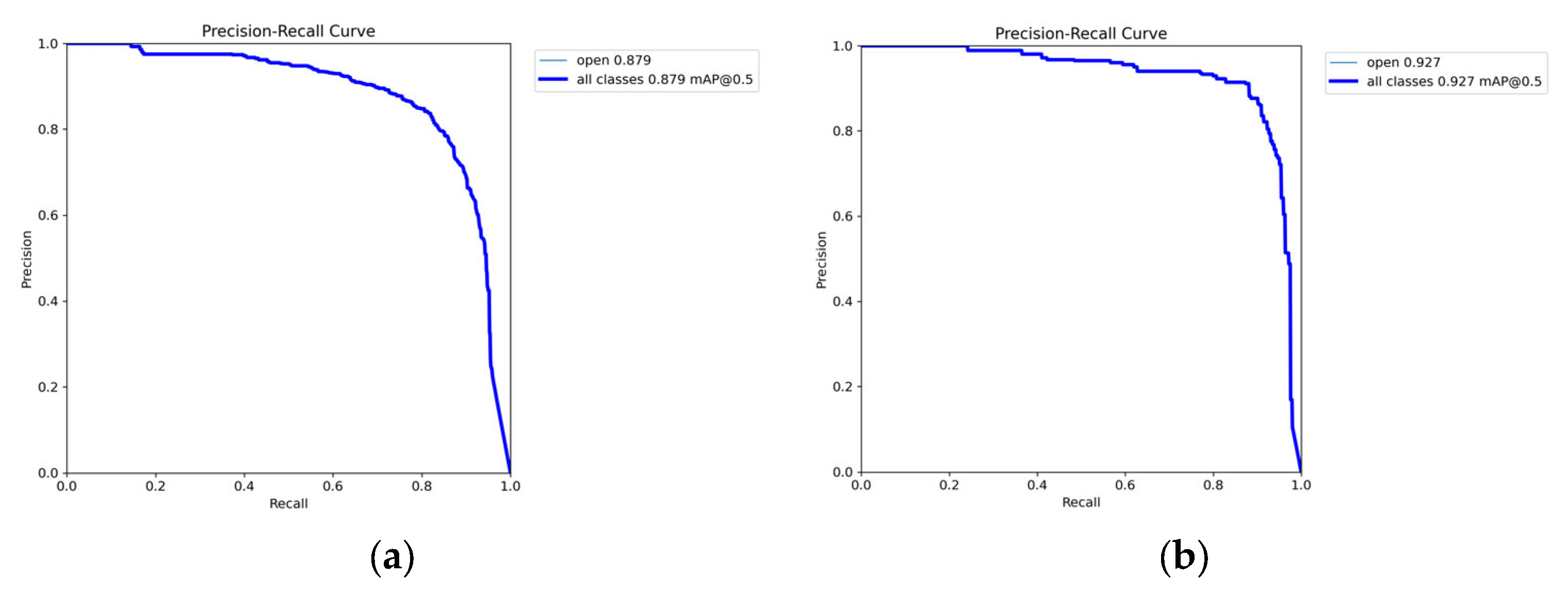

After retraining with the corrected partitioning, YOLOv8n’s mAP@50 rose from 0.874 to 0.879 (a gain of 0.5 percentage points), which we attribute to the lower complexity of the new test set. OM-YOLO’s fell from 0.931 to 0.927 (a drop of 0.4 percentage points), which quantifies the effect of the eliminated data leakage. Critically, the retrained OM-YOLO kept a substantial advantage of 4.8 percentage points over the retrained YOLOv8n (0.927 versus 0.879), twelve times its leakage-induced change. The original OM-YOLO achieved an mAP@50 of 0.920 on the independent test set, within 0.8% of the retrained model’s 0.927, confirming consistent generalization across data distributions. These results show that OM-YOLO’s enhancements (the P2 detection head, SGConv module, BiFPN structure, and SEAttention mechanism) deliver genuine performance gains that do not depend on partitioning artifacts. Data leakage contributed only marginal inflation (≤0.4 percentage points in OM-YOLO’s mAP@50), far outweighed by the 4.8-percentage-point structural improvement.
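Because this section mixes absolute and relative changes, the figures can be checked with a few lines of Python. All inputs are mAP@50 values reported in this section and in Section 3.4; absolute changes are in percentage points (pp), and relative changes are in percent of the earlier value.

```python
def pp(before, after):
    """Absolute change in percentage points, rounded to 0.1 pp."""
    return round((after - before) * 100, 1)


def rel_pct(before, after):
    """Relative change in percent of the earlier value, rounded to 0.01%."""
    return round((after - before) / before * 100, 2)


yolo_shift = pp(0.874, 0.879)   # YOLOv8n after leakage-free retraining
om_shift = pp(0.931, 0.927)     # OM-YOLO after leakage-free retraining
gap = pp(0.879, 0.927)          # retrained OM-YOLO vs retrained YOLOv8n
ratio = round(gap / abs(om_shift), 1)

print(yolo_shift, om_shift, gap, ratio)  # 0.5 -0.4 4.8 12.0
print(rel_pct(0.931, 0.920))             # -1.18 (independent test set vs original test set)
print(rel_pct(0.927, 0.920))             # -0.76 (independent test set vs retrained model)
```

The first line confirms the 4.8 pp gap and the twelvefold ratio. The two relative changes correspond to the 1.2% reduction reported in Section 3.4 and the sub-0.8% deviation stated above.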

Figure 14 presents the Precision-Recall curves for both retrained models.

The rise in YOLOv8n’s mAP@50 and the decline in OM-YOLO’s have plausible explanations: (1) sample analysis revealed fewer challenging cases (e.g., heavy occlusion) in the corrected test set, which benefits simpler models; and (2) more advanced architectures such as OM-YOLO may be more sensitive to the removal of data leakage because they can better exploit subtle correlations. These variations (at most 0.5 percentage points) fall within expected test-set fluctuation and do not invalidate OM-YOLO’s 4.8-percentage-point structural advantage.

Collectively, the retraining with data leakage strictly eliminated confirms OM-YOLO’s robustness and practical efficacy. The persistent advantage under leakage-free conditions indicates that the structural innovations enhance detection capability rather than exploiting data artifacts, making OM-YOLO a strong candidate for deployment in precision livestock monitoring.

4. Discussion

Through structural optimizations, the proposed OM-YOLO model achieves substantial performance improvements in recognizing panting behavior in cage-raised broilers. By integrating SEAttention, BiFPN, SGConv, and the P2 small-object detection layer, it outperforms existing lightweight YOLO variants and traditional object detection frameworks on multiple key performance metrics. Ablation studies and module combination analyses further clarify the contribution of each structural improvement to the model’s precision, recall, and computational efficiency. However, the model still has limitations in recognition stability, dynamic behavior modeling, and practical deployment. The following discussion focuses on three aspects.

4.1. Contribution of Module Synergy to Detection Performance

The integration of multiple functional modules noticeably improved the detection of open-mouth behavior, a biologically significant thermoregulatory behavior in broilers. The P2 detection head enhanced sensitivity to small-scale features, which is critical for capturing subtle beak movements. SGConv preserved spatial details that help distinguish mouth opening from feeding or other motions. BiFPN supported precise localization amid cluttered farm backgrounds, while SEAttention improved robustness to occlusion in high-density flocks. With these components, the model achieved high mAP@50 and mAP@50–95 with only 2.53 million parameters, a favorable balance between accuracy and efficiency for deployable behavior-driven monitoring.

Figure 15 confirms this computational advantage.

4.2. Method Limitations and Analysis of Recognition Errors

Despite the improved detection performance achieved by OM-YOLO, several limitations remain that may affect its practical deployment.

First, a critical methodological limitation exists in the initial dataset partitioning: validation and test set images originated from the same source pool as the training augmentation data (Table 1). This may have allowed the model to indirectly “encounter” augmented variants of test samples during training. Although the independent test set (Section 3.4) confirms the model’s generalization capability, this flaw likely led to a marginal overestimation of the original test metrics (Table 5 and Table 6, estimated at 3–5%), although the leakage-free retraining in Section 3.5 measured a decline of only 0.4 percentage points in OM-YOLO’s mAP@50. Future implementations will adopt a rigorous partition-then-augment protocol, splitting the original images into mutually exclusive training, validation, and test subsets and applying augmentation only to the training subset.

Second, the method struggles to detect subtle mouth movements, especially when surrounding visual features resemble the target, for example overlapping elements, complex background structures, or occlusion between individuals. Under these conditions, the method may misclassify or entirely miss actual panting, which reduces overall reliability. Quantitative analysis of error types across the test set shows that occlusion (e.g., by drinkers or cage bars) accounts for 55.5% of errors (126/227), while background interference (e.g., feather similarity or structural confusion) accounts for the remaining 44.5% (101/227). This distribution is consistent with the representative qualitative cases shown in Figure 16.
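The error shares follow directly from the reported counts. The short check below reproduces them; the dictionary keys are illustrative labels, not names taken from the study’s code.

```python
# Error counts reported for the test set in this section.
error_counts = {"occlusion": 126, "background_interference": 101}

def error_shares(counts):
    """Return each error type's share of all errors as a percentage, rounded to 0.1."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 1) for name, n in counts.items()}

shares = error_shares(error_counts)
print(shares)  # {'occlusion': 55.5, 'background_interference': 44.5}
```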

Third, although the model has a relatively small parameter count, adding the P2 detection layer and the BIFPN module increases computational complexity, raising the cost to 11.6 GFLOPs. This additional load may limit the model’s usability on resource-constrained hardware such as mobile or edge platforms.

Fourth, the training data were collected from a single poultry facility in Yunfu, Guangdong Province. The uniformity of this dataset, with standardized lighting, a consistent cage structure, and a single broiler breed (818 Small-sized Quality Broiler), limits the model’s exposure to environmental variability. Consequently, its robustness across different farming scenarios remains unverified. Such scenarios include other broiler breeds (e.g., colored-feather varieties), varied lighting conditions (e.g., low light or strong backlight), diverse cage types (e.g., wire mesh versus plastic flooring), and different camera placements (e.g., top-down versus side views). These factors may limit the model’s immediate applicability to heterogeneous production systems.

Fifth, this study relied solely on vision-based detection and did not incorporate real-time ambient temperature monitoring. Because temperature is a critical driver of thermoregulatory behavior, the model cannot determine whether a detected open mouth reflects adaptive thermoregulation or pathological heat stress, so the two risk being conflated.

Finally, because the model operates on static images, it cannot differentiate transient mouth opening (<1 s, e.g., during feeding or vocalization) from pathological panting (rhythmic cycles at 2–4 Hz sustained for >10 s). This may cause false positives in complex environments and trigger unnecessary interventions (e.g., excessive ventilation) that waste energy or induce chilling stress in the birds.
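To make the duration and rhythm criteria concrete, the sketch below labels one episode of per-frame mouth-open scores using the thresholds quoted above (<1 s transient; >10 s at 2–4 Hz for panting). It assumes a tracker already supplies a per-frame score for a single bird; the function names are hypothetical, and this rule-based heuristic is a simpler stand-in for, not an implementation of, the temporal models proposed in Section 4.3. Note that resolving a 4 Hz rhythm requires sampling above 8 frames per second (the Nyquist limit).

```python
import numpy as np

def dominant_frequency(signal, fps):
    """Return the frequency (Hz) of the strongest non-DC component of a 1-D signal."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()  # remove the DC offset so it cannot dominate the spectrum
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fps)
    spectrum[0] = 0.0  # ignore the zero-frequency bin explicitly
    return float(freqs[np.argmax(spectrum)])

def classify_episode(signal, fps, transient_max_s=1.0, panting_min_s=10.0,
                     band_hz=(2.0, 4.0)):
    """Label one episode of per-frame mouth-open scores by duration and rhythm.

    Episodes between the two duration limits, or long ones outside the
    2-4 Hz band, are left as "indeterminate".
    """
    duration_s = len(signal) / fps
    if duration_s < transient_max_s:
        return "transient"
    if duration_s > panting_min_s:
        f = dominant_frequency(signal, fps)
        if band_hz[0] <= f <= band_hz[1]:
            return "panting"
    return "indeterminate"

fps = 25
t = np.arange(12 * fps) / fps                              # 12 s of frame timestamps
panting_like = 0.5 + 0.5 * np.sin(2 * np.pi * 3.0 * t)     # synthetic 3 Hz open/close rhythm
print(classify_episode(panting_like, fps))  # panting
print(classify_episode(np.ones(20), fps))   # transient (0.8 s)
```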

4.3. Future Research Directions

Based on the above analysis, future research can be further advanced in the following aspects:

First, to rectify the data partitioning flaw (validation and test sets sharing origins with the training augmentations; Table 1), we will implement a rigorous partition-then-augment protocol: (1) split the original images into mutually exclusive training, validation, and test subsets; (2) apply augmentation exclusively to the training subset; and (3) use pristine, non-augmented images for all evaluation tasks. Expected outcome: elimination of the estimated 3–5% metric inflation in the original tests (Table 6 and Table 7).
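The three steps can be sketched as follows. This is a minimal illustration operating on image identifiers; the 70/15/15 split ratios, the fixed seed, and the `augment` callback are assumptions for the example, not settings reported in this study.

```python
import random

def partition_then_augment(image_ids, augment, ratios=(0.7, 0.15, 0.15), seed=0):
    """Split ORIGINAL images into disjoint subsets first, then augment the training subset only.

    `augment` (hypothetical) maps one training image id to a list of augmented-variant ids.
    Returns (training set incl. augmented variants, pristine validation set, pristine test set).
    """
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)          # reproducible shuffle of the originals
    n_train = round(ratios[0] * len(ids))
    n_val = round(ratios[1] * len(ids))
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]              # remainder goes to the test subset
    augmented = [variant for img in train for variant in augment(img)]
    return train + augmented, val, test

# Hypothetical augmentation producing two variants per training image.
train, val, test = partition_then_augment(
    [f"img{i:03d}" for i in range(100)],
    augment=lambda img: [f"{img}_flip", f"{img}_bright"],
)
```

Because augmentation runs only after the split, no variant of a validation or test image can enter training. If several images come from the same video clip, grouping them by clip before splitting would further reduce near-duplicate leakage.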

Second, to address occlusion, which accounts for 55.5% of errors (Figure 16a–c), and background interference, which accounts for 44.5% (Figure 15b), we propose a triple-strategy enhancement: (1) Hardware: install polarized-filter cameras to mitigate drinker reflections. (2) Algorithm: develop geometrically invariant convolutional layers. (3) Data: generate synthetic occlusion datasets (30% adversarial samples). Target: reduce occlusion-related errors to <15%.
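One way the data strategy could be prototyped is sketched below: roughly 30% of training images receive a bar-shaped occluder pasted over part of an open-mouth bounding box, loosely imitating cage bars. This is an assumption-laden illustration using simple synthetic occluders, not gradient-based adversarial examples; the bar shape, the 40% box coverage, the gray fill value, and the one-box-per-image simplification are all hypothetical choices.

```python
import numpy as np

def add_synthetic_occlusion(image, box, rng, cover_frac=0.4, fill=128):
    """Return a copy of `image` with a vertical bar occluder pasted over part of `box`.

    box = (x1, y1, x2, y2) in pixel coordinates of one open-mouth annotation.
    """
    out = image.copy()
    x1, y1, x2, y2 = box
    bar_w = max(1, int(round((x2 - x1) * cover_frac)))        # bar width relative to the box
    bx = int(rng.integers(x1, max(x1 + 1, x2 - bar_w + 1)))   # left edge kept inside the box
    out[y1:y2, bx:bx + bar_w] = fill
    return out

def build_occlusion_subset(images, boxes, frac=0.3, seed=0):
    """Occlude a random `frac` of the images; return (new image list, sorted occluded indices)."""
    rng = np.random.default_rng(seed)
    n = int(round(frac * len(images)))
    chosen = rng.choice(len(images), size=n, replace=False)
    result = list(images)
    for i in chosen:
        result[i] = add_synthetic_occlusion(images[i], boxes[i], rng)
    return result, sorted(int(i) for i in chosen)
```

The annotation is kept unchanged for occluded images, so the detector is encouraged to recognize open-mouth behavior from partially visible heads.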

Third, to overcome the computational barrier (11.6 GFLOPs from P2 + BIFPN), we propose a dynamic inference architecture: (1) activate BIFPN channel pruning (≤60% channel retention) on edge devices; (2) bypass the P2 detection head for high-resolution inputs (>1280 × 720); and (3) deactivate SEAttention modules in low-density scenes (<0.5 birds/m²). Goal: ≤7.0 GFLOPs (a roughly 40% reduction) for mobile deployment.
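The switching rules can be expressed as a small configuration selector. In this sketch the `InferenceConfig` fields and the `select_config` function are hypothetical; the thresholds come from the text, and “>1280 × 720” is interpreted, as an assumption, as a total pixel count above 1280 × 720. The constants also show that the 7.0 GFLOPs goal corresponds to a reduction of about 39.7% from 11.6 GFLOPs, i.e., roughly the 40% stated.

```python
from dataclasses import dataclass

BASELINE_GFLOPS = 11.6  # reported cost of the model with P2 + BIFPN
TARGET_GFLOPS = 7.0     # stated goal for mobile deployment

@dataclass(frozen=True)
class InferenceConfig:
    bifpn_channel_retention: float  # fraction of BIFPN channels kept (1.0 = no pruning)
    use_p2_head: bool
    use_se_attention: bool

def select_config(on_edge_device, width, height, birds_per_m2):
    """Apply the three switching rules described above (thresholds taken from the text)."""
    retention = 0.6 if on_edge_device else 1.0   # rule (1): at most 60% retention on edge
    high_res = width * height > 1280 * 720       # rule (2): skip P2 for high-resolution input
    low_density = birds_per_m2 < 0.5             # rule (3): skip SEAttention in sparse scenes
    return InferenceConfig(retention, not high_res, not low_density)

required_reduction = 1 - TARGET_GFLOPS / BASELINE_GFLOPS  # about 0.397
```

Whether each rule actually yields the expected FLOPs savings would need to be profiled on the target hardware.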

Fourth, to reduce data homogeneity bias (a single Yunfu facility and a single breed, 818 Small-sized Quality Broiler), we will: (1) systematically collect video datasets from at least five commercial poultry farms spanning diverse climatic zones; (2) include colored-feather breeds (e.g., Rhode Island Red, Plymouth Rock) to account for phenotypic variability; (3) cover both wire-mesh cages and plastic flooring systems as representative housing infrastructure; and (4) acquire multi-perspective imagery under variable lighting. Validation metric: cross-farm variation in mAP@50 within ±2%.
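The validation criterion can be checked mechanically once per-farm results exist. The sketch below assumes, as an interpretation, that “±2%” means every farm’s mAP@50 lies within 2 percentage points of the cross-farm mean; the function names are hypothetical.

```python
from statistics import mean

def cross_farm_spread(map50_by_farm):
    """Largest absolute deviation (in mAP@50 points) of any farm from the cross-farm mean."""
    values = list(map50_by_farm.values())
    mu = mean(values)
    return max(abs(v - mu) for v in values)

def passes_cross_farm_criterion(map50_by_farm, tolerance=2.0):
    """True if every farm's mAP@50 is within `tolerance` points of the mean."""
    return cross_farm_spread(map50_by_farm) <= tolerance
```

A stricter alternative would be leave-one-farm-out evaluation, in which each farm is held out entirely from training before its mAP@50 is measured.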

Fifth, to compensate for the exclusion of ambient temperature monitoring [102, 103], we will integrate thermal sensing mechanisms: (1) Embed IoT temperature sensors in poultry houses. (2) Dynamically adjust detection sensitivity based on real-time thermal readings. (3) Establish temperature-triggered alert thresholds to reduce false interventions. Implementation focus: Correlation between thermal stress and panting intensity.
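Steps (2) and (3) could be combined as sketched below: the detector’s confidence threshold is relaxed linearly as ambient temperature rises, and an alert fires only when it is hot and a sufficient share of visible birds are detected as panting. Every numeric value here is a placeholder chosen for illustration, not a validated broiler threshold, and the function names are hypothetical.

```python
import numpy as np

# Placeholder values for illustration only; NOT validated broiler thresholds.
T_LOW_C, T_HIGH_C = 24.0, 32.0          # temperature range over which sensitivity ramps up
CONF_COOL, CONF_HOT = 0.6, 0.4          # detector confidence thresholds at each end
T_ALERT_C, FRACTION_ALERT = 30.0, 0.2   # alert only if hot AND enough birds are panting

def confidence_threshold(temp_c):
    """Relax the detection threshold linearly as ambient temperature rises (clamped at the ends)."""
    return float(np.interp(temp_c, [T_LOW_C, T_HIGH_C], [CONF_COOL, CONF_HOT]))

def panting_fraction(scores, n_birds, temp_c):
    """Share of visible birds whose open-mouth score passes the temperature-dependent threshold."""
    if n_birds == 0:
        return 0.0
    thr = confidence_threshold(temp_c)
    return sum(s >= thr for s in scores) / n_birds

def should_alert(temp_c, fraction):
    """Temperature-gated alert: a high panting share alone does not trigger intervention."""
    return temp_c >= T_ALERT_C and fraction >= FRACTION_ALERT
```

Gating alerts on temperature is intended to suppress interventions triggered by non-thermal mouth opening in cool conditions, which is the false-intervention risk noted in Section 4.2.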

Finally, to bridge the temporal modeling gap and enable behavior-level interpretation, we propose a phased approach that integrates ethological benchmarks for distinguishing adaptive thermoregulation from heat-stress-induced dysfunction: (1) Short term: implement lightweight LSTM networks to analyze temporal sequences, not only detecting the occurrence of panting but also quantifying its rhythm and duration. (2) Mid term: develop 3D-CNN architectures that process extended video clips for robust spatiotemporal feature fusion, enabling continuous monitoring of panting bouts. (3) Long term: advance to transformer-based models that explicitly correlate key behavioral metrics, particularly panting duration and any disruption of resting periods, with physiological stress biomarkers. This integration aims to establish quantitative thresholds: short-duration panting within the normal rhythm range would indicate adaptive thermoregulation, whereas prolonged panting (exceeding physiologically adaptive timeframes) or panting accompanied by marked behavioral suppression (e.g., reduced resting) would be flagged as a sign of heat-stress-induced dysfunction and compromised welfare.