YOLO-WildASM: An Object Detection Algorithm for Protected Wildlife

Simple Summary

Abstract

1. Introduction

- We built a specialized wildlife dataset of over 8000 images covering 10 protected species (giant panda, leopard, tiger, snow leopard, wolf, red fox, black bear, red panda, yellow-throated marten, and otter), collected through verified channels. To increase image diversity, additional frames were extracted from collected videos using video frame differencing. All images were annotated with LabelImg 1.8.6. The dataset covers complex backgrounds, diverse postures, multi-target and small-target scenes, and both daytime and nighttime conditions.

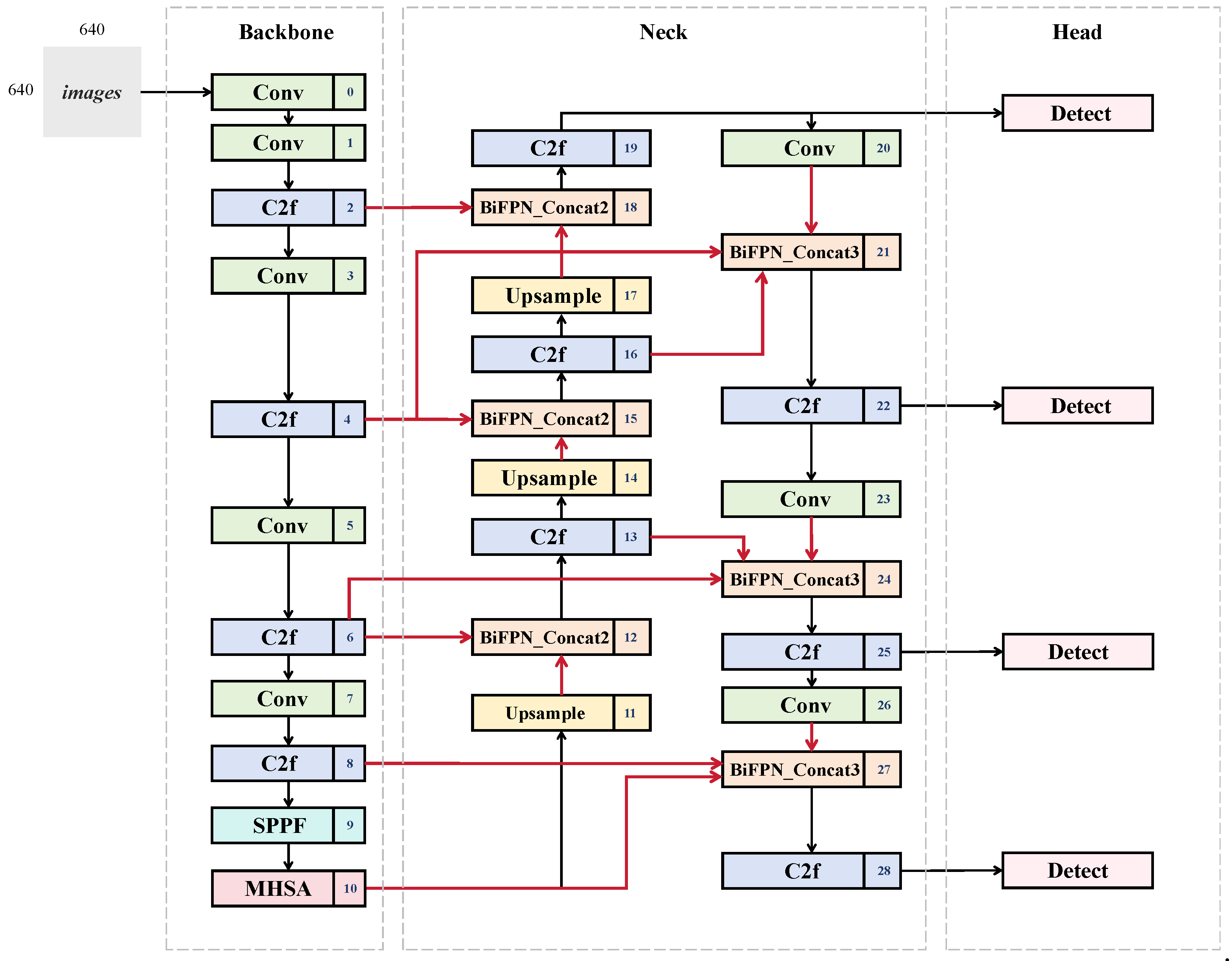

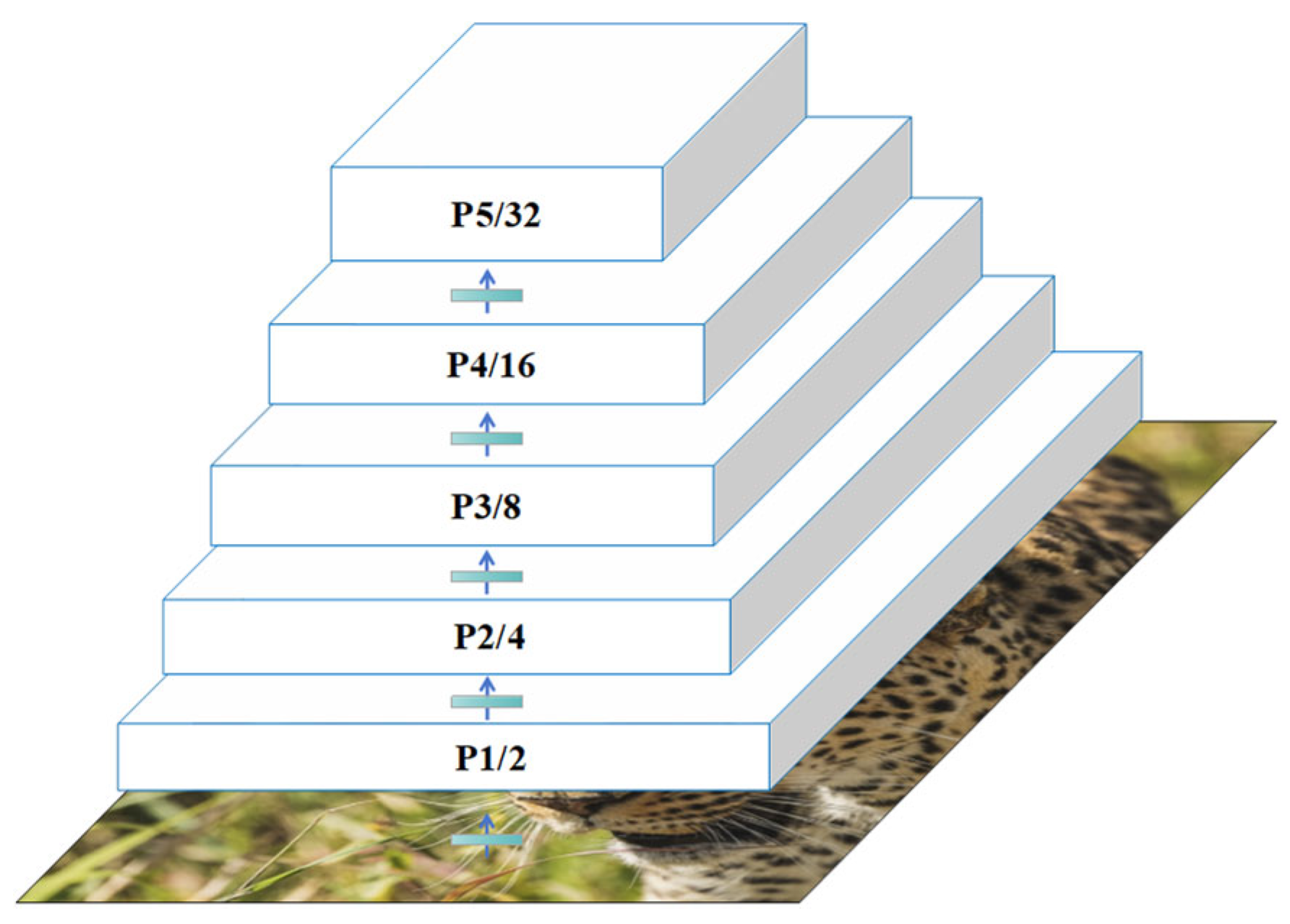

- The proposed model improves small-object detection by adding a P2/4 layer (stride 4, 160 × 160 resolution) to the PAN-FPN neck of YOLOv8. This higher-resolution level preserves the spatial detail of targets smaller than 32 × 32 pixels, which is otherwise largely lost through the repeated downsampling that produces the coarser P3 to P5 levels. Propagating these detailed shallow features into deeper layers mitigates this limitation of a conventional FPN and, through the enriched feature representation, also improves spatial localization of larger objects. In addition, we replace the original concatenation layers with weighted fusion modules based on BiFPN (Bidirectional Feature Pyramid Network): a two-input BiFPN_Concat2 and a three-input BiFPN_Concat3. This strengthens the model's ability to detect multiple coexisting targets in complex environments.

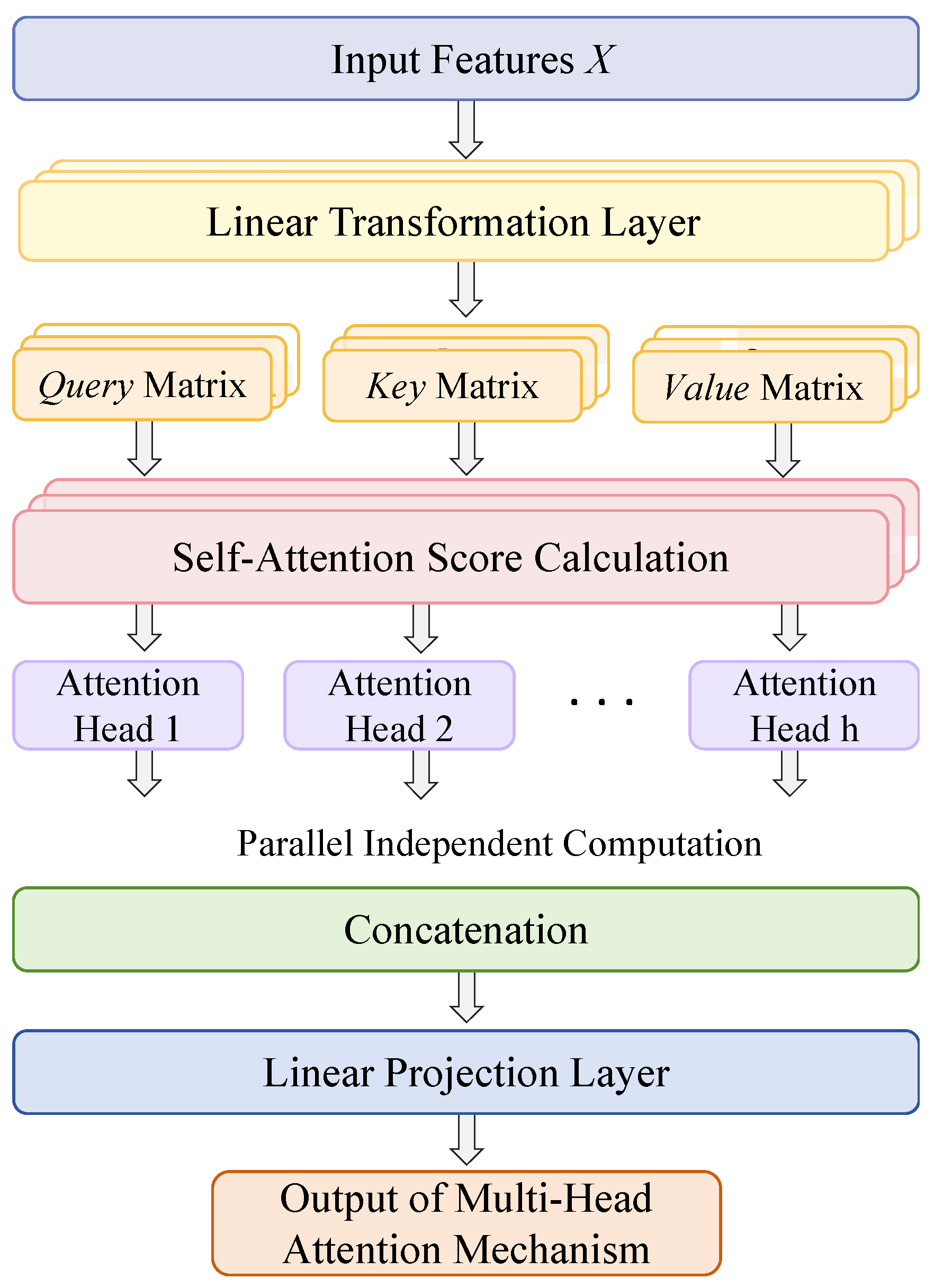

- YOLO-WildASM incorporates a Multi-Head Self-Attention (MHSA) mechanism to address occlusion and interference from complex backgrounds. Multiple attention heads computed in parallel capture global contextual dependencies, making features more discriminative for occluded targets and cluttered scenes. Combined with the P2 layer and the BiFPN modules, this integration improves detection accuracy in multi-object scenarios (in the ablation study, mAP50 rises from 0.913 for the YOLOv8 baseline to 0.941 and mAP50-95 from 0.736 to 0.777), providing robust technical support for wildlife monitoring applications.

2. Materials and Methods

2.1. Data and Preprocessing
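The contributions above mention video frame differencing as a way to extract additional images from collected videos. The sketch below illustrates one simple form of this idea, assuming frames are already decoded into grayscale NumPy arrays; the function name `select_keyframes` and the `threshold` value are hypothetical and are not the authors' settings. A frame is kept only when its mean absolute pixel difference from the last kept frame exceeds the threshold, so near-duplicate consecutive frames are skipped.

```python
import numpy as np


def select_keyframes(frames, threshold=10.0):
    """Return indices of frames that differ enough from the last kept frame.

    Hypothetical frame-differencing sketch: `frames` is a sequence of
    grayscale frames (H x W uint8 arrays) and `threshold` is an assumed
    mean-absolute-difference cutoff in pixel intensity units.
    """
    if len(frames) == 0:
        return []
    kept = [0]  # always keep the first frame
    last = frames[0].astype(np.float32)
    for i in range(1, len(frames)):
        current = frames[i].astype(np.float32)
        # Mean absolute difference between this frame and the last kept one
        diff = np.mean(np.abs(current - last))
        if diff > threshold:
            kept.append(i)
            last = current
    return kept


# Usage: a static scene with one abrupt change at frame 3
static = np.zeros((4, 4), dtype=np.uint8)
changed = np.full((4, 4), 50, dtype=np.uint8)
video = [static, static, static, changed, changed]
print(select_keyframes(video))  # [0, 3]
```

Comparing against the last *kept* frame rather than the immediately preceding one avoids accumulating many nearly identical frames during slow, gradual motion.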

2.2. The YOLO-WildASM Model

2.2.1. YOLOv8

2.2.2. P2 Feature Pyramid Layer Integration for Small-Object Detection

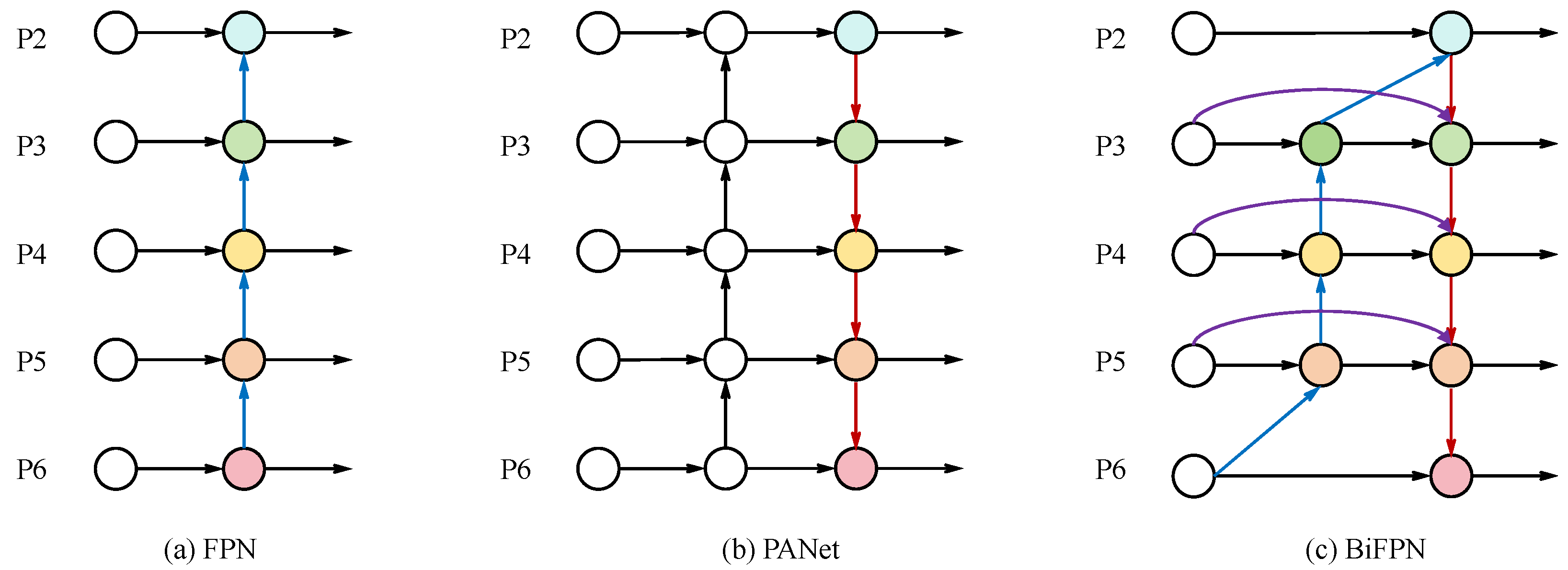
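The cost and benefit of the P2 level can be quantified with simple stride arithmetic. The sketch below assumes a 640 × 640 input (consistent with the 160 × 160 P2 map, since 640 / 4 = 160) and one anchor-free prediction per grid cell per level, as in the YOLOv8 detection head; the input size is an assumption rather than a value stated in this section, and the helper names are hypothetical.

```python
def grid_sizes(input_size, strides):
    """Feature-map side length at each stride for a square input."""
    return {s: input_size // s for s in strides}


def num_predictions(input_size, strides):
    """Total grid cells (one anchor-free prediction each) across all levels."""
    return sum((input_size // s) ** 2 for s in strides)


INPUT = 640                    # assumed input resolution
BASE_STRIDES = (8, 16, 32)     # P3, P4, P5 in the standard YOLOv8 head
P2_STRIDES = (4, 8, 16, 32)    # with the added P2 level

print(grid_sizes(INPUT, P2_STRIDES))         # {4: 160, 8: 80, 16: 40, 32: 20}
print(num_predictions(INPUT, BASE_STRIDES))  # 8400
print(num_predictions(INPUT, P2_STRIDES))    # 34000

# Grid cells spanned along each side by a 32 x 32 px object at each stride
print({s: 32 // s for s in P2_STRIDES})      # {4: 8, 8: 4, 16: 2, 32: 1}
```

A 32 × 32 pixel object spans 8 × 8 cells at P2 but only 4 × 4 at P3, which is why the extra level helps small targets. The P2 level alone adds 25,600 of the 34,000 cells, which is consistent with the higher GFLOPs of YOLO-WildASM in the complexity table, although that increase also reflects the BiFPN and MHSA modules.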

2.2.3. BiFPN_Concat-Driven Feature Fusion for Complex Scene Robustness

- Iterative bidirectional fusion: top-down and bottom-up fusion blocks can be repeated, so multi-scale features propagate back and forth through the network and cross-scale interactions are progressively refined, strengthening the feature representation.

- Adaptive weighted feature fusion: learnable weights set the relative contribution of each input feature map, since maps at different scales carry information of different value. Because these weights are adjusted during training, the network learns which features to prioritize for the detection task.

- Cross-layer connectivity: direct connections between non-adjacent layers at the concatenation nodes keep features consistent across spatial and semantic levels and give each fusion node a unified context.
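The adaptive weighted fusion described above is based on the "fast normalized fusion" of EfficientDet, in which each input i receives a learnable non-negative weight w_i, normalized as w_i / (ε + Σ w_j). The NumPy sketch below shows a forward pass of a fusion node in the style of BiFPN_Concat2/BiFPN_Concat3. It assumes, as in common open-source YOLO implementations of these modules, that the normalized weights scale each input and the results are then concatenated along the channel axis (EfficientDet itself sums them). The function name, ε = 1e-4, and the NCHW layout are assumptions, and the authors' exact module may differ.

```python
import numpy as np


def bifpn_concat(features, raw_weights, eps=1e-4):
    """Weighted fusion of same-sized feature maps, then channel concatenation.

    features:    list of arrays shaped (N, C_i, H, W) with equal N, H, W
    raw_weights: one scalar per input (learnable parameters in a real model)
    """
    # ReLU keeps every fusion weight non-negative
    w = np.maximum(np.asarray(raw_weights, dtype=np.float64), 0.0)
    # Fast normalized fusion: weights sum to approximately 1
    w = w / (w.sum() + eps)
    weighted = [wi * f for wi, f in zip(w, features)]
    # Concatenate the weighted inputs along the channel axis
    return np.concatenate(weighted, axis=1), w


# Usage: a two-input node (BiFPN_Concat2-style) on 1 x 2 x 4 x 4 maps
a = np.ones((1, 2, 4, 4))
b = np.ones((1, 2, 4, 4))
out, w = bifpn_concat([a, b], raw_weights=[1.0, 3.0])
print(out.shape)       # (1, 4, 4, 4)
print(np.round(w, 3))  # [0.25 0.75]
```

A three-input BiFPN_Concat3-style node is the same call with three feature maps and three weights. Normalizing by the sum rather than with a softmax is the design choice EfficientDet made to keep fusion cheap.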

2.2.4. Multi-Head Self-Attention (MHSA) Mechanism

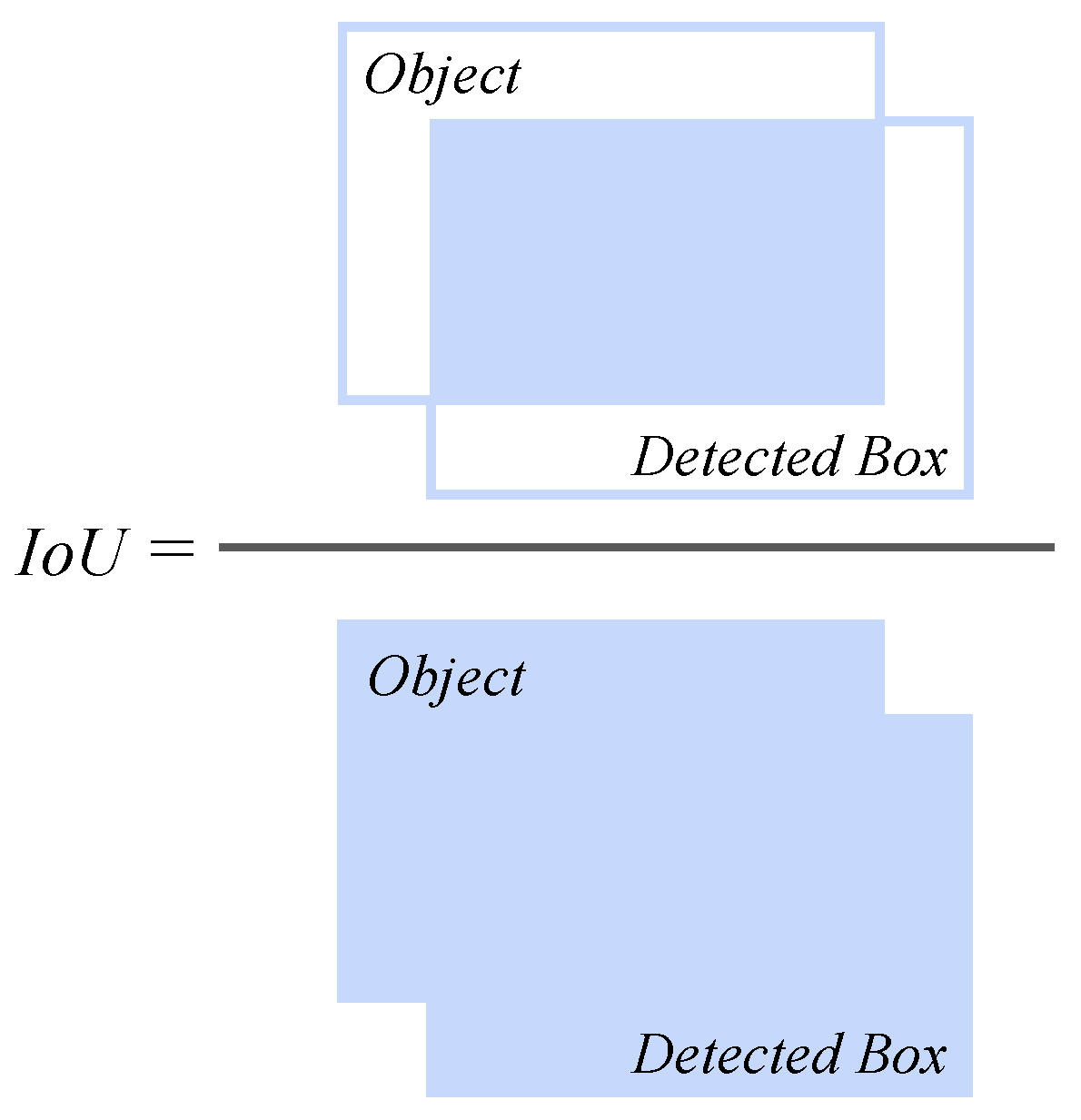
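The MHSA computation can be summarized as softmax(QKᵀ/√d_k)V evaluated independently for each head, followed by concatenation of the heads and an output projection. The NumPy sketch below applies it to a flattened feature map (H·W tokens of dimension d). The random projection matrices stand in for learned weights, and the channel-last layout, shapes, and head count are illustrative assumptions rather than the configuration used in YOLO-WildASM.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max before exponentiating for numerical stability
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (T, d) tokens; w_q, w_k, w_v, w_o: (d, d) projections; returns (T, d)."""
    t, d = x.shape
    if d % num_heads != 0:
        raise ValueError("embedding dim must be divisible by num_heads")
    d_k = d // num_heads

    def split_heads(m):
        # (T, d) -> (num_heads, T, d_k): each head gets its own slice of channels
        return m.reshape(t, num_heads, d_k).transpose(1, 0, 2)

    q = split_heads(x @ w_q)
    k = split_heads(x @ w_k)
    v = split_heads(x @ w_v)
    # Scaled dot-product attention, computed for all heads in parallel
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_k)  # (num_heads, T, T)
    attn = softmax(scores, axis=-1)  # every token attends to every other token
    heads = attn @ v  # (num_heads, T, d_k)
    # Concatenate heads back to (T, d) and apply the output projection
    merged = heads.transpose(1, 0, 2).reshape(t, d)
    return merged @ w_o, attn


# Usage: a 4 x 4 feature map with 8 channels, flattened to 16 tokens, 2 heads
rng = np.random.default_rng(0)
height, width, channels = 4, 4, 8
feature_map = rng.standard_normal((height, width, channels))
tokens = feature_map.reshape(height * width, channels)
w_q, w_k, w_v, w_o = (rng.standard_normal((channels, channels)) * 0.1 for _ in range(4))
out, attn = multi_head_self_attention(tokens, w_q, w_k, w_v, w_o, num_heads=2)
print(out.reshape(height, width, channels).shape)  # (4, 4, 8)
print(attn.shape)  # (2, 16, 16)
```

Because each attention row spans all 16 positions, every output location mixes information from the whole feature map; this global receptive field is what can help when part of an animal is occluded. The T × T attention matrix also explains why attention is usually applied on small, deep feature maps.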

2.3. Evaluation Indicators
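The metrics in the result tables follow the standard definitions: P = TP/(TP + FP), R = TP/(TP + FN), F1 = 2PR/(P + R), AP is the area under the precision-recall curve of one class, mAP50 averages AP over classes at an IoU threshold of 0.5, and mAP50-95 additionally averages over IoU thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below implements these definitions using all-point interpolation of the PR curve. The interpolation choice is an assumption (some toolkits integrate over 101 interpolated recall points instead), so values computed this way may differ slightly from those reported.

```python
import numpy as np


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r else 0.0


def precision_recall_f1(tp, fp, fn):
    """TP/FP/FN counts at a fixed IoU threshold -> (precision, recall, F1)."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r, f1_score(p, r)


def average_precision(recall, precision):
    """All-point interpolated AP for one class; inputs sorted by increasing recall."""
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([1.0], precision, [0.0]))
    # Precision envelope: make precision non-increasing as recall grows
    p = np.maximum.accumulate(p[::-1])[::-1]
    # Sum the rectangles under the envelope wherever recall changes
    idx = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[idx + 1] - r[idx]) * p[idx + 1]))


# IoU thresholds averaged in mAP50-95: 0.50, 0.55, ..., 0.95
IOU_THRESHOLDS = np.linspace(0.50, 0.95, 10)


def map50_and_map50_95(ap_table):
    """ap_table[c][t]: AP of class c at IOU_THRESHOLDS[t]; mAP50 uses column 0."""
    ap_table = np.asarray(ap_table, dtype=float)
    return float(ap_table[:, 0].mean()), float(ap_table.mean())


# Usage
print([round(v, 3) for v in precision_recall_f1(tp=90, fp=10, fn=20)])  # [0.9, 0.818, 0.857]
print(average_precision(np.array([0.5, 1.0]), np.array([1.0, 0.5])))  # 0.75
print(round(f1_score(0.922, 0.888), 3))  # 0.905, the F1 reported for YOLO-WildASM
```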

3. Experimental Evaluation

3.1. Experimental Environment

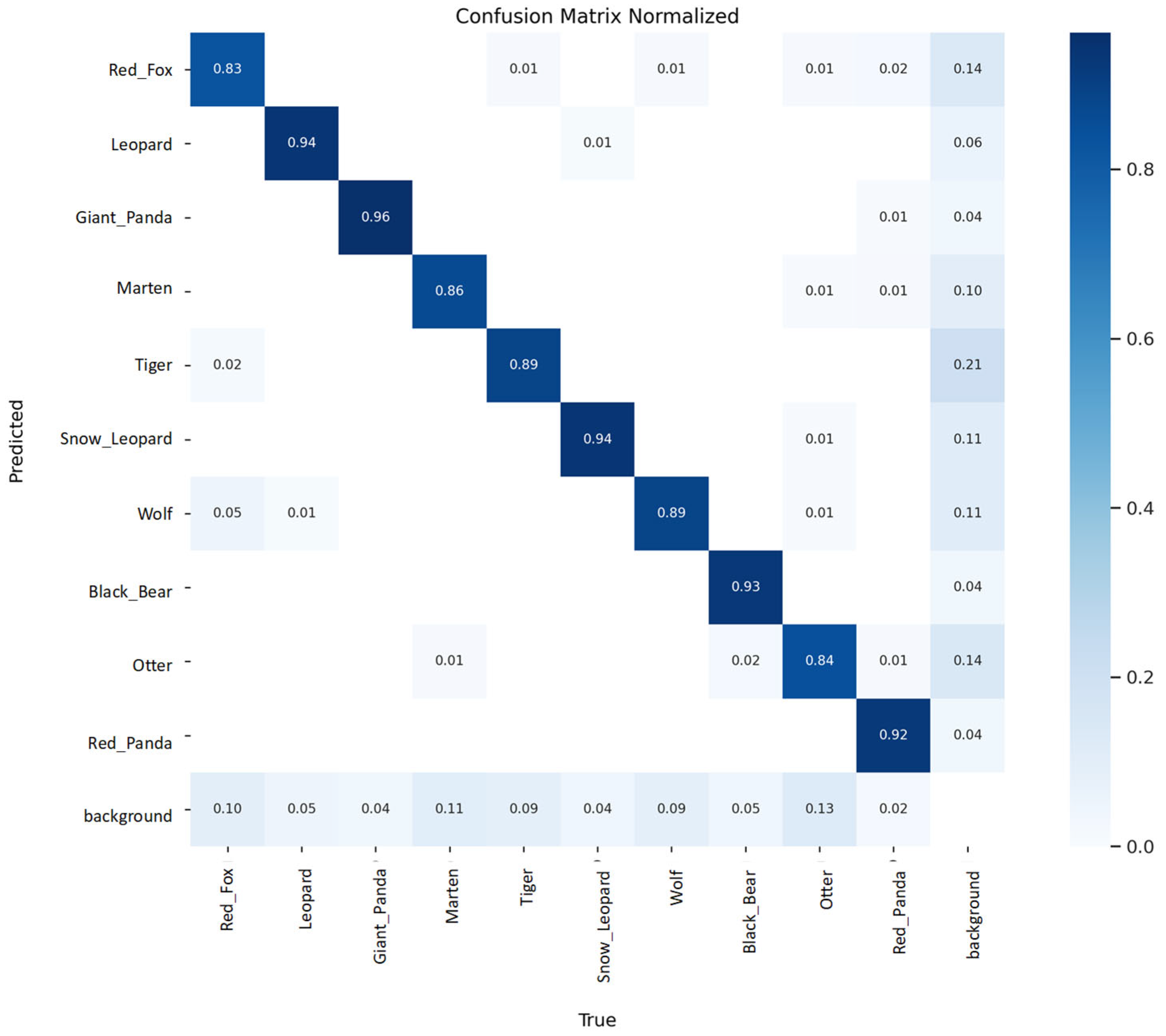

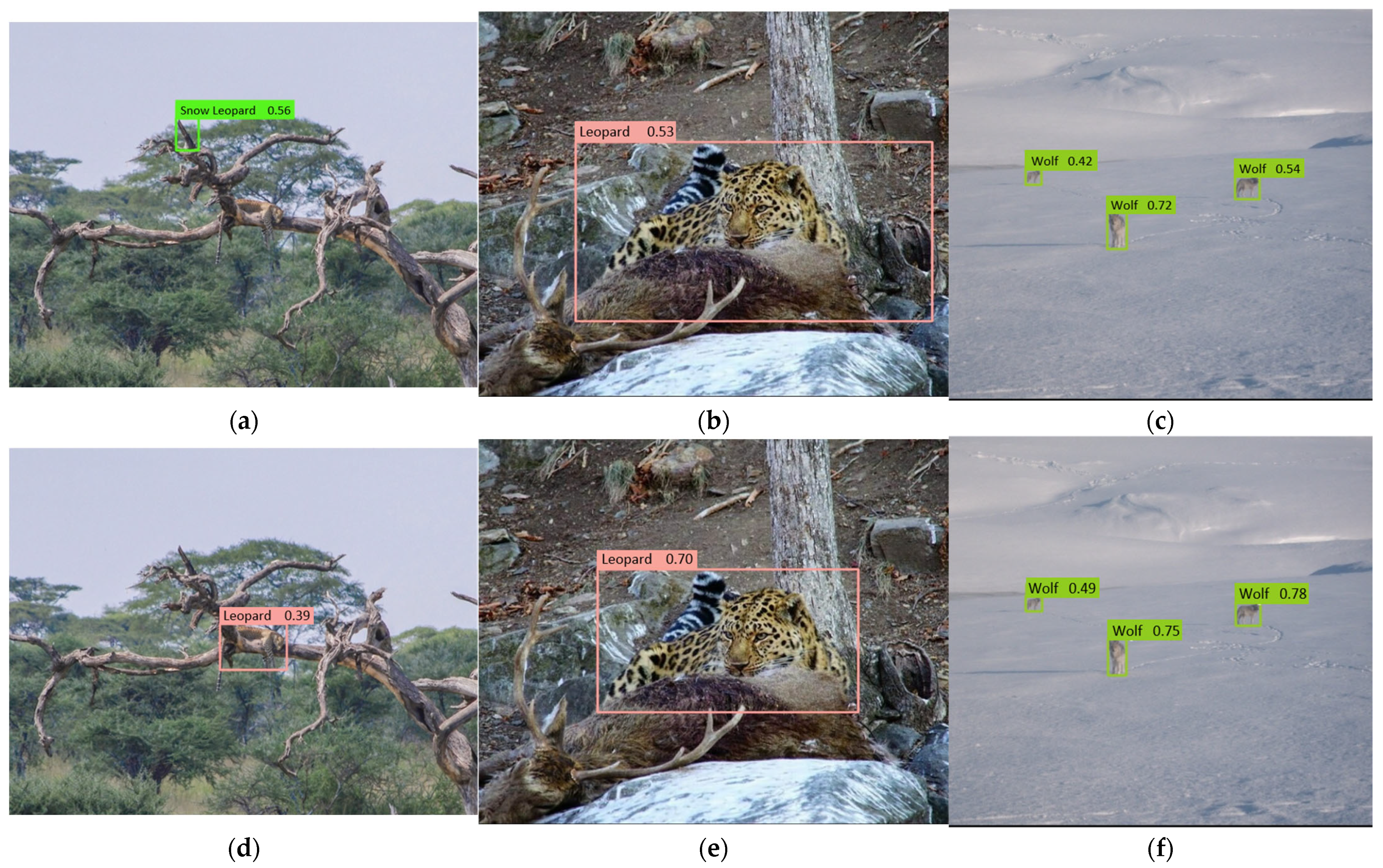

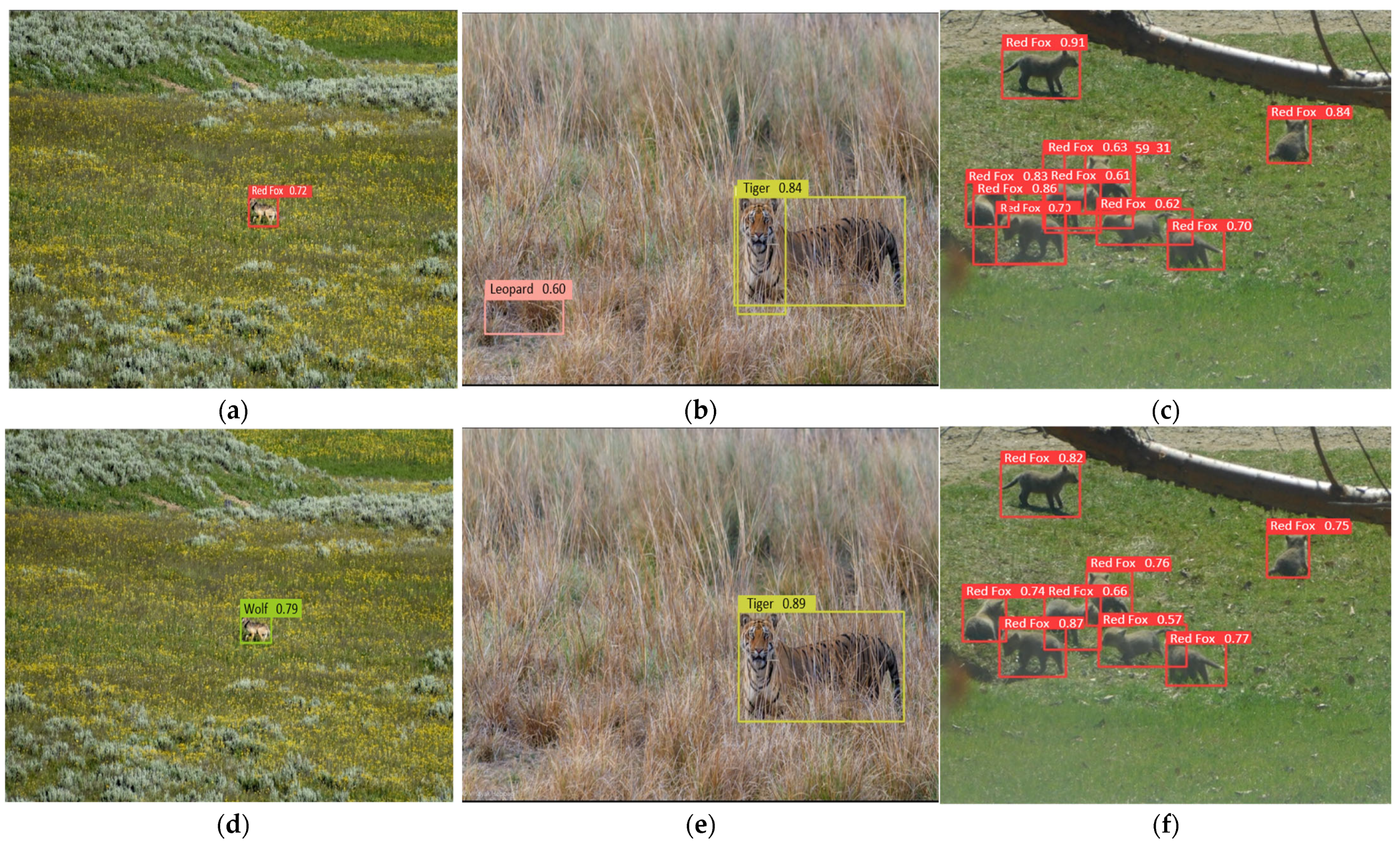

3.2. Experimental Results and Analysis

3.2.1. Ablation Experiment

3.2.2. Comparative Experiment

3.2.3. Model Complexity Comparison

3.2.4. Extended Comparative Experiment

3.2.5. Cross-Dataset Generalization Experiment

4. Discussion

4.1. Estimation Accuracy and Portability of YOLO-WildASM

4.2. Limitations of Modeling Methods

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Data sources: Chengdu Institute of Biology, iNaturalist, iStock, Landbridge Ecology Center, China Conservation and Research Center for the Giant Panda, and Chengdu Research Base of Giant Panda Breeding.

| Animal Name | Images per Source | Total Images |
|---|---|---|
| Leopard | 960 | 960 |
| Giant Panda | 13 + 338 + 524 + 34 | 909 |
| Tiger | 892 + 21 | 913 |
| Snow Leopard | 124 + 298 + 456 | 878 |
| Red Fox | 119 + 725 + 105 | 949 |
| Yellow-throated Marten | 106 + 709 | 815 |
| Wolf | 508 + 273 + 30 | 811 |
| Black Bear | 198 + 129 + 205 | 532 |
| Otter | 69 + 775 | 844 |
| Red Panda | 185 + 90 + 189 + 99 | 563 |
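The dataset composition table above can be cross-checked against the stated dataset size. The snippet below simply re-adds the per-source counts and per-species totals copied from the table.

```python
# Per-source image counts and per-species totals copied from the dataset table
source_counts = {
    "Leopard": [960],
    "Giant Panda": [13, 338, 524, 34],
    "Tiger": [892, 21],
    "Snow Leopard": [124, 298, 456],
    "Red Fox": [119, 725, 105],
    "Yellow-throated Marten": [106, 709],
    "Wolf": [508, 273, 30],
    "Black Bear": [198, 129, 205],
    "Otter": [69, 775],
    "Red Panda": [185, 90, 189, 99],
}
totals = {
    "Leopard": 960, "Giant Panda": 909, "Tiger": 913, "Snow Leopard": 878,
    "Red Fox": 949, "Yellow-throated Marten": 815, "Wolf": 811,
    "Black Bear": 532, "Otter": 844, "Red Panda": 563,
}

# Each row's per-source counts should add up to its listed total
mismatches = [name for name, counts in source_counts.items() if sum(counts) != totals[name]]
print(mismatches)  # []
print(len(totals), sum(totals.values()))  # 10 8174
```

Every row is internally consistent, and the grand total of 8,174 images agrees with the "over 8000 images" stated in the contributions.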

| Model | Precision (P) | Recall (R) | F1-Score | mAP50 | mAP50-95 |
|---|---|---|---|---|---|
| YOLOv8 (Baseline) | 0.92 | 0.833 | 0.874 | 0.913 | 0.736 |
| YOLOv8 + P2 | 0.922 | 0.848 | 0.884 | 0.919 | 0.741 |
| YOLOv8 + BiFPN | 0.911 | 0.852 | 0.881 | 0.922 | 0.742 |
| YOLOv8 + MHSA | 0.906 | 0.871 | 0.888 | 0.927 | 0.757 |
| YOLOv8 + P2 + BiFPN | 0.93 | 0.859 | 0.893 | 0.928 | 0.755 |
| YOLOv8 + P2 + MHSA | 0.91 | 0.872 | 0.891 | 0.933 | 0.767 |
| YOLOv8 + BiFPN + MHSA | 0.915 | 0.848 | 0.880 | 0.929 | 0.754 |
| YOLO-WildASM | 0.922 | 0.888 | 0.905 | 0.941 | 0.777 |
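To make the ablation gains explicit, the sketch below computes the absolute change of each metric relative to the YOLOv8 baseline, using the values in the ablation table above (YOLOv8 baseline plus the P2, BiFPN and MHSA combinations). The helper name `gains` is hypothetical.

```python
# Metrics (P, R, F1, mAP50, mAP50-95) copied from the ablation table
METRICS = ("P", "R", "F1", "mAP50", "mAP50-95")
rows = {
    "YOLOv8 (Baseline)": (0.920, 0.833, 0.874, 0.913, 0.736),
    "YOLOv8 + P2": (0.922, 0.848, 0.884, 0.919, 0.741),
    "YOLOv8 + BiFPN": (0.911, 0.852, 0.881, 0.922, 0.742),
    "YOLOv8 + MHSA": (0.906, 0.871, 0.888, 0.927, 0.757),
    "YOLOv8 + P2 + BiFPN": (0.930, 0.859, 0.893, 0.928, 0.755),
    "YOLOv8 + P2 + MHSA": (0.910, 0.872, 0.891, 0.933, 0.767),
    "YOLOv8 + BiFPN + MHSA": (0.915, 0.848, 0.880, 0.929, 0.754),
    "YOLO-WildASM": (0.922, 0.888, 0.905, 0.941, 0.777),
}


def gains(rows, baseline="YOLOv8 (Baseline)"):
    """Absolute change of every metric relative to the baseline row."""
    base = rows[baseline]
    return {
        name: {m: round(v - b, 3) for m, v, b in zip(METRICS, vals, base)}
        for name, vals in rows.items()
        if name != baseline
    }


for name, delta in gains(rows).items():
    print(name, delta)
```

For the full model, recall rises by 0.055 and mAP50-95 by 0.041 while precision changes by only 0.002, which suggests that most of the improvement comes from detecting targets the baseline missed rather than from suppressing false positives.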

| Model | Precision (P) | Recall (R) | F1-Score | mAP50 | mAP50-95 |
|---|---|---|---|---|---|
| YOLOv8n | 0.92 | 0.833 | 0.874 | 0.913 | 0.736 |
| YOLOv9t | 0.922 | 0.854 | 0.887 | 0.922 | 0.766 |
| YOLOv10n | 0.92 | 0.833 | 0.874 | 0.911 | 0.737 |
| YOLOv11n | 0.901 | 0.865 | 0.883 | 0.922 | 0.757 |
| YOLOv12n | 0.911 | 0.863 | 0.886 | 0.922 | 0.785 |
| YOLOv12s | 0.925 | 0.882 | 0.903 | 0.937 | 0.783 |
| YOLO-WildASM | 0.922 | 0.888 | 0.905 | 0.941 | 0.777 |

| Model | Layers | Parameters | GFLOPs | Size (MB) | Latency (ms) | FPS |
|---|---|---|---|---|---|---|
| YOLOv8n | 168 | 3,007,598 | 8.1 | 6.2 | 2.4 | 417 |
| YOLO-WildASM | 212 | 3,205,767 | 12.5 | 6.8 | 3.3 | 303 |
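The FPS column in the complexity table above matches FPS = 1000 / latency (ms), and the relative overhead of YOLO-WildASM over YOLOv8n follows directly from the table. The sketch below computes both, assuming FPS was derived from per-image latency alone (the table does not state this explicitly); the helper names are hypothetical.

```python
# Values copied from the model complexity table
models = {
    "YOLOv8n": {"layers": 168, "params": 3_007_598, "gflops": 8.1,
                "size_mb": 6.2, "latency_ms": 2.4},
    "YOLO-WildASM": {"layers": 212, "params": 3_205_767, "gflops": 12.5,
                     "size_mb": 6.8, "latency_ms": 3.3},
}


def fps(latency_ms):
    """Frames per second implied by a per-image latency in milliseconds."""
    return 1000.0 / latency_ms


def relative_overhead(new, base, key):
    """Percentage increase of `key` in `new` relative to `base`."""
    return 100.0 * (new[key] - base[key]) / base[key]


base, ours = models["YOLOv8n"], models["YOLO-WildASM"]
for name, m in models.items():
    print(name, round(fps(m["latency_ms"])))  # 417 and 303, matching the FPS column
for key in ("params", "gflops", "latency_ms"):
    print(key, f"+{relative_overhead(ours, base, key):.1f}%")
```

Parameters grow by only about 6.6%, whereas GFLOPs grow by about 54%. This is consistent with the added 160 × 160 P2 branch and the attention computation, which raise the amount of computation far more than the number of weights.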

| Model | Precision (P) | Recall (R) | F1-Score | mAP50 | mAP50-95 |
|---|---|---|---|---|---|
| YOLOv11n | 0.901 | 0.865 | 0.883 | 0.922 | 0.757 |
| YOLOv11n-ASM | 0.907 | 0.872 | 0.889 | 0.925 | 0.759 |
| YOLO-WildASM (ours) | 0.922 | 0.888 | 0.905 | 0.941 | 0.777 |

| Model | Precision (P) | Recall (R) | F1-Score | mAP50 | mAP50-95 |
|---|---|---|---|---|---|
| YOLOv8n | 0.927 | 0.888 | 0.907 | 0.95 | 0.8 |
| YOLOv9t | 0.946 | 0.888 | 0.916 | 0.95 | 0.799 |
| YOLOv10n | 0.942 | 0.876 | 0.908 | 0.954 | 0.808 |
| YOLOv11n | 0.937 | 0.89 | 0.913 | 0.946 | 0.807 |
| YOLOv11s | 0.939 | 0.93 | 0.934 | 0.967 | 0.807 |
| YOLOv12n | 0.953 | 0.895 | 0.923 | 0.964 | 0.8 |
| YOLOv12s | 0.932 | 0.904 | 0.918 | 0.966 | 0.821 |
| YOLO-WildASM | 0.95 | 0.916 | 0.933 | 0.973 | 0.82 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, Y.; Zhao, Y.; He, Y.; Wu, B.; Su, X. YOLO-WildASM: An Object Detection Algorithm for Protected Wildlife. Animals 2025, 15, 2699. https://doi.org/10.3390/ani15182699

Zhu Y, Zhao Y, He Y, Wu B, Su X. YOLO-WildASM: An Object Detection Algorithm for Protected Wildlife. Animals. 2025; 15(18):2699. https://doi.org/10.3390/ani15182699

Chicago/Turabian Style: Zhu, Yutong, Yixuan Zhao, Yanxin He, Baoguo Wu, and Xiaohui Su. 2025. "YOLO-WildASM: An Object Detection Algorithm for Protected Wildlife" Animals 15, no. 18: 2699. https://doi.org/10.3390/ani15182699

APA Style: Zhu, Y., Zhao, Y., He, Y., Wu, B., & Su, X. (2025). YOLO-WildASM: An Object Detection Algorithm for Protected Wildlife. Animals, 15(18), 2699. https://doi.org/10.3390/ani15182699