PBC-Transformer: Interpreting Poultry Behavior Classification Using Image Caption Generation Techniques

Simple Summary

Abstract

1. Introduction

- How can we replicate the expert classification process comprising observation, focus, recall, description, and decision-making when poultry behavior categories are similar, rather than simply offering a decision?

- How can we improve classification accuracy when data are limited or behavior categories are imbalanced?

- How can we overcome the challenge of static vectors, which prevent models from dynamically selecting image regions to generate accurate descriptions, especially in images with many irrelevant elements?

- The development of the first dual-task dataset, PBC-CapLabels, for poultry behavior classification and description.

- The introduction of a novel concentrated attention mechanism (HSPC+LSM+KFE) that enhances the ability of the model to locate and extract detailed local features of poultry.

- The proposal of a novel multi-stage attention differentiator that overcomes the limitation of static vectors by dynamically selecting different regions to generate accurate descriptions.

- The development of a multi-stage attention memory report and classification decoder to ensure persistent feature memory and alignment of textual and categorical information.

- The proposal of an enhanced contrastive loss function that integrates contrastive learning of category, text, and image feature losses.

2. Methods

2.1. General

2.2. Feature Extractor

2.3. Concentrated Encoder

2.3.1. Concentrated Attention Mechanism

Algorithm 1: Concentrated attention with HSPC, LSM, and KFE.

Input: …, …, and …. Output: output_CA, attn.

Steps:

1. Head Space Position Coding (HSPC):
   - Compute initial attention scores.
   - Apply positional encoding and update scores.
2. Limited Softmax Masking (LSM):
   - Apply the attention mask if provided.
   - Perform top-k filtering on scores.
   - Apply softmax and dropout.
   - Compute the context vector.
3. Kernel Fusion Enhancement (KFE):
   - Apply depth-wise separable convolution to the value matrix.
   - Combine the context vector with the convolved values.
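The three steps of Algorithm 1 can be illustrated with a minimal single-head sketch in plain Python. It is not the authors' implementation: the function names, the additive `pos_bias` matrix standing in for HSPC, and the per-channel `kernels` are all assumptions made for illustration. The attention mask and dropout are omitted, only the depth-wise part of the separable convolution is shown (no point-wise mixing), and queries and values are assumed to have the same length, as in self-attention.

```python
import math


def softmax_topk(scores, k):
    """Softmax over the k largest scores; other positions get weight 0.

    Ties at the k-th score are all kept, so more than k entries may survive.
    """
    k = min(k, len(scores))
    threshold = sorted(scores, reverse=True)[k - 1]
    kept = [s if s >= threshold else -math.inf for s in scores]
    peak = max(kept)
    exps = [math.exp(s - peak) for s in kept]
    total = sum(exps)
    return [e / total for e in exps]


def depthwise_conv1d(values, kernels):
    """Zero-padded 1-D convolution along the token axis, one kernel per channel."""
    n, d = len(values), len(values[0])
    out = [[0.0] * d for _ in range(n)]
    for c in range(d):
        half = len(kernels[c]) // 2
        for t in range(n):
            for j, w in enumerate(kernels[c]):
                src = t + j - half
                if 0 <= src < n:
                    out[t][c] += w * values[src][c]
    return out


def concentrated_attention(q, k, v, pos_bias, top_k, kernels):
    """Hypothetical sketch: biased scores -> top-k softmax -> context + conv(V)."""
    scale = 1.0 / math.sqrt(len(q[0]))
    conv_v = depthwise_conv1d(v, kernels)
    output, attn = [], []
    for i, q_i in enumerate(q):
        # Step 1 (HSPC stand-in): scaled dot-product scores plus a positional bias.
        scores = [
            scale * sum(a * b for a, b in zip(q_i, k_j)) + pos_bias[i][j]
            for j, k_j in enumerate(k)
        ]
        # Step 2 (LSM stand-in): top-k filtering followed by softmax.
        weights = softmax_topk(scores, top_k)
        context = [
            sum(w * v_j[c] for w, v_j in zip(weights, v))
            for c in range(len(v[0]))
        ]
        # Step 3 (KFE stand-in): add the convolved values to the context vector.
        output.append([ctx + cv for ctx, cv in zip(context, conv_v[i])])
        attn.append(weights)
    return output, attn
```

Calling `concentrated_attention(x, x, x, bias, top_k, kernels)` on a small token matrix `x` returns the fused output and the sparse attention weights, mirroring the `output_CA, attn` pair named in the algorithm.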

2.3.2. Head Space Position Coding (HSPC)

2.3.3. Learnable Sparse Mechanism (LSM)

2.3.4. Key Feature Enhancement (KFE)

2.4. Multi-Level Attention Differentiator

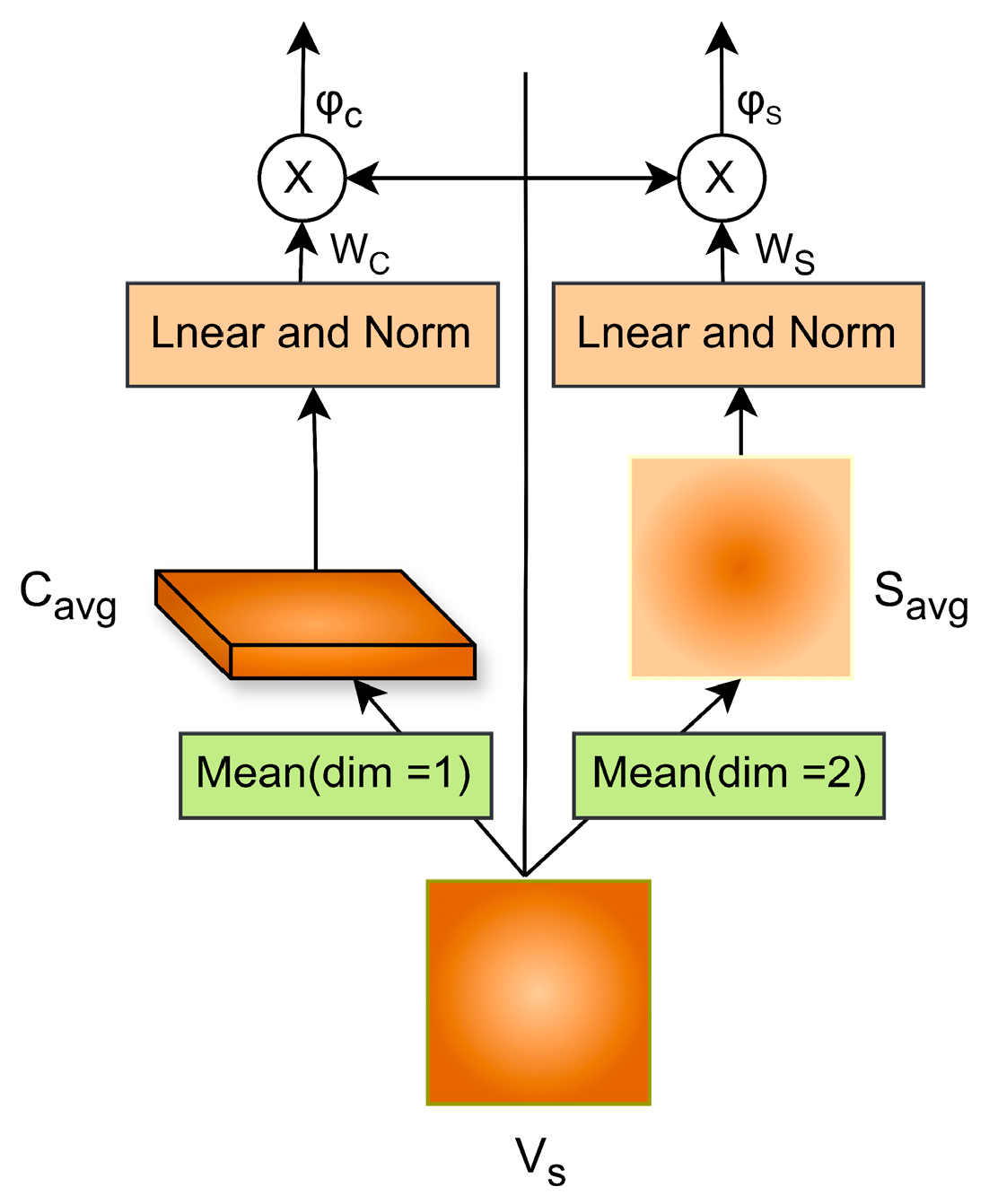

2.4.1. Refinement of Spatial Features

2.4.2. Refinement of Channel Features

2.5. Memory Description Decoder

2.6. Classification Decoder

2.7. ICL-Loss (Image, Caption, and Label Loss Function)
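The heading names three loss terms (image, caption, and label), and the introduction describes the loss as integrating contrastive learning over category, text, and image features. The sketch below shows one common way such terms are combined, and it should be read as an assumption rather than the authors' formulation: a symmetric InfoNCE-style image-text contrastive term is added to the caption and classification cross-entropy losses. The function names, the `temperature` value, and the `weight` parameter are hypothetical.

```python
import math


def cross_entropy(logits, target):
    """Negative log-softmax of the target entry (numerically stable)."""
    peak = max(logits)
    log_norm = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_norm - logits[target]


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def info_nce(image_feats, text_feats, temperature=0.07):
    """Symmetric image-text contrastive loss; matching pairs share an index."""
    n = len(image_feats)
    sims = [[cosine(i, t) / temperature for t in text_feats] for i in image_feats]
    image_to_text = sum(cross_entropy(sims[r], r) for r in range(n))
    text_to_image = sum(
        cross_entropy([sims[r][c] for r in range(n)], c) for c in range(n)
    )
    return (image_to_text + text_to_image) / (2 * n)


def combined_loss(caption_ce, class_ce, contrastive, weight=1.0):
    """Assumed combination: sum of both task losses plus a weighted contrastive term."""
    return caption_ce + class_ce + weight * contrastive
```

With this form, aligned image and text features give a contrastive term near zero, while mismatched pairs are penalized, which pushes the two modalities toward a shared embedding.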

2.8. Dataset and Evaluation Metrics

2.8.1. PBC-CapLabels (Poultry Behavior Classify—Caption Labels)

2.8.2. Flickr8K Dataset

2.8.3. Evaluation Metrics

3. Experiments

3.1. Overview

- Comparative experiments between PBC-Transformer and traditional classification models.

- Comparative experiments between PBC-Transformer and conventional caption generation models.

- Ablation study of PBC-Transformer.

- Qualitative comparison of PBC-Transformer and other models on poultry behavior classification and description generation tasks.

- Generalization experiments of PBC-Transformer.

3.2. Experimental Setup

3.3. Comparative Experiments

3.3.1. Comparative Experiments on the Caption Generation Task

3.3.2. Comparative Experiments on the Classification Task

3.4. Ablation Study of PBC-Transformer

3.5. Qualitative Comparison

3.5.1. Qualitative Comparison on Caption Generation Task

3.5.2. Qualitative Comparison on Classification Task

3.6. Generalization Experiments

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Categories | Description | Count |
|---|---|---|
| 9 Categories | | |
| 0: Walking | Moving slowly using feet | 1057 |
| 1: Running | Moving quickly using feet | 215 |
| 2: Standing | Standing still without movement | 2902 |
| 3: Eating | Pecking behavior, usually with head lowered | 286 |
| 4: Resting | Sleeping or resting on the ground | 519 |
| 5: Grooming | Using beak to touch feathers | 145 |
| 6: Abnormal Behavior | Non-routine, unusual behavior | 370 |
| 7: Flapping | Flapping wings or feathers | 316 |
| 8: Swimming | Moving through water | 1368 |
| 3 Categories | | |
| 0: Movement | Includes walking, running, swimming, flapping, and some abnormal behaviors | 3036 |
| 1: Stationary | Includes standing and some abnormal behaviors | 3068 |
| 2: Resting | Includes pecking, resting, grooming, and some abnormal behaviors | 1074 |
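The counts in the table make the class imbalance easy to quantify. The sketch below checks that both label schemes cover the same number of images and computes inverse-frequency class weights. The weighting scheme is a generic remedy shown for illustration only; the source does not state that the authors reweight classes, and the helper name is hypothetical.

```python
# Counts copied from the 9-category and 3-category rows of the table.
counts_9 = {
    "Walking": 1057, "Running": 215, "Standing": 2902,
    "Eating": 286, "Resting": 519, "Grooming": 145,
    "Abnormal Behavior": 370, "Flapping": 316, "Swimming": 1368,
}
counts_3 = {"Movement": 3036, "Stationary": 3068, "Resting": 1074}


def inverse_frequency_weights(counts):
    """Weight = total / (num_classes * count); a balanced set gives 1.0 everywhere."""
    total = sum(counts.values())
    num_classes = len(counts)
    return {name: total / (num_classes * c) for name, c in counts.items()}


total_9 = sum(counts_9.values())
total_3 = sum(counts_3.values())
# Ratio between the largest class (Standing) and the smallest (Grooming).
imbalance_ratio = max(counts_9.values()) / min(counts_9.values())
weights_9 = inverse_frequency_weights(counts_9)

print(total_9, total_3, round(imbalance_ratio, 2))
```

Both schemes sum to 7178 images, and the largest 9-category class is roughly 20 times the size of the smallest, which is the imbalance the second research question refers to.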

| Experimental Configuration | | Training Strategies | |
|---|---|---|---|
| Parameter | Value | Parameter | Value |
| GPU | RTX 4090 | Pretrained | True |
| CPU | Intel 6230R | Epochs | 200 |
| OS | Windows | Batch Size | 16 |
| PyTorch | 2.2.2 | Optimizer | AdamW |
| Software | Python 3.8 | Loss Function | Cross-Entropy |
| CUDA | 12.2 | Optimizer Alpha | 0.9 |
| Feature Extractor | EfficientNetB0 | Optimizer Beta | 0.999 |
| Encoder Layers | 3 | Extractor_lr | 2 × 10⁻⁴ |
| Decoder Layers | 3 | Encoder_lr | 6 × 10⁻⁵ |
| Num_heads | 8 | Decoder_lr | 6 × 10⁻⁵ |
| Embedding | 1280 | Learning Rate Annealing | Every 3 Cycles |
| Imgs_size | 224 × 224 | Annealing Rate | 0.8× |
| Word Threshold | ≥2 | Early Stop | 50 |
| Word_size | 1294 | Dropout | 0.1 |
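The training-strategy column can be read as a step-wise learning-rate schedule with early stopping. The sketch below encodes one such reading and rests on several assumptions: the "Extractor" entry is taken to be the feature-extractor learning rate, "Every 3 Cycles" is taken to mean every 3 epochs on a fixed interval (it could equally mean every 3 epochs without improvement), and "Early Stop 50" is taken as a patience of 50 epochs. All names are hypothetical.

```python
# Values copied from the configuration table; the interpretation is assumed.
BASE_LR = {"extractor": 2e-4, "encoder": 6e-5, "decoder": 6e-5}
ANNEAL_EVERY = 3          # assumed: epochs between decays
ANNEAL_RATE = 0.8         # multiplicative decay factor
MAX_EPOCHS = 200
EARLY_STOP_PATIENCE = 50  # assumed: epochs without improvement before stopping


def lr_at_epoch(base_lr, epoch):
    """Learning rate after step-wise decay, with epochs counted from 0."""
    return base_lr * ANNEAL_RATE ** (epoch // ANNEAL_EVERY)


def should_stop(epochs_since_improvement):
    """True once the validation metric has stalled for the full patience window."""
    return epochs_since_improvement >= EARLY_STOP_PATIENCE


for name, base in BASE_LR.items():
    # Print the rate at the start of training and after the first two decays.
    print(name, [lr_at_epoch(base, e) for e in (0, 3, 6)])
```

Under this reading the extractor rate drops from 2 × 10⁻⁴ to 1.6 × 10⁻⁴ after three epochs, while the encoder and decoder follow the same decay from their lower starting rate.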

| Method | 9-Class | | | | | 3-Class | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | B4 | RL | M | S | Sm | B4 | RL | M | S | Sm |
| ShowTell | 0.432 | 0.658 | 0.354 | 0.486 | 0.493 | 0.427 | 0.657 | 0.352 | 0.474 | 0.488 |
| Spatial | 0.415 | 0.644 | 0.345 | 0.466 | 0.478 | 0.410 | 0.641 | 0.343 | 0.463 | 0.475 |
| FC | 0.424 | 0.652 | 0.351 | 0.471 | 0.485 | 0.422 | 0.654 | 0.352 | 0.474 | 0.486 |
| Att2in | 0.420 | 0.644 | 0.347 | 0.460 | 0.477 | 0.404 | 0.628 | 0.338 | 0.445 | 0.464 |
| AdaAtt | 0.440 | 0.662 | 0.358 | 0.484 | 0.495 | 0.436 | 0.662 | 0.356 | 0.481 | 0.493 |
| Att2all | 0.405 | 0.628 | 0.338 | 0.449 | 0.465 | 0.408 | 0.631 | 0.340 | 0.453 | 0.468 |
| AoANet | 0.434 | 0.654 | 0.354 | 0.475 | 0.488 | 0.427 | 0.655 | 0.352 | 0.471 | 0.486 |
| Grid | 0.437 | 0.661 | 0.356 | 0.476 | 0.491 | 0.421 | 0.651 | 0.350 | 0.472 | 0.484 |
| M2 | 0.435 | 0.660 | 0.354 | 0.482 | 0.492 | 0.429 | 0.658 | 0.354 | 0.475 | 0.489 |
| PBC-Trans | 0.498 | 0.794 | 0.393 | 0.613 | 0.590 | 0.493 | 0.792 | 0.393 | 0.609 | 0.588 |
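For readers unfamiliar with the B4 column, the sketch below shows how a BLEU-4 score is built from clipped n-gram precisions and a brevity penalty. It is a simplified sentence-level version with uniform weights and no smoothing, written for illustration; it is not the evaluation code behind the table, and the example sentences are invented.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, references, max_n=4):
    """Sentence-level BLEU with uniform weights and no smoothing."""
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(candidate, n)
        if not cand_counts:
            return 0.0
        # Clip each candidate n-gram count by its maximum count in any reference.
        max_ref = Counter()
        for ref in references:
            for gram, count in ngrams(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        clipped = sum(min(c, max_ref[g]) for g, c in cand_counts.items())
        if clipped == 0:
            return 0.0
        log_precisions.append(math.log(clipped / sum(cand_counts.values())))
    # Brevity penalty uses the reference length closest to the candidate length.
    c = len(candidate)
    r = min((len(ref) for ref in references), key=lambda length: (abs(length - c), length))
    brevity = 1.0 if c > r else math.exp(1 - r / c)
    return brevity * math.exp(sum(log_precisions) / max_n)


reference = "a white duck is swimming in the water".split()
candidate = "a white duck is swimming in the pond".split()
score = bleu(candidate, [reference])
```

Because the score is a geometric mean over 1- to 4-gram precisions, a caption that shares no 4-gram with any reference scores zero in this unsmoothed form, which is why B4 is a demanding metric for short behavior descriptions.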

| Method | 9-Class | | | 3-Class | | |
|---|---|---|---|---|---|---|
| | Accuracy (%) | F1 (%) | Recall (%) | Accuracy (%) | F1 (%) | Recall (%) |
| VGGNet19 | 68.23 | 66.99 | 68.23 | 73.79 | 73.56 | 73.79 |
| GoogLeNet | 69.91 | 67.77 | 69.91 | 75.89 | 75.57 | 75.89 |
| InceptionV3 | 62.55 | 58.92 | 62.55 | 67.55 | 66.84 | 67.55 |
| AlexNet | 65.04 | 62.02 | 65.04 | 67.71 | 67.14 | 67.71 |
| DenseNet121 | 71.08 | 68.74 | 71.08 | 73.68 | 73.54 | 73.68 |
| DenseNet161 | 69.83 | 66.75 | 69.83 | 73.04 | 73.19 | 73.04 |
| ResNeXt50 | 68.86 | 66.57 | 68.86 | 73.71 | 73.03 | 73.71 |
| ResNeXt101 | 66.78 | 63.00 | 66.78 | 72.88 | 72.15 | 72.88 |
| MobileNetV2 | 67.00 | 65.38 | 67.00 | 73.54 | 73.27 | 73.54 |
| EfficientNetB0 | 68.86 | 67.67 | 68.86 | 74.38 | 73.98 | 74.38 |
| EfficientNetB2 | 69.83 | 68.28 | 69.83 | 75.50 | 75.28 | 75.50 |
| SwinB | 69.56 | 67.33 | 69.56 | 73.73 | 72.91 | 73.73 |
| Swin2B | 68.87 | 66.54 | 68.87 | 74.27 | 74.13 | 74.27 |
| PBC-Trans | 78.74 | 83.94 | 78.74 | 81.70 | 81.14 | 81.70 |
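In every row of this table the Recall column equals Accuracy. That pattern is what support-weighted averaging produces, since weighting each class's recall by its share of samples sums the correct predictions over all samples. The sketch below demonstrates the identity on a toy example; that the authors used weighted averaging is an inference from the numbers, not something the source states, and the labels are invented.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def weighted_recall_f1(y_true, y_pred):
    """Support-weighted recall and F1 over the classes present in y_true."""
    support = Counter(y_true)
    recall_sum = f1_sum = 0.0
    for cls, n in support.items():
        tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
        predicted = sum(p == cls for p in y_pred)
        recall = tp / n
        precision = tp / predicted if predicted else 0.0
        f1 = 2 * precision * recall / (precision + recall) if tp else 0.0
        # Weight each class by its number of true samples.
        recall_sum += n * recall
        f1_sum += n * f1
    total = len(y_true)
    return recall_sum / total, f1_sum / total


# Toy labels for three behavior classes (illustrative only).
y_true = [0, 0, 0, 0, 1, 1, 2, 2, 2, 2]
y_pred = [0, 0, 0, 1, 1, 2, 2, 2, 2, 0]
acc = accuracy(y_true, y_pred)
recall, f1 = weighted_recall_f1(y_true, y_pred)
```

Here `acc` and `recall` coincide, while `f1` differs because it also depends on per-class precision.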

| Structure | | | | | Caption Indicators | | | | | Classification Indicators | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CNN | Dec | CSA | N_m | Loss | B4 | RL | M | S | Sm | Acc (%) | F1 (%) | Recall (%) |
| A: Class–Prediction (grid patch 20 × 20 and 40 × 40) | | | | | | | | | | | | |
| × | × | × | × | × | - | - | - | - | - | 43.61 | 31.61 | 43.61 |
| × | × | × | × | × | - | - | - | - | - | 42.81 | 30.92 | 42.81 |
| B: Class and Description–Prediction (grid patch 20 × 20 and 40 × 40) | | | | | | | | | | | | |
| × | √ | × | × | × | 0.281 | 0.602 | 0.272 | 0.373 | 0.362 | 74.20 | 71.31 | 74.20 |
| × | √ | × | × | × | 0.334 | 0.659 | 0.301 | 0.435 | 0.413 | 72.89 | 70.28 | 72.89 |
| C: Observation–Class–Prediction | | | | | | | | | | | | |
| √ | × | × | × | × | - | - | - | - | - | 68.86 | 67.67 | 68.86 |
| D: Observation–Attention–Description–Prediction | | | | | | | | | | | | |
| √ | √ | × | × | × | 0.407 | 0.742 | 0.348 | 0.530 | 0.526 | 76.23 | 74.02 | 76.23 |
| √ | ① | × | × | × | 0.411 | 0.743 | 0.352 | 0.542 | 0.532 | 76.70 | 74.78 | 76.70 |
| √ | ② | × | × | × | 0.421 | 0.752 | 0.354 | 0.545 | 0.537 | 76.71 | 75.21 | 76.71 |
| √ | ③ | × | × | × | 0.432 | 0.759 | 0.358 | 0.557 | 0.545 | 76.72 | 74.94 | 76.72 |
| √ | ③ | √ | × | × | 0.442 | 0.767 | 0.366 | 0.564 | 0.553 | 76.78 | 75.16 | 76.78 |
| E: Observation–Attention–Description–Recollection–Prediction | | | | | | | | | | | | |
| √ | ③ | √ | 10 | × | 0.453 | 0.776 | 0.373 | 0.577 | 0.563 | 76.79 | 75.77 | 76.79 |
| √ | ③ | √ | 20 | × | 0.460 | 0.776 | 0.379 | 0.582 | 0.567 | 76.80 | 74.95 | 76.80 |
| √ | ③ | √ | 30 | × | 0.479 | 0.789 | 0.385 | 0.600 | 0.580 | 78.14 | 75.44 | 78.14 |
| √ | ③ | √ | 40 | × | 0.472 | 0.787 | 0.382 | 0.596 | 0.576 | 77.06 | 75.04 | 77.06 |
| √ | ③ | √ | 50 | × | 0.466 | 0.784 | 0.381 | 0.592 | 0.573 | 76.98 | 74.91 | 76.98 |
| √ | ③ | √ | 30 | √ | 0.498 | 0.794 | 0.393 | 0.613 | 0.590 | 78.74 | 83.94 | 78.74 |

| Structure | | | | | Caption Indicators | | | | | Classification Indicators | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CNN | Dec | CSA | N_m | Loss | B4 | RL | M | S | Sm | Acc (%) | F1 (%) | Recall (%) |
| A: Class–Prediction (grid patch 20 × 20 and 40 × 40) | | | | | | | | | | | | |
| × | × | × | × | × | - | - | - | - | - | 52.31 | 47.71 | 52.32 |
| × | × | × | × | × | - | - | - | - | - | 49.93 | 46.02 | 49.93 |
| B: Class and Description–Prediction (grid patch 20 × 20 and 40 × 40) | | | | | | | | | | | | |
| × | √ | × | × | × | 0.289 | 0.619 | 0.280 | 0.383 | 0.372 | 75.07 | 73.98 | 75.07 |
| × | √ | × | × | × | 0.355 | 0.682 | 0.311 | 0.457 | 0.432 | 74.38 | 74.42 | 74.38 |
| C: Observation–Class–Prediction | | | | | | | | | | | | |
| √ | × | × | × | × | - | - | - | - | - | 74.25 | 73.88 | 74.25 |
| D: Observation–Attention–Description–Prediction | | | | | | | | | | | | |
| √ | √ | × | × | × | 0.408 | 0.736 | 0.344 | 0.528 | 0.523 | 79.15 | 79.15 | 79.15 |
| √ | ① | × | × | × | 0.416 | 0.745 | 0.350 | 0.534 | 0.530 | 79.20 | 78.94 | 79.20 |
| √ | ② | × | × | × | 0.424 | 0.747 | 0.355 | 0.544 | 0.536 | 79.62 | 79.50 | 79.62 |
| √ | ③ | × | × | × | 0.430 | 0.750 | 0.357 | 0.551 | 0.540 | 79.64 | 79.28 | 79.63 |
| √ | ③ | √ | × | × | 0.440 | 0.759 | 0.362 | 0.556 | 0.547 | 79.70 | 79.46 | 79.70 |
| E: Observation–Attention–Description–Recollection–Prediction | | | | | | | | | | | | |
| √ | ③ | √ | 10 | × | 0.451 | 0.766 | 0.369 | 0.570 | 0.556 | 79.89 | 79.81 | 79.89 |
| √ | ③ | √ | 20 | × | 0.460 | 0.773 | 0.375 | 0.574 | 0.562 | 80.03 | 79.86 | 80.03 |
| √ | ③ | √ | 30 | × | 0.463 | 0.776 | 0.377 | 0.576 | 0.565 | 79.75 | 79.18 | 79.75 |
| √ | ③ | √ | 40 | × | 0.477 | 0.785 | 0.385 | 0.593 | 0.577 | 80.24 | 80.14 | 80.24 |
| √ | ③ | √ | 50 | × | 0.467 | 0.778 | 0.379 | 0.583 | 0.568 | 80.23 | 79.93 | 80.23 |
| √ | ③ | √ | 30 | √ | 0.493 | 0.792 | 0.393 | 0.609 | 0.588 | 81.70 | 81.14 | 81.70 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, J.; Yang, B.; Liu, J.; Amevor, F.K.; Guo, Y.; Zhou, Y.; Deng, Q.; Zhao, X. PBC-Transformer: Interpreting Poultry Behavior Classification Using Image Caption Generation Techniques. Animals 2025, 15, 1546. https://doi.org/10.3390/ani15111546
