Study Replication: Shape Discrimination in a Conditioning Procedure on the Jumping Spider Phidippus regius

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Subjects

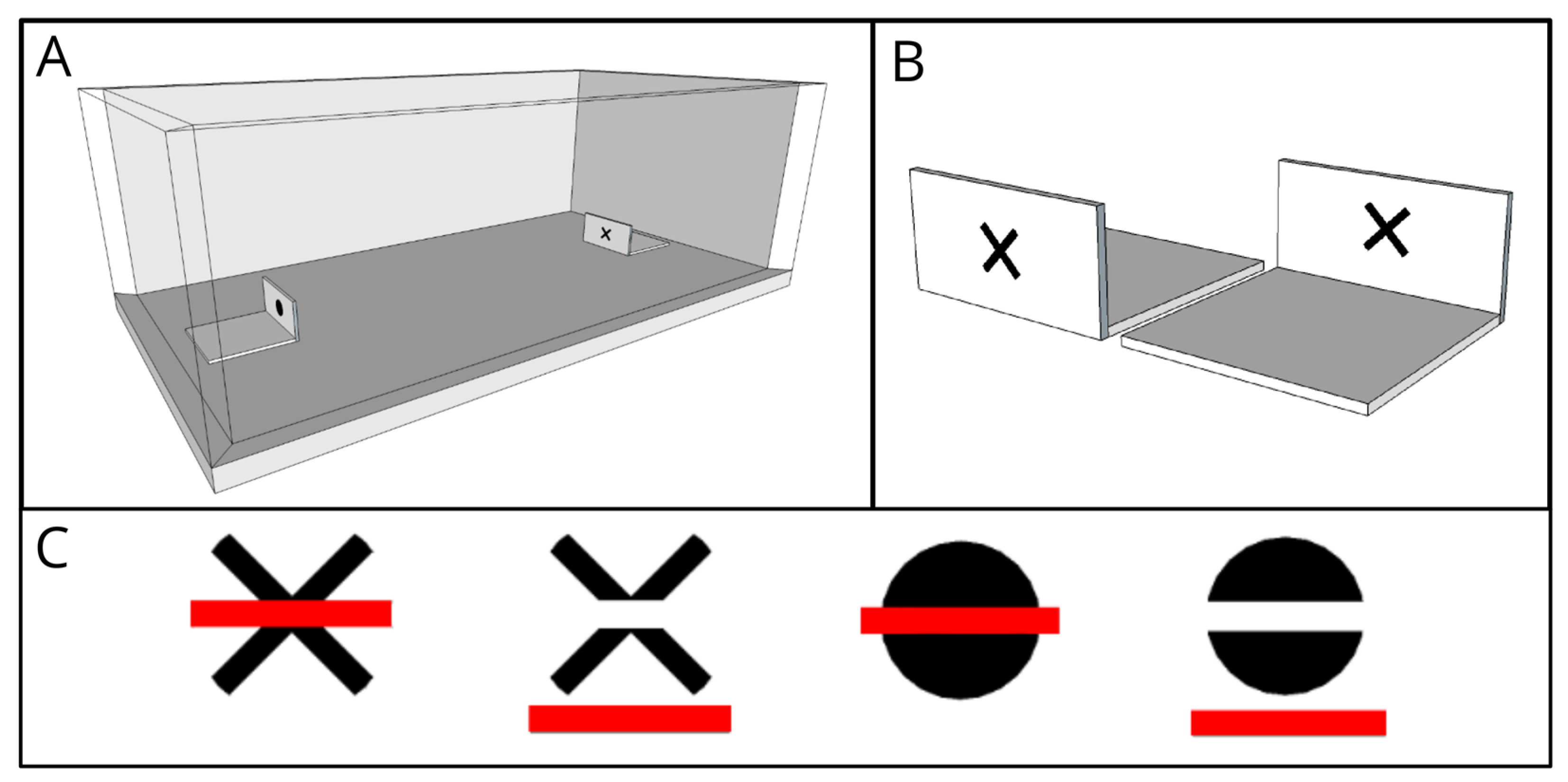

2.2. Apparatus

2.3. Design and Procedure

2.4. Visual Stimuli

2.5. Scoring and Data Analysis

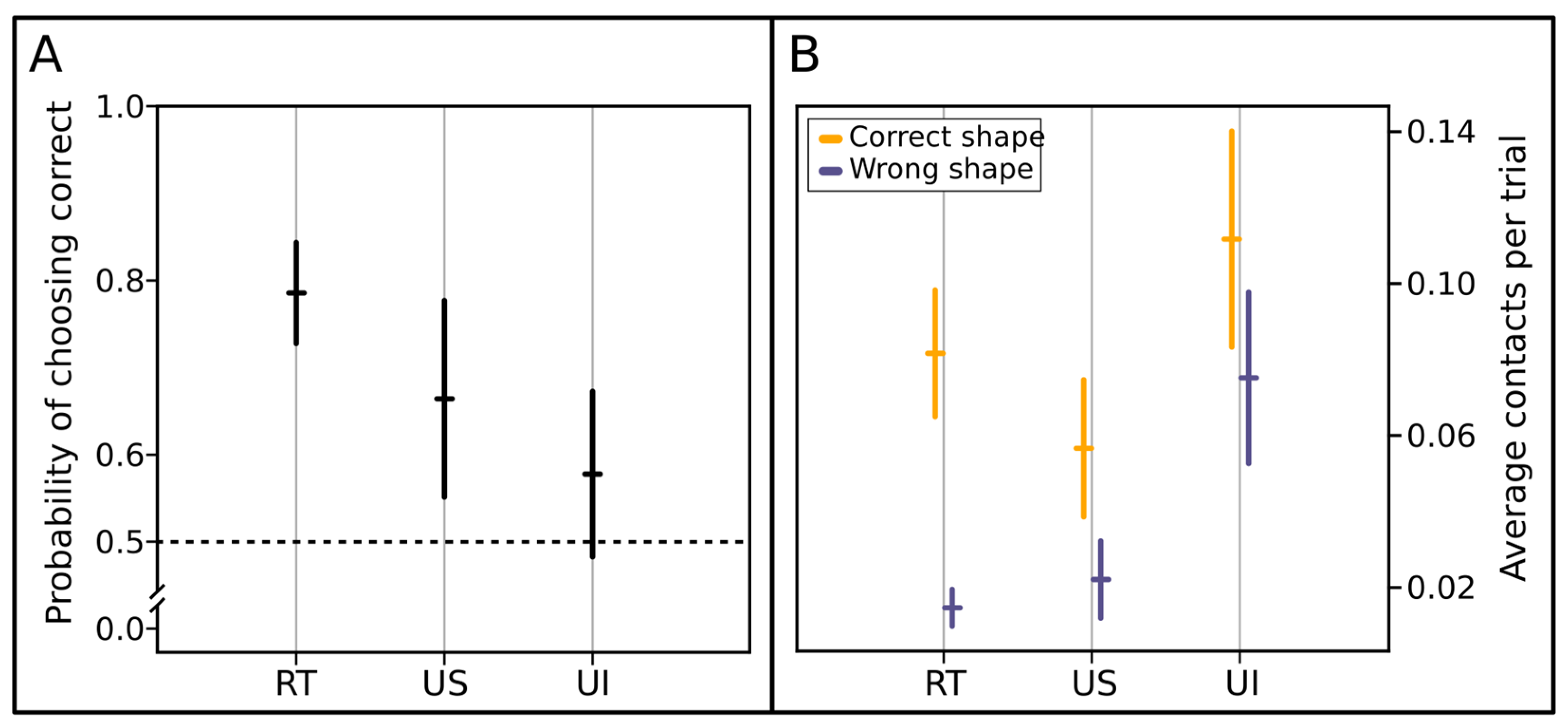

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lazareva, O.F.; Shimizu, T.; Wasserman, E.A. How Animals See the World: Comparative Behavior, Biology, and Evolution of Vision; Oxford University Press: Oxford, UK, 2012; ISBN 978-0-19-533465-4.

- Vezzani, S.; Marino, B.F.M.; Giora, E. An Early History of the Gestalt Factors of Organisation. Perception 2012, 41, 148–167.

- Wertheimer, M. Laws of Organization in Perceptual Forms. Psychol. Forsch. 1923, 4, 301–350.

- Kanizsa, G. Organization in Vision: Essays on Gestalt Perception; Praeger: New York, NY, USA, 1979; ISBN 978-0-275-90373-2.

- Michotte, A. The Perception of Causality; Basic Books: Oxford, UK, 1963; pp. xxii, 424.

- Deruelle, C.; Barbet, I.; Dépy, D.; Fagot, J. Perception of Partly Occluded Figures by Baboons (Papio papio). Perception 2000, 29, 1483–1497.

- Fujita, K.; Giersch, A. What Perceptual Rules Do Capuchin Monkeys (Cebus apella) Follow in Completing Partly Occluded Figures? J. Exp. Psychol. Anim. Behav. Process. 2005, 31, 387–398.

- O’Connell, S.; Dunbar, R.I.M. The Perception of Causality in Chimpanzees (Pan spp.). Anim. Cogn. 2005, 8, 60–66.

- Sato, A.; Kanazawa, S.; Fujita, K. Perception of Object Unity in a Chimpanzee (Pan troglodytes). Jpn. Psychol. Res. 1997, 39, 191–199.

- Regolin, L.; Vallortigara, G. Perception of Partly Occluded Objects by Young Chicks. Percept. Psychophys. 1995, 57, 971–976.

- Nagasaka, Y.; Wasserman, E.A. Amodal Completion of Moving Objects by Pigeons. Perception 2008, 37, 557–570.

- Kanizsa, G.; Renzi, P.; Conte, S.; Compostela, C.; Guerani, L. Amodal Completion in Mouse Vision. Perception 1993, 22, 713–721.

- Sovrano, V.A.; Vicidomini, S.; Potrich, D.; Petrazzini, M.E.M.; Baratti, G.; Rosa-Salva, O. Visual Discrimination and Amodal Completion in Zebrafish. PLoS ONE 2022, 17, e0264127.

- van Hateren, J.H.; Srinivasan, M.V.; Wait, P.B. Pattern Recognition in Bees: Orientation Discrimination. J. Comp. Physiol. A 1990, 167, 649–654.

- Sovrano, V.A.; Bisazza, A. Recognition of Partly Occluded Objects by Fish. Anim. Cogn. 2008, 11, 161–166.

- Lin, I.-R.; Chiao, C.-C. Visual Equivalence and Amodal Completion in Cuttlefish. Front. Physiol. 2017, 8, 40.

- Lehrer, M. Shape Perception in the Honeybee: Symmetry as a Global Framework. Int. J. Plant Sci. 1999, 160, S51–S65.

- Chittka, L.; Menzel, R. The Evolutionary Adaptation of Flower Colours and the Insect Pollinators’ Colour Vision. J. Comp. Physiol. A 1992, 171, 171–181.

- Ibarra, N.H.D.; Vorobyev, M.; Brandt, R.; Giurfa, M. Detection of Bright and Dim Colours by Honeybees. J. Exp. Biol. 2000, 203, 3289–3298.

- Giurfa, M.; Eichmann, B.; Menzel, R. Symmetry Perception in an Insect. Nature 1996, 382, 458–461.

- Sheehan, M.J.; Tibbetts, E.A. Specialized Face Learning Is Associated with Individual Recognition in Paper Wasps. Science 2011, 334, 1272–1275.

- Harland, D.P.; Jackson, R.R. Cues by Which Portia fimbriata, an Araneophagic Jumping Spider, Distinguishes Jumping-Spider Prey from Other Prey. J. Exp. Biol. 2000, 203, 3485–3494.

- Prete, F. Complex Worlds from Simpler Nervous Systems; MIT Press: Cambridge, MA, USA, 2004; pp. 1–436.

- De Agrò, M. SPiDbox: Design and Validation of an Open-Source “Skinner-Box” System for the Study of Jumping Spiders. J. Neurosci. Methods 2020, 346, 108925.

- De Agrò, M.; Rößler, D.C.; Kim, K.; Shamble, P.S. Perception of Biological Motion by Jumping Spiders. PLoS Biol. 2021, 19, e3001172.

- Ferrante, F.; Loconsole, M.; Giacomazzi, D.; De Agrò, M. Separate Attentional Processes in the Two Visual Systems of Jumping Spiders. bioRxiv 2023.

- Herberstein, M.E. Spider Behaviour: Flexibility and Versatility, 1st ed.; Cambridge University Press: Cambridge, UK; New York, NY, USA, 2011; ISBN 978-0-521-74927-5.

- Jackson, R.R.; Harland, D.P. One Small Leap for the Jumping Spider but a Giant Step for Vision Science. J. Exp. Biol. 2009, 212, 2720.

- Shamble, P.S.; Hoy, R.R.; Cohen, I.; Beatus, T. Walking like an Ant: A Quantitative and Experimental Approach to Understanding Locomotor Mimicry in the Jumping Spider Myrmarachne formicaria. Proc. R. Soc. B Biol. Sci. 2017, 284, 20170308.

- Lim, M.L.M.; Li, D. Behavioural Evidence of UV Sensitivity in Jumping Spiders (Araneae: Salticidae). J. Comp. Physiol. A Neuroethol. Sens. Neural Behav. Physiol. 2006, 192, 871–878.

- Nakamura, T.; Yamashita, S. Learning and Discrimination of Colored Papers in Jumping Spiders (Araneae, Salticidae). J. Comp. Physiol. A 2000, 186, 897–901.

- Zurek, D.B.; Cronin, T.W.; Taylor, L.A.; Byrne, K.; Sullivan, M.L.G.; Morehouse, N.I. Spectral Filtering Enables Trichromatic Vision in Colorful Jumping Spiders. Curr. Biol. 2015, 25, R403–R404.

- Land, M.F. Short Communication: Fields of View of the Eyes of Primitive Jumping Spiders. J. Exp. Biol. 1985, 119, 381–384.

- Land, M.F.; Nilsson, D.-E. Animal Eyes; OUP Oxford: Oxford, UK, 2012; ISBN 978-0-19-162536-7.

- Land, M.F. Orientation by Jumping Spiders in the Absence of Visual Feedback. J. Exp. Biol. 1971, 54, 119–139.

- Land, M.F. Mechanisms of Orientation and Pattern Recognition by Jumping Spiders (Salticidae). In Information Processing in the Visual Systems of Arthropods: Symposium Held at the Department of Zoology, University of Zurich, 6–9 March 1972; Wehner, R., Ed.; Springer: Berlin/Heidelberg, Germany, 1972; pp. 231–247. ISBN 978-3-642-65477-0.

- Land, M.F. Stepping Movements Made by Jumping Spiders During Turns Mediated by the Lateral Eyes. J. Exp. Biol. 1972, 57, 15–40.

- Zurek, D.B.; Nelson, X.J. Hyperacute Motion Detection by the Lateral Eyes of Jumping Spiders. Vis. Res. 2012, 66, 26–30.

- Zurek, D.B.; Taylor, A.J.; Evans, C.S.; Nelson, X.J. The Role of the Anterior Lateral Eyes in the Vision-Based Behaviour of Jumping Spiders. J. Exp. Biol. 2010, 213, 2372–2378.

- Dolev, Y.; Nelson, X.J. Innate Pattern Recognition and Categorization in a Jumping Spider. PLoS ONE 2014, 9, e97819.

- Dolev, Y.; Nelson, X.J. Biological Relevance Affects Object Recognition in Jumping Spiders. N. Z. J. Zool. 2016, 43, 42–53.

- Rößler, D.C.; De Agrò, M.; Kim, K.; Shamble, P.S. Static Visual Predator Recognition in Jumping Spiders. Funct. Ecol. 2022, 36, 561–571.

- De Agrò, M.; Regolin, L.; Moretto, E. Visual Discrimination Learning in the Jumping Spider Phidippus regius. Anim. Behav. Cogn. 2017, 4, 413–424.

- De Agrò, M. Brain Miniaturization and Its Implications for Cognition: Evidence from Salticidae and Hymenoptera. Ph.D. Thesis, University of Padova, Padova, Italy, 2019.

- Mathis, A.; Mamidanna, P.; Cury, K.M.; Abe, T.; Murthy, V.N.; Mathis, M.W.; Bethge, M. DeepLabCut: Markerless Pose Estimation of User-Defined Body Parts with Deep Learning. Nat. Neurosci. 2018, 21, 1281–1289.

- De Voe, R.D. Ultraviolet and Green Receptors in Principal Eyes of Jumping Spiders. J. Gen. Physiol. 1975, 66, 193–207.

- Powell, E.C.; Cook, C.; Coco, J.; Brock, M.; Holian, L.A.; Taylor, L.A. Prey Colour Biases in Jumping Spiders (Habronattus brunneus) Differ across Populations. Ethology 2019, 125, 351–361.

- Van Rossum, G.; Drake, F.L. Python 3 Reference Manual; CreateSpace: Scotts Valley, CA, USA, 2009; ISBN 1-4414-1269-7.

- Oliphant, T.E. A Guide to NumPy; Trelgol Publishing: Austin, TX, USA, 2006; Volume 1.

- van der Walt, S.; Colbert, S.C.; Varoquaux, G. The NumPy Array: A Structure for Efficient Numerical Computation. Comput. Sci. Eng. 2011, 13, 22–30.

- Virtanen, P.; Gommers, R.; Oliphant, T.E.; Haberland, M.; Reddy, T.; Cournapeau, D.; Burovski, E.; Peterson, P.; Weckesser, W.; Bright, J.; et al. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nat. Methods 2020, 17, 261–272.

- Reback, J.; McKinney, W.; Bossche, J.V.; Augspurger, T.; Cloud, P.; Klein, A.; Roeschke, M.; Tratner, J.; She, C.; Ayd, W.; et al. pandas-dev/pandas: Pandas 1.0.3. 2020. Available online: https://github.com/pandas-dev/pandas (accessed on 28 April 2023).

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2021.

- Brooks, M.E.; Kristensen, K.; van Benthem, K.J.; Magnusson, A.; Berg, C.W.; Nielsen, A.; Skaug, H.J.; Maechler, M.; Bolker, B.M. glmmTMB Balances Speed and Flexibility Among Packages for Zero-Inflated Generalized Linear Mixed Modeling. R J. 2017, 9, 378–400.

- Fox, J.; Weisberg, S. An R Companion to Applied Regression, 3rd ed.; Sage: Thousand Oaks, CA, USA, 2019.

- Hartig, F. DHARMa: Residual Diagnostics for HierArchical (Multi-Level/Mixed) Regression Models. R Package Version 0.1.3. Available online: https://CRAN.R-project.org/package=DHARMa (accessed on 18 December 2016).

- Lenth, R. emmeans: Estimated Marginal Means, aka Least-Squares Means. 2018. Available online: https://github.com/rvlenth/emmeans (accessed on 28 April 2023).

- Wickham, H. ggplot2: Elegant Graphics for Data Analysis; Springer: New York, NY, USA, 2016; ISBN 978-3-319-24277-4.

- Ushey, K.; Allaire, J.J.; Tang, Y. reticulate: Interface to “Python”. 2021. Available online: https://github.com/rstudio/reticulate (accessed on 28 April 2023).

- Waskom, M.; Botvinnik, O.; O’Kane, D.; Hobson, P.; Lukauskas, S.; Gemperline, D.C.; Augspurger, T.; Halchenko, Y.; Cole, J.B.; Warmenhoven, J.; et al. mwaskom/seaborn: v0.8.1 (September 2017). 2017. Available online: https://github.com/mwaskom/seaborn (accessed on 28 April 2023).

- Hunter, J.D. Matplotlib: A 2D Graphics Environment. Comput. Sci. Eng. 2007, 9, 90–95.

- Kienitz, M.; Czaczkes, T.; De Agrò, M. Bees Differentiate Sucrose Solution from Water at a Distance. bioRxiv 2022.

- Jakob, E.M.; Long, S.M. How (Not) to Train Your Spider: Successful and Unsuccessful Methods for Studying Learning. N. Z. J. Zool. 2016, 43, 112–126.

- Liedtke, J.; Schneider, J.M. Association and Reversal Learning Abilities in a Jumping Spider. Behav. Process. 2014, 103, 192–198.

- Long, S.M.; Leonard, A.; Carey, A.; Jakob, E.M. Vibration as an Effective Stimulus for Aversive Conditioning in Jumping Spiders. J. Arachnol. 2015, 43, 111–114.

- Moore, R.J.D.; Taylor, G.J.; Paulk, A.C.; Pearson, T.; van Swinderen, B.; Srinivasan, M.V. FicTrac: A Visual Method for Tracking Spherical Motion and Generating Fictive Animal Paths. J. Neurosci. Methods 2014, 225, 106–119.

- Peckmezian, T.; Taylor, P.W. A Virtual Reality Paradigm for the Study of Visually Mediated Behaviour and Cognition in Spiders. Anim. Behav. 2015, 107, 87–95.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

MDPI and ACS Style: Mannino, E.; Regolin, L.; Moretto, E.; De Agrò, M. Study Replication: Shape Discrimination in a Conditioning Procedure on the Jumping Spider Phidippus regius. Animals 2023, 13, 2326. https://doi.org/10.3390/ani13142326

AMA Style: Mannino E, Regolin L, Moretto E, De Agrò M. Study Replication: Shape Discrimination in a Conditioning Procedure on the Jumping Spider Phidippus regius. Animals. 2023; 13(14):2326. https://doi.org/10.3390/ani13142326

Chicago/Turabian Style: Mannino, Eleonora, Lucia Regolin, Enzo Moretto, and Massimo De Agrò. 2023. "Study Replication: Shape Discrimination in a Conditioning Procedure on the Jumping Spider Phidippus regius." Animals 13, no. 14: 2326. https://doi.org/10.3390/ani13142326

APA Style: Mannino, E., Regolin, L., Moretto, E., & De Agrò, M. (2023). Study Replication: Shape Discrimination in a Conditioning Procedure on the Jumping Spider Phidippus regius. Animals, 13(14), 2326. https://doi.org/10.3390/ani13142326