What’s in a Meow? A Study on Human Classification and Interpretation of Domestic Cat Vocalizations

Abstract

Simple Summary

1. Introduction

2. Materials and Methods

2.1. Participants

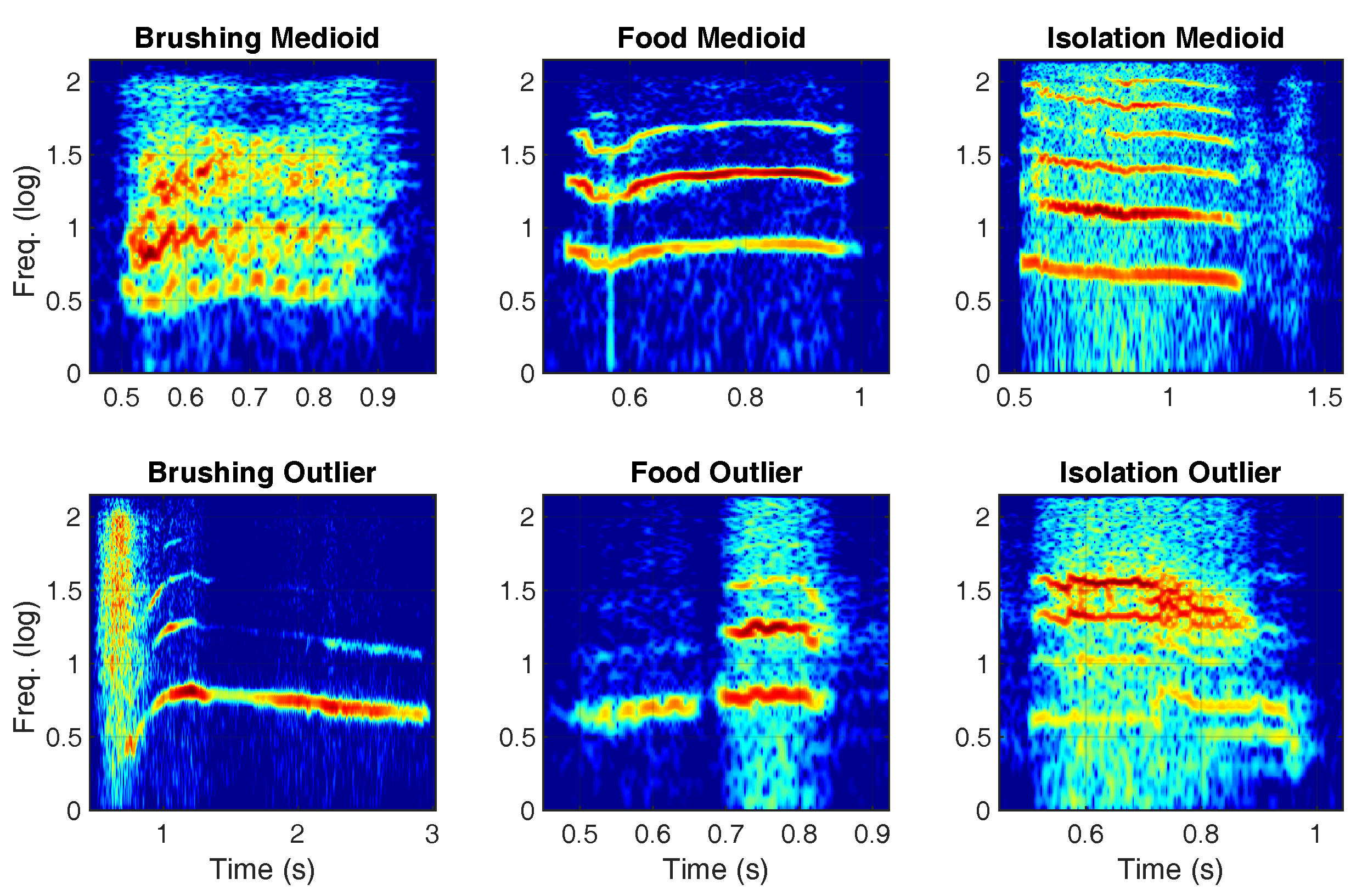

2.2. Cat Vocalizations

- Waiting for food (meows made prior to regular feeding)—The owner started the normal routine operations that precede food delivery in the home environment, but food was actually delivered with a delay of five minutes;

- Isolation (meows made during a period of isolation in an unfamiliar environment)—The cat was placed in its pet carrier and transported by its owner, adopting the same routine used to transport it for any other reason, to an unfamiliar environment (e.g., a room in a different apartment or an office, not far from their home environment). On arrival, the owner opened the pet carrier and the cat was free to roam in the room (if it wanted) for 30 min, in the presence of the owner, to recover from transportation stress. Then, the cat was left alone in the room for five minutes;

- Brushing (meows made while being brushed by the owner)—Cats were brushed by their owner in their home environment for a maximum of 5 min.

- F0 (the fundamental frequency) corresponds to the pitch (or note) of the vocalization. It was computed with the SWIPE’ method [71], ignoring values where the confidence reported by SWIPE’ is below a set threshold;

- Roughness (R) is defined in [72] as the sensation produced by rapid amplitude variations, which reduces pleasantness and is perceived as dissonance [73]. This sonic descriptor conveys information about unpleasantness. It was computed using the dedicated function of the MIRToolbox [74], adopting the strategy proposed in [75];

- Tristimulus (T1, T2, T3) is a set of features from color perception studies that has been adapted to the audio domain [76]. T1 is the ratio between the energy of the fundamental (F0) and the total energy, T2 is the ratio between the energy of the second to fourth harmonics and the total energy, and T3 is the ratio between the energy of all other harmonics and the total energy. Tristimulus was chosen to represent information regarding formants because, with a band-limited signal containing only a few harmonics, computing formants with linear prediction techniques proved unreliable.
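The tristimulus ratios described in the last item can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the function name `tristimulus`, the input list of per-harmonic energies, and the example values are all hypothetical, and "total energy" is taken here to mean the sum of the listed harmonic energies.

```python
from typing import Sequence, Tuple


def tristimulus(harmonic_energies: Sequence[float]) -> Tuple[float, float, float]:
    """Return (T1, T2, T3) from per-harmonic energies.

    harmonic_energies[0] is the energy at F0 (first harmonic),
    harmonic_energies[1] the second harmonic, and so on.
    Assumption: "total energy" is the sum over the listed harmonics.
    """
    total = sum(harmonic_energies)
    if total <= 0:
        raise ValueError("total harmonic energy must be positive")
    t1 = harmonic_energies[0] / total          # fundamental only
    t2 = sum(harmonic_energies[1:4]) / total   # harmonics 2 to 4
    t3 = sum(harmonic_energies[4:]) / total    # all remaining harmonics
    return t1, t2, t3


# Hypothetical energies for a meow with six measurable harmonics.
example_energies = [4.0, 2.0, 1.0, 1.0, 1.5, 0.5]
t1, t2, t3 = tristimulus(example_energies)
print(f"T1={t1:.2f}  T2={t2:.2f}  T3={t3:.2f}")
```

Under this definition the three values always sum to one, so a signal with few harmonics concentrates its weight in T1 and T2.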

2.3. Questionnaire

2.4. Procedure

2.5. Ethical Statement

3. Statistical Analysis

4. Results

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

- Waiblinger, S.; Boivin, X.; Pedersen, V.; Tosi, M.V.; Janczak, A.M.; Visser, E.K.; Jones, R.B. Assessing the human–animal relationship in farmed species: A critical review. Appl. Anim. Behav. Sci. 2006, 101, 185–242.

- Turner, D.C. A review of over three decades of research on cat-human and human-cat interactions and relationships. Behav. Process. 2017, 141, 297–304.

- Miklósi, Á.; Topál, J. What does it take to become ‘best friends’? Evolutionary changes in canine social competence. Trends Cogn. Sci. 2013, 17, 287–294.

- Herzog, H. Biology, culture, and the origins of pet-keeping. Anim. Behav. Cogn. 2014, 1.

- Beetz, A.; Uvnäs-Moberg, K.; Julius, H.; Kotrschal, K. Psychosocial and psychophysiological effects of human-animal interactions: The possible role of oxytocin. Front. Psychol. 2012, 3, 234.

- Prato Previde, E.; Valsecchi, P. The immaterial cord: The dog–human attachment bond. In The Social Dog; Elsevier: Amsterdam, The Netherlands, 2014; pp. 165–189.

- Hare, B.; Brown, M.; Williamson, C.; Tomasello, M. The domestication of social cognition in dogs. Science 2002, 298, 1634–1636.

- Udell, M.A.; Dorey, N.R.; Wynne, C.D. What did domestication do to dogs? A new account of dogs’ sensitivity to human actions. Biol. Rev. 2010, 85, 327–345.

- Albiach-Serrano, A.; Bräuer, J.; Cacchione, T.; Zickert, N.; Amici, F. The effect of domestication and ontogeny in swine cognition (Sus scrofa scrofa and S. s. domestica). Appl. Anim. Behav. Sci. 2012, 141, 25–35.

- Miklósi, Á.; Pongrácz, P.; Lakatos, G.; Topál, J.; Csányi, V. A comparative study of the use of visual communicative signals in interactions between dogs (Canis familiaris) and humans and cats (Felis catus) and humans. J. Comp. Psychol. 2005, 119, 179–186.

- Ito, Y.; Watanabe, A.; Takagi, S.; Arahori, M.; Saito, A. Cats beg for food from the human who looks at and calls to them: Ability to understand humans’ attentional states. Psychologia 2016, 59, 112–120.

- Maros, K.; Gácsi, M.; Miklósi, Á. Comprehension of human pointing gestures in horses (Equus caballus). Anim. Cogn. 2008, 11, 457–466.

- Proops, L.; McComb, K. Attributing attention: The use of human-given cues by domestic horses (Equus caballus). Anim. Cogn. 2010, 13, 197–205.

- Kaminski, J.; Riedel, J.; Call, J.; Tomasello, M. Domestic goats, Capra hircus, follow gaze direction and use social cues in an object choice task. Anim. Behav. 2005, 69, 11–18.

- Nawroth, C.; Ebersbach, M.; von Borell, E. Juvenile domestic pigs (Sus scrofa domestica) use human-given cues in an object choice task. Anim. Cogn. 2014, 17, 701–713.

- Gerencsér, L.; Fraga, P.P.; Lovas, M.; Újváry, D.; Andics, A. Comparing interspecific socio-communicative skills of socialized juvenile dogs and miniature pigs. Anim. Cogn. 2019, 22, 917–929.

- Hernádi, A.; Kis, A.; Turcsán, B.; Topál, J. Man’s underground best friend: Domestic ferrets, unlike the wild forms, show evidence of dog-like social-cognitive skills. PLoS ONE 2012, 7, e43267.

- Malavasi, R.; Huber, L. Evidence of heterospecific referential communication from domestic horses (Equus caballus) to humans. Anim. Cogn. 2016, 19, 899–909.

- Takimoto, A.; Hori, Y.; Fujita, K. Horses (Equus caballus) adaptively change the modality of their begging behavior as a function of human attentional states. Psychologia 2016, 59, 100–111.

- Merola, I.; Lazzaroni, M.; Marshall-Pescini, S.; Prato-Previde, E. Social referencing and cat–human communication. Anim. Cogn. 2015, 18, 639–648.

- Galvan, M.; Vonk, J. Man’s other best friend: Domestic cats (F. silvestris catus) and their discrimination of human emotion cues. Anim. Cogn. 2016, 19, 193–205.

- Quaranta, A.; D’Ingeo, S.; Amoruso, R.; Siniscalchi, M. Emotion Recognition in Cats. Animals 2020, 10, 1107.

- Smith, A.V.; Proops, L.; Grounds, K.; Wathan, J.; McComb, K. Functionally relevant responses to human facial expressions of emotion in the domestic horse (Equus caballus). Biol. Lett. 2016, 12, 20150907.

- Nakamura, K.; Takimoto-Inose, A.; Hasegawa, T. Cross-modal perception of human emotion in domestic horses (Equus caballus). Sci. Rep. 2018, 8, 8660.

- Nawroth, C.; Albuquerque, N.; Savalli, C.; Single, M.S.; McElligott, A.G. Goats prefer positive human emotional facial expressions. R. Soc. Open Sci. 2018, 5, 180491.

- Vigne, J.D.; Guilaine, J.; Debue, K.; Haye, L.; Gérard, P. Early taming of the cat in Cyprus. Science 2004, 304, 259.

- Driscoll, C.A.; Clutton-Brock, J.; Kitchener, A.C.; O’Brien, S.J. The Taming of the cat. Genetic and archaeological findings hint that wildcats became housecats earlier–and in a different place–than previously thought. Sci. Am. 2009, 300, 68–75.

- Karsh, E.B.; Turner, D.C. The human-cat relationship. In The Domestic Cat: The Biology of Its Behaviour; Cambridge University Press: Cambridge, UK, 1988; pp. 159–177.

- Mertens, C. Human-Cat Interactions in the Home Setting. Anthrozoös 1991, 4, 214–231.

- Turner, D.C. The ethology of the human-cat relationship. Schweiz. Arch. Tierheilkd. 1991, 133, 63.

- Bradshaw, J.W. Sociality in cats: A comparative review. J. Vet. Behav. 2016, 11, 113–124.

- Macdonald, D.W.; Yamaguchi, N.; Kerby, G. Group-living in the domestic cat: Its sociobiology and epidemiology. In The Domestic Cat: The Biology of Its Behaviour; Cambridge University Press: Cambridge, UK, 2000; Volume 2, pp. 95–118.

- Curtis, T.M.; Knowles, R.J.; Crowell-Davis, S.L. Influence of familiarity and relatedness on proximity and allogrooming in domestic cats (Felis catus). Am. J. Vet. Res. 2003, 64, 1151–1154.

- Bradshaw, J.; Cameron-Beaumont, C. The signalling repertoire of the domestic cat and its undomesticated relatives. In The Domestic Cat: The Biology of Its Behaviour; Cambridge University Press: Cambridge, UK, 2000; pp. 67–93.

- Bradshaw, J.W.; Casey, R.A.; Brown, S.L. The Behaviour of the Domestic Cat; CABI: Wallingford, UK, 2012.

- Feuerstein, N.; Terkel, J. Interrelationships of dogs (Canis familiaris) and cats (Felis catus L.) living under the same roof. Appl. Anim. Behav. Sci. 2008, 113, 150–165.

- Vitale Shreve, K.R.; Udell, M.A. What’s inside your cat’s head? A review of cat (Felis silvestris catus) cognition research past, present and future. Anim. Cogn. 2015, 18, 1195–1206.

- Saito, A.; Shinozuka, K.; Ito, Y.; Hasegawa, T. Domestic cats (Felis catus) discriminate their names from other words. Sci. Rep. 2019, 9, 5394.

- Nicastro, N. Perceptual and Acoustic Evidence for Species-Level Differences in Meow Vocalizations by Domestic Cats (Felis catus) and African Wild Cats (Felis silvestris lybica). J. Comp. Psychol. 2004, 118, 287–296.

- Yeon, S.C.; Kim, Y.K.; Park, S.J.; Lee, S.S.; Lee, S.Y.; Suh, E.H.; Houpt, K.A.; Chang, H.H.; Lee, H.C.; Yang, B.G.; et al. Differences between vocalization evoked by social stimuli in feral cats and house cats. Behav. Process. 2011, 87, 183–189.

- Schötz, S.; van de Weijer, J. A Study of Human Perception of Intonation in Domestic Cat Meows. In Proceedings of the 7th International Conference on Speech Prosody, Dublin, Ireland, 20–23 May 2014; Campbell, N., Gibbon, D., Hirst, D., Eds.; International Speech Communication Association: Dublin, Ireland, 2014; pp. 874–878.

- Tavernier, C.; Ahmed, S.; Houpt, K.A.; Yeon, S.C. Feline vocal communication. J. Vet. Sci. 2020, 21.

- Fermo, J.L.; Schnaider, M.A.; Silva, A.H.P.; Molento, C.F.M. Only when it feels good: Specific cat vocalizations other than meowing. Animals 2019, 9, 878.

- Nicastro, N.; Owren, M.J. Classification of domestic cat (Felis catus) vocalizations by naive and experienced human listeners. J. Comp. Psychol. 2003, 117, 44.

- McComb, K.; Taylor, A.M.; Wilson, C.; Charlton, B.D. The cry embedded within the purr. Curr. Biol. 2009, 19, R507–R508.

- Ellis, S.L.; Swindell, V.; Burman, O.H. Human classification of context-related vocalizations emitted by familiar and unfamiliar domestic cats: An exploratory study. Anthrozoös 2015, 28, 625–634.

- Schötz, S.; Van De Weijer, J.; Eklund, R. Melody matters: An acoustic study of domestic cat meows in six contexts and four mental states. PeerJ Prepr. 2019, 7, e27926v1.

- Cameron-Beaumont, C.L. Visual and Tactile Communication in the Domestic Cat (Felis silvestris catus) and Undomesticated Small Felids. Ph.D. Thesis, University of Southampton, Southampton, UK, 1999.

- Mertens, C.; Turner, D.C. Experimental analysis of human-cat interactions during first encounters. Anthrozoös 1988, 2, 83–97.

- Atkinson, T. Practical Feline Behaviour: Understanding Cat Behaviour and Improving Welfare; CABI: Wallingford, UK, 2018.

- Scheumann, M.; Hasting, A.S.; Kotz, S.A.; Zimmermann, E. The voice of emotion across species: How do human listeners recognize animals’ affective states? PLoS ONE 2014, 9, e91192.

- Tallet, C.; Špinka, M.; Maruščáková, I.; Šimeček, P. Human perception of vocalizations of domestic piglets and modulation by experience with domestic pigs (Sus scrofa). J. Comp. Psychol. 2010, 124, 81.

- Pongrácz, P.; Molnár, C.; Miklósi, A.; Csányi, V. Human listeners are able to classify dog (Canis familiaris) barks recorded in different situations. J. Comp. Psychol. 2005, 119, 136.

- Belin, P.; Fecteau, S.; Charest, I.; Nicastro, N.; Hauser, M.D.; Armony, J.L. Human cerebral response to animal affective vocalizations. Proc. R. Soc. B Biol. Sci. 2008, 275, 473–481.

- Cannas, S.; Mattiello, S.; Battini, M.; Ingraffia, S.; Cadoni, D.; Palestrini, C. Evaluation of cat’s behavior during three different daily management situations. J. Vet. Behav. 2020, 37, 93–100.

- Zahn-Waxler, C.; Radke-Yarrow, M. The origins of empathic concern. Motiv. Emot. 1990, 14, 107–130.

- Preston, S.D.; De Waal, F.B. Empathy: Its ultimate and proximate bases. Behav. Brain Sci. 2002, 25, 1–20.

- Smith, A. Cognitive empathy and emotional empathy in human behavior and evolution. Psychol. Rec. 2006, 56, 3–21.

- Vellante, M.; Baron-Cohen, S.; Melis, M.; Marrone, M.; Petretto, D.R.; Masala, C.; Preti, A. The “Reading the Mind in the Eyes” test: Systematic review of psychometric properties and a validation study in Italy. Cogn. Neuropsychiatry 2013, 18, 326–354.

- Zaki, J. Empathy: A motivated account. Psychol. Bull. 2014, 140, 1608.

- Israelashvili, J.; Sauter, D.; Fischer, A. Two facets of affective empathy: Concern and distress have opposite relationships to emotion recognition. In Cognition and Emotion; Taylor & Francis: Abingdon, UK, 2020; pp. 1–11.

- Christov-Moore, L.; Simpson, E.A.; Coudé, G.; Grigaityte, K.; Iacoboni, M.; Ferrari, P.F. Empathy: Gender effects in brain and behavior. Neurosci. Biobehav. Rev. 2014, 46, 604–627.

- Hall, J.A. Gender effects in decoding nonverbal cues. Psychol. Bull. 1978, 85, 845.

- Hall, J.A.; Matsumoto, D. Gender differences in judgments of multiple emotions from facial expressions. Emotion 2004, 4, 201.

- McClure, E.B. A meta-analytic review of sex differences in facial expression processing and their development in infants, children, and adolescents. Psychol. Bull. 2000, 126, 424.

- Ellingsen, K.; Zanella, A.J.; Bjerkås, E.; Indrebø, A. The relationship between empathy, perception of pain and attitudes toward pets among Norwegian dog owners. Anthrozoös 2010, 23, 231–243.

- Norring, M.; Wikman, I.; Hokkanen, A.H.; Kujala, M.V.; Hänninen, L. Empathic veterinarians score cattle pain higher. Vet. J. 2014, 200, 186–190.

- Ludovico, L.A.; Ntalampiras, S.; Presti, G.; Cannas, S.; Battini, M.; Mattiello, S. CatMeows: A Publicly-Available Dataset of Cat Vocalizations. Zenodo 2020.

- Ludovico, L.A.; Ntalampiras, S.; Presti, G.; Cannas, S.; Battini, M.; Mattiello, S. CatMeows: A Publicly-Available Dataset of Cat Vocalizations. In Proceedings of the 27th International Conference on Multimedia Modeling, Prague, Czech Republic, 25–27 January 2021.

- Alías, F.; Socoró, J.C.; Sevillano, X. A review of physical and perceptual feature extraction techniques for speech, music and environmental sounds. Appl. Sci. 2016, 6, 143.

- Camacho, A.; Harris, J.G. A sawtooth waveform inspired pitch estimator for speech and music. J. Acoust. Soc. Am. 2008, 124, 1638–1652.

- Daniel, P.; Weber, R. Psychoacoustical roughness: Implementation of an optimized model. Acta Acust. United Acust. 1997, 83, 113–123.

- Plomp, R.; Levelt, W.J.M. Tonal consonance and critical bandwidth. J. Acoust. Soc. Am. 1965, 38, 548–560.

- Lartillot, O.; Toiviainen, P. A Matlab toolbox for musical feature extraction from audio. In Proceedings of the International Conference on Digital Audio Effects, Bordeaux, France, 10–14 September 2007; pp. 237–244.

- Vassilakis, P. Auditory roughness estimation of complex spectra—Roughness degrees and dissonance ratings of harmonic intervals revisited. J. Acoust. Soc. Am. 2001, 110, 2755.

- Pollard, H.F.; Jansson, E.V. A tristimulus method for the specification of musical timbre. Acta Acust. United Acust. 1982, 51, 162–171.

- Paul, E.S. Empathy with animals and with humans: Are they linked? Anthrozoös 2000, 13, 194–202.

- Colombo, E.; Pelosi, A.; Prato-Previde, E. Empathy towards animals and belief in animal-human-continuity in Italian veterinary students. Anim. Welf. 2016, 25, 275–286.

- Colombo, E.S.; Crippa, F.; Calderari, T.; Prato-Previde, E. Empathy toward animals and people: The role of gender and length of service in a sample of Italian veterinarians. J. Vet. Behav. 2017, 17, 32–37.

- Stubsjøen, S.M.; Moe, R.O.; Bruland, K.; Lien, T.; Muri, K. Reliability of observer ratings: Qualitative behaviour assessments of shelter dogs using a fixed list of descriptors. Vet. Anim. Sci. 2020, 10, 100145.

- Lambert, W.E.; Hodgson, R.C.; Gardner, R.C.; Fillenbaum, S. Evaluational reactions to spoken languages. J. Abnorm. Soc. Psychol. 1960, 60, 44.

- Campbell-Kibler, K. Sociolinguistics and perception. Lang. Linguist. Compass 2010, 4, 377–389.

- Ntalampiras, S.; Ludovico, L.A.; Presti, G.; Prato Previde, E.; Battini, M.; Cannas, S.; Palestrini, C.; Mattiello, S. Automatic Classification of Cat Vocalizations Emitted in Different Contexts. Animals 2019, 9, 543.

- Dawson, L.; Cheal, J.; Niel, L.; Mason, G. Humans can identify cats’ affective states from subtle facial expressions. Anim. Welf. 2019, 28, 519–531.

- Schirmer, A.; Seow, C.S.; Penney, T.B. Humans process dog and human facial affect in similar ways. PLoS ONE 2013, 8, e74591.

- Pongrácz, P.; Molnár, C.; Miklósi, Á. Acoustic parameters of dog barks carry emotional information for humans. Appl. Anim. Behav. Sci. 2006, 100, 228–240.

- Pongrácz, P.; Molnár, C.; Dóka, A.; Miklósi, Á. Do children understand man’s best friend? Classification of dog barks by pre-adolescents and adults. Appl. Anim. Behav. Sci. 2011, 135, 95–102.

- Yin, S. A new perspective on barking in dogs (Canis familiaris). J. Comp. Psychol. 2002, 116, 189.

- Lawrence, E.J.; Shaw, P.; Baker, D.; Baron-Cohen, S.; David, A.S. Measuring empathy: Reliability and validity of the Empathy Quotient. Psychol. Med. 2004, 34, 911.

- Proverbio, A.M.; Matarazzo, S.; Brignone, V.; Del Zotto, M.; Zani, A. Processing valence and intensity of infant expressions: The roles of expertise and gender. Scand. J. Psychol. 2007, 48, 477–485.

- Thayer, J.; Johnsen, B.H. Sex differences in judgement of facial affect: A multivariate analysis of recognition errors. Scand. J. Psychol. 2000, 41, 243–246.

- Mills, D. What are stress and distress and what emotions are involved? Feline Stress and Health—Managing negative emotions to improve feline health and wellbeing. In The ISFM Guide to Feline Stress and Health; International Cat Care: Tisbury, UK, 2016.

| Context | Medoid assignment: Correct | Medoid assignment: Incorrect | Outlier assignment: Correct | Outlier assignment: Incorrect | Significance |
|---|---|---|---|---|---|
| Waiting for food | 91 (40.44%) | 134 (59.56%) | 61 (27.11%) | 164 (72.89%) | p < 0.01 |
| Isolation | 60 (26.67%) | 165 (73.33%) | 32 (14.22%) | 193 (85.78%) | p < 0.001 |
| Brushing | 74 (32.89%) | 151 (67.11%) | 30 (13.33%) | 195 (86.67%) | p < 0.001 |
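The percentages in the table above (correct and incorrect assignments for medoid and outlier meows) follow directly from the counts, with 225 answers per cell pair. The sketch below recomputes them and, purely as an illustration, runs a Pearson chi-square test on each 2×2 block. The test the authors actually used is not stated in this excerpt, so the chi-square choice is an assumption; it treats the medoid and outlier answers as independent samples, and its p-values need not coincide with the significance levels reported in the table. The helper name `chi_square_2x2` is hypothetical.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple:
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns (statistic, p_value). With one degree of freedom the
    survival function reduces to erfc(sqrt(statistic / 2)).
    """
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value


# Counts copied from the table: (medoid correct, medoid incorrect,
#                                outlier correct, outlier incorrect)
counts = {
    "Waiting for food": (91, 134, 61, 164),
    "Isolation": (60, 165, 32, 193),
    "Brushing": (74, 151, 30, 195),
}

results = {}
for context, (mc, mi, oc, oi) in counts.items():
    medoid_pct = round(100 * mc / (mc + mi), 2)   # share of correct medoid answers
    outlier_pct = round(100 * oc / (oc + oi), 2)  # share of correct outlier answers
    stat, p = chi_square_2x2(mc, mi, oc, oi)
    results[context] = (medoid_pct, outlier_pct, stat, p)
    print(f"{context}: medoid {medoid_pct}%, outlier {outlier_pct}%, "
          f"chi2={stat:.2f}, p={p:.4f}")
```

Because the same participants judged both kinds of meow, a paired test may be the more natural choice; the independent-samples version is shown only because it needs nothing beyond the counts printed in the table.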

| Group | Waiting for food: Correct | Waiting for food: Incorrect | Waiting for food: Sign. | Isolation: Correct | Isolation: Incorrect | Isolation: Sign. | Brushing: Correct | Brushing: Incorrect | Brushing: Sign. |
|---|---|---|---|---|---|---|---|---|---|
| Gender | | | | | | | | | |
| Male | 27 (34.2%) | 52 (65.8%) | n.s. | 14 (17.7%) | 65 (82.3%) | | 19 (24.1%) | 60 (75.9%) | |
| Female | 64 (43.8%) | 82 (56.2%) | | 46 (31.5%) | 100 (68.5%) | | 55 (37.7%) | 91 (62.3%) | |
| Parental status | | | | | | | | | |
| Parent | 24 (33.3%) | 48 (66.7%) | n.s. | 14 (19.4%) | 58 (80.6%) | n.s. | 18 (25.0%) | 54 (75.0%) | n.s. |
| Nonparent | 67 (43.8%) | 86 (56.2%) | | 46 (30.1%) | 107 (69.9%) | | 56 (36.6%) | 97 (63.4%) | |
| Cat owner | | | | | | | | | |
| Yes | 48 (44.4%) | 60 (55.6%) | n.s. | 38 (35.2%) | 70 (64.8%) | | 48 (44.4%) | 60 (55.6%) | |
| No | 43 (36.8%) | 74 (63.2%) | | 22 (18.8%) | 95 (81.2%) | | 26 (22.2%) | 91 (77.8%) | |
| Grown up with cats | | | | | | | | | |
| Yes | 51 (41.8%) | 71 (58.2%) | n.s. | 38 (31.1%) | 84 (68.9%) | n.s. | 44 (36.1%) | 78 (63.9%) | n.s. |
| No | 40 (38.8%) | 63 (61.2%) | | 22 (21.4%) | 81 (78.6%) | | 30 (29.1%) | 73 (70.9%) | |

| Group | AES: Mean ± s.d. | AES: Sign. | CES: Mean ± s.d. | CES: Sign. |
|---|---|---|---|---|
| Gender | | | | |
| Male | 144.28 ± 17.95 | p < 0.001 | 15.92 ± 6.59 | p < 0.05 |
| Female | 157.82 ± 18.02 | | 17.97 ± 5.68 | |
| Parental status | | | | |
| Parent | 153.58 ± 21.08 | n.s. | 18.32 ± 5.64 | n.s. |
| Nonparent | 152.82 ± 18.14 | | 16.75 ± 6.23 | |
| Cat owner | | | | |
| Yes | 157.11 ± 17.58 | p < 0.01 | 20.06 ± 4.53 | p < 0.001 |
| No | 149.32 ± 19.73 | | 14.65 ± 6.18 | |
| Grown up with cats | | | | |
| Yes | 157.75 ± 17.20 | p < 0.001 | 19.03 ± 5.08 | p < 0.001 |
| No | 147.50 ± 19.79 | | 15.14 ± 6.50 | |
| Overall | 153.06 ± 19.09 | | 17.25 ± 6.08 | |

| Context | AES, correct assignment | AES, incorrect assignment | AES: Sign. | CES, correct assignment | CES, incorrect assignment | CES: Sign. |
|---|---|---|---|---|---|---|
| Waiting for food | 154.02 ± 18.32 | 152.41 ± 19.63 | n.s. | 17.90 ± 5.55 | 16.81 ± 6.40 | n.s. |
| Isolation | 154.92 ± 16.48 | 152.39 ± 19.95 | n.s. | 18.75 ± 5.38 | 16.70 ± 6.24 | p < 0.05 |
| Brushing | 154.01 ± 18.92 | 152.60 ± 19.21 | n.s. | 18.31 ± 5.65 | 16.73 ± 6.23 | n.s. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Prato-Previde, E.; Cannas, S.; Palestrini, C.; Ingraffia, S.; Battini, M.; Ludovico, L.A.; Ntalampiras, S.; Presti, G.; Mattiello, S. What’s in a Meow? A Study on Human Classification and Interpretation of Domestic Cat Vocalizations. Animals 2020, 10, 2390. https://doi.org/10.3390/ani10122390
