Autonomous Robotic Platform for Precision Viticulture: Integrated Mobility, Multimodal Sensing, and AI-Based Leaf Sampling

Abstract

1. Introduction

- Design and implementation of a multimodal perception system enabling high-throughput phenotyping in vineyard environments.

- Application of AI-based visual servoing for real-time leaf localisation during robotic manipulation.

- Adaptation of an industrial collaborative robot to compliant manipulation tasks in agricultural settings.

- Design and validation of a dual biological sampling workflow for georeferenced field data acquisition.

2. Related Works

3. Operating Environment and Goals

3.1. Vineyard Environment Characteristics

- Row architecture: Inter-row spacing typically ranges from 2.0 to 3.5 m, with plant spacing within rows of 0.8 to 1.5 m. These dimensions constrain robot size and manoeuvrability requirements. Accordingly, the system employs a compact tracked chassis designed to fit comfortably within inter-row spacing while maintaining stability. The UR10e collaborative arm’s 1.3 m reach ensures access to leaves distributed across the 0.8–1.5 m intra-row spacing, while its 0.05 mm repeatability guarantees consistent sampling protocols despite varying plant geometries.

- Terrain morphology: Sicilian vineyards often occupy hillside locations with slopes reaching 15–25%, featuring irregular surfaces, exposed rocks, and variable soil compaction. These conditions demand robust locomotion systems with high ground clearance and traction. As detailed in the subsequent analysis, the tracked platform with dual 1.5 kW brushless motors and field-oriented control enables stable navigation on inclined terrain while minimising soil compaction. GPS/IMU fusion (Pixhawk 4 autopilot with magnetometer integration) provides decimetre-level positioning accuracy necessary for systematic coverage across variable topography.

- Canopy structure: Training systems (primarily Guyot and cordon) create complex three-dimensional canopy architectures where target leaves may be occluded, requiring sophisticated vision algorithms and flexible robotic manipulation. The system directly addresses this complexity through multimodal perception: the RGB-D camera (Intel RealSense D435, 0.3–3.0 m working range at 30 Hz) provides synchronised colour and depth data enabling 3D leaf localisation in cluttered environments, while Kalman filtering with constant velocity motion prediction handles temporary occlusions (up to 4 s) by estimating leaf position during wind-induced sway. This approach achieves 3 cm spatial tolerance despite foliage obstruction and dynamic motion. The compliant six-axis manipulator with integrated force–torque sensing enables selective leaf sampling while minimising tissue damage during insertion into occluded canopy regions.

- Ground conditions: Soil moisture varies significantly with season and irrigation, ranging from hard, compacted surfaces to soft, muddy conditions that challenge traction and stability. The tracked architecture addresses this variability by distributing robot weight across a large surface area, reducing ground pressure compared to wheeled platforms.

- Obstacles: Vineyard infrastructure includes support posts typically every 5–8 m, irrigation lines, ground anchors, and occasionally fallen branches or maintenance equipment. The Pixhawk 4 autopilot integrates LiDAR-assisted obstacle detection with GPS waypoint following, enabling the system to autonomously navigate around infrastructure while maintaining mission-level path adherence during unsupervised operations.

- Environmental factors: Operations must tolerate ambient temperatures of 5–40 °C, high humidity levels, direct solar radiation, wind, and occasional precipitation. Wind-induced leaf sway is directly addressed through Kalman filtering with state prediction (incorporating accelerometer data), enabling robust target tracking despite dynamic foliage motion. Variable illumination, from direct sunlight to backlit conditions in dense canopy, necessitates multimodal sensing: the thermal camera (Teledyne FLIR A68, 50 mK sensitivity) detects physiological stress indicators (elevated leaf temperature) independent of visible-light conditions, enabling early disease detection that RGB imaging alone cannot achieve. The requirement to generalise perception across varying environmental conditions informed the decision to train the YOLOv10 model on a proprietary dataset acquired directly in a Sicilian vineyard, capturing realistic variations in illumination, occlusion patterns, and canopy geometry.

3.2. Developing an Autonomous Robotic System

- Enhanced efficiency: Automated sampling reduces labour requirements by up to 70–80% compared to manual methods, enabling more frequent and comprehensive monitoring.

- Improved precision: Computer vision-based targeting and robotic manipulation ensure consistent, reproducible sampling protocols, eliminating human variability.

- Comprehensive spatial coverage: GPS-guided navigation enables systematic sampling across entire vineyard blocks, creating high-resolution disease distribution maps.

- Continuous operation capability: The system can operate for extended periods, including times of day (early morning, late evening) when human inspection is impractical.

- Data integration: Georeferenced samples and sensor data feed directly into geographic information systems (GISs) and decision support tools.

3.3. Design Assumptions and Operational Constraints

- Vineyard structure: The system requires an inter-row spacing of at least 2.0 m to ensure safe navigation and manoeuvrability of the tracked platform. Vineyards with narrower row configurations are not compatible with the current mechanical design.

- Weather conditions: Operations are conducted during dry weather conditions. Rain or heavy fog may compromise traction, sensor performance, and sample quality, limiting system deployment.

- GPS availability and accuracy: The system requires continuous GPS coverage with minimal positioning error for autonomous navigation. Standard GPS receivers are employed, though RTK GPS can optionally be integrated for enhanced accuracy in demanding applications. Areas with significant GPS signal obstruction are therefore outside the system's intended operating conditions.

- Lighting conditions: The vision system operates effectively under diffuse daylight conditions. While data augmentation techniques improve robustness to illumination variations, insufficient lighting (e.g., heavy cloud cover, twilight, darkness) significantly degrades leaf detection accuracy, as the neural network requires adequate visual information to identify target features.

- Operational hours: Given the lighting requirements, the system is validated for daylight operations (typically 7:00 a.m.–7:00 p.m., depending on season), when illumination is sufficient for reliable computer vision performance and human supervision is practical.

4. System Architecture

4.1. Subsystem Organisation

4.2. Locomotion and Autonomous Navigation System

- Dual brushless electric motors: High-torque, low-speed motors (rated at 36 V, 1.5 kW each) provide independent drive to left and right tracks, enabling skid-steering manoeuvres with zero-radius turning capability. Brushless architecture ensures high efficiency (>85%), minimal maintenance requirements, and excellent thermal characteristics for extended operations.

- Motor driver controllers (Zapi BLE0): Industrial-grade motor drivers implement field-oriented control (FOC) for precise torque regulation, provide comprehensive safety features (overcurrent protection, thermal management, ground fault detection), and expose CANopen communication interfaces for high-level control integration.

- Flight controller autopilot (Pixhawk 4): Originally designed for aerial vehicles, this autopilot has been adapted for ground vehicle applications, providing GPS/GNSS positioning, inertial measurement unit (IMU) data fusion, magnetometer-based heading determination, and mission management capabilities. The platform supports MAVLink protocol for standardised communication with ground control stations.

- PWM-to-CAN (STM32L4KC): This microcontroller serves as the critical interface between the PWM-based autopilot outputs and the CANopen-based motor drivers, translating high-level navigation commands into differential drive motor commands while implementing real-time control loops for trajectory tracking.
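The translation from autopilot outputs to differential track commands can be illustrated with a minimal sketch. It assumes the autopilot emits separate throttle and steering channels as RC-style pulses (1000–2000 µs, neutral at 1500 µs) and that track commands are normalised to [-1, 1]. The pulse range, the deadband, and the function names are illustrative assumptions, not the firmware running on the STM32L4KC, and the CANopen framing towards the motor drivers is omitted.

```python
def pwm_to_unit(pulse_us, lo=1000.0, mid=1500.0, hi=2000.0, deadband_us=20.0):
    """Map an RC-style pulse width (microseconds) to a command in [-1, 1]."""
    if abs(pulse_us - mid) <= deadband_us:
        return 0.0  # ignore jitter around neutral
    span = (hi - mid) if pulse_us > mid else (mid - lo)
    return max(-1.0, min(1.0, (pulse_us - mid) / span))


def mix_skid_steer(throttle_us, steering_us):
    """Return (left, right) track commands in [-1, 1] for a skid-steer base."""
    throttle = pwm_to_unit(throttle_us)
    steering = pwm_to_unit(steering_us)
    left = throttle + steering
    right = throttle - steering
    # Scale both tracks together so the turn ratio survives saturation.
    scale = max(1.0, abs(left), abs(right))
    return left / scale, right / scale


if __name__ == "__main__":
    print(mix_skid_steer(1500, 2000))  # pure steering: tracks counter-rotate
```

With neutral throttle and full steering the two tracks receive opposite commands, which corresponds to the zero-radius turn mentioned for the dual-motor drive.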

4.3. Sampling System

- RGB-D vision sensor (Intel RealSense D435): Provides synchronised colour and depth imaging at 640 × 480 resolution and 30 Hz frame rate, enabling 3D perception of the vine canopy. The active stereo depth technology operates reliably across varying lighting conditions and offers a working range of 0.3–3.0 m, suitable for close-range plant inspection.

- Thermal imaging camera (Teledyne FLIR A68): Captures radiometric thermal data at 640 × 480 resolution with <50 mK thermal sensitivity, enabling detection of plant stress, disease symptoms, and environmental anomalies invisible to RGB cameras. Thermal imaging can reveal pre-symptomatic disease indicators through localised temperature variations.

- Collaborative robotic arm (Universal Robots UR10e): Six-axis manipulator with 1.3 m reach, 12.5 kg payload capacity, and ±0.05 mm repeatability provides the dexterity required for selective leaf sampling. The cobot architecture includes integrated force–torque sensing for compliant interaction with plants, minimising mechanical damage during sampling operations.

- End-effector tools: Custom-designed gripper for leaf collection and vacuum aspiration nozzle for insect capture, mountable on the robotic arm’s tool changer interface. The gripper employs gentle pneumatic actuation to avoid crushing delicate leaf tissues.

- Sample management system: Automated carousel mechanism containing 20+ individual sample vials prefilled with preservation solution (70% ethanol for entomological specimens). A stepper motor-driven indexing system positions each vial sequentially under the sample deposit point, ensuring proper sample separation and traceability.
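The carousel indexing can be sketched as a small step calculation. The example assumes exactly 20 equally spaced vials (the text states 20+), forward-only rotation, and a hypothetical 3200 steps per revolution (a 200-step motor with 16× microstepping); the step count and the function name are assumptions made for illustration only.

```python
def steps_to_vial(current, target, n_vials=20, steps_per_rev=3200):
    """Motor steps needed to advance the carousel from one vial to another.

    Rotation is forward-only, so moving to a lower index wraps around.
    """
    slots_forward = (target - current) % n_vials
    return round(slots_forward * steps_per_rev / n_vials)


if __name__ == "__main__":
    print(steps_to_vial(0, 1))   # advance by one vial
    print(steps_to_vial(19, 0))  # wrap from the last vial back to the first
```

Forward-only motion is one possible design choice: it keeps the vial order strictly sequential, which simplifies traceability at the cost of longer moves when an earlier vial is requested.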

4.4. Control Software Architecture

- AgrimetOrchestrator: The main coordinator, composed of various submodules, exposes a comprehensive set of HTTP API endpoints that enable remote user interaction through custom-developed client applications. These APIs facilitate real-time system monitoring, remote control, and dynamic configuration adjustment, thereby supporting integration with graphical user interfaces (GUIs), operational dashboards, and supervisory control systems.

- Motors Module: Establishes and maintains a serial connection with the STM32L4KC microcontroller and runs a persistent background thread to provide three core capabilities: real-time motor telemetry acquisition (including operational status, current consumption, and performance metrics); motor control operations such as activation, deactivation, and automated calibration of tracked drive motors; and data logging management for enabling or disabling comprehensive performance logs for diagnostics and analysis.

- RealSense Module: Provides key functionalities such as video pipeline management for initialising, starting and stopping real-time capture streams, synchronised acquisition of RGB and depth frames at configurable resolutions and frame rates, and coordinate transformation between 2D image-space pixels and 3D real-world metric coordinates using depth sensor calibration parameters.

- FlirA68 Module: Provides snapshot functionality for the thermal camera.

- VisionController: Performs real-time grapevine leaf detection using an optimised YOLOv10 model. It processes RGB frames from the RealSense camera, applies target selection logic, and uses Kalman filtering to stabilise 3D leaf coordinates, ensuring robust tracking under dynamic vineyard conditions.

- ArmController: Manages high-level manipulation tasks for the UR10e robotic arm via the RTDE protocol. It integrates vision-based spatial data to execute autonomous leaf and insect sampling workflows.

- StepperController: Handles carousel homing, vial indexing, and position reporting, ensuring reliable sample handling.

- Pixhawk Module: Manages autonomous navigation using MAVSDK and MAVLink. It establishes autopilot communication, uploads waypoint missions, monitors telemetry, and coordinates sampling operations with other modules during mission execution.
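The coordinate transformation attributed to the RealSense Module can be illustrated with the standard pinhole camera model. The sketch below ignores lens distortion and uses hypothetical intrinsic parameters; in practice the values would come from the camera calibration, and librealsense provides `rs2_deproject_pixel_to_point` for the same purpose. The class and function names here are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    fx: float  # focal length in pixels, x axis
    fy: float  # focal length in pixels, y axis
    cx: float  # principal point, x (pixels)
    cy: float  # principal point, y (pixels)


def deproject(u, v, depth_m, k):
    """Convert pixel (u, v) with metric depth into a 3D point in the camera frame.

    Returns None when the depth reading is invalid (zero or negative).
    """
    if depth_m <= 0.0:
        return None
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


if __name__ == "__main__":
    k = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)  # hypothetical values
    print(deproject(620, 240, 2.0, k))
```

A point in the camera frame still has to be transformed into the arm base frame through the hand-eye calibration before it can serve as a motion target; that step is not shown.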

4.5. System Interconnection

4.5.1. Locomotion and Autonomous Navigation Subsystem Connectivity

- The STM32L4KC microcontroller interconnects the ZAPI BLE0 motor drivers and the Pixhawk 4 autopilot through the PWM-to-CAN translation process.

- Both the STM32L4KC microcontroller and the Pixhawk 4 autopilot communicate with the central Jetson AGX Orin processor via serial interface protocols.

4.5.2. Biological Sampling Subsystem Connectivity

- The Universal Robots UR10e collaborative arm and the Teledyne FLIR A68 thermal camera communicate with the central processor through local area networking, established via integrated router and network switch hardware.

- The Intel RealSense D435 RGB-D camera and the stepper motor control board communicate with the central processor via serial interface protocols.

5. Leaf Detection and Sampling Pipeline

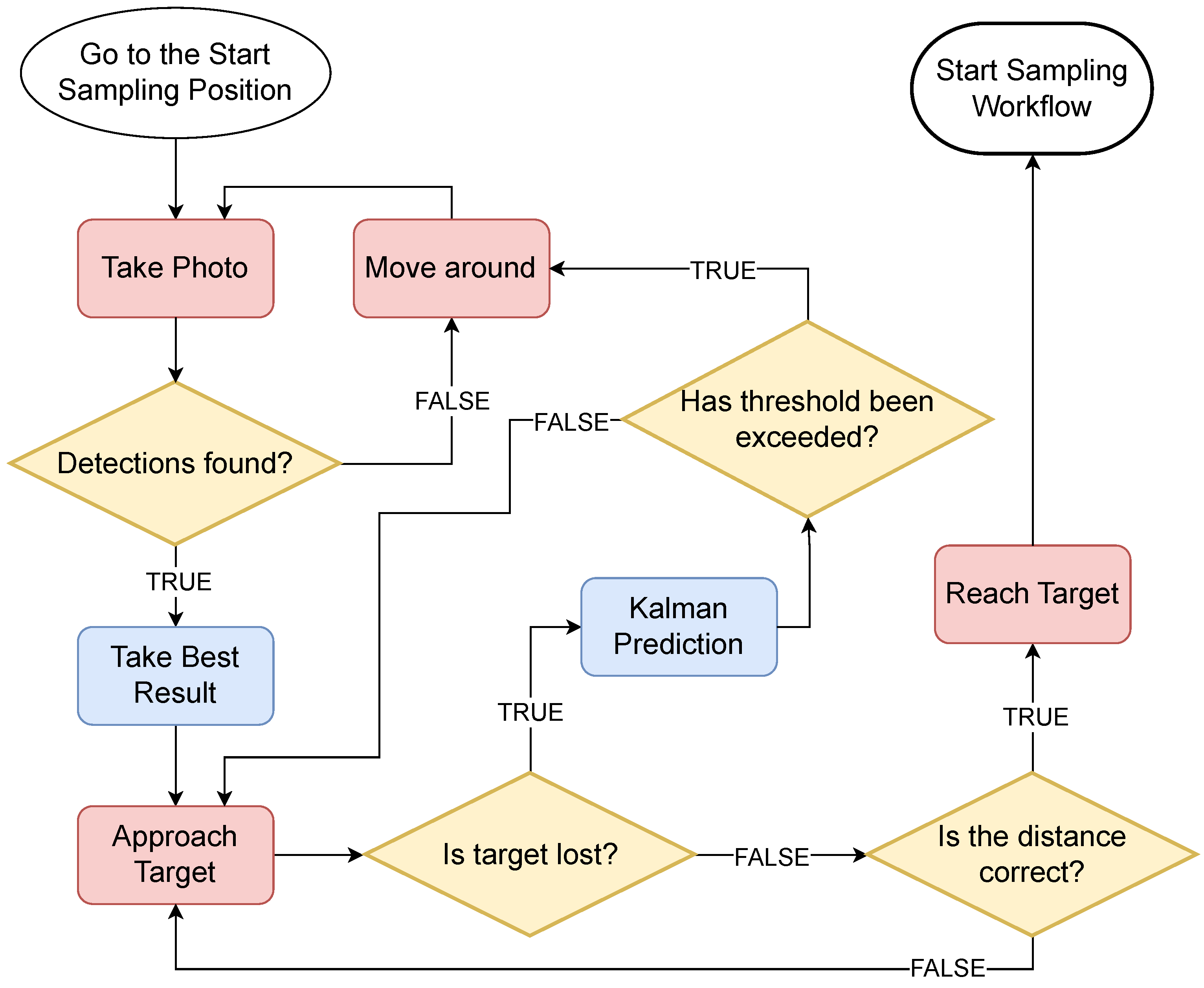

5.1. Operational Workflow

- The robotic arm starts from a view position (fixed at design time);

- The stereo camera acquires an image and searches for a suitable target leaf;

- Once a leaf is targeted, the arm approaches and follows it with the camera;

- When the tool of the robotic arm is at the correct distance, a thermal photo is taken with the thermal camera;

- Next, the robotic arm goes forward to take a sample of the leaf;

- After that, the robotic arm positions the vacuum nozzle near a target leaf or vine structure;

- Vacuum aspiration is activated to capture insects;

- Specimens are transported through the duct and deposited into the current vial;

- The stepper motor rotates the carousel to position the next vial.
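The steps above can be sketched as an ordered sequence in which each step must succeed before the next one starts. The step names and the `run_cycle` helper are hypothetical; the handlers stand in for the real module calls (arm, cameras, vacuum, carousel), which are not shown.

```python
from enum import Enum, auto


class Step(Enum):
    VIEW_POSITION = auto()
    SELECT_LEAF = auto()
    APPROACH_AND_TRACK = auto()
    THERMAL_SNAPSHOT = auto()
    TAKE_LEAF_SAMPLE = auto()
    POSITION_NOZZLE = auto()
    ASPIRATE_INSECTS = auto()
    DEPOSIT_IN_VIAL = auto()
    INDEX_CAROUSEL = auto()


def run_cycle(handlers):
    """Run the steps in declaration order and return those that completed.

    `handlers` maps a Step to a callable returning True on success.
    Steps without a handler are treated as succeeding (placeholder behaviour).
    The cycle stops at the first step that reports failure.
    """
    completed = []
    for step in Step:
        handler = handlers.get(step, lambda: True)
        if not handler():
            break
        completed.append(step)
    return completed


if __name__ == "__main__":
    # Example: no suitable leaf is found, so the cycle stops after the first step.
    print(run_cycle({Step.SELECT_LEAF: lambda: False}))
```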

5.2. Building a Leaf Detector

5.2.1. Dataset Definition

5.2.2. Labelling

- Optimal Visibility: Selection of fully visible leaves without significant occlusions.

- Morphological Quality: Preference for specimens presenting typical and well-defined morphological characteristics.

- Dimensional Variability: Inclusion of leaves at different scales to improve generalisation capacity.

5.2.3. Annotation HeatMap

- Plant Architecture: The most developed and visible leaves tend to position themselves in the central part of the canopy.

- Lighting Conditions: The central region generally presents more favourable lighting conditions for leaf visibility.

5.2.4. Annotation Histogram

- 1 annotation category: A total of 23 occurrences represent scenes with reduced target density, typically associated with frames with limited visibility conditions or sparser compositions.

- 2–7 annotations category: In total, 312 occurrences constitute the dataset core with medium-optimal complexity scenes for training.

5.3. The Choice of YOLO Model

- NMS-free Architecture: Elimination of non-maximum suppression post-processing through dual assignment strategy, which drastically reduces inference times.

- Efficiency-oriented Design: Specific optimisations for deployment on hardware with limited resources.

- Dual assignments: Training strategy that improves prediction quality, reducing the need for post-processing.

- Holistic Efficiency Improvements: Optimisations at architecture, loss function, and training strategy levels to maximise throughput.

5.3.1. YOLOv10 Architecture

5.3.2. Model Training and Evaluation

- YOLOv10n (Nano): Ultra-lightweight version, characterised by 2.3 M parameters, optimised for devices with limited resources.

- YOLOv10s (Small): Balanced version, characterised by 7.2 M parameters, between performance and computational efficiency for portable devices.

- YOLOv10m (Medium): Reference version, characterised by 15.4 M parameters, for general-purpose detection.

- mAP@0.5–0.95: YOLOv10s leads with 0.201, followed by YOLOv10n (0.196) and YOLOv10m (0.175). This result indicates superior YOLOv10s performance across a wide range of IoU thresholds, suggesting greater robustness in bounding box localisation.

- mAP@0.5: YOLOv10s maintains the best position with 0.409, surpassing YOLOv10m (0.387) and YOLOv10n (0.370). The IoU threshold of 0.5 is particularly relevant for visual servoing applications where accurate localisation is required but not extreme precision.

- mAP@0.75: All variants show comparable performance (0.12–0.15); this result is also influenced by the annotation strategy described earlier.

- Precision: YOLOv10s achieves the highest precision (0.556), indicating a lower incidence of false positives. YOLOv10n follows with 0.530, while YOLOv10m presents 0.398. The high precision of YOLOv10s is crucial to avoid the robot erroneously identifying non-target objects.

- Recall: YOLOv10m shows superior recall (0.451), followed by YOLOv10s (0.427) and YOLOv10n (0.383). A high value indicates better ability to identify leaves present in the scene, reducing false negatives that could cause target loss during tracking.

- F1-Score: YOLOv10s obtains the best balance with F1 = 0.485, surpassing YOLOv10n (0.449) and YOLOv10m (0.423). The F1-score represents the harmonic mean of precision and recall, providing a unified metric to evaluate overall performance.
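For readers less familiar with these metrics, the sketch below shows the two basic quantities behind them: the intersection over union (IoU) of two axis-aligned boxes, which decides whether a detection counts as correct at a given threshold, and the F1-score as the harmonic mean of precision and recall. The function names and the box format `(x1, y1, x2, y2)` are assumptions for illustration; this is not the evaluation code used for the reported results.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    total = precision + recall
    return 2.0 * precision * recall / total if total > 0 else 0.0


if __name__ == "__main__":
    print(round(iou((0, 0, 2, 2), (1, 0, 3, 2)), 3))
    for name, p, r in [("YOLOv10n", 0.530, 0.383),
                       ("YOLOv10s", 0.556, 0.427),
                       ("YOLOv10m", 0.398, 0.451)]:
        print(name, round(f1_score(p, r), 3))
```

Recomputing F1 from the rounded precision and recall values listed above gives results within about 0.005 of the reported F1-scores; small differences of this kind are expected when the inputs are rounded.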

5.4. Target Selection and Tracking System

Kalman Filter for Position Stabilisation

5.5. Tracking Logic

- Prediction is executed continuously at every frame to maintain temporal coherence.

- Update is performed only when the target is visible with minimum YOLO confidence (0.55 for initial lock, 0.1 for subsequent tracking), depth sensing is successful, and the detected target is matched within a 3 cm tolerance in 3D Euclidean space.

- During occlusions or temporary target loss (up to 60 consecutive frames, equivalent to 2 s at 30 FPS), the system relies solely on prediction to provide position estimates, assigning an indicative confidence of 0.6.

- After exceeding the maximum lost frame threshold, the target lock is reset and a new target selection is initiated.
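The tracking rules above can be sketched as follows. The thresholds (0.55, 0.1, 3 cm, 60 frames, indicative confidence 0.6) are taken from the list; everything else is an assumption made for illustration: the filter is simplified to three independent constant-velocity filters (one per axis), the noise values are untuned placeholders, and the class names are hypothetical. It is not the VisionController implementation.

```python
import math

# Thresholds quoted in the tracking-logic list.
LOCK_CONF, TRACK_CONF, PREDICTED_CONF = 0.55, 0.1, 0.6
GATE_M, MAX_LOST, DT = 0.03, 60, 1.0 / 30.0
# Noise settings are illustrative assumptions, not tuned values.
Q_POS, Q_VEL, R_MEAS = 1e-6, 1e-4, 1e-4


class AxisKF:
    """Constant-velocity Kalman filter for one coordinate axis."""

    def __init__(self, p0):
        self.p, self.v = float(p0), 0.0
        self.ppp, self.ppv, self.pvv = 1e-4, 0.0, 1e-2  # initial covariance

    def predict(self, dt):
        self.p += self.v * dt
        self.ppp += 2 * dt * self.ppv + dt * dt * self.pvv + Q_POS
        self.ppv += dt * self.pvv
        self.pvv += Q_VEL

    def update(self, z):
        s = self.ppp + R_MEAS                # innovation variance
        kp, kv = self.ppp / s, self.ppv / s  # Kalman gains
        y = z - self.p                       # innovation
        self.p += kp * y
        self.v += kv * y
        self.pvv -= kv * self.ppv
        self.ppv *= 1 - kp
        self.ppp *= 1 - kp


class LeafTracker:
    def __init__(self):
        self.axes, self.lost = None, 0

    def position(self):
        return tuple(a.p for a in self.axes)

    def step(self, detection):
        """Process one frame.

        `detection` is (confidence, (x, y, z)) in metres, or None when there
        is no detection or no valid depth. Returns (position, confidence),
        or None while no target is locked.
        """
        if self.axes is None:  # waiting for an initial lock
            if detection and detection[0] >= LOCK_CONF:
                self.axes, self.lost = [AxisKF(c) for c in detection[1]], 0
                return self.position(), detection[0]
            return None
        for axis in self.axes:  # prediction runs on every frame
            axis.predict(DT)
        if (detection and detection[0] >= TRACK_CONF
                and math.dist(detection[1], self.position()) <= GATE_M):
            for axis, z in zip(self.axes, detection[1]):
                axis.update(z)
            self.lost = 0
            return self.position(), detection[0]
        self.lost += 1
        if self.lost > MAX_LOST:  # give up and wait for a new lock
            self.axes, self.lost = None, 0
            return None
        return self.position(), PREDICTED_CONF
```

In this sketch a detection that falls outside the 3 cm gate is treated the same way as a missing detection, so a neighbouring leaf cannot pull the estimate away from the locked target.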

6. Integration Experiments

6.1. Sensor Data Acquisition Performance

- Sensor polling frequency: Achieved 10 Hz acquisition rate for environmental data.

- API response latency: Average response time of 55 ± 15 milliseconds for telemetry queries.

- Data freshness: Sensor readings updated every 100 milliseconds.

- Concurrent sensor streams: Successfully handled simultaneous acquisition from RGB-D camera (30 Hz), thermal camera (10 Hz), and environmental sensors (10 Hz) with zero data loss.

6.2. Localisation and Navigation Integration

- Position update frequency: GPS-based location updates delivered at 5 Hz with horizontal accuracy of ±0.15 m.

- Map refresh rate: Visual interface updated at 20 FPS with position history retention.

- Navigation command execution: Autonomous waypoint missions uploaded and correctly executed (five missions tested).

- GPS-denied fallback: System successfully maintained dead reckoning estimates during GPS signal loss.

6.3. Remote Control and Command Execution

- Command latency: Movement commands received and executed with 50 ± 50 millisecond latency.

- Control bandwidth: Successfully transmitted simultaneous multi-axis commands (tracked drive + robotic arm) at 20 Hz.

- Bidirectional communication: Established and verified a complete bidirectional communication loop with zero communication timeouts over 3 h of continuous operation.

6.4. API Endpoint Validation

- API response time: Average 120 ± 40 milliseconds across all endpoint types.

- Concurrent client connections: System successfully managed four simultaneous remote client connections without performance degradation.

- Data serialisation overhead: JSON payload processing overhead of <5 milliseconds per transaction.

6.5. System Integration Coverage

- Subsystem synchronisation: All components (locomotion, perception, manipulation, sampling) coordinated with <100 millisecond maximum inter-subsystem latency.

- Protocol interoperability: 100% successful translation of PWM autopilot signals to CANopen motor commands.

- RTDE protocol communication: Robotic arm commands executed via the Real-Time Data Exchange protocol with a guaranteed 500 Hz cycle rate maintained during all operations.

- Configuration update propagation: Dynamic configuration changes are propagated across all modules and applied within 2 s.

6.6. GUI Functionality Metrics

- Responsive interface latency: User input to visual feedback.

- Real-time visualisation: Live video feed rendering at 25–30 FPS with overlaid detection results.

- Cross-platform deployment: Successfully deployed on Windows laptops (two units), Linux laptops (two units), and Android tablets and phones (three units) with identical functionality.

6.7. Quantitative Comparison with Existing Methods

6.7.1. Detection Accuracy

- Confidence-stratified thresholds (0.75 initial, 0.1 tracking) that reduce false positives;

- Spatial proximity filtering (3 cm tolerance) that prevents adjacent leaf confusion;

- Kalman filtering with occlusion tolerance (4 s) that maintains tracking despite detection gaps;

- Redundant sampling across multiple locations (multiple passes per vineyard block) that compensates for individual leaf detection failures.

- Detection: ∼43% (recall of 0.427 means 43% of visible leaves are detected in single images).

- Tracking: ∼85–90% (once detected with confidence, maintained tracking due to Kalman filtering).

- Approach: ∼90% (robotic arm positioning well-characterised).

- Sampling: ∼85% (compliant gripper accommodates morphological variation).

- Deposition: ∼95% (carousel and stepper motor mechanisms reliable).

- Multiple passes (systematic coverage revisits areas);

- Temporal integration (missed leaves in one pass detected in subsequent passes);

- Large spatial coverage (many leaves sampled across the full vineyard block).
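A rough way to read the stage estimates together is to multiply them into a single per-attempt success rate and then ask how repeated passes change the odds. The sketch below does this under two assumptions that are not stated in the text: the stages are treated as independent, and each pass is treated as an independent attempt at the same leaf. The function names are illustrative.

```python
from math import prod

# Stage estimates from the list above: detection, tracking, approach,
# sampling, deposition. Tracking is given as a range, so both ends are used.
STAGES_LOW = (0.43, 0.85, 0.90, 0.85, 0.95)
STAGES_HIGH = (0.43, 0.90, 0.90, 0.85, 0.95)


def chain_success(stage_rates):
    """Success rate of a full attempt, assuming independent stages."""
    return prod(stage_rates)


def success_after_passes(p_single, n_passes):
    """Probability of at least one success in n independent attempts."""
    return 1.0 - (1.0 - p_single) ** n_passes


if __name__ == "__main__":
    low, high = chain_success(STAGES_LOW), chain_success(STAGES_HIGH)
    print(f"per-attempt success: {low:.3f} to {high:.3f}")
    print(f"after 3 passes (p = 0.27): {success_after_passes(0.27, 3):.3f}")
```

Under these assumptions a single attempt succeeds roughly 27–28% of the time, with detection as the dominant loss, while three independent passes would raise the chance of at least one success to about 61%. This is consistent with the emphasis on multiple passes and temporal integration, although real passes over the same canopy are unlikely to be fully independent.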

6.7.2. Time Efficiency and Operational Throughput

6.7.3. System-Level Comparison

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


- Zhong, X.; Zhong, X.; Peng, X. Robots visual servo control with features constraint employing Kalman-neural-network filtering scheme. Neurocomputing 2015, 151, 268–277. [Google Scholar] [CrossRef]

- Kim, M.S.; Ko, J.H.; Kang, H.J.; Ro, Y.S.; Suh, Y.S. Image-based manipulator visual servoing using the Kalman Filter. In Proceedings of the 2007 International Forum on Strategic Technology, Ulaanbaatar, Mongolia, 3–6 October 2007; IEEE: New York, NY, USA, 2007; pp. 581–584. [Google Scholar]

- Wilson, W. Visual servo control of robots using Kalman filter estimates of relative pose. IFAC Proc. Vol. 1993, 26, 633–638. [Google Scholar] [CrossRef]

- Liu, S.; Liu, S. Online-estimation of Image Jacobian based on adaptive Kalman filter. In Proceedings of the 2015 34th Chinese Control Conference (CCC), Hangzhou, China, 28–30 July 2015; IEEE: New York, NY, USA, 2015; pp. 6016–6019. [Google Scholar]

- Rovira-Más, F.; Saiz-Rubio, V.; Cuenca-Cuenca, A. Augmented perception for agricultural robots navigation. IEEE Sensors J. 2020, 21, 11712–11727. [Google Scholar] [CrossRef]

- Galati, R.; Mantriota, G.; Reina, G. RoboNav: An affordable yet highly accurate navigation system for autonomous agricultural robots. Robotics 2022, 11, 99. [Google Scholar] [CrossRef]

- Pfrunder, A.; Borges, P.V.; Romero, A.R.; Catt, G.; Elfes, A. Real-time autonomous ground vehicle navigation in heterogeneous environments using a 3D LiDAR. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; IEEE: New York, NY, USA, 2017; pp. 2601–2608. [Google Scholar]

| Phase | Duration (s) | Variability (s) | Count | Notes |
|---|---|---|---|---|
| Camera acquisition & YOLO inference | 0.05 | ±0.01 | per frame | 30 FPS, <5 ms latency |
| Target selection | 0.5–2.0 | ±0.3 | per target | Depends on scene clutter |
| Arm approach trajectory | 2.0–4.5 | ±0.8 | per approach | Distance-dependent, ∼1.3 m reach |
| Visual servoing to target | 1.0–2.5 | ±0.5 | per approach | Kalman filter smoothing adds stability |
| Thermal image capture | 0.3 | ±0.05 | per sample | Required before sampling |
| Gripper engagement & sampling | 1.0–1.5 | ±0.3 | per sample | Pneumatic activation + cutting |
| Gripper retraction | 0.5 | ±0.1 | per sample | Return to safe position |
| Carousel rotation & vial positioning | 0.8 | ±0.2 | per sample | Stepper motor indexing |
| Sample deposition | 0.3 | ±0.05 | per sample | Gravity flow into vial |
| Return to search position | 1.0–2.5 | ±0.5 | per cycle | Arm repositioning |
| TOTAL per successful sample | 7.0–15.0 | ±2.0 | 13 samples | Average: ∼15 s/sample |
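The total row can be cross-checked by summing the per-phase durations. The sketch below is illustrative only and is not part of the authors' software: it assumes that every phase runs strictly sequentially and exactly once per sample (with a single camera frame counted), and the names `PHASES`, `cycle_bounds`, and `samples_per_hour` are hypothetical. Under those assumptions the best-case and worst-case sums come to roughly 7.45 s and 14.95 s, in line with the rounded 7.0–15.0 s range reported in the table.

```python
# Phase durations as (min_s, max_s), transcribed from the timing table.
# Assumption: phases run one after another, once per sample.
PHASES = {
    "camera_acquisition_yolo": (0.05, 0.05),
    "target_selection": (0.5, 2.0),
    "arm_approach": (2.0, 4.5),
    "visual_servoing": (1.0, 2.5),
    "thermal_capture": (0.3, 0.3),
    "gripper_sampling": (1.0, 1.5),
    "gripper_retraction": (0.5, 0.5),
    "carousel_rotation": (0.8, 0.8),
    "sample_deposition": (0.3, 0.3),
    "return_to_search": (1.0, 2.5),
}


def cycle_bounds(phases):
    """Return (best_case_s, worst_case_s) for one sequential sampling cycle."""
    best = sum(lo for lo, _ in phases.values())
    worst = sum(hi for _, hi in phases.values())
    return best, worst


def samples_per_hour(cycle_s):
    """Convert a per-sample cycle time in seconds to an hourly throughput."""
    return 3600.0 / cycle_s


best, worst = cycle_bounds(PHASES)
print(f"best case:  {best:.2f} s")
print(f"worst case: {worst:.2f} s")
# The table reports an average of about 15 s per sample.
print(f"throughput at 15 s/sample: {samples_per_hour(15.0):.0f} samples/h")
```

At the reported average of about 15 s per sample, this corresponds to roughly 240 samples per hour of pure sampling time, excluding platform travel between plants.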

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.


Russo, M.; Santoro, C.; Santoro, F.F.; Tudisco, A. Autonomous Robotic Platform for Precision Viticulture: Integrated Mobility, Multimodal Sensing, and AI-Based Leaf Sampling. Actuators 2026, 15, 91. https://doi.org/10.3390/act15020091
