1. Introduction

Spatial Augmented Reality (SAR) is a technology that enhances real-world tasks by projecting relevant information directly onto the surfaces of the work environment, instead of relying on external displays such as glasses or smartphones [1]. Traditional SAR systems install a fixed projector near the ceiling to secure a wide projection area; however, this approach is difficult to apply in dynamic work environments or ad-hoc spaces [2]. To overcome these limitations, Robotic Spatial Augmented Reality (RSAR) systems, which integrate robotics technology with SAR, have been proposed [3]. RSAR dynamically changes the position and orientation of the Projector-Camera Unit (PCU) via robotic actuators, thereby offering a potentially much larger and more flexible workspace than fixed systems.

SAR technology is being actively applied across various industrial and academic fields. For example, in manufacturing it is widely used as a guidance system that projects assembly sequences or part information directly onto work objects, reducing operator errors and increasing efficiency [4]. Pioneering research such as Shader Lamps, which projects computer graphics precisely onto the surfaces of static physical objects so that they appear animated, has significantly expanded the artistic and commercial possibilities of SAR [5]. Moreover, systems that combine small, user-worn projectors with retro-reflective materials to provide personalized augmented reality information have extended SAR into the personal domain [6].

To overcome the fixed projection area of SAR, recent work has sought to make the system itself mobile or to combine it with robotics technology [7]. Early work proposed Movable SAR, in which the projector-camera unit is mounted on a movable cart so that the workspace can be relocated as needed. This concept has since evolved into RSAR, in which the projector is attached directly to a multi-joint robotic arm or a mobile robot, enabling an active response to changes in the work environment and objects. In particular, research combining collaborative robots with RSAR to project real-time construction information to workers in unstructured, dynamic environments such as construction sites is a clear example of how effectively RSAR can be used in complex real-world industrial settings [8].

The flexibility of RSAR systems introduces fundamental challenges that are difficult to solve with traditional robotic control methods. For a typical robotic arm, a forward kinematics relationship, $\mathbf{p} = f_{\mathrm{FK}}(\mathbf{q})$, exists in which the 6-Degrees-of-Freedom (6-DOF) pose $\mathbf{p}$ of the end-effector is determined by the joint angles $\mathbf{q}$ [9]. In an RSAR system, however, the final "end-effector" perceived by the user is the image formed by the projector's light on the surfaces of the real environment. This output depends not only on the actuator's pose but also on the external environment, such as the position of a wall or the shape of the objects on which the light lands, so it cannot be predicted by an analytical model from the joint angles alone.

To solve this problem, this study simplifies the control pipeline. In an RSAR system, the camera and projector are rigidly fixed to each other as a single unit at the end of the robotic arm, so the relative transformation between them is constant. Consequently, if the 6-DOF pose of the camera relative to the world coordinate system can be accurately determined, the projector's pose follows analytically. The complex control problem of RSAR thus reduces to the core task of “accurately predicting the camera’s 6-DOF pose, an intermediate parameter, from the actuator’s joint angle inputs.”

Although a 2-DOF actuator might suggest simple kinematics, the core RSAR challenge lies in mapping these two joint inputs to a 6-DOF camera pose ($\mathbf{t} \in \mathbb{R}^3$ and $R \in SO(3)$). This mapping $(\theta_{pan}, \theta_{tilt}) \mapsto (R, \mathbf{t})$, particularly its component on the non-Euclidean $SO(3)$ rotation manifold, is inherently complex and highly non-linear. User-Created Robotics (UCR) or ad-hoc assembled RSAR systems often exacerbate this challenge: precise kinematic specifications, such as computer-aided design models, are often unavailable, and the assembly process may introduce minute errors that cause theoretical analytical models to misrepresent the actual system. Thus, the problem is not easily solved by lightweight regression or simple analytical models, which motivates a more powerful data-driven approach. This problem of loose kinematic specifications also arises in other robotics fields, such as soft robotics, where analytical modeling is difficult; there, data-driven approaches that learn the system's input–output relationship directly from operational data are being researched as an effective alternative [10].

Prior research has used B-spline surface fitting for such data-driven control [3]. This method represents the relationship between joint angles and camera extrinsic parameters as a B-spline surface fitted to sampled data. While it demonstrated that kinematics can be controlled without an analytical model, B-splines alone struggle to represent the relationship accurately when the actuator's motion becomes complex and highly non-linear. A more expressive machine learning or deep learning approach is therefore required to learn the complex, subtle patterns within the data. This study focuses on providing this foundational data-driven kinematic model, a prerequisite for subsequently implementing dynamic controllers, especially in systems where analytical models are unavailable.

The final objective of this study is to propose a data-driven model that predicts the 6-DOF pose of the camera from the joint angles of a 2-axis Pan-Tilt actuator as accurately and stably as possible. To this end, this paper makes three key contributions. First, we propose a novel deep learning model based on LSTM–Attention, which combines Long Short-Term Memory (LSTM) [11], known for its strength in time-series data processing, with an Attention Mechanism [12] that focuses on the features most important for prediction. Second, we quantitatively and qualitatively compare the proposed model with classic machine learning models, namely Polynomial Regression [13], Support Vector Regression (SVR) [14], and Random Forest [15], demonstrating the superior ability of the proposed model to capture complex non-linear kinematic relationships. Third, we present a simulation-based verification framework. This approach is intentionally chosen to eliminate measurement noise from physical sensors, allowing a clear comparison of the models' intrinsic performance and theoretical upper bounds [16]. We acknowledge this as a clear limitation: the study does not account for critical real-world factors such as calibration errors, mechanical backlash, or complex environmental dynamics. This work therefore aims to establish a methodological foundation for evaluating the theoretical validity of data-driven models, a crucial step before addressing real-world robustness.

2. Materials and Methods

2.1. Principle of Pose Estimation in RSAR

The geometric modeling of the RSAR system in this study is based on the pinhole camera model. To express geometric transformations in 3D space as linear transformations, a 3D point $\mathbf{X}_w = [X, Y, Z]^\top$ in the world coordinate system is represented as a homogeneous coordinate vector $\tilde{\mathbf{X}}_w = [X, Y, Z, 1]^\top$ by adding an extra dimension ($w = 1$). This representation allows 3D rotation and translation to be combined into a single $4 \times 4$ matrix multiplication. The projection of this 3D point onto the 2D camera image plane at pixel coordinates $(u, v)$ is determined by the camera's intrinsic and extrinsic parameters and can be expressed by the following homogeneous equality relation:

$$ s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = K \, [R \mid \mathbf{t}] \, \tilde{\mathbf{X}}_w $$

Here, $s$ is a non-zero scale factor proportional to the depth of the 3D point, $K$ is the camera intrinsic parameter matrix, and $[R \mid \mathbf{t}]$ denotes the $3 \times 4$ extrinsic parameter matrix. The equation implies that the projected vector on the right-hand side differs from the homogeneous pixel coordinates by the factor $s$; the actual pixel coordinates are obtained by dividing the right-hand-side vector by its last element.
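To make the projection relation concrete, the following minimal NumPy sketch evaluates it for a single point. The intrinsic values ($f_x = f_y = 800$, principal point $(320, 240)$), the identity extrinsics, and the helper name `project_point` are illustrative assumptions, not the calibrated parameters of the system used in this study.

```python
import numpy as np

def project_point(K, R, t, X_w):
    """Project a 3D world point to pixel coordinates with the pinhole model."""
    X_h = np.append(np.asarray(X_w, dtype=float), 1.0)               # homogeneous point, w = 1
    Rt = np.hstack([R, np.asarray(t, dtype=float).reshape(3, 1)])    # 3x4 extrinsic [R | t]
    uv_s = K @ Rt @ X_h                                               # equals s * [u, v, 1]
    s = uv_s[2]                                                       # scale factor (the depth here)
    return uv_s[:2] / s, s                                            # divide by the last element

# Illustrative intrinsics (assumed): f_x = f_y = 800 px, principal point (320, 240).
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)      # camera aligned with the world frame (assumption)
t = np.zeros(3)

uv, s = project_point(K, R, t, [0.1, -0.05, 2.0])
print(uv, s)       # uv = (360, 220), s = 2.0
```

With the camera at the world origin, $s$ equals the point's depth (2.0), and dividing by it recovers $u = 800 \cdot 0.1 / 2 + 320 = 360$ and $v = 800 \cdot (-0.05) / 2 + 240 = 220$.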

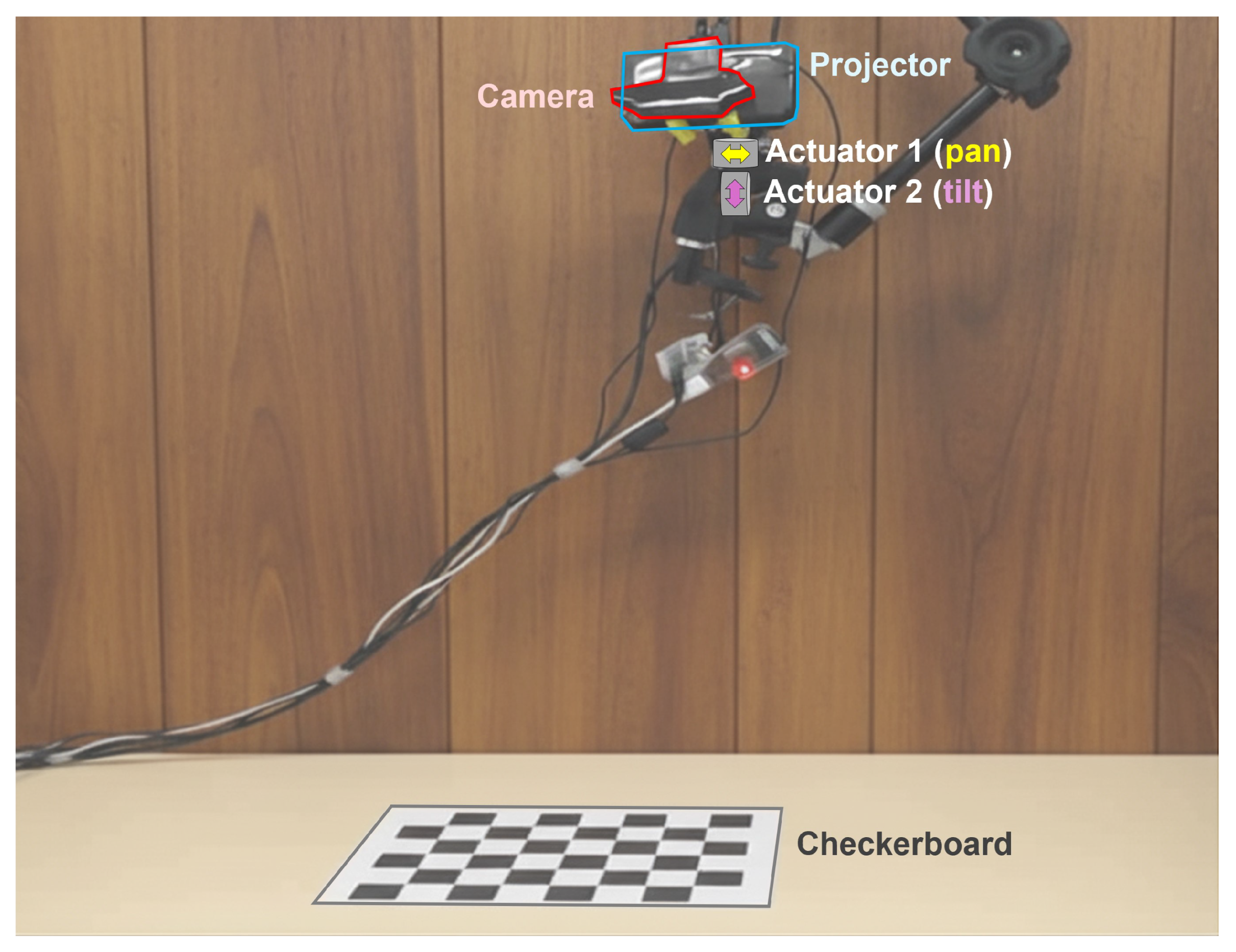

The RSAR system proposed in this study is based on a 2-axis Pan-Tilt actuator (Dynamixel XC430-T150BB-T), as shown in Figure 1, instead of a complex multi-DOF robotic arm. The primary purpose of the RSAR envisioned here is not complex manipulation, such as grasping or handling objects, but effectively changing the projector's pose toward a target plane such as a flat wall or screen. For this objective, 2-DOF rotation is sufficient to cover a wide area and determine the pose of the PCU, so the Pan-Tilt mechanism is the most suitable and efficient actuator configuration for performing the core functions of RSAR while reducing system complexity. Consequently, the control problem in this study is defined as predicting the 6-DOF pose of the PCU from the two joint angle inputs of this Pan-Tilt actuator.

The camera intrinsic parameter matrix $K$ defines the optical characteristics of the camera lens; it is determined through a one-time calibration after system assembly and remains constant thereafter. This matrix consists of the focal lengths ($f_x, f_y$) and the principal point ($c_x, c_y$):

$$ K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix} $$

In contrast, the extrinsic parameters represent the position and orientation of the camera relative to the world coordinate system, and these values change in real time as the actuator moves. The extrinsic parameters combine a $3 \times 3$ rotation matrix $R$ and a $3 \times 1$ translation vector $\mathbf{t}$ and can be represented as a $4 \times 4$ homogeneous transformation matrix $T_c$:

$$ T_c = \begin{bmatrix} R & \mathbf{t} \\ \mathbf{0}^\top & 1 \end{bmatrix} $$

Here, the rotation matrix $R$ represents the 3D orientation of the camera, and the translation vector $\mathbf{t}$ represents its 3D position. This combined 6-DOF pose is the target to be predicted from the actuator's movement.

In the RSAR system's PCU, the projector is modeled on the same principle as the camera. The core assumption of the system is that the relative transformation between the camera and the projector, $T_{pc}$, is a fixed rigid-body transformation. Therefore, the camera's extrinsic parameters $T_c$ and the projector's extrinsic parameters $T_p$ are related by:

$$ T_p = T_{pc} \, T_c $$

This equation reduces the complexity of the RSAR control problem. Since the intrinsic matrices and $T_{pc}$ are predetermined constants, the only quantity that must be determined as the actuator's Pan-Tilt joint angles $(\theta_{pan}, \theta_{tilt})$ change is the camera's extrinsic matrix $T_c$, which varies in real time. The core task of this study is therefore to learn, from data, a non-linear function $f$ that accurately estimates the camera's extrinsic parameter matrix from the joint angles:

$$ T_c = f(\theta_{pan}, \theta_{tilt}) $$

Finding the most effective method to model this function f is the main objective of this paper.
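The rigid-body relation $T_p = T_{pc} T_c$ can be sketched in a few lines of NumPy. Here the camera pose $T_c$ stands in for the output of a learned $f(\theta_{pan}, \theta_{tilt})$, and the 30-degree rotation, the 5 cm camera-to-projector offset, and the helper names `make_transform` and `rot_z` are hypothetical values chosen only for illustration.

```python
import numpy as np

def make_transform(R, t):
    """Assemble a 4x4 homogeneous transform from R (3x3) and t (3,)."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

def rot_z(deg):
    """Rotation about the z-axis by `deg` degrees."""
    a = np.deg2rad(deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

# Hypothetical camera extrinsics T_c, e.g. as returned by a learned f(pan, tilt).
T_c = make_transform(rot_z(30.0), [0.10, 0.00, 1.50])
# Hypothetical fixed camera-to-projector transform T_pc (5 cm baseline along x).
T_pc = make_transform(np.eye(3), [0.05, 0.0, 0.0])

# Once T_c is known, the projector extrinsics follow from one matrix product.
T_p = T_pc @ T_c

# Mapping a world point into the projector frame directly is the same as
# mapping it into the camera frame first and then applying T_pc.
X_w = np.array([0.3, -0.2, 2.0, 1.0])
print(np.allclose(T_p @ X_w, T_pc @ (T_c @ X_w)))
```

Because $T_{pc}$ is constant, any error in the predicted $T_c$ propagates directly into $T_p$, which is why the accuracy of $f$ is the quantity this study evaluates.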

2.2. Experimental Setup and Data Preprocessing

To train the proposed models and evaluate their performance objectively, a large dataset of pan-tilt joint angles $(\theta_{pan}, \theta_{tilt})$ and their precisely corresponding 6-DOF camera poses $T_c$ is essential. However, acquiring such ground-truth data with a physical RSAR system has fundamental limitations. The reference position of the extrinsic parameters, such as the camera's optical center, is nearly impossible to measure physically and must typically be estimated indirectly through camera calibration with vision markers such as checkerboards. This estimation inevitably introduces measurement noise due to factors such as lens distortion, marker corner detection errors, and lighting variations [17].

This data contamination can act as a serious confounding variable in evaluating model performance. If a model is evaluated against noisy data treated as ground truth, the result reflects not only the model's pure predictive ability but also its robustness to noise, making a fair comparison difficult. Therefore, to overcome this limitation and compare the pure learning and prediction capabilities (intrinsic performance) of the models as clearly as possible, this study constructed an ideal simulation environment. This ensures that the experimental results primarily reflect the performance of the models themselves rather than external factors.

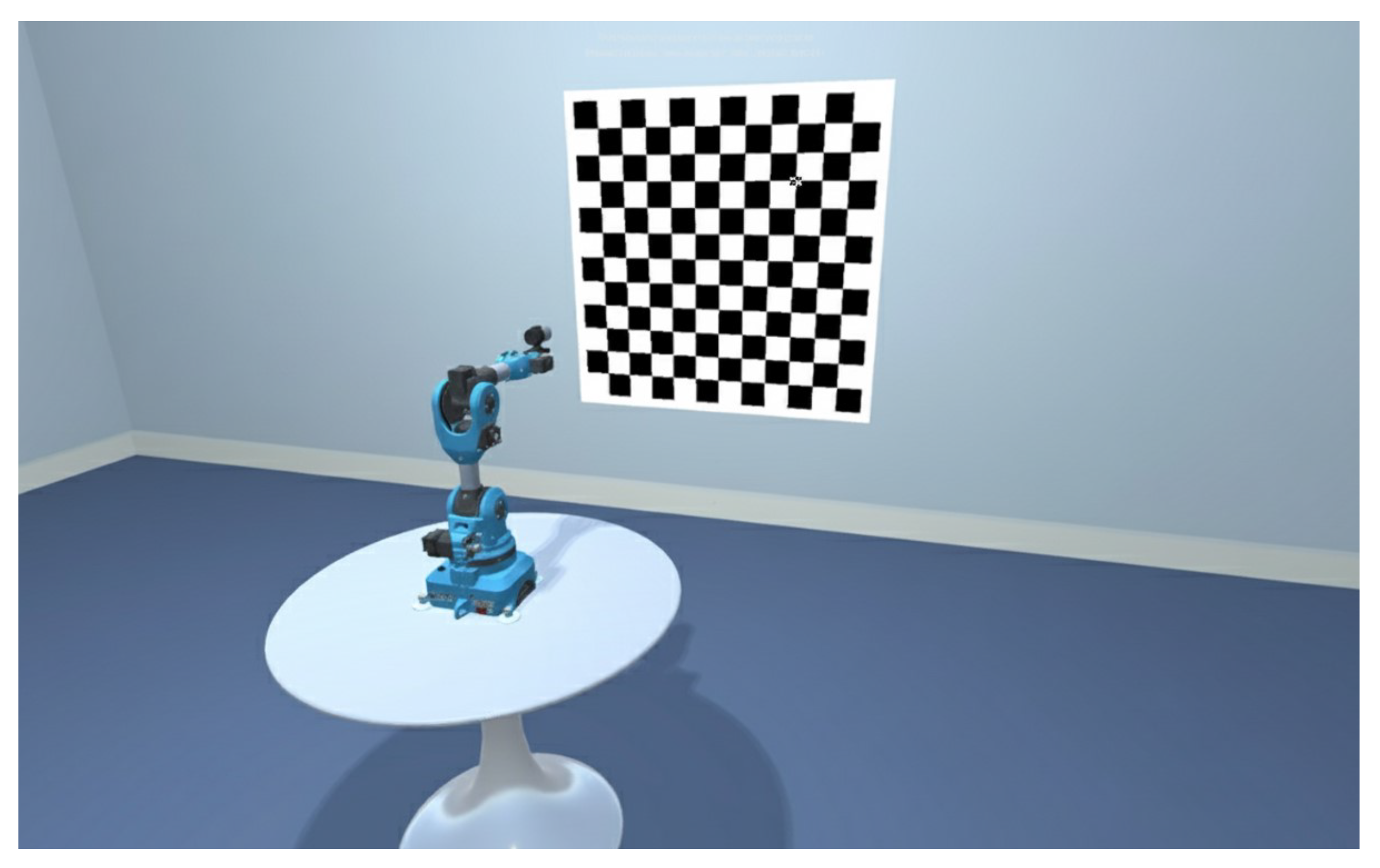

To construct a virtual RSAR system, the robot's kinematic properties were first defined using the Unified Robot Description Format (URDF). URDF is a standard robotics format that describes physical specifications, such as link lengths, joint positions and rotation axes, and the relative attachment position of the PCU, in Extensible Markup Language (XML). This URDF-defined robot model was imported into the Unity game engine to configure the simulation environment. The Unity environment provides a precise physics engine, making it possible to issue specific joint angle commands to the virtual Pan-Tilt actuator and extract the resulting 6-DOF camera pose exactly, without measurement noise.

Figure 2 shows the Unity simulation environment constructed in this manner.

Using this simulation environment, the dataset was generated by systematically moving the Pan and Tilt joints within their respective operating ranges and recording the camera's 6-DOF pose for each joint angle combination. Although the simulation can generate virtually unlimited data, this study intentionally limited the dataset to a little over 3000 samples. This simulates the realistic constraint that collecting data in a real environment requires considerable time to capture a checkerboard and compute the pose for each sample. A sample size of about 3000 is therefore sufficient for comparing the performance of various machine learning models while reflecting realistic data acquisition costs, which enhances the practical validity of the results. The structure of the generated dataset is specified in Table 1.

To use the raw simulation data effectively for model training, preprocessing steps tailored to the input and output data were applied. First, the input Pan-Tilt angles $(\theta_{pan}, \theta_{tilt})$ are cyclical: for example, 0 degrees and 360 degrees represent the same physical direction, yet the numbers differ greatly. To resolve this discontinuity, trigonometric encoding was applied, converting each angle into its sine and cosine:

$$ \mathbf{x} = [\sin\theta_{pan}, \cos\theta_{pan}, \sin\theta_{tilt}, \cos\theta_{tilt}]^\top $$

Through this transformation, the model can recognize geometrically that 359 degrees and 1 degree are close to each other, enabling more continuous and consistent learning.
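A minimal sketch of the trigonometric encoding follows, assuming the feature order $[\sin\theta_{pan}, \cos\theta_{pan}, \sin\theta_{tilt}, \cos\theta_{tilt}]$ and a hypothetical helper `encode_angles`; it checks the 359-degree versus 1-degree example numerically.

```python
import numpy as np

def encode_angles(pan_deg, tilt_deg):
    """Map (pan, tilt) in degrees to [sin(pan), cos(pan), sin(tilt), cos(tilt)]."""
    pan = np.deg2rad(np.asarray(pan_deg, dtype=float))
    tilt = np.deg2rad(np.asarray(tilt_deg, dtype=float))
    return np.stack([np.sin(pan), np.cos(pan), np.sin(tilt), np.cos(tilt)], axis=-1)

a = encode_angles(359.0, 0.0)
b = encode_angles(1.0, 0.0)
raw_gap = abs(359.0 - 1.0)            # 358 degrees apart as raw numbers
encoded_gap = np.linalg.norm(a - b)   # small: both lie near the same point on the unit circle
print(raw_gap, encoded_gap)

# Works on batches too: N angle pairs -> an (N, 4) input matrix.
batch = encode_angles([0.0, 90.0, 180.0], [10.0, 20.0, 30.0])
```

The encoded distance between 359° and 1° is $2\sin(1^\circ) \approx 0.035$, whereas the raw values differ by 358, so a regression model sees the two directions as neighbours.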

Second, Euler angles, which could represent the orientation component of the 6-DOF pose output, are vulnerable to Gimbal Lock, in which two rotation axes align at specific poses, resulting in the loss of a degree of freedom [18]. This is a major source of instability when training regression models; therefore, this study used quaternions ($\mathbf{q} \in \mathbb{R}^4$, $\lVert \mathbf{q} \rVert = 1$), which represent rotation with four elements, instead of the three Euler angles. Quaternions are free from Gimbal Lock and are better suited to deep learning model training because they support continuous rotational interpolation. Finally, all models were designed to predict seven variables in total, combining the 3D position ($t_x, t_y, t_z$) and the 4D quaternion ($\mathbf{q}$).
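The following sketch shows one way to turn a simulated 4 × 4 camera pose into the seven regression targets, using SciPy's `Rotation` class. SciPy returns quaternions in scalar-last order $(q_x, q_y, q_z, q_w)$; whether the dataset uses this order or a scalar-first one is an assumption here, and the pose values and the helper name `pose_to_target` are illustrative.

```python
import numpy as np
from scipy.spatial.transform import Rotation

def pose_to_target(T):
    """Flatten a 4x4 camera pose into the 7-D target [t_x, t_y, t_z, q_x, q_y, q_z, q_w]."""
    t = T[:3, 3]
    q = Rotation.from_matrix(T[:3, :3]).as_quat()  # SciPy order: scalar-last (x, y, z, w)
    return np.concatenate([t, q])

# Example pose: 90-degree rotation about z plus a translation (illustrative values).
T = np.eye(4)
T[:3, :3] = Rotation.from_euler("z", 90, degrees=True).as_matrix()
T[:3, 3] = [0.2, -0.1, 1.0]
target = pose_to_target(T)

# q and -q encode the same rotation (double cover); the geodesic loss in
# Section 2.3.4 handles this by taking an absolute value of the dot product.
q = target[3:]
R_pos = Rotation.from_quat(q).as_matrix()
R_neg = Rotation.from_quat(-q).as_matrix()
print(target.shape, np.allclose(R_pos, R_neg))
```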

2.3. Proposed Model: LSTM–Attention for Pose Prediction

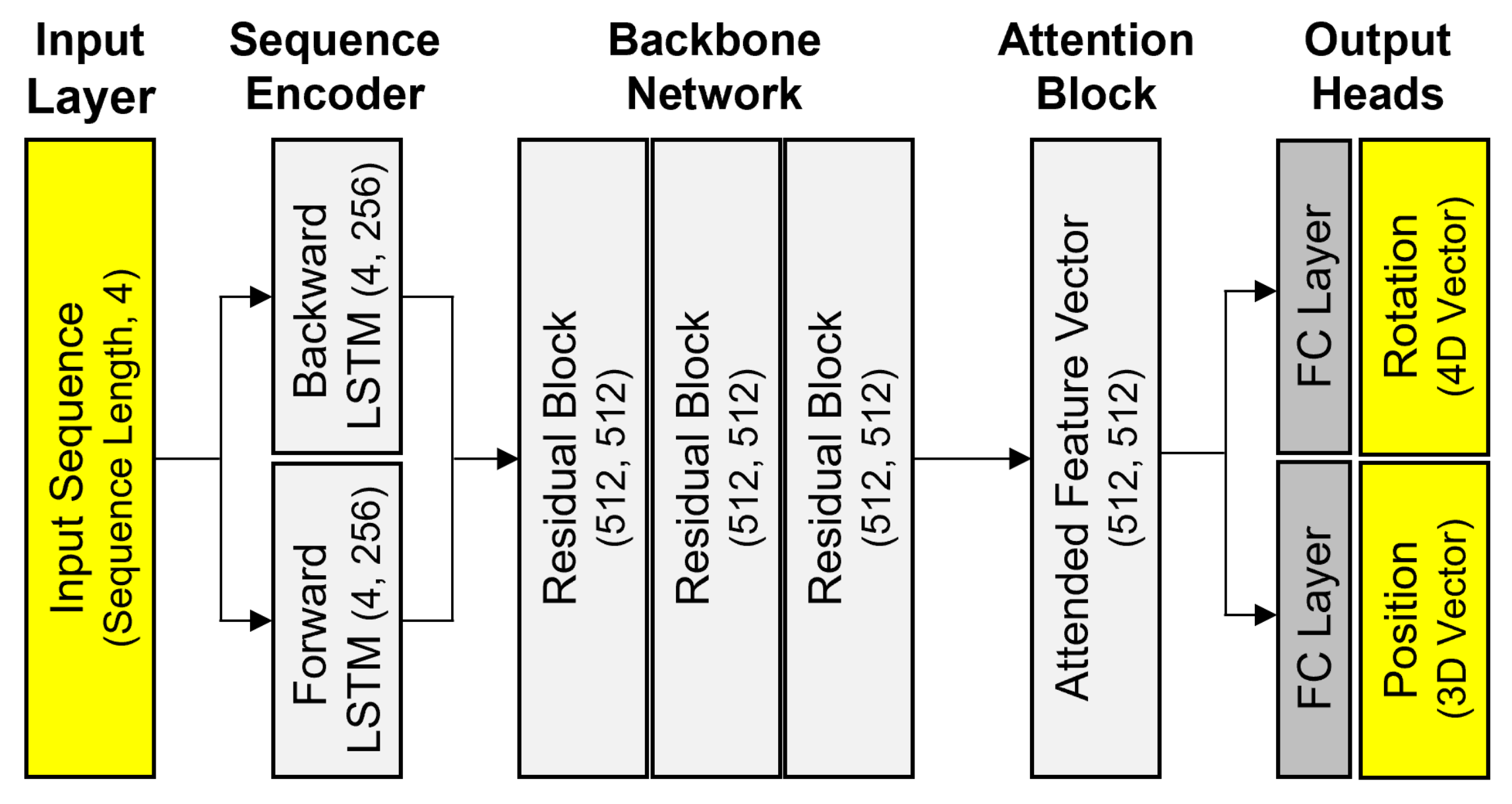

In this study, to model the complex non-linear relationship between the Pan-Tilt actuator's joint angles and the camera's 6-DOF pose, we propose a novel deep learning architecture combining LSTM, which has strengths in sequential data processing, with an Attention Mechanism. The overall architecture of the proposed model is illustrated in Figure 3. It consists of a bidirectional LSTM layer that extracts sequential features from the input data, residual blocks that deepen the expressive power of the extracted features, an attention block that re-weights the importance of features, and multi-task learning heads that independently predict position and rotation. The components are designed together to maximize prediction accuracy and generalization performance.

2.3.1. Model Architecture Overview

The data processing flow of the proposed model is as follows. First, the 4-dimensional trigonometrically encoded input vector is expanded into 2nd-degree polynomial features to represent non-linear interactions between the input features. This expanded feature vector is then arranged into a sequence and passed to the bidirectional LSTM layer. The bidirectional LSTM learns both the forward and backward contexts of the input sequence and produces a fixed-size feature vector that summarizes the sequence. This feature vector passes through a backbone of several residual blocks, which transform it into more refined features while mitigating the vanishing gradient problem of deep networks. Next, a self-attention mechanism computes and applies weights based on the inter-relationships among the elements of this feature vector. Finally, the attention-weighted feature vector is fed into two independent fully-connected heads that predict the position and the rotation, respectively.

2.3.2. Sequential Feature Extraction with Bidirectional LSTM and Residual Blocks

Since the actuator's movement is continuous over time, this study adopted LSTM, a type of Recurrent Neural Network (RNN), to learn this time-series dependency [11]. In particular, because the camera pose at the current time step can be influenced not only by previous angles but also by subsequent angle changes, a Bidirectional LSTM (Bi-LSTM) was used instead of a unidirectional one. Bi-LSTM processes the input sequence in both the forward and backward directions and generates the final features by concatenating the hidden states of the two directions. For an input sequence $X = (\mathbf{x}_1, \ldots, \mathbf{x}_L) \in \mathbb{R}^{L \times d}$ with sequence length $L$ and input feature dimension $d$, the Bi-LSTM computes a forward hidden state $\overrightarrow{\mathbf{h}}_t$ and a backward hidden state $\overleftarrow{\mathbf{h}}_t$ at each time step $t$:

$$ \overrightarrow{\mathbf{h}}_t = \mathrm{LSTM}_{\mathrm{fwd}}(\mathbf{x}_t, \overrightarrow{\mathbf{h}}_{t-1}), \qquad \overleftarrow{\mathbf{h}}_t = \mathrm{LSTM}_{\mathrm{bwd}}(\mathbf{x}_t, \overleftarrow{\mathbf{h}}_{t+1}) $$

This model stacks two LSTM layers; the final forward hidden state ($\overrightarrow{\mathbf{h}}_L$) and the final backward hidden state ($\overleftarrow{\mathbf{h}}_1$) of the last layer are concatenated into a feature vector $\mathbf{h} = [\overrightarrow{\mathbf{h}}_L; \overleftarrow{\mathbf{h}}_1]$ that compresses the information of the entire sequence.
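To show the Bi-LSTM bookkeeping explicitly, the following NumPy sketch runs a two-layer bidirectional LSTM forward pass with random, untrained weights and returns $[\overrightarrow{\mathbf{h}}_L; \overleftarrow{\mathbf{h}}_1]$ from the last layer. The gate ordering, weight initialization, helper names, and sizes ($L = 5$, $d = 3$, hidden size 8) are assumptions for illustration; this is not the authors' implementation and performs no training.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_lstm(rng, d_in, hidden):
    """Random weights for one LSTM direction; gate order (i, f, g, o) is an assumption."""
    W = rng.normal(0.0, 0.1, size=(4 * hidden, d_in))    # input-to-gates
    U = rng.normal(0.0, 0.1, size=(4 * hidden, hidden))  # hidden-to-gates
    b = np.zeros(4 * hidden)
    return W, U, b

def run_lstm(X, params, reverse=False):
    """Run one LSTM direction over X (L, d_in); return per-step states and the final state."""
    W, U, b = params
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    states = np.zeros((len(X), H))
    order = range(len(X) - 1, -1, -1) if reverse else range(len(X))
    for t in order:
        z = W @ X[t] + U @ h + b
        i, f = sigmoid(z[:H]), sigmoid(z[H:2 * H])
        g, o = np.tanh(z[2 * H:3 * H]), sigmoid(z[3 * H:])
        c = f * c + i * g          # cell state update
        h = o * np.tanh(c)         # hidden state
        states[t] = h
    return states, h

def bilstm_encode(X, layers):
    """Stacked Bi-LSTM; returns [h_fwd at t = L ; h_bwd at t = 1] of the last layer."""
    inp = X
    for fwd, bwd in layers:
        out_f, h_f = run_lstm(inp, fwd)
        out_b, h_b = run_lstm(inp, bwd, reverse=True)
        inp = np.concatenate([out_f, out_b], axis=1)  # per-step input to the next layer
    return np.concatenate([h_f, h_b])

rng = np.random.default_rng(0)
L, d, H = 5, 3, 8                     # hypothetical sizes
layers = [(init_lstm(rng, d, H), init_lstm(rng, d, H)),
          (init_lstm(rng, 2 * H, H), init_lstm(rng, 2 * H, H))]
X = rng.normal(size=(L, d))           # hypothetical input sequence
feature = bilstm_encode(X, layers)
print(feature.shape)                  # (16,) = 2 * H
```

The backward direction finishes at the first time step, which is why its final state is $\overleftarrow{\mathbf{h}}_1$ rather than $\overleftarrow{\mathbf{h}}_L$.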

This vector $\mathbf{h}$ then passes through a backbone network of residual blocks. A residual block adds its input signal directly to its output via a skip connection, which helps keep training stable as the network deepens [19]. This structure allows the model to extract deeper and richer features from the time-series information.
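A minimal NumPy sketch of one residual block follows, assuming the common form $\mathbf{y} = \mathrm{ReLU}(\mathbf{x} + F(\mathbf{x}))$ with $F$ a two-layer perceptron; the exact layer composition of the paper's blocks is not specified, and the width of 16 is hypothetical. Zeroing the residual branch shows that the block then passes its (non-negative) input through unchanged.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, W1, b1, W2, b2):
    """y = ReLU(x + F(x)) with F a two-layer MLP (one common residual variant)."""
    F = W2 @ relu(W1 @ x + b1) + b2   # residual branch
    return relu(x + F)                 # skip connection adds the input back

rng = np.random.default_rng(1)
d = 16                                 # hypothetical feature width
x = np.abs(rng.normal(size=d))         # non-negative input, e.g. a post-ReLU feature
W1, b1 = rng.normal(0, 0.1, size=(d, d)), np.zeros(d)

# With the residual branch zeroed out, the block reduces to the identity on x.
y_identity = residual_block(x, W1, b1, np.zeros((d, d)), np.zeros(d))
y = residual_block(x, W1, b1, rng.normal(0, 0.1, size=(d, d)), np.zeros(d))
print(np.allclose(y_identity, x), y.shape)
```

This identity path is what the skip connection contributes: the branch $F$ only has to learn a correction to $\mathbf{x}$, and gradients can flow through the addition directly.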

2.3.3. Attention Mechanism for Feature Refinement

The feature vector output by the residual blocks is passed to the attention block for further refinement. The attention mechanism is based on the idea that not all elements of a feature vector are equally important; it dynamically assigns higher weights to the elements that have a more decisive impact on the final prediction [12]. This model adopts a Multi-Head Self-Attention structure, in which the query, key, and value are all derived from the same input feature vector [20]. By dividing the feature vector across multiple heads and performing attention in parallel, the model can learn the inter-relationships among features from different perspectives.

When the input feature vector is denoted as $\mathbf{z}$, the attention scores are computed as the dot product of the query and key, scaled, and converted into weights $A$ through a softmax function. The attention-applied feature vector $\mathbf{z}'$ is then obtained as the weighted sum of the value vectors, following the standard scaled dot-product attention formula [20]:

$$ \mathbf{z}' = \mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^\top}{\sqrt{d_k}}\right) V $$

Here, $Q$, $K$, and $V$ are all generated from $\mathbf{z}$ through linear transformations, and $d_k$ is the dimension of the key vectors. Through this process, the model learns which feature combinations matter most in the complex mapping between the Pan-Tilt angles and the camera pose, suppressing features unnecessary for prediction and focusing on the core ones to improve accuracy.
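The scaled dot-product formula above, applied across several heads, can be sketched in NumPy as follows. How the feature vector is split into attention tokens is not detailed here, so `X` is treated as a hypothetical set of $n = 6$ feature tokens of width 16 with 4 heads, and the random projection matrices stand in for learned weights.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, batched over leading (head) axes."""
    d_k = K.shape[-1]
    A = softmax(Q @ np.swapaxes(K, -1, -2) / np.sqrt(d_k), axis=-1)
    return A @ V, A

def multi_head_self_attention(X, Wq, Wk, Wv, Wo, num_heads):
    """Self-attention: Q, K, V are all linear projections of the same input X (n, d)."""
    n, d = X.shape
    d_h = d // num_heads
    def split(M):                              # (n, d) -> (heads, n, d_h)
        return M.reshape(n, num_heads, d_h).transpose(1, 0, 2)
    heads, A = scaled_dot_product_attention(split(X @ Wq), split(X @ Wk), split(X @ Wv))
    merged = heads.transpose(1, 0, 2).reshape(n, d)   # concatenate the heads
    return merged @ Wo, A

rng = np.random.default_rng(2)
n, d, h = 6, 16, 4                             # hypothetical token count, width, heads
X = rng.normal(size=(n, d))
Wq, Wk, Wv, Wo = (rng.normal(0, d ** -0.5, size=(d, d)) for _ in range(4))
out, A = multi_head_self_attention(X, Wq, Wk, Wv, Wo, num_heads=h)
print(out.shape, A.shape)                      # (6, 16) (4, 6, 6)
```

Each row of `A` is a probability distribution over the tokens, so every output is a convex combination of value vectors, which is the re-weighting the paragraph above describes.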

2.3.4. Multi-Task Learning for Pose Prediction

Finally, the attention-refined feature vector $\mathbf{z}'$ is fed into two independent branches to predict the 6-DOF pose. This Multi-Task Learning structure simultaneously learns two sub-tasks with different physical and mathematical characteristics: position (3D vector) and rotation (4D quaternion) [21]. Instead of predicting all variables at once with a single large output layer, separate task-specific heads are used so that the model learns the characteristics of each task more effectively.

The position prediction head is a fully-connected layer that takes $\mathbf{z}'$ as input and outputs a 3D position vector $\hat{\mathbf{t}}$. This head is trained to minimize the Mean Squared Error (MSE) between the predicted position vector $\hat{\mathbf{t}}$ and the ground-truth position vector $\mathbf{t}$:

$$ \mathcal{L}_{pos} = \frac{1}{N} \sum_{i=1}^{N} \lVert \hat{\mathbf{t}}_i - \mathbf{t}_i \rVert_2^2 $$

Here, $N$ is the batch size, and $\lVert \cdot \rVert_2^2$ denotes the squared Euclidean distance.

The rotation prediction head outputs a 4D quaternion $\hat{\mathbf{q}}$. Since a rotation quaternion must be a unit vector, the prediction is L2-normalized to unit magnitude before the loss is computed. To measure the rotation error, this study used a Geodesic Loss, which directly computes the angular difference between two unit quaternions [18]. The dot product of the normalized predicted quaternion $\hat{\mathbf{q}}$ and the ground-truth quaternion $\mathbf{q}$ is computed, and its absolute value is taken because $\mathbf{q}$ and $-\mathbf{q}$ represent the same rotation. This value is then passed through the arc-cosine function to obtain the shortest angle (in radians) between the two quaternions:

$$ \mathcal{L}_{rot} = \frac{1}{N} \sum_{i=1}^{N} \arccos\!\left( \left| \langle \hat{\mathbf{q}}_i, \mathbf{q}_i \rangle \right| \right) $$

In this multi-task learning structure, the two losses are combined as a weighted sum, with the hyper-parameter $\lambda$ weighting the rotation loss, to form the model's final loss $\mathcal{L}_{total}$:

$$ \mathcal{L}_{total} = \mathcal{L}_{pos} + \lambda \, \mathcal{L}_{rot} $$

This integrated loss function is used to update all parameters of the model through back-propagation, and it provides a regularization effect that encourages the shared backbone network to learn generalized features useful for both the position and rotation tasks, contributing to an improvement in overall prediction performance.
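The following NumPy sketch implements the position loss, the geodesic rotation loss, and their weighted sum for a batch, with $\lambda = 0.5$ chosen purely for illustration. The quaternion component order does not affect the loss as long as predictions and targets use the same order; the examples below use scalar-first only for readability.

```python
import numpy as np

def position_loss(t_pred, t_true):
    """L_pos: mean over the batch of squared Euclidean distances."""
    return np.mean(np.sum((t_pred - t_true) ** 2, axis=1))

def geodesic_loss(q_pred, q_true):
    """L_rot: mean arccos(|<q_hat, q>|) with q_hat the L2-normalized prediction."""
    q_hat = q_pred / np.linalg.norm(q_pred, axis=1, keepdims=True)
    dot = np.abs(np.sum(q_hat * q_true, axis=1))   # |.| removes the q / -q ambiguity
    dot = np.clip(dot, 0.0, 1.0)                   # guard arccos against round-off above 1
    return np.mean(np.arccos(dot))

def total_loss(t_pred, t_true, q_pred, q_true, lam):
    """L_total = L_pos + lambda * L_rot."""
    return position_loss(t_pred, t_true) + lam * geodesic_loss(q_pred, q_true)

t_true = np.array([[1.0, 2.0, 2.0]])
t_pred = np.array([[1.0, 2.0, 3.0]])             # 1 unit off along z -> L_pos = 1
q_true = np.array([[1.0, 0.0, 0.0, 0.0]])        # identity (scalar-first here)
q_flip = np.array([[-2.0, 0.0, 0.0, 0.0]])       # unnormalized, sign-flipped identity
q_90x = np.array([[np.cos(np.pi / 4), np.sin(np.pi / 4), 0.0, 0.0]])  # 90 deg about x

print(geodesic_loss(q_flip, q_true))   # 0.0: same rotation
print(geodesic_loss(q_90x, q_true))    # pi/4: half of the 90 deg rotation angle
print(total_loss(t_pred, t_true, q_90x, q_true, lam=0.5))
```

Note that $\arccos(|\langle \hat{\mathbf{q}}, \mathbf{q} \rangle|)$ is half of the relative rotation angle between the two orientations, so a 90-degree error yields $\pi/4$; doubling it would express the loss as a rotation angle, which is equivalent to doubling $\lambda$. In a differentiable implementation, clipping slightly below 1 avoids the unbounded gradient of arccos at 1.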

2.4. Baseline Models

To objectively validate the performance of the proposed LSTM–Attention model, three classic algorithms widely used in the machine learning field were selected as baseline models. These models were trained and evaluated using data that underwent the same preprocessing steps as the proposed model. Specifically, the 4-dimensional input vector with trigonometric encoding applied was used as the input for all baseline models.

Furthermore, the 7-dimensional output vector (three position components and four quaternion components) was standardized using StandardScaler to improve the stability and efficiency of training [22]. StandardScaler transforms each output variable to zero mean and unit variance. When output variables have different scales, this prevents the error of any single variable from dominating the overall loss and helps the model learn all outputs in a balanced manner. The model's predictions were transformed back to the original scale for the final performance evaluation.

Since some baseline models, SVR in particular, are inherently designed for single-output prediction, the MultiOutputRegressor wrapper from the Scikit-learn library was applied uniformly to all baselines for the multi-output prediction problem [22]. MultiOutputRegressor solves multi-target regression by training one independent model per output variable. For example, to predict 7 output variables, 7 independent SVR models are created, each trained to predict only one output. This approach is simple to implement but cannot learn potential correlations between output variables.
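A sketch of this baseline output handling, assuming scikit-learn: `TransformedTargetRegressor` standardizes the 7-D targets with `StandardScaler` before fitting and inverse-transforms predictions automatically, while `MultiOutputRegressor` trains one SVR per output column. Whether the original experiments used `TransformedTargetRegressor` or applied the scaler manually is not stated; the data here are synthetic placeholders on deliberately different scales.

```python
import numpy as np
from sklearn.compose import TransformedTargetRegressor
from sklearn.multioutput import MultiOutputRegressor
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

rng = np.random.default_rng(3)
angles = rng.uniform(0, 2 * np.pi, size=(300, 2))            # synthetic (pan, tilt)
X = np.column_stack([np.sin(angles[:, 0]), np.cos(angles[:, 0]),
                     np.sin(angles[:, 1]), np.cos(angles[:, 1])])
# Synthetic 7-D targets on deliberately different scales (placeholders, not real poses).
Y = np.column_stack([100 * X[:, 0], 10 * X[:, 1], X[:, 2], X[:, 3],
                     0.1 * X[:, 0] * X[:, 2], X[:, 1] ** 2, 0.5 * X[:, 3]])

model = TransformedTargetRegressor(
    regressor=MultiOutputRegressor(SVR(kernel="rbf")),   # one SVR per output column
    transformer=StandardScaler(),                        # standardize Y; invert on predict
)
model.fit(X, Y)
Y_hat = model.predict(X)          # already mapped back to the original scale
print(len(model.regressor_.estimators_), Y_hat.shape)
```

Without the scaler, the first column (range about ±100) would dominate any squared-error comparison, and SVR's fixed `epsilon=0.1` tube would mean very different tolerances for each output.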

2.4.1. Polynomial Regression

Polynomial Regression is a statistical technique that models non-linear relationships by assuming that the relationship between the independent and dependent variables is an n-th-degree polynomial [13]. Whereas linear regression can only express linear relationships, polynomial regression can learn curved patterns by creating new features from powers and products of the input features and fitting a linear regression model to them. It is simple to implement and computationally cheap, so it is often used as a basic baseline for gauging the performance of more complex models. However, if the degree is too high the model can overfit the training data, and if it is too low it can underfit, failing to capture the complexity of the data.

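The mathematical model of Polynomial Regression begins by generating an expanded feature vector $\boldsymbol{\phi}(\mathbf{x})$ for an input feature vector $\mathbf{x}$. For example, expanding two features $\mathbf{x} = (x_1, x_2)$ into a 2nd-degree polynomial gives $\boldsymbol{\phi}(\mathbf{x}) = (x_1, x_2, x_1^2, x_1 x_2, x_2^2)$. The predicted value $\hat{y}$ is calculated as a linear combination of this expanded feature vector and a weight vector $\mathbf{w}$:

$$ \hat{y} = \mathbf{w}^\top \boldsymbol{\phi}(\mathbf{x}) + b = \sum_{j=1}^{M} w_j \, \phi_j(\mathbf{x}) + b $$

Here, $M$ is the total number of expanded features, and the model seeks the weights $\mathbf{w}$ and bias $b$ that minimize the loss function (typically MSE).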

In this study, the four input features were expanded into a 3rd-degree polynomial using PolynomialFeatures from the Scikit-learn library. This creates a high-dimensional feature space containing the original features together with their powers and interaction terms. A standard Linear Regression model using these expanded features as input was trained to predict the seven output variables, with the MultiOutputRegressor wrapper training an independent polynomial regression model for each output and thereby enabling simultaneous multi-dimensional prediction.
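The degree-3 polynomial baseline can be sketched with scikit-learn as a `PolynomialFeatures` plus `LinearRegression` pipeline wrapped in `MultiOutputRegressor`. The synthetic inputs and the three cubic target columns are placeholders (the study predicts seven outputs); because they are exactly representable at degree 3, the fit is essentially perfect.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.multioutput import MultiOutputRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

rng = np.random.default_rng(4)
X = rng.uniform(-1, 1, size=(200, 4))            # stand-in for the 4 encoded inputs
# Synthetic cubic targets (placeholders), exactly representable at degree 3.
Y = np.column_stack([X[:, 0] ** 3 - 2 * X[:, 1] * X[:, 2],
                     X[:, 3] ** 2 + X[:, 0],
                     X[:, 1] * X[:, 2] * X[:, 3]])

poly_reg = MultiOutputRegressor(
    make_pipeline(PolynomialFeatures(degree=3), LinearRegression())
)
poly_reg.fit(X, Y)

n_feats = poly_reg.estimators_[0].named_steps["polynomialfeatures"].n_output_features_
print(n_feats, round(poly_reg.score(X, Y), 6))   # 35 expanded features, R^2 ~ 1.0
```

For four inputs, the degree-3 expansion has $\binom{4+3}{3} = 35$ terms including the constant column, which shows how quickly the feature space grows with the degree.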

2.4.2. Support Vector Regression

SVR extends the principles of Support Vector Machines, which perform well in classification, to regression problems [14]. The core idea of SVR is to define an $\epsilon$-insensitive tube that tolerates a certain level of error between the predicted and actual values. Errors of data points inside this tube are ignored in the loss, and the model is trained to minimize only the errors of points that fall outside the tube. At the same time, the model minimizes the norm of its weight vector, which corresponds to margin maximization in classification and keeps the regression function as flat as possible. This makes SVR less sensitive to small noise in the data and helps it generalize well.

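SVR controls model complexity by minimizing the L2-norm of the weight vector $\mathbf{w}$ and penalizes errors exceeding the $\epsilon$-tube through slack variables $\xi_i, \xi_i^*$. The objective function is:

$$ \min_{\mathbf{w}, b, \boldsymbol{\xi}, \boldsymbol{\xi}^*} \; \frac{1}{2} \lVert \mathbf{w} \rVert^2 + C \sum_{i=1}^{N} (\xi_i + \xi_i^*) $$

This objective is minimized under the following constraints:

$$ \begin{aligned} y_i - \mathbf{w}^\top \phi(\mathbf{x}_i) - b &\le \epsilon + \xi_i, \\ \mathbf{w}^\top \phi(\mathbf{x}_i) + b - y_i &\le \epsilon + \xi_i^*, \\ \xi_i, \, \xi_i^* &\ge 0, \quad i = 1, \ldots, N. \end{aligned} $$

Here, $C$ is the regularization parameter, which balances model complexity against the tolerated training error, and $\phi(\cdot)$ is the kernel-induced feature mapping that maps the input data into a high-dimensional feature space.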

This optimization problem is typically solved by converting it into its dual via Lagrangian duality, with the Lagrange multipliers $\alpha_i, \alpha_i^*$ as variables. From the dual solution, the weight vector can be expressed as a linear combination of the mapped input data: $\mathbf{w} = \sum_{i=1}^{N} (\alpha_i - \alpha_i^*) \, \phi(\mathbf{x}_i)$. The data points $\mathbf{x}_i$ for which $(\alpha_i - \alpha_i^*)$ is non-zero are called support vectors. Using the Kernel Trick, SVR can model non-linear relationships without explicitly transforming the input data into a high-dimensional space. This study used the Radial Basis Function (RBF) kernel, which is highly effective for modeling non-linear relationships:

$$ K(\mathbf{x}_i, \mathbf{x}_j) = \exp\!\left( -\gamma \lVert \mathbf{x}_i - \mathbf{x}_j \rVert^2 \right) $$

Substituting the dual representation of $\mathbf{w}$ into the prediction function $f(\mathbf{x}) = \mathbf{w}^\top \phi(\mathbf{x}) + b$ and applying the kernel trick gives the final prediction function. Once training is complete, the prediction for a new input $\mathbf{x}$ is made as follows, where only the support vectors (those with $\alpha_i - \alpha_i^* \neq 0$) contribute:

$$ f(\mathbf{x}) = \sum_{i=1}^{N} (\alpha_i - \alpha_i^*) \, K(\mathbf{x}_i, \mathbf{x}) + b $$

The overall training and prediction process of SVR is shown in Algorithm 1.

In this study, SVR with the RBF kernel was adopted as a baseline model to learn the complex non-linear relationship between the Pan-Tilt angles and the camera pose. The main hyper-parameters were set to the Scikit-learn defaults: the regularization parameter $C = 1.0$ and $\epsilon = 0.1$, which determines the width of the $\epsilon$-insensitive tube. Through MultiOutputRegressor, an SVR model was trained independently for each of the 7 output variables to perform multi-dimensional pose prediction.

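| Algorithm 1 Support Vector Regression (SVR) Process |

- 1: Input: Training data $D = \{(\mathbf{x}_i, y_i)\}_{i=1}^{N}$, hyperparameters $C$, $\epsilon$, $\gamma$
- 2: Training Phase:
- 3: Solve the optimization problem defined in Equation (13) to find the Lagrange multipliers $\alpha_i, \alpha_i^*$ and the bias $b$.
- 4: Identify the support vectors (data points where $\alpha_i - \alpha_i^* \neq 0$).
- 5: Output: The prediction model $f(\mathbf{x})$.
- 6: Prediction Phase:
- 7: Input: A new data point $\mathbf{x}$.
- 8: Compute the predicted value using the support vectors and the learned parameters: $\hat{y} = \sum_{i \in \mathcal{S}} (\alpha_i - \alpha_i^*) \, K(\mathbf{x}_i, \mathbf{x}) + b$, where $\mathcal{S}$ is the set of support vectors.
- 9: Return $\hat{y}$.

|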
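To connect the dual-form prediction function with scikit-learn's SVR, the following sketch fits an RBF SVR to synthetic data and recomputes one prediction by hand from `support_vectors_`, `dual_coef_` (the per-support-vector coefficients corresponding to $\alpha_i - \alpha_i^*$), and `intercept_`. The kernel coefficient is fixed at $\gamma = 0.5$ so that it appears explicitly (scikit-learn's default is `gamma="scale"`); the data and the helper name `svr_predict` are placeholders.

```python
import numpy as np
from sklearn.svm import SVR

rng = np.random.default_rng(5)
X = rng.uniform(-1, 1, size=(150, 4))               # synthetic inputs
y = np.sin(3 * X[:, 0]) + 0.5 * X[:, 1] * X[:, 2]   # synthetic single-output target

gamma = 0.5                                          # fixed so the kernel is explicit
svr = SVR(kernel="rbf", C=1.0, epsilon=0.1, gamma=gamma).fit(X, y)

def svr_predict(x, support_vectors, dual_coef, intercept, gamma):
    """f(x) = sum_i (alpha_i - alpha_i^*) K(x_i, x) + b over support vectors only."""
    sq_dist = np.sum((support_vectors - x) ** 2, axis=1)
    k = np.exp(-gamma * sq_dist)                     # RBF kernel values K(x_i, x)
    return k @ dual_coef + intercept

x_new = np.array([0.2, -0.4, 0.1, 0.7])
manual = svr_predict(x_new, svr.support_vectors_, svr.dual_coef_.ravel(),
                     svr.intercept_[0], gamma)
print(len(svr.support_), manual, svr.predict(x_new[None, :])[0])
```

Only the stored support vectors enter the sum, so prediction cost grows with their number rather than with the full training set size.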

2.4.3. Random Forest

Random Forest is an ensemble learning technique that builds multiple Decision Trees during training and derives the final prediction by averaging the predictions of the individual trees [15]. Its two core features are Bagging (Bootstrap Aggregating) and feature randomness. Bagging trains each tree independently on its own bootstrap sample, drawn by random sampling with replacement from the entire training dataset. In addition, when searching for the best split at each node, the tree considers only a randomly selected subset of the features rather than all of them.

These two randomness factors serve to reduce the correlation between individual trees. Consequently, this mitigates the high variance problem that a single decision tree can have and significantly reduces the model’s tendency to overfit the training data. By averaging the prediction results of numerous trees, Random Forest can build a predictive model that is robust to noise, stable, and highly accurate. The training and prediction process of Random Forest is shown in Algorithm 2.

| Algorithm 2 Random Forest Algorithm |
|---|
| 1: for $b = 1$ to $B$ (number of trees) do |
| 2: Draw a bootstrap sample $D_b$ of size $N$ from the training data $D$. |
| 3: Grow a regression tree $T_b$ on $D_b$ by recursively repeating the following steps for each node until the stopping criterion (e.g., max_depth) is met: (i) select $m$ features at random from the full set of $p$ features; (ii) pick the best feature and split point among the $m$ features; (iii) split the node into two child nodes. |
| 4: end for |
| 5: Output: The ensemble of trees $\{T_b\}_{b=1}^{B}$. |
| 6: Prediction: For a new input $\mathbf{x}$, the prediction is the average of all individual tree predictions: $\hat{f}(\mathbf{x}) = \frac{1}{B}\sum_{b=1}^{B} T_b(\mathbf{x})$. |
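Algorithm 2 can be sketched by hand on top of scikit-learn's `DecisionTreeRegressor`. This is an assumption; the study does not specify its implementation. The bootstrap draw implements bagging. The tree's `max_features` argument implements the per-split random choice of $m$ features. The helper names, the data, and the choice $m = 1$ are hypothetical.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_random_forest(X, y, n_trees=100, max_depth=10, m_features=None, seed=0):
    """Steps 1-5 of Algorithm 2: bagging plus per-split feature subsampling."""
    rng = np.random.default_rng(seed)
    n_samples = X.shape[0]
    trees = []
    for _ in range(n_trees):  # Step 1: for b = 1 to B
        # Step 2: bootstrap sample D_b of size N, drawn with replacement.
        idx = rng.integers(0, n_samples, size=n_samples)
        # Step 3: grow T_b; max_features=m makes each split consider m random
        # features, and max_depth acts as the stopping criterion.
        tree = DecisionTreeRegressor(
            max_depth=max_depth,
            max_features=m_features,
            random_state=int(rng.integers(0, 2**31 - 1)),
        )
        trees.append(tree.fit(X[idx], y[idx]))
    return trees  # Step 5: the ensemble {T_b}


def predict_random_forest(trees, X):
    """Step 6: average the predictions of all individual trees."""
    return np.mean([tree.predict(X) for tree in trees], axis=0)


# Hypothetical example: 2 joint angles (radians) -> one pose output.
rng = np.random.default_rng(1)
X = rng.uniform(-1.0, 1.0, size=(300, 2))
y = np.sin(2.0 * X[:, 0]) + 0.5 * X[:, 1] ** 2
trees = fit_random_forest(X, y, n_trees=100, max_depth=10, m_features=1)
print(predict_random_forest(trees, X[:3]), y[:3])
```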

In this experiment, a total of 100 decision trees were generated. To prevent overfitting of individual trees and to control model complexity, the maximum depth of each tree was limited to max_depth = 10. As with the other baseline models, a Multi Output Regressor was used to train an independent Random Forest model for each of the seven output variables. Each output variable is therefore predicted by its own ensemble of trees, fitted specifically to that output.
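The baseline configuration described above might look as follows, assuming scikit-learn. `n_estimators=100` and `max_depth=10` come from the text. `random_state` and the synthetic data are illustrative additions.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.multioutput import MultiOutputRegressor

# Settings stated in the text: 100 trees, max_depth = 10, one forest per output.
# random_state is an illustrative addition for reproducibility.
rf_baseline = MultiOutputRegressor(
    RandomForestRegressor(n_estimators=100, max_depth=10, random_state=0)
)

# Hypothetical data: 2 joint angles (pan, tilt) -> 7 pose outputs.
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(300, 2))
Y = np.column_stack([np.cos(k * X[:, 0]) * np.sin(X[:, 1] + k) for k in range(7)])
rf_baseline.fit(X, Y)

print(len(rf_baseline.estimators_))      # 7: one forest per output variable
print(rf_baseline.predict(X[:2]).shape)  # (2, 7)
```

scikit-learn's `RandomForestRegressor` can also fit all seven outputs at once with shared trees. Wrapping it in `MultiOutputRegressor` instead yields seven independent forests, which matches the per-output training described here.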

4. Discussion

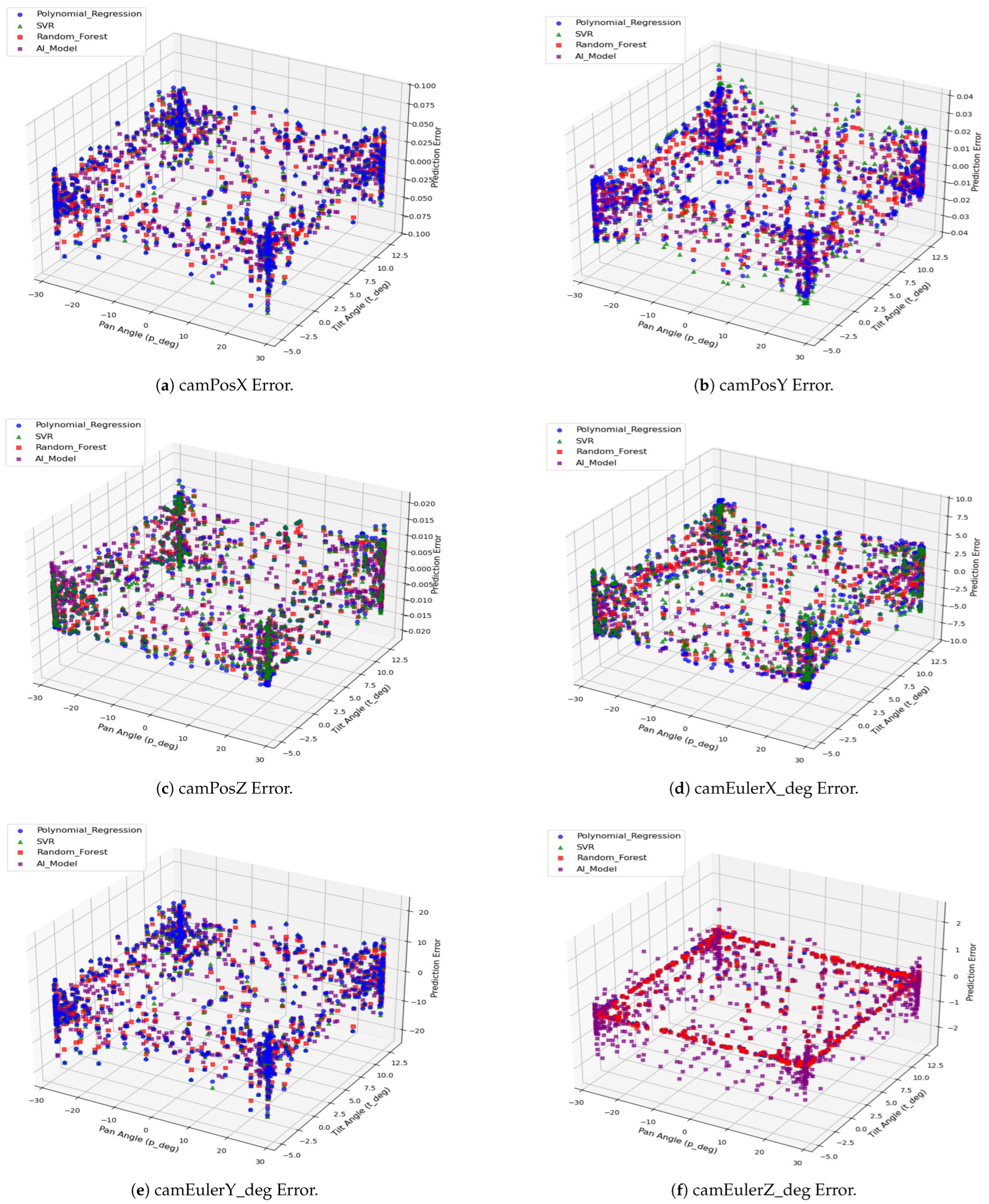

The experimental results presented in Section 3 demonstrate that the proposed LSTM–Attention model is the most effective of the compared approaches for solving the forward kinematics problem of RSAR systems. Both the quantitative results in Table 3 and the qualitative error distribution in Figure 4 show that the proposed model is markedly more accurate and stable than the baseline models. This gap indicates that simpler, traditional methods (e.g., lightweight regression) have clear limitations and are insufficient for this task; in this study, that category is represented by the Polynomial Regression and SVR baselines. We attribute the performance difference to fundamental differences in the models’ architectures and learning strategies. Polynomial Regression and SVR had clear difficulty capturing the complex non-linearity of the data. Random Forest performed respectably, but it did not match the proposed model’s consistency (low Std) or its ability to suppress outliers. We interpret this as evidence that the bidirectional LSTM layer effectively learns the time-series dependency of the pose on continuously changing Pan-Tilt angles, while the attention mechanism improves prediction accuracy by emphasizing the features most relevant to the prediction.

The key insight of this study comes from the component-wise 6-DOF error analysis in Figure 5, particularly Figure 5f, which shows the Z-axis rotation (camEulerZ_deg) error. For position error (a–c), all models tend to show larger errors near the boundaries of the operating range. Even so, the error of the proposed model (purple crosses) generally stays close to 0 within a very narrow band. In contrast, Polynomial Regression (blue circles) and SVR (green triangles) frequently produce spike-shaped errors, where predictions deviate sharply in specific regions, revealing the instability of their predictions. For rotation error (d–f), the proposed model achieves a markedly lower error distribution than the baseline models for X-axis (d) and Y-axis (e) rotation. Figure 5f, however, shows the opposite result for Z-axis rotation. The baseline models, including Random Forest (red squares), show errors close to 0, whereas the error of the proposed model (purple crosses) is more widely distributed and is the largest of all models. This phenomenon stems from a fundamental difference in how the baseline models and the proposed model are trained.

As described in Section 2.4, the baseline models were trained to predict each of the seven outputs independently, including the Z-axis rotation. Because of the characteristics of the Pan-Tilt simulation system used in this study, the Z-axis rotation (camEulerZ) is close to 0 across the entire dataset, so the baseline models are easily optimized to predict a Z-axis value close to 0. In contrast, as defined in Section 2.3.4, the proposed model uses a multi-task learning scheme that treats the three rotation axes jointly and minimizes the overall geodesic distance between quaternions (Equation (20)). The proposed model is therefore trained to minimize the error of the full 3D rotation, including the X and Y axes, even if this leaves some individual error in the Z-axis. The result in Figure 5f thus arises from a peculiarity of the current simulation data, in which the Z-axis rotation, and hence the baselines’ Z-axis error, is close to 0. It should be interpreted in light of Table 3, which already demonstrated that the proposed model achieves the most accurate and robust overall pose estimation in 3D space.
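Equation (20) is not reproduced in this section. A common form of the geodesic distance between unit quaternions $\mathbf{q}$ and $\hat{\mathbf{q}}$ is $\theta = 2\arccos\left(\left|\langle \mathbf{q}, \hat{\mathbf{q}} \rangle\right|\right)$, the angle of the relative rotation between them. Assuming that form, the NumPy sketch below shows that a single scalar summarizes the error of the whole 3D rotation. A 2° discrepancy about Z alone yields a geodesic error of 2°, and there is no separate per-axis term for the optimizer to drive to 0. The helper names are hypothetical.

```python
import numpy as np


def quat_from_axis_angle(axis, angle_deg):
    """Unit quaternion (w, x, y, z) for a rotation of angle_deg about axis."""
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    half = np.radians(angle_deg) / 2.0
    return np.concatenate([[np.cos(half)], np.sin(half) * axis])


def quat_mul(a, b):
    """Hamilton product a * b of two quaternions in (w, x, y, z) order."""
    w1, x1, y1, z1 = a
    w2, x2, y2, z2 = b
    return np.array([
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    ])


def geodesic_deg(q1, q2):
    """Angle (degrees) of the relative rotation between two quaternions."""
    q1 = q1 / np.linalg.norm(q1)
    q2 = q2 / np.linalg.norm(q2)
    # abs() handles the double cover (q and -q encode the same rotation);
    # clip() keeps arccos inside its domain despite round-off.
    dot = np.clip(abs(np.dot(q1, q2)), 0.0, 1.0)
    return np.degrees(2.0 * np.arccos(dot))


# Hypothetical ground truth: 30 deg tilt about Y; the prediction adds a 2 deg Z error.
q_true = quat_from_axis_angle([0.0, 1.0, 0.0], 30.0)
q_pred = quat_mul(q_true, quat_from_axis_angle([0.0, 0.0, 1.0], 2.0))
print(geodesic_deg(q_true, q_pred))   # approximately 2.0
print(geodesic_deg(q_true, -q_true))  # approximately 0.0
```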

This study focused on verifying the ideal performance of the model using simulation data. We acknowledge that this simulation-only approach is a significant limitation, as it does not capture the complexities of a physical system. Future research must therefore prioritize verifying the model’s robustness and applicability with data collected from actual RSAR hardware. This validation must address critical factors omitted in this study, such as measurement noise from physical sensors, calibration errors, and mechanical backlash inherent in the actuators. Such real-world noise is expected to degrade prediction accuracy. Closing this sim-to-real gap will likely require adaptation strategies. For example, transfer learning could fine-tune the simulation-trained model on a smaller, calibrated real-world dataset, or a residual model could be trained to predict the discrepancy between simulation and reality. Incorporating online adaptation techniques that respond to real-time environmental dynamics, such as varying surface geometries, also remains a crucial challenge. Finally, applying this modeling approach to more complex multi-DOF robotic arms, beyond the current 2-axis Pan-Tilt system, to assess its generalizability will be a meaningful follow-up study.

5. Conclusions

This study addressed the unique control challenge of RSAR systems, whose end-effector is a projection whose final position depends on both the actuator’s pose and the geometry of the external environment. This characteristic, combined with loose kinematic specifications that make analytical modeling difficult, calls for a data-driven approach to the system’s forward kinematics problem. To this end, a deep learning architecture based on LSTM–Attention was designed to accurately predict the 6-DOF pose of the camera from the joint angles of a 2-axis Pan-Tilt actuator. The proposed model combines three components: a bidirectional LSTM for time-series feature extraction, an attention mechanism for feature refinement, and a multi-task learning structure that optimizes position and rotation separately. In particular, for 3D rotation prediction, a geodesic loss between quaternions was applied so that the pose is learned stably without gimbal lock.

To evaluate the intrinsic performance of the proposed model, a simulation environment based on Unity and URDF, which excludes real measurement noise, was constructed to generate a benchmark dataset. Compared with three baseline models (Polynomial Regression, SVR, and Random Forest), the proposed model achieved the best performance on all quantitative evaluation metrics (MAE, RMSE, and Std). Its position-error MAE was 18.00 mm and its rotation-error MAE was 3.723 degrees. These are improvements of approximately 9.5% and 17.6%, respectively, over the second-best model, Random Forest.

The qualitative error-distribution analysis showed that, unlike the baseline models, the proposed model effectively suppressed extreme outliers and delivered highly stable predictions. The Z-axis rotation error behavior identified in the per-axis 6-DOF analysis is especially informative. Unlike the baselines, which learn each axis independently, the proposed model optimizes the 3D rotation as a whole and thereby achieves more accurate overall pose estimation in 3D space. This suggests that the proposed LSTM–Attention model is an effective methodology for accurately modeling the complex non-linear kinematic relationship of the RSAR system.