1. Introduction

Over the past few years, large-scale (LS) system models have been widely studied in unmanned aerial vehicle (UAV) systems, smart transportation, microgrid systems, and other fields [1,2,3]. These systems are usually composed of multiple low-dimensional subsystems with complex dynamic coupling relationships. However, traditional centralized control methods cannot effectively deal with the interactions between subsystems, which threatens the stability of the whole system [4]. Consequently, decentralized control approaches, which design each controller mainly from local state information rather than the global state of the whole system, have attracted wide attention [5]. In [6], a decentralized robust optimal control (OC) method was developed for LS systems subject to constrained structural variations, and its superior performance was demonstrated on power systems. In [7], a neural network (NN)-based decentralized adaptive control approach was put forward to tackle the control problem of nonlinear large-scale (NLS) systems. In [8], a novel decentralized control approach for discrete-time LS systems was presented, which ensures the passivity of the subsystems by locally solving semi-definite programs, thereby achieving asymptotic stability of the entire system.

The OC strategy for LS systems optimizes multiple performance indicators by adjusting control parameters [9,10]. Different from the adaptive control strategy [11,12], the adaptive dynamic programming (ADP) method, which learns future optimal strategies based on current states and actions, was introduced to solve OC problems [13,14,15]. In [16], a novel ADP approach was designed to address the OC problem of unknown LS systems, with application to the fuel control system of turbofan engines. In [17], a decentralized ADP-based OC approach was developed to address the control problem of multi-agent systems. In [18], the authors presented a new ADP approach for tackling OC problems of nonlinear systems with state constraints. In recent years, the ADP algorithm has effectively handled uncertainties in NLS systems with matched and unmatched interconnections while offering an approach for tackling decentralized OC problems. In [19], the ADP methodology was employed to address the decentralized OC problem of NLS systems subject to unknown time delays and mismatched interconnections, for which a novel cost function was developed to convert the decentralized control problem into an OC problem. In [20], a decentralized NN control scheme was proposed via the ADP algorithm by solving a series of Hamilton–Jacobi–Bellman (HJB) equations for auxiliary subsystems. In [21], a synchronous value iteration algorithm based on the ADP method was formulated for the constrained optimization problem of uncertain NLS systems.

On the other hand, researchers have utilized ADP-based sliding mode control (SMC) schemes to address the fault-tolerant control (FTC) problem, which can not only eliminate the impact of actuator faults but also guarantee the OC performance of the sliding-mode dynamics (SMD) [22,23,24,25]. In [22], a comprehensive control method combining the ADP algorithm and global SMC was formulated to resolve the orientation control problem of flexible spacecraft subject to actuator failure. In [23], a novel off-line optimal SMC method based on an ADP algorithm was presented to handle the FTC problem of cascade nonlinear systems with mismatched uncertainties. In [24], an ADP-based sliding mode FTC method was proposed with an optimizer for the OC parameters. The integral SMC in this method adds an integral term to traditional SMC to improve disturbance rejection. In [25], an FTC method combining fuzzy integral nonsingular terminal SMC and ADP was proposed to enhance launch vehicle flight safety in the event of actuator failure. However, the control methods in these studies have limitations in guaranteeing control performance under complex fault scenarios. To enhance the robustness of the system against uncertainty, guaranteed cost control (GCC) was introduced in [26], with the goal of strictly ensuring that the performance cost index does not exceed an upper bound while maintaining system stability. In recent years, the GCC strategy has been extended to more complex system models and scenarios, such as the study in [27] on finite-time GCC for LS singular systems subject to interconnected state delays. In [28], a quantitative guaranteed cost FTC approach was developed to address the control problem of unknown multi-input systems with actuator failures.

Compared with the traditional time-triggered control mode, event-triggered control (ETC) can reduce the consumption of communication and computing resources [29,30,31], as it updates the control signal only when a preset condition is violated. The static ETC approach saves resources in the decentralized control of NLS systems by using fixed triggering conditions. In [32], a hierarchical sliding mode surface (SMS)-based ADP algorithm combined with the ETC approach was designed to tackle the OC problem of switched nonlinear systems. In [33], a decentralized ETC strategy based on the ADP method was proposed for NLS systems to reduce communication costs and computing burden. Compared with static ETC, however, dynamic ETC achieves higher resource utilization efficiency and a lower communication and computing burden. In [34], a novel ADP method under the dynamic ETC mode was developed using only agent interaction information without requiring the model dynamics, thus addressing the problem of solving algebraic Riccati equations. Reference [35] presented a new decentralized control scheme for certain continuous-time interconnected NLS systems with interconnection terms and unmodeled internal dynamics by integrating dynamic ETC with the ADP method. However, these works consider only fault-free operation and do not account for actuator failures.

Inspired by the above discussions, this paper presents an SMC strategy based on the dynamic event-triggered ADP method for LS systems with actuator faults. The main innovations are as follows:

- 1. This article proposes an FTC scheme for NLS systems with actuator faults using integral sliding mode control (ISMC) technology, which eliminates the effect of actuator faults and achieves stable SMD.

- 2. This article presents a GCC approach based on the ADP algorithm, which ensures that the motion trajectories of the system stay on the SMS, while the overall performance index is less than a certain upper bound.

- 3. Different from the ETC methods in [31,32,33], to improve the utilization of communication resources of the equivalent SMD, a decentralized dynamic ETC approach combined with the ADP method is designed to optimize the control performance of NLS systems.

The subsequent sections of this article are arranged as follows.

Section 2 presents the issue description.

Section 3 develops an FTC approach utilizing ISMC methodology.

Section 4 develops a dynamic ETC scheme based on GCC via the ADP method.

Section 5 presents a simulation example to verify the performance of the proposed strategy.

Section 6 presents a conclusion of the present study.

2. Problem Statement

The uncertain NLS systems are as follows:

where the control input is denoted as

for the ith subsystem,

represents the system state vector, and the abrupt actuator fault occurring at time

is represented by

. Here,

for

and

for

. Assume that

is continuous with

being bounded by

,

and

denote system functions that satisfy local Lipschitz continuity on

,

is a smooth known function, which is different from

. Moreover,

is the uncertain interconnection and

is a disturbance function that shows the architecture of the uncertain term, where

denotes the state of the NLS system (1), with

.

Further, the nominal version of system (1) is

Assumption 1. Assume that satisfies the Lipschitz continuity condition within a domain and suppose that system (2) is controllable.

Here, the matched uncertain term

satisfies

Here, , is an uncertain function with , and represents a known constrained function where .

This research aims to construct an adaptive control strategy that keeps the NLS system stable when actuator faults occur and guarantees an upper bound on the performance index of the system dynamics on the SMS. Additionally, a dynamic ETC mechanism is developed to enhance communication resource utilization efficiency.

3. Design of the FTC Strategy Based on the ISMC Technique

To eliminate the influence of time-varying faults in the NLS system (1), we develop an SMC approach as follows:

with

being the nominal control for guaranteed dynamic ETC performance, and

being the control law for fault accommodation, which is utilized to eliminate the influence of actuator failures.

Assumption 2. The system functions and are bounded by and , where and are positive constants. Additionally, represents a full column rank matrix.

Remark 1. The guaranteed cost controller under the dynamic ETC method takes the form , ensuring that the nonlinear system (1) achieves stability while simultaneously optimizing the adjusted cost functional during sliding mode operation.

The ISMC function is formulated as

where

and

.

To ensure the system states remain on the SMS

,

is designed as

where

and

represent a gain and the sign function, respectively.

where

, and

.

Theorem 1. With regard to system (1), when the SMC law is designed based on (6) and , is achievable from the beginning, where denotes the upper bound of .

Proof. Define the Lyapunov candidate:

According to (6), we have

According to the control laws (7) and (8), we have

where

, and if the condition

is satisfied, and

for any

. Hence, the SMS

achieves asymptotic stability.

The proof is completed. □
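To make the idea behind Theorem 1 concrete, the following minimal sketch simulates a scalar plant with an abrupt matched actuator fault under an integral sliding function with a switching law. Everything specific here is an assumption chosen for illustration and is not taken from this article: the plant f(x) = -x, g = 1, the nominal control u_nom = -x, the fault 0.8 sin(t) starting at t = 1, the gain rho = 1, and the function names `sign` and `simulate`. The only property it is meant to show is that, when the switching gain exceeds the fault bound, the sliding variable stays near zero from the initial time and the state follows the nominal closed-loop behavior.

```python
import math


def sign(v):
    """Sign function with sign(0) = 0."""
    return (v > 0) - (v < 0)


def simulate(use_ismc, T=5.0, dt=1e-3, x0=2.0, rho=1.0, t_fault=1.0):
    """Euler simulation of x' = f(x) + g*(u_nom + u_s + fault).

    Hypothetical scalar example: f(x) = -x, g = 1, u_nom = -x.
    The fault bound is 0.8 and rho = 1.0 is chosen larger than it.
    Returns the final state and the largest |s| seen along the run.
    """
    f = lambda x: -x
    g = 1.0
    x, integral, s_max = x0, 0.0, 0.0
    for k in range(int(round(T / dt))):
        t = k * dt
        # Abrupt actuator fault: zero before t_fault, bounded afterwards.
        fault = 0.8 * math.sin(t) if t >= t_fault else 0.0
        u_nom = -x
        # Integral sliding function: s(0) = 0, so sliding starts at t = 0.
        s = x - x0 - integral
        s_max = max(s_max, abs(s))
        # Switching term that dominates the fault when rho > fault bound.
        u_s = -rho * sign(s) if use_ismc else 0.0
        nominal_rate = f(x) + g * u_nom
        x += dt * (nominal_rate + g * (u_s + fault))
        integral += dt * nominal_rate
    return x, s_max


if __name__ == "__main__":
    x_on, s_on = simulate(use_ismc=True)
    x_off, _ = simulate(use_ismc=False)
    print("with ISMC:    x(T) =", x_on, " max|s| =", s_on)
    print("without ISMC: x(T) =", x_off)
```

In this sketch the discontinuous term produces chattering of the order of the step size, which is why the sliding variable is only approximately zero in discrete time.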

The objective of employing ISMC methodology is to ensure that

, hence the equivalent control input takes the form

. Substituting

into (1), the SMD is

The nominal form of the uncertain SMD (12) is

Denote

. The control input is

. Define the cost function as

with

,

,

and

being constant matrices.

To address the decentralized cost-constrained control issue, a feedback control law is denoted by

which yields a bounded cost functional

, i.e., satisfying

, where

represents a positive number that guarantees the stability of the system, and the cost function (14) satisfies the constraint

.

It should be noted that the control system allows for a more concise representation. For clarity, we introduce the following notation

and

Thus, the nonlinear system (12) becomes

where

is the control for guaranteed dynamic ETC performance.

The nominal version of system (15) is

The cost function (14) becomes

where

, with the diagonal matrix

and

being constant matrices.

4. Dynamic Event-Triggered Guaranteed Cost Control Using ADP

Here, we design the control law , which is obtained by using the ADP algorithm under the dynamic ETC approach to achieve a near-optimal control strategy.

Lemma 1 is introduced to demonstrate that the cost function for the NLS system (15) is well-defined; its proof is provided in [36].

Lemma 1. Suppose there exist a bounded positive function , a cost function with , and a control input , so that

with . Therefore, the local asymptotic stability of system (16) can be verified within a specific domain around the origin. Furthermore, it follows that , with representing the adjusted cost function for system (16), is given by

Remark 2. Referring to [37,38,39], the bounded function is

where is designed to handle the coupling and uncertain terms of the system.

Considering system (16), the adjusted cost function is defined as

Based on (21) for system (16), the Hamiltonian function is

Moreover, the optimal guaranteed cost for the NLS system (16) is

, where

is provided in (19) and

represents the admissible control set. In the OC design,

satisfies the HJB equation

According to the stationarity condition, the OC policy is derived as

The modified HJB equation is formulated as

According to (24) and (25), we have

Consider the NLS system (15) with the modified index (17), and assume that the solution to the HJB equation (25) exists and is continuous. Denote this solution as

. Therefore, the optimal guaranteed cost is determined when

, i.e.,

. Consequently, it follows that

and

. Therefore, the optimal GCC problem of the original NLS system (15) can be transformed into an OC problem for the nominal system.
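The transformation described above can be illustrated on a scalar linear analogue. The sketch below is not the article's design: the plant parameters, the uncertainty bound `DELTA`, the quadratic value function V(x) = p x^2, and the function names are all assumptions made for illustration. For an uncertainty satisfying |d(x)| <= DELTA |x|, Young's inequality gives 2 p x d <= p^2 x^2 + DELTA^2 x^2, so adding these two terms to the utility of the nominal system yields a modified HJB equation whose solution bounds the cost of the uncertain system by V(x0).

```python
import math

# Hypothetical scalar example: x' = A*x + B*u + d(x), |d(x)| <= DELTA*|x|.
A, B = -1.0, 1.0
Q, R = 1.0, 0.5
DELTA = 0.5


def guaranteed_cost_gain():
    """Positive root p of the modified scalar HJB (Riccati-type) equation

        Q + DELTA^2 + p^2 + 2*A*p - (B^2 / R) * p^2 = 0,

    which comes from V(x) = p*x^2 and u = -(B*p/R)*x.
    """
    c2 = 1.0 - B * B / R
    assert c2 < 0.0, "this sketch assumes B^2/R > 1 so a positive root exists"
    q_mod = Q + DELTA ** 2
    return (-A - math.sqrt(A * A - c2 * q_mod)) / c2


def hjb_residual(p):
    """Left-hand side of the modified HJB equation divided by x^2."""
    return Q + DELTA ** 2 + p * p + 2.0 * A * p - (B * B / R) * p * p


def simulate(p, x0=2.0, T=10.0, dt=1e-3):
    """Run the uncertain system under u = -(B*p/R)*x and accumulate the cost."""
    x, cost = x0, 0.0
    for _ in range(int(round(T / dt))):
        u = -(B * p / R) * x
        d = DELTA * x * math.sin(x)  # one admissible uncertainty realization
        cost += dt * (Q * x * x + R * u * u)
        x += dt * (A * x + B * u + d)
    return x, cost


if __name__ == "__main__":
    p = guaranteed_cost_gain()
    x_T, cost = simulate(p)
    print("p =", p, " HJB residual =", hjb_residual(p))
    print("accumulated cost =", cost, " guaranteed bound V(x0) =", p * 2.0 ** 2)
```

The design choice worth noting is that the controller is computed from the nominal model only; the uncertainty enters solely through the bound `DELTA` in the modified utility.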

4.1. Dynamic ETC-Based ADP Method Design

Initially, establish a strictly monotonically increasing sequence where and , comprising the activation times . For any , denote the system state as .

The triggering error is given by

The corresponding control is described by

According to (27), the dynamic ETC strategy is expressed as

Based on (24), the optimal dynamic ETC strategy is obtained as

where

.

Subsequently, the HJB equation (25) can be rewritten as

Assumption 3. Suppose that the Lipschitz condition holds for such that , where ρ represents a positive constant.

Assumption 4. The cost function is bounded by in the compact set . Moreover, holds with as a positive constant.

Theorem 2. Considering the NLS system (15) and (16), if Assumptions 3 and 4 hold and the solution of the HJB equation (23) exists, the dynamic ETC near-OC signal is designed as (29) when the triggering condition is presented as

where , , denotes the largest singular value of matrix R and is the triggering threshold. The dynamics of the internal signal are denoted as

Proof. For system (15), the optimal cost function

is positive definite, while

denotes the dynamic ETC strategy. According to (15), selecting

as the Lyapunov function, we obtain

According to (25), it follows that

Substituting (34) into (33) yields

Using Young's inequality, we have

Since

and (24), we have

Using (24), (29), and (38), we have

Based on Assumption 3 and Equation (39), we have

where

denotes the largest singular value of matrix R.

Substituting (40) into (39) yields

It is clear that the constraint (31) holds, and it follows that

where

.

The proof is finished. □
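The difference between a static and a dynamic triggering rule can be seen in a small simulation. The sketch below does not reproduce condition (31) or the internal dynamics of Theorem 2; it uses a generic dynamic rule of the commonly used form "trigger when eta + theta*(sigma*x^2 - e^2) <= 0" with internal dynamics eta' = -lam*eta + (sigma*x^2 - e^2), and a static rule obtained by setting eta to zero. The scalar plant, the feedback gain, and all parameter values and names are assumptions chosen for illustration.

```python
def simulate(dynamic, T=10.0, dt=1e-3, a=1.0, b=1.0, k=3.0,
             sigma=0.05, lam=1.0, theta=1.0, x0=1.0, eta0=0.5):
    """Event-triggered state feedback u = -k * x_hat on x' = a*x + b*u.

    x_hat is the state sampled at the last triggering instant and
    e = x_hat - x is the triggering error. With dynamic=False the
    internal signal eta is held at zero, giving the static rule.
    Returns (final state, number of events, smallest eta observed).
    """
    x, x_hat = x0, x0
    eta = eta0 if dynamic else 0.0
    events, eta_min = 0, eta
    for _ in range(int(round(T / dt))):
        e = x_hat - x
        s = sigma * x * x - e * e
        # Check the rule first; on an event, resample and reset the error.
        if eta + theta * s <= 0.0:
            x_hat = x
            s = sigma * x * x
            events += 1
        u = -k * x_hat
        x += dt * (a * x + b * u)
        if dynamic:
            eta += dt * (-lam * eta + s)
            eta_min = min(eta_min, eta)
    return x, events, eta_min


if __name__ == "__main__":
    x_d, n_d, eta_min = simulate(dynamic=True)
    x_s, n_s, _ = simulate(dynamic=False)
    print("dynamic rule: events =", n_d, " x(T) =", x_d, " min eta =", eta_min)
    print("static rule:  events =", n_s, " x(T) =", x_s)
    print("time-triggered updates at the same step size:", int(round(10.0 / 1e-3)))
```

Because the dynamic rule only fires once the static quantity has become sufficiently negative to exhaust the internal signal, each inter-event interval is at least as long as the static one in this example, and the internal signal stays positive as long as the step size is small.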

Theorem 3. Considering (15) with the cost function (17), assume that a continuously differentiable solution of the HJB equation (23) exists. Then, the OC input under the dynamic ETC strategy is developed as (24), and the near-optimal guaranteed cost function (17) satisfies

Additionally, set , and one gets

Proof. By applying a control input

, it follows that

Based on (15) and (34), we have

According to (24), we have

By substituting (47) into (46), we have

By invoking (44), (45), and (48), we obtain

Substituting results in .

Therefore, the proof is concluded. □

4.2. Neural Network-Based Realization

According to the universal approximation principle of NNs within a compact region

[40],

can be given by

where

is the estimation error,

represents the target weight for the critic NN,

denotes the activation function, and

represents the node number of the hidden layer of the critic NN. For practical scenarios, the cost function is presented as

with

being the real weight for the critic NN.

Assumption 5. The NN weight vector satisfies , and the activation function satisfies and . The residual approximation errors satisfy and , where , , , and are positive constants.

Using (50), one has

where

and

.

Based on (29) and (52), the dynamic event-triggered OC law is

Subsequently, the actual control policy is

From (25) and (29), the approximate Hamiltonian function is

The weight adaptation strategy for the critic NN is presented as

with

and

being the update rate of the critic NN. Utilizing (53), one gets

In the proposed approach, to relax the persistence of excitation condition, an enhanced weight adjustment strategy for the critic NN is

with

being the index of stored data

,

and

, and

Define the weight estimation error as

Based on (59) and (61), it follows that

with

and

representing the residual errors, and

and

.
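As a rough illustration of critic tuning with stored data, the sketch below applies a normalized gradient-descent update to a single critic weight for a scalar linear-quadratic problem, where the exact value function is known and can be used as a check. It is a simplified stand-in rather than the update laws (57) or (59): the plant, the single basis function phi(x) = x^2, the stored states in `memory`, the learning rate, and the function names are all assumptions. The stored samples play the role of the recorded data that relaxes the persistence of excitation requirement, since the update keeps receiving informative residuals even if the current state stops changing.

```python
import math

# Hypothetical scalar problem: x' = A*x + B*u, utility Q*x^2 + R*u^2.
A, B, Q, R = -1.0, 1.0, 1.0, 1.0


def residual_and_regressor(w, x):
    """Approximate Hamiltonian residual and its regressor at state x.

    Critic: V(x) ~ w * phi(x) with phi(x) = x^2, so grad_phi = 2*x.
    Policy derived from the critic: u = -(1/2) * (B/R) * grad_phi * w.
    """
    grad_phi = 2.0 * x
    u = -0.5 * (B / R) * grad_phi * w
    x_dot = A * x + B * u
    sigma = grad_phi * x_dot
    e = Q * x * x + R * u * u + w * sigma
    return e, sigma


def train(w0=0.0, alpha=0.5, iters=500, x_now=1.0, memory=(0.5, 1.5, 2.0)):
    """Normalized gradient descent on the current and stored residuals."""
    w = w0
    for _ in range(iters):
        step = 0.0
        for x in (x_now,) + tuple(memory):
            e, sigma = residual_and_regressor(w, x)
            step += sigma * e / (1.0 + sigma * sigma) ** 2
        w -= alpha * step
    return w


def exact_weight():
    """Positive solution p of 2*A*p - (B^2/R)*p^2 + Q = 0, i.e. V = p*x^2."""
    return R * (A + math.sqrt(A * A + B * B * Q / R)) / (B * B)


if __name__ == "__main__":
    print("learned weight:", train())
    print("exact weight:  ", exact_weight())
```

With more basis functions the same structure applies with `w`, `grad_phi`, and `sigma` as vectors; the scalar case is used here only to keep the check against the exact solution simple.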

Define the augmented state vector represented by

, incorporating the closed-loop SMD

, and then the hybrid control system becomes

with

and

with

.

Remark 3. To address the FTC problem for NLS systems and improve the communication resource utilization efficiency, an integrated control framework is developed which combines ISMC technology and ADP-based GCC under a dynamic ETC mechanism. The proposed approach mitigates the impact of actuator failures on system stability and ensures that the control performance index admits an upper bound under the ETC mode.

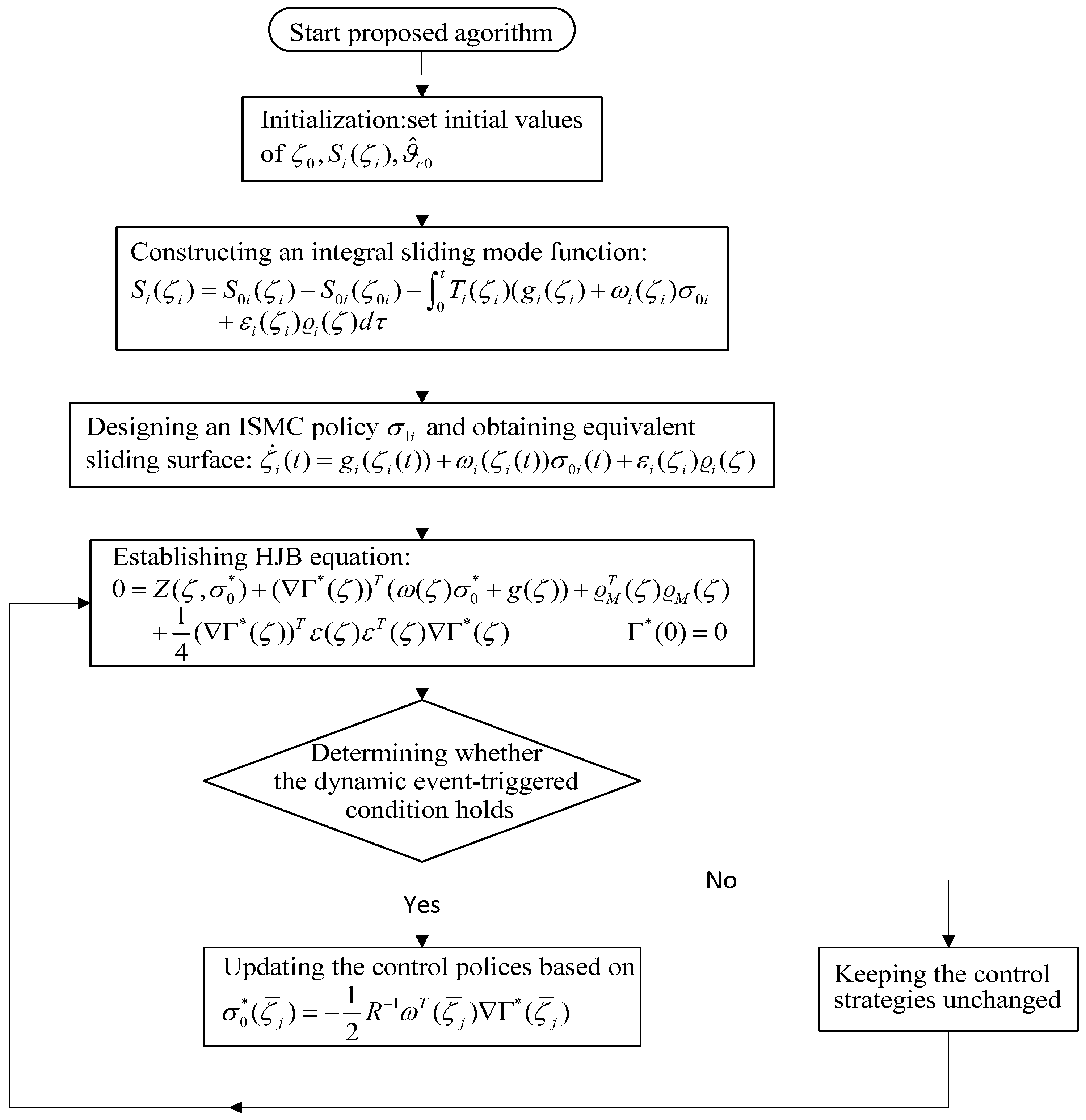

Remark 4. The simulation experiment in this paper is implemented using MATLAB R2021a. To clearly present the designed control scheme, the relevant control block diagram of the proposed control strategy is shown in Figure 1.

4.3. Stability Analysis

Theorem 4. Consider the NLS system (16), and assume that Assumptions 2–5 hold, the control strategy is given by (55), and the critic NN weight updating mechanism is given by (59). Let the dynamic ETC condition be established as

where ℏ denotes a design parameter within the interval , is the triggering threshold, and the dynamics of the internal signal are given by

Then, the parameter approximation error and the sliding dynamics (16) are both uniformly ultimately bounded (UUB).

Proof. The candidate Lyapunov function is

where

,

,

, and

. The complete proof involves consideration of two cases.

Case 1: During

, the events are not triggered. We have

Based on

, and according to

, we have

According to (26), one has

Based on the fact that

, it has

Considering Assumptions 3 and 5, (54), (55), and assuming that

and

, it has

Based on Assumption 4, (71), (72), and (73), it follows that

Furthermore, the derivative of

is

Based on the fact

, it can be derived that

with

being the residual error. Suppose that

. According to (75), (76), and (77), it follows that

where

is given in (64).

Based on (69), (74), and (78), we have

The triggering condition, constructed as (66), is activated when one of the following inequalities is satisfied

It can be obtained that under the condition .

This demonstrates that the UUB property holds for both and .

Case 2: At the triggering moment

, the derivative of

is constructed as

According to case 1,

is continuous and monotonically decreasing within

. Therefore, it follows that

Taking limits on both sides of (83) and defining

, we obtain

. Then we have

Additionally,

is continuously differentiable at the triggering moments

. Based on case 1, we obtain

Considering (84) and (85), it follows that

.

Through the analysis of these two cases, the proof of the theorem is completed. □

5. Simulation

In this section, we consider the following NLS system:

where

and

are the system states,

and

are the control inputs. The interconnected disturbances are

and

with

and

. Here, we further choose

;

. Assuming that an actuator failure suddenly occurs at

, the fault dynamics can be represented as

In this simulation, the initial states of subsystem 1 and subsystem 2 are selected as

and the relevant parameters are set to

,

,

,

. The cost function can be defined as

where

,

,

and

. Moreover, the parameters in dynamic triggering conditions are set as

,

,

,

.

Figure 2 and Figure 3 illustrate the state trajectories of the subsystems. It is clear that all state trajectories ultimately converge to the equilibrium point.

Figure 4 presents the SMC law for subsystem 1, and the dynamic ETC input for subsystem 1 is depicted in Figure 5. Similarly, the SMC law for subsystem 2 is shown in Figure 6, while the dynamic ETC input for subsystem 2 is illustrated in Figure 7.

Figure 8 and Figure 9 demonstrate the convergence behaviors of the critic NNs’ weights for subsystem 1 and subsystem 2. As shown in the figures, the weight vectors of the critic NNs for both subsystems converge within 150 s, indicating that the adaptive learning strategy designed in this article stabilizes the closed-loop system, which is consistent with the UUB property established in Theorem 4.

Figure 10 presents the dynamic ETC condition.

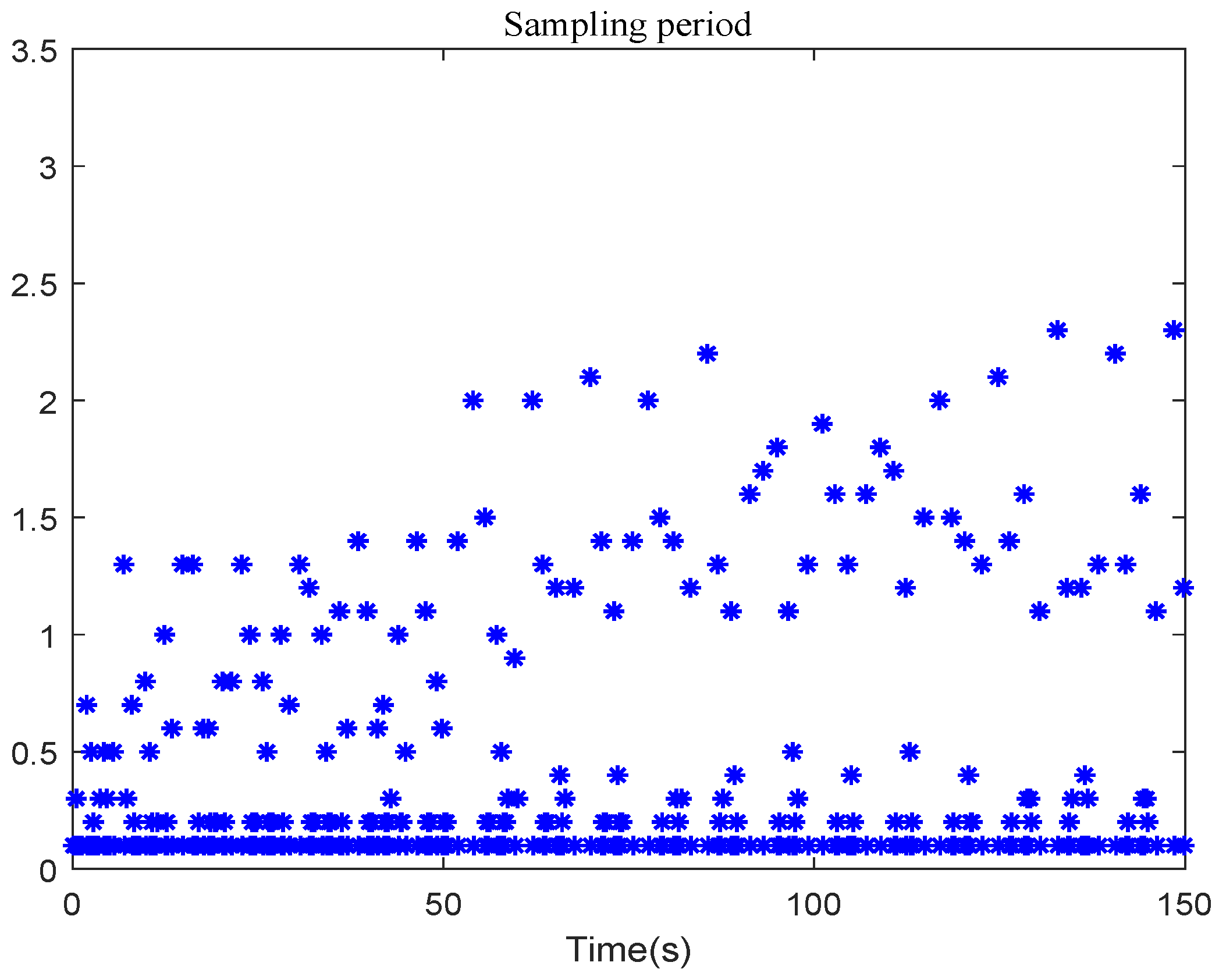

Figure 11 shows the sampling periods (inter-event intervals) during the learning process, which indicates that the control approach presented in this article can effectively improve the utilization of communication resources.

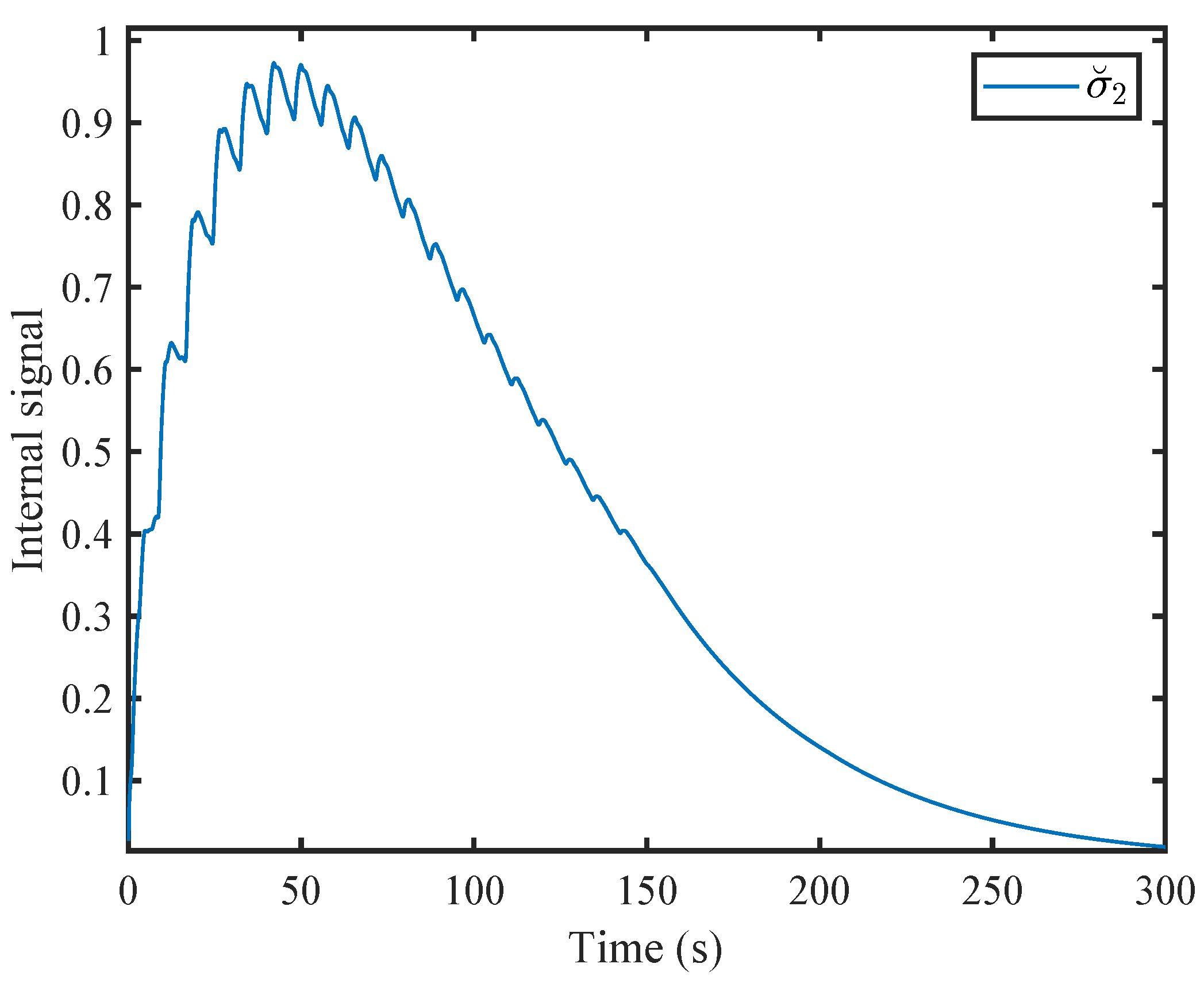

The internal signal evolution of the dynamic ETC method is depicted in Figure 12. It is clear that the internal signal remains positive at all times.