2.3. Model Development

The plant for hydrodesulfurization of diesel fuel is a complex industrial plant characterized by pronounced process dynamics and nonlinearity. The final product of the process is hydrodesulfurized diesel fuel with a permissible sulfur content of 10 mg/kg, in accordance with the current European Directive 2016/802/EU [1].

The data used for the model development was taken from the plant database, in which all measured variables are continuously stored. Several periods of plant operation in 2021 and 2022 were considered. An effort was made to identify a data set with emphasized process dynamics that lasts over a continuous period of at least ten days. Due to certain downtimes in plant operation and occasional malfunctions of the online process analyzer, it was challenging to select the relevant period. Ultimately, a period of 11 days in October 2022 was selected as the most appropriate for model development.

Preprocessing of the data included detection and removal of unexplained extreme values (outliers), data filtering, and elimination of linear trends. A comprehensive feature analysis was performed that included descriptive statistics and the calculation of Pearson correlation coefficients to assess the relationships between the input variables and the output variable. The appropriate sampling time was also determined. Additionally, the output variable measured by the online process analyzer was compared with laboratory analysis results, which were obtained approximately four times per day, to assess measurement reliability. Since dynamic models are developed in this work, time delays for the input variables were also defined.

Based on the process analysis and consultations with technologists and plant operators, twenty variables were initially selected as potentially influential on the output variable (Table 1).

Empirically, the optimum number of influencing variables is between six and eight. A larger number of input variables can lead to unnecessary complexity of the model, while with a smaller number of inputs it is likely that the model will not be able to describe the comprehensive process dynamics of complex industrial plants. An important fact is that the amount of sulfur at the input of the plant directly affects the amount of sulfur at the output of the plant, i.e., in the product. If the analysis determines that the raw material entering the process contains significantly more sulfur than the current value according to which the reactor inlet temperature is set, the reactor inlet temperature should be lowered, as desulfurization reactions are exothermic and a “temperature runaway” can occur.

Table 2 shows Pearson correlation coefficients between potentially influential input variables and the output variable.

Based on the Pearson correlation coefficients presented between potential input variables and the target output variable, several variables showed moderate to strong correlation with sulfur content in the product (e.g., TC-0493, TI-0495, TC-0446, TI-0702, TI-0807).
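The input-to-output correlation screening described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the tag names are borrowed from the text, but all numeric values are synthetic placeholders, and the `pearson` helper and the `ranking` list are names introduced here.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

# Synthetic placeholder data (NOT plant measurements).
inputs = {
    "TC-0493": [330.0, 331.5, 333.0, 334.0, 336.5, 338.0],
    "FC-0401": [120.0, 118.0, 121.0, 119.5, 120.5, 119.0],
}
sulfur = [9.0, 8.6, 8.1, 7.9, 7.2, 6.8]

# Rank candidate inputs by the absolute value of their correlation with the output.
ranking = sorted(
    ((tag, pearson(values, sulfur)) for tag, values in inputs.items()),
    key=lambda item: abs(item[1]),
    reverse=True,
)
for tag, r in ranking:
    print(f"{tag}: r = {r:+.3f}")
```

Ranking by the absolute value keeps strongly negative correlations, which are as informative for input selection as strongly positive ones.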

However, to avoid redundancy and potential multicollinearity within the model, an additional analysis of the Pearson correlations between the input variables was performed. The results are shown in Figure 5 (Pearson correlation matrix).

It was found that certain input variables were strongly correlated with each other (>0.9), especially for the temperature measurements associated with the reactor and stripping column sections. Consequently, some variables were excluded from further modeling even though they had a reasonable correlation with the target. Examples of this are as follows: TI-0702, which is strongly correlated with TI-0495 (≈0.95), TI-0807, which is strongly correlated with TC-0446 (≈0.91), and FI-0202N, which has a correlation with several flow-related variables.
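One simple way to automate this kind of redundancy check is a greedy filter: inputs are visited in order of decreasing correlation with the target, and an input is kept only if its correlation with every input already kept stays at or below the threshold. The sketch below assumes this greedy rule and uses hypothetical tags (`T_A`, `T_B`, `F_C`) with synthetic values. The selection in the paper also relied on process-section coverage and expert knowledge, which this sketch does not capture.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / math.sqrt(
        sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y)
    )

def screen_inputs(inputs, target, threshold=0.9):
    """Greedy filter: keep an input only if it is not strongly correlated
    (|r| > threshold) with any input that has already been kept."""
    ranked = sorted(
        inputs, key=lambda tag: abs(pearson(inputs[tag], target)), reverse=True
    )
    selected = []
    for tag in ranked:
        if all(abs(pearson(inputs[tag], inputs[kept])) <= threshold
               for kept in selected):
            selected.append(tag)
    return selected

# Synthetic placeholder data: T_A and T_B are nearly collinear temperatures.
candidates = {
    "T_A": [330.0, 331.5, 333.0, 334.0, 336.5, 338.0],
    "T_B": [250.2, 251.4, 253.1, 253.9, 256.4, 258.1],
    "F_C": [120.0, 118.0, 121.0, 119.5, 120.5, 119.0],
}
target = [9.0, 8.6, 8.1, 7.9, 7.2, 6.8]

selected = screen_inputs(candidates, target)
print(selected)
```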

The final selection of six influential input variables was based on a multi-criteria evaluation that included their correlation with the output variable, their mutual correlation, the coverage of different process sections (reactor and stripper column), and expert knowledge regarding process relevance.

Table 3 shows the six selected influencing variables (plus one output variable) that were taken into account in the further steps of model development.

The HDS process under investigation is divided into four technological sections: reactor section, stripping section, amine gas treatment section and acid-water stripping section.

Figure 6 shows the process flow diagram of the reactor section and the stripper section, which were the focus of the investigations carried out in this paper. All influential input variables and the output variable are marked in the figures.

Table 4 shows descriptive statistics for the selected 6 input variables and the output variable with a sampling period of one minute. The maximum, minimum, mean, median, variance, and standard deviation are calculated. It can be observed that most temperatures are stable and controlled, with only minor deviations. The flow rate (FC-0401) shows considerable fluctuations, while the temperature (TI-0495) and the sulfur content (AI-0151) show a higher relative variability and potential outliers.
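The statistics listed above can be reproduced with Python's standard library, as in the following sketch. The sample values are synthetic, the `describe` helper is a name introduced here, and the coefficient of variation is used as one possible measure of "relative variability" (an assumption, since the text does not name the measure).

```python
import statistics

def describe(values):
    """Return the descriptive statistics named in the text for one variable."""
    return {
        "max": max(values),
        "min": min(values),
        "mean": statistics.fmean(values),
        "median": statistics.median(values),
        "variance": statistics.variance(values),  # sample variance (n - 1)
        "std": statistics.stdev(values),          # sample standard deviation
    }

# Synthetic sulfur-content readings in mg/kg (NOT plant data).
sulfur = [7.2, 6.8, 7.5, 8.1, 6.9, 7.4, 9.6, 7.0]
summary = describe(sulfur)

# Coefficient of variation: standard deviation relative to the mean.
cv = summary["std"] / summary["mean"]
print(summary, f"CV = {cv:.3f}")
```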

However, not many extreme values were identified in the collected data, and these were removed “manually” by checking all data. The data was additionally filtered with a LOESS filter (locally weighted scatterplot smoothing) with a smoothing coefficient of 0.005.
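For readers unfamiliar with LOESS, the sketch below implements a basic variant in plain Python: a weighted straight line is fitted around each sample using tricube weights, and the fitted value at that sample becomes the smoothed value. It assumes evenly spaced samples and interprets the smoothing coefficient as the fraction of the series used in each local fit; the filter implementation actually used in the study is not specified and may differ in detail.

```python
import math
import random

def loess(y, span=0.005):
    """Smooth an evenly sampled series with local linear regression.

    span: fraction of the series used in each local fit (assumed meaning of
    the smoothing coefficient). Weights follow the tricube kernel.
    """
    n = len(y)
    k = min(n, max(3, round(span * n)))       # points per local window
    smoothed = []
    for i in range(n):
        lo = min(max(0, i - k // 2), n - k)   # keep the window inside the series
        hi = lo + k
        scale = max(i - lo, hi - 1 - i) + 1   # +1 keeps edge weights above zero
        sw = swx = swy = swxx = swxy = 0.0
        for j in range(lo, hi):
            x = j - i                          # position relative to sample i
            w = (1 - (abs(x) / scale) ** 3) ** 3
            sw += w
            swx += w * x
            swy += w * y[j]
            swxx += w * x * x
            swxy += w * x * y[j]
        # Intercept of the weighted least-squares line = fitted value at x = 0.
        smoothed.append((swxx * swy - swx * swxy) / (sw * swxx - swx * swx))
    return smoothed

# Synthetic demonstration: a slow sine wave with added Gaussian noise.
random.seed(1)
clean = [math.sin(2 * math.pi * i / 200) for i in range(400)]
noisy = [c + random.gauss(0, 0.5) for c in clean]
smooth = loess(noisy, span=0.1)
```

A small span such as 0.005 smooths only over a short neighborhood, which removes measurement noise while preserving the process dynamics that the model is meant to capture.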

The available sampling period between the measurement data is one minute. Such a short sampling period is often not necessary for model development. It is empirically sufficient to select a sampling period of three to five minutes. In order to determine the optimal sampling interval, preliminary ARX models were developed using sampling periods of one, three, and five minutes. Model accuracy was evaluated based on the correlation coefficient (“FIT”) between the model predictions and the measured data. The results of these preliminary tests are presented in Table 5. The model performance was found to be inferior for both one-minute and five-minute sampling intervals compared to the three-minute interval, when using identical parameter settings and training/testing data. Therefore, a sampling period of three minutes was selected for all subsequent modeling.
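Generating the candidate data sets for such a comparison only requires resampling the one-minute record. The sketch below assumes plain decimation (keeping every k-th sample); the text does not state whether decimation or block averaging was used, and `resample` is a helper name introduced here.

```python
def resample(series, base_period_min, target_period_min):
    """Keep every k-th sample, where k = target period / base period."""
    if target_period_min % base_period_min != 0:
        raise ValueError("target period must be a multiple of the base period")
    step = target_period_min // base_period_min
    return series[::step]

# Placeholder one-minute record: the values are just the minute indices.
one_minute = list(range(15))

# Candidate sampling periods examined in the text: 1, 3 and 5 minutes.
candidates = {period: resample(one_minute, 1, period) for period in (1, 3, 5)}
for period, data in candidates.items():
    print(f"{period} min: {len(data)} samples")
```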

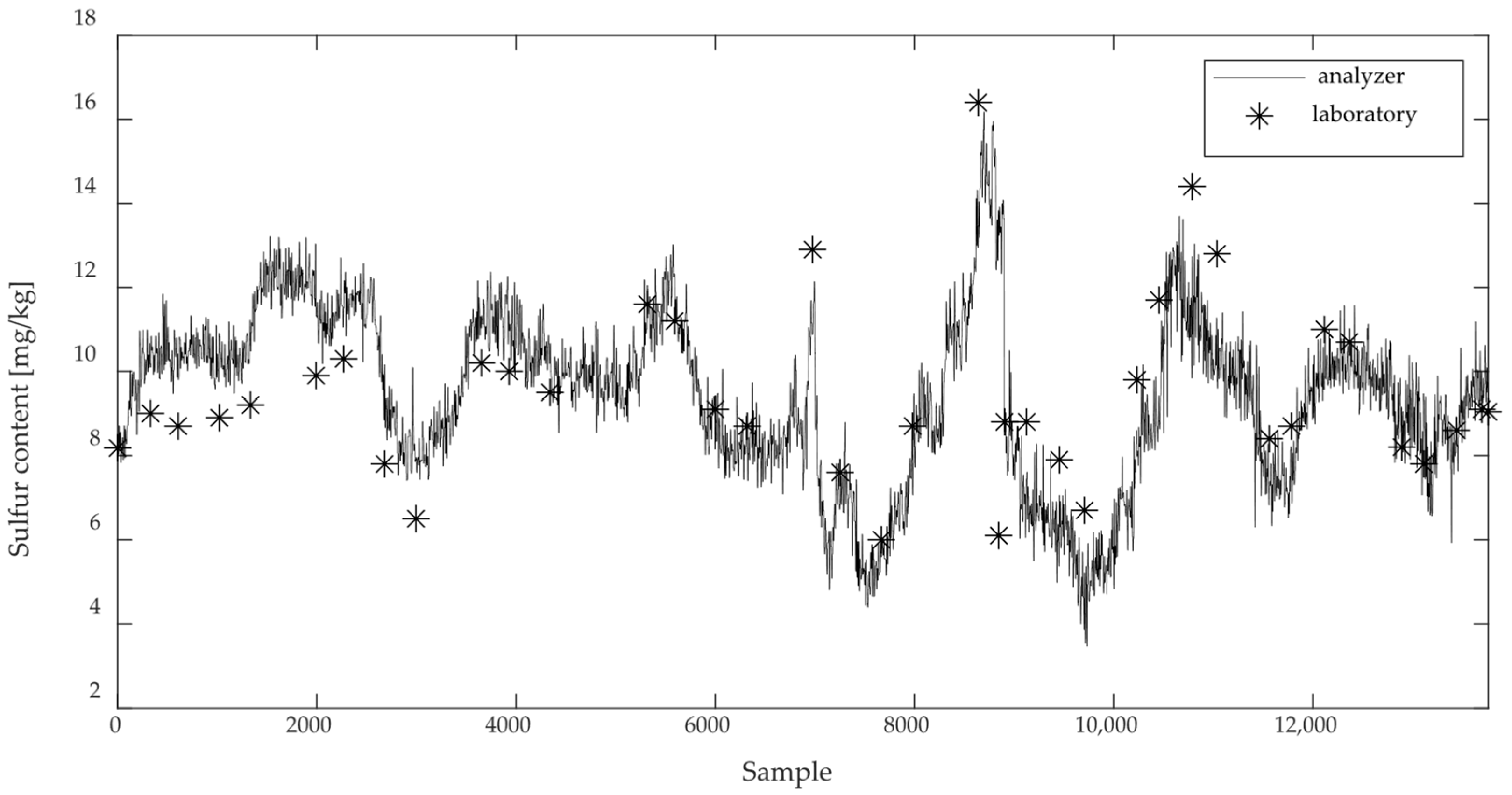

It is recommended to compare the values of the output variable (sulfur content in the product) measured by the online process analyzer with the corresponding values of the laboratory analysis. This comparison serves as the check for the correct operation of the analyzer, i.e., for the relevance of the selected data set for model development.

Figure 7 shows a comparison of laboratory analysis data and online analysis data.

It can be noted that the values of the online analyzer agree quite well with the laboratory analysis, which was performed four times a day, for the entire data set with a sampling time of 1 min. However, it is evident that the analyzer deviates strongly from the laboratory values in some areas (especially for the first 3000 data samples) and that the values sometimes exceed the standard limit for the permissible sulfur content in diesel fuel of 10 mg/kg. These findings also point to a significant challenge in choosing the relevant data period for modeling under real industrial operating conditions.

The time delays for the input variables were initially approximated according to the process knowledge and experience of the plant operators. Although precise delay determination typically requires dedicated testing, such procedures are rarely feasible in full-scale industrial plants without affecting product quality. Therefore, the actual delay values used in the dynamic polynomial models, represented as nk parameters in the model structure equations [1, 2, 3, 4], were identified and fine-tuned through a systematic trial-and-error approach using validation-based performance metrics. The final delay values (in minutes and sample steps) are summarized in Table 6, assuming a sampling period of three minutes.
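The relation between a delay in minutes and the corresponding nk value in sample steps is a division by the sampling period. The sketch below shows the conversion and how a delayed copy of an input series can be aligned with the output. The delay values are hypothetical (they are not the Table 6 values), and both helper names are introduced here.

```python
def delay_in_samples(delay_min, sampling_period_min=3):
    """Convert a time delay in minutes to a whole number of sample steps."""
    return round(delay_min / sampling_period_min)

def shift(series, nk):
    """Delay a series by nk samples: position t holds the value from t - nk.
    The first nk positions have no data and are filled with None."""
    return [None] * nk + series[: len(series) - nk]

# Hypothetical delays in minutes for two unnamed inputs (NOT Table 6 values).
delays_min = {"input_1": 6, "input_2": 15}
nk = {name: delay_in_samples(d) for name, d in delays_min.items()}

u = [1, 2, 3, 4, 5]
u_delayed = shift(u, nk["input_1"])
print(nk, u_delayed)
```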

The model is intended for real-time estimation of the current sulfur content in the product based on delayed input variables rather than for predicting future values. Using it to predict future values would require a completely new optimization of the model structure parameters.

2.3.1. Dynamic Polynomial Models

The models were developed using the MathWorks MATLAB® System Identification Toolbox™, ver. 2015a. The values of the model structure parameters na, nb, and nf were evaluated over the range of 0 to 12. This range was selected based on previous research and is empirically consistent with the dynamics of the process, the reasonable time required to compute the model, and the model complexity. The parameter nk is fixed to certain values of time delays for the selected influencing variables, as shown in Table 6. In the case of nonlinear models, parameter n, which represents the number of nonlinear units, was also examined in the range of 0 to 12. Taking these ranges into account, the model structure parameters, including na, nb, nf and the parameter n, were selected by a systematic trial-and-error procedure. Different combinations of these parameters, including fixed nk for all models, were iteratively tested and the final values were selected based on model performance indicators such as the correlation coefficient (“FIT”) between the model predictions and the measured data, and residual analysis using the validation data set. The finally selected model structure parameters are listed in Table 7.
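To make the structure search concrete, the following sketch fits single-input ARX models by ordinary least squares and scans a small grid of na and nb with nk held fixed. It is a plain-Python stand-in, not the MATLAB toolbox workflow used in the study. The data are simulated, the grid is limited to 1 to 3 rather than 0 to 12, and validation RMSE of the one-step-ahead prediction is used as the selection score instead of the “FIT” indicator. All function names are introduced here for illustration.

```python
import random

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def regressors(y, u, na, nb, nk):
    """Rows [-y(t-1)..-y(t-na), u(t-nk)..u(t-nk-nb+1)] with targets y(t)."""
    start = max(na, nk + nb - 1)
    rows = [[-y[t - i] for i in range(1, na + 1)]
            + [u[t - nk - j] for j in range(nb)]
            for t in range(start, len(y))]
    return rows, y[start:]

def fit_arx(y, u, na, nb, nk):
    """Least-squares ARX coefficients [a1..a_na, b1..b_nb] (normal equations)."""
    X, target = regressors(y, u, na, nb, nk)
    p = na + nb
    XtX = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    Xty = [sum(row[i] * t for row, t in zip(X, target)) for i in range(p)]
    return solve(XtX, Xty)

def one_step_rmse(theta, y, u, na, nb, nk):
    """RMSE of the one-step-ahead prediction on the given data."""
    X, target = regressors(y, u, na, nb, nk)
    errors = [t - sum(c * x for c, x in zip(theta, row)) for row, t in zip(X, target)]
    return (sum(e * e for e in errors) / len(errors)) ** 0.5

# Simulated system: y(t) = 0.7 y(t-1) + 0.5 u(t-2) + noise, i.e. a1 = -0.7, b1 = 0.5.
random.seed(0)
n, split, NK = 600, 400, 2
u = [random.gauss(0, 1) for _ in range(n)]
y = [0.0] * n
for t in range(2, n):
    y[t] = 0.7 * y[t - 1] + 0.5 * u[t - 2] + random.gauss(0, 0.05)

# Grid search over na and nb with nk fixed; score on the validation part only.
results = {}
for na in range(1, 4):
    for nb in range(1, 4):
        theta = fit_arx(y[:split], u[:split], na, nb, NK)
        results[(na, nb)] = one_step_rmse(theta, y[split:], u[split:], na, nb, NK)
best = min(results, key=results.get)
theta_true_order = fit_arx(y[:split], u[:split], 1, 1, NK)
print("best (na, nb):", best, "validation RMSE:", round(results[best], 4))
```

Scoring on data that were not used for fitting is what keeps the search from simply favoring the largest orders, since the training error never increases when parameters are added.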

When developing linear parametric polynomial models, it is recommended to remove the linear trends of the measured input and output data. The linear trends were removed using the least squares method.
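Least-squares detrending amounts to fitting a straight line against the sample index and subtracting it from the signal. A minimal sketch on synthetic values follows; `detrend` is a helper name introduced here.

```python
def detrend(values):
    """Fit a straight line to the series by least squares and subtract it.

    Returns (residuals, slope, intercept); the time axis is the sample index.
    """
    n = len(values)
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    slope = (
        sum((t - t_mean) * (v - y_mean) for t, v in enumerate(values))
        / sum((t - t_mean) ** 2 for t in range(n))
    )
    intercept = y_mean - slope * t_mean
    residuals = [v - (intercept + slope * t) for t, v in enumerate(values)]
    return residuals, slope, intercept

# Synthetic series: a linear drift plus a small alternating component.
series = [3.0 + 0.5 * t + (0.2 if t % 2 else -0.2) for t in range(20)]
residuals, slope, intercept = detrend(series)
print(f"slope = {slope:.3f}, intercept = {intercept:.3f}")
```

The fitted slope and intercept need to be stored if model outputs are later to be converted back to the original scale.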

The continuous 4585 data samples were divided into two groups. The first 2967 data samples were used for model development and the rest for validation.
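The split described above is a chronological one, which can be expressed as a simple slice. In the sketch below the sample counts are taken from the text, while the list of integers is only a placeholder for the time-ordered data rows.

```python
N_TOTAL, N_TRAIN = 4585, 2967

# Placeholder for the time-ordered rows; each integer stands for one sample.
samples = list(range(N_TOTAL))

# Chronological split: no shuffling, validation data follow the training data.
train, validation = samples[:N_TRAIN], samples[N_TRAIN:]
train_fraction = N_TRAIN / N_TOTAL
print(len(train), len(validation), f"{train_fraction:.1%}")
```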

After determining the optimal order of the model by choosing the optimal parameters, the final model is obtained by calculating the optimal coefficients of the polynomial matrices of the model. The coefficients were calculated using optimization algorithms integrated into the MATLAB® software package, ver. 2015a, based on the least squares method (for FIR and ARX), numerical search algorithms (for OE) and combinations of Gauss–Newton, Levenberg–Marquardt and trust-region optimization methods (for NARX and HW) [35].

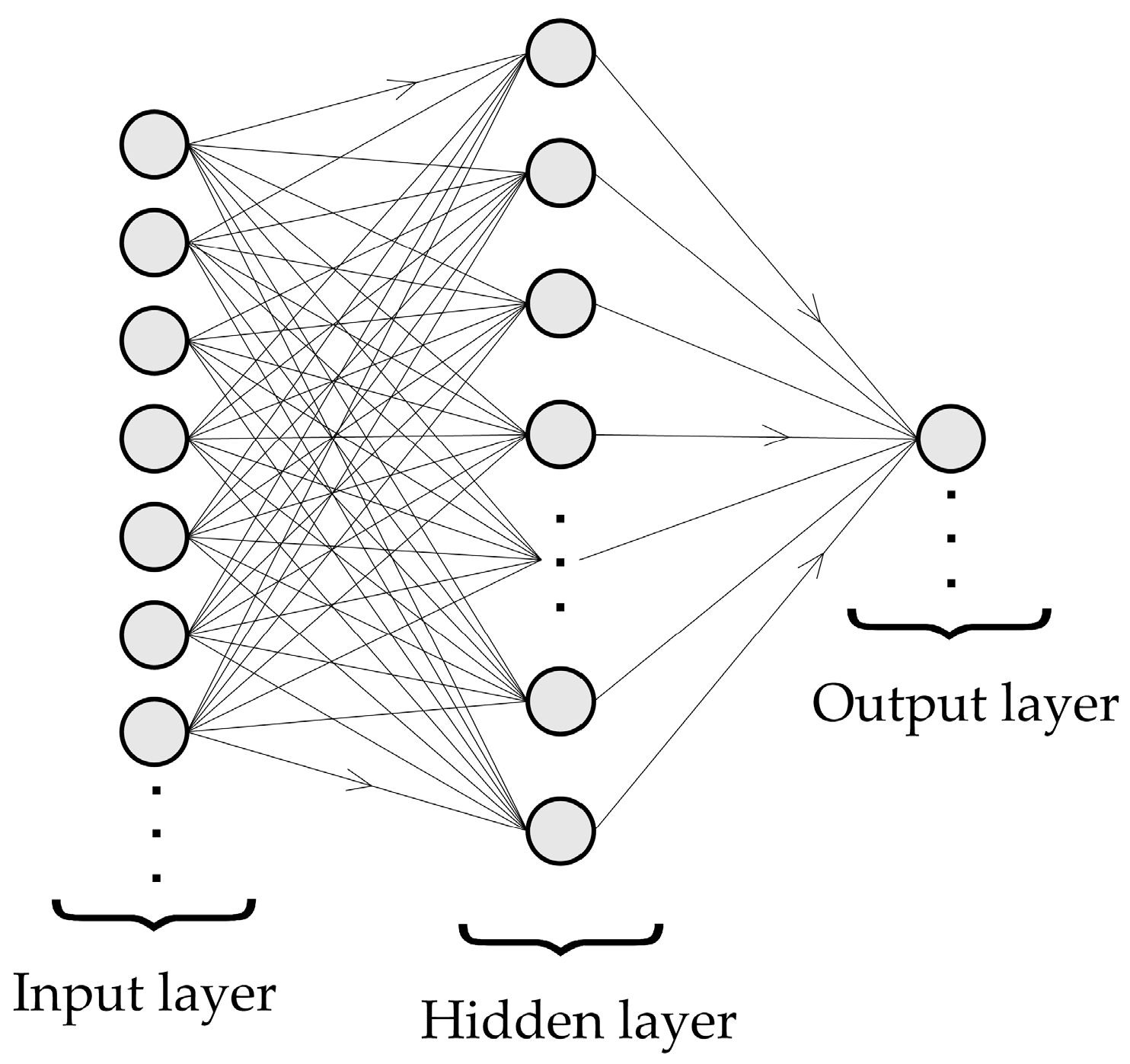

2.3.2. ANN Models

The neural network consisted of an input layer, followed by one or more hidden layers with nonlinear activation functions (rectified linear unit, ReLU, or hyperbolic tangent, tanh) and a single output layer that produces a continuous output value. The final number of hidden layers and the number of neurons per layer were selected using hyperparameter optimization. The model was trained using the ADAM (adaptive moment estimation) optimization algorithm, which uses adaptive learning rates and gradient-based updates. The loss function used in training was the mean squared error (MSE), and the model learned the layer weights by forward propagation and backpropagation of the error.
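To show what the ADAM update does with an MSE loss, the sketch below applies the standard update rule to the smallest possible "network": a single linear neuron with one weight and one bias. The data, learning rate and epoch count are illustrative choices; the actual models were trained with Keras, which applies the same rule to every layer weight.

```python
import math

def adam_fit(xs, ys, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8, epochs=1000):
    """Fit y = w * x + b by minimizing the MSE with the ADAM update rule."""
    params = [0.0, 0.0]            # [w, b]
    m = [0.0, 0.0]                 # first-moment (mean) estimates of the gradient
    v = [0.0, 0.0]                 # second-moment (uncentered variance) estimates
    n = len(xs)
    history = []
    for step in range(1, epochs + 1):
        errors = [params[0] * x + params[1] - y for x, y in zip(xs, ys)]
        history.append(sum(e * e for e in errors) / n)           # MSE loss
        grads = [2 * sum(e * x for e, x in zip(errors, xs)) / n,  # dMSE/dw
                 2 * sum(errors) / n]                             # dMSE/db
        for i, g in enumerate(grads):
            m[i] = beta1 * m[i] + (1 - beta1) * g
            v[i] = beta2 * v[i] + (1 - beta2) * g * g
            m_hat = m[i] / (1 - beta1 ** step)   # bias-corrected moments
            v_hat = v[i] / (1 - beta2 ** step)
            params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return params[0], params[1], history

# Synthetic data generated from y = 2x + 1.
xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [2 * x + 1 for x in xs]
w, b, history = adam_fit(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}, final MSE = {history[-1]:.2e}")
```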

Prior to training, the input features were standardized using z-score normalization. The data samples were randomized and split into a training and a test set in the same proportion as for the dynamic polynomial models, while the predictions were later reconstructed and evaluated in chronological order. The model was developed in the Python 3.9.6 programming language with the Keras Tuner library 2.13.1. To optimize the model architecture (e.g., the number of hidden layers, the number of neurons per layer, the activation functions and the learning rate), a random search strategy within Keras Tuner was used, as the problem complexity did not require a more robust hyperparameter optimization method such as the Hyperband optimization algorithm.
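The data handling in this paragraph (random split, z-score standardization, later return to chronological order) can be sketched as follows. Two points are assumptions rather than statements from the text: the scaling statistics are computed from the training samples only, and the random seed is fixed for reproducibility. The feature values are placeholders.

```python
import random
import statistics

# Placeholder feature, one value per time step (NOT plant data).
values = [float(v) for v in range(20)]

# Randomized split on the sample indices, using the 2967:1618 proportion.
indices = list(range(len(values)))
rng = random.Random(42)                      # fixed seed (assumption)
rng.shuffle(indices)
n_train = round(len(indices) * 2967 / 4585)
train_idx, test_idx = indices[:n_train], indices[n_train:]

# z-score parameters estimated from the training samples only (assumption).
train_values = [values[i] for i in train_idx]
mean = statistics.fmean(train_values)
std = statistics.pstdev(train_values)
scaled = [(v - mean) / std for v in values]

# Sorting the test indices restores chronological order for evaluation plots.
test_in_time_order = sorted(test_idx)
print(n_train, test_in_time_order)
```

Keeping the original indices alongside the shuffled samples is what makes the later chronological reconstruction of the predictions possible.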

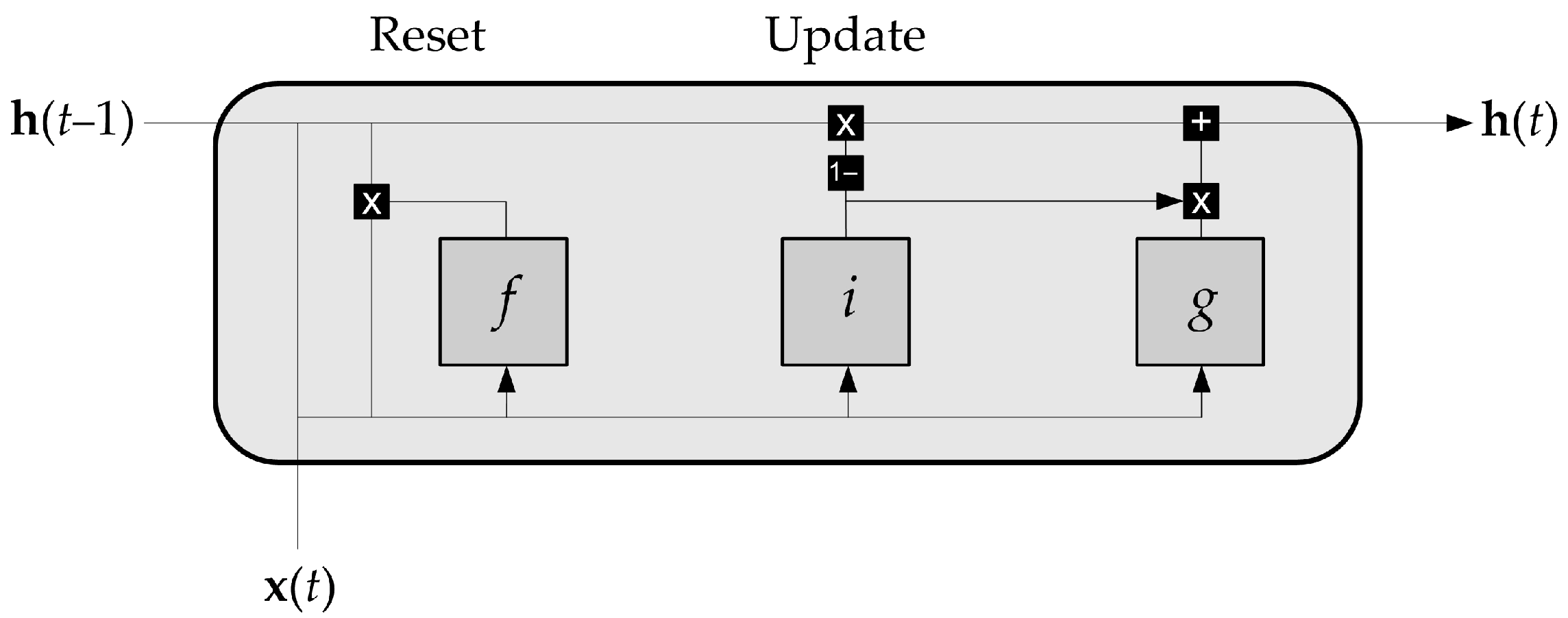

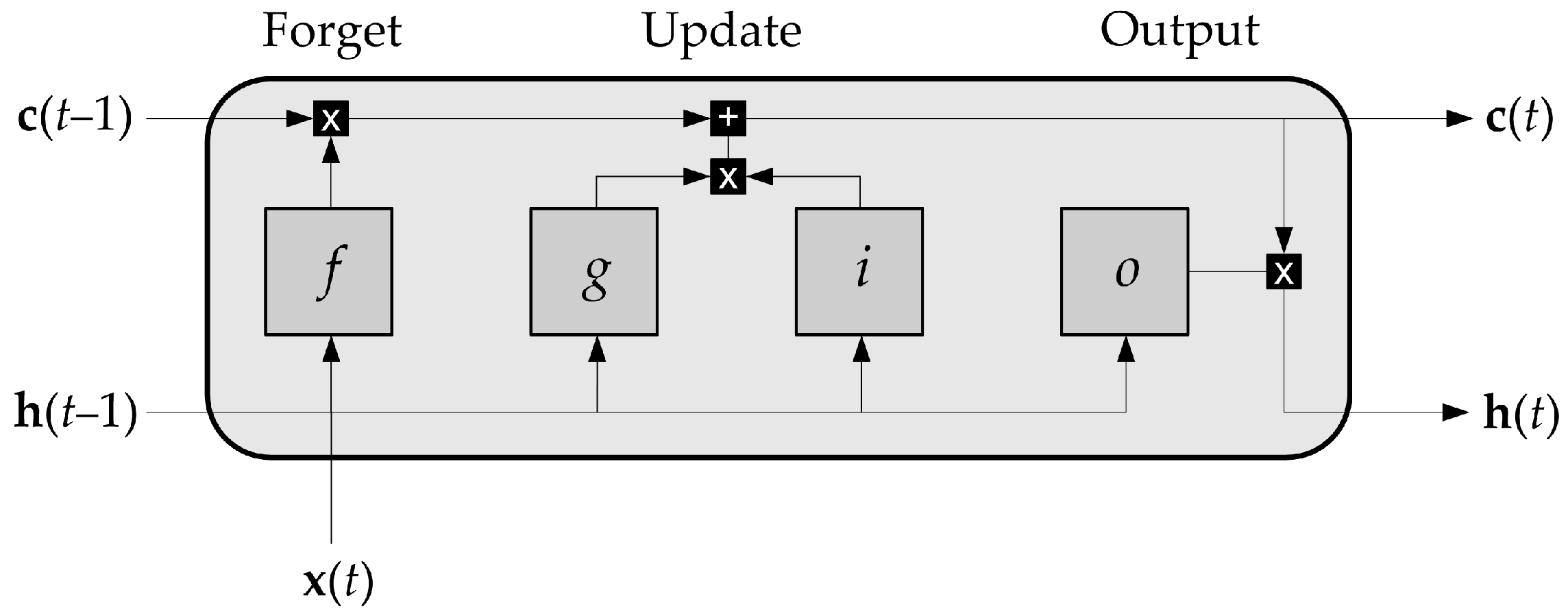

2.3.3. LSTM and GRU Networks

Although the static ANNs in this case study can provide satisfactory results, LSTM networks are commonly applied to better capture temporal dependencies, as the process data from the plant environment is dynamic.

To model the dynamic behavior of the HDS process plant, an LSTM neural network was implemented in the Python 3.9.6 programming language with the TensorFlow and Keras libraries. The input process variables were standardized (z-score) and transformed into supervised learning sequences with a sliding time window of 100 samples due to the slow dynamics of the process. The data set was split into a training and a test data set in the same proportion as for the dynamic polynomial models, keeping the chronological order to reflect realistic plant conditions.
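The transformation into supervised learning sequences can be illustrated with a short helper. The sketch assumes that each window of 100 consecutive input rows is paired with the output value at the last time step of that window, which matches the stated goal of estimating the current sulfur content. The exact alignment used in the study is not given, and the data below are placeholders.

```python
def make_sequences(features, target, window=100):
    """Build (X, y) pairs: X[k] is `window` consecutive feature rows and
    y[k] is the target at the last time step of that window."""
    X, y = [], []
    for end in range(window, len(features) + 1):
        X.append(features[end - window:end])
        y.append(target[end - 1])
    return X, y

# Placeholder data: 250 time steps with 6 input variables each.
n_steps, n_inputs = 250, 6
features = [[float(t + k) for k in range(n_inputs)] for t in range(n_steps)]
target = [float(t) for t in range(n_steps)]

X, y = make_sequences(features, target, window=100)
print(len(X), len(X[0]), len(X[0][0]))   # sequences, time steps, inputs
```

The resulting three-level structure (sequences, time steps, inputs) corresponds to the three-dimensional input shape that recurrent layers in Keras expect.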

The LSTM hyperparameters were initially optimized using random search and then using the Keras Tuner library with the Hyperband search strategy. The hyperparameter space included the number of LSTM layers (2–3), the number of units per layer (128–256), the dropout rates (0.1–0.3), the L2 regularization coefficients (0.00001–0.001), the activation functions in the output layer (tanh or sigmoid), and the learning rates (0.0001–0.01). Batch normalization was applied after each LSTM layer to stabilize the training.
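A random search draws candidate configurations independently from the ranges listed above. The sketch below reproduces only that sampling step in plain Python. It does not use or imitate the Keras Tuner API, and sampling the L2 coefficient and the learning rate on a logarithmic scale is an assumption made here because both ranges span two orders of magnitude.

```python
import math
import random

# Search space taken from the ranges given in the text.
SPACE = {
    "lstm_layers": ("int", 2, 3),
    "units": ("int", 128, 256),
    "dropout": ("uniform", 0.1, 0.3),
    "l2": ("log", 1e-5, 1e-3),
    "learning_rate": ("log", 1e-4, 1e-2),
    "output_activation": ("choice", "tanh", "sigmoid"),
}

def sample(space, rng):
    """Draw one configuration from the search space."""
    config = {}
    for name, (kind, *args) in space.items():
        if kind == "int":
            config[name] = rng.randint(*args)           # inclusive bounds
        elif kind == "uniform":
            config[name] = rng.uniform(*args)
        elif kind == "log":
            lo, hi = args                                # log-uniform draw
            config[name] = 10 ** rng.uniform(math.log10(lo), math.log10(hi))
        else:
            config[name] = rng.choice(args)
    return config

rng = random.Random(0)
trials = [sample(SPACE, rng) for _ in range(5)]
for trial in trials:
    print(trial)
```

Each sampled configuration would then be used to build and train one candidate network, with the validation loss deciding which configuration is kept.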

In the output layer, nonlinear activation functions were tested instead of the default linear setting to improve the representation of bounded target values. The model was trained with the ADAM optimizer and a batch size of 32, using early stopping to prevent overfitting.
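The early stopping rule can be stated in a few lines: training halts once the validation loss has failed to improve for a given number of consecutive epochs, and the weights from the best epoch are kept. The sketch below applies this rule to a list of made-up validation losses. The patience value is hypothetical, since the text does not report it, and the function name is introduced here.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops after `patience` consecutive epochs without an
    improvement larger than `min_delta` over the best loss seen so far.
    """
    best_loss, best_epoch, wait = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Made-up validation losses: the loss stalls after epoch 2.
losses = [1.0, 0.8, 0.7, 0.72, 0.71, 0.73, 0.74, 0.6]
stop_epoch, best_epoch = early_stopping_epoch(losses, patience=3)
print(stop_epoch, best_epoch)
```

In this example the late improvement at the last epoch is never reached, which illustrates the trade-off behind the patience setting: a small value saves training time but can stop before a later improvement.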

A GRU model was also developed and tuned using both random search and Hyperband optimization strategies to potentially improve on the overall performance achieved by the LSTM model.

2.3.4. Model Validation

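The performance of the models developed was evaluated using four statistical indicators: the Pearson correlation coefficient (R), the coefficient of determination (R²), the root mean square error (RMSE), and the mean absolute error (MAE) (Equations (10)–(13)):

$$R = \frac{\sum_{i=1}^{n}\left(x_i-\bar{x}\right)\left(y_i-\bar{y}\right)}{\sqrt{\sum_{i=1}^{n}\left(x_i-\bar{x}\right)^2\,\sum_{i=1}^{n}\left(y_i-\bar{y}\right)^2}} \tag{10}$$

$$R^2 = 1-\frac{\sum_{i=1}^{n}\left(y_i-\hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i-\bar{y}\right)^2} \tag{11}$$

$$RMSE = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i-\hat{y}_i\right)^2} \tag{12}$$

$$MAE = \frac{1}{n}\sum_{i=1}^{n}\left|y_i-\hat{y}_i\right| \tag{13}$$

where $x_i$ is the measured input, $y_i$ is the measured output, $\hat{y}_i$ is the model output, $\bar{x}$ is the mean of all measured inputs, $\bar{y}$ is the mean of all measured outputs, and $n$ is the number of values in the observed data set.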
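The four indicators can be computed directly from their standard definitions, as in the following sketch. The measured and estimated values are made up for illustration, and the Pearson coefficient is evaluated here between the measured output and the model output, which is an assumption about how R is applied in model evaluation.

```python
import math

def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    return cov / math.sqrt(
        sum((p - ma) ** 2 for p in a) * sum((q - mb) ** 2 for q in b)
    )

def r_squared(y, y_hat):
    """Coefficient of determination: 1 - SSE / SST."""
    mean_y = sum(y) / len(y)
    sse = sum((m - e) ** 2 for m, e in zip(y, y_hat))
    sst = sum((m - mean_y) ** 2 for m in y)
    return 1 - sse / sst

def rmse(y, y_hat):
    """Root mean square error."""
    return math.sqrt(sum((m - e) ** 2 for m, e in zip(y, y_hat)) / len(y))

def mae(y, y_hat):
    """Mean absolute error."""
    return sum(abs(m - e) for m, e in zip(y, y_hat)) / len(y)

# Made-up measured and estimated values (NOT results from the study).
measured = [1.0, 2.0, 3.0, 4.0]
estimated = [1.1, 1.9, 3.2, 3.8]

metrics = {
    "R": pearson_r(measured, estimated),
    "R2": r_squared(measured, estimated),
    "RMSE": rmse(measured, estimated),
    "MAE": mae(measured, estimated),
}
print({name: round(value, 4) for name, value in metrics.items()})
```

R measures only the linear association, so a model with a constant offset can still reach R close to 1; RMSE and MAE expose such an offset, which is why the indicators are reported together.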

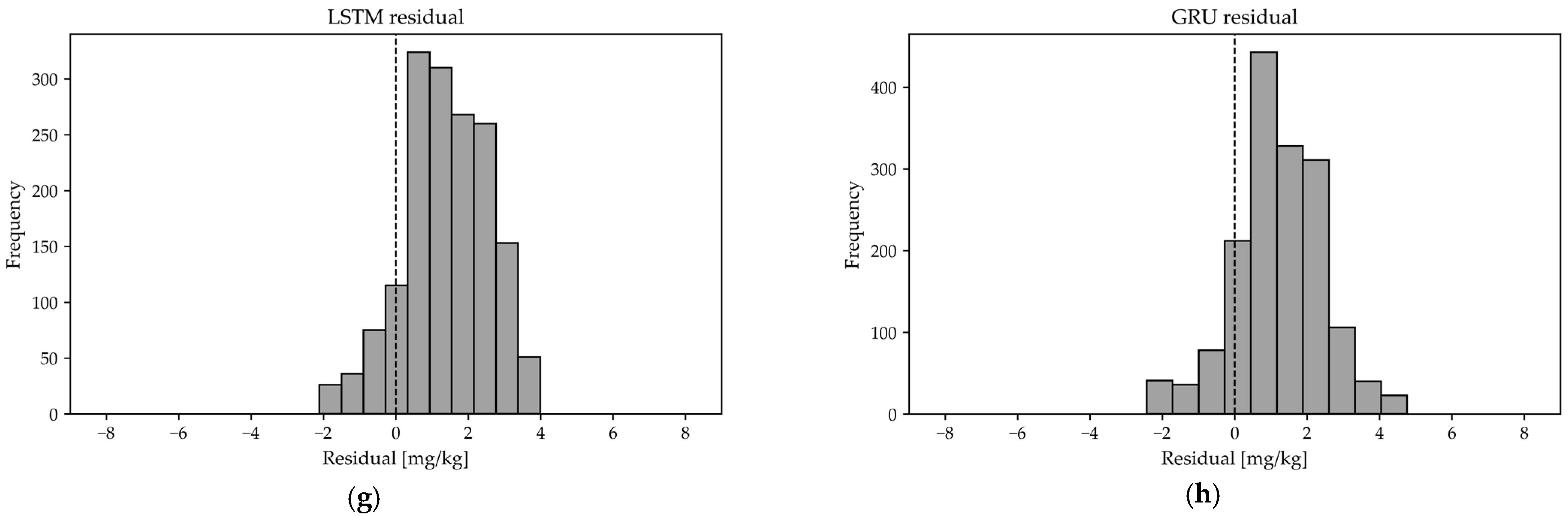

All the developed models were evaluated using the same evaluation criteria (R, R², RMSE, MAE) based on the entire data set and the test set so that the developed models can be compared and interpreted. The models were also evaluated and interpreted in the form of graphical representations showing the comparison between the measured data and the model estimate of the sulfur content. The models were additionally evaluated using the histograms of the model residuals. It should be noted that all models were developed with the same number of training and test samples (2967 for training and 1618 for testing), which ensures a consistent evaluation.