Association Model-Based Intermittent Connection Fault Diagnosis for Controller Area Networks

Abstract

1. Introduction

- The proposed diagnostic method applies to a wider range of fault conditions: it can fully locate intermittent connection (IC) faults in complex scenarios that existing methods cannot handle, such as IC faults on multiple trunk cables.

- Fault symptom association models are developed to quantify the fault probability of each cable and the causal relationship between each cable fault and the recorded symptoms, which yields higher diagnostic accuracy than existing methods.

- The proposed framework is more efficient: it produces an accurate result in a single diagnostic pass, whereas existing methods require repeated diagnosis of several areas of the network.

2. Preliminaries

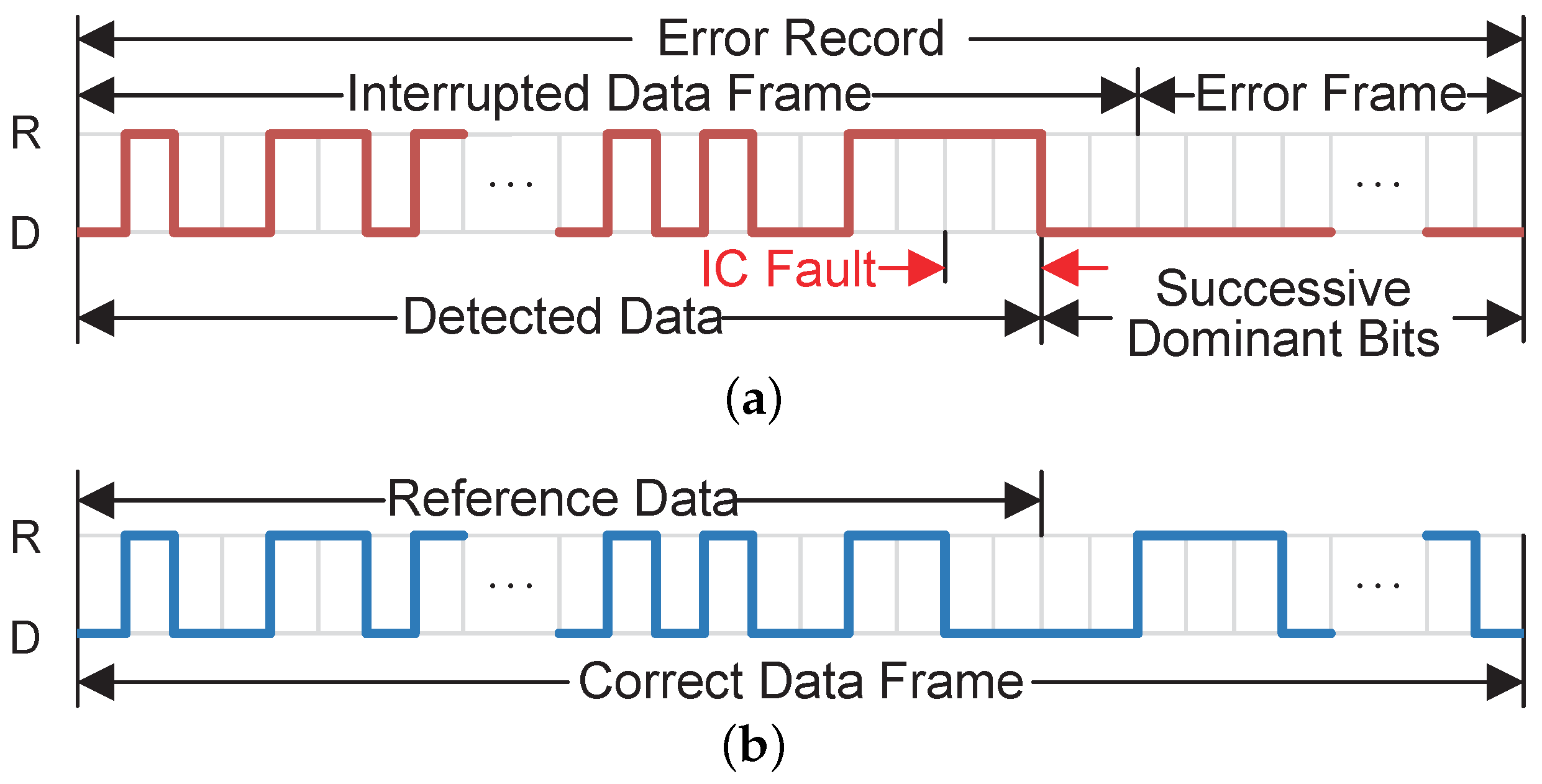

2.1. CAN Fault-Handling Mechanism
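As a reference point for this mechanism, the sketch below models the fault-confinement counters defined in the Bosch CAN 2.0 specification and ISO 11898-1: each node keeps a transmit error counter (TEC) and a receive error counter (REC), and its error state follows from their values. It is deliberately simplified: the exceptions listed in the standard (for example, the +8 increment a receiver gets when it detects a dominant bit right after its own error flag) and bus-off recovery are omitted, and the class and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ErrorCounters:
    """Simplified CAN fault-confinement counters (hypothetical class name).

    Omitted on purpose: the exceptions listed in the specification, the +8
    receiver rule, and bus-off recovery (128 occurrences of 11 recessive bits).
    """

    tec: int = 0  # transmit error counter
    rec: int = 0  # receive error counter

    def transmit_error(self) -> None:
        # A transmitter that signals an error increases TEC by 8.
        self.tec += 8

    def receive_error(self) -> None:
        # A receiver that detects an error increases REC by 1.
        self.rec += 1

    def transmit_success(self) -> None:
        # A successful transmission decreases TEC by 1, never below 0.
        self.tec = max(self.tec - 1, 0)

    def receive_success(self) -> None:
        # A successful reception decreases REC by 1 if it is between 1 and 127;
        # above 127 the specification resets it to a value from 119 to 127,
        # and 127 is chosen here arbitrarily.
        if 1 <= self.rec <= 127:
            self.rec -= 1
        elif self.rec > 127:
            self.rec = 127

    def state(self) -> str:
        # Bus-off once TEC exceeds 255; error-passive once either counter
        # exceeds 127; error-active otherwise.
        if self.tec > 255:
            return "bus-off"
        if self.tec > 127 or self.rec > 127:
            return "error-passive"
        return "error-active"


node = ErrorCounters()
for _ in range(16):
    node.transmit_error()
print(node.tec, node.state())  # 128 error-passive
```

Because a transmit error costs 8 while a success recovers only 1, a node whose frames are repeatedly corrupted drifts toward error-passive and bus-off, which is why the resulting error frames carry information about where a fault sits.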

2.2. Introduction to IC Faults

2.2.1. Local IC Faults

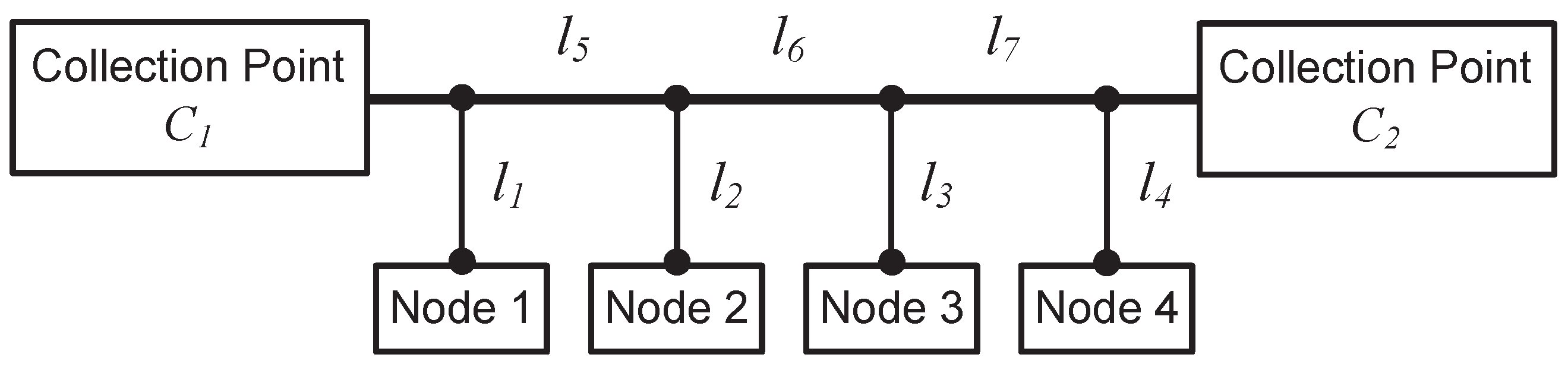

2.2.2. Trunk IC Faults

2.3. Problem Definition

- (1) Given a sequence of error frames, how can the error patterns be described so that the IC fault category can be identified?

- (2) How can the range of possible IC faults implied by each error pattern be determined, and how can the probability of each IC fault be quantified precisely?

- (3) How can the exact IC fault locations be filtered from this fault range without misdiagnosis or missed diagnosis?
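To make question (2) concrete, the sketch below computes a symptom domain for a trunk IC fault under a deliberately simple, hypothetical model: nodes are indexed in their order along a linear trunk, segment k joins node k and node k+1, and a reception error observed at one node for a frame sent by another is assumed to implicate every trunk segment between the two. This rule and the function names are illustrative assumptions, not the derivation used in the paper, which also covers symptom domains for local IC faults.

```python
from typing import Dict, Iterable, Set, Tuple


def trunk_symptom_domain(transmitter: int, receiver: int) -> Set[int]:
    """Trunk segments between two nodes on a linear bus (hypothetical helper).

    Nodes are indexed 0..n-1 along the trunk and segment k joins node k and
    node k+1. Assumption: only an intermittent open on one of these segments
    can corrupt frames travelling between the two nodes.
    """
    lo, hi = sorted((transmitter, receiver))
    return set(range(lo, hi))


def domains(symptoms: Iterable[Tuple[int, int]]) -> Dict[Tuple[int, int], Set[int]]:
    """Map each (transmitter, receiver) symptom to its candidate segment set."""
    return {s: trunk_symptom_domain(*s) for s in symptoms}


observed = [(0, 3), (4, 2)]
print(domains(observed))  # {(0, 3): {0, 1, 2}, (4, 2): {2, 3}}
```

Segment 2 lies in both domains, so under this toy model a single fault on segment 2 would explain both symptoms; choosing between that explanation and a multi-fault one is exactly the filtering problem posed in question (3).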

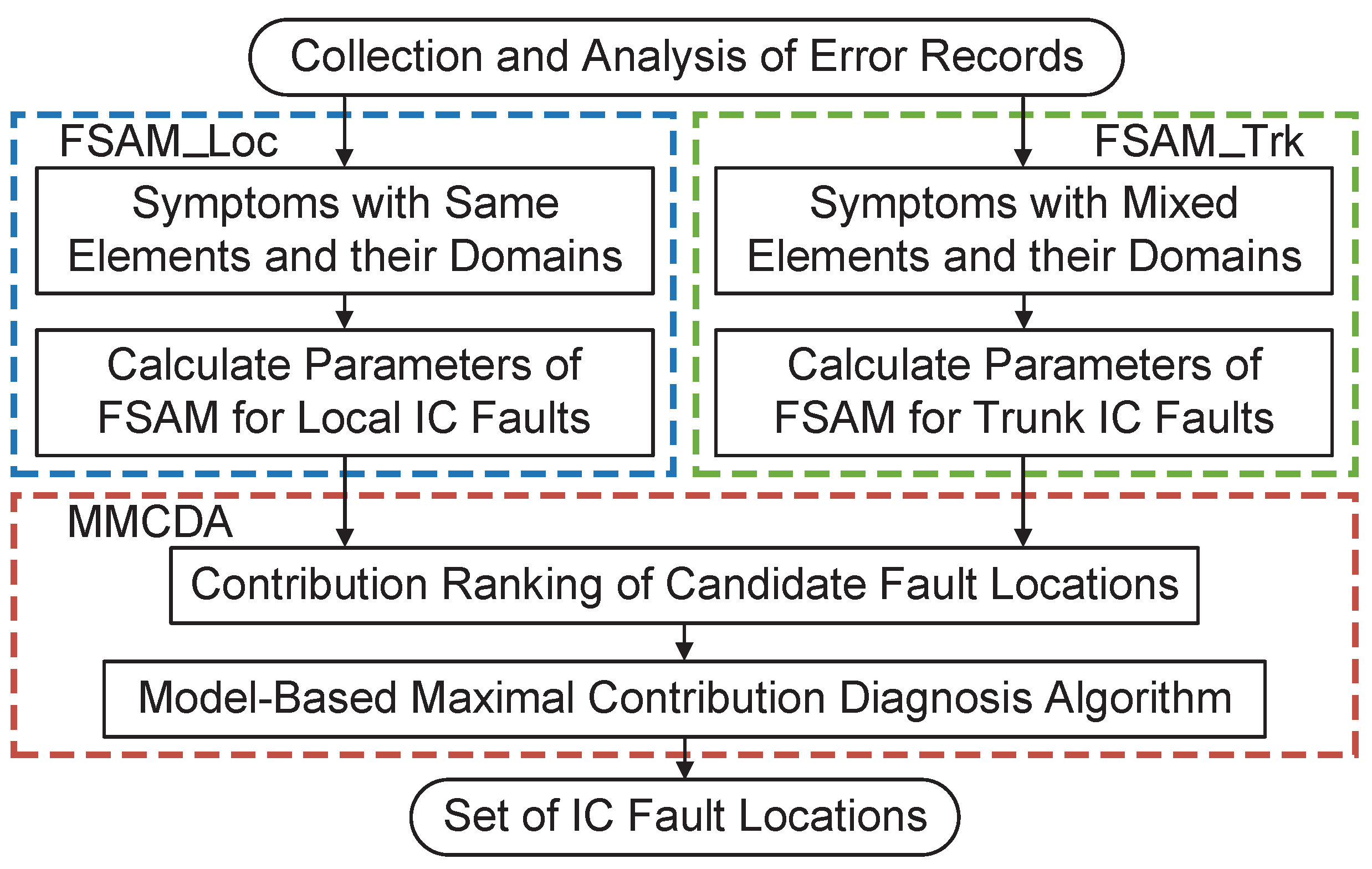

3. Methodology

3.1. Collection and Analysis of Error Records

3.2. Generation of Symptoms

3.2.1. Symptom Modes for Local IC Faults

3.2.2. Symptom Modes for Trunk IC Faults

3.3. Derivation of Symptom Domains

3.3.1. Symptom Domains for Local IC Faults

3.3.2. Symptom Domains for Trunk IC Faults

3.4. Fault Symptom Association Model (FSAM)

3.4.1. FSAM for Local IC Faults (FSAM_Loc)

3.4.2. FSAM for Trunk IC Faults (FSAM_Trk)

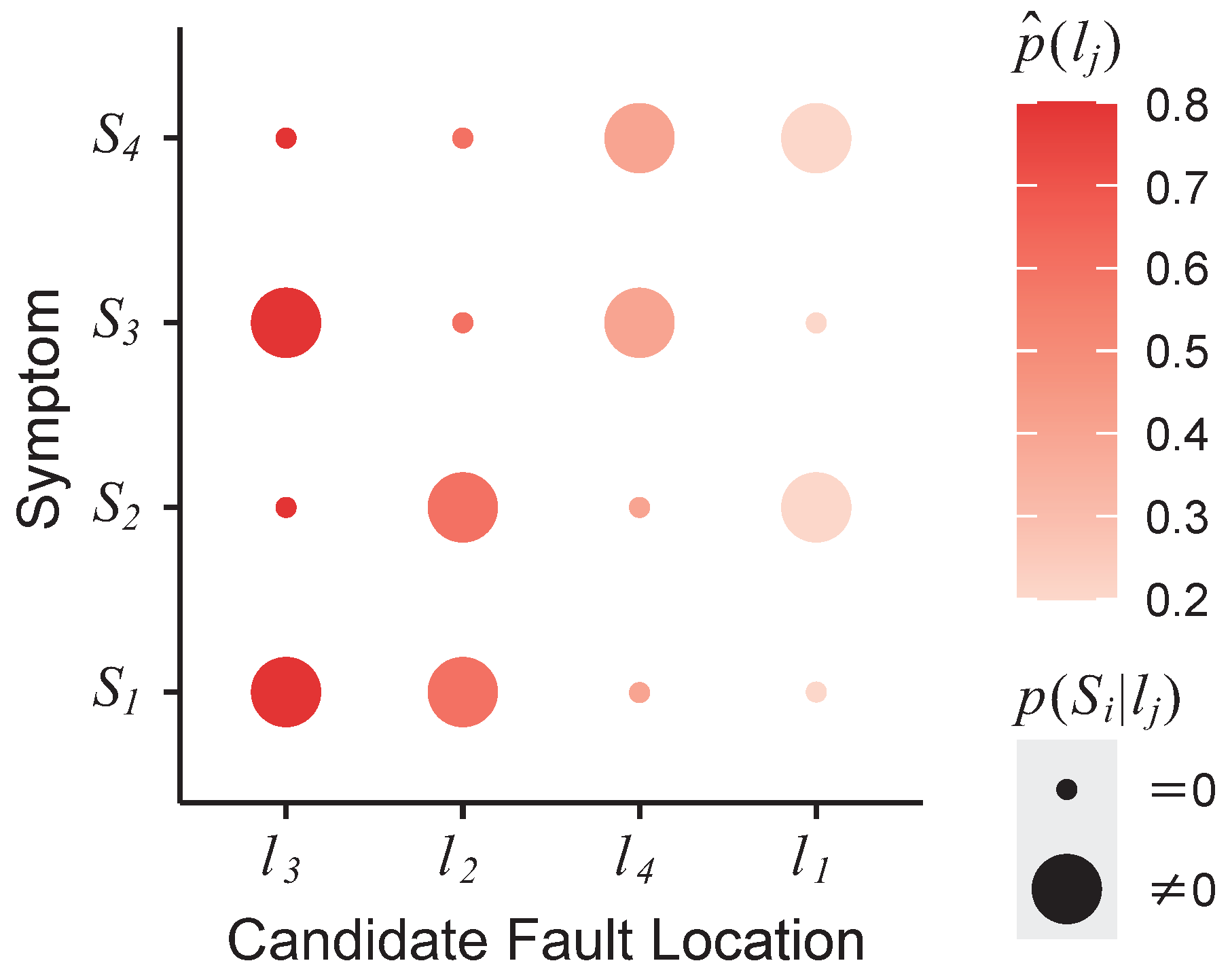

3.5. Model-Based Maximal Contribution Diagnosis Algorithm (MMCDA)

Algorithm 1. Model-Based Maximal Contribution Diagnosis Algorithm (MMCDA)

Input: symptom set (or ), contribution ranking

Output: best-explanation fault set F
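The inputs and output of Algorithm 1 suggest a selection loop that keeps adding the fault with the largest contribution until the observed symptoms are explained. The sketch below implements that idea as a plain greedy set-cover heuristic, assuming that a fault's contribution equals the number of still-unexplained symptoms it would produce, optionally scaled by a weight such as a model-derived fault probability. The data structures, the scoring rule, and the labels `T1`, `T2`, `L5`, `s1`–`s4` are hypothetical; the paper's MMCDA may rank and prune candidates differently.

```python
from typing import Dict, List, Optional, Set


def greedy_best_explanation(
    symptoms: Set[str],
    explains: Dict[str, Set[str]],
    weight: Optional[Dict[str, float]] = None,
) -> List[str]:
    """Greedy set-cover heuristic (hypothetical stand-in for MMCDA).

    symptoms: observed symptom identifiers.
    explains: candidate fault -> symptoms that fault would produce.
    weight:   optional per-fault multiplier, e.g. a model-derived probability.
    Returns faults in the order they were selected.
    """
    weight = weight or {}
    unexplained = set(symptoms)
    chosen: List[str] = []
    while unexplained:
        best, best_score = None, 0.0
        # Iterate in sorted order so ties go to the first fault by name.
        for fault in sorted(explains):
            score = len(explains[fault] & unexplained) * weight.get(fault, 1.0)
            if score > best_score:
                best, best_score = fault, score
        if best is None:
            break  # no candidate explains any remaining symptom
        chosen.append(best)
        unexplained -= explains[best]
    return chosen


candidates = {
    "T1": {"s1", "s2"},
    "T2": {"s2", "s3", "s4"},
    "L5": {"s4"},
}
print(greedy_best_explanation({"s1", "s2", "s3", "s4"}, candidates))  # ['T2', 'T1']
```

Greedy set cover does not guarantee a minimum-size explanation; it is shown only to make the input/output contract of Algorithm 1 tangible.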

4. Experiments

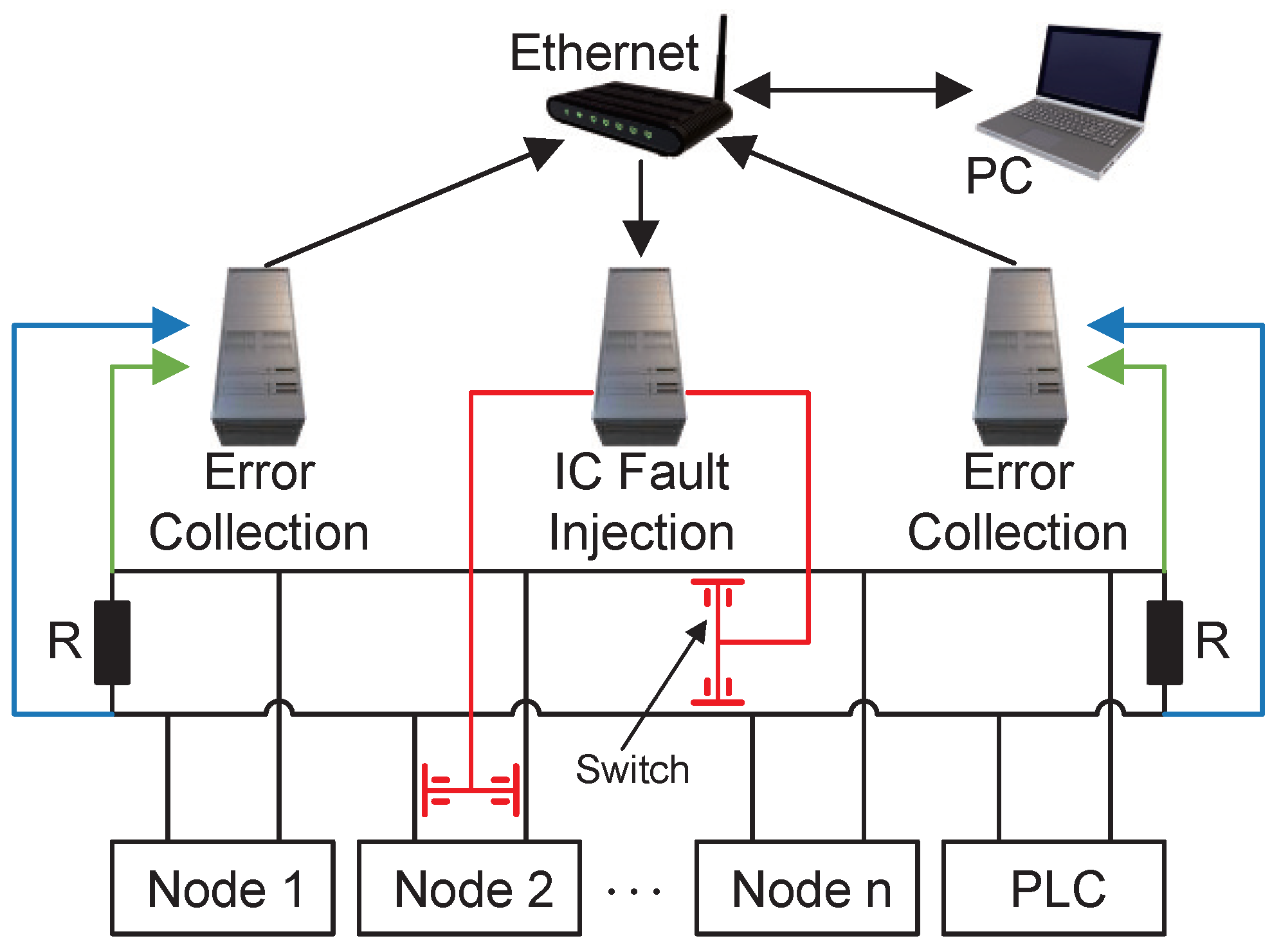

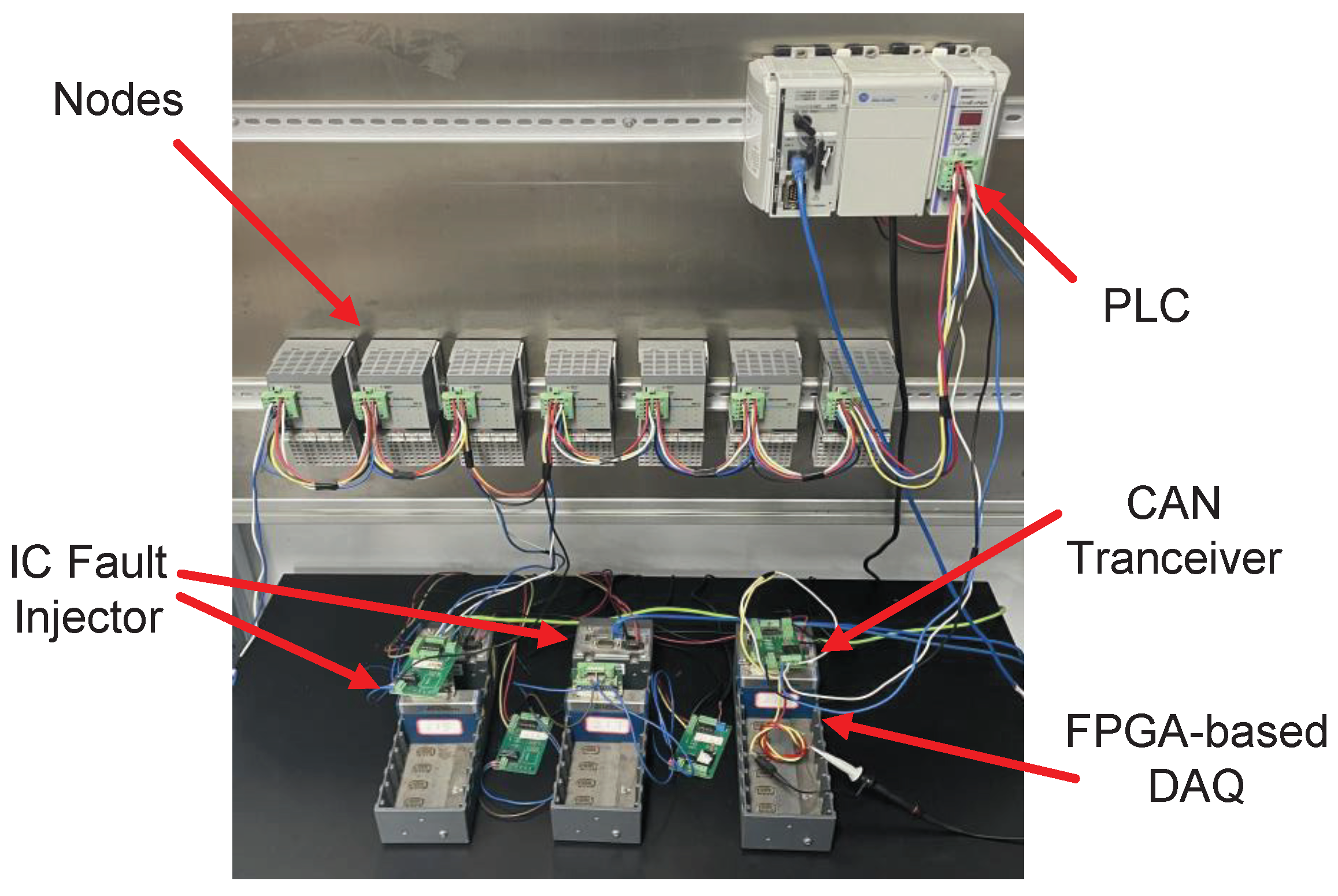

4.1. Testbed Setup

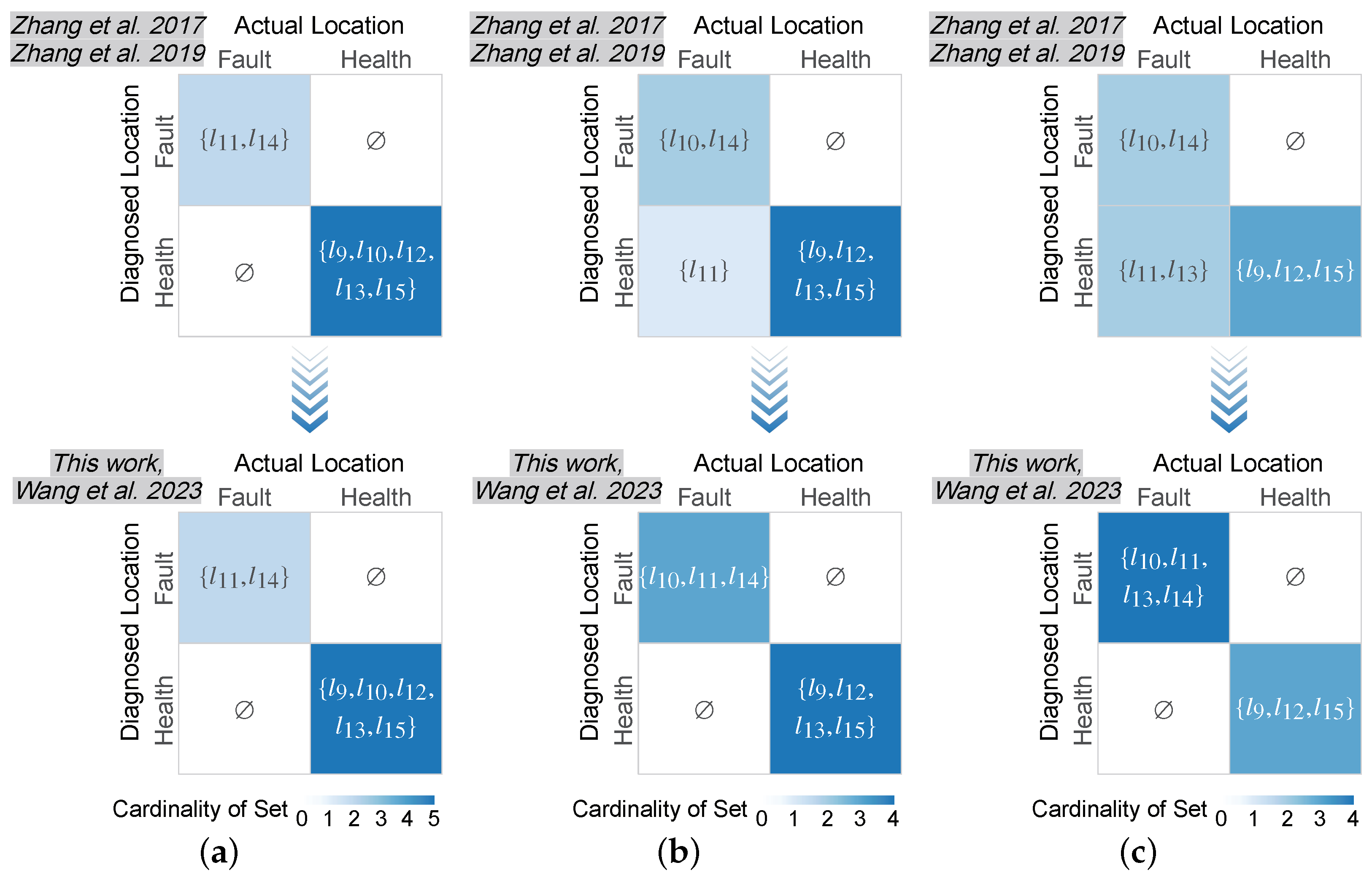

4.2. Case Study 1: Two Local IC Faults and Two Trunk IC Faults

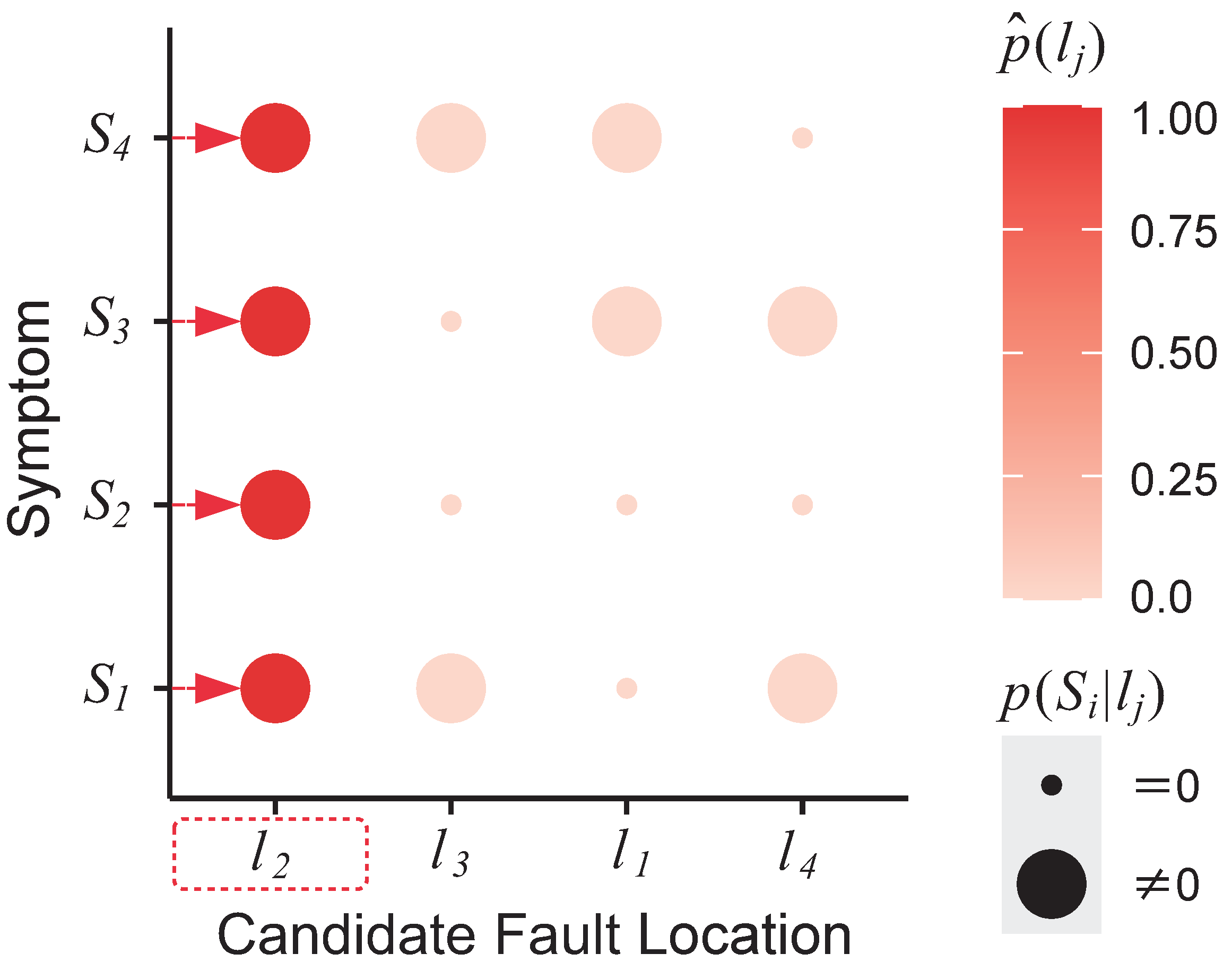

4.2.1. Local IC Fault Diagnosis

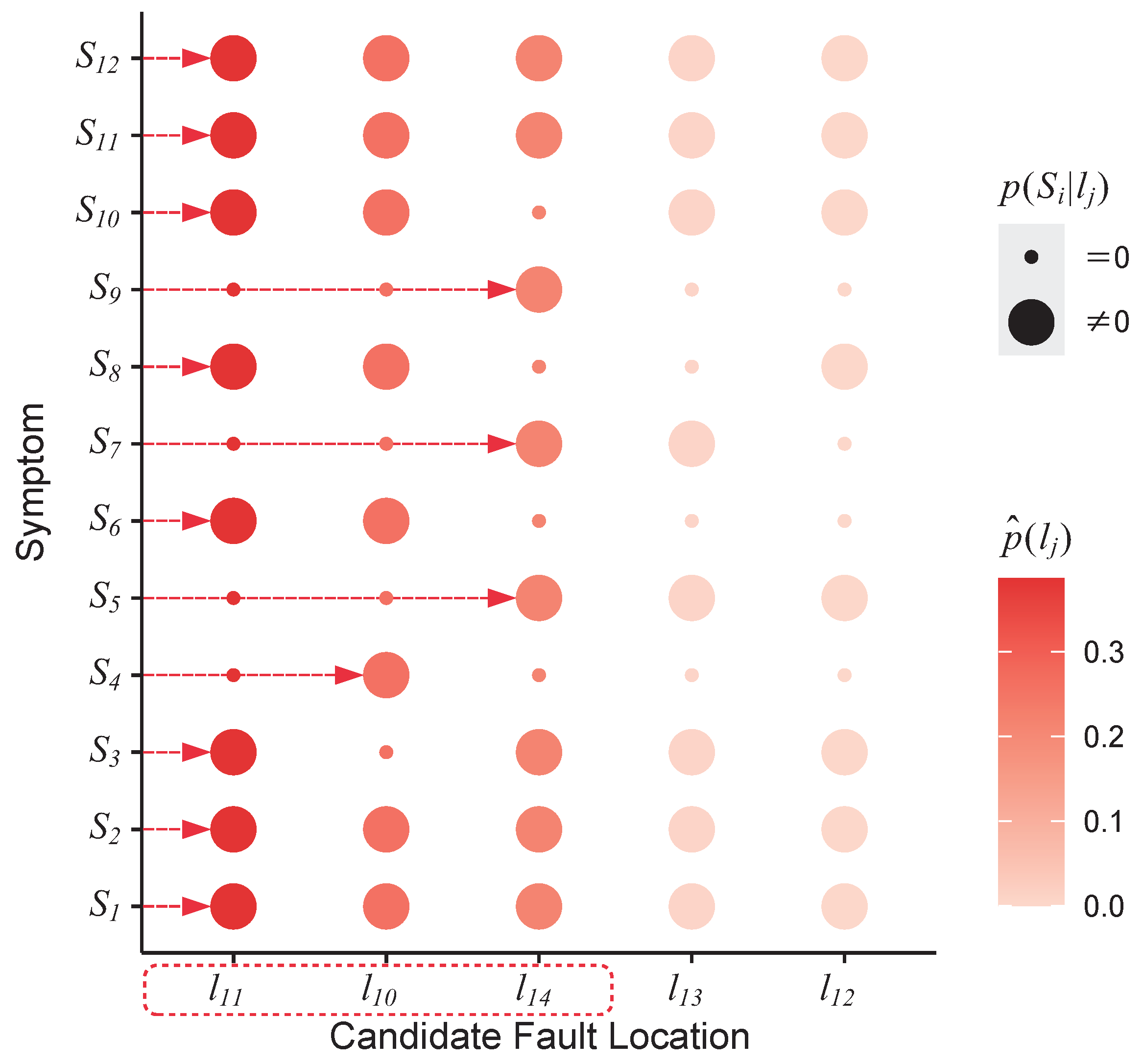

4.2.2. Trunk IC Fault Diagnosis

4.3. Case Study 2: One Local IC Fault and Three Trunk IC Faults

4.3.1. Local IC Fault Diagnosis

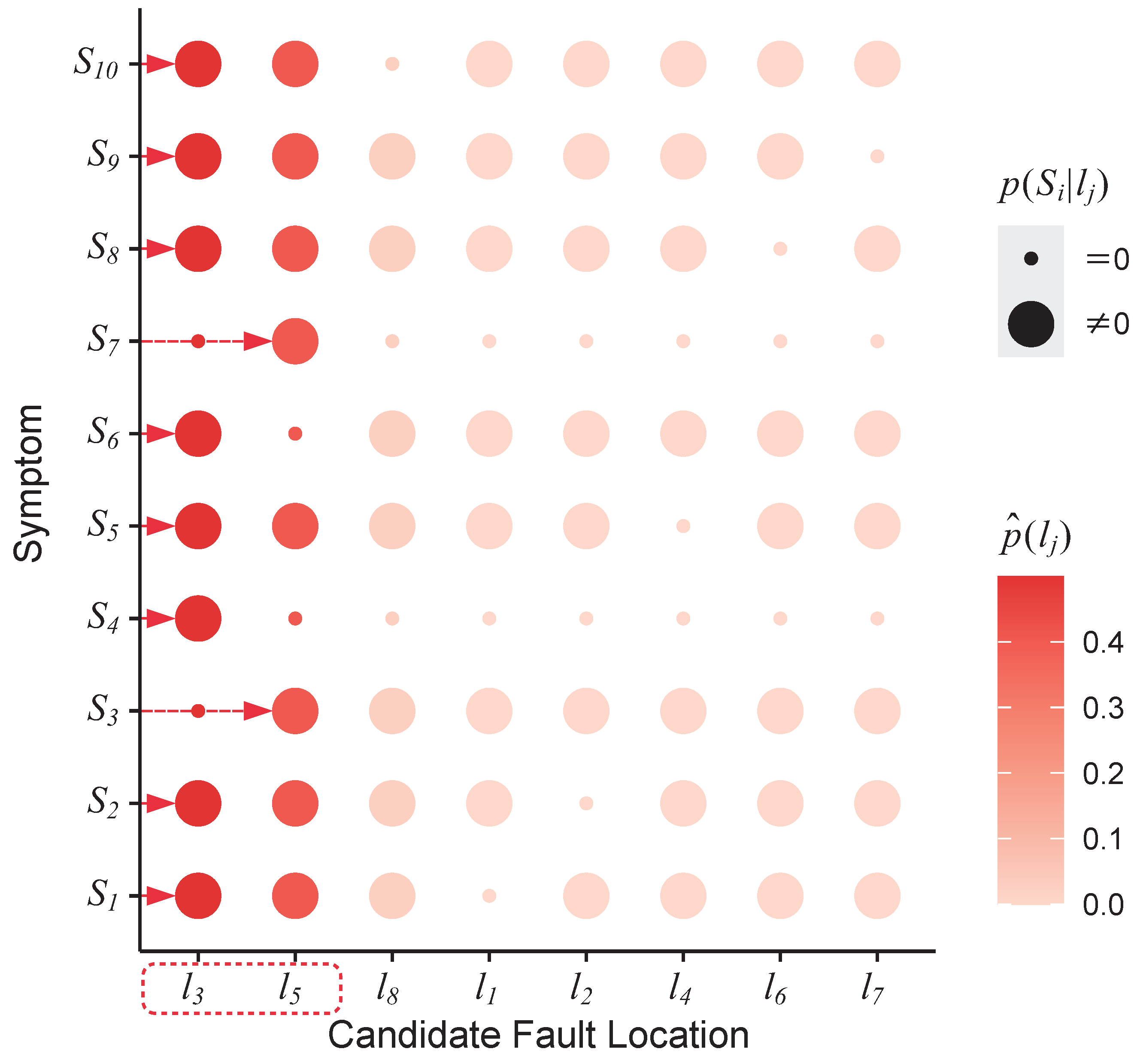

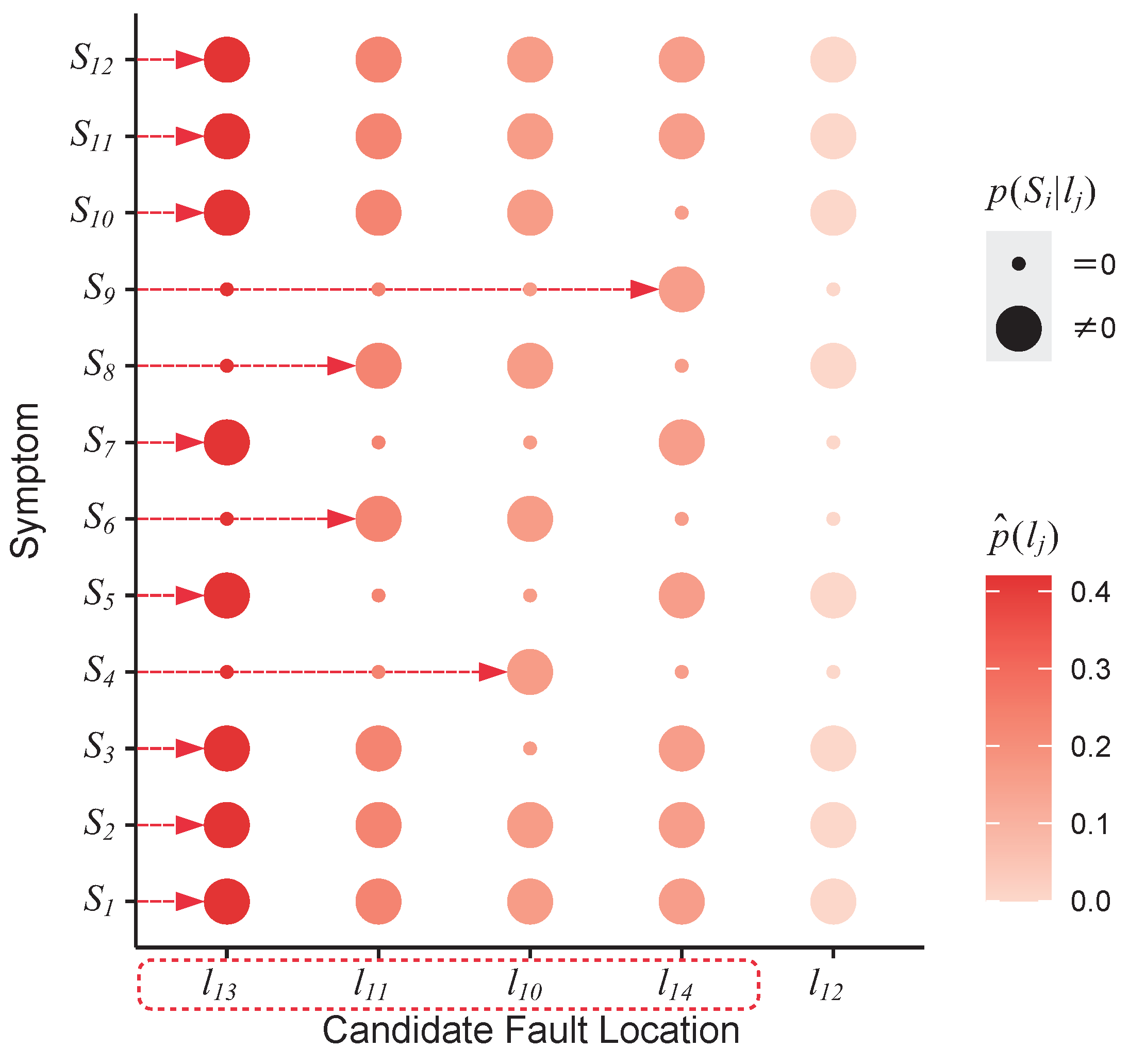

4.3.2. Trunk IC Fault Diagnosis

4.4. Case Study 3: Four Trunk IC Faults

5. Discussion

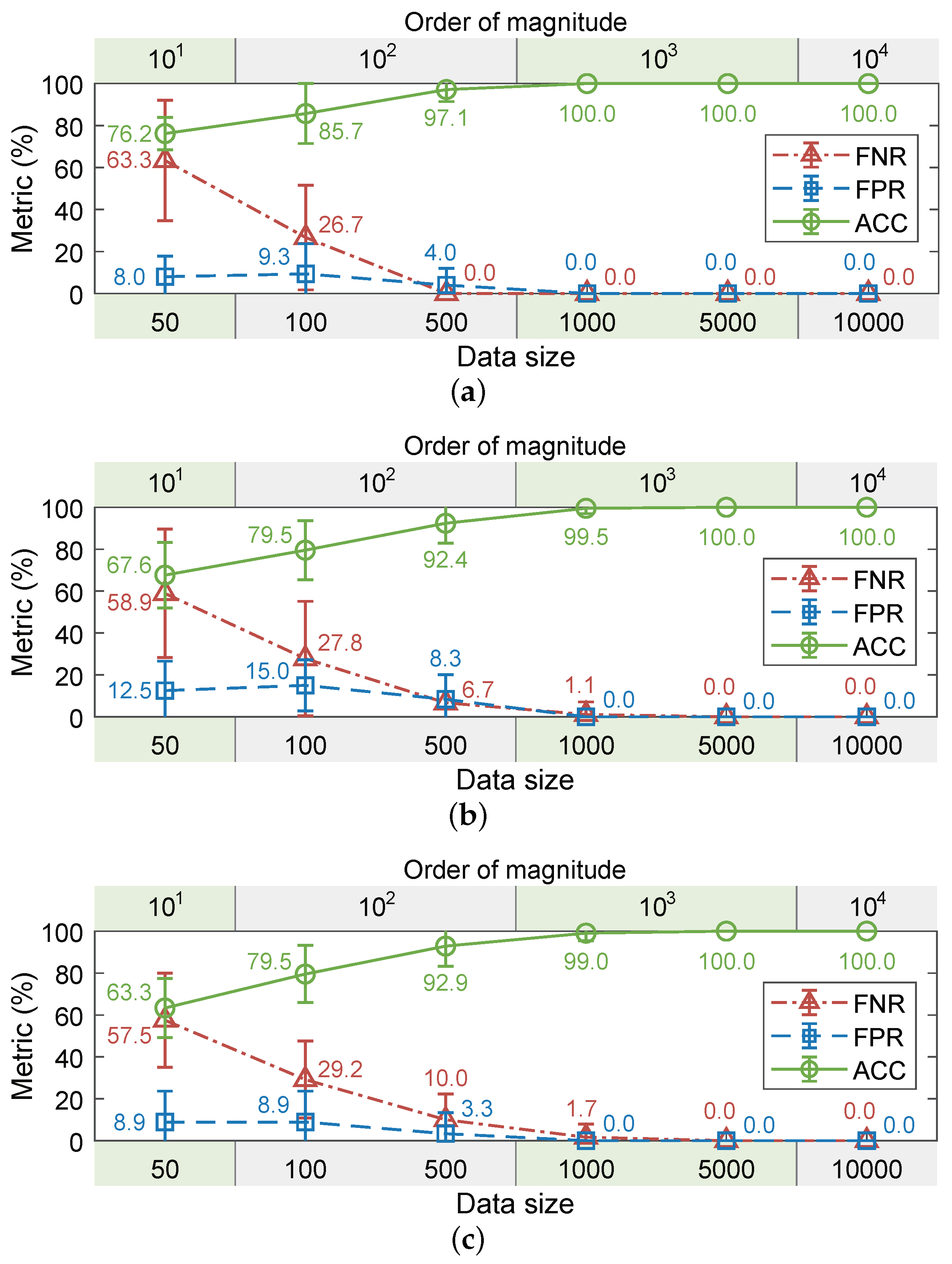

5.1. Diagnostic Accuracy

5.2. Diagnostic Efficiency

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Farsi, M.; Ratcliff, K.; Barbosa, M. An overview of controller area network. Comput. Control Eng. J. 1999, 10, 113–120.

2. Technical Report IEEE Standard 100-2000; The Authoritative Dictionary of IEEE Standards Terms. IEEE: Piscataway, NJ, USA, 2000.

3. Ismaeel, A.A.; Bhatnagar, R. Test for detection and location of intermittent faults in combinational circuits. IEEE Trans. Reliab. 1997, 46, 269–274.

4. Fang, X.; Qu, J.; Tang, Q.; Chai, Y. Intermittent fault recognition of analog circuits in the presence of outliers via density peak clustering with adaptive weighted distance. IEEE Sens. J. 2023, 23, 13351–13359.

5. Sydor, P.; Kavade, R.; Hockley, C.J. Warranty Impacts from No Fault Found (NFF) and an Impact Avoidance Benchmarking Tool. In Advances in Through-Life Engineering Services; Redding, L., Roy, R., Shaw, A., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 245–259.

6. Khan, S.; Phillips, P.; Jennions, I.; Hockley, C. No fault found events in maintenance engineering Part 1: Current trends, implications and organizational practices. Reliab. Eng. Syst. Saf. 2014, 123, 183–195.

7. Bâzu, M.; Bǎjenescu, T. Failure Analysis: A Practical Guide for Manufacturers of Electronic Components and Systems; Wiley: Chichester, UK, 2011; pp. 37–70.

8. Shannon, R.; Quiter, J.; D’Annunzio, A.; Meseroll, R.; Lebron, R.; Sieracki, V. A systems approach to diagnostic ambiguity reduction in naval avionic systems. In Proceedings of the IEEE Autotestcon, Orlando, FL, USA, 26–29 September 2005; pp. 194–200.

9. Lei, Y. Intelligent Maintenance in Networked Industrial Automation Systems. Ph.D. Dissertation, Department of Mechanical Engineering, University of Michigan, Ann Arbor, MI, USA, 2007.

10. Cauffriez, L.; Conrard, B.; Thiriet, J.; Bayart, M. Fieldbuses and their influence on dependability. In Proceedings of the IEEE 20th Instrumentation and Measurement Technology Conference, Vail, CO, USA, 20–22 May 2003; pp. 83–88.

11. Söderholm, P. A system view of the no fault found (NFF) phenomenon. Reliab. Eng. Syst. Saf. 2007, 92, 1–14.

12. Thomas, D.A.; Ayers, K.; Pecht, M. The ‘trouble not identified’ phenomenon in automotive electronics. Microelectron. Reliab. 2002, 42, 641–651.

13. Moffat, B.; Abraham, E.; Desmulliez, M.; Koltsov, D.; Richardson, A. Failure mechanisms of legacy aircraft wiring and interconnects. IEEE Trans. Dielectr. Electr. Insul. 2008, 15, 808–822.

14. Beniaminy, I.; Joseph, D. Reducing the ‘no fault found’ problem: Contributions from expert-system methods. In Proceedings of the IEEE Aerospace Conference, Big Sky, MT, USA, 9–16 March 2002; pp. 9–16.

15. He, W.; He, Y.; Li, B.; Zhang, C. Analog circuit fault diagnosis via joint cross-wavelet singular entropy and parametric t-SNE. Entropy 2018, 20, 604.

16. He, W.; He, Y.; Luo, Q.; Zhang, C. Fault diagnosis for analog circuits utilizing time-frequency features and improved VVRKFA. Meas. Sci. Technol. 2018, 29, 045004.

17. Zhang, C.; Zhao, S.; Yang, Z.; He, Y. A multi-fault diagnosis method for lithium-ion battery pack using curvilinear Manhattan distance evaluation and voltage difference analysis. J. Energy Storage 2023, 67, 107575.

18. Zhou, D.; Shi, J.; He, X. Review of intermittent fault diagnosis techniques for dynamic systems. Acta Autom. Sin. 2014, 40, 161–171.

19. Deng, G.; Qiu, J.; Liu, G.; Lyu, K. A discrete event systems approach to discriminating intermittent from permanent faults. Chin. J. Aeronaut. 2014, 27, 390–396.

20. Zhang, K.; Gou, B.; Xiong, W.; Feng, X. An online diagnosis method for sensor intermittent fault based on data-driven model. IEEE Trans. Power Electron. 2023, 38, 2861–2865.

21. Bondavalli, A.; Chiaradonna, S.; Giandomenico, F.D.; Grandoni, F. Threshold-based mechanisms to discriminate transient from intermittent faults. IEEE Trans. Comput. 2000, 49, 230–245.

22. Cai, B.; Liu, Y.; Xie, M. A dynamic-bayesian-network-based fault diagnosis methodology considering transient and intermittent faults. IEEE Trans. Automat. Sci. Eng. 2017, 14, 276–285.

23. Yu, M.; Wang, D. Model-based health monitoring for a vehicle steering system with multiple faults of unknown types. IEEE Trans. Ind. Electron. 2014, 61, 3574–3586.

24. Gouda, B.S.; Panda, M.; Panigrahi, T.; Das, S.; Appasani, B.; Acharya, O.; Zawbaa, H.M.; Kamel, S. Distributed intermittent fault diagnosis in wireless sensor network using likelihood ratio test. IEEE Access 2023, 11, 6958–6972.

25. Mahapatro, A.; Khilar, P.M. Detection and diagnosis of node failure in wireless sensor networks: A multiobjective optimization approach. Swarm Evol. Comput. 2013, 13, 74–84.

26. Syed, W.A.; Perinpanayagam, S.; Samie, M.; Jennions, I. A novel intermittent fault detection algorithm and health monitoring for electronic interconnections. IEEE Trans. Compon. Packag. Manuf. Technol. 2016, 6, 400–406.

27. Yu, M.; Wang, Z.; Wang, H.; Jiang, W.; Zhu, R. Intermittent fault diagnosis and prognosis for steer-by-wire system using composite degradation model. IEEE J. Emerg. Sel. Top. Circuits Syst. 2023, 13, 557–571.

28. Yaramasu, A.; Cao, Y.; Liu, G.; Wu, B. Intermittent wiring fault detection and diagnosis for SSPC based aircraft power distribution system. In Proceedings of the IEEE/ASME International Conference on Advanced Intelligent Mechatronics, Kaohsiung, Taiwan, 11–14 July 2012; pp. 1117–1122.

29. Huang, C.; Shen, Z.; Zhang, J.; Hou, G. BIT-based intermittent fault diagnosis of analog circuits by improved deep forest classifier. IEEE Trans. Instrum. Meas. 2022, 71, 3519213.

30. Furse, C.; Smith, P.; Safavi, M.; Lo, C. Feasibility of spread spectrum sensors for location of arcs on live wires. IEEE Sens. J. 2005, 5, 1445–1450.

31. Smith, P.; Furse, C.; Gunther, J. Analysis of spread spectrum time domain reflectometry for wire fault location. IEEE Sens. J. 2005, 5, 1469–1478.

32. Jiang, Y.; Miao, Y.; Qiu, Z.; Wang, Z.; Pan, J.; Yang, C. Intermittent fault detection and diagnosis for aircraft fuel system based on SVM. In Proceedings of the CSAA/IET International Conference on Aircraft Utility Systems (AUS), Online, 18–21 September 2020; pp. 1255–1258.

33. Alamuti, M.M.; Nouri, H.; Ciric, R.M.; Terzija, V. Intermittent fault location in distribution feeders. IEEE Trans. Power Del. 2012, 27, 96–103.

34. Farughian, A.; Kumpulainen, L.; Kauhaniemi, K.; Hovila, P. Intermittent earth fault passage indication in compensated distribution networks. IEEE Access 2021, 9, 45356–45366.

35. Li, L.; Wang, Z.; Shen, Y. Fault diagnosis for the intermittent fault in gyroscopes: A data-driven method. In Proceedings of the 35th Chinese Control Conference (CCC), Chengdu, China, 27–29 July 2016; pp. 6639–6643.

36. Huakang, L.; Kehong, L.; Yong, Z.; Qiu, J.; Liu, G. Study of solder joint intermittent fault diagnosis based on dynamic analysis. IEEE Trans. Compon. Packag. Manuf. Technol. 2019, 9, 1748–1758.

37. Yan, R.; He, X.; Wang, Z.; Zhou, D. Detection, isolation and diagnosability analysis of intermittent faults in stochastic systems. Int. J. Control 2018, 91, 480–494.

38. Song, J.; Lin, L.; Huang, Y.; Hsieh, S.Y. Intermittent fault diagnosis of split-star networks and its applications. IEEE Trans. Parallel Distrib. Syst. 2023, 34, 1253–1264.

39. Kelkar, S.; Kamal, R. Adaptive fault diagnosis algorithm for controller area network. IEEE Trans. Ind. Electron. 2014, 61, 5527–5537.

40. Kelkar, S.; Kamal, R. Implementation of data reduction technique in adaptive fault diagnosis algorithm for controller area network. In Proceedings of the International Conference on Circuits, Systems, Communication and Information Technology Applications, Mumbai, India, 4–5 April 2014; pp. 156–161.

41. Nath, N.N.; Pillay, V.R.; Saisuriyaa, G. Distributed node fault detection and tolerance algorithm for controller area networks. In Intelligent Systems Technologies and Applications; Springer International Publishing: Cham, Switzerland, 2016; pp. 247–257.

42. Yang, Y.; Wang, L.; Li, Z.; Shen, P.; Guan, X.; Xia, W. Anomaly detection for controller area network in braking control system with dynamic ensemble selection. IEEE Access 2019, 7, 95418–95429.

43. Hassen, W.B.; Auzanneau, F.; Pérès, F.; Tchangani, A.P. Diagnosis sensor fusion for wire fault location in CAN bus systems. In Proceedings of the IEEE SENSORS, Baltimore, MD, USA, 3–6 November 2013; pp. 1–4.

44. Hu, H.; Qin, G. Online fault diagnosis for controller area networks. In Proceedings of the Fourth International Conference on Intelligent Computation Technology and Automation, Shenzhen, China, 28–29 March 2011; pp. 452–455.

45. Gao, D.; Wang, Q. Health monitoring of controller area network in hybrid excavator based on the message response time. In Proceedings of the IEEE/ASME International Conference on Advanced Intelligent Mechatronics, Besançon, France, 8–11 July 2014; pp. 1634–1639.

46. Pohren, D.H.; Roque, A.d.S.; Kranz, T.A.I.; de Freitas, E.P.; Pereira, C.E. An analysis of the impact of transient faults on the performance of the CAN-FD protocol. IEEE Trans. Ind. Electron. 2020, 67, 2440–2449.

47. Roque, A.D.S.; Jazdi, N.; Freitas, E.P.D.; Pereira, C.E. A fault modeling based runtime diagnostic mechanism for vehicular distributed control systems. IEEE Trans. Intell. Transp. Syst. 2022, 23, 7220–7232.

48. Lei, Y.; Yuan, Y.; Zhao, J. Model-based detection and monitoring of the intermittent connections for CAN networks. IEEE Trans. Ind. Electron. 2014, 61, 2912–2921.

49. Lei, Y.; Djurdjanovic, D. Diagnosis of intermittent connections for DeviceNet. Chin. J. Mech. Eng. 2010, 23, 606–612.

50. Lei, Y.; Yuan, Y.; Sun, Y. Fault location identification for localized intermittent connection problems on CAN networks. Chin. J. Mech. Eng. 2014, 27, 1038–1046.

51. Lei, Y.; Xie, H.; Yuan, Y.; Chang, Q. Fault location for the intermittent connection problems on CAN networks. IEEE Trans. Ind. Electron. 2015, 62, 7203–7213.

52. Zhang, L.; Lei, Y.; Chang, Q. Intermittent connection fault diagnosis for CAN using data link layer information. IEEE Trans. Ind. Electron. 2017, 64, 2286–2295.

53. Zhang, L.; Yang, F.; Lei, Y. Tree-based intermittent connection fault diagnosis for controller area network. IEEE Trans. Veh. Technol. 2019, 68, 9151–9161.

54. Wang, L.; Zhang, L.; Lei, Y. Diagnosis of intermittent connection faults for CAN networks with complex topology. IEEE Access 2023, 11, 52199–52213.

55. Technical Report ISO 11898-1:2003; Road Vehicles-Controller Area Network (CAN)-Part 1: Data Link Layer and Physical Signalling. ISO: Geneva, Switzerland, 2003.

56. Bosch, R. CAN Specification Version 2.0; Technical Report; Robert Bosch GmbH: Stuttgart, Germany, 1991.

| Reference | Main Approach | Research Gap |
|---|---|---|
| [15,16,17] | Fault diagnosis methods for electronic circuits | Only permanent component faults are considered, not IFs. |
| [4,18,19,20,21,22,23,24,25,26] | IF detection and recognition methods for electrical systems | IF localization is not addressed. |
| [27,28,29,30,31,32,33,34,35,36,37,38] | IF localization methods for electrical systems | Specialized for particular systems; not applicable to IF diagnosis in CANs. |
| [39,40,41,42,43,44,45] | Fault diagnosis methods for CANs | Only permanent faults are considered, not IFs. |
| [46,47] | Analysis of IF-induced performance anomalies for CANs | IF localization is not addressed, and cable faults are not considered. |
| [48] | IC fault detection method for CANs | IC fault localization is not addressed. |
| [49,50] | Physical layer-based location methods for drop cable IC faults in CANs | IC faults on trunk cables cannot be located. |
| [51] | Physical layer-based IC fault location methods for CANs | Robustness is poor, and only one trunk cable IC fault can be located. |
| [52,53] | Data link layer-based IC fault location methods for CANs | At most two trunk cable IC faults can be located, and the fault probability of each cable cannot be quantified. |
| [54] | Indirect method of locating IC faults in complex topology CANs | Multiple trunk cable IC faults can be located, but repeated diagnosis is required. |
| This work | Direct method of locating IC faults in CANs | (Gap covered) Locates multiple trunk cable IC faults accurately without repeated diagnosis. |

| 0 | 0.16 | 0.26 | 0.48 | |

| 0 | 0.19 | 0 | 0 | |

| 0.27 | 0.18 | 0 | 0.52 | |

| 0.73 | 0.47 | 0.74 | 0 |

| 1 |
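A quick arithmetic check on the matrix above: every column sums to 1. One reading, which this excerpt does not confirm, is that each column is a probability distribution over candidate cable faults for one symptom, so the largest entry in a column marks the most probable fault for that symptom. The sketch below verifies the column sums and reports the (0-based) row index of each column maximum, using only the values shown; the row/column interpretation is an assumption.

```python
import math

# Entries copied from the matrix above; rows and columns are unlabeled here,
# so treating rows as candidate faults and columns as symptoms is an assumption.
A = [
    [0.00, 0.16, 0.26, 0.48],
    [0.00, 0.19, 0.00, 0.00],
    [0.27, 0.18, 0.00, 0.52],
    [0.73, 0.47, 0.74, 0.00],
]

n_rows, n_cols = len(A), len(A[0])

# Column sums: each should be 1 if the columns are probability distributions.
col_sums = [sum(A[i][j] for i in range(n_rows)) for j in range(n_cols)]
assert all(math.isclose(s, 1.0) for s in col_sums)

# Row index of the largest entry in each column (0-based).
most_likely = [max(range(n_rows), key=lambda i: A[i][j]) for j in range(n_cols)]
print(most_likely)  # [3, 3, 3, 2]
```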

| Node r | Symptoms for Local IC Fault | Symptoms for Trunk IC Fault | ||||||
|---|---|---|---|---|---|---|---|---|
| 1 | 538 | 3 | 611 | 3 | ||||
| 2 | 524 | − | − | 560 | 2 | |||
| 5 | 261 | 350 | 586 | − | − | |||
| 6 | 473 | 2 | 330 | 130 | ||||
| 10 | 312 | 331 | 392 | 121 | ||||
| 11 | 445 | 2 | 394 | 119 | ||||
| 12 | 540 | − | − | 513 | − | − | ||
| PLC | 2605 | 4 | 2339 | − | − | |||

| Node r | Domains of Symptoms for Local IC Fault | |
|---|---|---|
| 1 | − | |
| 2 | − | |
| 5 | ||
| 6 | − | |
| 10 | ||
| 11 | − | |
| 12 | − | |
| PLC | − | |

| Node r | Domains of Symptoms for Trunk IC Fault | |
|---|---|---|
| 1 | − | |
| 2 | − | |
| 5 | − | |
| 6 | ||
| 10 | ||
| 11 | ||
| 12 | − | |
| PLC | − | |

| Node r | Symptoms for Local IC Fault | Symptoms for Trunk IC Fault | ||||||
|---|---|---|---|---|---|---|---|---|
| 1 | 294 | − | − | 901 | − | − | ||
| 2 | 275 | 2 | 860 | 2 | ||||
| 5 | 280 | 2 | 521 | 333 | ||||
| 6 | 226 | − | − | 296 | 510 | |||
| 10 | 3 | 301 | 310 | 506 | ||||
| 11 | 205 | − | − | 299 | 515 | |||
| 12 | 251 | − | − | 853 | − | − | ||
| PLC | 1284 | 7 | 4017 | − | − | |||

| Node r | Domains of Symptoms for Trunk IC Fault | |
|---|---|---|
| 1 | − | |
| 2 | − | |
| 5 | ||
| 6 | ||
| 10 | ||
| 11 | ||
| 12 | − | |
| PLC | − | |

| Node r | Symptoms for Local IC Fault | Symptoms for Trunk IC Fault | ||||||
|---|---|---|---|---|---|---|---|---|
| 1 | 3 | 1 | 1230 | − | − | |||
| 2 | 2 | 2 | 1172 | 2 | ||||
| 5 | 3 | 2 | 869 | 332 | ||||
| 6 | 3 | − | − | 587 | 510 | |||
| 10 | 2 | 1 | 651 | 506 | ||||
| 11 | 2 | − | − | 298 | 824 | |||
| 12 | 1 | 1 | 1178 | − | − | |||
| PLC | 9 | 7 | 5600 | − | − | |||

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, L.; Hu, S.; Lei, Y. Association Model-Based Intermittent Connection Fault Diagnosis for Controller Area Networks. Actuators 2024, 13, 358. https://doi.org/10.3390/act13090358
