Using a Robot for Indoor Navigation and Door Opening Control Based on Image Processing

Abstract

1. Introduction

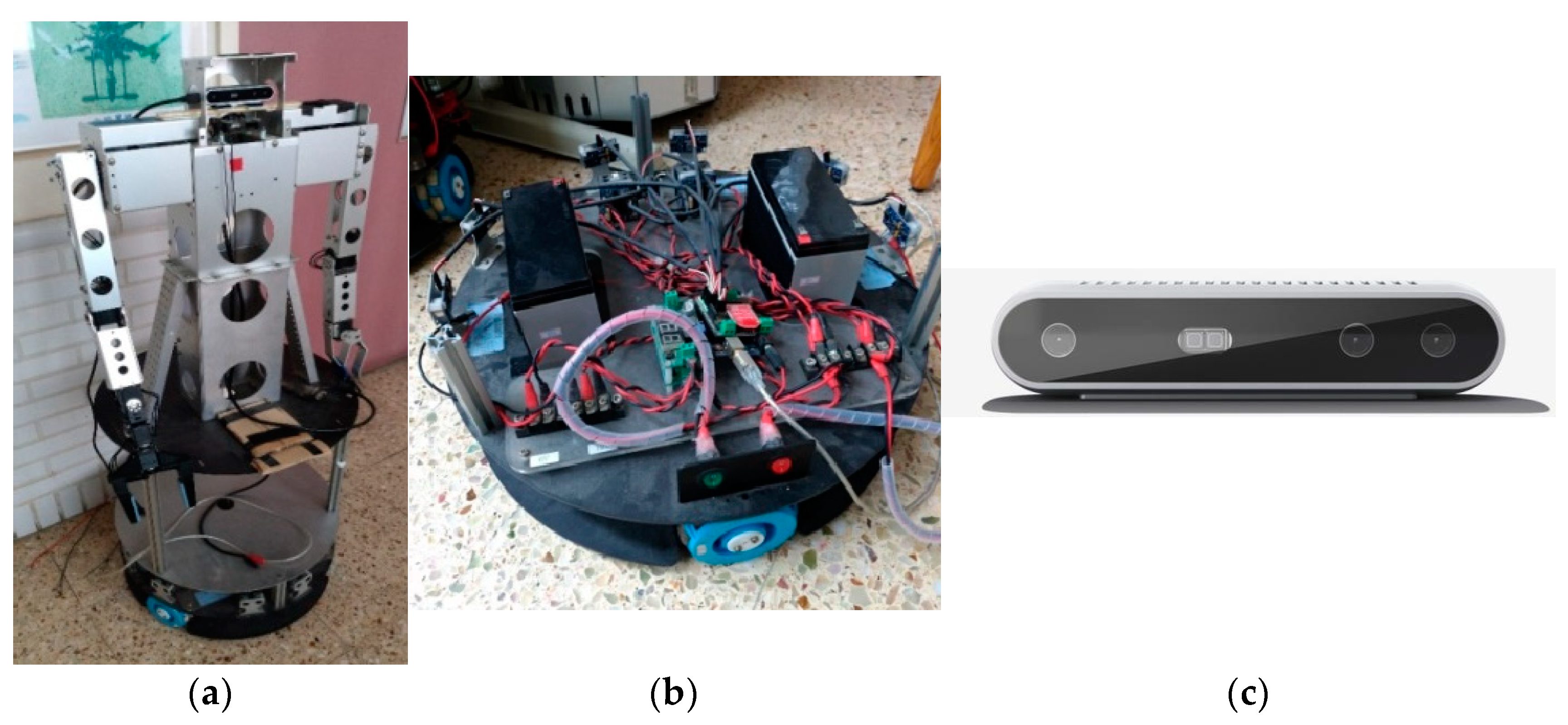

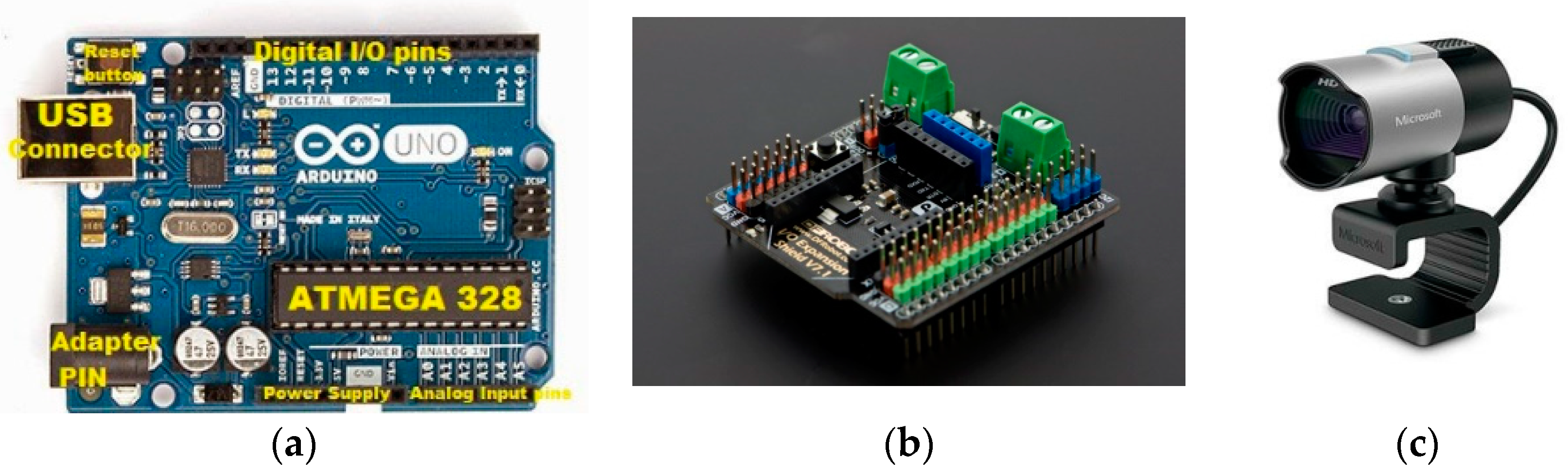

2. System Description

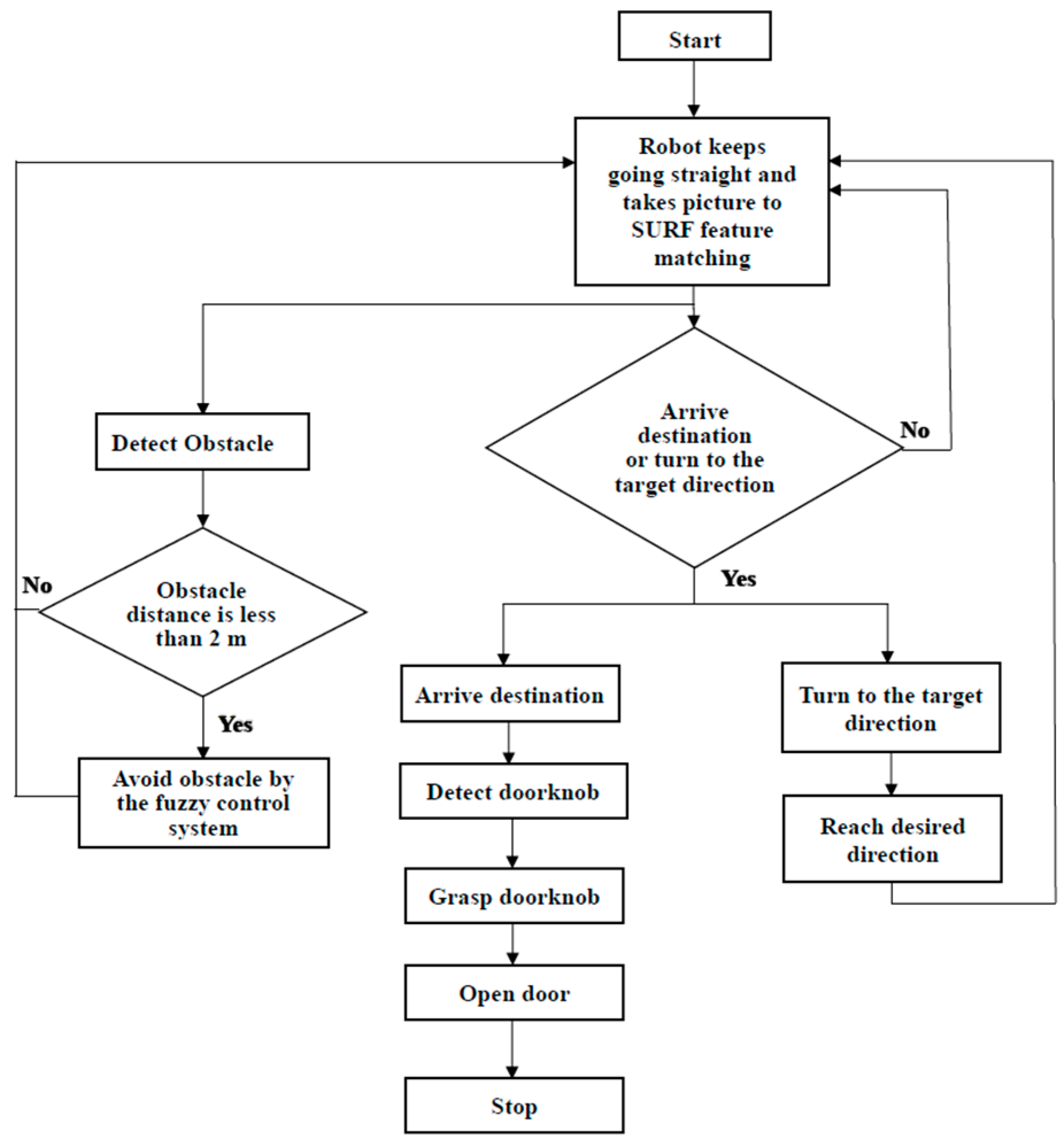

3. Image Processing and Pattern Recognition

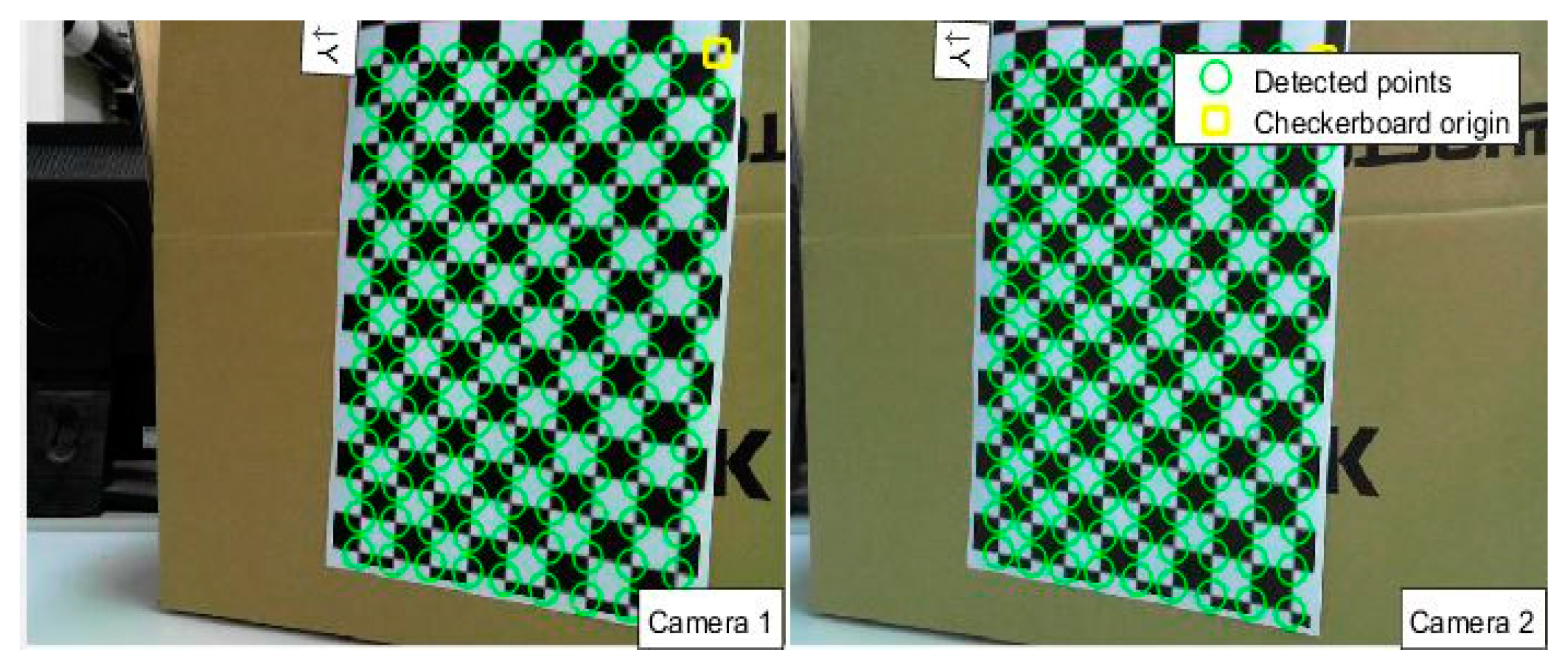

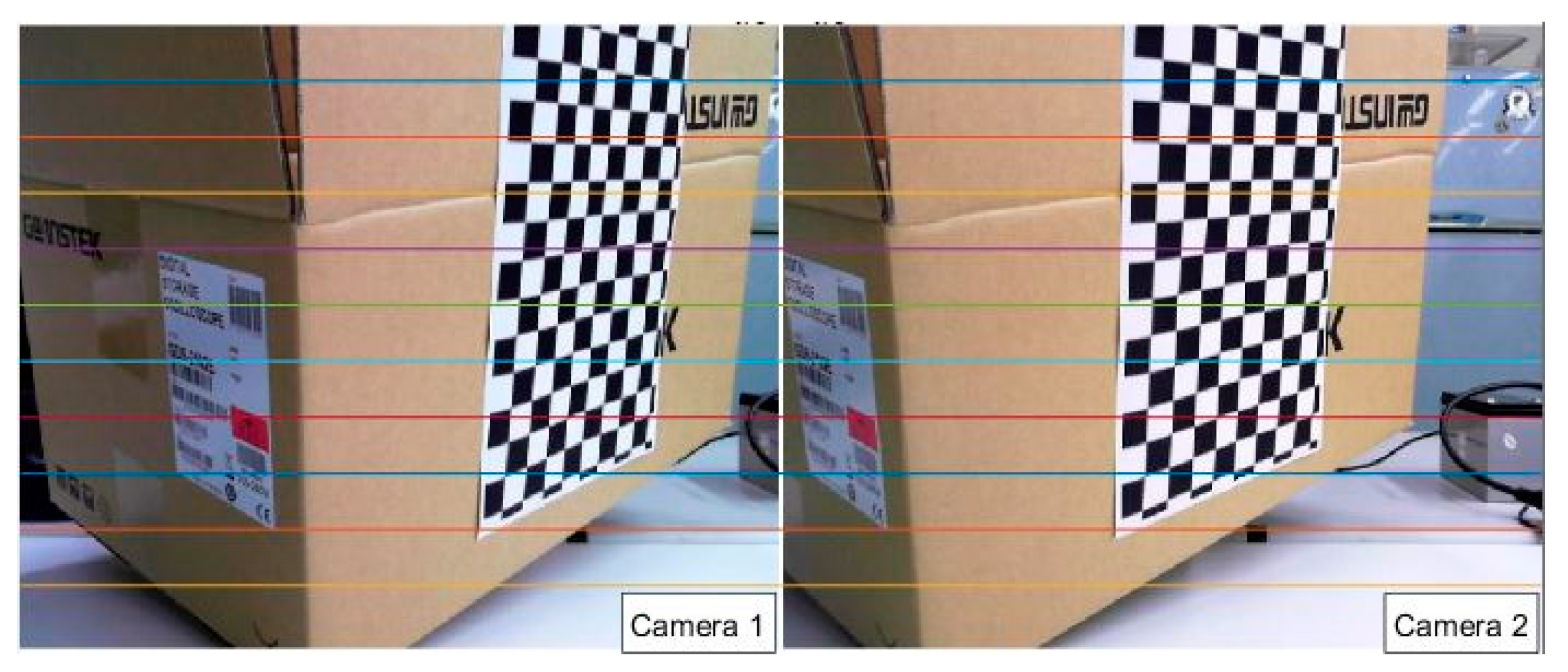

3.1. Camera Calibration

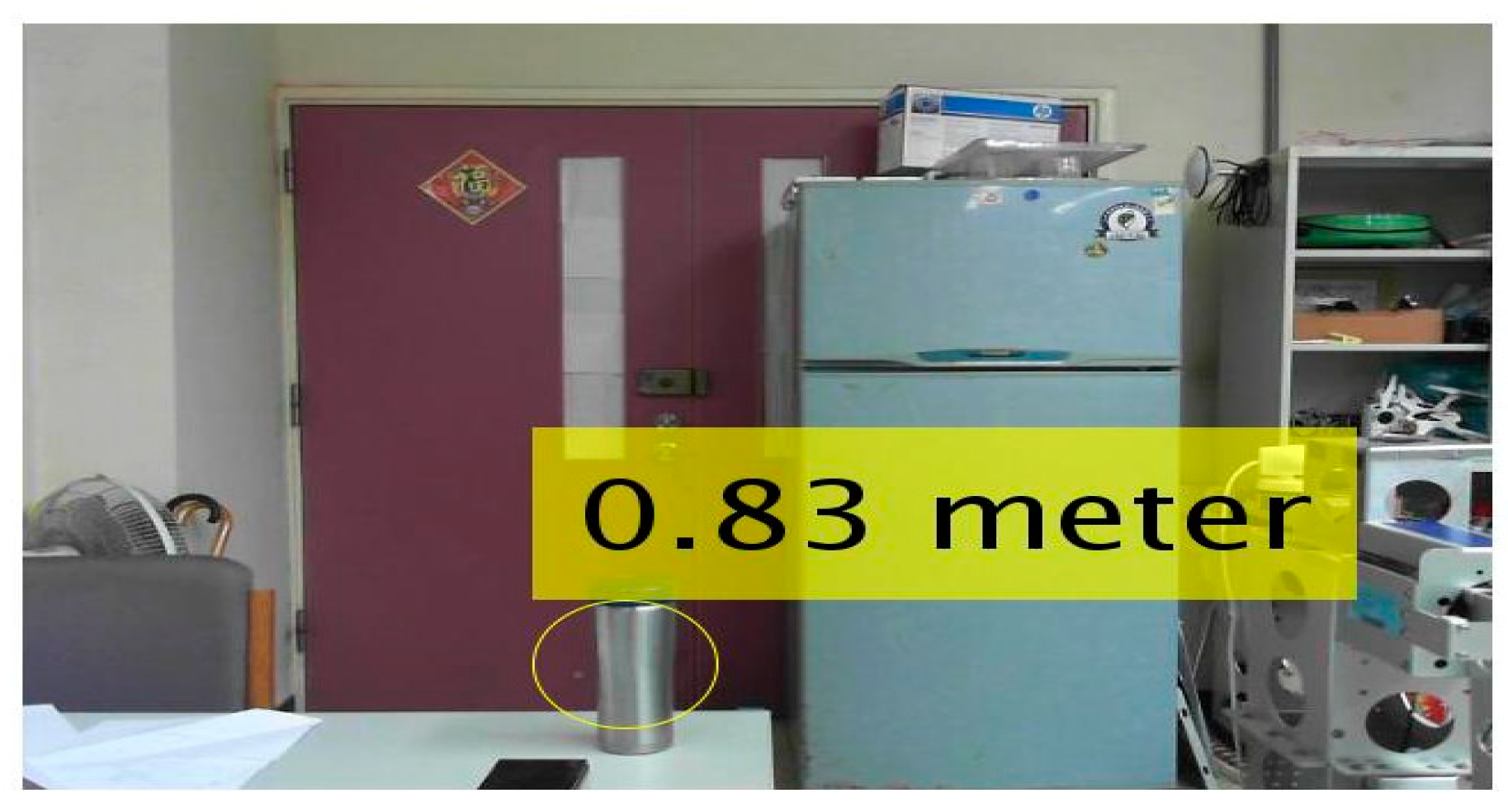

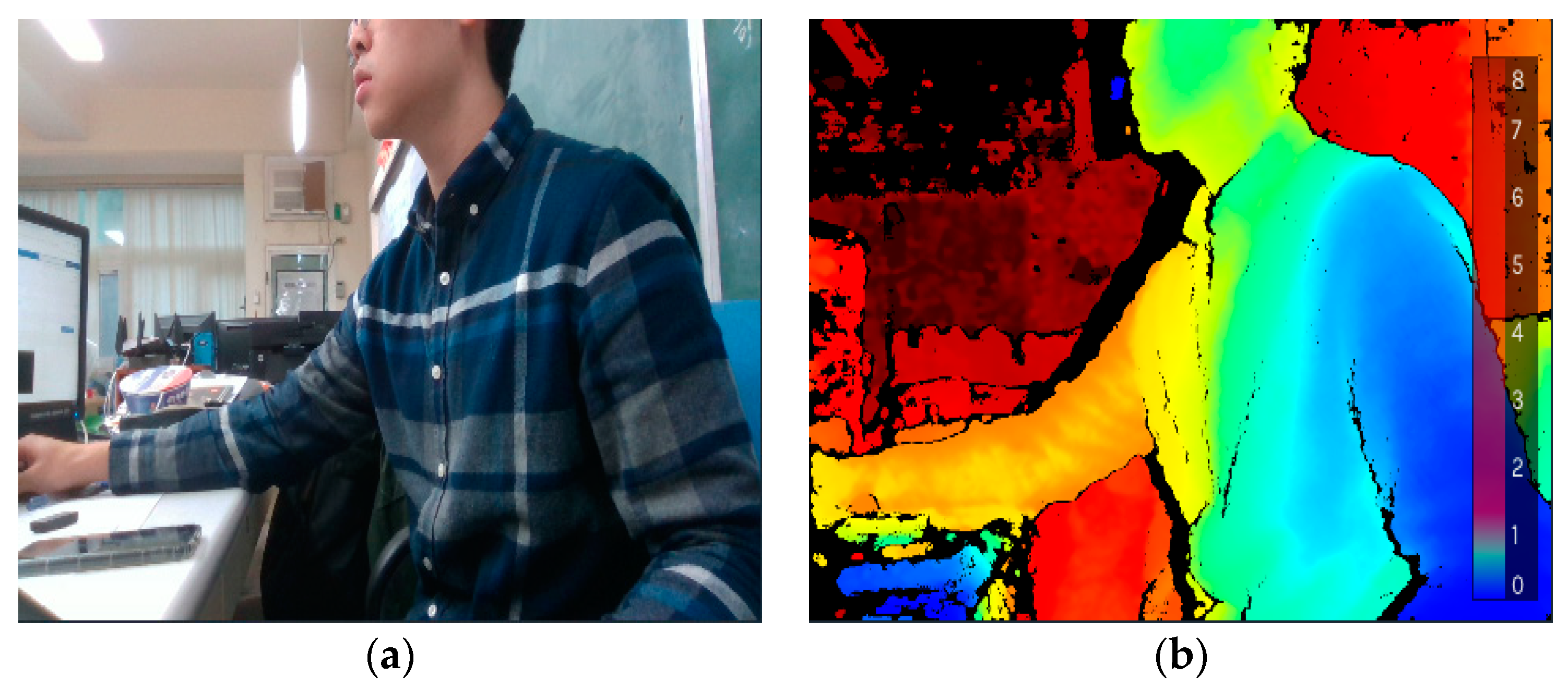

3.2. Depth Map

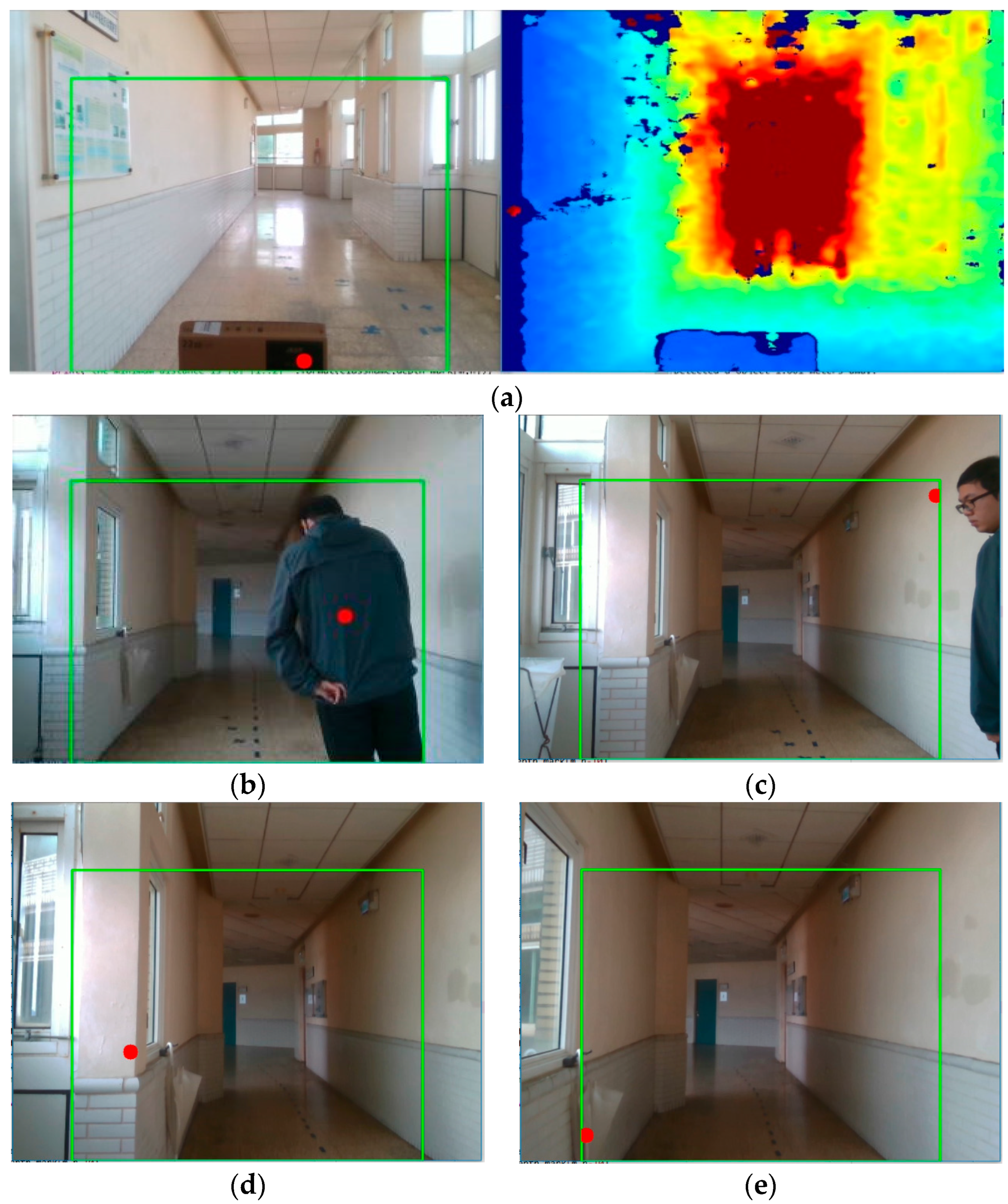

3.3. Obstacle Detection

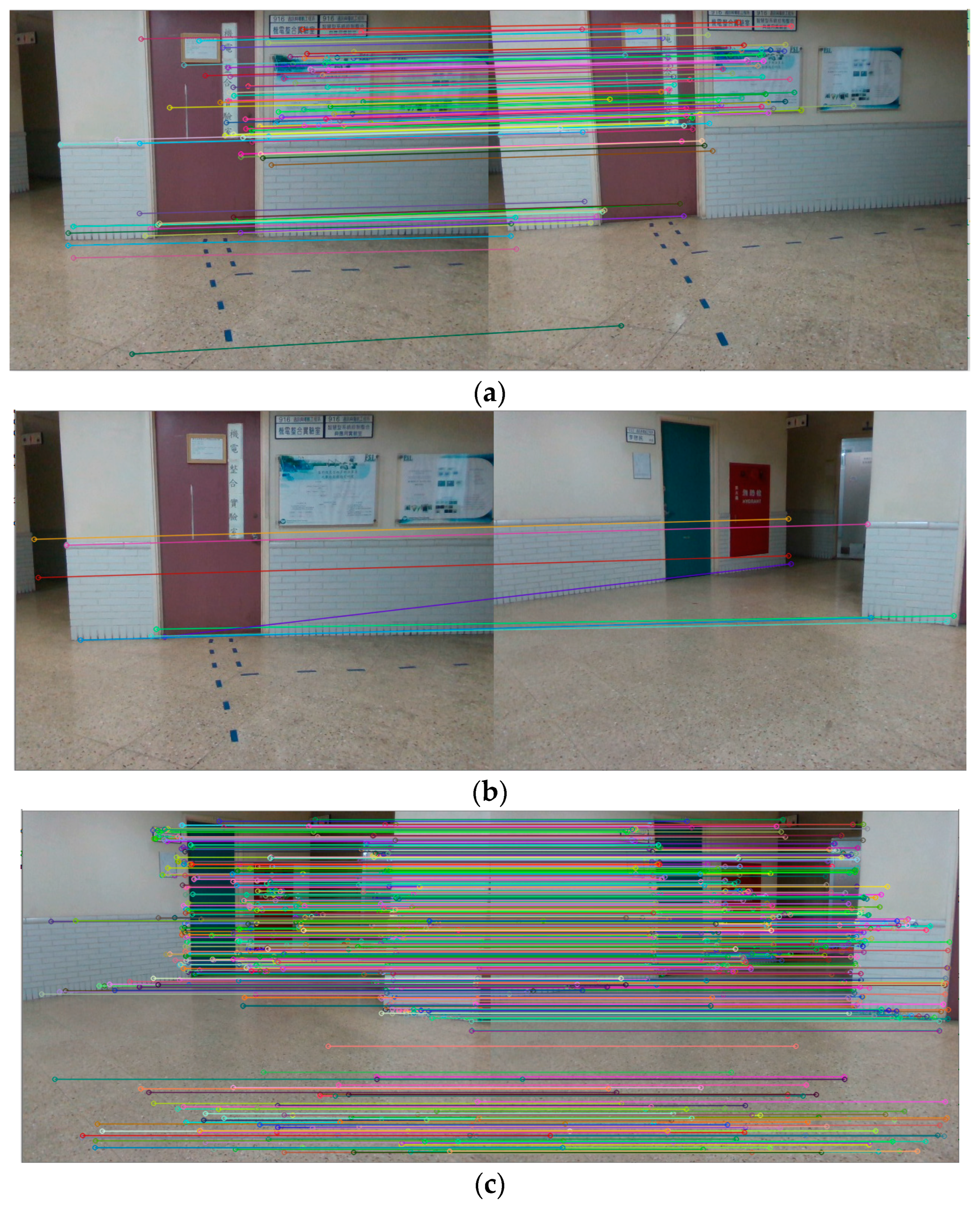

3.4. Feature Matching

3.5. Circular Doorknob Detection

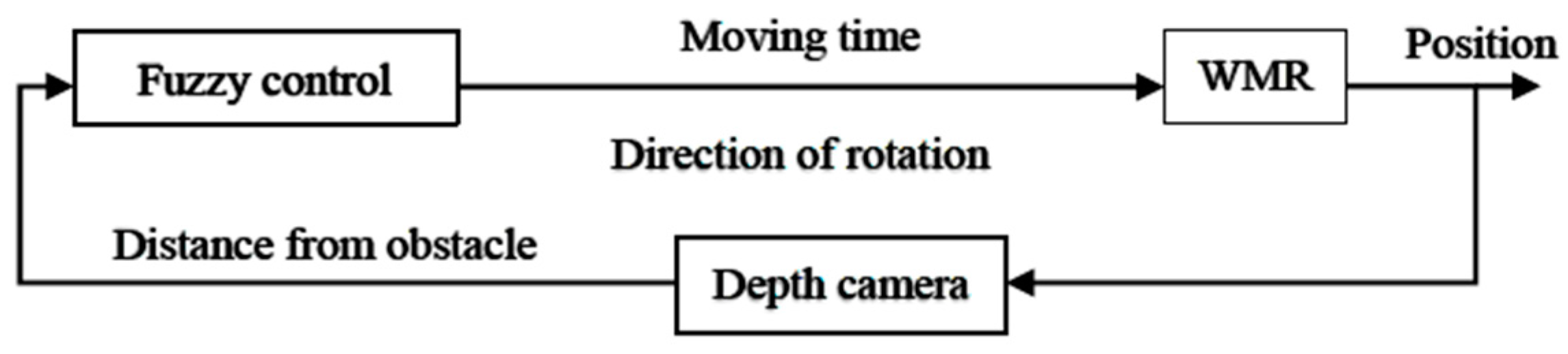

4. Control Scheme

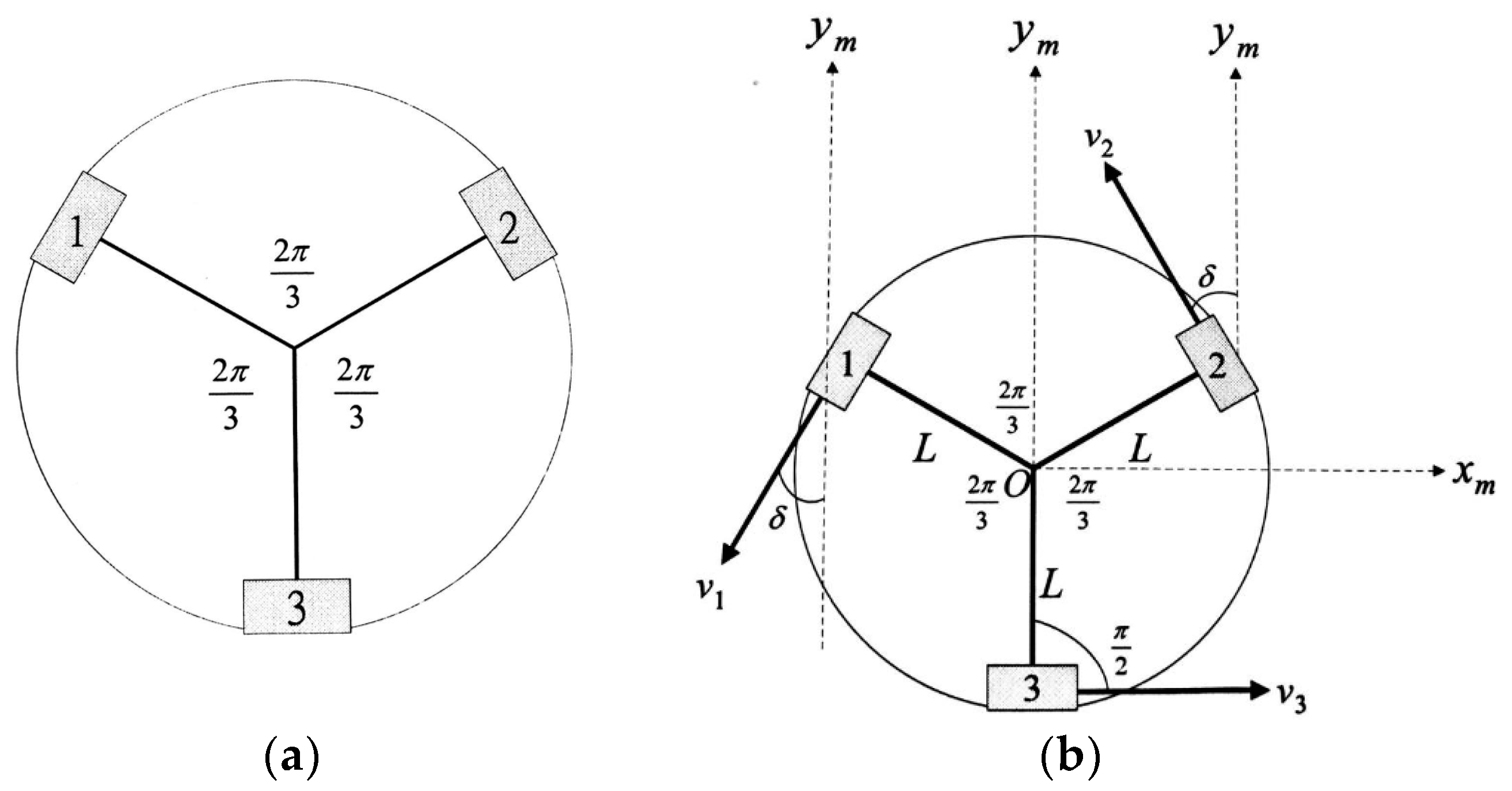

4.1. Motion Control

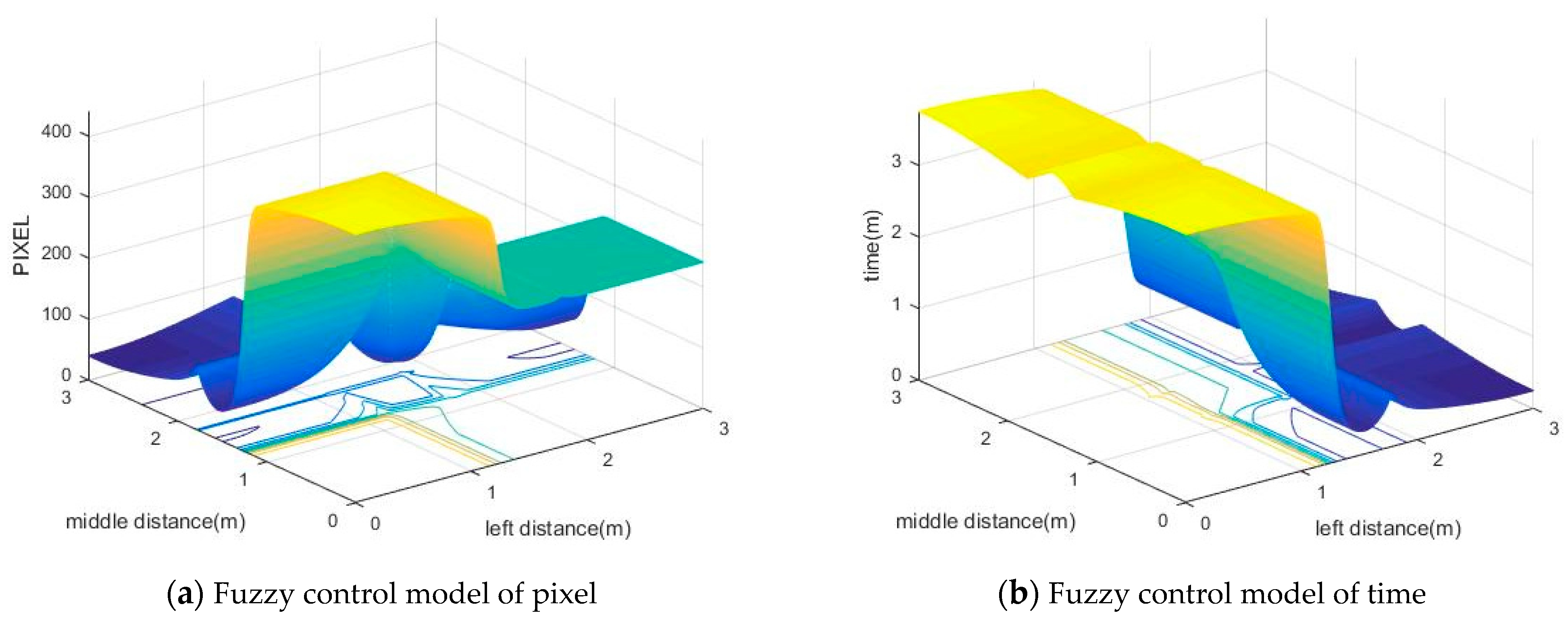

4.2. Obstacle Avoidance Control

- R1: If L is near and M is near and R is near, then T is LG and P is TR.

- R2: If L is near and M is near and R is medium, then T is LG and P is TR.

- R3: If L is near and M is near and R is far, then T is LG and P is TR.

- R4: If L is near and M is medium and R is near, then T is ST and P is TM.

- R5: If L is near and M is medium and R is medium, then T is MD and P is TR.

- R6: If L is near and M is medium and R is far, then T is MD and P is TR.

- R7: If L is near and M is far and R is near, then T is ST and P is TM.

- R8: If L is near and M is far and R is medium, then T is ST and P is TR.

- R9: If L is near and M is far and R is far, then T is ST and P is TR.

- R10: If L is medium and M is near and R is near, then T is LG and P is TL.

- R11: If L is medium and M is near and R is medium, then T is LG and P is TR.

- R12: If L is medium and M is near and R is far, then T is LG and P is TR.

- R13: If L is medium and M is medium and R is near, then T is MD and P is TL.

- R14: If L is medium and M is medium and R is medium, then T is ST and P is TM.

- R15: If L is medium and M is medium and R is far, then T is ST and P is TR.

- R16: If L is medium and M is far and R is near, then T is ST and P is TL.

- R17: If L is medium and M is far and R is medium, then T is ST and P is TM.

- R18: If L is medium and M is far and R is far, then T is ST and P is TM.

- R19: If L is far and M is near and R is near, then T is LG and P is TL.

- R20: If L is far and M is near and R is medium, then T is LG and P is TL.

- R21: If L is far and M is near and R is far, then T is LG and P is TL.

- R22: If L is far and M is medium and R is near, then T is MD and P is TL.

- R23: If L is far and M is medium and R is medium, then T is MD and P is TL.

- R24: If L is far and M is medium and R is far, then T is MD and P is TL.

- R25: If L is far and M is far and R is near, then T is ST and P is TL.

- R26: If L is far and M is far and R is medium, then T is ST and P is TM.

- R27: If L is far and M is far and R is far, then T is ST and P is TM.
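The 27 rules above can be held in a lookup table keyed by the three antecedent labels. The following is a minimal sketch, not the authors' implementation: the labels (near/medium/far, LG/MD/ST, TR/TL/TM) are treated as opaque symbols copied from the rule list, and the names `RULES`, `lookup`, and `fire` are hypothetical. The min operator for "and" and the max operator for combining rules with the same consequent are assumptions (a common Mamdani-style choice); the text here does not state which inference operators were used.

```python
from itertools import product

LEVELS = ("near", "medium", "far")

# Consequents (T, P) for R1..R27, copied in the order the rules are listed.
CONSEQUENTS = [
    ("LG", "TR"), ("LG", "TR"), ("LG", "TR"),  # R1-R3
    ("ST", "TM"), ("MD", "TR"), ("MD", "TR"),  # R4-R6
    ("ST", "TM"), ("ST", "TR"), ("ST", "TR"),  # R7-R9
    ("LG", "TL"), ("LG", "TR"), ("LG", "TR"),  # R10-R12
    ("MD", "TL"), ("ST", "TM"), ("ST", "TR"),  # R13-R15
    ("ST", "TL"), ("ST", "TM"), ("ST", "TM"),  # R16-R18
    ("LG", "TL"), ("LG", "TL"), ("LG", "TL"),  # R19-R21
    ("MD", "TL"), ("MD", "TL"), ("MD", "TL"),  # R22-R24
    ("ST", "TL"), ("ST", "TM"), ("ST", "TM"),  # R25-R27
]

# product() varies the last element (R) fastest, matching the R1..R27 order.
RULES = dict(zip(product(LEVELS, repeat=3), CONSEQUENTS))


def lookup(l, m, r):
    """Return the (T, P) consequent for crisp labels of L, M and R."""
    return RULES[(l, m, r)]


def fire(mu_l, mu_m, mu_r):
    """Return the firing strength of each (T, P) consequent.

    mu_l, mu_m, mu_r map each level to a membership degree in [0, 1].
    Assumed operators: min for "and", max across rules that share a consequent.
    """
    strengths = {}
    for (l, m, r), out in RULES.items():
        w = min(mu_l[l], mu_m[m], mu_r[r])
        if w > 0:
            strengths[out] = max(strengths.get(out, 0.0), w)
    return strengths


if __name__ == "__main__":
    print(lookup("near", "medium", "near"))  # rule R4
    # Hypothetical membership degrees, for illustration only.
    left = {"near": 0.7, "medium": 0.3, "far": 0.0}
    mid = {"near": 0.0, "medium": 0.0, "far": 1.0}
    right = {"near": 0.0, "medium": 0.4, "far": 0.6}
    print(fire(left, mid, right))
```

Keeping the consequents in listing order makes the table easy to check against R1 to R27 by eye. Defuzzification is left out because the output membership functions are not given in this text.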

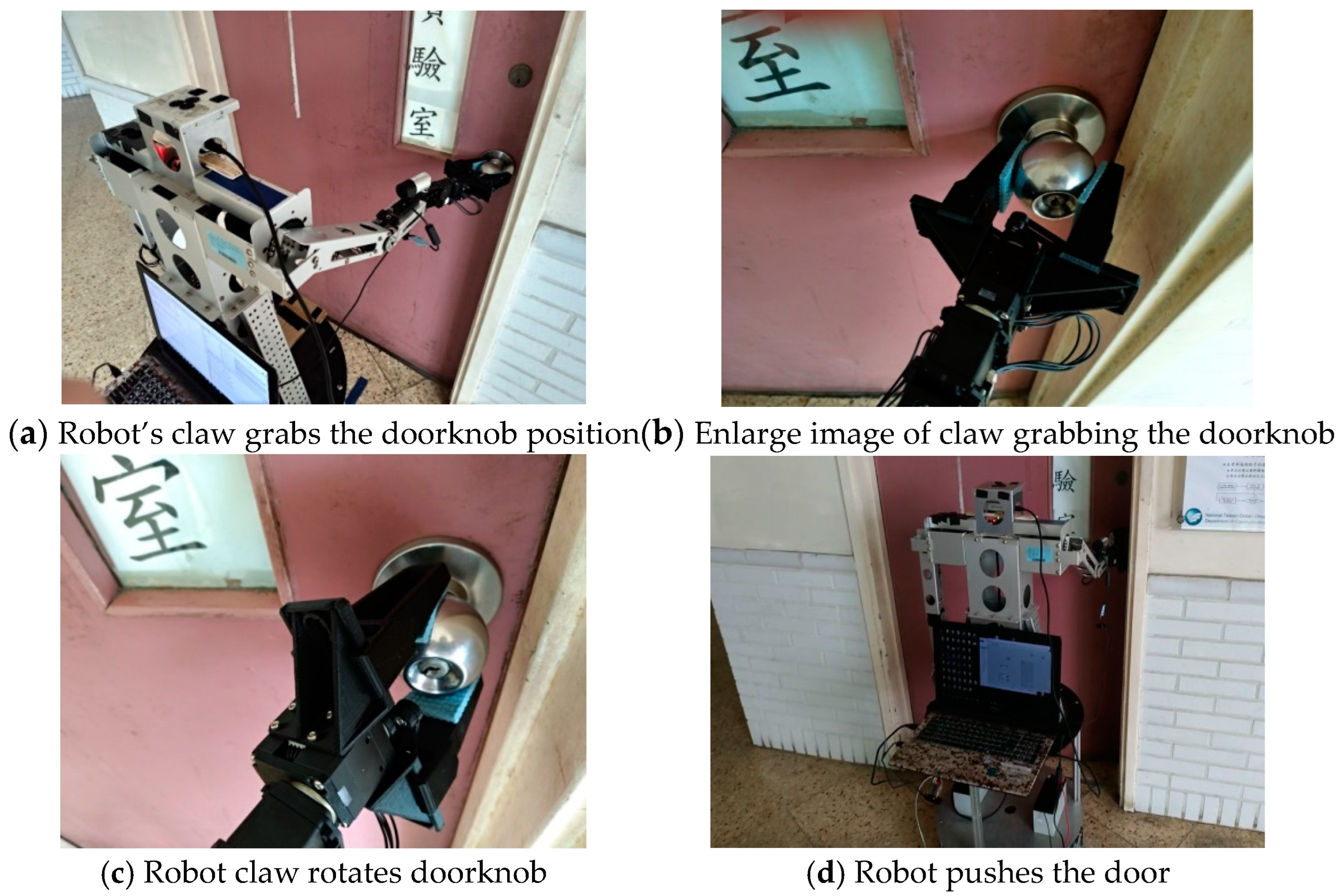

4.3. Arm Control

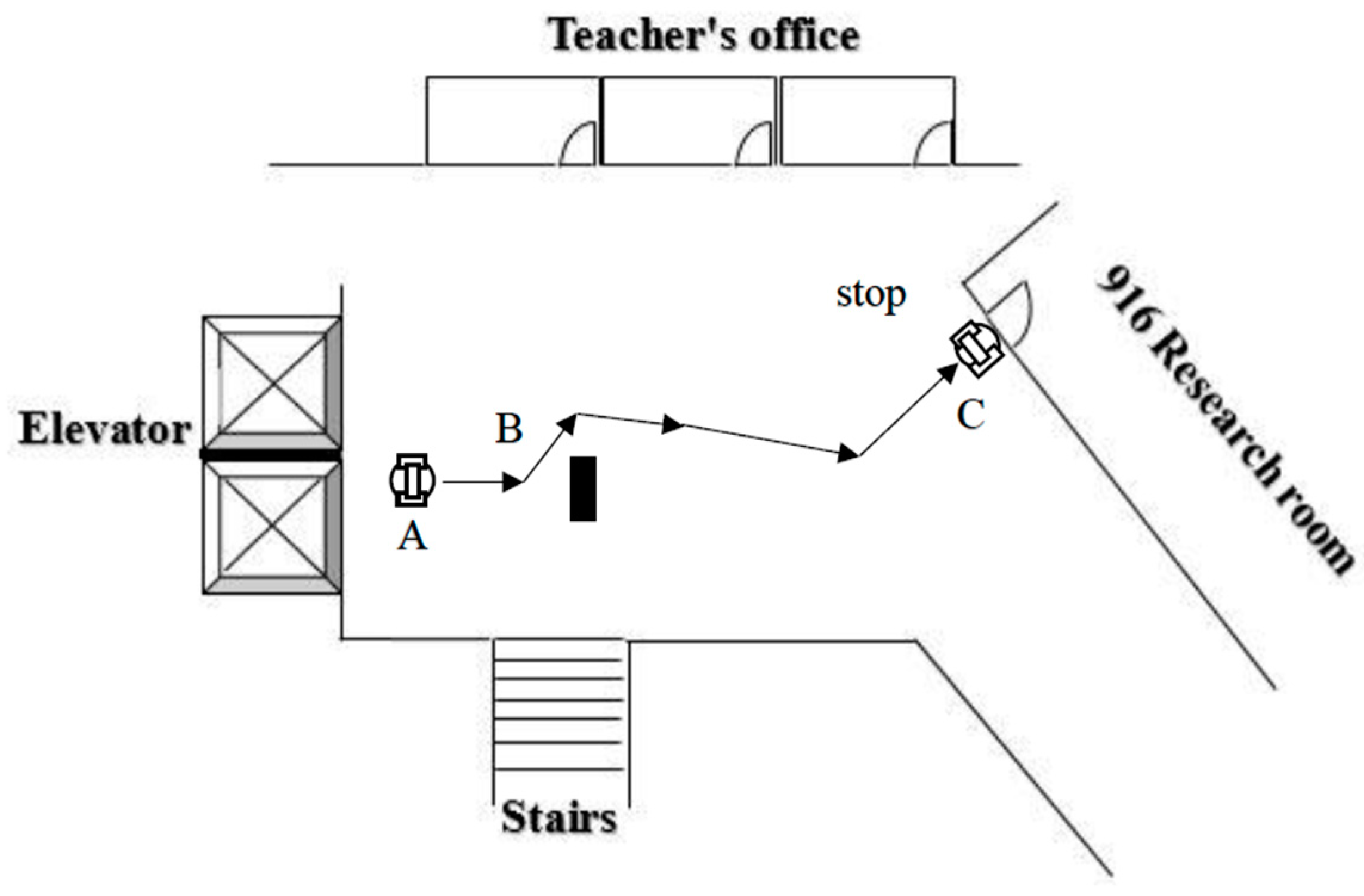

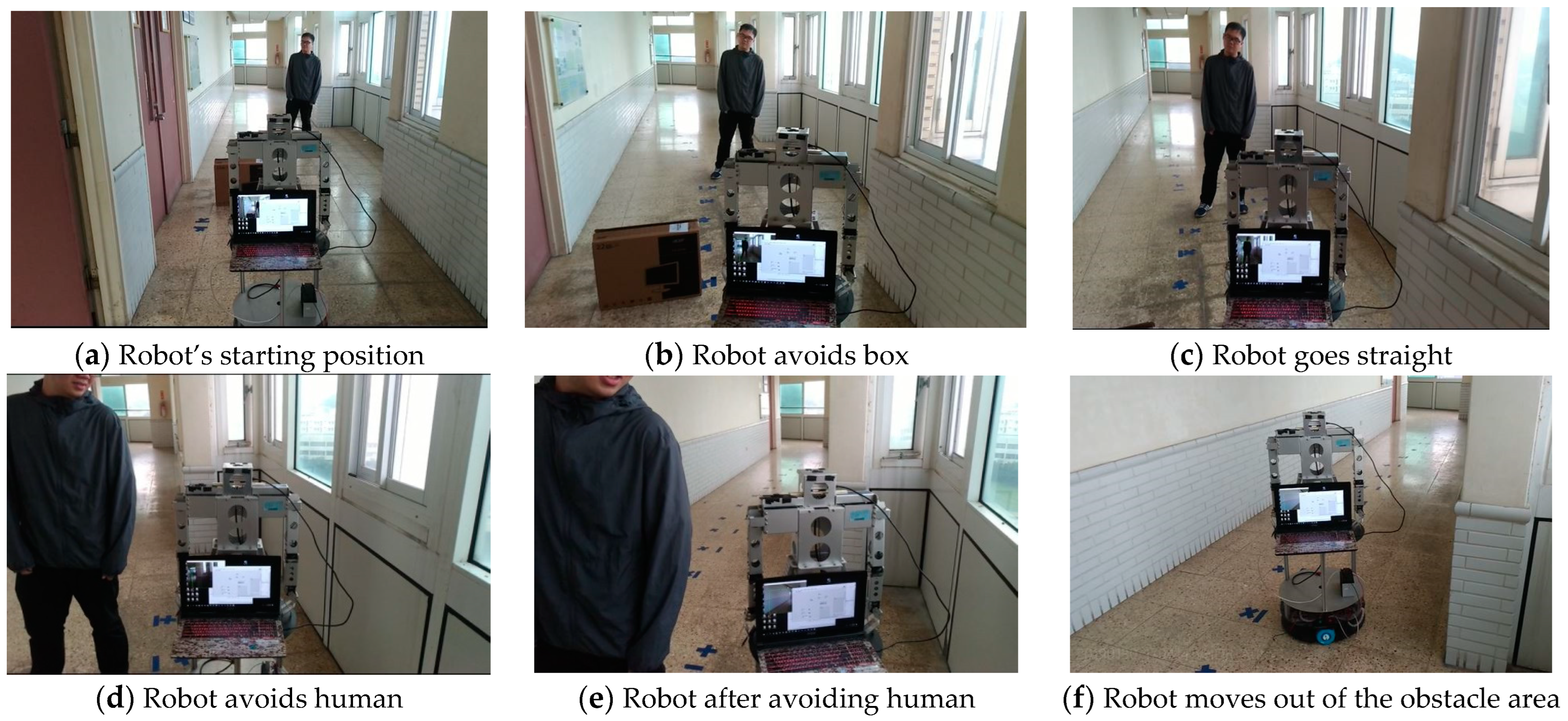

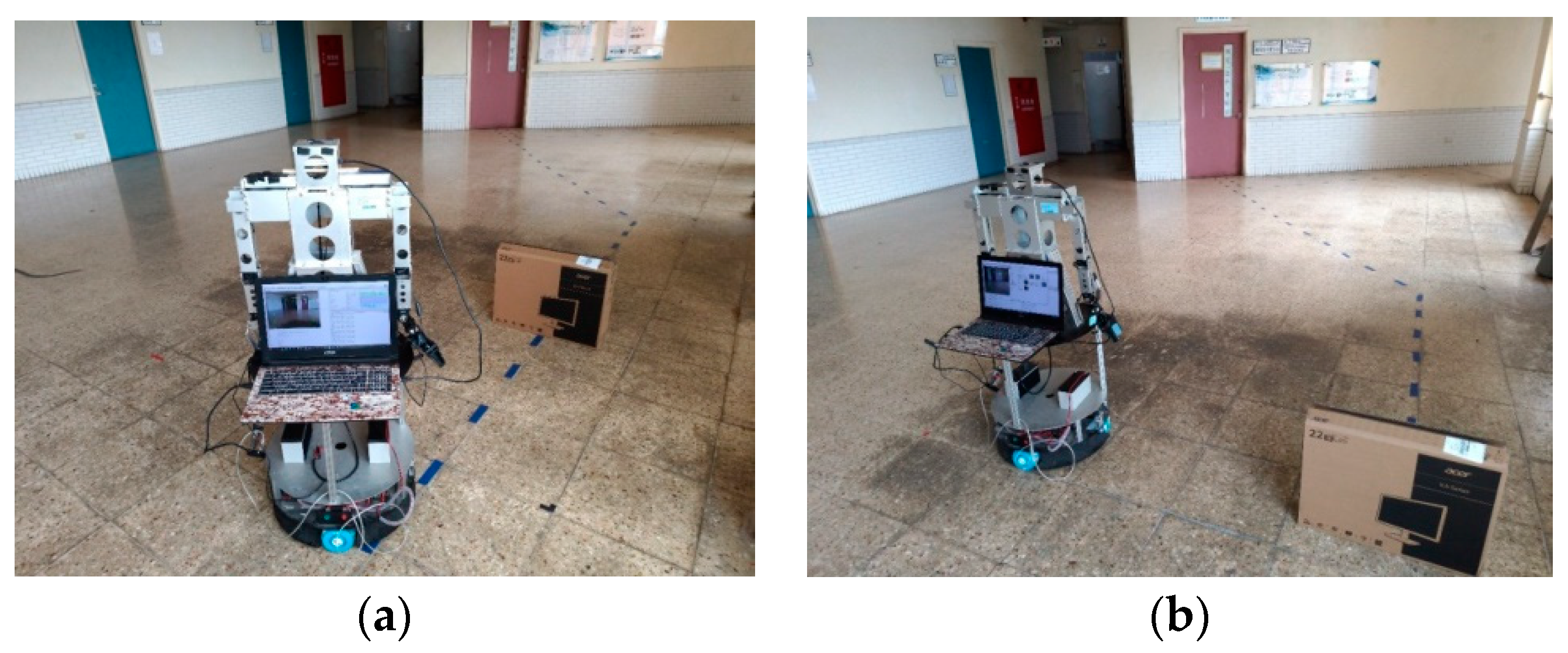

5. Experiment Result

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hsu, C.-H.; Juang, J.-G. Using a Robot for Indoor Navigation and Door Opening Control Based on Image Processing. Actuators 2024, 13, 78. https://doi.org/10.3390/act13020078
