Cybersecurity Regulations and Software Resilience: Strengthening Awareness and Societal Stability

Abstract

1. Introduction

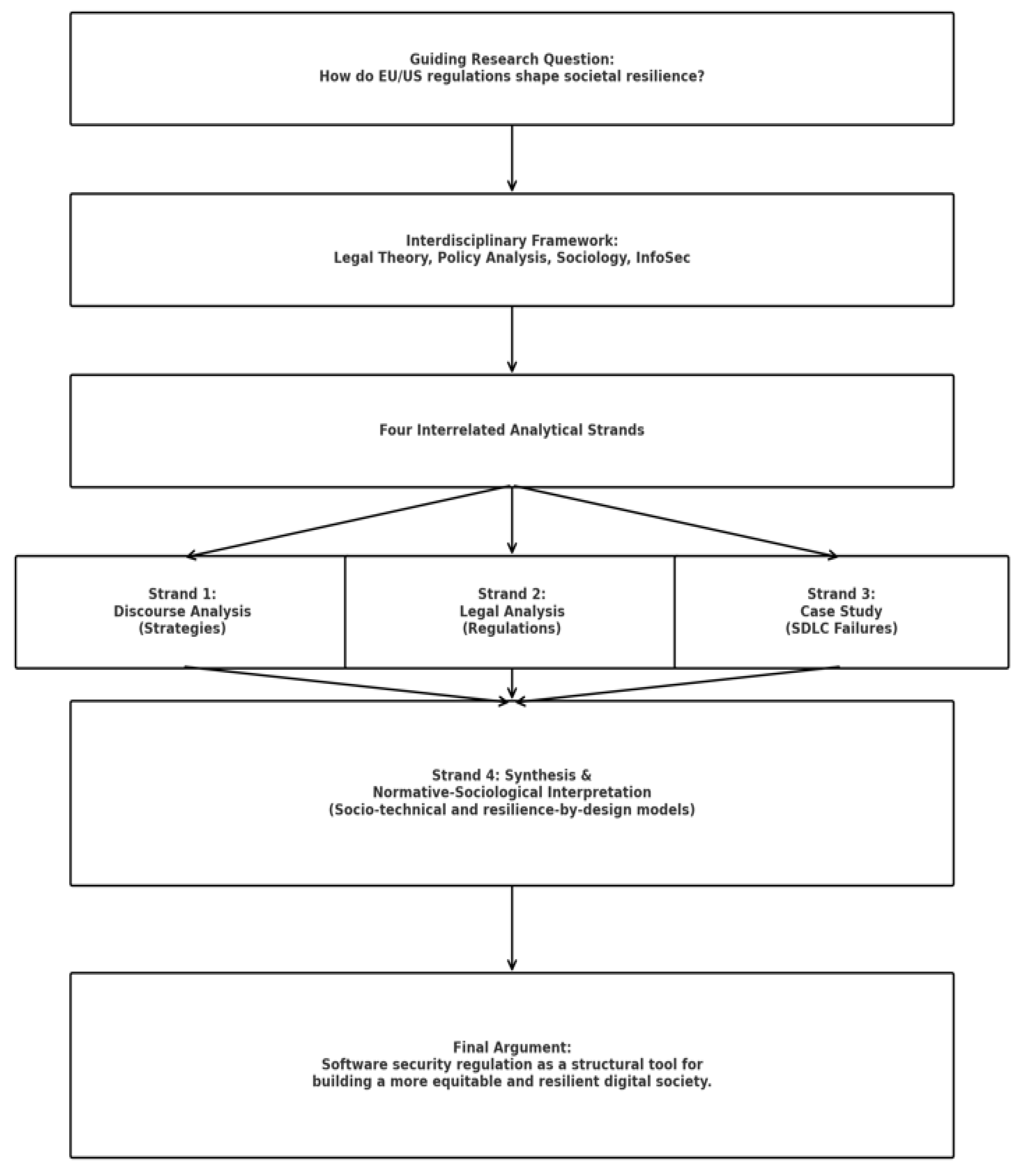

2. Materials and Methods

2.1. Discourse Analysis of Strategic Policy Documents

2.2. Comparative Legal Analysis of Regulatory Texts

2.3. Case Study Analysis of Software Vulnerabilities

2.4. Normative–Sociological Interpretation

3. Results

3.1. Proprietary vs. Open-Source Software: Contrasting Security Implications and Resilience Profiles

3.2. Lessons from Critical Vulnerabilities: The Cases of Log4Shell and Equifax

3.3. Integrating Security and Resilience Across the Software Development Lifecycle (SDLC)

- Requirements Analysis: This phase identifies functional and non-functional requirements, including clear security specifications and initial threat modelling to anticipate technical and user-based risks.

- Design: System architecture and design patterns are developed with explicit security and resilience considerations, embedding “security by design” from the outset.

- Implementation: Source code is produced following secure coding standards, with peer code reviews to catch vulnerabilities early and version control to keep every change traceable and reversible.

- Testing: Multiple levels of testing—including static and dynamic code analysis, penetration testing, and validation checks—are conducted to verify the integrity and robustness of the system.

- Deployment: Secure deployment practices ensure that the system is configured correctly, that access controls are enforced, and that protective measures are active at launch.

- Maintenance: This stage emphasizes continuous monitoring, timely patch management, and regular updates to counter emerging threats. It also requires active collaboration between developers and users to maintain system resilience in dynamic socio-technical environments.
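The phase-by-phase logic above can be made concrete by treating each SDLC phase as a gate with a set of required security activities and reporting what is still missing. The following Python sketch is illustrative only: the phase names follow the list above, but the activity labels and the names `Phase`, `REQUIRED_ACTIVITIES`, and `security_gaps` are hypothetical and do not represent a prescribed standard.

```python
from enum import Enum


class Phase(Enum):
    """The six SDLC phases described in the list above."""
    REQUIREMENTS = "requirements analysis"
    DESIGN = "design"
    IMPLEMENTATION = "implementation"
    TESTING = "testing"
    DEPLOYMENT = "deployment"
    MAINTENANCE = "maintenance"


# Hypothetical minimum security activities per phase, paraphrasing the list above.
# A real organisation would derive these from its own policy or standard.
REQUIRED_ACTIVITIES: dict[Phase, frozenset[str]] = {
    Phase.REQUIREMENTS: frozenset({"security_requirements", "threat_model"}),
    Phase.DESIGN: frozenset({"security_design_review"}),
    Phase.IMPLEMENTATION: frozenset({"secure_coding_standard", "code_review"}),
    Phase.TESTING: frozenset({"static_analysis", "dynamic_analysis", "penetration_test"}),
    Phase.DEPLOYMENT: frozenset({"secure_configuration", "access_control"}),
    Phase.MAINTENANCE: frozenset({"monitoring", "patch_management"}),
}


def security_gaps(completed: dict[Phase, set[str]]) -> dict[Phase, set[str]]:
    """Return, per phase, the required activities that have not been completed.

    Phases without gaps are omitted, so an empty result means every gate passed.
    """
    gaps: dict[Phase, set[str]] = {}
    for phase, required in REQUIRED_ACTIVITIES.items():
        missing = set(required) - completed.get(phase, set())
        if missing:
            gaps[phase] = missing
    return gaps


# Example: a project that skipped penetration testing and has no maintenance plan yet.
completed = {phase: set(acts) for phase, acts in REQUIRED_ACTIVITIES.items()}
completed[Phase.TESTING].discard("penetration_test")
del completed[Phase.MAINTENANCE]
gaps = security_gaps(completed)
```

Using a set difference keeps the check declarative: tightening the policy for a phase only means editing its entry in the mapping, not the gate logic, which mirrors the idea that security and resilience requirements accompany every phase rather than being bolted on at the end.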

3.4. The Role of Standards and Modern Development Practices in Operationalizing Resilience

4. Discussion

4.1. The U.S. Regulatory Approach: From Compliance to Shared Responsibility in Shaping Digital Resilience

4.2. The EU Regulatory Approach: Cybersecurity as Infrastructure for Embedding Societal Resilience

4.3. Converging Principles and the Socio-Technical Imperative in Cybersecurity Regulation

4.4. Comparative Overview

5. Conclusions

5.1. Main Findings and Implications

5.2. Limitations and Avenues for Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Correction Statement

References

- Aldaajeh, Saad, Husam Saleous, Sulaiman Alrabaee, Elhadj Barka, Frank Breitinger, and Kim-Kwang Raymond Choo. 2022. The role of national cybersecurity strategies on the improvement of cybersecurity education. Computers & Security 119: 102754.

- Alghawli, Ali, and Tetyana Radivilova. 2024. Resilient Cloud cluster with DevSecOps security model, automates a data analysis, vulnerability search and risk calculation. Alexandria Engineering Journal 107: 136–49.

- Andrade, Ricardo, Javier Torres, Isabel Ortiz-Garcés, Jorge Miño, and Luis Almeida. 2023. An exploratory study gathering security requirements for the software development process. Electronics 12: 3594.

- Aziz, Khaldoun, Ameen Daoud, Anjali Singh, and Mohammed A. Alhusban. 2025. Integrating digital mapping technologies in urban development: Advancing sustainable and resilient infrastructure for SDG 9 achievement—A systematic review. Alexandria Engineering Journal 116: 185–207.

- Bellovin, Steven M. 2023. Is Cybersecurity Liability a Liability? IEEE Security & Privacy 21: 99–100.

- Boss, Steven R., Julie R. Gray, and Diane J. Janvrin. 2024. Be an Expert: A Critical Thinking Approach to Responding to High-Profile Cybersecurity Breaches. Issues in Accounting Education 39: 93–113.

- Brewster, Thomas. 2016. Crooks Behind $81M Bangladesh Bank Heist Linked to Sony Pictures Hackers. Available online: https://www.forbes.com/sites/thomasbrewster/2016/05/13/81m-bangladesh-bank-hackers-sony-pictures-breach/ (accessed on 20 June 2024).

- Cañizares, Javier, Sam Copeland, and Neelke Doorn. 2021. Making Sense of Resilience. Sustainability 13: 8538.

- Carmody, Seth, Andrea Coravos, Ginny Fahs, Audra Hatch, Janine Medina, Beau Woods, and Joshua Corman. 2021. Building resilient medical technology supply chains with a software bill of materials. Npj Digital Medicine 4: 34.

- Cavelty, Myriam Dunn, Carina Eriksen, and Björn Scharte. 2023. Making cyber security more resilient: Adding social considerations to technological fixes. Journal of Risk Research 26: 513–27.

- Chai, Wesley. 2022. Confidentiality, Integrity and Availability (CIA Triad). Available online: https://www.techtarget.com/whatis/definition/Confidentiality-integrity-and-availability-CIA (accessed on 20 June 2024).

- Chiara, Pier Giorgio. 2025. Understanding the Regulatory Approach of the Cyber Resilience Act: Protection of Fundamental Rights in Disguise? European Journal of Risk Regulation 16: 469–84.

- Colabianchi, Silvia, Francesco Costantino, Giulio Di Gravio, Flavio Nonino, and Riccardo Patriarca. 2021. Discussing resilience in the context of cyber physical systems. Computers & Industrial Engineering 160: 107534.

- Colonna, Liane. 2025. The end of open source? Regulating open source under the cyber resilience act and the new product liability directive. Computer Law & Security Review 56: 106105.

- Dede, Georgios, Angeliki Petsa, Sotirios Kavalaris, Evangelos Serrelis, Sotirios Evangelatos, Ioannis Oikonomidis, and Theodoros Kamalakis. 2024. Cybersecurity as a contributor toward resilient Internet of Things (IoT) infrastructure and sustainable economic growth. Information 15: 798.

- Easterly, Jen, and Tim Fanning. 2023. The Attack on Colonial Pipeline: What We’ve Learned & What We’ve Done Over the Past Two Years. Available online: https://www.cisa.gov/news-events/news/attack-colonial-pipeline-what-weve-learned-what-weve-done-over-past-two-years (accessed on 20 June 2024).

- Escobar, Arturo. 1994. Welcome to Cyberia: Notes on the anthropology of cyberculture. Current Anthropology 35: 211–31.

- European Commission. 2025. Communication from the Commission to the European Parliament, the Council, the European Economic and Social Committee and the Committee of the Regions on ProtectEU: A European Internal Security Strategy. COM(2025) 148 Final. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:52025DC0148 (accessed on 20 June 2024).

- European Commission, and High Representative of the Union for Foreign Affairs and Security Policy. 2013. Joint communication to the European Parliament, the Council, the European Economic and Social Committee and the Committee of the Regions: Cybersecurity Strategy of the European Union: An Open, Safe and Secure Cyberspace. JOIN(2013) 1 Final. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:52013JC0001 (accessed on 20 June 2024).

- European Commission, and High Representative of the Union for Foreign Affairs and Security Policy. 2020. Joint Communication to the European Parliament and the Council: The EU’s Cybersecurity Strategy for the Digital Decade. JOIN(2020) 18 Final. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:52020JC0018 (accessed on 20 June 2024).

- European Parliament and Council. 2019. Regulation (EU) 2019/881 of the European Parliament and of the Council of 17 April 2019 on ENISA (the European Union Agency for Cybersecurity) and on Information and Communications Technology Cybersecurity Certification and Repealing Regulation (EU) No 526/2013 (Cybersecurity Act). Official Journal of the European Union L151: 15–69. Available online: https://eur-lex.europa.eu/eli/reg/2019/881/oj/eng (accessed on 20 June 2024).

- European Parliament and Council. 2022. Directive (EU) 2022/2555 of the European Parliament and of the Council of 14 December 2022 on Measures for a High Common Level of Cybersecurity Across the Union (NIS2 Directive). Official Journal of the European Union L333: 80–152. Available online: https://eur-lex.europa.eu/eli/dir/2022/2555/oj/eng (accessed on 20 June 2024).

- European Parliament and Council. 2024. Regulation (EU) 2024/2847 of the European Parliament and of the Council of 23 October 2024 on Horizontal Cybersecurity Requirements for Products with Digital Elements and Amending Regulations (EU) No 168/2013 and (EU) 2019/1020 and Directive (EU) 2020/1828 (Cyber Resilience Act). Official Journal of the European Union L2024/2847: 1–177. Available online: https://eur-lex.europa.eu/eli/reg/2024/2847/oj/eng (accessed on 20 June 2024).

- Executive Order 14028. 2021. Improving the Nation’s Cybersecurity. Federal Register 86: 26633–45. Available online: https://www.govinfo.gov/content/pkg/FR-2021-05-17/pdf/2021-10460.pdf (accessed on 20 June 2024).

- Farkas, Ádám, and László Vikman. 2024. Information operations as a question of law and cyber sovereignty. ELTE Law Journal 12: 187–206.

- Federal Information Security Modernization Act (FISMA). 2014. Public Law No: 113–283. Available online: https://www.congress.gov/bill/113th-congress/house-bill/1163 (accessed on 20 June 2025).

- Gasiba, Theodora, Ulrich Lechner, and Miguel Pinto-Albuquerque. 2020. Sifu—A cybersecurity awareness platform with challenge assessment and intelligent coach. Cybersecurity 3: 24.

- Guo, Wei, Yunlin Fang, Chuanhuang Huang, Haoyu Ou, Changsheng Lin, and Yanfeng Guo. 2022. HyVulDect: A hybrid semantic vulnerability mining system based on graph neural network. Computers & Security 121: 102823.

- Hadi, Hanif, Noraidah Ahmad, Khaldoun Aziz, Yuan Cao, and Mohannad A. Alshara. 2024. Cost-effective resilience: A comprehensive survey and tutorial on assessing open-source cybersecurity tools for multi-tiered defense. IEEE Access 12: 194053–76.

- Hernández-Ramos, Jose L., Sara N. Matheu, and Antonio Skarmeta. 2021. The Challenges of Software Cybersecurity Certification [Building Security In]. IEEE Security & Privacy 19: 99–102.

- ISO (International Organization for Standardization). 2022. Information Security, Cybersecurity and Privacy Protection—Information Security Management Systems—Requirements. ISO/IEC 27001. Geneva: International Organization for Standardization.

- Janca, Tanya. 2021. Alice & Bob Learn Application Security. Indianapolis: John Wiley & Sons, Inc.

- Kamara, Irene. 2024. European cybersecurity standardisation: A tale of two solitudes in view of Europe’s cyber resilience. Innovation: The European Journal of Social Science Research 37: 1441–60.

- Kang, Sanggil, and Sehyun Kim. 2022. CIA-level driven secure SDLC framework for integrating security into SDLC process. Journal of Ambient Intelligence and Humanized Computing 13: 4601–24.

- Kelemen, Roland. 2023. The impact of the Russian-Ukrainian hybrid war on the European Union’s cybersecurity policies and regulations. Connections: The Quarterly Journal 22: 85–104.

- Kelemen, Roland, Joseph Squillace, Richárd Németh, and Justice Cappella. 2024. The impact of digital inequality on IT identity in the light of inequalities in internet access. ELTE Law Journal 12: 173–86.

- Khan, Rizwan Ullah, Siffat Ullah Khan, Muhammad Azeem Akbar, and Mohammed H. Alzahrani. 2022. Security risks of global software development life cycle: Industry practitioner’s perspective. Journal of Software: Evolution and Process 34: e2521.

- Khurshid, Anum, Reem Alsaaidi, Mudassar Aslam, and Shahid Raza. 2022. EU Cybersecurity Act and IoT Certification: Landscape, Perspective and a Proposed Template Scheme. IEEE Access 10: 129932–48.

- Kuday, Ahmet Dogan. 2024. Sustainable Development in the Digital World: The Importance of Cybersecurity. Disaster Medicine and Public Health Preparedness 18: e279.

- Kuzior, Aleksandra, Iryna Tiutiunyk, Anna Zielińska, and Roland Kelemen. 2024. Cybersecurity and cybercrime: Current trends and threats. Journal of International Studies 17: 181–92.

- Latour, Bruno. 1987. Science in Action: How to Follow Scientists and Engineers Through Society. Cambridge: Harvard University Press.

- Longo, Antonella, Ali Aghazadeh Ardebili, Alessandro Lazari, and Antonio Ficarella. 2025. Cyber–Physical Resilience: Evolution of Concept, Indicators, and Legal Frameworks. Electronics 14: 1684.

- Lucini, Valentino. 2023. The Ever-Increasing Cybersecurity Compliance in Europe: The NIS 2 and What All Businesses in the EU Should Be Aware of. Russian Law Journal 11: 911.

- Maurer, Fabian, and Andreas Fritzsche. 2023. Layered structures of robustness and resilience: Evidence from cybersecurity projects for critical infrastructures in Central Europe. Strategic Change 32: 139–53.

- Microsoft. 2022. Podpora Proaktívneho Zabezpečenia s Nulovou Dôverou (Zero Trust) [Supporting Proactive Security with Zero Trust]. Available online: https://www.microsoft.com/sk-sk/security/business/zero-trust (accessed on 20 June 2024).

- Morales-Sáenz, Francisco Isai, José Melchor Medina-Quintero, and Miguel Reyna-Castillo. 2024. Beyond Data Protection: Exploring the Convergence between Cybersecurity and Sustainable Development in Business. Sustainability 16: 5884.

- Nash, Iain. 2021. Cybersecurity in a post-data environment: Considerations on the regulation of code and the role of producer and consumer liability in smart devices. Computer Law & Security Review 40: 105529.

- National Cybersecurity Strategy. 2023. Washington, DC: The White House. Available online: https://bidenwhitehouse.archives.gov/wp-content/uploads/2023/03/National-Cybersecurity-Strategy-2023.pdf (accessed on 20 June 2024).

- North Atlantic Council. 2018. Brussels Summit Declaration. Available online: https://www.nato.int/cps/en/natohq/official_texts_156624.htm (accessed on 20 June 2024).

- Peschka, Vilmos. 1988. A jog sajátossága [The Specificity of Law]. Budapest: Akadémiai Kiadó.

- Pigola, André, and Fernando De Souza Meirelles. 2024. Unraveling trust management in cybersecurity: Insights from a systematic literature review. Information Technology and Management 25: 26.

- Popkova, Elena G., Paolo De Bernardi, Yulia G. Tyurina, and Bruno S. Sergi. 2022. A theory of digital technology advancement to address the grand challenges of sustainable development. Technology in Society 68: 101831.

- Riggs, Hugo, Shahid Tufail, Imtiaz Parvez, Mohd Tariq, Mohammed Aquib Khan, Asham Amir, Kedari Vuda, and Arif Sarwat. 2023. Impact, Vulnerabilities, and Mitigation Strategies for Cyber-Secure Critical Infrastructure. Sensors 23: 4060.

- Saeed, Syed Ali, Sami Ali Altamimi, Nora M. Alkayyal, Eman H. Alshehri, and Dhoha A. Alabbad. 2023. Digital transformation and cybersecurity challenges for businesses resilience: Issues and recommendations. Sensors 23: 6666.

- Shaffique, Mohammed Raiz. 2024. Cyber Resilience Act 2022: A silver bullet for cybersecurity of IoT devices or a shot in the dark? Computer Law & Security Review 54: 106009.

- Shan, Chaoran, Yuwei Gong, Lingxuan Xiong, Sihan Liao, and Yong Wang. 2022. A software vulnerability detection method based on complex network community. Security and Communication Networks 2022: 3024731.

- Sun, Xinchen, Jiazhou Jin, Yuanyuan Yang, and Yushun Pan. 2024. Telling the “bad” to motivate your users to update: Evidence from behavioral and ERP studies. Computers in Human Behavior 153: 108078.

- Tan, Zhuoran, Shameem Parambath, Christos Anagnostopoulos, Jeremy Singer, and Angelos Marnerides. 2025. Advanced Persistent Threats Based on Supply Chain Vulnerabilities: Challenges, Solutions, and Future Directions. IEEE Internet of Things Journal 12: 6371–95.

- Tashtoush, Yaser M., Dana M. Darweesh, Ghaith S. Husari, Osama Y. Darwish, Yazan Y. Darwish, Lama A. Issa, and Haitham A. Ashqar. 2022. Agile approaches for cybersecurity systems, IoT and intelligent transportation. IEEE Access 10: 3944–65.

- U.S. Cybersecurity and Infrastructure Security Agency (CISA). 2024. Secure Software Development Attestation Form Guidance. Available online: https://www.cisa.gov/resources-tools/resources/secure-software-development-attestation-form (accessed on 20 June 2025).

- U.S. National Telecommunications and Information Administration (NTIA). 2021. The Minimum Elements for a Software Bill of Materials (SBOM). U.S. Department of Commerce. Available online: https://www.ntia.gov/report/2021/minimum-elements-software-bill-materials-sbom (accessed on 20 June 2025).

- Van Bossuyt, Douglas L., Brian L. Hale, Robert M. Arlitt, and Nikos Papakonstantinou. 2023. Zero-trust for the system design lifecycle. Journal of Computing and Information Science in Engineering 23: 060812.

- Vandezande, Niels. 2024. Cybersecurity in the EU: How the NIS2-directive stacks up against its predecessor. Computer Law & Security Review 52: 105890.

- Von Solms, Sune, and Lynn Futcher. 2020. Adaption of a secure software development methodology for secure engineering design. IEEE Access 8: 113381–92.

- Wang, Zhitian, Yifan Wen, Zhen Wang, and Pengyu Zhi. 2023. Resilience-based design optimization of engineering systems under degradation and different maintenance strategy. Structural and Multidisciplinary Optimization 66: 219.

- World Economic Forum. 2023. The Global Risks Report 2023, 18th ed. Geneva: World Economic Forum. Available online: https://www3.weforum.org/docs/WEF_Global_Risks_Report_2023.pdf (accessed on 20 June 2024).

- Xiao, Yaping, and Jelena Spanjol. 2021. Yes, but not now! Why some users procrastinate in adopting digital product updates. Journal of Business Research 135: 701–11.

| Criteria | EU: Cyber Resilience Act (CRA) | EU: Cybersecurity Act (CSA) | EU: NIS2 Directive | USA: Executive Order 14028, FISMA, SBOM Regulation |
|---|---|---|---|---|
| Responsibility Allocation | Establishes explicit duties for the economic operators in the digital product supply chain (manufacturers, importers, and distributors), with oversight by national authorities. | Establishes a voluntary, EU-wide certification framework, with the EU Agency for Cybersecurity (ENISA) in a central coordinating role. | Places duties on essential and important entities to implement cybersecurity risk management measures, supervised by national authorities. | In the US, federal agencies are primarily responsible, but software vendors and suppliers must provide SBOMs and timely updates. |
| User & Entity Obligations | Manufacturers must provide clear information to users, ensure security updates throughout a support period that should generally be at least five years (or the product’s expected use time, if shorter), and promptly inform users of actively exploited vulnerabilities and severe incidents. | Provides transparency to users and organizations through voluntary cybersecurity certification schemes and labels, helping to inform purchasing decisions. | Limited direct obligations towards end-users: where appropriate, entities must inform the recipients of their services of significant incidents and cyber threats. The main obligations are incident reporting to national authorities and implementing robust risk management measures. | US regulations obligate vendors to supply SBOMs, issue regular patches, and communicate vulnerabilities to federal clients. |
| Approach to SDGs | Integrates digital sustainability and consumer protection, aiming for a secure and sustainable digital environment as part of the EU’s broader digital strategy. | Promotes institutional trust (SDG 16) and informed consumer choice, contributing to a more transparent and secure single market. | Reinforces societal resilience by securing critical infrastructure and essential services (e.g., healthcare, energy), contributing to SDGs 9 and 16. | US cybersecurity law focuses mainly on national security and protection of federal systems, with less explicit reference to sustainability or SDG principles. |
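Several cells above turn on the software bill of materials (SBOM). The NTIA (2021) minimum-elements report lists seven baseline data fields: supplier name, component name, version of the component, other unique identifiers, dependency relationship, author of SBOM data, and timestamp. The Python sketch below checks a simplified in-memory SBOM for those fields. It is a minimal illustration under stated assumptions, not a conforming SPDX or CycloneDX implementation; the class and function names, the example supplier "Example Corp", and the components `example-app`, `libfoo`, and `libbar` are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Component:
    """One SBOM entry carrying the NTIA per-component baseline fields (simplified)."""
    supplier_name: str
    component_name: str
    version: str
    unique_identifiers: list[str]  # e.g. package URLs (purl) or CPE names
    depends_on: list[str] = field(default_factory=list)  # names of direct dependencies


@dataclass
class SBOM:
    author: str                    # who produced the SBOM data
    timestamp: Optional[datetime]  # when the SBOM data was assembled
    components: list[Component]


def missing_minimum_elements(sbom: SBOM) -> list[str]:
    """List every baseline data field that is absent or empty, in document order."""
    problems: list[str] = []
    if not sbom.author:
        problems.append("SBOM: author")
    if sbom.timestamp is None:
        problems.append("SBOM: timestamp")
    listed = {c.component_name for c in sbom.components}
    for c in sbom.components:
        label = c.component_name or "<unnamed>"
        for name in ("supplier_name", "component_name", "version"):
            if not getattr(c, name):
                problems.append(f"{label}: {name}")
        if not c.unique_identifiers:
            problems.append(f"{label}: unique_identifiers")
        # A dependency relationship is only traceable if the target is itself listed.
        for dep in c.depends_on:
            if dep not in listed:
                problems.append(f"{label}: unlisted dependency {dep}")
    return problems


# A complete two-component SBOM for a hypothetical application that uses log4j-core.
sbom = SBOM(
    author="Example Corp build pipeline",
    timestamp=datetime(2024, 6, 20, tzinfo=timezone.utc),
    components=[
        Component("Example Corp", "example-app", "1.0.0",
                  ["pkg:generic/example-app@1.0.0"], depends_on=["log4j-core"]),
        Component("Apache Software Foundation", "log4j-core", "2.17.1",
                  ["pkg:maven/org.apache.logging.log4j/log4j-core@2.17.1"]),
    ],
)

# An incomplete SBOM that omits most baseline fields.
incomplete = SBOM(author="", timestamp=None,
                  components=[Component("", "libfoo", "", [], depends_on=["libbar"])])
```

A complete record is what allows a vendor or federal customer to answer, within hours of a disclosure such as Log4Shell, whether an affected component ships in a given product; the dependency check reflects the fact that an unlisted transitive component is exactly where such exposure tends to hide.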

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kelemen, R.; Squillace, J.; Medvácz, Á.; Cappella, J.; Bucko, B.; Mazuch, M. Cybersecurity Regulations and Software Resilience: Strengthening Awareness and Societal Stability. Soc. Sci. 2025, 14, 578. https://doi.org/10.3390/socsci14100578
