Approaches in Intelligent Music Production

Abstract

1. Introduction

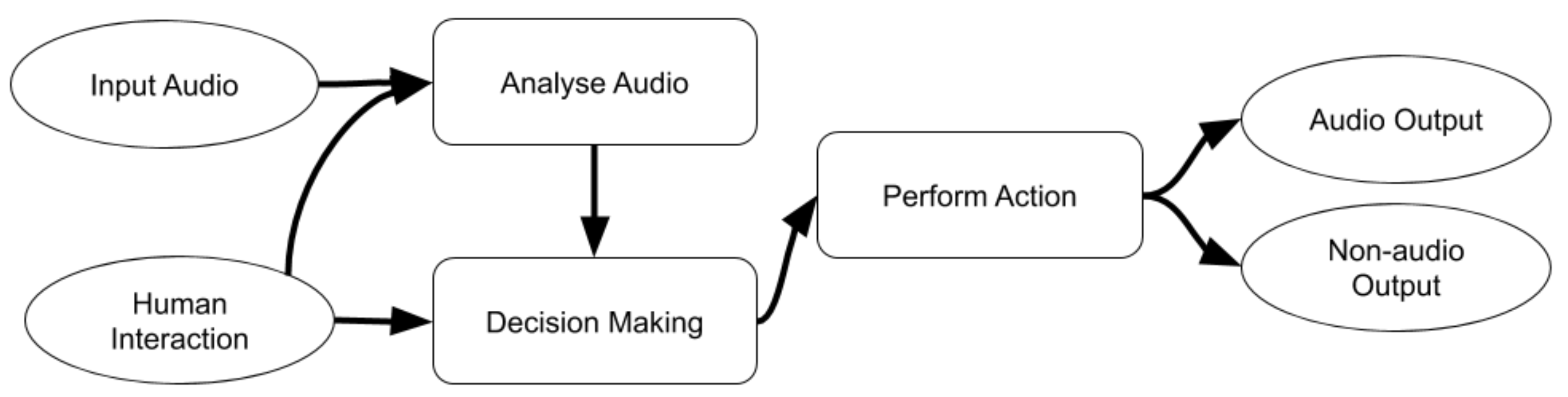

- Levels of Control: The extent to which the human engineer allows the IMP system to direct the audio processing, that is, the restrictions placed on the IMP system when it performs a task based on the observations made (Palladini 2018).

- Knowledge Representation: The approach taken to identify and parse the specific defined goals and make a decision. This is the aspect of the system where knowledge or data are represented, analysis is performed, and decision-making is undertaken (De Man and Reiss 2013a).

- Audio Manipulation: The ability to act upon an environment or perform an action. A change is enacted on the audio, either directly, through some mid-level medium, or by making suggestions towards modification.
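The way these three aspects fit together can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the two-valued `ControlLevel`, the single peak-ceiling rule in `decide`, and the function names are hypothetical and are not taken from any system discussed in this article. The sketch only shows that the control level determines whether a decision is enacted on the audio or merely returned as a suggestion.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class ControlLevel(Enum):
    """Hypothetical, simplified levels of control."""
    SUGGEST = "suggest"  # the system may only propose a change
    APPLY = "apply"      # the system is allowed to enact the change


@dataclass
class Decision:
    parameter: str
    value: float
    reason: str


def decide(peak: float, ceiling: float = 0.9) -> Optional[Decision]:
    """Knowledge representation: one hand-written rule (an assumption)."""
    if peak > ceiling:
        return Decision("gain", ceiling / peak, "peak exceeds ceiling")
    return None


def run(samples: List[float], level: ControlLevel) -> Tuple[List[float], Optional[Decision]]:
    """Observe the audio, decide, then act within the permitted control level."""
    peak = max((abs(x) for x in samples), default=0.0)
    decision = decide(peak)
    if decision is None:
        return samples, None
    if level is ControlLevel.APPLY:
        # Audio manipulation: the change is enacted directly on the audio.
        return [decision.value * x for x in samples], decision
    # Otherwise the audio is left untouched and the decision is a suggestion.
    return samples, decision
```

Calling `run(samples, ControlLevel.SUGGEST)` leaves the audio unchanged and returns the proposed gain, whereas `ControlLevel.APPLY` returns the scaled audio together with the decision that produced it.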

2. Levels of Control

2.1. Insightive

2.2. Suggestive

2.3. Independent

2.4. Automatic

2.5. Control Level Summary

3. Knowledge Representation

3.1. Grounded Theory

3.2. Knowledge Based Systems

3.3. Data Driven

3.4. Knowledge Representation Summary

4. Audio Manipulation

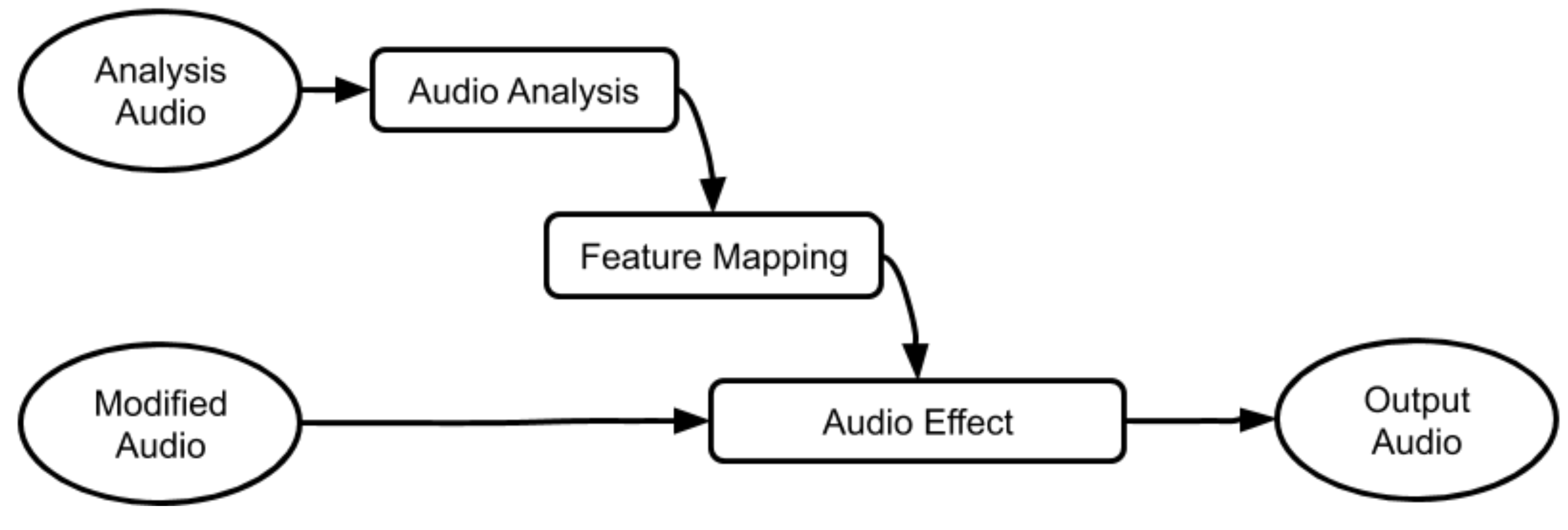

4.1. Adaptive Audio Effects

- Auto-adaptive: Analysis of the input audio to be modified drives the control parameters.

- External-adaptive: Alternative audio tracks are used in combination with the input signal to adjust the control parameters.

- Feedback-adaptive: Analysis of the output audio is used to change the control parameters.

- Cross-adaptive: Alternative audio tracks are used to affect the control parameters, and the input track in turn affects the control parameters on the external tracks.
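The four adaptation types differ only in which signal is analysed to set the control parameter. The sketch below illustrates this with a single block-based gain control. It is a simplified illustration, not an implementation from the literature: the RMS analysis, the `TARGET_LEVEL` and `MAX_GAIN` constants, and all function names are assumptions, and the external-adaptive case here uses only the other track for control.

```python
import math

TARGET_LEVEL = 0.1  # hypothetical target RMS level
MAX_GAIN = 4.0      # hypothetical upper limit on the gain


def rms(block):
    """Root-mean-square level of one block of samples."""
    return math.sqrt(sum(x * x for x in block) / len(block)) if block else 0.0


def gain_for(level):
    """Map an analysed level to a gain control parameter."""
    if level <= 1e-9:
        return 1.0  # leave silence untouched
    return min(MAX_GAIN, TARGET_LEVEL / level)


def apply_gain(block, gain):
    return [gain * x for x in block]


def auto_adaptive(block):
    # Control is derived from the input that is being processed.
    return apply_gain(block, gain_for(rms(block)))


def external_adaptive(block, other_block):
    # Control is derived from a different track (a louder
    # other track lowers the gain, as in ducking).
    return apply_gain(block, gain_for(rms(other_block)))


def feedback_adaptive(blocks):
    # Control for the next block is derived from the previous output.
    gain, output = 1.0, []
    for block in blocks:
        processed = apply_gain(block, gain)
        output.append(processed)
        level = rms(processed)
        if level > 1e-9:
            gain = min(MAX_GAIN, gain * TARGET_LEVEL / level)
    return output


def cross_adaptive(block_a, block_b):
    # Each track sets the control parameter of the other.
    return (apply_gain(block_a, gain_for(rms(block_b))),
            apply_gain(block_b, gain_for(rms(block_a))))
```

In the feedback case the first block passes through at unity gain, because no output has been analysed yet. A practical effect would also smooth the gain over time rather than switching it per block.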

4.2. Direct Transformation

4.3. Audio Manipulation Summary

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Arel, Itamar, Derek C. Rose, and Thomas P. Karnowski. 2010. Deep machine learning—A new frontier in artificial intelligence research. IEEE Computational Intelligence Magazine 5: 13–18.

- Barchiesi, Daniele, and Josh Reiss. 2009. Automatic target mixing using least-squares optimization of gains and equalization settings. Paper presented at the 12th Conference on Digital Audio Effects (DAFx-09), Como, Italy, September 1–4.

- Bartók, Béla, and Benjamin Suchoff. 1993. Béla Bartók Essays. Lincoln: University of Nebraska Press.

- Benito, Adán L., and Joshua D. Reiss. 2017. Intelligent multitrack reverberation based on hinge-loss Markov random fields. Paper presented at the Audio Engineering Society Conference: 2017 AES International Conference on Semantic Audio, Erlangen, Germany, June 22–24.

- Bittner, Rachel M., Justin Salamon, Mike Tierney, Matthias Mauch, Chris Cannam, and Juan Pablo Bello. 2014. MedleyDB: A multitrack dataset for annotation-intensive MIR research. Paper presented at the International Society for Music Information Retrieval (ISMIR) 2014, Taipei, Taiwan, October 27–31; vol. 14, pp. 155–60.

- Bocko, Gregory, Mark F. Bocko, Dave Headlam, Justin Lundberg, and Gang Ren. 2010. Automatic music production system employing probabilistic expert systems. In Audio Engineering Society Convention 129. New York: Audio Engineering Society.

- Bromham, Gary. 2016. How can academic practice inform mix-craft? In Mixing Music. Perspectives on Music Production. Edited by Russ Hepworth-Sawyer and Jay Hodgson. Oxon: Taylor & Francis, chp. 16. pp. 245–56.

- Bromham, Gary, David Moffat, György Fazekas, Mathieu Barthet, and Mark B. Sandler. 2018. The impact of compressor ballistics on the perceived style of music. In Audio Engineering Society Convention 145. New York: Audio Engineering Society.

- Bruford, Fred, Mathieu Barthet, SKoT McDonald, and Mark B. Sandler. 2019. Groove explorer: An intelligent visual interface for drum loop library navigation. Paper presented at the 24th ACM Intelligent User Interfaces Conference (IUI), Marina del Rey, CA, USA, March 17–20.

- Cascone, Kim. 2000. The aesthetics of failure: “Post-digital” tendencies in contemporary computer music. Computer Music Journal 24: 12–18.

- Chourdakis, Emmanouil Theofanis, and Joshua D. Reiss. 2016. Automatic control of a digital reverberation effect using hybrid models. In Audio Engineering Society Conference: 60th International Conference: DREAMS (Dereverberation and Reverberation of Audio, Music, and Speech). Leuven: Audio Engineering Society.

- Chourdakis, Emmanouil T., and Joshua D. Reiss. 2017. A machine-learning approach to application of intelligent artificial reverberation. Journal of the Audio Engineering Society 65: 56–65.

- Cohen, Sara. 1993. Ethnography and popular music studies. Popular Music 12: 123–38.

- De Man, Brecht, Nicholas Jillings, David Moffat, Joshua D. Reiss, and Ryan Stables. 2016. Subjective comparison of music production practices using the Web Audio Evaluation Tool. Paper presented at the 2nd AES Workshop on Intelligent Music Production, London, UK, September 13.

- De Man, Brecht, Kirk McNally, and Joshua D. Reiss. 2017. Perceptual evaluation and analysis of reverberation in multitrack music production. Journal of the Audio Engineering Society 65: 108–16.

- De Man, Brecht, Mariano Mora-Mcginity, György Fazekas, and Joshua D. Reiss. 2014. The open multitrack testbed. Paper presented at the 137th Convention of the Audio Engineering Society, Los Angeles, CA, USA, October 9–12.

- De Man, Brecht, and Joshua D. Reiss. 2013a. A knowledge-engineered autonomous mixing system. In Audio Engineering Society Convention 135. New York: Audio Engineering Society.

- De Man, Brecht, and Joshua D. Reiss. 2013b. A semantic approach to autonomous mixing. Paper presented at the 2013 Art of Record Production Conference (JARP) 8, Université Laval, Quebec, QC, Canada, December 1.

- De Man, Brecht, Joshua D. Reiss, and Ryan Stables. 2017. Ten years of automatic mixing. Paper presented at the 3rd AES Workshop on Intelligent Music Production, Salford, UK, September 15.

- Deruty, Emmanuel. 2016. Goal-oriented mixing. Paper presented at the 2nd AES Workshop on Intelligent Music Production, London, UK, September 13.

- Dugan, Dan. 1975. Automatic microphone mixing. Journal of the Audio Engineering Society 23: 442–49.

- Eno, Brian. 2004. The studio as compositional tool. In Audio Culture: Readings in Modern Music. Edited by Christoph Cox and Daniel Warner. London: Continuum, chp. 22. pp. 127–30.

- Fenton, Steven. 2018. Automatic mixing of multitrack material using modified loudness models. In Audio Engineering Society Convention 145. New York: Audio Engineering Society.

- Ford, Jon, Mark Cartwright, and Bryan Pardo. 2015. Mixviz: A tool to visualize masking in audio mixes. In Audio Engineering Society Convention 139. New York: Audio Engineering Society.

- Glaser, Barney, and Anselm Strauss. 1967. Grounded theory: The discovery of grounded theory. Sociology the Journal of the British Sociological Association 12: 27–49.

- Gonzalez, Enrique Perez, and Joshua D. Reiss. 2007. Automatic mixing: Live downmixing stereo panner. Paper presented at the 10th International Conference on Digital Audio Effects (DAFx’07), Bordeaux, France, September 10–15; pp. 63–68.

- Gonzalez, Enrique Perez, and Joshua D. Reiss. 2008. Improved control for selective minimization of masking using interchannel dependancy effects. Paper presented at the 11th International Conference on Digital Audio Effects (DAFx’08), Espoo, Finland, September 1–4; p. 12.

- Hargreaves, Steven. 2014. Music Metadata Capture in the Studio from Audio and Symbolic Data. Ph.D. thesis, Queen Mary University of London, London, UK.

- Jillings, Nicholas, and Ryan Stables. 2017. Automatic masking reduction in balance mixes using evolutionary computing. In Audio Engineering Society Convention 143. New York: Audio Engineering Society.

- Jun, Sanghoon, Daehoon Kim, Mina Jeon, Seungmin Rho, and Eenjun Hwang. 2015. Social mix: Automatic music recommendation and mixing scheme based on social network analysis. The Journal of Supercomputing 71: 1933–54.

- King, Andrew. 2015. Technology as a vehicle (tool and practice) for developing diverse creativities. In Activating Diverse Musical Creativities: Teaching and Learning in Higher Music Education. Edited by Pamela Burnard and Elizabeth Haddon. London: Bloomsbury Publishing, chp. 11. pp. 203–22.

- Kolasinski, Bennett. 2008. A framework for automatic mixing using timbral similarity measures and genetic optimization. In Audio Engineering Society Convention 124. Amsterdam: Audio Engineering Society.

- Martínez Ramírez, Marco A., Emmanouil Benetos, and Joshua D. Reiss. 2019. A general-purpose deep learning approach to model time-varying audio effects. Paper presented at the International Conference on Digital Audio Effects (DAFx19), Birmingham, UK, September 2–6.

- Martínez Ramírez, Marco A., and Joshua D. Reiss. 2017a. Deep learning and intelligent audio mixing. Paper presented at the 3rd Workshop on Intelligent Music Production, Salford, UK, September 15.

- Martínez Ramírez, Marco A., and Joshua D. Reiss. 2017b. Stem audio mixing as a content-based transformation of audio features. Paper presented at the 19th IEEE Workshop on Multimedia Signal Processing (MMSP), Luton, UK, October 16–18; pp. 1–6.

- Martínez Ramírez, Marco A., and Joshua D. Reiss. 2019. Modeling nonlinear audio effects with end-to-end deep neural networks. Paper presented at the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, May 12–17; pp. 171–75.

- Merchel, Sebastian, M. Ercan Altinsoy, and Maik Stamm. 2012. Touch the sound: Audio-driven tactile feedback for audio mixing applications. Journal of the Audio Engineering Society 60: 47–53.

- Mimilakis, Stylianos Ioannis, Estefanía Cano, Jakob Abeßer, and Gerald Schuller. 2016b. New sonorities for jazz recordings: Separation and mixing using deep neural networks. Paper presented at the 2nd AES Workshop on Intelligent Music Production (WIMP), London, UK, September 13; Volume 2.

- Mimilakis, Stylianos Ioannis, Konstantinos Drossos, Andreas Floros, and Dionysios Katerelos. 2013. Automated tonal balance enhancement for audio mastering applications. In Audio Engineering Society Convention 134. Rome: Audio Engineering Society.

- Moffat, David, David Ronan, and Joshua D. Reiss. 2015. An evaluation of audio feature extraction toolboxes. Paper presented at the 18th International Conference on Digital Audio Effects (DAFx-15), Trondheim, Norway, November 30–December 3.

- Moffat, David, and Mark B. Sandler. 2018. Adaptive ballistics control of dynamic range compression for percussive tracks. In Audio Engineering Society Convention 145. New York: Audio Engineering Society.

- Moffat, David, and Mark B. Sandler. 2019. Automatic mixing level balancing enhanced through source interference identification. In Audio Engineering Society Convention 146. Dublin: Audio Engineering Society.

- Moffat, David, Florian Thalmann, and Mark B. Sandler. 2018. Towards a semantic web representation and application of audio mixing rules. Paper presented at the 4th Workshop on Intelligent Music Production (WIMP), Huddersfield, UK, September 14.

- Muir, Bonnie M. 1994. Trust in automation: Part I. Theoretical issues in the study of trust and human intervention in automated systems. Ergonomics 37: 1905–22.

- Ooi, Kazushige, Keiji Izumi, Mitsuyuki Nozaki, and Ikuya Takeda. 1990. An advanced autofocus system for video camera using quasi condition reasoning. IEEE Transactions on Consumer Electronics 36: 526–30.

- Pachet, François, and Olivier Delerue. 2000. On-the-fly multi-track mixing. In Audio Engineering Society Convention 109. Los Angeles: Audio Engineering Society.

- Palladini, Alessandro. 2018. Intelligent audio machines. Paper presented at the Keynote Talk at 4th Workshop on Intelligent Music Production (WIMP-18), Huddersfield, UK, September 14.

- Pardo, Bryan, Zafar Rafii, and Zhiyao Duan. 2018. Audio source separation in a musical context. In Springer Handbook of Systematic Musicology. Berlin: Springer, pp. 285–98.

- Paterson, Justin, Rob Toulson, Sebastian Lexer, Tim Webster, Steve Massey, and Jonas Ritter. 2016. Interactive digital music: Enhancing listener engagement with commercial music. KES Transaction on Innovation In Music 2: 193–209.

- Pestana, Pedro Duarte, Zheng Ma, Joshua D. Reiss, Alvaro Barbosa, and Dawn A.A. Black. 2013. Spectral characteristics of popular commercial recordings 1950–2010. In Audio Engineering Society Convention 135. New York: Audio Engineering Society.

- Pestana, Pedro Duarte Leal Gomes. 2013. Automatic Mixing Systems Using Adaptive Digital Audio Effects. Ph.D. Thesis, Universidade Católica Portuguesa, Porto, Portugal.

- Prior, Nick. 2012. Digital formations of popular music. Réseaux 2: 66–90.

- Reiss, Joshua, and Øyvind Brandtsegg. 2018. Applications of cross-adaptive audio effects: Automatic mixing, live performance and everything in between. Frontiers in Digital Humanities 5: 17.

- Reiss, Joshua D. 2011. Intelligent systems for mixing multichannel audio. Paper presented at the 17th International Conference on Digital Signal Processing (DSP), Corfu, Greece, July 6–8; pp. 1–6.

- Reiss, Joshua D. 2018. Do You Hear What I Hear? The Science of Everyday Sounds. Inaugural Lecture. London: Queen Mary University.

- Rödel, Christina, Susanne Stadler, Alexander Meschtscherjakov, and Manfred Tscheligi. 2014. Towards autonomous cars: The effect of autonomy levels on acceptance and user experience. Paper presented at the 6th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Seattle, WA, USA, September 17–19; New York: ACM, pp. 1–8.

- Ronan, David, Brecht De Man, Hatice Gunes, and Joshua D. Reiss. 2015. The impact of subgrouping practices on the perception of multitrack music mixes. In Audio Engineering Society Convention 139. New York: Audio Engineering Society.

- Ronan, David, Hatice Gunes, and Joshua D. Reiss. 2017. Analysis of the subgrouping practices of professional mix engineers. In Audio Engineering Society Convention 142. New York: Audio Engineering Society.

- Ronan, David, Zheng Ma, Paul Mc Namara, Hatice Gunes, and Joshua D. Reiss. 2018. Automatic minimisation of masking in multitrack audio using subgroups. arXiv.

- Ronan, David, David Moffat, Hatice Gunes, and Joshua D. Reiss. 2015. Automatic subgrouping of multitrack audio. Paper presented at the 18th International Conference on Digital Audio Effects (DAFx-15), Trondheim, Norway, November 30–December 3.

- Russell, Stuart J., and Peter Norvig. 2016. Artificial Intelligence: A Modern Approach, 3rd ed. Malaysia: Pearson Education Limited.

- Schmidt, Brian. 2003. Interactive mixing of game audio. In Audio Engineering Society Convention 115. New York: Audio Engineering Society.

- Scott, Jeffrey J., and Youngmoo E. Kim. 2011. Analysis of acoustic features for automated multi-track mixing. Paper presented at the 12th International Society for Music Information Retrieval Conference (ISMIR), Miami, FL, USA, October 24–28; pp. 621–26.

- Selfridge, Rod, David Moffat, Eldad J. Avital, and Joshua D. Reiss. 2018. Creating real-time aeroacoustic sound effects using physically informed models. Journal of the Audio Engineering Society 66: 594–607.

- Sheridan, Thomas B., and William L. Verplank. 1978. Human and Computer Control of Undersea Teleoperators. Technical Report. Cambridge: Massachusetts Inst of Tech Cambridge Man-Machine Systems Lab.

- Smolka, Bogdan, K. Czubin, Jon Yngve Hardeberg, Kostas N. Plataniotis, Marek Szczepanski, and Konrad Wojciechowski. 2003. Towards automatic redeye effect removal. Pattern Recognition Letters 24: 1767–85.

- Stasis, Spyridon, Nicholas Jillings, Sean Enderby, and Ryan Stables. 2017. Audio processing chain recommendation. Paper presented at the 20th International Conference on Digital Audio Effects, Edinburgh, UK, September 5–9.

- Stevens, Richard, and Dave Raybould. 2013. The Game Audio Tutorial: A Practical Guide to Creating and Implementing Sound and Music for Interactive Games. London: Routledge.

- Terrell, Michael, and Mark Sandler. 2012. An offline, automatic mixing method for live music, incorporating multiple sources, loudspeakers, and room effects. Computer Music Journal 36: 37–54.

- Terrell, Michael, Andrew Simpson, and Mark Sandler. 2014. The mathematics of mixing. Journal of the Audio Engineering Society 62: 4–13.

- Van Waterschoot, Toon, and Marc Moonen. 2011. Fifty years of acoustic feedback control: State of the art and future challenges. Proceedings of the IEEE 99: 288–327.

- Verfaille, Vincent, Daniel Arfib, Florian Keiler, Adrian von dem Knesebeck, and Udo Zölzer. 2011. Adaptive digital audio effects. In DAFX—Digital Audio Effects. Edited by Udo Zölzer. Hoboken: John Wiley & Sons, Inc., chp. 9. pp. 321–91.

- Verfaille, Vincent, Udo Zölzer, and Daniel Arfib. 2006. Adaptive digital audio effects (A-DAFx): A new class of sound transformations. IEEE Transactions on Audio, Speech, and Language Processing 14: 1817–31.

- Verma, Prateek, and Julius O. Smith. 2018. Neural style transfer for audio spectograms. Paper presented at the 31st Conference on Neural Information Processing Systems (NIPS 2017), Workshop for Machine Learning for Creativity and Design, Long Beach, CA, USA, December 4–9.

- Vickers, Earl. 2010. The loudness war: Background, speculation, and recommendations. In Audio Engineering Society Convention 129. San Francisco: Audio Engineering Society.

- Wichern, Gordon, Aaron Wishnick, Alexey Lukin, and Hannah Robertson. 2015. Comparison of loudness features for automatic level adjustment in mixing. In Audio Engineering Society Convention 139. New York: Audio Engineering Society.

- Wilson, Alex, and Bruno Fazenda. 2015. 101 mixes: A statistical analysis of mix-variation in a dataset of multi-track music mixes. In Audio Engineering Society Convention 139. New York: Audio Engineering Society.

- Witten, Ian H., Eibe Frank, Mark A. Hall, and Christopher J. Pal. 2016. Data Mining: Practical Machine Learning Tools and Techniques. Burlington: Morgan Kaufmann.

- Xambó, Anna, Frederic Font, György Fazekas, and Mathieu Barthet. 2019. Leveraging online audio commons content for media production. In Foundations in Sound Design for Linear Media. London: Routledge, pp. 248–82.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Moffat, D.; Sandler, M.B. Approaches in Intelligent Music Production. Arts 2019, 8, 125. https://doi.org/10.3390/arts8040125
