An Automatic Registration System Based on Augmented Reality to Enhance Civil Infrastructure Inspections †

Abstract

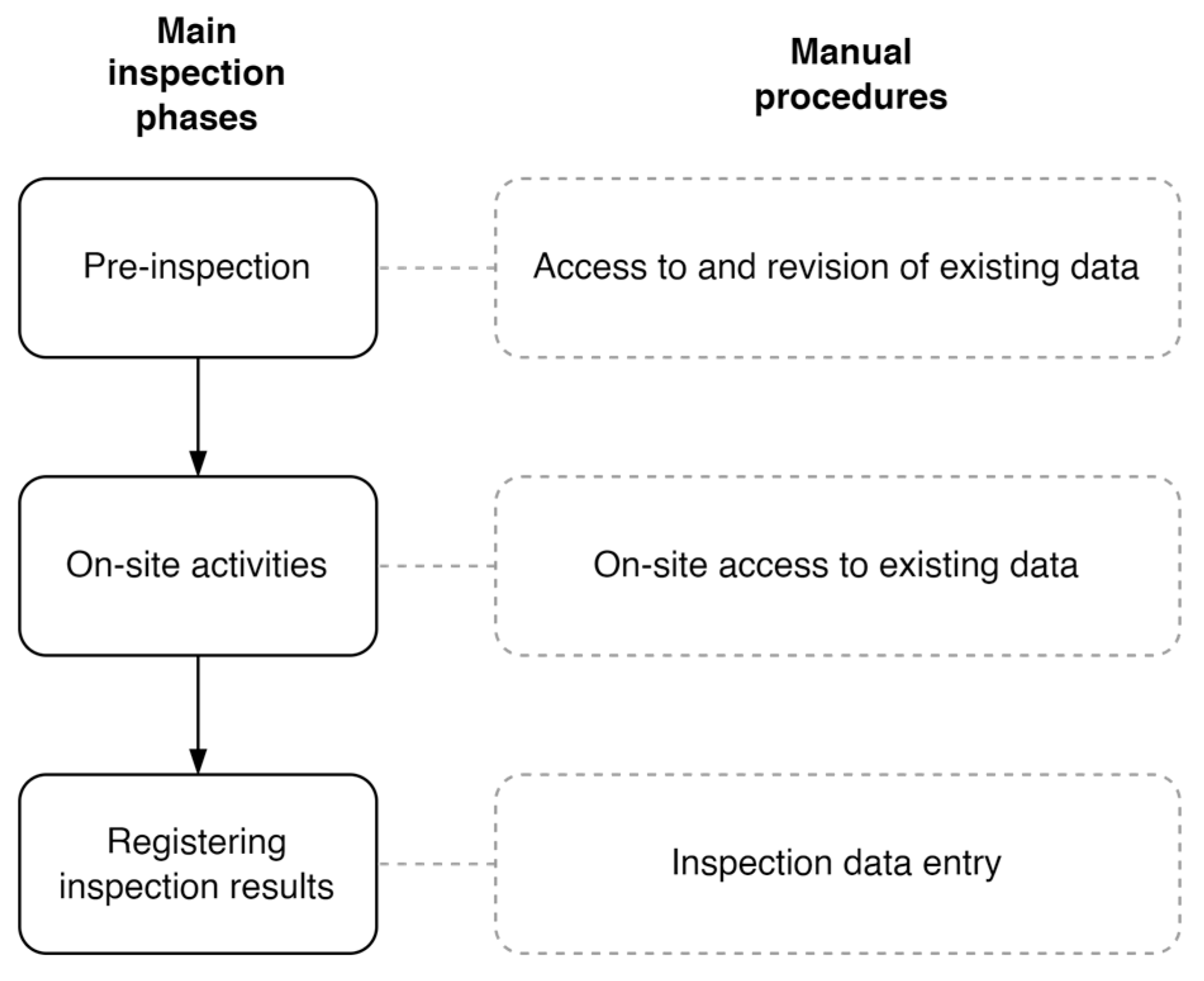

1. Introduction

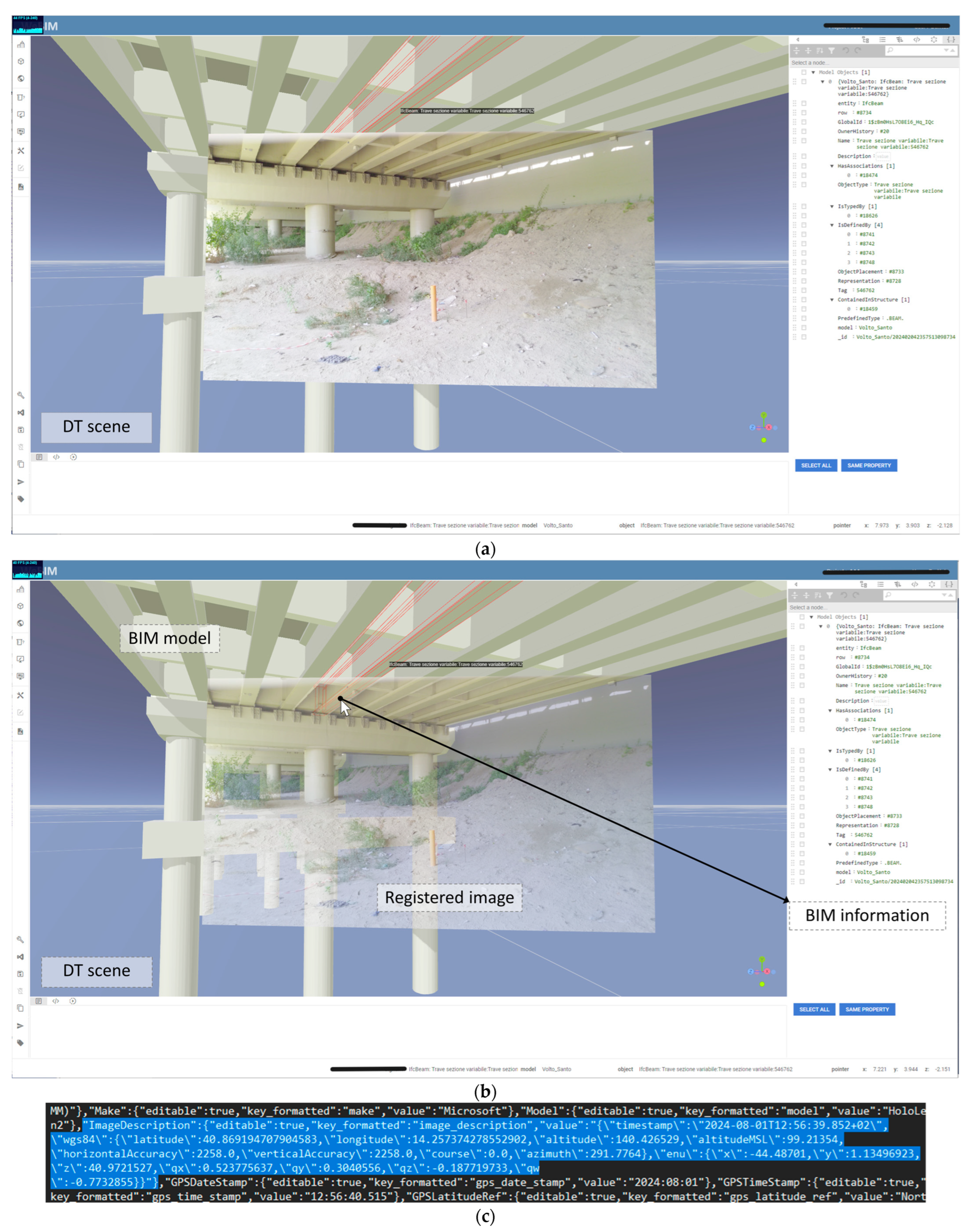

- Automatic registration of newly captured data (i.e., images acquired with the AR device) with existing open BIM models within a DT’s georeferenced scene;

- High-accuracy, on-site AR visualization of existing DT information (i.e., BIM models), without requiring any manual procedures.

2. Background

- Updating digital models with new geometric and semantic information, and

- Accessing relevant information directly in the field.

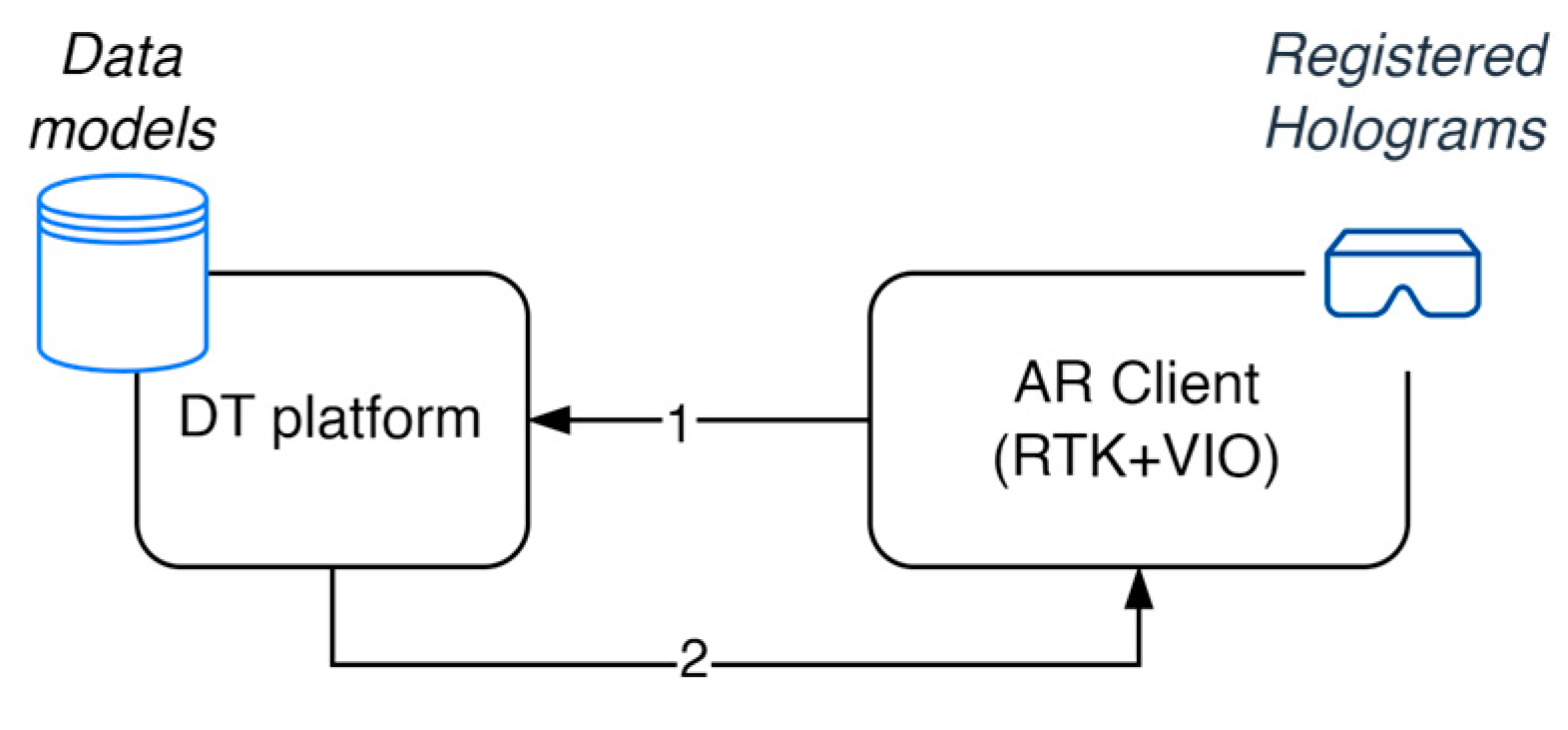

3. Research Methodology

- Field-to-virtual data registration: the automatic alignment of newly captured inspection data (e.g., images) with open BIM models to enrich the DT database;

- Virtual-to-field data registration: the high-accuracy alignment of AR holograms of open BIM models with the real asset to provide seamless access to stored information during in-field inspections.

- The registration of AR holograms of the graph representation of the open BIM model with the real asset. Domain experts deemed this valuable for accessing and visualizing relevant information in the field during inspection procedures, such as the geometry of hidden structures;

- The registration of newly captured visual information (i.e., images documenting the asset’s conditions) with the graph representation of the open BIM model, which domain experts deemed valuable for evaluating deterioration trends.

- Operators must not be required to perform manual procedures to register the 6-DoF pose of the device and holograms during inspection tasks. Any manual action would cost time, interfere with ongoing activities, and may require expert skills.

- The system must be usable in unprepared environments. Preparing the real and virtual environments with markers and/or other infrastructure before deployment limits the scope of the system, drastically increases deployment time, may require expert knowledge, and ultimately limits scalability.

- Inspection scenarios may include urban-canyon environments. The system must cope with the temporary absence of GNSS-RTK signals (e.g., under a bridge). Any service interruption and/or misalignment would limit the usability of the system.

- The solution must not suffer from drift, especially over medium to long distances, since activities may be spread over wide areas. Drift is the accumulation of small measurement errors of the inertial system over time. In relatively small environments where visual features are available, such as indoor spaces, the visual component of the VIO system is usually sufficient to compensate for IMU errors. However, the visual component fails to compensate for IMU errors in completely open environments, due to the size of the space and the dynamic nature of the scenes, and also when travelling “long” distances [60]. Drift therefore restricts the system’s area of use and limits scalability.

- The system must be accessible to consumers and must not be invasive.
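The drift requirement above can be made concrete with a textbook dead-reckoning argument: integrating a constant, uncompensated accelerometer bias b twice gives a position error of (1/2)·b·t², which grows quadratically with time. The sketch below illustrates only this inertial error growth. It is not a model of the HoloLens 2 visual–inertial tracker, and the 0.01 m/s² bias is a hypothetical value chosen for illustration.

```python
def bias_position_error(bias_mps2: float, t_s: float) -> float:
    """Closed-form position error (m) after t_s seconds from a constant accelerometer bias."""
    return 0.5 * bias_mps2 * t_s ** 2


def integrate_bias(bias_mps2: float, duration_s: float, dt_s: float) -> float:
    """Dead-reckon a stationary device whose accelerometer reports only the bias.

    Velocity and position are integrated with the trapezoidal rule, which is
    exact for a constant acceleration, so the result matches the closed form.
    """
    velocity = 0.0
    position = 0.0
    for _ in range(int(round(duration_s / dt_s))):
        new_velocity = velocity + bias_mps2 * dt_s
        position += 0.5 * (velocity + new_velocity) * dt_s
        velocity = new_velocity
    return position


if __name__ == "__main__":
    bias = 0.01  # hypothetical uncompensated bias, m/s^2
    for t in (10.0, 30.0, 60.0):
        print(f"t = {t:4.0f} s  ->  drift = {bias_position_error(bias, t):6.2f} m")
```

With the assumed bias, the error is 0.5 m after 10 s but 18 m after 60 s. An absolute reference is therefore needed to bound it: visual features in small indoor spaces, or GNSS-RTK in large open areas.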

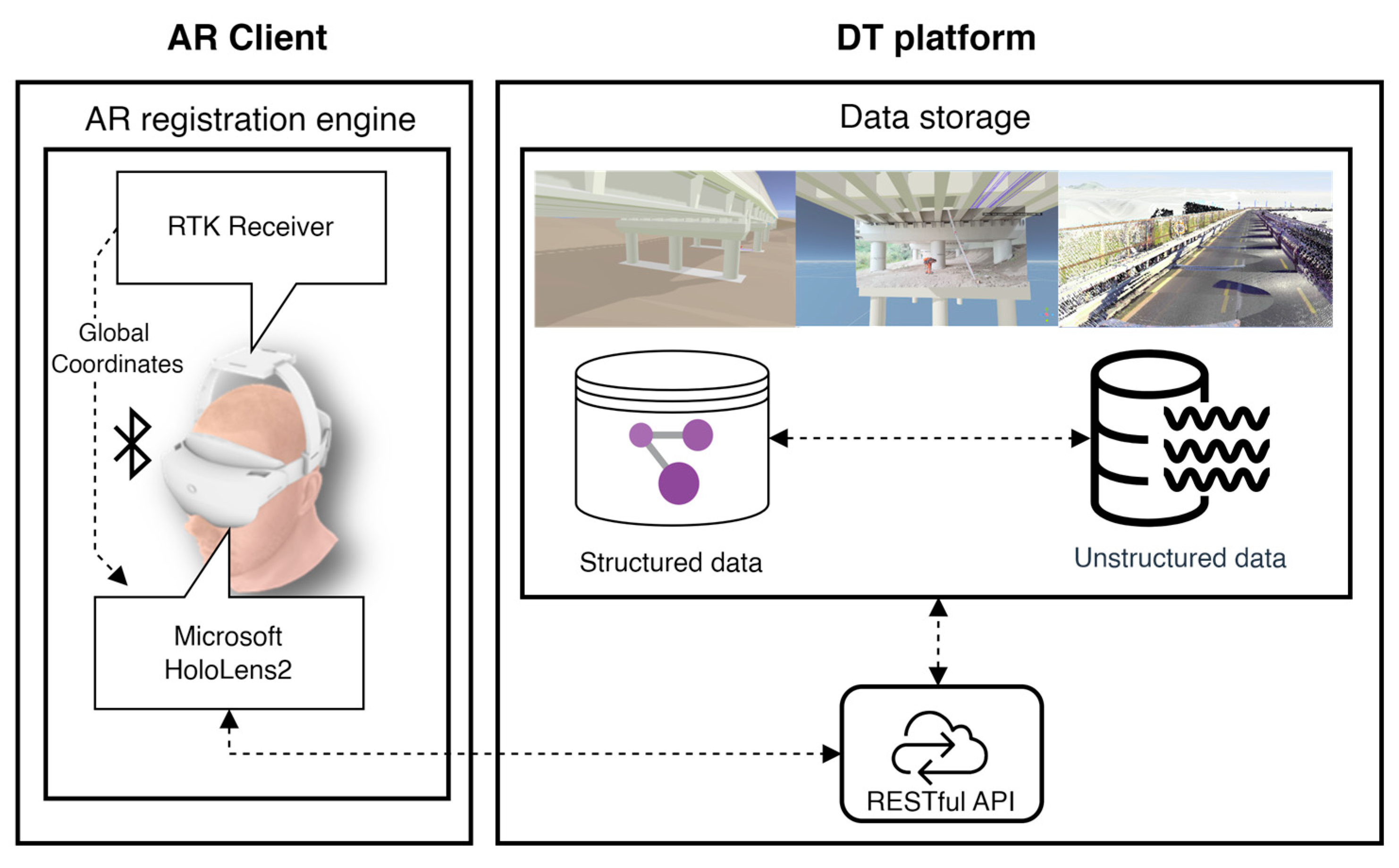

3.1. System Architecture

Hardware Components

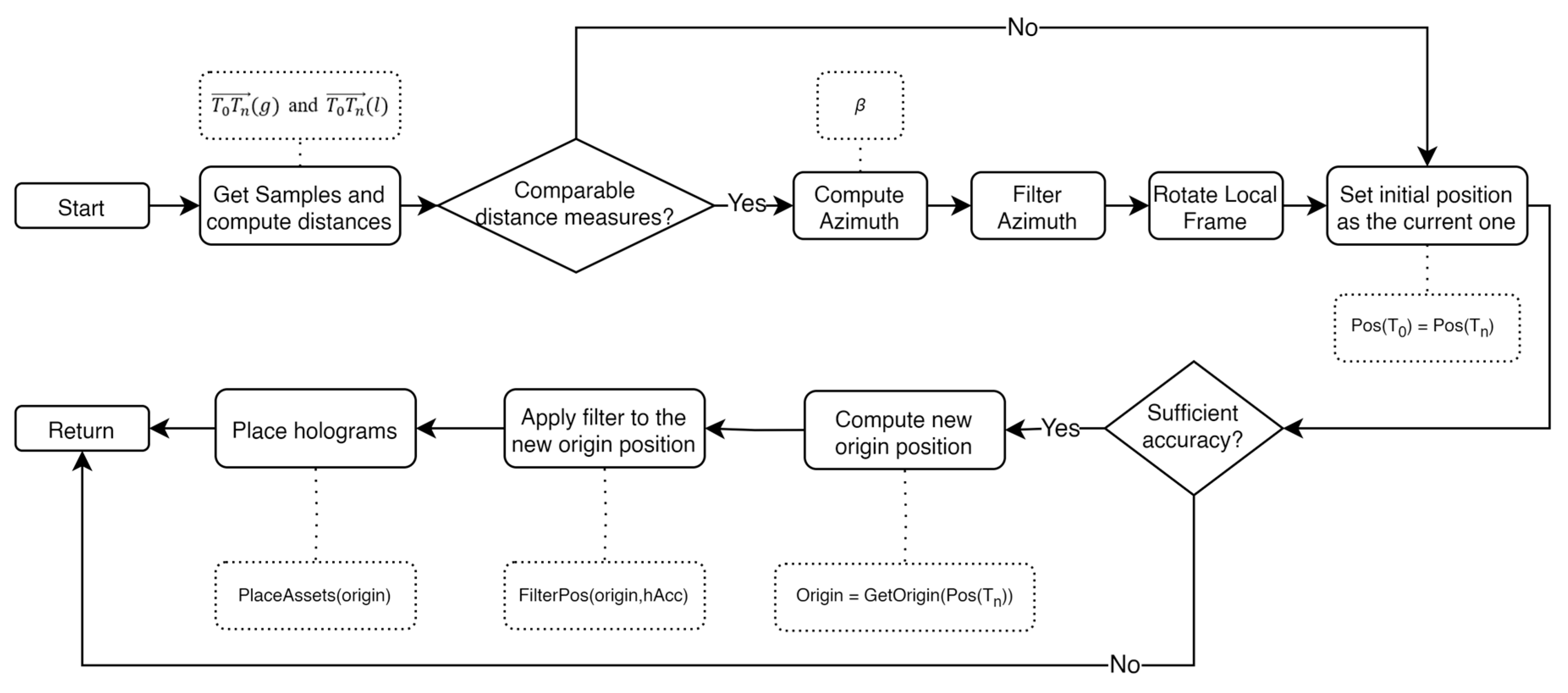

3.2. AR Registration in Large, Unprepared Open Environments

- GNSS-RTK navigation mode: uses RTK data to estimate the geographical 6-DoF pose of the AR device, compensating for the drift of the HoloLens 2’s visual–inertial tracking system, and

- VIO navigation mode: activated when RTK data do not meet the required accuracy.
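The two modes above imply a switching rule driven by the accuracy of the RTK data. The following is a minimal sketch of such a rule. It assumes the receiver reports a horizontal-accuracy estimate `hAcc` in millimetres, as in the RTK log reported with this article. The `ModeSelector` class, the 50 mm entry threshold, and the 100 mm exit threshold are hypothetical, because the authors' actual criterion is not given in this section. Two thresholds (hysteresis) are used so that the mode does not toggle on every sample when `hAcc` hovers around a single limit.

```python
from enum import Enum
from typing import Iterable, List


class NavMode(Enum):
    GNSS_RTK = "GNSS-RTK"
    VIO = "VIO"


class ModeSelector:
    """Hypothetical hysteresis switch between GNSS-RTK and VIO navigation modes."""

    def __init__(self, enter_rtk_mm: float = 50.0, leave_rtk_mm: float = 100.0) -> None:
        # Both thresholds are illustrative assumptions, not values from the article.
        if enter_rtk_mm > leave_rtk_mm:
            raise ValueError("entry threshold must not exceed exit threshold")
        self.enter_rtk_mm = enter_rtk_mm
        self.leave_rtk_mm = leave_rtk_mm
        self.mode = NavMode.VIO  # rely on VIO until a sufficiently accurate fix arrives

    def update(self, h_acc_mm: float) -> NavMode:
        """Update the mode with a new horizontal-accuracy estimate (mm)."""
        if self.mode is NavMode.VIO and h_acc_mm <= self.enter_rtk_mm:
            self.mode = NavMode.GNSS_RTK
        elif self.mode is NavMode.GNSS_RTK and h_acc_mm > self.leave_rtk_mm:
            self.mode = NavMode.VIO
        return self.mode


def run(h_acc_series: Iterable[float]) -> List[NavMode]:
    """Feed a sequence of hAcc values (mm) to a fresh selector and collect the modes."""
    selector = ModeSelector()
    return [selector.update(h) for h in h_acc_series]


if __name__ == "__main__":
    # hAcc values (mm) of the excerpted rows of the RTK log, in time order
    excerpt = [2251, 2330, 2140, 15, 14, 14, 14, 14, 14, 2899, 2530, 2134, 14, 16, 14]
    print([m.value for m in run(excerpt)])
```

The selector receives the hAcc values of the excerpted log rows in order. It stays in VIO mode for the initial samples (about 2 m accuracy) and switches to GNSS-RTK once hAcc drops to 15 mm. It falls back to VIO when hAcc rises to 2899 mm, then returns to GNSS-RTK for the final 14–16 mm samples.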

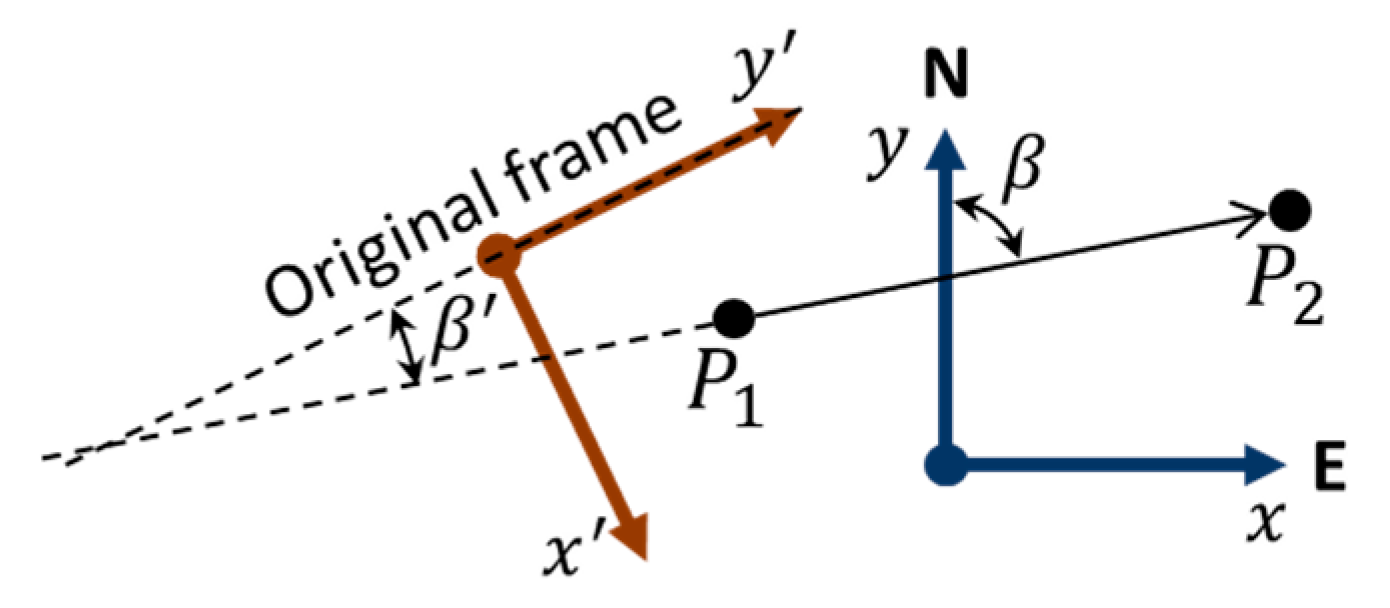

3.2.1. Azimuth Accuracy and Filtering

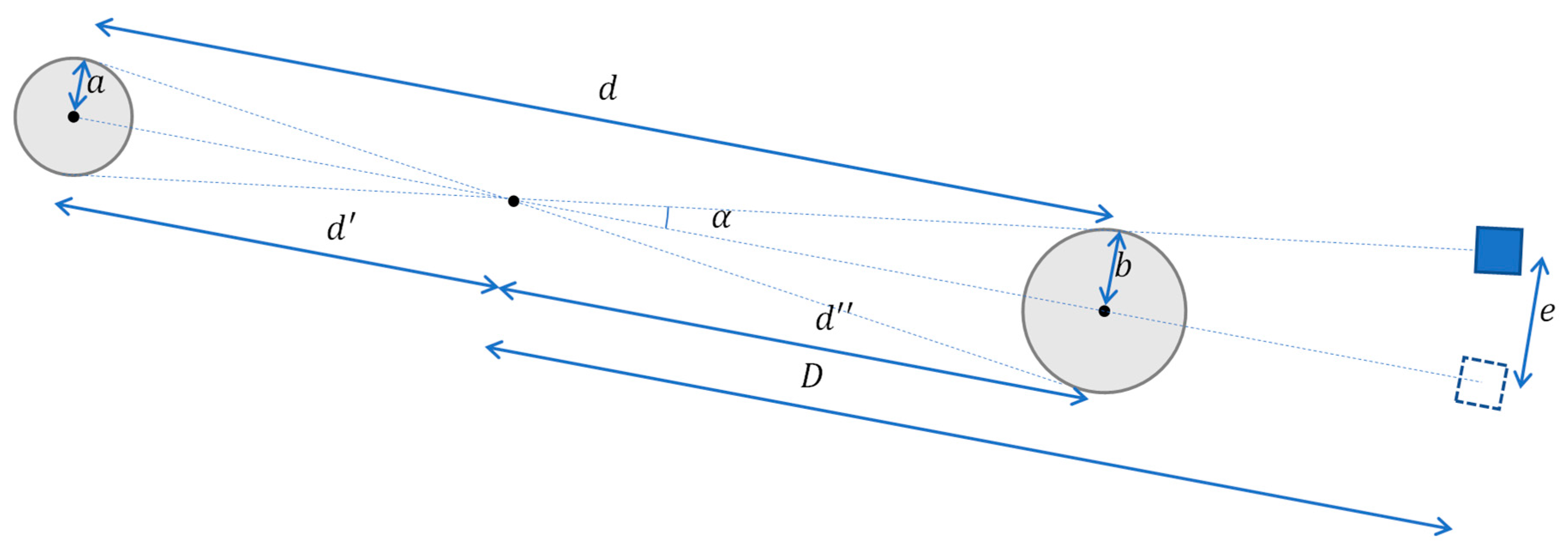

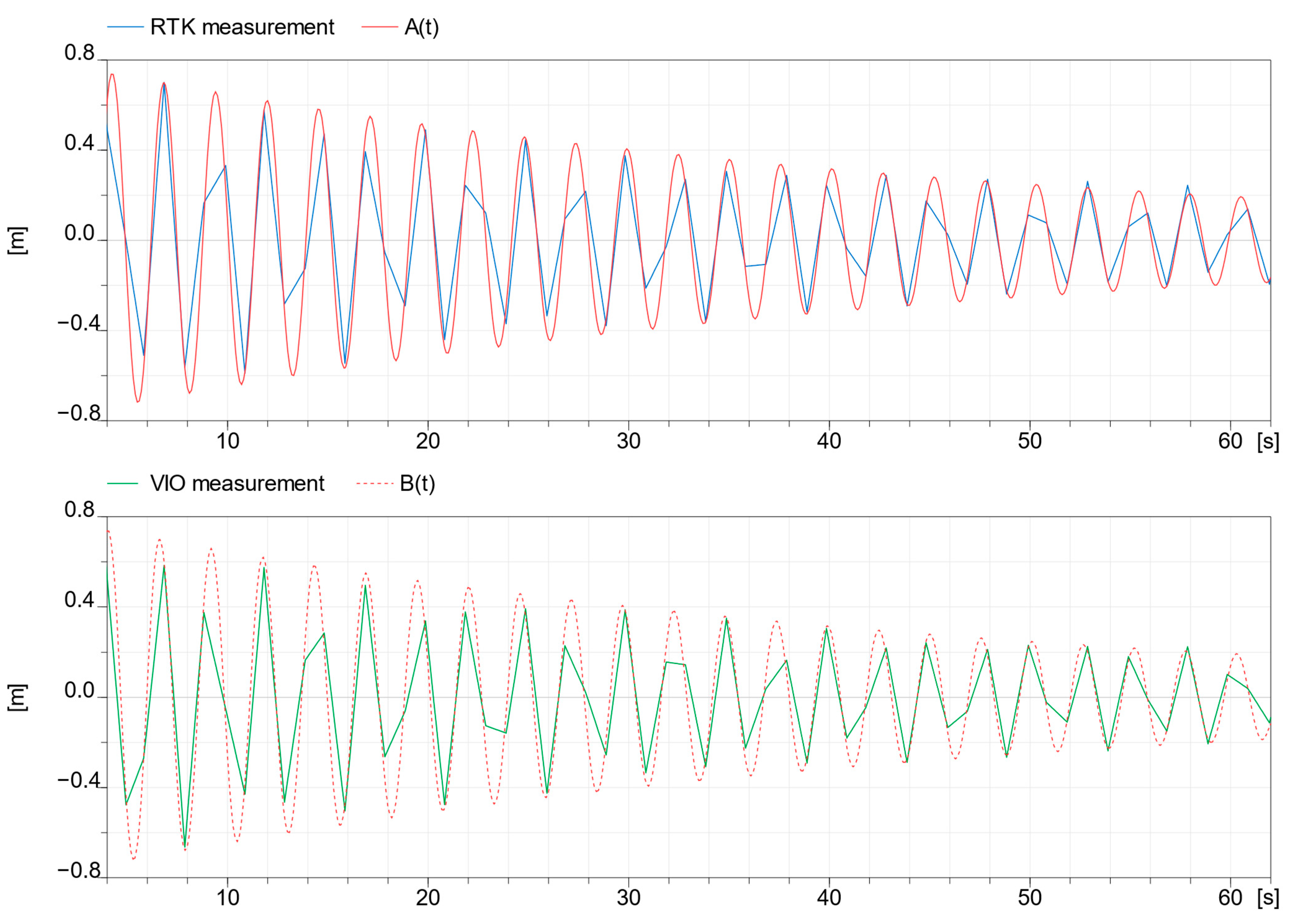

3.2.2. RTK Position Accuracy

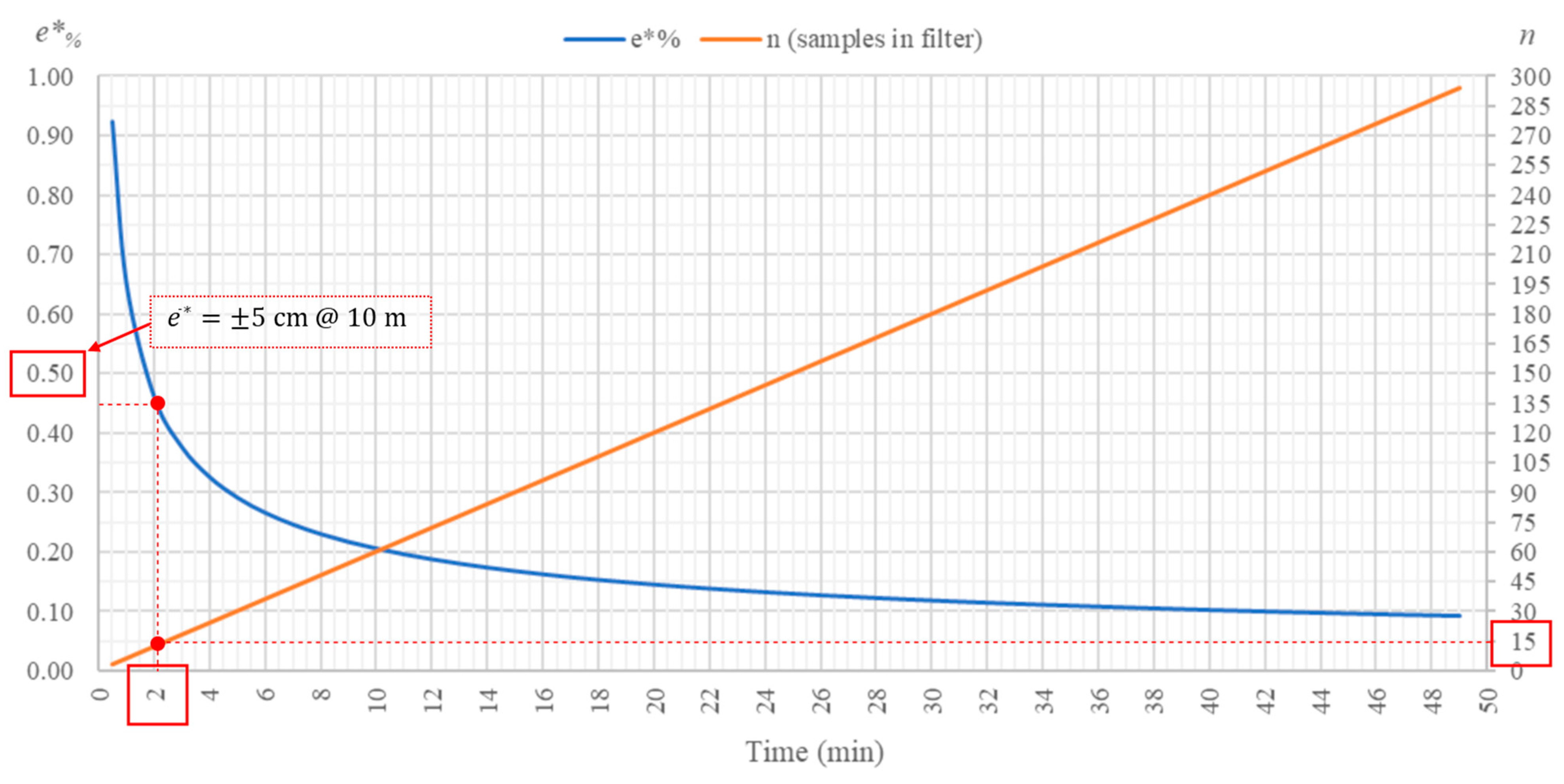

3.2.3. Filtering RTK Position

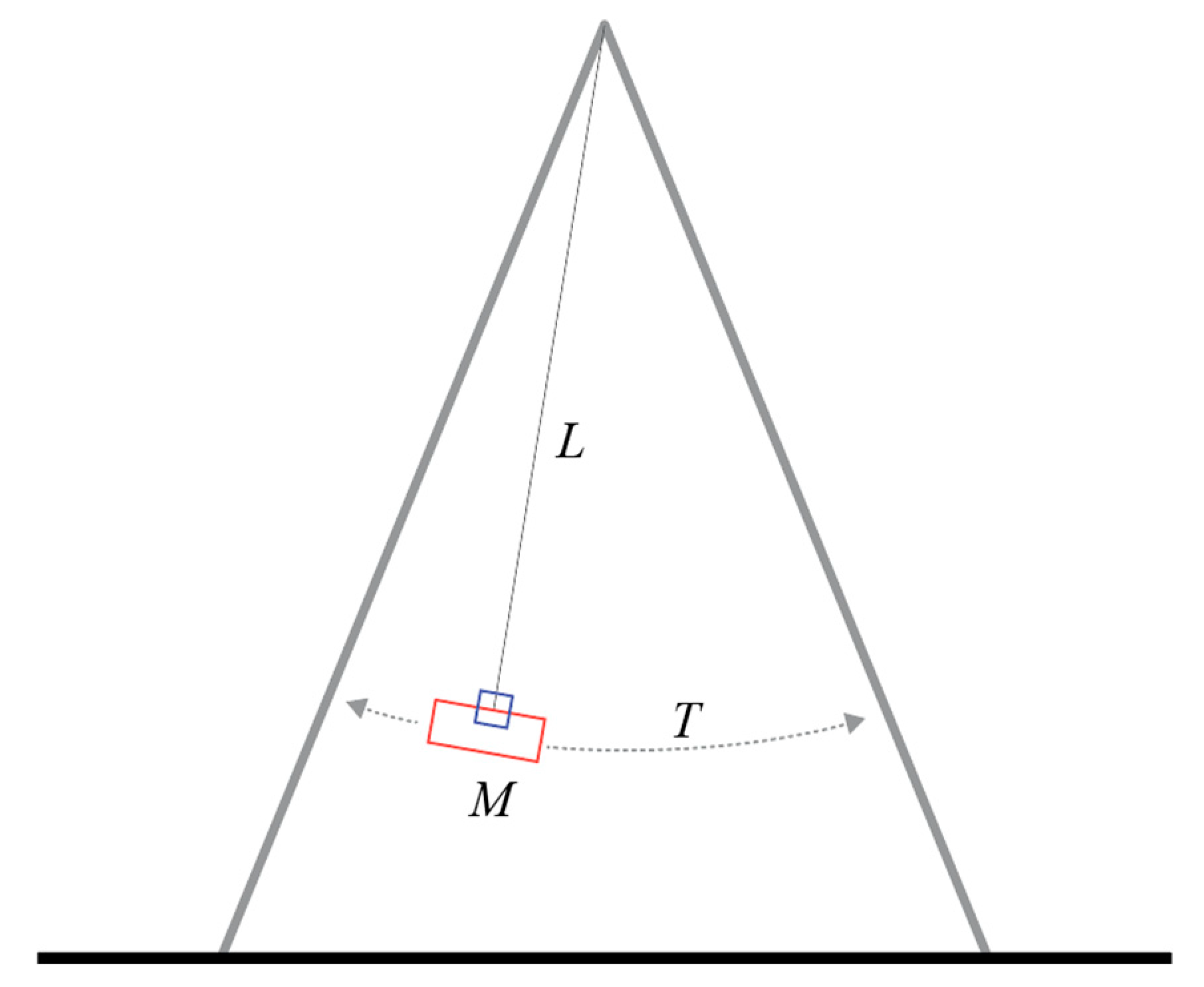

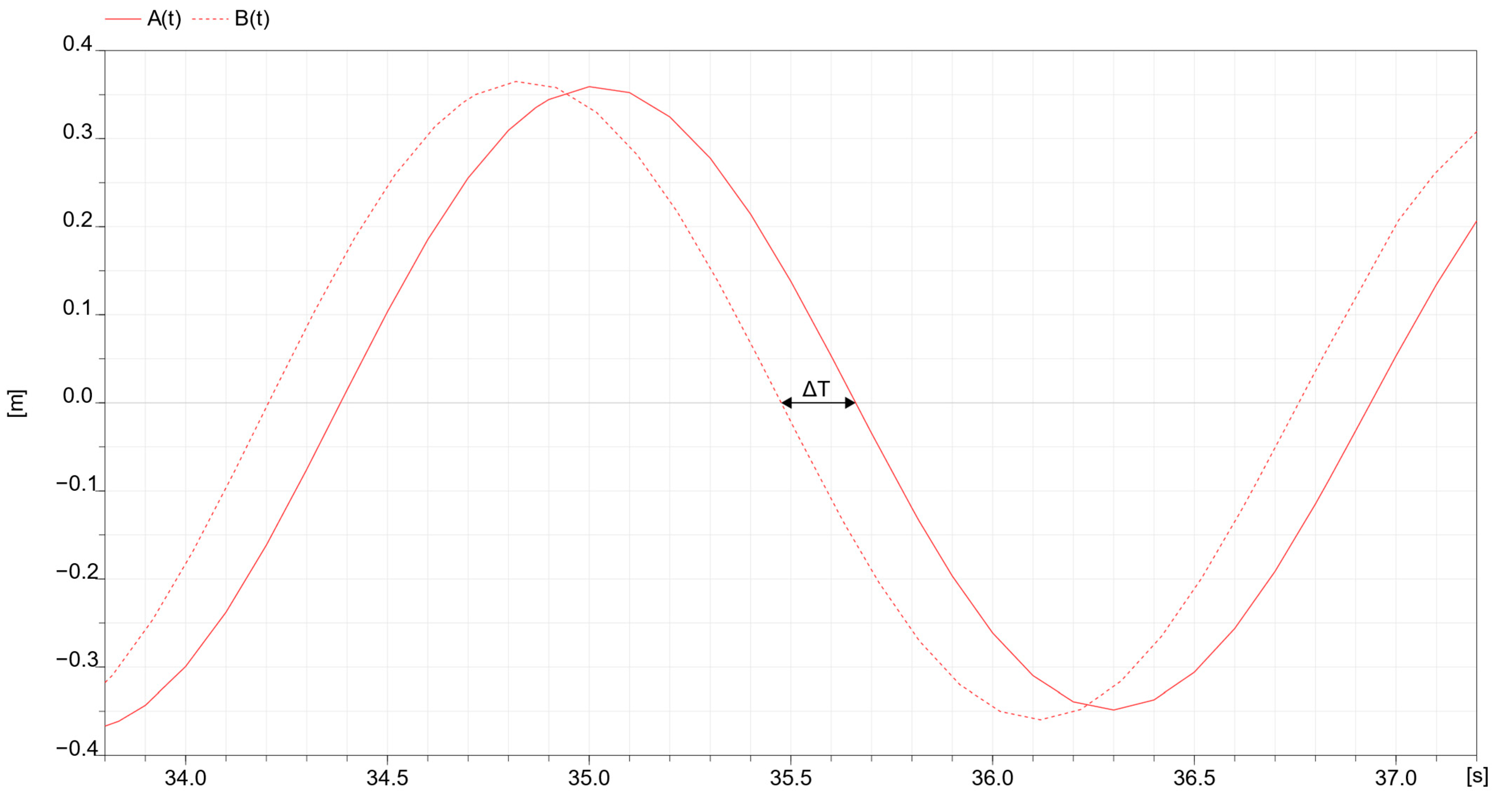

3.2.4. Latency Time Compensation

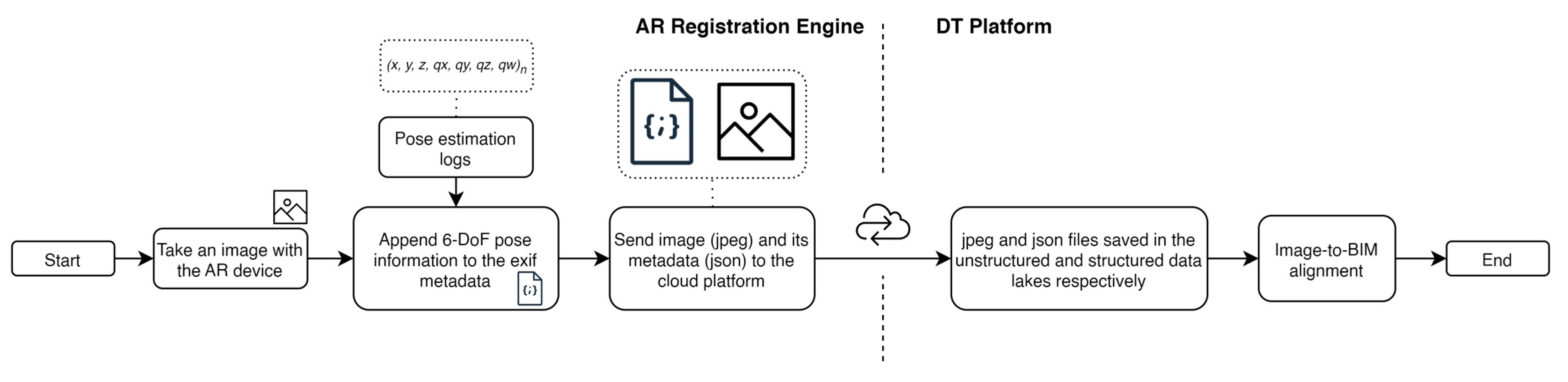

3.3. Image Registration

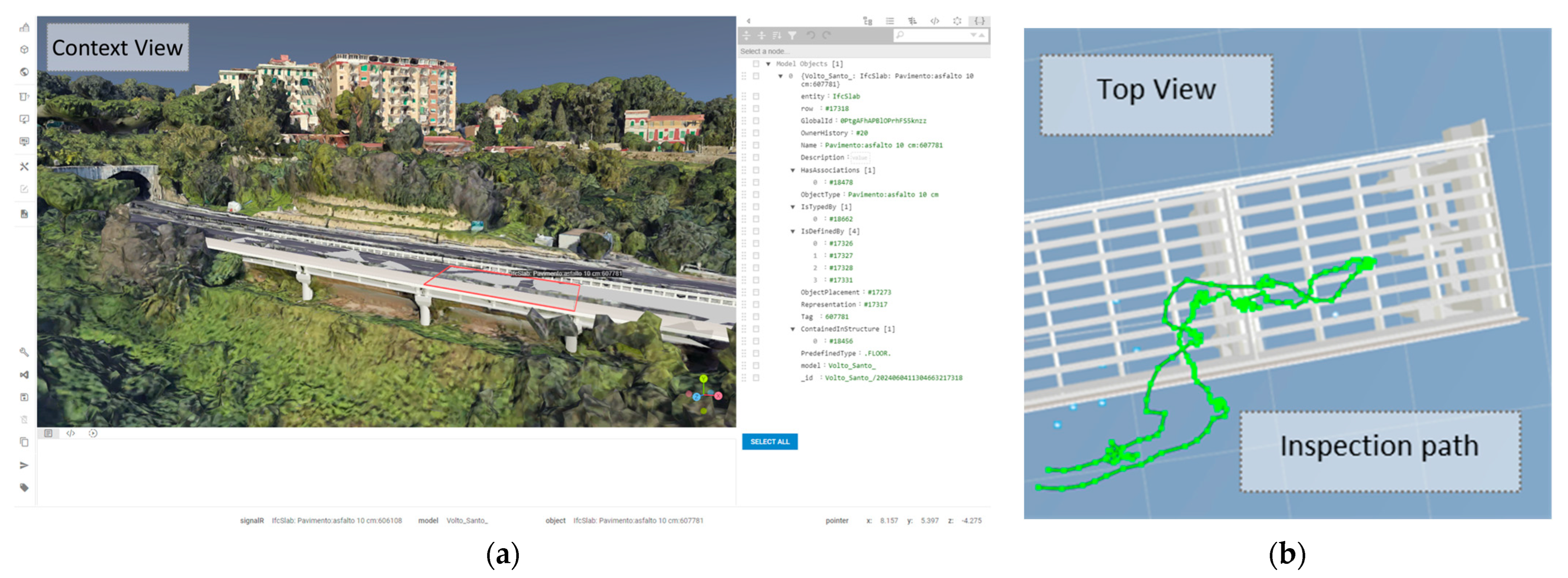

4. Experiments and Results

5. Discussion

- High-accuracy AR registration with centimeter-level positioning error () and sub-decimal orientation error ();

- No dependency on manual registration processes (in both directions, i.e., model-to-field and field-to-model) and no need to resort to external infrastructure such as markers;

- Robust and seamless functionality in urban-canyon scenarios;

- No drift issues in open environments.

- An automatic on-site procedure to update the geographical position of the virtual models on the platform through the AR interface. This would address the ground motion issue that causes misalignment between the real and virtual entities.

- Semantic extraction (e.g., presence of damage) from the registered images. This would further enrich the models and enable on-site AR visualization of how damage evolves over time.

- Further enhancement of pose estimation accuracy and latency compensation by validating the system under a wider range of conditions.

- Additional on-site testing to demonstrate the scalability of the system to other types of infrastructure assets (e.g., road pavement) and systems of infrastructures.

- The implementation of a multi-user AR visualization to foster collaboration during complex infrastructure inspection activities.

- Direct comparative usability testing against the existing Asset Management System.

- A comparison with an alternative AR solution in terms of the usability and accuracy of the systems.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Eurostat Energy, Transport and Environment Statistics 2019 Edition. Available online: https://ec.europa.eu/eurostat/documents/3217494/10165279/KS-DK-19-001-EN-N.pdf/76651a29-b817-eed4-f9f2-92bf692e1ed9?t=1571144140000 (accessed on 5 October 2023).

- Autori, R.F.; Adinolfi, F.; Peleggi, G.; Vecchio, Y.; Pauselli, G. DIVULGA Elaboration on Eurostat and World Economic Forum Data. Available online: https://www.divulgastudi.it/wp-content/uploads/2022/04/Focus_Infrastruttura-Italia.pdf (accessed on 5 October 2023).

- Frangopol, D.; Tsompanakis, Y. Maintenance and Safety of Aging Infrastructure; Frangopol, D., Tsompanakis, Y., Eds.; CRC Press: Boca Raton, FL, USA, 2014; ISBN 9780429220586.

- World Economic Forum the Global Competitiveness Report 2019. Available online: https://www3.weforum.org/docs/WEF_TheGlobalCompetitivenessReport2019.pdf (accessed on 5 October 2023).

- World Economic Forum the Global Competitiveness Report Special Edition 2020. Available online: https://www3.weforum.org/docs/WEF_TheGlobalCompetitivenessReport2020.pdf (accessed on 5 October 2023).

- Matos, J.C.; Nicoletti, V.; Kralovanec, J.; Sousa, H.S.; Gara, F.; Moravcik, M.; Morais, M.J. Comparison of Condition Rating Systems for Bridges in Three European Countries. Appl. Sci. 2023, 13, 12343.

- Lin, J.J.; Ibrahim, A.; Sarwade, S.; Golparvar-Fard, M. Bridge Inspection with Aerial Robots: Automating the Entire Pipeline of Visual Data Capture, 3D Mapping, Defect Detection, Analysis, and Reporting. J. Comput. Civ. Eng. 2021, 35, 4020064.

- Zhang, Z.; Zhu, L. A Review on Unmanned Aerial Vehicle Remote Sensing: Platforms, Sensors, Data Processing Methods, and Applications. Drones 2023, 7, 398.

- Lapointe, J.-F.; Allili, M.S.; Belliveau, L.; Hebbache, L.; Amirkhani, D.; Sekkati, H. AI-AR for Bridge Inspection by Drone. In Virtual, Augmented and Mixed Reality: Applications in Education, Aviation and Industry, Proceedings of the 14th International Conference, VAMR 2022, Held as Part of the 24th HCI International Conference, HCII 2022, Virtual, 26 June–1 July 2022; Chen, J.Y.C., Fragomeni, G., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2022; Volume 13318, pp. 302–313.

- Catbas, F.N.; Luleci, F.; Zakaria, M.; Bagci, U.; LaViola, J.J.; Cruz-Neira, C.; Reiners, D. Extended Reality (XR) for Condition Assessment of Civil Engineering Structures: A Literature Review. Sensors 2022, 22, 9560.

- Mousavi, V.; Rashidi, M.; Mohammadi, M.; Samali, B. Evolution of Digital Twin Frameworks in Bridge Management: Review and Future Directions. Remote Sens. 2024, 16, 1887.

- Lu, Q.; Xie, X.; Heaton, J.; Parlikad, A.K.; Schooling, J. From BIM Towards Digital Twin: Strategy and Future Development for Smart Asset Management. In Service Oriented, Holonic and Multi-Agent Manufacturing Systems for Industry of the Future; Borangiu, T., Trentesaux, D., Leitão, P., Boggino, A.G., Botti, V., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 392–404.

- Callcut, M.; Cerceau Agliozzo, J.-P.; Varga, L.; McMillan, L. Digital Twins in Civil Infrastructure Systems. Sustainability 2021, 13, 11549.

- Naderi, H.; Shojaei, A. Digital Twinning of Civil Infrastructures: Current State of Model Architectures, Interoperability Solutions, and Future Prospects. Autom. Constr. 2023, 149, 104785.

- Vignali, V.; Acerra, E.M.; Lantieri, C.; Di Vincenzo, F.; Piacentini, G.; Pancaldi, S. Building Information Modelling (BIM) Application for an Existing Road Infrastructure. Autom. Constr. 2021, 128, 103752.

- Sacks, R.; Ma, L.; Yosef, R.; Borrmann, A.; Daum, S.; Kattel, U. Semantic Enrichment for Building Information Modeling: Procedure for Compiling Inference Rules and Operators for Complex Geometry. J. Comput. Civ. Eng. 2017, 31, 04017062.

- Chen, J.; Lu, W.; Lou, J. Automatic Concrete Defect Detection and Reconstruction by Aligning Aerial Images onto Semantic-rich Building Information Model. Comput.-Aided Civ. Infrastruct. Eng. 2023, 38, 1079–1098.

- Eastman, C.; Teicholz, P.; Sacks, R.; Liston, K. BIM Handbook: A Guide to Building Information Modeling for Owners, Managers, Designers, Engineers and Contractors; Wiley Publishing: Hoboken, NJ, USA, 2008; ISBN 0470185287.

- Luiten, B.; Bohms, M.; Alsem, D.; O’Keeffe, A. Asset Information Management Using Linked Data for the Life-Cycle of Roads. In Life Cycle Analysis and Assessment in Civil Engineering: Towards an Integrated Vision; CRC Press: London, UK, 2018.

- Ramonell, C.; Chacón, R.; Posada, H. Knowledge Graph-Based Data Integration System for Digital Twins of Built Assets. Autom. Constr. 2023, 156, 105109.

- Tang, S.; Shelden, D.R.; Eastman, C.M.; Pishdad-Bozorgi, P.; Gao, X. A Review of Building Information Modeling (BIM) and the Internet of Things (IoT) Devices Integration: Present Status and Future Trends. Autom. Constr. 2019, 101, 127–139.

- Grieves, M.W. Digital Twins: Past, Present, and Future. In The Digital Twin; Crespi, N., Drobot, A.T., Minerva, R., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 97–121. ISBN 978-3-031-21343-4.

- Broo, D.G.; Schooling, J. Digital Twins in Infrastructure: Definitions, Current Practices, Challenges and Strategies. Int. J. Constr. Manag. 2023, 23, 1254–1263.

- Yin, Y.; Zheng, P.; Li, C.; Wang, L. A State-of-the-Art Survey on Augmented Reality-Assisted Digital Twin for Futuristic Human-Centric Industry Transformation. Robot Comput. Integr. Manuf. 2023, 81, 102515.

- Bavelos, A.C.; Anastasiou, E.; Dimitropoulos, N.; Oikonomou, G.; Makris, S. Augmented Reality-based Method for Road Maintenance Operators in Human–Robot Collaborative Interventions. Comput.-Aided Civ. Infrastruct. Eng. 2024, 39, 1077–1095.

- Messi, L.; Spegni, F.; Vaccarini, M.; Corneli, A.; Binni, L. Seamless Augmented Reality Registration Supporting Facility Management Operations in Unprepared Environments. J. Inf. Technol. Constr. 2024, 29, 1156–1180.

- Salman, A.; Ahmad, W. Implementation of Augmented Reality and Mixed Reality Applications for Smart Facilities Management: A Systematic Review. Smart Sustain. Built Environ. 2023, 14, 254–275.

- Behzadan, A.H.; Dong, S.; Kamat, V.R. Augmented Reality Visualization: A Review of Civil Infrastructure System Applications. Adv. Eng. Inform. 2015, 29, 252–267.

- Xu, J.; Moreu, F. A Review of Augmented Reality Applications in Civil Infrastructure During the 4th Industrial Revolution. Front. Built Environ. 2021, 7, 640732.

- Messi, L.; Spegni, F.; Vaccarini, M.; Corneli, A.; Binni, L. Seamless Indoor/Outdoor Marker-Less Augmented Reality Registration Supporting Facility Management Operations. In Proceedings of the 23rd International Conference on Construction Applications of Virtual Reality, Florence, Italy, 13–16 November 2023; Firenze University Press: Florence, Italy, 2023; pp. 109–120, ISBN 9791221502572.

- Binni, L.; Spegni, F.; Vaccarini, M.; Naticchia, B.; Messi, L. Enhanced Civil Infrastructure Inspections Using Augmented Reality: An Automatic Registration System. In Proceedings of the 24th International Conference on Construction Applications of Virtual Reality (CONVR 2024), Sydney, Australia, 4–6 November 2024.

- Justo, A.; Soilán, M.; Sánchez-Rodríguez, A.; Riveiro, B. Scan-to-BIM for the Infrastructure Domain: Generation of IFC-Compliant Models of Road Infrastructure Assets and Semantics Using 3D Point Cloud Data. Autom. Constr. 2021, 127, 103703.

- Aktan, A.E.; Bartoli, I.; Karaman, S.G. Technology Leveraging for Infrastructure Asset Management: Challenges and Opportunities. Front. Built Environ. 2019, 5, 61.

- Stöckner, M.; Brow, I.; Zwernemann, P.; Hajdin, R.; Schiffmann, F.; Blumenfeld, T.; König, M.; Liu, L.; Gavin, K. Exchange and Exploitation of Data from Asset Management Systems Using Vendor Free Format; CEDR Transnational Road Research Programme: Brussels, Belgium, 2022.

- Biswas, S.; Wright, A.; Kokot, D.; Petrovic, J.; Barateiro, J.; Marecos, V.; Adesiyun, A.; Bhusari, S. CoDEC Final Project Report; CEDR Transnational Road Research Programme: Brussels, Belgium, 2021.

- Ismail, N.; Ismail, A.; Rahmat, R. An Overview of Expert Systems in Pavement Management. Eur. J. Sci. Res. 2009, 30, 1216–1450.

- Hagedorn, P.; Liu, L.; König, M.; Hajdin, R.; Blumenfeld, T.; Stöckner, M.; Billmaier, M.; Grossauer, K.; Gavin, K. BIM-Enabled Infrastructure Asset Management Using Information Containers and Semantic Web. J. Comput. Civ. Eng. 2023, 37, 4022041.

- Bizer, C.; Heath, T.; Berners-Lee, T. Linked Data—The Story So Far. In Semantic Services, Interoperability and Web Applications: Emerging Concepts; Association for Computing Machinery: New York, NY, USA, 2011; pp. 205–227.

- Vilgertshofer, S.; Mafipour, M.; Borrmann, A.; Martens, J.; Blut, T.; Becker, R.; Blankenbach, J.; Göbels, A.; Beetz, J.; Celik, F.; et al. TwinGen: Advanced Technologies to Automatically Generate Digital Twins for Operation and Maintenance of Existing Bridges. In ECPPM 2022—eWork and eBusiness in Architecture, Engineering and Construction 2022, Proceedings of the 14th European Conference on Product and Process Modelling (ECPPM 2022), Trondheim, Norway, 14–16 September 2022; CRC Press: London, UK, 2023; pp. 213–220. ISBN 9781003354222.

- Pfitzner, F.; Braun, A.; Borrmann, A. From Data to Knowledge: Construction Process Analysis through Continuous Image Capturing, Object Detection, and Knowledge Graph Creation. Autom. Constr. 2024, 164, 105451.

- Lei, X.; Wu, P.; Zhu, J.; Wang, J. Ontology-Based Information Integration: A State-of-the-Art Review in Road Asset Management. Arch. Comput. Methods Eng. 2022, 29, 2601–2619.

- Zhu, J.; Wu, P.; Lei, X. IFC-Graph for Facilitating Building Information Access and Query. Autom. Constr. 2023, 148, 104778.

- Liu, L.; Zeng, N.; Liu, Y.; Han, D.; König, M. Multi-Domain Data Integration and Management for Enhancing Service-Oriented Digital Twin for Infrastructure Operation and Maintenance. Dev. Built Environ. 2024, 18, 100475.

- Shahzad, M.; Shafiq, M.T.; Douglas, D.; Kassem, M. Digital Twins in Built Environments: An Investigation of the Characteristics, Applications, and Challenges. Buildings 2022, 12, 120.

- Mascareñas, D.D.; Ballor, J.P.; McClain, O.L.; Mellor, M.A.; Shen, C.-Y.; Bleck, B.; Morales, J.; Yeong, L.-M.R.; Narushof, B.; Shelton, P.; et al. Augmented Reality for next Generation Infrastructure Inspections. Struct. Health Monit. 2021, 20, 1957–1979.

- Çelik, F.; Herbers, P.; König, M. Image Segmentation on Concrete Damage for Augmented Reality Supported Inspection Tasks. In Advances in Information Technology in Civil and Building Engineering, Proceedings of the International Conference on Computing in Civil and Building Engineering (ICCCBE 2022), Cape Town, South Africa, 26–28 October 2022; Skatulla, S., Beushausen, H., Eds.; Lecture Notes in Civil Engineering; Springer: Cham, Switzerland, 2024; Volume 357, pp. 237–252.

- Nguyen, D.-C.; Nguyen, T.-Q.; Jin, R.; Jeon, C.-H.; Shim, C.-S. BIM-Based Mixed-Reality Application for Bridge Inspection and Maintenance. Constr. Innov. 2022, 22, 487–503.

- Riedlinger, U.; Klein, F.; Hill, M.; Neumann, S.; Holst, R.; Oppermann, L.; Bahlau, S. Digital Support for Bridge Inspectors through Mixed Reality BIM Data Visualizations. In Proceedings of the ASCE International Conference on Computing in Civil Engineering 2021, Orlando, FL, USA, 12–14 September 2021; American Society of Civil Engineers: Reston, VA, USA, 2022; pp. 1359–1366.

- Song, J.; Li, W.; Duan, C.; Zhu, X. R2-GVIO: A Robust, Real-Time GNSS-Visual-Inertial State Estimator in Urban Challenging Environments. IEEE Internet Things J. 2024, 11, 22269–22282.

- Song, J.; Li, W.; Duan, C.; Wang, L.; Fan, Y.; Zhu, X. An Optimization-Based Indoor–Outdoor Seamless Positioning Method Integrating GNSS RTK, PS, and VIO. IEEE Trans. Circuits Syst. II Express Briefs 2024, 71, 2889–2893.

- Roberts, G.W.; Evans, A.; Dodson, A.H.; Denby, B.; Cooper, S.; Hollands, R. The Use of Augmented Reality, GPS and INS for Subsurface Data Visualisation. In Proceedings of the FIG XXII International Congress, Washington, DC, USA, 19–26 April 2002.

- He, Z.; Xia, Z.; Chang, Y.; Chen, W.; Hu, J.; Wei, X. Research on Underground Pipeline Augmented Reality System Based on ARToolKit. In Proceedings of the Geoinformatics 2006: Geospatial Information Technology, Wuhan, China, 28–29 October 2006; p. 642112.

- Hansen, L.H.; Fleck, P.; Stranner, M.; Schmalstieg, D.; Arth, C. Augmented Reality for Subsurface Utility Engineering, Revisited. IEEE Trans. Vis. Comput. Graph. 2021, 27, 4119–4128.

- Zhao, S.; Chen, Y.; Farrell, J.A. High-Precision Vehicle Navigation in Urban Environments Using an MEM’s IMU and Single-Frequency GPS Receiver. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2854–2867.

- Guarese, R.L.M.; Maciel, A. Development and Usability Analysis of a Mixed Reality GPS Navigation Application for the Microsoft HoloLens. In Advances in Computer Graphics; Springer: Cham, Switzerland, 2019; Volume 11542, pp. 431–437.

- Kaizu, Y.; Choi, J. Development of a Tractor Navigation System Using Augmented Reality. Eng. Agric. Environ. Food 2012, 5, 96–101.

- vGIS Inc. Engineering-Grade AR for AEC. Available online: https://www.vgis.io/augmented-reality-bim-gis-ar-aec-civil-construction-engineering-bentley-autodesk-esri/ (accessed on 20 September 2023).

- Liu, T.; Li, B.; Chen, G.; Yang, L.; Qiao, J.; Chen, W. Tightly Coupled Integration of GNSS/UWB/VIO for Reliable and Seamless Positioning. IEEE Trans. Intell. Transp. Syst. 2024, 25, 2116–2128.

- Trimble Inc. Site Vision. Available online: https://sitevision.trimble.com/ (accessed on 20 September 2023).

- Hübner, P.; Clintworth, K.; Liu, Q.; Weinmann, M.; Wursthorn, S. Evaluation of HoloLens Tracking and Depth Sensing for Indoor Mapping Applications. Sensors 2020, 20, 1021.

- Office of Geomatics. World Geodetic System 1984 (WGS 84). Available online: https://earth-info.nga.mil/index.php?dir=wgs84&action=wgs84 (accessed on 30 March 2025).

- Wabbena, G.; Schmitz, M.; Bagge, A. PPP-RTK: Precise Point Positioning Using State-Space Representation in RTK Networks. In Proceedings of the 18th International Technical Meeting of the Satellite Division of The Institute of Navigation (ION GNSS 2005), Long Beach, CA, USA, 13–16 September 2005; pp. 2584–2594.

- Feng, Y.; Wang, J. GPS RTK Performance Characteristics and Analysis. J. Glob. Position. Syst. 2008, 7, 1–8.

- Kalafus, R.M.; Chin, G.Y. Measures of Accuracy in the Navstar/GPS: 2drms Vs. CEP. In Proceedings of the 1986 National Technical Meeting of The Institute of Navigation, Long Beach, CA, USA, 21–23 January 1986; pp. 49–54.

- Artus, M.; Koch, C. State of the Art in Damage Information Modeling for RC Bridges—A Literature Review. Adv. Eng. Inform. 2020, 46, 101171.

- Park, S.; Ryu, S.; Lim, J.; Lee, Y.-S. A Real-Time High-Speed Autonomous Driving Based on a Low-Cost RTK-GPS. J. Real Time Image Process. 2021, 18, 1321–1330.

- Güney, D.; Acar, M.; Özlüdemir, M.T.; Çelik, R.N. Investigation of Post-Earthquake Displacements in Viaducts Using Geodetic and Finite Element Methods. Nat. Hazards Earth Syst. Sci. 2010, 10, 2579–2587.

- Kopeć, A. Ground Displacement Caused by Natural Earthquakes, Determining Based on Differential Interferometry SAR and Multiple Aperture Interferometry Techniques. AIP Conf. Proc. 2020, 2209, 040006.

- Fobiri, G.; Musonda, I.; Muleya, F. Reality Capture in Construction Project Management: A Review of Opportunities and Challenges. Buildings 2022, 12, 1381.

- Assaad, R.; El-Adaway, I.H. Bridge Infrastructure Asset Management System: Comparative Computational Machine Learning Approach for Evaluating and Predicting Deck Deterioration Conditions. J. Infrastruct. Syst. 2020, 26, 4020032.

| | | | | | | | |
|---|---|---|---|---|---|---|---|
| 3.00 | 0.04 | 1.33% | 0.76 | 0.02 | 0.80% | 3.00 | 8.00 |
| 3.00 | 0.04 | 1.33% | 0.76 | 0.015 | 0.80% | 2.00 | 6.00 |
| 3.00 | 0.03 | 1.00% | 0.57 | 0.02 | 0.80% | 4.00 | 20.00 |
| 3.00 | 0.03 | 1.00% | 0.57 | 0.015 | 0.80% | 3.00 | 15.00 |
| 3.00 | 0.02 | 0.67% | 0.38 | 0.02 | 0.80% | 6.00 | −30.00 |
| 3.00 | 0.02 | 0.67% | 0.38 | 0.015 | 0.80% | 5.00 | −23.00 |
| 5.00 | 0.02 | 0.40% | 0.23 | 0.02 | 0.80% | 10.00 | −10.00 |
| 5.00 | 0.02 | 0.40% | 0.23 | 0.015 | 0.80% | 8.00 | −8.00 |
| 3.00 | 0.01 | 0.33% | 0.19 | 0.02 | 0.80% | 12.00 | −9.00 |
| 3.00 | 0.01 | 0.33% | 0.19 | 0.015 | 0.80% | 9.00 | −6.00 |
| 5.00 | 0.01 | 0.20% | 0.11 | 0.02 | 0.80% | 20.00 | −7.00 |
| 5.00 | 0.01 | 0.20% | 0.11 | 0.015 | 0.80% | 15.00 | −5.00 |
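The first four columns of the table above appear to be linked by a simple geometric relation. The third column equals the ratio of the second to the first (e.g., 0.04/3.00 = 1.33%), and the fourth equals the arctangent of that ratio in degrees (arctan(0.04/3.00) ≈ 0.76°). This matches the angular error that a lateral position error e induces on a direction computed over a baseline d. The table headers were lost, so this reading of the columns (and the metre units) is an assumption. The sketch below only reproduces the arithmetic.

```python
import math


def angular_error_deg(lateral_error_m: float, baseline_m: float) -> float:
    """Angle (deg) subtended by a lateral position error over a baseline.

    The mapping of table columns to (baseline, error) is an assumption.
    """
    return math.degrees(math.atan2(lateral_error_m, baseline_m))


# Distinct (first column, second column) pairs from the table; units assumed to be metres
ROWS = [(3.00, 0.04), (3.00, 0.03), (3.00, 0.02), (5.00, 0.02), (3.00, 0.01), (5.00, 0.01)]

if __name__ == "__main__":
    for d, e in ROWS:
        ratio_pct = 100.0 * e / d
        print(f"{d:.2f}  {e:.2f}  {ratio_pct:.2f}%  {angular_error_deg(e, d):.2f}")
```

For each (d, e) pair, the printed ratio and angle, rounded to two decimals, match the third and fourth columns of the corresponding table rows. The relation also shows that doubling the baseline roughly halves the angular error for a given position error.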

Columns Time to hAcc report the RTK data; x, y, and z are the ENU coordinates (m); and qw, qx, qy, qz give the orientation with respect to North as a quaternion.

| Time | Lat (WGS84) | Lon (WGS84) | altMSL | hAcc (mm) | x (m) | y (m) | z (m) | qw | qx | qy | qz |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 11:50:40.468 | 40.86919015 | 14.25740723 | 103.944 | 2251 | −0.08639 | 1.59419 | −0.00975 | −0.98229 | −0.08168 | 0.15519 | −0.06601 |
| 11:50:41.519 | 40.86918995 | 14.25740724 | 103.9021 | 2330 | −0.0888852 | 1.59303 | −0.0139342 | −0.98668 | −0.08762 | 0.117132 | −0.0712 |
| 11:50:42.481 | 40.86919040 | 14.25740673 | 103.6788 | 2140 | −0.1267483 | 1.59043 | 0.0672287 | −0.99566 | −0.07039 | 0.02852 | −0.05378 |
| … | | | | | | | | | | | |
| 11:51:47.513 | 40.86917075 | 14.25763883 | 110.1255 | 15 | −8.988399 | 5.804564 | −17.64563 | −0.3967 | 0.66831 | −0.04746 | −0.62749 |
| 11:51:48.549 | 40.86917061 | 14.25763865 | 110.1041 | 14 | −9.007008 | 5.803912 | −17.62254 | 0.81001 | −0.05809 | 0.580342 | 0.060939 |
| 11:51:49.616 | 40.86917043 | 14.25763830 | 110.051 | 14 | −9.011633 | 5.773602 | −17.59091 | 0.91234 | −0.07821 | 0.355993 | 0.186499 |
| … | | | | | | | | | | | |
| 11:52:55.568 | 40.86915548 | 14.25757002 | 108.7441 | 14 | −7.673061 | 4.313261 | −11.75547 | 0.94355 | −0.07955 | 0.307677 | 0.09341 |
| 11:52:56.583 | 40.86915455 | 14.25756949 | 108.6828 | 14 | −7.765261 | 4.277766 | −11.70206 | 0.95806 | −0.12031 | 0.19106 | 0.176465 |
| 11:52:57.533 | 40.86915503 | 14.25756585 | 108.5993 | 14 | −7.572274 | 4.1696 | −11.40405 | 0.97855 | −0.10247 | 0.06182 | 0.16771 |
| 11:52:58.617 | 40.86915738 | 14.25755746 | 108.723 | 2899 | −7.308829 | 4.013108 | −10.75015 | 0.86403 | −0.09861 | 0.460826 | 0.17711 |
| 11:52:59.517 | 40.86915781 | 14.25754956 | 108.4887 | 2530 | −7.057593 | 3.886707 | −10.22061 | 0.96341 | −0.11822 | 0.171201 | 0.169002 |
| 11:53:00.532 | 40.86915920 | 14.25753970 | 108.3284 | 2134 | −6.976624 | 3.655525 | −9.40127 | −0.96792 | 0.15754 | 0.022771 | −0.19442 |
| … | | | | | | | | | | | |
| 11:54:24.541 | 40.86910619 | 14.25717587 | 106.5036 | 14 | −0.0557724 | 2.656061 | 21.71217 | 0.96567 | −0.03143 | 0.238217 | 0.09872 |
| 11:54:25.502 | 40.86910791 | 14.25716441 | 106.3261 | 16 | 0.2583908 | 2.678609 | 22.42915 | 0.97779 | −0.11198 | 0.061038 | 0.166302 |
| 11:54:26.519 | 40.86910614 | 14.25715727 | 106.2735 | 14 | 0.3353666 | 2.774769 | 23.08263 | −0.97413 | 0.11486 | 0.151111 | −0.12267 |
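In the log above, the sign of the scalar part qw changes between some consecutive samples (e.g., qw = 0.97779 at 11:54:25.502 and qw = −0.97413 at 11:54:26.519). Because q and −q represent the same rotation, such a sign change does not by itself indicate a large change in orientation. A consumer of the log would therefore typically map each sample to a canonical sign before filtering or averaging orientations. The sketch below does this and measures the rotation between two samples as 2·arccos(|q1·q2|). The helper names are hypothetical. No particular axis convention is assumed for the logged quaternions, since the angle formula does not depend on one.

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # (qw, qx, qy, qz), as in the log columns


def normalize(q: Quat) -> Quat:
    """Scale q to unit length (logged values are rounded, so not exactly unit)."""
    n = math.sqrt(sum(c * c for c in q))
    w, x, y, z = (c / n for c in q)
    return (w, x, y, z)


def canonical(q: Quat) -> Quat:
    """Pick the representative of {q, -q} whose scalar part qw is non-negative."""
    w, x, y, z = normalize(q)
    return (w, x, y, z) if w >= 0.0 else (-w, -x, -y, -z)


def rotation_angle_deg(q1: Quat, q2: Quat) -> float:
    """Smallest rotation angle between two orientations, independent of quaternion sign."""
    a, b = normalize(q1), normalize(q2)
    dot = abs(sum(u * v for u, v in zip(a, b)))
    return math.degrees(2.0 * math.acos(min(1.0, dot)))  # clamp guards against rounding


if __name__ == "__main__":
    q_a = (0.97779, -0.11198, 0.061038, 0.166302)  # sample at 11:54:25.502
    q_b = (-0.97413, 0.11486, 0.151111, -0.12267)  # sample at 11:54:26.519
    print(canonical(q_b))
    print(f"{rotation_angle_deg(q_a, q_b):.1f} deg")
```

For the two samples cited above, the computed rotation is roughly 25°. Using the signed dot product without the absolute value would instead report about 335°, an artifact of the sign ambiguity rather than a real motion.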

| N° of Samples | hAcc Mean [mm] | hAcc StDev [mm] | hAcc Min [mm] | hAcc Max [mm] |
|---|---|---|---|---|
| 4289 | 2780 | 2057 | 14 | 8154 |
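The summary statistics above (number of samples, mean, standard deviation, minimum, and maximum of hAcc) can be computed from a list of hAcc samples, as in the following sketch using Python's standard `statistics` module. Only the 15 hAcc values of the excerpted log rows are available here, so the sketch does not reproduce the 4289-sample figures. The article does not state whether the reported StDev is the sample or the population standard deviation; the sample version is assumed. The `HAccSummary` and `summarize_hacc` names are hypothetical.

```python
import statistics
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class HAccSummary:
    n_samples: int
    mean_mm: float
    stdev_mm: float
    min_mm: float
    max_mm: float


def summarize_hacc(h_acc_mm: Sequence[float]) -> HAccSummary:
    """Summarize horizontal-accuracy estimates (mm) in the layout of the hAcc table."""
    if len(h_acc_mm) < 2:
        raise ValueError("at least two samples are needed for a standard deviation")
    return HAccSummary(
        n_samples=len(h_acc_mm),
        mean_mm=statistics.fmean(h_acc_mm),
        stdev_mm=statistics.stdev(h_acc_mm),  # sample standard deviation (assumption)
        min_mm=min(h_acc_mm),
        max_mm=max(h_acc_mm),
    )


# hAcc values (mm) of the excerpted rows of the RTK log, in time order
EXCERPT_HACC_MM = [2251, 2330, 2140, 15, 14, 14, 14, 14, 14, 2899, 2530, 2134, 14, 16, 14]

if __name__ == "__main__":
    s = summarize_hacc(EXCERPT_HACC_MM)
    print(f"n={s.n_samples} mean={s.mean_mm:.0f} stdev={s.stdev_mm:.0f} min={s.min_mm} max={s.max_mm}")
```

Even the excerpt shows the two regimes present in the log: samples at 14–16 mm and samples above 2 m. Together these produce a mean far above the minimum, which is also the pattern in the full dataset (mean 2780 mm, minimum 14 mm).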


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Binni, L.; Vaccarini, M.; Spegni, F.; Messi, L.; Naticchia, B. An Automatic Registration System Based on Augmented Reality to Enhance Civil Infrastructure Inspections. Buildings 2025, 15, 1146. https://doi.org/10.3390/buildings15071146
