Can Generative AI-Generated Images Effectively Support and Enhance Real-World Construction Helmet Detection?

Abstract

1. Introduction

2. Literature Review

2.1. Research Progress on Helmet Detection Using Object Detection

2.2. Object Detection Datasets for Helmet

2.3. Why This Study Was Conducted

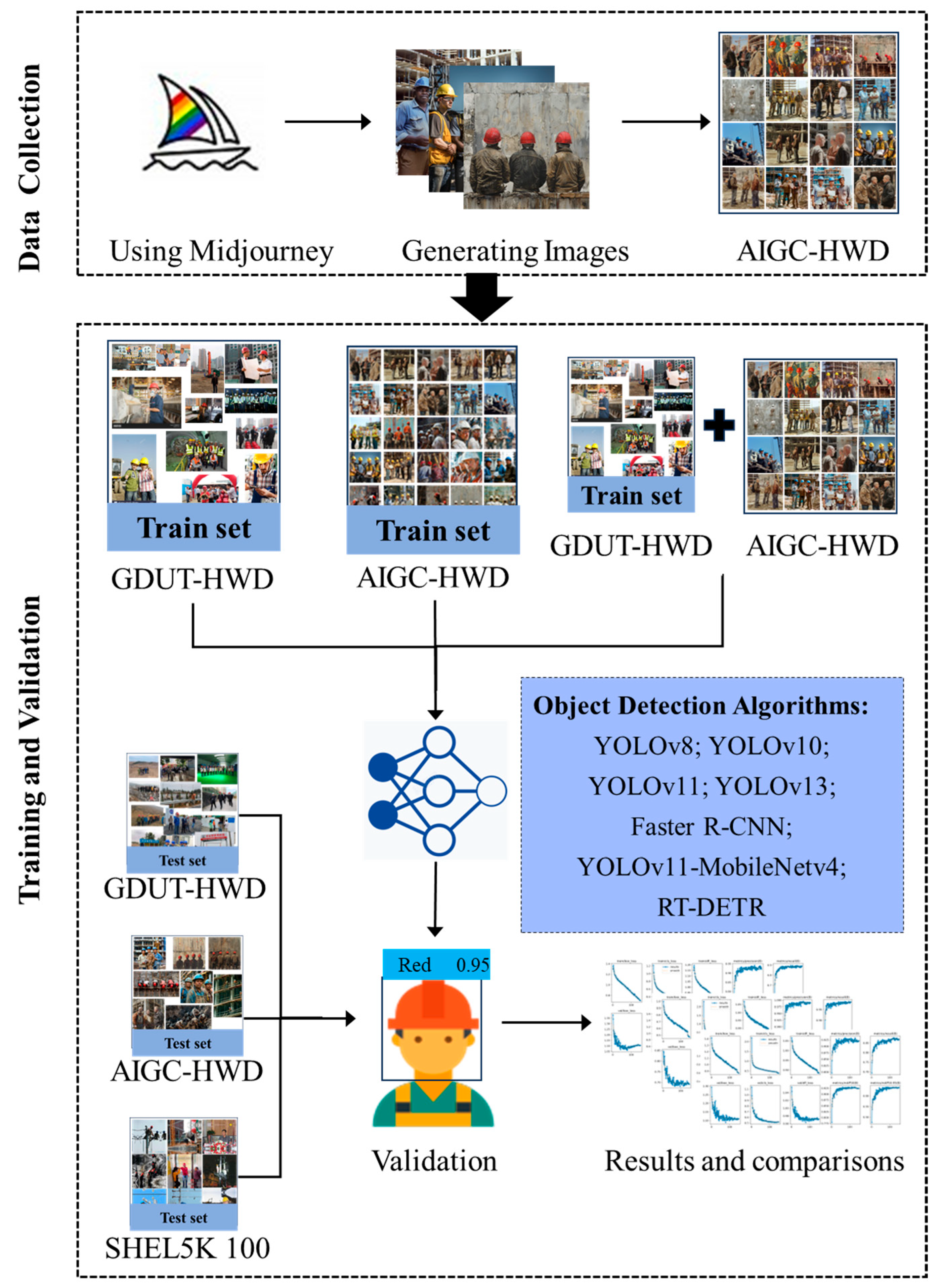

3. Methodology

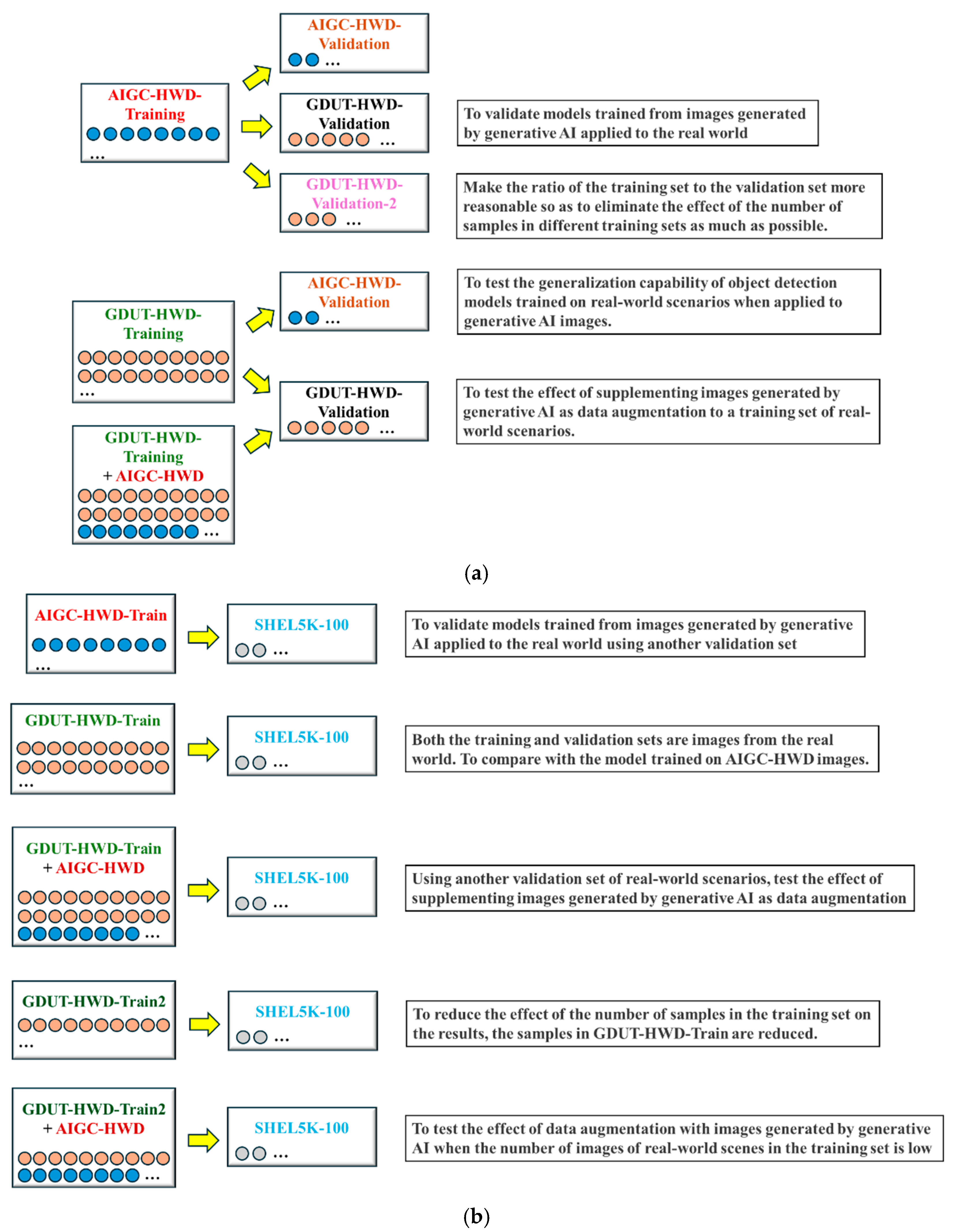

3.1. Design of Model Training and Testing Strategies

Algorithm 1. End-to-End Pipeline for the AIGC-HWD Study (corresponding to Figure 1 and Figure 2)

Input:

- G ← Midjourney generator
- D_real ← {GDUT-HWD, SHEL5K-100}
- Models ← {YOLO v8, YOLO v10, YOLO v11, YOLO v11-MobileNet v4, YOLO v13, Faster R-CNN, RT-DETR}

Output: Performance metrics (mAP@50, mAP@50:95, F1-score, AP@50 per class)

1. Build synthetic dataset
   - for each helmet_color in {red, yellow, blue, white, none}:
     - images ← G.generate(prompt = helmet_color + construction_scene)
     - images ← QualityCheck(images)
     - labels ← ManualAnnotate(images)
   - AIGC_HWD ← Split(images, labels, train=0.8, val=0.2)
2. Prepare real datasets
   - (GDUT_train, GDUT_val) ← Load(GDUT-HWD)
   - SHEL100 ← Load(SHEL5K-100)
   - (GDUT_train2, GDUT_val2) ← Subsample(GDUT_train, GDUT_val)
3. Train and evaluate
   - for each model in Models:
     - θ_A ← Train(model, AIGC_HWD.train); Eval(model, θ_A, {AIGC_HWD.val, GDUT_val, GDUT_val2})
     - θ_B ← Train(model, GDUT_train); Eval(model, θ_B, {GDUT_val, AIGC_HWD.val, SHEL100})
     - θ_C ← Train(model, GDUT_train ∪ AIGC_HWD.train); Eval(model, θ_C, {GDUT_val, SHEL100})
     - θ_D ← Train(model, GDUT_train2); Eval(model, θ_D, {SHEL100})
     - θ_E ← Train(model, GDUT_train2 ∪ AIGC_HWD.train); Eval(model, θ_E, {SHEL100})
4. Compute metrics
   - For all experiments: compute mAP@50, mAP@50:95, F1-score, and AP@50 per class
   - Store results for comparison

Return: summary of improvements using AIGC data
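The train/evaluate step of Algorithm 1 can be read as a fixed experiment matrix. The following is a minimal sketch of that matrix only, not the authors' code: the `Experiment` class and `build_plan` helper are hypothetical names, no training is performed, and the evaluation list for θ_B is assumed to contain three separate sets (GDUT_val, AIGC_HWD.val, SHEL100).

```python
from dataclasses import dataclass

# Model and dataset names follow Algorithm 1; everything else is illustrative.
MODELS = ["YOLO v8", "YOLO v10", "YOLO v11", "YOLO v11-MobileNet v4",
          "YOLO v13", "Faster R-CNN", "RT-DETR"]

# Configuration name -> (training sets, evaluation sets), as listed in step 3.
CONFIGS = {
    "A": (["AIGC_HWD.train"], ["AIGC_HWD.val", "GDUT_val", "GDUT_val2"]),
    "B": (["GDUT_train"], ["GDUT_val", "AIGC_HWD.val", "SHEL100"]),
    "C": (["GDUT_train", "AIGC_HWD.train"], ["GDUT_val", "SHEL100"]),
    "D": (["GDUT_train2"], ["SHEL100"]),
    "E": (["GDUT_train2", "AIGC_HWD.train"], ["SHEL100"]),
}


@dataclass(frozen=True)
class Experiment:
    """One evaluation run: a model trained under one configuration, tested on one set."""
    model: str
    config: str
    train_sets: tuple
    eval_set: str


def build_plan():
    """Enumerate one Experiment per (model, configuration, evaluation set)."""
    plan = []
    for model in MODELS:
        for name, (train_sets, eval_sets) in CONFIGS.items():
            for eval_set in eval_sets:
                plan.append(Experiment(model, name, tuple(train_sets), eval_set))
    return plan


plan = build_plan()
training_runs = len(MODELS) * len(CONFIGS)
print(f"{training_runs} training runs, {len(plan)} evaluation runs")
```

Under these assumptions, each of the seven models is trained five times and evaluated on ten (training set, test set) pairings.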

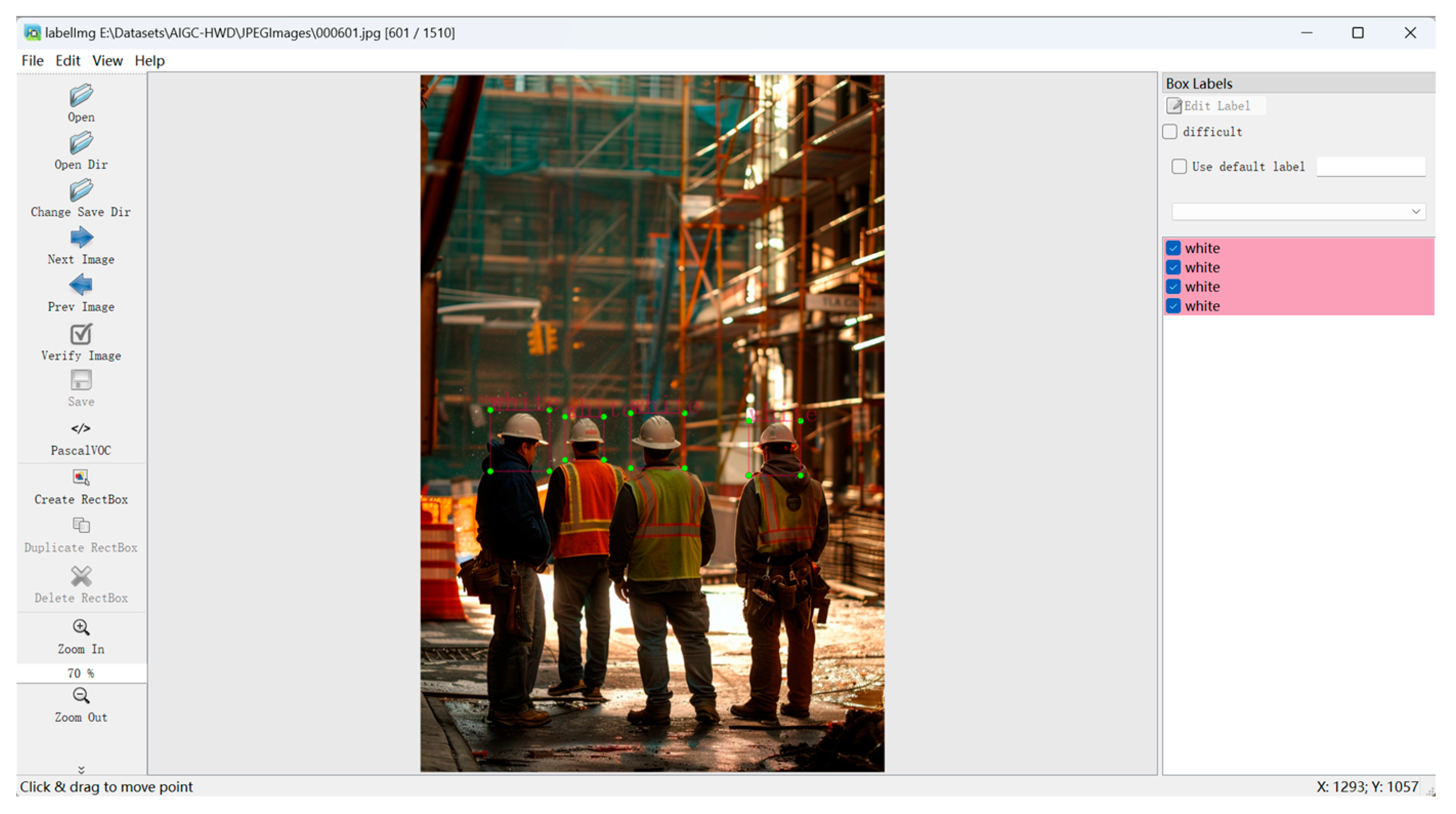

3.2. AIGC-HWD Dataset

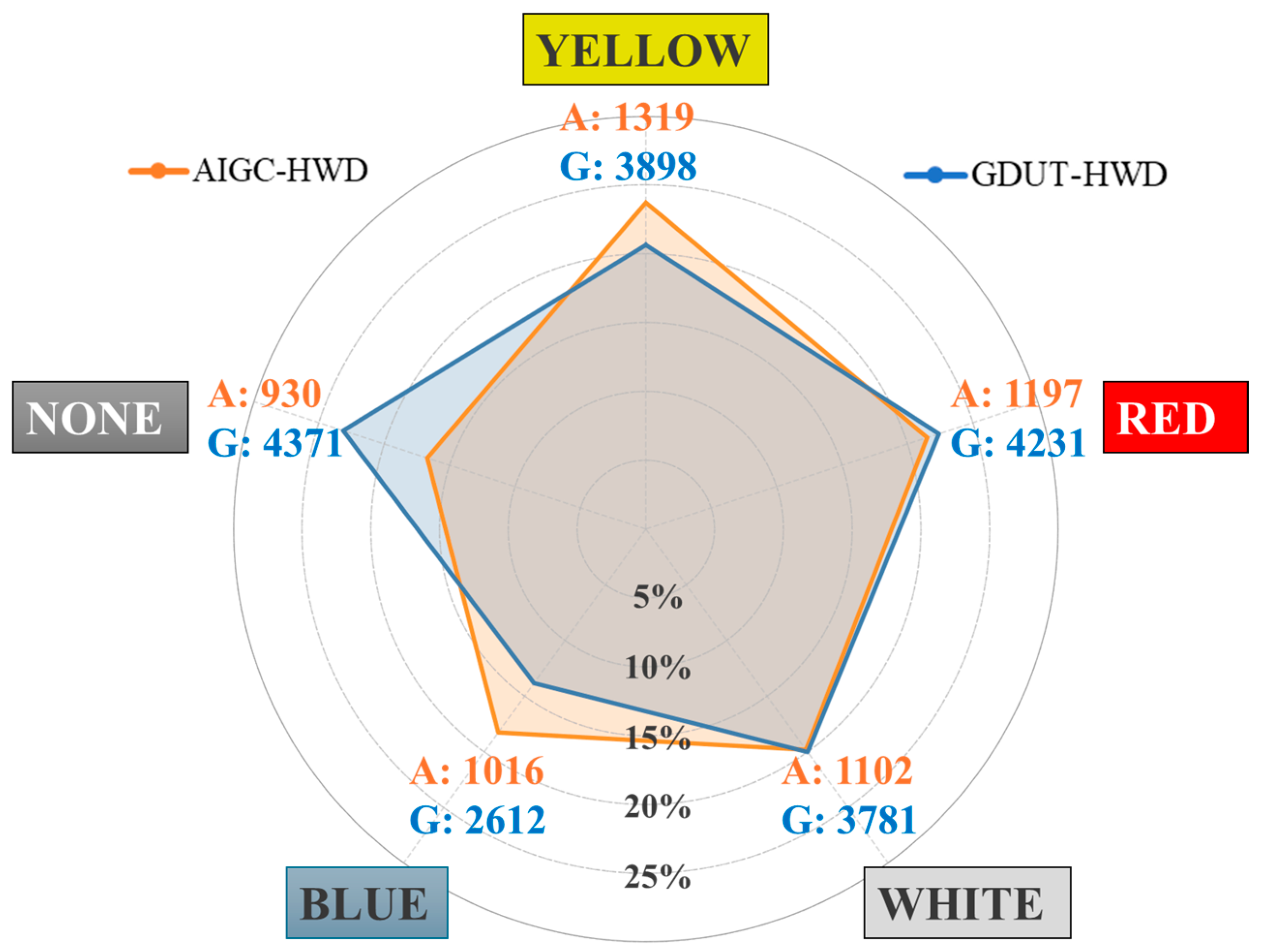

3.2.1. Datasets Overview and Comparison

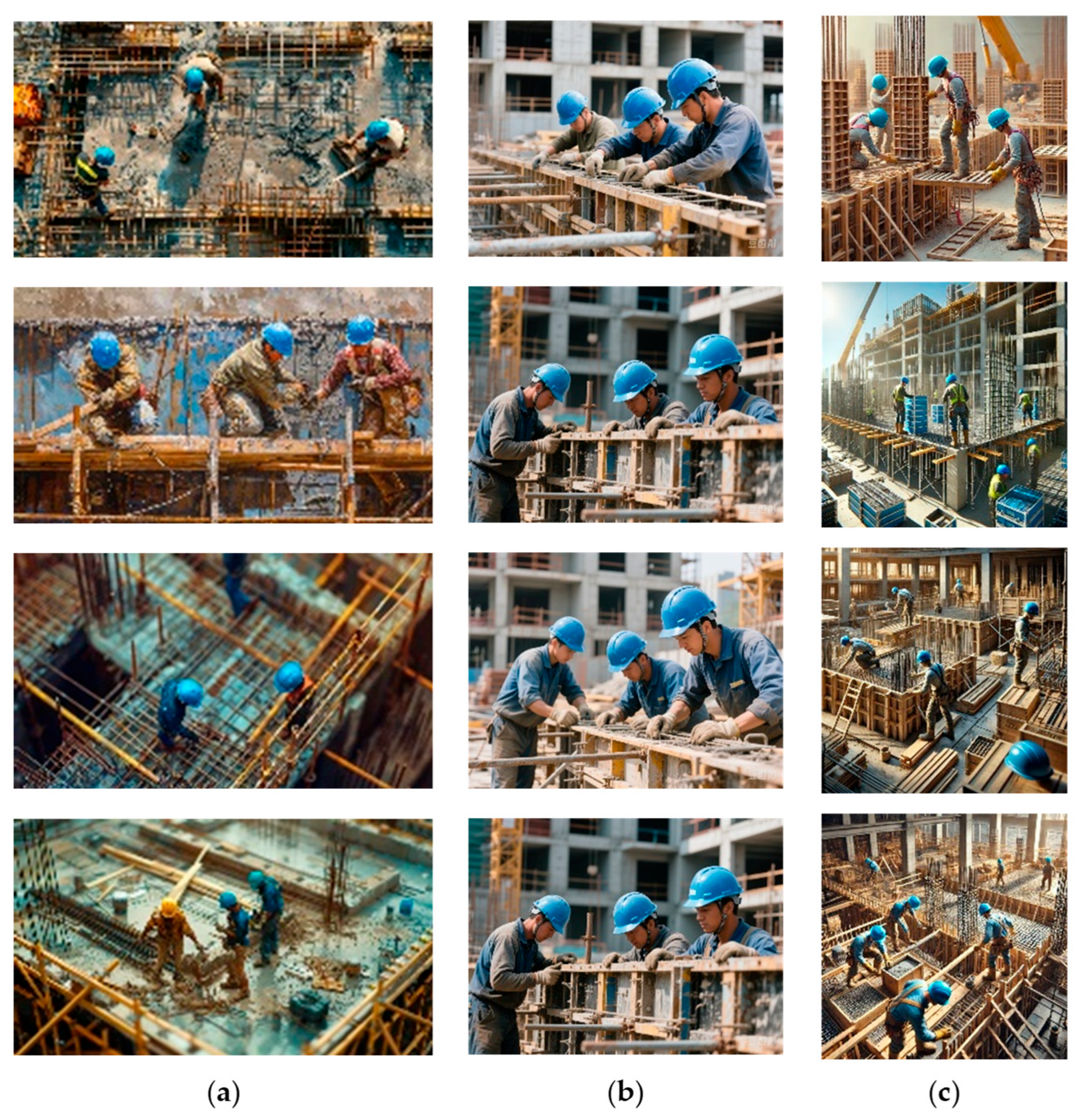

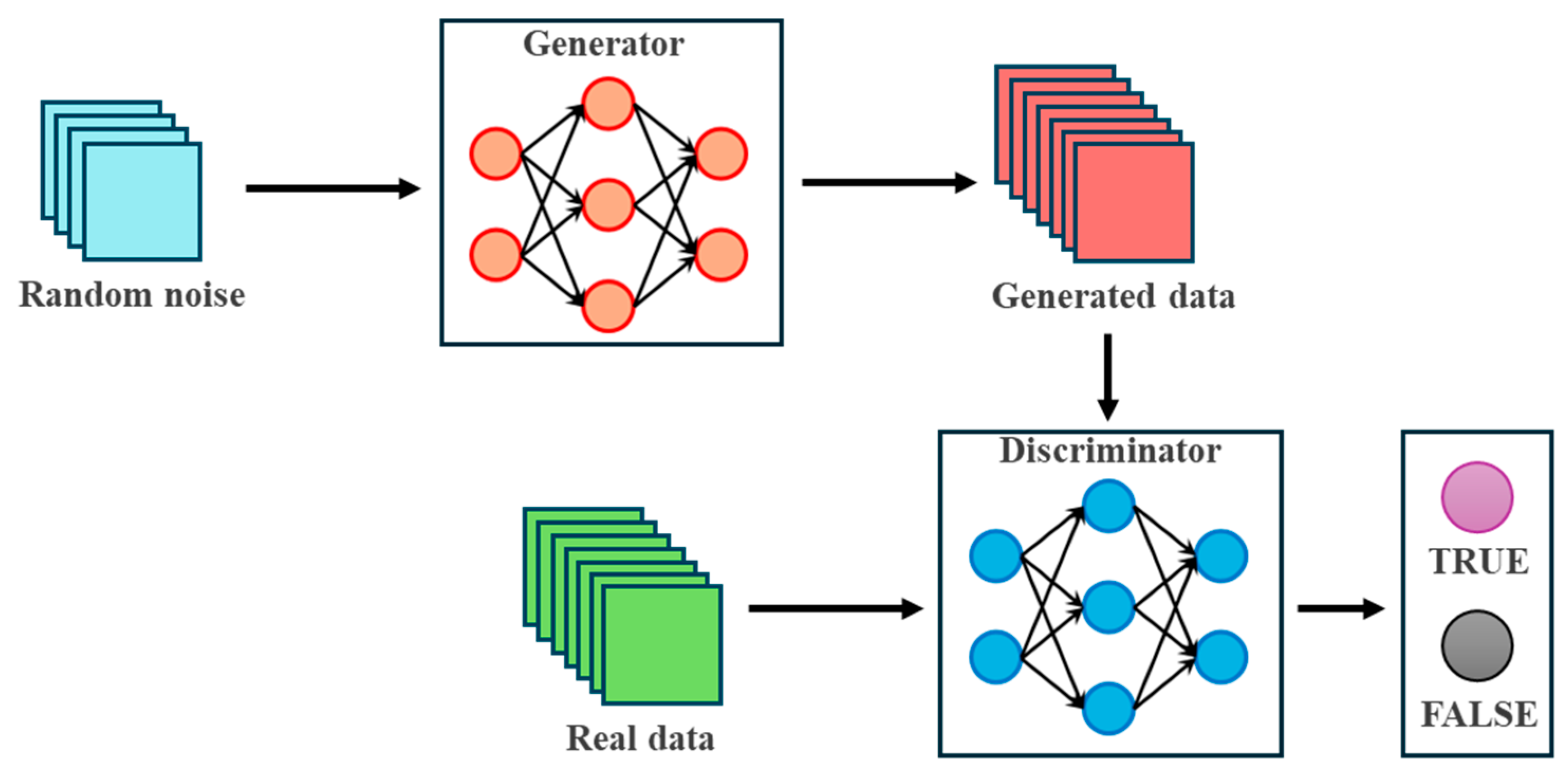

3.2.2. Image Generation Method

3.2.3. Annotation and Statistical Details

3.3. Selection and Description of Object Detection Algorithms

4. Experimental Results and Analysis

4.1. Definition of Experimental Evaluation Metrics
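The metrics reported in this study (mAP@50, mAP@50:95, F1-score) build on two standard quantities: the intersection over union (IoU) between a predicted and a ground-truth box, and precision/recall counts at a given IoU threshold. The sketch below shows those building blocks under the usual object-detection conventions; the function names and the `(x1, y1, x2, y2)` box format are assumptions for illustration and are not taken from the paper.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# mAP@50 counts a detection as correct at IoU >= 0.50; mAP@50:95 averages AP
# over the ten IoU thresholds 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]

overlap = iou((0, 0, 10, 10), (5, 0, 15, 10))
print(f"IoU = {overlap:.3f}, counts as a match at 0.50: {overlap >= 0.50}")
print(f"F1 = {f1_score(tp=8, fp=2, fn=2):.2f}")
```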

4.2. Results and Comparisons

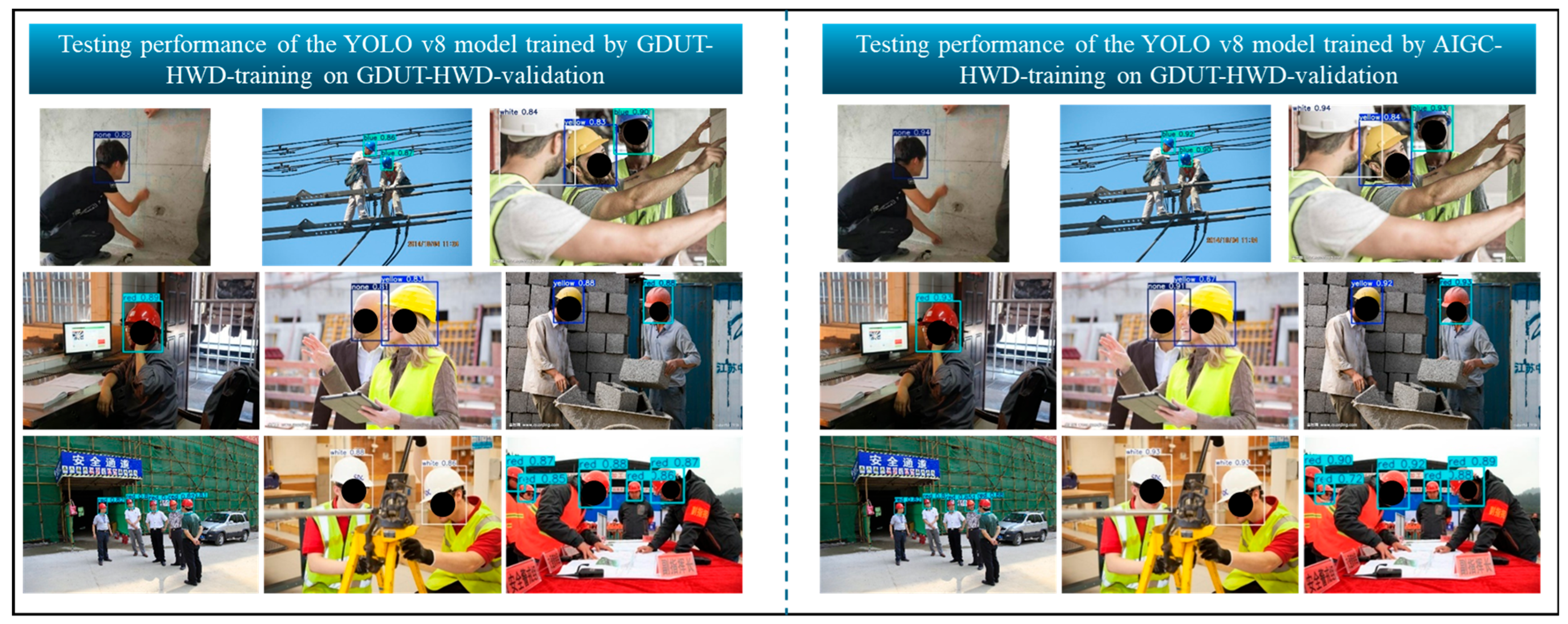

4.2.1. Generalization of AIGC-HWD-Trained Models to Real Scenes (GDUT-HWD as Validation Set)

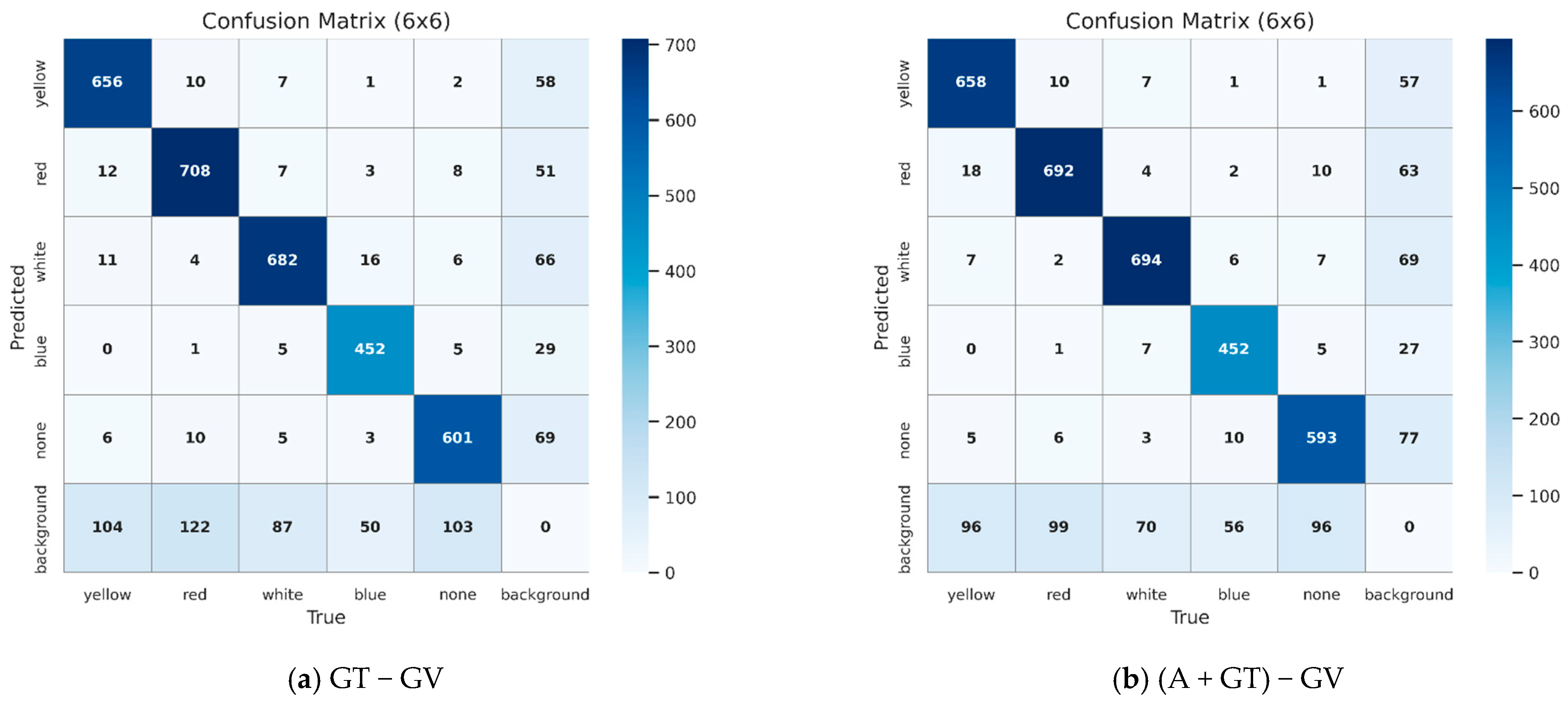

4.2.2. Performance via AIGC Data Augmentation (GDUT-HWD as Training Set)

4.2.3. Generalization of GDUT-HWD-Trained Models to AI-Generated Scenes (AIGC-HWD as Validation Set)

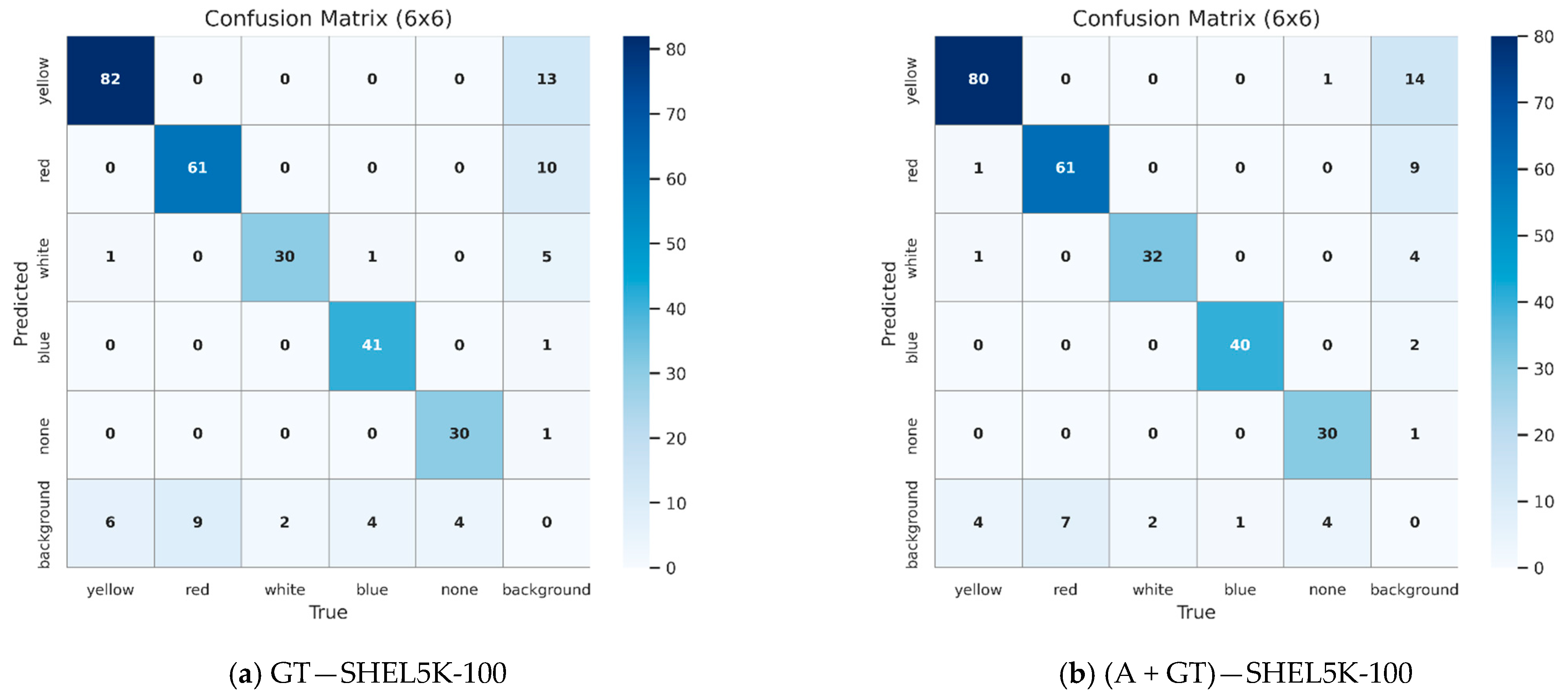

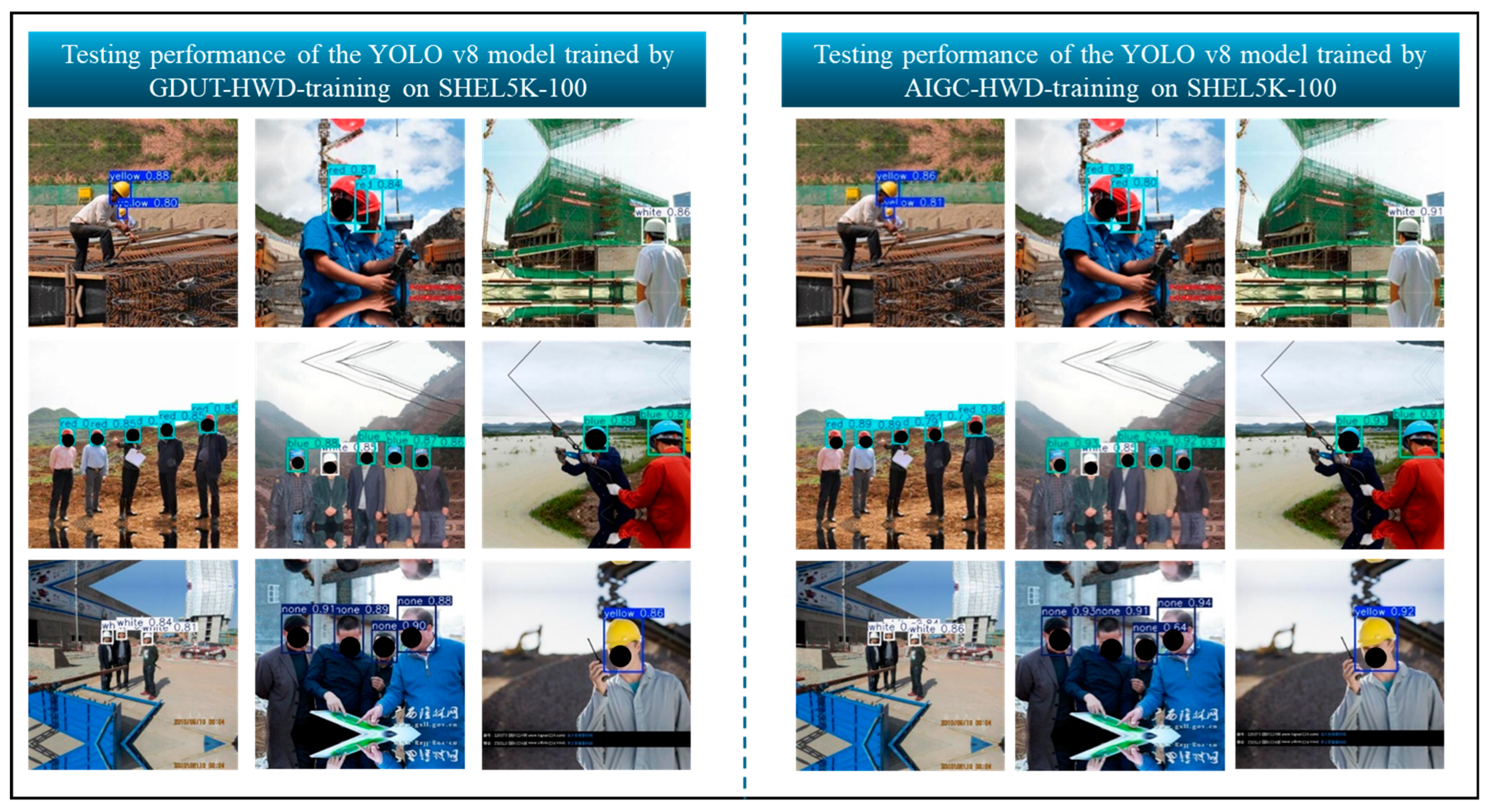

4.2.4. Cross-Dataset Validation

4.2.5. Summary

5. Discussion

5.1. Contributions to the Body of Knowledge

5.2. Limitations and Possible Future Research Directions

5.3. Interpretation of Performance Variations

5.4. Ethical and Practical Considerations of Using Synthetic Data

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Li, J.; Zhao, X.; Zhou, G.; Zhang, M. Standardized Use Inspection of Workers’ Personal Protective Equipment Based on Deep Learning. Saf. Sci. 2022, 150, 105689.

2. Konda, S.; Tiesman, H.M.; Reichard, A.A. Fatal Traumatic Brain Injuries in the Construction Industry, 2003–2010. Am. J. Ind. Med. 2016, 59, 212–220.

3. Team, S.I. The 5 Levels of the Hierarchy of Controls Explained; Safety International, LLC: Chesterfield, MO, USA, 2022.

4. GB/T 11651-2008; Code of practice for selection of personal protective equipment. Standardization Administration of the People’s Republic of China: Beijing, China, 2008.

5. 1926.100; Head Protection. Occupational Safety and Health Administration, U.S. Department of Labor: Washington, DC, USA, 1972. Available online: https://www.osha.gov/laws-regs/regulations/standardnumber/1926/1926.100 (accessed on 9 May 2023).

6. Li, J.; Miao, Q.; Zou, Z.; Gao, H.; Zhang, L.; Li, Z.; Wang, N. A Review of Computer Vision-Based Monitoring Approaches for Construction Workers’ Work-Related Behaviors. IEEE Access 2024, 12, 7134–7155.

7. Fang, Q.; Li, H.; Luo, X.; Ding, L.; Luo, H.; Rose, T.M.; An, W. Detecting Non-Hardhat-Use by a Deep Learning Method from Far-Field Surveillance Videos. Autom. Constr. 2018, 85, 1–9.

8. Li, Y.; Wei, H.; Han, Z.; Huang, J.; Wang, W. Deep Learning-Based Safety Helmet Detection in Engineering Management Based on Convolutional Neural Networks. Adv. Civ. Eng. 2020, 2020, 1–10.

9. Wu, J.; Cai, N.; Chen, W.; Wang, H.; Wang, G. Automatic Detection of Hardhats Worn by Construction Personnel: A Deep Learning Approach and Benchmark Dataset. Autom. Constr. 2019, 106, 102894.

10. Nath, N.D.; Behzadan, A.H.; Paal, S.G. Deep Learning for Site Safety: Real-Time Detection of Personal Protective Equipment. Autom. Constr. 2020, 112, 103085.

11. Wang, L.; Xie, L.; Yang, P.; Deng, Q.; Du, S.; Xu, L. Hardhat-Wearing Detection Based on a Lightweight Convolutional Neural Network with Multi-Scale Features and a Top-Down Module. Sensors 2020, 20, 1868.

12. Chen, S.; Demachi, K. Towards On-Site Hazards Identification of Improper Use of Personal Protective Equipment Using Deep Learning-Based Geometric Relationships and Hierarchical Scene Graph. Autom. Constr. 2021, 125, 103619.

13. Liu, Z.; Cai, N.; Ouyang, W.; Zhang, C.; Tian, N.; Wang, H. CA-CentripetalNet: A Novel Anchor-Free Deep Learning Framework for Hardhat Wearing Detection. Signal Image Video Process. 2023, 17, 4067–4075.

14. Wei, L.; Liu, P.; Ren, H.; Xiao, D. Research on Helmet Wearing Detection Method Based on Deep Learning. Sci. Rep. 2024, 14, 7010.

15. Jiao, X.; Li, C.; Zhang, X.; Fan, J.; Cai, Z.; Zhou, Z.; Wang, Y. Detection Method for Safety Helmet Wearing on Construction Sites Based on UAV Images and YOLO v8. Buildings 2025, 15, 354.

16. Zhang, Y.; Lin, C.; Chen, G. Efficient Helmet Detection Based on Deep Learning and Pruning Methods. J. Electron. Imaging 2025, 34, 023006.

17. Zhang, Y.; Huang, S.; Qin, J.; Li, X.; Zhang, Z.; Fan, Q.; Tan, Q. Detection of Helmet Use among Construction Workers via Helmet-Head Region Matching and State Tracking. Autom. Constr. 2025, 171, 105987.

18. Njvisionpower. Safety-Helmet-Wearing-Dataset 2025. Available online: https://github.com/njvisionpower/Safety-Helmet-Wearing-Dataset (accessed on 21 October 2025).

19. Otgonbold, M.-E.; Gochoo, M.; Alnajjar, F.; Ali, L.; Tan, T.-H.; Hsieh, J.-W.; Chen, P.-Y. SHEL5K: An Extended Dataset and Benchmarking for Safety Helmet Detection. Sensors 2022, 22, 2315.

20. Lee, J.-Y.; Choi, W.-S.; Choi, S.-H. Verification and Performance Comparison of CNN-Based Algorithms for Two-Step Helmet-Wearing Detection. Expert Syst. Appl. 2023, 225, 120096.

21. Li, B.; Liu, Y.; Wang, X. Gradient Harmonized Single-Stage Detector. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence and Thirty-First Innovative Applications of Artificial Intelligence Conference and Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, Honolulu, HI, USA, 27 January 2019; AAAI Press: Honolulu, HI, USA; Volume 33, pp. 8577–8584.

22. Horvath, B. Synthetic Data for Deep Learning. Quant. Financ. 2022, 22, 423–425.

23. Lališ, A.; Socha, V.; Křemen, P.; Vittek, P.; Socha, L.; Kraus, J. Generating Synthetic Aviation Safety Data to Resample or Establish New Datasets. Saf. Sci. 2018, 106, 154–161.

24. Trigka, M.; Dritsas, E. A Comprehensive Survey of Machine Learning Techniques and Models for Object Detection. Sensors 2025, 25, 214.

25. Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-Based Learning Applied to Document Recognition. Proc. IEEE 1998, 86, 2278–2324.

26. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

27. Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

28. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2016, arXiv:1506.01497.

29. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLO v4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

30. Hou, T.; Leng, C.; Wang, J.; Pei, Z.; Peng, J.; Cheng, I.; Basu, A. MFEL-YOLO for Small Object Detection in UAV Aerial Images. Expert Syst. Appl. 2025, 291, 128459.

31. Zhang, X.; Chen, X.; Ding, Y.; Zhang, Y.; Wang, Z.; Shi, J.; Johansson, N.; Huang, X. Smart Real-Time Evaluation of Tunnel Fire Risk and Evacuation Safety via Computer Vision. Saf. Sci. 2024, 177, 106563.

32. Ammour, N.; Alhichri, H.; Bazi, Y.; Benjdira, B.; Alajlan, N.; Zuair, M. Deep Learning Approach for Car Detection in UAV Imagery. Remote Sens. 2017, 9, 312.

33. Zheng, Z.; Zhao, X.; Zhao, P.; Qi, F.; Wang, N. CNN-Based Statistics and Location Estimation of Missing Components in Routine Inspection of Historic Buildings. J. Cult. Herit. 2019, 38, 221–230.

34. Zhang, Y.; Yuen, K.-V. Bolt Damage Identification Based on Orientation-Aware Center Point Estimation Network. Struct. Health Monit. 2021, 21, 147592172110042.

35. Sharma, H.; Kanwal, N. Intelligent Video-Based Fire Detection: A Novel Dataset and Real-Time Multi-Stage Classification Approach. Expert Syst. Appl. 2025, 271, 126655.

36. Dávila-Soberón, S.; Morales-Díaz, A.; Castelán, M. A Novel Image Dataset for Detecting and Classifying Mobility Aid Users. Expert Syst. Appl. 2025, 293, 128697.

37. Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

38. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009.

39. UDTIRI. Available online: https://www.kaggle.com/datasets/jiahangli617/udtiri (accessed on 21 June 2025).

40. Duan, R.; Deng, H.; Tian, M.; Deng, Y.; Lin, J. SODA: A Large-Scale Open Site Object Detection Dataset for Deep Learning in Construction. Autom. Constr. 2022, 142, 104499.

41. Liu, C.; Zhang, W.; Xu, W.; Lu, B.; Li, W.; Zhao, X. Substation Inspection Safety Risk Identification Based on Synthetic Data and Spatiotemporal Action Detection. Sensors 2025, 25, 2720.

42. Pham, H.T.T.L.; Han, S. Generating Realistic Training Images from Synthetic Data for Excavator Pose Estimation. Autom. Constr. 2024, 167, 105718.

43. Messer, U. Co-Creating Art with Generative Artificial Intelligence: Implications for Artworks and Artists. Comput. Hum. Behav. Artif. Hum. 2024, 2, 100056.

44. Liu, S.; Chen, J.; Feng, Y.; Xie, Z.; Pan, T.; Xie, J. Generative Artificial Intelligence and Data Augmentation for Prognostic and Health Management: Taxonomy, Progress, and Prospects. Expert Syst. Appl. 2024, 255, 124511.

45. Aladağ, H. Assessing the Accuracy of ChatGPT Use for Risk Management in Construction Projects. Sustainability 2023, 15, 16071.

46. Cai, J.; Yuan, Y.; Sui, X.; Lin, Y.; Zhuang, K.; Xu, Y.; Zhang, Q.; Ukrainczyk, N.; Xie, T. Chatting about ChatGPT: How Does ChatGPT 4.0 Perform on the Understanding and Design of Cementitious Composite? Constr. Build. Mater. 2024, 425, 135965.

47. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-Time Object Detection. arXiv 2024, arXiv:2304.08069.

| Study | Dataset Type/Name | Algorithm | Key Contributions | Limitations/Gaps |
|---|---|---|---|---|
| Fang et al. [7] | Self-built, single-class dataset | Faster R-CNN | Early use of deep learning for non-helmet detection | Only detects “no helmet”; non-public dataset |
| Li et al. [8] | Self-built, Internet + site images | MobileNet-SSD | Lightweight CNN for helmet detection | Only detects “helmet”; non-public dataset |
| Wu et al. [9] | Open access, GDUT-HWD | SSD + RPA | Benchmark dataset for multiple colors and method for small targets | Imbalanced color classes |
| Nath et al. [10] | Open access, Pictor v3 | YOLO v3 | Three PPE detection strategies; real-time PPE detection framework | No color labeling |
| Wang et al. [11] | Self-built | Improved MobileNet | Top-down module | Non-public dataset |
| Chen et al. [12] | Extended Pictor v3 | YOLO v3 + pose estimation | Detects multiple PPE items, including hard hat, mask, safety glasses, and safety belt | High-performance but complex pipeline |
| Liu et al. [13] | Open access, GDUT-HWD | CA-CentripetalNet | Novel anchor-free deep learning framework | Imbalanced color classes |
| Wei et al. [14] | Open access, SHWD | Improved YOLO v5s | BiFEL-YOLO v5s with higher accuracy | Focused on binary detection only |
| Jiao et al. [15] | Self-built UAV images | YOLO v8 | High accuracy on aerial helmet detection | Focused on binary detection only |
| Zhang et al. [16] | SHWD, GDUT-HWD | Improved YOLO v8 | High accuracy on small targets | No improvement in dataset diversity |
| Zhang et al. [17] | Extended SHWD | YOLO v9 + pose estimation | Combines pose estimation for verification | High-performance but complex pipeline |
| Lee et al. [20] | Hard Hat Workers (open access), Safety Helmet Detection (open access) | YOLO-EfficientNet | Distinguishes between safety helmets and ordinary hats | Slow detection speed |

| Dataset | Source | Categories | Number of Images | Annotation Type |
|---|---|---|---|---|
| SHWD [18] | Public | Person, Hat | 7581 | Bounding box (head region) |
| Pictor v3 [10] | Public | Worker, hat, vest/Worker, worker with hat, worker with vest, worker with hat and vest | ~1500 | Bounding box (head region and full body) |
| GDUT-HWD [9] | Public | Red, White, Yellow, Blue, None | 3174 | Bounding box (head region) |
| SHEL5K [19] | Public | Helmet, head, person with helmet, person with no helmet, head with helmet, face | 5000 | Bounding box (head region and full body) |
| Fang et al. [7] | Self-built, non-public | No Hat | ~81,000 | Bounding box (head region) |
| Li et al. [8] | Self-built, non-public | Helmet | 3261 | Bounding box (head region) |
| Wang et al. [11] | Self-built, non-public | Hard hat, no hard hat | 7064 | Bounding box (head region) |
| Jiao et al. [15] | Self-built, non-public | Person, helmet | 1584 | Bounding box (head region and full body) |

| Dataset | Data Source | Core Function | Number of Images | Annotation Categories |
|---|---|---|---|---|
| AIGC-HWD | Generated by Midjourney | Main experimental dataset; data augmentation supplement | 1510 (1208 for training, 302 for validation) | Red/White/Blue/Yellow helmets, No helmet (5 categories) |
| GDUT-HWD | Real construction site scenes | Baseline model training for comparison | 3174 (2539 for training, 635 for validation) | Red/White/Blue/Yellow helmets, No helmet (5 categories) |
| GDUT-HWD-training-2 | Subset of GDUT-HWD training set | Control sample size variable | 994 | Same as GDUT-HWD |
| GDUT-HWD-validation-2 | Subset of GDUT-HWD validation set | Control sample size variable | 300 | Same as GDUT-HWD |
| SHEL5K-100 | Randomly selected from SHEL5K | Cross-validation | 100 | Adapted to 5-category label mapping |
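The training and validation counts reported for AIGC-HWD and GDUT-HWD are consistent with an 80/20 split. The short check below reproduces them; the rounding rule (training share rounded down) and the `split_counts` helper are assumptions made for this sketch, and only the AIGC-HWD ratio is stated explicitly in Algorithm 1.

```python
def split_counts(total_images, train_percent=80):
    """Return (training, validation) image counts, rounding the training share down."""
    train = total_images * train_percent // 100  # integer arithmetic avoids float rounding
    return train, total_images - train


for name, total in {"AIGC-HWD": 1510, "GDUT-HWD": 3174}.items():
    train, val = split_counts(total)
    print(f"{name}: {train} training, {val} validation")
```

The two GDUT-HWD subsets (994 and 300 images) do not follow this ratio; they are sized to control the sample-size variable, as the table notes.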

| Prompt | Generated Images |
|---|---|
| Three construction workers wearing yellow helmets; excavator work in progress; helmet color clear; photo; richly detailed and realistic. |  |
| Building construction site; three workers wearing blue helmets working on supporting formwork; Clear colors of helmets; More detailed; more realistic; realistic details; rich and reasonable details. |  |
| Construction site; six laborers with red helmets on their heads; at work; photo. |  |
| Four construction workers wearing white helmets; working on pit support; helmet color clear; photo; rich in real details. |  |
| Three people mixing cement; not wearing helmets; photographic; realistic; richly detailed and lifelike. |  |
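The prompts above share a loose pattern: a subject with a helmet color (or no helmet), a site activity, and realism cues. A minimal sketch of composing such prompts programmatically is shown below. The template, the `build_prompt` helper, and its parameters are hypothetical; the study's prompts vary in wording, and no image-generation service is called here.

```python
# None stands for the "no helmet" class; the colors follow the five annotation categories.
HELMET_COLORS = ["red", "yellow", "blue", "white", None]


def build_prompt(color, workers, activity):
    """Compose a semicolon-separated prompt in the style of the examples above."""
    if color is None:
        subject = f"{workers} construction workers not wearing helmets"
    else:
        subject = f"{workers} construction workers wearing {color} helmets"
    parts = [subject, activity]
    if color is not None:
        parts.append("helmet color clear")  # emphasize color, as several prompts do
    parts += ["photo", "richly detailed and realistic"]
    return "; ".join(parts) + "."


for color in HELMET_COLORS:
    print(build_prompt(color, "Three", "excavator work in progress"))
```

With `color="yellow"`, this template reproduces the first prompt in the table verbatim; the other rows use freer phrasing that a fixed template would not capture.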

| Datasets | AIGC-HWD Training | AIGC-HWD Validation | GDUT-HWD Training | GDUT-HWD Validation |
|---|---|---|---|---|
| Number of images | 1208 | 302 | 2539 | 635 |
| Number of instances | 1026 | 293 | 3164 | 734 |
| | 927 | 270 | 3442 | 789 |
| | 925 | 177 | 2996 | 785 |
| | 825 | 191 | 2120 | 492 |
| | 733 | 197 | 3677 | 694 |
| Total instances | 4436 | 1128 | 15,399 | 3494 |
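As a quick consistency check on the statistics above, the per-class instance counts can be summed and divided by the image counts. This is an illustrative sketch only: the per-class rows are kept in table order without class names, and the variable names are introduced here for the example.

```python
# Per-class instance counts in the row order of the table (class names omitted).
INSTANCES = {
    "AIGC-HWD training": [1026, 927, 925, 825, 733],
    "AIGC-HWD validation": [293, 270, 177, 191, 197],
    "GDUT-HWD training": [3164, 3442, 2996, 2120, 3677],
    "GDUT-HWD validation": [734, 789, 785, 492, 694],
}
IMAGES = {
    "AIGC-HWD training": 1208,
    "AIGC-HWD validation": 302,
    "GDUT-HWD training": 2539,
    "GDUT-HWD validation": 635,
}

totals = {name: sum(counts) for name, counts in INSTANCES.items()}
density = {name: totals[name] / IMAGES[name] for name in totals}

for name in totals:
    print(f"{name}: {totals[name]} instances, {density[name]:.2f} per image")
```

The sums match the last row of the table, and the ratio suggests that GDUT-HWD images contain noticeably more annotated heads per image than AIGC-HWD images.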

| Training set (T) | Validation set (V) | Evaluation Metrics | YOLO v8 | YOLO v10 | Faster R-CNN | YOLO v11 | YOLO v11-MobileNet v4 | YOLO v13 | RT-DETR |
|---|---|---|---|---|---|---|---|---|---|
| AT | AV | mAP@50 | 0.989 | 0.985 | 0.976 | 0.990 | 0.977 | 0.981 | 0.988 |
| | | mAP@50:95 | 0.839 | 0.827 | - | 0.844 | 0.773 | 0.79 | 0.831 |
| | | F1-score | 0.99 | 0.97 | 0.894 | 0.98 | 0.96 | 0.97 | 0.98 |
| | | AP@50: yellow | 0.989 | 0.987 | 0.975 | 0.986 | 0.985 | 0.981 | 0.987 |
| | | AP@50: red | 0.983 | 0.984 | 0.976 | 0.984 | 0.980 | 0.982 | 0.983 |
| | | AP@50: white | 0.983 | 0.969 | 0.949 | 0.990 | 0.946 | 0.96 | 0.992 |
| | | AP@50: blue | 0.995 | 0.995 | 0.999 | 0.995 | 0.995 | 0.994 | 0.995 |
| | | AP@50: none | 0.994 | 0.989 | 0.982 | 0.993 | 0.978 | 0.987 | 0.985 |
| AT | GV | mAP@50 | 0.701 | 0.633 | 0.627 | 0.636 | 0.553 | 0.527 | 0.583 |
| | | mAP@50:95 | 0.418 | 0.382 | - | 0.378 | 0.309 | 0.294 | 0.337 |
| | | F1-score | 0.66 | 0.6 | 0.6 | 0.61 | 0.56 | 0.54 | 0.63 |
| | | AP@50: yellow | 0.758 | 0.707 | 0.663 | 0.707 | 0.654 | 0.611 | 0.683 |
| | | AP@50: red | 0.747 | 0.698 | 0.668 | 0.664 | 0.622 | 0.582 | 0.556 |
| | | AP@50: white | 0.712 | 0.654 | 0.637 | 0.696 | 0.554 | 0.52 | 0.649 |
| | | AP@50: blue | 0.768 | 0.717 | 0.754 | 0.741 | 0.692 | 0.673 | 0.714 |
| | | AP@50: none | 0.508 | 0.388 | 0.415 | 0.370 | 0.243 | 0.247 | 0.313 |
| AT | GV2 | mAP@50 | 0.711 | 0.649 | 0.651 | 0.657 | 0.587 | 0.565 | 0.612 |
| | | mAP@50:95 | 0.443 | 0.404 | - | 0.404 | 0.342 | 0.328 | 0.366 |
| | | F1-score | 0.67 | 0.61 | 0.612 | 0.63 | 0.59 | 0.57 | 0.66 |
| | | AP@50: yellow | 0.819 | 0.765 | 0.706 | 0.745 | 0.699 | 0.666 | 0.758 |
| | | AP@50: red | 0.839 | 0.797 | 0.753 | 0.755 | 0.724 | 0.674 | 0.640 |
| | | AP@50: white | 0.697 | 0.621 | 0.628 | 0.684 | 0.569 | 0.558 | 0.646 |
| | | AP@50: blue | 0.780 | 0.744 | 0.771 | 0.767 | 0.738 | 0.717 | 0.732 |
| | | AP@50: none | 0.423 | 0.316 | 0.399 | 0.333 | 0.204 | 0.21 | 0.286 |
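The gap between synthetic-scene and real-scene performance for models trained only on AIGC-HWD can be read directly from the mAP@50 rows above. The sketch below computes that drop per model using the tabulated values; the variable names are illustrative, and the reading of AV and GV as the AIGC-HWD and GDUT-HWD validation sets is inferred from Algorithm 1.

```python
MODELS = ["YOLO v8", "YOLO v10", "Faster R-CNN", "YOLO v11",
          "YOLO v11-MobileNet v4", "YOLO v13", "RT-DETR"]

# mAP@50 of AIGC-HWD-trained models, copied from the table above.
MAP50_ON_AV = [0.989, 0.985, 0.976, 0.990, 0.977, 0.981, 0.988]  # synthetic validation
MAP50_ON_GV = [0.701, 0.633, 0.627, 0.636, 0.553, 0.527, 0.583]  # real validation

# Absolute drop in mAP@50 when moving from synthetic to real validation images.
gap = {m: round(av - gv, 3) for m, av, gv in zip(MODELS, MAP50_ON_AV, MAP50_ON_GV)}
best_on_real = max(zip(MODELS, MAP50_ON_GV), key=lambda pair: pair[1])[0]

for model, drop in sorted(gap.items(), key=lambda item: item[1]):
    print(f"{model}: drop of {drop:.3f}")
print("Highest mAP@50 on real scenes:", best_on_real)
```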

| Training set (T) | Validation set (V) | Evaluation Metrics | YOLO v8 | YOLO v10 | Faster R-CNN | YOLO v11 | YOLO v11-MobileNet v4 | YOLO v13 | RT-DETR |
|---|---|---|---|---|---|---|---|---|---|
| GT | GV | mAP@50 | 0.923 | 0.92 | 0.725 | 0.938 | 0.878 | 0.873 | 0.931 |
| | | mAP@50:95 | 0.645 | 0.639 | - | 0.672 | 0.591 | 0.57 | 0.654 |
| | | F1-score | 0.89 | 0.88 | 0.6 | 0.90 | 0.85 | 0.84 | 0.91 |
| | | AP@50: yellow | 0.914 | 0.919 | 0.703 | 0.936 | 0.877 | 0.861 | 0.931 |
| | | AP@50: red | 0.918 | 0.92 | 0.724 | 0.934 | 0.888 | 0.886 | 0.927 |
| | | AP@50: white | 0.922 | 0.919 | 0.682 | 0.945 | 0.866 | 0.866 | 0.932 |
| | | AP@50: blue | 0.954 | 0.94 | 0.818 | 0.951 | 0.920 | 0.919 | 0.949 |
| | | AP@50: none | 0.906 | 0.902 | 0.695 | 0.923 | 0.839 | 0.835 | 0.914 |
| A + GT | GV | mAP@50 | 0.930 | 0.923 | 0.805 | 0.944 | 0.886 | 0.876 | 0.938 |
| | | mAP@50:95 | 0.648 | 0.641 | - | 0.678 | 0.600 | 0.58 | 0.659 |
| | | F1-score | 0.89 | 0.88 | 0.646 | 0.90 | 0.85 | 0.84 | 0.91 |
| | | AP@50: yellow | 0.930 | 0.918 | 0.787 | 0.946 | 0.885 | 0.86 | 0.928 |
| | | AP@50: red | 0.928 | 0.926 | 0.788 | 0.942 | 0.908 | 0.893 | 0.926 |
| | | AP@50: white | 0.936 | 0.921 | 0.786 | 0.950 | 0.864 | 0.869 | 0.946 |
| | | AP@50: blue | 0.944 | 0.948 | 0.872 | 0.952 | 0.918 | 0.918 | 0.955 |
| | | AP@50: none | 0.911 | 0.899 | 0.793 | 0.930 | 0.856 | 0.841 | 0.934 |
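The effect of adding AIGC-HWD images to the GDUT-HWD training set can be summarized as the change in mAP@50 on the GDUT-HWD validation set. The sketch below derives that change from the tabulated values; the names are illustrative, and the figures are single-run differences with no variance estimate, so small gains should be read cautiously.

```python
MODELS = ["YOLO v8", "YOLO v10", "Faster R-CNN", "YOLO v11",
          "YOLO v11-MobileNet v4", "YOLO v13", "RT-DETR"]

# mAP@50 on the GDUT-HWD validation set, copied from the table above.
MAP50_GT = [0.923, 0.92, 0.725, 0.938, 0.878, 0.873, 0.931]            # real data only
MAP50_A_PLUS_GT = [0.930, 0.923, 0.805, 0.944, 0.886, 0.876, 0.938]    # real + AIGC data

# Gain in mAP@50 attributable to adding the synthetic training images.
gain = {m: round(aug - base, 3)
        for m, base, aug in zip(MODELS, MAP50_GT, MAP50_A_PLUS_GT)}

for model, delta in sorted(gain.items(), key=lambda item: -item[1]):
    print(f"{model}: {delta:+.3f}")
```

On these numbers every model improves, with Faster R-CNN gaining the most and the YOLO and RT-DETR variants gaining less than 0.01.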

| Training set (T) | Validation set (V) | Evaluation Metrics | YOLO v8 | YOLO v10 | Faster R-CNN | YOLO v11 | YOLO v11-MobileNet v4 | YOLO v13 | RT-DETR |
|---|---|---|---|---|---|---|---|---|---|
| AT | AV | mAP@50 | 0.989 | 0.985 | 0.976 | 0.990 | 0.977 | 0.981 | 0.988 |
| | | mAP@50:95 | 0.839 | 0.827 | - | 0.844 | 0.773 | 0.79 | 0.831 |
| | | F1-score | 0.99 | 0.97 | 0.894 | 0.98 | 0.96 | 0.97 | 0.98 |
| | | AP@50: yellow | 0.989 | 0.987 | 0.975 | 0.986 | 0.985 | 0.981 | 0.987 |
| | | AP@50: red | 0.983 | 0.984 | 0.976 | 0.984 | 0.980 | 0.982 | 0.983 |
| | | AP@50: white | 0.983 | 0.969 | 0.949 | 0.990 | 0.946 | 0.96 | 0.992 |
| | | AP@50: blue | 0.995 | 0.995 | 0.999 | 0.995 | 0.995 | 0.994 | 0.995 |
| | | AP@50: none | 0.994 | 0.989 | 0.982 | 0.993 | 0.978 | 0.987 | 0.985 |
| GT | AV | mAP@50 | 0.969 | 0.961 | 0.909 | 0.962 | 0.923 | 0.92 | 0.973 |
| | | mAP@50:95 | 0.673 | 0.656 | - | 0.676 | 0.632 | 0.625 | 0.689 |
| | | F1-score | 0.95 | 0.95 | 0.818 | 0.93 | 0.87 | 0.88 | 0.97 |
| | | AP@50: yellow | 0.974 | 0.95 | 0.95 | 0.971 | 0.953 | 0.955 | 0.976 |
| | | AP@50: red | 0.97 | 0.958 | 0.91 | 0.97 | 0.921 | 0.933 | 0.97 |
| | | AP@50: white | 0.931 | 0.946 | 0.74 | 0.903 | 0.847 | 0.799 | 0.948 |
| | | AP@50: blue | 0.994 | 0.995 | 0.98 | 0.993 | 0.995 | 0.994 | 0.995 |
| | | AP@50: none | 0.976 | 0.958 | 0.96 | 0.973 | 0.897 | 0.92 | 0.975 |

| Training Sets | Evaluation Metrics | YOLO v8 | YOLO v10 | Faster R-CNN | YOLO v11 | YOLO v11-MobileNet v4 | YOLO v13 | RT-DETR |
|---|---|---|---|---|---|---|---|---|
| AT | mAP@50 | 0.813 | 0.741 | 0.696 | 0.600 | 0.652 | 0.72 | 0.549 |
| | mAP@50:95 | 0.614 | 0.566 | - | 0.460 | 0.470 | 0.525 | 0.418 |
| | F1-score | 0.76 | 0.69 | 0.64 | 0.54 | 0.64 | 0.69 | 0.59 |
| | AP@50: yellow | 0.888 | 0.742 | 0.671 | 0.687 | 0.824 | 0.823 | 0.718 |
| | AP@50: red | 0.828 | 0.786 | 0.724 | 0.676 | 0.823 | 0.827 | 0.542 |
| | AP@50: white | 0.788 | 0.647 | 0.747 | 0.591 | 0.414 | 0.647 | 0.709 |
| | AP@50: blue | 0.844 | 0.797 | 0.731 | 0.688 | 0.875 | 0.869 | 0.674 |
| | AP@50: none | 0.719 | 0.732 | 0.610 | 0.336 | 0.326 | 0.434 | 0.104 |
| GT | mAP@50 | 0.931 | 0.928 | 0.835 | 0.924 | 0.916 | 0.899 | 0.921 |
| | mAP@50:95 | 0.680 | 0.7 | - | 0.695 | 0.645 | 0.613 | 0.679 |
| | F1-score | 0.91 | 0.91 | 0.63 | 0.92 | 0.89 | 0.89 | 0.91 |
| | AP@50: yellow | 0.900 | 0.903 | 0.776 | 0.907 | 0.896 | 0.862 | 0.901 |
| | AP@50: red | 0.894 | 0.895 | 0.817 | 0.884 | 0.874 | 0.844 | 0.887 |
| | AP@50: white | 0.916 | 0.906 | 0.823 | 0.902 | 0.897 | 0.884 | 0.898 |
| | AP@50: blue | 0.976 | 0.975 | 0.912 | 0.975 | 0.967 | 0.97 | 0.978 |
| | AP@50: none | 0.963 | 0.961 | 0.841 | 0.954 | 0.948 | 0.934 | 0.941 |
| GT2 | mAP@50 | 0.909 | 0.907 | 0.756 | 0.915 | 0.857 | 0.864 | 0.929 |
| | mAP@50:95 | 0.608 | 0.661 | - | 0.657 | 0.533 | 0.569 | 0.689 |
| | F1-score | 0.90 | 0.88 | 0.616 | 0.90 | 0.83 | 0.84 | 0.92 |
| | AP@50: yellow | 0.896 | 0.881 | 0.679 | 0.894 | 0.830 | 0.852 | 0.917 |
| | AP@50: red | 0.921 | 0.863 | 0.768 | 0.864 | 0.836 | 0.827 | 0.882 |
| | AP@50: white | 0.912 | 0.88 | 0.746 | 0.895 | 0.811 | 0.797 | 0.911 |
| | AP@50: blue | 0.920 | 0.952 | 0.804 | 0.963 | 0.917 | 0.923 | 0.968 |
| | AP@50: none | 0.897 | 0.962 | 0.785 | 0.959 | 0.892 | 0.924 | 0.965 |
| A + GT | mAP@50 | 0.941 | 0.930 | 0.877 | 0.933 | 0.919 | 0.917 | 0.928 |
| | mAP@50:95 | 0.690 | 0.68 | - | 0.669 | 0.652 | 0.655 | 0.657 |
| | F1-score | 0.92 | 0.91 | 0.664 | 0.92 | 0.89 | 0.9 | 0.92 |
| | AP@50: yellow | 0.900 | 0.911 | 0.839 | 0.917 | 0.909 | 0.874 | 0.883 |
| | AP@50: red | 0.913 | 0.895 | 0.846 | 0.895 | 0.874 | 0.865 | 0.916 |
| | AP@50: white | 0.940 | 0.912 | 0.857 | 0.906 | 0.903 | 0.895 | 0.903 |
| | AP@50: blue | 0.978 | 0.973 | 0.938 | 0.979 | 0.972 | 0.984 | 0.975 |
| | AP@50: none | 0.973 | 0.961 | 0.904 | 0.967 | 0.935 | 0.965 | 0.964 |
| A + GT2 | mAP@50 | 0.922 | 0.907 | 0.874 | 0.922 | 0.847 | 0.886 | 0.912 |
| | mAP@50:95 | 0.673 | 0.675 | - | 0.656 | 0.544 | 0.642 | 0.666 |
| | F1-score | 0.91 | 0.92 | 0.622 | 0.91 | 0.81 | 0.87 | 0.9 |
| | AP@50: yellow | 0.899 | 0.871 | 0.839 | 0.900 | 0.827 | 0.829 | 0.895 |
| | AP@50: red | 0.863 | 0.874 | 0.860 | 0.877 | 0.780 | 0.858 | 0.916 |
| | AP@50: white | 0.912 | 0.863 | 0.837 | 0.912 | 0.810 | 0.843 | 0.849 |
| | AP@50: blue | 0.971 | 0.967 | 0.934 | 0.961 | 0.910 | 0.966 | 0.947 |
| | AP@50: none | 0.967 | 0.963 | 0.902 | 0.960 | 0.906 | 0.937 | 0.954 |
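For the cross-dataset results, a compact way to compare the two augmentation settings is to count how many models improve in mAP@50 when AIGC-HWD images are added to the full (GT) and subsampled (GT2) real training sets. The sketch below does this from the tabulated values; the helper name is hypothetical, and the assumption that these rows are evaluated on SHEL5K-100 follows Algorithm 1.

```python
MODELS = ["YOLO v8", "YOLO v10", "Faster R-CNN", "YOLO v11",
          "YOLO v11-MobileNet v4", "YOLO v13", "RT-DETR"]

# mAP@50 rows copied from the cross-dataset table above.
MAP50 = {
    "GT": [0.931, 0.928, 0.835, 0.924, 0.916, 0.899, 0.921],
    "A + GT": [0.941, 0.930, 0.877, 0.933, 0.919, 0.917, 0.928],
    "GT2": [0.909, 0.907, 0.756, 0.915, 0.857, 0.864, 0.929],
    "A + GT2": [0.922, 0.907, 0.874, 0.922, 0.847, 0.886, 0.912],
}


def deltas(baseline, augmented):
    """Per-model change in mAP@50 from the baseline to the augmented training set."""
    return {m: round(a - b, 3)
            for m, b, a in zip(MODELS, MAP50[baseline], MAP50[augmented])}


full = deltas("GT", "A + GT")
sub = deltas("GT2", "A + GT2")
improved_full = sum(1 for d in full.values() if d > 0)
improved_sub = sum(1 for d in sub.values() if d > 0)

print(f"Full training set: {improved_full} of {len(MODELS)} models improve")
print(f"Subsampled training set: {improved_sub} of {len(MODELS)} models improve")
for model in MODELS:
    print(f"{model}: {full[model]:+.3f} (GT), {sub[model]:+.3f} (GT2)")
```

On these numbers the gain is uniform with the full training set but mixed with the subsampled one, where two models lose accuracy and one is unchanged.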

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.; Miao, Q.; Li, Z.; Zhang, H.; Zou, Z.; Kong, L. Can Generative AI-Generated Images Effectively Support and Enhance Real-World Construction Helmet Detection? Buildings 2025, 15, 4080. https://doi.org/10.3390/buildings15224080
