1. Introduction

Construction projects are becoming increasingly large-scale and complex, involving numerous stakeholders and operating under tight time and budget constraints. Additionally, the labor structure is increasingly shifting from highly trained expert labor to a general-purpose labor force supported by machinery. This makes even the simplest construction planning tasks more complex, and traditional project management strategies are reaching their limits under this rising complexity [1,2]. In response, researchers and industry practitioners are exploring advanced solutions such as artificial intelligence (AI), not only to improve construction management outcomes but also to tackle basic construction assessments such as work duration, cost estimation, labor requirements, and work team structures. Work progress monitoring is a critical project control function with significant potential to benefit from AI integration. Accurate progress tracking ensures that project status is understood, that actual work can be compared against plans, and that timely management decisions can be made [3]; it also improves the reliability of future work planning. However, conventional progress monitoring methods, including manual site inspections and reporting, remain labor-intensive, subjective, and often unable to capture the full detail of ongoing activities. Therefore, this study aims to improve the understanding of whether and how general-purpose AI that is freely available to all users can assist in developing the work standards used for resource planning.

1.1. Traditional Norming Methods

The limitations of conventional monitoring are especially evident in detailed operation-level tasks such as masonry, where productivity is typically measured using manual time-and-motion studies or estimated through predefined work norms. These norms, commonly expressed as labor hours per unit of work, are developed from past projects and expert knowledge [4,5]. While they provide a baseline for planning and control, recent research shows that they often fail to reflect actual performance under current construction methods, new technologies, or varying workforce characteristics. The COVID-19 pandemic further exposed the limitations of static norms, highlighting the need for more adaptive and data-driven approaches to manage labor disruptions, supply chain variability, and evolving site dynamics [4,5]. As a result, updating and standardizing resource norms using real-world data has become a key focus area, opening opportunities for AI-based approaches to support more precise and flexible productivity benchmarking in the construction sector.

In Croatia, the traditional approach to work standardization still relies on outdated norms from the Yugoslav era (1986), which is clearly inefficient. These productivity norms, based mostly on manual stopwatch measurements, fail to reflect the dynamic and ever-changing nature of construction sites [6].

1.2. Digital Monitoring Methods

Automation is crucial for addressing longstanding challenges in the construction sector, including low productivity, labor shortages, and the increasing demand for sustainable building practices [1,6]. Recent advances in digital technology have introduced new opportunities for automating progress monitoring. Building information modeling (BIM) offers a structured representation of planned work, and various data acquisition tools can capture as-built progress. For instance, early efforts by El-Omari and Moselhi [3] combined 3D laser scanning and photogrammetry to measure construction progress. Photogrammetric techniques can reconstruct point clouds of work in progress from site photos, which can then be compared against BIM or design models to quantify completed work. This approach achieves high geometric accuracy but remains time-consuming [7]. Terrestrial LiDAR scanning provides accurate as-built point clouds as well, though it requires expensive equipment and still involves substantial data processing.

To increase agility, researchers have also looked at leveraging everyday visual data (images and videos) captured on-site. Yang et al. [2] outline how still images, time-lapse photos, and video streams can be utilized for construction performance monitoring and foresee these becoming integral to real-time progress tracking. Drones (unmanned aerial vehicles) equipped with various sensors have emerged as a practical tool to collect such data from construction sites. Li and Liu [8] reviewed applications of multi-rotor drones in construction management and highlighted progress monitoring as a key use case. Drones can rapidly capture high-resolution images of work areas from multiple angles, offering a comprehensive view of work progress without disrupting site activities. Similarly, other visual recording methods can provide rich time-series data of the construction process.

1.3. Computer Vision Methods

Coupled with the explosion of visual data acquisition methods, there have been rapid advances in computer vision and AI algorithms capable of interpreting construction imagery. Earlier approaches to analyzing site images employed classical machine learning techniques. For example, Rashidi et al. [9] compared machine-learning classifiers for automatically recognizing construction materials (such as brick, concrete, and wood panels) in site photos. They found that a support vector machine (SVM) could accurately detect distinct materials like red brick, outperforming neural networks on that task. Han and Golparvar-Fard [10] developed an appearance-based method to classify building materials in images and linked it with a 4D BIM model, enabling automated monitoring of operation-level progress (e.g., identifying which components have been installed). As image data grew in volume and complexity, researchers turned to more powerful deep learning models.

Convolutional neural networks (CNNs) and their variants have achieved state-of-the-art performance in object detection and segmentation tasks relevant to construction. Vision AI models like YOLO and Mask R-CNN have been applied to detect construction elements or workers in images and video. Other research has tackled challenges like occlusion and clutter on jobsites. For example, Yoon and Kim [11] evaluated advanced detectors (YOLOv8 and others) for reliably spotting workers during masonry tasks even when they are partially occluded by equipment or materials, improving the robustness of vision-based monitoring in realistic site conditions. Beyond computer vision-based approaches, the rise of large language models (LLMs) introduces new opportunities for construction monitoring and standardization.

1.4. LLM-Based Methods

Although vision-based AI offers clear potential for progress monitoring, general-purpose LLMs, despite being widely available, often lack the precision and contextual grounding required for construction-specific applications. Recent studies highlight several adaptation strategies that address these limitations. One direction is domain-specific fine-tuning, which improves task performance even with imperfect datasets [12,13]. Another is retrieval-augmented generation (RAG), where models are supported by external data sources such as codes, BIM documentation, or project logs to reduce hallucinations and improve accuracy [14,15]. Beyond text, multimodal integration of images, BIM, LiDAR, and 360° panoramic data is increasingly used to achieve more reliable monitoring under site conditions [16,17]. Finally, human-in-the-loop validation remains essential, as oversight by engineers and managers is critical for building trust and ensuring safety [18].

Despite these advances, few studies have examined whether a general-purpose multimodal AI model without task-specific fine-tuning can provide reliable operation-level progress monitoring, such as for bricklaying, under realistic site conditions. This lack of integrated, field-validated assessments limits the understanding of how well general-purpose models can currently perform before domain-specific adaptation becomes necessary.

Taken together, these developments point to an ongoing shift from construction-trained AI toward hybrid systems that integrate general-purpose capabilities with targeted domain adaptation. This perspective frames both the motivation and the scope of the investigation reported in this study.

1.5. NORMENG Project Context

The NORMENG project was initiated to address this pressing need, focusing on developing an automated resource standardization system tailored for energy-efficient construction. The lack of a unified, data-driven approach to standardization has resulted in inconsistencies in labor allocation, material usage, and equipment deployment [6,19]. To combat these issues, the NORMENG project was structured into two main phases. The first phase involved extensive industrial research to assess current practices and establish a robust methodology for calculating material requirements, labor needs, and machine workloads. This research laid the foundation for creating more accurate, reliable, and adaptable standards [19].

A central element of the second phase was the construction of a pilot project in Sošice, Croatia, built specifically for the NORMENG project. This site served as a controlled environment to test methods of progress and quantity monitoring and norm standardization, with a focus on tracking the execution of works in real time. Various technologies, including photogrammetry, terrestrial LiDAR scanning, drone imaging, and manual observations, were applied to capture detailed records of all construction tasks. The Sošice pilot demonstrated both the potential and the limitations of these technologies in estimating constructed volumes and developing modern standards for energy-efficient construction [6,19]. Building on these achievements, this study advances automated progress monitoring by introducing an advanced multimodal generative AI model. Informed by recent literature on AI-driven vision analysis for productivity, where automated systems have demonstrated the ability to recognize worker actions and material usage to assess efficiency [20,21], it examines whether a single, general-purpose AI model can perform comparable monitoring tasks.

This investigation extends the second-phase work of the NORMENG project by re-evaluating the same construction activities through the lens of a commercially available multimodal AI system. In doing so, it preserves technical continuity with previous research by applying the established progress-monitoring workflow and benchmark data as reference points, while testing whether a generalist AI tool can replicate or improve upon the task-specific results achieved with conventional methods. At the same time, the study primarily focuses on testing the feasibility of this pipeline under controlled pilot-scale conditions as a step toward evaluating its potential for systematic construction control on other sites, and the limitations observed here will inform strategies for adapting and scaling it to more complex projects.

The following Materials and Methods section details the approach, data collection procedures, technology selection criteria, and the specific framework developed for AI-driven resource standardization.

2. Materials and Methods

This study has several limitations related to the source data. The recordings used in the analysis stem from an early exploratory effort: the NORMENG and Samoborska datasets were collected before AI-based analysis was anticipated, so image capture and quality control focused mainly on ensuring the visibility of construction elements rather than on optimizing parameters such as resolution, occlusion, or geometric calibration.

2.1. Approach

2.1.1. Progress Monitoring—Sošice Pilot

At the Sošice construction site, construction progress was monitored using a diverse set of records: LiDAR scans, UAV-captured videos, handheld action camera footage, and site photographs captured during construction (see Appendix A). These sources provided complementary perspectives: drone videos documented the exterior development and overall site context, action camera recordings offered continuous interior views of workspace activities, while photographs and handheld clips supplied detailed close-range observations of individual tasks. The LiDAR recordings were used as a control for all other data acquisition methods. The collected material was systematically analyzed to reconstruct the sequence of activities and the timing of their execution. These recordings were used to establish the most suitable protocol for site monitoring activities.

2.1.2. Samoborska Residential Development (Zagreb)

Data for this study was also systematically collected from the Samoborska residential development in Stenjevec, Zagreb, Croatia (see Appendix B). This site was chosen because the construction methods, materials, and conditions closely mirrored those in the NORMENG project. The entire masonry process was recorded using a 360° camera and several fixed action cameras, providing coverage from multiple angles. This footage was later analyzed manually: the number of bricks laid was counted, and the duration of each activity was logged. These observations were compiled into detailed productivity tables (Table 1), which served as the ground-truth dataset for validating the AI-generated outputs.

2.1.3. Quantity Monitoring Model

Building on progress monitoring, the NORMENG pilot project was also used to test the implementation of multimodal LLMs for quantity assessments. Construction site documentation was analyzed and segmented to illustrate progress tracking for a specific work type, in this research the erection of a brick wall. From these sequences, the AI model was prompted to identify the number of bricks laid. Using the standard dimensions of the masonry units, the system or the observers can then estimate the volume of the constructed wall. With these data extracted at selected time intervals (1 h, 3 h, 6 h), productivity for a given task can be assessed, provided that the size of the available workforce is known.
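The count-to-volume-to-productivity chain described above can be illustrated with a minimal Python sketch. It assumes nominal unit dimensions, ignores mortar joints, and uses hypothetical names (`Brick`, `wall_volume_m3`, `productivity_m3_per_worker_hour`) and a hypothetical work segment; it is not the implementation used in the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Brick:
    """Nominal masonry unit dimensions in millimetres."""
    length_mm: float
    width_mm: float
    height_mm: float

    @property
    def volume_m3(self) -> float:
        # Convert mm^3 to m^3 (1 m^3 = 1e9 mm^3).
        return self.length_mm * self.width_mm * self.height_mm / 1e9


def wall_volume_m3(count_start: int, count_end: int, brick: Brick) -> float:
    """Volume built between two brick counts (mortar joints ignored)."""
    laid = count_end - count_start
    if laid < 0:
        raise ValueError("end count is lower than start count")
    return laid * brick.volume_m3


def productivity_m3_per_worker_hour(volume_m3: float, workers: int, hours: float) -> float:
    """Average output per worker-hour for one observed segment."""
    if workers <= 0 or hours <= 0:
        raise ValueError("workers and hours must be positive")
    return volume_m3 / (workers * hours)


# Hypothetical segment (not study data): 40 bricks laid by 2 workers in 3 h,
# using the 375 x 250 x 249 mm unit quoted in Section 3.3.
unit = Brick(375, 250, 249)
built = wall_volume_m3(count_start=10, count_end=50, brick=unit)
rate = productivity_m3_per_worker_hour(built, workers=2, hours=3.0)
print(f"{built:.3f} m3 built, {rate:.3f} m3 per worker-hour")
```

Keeping the count difference, the unit volume, and the workforce normalization as separate steps makes it easy to see which input (count, dimensions, or crew size) drives an implausible productivity value.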

2.1.4. Validation Dataset

While these initial tests using general-purpose AI at Sošice demonstrated the feasibility of the experiment, the study required validation on a dataset with precise ground-truth records, which was addressed in the Samoborska case study.

The proposed models were first tested using independent data from a separate construction project, the Samoborska residential development in Stenjevec, Zagreb, Croatia. This dataset included manually recorded activity logs that corresponded to the observed video footage, serving as a benchmark for evaluating the AI’s output accuracy. To minimize potential human error in the ground-truth activity logs presented in Table 1, the counts were independently performed by multiple researchers, and any discrepancies were cross-checked and reconciled before finalizing the benchmark data. The selection of the Samoborska project was a strategic decision, primarily driven by the superior clarity and quality of its visual data, which featured videos that were notably clearer and less prone to obstructions or variations in lighting. Here, the static nature of the video recordings, with cameras providing consistent viewpoints, significantly simplified the analytical task for the AI, allowing for more precise tracking and analysis of construction activities.

The key rationale for this comparative approach also lies in the fact that similar construction works were carried out at both the Sošice pilot site and the Samoborska project, particularly regarding construction technologies, materials, and work sequencing. This similarity made it possible to first test the AI-based method on the Samoborska data, validate its performance against precise, manually recorded ground-truth measurements, and subsequently apply the same method to the NORMENG dataset with greater confidence.

2.2. Technology Selection

For the purposes of this research, Google AI Studio’s Gemini 2.5 Flash model was selected for its widespread availability to all interested parties. In addition, Gemini 2.5 Flash was preferred over alternatives because it offered readily available vision-based starter apps within the Google AI Studio platform. Custom-trained AI vision models were not considered appropriate for this study, as the objective was to benchmark a general-purpose model rather than develop a bespoke algorithm. No formal comparative benchmarks were conducted, as the study’s focus was on assessing the feasibility of a readily available off-the-shelf multimodal AI for construction-specific tasks.

2.3. Processes and Objectives

Before selecting and refining the final framework, a series of initial benchmarking tests were conducted to evaluate the chosen AI model’s built-in image analysis capabilities. Using the segmentation and bounding box features, it was assessed how effectively the model could recognize and label construction-related elements from static image inputs—results of this stage are presented in the Results—Preliminary Testing section. This confirmed the feasibility of using a general-purpose multimodal AI model for activity and object recognition in construction environments, thereby establishing the basis for progressing to work-activity quantification.

The subsequent AI analysis followed a reproducible multi-step protocol:

Frame extraction: Video recordings (from both Sošice and Samoborska) were segmented into static frames;

Preprocessing: Frames were cropped to the relevant work area;

Upload environment: Images were uploaded into Google AI Studio, where the tested multimodal LLM (Gemini 2.5 Flash) was accessed;

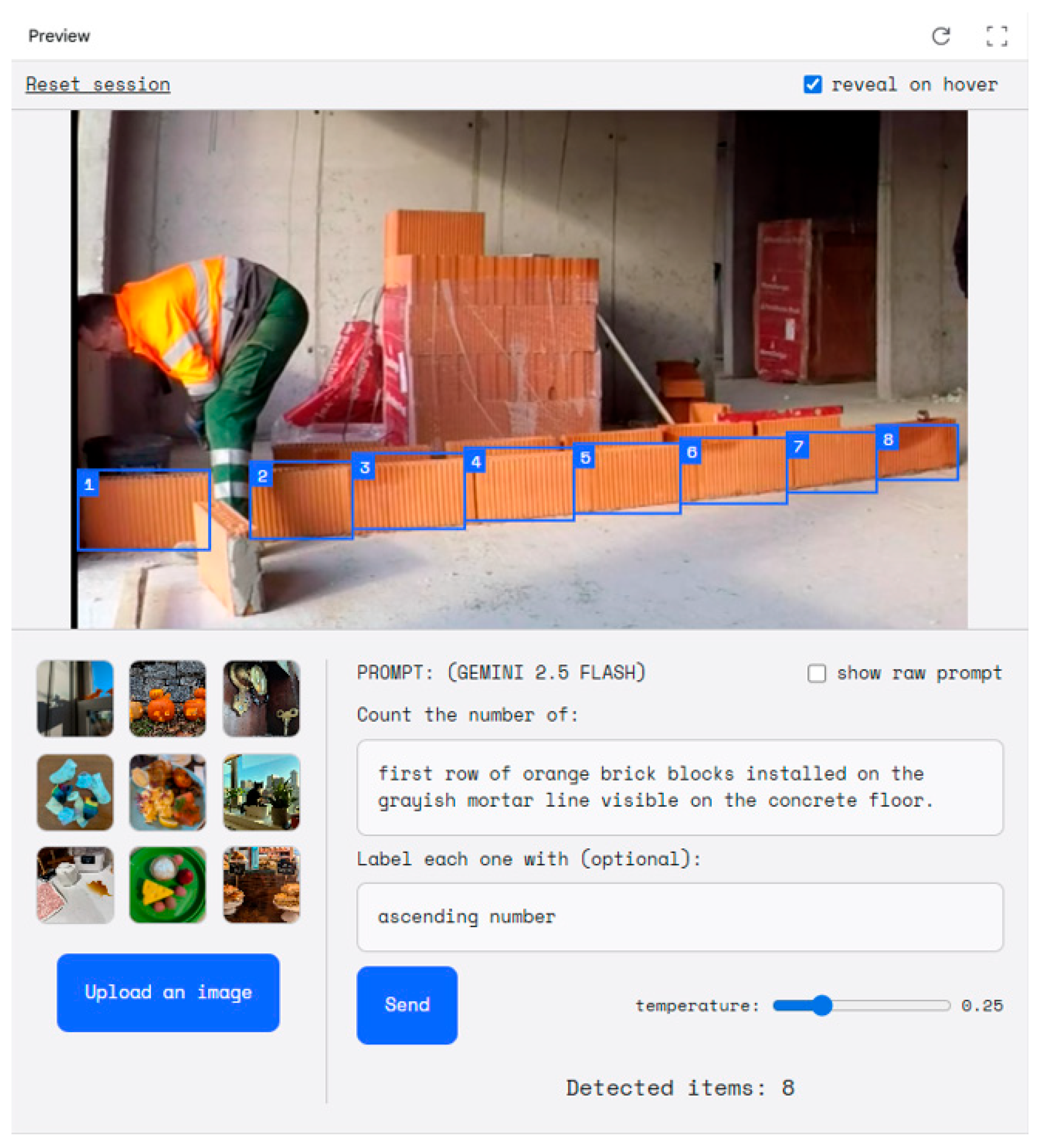

Prompt design: Clear natural-language prompts were defined, such as “Count the number of: first row or orange brick blocks installed on the grayish mortar line visible on the concrete floor.”;

Prompting strategy: Prompts were iteratively tested, and the formulations that provided the most consistent and reproducible brick-count results were selected for further analysis;

Output recording: The model returned brick counts, 2D bounding boxes, and identification points. Counts were logged at the start and end of activity segments;

Volume estimation: Using standard Wienerberger Porotherm brick dimensions, brick counts were converted into constructed wall volumes (m³);

Validation: AI-derived brick counts were validated against manually recorded brick counts and time logs.
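The validation step of the protocol above could be quantified as in the following minimal sketch. The error measures (signed error, mean absolute error, mean absolute percentage error) are an assumption chosen for illustration, since the study does not specify a metric, and the counts are hypothetical placeholders rather than values from Table 1.

```python
def count_errors(ai_counts, manual_counts):
    """Return per-segment signed errors plus MAE and MAPE (in percent)."""
    if len(ai_counts) != len(manual_counts) or not ai_counts:
        raise ValueError("need two non-empty lists of equal length")
    signed = [a - m for a, m in zip(ai_counts, manual_counts)]
    mae = sum(abs(e) for e in signed) / len(signed)
    # Segments with a manual count of zero are skipped to avoid division by zero.
    pct = [abs(e) / m * 100 for e, m in zip(signed, manual_counts) if m > 0]
    mape = sum(pct) / len(pct) if pct else float("nan")
    return signed, mae, mape


# Hypothetical counts for four activity segments (not data from the study).
ai = [8, 12, 5, 20]
manual = [8, 10, 6, 16]
signed, mae, mape = count_errors(ai, manual)
print(signed, mae, round(mape, 1))
```

Reporting signed errors alongside the averages shows whether the model tends to overcount or undercount, which an averaged absolute error alone would hide.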

The primary analytical objective was therefore to quantify progress and productivity from visual AI outputs. Ultimately, this quantitative output would enable the derivation of average productivity per worker, expressed as cubic meters of brickwork completed per day. At the Samoborska project, this workflow was benchmarked against the meticulously recorded manual dataset (Table 1), which served as the ground-truth baseline.

In the case of the NORMENG project, a similar process was employed, beginning with the extraction of image data from construction site videos. These images were processed through a standardized AI analysis pipeline, where prompts were defined to detect and count individual bricks. The resulting brick counts were then used to estimate the constructed wall volume, which in turn enables the derivation of productivity measures over a defined work period. This approach mirrored the workflow applied in our Samoborska tests, comprising image input, object identification, and the conversion of counts into volume-based metrics.

3. Results

3.1. Preliminary Testing

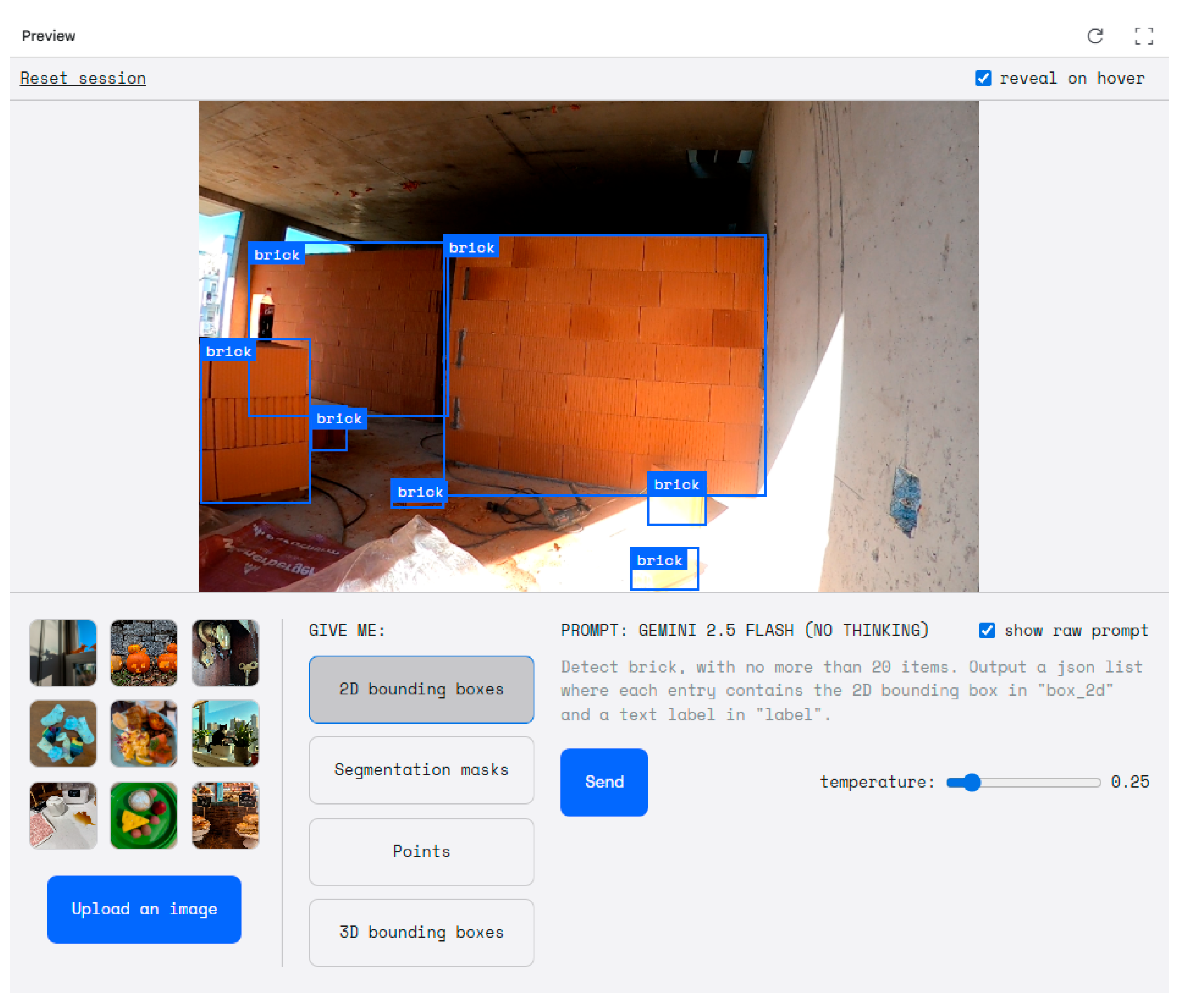

The preliminary stage of this research involved benchmarking the AI model’s built-in computer vision capabilities. The segmentation and bounding box features were used to evaluate how effectively the model could recognize and label construction-related elements from static image inputs.

Figure 1 demonstrates the model’s performance on plastering operations.

Workers were accurately identified, with semantic labels such as “worker on ladder” and “worker on ground.” Notably, the AI model applied segmentation masks and bounding boxes consistently, differentiating between labor roles and positioning. Object detection performance was further enhanced by context sensitivity; tools such as the “wheelbarrow,” “trowel,” “ladder,” and “shovel” were correctly recognized and associated with the nearby human agents. The accuracy of this segmentation implies a strong understanding of the spatial and functional relationship between tools and workers.

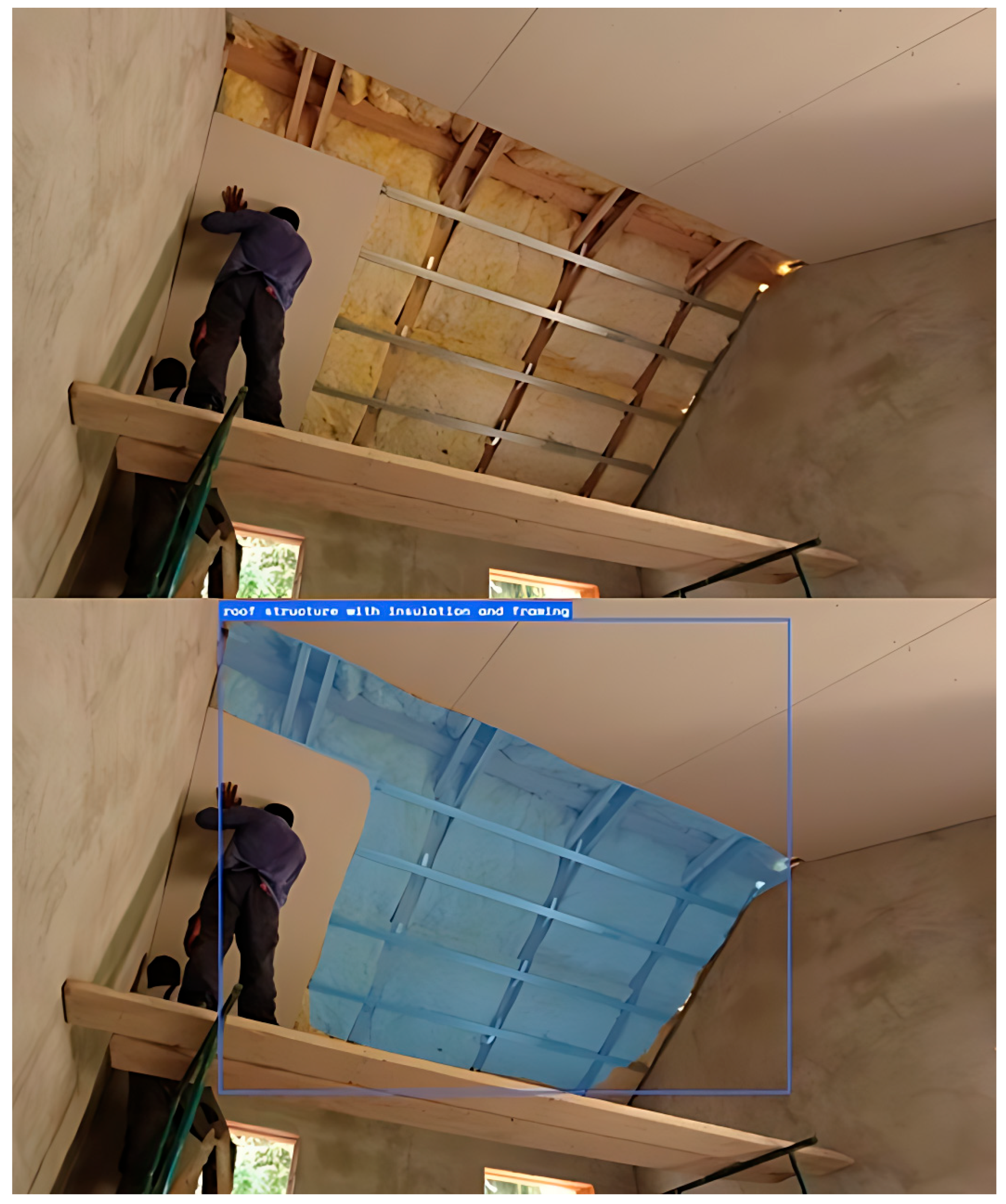

In the subsequent test (Figure 2), the AI model successfully recognized elements involved in thermal insulation tasks.

Entities like “roof structure with insulation and framing” were detected. Despite the irregular shapes and texture overlap in the construction environment, the AI model exhibited resilience in detecting features across varied lighting conditions and perspectives. Overall, the AI’s ability to perform classification of new construction scenes without prior training on the specific context is particularly notable. These promising results established the foundation for transitioning into work activity recognition, as explored in the following sections.

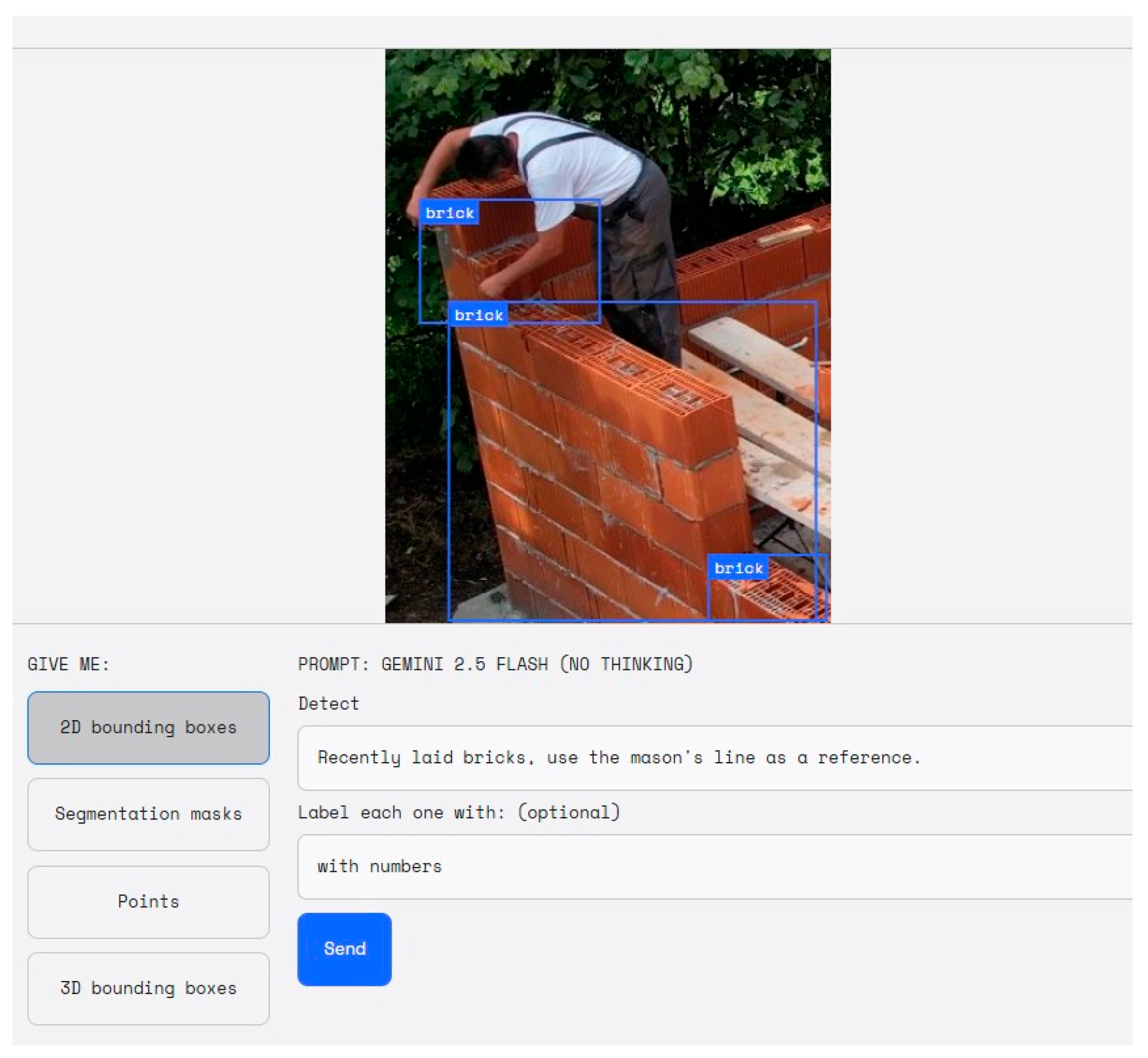

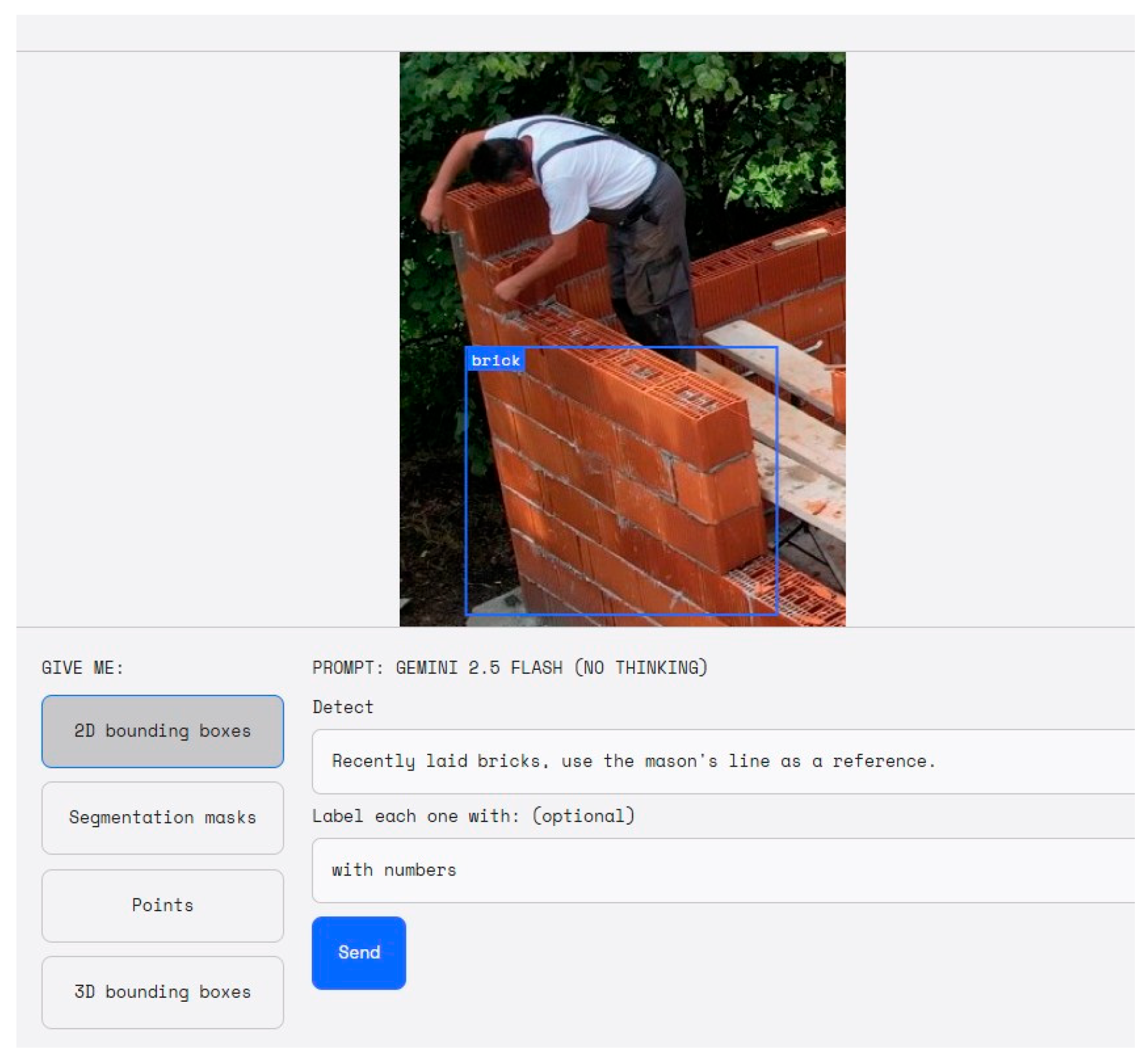

3.2. Quantity Monitoring—Samoborska Site

The AI model was tasked to count the number of bricks visible in the recorded masonry work images. This process was designed as a proof-of-concept to evaluate whether AI-driven visual analysis could support automated quantity takeoff and productivity estimation. By combining the brick count at the start and end of the workday, the known dimensions of individual bricks (Wienerberger Porotherm brick 500 × 245 × 100 mm), and the number of workers involved, the system aimed to calculate the total volume of wall constructed (in cubic meters) over a defined period. This would then allow deriving average productivity per worker, expressed as cubic meters of brickwork completed per day.

In some cases, the AI delivered promising results. For example, in one well-documented segment, the system correctly identified approximately eight bricks, which translated, when scaled across the wall dimensions and construction sequence, to an estimated ~0.1 m³ of completed wall volume (Figure 3 and Figure 4). This aligned well with the manually recorded productivity data (Table 1, highlighted in green), suggesting that the AI model could, under favorable conditions, approximate work quantities with reasonable accuracy.
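As a plausibility check, the reported volume can be reproduced from the brick count and the unit dimensions quoted above (500 × 245 × 100 mm). The calculation assumes nominal dimensions and ignores mortar joints; the symbols n, l, w, and h (count, length, width, height) are introduced here for illustration only.

```latex
V = n \cdot l \cdot w \cdot h
  = 8 \times 0.500\,\mathrm{m} \times 0.245\,\mathrm{m} \times 0.100\,\mathrm{m}
  = 0.098\,\mathrm{m^3} \approx 0.1\,\mathrm{m^3}
```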

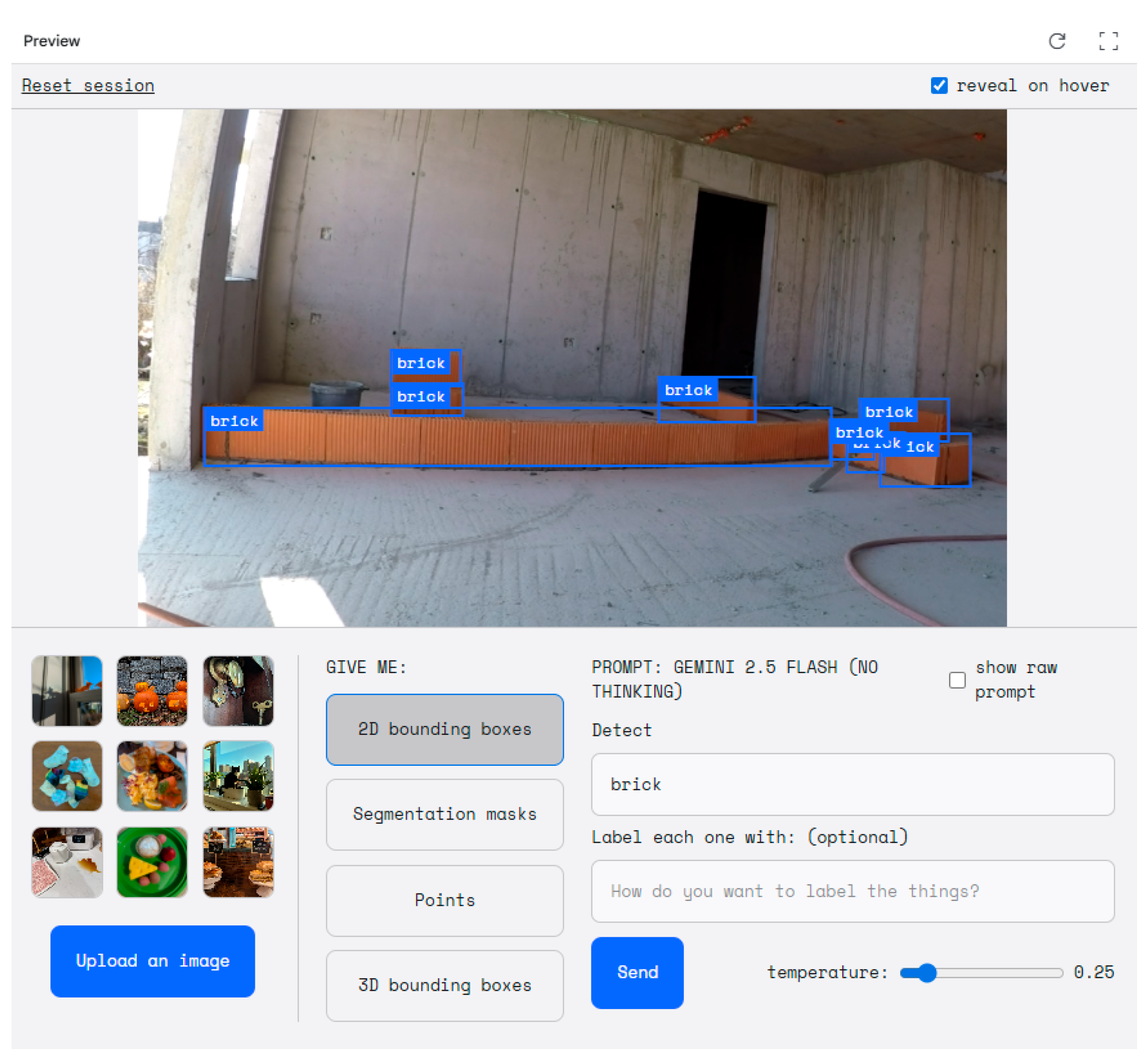

However, significant inconsistencies were observed across different image sets. The AI’s performance fluctuated depending on factors such as camera angle, lighting conditions, occlusion of bricks by tools or workers, partial wall visibility, and background clutter. In some cases, the system overcounted due to false positives, misclassifying shadows, mortar joints, or surrounding materials as bricks. In other cases, it undercounted, missing bricks that were partially obscured or located at the image edges. A particular challenge was the misinterpretation of bricks that were too close to each other, often detected as a single brick due to the AI’s difficulty in discerning narrow edges and separations (Figure 5). Furthermore, accurately detecting separate bricks within an already constructed brick wall proved problematic (Figure 6). These results suggest that the AI model is most easily confused by the following conditions:

Clutter: Unnecessary or distracting information in the background of the observed object that can lead to false positives.

Closely spaced bricks: Individual bricks within fully built walls, where discrete units are hard to separate and mortar lines are not clearly visible.

Lighting extremes: Overly bright or dark areas that distort edges and reduce the visibility of individual bricks.

While these observations indicate that such factors strongly influence model reliability, the available dataset was not large enough to establish consistent statistical trends or quantify their exact impact on accuracy.

This led to substantial variability in brick count outputs across similar tasks and work segments. The lack of task-specific training or fine-tuning on construction-specific datasets limited the AI’s ability to generalize consistently across varied site conditions. Although the AI model’s multimodal capability enabled flexible interpretation of visual and textual inputs, its performance in this context clearly highlighted the challenges of deploying general-purpose models for highly specialized industrial applications without domain-specific adaptation.
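One simple way to express the variability described above is the spread of counts returned for repeated runs on the same frame. The sketch below is illustrative only: the repeated-run design and the counts are hypothetical, and the coefficient of variation is an assumed summary measure, not one reported in the study.

```python
import statistics


def count_spread(counts):
    """Return mean, sample standard deviation, and coefficient of variation."""
    if len(counts) < 2:
        raise ValueError("at least two repeated counts are required")
    mean = statistics.mean(counts)
    stdev = statistics.stdev(counts)  # sample standard deviation (n - 1)
    cv = stdev / mean if mean else float("nan")
    return mean, stdev, cv


# Hypothetical brick counts from five prompts on the same frame.
runs = [8, 9, 7, 12, 8]
mean, stdev, cv = count_spread(runs)
print(f"mean={mean:.1f}, stdev={stdev:.2f}, cv={cv:.2f}")
```

A larger dataset than the one available here would be needed before such figures could support statistical conclusions about individual factors such as lighting or clutter.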

3.3. Quantity Monitoring—NORMENG Pilot Project

To extend the analysis beyond the Samoborska project, a quantity monitoring experiment was also conducted at the NORMENG pilot project site (Figure 7), following the workflow established at Samoborska.

To improve recognition conditions, several image manipulations were applied before analysis. Insights gained from the Samoborska results, particularly the importance of using clearer frames with better lighting and fewer obstructions, guided these adjustments in the NORMENG tests. The test focused on the worker in a white T-shirt (top left of the image), who was in the process of laying another top row of bricks. From the corresponding image sequence, shown in Figure 8, an attempt was made to quantify progress by tracking the placement of bricks during this activity.

The process followed the same logic: by comparing the brick count at the start and end of the activity, applying the known dimensions of the Wienerberger Porotherm structural brick (375 × 250 × 249 mm), and accounting for the number of workers involved, the system aimed to estimate the constructed wall volume in cubic meters. This, in turn, would allow the derivation of average productivity per worker, expressed as cubic meters of brickwork completed per time unit.

As in the Samoborska case, the results were mixed. After numerous unsuccessful attempts and inconsistent detections, the AI eventually produced usable outputs for a specific case under certain conditions. Testing on the NORMENG site proved even more challenging, as a larger quantity of data had to be analyzed (a greater number of bricks), while the overall chaotic site conditions further complicated detection. Examples of failed attempts are shown in Figure 9 and Figure 10.

In the most promising example, the system correctly identified approximately three bricks, which translated, when scaled across the wall geometry and construction sequence, to an estimated ~0.07 m³ of completed wall volume (Figure 11).
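The reported figure is consistent with the nominal dimensions of the structural brick given above (375 × 250 × 249 mm). As before, the check ignores mortar joints, and the symbols n, l, w, and h are illustrative.

```latex
V = n \cdot l \cdot w \cdot h
  = 3 \times 0.375\,\mathrm{m} \times 0.250\,\mathrm{m} \times 0.249\,\mathrm{m}
  \approx 0.070\,\mathrm{m^3}
```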

Overall, the NORMENG pilot confirmed that AI-based quantity monitoring is technically feasible under favorable visual conditions, but its reliability remains constrained by environmental factors and the lack of construction-specific fine-tuning. These findings reinforce the need for domain adaptation and standardized data collection if such methods are to be used for automated productivity assessment in real-world projects.

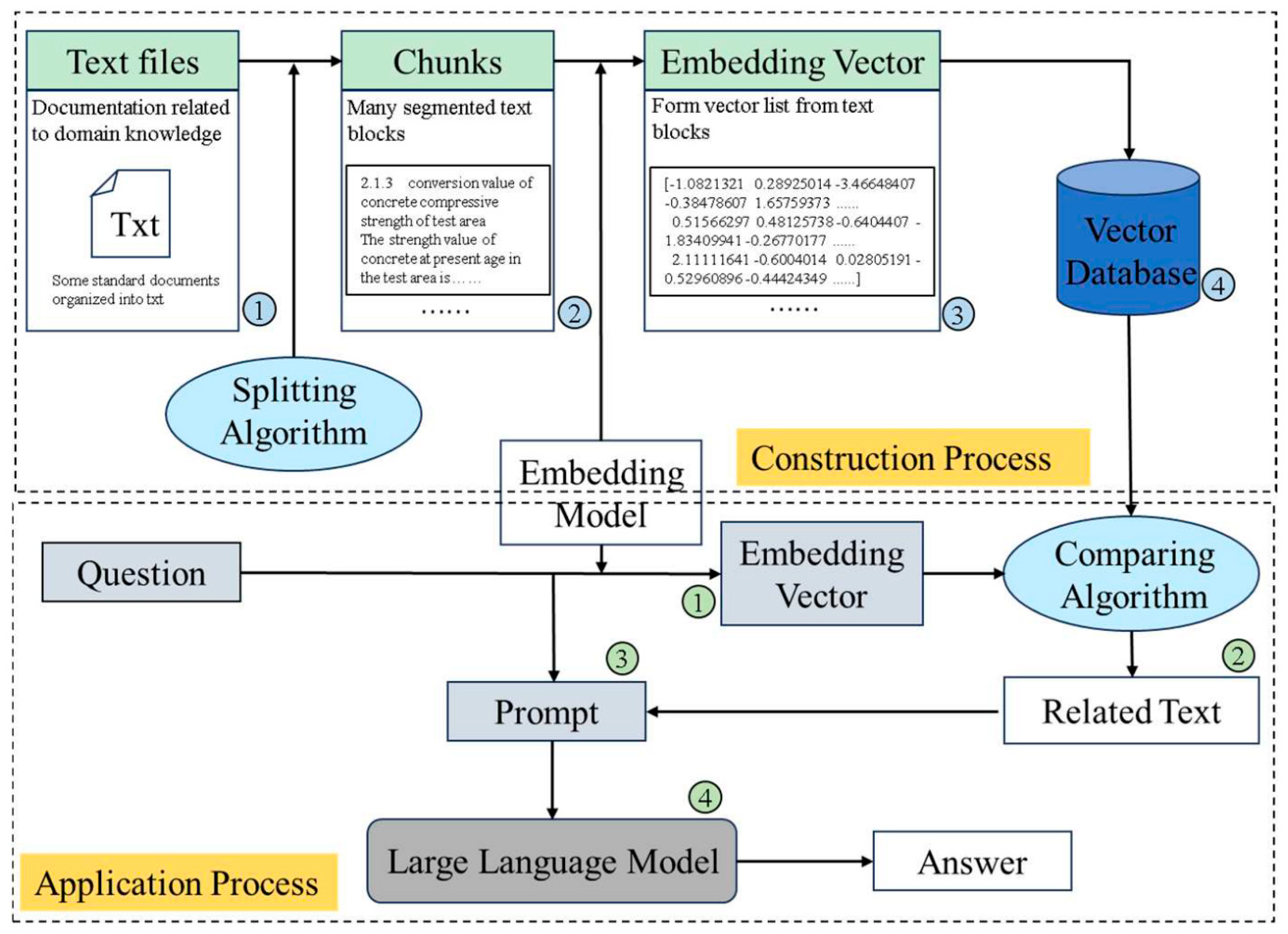

4. Discussion

The results of this study clearly indicate that while the tested general-purpose AI vision system demonstrates potential as a supportive tool for work progress monitoring and preliminary resource estimation, it is not yet sufficiently robust or precise for automated quantity takeoff or accurate productivity assessment in its current, general-purpose form.

In practice, these models often lack deep domain knowledge of construction, so their outputs can be inconsistent or inaccurate without careful contextual guidance. For example, Wang et al. [14] note that off-the-shelf AI models “lack specialized expertise” in construction engineering and propose a construction-specific RAG (Retrieval-Augmented Generation) knowledge base to ground outputs in authoritative standards (Figure 12).

Similarly, tests in this study show large variance in detection and counting, confirming that standard image-based AI models need significant adaptation before they can match manual or BIM-assisted surveying. This observation is consistent with the literature that stresses the influence of data quality and task-specific guidance on model stability [14].

Many of these limitations stem from the fundamental mismatch between general-purpose AI models and the specialized requirements of construction tasks. Generic AI models are trained on broad text datasets but have little built-in knowledge of construction workflows or the evolving site context [14]. This “static knowledge” problem leads to outdated or hallucinated outputs if not corrected. Although errors in this study cannot be attributed to any single factor with certainty, the observed pattern supports the literature’s argument that poor context and lack of domain adaptation often accompany less reliable outputs. Building codes and progress logs change frequently, and an untrained or general-purpose AI model can easily misinterpret materials or tasks. To overcome this, a strategic shift toward construction-tailored AI is needed. The literature consistently shows that fine-tuning on domain-specific data and supplementing models with real-time information can dramatically improve relevance and accuracy [13]. These results imply that without such targeted adaptation, automated monitoring will continue to produce errors or inconsistencies in progress and quantity estimates.

To make AI models practically useful for construction monitoring and overcome the limitations observed with general-purpose models, a short literature review was conducted. This review and analysis of current advancements and challenges in AI applications for the construction sector led to the following recommendations:

Local Training (Fine-tuning): Pre-trained models must be fine-tuned on construction-domain data to learn the relevant terminology and context. In practice, one would assemble a specialized dataset (e.g., project logs, contracts, equipment catalogs) and continue training the base model so it “remembers” how construction knowledge fits into its outputs [13,14]. Parameter-efficient methods like LoRA (Low-Rank Adaptation) can achieve this with minimal additional cost: they insert small trainable matrices into the model so that only a few parameters change during training [13]. This makes fine-tuning more affordable while still significantly improving task-specific performance. Indeed, Zhou and Ma [12] showed that even an AI model fine-tuned on noisy synthetic construction text could achieve an F1 ≈ 0.76 for named-entity recognition in construction documents. The researchers iteratively generated preliminary Named Entity Recognition (NER) data from standards and lexicons, subsequently refining the model. This methodology yielded robust results, even when faced with imperfect initial inputs. This outcome underscores that meticulously curated fine-tuning data, even if initially incomplete, can effectively impart crucial domain-specific knowledge that is typically absent in models trained on general datasets.
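To make the cost argument for LoRA concrete, the sketch below compares the number of trainable parameters in a frozen weight matrix with the two low-rank factors that LoRA adds to it. The layer size and rank are hypothetical values chosen for illustration, and the function names are not taken from any library.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Parameters in a dense weight matrix W of shape (d_out, d_in)."""
    return d_out * d_in


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the low-rank factors B (d_out x rank) and A (rank x d_in).

    The adapted weight is W + B @ A; W stays frozen, only A and B are trained.
    """
    return rank * (d_out + d_in)


# Hypothetical projection layer and rank (assumptions for illustration).
d_out, d_in, rank = 4096, 4096, 8
full = full_params(d_out, d_in)
lora = lora_trainable_params(d_out, d_in, rank)
share = lora / full
print(f"full: {full}, LoRA: {lora}, trainable share: {share:.4%}")
```

For this assumed layer, the low-rank factors hold well under one percent of the parameters of the original matrix, which is the source of the affordability noted above.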

Retrieval-Augmented Generation (RAG): Instead of relying solely on the model’s internal knowledge, systems should retrieve real construction-specific information at query time. A RAG architecture combines a retriever (e.g., a vector database or search engine over BIM data and specifications) with a generator (the AI model) so that answers are grounded in concrete data. By injecting authoritative text (codes, schedules, site reports) into the prompt, RAG “grounds” the AI’s output and reduces hallucinations [14]. For example, Wang et al. [14] note that RAG “reduces hallucinations by grounding responses in retrieved evidence” and allows models to tap updated knowledge without full retraining. In practice, one would index manuals, regulations, BIM notes, and past project logs; at inference time, the system retrieves relevant passages for the query. This is especially useful in construction for tasks like answering code-related questions or summarizing contract clauses. Challenges remain (e.g., ensuring high-quality retrieval and low latency), but techniques like hybrid search (combining keyword and embedding lookup) and agentic RAG (where an AI agent iteratively refines search queries) are emerging to address them. In sum, a retrieval component can augment the fine-tuned model so that it always “checks” real project data, rather than relying only on its internal, potentially outdated knowledge [14,15].
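The retrieval step described above can be illustrated with a minimal sketch of hybrid search, assuming a small in-memory passage list. All names, passages, and the weighting parameter `alpha` are hypothetical; in particular, the character-trigram vector is only a toy stand-in for a real embedding model, and no generator model is called.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def keyword_score(query: str, passage: str) -> float:
    """Fraction of distinct query terms that also occur in the passage."""
    query_terms = set(tokenize(query))
    if not query_terms:
        return 0.0
    return len(query_terms & set(tokenize(passage))) / len(query_terms)


def toy_embedding(text: str) -> Counter:
    """ASSUMPTION: character-trigram counts stand in for a real embedding model."""
    normalized = " ".join(tokenize(text))
    return Counter(normalized[i:i + 3] for i in range(len(normalized) - 2))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[key] * b[key] for key in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, passages: List[str], k: int = 2, alpha: float = 0.5) -> List[str]:
    """Rank passages by a weighted mix of keyword and vector similarity."""
    query_vec = toy_embedding(query)
    scored = [
        (alpha * keyword_score(query, p) + (1 - alpha) * cosine(query_vec, toy_embedding(p)), p)
        for p in passages
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_prompt(query: str, passages: List[str]) -> str:
    """Place the retrieved passages in the prompt so the answer can cite them."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


# Hypothetical passages, invented for illustration only.
PASSAGES = [
    "Spec note (hypothetical): bed joints in brick masonry are 10 mm thick.",
    "Site log (hypothetical): roof insulation boards delivered on Monday.",
    "Schedule note (hypothetical): plastering starts after masonry is complete.",
]
QUERY = "bed joint thickness brick masonry"
top = retrieve(QUERY, PASSAGES, k=1)
prompt = build_prompt(QUERY, top)
```

The resulting prompt would then be passed to whichever generator model is in use. A production system would replace the toy vector with a trained embedding model and an indexed store.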

Data Quality and Curation: The effectiveness of both fine-tuning and RAG critically depends on having accurate, representative data. Construction project data is often fragmented and inconsistent, so significant effort must go into cleaning and structuring it. The literature highlights that using messy data can actually degrade model performance. For example, the domain-adaptation study of Lu et al. [13] reports that “using an extended dataset with varied formats and defective text led to a decline in model performance”. This implies that simply feeding more construction logs or photos to the model is not enough; one must curate high-quality examples. In practice, this means setting up pipelines to collect images, BIM exports, sensor logs, and text records in a consistent schema, and applying filtering to remove errors. Efforts like automated photogrammetry and LiDAR cleanup also help ensure that what the AI “sees” reflects reality. In short, robust data curation is foundational: without it, fine-tuning will embed mistakes and RAG will retrieve erroneous content, making the AI outputs unreliable [13].
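As an illustration of the "consistent schema plus filtering" idea, the sketch below normalizes raw site records and drops defective or duplicate entries. The schema, field names, allowed units, and sample records are all assumptions made for this example and do not describe the NORMENG data.

```python
import math
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional

# ASSUMPTION: a hypothetical set of accepted units.
ALLOWED_UNITS = {"m2", "m3", "pcs", "h"}


@dataclass(frozen=True)
class SiteRecord:
    """Hypothetical normalized schema for one logged work item."""
    site: str
    timestamp: datetime
    activity: str
    quantity: float
    unit: str


def parse_record(raw: dict) -> Optional[SiteRecord]:
    """Return a normalized record, or None if the raw entry is defective."""
    try:
        site = str(raw["site"]).strip()
        activity = str(raw["activity"]).strip().lower()
        unit = str(raw["unit"]).strip().lower()
        quantity = float(raw["quantity"])
        timestamp = datetime.fromisoformat(str(raw["timestamp"]))
    except (KeyError, ValueError, TypeError):
        return None
    if not site or not activity or unit not in ALLOWED_UNITS:
        return None
    if not math.isfinite(quantity) or quantity < 0:
        return None
    return SiteRecord(site, timestamp, activity, quantity, unit)


def curate(raw_records: List[dict]) -> List[SiteRecord]:
    """Keep valid records only, removing duplicates after normalization."""
    seen = set()
    clean = []
    for raw in raw_records:
        record = parse_record(raw)
        if record is None or record in seen:
            continue
        seen.add(record)
        clean.append(record)
    return clean


# Hypothetical raw entries with typical defects.
RAW = [
    {"site": "Site A", "timestamp": "2024-05-01T08:00:00", "activity": "Bricklaying", "quantity": "120", "unit": "pcs"},
    {"site": "Site A", "timestamp": "2024-05-01T08:00:00", "activity": "bricklaying ", "quantity": 120, "unit": "PCS"},
    {"site": "Site A", "timestamp": "not a date", "activity": "plastering", "quantity": 10, "unit": "m2"},
    {"site": "Site A", "timestamp": "2024-05-01T09:00:00", "activity": "plastering", "quantity": -5, "unit": "m2"},
    {"site": "Site B", "timestamp": "2024-05-02T10:30:00", "activity": "plastering", "quantity": 14.5, "unit": "m2"},
]
clean = curate(RAW)
```

Of the five raw entries, the second is a duplicate once normalized, the third has an unparseable timestamp, and the fourth has a negative quantity, so only two records survive.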

Multimodal Integration: Construction sites generate many data types beyond camera images: BIM models, LiDAR scans, IoT sensor streams, and text reports all contain useful information. Integrating these modalities can significantly improve AI accuracy. This study’s results and the wider literature suggest that richer data fusion is needed. For example, the BIMCaP method aligns LiDAR point clouds and RGB images to a BIM, achieving sub-5 cm accuracy in indoor mapping [16]. Similarly, combining 360° camera imagery with robotics and BIM has enabled effective automated progress monitoring: one system used a four-legged robot with a 360° camera and the JobWalk app, linking the captured panoramas to BIM waypoints for real-time tracking [17]. In that setup, the robot achieved efficient progress capture while the BIM provided spatial context. Shinde et al. [17] reviewed numerous 360°-based monitoring studies and concluded that panoramic images plus reference models greatly enhance realism and allow automated analysis. Thus, future AI tools should be multimodal: for instance, using computer vision on images and overlaying the results on the BIM, or fusing LiDAR-derived depth with photo interpretation. This holistic approach can cross-validate findings (e.g., confirming an object’s position in the BIM via LiDAR) and overcome situations where one sensor alone might fail (e.g., a dusty camera vs. a clean laser scan).
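The cross-validation idea mentioned above (confirming a BIM position via LiDAR) can be sketched as a simple tolerance check. This is a minimal illustration, assuming the LiDAR points are already registered to the BIM coordinate frame; the function names, coordinates, and the 0.05 m tolerance are hypothetical and do not reproduce any cited method.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def centroid(points: List[Point]) -> Point:
    """Mean position of a set of 3D points."""
    if not points:
        raise ValueError("at least one LiDAR point is required")
    n = len(points)
    return (
        sum(p[0] for p in points) / n,
        sum(p[1] for p in points) / n,
        sum(p[2] for p in points) / n,
    )


def deviation_m(bim_position: Point, lidar_points: List[Point]) -> float:
    """Distance in metres between the BIM position and the LiDAR centroid."""
    return math.dist(bim_position, centroid(lidar_points))


def position_confirmed(bim_position: Point, lidar_points: List[Point],
                       tolerance_m: float = 0.05) -> bool:
    """True if the measured centroid lies within the tolerance of the BIM position."""
    return deviation_m(bim_position, lidar_points) <= tolerance_m


# Hypothetical coordinates in metres.
bim_element = (2.0, 1.0, 1.5)
scan_ok = [(2.01, 1.00, 1.50), (1.99, 1.02, 1.49), (2.02, 0.99, 1.51)]
scan_shifted = [(x + 0.2, y, z) for (x, y, z) in scan_ok]

confirmed = position_confirmed(bim_element, scan_ok)
flagged = not position_confirmed(bim_element, scan_shifted)
```

A flagged element would be a natural candidate for review against a second data source, such as site photographs.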

Human-in-the-Loop Validation: Finally, despite increasing automation, expert oversight is essential. AI-generated results, especially those informing critical progress decisions or compliance, should be reviewed by trained personnel. Human reviewers can verify that any AI-extracted quantities match on-site reality and that recommended actions meet safety and code requirements. This human–AI partnership is important for building trust: as Afroogh et al. [18] note, “trust in AI” strongly influences whether organizations will adopt new systems. By keeping humans “in the loop,” errors can be caught, ethical or contractual issues considered, and accountability maintained. In practice, this means designing interfaces where AI suggestions are accompanied by evidence (e.g., annotated images and source references) and where final approvals still rest with engineers or managers. Such a workflow ensures that AI acts as a powerful assistant rather than an unchecked authority, which is crucial for safety and quality assurance on complex construction projects.
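One way such an approval workflow might be structured is sketched below: each AI suggestion carries its evidence, and only suggestions explicitly approved by a human reviewer are passed on. The class, function names, and example values are hypothetical and serve only to illustrate the principle.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Suggestion:
    """An AI-extracted quantity together with the evidence shown to the reviewer."""
    description: str
    quantity: float
    unit: str
    evidence: List[str]
    status: Status = Status.PENDING
    reviewer: Optional[str] = None


def review(suggestion: Suggestion, reviewer: str, approve: bool) -> Suggestion:
    """Record a human decision; suggestions without evidence cannot be approved."""
    if approve and not suggestion.evidence:
        raise ValueError("cannot approve a suggestion that has no supporting evidence")
    suggestion.status = Status.APPROVED if approve else Status.REJECTED
    suggestion.reviewer = reviewer
    return suggestion


def approved_only(suggestions: List[Suggestion]) -> List[Suggestion]:
    """Only human-approved suggestions may flow into progress reports."""
    return [s for s in suggestions if s.status is Status.APPROVED]


# Hypothetical example values.
wall = Suggestion("bricks laid, wall W1", 240, "pcs", ["photo_012.jpg (annotated)"])
roof = Suggestion("roof insulation installed", 35.0, "m2", ["photo_020.jpg (annotated)"])
review(wall, reviewer="site engineer", approve=True)
reportable = approved_only([wall, roof])
```

Here the roof suggestion stays pending and is excluded from the reportable list until someone reviews it, which keeps accountability with the engineer or manager.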

While general-purpose AI models show promising capabilities for multimodal analysis and preliminary resource estimation, they currently face substantial limitations when applied to specialized construction tasks. The literature indicates that measures such as RAG, improved data quality, and human-in-the-loop validation could be incorporated into this framework to enhance the reliability and contextual grounding of AI-based monitoring tools. These considerations, although beyond the scope of this exploratory study and suited for future research, highlight the gap between conceptual readiness and practical deployment that must be addressed before the outlined measures can be effectively applied in real-world construction projects.

5. Conclusions

This study provides a rigorous evaluation of the applicability of general-purpose multimodal AI for the automated monitoring and standardization of construction workflows. While prior research has highlighted the potential of AI-driven vision systems in construction, for example, in automated material detection, worker efficiency analysis, and real-time progress tracking, findings in this paper reveal both opportunities and critical limitations when applying a broad, generalist model in specialized industrial environments.

The results show that the AI model, although impressive in its capacity to detect basic construction entities and provide preliminary estimates of quantities (e.g., brick counts), falls short in delivering consistent, fine-grained results, particularly when compared against detailed, manually recorded benchmarks. This is consistent with the referenced studies, which emphasize that generalist AI models often suffer from the “static knowledge” problem: they lack up-to-date, domain-specific understanding and struggle with dynamic, high-variability site contexts without additional grounding mechanisms.

Critically, this work reinforces the argument made in recent literature that fine-tuning, retrieval-augmented generation (RAG), and multimodal integration are essential for achieving reliable AI performance in construction. Moreover, the study contributes empirical support to the growing consensus that high-quality data curation, not merely algorithmic sophistication, is foundational for successful AI implementation. The observed variability in AI’s performance underlines the need for meticulously structured, domain-specific training datasets, robust sensor integration (e.g., BIM, LiDAR, 360° cameras), and human-in-the-loop validation processes to ensure that AI outputs are both actionable and trustworthy in practice.

Theoretically, this study advances the understanding of AI in construction by providing one of the first empirical benchmarks of a general-purpose multimodal model on construction-specific tasks. It shows that the gap between generalist and domain-adapted AI is not merely technical but conceptual, underscoring that domain grounding, hybrid architectures, and curated datasets are essential theoretical prerequisites for reliable AI-driven workflow automation in construction.

In summary, while current AI models offer promising multimodal analytical capabilities, in their current form they are best suited as supportive or exploratory tools, rather than as standalone systems for automated construction progress monitoring, quantification, or norming. The findings strongly advocate for continued development toward hybrid systems that combine general-purpose AI models with domain-adapted layers, real-time retrieval systems, and multimodal data fusion. Such advancements are essential for meeting the precision, safety, and compliance demands of modern construction management and advancing toward truly data-driven, automated project delivery systems.

Author Contributions

Conceptualization, K.V. and Z.S.; Formal analysis, K.V. and Z.S.; Funding acquisition, I.Z.; Investigation, K.V.; Methodology, K.V., I.Z., M.M. and Z.S.; Project administration, I.Z.; Resources, Z.S., I.Z. and M.M.; Supervision, I.Z. and Z.S.; Validation, K.V.; Writing—original draft, K.V.; Writing—review and editing, Z.S., I.Z. and M.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research and the APC were funded by the European Union through the Competitiveness and Cohesion Operational Program, European Regional Development Fund, as a part of the project KK.01.1.1.07.0057 Development of automated resource standardization system for energy-efficient construction (NORMENG). The content of the publication is the sole responsibility of the University of Zagreb Faculty of Civil Engineering.

Data Availability Statement

All data generated or analyzed during this study are included in this published article. The underlying raw project data belong to the NORMENG project and are not publicly available due to project restrictions.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A. NORMENG Project Dataset (Sošice)

Figure A1. Plastering operations at the Sošice site (used in Section 3.1 Preliminary Testing).

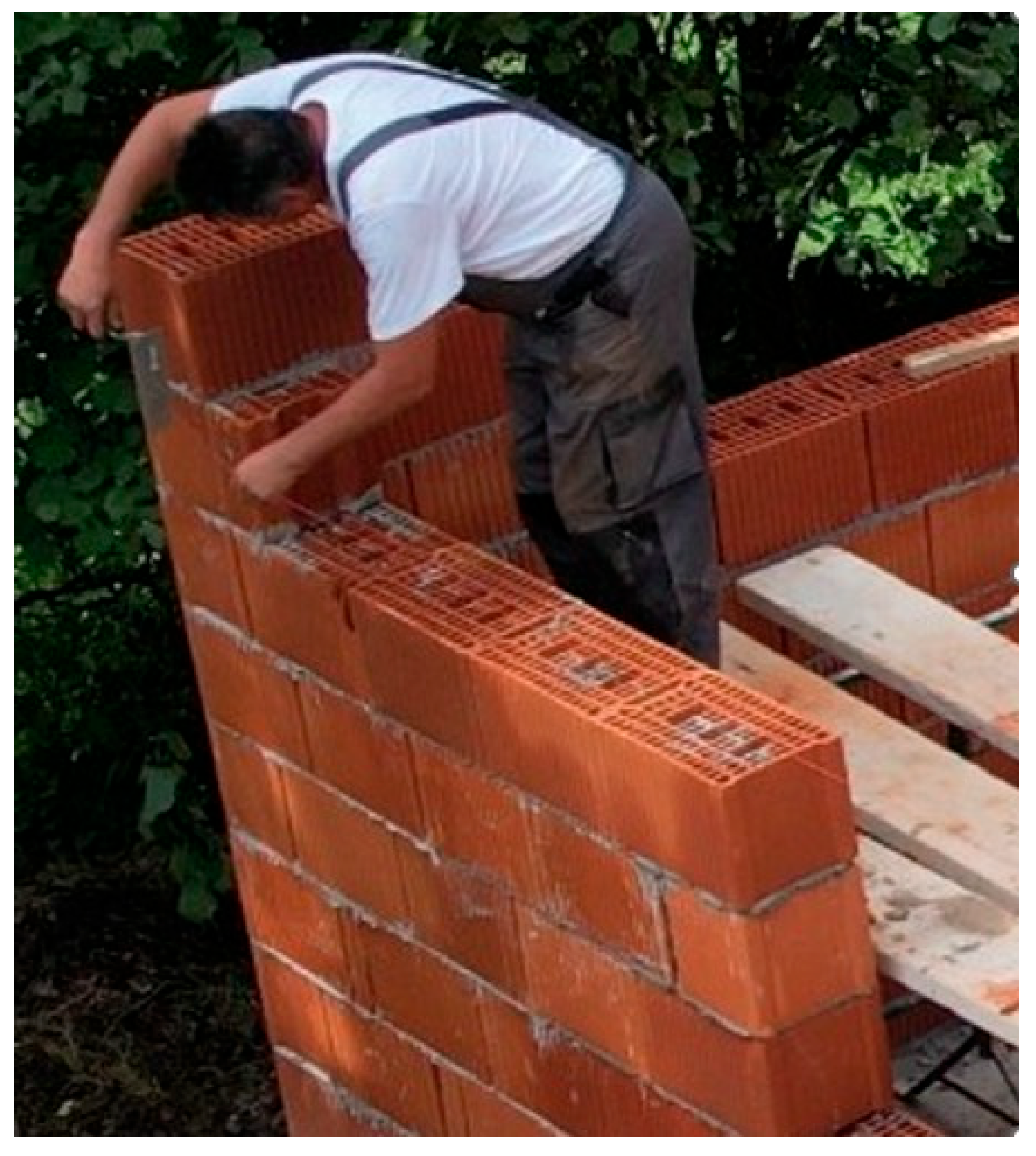

Figure A2. Roof insulation operations at the Sošice site (used in Section 3.1 Preliminary Testing).

Figure A3. AI-based brick wall detection at the Sošice site (used in Section 3.3 Quantity Monitoring—NORMENG pilot project).

Appendix B. Samoborska Site Dataset

Figure A4. AI-based brick wall detection at the Samoborska site (used in Section 3.2 Quantity Monitoring—Samoborska site).

Figure A5. AI-based brick wall detection at the Samoborska site (used in Section 3.2 Quantity Monitoring—Samoborska site).

Figure A6. AI-based brick wall detection at the Samoborska site (used in Section 3.2 Quantity Monitoring—Samoborska site).

Figure A7. AI-based brick wall detection at the Samoborska site (used in Section 3.2 Quantity Monitoring—Samoborska site).

References

1. Pan, Y.; Zhang, L. Integrating BIM and AI for smart construction management: Current status and future directions. Arch. Comput. Methods Eng. 2023, 30, 1081–1110.

2. Yang, J.; Park, M.-W.; Vela, P.A.; Golparvar-Fard, M. Construction performance monitoring via still images, time-lapse photos, and video streams: Now, tomorrow, and the future. Adv. Eng. Inform. 2015, 29, 211–224.

3. El-Omari, S.; Moselhi, O. Integrating 3D laser scanning and photogrammetry for progress measurement of construction work. Autom. Constr. 2008, 18, 1–9.

4. Guo, J.; Xia, B.; Wang, J.; Jiang, Y.; Huang, W. Post-COVID-19 recovery: An integrated framework of construction project performance evaluation in China. Systems 2023, 11, 359.

5. Ghansah, G.; Lu, W. Responses to the COVID-19 pandemic in the construction industry: A literature review of academic research. Constr. Manag. Econ. 2023, 41, 781–803.

6. Maglov, D.; Cerić, A.; Mihić, M.; Sigmund, Z.; Završki, I. Impact of automated resource standardization system for energy-efficient construction on sustainable development goals and education for civil engineers. In Proceedings of the 1st Joint Conference EUCEET and AECEF, Thessaloniki, Greece, 12 November 2021; pp. 121–128.

7. Chua, W.P.; Cheah, C.C. Deep-learning-based automated building construction progress monitoring for prefabricated prefinished volumetric construction. Sensors 2024, 24, 7074.

8. Li, Y.; Liu, C. Applications of multirotor drone technologies in construction management. Int. J. Constr. Manag. 2019, 19, 401–412.

9. Rashidi, A.; Sigari, M.H.; Maghiar, M.; Citrin, D. An analogy between various machine-learning techniques for detecting construction materials in digital images. KSCE J. Civ. Eng. 2015, 20, 1178–1188.

10. Han, K.K.; Golparvar-Fard, M. Appearance-based material classification for monitoring of operation-level construction progress using 4D BIM and site photologs. Autom. Constr. 2015, 53, 44–57.

11. Yoon, S.; Kim, H. Occlusion-aware worker detection in masonry work: Performance evaluation of YOLOv8 and SAMURAI. Appl. Sci. 2025, 15, 3991.

12. Zhou, J.; Ma, Z. Named entity recognition for construction documents based on fine-tuning of large language models with low-quality datasets. Autom. Constr. 2025, 174, 106151.

13. Lu, W.; Luu, R.K.; Buehler, M.J. Fine-tuning large language models for domain adaptation: Exploration of training strategies, scaling, model merging and synergistic capabilities. npj Comput. Mater. 2025, 11, 84.

14. Wang, H.; Zhang, D.; Li, J.; Feng, Z.; Zhang, F. Entropy-optimized dynamic text segmentation and RAG-enhanced LLMs for construction engineering knowledge base. Appl. Sci. 2025, 15, 3134.

15. Aqib, M.; Hamza, M.; Mei, Q.; Chui, Y.-H. Fine-tuning LLMs and evaluating retrieval for building-code QA. arXiv 2025, arXiv:2505.04666v1.

16. Vega-Torres, M.A.; Ribic, A.; García de Soto, B.; Borrmann, A. BIMCaP: BIM-based AI-supported LiDAR-camera pose refinement. arXiv 2024, arXiv:2412.03434v1.

17. Shinde, Y.; Lee, K.; Kiper, B.; Simpson, M.; Hasanzadeh, S. A systematic literature review on 360° panoramic applications in the AEC industry. ITcon J. Inf. Technol. Constr. 2023, 28, 405–437.

18. Afroogh, S.; Akbari, A.; Malone, E.; Kargar, M. Trust in AI: Progress, challenges, and future directions. Humanit. Soc. Sci. Commun. 2024, 11, 1568.

19. Završki, I.; Vuko, M.; Sigmund, Z.; Mihić, M. NORMENG—Automated Resource Standardization System for Energy-Efficient Construction: Project progress report. In Proceedings of the OTMC & IPMA SENET Joint Conference, Cavtat, Croatia, 21–24 September 2022.

20. Zhang, C.; Mao, C.; Liu, H.; Liao, Y.; Zhou, J. Moving toward automated construction management: An automated construction worker efficiency evaluation system. Buildings 2025, 15, 2479.

21. Magdy, R.; Hamdy, K.A.; Essawy, Y.A.S. Real-time progress monitoring of bricklaying. Buildings 2025, 15, 2456.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).