Assessment of Visual Effectiveness of Metro Evacuation Signage in Fire and Flood Scenarios: A VR-Based Eye-Movement Experiment

Abstract

1. Introduction

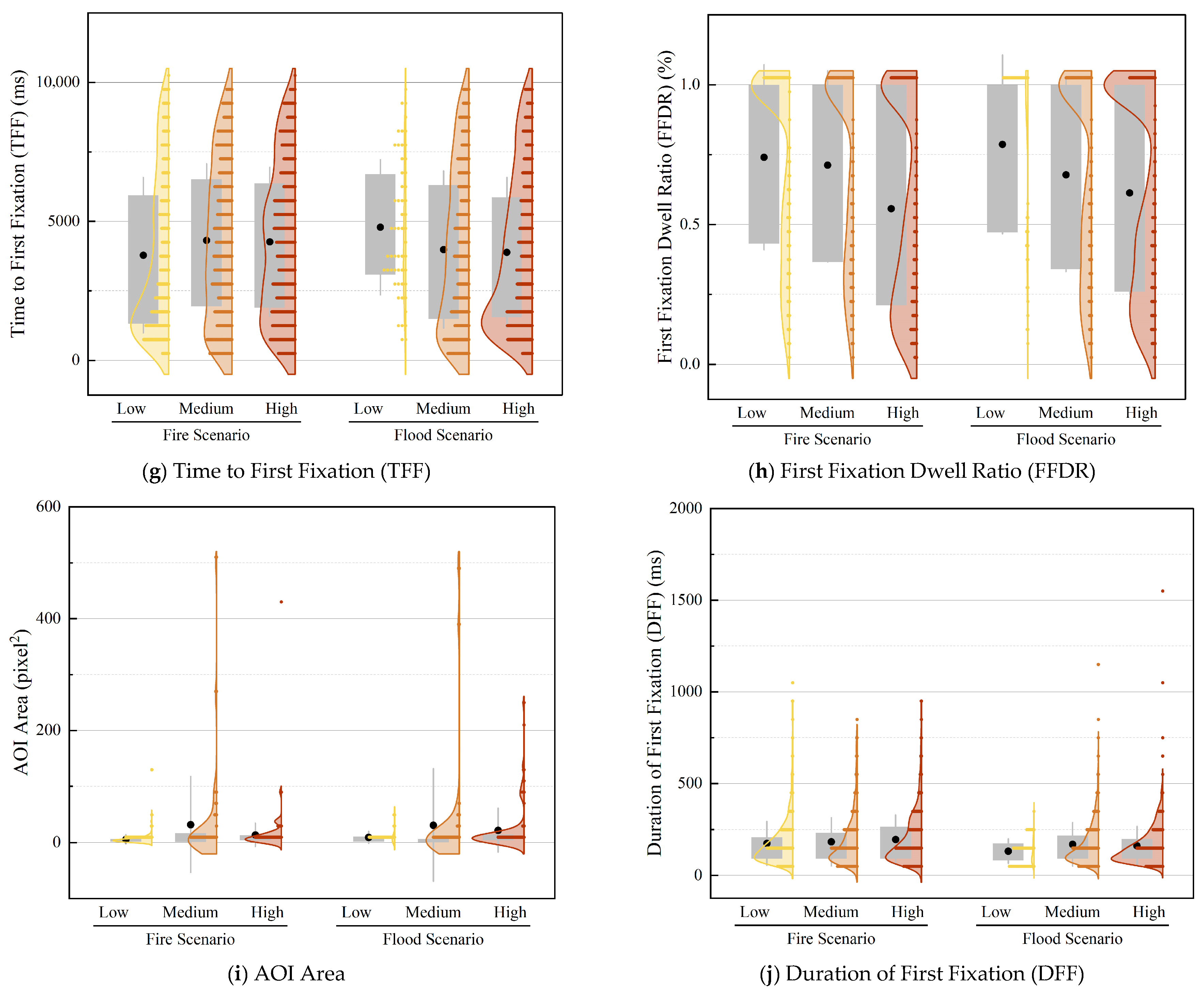

2. Theoretical Framework

2.1. The “Visual Behavior–Information Processing–Decision Formation” Cognitive Pathway

2.2. Evaluation Framework for Signage Effectiveness Based on “Importance–Immediacy”

3. Experimental Design and Data Collection

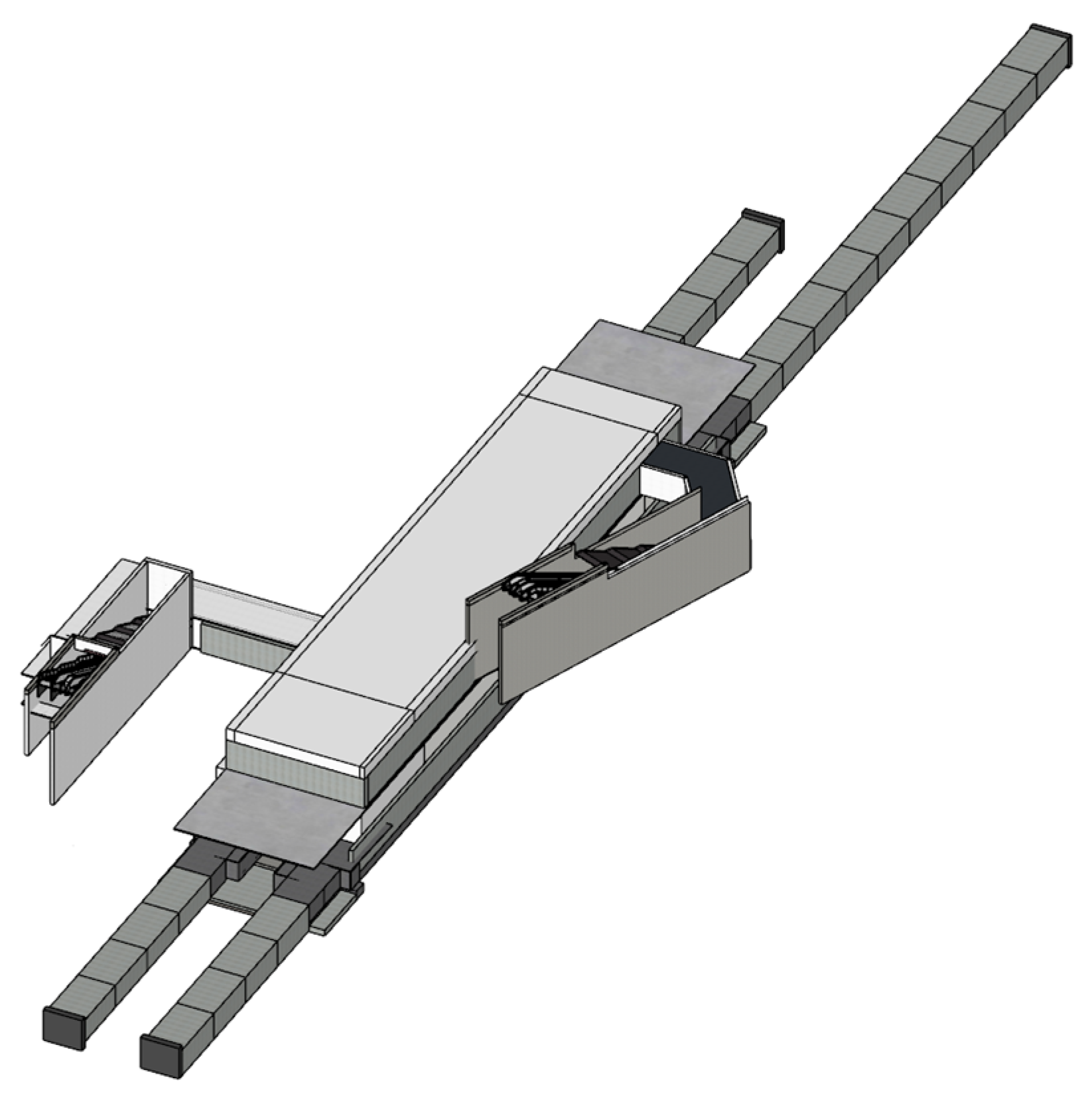

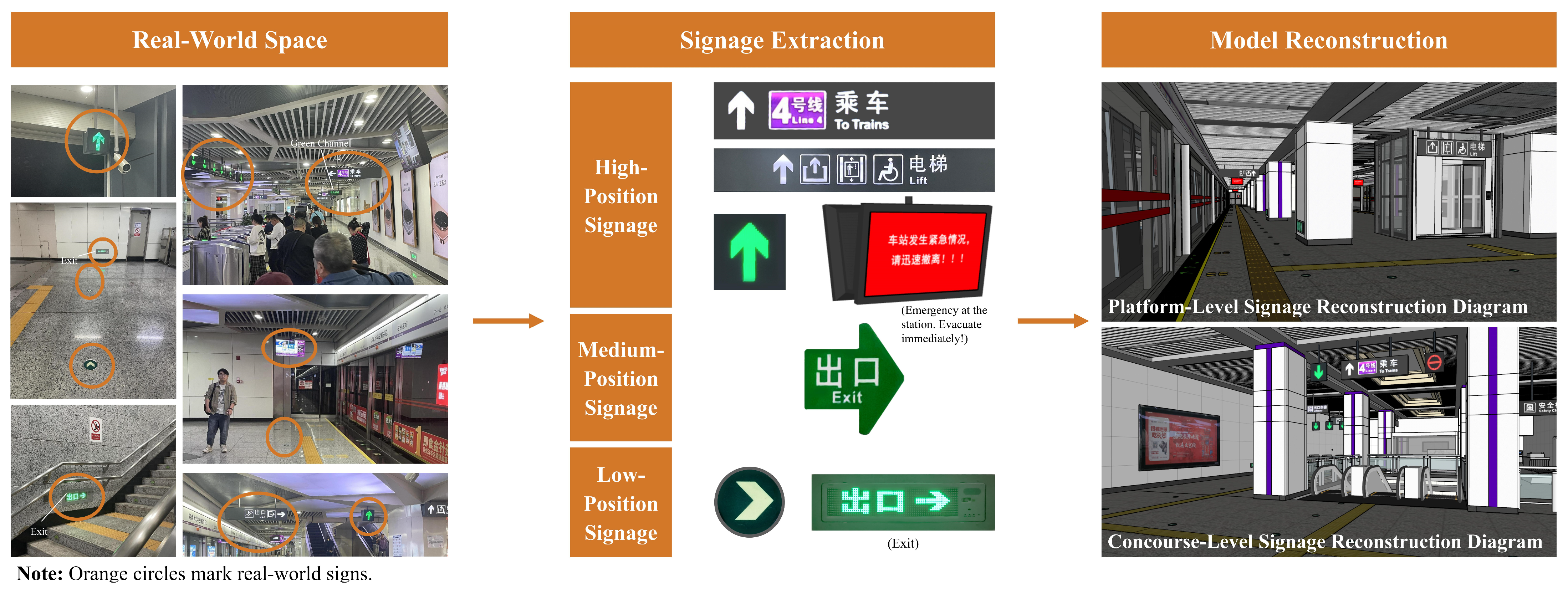

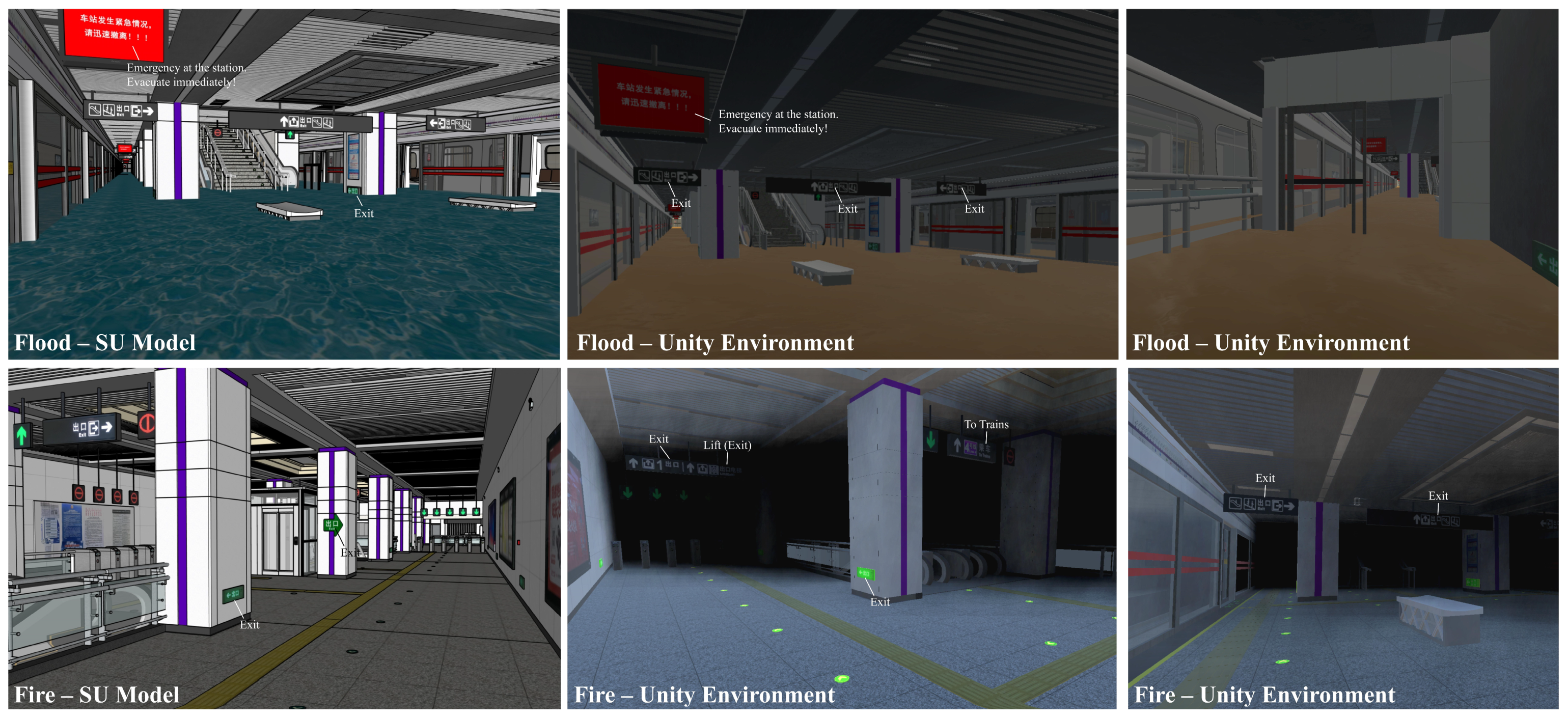

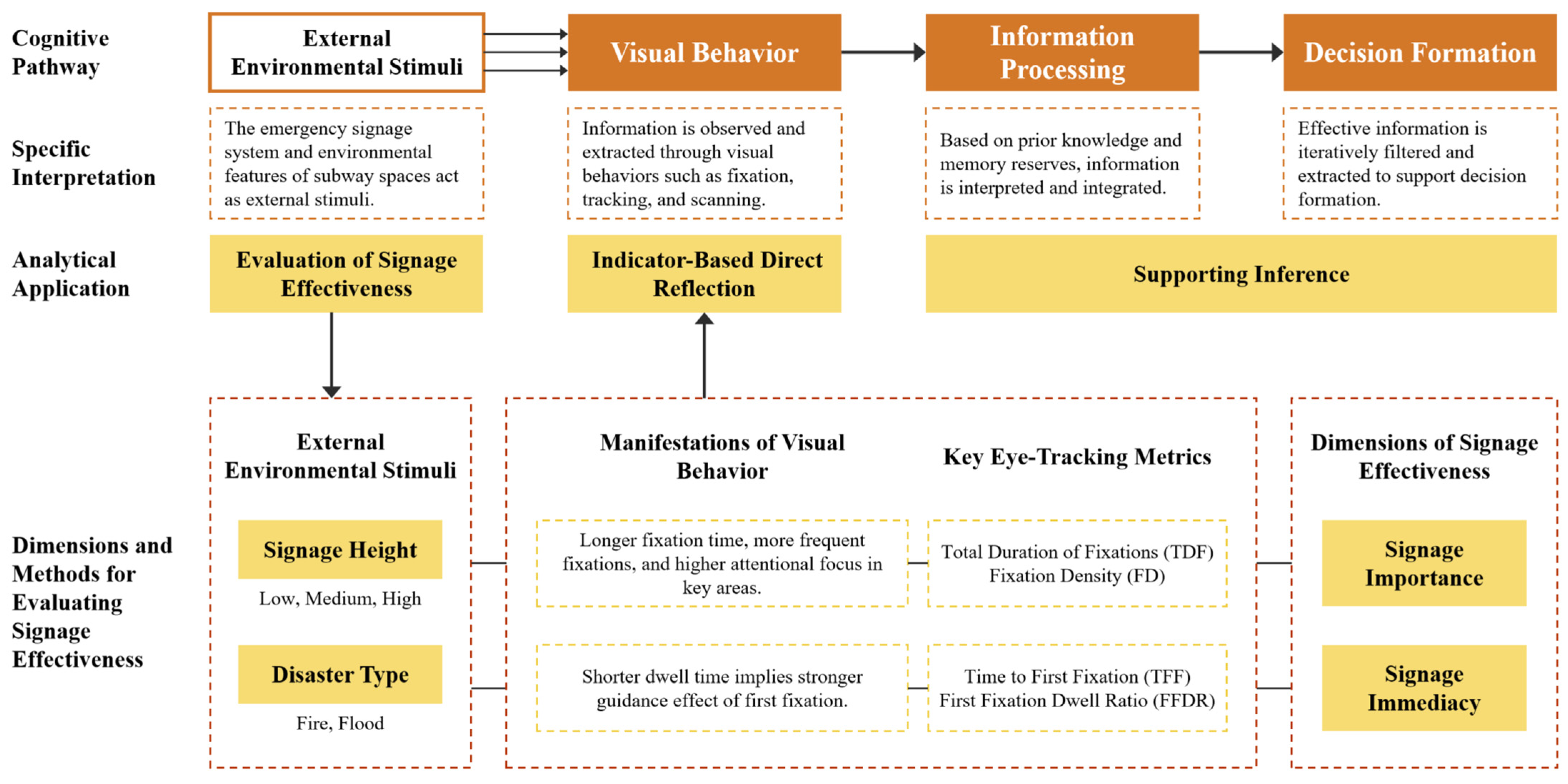

3.1. Construction of Virtual Disaster Simulation Scenarios

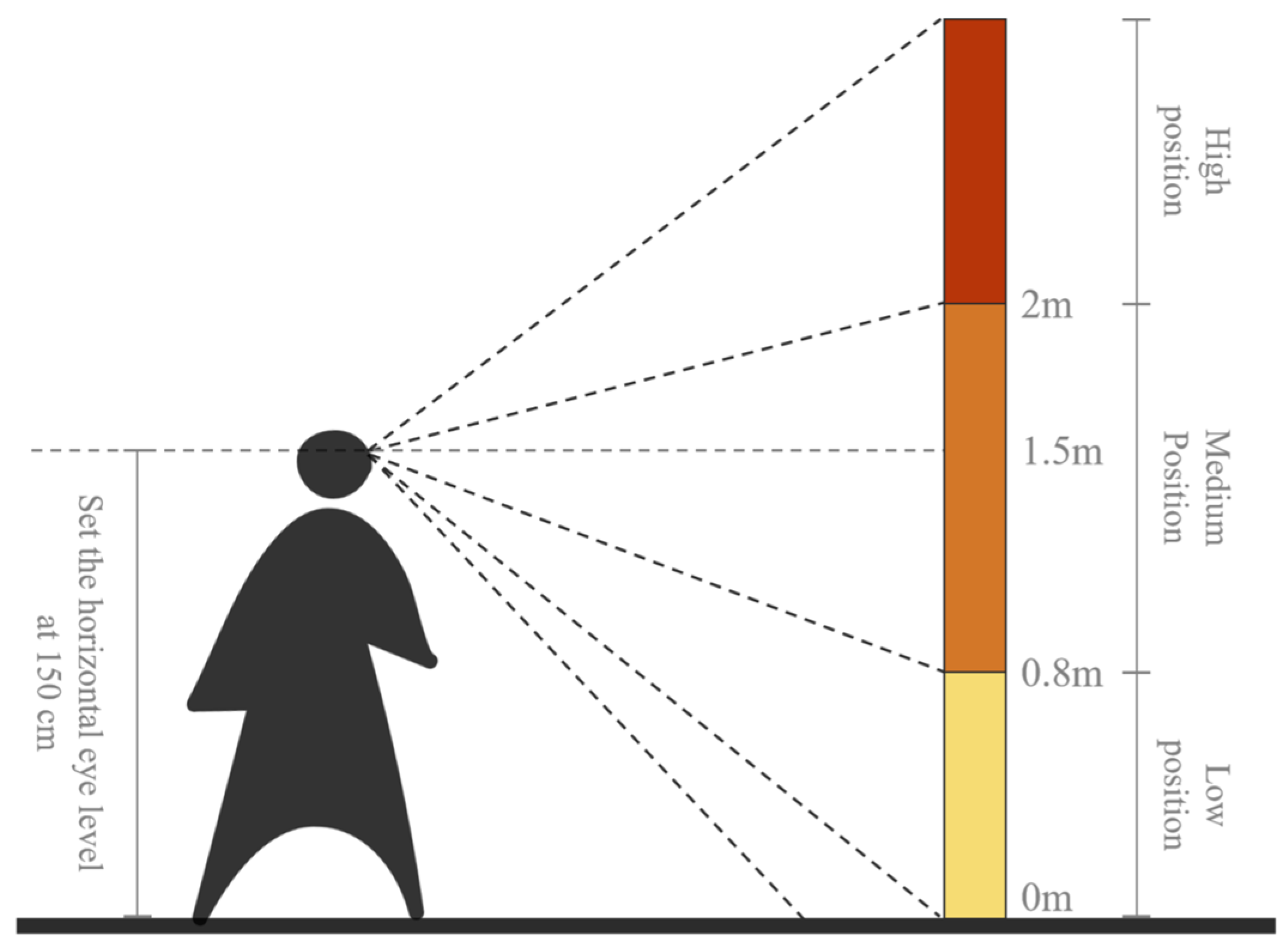

3.2. Experimental Design: Classification and Comparative Effectiveness of Signage Heights

- (1) Low Position: Signs placed on or near the floor, below the human eye line, approximately within 0–0.8 m above the ground.

- (2) Medium Position: Signs located within the natural eye-level range, approximately 0.8–2 m above the ground.

- (3) High Position: Signs positioned above the eye line, requiring occupants to look upward, at heights exceeding 2 m above the ground.
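The three height bands above can be expressed as a small classification rule. The sketch below is illustrative only. The function name `classify_sign_height` is hypothetical, and placing a sign at exactly 0.8 m or exactly 2.0 m in the medium band is an assumption, because the band definitions share their boundary values.

```python
def classify_sign_height(height_m: float) -> str:
    """Map a sign's mounting height (m above floor) to the Section 3.2 position class.

    Assumption: a sign at exactly 0.8 m or exactly 2.0 m is treated as "medium";
    the source bands (0-0.8 m, 0.8-2 m, >2 m) do not say which band owns the boundaries.
    """
    if height_m < 0:
        raise ValueError("mounting height cannot be negative")
    if height_m < 0.8:
        return "low"     # on or near the floor, below the eye line
    if height_m <= 2.0:
        return "medium"  # natural eye-level range
    return "high"        # above the eye line; occupants must look upward


# Example: one sign from each band.
for h in (0.3, 1.5, 2.6):
    print(f"{h:.1f} m -> {classify_sign_height(h)}")
```

The same rule would need only different cut points if the bands were refined, for example into the 0.5 m intervals suggested in Section 6.2.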

3.3. Experimental Procedure and Data Analysis

3.3.1. Experimental Procedure

- (1) Task Briefing. Before the experiment, each participant was informed of the task objective (i.e., quickly determining the correct evacuation direction) and received instructions on VR equipment use and safety precautions. After giving informed consent, participants were guided to stand within the designated experimental area.

- (2) Eye-Tracker Calibration. Once participants understood the experimental procedure, they put on the eye-tracking headset and completed a five-point calibration, a standard procedure in VR eye-tracking research [44]. Only after successful calibration did participants proceed to the formal experiment.

- (3) Dual-Scenario Experiment. Each panoramic image, whether from the fire or the flood scenario, was displayed for 10 s, giving participants sufficient time to observe the environment. Participants scanned the surroundings, used the displayed evacuation signage to identify the correct escape direction from that viewpoint, and then viewed a 4 s black screen that served as a buffer before the next image. Throughout the experiment, all participants remained in the same controlled environment; apart from the emergency alarm sound, ambient conditions were kept quiet to minimize external interference.
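The presentation protocol (10 s per panorama, then a 4 s black screen) fixes when each stimulus starts, and stimulus onset is the zero point for TFF. The following is a minimal sketch of that timeline, assuming images are shown back to back with no other gaps. The number of images is not given here, so the count of 3 below is arbitrary, and the names `build_schedule` and `locate` are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

DISPLAY_MS = 10_000  # each panorama is shown for 10 s (Section 3.3.1)
BLANK_MS = 4_000     # followed by a 4 s black-screen buffer


@dataclass(frozen=True)
class Trial:
    index: int      # position of the panorama in the presentation sequence
    onset_ms: int   # stimulus onset, the zero point for TFF
    offset_ms: int  # end of the 10 s display window


def build_schedule(n_images: int, start_ms: int = 0) -> list[Trial]:
    """Lay out n_images panoramas back to back, each followed by a blank screen."""
    trials = []
    t = start_ms
    for i in range(n_images):
        trials.append(Trial(i, t, t + DISPLAY_MS))
        t += DISPLAY_MS + BLANK_MS
    return trials


def locate(timestamp_ms: int, schedule: list[Trial]) -> tuple[int, int] | None:
    """Return (trial index, ms since onset) for a gaze sample, or None during a blank."""
    for trial in schedule:
        if trial.onset_ms <= timestamp_ms < trial.offset_ms:
            return trial.index, timestamp_ms - trial.onset_ms
    return None


schedule = build_schedule(3)       # 3 images is an arbitrary example count
print([t.onset_ms for t in schedule])
print(locate(15_500, schedule))    # a sample inside the second panorama
print(locate(11_000, schedule))    # a sample during the first black screen
```

Gaze samples that fall in a blank interval return `None`, so they can be dropped before any AOI metric is computed.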

3.3.2. Area of Interest Definition

3.3.3. Metric Selection

| Indicator Category | Indicator Name | Unit | Source | Definition and Calculation Formula |
|---|---|---|---|---|
| Perception Rate | Perception Rate (PR) | % | Calculation | Proportion of AOIs that were viewed. PR = (Number of effective (viewed) AOIs/Total AOIs) × 100%. |
| Signage Importance | Total Duration of Fixations (TDF) | ms | Exported from Tobii | Total time spent fixating within a single AOI. |
| | Average Duration of Fixations (ADF) | ms | Exported from Tobii | Average duration of a single fixation within an AOI. |
| | Number of Fixations (NF) | count | Exported from Tobii | Total number of fixation points within an AOI, indicating the depth of information processing. |
| | Number of Visits (NV) | count | Exported from Tobii | Number of times an AOI was visited, reflecting repeated attention to the signage. |
| | Fixation Density (FD) | count/pixel² | Calculation | Number of fixations per unit area of the AOI. FD = NF/AOI area. |
| Signage Immediacy | Time to First Fixation (TFF) | ms | Exported from Tobii | Time interval from stimulus onset to the first fixation on an AOI. |
| | First Fixation Dwell Ratio (FFDR) | % | Calculation | Ratio of the duration of the first fixation (DFF) to the total fixation duration (TDF) within the AOI, reflecting initial information capture efficiency. FFDR = DFF/TDF × 100%. |
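The calculated indicators (PR, FD, FFDR) are simple functions of per-AOI fixation records. The sketch below shows those formulas applied to a list of fixations for one AOI, assuming each fixation is stored as an onset (ms from stimulus onset) and a duration. In the study, TDF, ADF, NF and TFF came directly from the Tobii export, so this is only an illustration of the definitions, not the authors' pipeline. NV is omitted because it depends on how consecutive fixations are grouped into visits. "Viewed" in PR is taken to mean at least one fixation, which is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Fixation:
    onset_ms: float     # fixation start, measured from stimulus onset
    duration_ms: float  # fixation duration


def aoi_metrics(fixations: list[Fixation], aoi_area_px2: float) -> dict[str, float] | None:
    """Compute the fixation metrics for one AOI; returns None if it was never fixated."""
    if not fixations:
        return None
    ordered = sorted(fixations, key=lambda f: f.onset_ms)
    nf = len(ordered)                          # NF
    tdf = sum(f.duration_ms for f in ordered)  # TDF
    dff = ordered[0].duration_ms               # DFF, duration of the first fixation
    return {
        "TDF": tdf,
        "ADF": tdf / nf,
        "NF": nf,
        "FD": nf / aoi_area_px2,     # FD = NF / AOI area
        "TFF": ordered[0].onset_ms,  # stimulus onset -> first fixation
        "FFDR": dff / tdf * 100.0,   # FFDR = DFF / TDF x 100%
    }


def perception_rate(aois: list[list[Fixation]]) -> float:
    """PR = viewed AOIs / total AOIs x 100%, treating 'viewed' as >= 1 fixation."""
    viewed = sum(1 for fixations in aois if fixations)
    return viewed / len(aois) * 100.0


sign = [Fixation(1200, 200), Fixation(1500, 300)]
print(aoi_metrics(sign, aoi_area_px2=1000))
print(perception_rate([sign, [], sign, sign]))
```

In this toy example the first fixation lasts 200 ms out of 500 ms in total, so FFDR is 40%. A high FFDR therefore means most of the time spent on a sign was captured by the very first look.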

4. Experimental Results Analysis: Signage Importance and Immediacy

4.1. Normality Testing and Nonparametric Test Results

| Indicator | Test Dimension | Overall Comparison (Fire, Flood) (N = 4523): Test Value (H) | p | Within-Group Comparison (Fire Scenario) (N = 2447): Test Value (H) | p | Within-Group Comparison (Flood Scenario) (N = 2076): Test Value (H) | p |
|---|---|---|---|---|---|---|---|
| TDF | Overall Test (Low, Medium, High) | 130.454 | <0.001 *** | 165.997 | <0.001 *** | 26.447 | <0.001 *** |
| | Low vs. Medium | 147.390 | 0.019 * | 55.960 | 0.356 | 288.357 | 0.002 ** |
| | Low vs. High | −520.280 | <0.001 *** | −406.695 | <0.001 *** | −371.701 | <0.001 *** |
| | Medium vs. High | −372.889 | <0.001 *** | −350.734 | <0.001 *** | −83.344 | 0.007 ** |
| FD | Overall Test (Low, Medium, High) | 219.439 | <0.001 *** | 45.746 | <0.001 *** | 187.709 | <0.001 *** |
| | Low vs. Medium | 172.990 | 0.004 ** | 9.569 | 1.000 | 316.578 | <0.001 *** |
| | Low vs. High | 459.579 | <0.001 *** | 198.416 | <0.001 *** | 56.805 | 1.000 |
| | Medium vs. High | 632.568 | <0.001 *** | 207.985 | <0.001 *** | 373.383 | <0.001 *** |
| TFF | Overall Test (Low, Medium, High) | 7.369 | 0.025 * | 22.265 | <0.001 *** | 7.129 | 0.028 * |
| | Low vs. Medium | 139.848 | 0.029 * | 141.499 | <0.001 *** | −211.476 | 0.034 * |
| | Low vs. High | −115.585 | 0.067 | −137.203 | <0.001 *** | 220.567 | 0.023 * |
| | Medium vs. High | 24.263 | 1.000 | 4.295 | 1.000 | 9.090 | 1.000 |
| FFDR | Overall Test (Low, Medium, High) | 150.391 | <0.001 *** | 136.376 | <0.001 *** | 26.023 | <0.001 *** |
| | Low vs. Medium | −171.084 | 0.002 ** | −52.185 | 0.361 | −175.049 | 0.083 |
| | Low vs. High | 535.964 | <0.001 *** | 346.838 | <0.001 *** | 280.797 | 0.001 ** |
| | Medium vs. High | 364.880 | <0.001 *** | 294.653 | <0.001 *** | 105.748 | <0.001 *** |
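The table above reports an overall three-group nonparametric test followed by pairwise comparisons. The following stdlib-only sketch shows one common way to produce such numbers: a tie-corrected Kruskal-Wallis H followed by Dunn's pairwise test. It assumes, and the source does not state, that the pairwise "test values" are mean-rank differences of the kind Dunn-type post hoc tests report, and that the pairwise p-values were Bonferroni-adjusted (the p = 1.000 entries are consistent with that). With exactly three groups (df = 2), the chi-square p-value reduces to exp(−H/2), which is why the sketch is limited to three groups. The function names are hypothetical and the example data are toy values, not study measurements.

```python
from __future__ import annotations

import math
from itertools import combinations


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the mean of their sorted positions."""
    first: dict[float, int] = {}
    last: dict[float, int] = {}
    for pos, v in enumerate(sorted(values), start=1):
        first.setdefault(v, pos)
        last[v] = pos
    return [(first[v] + last[v]) / 2 for v in values]


def kruskal_dunn(groups: dict[str, list[float]]):
    """Tie-corrected Kruskal-Wallis H plus Bonferroni-adjusted Dunn pairwise tests."""
    if len(groups) != 3:
        raise ValueError("this sketch expects exactly three groups (low/medium/high)")
    labels = list(groups)
    pooled = [v for g in labels for v in groups[g]]
    n = len(pooled)
    ranks = average_ranks(pooled)

    # Mean rank per group (ranks follow the order of `pooled`).
    mean_rank, size, start = {}, {}, 0
    for g in labels:
        size[g] = len(groups[g])
        mean_rank[g] = sum(ranks[start:start + size[g]]) / size[g]
        start += size[g]

    # Tie term: sum of (t^3 - t) over each set of tied values.
    counts: dict[float, int] = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())

    h_raw = 12 / (n * (n + 1)) * sum(size[g] * mean_rank[g] ** 2 for g in labels) - 3 * (n + 1)
    h = h_raw / (1 - ties / (n ** 3 - n))
    p_h = math.exp(-h / 2)  # chi-square survival function for df = 2

    # Dunn's test: z = mean-rank difference / SE with tie-adjusted variance.
    var = n * (n + 1) / 12 - ties / (12 * (n - 1))
    n_pairs = 3
    pairwise = {}
    for a, b in combinations(labels, 2):
        diff = mean_rank[a] - mean_rank[b]
        z = diff / math.sqrt(var * (1 / size[a] + 1 / size[b]))
        p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
        pairwise[(a, b)] = (diff, z, min(1.0, p * n_pairs))  # Bonferroni
    return h, p_h, pairwise


# Toy data only; not the study's measurements.
h, p, pairs = kruskal_dunn({"low": [1, 2, 3], "medium": [4, 5, 6], "high": [7, 8, 9]})
print(round(h, 3), round(p, 4), pairs[("low", "high")])
```

A negative pairwise difference means the first-named position has the lower mean rank, which matches the sign convention of entries such as "Low vs. High".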

| Indicator | Overall Comparison (Low, Medium, High) (N = 4523): Test Value (Z) | p | Low Position (N = 975): Test Value (Z) | p | Medium Position (N = 2088): Test Value (Z) | p | High Position (N = 1460): Test Value (Z) | p |
|---|---|---|---|---|---|---|---|---|
| TDF | −1.586 | 0.113 | −3.141 | 0.002 ** | −2.392 | 0.017 * | −3.351 | <0.001 *** |
| FD | −3.180 | 0.001 ** | −2.864 | 0.004 ** | −2.362 | 0.018 * | −3.875 | <0.001 *** |
| TFF | −1.837 | 0.066 | −3.141 | 0.002 ** | −2.392 | 0.017 * | −3.351 | <0.001 *** |
| FFDR | −2.403 | 0.016 * | −1.081 | 0.280 | −1.898 | 0.058 | −3.737 | <0.001 *** |
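The fire-versus-flood comparisons above are two-sample tests reported as Z statistics. The sketch below computes a Mann-Whitney U test with the tie-corrected normal approximation and no continuity correction, which is one standard way to obtain such a Z. It is an assumption that this matches the authors' software settings. The source does not say which sample was entered first. Because every Z in the table is negative, the software may have reported the statistic for the smaller U, so the sign here depends on argument order. The function name is hypothetical and the data are toy values.

```python
from __future__ import annotations

import math


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the mean of their sorted positions."""
    first: dict[float, int] = {}
    last: dict[float, int] = {}
    for pos, v in enumerate(sorted(values), start=1):
        first.setdefault(v, pos)
        last[v] = pos
    return [(first[v] + last[v]) / 2 for v in values]


def mann_whitney_z(x: list[float], y: list[float]) -> tuple[float, float, float]:
    """Return (U for x, normal-approximation Z, two-sided p), tie-corrected."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2  # U statistic for sample x

    counts: dict[float, int] = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())

    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1))))
    z = (u1 - n1 * n2 / 2) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return u1, z, p


# Toy example: hypothetical TDF values (ms) for one sign position in each scenario.
fire = [420.0, 610.0, 380.0, 905.0, 512.0]
flood = [210.0, 330.0, 298.0, 415.0, 260.0]
print(mann_whitney_z(fire, flood))
```

With samples of several hundred observations per position, as in this study, the normal approximation is generally considered adequate.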

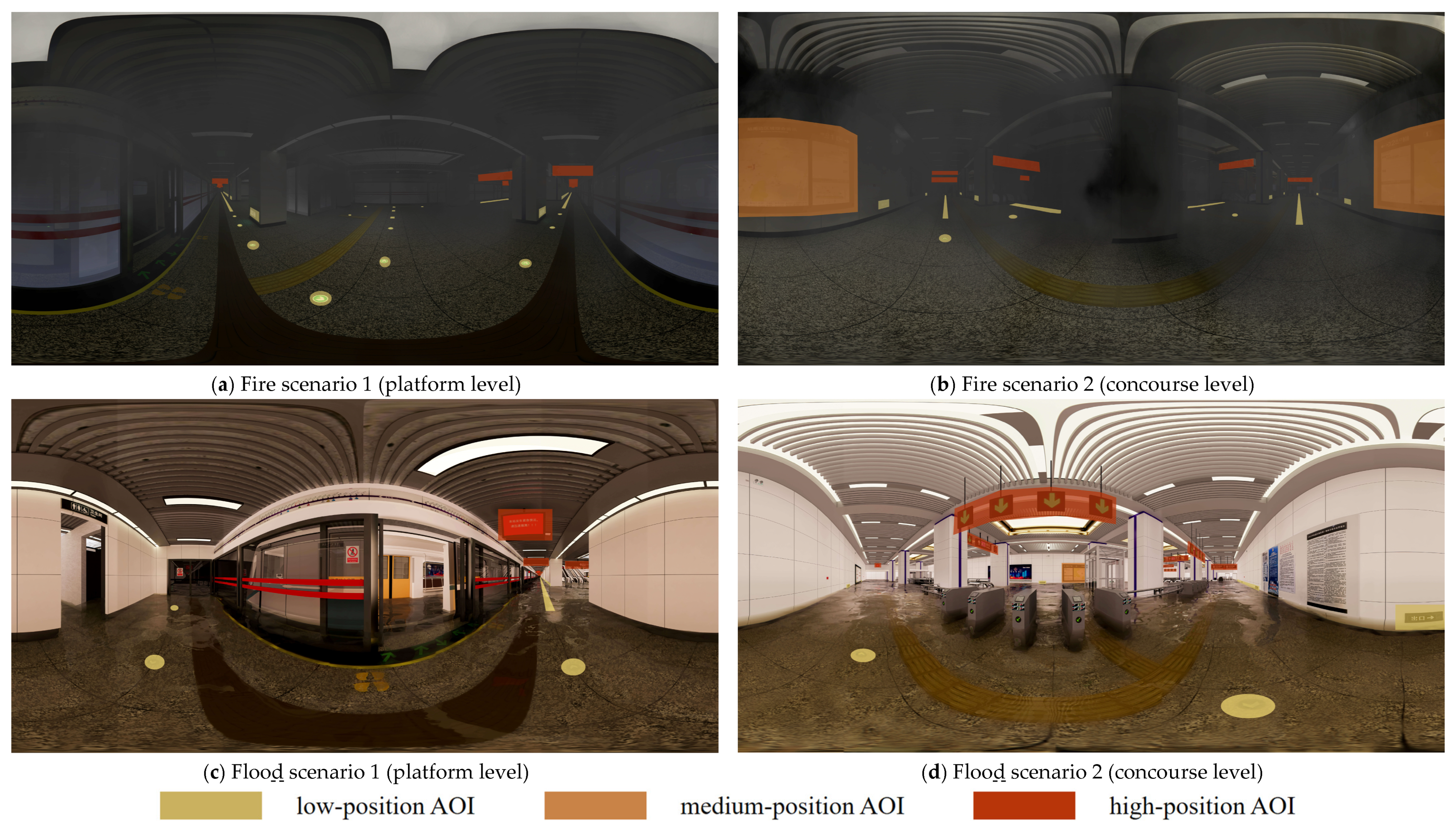

4.2. Comparative Analysis of Data for Different Signage Positions Under Fire and Flood Scenarios

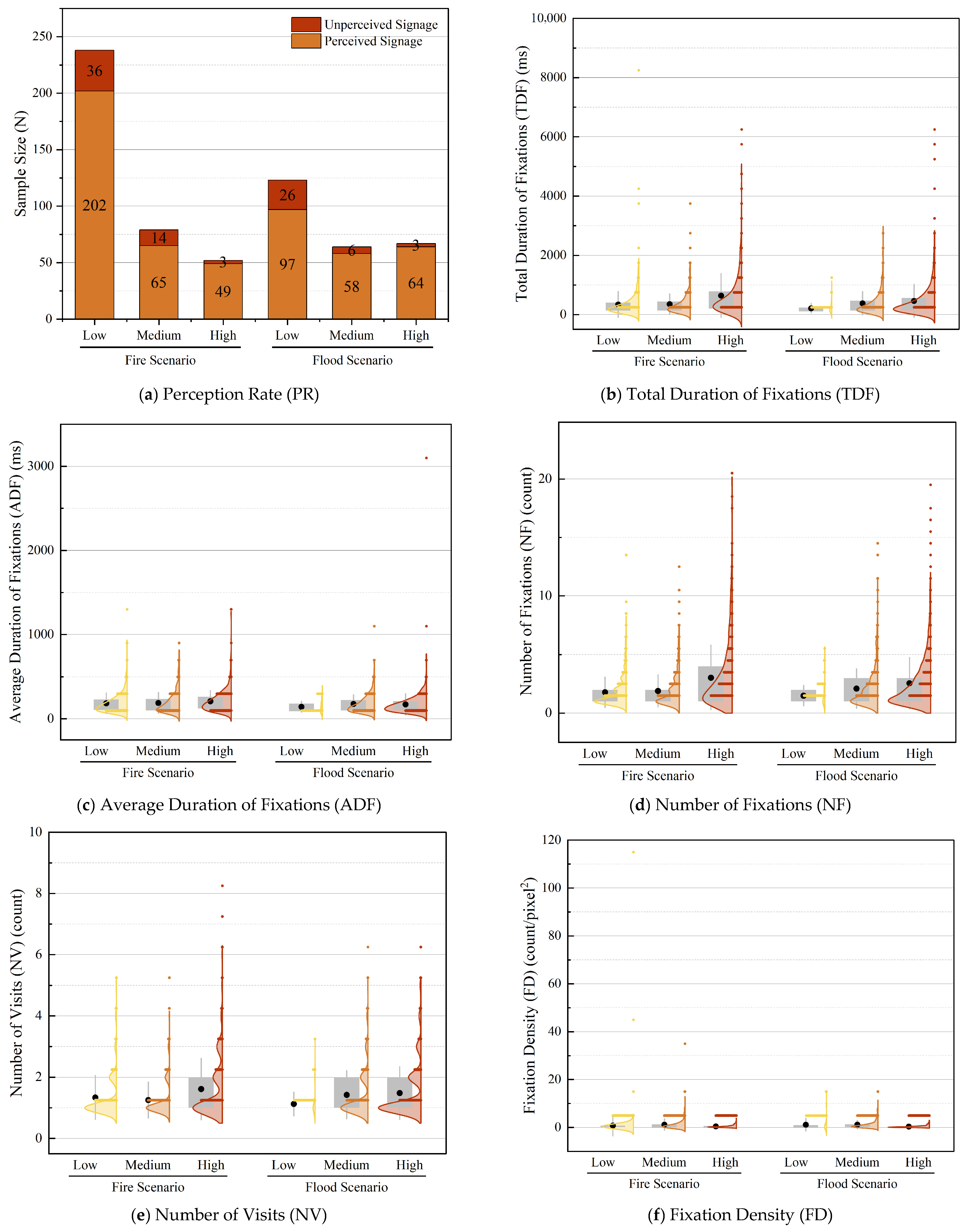

4.2.1. Analysis of Perception Rate Differences

4.2.2. Analysis of Signage Importance Differences

4.2.3. Analysis of Signage Immediacy Differences

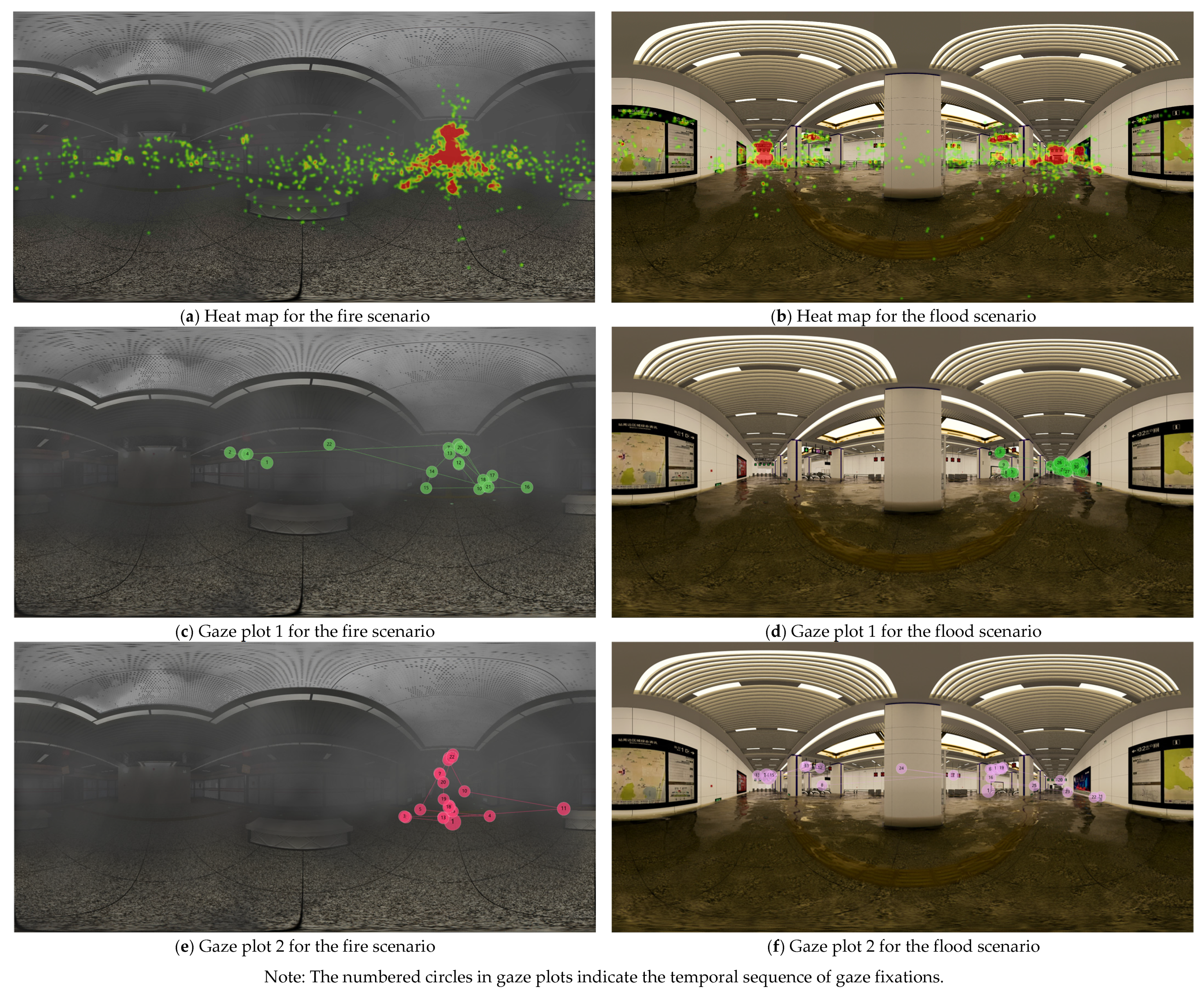

4.3. Analysis of Heat Maps and Gaze Plots

5. Optimization Strategies for Subway Emergency Evacuation Signage

5.1. Common Optimization Strategies

5.2. Hierarchical Adaptation Strategies

6. Conclusions and Discussion

6.1. Conclusions

- (1) Low-position signage (0–0.8 m) shows significant advantages in immediacy and is best suited to concise, visually salient emergency-response signage. In the fire scenario, low-position signs had the shortest TFF and the highest FFDR, indicating rapid information acquisition. In the flood scenario, however, turbid water notably impaired their visual accessibility. To mitigate this, low-position signage should incorporate waterproof and anti-fouling coatings and high-brightness LED light sources to improve resistance to environmental interference.

- (2) Medium-position signage (0.8–2 m) shows balanced performance across the importance and immediacy dimensions. It achieved high FD and moderate values on the other indicators, suggesting that signage at this height should serve as a transitional guidance node between low- and high-position signage, carrying intermediate directional cues that balance importance, immediacy, and accuracy. Medium-position signage is also suitable for switchable emergency guidance systems aligned with the “peacetime-emergency integration” concept.

- (3) High-position signage (>2 m) performs best on the importance dimension, achieving the highest TDF, NF, and PR, which indicates that it is suited to conveying global, multi-level evacuation information. In practice, high-temperature-resistant translucent materials, phosphorescent guidance devices, and strobe lighting may enhance its visibility under fire conditions and thereby improve the perceptual efficiency of core evacuation information.
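These conclusions rest on comparing position means from the descriptive statistics table (PR, TDF, NF, FD, TFF and FFDR for each scenario). The following is a minimal sketch that encodes those reported means and picks the best-scoring position for each indicator. It assumes that lower is better only for TFF and higher is better for every other indicator. That rule is an assumption of this sketch, not one stated by the authors. The sketch also compares means only and ignores the significance tests in Section 4.1. The names `MEANS` and `best_position` are hypothetical.

```python
# Position means copied from the descriptive statistics table (PR and FFDR in %).
MEANS = {
    "fire": {
        "PR":   {"low": 84.87, "medium": 82.28, "high": 94.23},
        "TDF":  {"low": 342.58, "medium": 354.83, "high": 645.22},
        "NF":   {"low": 1.79, "medium": 1.90, "high": 3.03},
        "FD":   {"low": 0.82, "medium": 1.24, "high": 0.49},
        "TFF":  {"low": 3786.32, "medium": 4316.71, "high": 4265.93},
        "FFDR": {"low": 74.08, "medium": 71.26, "high": 55.71},
    },
    "flood": {
        "PR":   {"low": 78.86, "medium": 90.63, "high": 95.52},
        "TDF":  {"low": 214.45, "medium": 383.32, "high": 463.26},
        "NF":   {"low": 1.49, "medium": 2.10, "high": 2.56},
        "FD":   {"low": 1.19, "medium": 1.20, "high": 0.39},
        "TFF":  {"low": 4791.24, "medium": 3987.44, "high": 3889.01},
        "FFDR": {"low": 78.70, "medium": 67.80, "high": 61.36},
    },
}

# Assumption: a shorter time to first fixation is better; all other indicators favour larger values.
LOWER_IS_BETTER = {"TFF"}


def best_position(scenario: str, metric: str) -> str:
    """Return the sign position with the most favourable mean for one indicator."""
    values = MEANS[scenario][metric]
    pick = min if metric in LOWER_IS_BETTER else max
    return pick(values, key=values.get)


for scenario in MEANS:
    summary = {metric: best_position(scenario, metric) for metric in MEANS[scenario]}
    print(scenario, summary)
```

On the reported means, the low position wins TFF in the fire scenario but the high position wins it in the flood scenario. This is consistent with conclusion (1) limiting the shortest-TFF claim to fire.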

6.2. Discussion

- (1) The methodology of this study should be extended to other types of disaster scenarios to examine how far signage performance generalizes and how it varies across environments.

- (2) The current vertical classification of signage (low, medium, high) could be refined further, for instance into 0.5 m intervals. In addition, horizontal positioning was not considered, which may have affected the observed differences among vertical positions. Future studies should incorporate both vertical and horizontal spatial dimensions for a more comprehensive analysis.

- (3) The sample was limited to university students aged 18–23, who may not represent people of other ages, social groups, or cultural backgrounds. These factors could influence signage perception and limit the applicability of the conclusions to groups with different wayfinding habits. Future research should recruit a broader sample.

- (4) This study was conducted in idealized, unoccupied environments and did not simulate the effect of crowd congestion on the signage system. In crowded scenes, low-position signage may be obscured, substantially reducing its effectiveness, which is a critical issue in real evacuations. High-position signage, by contrast, is less likely to be blocked by occupants and would be expected to remain visible at higher crowd densities. In crowded situations, therefore, high-position signage should be prioritized, with medium-position signage serving as a supplementary guide.

- (5) Because of equipment and technical limitations, eye-tracking data were collected only on static panoramic images. The experiment therefore could not reproduce the dynamic, real-time factors of actual emergencies, which reduced the realism and sense of urgency of the experimental environment. Although VR can overcome physical constraints and simulate complex scenarios that are difficult to stage in reality, it only approximates real scenes and cannot fully reproduce the panic and dynamic behaviors that occur during emergencies; this difference may affect how visual attention is allocated. Future research should explore methods for real-time monitoring in dynamic environments. In addition, quantifying how smoke density in fire scenarios and water turbidity in flood scenarios affect signage effectiveness and visual accessibility would allow a more rigorous evaluation of signage performance under actual disaster conditions.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| VR | Virtual Reality |
| AOI | Area of Interest |
| PR | Perception Rate |
| TDF | Total Duration of Fixations |
| ADF | Average Duration of Fixations |
| NF | Number of Fixations |
| NV | Number of Visits |
| FD | Fixation Density |
| TFF | Time to First Fixation |
| FFDR | First Fixation Dwell Ratio |
| DFF | Duration of First Fixation |

References

- Li, Y.; Xu, X.; Hou, S.; Dang, X.; Li, Z.; Gong, Y. Flood risk management-level analysis of subway station spaces. Water 2025, 17, 1084.

- Jin, B.; Wang, J.; Wang, Y.; Gu, Y.; Wang, Z. Temporal and spatial distribution of pedestrians in subway evacuation under node failure by multi-hazards. Saf. Sci. 2020, 127, 104695.

- The State Council Investigation Team. Investigation Report on the Extremely Heavy Rainfall Disaster in Zhengzhou, Henan on July 20 [R/OL]. Available online: https://www.mem.gov.cn/gk/sgcc/tbzdsgdcbg/202201/P020220121639049697767.pdf (accessed on 18 April 2025). (In Chinese)

- New York Post. Flash Flooding Causes Mayhem on NYC Streets and Subways [EB/OL]. Available online: https://nypost.com/2021/09/01/nyc-streets-subway-stations-overrun-by-flash-floods/ (accessed on 18 April 2025).

- Liao, P.; Chen, L.; Liang, Z.; Huang, Y.; Chen, H.; Sun, L. Investigating the optimal scale for subway station hall designs based on psychological perceptions and eye-tracking methods. J. Build. Eng. 2025, 103, 112175.

- Zhang, M.; Xu, R.; Siu, M.F.F.; Luo, X. Human decision change in crowd evacuation: A virtual reality-based study. J. Build. Eng. 2023, 68, 106041.

- Fu, M.; Liu, R.; Liu, Q. How individuals sense environments during indoor emergency wayfinding: An eye-tracking investigation. J. Build. Eng. 2023, 79, 107854.

- Fan, R.; Dai, Z.; Tian, S.; Xia, T.; Huang, C. Research on spatial information transmission efficiency and capability of safety evacuation signs. J. Build. Eng. 2023, 71, 106448.

- Luo, S.; Shi, J.; Lu, T.; Furuya, K. Sit down and rest: Use of virtual reality to evaluate preferences and mental restoration in urban park pavilions. Landsc. Urban Plan. 2022, 220, 104336.

- Kim, J.H.; Kim, M.; Park, M.; Yoo, J. Immersive interactive technologies and virtual shopping experiences: Differences in consumer perceptions between augmented reality (AR) and virtual reality (VR). Telemat. Inform. 2023, 77, 101936.

- Wang, Z.; He, R.; Rebelo, F.; Vilar, E.; Noriega, P. Human interaction with virtual reality: Investigating pre-evacuation efficiency in building emergency. Virtual Real. 2023, 27, 1039–1050.

- Vukelic, G.; Ogrizovic, D.; Bernecic, D.; Glujic, D.; Vizentin, G. Application of VR technology for maritime firefighting and evacuation training—A review. J. Mar. Sci. Eng. 2023, 11, 1732.

- Moreno-Arjonilla, J.; López-Ruiz, A.; Jiménez-Pérez, J.R.; Callejas-Aguilera, J.E.; Jurado, J.M. Eye-tracking on virtual reality: A survey. Virtual Real. 2024, 28, 38.

- Zhang, X.; Chen, L.; Jiang, J.; Ji, Y.; Han, S.; Zhu, T.; Xu, W.; Tang, F. Risk analysis of people evacuation and its path optimization during tunnel fires using virtual reality experiments. Tunn. Undergr. Space Technol. 2023, 137, 105133.

- Wang, Z.; Mao, Z.; Li, Y.; Yu, L.; Zou, L. VR-based fire evacuation in underground rail station considering staff’s behaviors: Model, system development and experiment. Virtual Real. 2023, 27, 1145–1155.

- Xia, X.; Chen, J.; Zhang, J.; Li, N. How the strength of social relationship affects pedestrian evacuation behavior: A multi-participant fire evacuation experiment in a virtual metro station. Transp. Res. Part C Emerg. Technol. 2024, 167, 104805.

- Xie, H.; Galea, E.R. A survey-based study concerning public comprehension of two-component EXIT/NO-EXIT signage concepts. Fire Mater. 2022, 46, 876–887.

- Wong, L.T.; Lo, K.C. Experimental study on visibility of exit signs in buildings. Build. Environ. 2007, 42, 1836–1842.

- Nilsson, D.; Frantzich, H.; Saunders, W.L. Coloured Flashing Lights to Mark Emergency Exits-Experiences from Evacuation Experiments. Fire Saf. Sci. 2005, 8, 569–579.

- Ding, N. The effectiveness of evacuation signs in buildings based on eye tracking experiment. Nat. Hazards 2020, 103, 1201–1218.

- Chen, N.; Zhao, M.; Gao, K.; Zhao, J. The physiological experimental study on the effect of different color of safety signs on a virtual subway fire escape—An exploratory case study of zijing mountain subway station. Int. J. Environ. Res. Public Health 2020, 17, 5903.

- Tonikian, R.; Proulx, G.; Bénichou, N.; Reid, I. Literature review on photoluminescent material used as a safety wayguidance system. PLM V6-2 2006, 1, 3–31.

- Kobes, M.; Helsloot, I.; De Vries, B.; Post, J.G.; Oberijé, N.; Groenewegen, K. Way finding during fire evacuation; an analysis of unannounced fire drills in a hotel at night. Build. Environ. 2010, 45, 537–548.

- Olander, J.; Ronchi, E.; Lovreglio, R.; Nilsson, D. Dissuasive exit signage for building fire evacuation. Appl. Ergon. 2017, 59, 84–93.

- Liu, Z.; Zou, R. Dynamic evacuation path planning for subway station fire based on IACO. J. Build. Eng. 2024, 86, 108828.

- Zhang, N.; Liang, Y.; Zhou, C.; Niu, M.; Wan, F. Study on fire smoke distribution and safety evacuation of subway station based on BIM. Appl. Sci. 2022, 12, 12808.

- Cao, S.; Wang, M.; Zeng, G.; Li, X. Simulation of crowd evacuation in subway stations under flood disasters. IEEE Trans. Intell. Transp. Syst. 2024, 25, 11858–11867.

- Feng, J.R.; Gai, W.; Yan, Y. Emergency evacuation risk assessment and mitigation strategy for a toxic gas leak in an underground space: The case of a subway station in Guangzhou, China. Saf. Sci. 2021, 134, 105039.

- Zhang, Y.; Chen, X.; Jiang, J. Wayfinding-oriented design for passenger guidance signs in large-scale transit center in China. Transp. Res. Rec. 2010, 2144, 150–160.

- Hu, X.; Xu, L. How Guidance Signage Design Influences Passengers’ Wayfinding Performance in Metro Stations: Case Study of a Virtual Reality Experiment. Transp. Res. Rec. 2023, 2677, 1118–1129.

- Wang, K.; Shen, C.; Li, M.; Li, J. Research on Users’ Willingness to Use the Urban Subway Wayfinding Signage System Based on the DeLone & McLean Model Theory: A Case Study of Wuxi Subway. Systems 2024, 12, 529.

- Zhou, M.; Dong, H.; Wang, X.; Hu, X.; Ge, S. Modeling and simulation of crowd evacuation with signs at subway platform: A case study of Beijing subway stations. IEEE Trans. Intell. Transp. Syst. 2022, 23, 1492–1504.

- Cavanagh, P. Visual cognition. Vis. Res. 2011, 51, 1538–1551.

- Wickens, C.D.; Carswell, C.M. Information processing. In Handbook of Human Factors and Ergonomics; John Wiley & Sons: Hoboken, NJ, USA, 2021; pp. 114–158.

- Schneck, C.M. Visual perception. Occup. Ther. Child. 2005, 3, 357–386.

- Bitzer, S.; Park, H.; Maess, B.; Von Kriegstein, K.; Kiebel, S.J. Representation of perceptual evidence in the human brain assessed by fast, within-trial dynamic stimuli. Front. Hum. Neurosci. 2020, 14, 9.

- Hasanzadeh, S.; Esmaeili, B.; Dodd, M.D. Examining the relationship between construction workers’ visual attention and situation awareness under fall and tripping hazard conditions: Using mobile eye tracking. J. Constr. Eng. Manag. 2018, 144, 04018060.

- Guo, F.; Ding, Y.; Liu, W.; Liu, C.; Zhang, X. Can eye-tracking data be measured to assess product design?: Visual attention mechanism should be considered. Int. J. Ind. Ergon. 2016, 53, 229–235.

- Carter, B.T.; Luke, S.G. Best practices in eye tracking research. Int. J. Psychophysiol. 2020, 155, 49–62.

- Chen, Y.; Jia, J.; Che, G. Simulation of large-scale tunnel belt fire and smoke characteristics under a water curtain system based on CFD. ACS Omega 2022, 7, 40419–40431.

- Wan, Z.; Zhou, T.; Luo, N. A Literature Review on Layout of Emergency Evacuation Signs of Exhibition Hall in Exhibition and Convention Center Based on Visibility. Build. Sci. 2020, 36, 160–168. (In Chinese)

- Wan, Z.; Zhou, T.; Xiong, J.; Pan, G. Smart Safety Design for Evacuation Signs in Large Space Buildings Based on Height Setting and Visual Range of Evacuation Signs. Buildings 2024, 14, 2875.

- Shi, J.; Ding, N.; Zhang, Z. Evacuation Decision-Making in Fire Situation: Base on Eye Movement Experiment in Physical and Virtual Environments. Case Stud. Therm. Eng. 2025, 71, 106148.

- Tang, Z.; Liu, X.; Huo, H.; Tang, M.; Qiao, X.; Chen, D.; Dong, Y.; Fan, L.; Wang, J.; Du, X.; et al. Eye movement characteristics in a mental rotation task presented in virtual reality. Front. Neurosci. 2023, 17, 1143006.

- Li, X.; Wang, P.; Li, L.; Liu, J. The influence of architectural heritage and tourists’ positive emotions on behavioral intentions using eye-tracking study. Sci. Rep. 2025, 15, 1447.

- Hao, S.; Hou, R.; Zhang, J.; Shi, Y.; Zhang, Y.; Wang, C. Visual behavior characteristics of historical landscapes based on eye-tracking technology. J. Asian Arch. Build. Eng. 2025, 24, 487–506.

- Ding, N.; Zhong, Y.; Li, J.; Xiao, Q. Study on selection of native greening plants based on eye-tracking technology. Sci. Rep. 2022, 12, 1092.

- Lin, W.; Mu, Y.; Zhang, Z.; Wang, J.; Diao, X.; Lu, Z.; Guo, W.; Wang, Y.; Xu, B. Research on cognitive evaluation of forest color based on visual behavior experiments and landscape preference. PLoS ONE 2022, 17, e0276677.

- Xu, J.; Baliutaviciute, V.; Swan, G.; Bowers, A.R. Driving with hemianopia X: Effects of cross traffic on gaze behaviors and pedestrian responses at intersections. Front. Hum. Neurosci. 2022, 16, 938140.

- Miscenà, A.; Arato, J.; Rosenberg, R. Absorbing the gaze, scattering looks: Klimt’s distinctive style and its two-fold effect on the eye of the beholder. J. Eye Mov. Res. 2020, 13, 1–13.

- Tobii. [Eye Tracking Has No Problem] Issue 8—How to Calculate AOI Area? [EB/OL]. Available online: https://zhuanlan.zhihu.com/p/621346101 (accessed on 18 April 2025). (In Chinese)

- Linka, M.; Broda, M.D.; Alsheimer, T.; De Haas, B.; Ramon, M. Characteristic fixation biases in Super-Recognizers. J. Vis. 2022, 22, 17.

- Villegas, E.; Fonts, E.; Fernández, M.; Fernández-Guinea, S. Visual Attention and Emotion Analysis Based on Qualitative Assessment and Eyetracking Metrics—The Perception of a Video Game Trailer. Sensors 2023, 23, 9573.

- Galea, E.R.; Xie, H.; Lawrence, P.J. Experimental and survey studies on the effectiveness of dynamic signage systems. Fire Saf. Sci. 2014, 11, 1129–1143.

- Cheimaras, V.; Trigkas, A.; Papageorgas, P.; Piromalis, D.; Sofianopoulos, E. A low-cost open-source architecture for a digital signage emergency evacuation system for cruise ships, based on IoT and LTE/4G technologies. Future Internet 2022, 14, 366.

- Kinkel, E.; van der Wal, C.N.; Hoogendoorn, S.P. The effects of three environmental factors on problem-solving abilities during evacuation. J. Build. Eng. 2025, 99, 111546.

- Zhao, H.; Schwabe, A.; Schläfli, F.; Thrash, T.; Aguilar, L.; Dubey, R.K.; Karjalainen, J.; Hölscher, C.; Helbing, D.; Schinazi, V.R. Fire evacuation supported by centralized and decentralized visual guidance systems. Saf. Sci. 2022, 145, 105451.

- Hong, C.; Li, J.; Zhang, J.; Zhai, R.; Fei, B.; Zhou, C. Rational design of a novel siloxane-branched waterborne polyurethane coating with waterproof and antifouling performance. Prog. Org. Coat. 2024, 194, 108576.

- Jeon, G.Y.; Hong, W.H. An experimental study on how phosphorescent guidance equipment influences on evacuation in impaired visibility. J. Loss Prev. Process Ind. 2009, 22, 934–942.

- GB/T 33668-2017; Code for Safety Evacuation of Metro [S/OL]. China Standards Press: Beijing, China, 2017. Available online: https://openstd.samr.gov.cn/bzgk/gb/newGbInfo?hcno=27AA44A76DD3ADF2A3F5701238E3951A&refer=outter (accessed on 1 April 2025). (In Chinese)

| Indicator Category | Indicator Name | Statistic | Fire: Low | Fire: Medium | Fire: High | Flood: Low | Flood: Medium | Flood: High |
|---|---|---|---|---|---|---|---|---|
| Perception Rate | Perception Rate (PR) | Mean | 84.87% | 82.28% | 94.23% | 78.86% | 90.63% | 95.52% |
| Signage Importance | Total Duration of Fixations (TDF, ms) | Mean | 342.58 | 354.83 | 645.22 | 214.45 | 383.32 | 463.26 |
| | | SD | 437.17 | 347.40 | 739.67 | 168.94 | 397.39 | 559.31 |
| | Average Duration of Fixations (ADF, ms) | Mean | 185.73 | 190.48 | 210.71 | 144.91 | 178.73 | 173.55 |
| | | SD | 120.99 | 125.28 | 126.61 | 65.33 | 109.76 | 125.80 |
| | Number of Fixations (NF) | Mean | 1.79 | 1.90 | 3.03 | 1.49 | 2.10 | 2.56 |
| | | SD | 1.31 | 1.38 | 2.75 | 0.88 | 1.69 | 2.21 |
| | Number of Visits (NV) | Mean | 1.34 | 1.25 | 1.61 | 1.13 | 1.43 | 1.48 |
| | | SD | 0.72 | 0.59 | 1.01 | 0.39 | 0.79 | 0.86 |
| | Fixation Density (FD) | Mean | 0.82 | 1.24 | 0.49 | 1.19 | 1.20 | 0.39 |
| | | SD | 4.34 | 2.42 | 0.65 | 2.69 | 1.76 | 0.50 |
| Signage Immediacy | Time to First Fixation (TFF, ms) | Mean | 3786.32 | 4316.71 | 4265.93 | 4791.24 | 3987.44 | 3889.01 |
| | | SD | 2801.65 | 2761.67 | 2685.51 | 2436.35 | 2832.09 | 2697.02 |
| | First Fixation Dwell Ratio (FFDR) | Mean | 74.08% | 71.26% | 55.71% | 78.70% | 67.80% | 61.36% |
| | | SD | 0.33 | 0.34 | 0.37 | 0.32 | 0.35 | 0.36 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Y.; Men, T.; Ran, J.; Chen, X.; Wu, K.; Zhao, L.; Xu, H.; Liao, H. Assessment of Visual Effectiveness of Metro Evacuation Signage in Fire and Flood Scenarios: A VR-Based Eye-Movement Experiment. Buildings 2025, 15, 3771. https://doi.org/10.3390/buildings15203771

Li Y, Men T, Ran J, Chen X, Wu K, Zhao L, Xu H, Liao H. Assessment of Visual Effectiveness of Metro Evacuation Signage in Fire and Flood Scenarios: A VR-Based Eye-Movement Experiment. Buildings. 2025; 15(20):3771. https://doi.org/10.3390/buildings15203771

Chicago/Turabian Style: Li, Yi, Tongyu Men, Jing Ran, Xingtong Chen, Kaiqi Wu, Li Zhao, Haohao Xu, and Hua Liao. 2025. "Assessment of Visual Effectiveness of Metro Evacuation Signage in Fire and Flood Scenarios: A VR-Based Eye-Movement Experiment" Buildings 15, no. 20: 3771. https://doi.org/10.3390/buildings15203771

APA Style: Li, Y., Men, T., Ran, J., Chen, X., Wu, K., Zhao, L., Xu, H., & Liao, H. (2025). Assessment of Visual Effectiveness of Metro Evacuation Signage in Fire and Flood Scenarios: A VR-Based Eye-Movement Experiment. Buildings, 15(20), 3771. https://doi.org/10.3390/buildings15203771