1. Introduction

Cementitious composites, particularly those incorporating waste plastic, have emerged as a promising avenue in the construction sector, driven by the global push for sustainable and environmentally friendly materials [1]. Plastic-modified mortars offer sustainability benefits but are limited by their low strength [1,2]. The strength reduction is mainly due to the weak bond between plastic particles and the cement matrix, as well as the lower stiffness and durability of plastic compared with natural aggregates such as sand or gravel [3]. Despite these challenges, substituting industrial waste, including waste plastic, for cement and natural aggregates in plastic-based mortars (PBMs) offers an environmentally preferable, sustainable long-term alternative [4]. This approach not only reduces the demand for virgin materials but also mitigates the environmental impact of plastic waste accumulation, which is a growing concern worldwide [4,5]. According to recent studies, the construction industry consumes approximately 40% of global raw materials, making the adoption of recycled materials a critical step toward sustainability [6]. The incorporation of waste plastic into cementitious composites is motivated by the need to address the environmental consequences of plastic pollution [7]. Globally, over 300 million tons of plastic waste are generated annually, with a significant portion ending up in landfills or incinerators, contributing to environmental degradation. By repurposing this waste into construction materials, the industry can reduce landfill dependency, lower the carbon emissions associated with cement production, and promote a circular economy [8]. However, the trade-offs in mechanical performance, such as reduced compressive and tensile strength, pose a significant barrier. For instance, studies have shown that replacing natural aggregates with plastic can reduce strength by up to 30% in some cases, depending on the type and proportion of plastic used [9]. This limitation necessitates innovative approaches that enhance the performance of PBMs while maintaining their environmental benefits [10].

The advent of machine learning (ML) has transformed materials science, offering powerful tools to address the limitations of cementitious composites containing waste plastic [3]. ML techniques enable researchers to model complex relationships between material composition, processing parameters, and mechanical properties, which are often difficult to capture through traditional experimental methods [11]. By leveraging large datasets from experimental studies, ML algorithms can predict the performance of PBMs, optimize mix designs, and identify the most effective combinations of waste plastic and other industrial by-products [12]. For example, supervised learning models, such as regression and neural networks, have been used to predict compressive strength from variables such as plastic content, curing time, and cement type [13]. These models provide insights into the factors that most strongly affect performance, enabling engineers to design composites with improved strength and durability. Moreover, ML facilitates the exploration of sustainable alternatives by reducing the need for extensive and costly experimental trials. Through predictive modeling, researchers can simulate the behavior of PBMs under various conditions, such as different environmental exposures or loading scenarios, without physical testing [14,15]. This capability is particularly valuable for waste plastic, where variability in plastic type, size, and chemical composition introduces significant uncertainty. For instance, ML algorithms can account for the heterogeneity of recycled plastic, which may include polyethylene terephthalate (PET), high-density polyethylene (HDPE), or polypropylene (PP), each with distinct mechanical and chemical properties. The type of plastic waste therefore plays a crucial role in determining the performance of PBMs [14]. For example, PET generally enhances durability but may reduce compressive strength due to its smooth surface texture, whereas HDPE often leads to lower stiffness because of its low bonding capacity with the cement matrix [16]. PP, on the other hand, can improve toughness and crack resistance but may negatively influence workability. These differences highlight the need for mix designs tailored to the plastic type employed, which this study seeks to address [17]. By analyzing these variables, ML can guide the development of PBMs that balance environmental benefits with structural performance.

Despite the advantages of ML, traditional approaches have certain limitations when applied to cementitious composites with waste plastic [18]. One major challenge is the reliance on high-quality, comprehensive datasets. Experimental data on PBMs are often limited, scattered, or inconsistent due to variations in testing conditions, material sources, and mix proportions [19]. This scarcity can lead to overfitting or poor generalization in ML models, reducing their predictive accuracy. Additionally, traditional ML models may struggle to capture the complex, non-linear interactions between the diverse components of PBMs, such as the interfacial bonding between plastic and cement or the effects of chemical additives [20]. These shortcomings have prompted researchers to explore hybrid machine learning approaches, which combine multiple algorithms or integrate ML with other computational techniques, such as finite element modeling or physics-based simulations [21].

Hybrid ML methods offer several advantages over traditional approaches. For example, ensemble methods, which combine predictions from multiple models (e.g., random forests, gradient boosting, and neural networks), can improve accuracy and robustness by leveraging the strengths of the individual algorithms [22]. Similarly, integrating ML with domain-specific knowledge, such as material science principles or empirical equations, can enhance model interpretability and performance [23]. For instance, a hybrid model might use ML to predict the mechanical properties of PBMs while enforcing physical constraints, such as the maximum allowable plastic content for maintaining structural integrity [7]. These approaches have shown promise in addressing the variability and complexity of waste plastic composites, enabling more reliable predictions and optimized designs [24].
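To make the ensemble and physical-constraint ideas concrete, the following minimal sketch averages the predictions of several base models and declines to predict for mixes above a plastic-content cap. The model callables, the `plastic_fraction` key, and the cap value are hypothetical placeholders for illustration, not part of any cited method.

```python
from typing import Callable, Dict, Optional, Sequence

# A "model" here is any callable mapping a mix description to a prediction.
Mix = Dict[str, float]
Model = Callable[[Mix], float]


def ensemble_predict(models: Sequence[Model], weights: Sequence[float], mix: Mix) -> float:
    """Weighted average of the predictions of several base models."""
    if not models or len(models) != len(weights):
        raise ValueError("need at least one model and one weight per model")
    total = sum(weights)
    return sum(w * model(mix) for model, w in zip(models, weights)) / total


def constrained_predict(models: Sequence[Model], weights: Sequence[float],
                        mix: Mix, max_plastic_fraction: float) -> Optional[float]:
    """Ensemble prediction restricted to physically admissible mixes.

    Mixes whose plastic fraction exceeds the (hypothetical) cap are rejected
    with None instead of being extrapolated by the data-driven models.
    """
    if mix["plastic_fraction"] > max_plastic_fraction:
        return None
    return ensemble_predict(models, weights, mix)


if __name__ == "__main__":
    # Toy stand-ins for trained models (hypothetical, illustration only).
    models = [
        lambda m: 8.0 - 10.0 * m["plastic_fraction"],
        lambda m: 7.5 - 8.0 * m["plastic_fraction"],
    ]
    print(constrained_predict(models, [0.5, 0.5], {"plastic_fraction": 0.10}, 0.20))
    print(constrained_predict(models, [0.5, 0.5], {"plastic_fraction": 0.30}, 0.20))
```

Rejecting out-of-range inputs, rather than clipping them, keeps the constraint visible to the user of the model.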

While ML approaches represent a significant advancement, they are not without challenges [25]. One general shortcoming is the computational complexity and resource intensity of these methods, which can be prohibitive for large-scale applications [26]. Additionally, model interpretability remains a concern, as complex algorithms such as deep neural networks often function as “black boxes”, making it difficult to understand the underlying relationships between inputs and outputs [27]. This lack of transparency can hinder the adoption of ML-based solutions in the construction industry, where engineers prioritize reliability and traceability [28]. Furthermore, the generalizability of models across different types of waste plastic or environmental conditions requires further validation, as most existing studies focus on specific plastic types or laboratory-controlled settings [29]. These challenges underscore the need for continued research into the application of ML techniques to cementitious composites with waste plastic [30].

The significance of this research lies in its potential to bridge the gap between sustainability and performance in the construction sector [30,31]. By developing robust, interpretable, and scalable ML models, this study aims to optimize the use of waste plastic in PBMs, thereby reducing environmental impact while meeting the structural requirements of modern construction [32]. The research focuses on addressing the limitations of existing ML approaches, exploring novel methods, and validating their applicability across diverse plastic waste streams [33]. Ultimately, this work seeks to contribute to the development of sustainable construction materials that align with global efforts to combat plastic pollution and promote resource efficiency [34].

In summary, cementitious composites incorporating waste plastic offer a sustainable alternative for the construction industry, but their limited mechanical performance necessitates innovative solutions [35]. Machine learning, particularly hybrid approaches, provides a powerful framework for optimizing these materials, addressing their complexities, and overcoming the limitations of traditional methods [36]. However, challenges such as data scarcity, model interpretability, and computational demands highlight the need for further advancements [37].

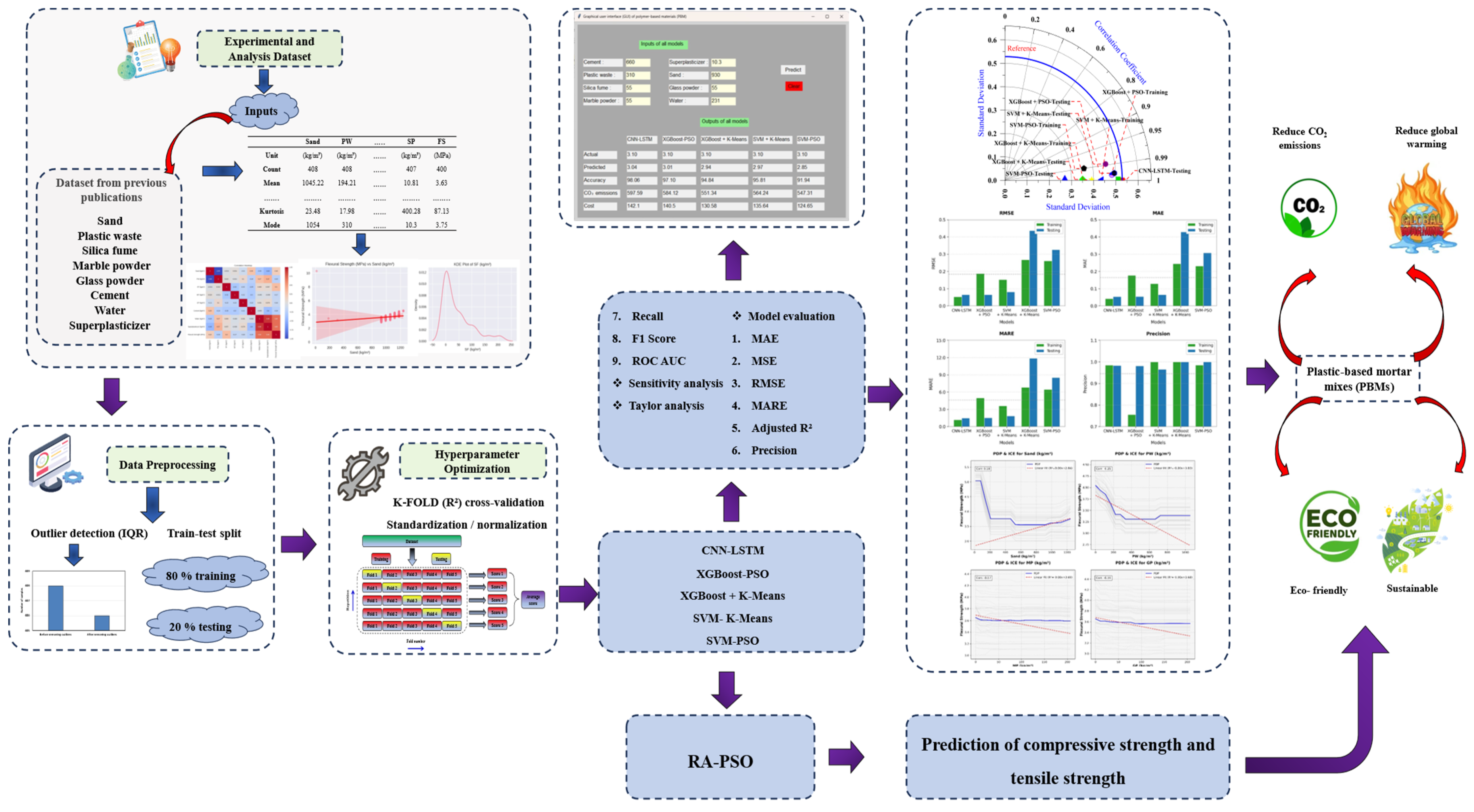

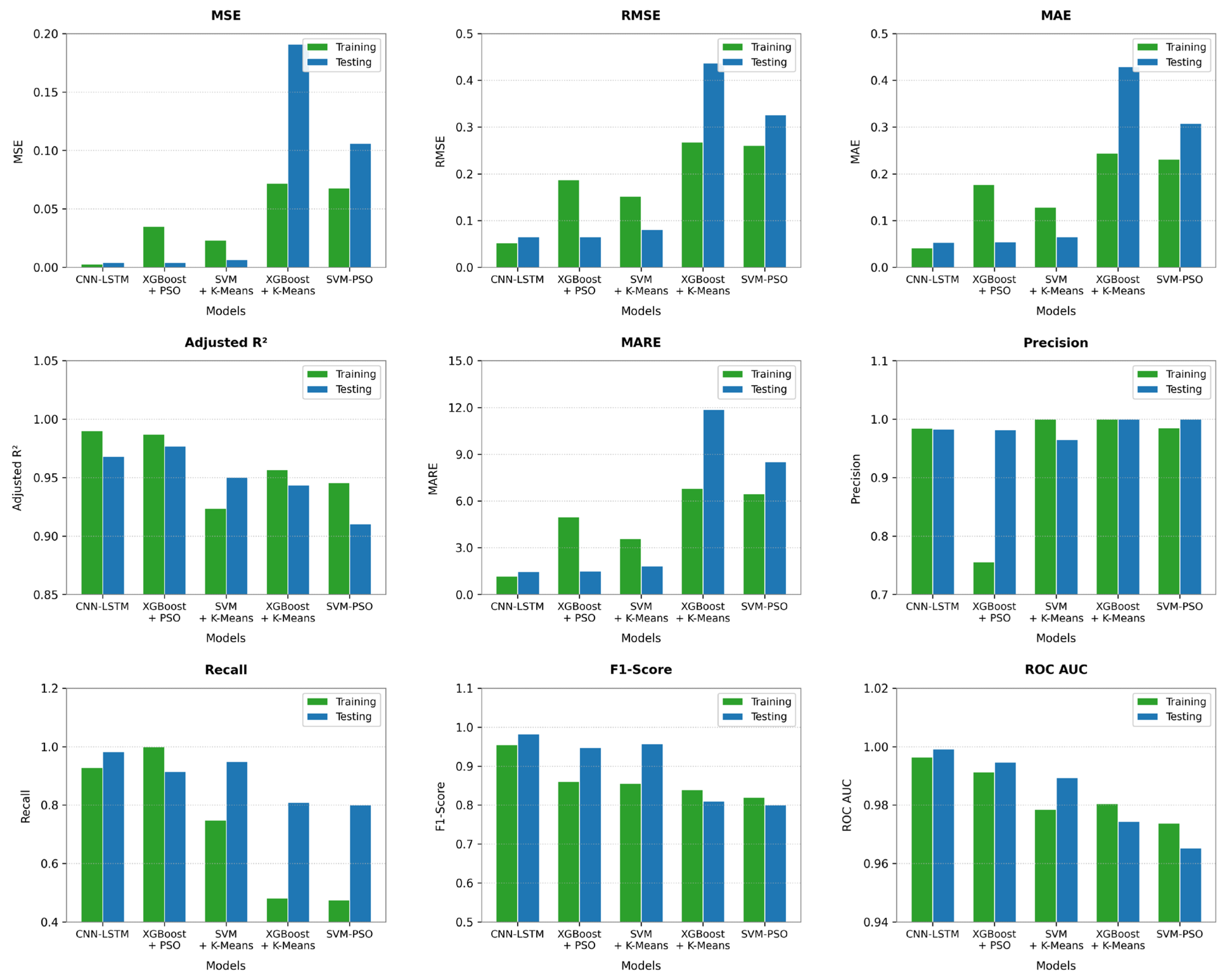

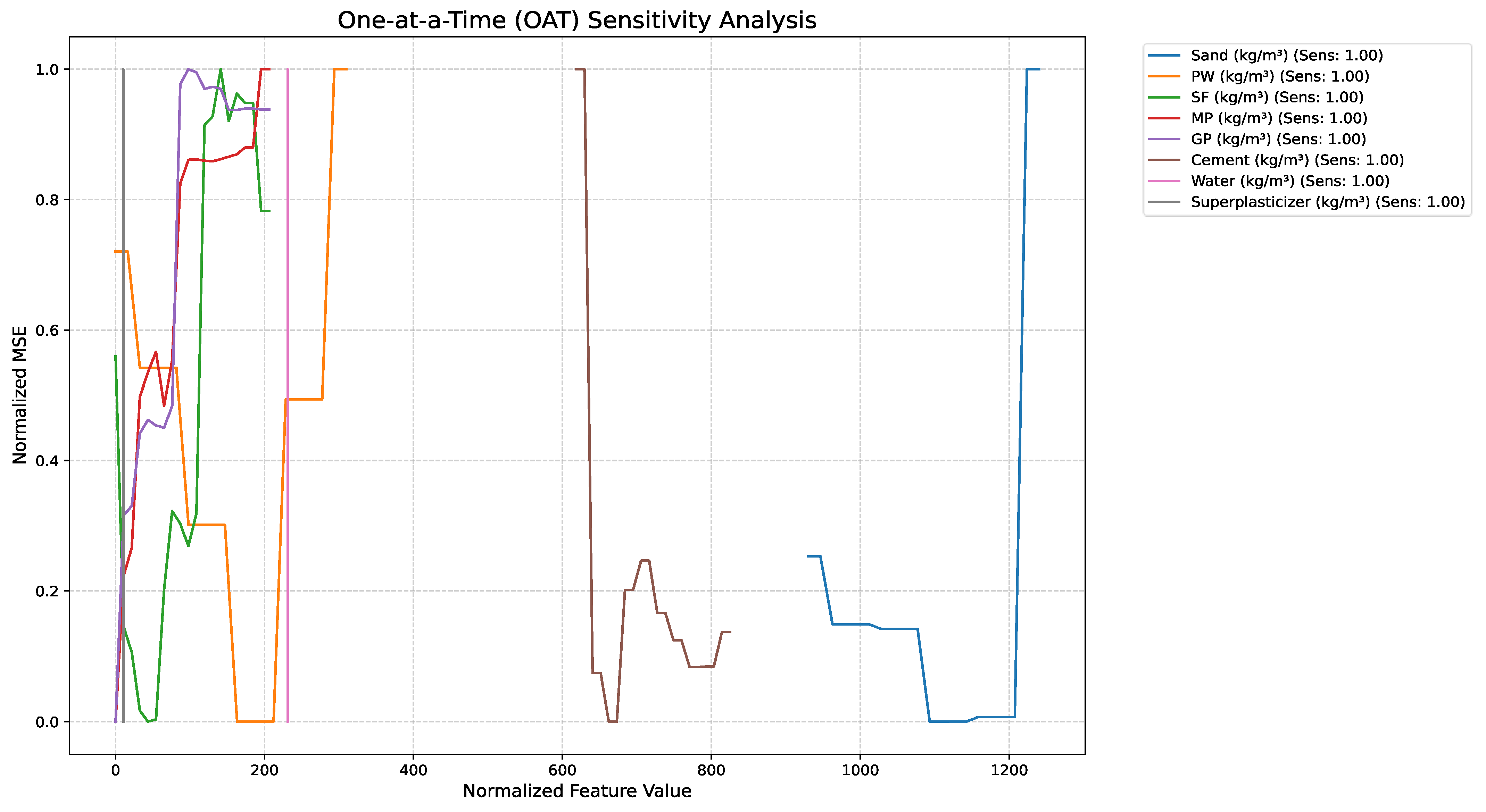

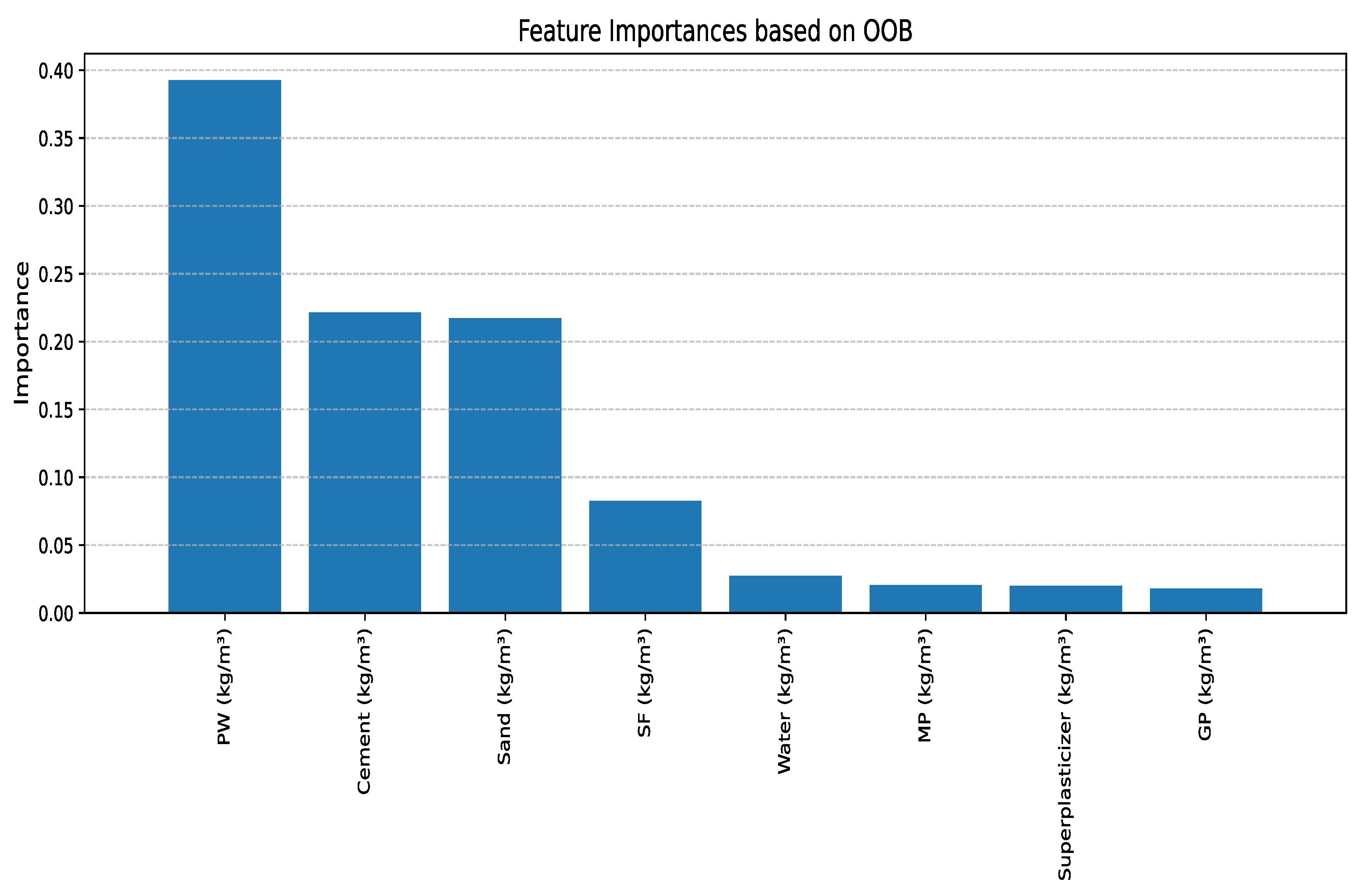

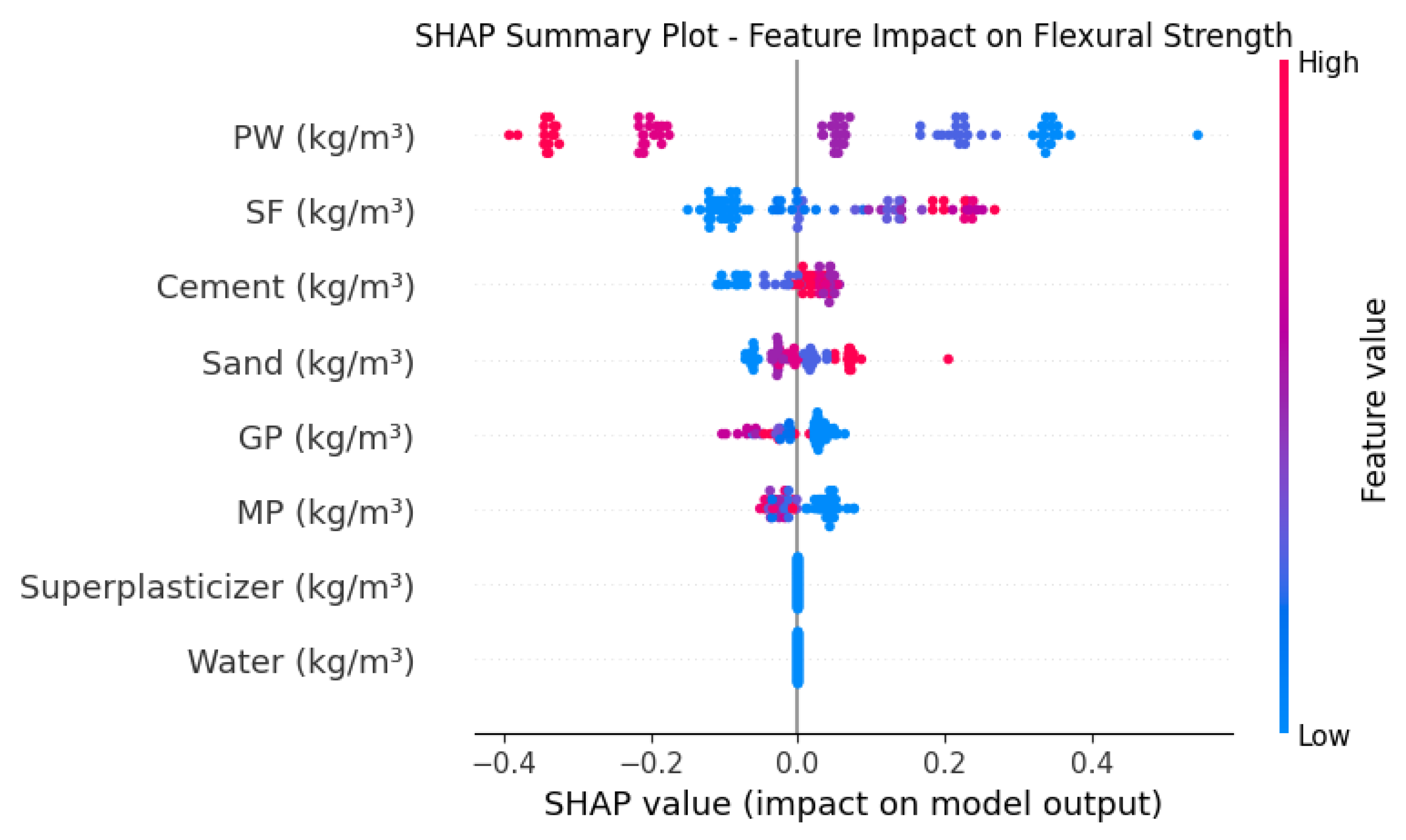

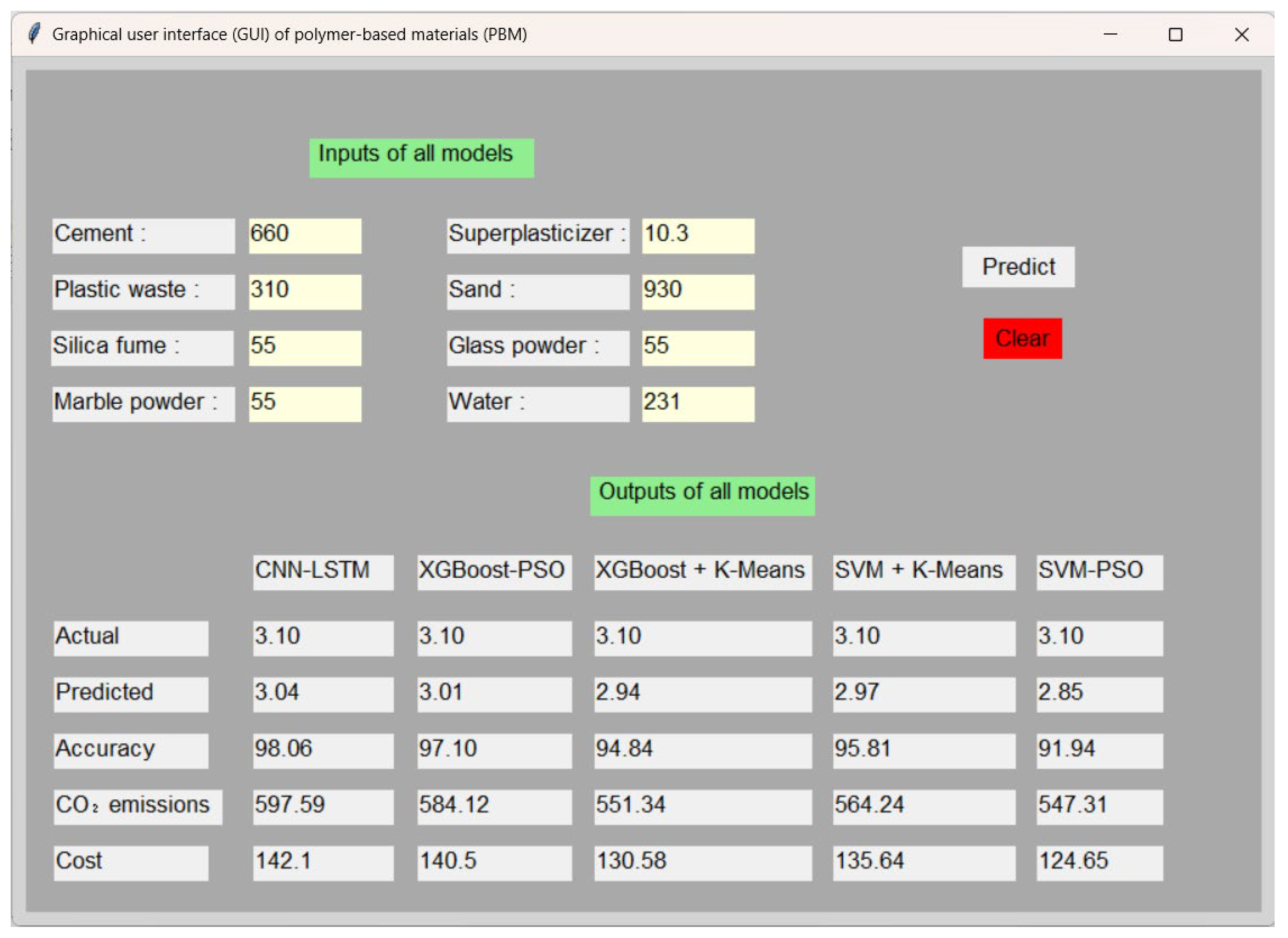

Unlike previous studies that focused solely on predicting a single objective, this research adopted hybrid predictive modeling to provide comprehensive tools for predicting flexural strength, production cost, and CO2 emissions in PBMs, thereby bridging the identified knowledge gaps. To achieve this, CNN-LSTM, XGBoost-PSO, SVM + K-Means, XGBoost + K-Means, and SVM-PSO models were used, and their computational concepts are explained in detail. All hybrid models are based on a large-scale database compiled from peer-reviewed scientific publications, forming a unified framework for predicting flexural strength, production cost, and CO2 emissions in plastic-based mortar mixtures. Two types of statistical metrics were used to evaluate and compare the performance of all models: regression metrics (adjusted R2, MSE, RMSE, MARE, and MAE) and classification metrics (precision, recall, F1 score, and ROC AUC). Out-of-bag (OOB) error, one-at-a-time (OAT) stepwise sensitivity analysis, feature importance, and individual conditional expectation (ICE) plots with partial dependence plots (PDPs) were used to evaluate the influence of the input parameters on the flexural strength model. The RA-PSO model was applied to predict compressive and tensile strength by first estimating the actual values with empirical equations and then predicting them. Furthermore, machine learning can estimate CO2 emissions and concrete production costs, contributing to the promotion of sustainable construction practices. A graphical user interface (GUI) based on the models was developed in Python (version 3.9.13), highlighting the originality of this study.
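The OAT sensitivity analysis and the ICE/PDP analysis mentioned above can both be expressed as repeated queries of a trained model. The sketch below assumes only a `predict` function that maps a 2-D NumPy array of inputs to a 1-D array of predictions; the relative step size and the linear surrogate model in the usage example are hypothetical and are not the study's flexural strength model.

```python
import numpy as np


def oat_sensitivity(predict, x_ref, rel_step=0.10):
    """One-at-a-time (OAT) sensitivity.

    Each input is increased by `rel_step` (relative) while all other inputs stay
    at the reference point; the relative change of the prediction is returned.
    """
    x_ref = np.asarray(x_ref, dtype=float)
    y_ref = float(predict(x_ref[None, :])[0])
    if y_ref == 0.0:
        raise ValueError("reference prediction must be non-zero for relative effects")
    effects = np.empty(x_ref.size)
    for j in range(x_ref.size):
        x = x_ref.copy()
        x[j] *= 1.0 + rel_step
        effects[j] = (float(predict(x[None, :])[0]) - y_ref) / y_ref
    return effects


def ice_and_pdp(predict, X, feature, grid):
    """ICE curves (one row per sample) and their average, the partial dependence."""
    X = np.asarray(X, dtype=float)
    ice = np.empty((X.shape[0], len(grid)))
    for k, value in enumerate(grid):
        X_mod = X.copy()
        X_mod[:, feature] = value  # force the feature to the grid value for every sample
        ice[:, k] = predict(X_mod)
    return ice, ice.mean(axis=0)


if __name__ == "__main__":
    # Hypothetical surrogate: strength falls with plastic fraction (column 0)
    # and rises with curing days (column 1). Not a model from this study.
    model = lambda X: 9.0 - 12.0 * X[:, 0] + 0.05 * X[:, 1]
    X = np.array([[0.05, 7.0], [0.10, 28.0], [0.20, 90.0]])
    print(oat_sensitivity(model, X[1]))
    ice, pdp = ice_and_pdp(model, X, feature=0, grid=np.linspace(0.0, 0.2, 5))
    print(pdp)
```

Because the PDP is the mean of the ICE curves, diverging ICE curves around a flat PDP are a quick sign of feature interactions that the average hides.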

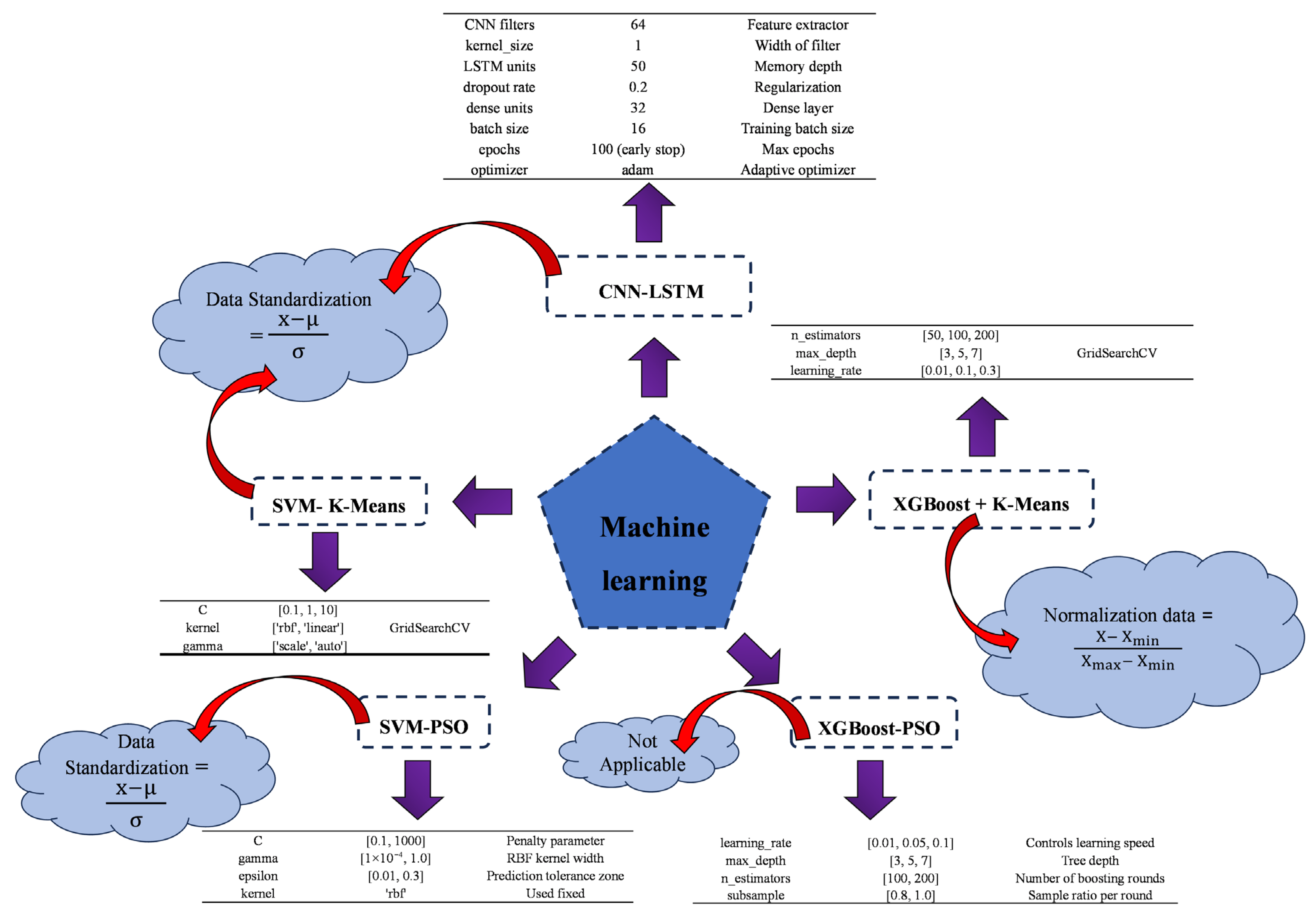

3. Description of ML Models

The CNN-LSTM model combines the spatial feature extraction of convolutional neural networks with the temporal sequence modelling of LSTM units. An embedding layer with a vocabulary size of 1000 receives input sequences of length 5 and maps each element to a 10-dimensional output vector [56]. A one-dimensional convolutional layer with 128 filters and a kernel size of 2 then extracts local patterns from the input sequence [57]. ReLU activation functions are used because they improve training convergence compared with sigmoid functions [58]. After convolution, a max-pooling layer with a pool size of 2 reduces dimensionality while retaining the most important information [59]. An LSTM layer with 100 memory units then models the sequential dependencies in the feature maps, which it handles efficiently even for small datasets [60]. Fully connected dense layers map the LSTM output to the final predictions. The model is trained with the Adam optimiser for 200 epochs, using the mean absolute error (MAE) as the loss function [61].

In the SVM + K-Means hybrid, the data are pre-processed with K-Means clustering before SVM training so that complex interactions in cementitious material systems are captured more effectively [62]. SVM is adept at handling high-dimensional, nonlinear relationships by identifying optimal separating hyperplanes [63], making it suitable for applications involving heterogeneous materials such as concrete. By grouping samples with similar characteristics, such as porosity, mix ratios, and admixture levels, K-Means introduces structural segmentation into the dataset [64]. These clusters can either be used to train a specialized SVM for each group or serve as categorical features that enrich the input representation [64,65]. This combined approach enables the SVM to adapt more precisely to localized data patterns. The synergy between the predictive strength of SVM and the pattern detection of K-Means enhances model adaptability and interpretability [66].
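The two ways of using clusters described above (cluster IDs as extra features, or one local model per cluster) can be sketched in a few lines of NumPy. This is an illustrative sketch, not the study's implementation: K-Means uses a deterministic farthest-point initialisation chosen here for reproducibility, and ordinary least squares stands in for the per-cluster SVM regressor so that the example needs nothing beyond NumPy. The same pattern carries over to the XGBoost + K-Means hybrid described below.

```python
import numpy as np


def assign(X, centers):
    """Index of the nearest center (squared Euclidean distance) for each row."""
    X = np.asarray(X, dtype=float)
    dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return dist.argmin(axis=1)


def kmeans(X, k, n_iter=100):
    """Lloyd's K-Means with deterministic farthest-point initialisation."""
    X = np.asarray(X, dtype=float)
    centers = [X[0]]
    for _ in range(1, k):
        # next initial center: the sample farthest from all centers chosen so far
        d = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(d)])
    centers = np.array(centers)
    labels = assign(X, centers)
    for _ in range(n_iter):
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        converged = np.allclose(new_centers, centers)
        centers = new_centers
        labels = assign(X, centers)
        if converged:
            break
    return labels, centers


def add_cluster_onehot(X, labels, k):
    """Strategy 1: append one-hot cluster IDs as categorical input features."""
    return np.hstack([np.asarray(X, dtype=float), np.eye(k)[labels]])


def fit_per_cluster(X, y, labels):
    """Strategy 2: one local regressor per cluster (OLS stands in for SVM/XGBoost)."""
    X, y = np.asarray(X, dtype=float), np.asarray(y, dtype=float)
    models = {}
    for j in np.unique(labels):
        mask = labels == j
        A = np.hstack([X[mask], np.ones((int(mask.sum()), 1))])  # intercept column
        models[int(j)] = np.linalg.lstsq(A, y[mask], rcond=None)[0]
    return models


def predict_per_cluster(models, X, labels):
    """Route each sample to the local model of its cluster."""
    A = np.hstack([np.asarray(X, dtype=float), np.ones((len(X), 1))])
    return np.array([A[i] @ models[int(labels[i])] for i in range(len(A))])
```

In practice the clustering variables (for example, mix ratios and densities) have very different scales, so they would normally be standardised before K-Means; new samples are routed with `assign` using the fitted centers.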

The SVM-PSO approach combines the predictive power of Support Vector Machines with the parameter search capabilities of Particle Swarm Optimization [67]. In this model, PSO automates the selection of the critical SVM hyperparameters (C, gamma, and epsilon) by simulating the social learning behavior of particles in a swarm [68]. This optimization mechanism helps improve regression performance by reducing manual intervention and enhancing generalization [69]. Kernel functions enable the model to learn nonlinear relationships effectively, even with limited data. However, this approach introduces computational overhead due to the iterative nature of PSO [70]. Additionally, model interpretability may be compromised, as the decision boundaries are defined in transformed, high-dimensional feature spaces [71].

XGBoost-PSO combines the decision-tree-based gradient boosting framework of XGBoost with the adaptive global search capabilities of PSO [72]. XGBoost uses an ensemble of trees to learn from structured datasets, capturing both linear and nonlinear relationships with high predictive accuracy [73]. However, its effectiveness depends strongly on well-tuned hyperparameters such as the learning rate, maximum depth, and number of estimators [74]. To automate this tuning, PSO explores the hyperparameter space by adjusting each particle's configuration based on its personal best and the swarm's collective best performance. This self-organizing optimization enhances model accuracy while minimizing the need for exhaustive manual tuning procedures such as grid search [75]. XGBoost-PSO is particularly effective for high-dimensional problems owing to XGBoost's built-in regularization mechanisms, feature importance capabilities, and resilience to multicollinearity [76]. Nonetheless, the model may demand substantial computational resources, and the interpretability of results may be reduced when dealing with large ensembles [77].

The integration of Extreme Gradient Boosting (XGBoost) with K-Means clustering is a powerful hybrid framework that combines the strengths of supervised and unsupervised learning [78]. XGBoost is appropriate for complex regression problems with nonlinear and high-dimensional feature interactions due to its accuracy and resilience to overfitting [79]. Unsupervised K-Means groups data by feature similarity, uncovering structures that supervised learning may miss [80]. Applying K-Means to variables such as the water-to-cement ratio, lithium carbonate concentration, porosity, and material density can reveal natural groups associated with hydration mechanisms, mixture formulations, and curing dynamics. XGBoost can exploit these clusters in two ways: separate models can be trained within each cluster to focus on local behaviour, or cluster IDs can be added as categorical features to broaden the input space [81].
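The PSO tuning loop shared by SVM-PSO and XGBoost-PSO can be sketched as a standard global-best PSO over a box-bounded space, with small decoders that turn a particle position into hyperparameters (log-scaled C and gamma for the SVR; rounded integers for XGBoost's `max_depth` and `n_estimators`). The inertia and acceleration coefficients, the bounds, and the quadratic `cv_error` objective are assumptions for illustration; in practice the objective would be the cross-validated error of a model trained with the decoded hyperparameters.

```python
import random


def pso_minimize(objective, bounds, n_particles=30, n_iter=100,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Standard global-best PSO over a box-bounded continuous space."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_f = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]
    for _ in range(n_iter):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])   # pull to personal best
                             + c2 * r2 * (gbest[d] - pos[i][d]))     # pull to swarm best
                lo, hi = bounds[d]
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)  # stay inside bounds
            f = objective(pos[i])
            if f < pbest_f[i]:
                pbest[i], pbest_f[i] = pos[i][:], f
                if f < gbest_f:
                    gbest, gbest_f = pos[i][:], f
    return gbest, gbest_f


def decode_svr(p):
    """Particle -> SVR hyperparameters; C and gamma are searched on a log10 scale."""
    return {"C": 10 ** p[0], "gamma": 10 ** p[1], "epsilon": p[2]}


def decode_xgb(p):
    """Particle -> XGBoost hyperparameters; integer-valued ones are rounded."""
    return {"learning_rate": p[0], "max_depth": int(round(p[1])),
            "n_estimators": int(round(p[2]))}


if __name__ == "__main__":
    # Hypothetical stand-in for a cross-validated error surface.
    def cv_error(p):
        return (p[0] - 1.0) ** 2 + (p[1] + 2.0) ** 2 + (p[2] - 0.1) ** 2

    best, err = pso_minimize(cv_error, bounds=[(-2.0, 3.0), (-4.0, 1.0), (0.01, 0.5)])
    print(decode_svr(best), err)
```

Searching C and gamma in log space lets the swarm cover several orders of magnitude evenly, which is usually how these two SVR parameters are tuned.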

Overall, the XGBoost + K-Means model provides a robust tool for modeling advanced cement-based materials, supporting more precise design strategies and reliable forecasting of performance under varying compositional and environmental conditions.

The Randomized Adaptive Particle Swarm Optimization (RA-PSO) technique improves convergence and prevents stagnation by combining adaptive control with randomization [82]. It updates particle positions based on personal and global best experiences, together with dynamic and stochastic updates that sustain swarm diversity [83]. This randomization helps the algorithm escape local minima. In this study, RA-PSO is used to train an ANN by globally optimizing its weights and biases [82]. Each particle represents an ANN configuration, and the fitness function measures the network's prediction error. RA-PSO performs well in complex, nonlinear regression applications where gradient-based learning may fail [84]. The adaptive process balances exploration and exploitation, improving learning stability and convergence reliability. However, the repeated fitness evaluations over a large population require substantial processing power [85]. Moreover, although RA-PSO enhances prediction accuracy, it does not by itself provide interpretability of feature influence.
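A minimal sketch of PSO-based ANN training is given below. The text does not specify the exact RA-PSO update rules, so this sketch assumes one plausible reading: a linearly decreasing inertia weight as the "adaptive" element and random re-initialisation of a small fraction of particles as the "randomized" element. Each particle is the flattened weight-and-bias vector of a one-hidden-layer tanh network, and the fitness is the mean squared error; all names and parameter values are hypothetical.

```python
import numpy as np


def mlp_forward(weights, X, n_hidden):
    """One-hidden-layer tanh network; `weights` is one particle (flat vector)."""
    n_in = X.shape[1]
    i = 0
    W1 = weights[i:i + n_in * n_hidden].reshape(n_in, n_hidden); i += n_in * n_hidden
    b1 = weights[i:i + n_hidden]; i += n_hidden
    W2 = weights[i:i + n_hidden]; i += n_hidden
    b2 = weights[i]
    return np.tanh(X @ W1 + b1) @ W2 + b2


def ra_pso_train(X, y, n_hidden=5, n_particles=40, n_iter=200,
                 w_max=0.9, w_min=0.4, c1=1.5, c2=1.5, p_reset=0.05, seed=0):
    """Assumed RA-PSO variant: decreasing inertia + random particle re-seeding."""
    rng = np.random.default_rng(seed)
    dim = X.shape[1] * n_hidden + 2 * n_hidden + 1
    fitness = lambda p: float(np.mean((mlp_forward(p, X, n_hidden) - y) ** 2))  # MSE
    pos = rng.uniform(-1.0, 1.0, (n_particles, dim))
    vel = np.zeros_like(pos)
    pbest = pos.copy()
    pbest_f = np.array([fitness(p) for p in pos])
    g = int(pbest_f.argmin())
    gbest, gbest_f = pbest[g].copy(), float(pbest_f[g])
    history = [gbest_f]
    for t in range(n_iter):
        w = w_max - (w_max - w_min) * t / max(n_iter - 1, 1)  # adaptive inertia (assumed schedule)
        r1, r2 = rng.random((2, n_particles, dim))
        vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
        pos = pos + vel
        # randomisation: re-seed a few particles to keep the swarm diverse
        reset = rng.random(n_particles) < p_reset
        pos[reset] = rng.uniform(-1.0, 1.0, (int(reset.sum()), dim))
        vel[reset] = 0.0
        for i in range(n_particles):
            f = fitness(pos[i])
            if f < pbest_f[i]:
                pbest[i], pbest_f[i] = pos[i].copy(), f
                if f < gbest_f:
                    gbest, gbest_f = pos[i].copy(), f
        history.append(gbest_f)
    return gbest, history


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.uniform(-1.0, 1.0, (40, 3))            # synthetic, standardised inputs
    y = np.sin(X[:, 0]) + 0.5 * X[:, 1] * X[:, 2]  # synthetic target, illustration only
    best, history = ra_pso_train(X, y)
    print(f"best MSE: {history[0]:.4f} -> {history[-1]:.4f}")
```

Re-seeded particles keep their personal bests, so the randomisation adds exploration without ever discarding the best solution found so far; predictions for new inputs are obtained with `mlp_forward(best, X_new, n_hidden=5)`.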

Figure 4 shows the architectures of the CNN-LSTM, XGBoost-PSO, SVM + K-Means, XGBoost + K-Means, RA-PSO, and SVM-PSO models.
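As a consistency check on the CNN-LSTM configuration described in Section 3 (a 1000 × 10 embedding over length-5 inputs, Conv1D with 128 filters and kernel 2, max-pooling of 2, and an LSTM with 100 units), the sketch below traces output shapes and trainable-parameter counts layer by layer. It assumes "valid" (unpadded) convolution and pooling, an LSTM that returns only its final state, Keras-style parameter counting, and a single one-unit dense output head; the text does not state how many dense layers were used, so `dense_units` is a hypothetical parameter.

```python
def valid_out_len(n, window, stride=1):
    """Output length of an unpadded ('valid') 1-D convolution or pooling window."""
    return (n - window) // stride + 1


def trace_cnn_lstm(seq_len=5, vocab=1000, emb_dim=10, filters=128, kernel=2,
                   pool=2, lstm_units=100, dense_units=(1,)):
    """Return (layer, output shape, trainable parameters) for each layer."""
    layers = [("Embedding", (seq_len, emb_dim), vocab * emb_dim)]
    n = valid_out_len(seq_len, kernel)
    layers.append(("Conv1D", (n, filters), kernel * emb_dim * filters + filters))
    n = valid_out_len(n, pool, stride=pool)
    layers.append(("MaxPooling1D", (n, filters), 0))
    # LSTM: 4 gates, each with input weights, recurrent weights and one bias vector
    layers.append(("LSTM", (lstm_units,),
                   4 * (lstm_units * (filters + lstm_units) + lstm_units)))
    width = lstm_units
    for units in dense_units:  # assumed dense head; the text does not give its size
        layers.append(("Dense", (units,), width * units + units))
        width = units
    return layers


if __name__ == "__main__":
    layers = trace_cnn_lstm()
    for name, shape, params in layers:
        print(f"{name:<13} {str(shape):<10} {params:>7}")
    print("total trainable parameters:", sum(p for _, _, p in layers))
```

Under these assumptions the LSTM layer holds most of the roughly 104,000 trainable parameters, while pooling leaves only two time steps for the LSTM to process.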

Model Evaluation Indicators

In addition to conventional regression metrics (MAE, MSE, RMSE, MARE), classification metrics (precision, recall, F1, ROC-AUC) were intentionally employed after discretizing the continuous outputs into performance categories using domain-specific thresholds. This dual evaluation strategy was adopted for two main reasons: (i) to reflect the practical decision-making context, where mixtures must be judged as “acceptable” or “unacceptable” rather than only by exact numerical accuracy, and (ii) to ensure that the model not only predicts values close to the ground truth but also reliably distinguishes between critical performance classes [52]. While regression metrics capture overall numerical fidelity, classification metrics highlight the robustness of the model in identifying edge cases and critical categories that are most relevant in engineering practice [78]. Similar hybrid evaluation frameworks have been reported in prior studies; the present approach therefore aims to provide both numerical accuracy and practical relevance.

Table 2 lists seven indicators for evaluating the prediction performance of the proposed ML models [86,87].
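The regression metrics and the threshold-based classification metrics described above can be computed without external libraries, as in the following sketch. The acceptance threshold and the convention that values at or above it count as "acceptable" are assumptions (the study's domain-specific thresholds are not given here), and ROC AUC is computed from the continuous predictions through the pairwise (Mann–Whitney) formulation.

```python
import math


def regression_metrics(y_true, y_pred, n_features):
    """MAE, MSE, RMSE, MARE, R2 and adjusted R2 for paired observations."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mean_t = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    r2 = 1.0 - ss_res / ss_tot
    return {
        "MAE": sum(abs(e) for e in errors) / n,
        "MSE": ss_res / n,
        "RMSE": math.sqrt(ss_res / n),
        # MARE requires non-zero observed values
        "MARE": sum(abs(e) / abs(t) for e, t in zip(errors, y_true)) / n,
        "R2": r2,
        "adj_R2": 1.0 - (1.0 - r2) * (n - 1) / (n - n_features - 1),
    }


def classification_metrics(y_true, y_pred, threshold):
    """Label values >= threshold as 'acceptable' (positive, assumed), then score."""
    t = [v >= threshold for v in y_true]
    p = [v >= threshold for v in y_pred]
    tp = sum(a and b for a, b in zip(t, p))
    fp = sum(b and not a for a, b in zip(t, p))
    fn = sum(a and not b for a, b in zip(t, p))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    # ROC AUC = P(score of a random positive > score of a random negative); ties count 1/2
    pos = [s for s, a in zip(y_pred, t) if a]
    neg = [s for s, a in zip(y_pred, t) if not a]
    if pos and neg:
        wins = sum(1.0 if sp > sn else 0.5 if sp == sn else 0.0 for sp in pos for sn in neg)
        auc = wins / (len(pos) * len(neg))
    else:
        auc = float("nan")  # undefined when only one class is present
    return {"precision": precision, "recall": recall, "F1": f1, "ROC_AUC": auc}


if __name__ == "__main__":
    # Toy values for illustration only.
    print(regression_metrics([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0], n_features=1))
    print(classification_metrics([4.0, 6.0, 7.0, 3.0], [5.0, 5.0, 8.0, 2.0], threshold=5.0))
```

Using the continuous predictions as scores for ROC AUC avoids choosing a second threshold and measures how well the model ranks acceptable mixes above unacceptable ones.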

5. Discussion

PBMs are composite cementitious materials that incorporate recycled plastics such as PET, PE, PP, or PVC as partial replacements for fine aggregates, or as additives to enhance specific properties [128]. Typically, 5–20% of the fine aggregate volume is substituted with plastic particles in the form of fibers, granules, flakes, or powder, while standard cement, water, and admixture ratios are maintained [129]. This approach promotes sustainability by reducing landfill waste and conserving natural aggregates, in line with circular economy principles [130]. PBMs have a lower density than conventional mortars, making them suitable for lightweight applications such as partition walls or panels [130,131]. Plastics, especially when used as fibers, can improve flexibility, crack resistance, and thermal/acoustic insulation [25]. However, challenges remain: high plastic contents reduce compressive strength due to the lower stiffness of plastics and their weak interfacial bonding with the cement matrix. Workability is also impaired at higher plastic dosages, sometimes requiring additional superplasticizers. The heterogeneity of recycled plastics further complicates achieving consistent performance, restricting PBMs primarily to non-structural applications. These complexities underline the need for predictive modeling approaches to optimize mix designs.
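Because the replacement is specified by volume while mixes are usually batched by mass, the lower density of PBMs follows directly from the density ratio of plastic to sand. The sketch below converts a volumetric replacement ratio into the required plastic mass and the resulting mass saving; the default densities (about 2650 kg/m3 for quartz sand and 1380 kg/m3 for PET) are typical handbook values assumed here, not values from this study.

```python
def plastic_replacement(sand_mass_kg, replacement_ratio,
                        rho_sand=2650.0, rho_plastic=1380.0):
    """Volume-based replacement of fine aggregate by plastic.

    Returns (plastic mass in kg, mass saving in kg). Densities are in kg/m^3;
    the defaults are typical values for quartz sand and PET (assumptions).
    """
    if not 0.0 <= replacement_ratio <= 1.0:
        raise ValueError("replacement_ratio must lie in [0, 1]")
    replaced_volume = sand_mass_kg * replacement_ratio / rho_sand  # m^3 of sand removed
    plastic_mass = replaced_volume * rho_plastic                   # same volume filled by plastic
    return plastic_mass, sand_mass_kg * replacement_ratio - plastic_mass


if __name__ == "__main__":
    for r in (0.05, 0.10, 0.20):  # the typical 5-20% range quoted above
        plastic, saving = plastic_replacement(1000.0, r)  # per 1000 kg of sand (hypothetical)
        print(f"{r:.0%}: {plastic:.1f} kg plastic, {saving:.1f} kg lighter")
```

With these assumed densities, each unit volume of sand replaced by PET removes roughly half of its mass, which is why the density reduction grows almost linearly with the replacement ratio.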

In light of the results obtained in this study, the observed experimental and predictive trends can be coherently explained through the underlying materials science mechanisms. A relatively higher water-to-binder ratio, when combined with reactive admixtures such as silica fume or fly ash, enhances particle dispersion, facilitates hydration, and leads to additional C–S–H gel formation [

132]. This densifies the microstructure and improves compressive strength. Mineral and chemical admixtures also refine pore structure and reduce capillary porosity, thereby enhancing durability and strength [

133]. Conversely, excessive plastic incorporation diminishes performance because plastics are chemically inert and hydrophobic, generating weak interfacial transition zones and limiting load transfer [

134]. Likewise, higher cement content accelerates hydration and heat release, which can promote microcracking and autogenous shrinkage that compromise mechanical integrity [

135]. These mechanistic insights provide a robust physical basis for the statistical outcomes, demonstrating that the improvements and reductions in strength align with well-established materials science principles rather than representing mere numerical correlations [

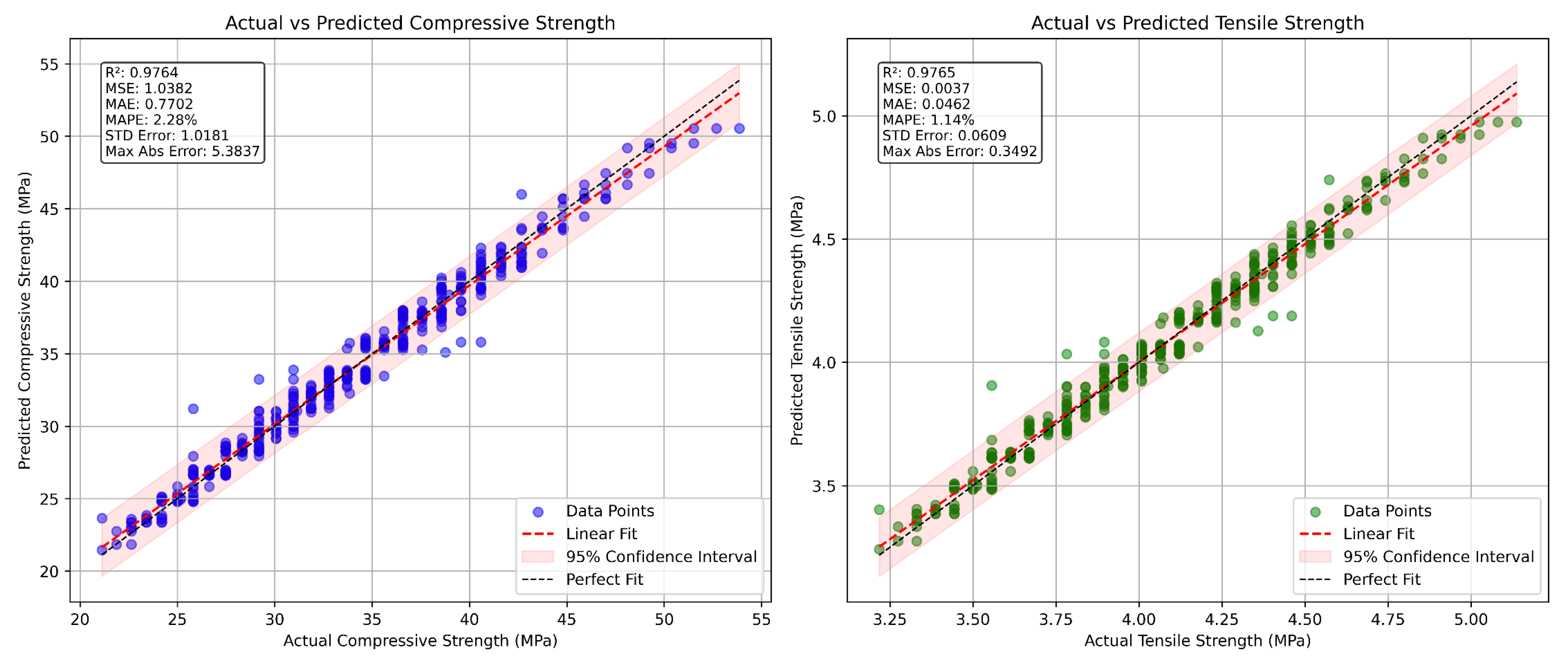

123]. Given these mechanistic complexities, accurate prediction of compressive strength becomes essential, yet challenging. Predicting compressive strength in PBMs is complex due to non-linear relationships between mix components, plastic properties, and mechanical performance [

92]. Traditional empirical models often fail to capture these complexities, leading to the adoption of hybrid machine learning methods like CNN-LSTM, XGBoost-PSO, SVM + K-Means, XGBoost + K-Means, and SVM-PSO. These methods leverage flexural strength as a key input, alongside variables like plastic content, curing time, and water-cement ratio, to predict compressive strength accurately [

99]. Hybrid models combine the strengths of multiple algorithms, handling heterogeneous data and improving prediction reliability [

72]. They reduce reliance on costly lab experiments, accelerating mix design and supporting sustainable construction material development [

136].

Table 5 shows a comparison of the statistical parameters for flexural strength prediction using the hybrid ML models.

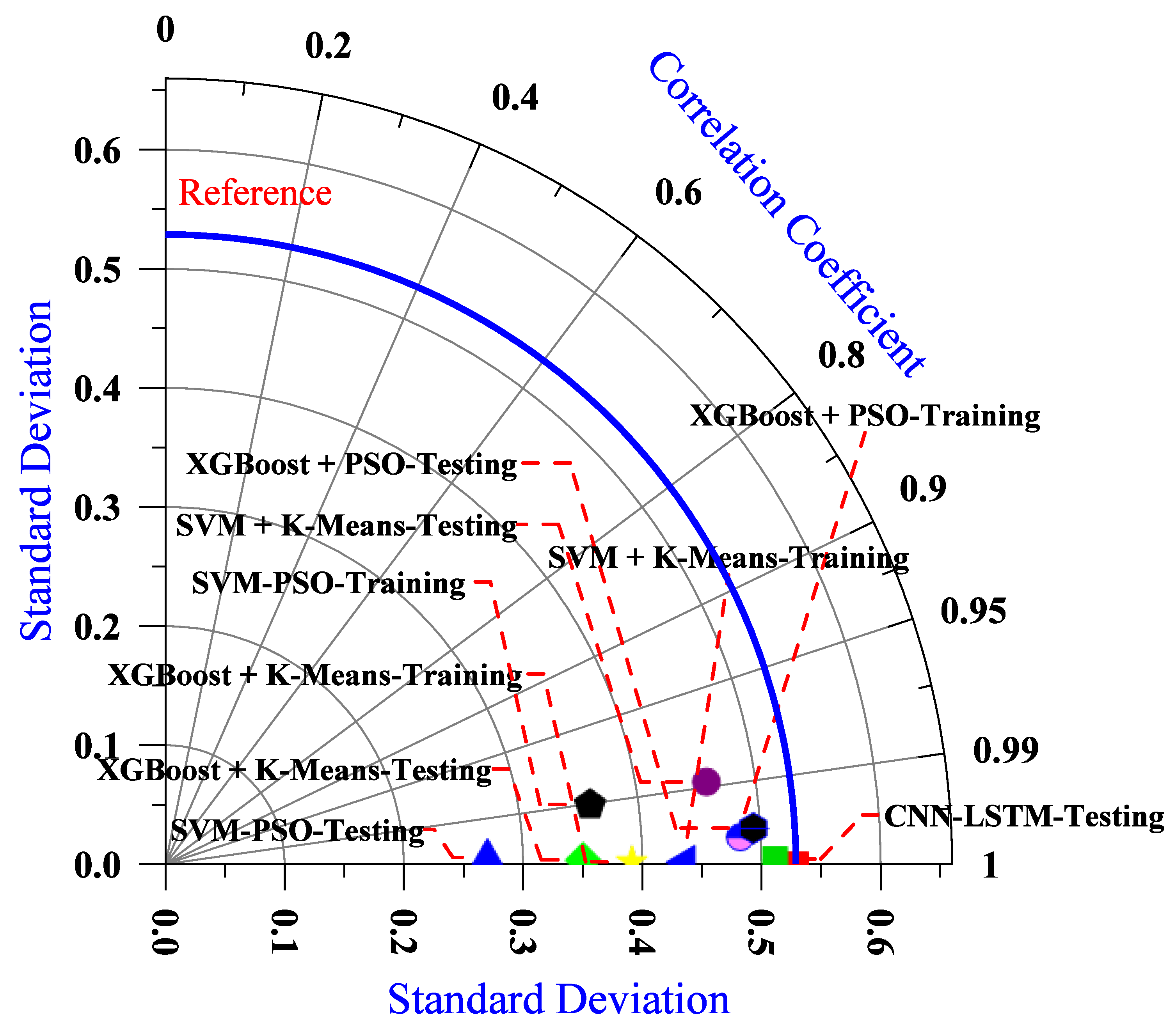

The comparative performance

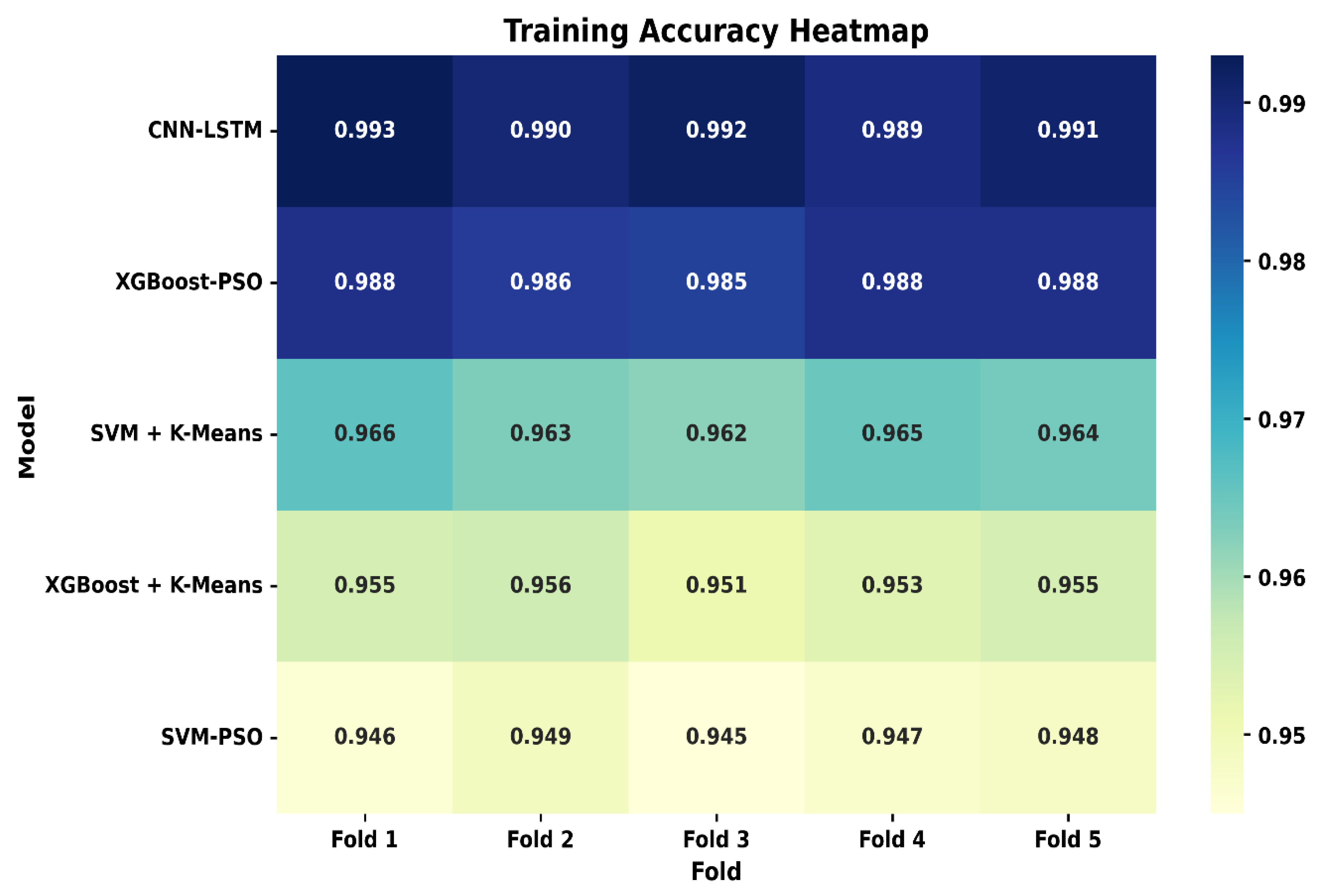

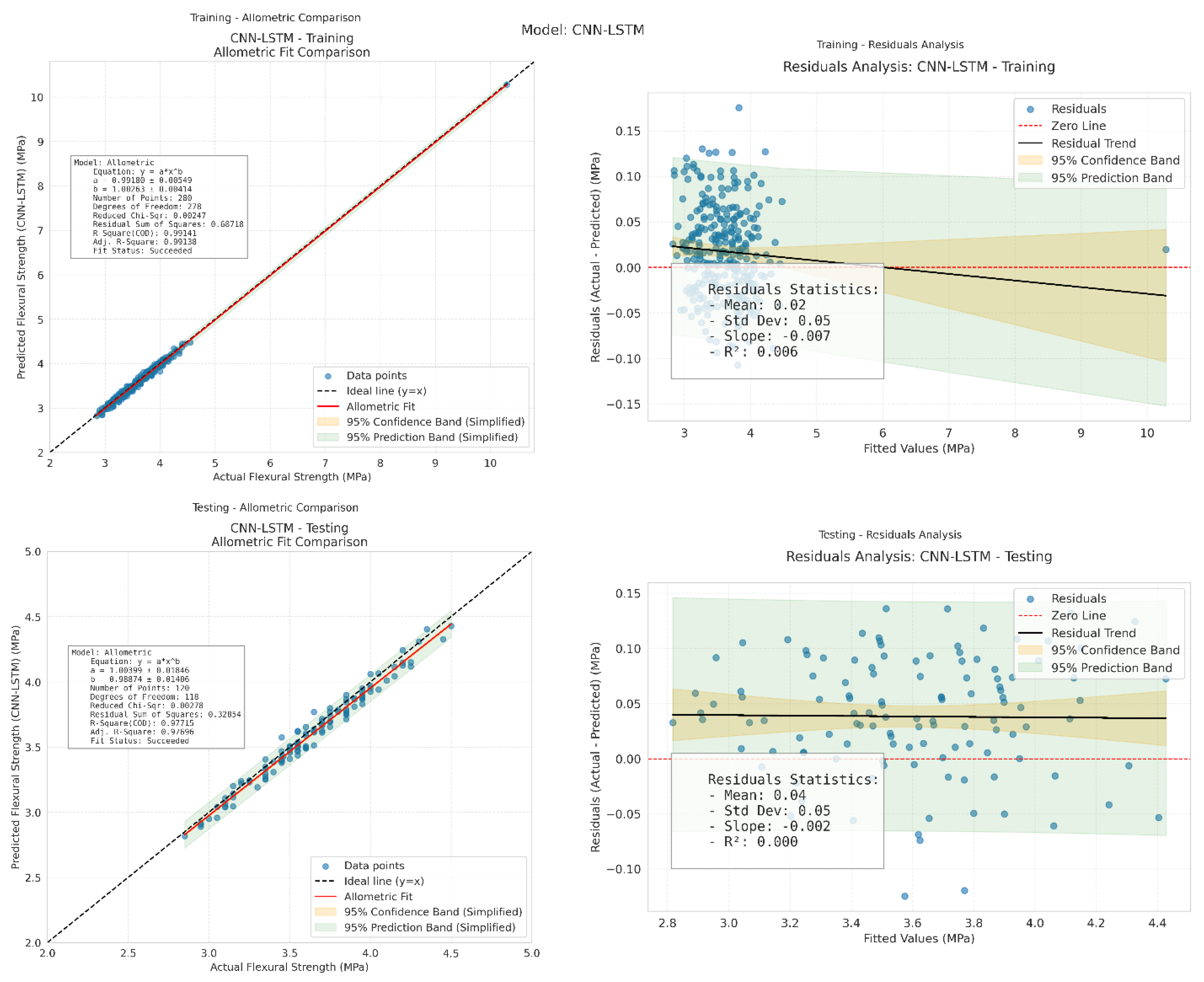

Table 6 above illustrates the superiority of the CNN-LSTM hybrid model in terms of the coefficient of determination (R2) for both the training and testing datasets. The CNN-LSTM model achieved an R2 of 0.99141 on the training data and 0.97715 on the testing data, outperforming all other models evaluated in this study. The XGBoost-PSO model came closest to CNN-LSTM, with only a 0.43% reduction in training R2 and a 0.90% reduction in testing R2, indicating that, while effective, it still falls short of capturing the underlying data relationships as well as CNN-LSTM. More traditional models, such as SVM combined with K-Means and SVM optimized with PSO, exhibited significantly lower R2 values. In particular, the SVM-PSO model performed worst, with a 4.28% decrease in training R2 and a 6.75% drop in testing R2, indicating limited generalization capability and a higher likelihood of underfitting or misrepresenting the data structure.

The strong generalization of the CNN-LSTM model is primarily due to its architecture, which combines Convolutional Neural Networks (CNNs) for extracting spatial features with Long Short-Term Memory (LSTM) networks for capturing temporal or sequential dependencies in the data. This hybrid structure enables the model to learn both local and long-range patterns effectively, making it particularly suitable for complex regression tasks involving high-dimensional or structured datasets. In contrast, ensemble models such as XGBoost + K-Means and XGBoost-PSO, although powerful, tend to rely heavily on feature engineering and optimization techniques, which may not capture intricate patterns as fully as deep learning-based models. Overall, the CNN-LSTM model not only achieves the highest predictive accuracy but also demonstrates robust consistency across the training and testing phases, thereby validating its effectiveness for real-world applications where model generalization is critical.
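The quoted percentage reductions can be converted back into approximate R2 values. The calculation below assumes, as a reading of the text rather than a stated definition, that each percentage is a relative reduction with respect to the corresponding CNN-LSTM value ($\Delta_m$ for model $m$); under that assumption the implied values are:

$$
\Delta_m = \frac{R^2_{\text{CNN-LSTM}} - R^2_m}{R^2_{\text{CNN-LSTM}}} \times 100\%
\quad\Longrightarrow\quad
R^2_m = R^2_{\text{CNN-LSTM}}\left(1 - \frac{\Delta_m}{100}\right)
$$

$$
\begin{aligned}
R^2_{\text{XGBoost-PSO, train}} &\approx 0.99141\,(1 - 0.0043) \approx 0.9871, &
R^2_{\text{XGBoost-PSO, test}} &\approx 0.97715\,(1 - 0.0090) \approx 0.9684,\\
R^2_{\text{SVM-PSO, train}} &\approx 0.99141\,(1 - 0.0428) \approx 0.9490, &
R^2_{\text{SVM-PSO, test}} &\approx 0.97715\,(1 - 0.0675) \approx 0.9112.
\end{aligned}
$$

If the percentages were instead absolute differences in R2 (percentage points), each implied value would shift by less than 0.002, so the ranking of the models is unaffected.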

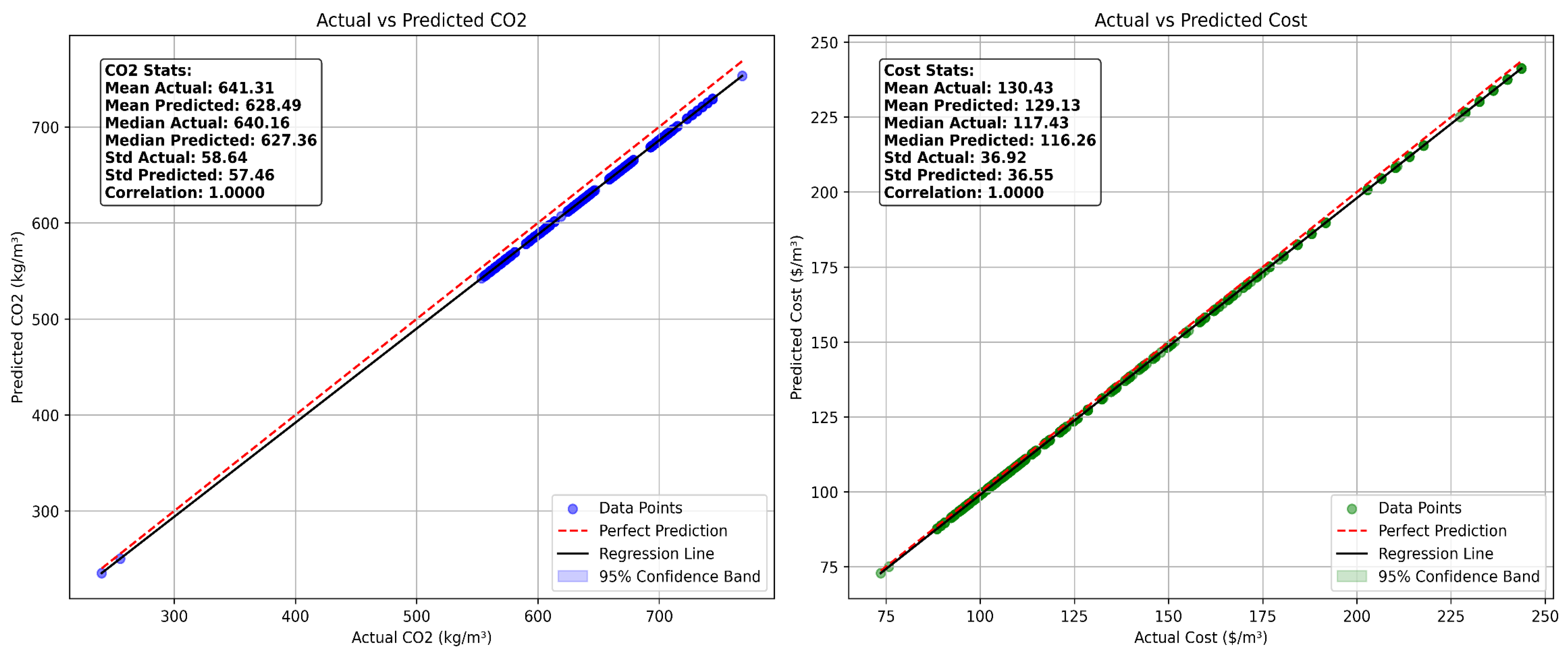

Predicting the production costs and CO2 emissions associated with PBMs is a critical step toward sustainable material design. Machine learning (ML) models, including algorithms such as XGBoost and artificial neural networks (ANNs), offer robust tools for forecasting these metrics from input variables such as raw material composition, processing methods, and energy usage [33]. Furthermore, manufacturing processes such as injection molding and resin transfer molding are energy-intensive, with energy demands ranging between 5 and 20 kilowatt-hours per kilogram, thereby increasing environmental impacts [138]. The integration of recycled fillers, including waste plastics and rubber, has been shown to reduce material costs by 10 to 20 percent and associated emissions by 15 to 30 percent [139]. Leveraging such data, ML models have demonstrated high predictive accuracy, with coefficients of determination (R2) exceeding 0.90 in many cases [140]. For instance, XGBoost optimized with Particle Swarm Optimization (PSO) has been employed to predict production costs with a root mean square error (RMSE) of approximately 5 US dollars per cubic meter and CO2 emissions with an RMSE near 10 kg per cubic meter. These findings highlight the potential of ML-driven frameworks to support decision-making in eco-efficient material development.

To strengthen the external validation, Table 7 compares the predicted cost and CO2 emissions of PBMs in this study with independent datasets and regional scenarios from the literature. The results demonstrate consistency with previously reported ranges, confirming that the model outputs are both realistic and generalizable.
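If the cost and CO2 targets are built bottom-up from mix proportions (an assumption, since the text does not describe how the ground-truth values were obtained), each mix's value is the sum of constituent quantities multiplied by unit factors. The sketch below shows that calculation. In the usage example, the cement factor of 0.9 kg CO2 per kg and the recycled-plastic factor (the midpoint of the 1–1.5 range) come from the notes to Table 7; every other quantity and unit factor is a hypothetical placeholder.

```python
def mix_cost_and_co2(mix_kg_per_m3, unit_cost_usd_per_kg, unit_co2_kg_per_kg):
    """Bottom-up cost and CO2 of one mix.

    mix_kg_per_m3: kg of each constituent per m^3 of mortar.
    Returns (cost in USD/m^3, CO2 in kg/m^3); a constituent without a unit
    factor raises KeyError rather than being silently ignored.
    """
    cost = sum(q * unit_cost_usd_per_kg[name] for name, q in mix_kg_per_m3.items())
    co2 = sum(q * unit_co2_kg_per_kg[name] for name, q in mix_kg_per_m3.items())
    return cost, co2


if __name__ == "__main__":
    mix = {"cement": 450.0, "sand": 1100.0, "plastic": 60.0, "water": 225.0}  # kg/m^3, hypothetical
    unit_cost = {"cement": 0.12, "sand": 0.02, "plastic": 0.05, "water": 0.001}  # USD/kg, placeholders
    # kg CO2 per kg: cement and plastic from the Table 7 notes, sand and water are placeholders
    unit_co2 = {"cement": 0.9, "sand": 0.005, "plastic": 1.25, "water": 0.0003}
    cost, co2 = mix_cost_and_co2(mix, unit_cost, unit_co2)
    print(f"cost = {cost:.2f} USD/m3, CO2 = {co2:.1f} kg/m3")
```

Labels computed this way are deterministic functions of the mix, so an ML model trained on them mainly learns to reproduce the unit-factor table; its added value lies in handling mixes whose constituents or factors are only partially known.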

Figure 20 shows the benefits of machine learning for PBMs. Although this study predicts CO2 emissions and production costs, it does not provide a complete life cycle assessment (LCA), which would require considering environmental impacts across the entire service life of the material, including transportation, use, and end-of-life stages. Nevertheless, the presented framework offers a critical first step toward integrating predictive modeling with LCA by quantifying key indicators at the production stage. Future research can extend this approach to full LCA models to provide a more comprehensive sustainability evaluation.

From a practical engineering perspective, the findings have significant implications. The developed hybrid ML framework and the accompanying GUI can support decision-making by enabling engineers, designers, and material producers to balance mechanical performance, cost efficiency, and environmental impact when selecting mixture designs. Such tools can contribute to optimizing material use in real construction projects, promoting sustainable practices, and aligning with emerging green building codes and policies [141]. In this way, the study not only advances predictive modeling techniques but also highlights their direct relevance to practical engineering applications.

Table 7. The cost and CO2 emissions of sustainable PBMs.


| Type of Concrete Mix | CO2 Emissions (kg CO2/kg of Concrete) | Production Cost (USD/Ton) | Notes | Ref. |
|---|---|---|---|---|
| Conventional Concrete (no plastic) | 160 | 140 | Based on cement (0.9 tons CO2/ton cement) and traditional aggregates. Costs vary by region. | [142] |
| Concrete with 20% Virgin Plastic | 250 | 200 | Slightly higher emissions due to virgin plastic production (2–3 tons CO2/ton plastic). Higher cost due to plastic price. | [143] |
| Concrete with 20% Recycled Plastic | 250 | 145 | Lower emissions due to recycled plastic (1–1.5 tons CO2/ton). Lower cost from using waste materials. | [144] |
| Concrete with 20% Bio-based Plastic (e.g., PLA) | 420 | 180 | Lower emissions from renewable sources (0.5–1 ton CO2/ton). Higher cost due to PLA production complexity. | [145] |
| Concrete with 20% CO2-based Plastic | 520 | 220 | Very low or negative emissions due to CO2 sequestration. Higher cost due to advanced catalyst technologies. | [146] |
| This study | 240.18 | 73.61 | | |