1. Introduction

Numerical modeling represents the dynamic behavior of buildings through assumptions about materials, boundaries, and loads, typically using finite element and modal analysis to estimate vibrations, natural frequencies, and mode shapes. Despite its scalability, it is computationally intensive and may lead to inaccuracies [1]. Wang et al. developed a response-surface-based finite element model-updating technique for a 120 m super high-rise building, reducing the error between the measured and simulated natural frequencies to less than 5% and thereby establishing a reliable benchmark for subsequent damage detection [2]. Accurate identification of structural parameters is critical for ensuring safety, optimizing performance during earthquakes, and enabling effective health monitoring, vibration control, and maintenance.

Hybrid techniques combine methods such as dynamic and ambient vibration testing to refine numerical models, leveraging their respective strengths while introducing added complexity and cost [3]. Accurate modeling and parameter estimation are vital for designing control laws such as state feedback and active disturbance rejection control [4,5] and for enabling effective algebraic observers for state estimation [6].

Several approaches have been proposed for parameter identification under seismic excitation. Ji et al. [7] introduced an iterative least squares (LS) technique to jointly estimate structural parameters and unknown ground motions, achieving robustness to noise. Similarly, ref. [8] presented an adaptive LS-based method for tracking time-varying parameters for damage detection. Concha et al. used adaptive observers with integral filters to estimate damping-to-mass and stiffness-to-mass ratios, ensuring positivity via projection techniques [9], while ref. [10] applied modal analysis to estimate natural frequencies.

Another approach combines wavelet analysis with mode decomposition to improve accuracy in identifying critical structural parameters under dynamic loading conditions.

Optimization-based parametric identification aims to minimize discrepancies between measured and modeled structural responses [11]. Gradient-based algorithms such as Gauss–Newton [12], Levenberg–Marquardt [13,14], and conjugate gradient methods [15] require derivative calculations. In contrast, heuristic methods such as genetic algorithms (GA) [14,16], particle swarm optimization (PSO) [14], and simulated annealing are better suited for global, non-convex problems. Bayesian methods, including Markov chain Monte Carlo (MCMC) sampling [17], offer probabilistic parameter estimation in nonlinear models [18].
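To make the gradient-based route concrete, the following minimal sketch (not taken from the cited works) identifies the stiffness k and damping c of a hypothetical single-degree-of-freedom oscillator with unit mass from a noisy free-decay record. It uses SciPy's `least_squares` with `method="lm"` (Levenberg–Marquardt, with the Jacobian estimated by finite differences). The true values, noise level, and starting guess are assumptions chosen for illustration; because local methods of this kind need a reasonable initial guess, the start point is deliberately placed close to the solution.

```python
import numpy as np
from scipy.optimize import least_squares

def free_decay(params, t, x0=1.0):
    """Free vibration of an underdamped SDOF oscillator with unit mass,
    initial displacement x0 and zero initial velocity."""
    k, c = params
    wn = np.sqrt(k)                       # natural circular frequency (m = 1)
    zeta = c / (2.0 * wn)                 # damping ratio
    wd = wn * np.sqrt(1.0 - zeta**2)      # damped circular frequency
    decay = np.exp(-zeta * wn * t)
    return x0 * decay * (np.cos(wd * t) + zeta * wn / wd * np.sin(wd * t))

# Synthetic "measured" response for hypothetical true values k = 40, c = 0.8
rng = np.random.default_rng(0)
t = np.linspace(0.0, 5.0, 501)
x_meas = free_decay((40.0, 0.8), t) + rng.normal(0.0, 0.005, t.size)

# Levenberg-Marquardt minimization of the residual vector
fit = least_squares(lambda p: free_decay(p, t) - x_meas, x0=[39.0, 0.7], method="lm")
k_hat, c_hat = fit.x
```

Starting the same fit from a guess far from the true frequency can converge to a local minimum, which illustrates why global heuristics are preferred for non-convex problems.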

Soft computing techniques (fuzzy logic, neural networks, swarm intelligence, and evolutionary computing) support modeling, uncertainty management, and structural reliability evaluation [19]. They have been applied to simulation and optimization tasks such as topology and shape optimization. Studies comparing NSGA-II and PSO for multi-objective design under seismic loads report that both improve performance and reduce weight, with PSO often yielding better results [20]. Soft computing also aids in solving inverse identification problems and managing uncertainties; for instance, fuzzy logic has been used to model stress–strain behavior [19]. For example, Chisari et al. proposed a GA to calibrate finite element (FE) models of base-isolated bridges by fitting Young's modulus and isolator stiffness [21], and Quaranta et al. used differential evolution (DE) and PSO to identify the Bouc–Wen model parameters of seismic isolators, with DE outperforming PSO [22]. Marano et al. applied a modified GA to large systems, improving performance under noisy data [23]. Károly et al. showed that DE outperformed PSO in estimating the parameters of a simplified nonlinear building model, although the model's simplification limited its applicability to complex structures [24]. Salaas et al. optimized a hybrid vibration-control system that couples base isolation with a tuned liquid column damper (TLCD) using metaheuristic search; for a benchmark tall building, they reported reductions of up to 40% in peak floor accelerations relative to an isolated-only baseline [25].

Parametric identification of stiffness and damping remains challenging due to modeling and measurement uncertainties. Traditional methods such as GA and PSO are moderately complex but become resource-intensive in multi-objective contexts. In contrast, quantum-inspired algorithms (QGA, QPSO, QNSGA-II, QDE, QSA) borrow the concept of superposition, encoding candidate solutions as probability amplitudes, to explore solution spaces more effectively. These methods, though computationally demanding, improve convergence and are valuable in structural simulations [26], provided that resource constraints are considered. Lee et al. introduced a quantum-based harmony search (QbHS) algorithm for simultaneous size and topology optimization of truss structures; on 20-, 24-, and 72-bar trusses, QbHS consistently converged to lighter designs than classical evolutionary methods while respecting frequency and displacement limits [27].

In summary, optimization techniques for parametric identification include deterministic methods such as least squares estimation (LSE) and Kalman filtering, which are well established but sensitive to noise, and heuristic algorithms such as GA and PSO, which better handle complexity but may suffer from premature convergence. Despite the increasing popularity of quantum-inspired metaheuristics in optimization, their application to structural parameter identification remains limited, particularly for civil structures subject to seismic loading. Moreover, while several works have compared classical algorithms such as GA and PSO, few have addressed the robustness and reproducibility of results through formal statistical validation frameworks.

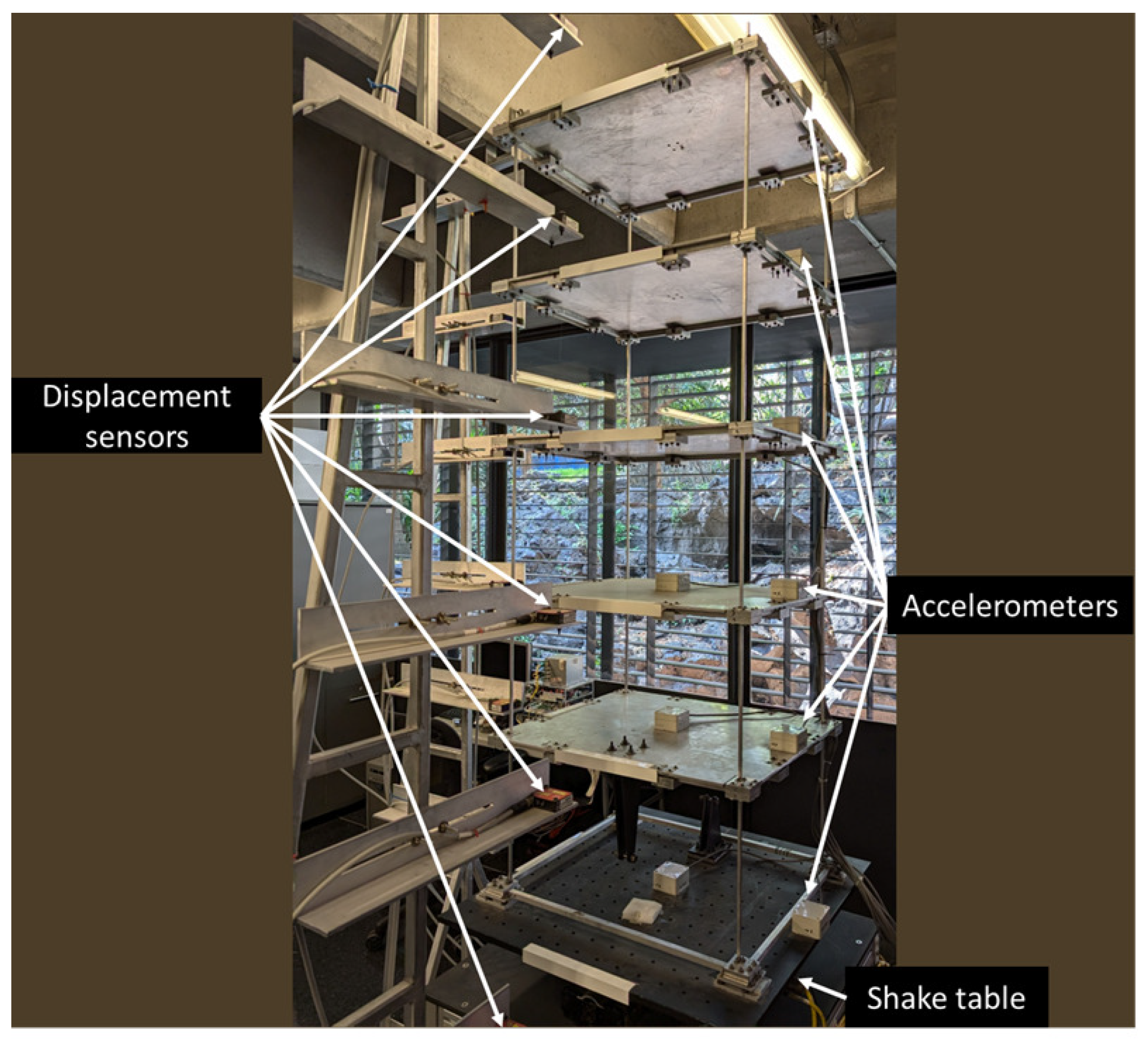

This study addresses this gap by proposing a multi-objective optimization approach for identifying the stiffness and damping parameters of a five-story civil structure using both classical and quantum-inspired metaheuristics. The methodology includes a comparative analysis of seven algorithms and incorporates a comprehensive statistical validation process, including tests for normality, variance homogeneity, and ranking consistency. The contribution of this work lies not only in demonstrating the performance of quantum-inspired models such as QNSGA-II and QSA, but also in offering a statistically rigorous and replicable framework for structural parameter estimation, with potential applications in structural health monitoring, vibration control, and seismic engineering. Each method was applied over 30 independent runs using experimental seismic data, and performance was assessed based on the accuracy of the identified parameters and statistical robustness. Error metrics include the mean squared error (MSE), root mean squared error (RMSE), mean absolute error (MAE), and coefficient of determination (R²). Normality was assessed with the Shapiro–Wilk test, and variance homogeneity was checked using the Levene and Bartlett tests. ANOVA with Tukey–Bonferroni post hoc comparisons and Bonferroni-corrected t-tests were used to identify significant inter-model differences while controlling for Type I errors.
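The four error metrics can be computed as in the following minimal sketch. It assumes that the fourth metric is the coefficient of determination R² and that each metric is evaluated on a pair of measured and simulated time histories; the function name `error_metrics` is hypothetical.

```python
import numpy as np

def error_metrics(y_meas, y_sim):
    """Return MSE, RMSE, MAE and the coefficient of determination R^2."""
    y_meas = np.asarray(y_meas, dtype=float)
    y_sim = np.asarray(y_sim, dtype=float)
    residual = y_meas - y_sim
    mse = np.mean(residual ** 2)
    rmse = np.sqrt(mse)
    mae = np.mean(np.abs(residual))
    ss_res = np.sum(residual ** 2)                   # residual sum of squares
    ss_tot = np.sum((y_meas - y_meas.mean()) ** 2)   # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    return {"MSE": mse, "RMSE": rmse, "MAE": mae, "R2": r2}

metrics = error_metrics([1, 2, 3, 4], [1.1, 1.9, 3.2, 3.8])
```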

The paper is organized as follows:

Section 2 introduces the mathematical model and multi-objective function.

Section 3 details the optimization methods.

Section 4 presents the experimental and statistical results.

Section 5 discusses the findings and future work, and

Section 6 concludes the work.

3. Optimization Algorithms

This section summarizes the algorithms used to optimize the multi-objective function and identify the parameters presented in Section 2, an approach adopted because estimating the model parameters directly from experimental data is inherently complex.

3.1. Genetic Algorithm

The Genetic Algorithm (GA) applies the principles of natural selection to determine the optimal structural parameters. It iteratively evolves a population of candidate solutions through selection, crossover, mutation, ordering, and migration processes, aiming to minimize a predefined cost function. The algorithm navigates complex solution spaces effectively, which makes it useful for parameter identification in structural engineering, where it helps build accurate and robust models that predict structural behavior under different loading conditions.
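A minimal real-coded GA sketch follows. It assumes binary tournament selection, arithmetic crossover, Gaussian mutation with a linearly shrinking scale, and single-individual elitism; these operator choices are illustrative assumptions rather than the configuration used in this study (ordering and migration between subpopulations are omitted), and the quadratic test cost stands in for the structural objective.

```python
import numpy as np

def genetic_algorithm(cost, lower, upper, pop_size=40, generations=150,
                      crossover_rate=0.8, mutation_scale=0.1, seed=0):
    """Minimize `cost` over a box with a simple real-coded GA."""
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    pop = rng.uniform(lower, upper, size=(pop_size, lower.size))
    fit = np.array([cost(p) for p in pop])
    for g in range(generations):
        elite = pop[np.argmin(fit)].copy()            # elitism: keep the best individual
        # Binary tournament selection
        pairs = rng.integers(0, pop_size, size=(pop_size, 2))
        winners = np.where(fit[pairs[:, 0]] < fit[pairs[:, 1]], pairs[:, 0], pairs[:, 1])
        parents = pop[winners]
        # Arithmetic crossover between consecutive parents
        children = parents.copy()
        for i in range(0, pop_size - 1, 2):
            if rng.random() < crossover_rate:
                a = rng.random()
                children[i] = a * parents[i] + (1 - a) * parents[i + 1]
                children[i + 1] = (1 - a) * parents[i] + a * parents[i + 1]
        # Gaussian mutation whose scale shrinks linearly over the generations
        sigma = mutation_scale * (1.0 - g / generations) * (upper - lower)
        children = np.clip(children + rng.normal(size=children.shape) * sigma, lower, upper)
        children[0] = elite
        pop = children
        fit = np.array([cost(p) for p in pop])
    best = np.argmin(fit)
    return pop[best], fit[best]

sphere = lambda p: float(np.sum(p ** 2))   # hypothetical test cost
x_best, f_best = genetic_algorithm(sphere, [-5, -5, -5], [5, 5, 5])
```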

3.2. Particle Swarm Optimization

Particle Swarm Optimization (PSO) simulates the social behavior of animal groups using a swarm of particles that navigate the solution space. Each particle updates its position based on its personal best position $\mathbf{p}_i^{t}$ and the swarm's best position $\mathbf{g}^{t}$, promoting convergence toward optimal solutions. PSO balances exploration and exploitation, making it valuable for identifying parameters in complex structural models. At each iteration, particle movement is governed by its velocity and position:

$$\mathbf{v}_i^{t+1} = w\,\mathbf{v}_i^{t} + c_1 r_1 \left(\mathbf{p}_i^{t} - \mathbf{x}_i^{t}\right) + c_2 r_2 \left(\mathbf{g}^{t} - \mathbf{x}_i^{t}\right),$$

$$\mathbf{x}_i^{t+1} = \mathbf{x}_i^{t} + \mathbf{v}_i^{t+1},$$

where $\mathbf{v}_i^{t}$ and $\mathbf{x}_i^{t}$ represent the velocity and position of particle $i$ at iteration $t$. The cognitive coefficient $c_1$ guides the particle toward its previously discovered best position, while the social coefficient $c_2$ directs it toward the best-known position in the swarm, balancing individual exploration and collective knowledge. The random variables $r_1$ and $r_2$, distributed between 0 and 1, add stochasticity to the search process and enhance exploration. The inertia weight $w$ scales the influence of the previous velocity and is updated at each iteration through a forgetting factor, as specified in [14].
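The PSO update rule (a new velocity built from inertia, cognitive, and social terms, then a new position from that velocity) can be sketched as follows. The sketch assumes that the forgetting factor acts multiplicatively on the inertia weight, w ← λw, and adds a velocity clamp; both are assumptions rather than the exact rule of [14]. The coefficient values and the quadratic test cost are illustrative.

```python
import numpy as np

def pso(cost, lower, upper, n_particles=30, iterations=200,
        w0=0.9, forgetting=0.99, c1=1.5, c2=1.5, seed=0):
    """Minimize `cost` over a box with global-best PSO."""
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    x = rng.uniform(lower, upper, size=(n_particles, lower.size))
    v = np.zeros_like(x)
    p_best, p_cost = x.copy(), np.array([cost(xi) for xi in x])
    g_best = p_best[np.argmin(p_cost)].copy()
    v_max = 0.2 * (upper - lower)                  # velocity clamp (assumption)
    w = w0
    for _ in range(iterations):
        r1 = rng.random(x.shape)                   # r1, r2 drawn from U(0, 1)
        r2 = rng.random(x.shape)
        v = w * v + c1 * r1 * (p_best - x) + c2 * r2 * (g_best - x)
        v = np.clip(v, -v_max, v_max)
        x = np.clip(x + v, lower, upper)
        f = np.array([cost(xi) for xi in x])
        improved = f < p_cost                      # update personal bests
        p_best[improved], p_cost[improved] = x[improved], f[improved]
        g_best = p_best[np.argmin(p_cost)].copy()  # update swarm best
        w *= forgetting                            # assumed rule: w(t+1) = lambda * w(t)
    return g_best, p_cost.min()

sphere = lambda p: float(np.sum(p ** 2))   # hypothetical test cost
x_best, f_best = pso(sphere, [-5, -5, -5], [5, 5, 5])
```

Decaying the inertia weight shifts the swarm from broad exploration in early iterations toward local refinement later on.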

3.3. Quantum-Inspired Genetic Algorithm

The Quantum Genetic Algorithm (QGA) integrates the principles of genetic algorithms with concepts from quantum computing. QGA represents chromosomes as strings of quantum bits (qubits) that can exist in a superposition of states, enabling the simultaneous representation of multiple possibilities.

A qubit can be in state 0, state 1, or a superposition of both. Its state can be expressed as

$$|\psi\rangle = \alpha\,|0\rangle + \beta\,|1\rangle,$$

where the states $|0\rangle$ and $|1\rangle$ represent the classical binary values 0 and 1, and the complex coefficients $\alpha$ and $\beta$ satisfy $|\alpha|^2 + |\beta|^2 = 1$. Here, $|\alpha|^2$ is the probability of the qubit being observed in state 0, and $|\beta|^2$ is the probability of it being observed in state 1. Quantum gates drive the evolution of the quantum state by updating the qubit amplitudes according to a look-up table that considers the fitness function and the current state. This process adjusts the amplitudes to steer each qubit toward an optimal solution, thereby directing the evolutionary process of the quantum chromosome [29].
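The following minimal sketch illustrates qubit encoding, observation, and rotation on bit strings. It is deliberately simplified and hypothetical: all individuals are sampled from one shared register, each qubit is stored as an angle θ with (α, β) = (cos θ, sin θ), which keeps |α|² + |β|² = 1 and is equivalent to applying a 2×2 rotation matrix to the amplitudes, and a fixed rotation step replaces the fitness-dependent look-up table of [29]. The angle is bounded away from 0 and π/2 so that exploration never stops completely; the test problem (maximizing the number of ones) is illustrative.

```python
import numpy as np

def quantum_inspired_ga(fitness, n_bits, pop_size=20, generations=200,
                        delta_theta=0.01 * np.pi, seed=0):
    """Maximize `fitness` over bit strings with a qubit-encoded register."""
    rng = np.random.default_rng(seed)
    # Qubit j is stored as an angle: (alpha_j, beta_j) = (cos theta_j, sin theta_j)
    theta = np.full(n_bits, np.pi / 4)                 # equal superposition, P(1) = 0.5
    theta_min, theta_max = 0.02 * np.pi, 0.48 * np.pi  # never fully collapse a qubit
    best_bits, best_fit = None, -np.inf
    for _ in range(generations):
        # Observation: each qubit collapses to 1 with probability |beta|^2
        p_one = np.sin(theta) ** 2
        samples = (rng.random((pop_size, n_bits)) < p_one).astype(int)
        fits = np.array([fitness(s) for s in samples])
        i = int(np.argmax(fits))
        if fits[i] > best_fit:
            best_bits, best_fit = samples[i].copy(), fits[i]
        # Rotation gate: adding delta_theta to theta equals applying the rotation
        # matrix U(delta_theta) to (alpha, beta); rotate toward the best bits
        direction = np.where(best_bits == 1, 1.0, -1.0)
        theta = np.clip(theta + direction * delta_theta, theta_min, theta_max)
    return best_bits, best_fit

bits, best = quantum_inspired_ga(np.sum, n_bits=30)   # hypothetical OneMax problem
```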

3.4. Quantum-Inspired Particle Swarm Optimization

To address the limitations of traditional PSO in discrete search spaces, Quantum-Inspired Particle Swarm Optimization (QPSO) represents particle positions with qubits. QPSO uses a randomized observation mechanism that depends on the qubit state, which eliminates the need for the sigmoid function commonly employed in discrete PSO algorithms. This modification simplifies the algorithm and improves its computational efficiency. QPSO is also well suited to continuous optimization problems and can be tailored with various probability distributions to improve performance. In the continuous setting, particles are sampled from a probability distribution whose spread is governed by the mean best position, computed as the average of all personal best positions.
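For the continuous case, a minimal sketch of the widely used delta-potential-well form of QPSO is shown below: each particle is sampled around a local attractor that mixes its personal best and the global best, with a spread proportional to its distance from the mean best position. The linearly decreasing contraction-expansion coefficient β (from 1.0 to 0.5) and the quadratic test cost are assumptions for illustration, not the configuration used in this study.

```python
import numpy as np

def qpso(cost, lower, upper, n_particles=30, iterations=200,
         beta_start=1.0, beta_end=0.5, seed=0):
    """Minimize `cost` over a box with delta-potential-well QPSO."""
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    shape = (n_particles, lower.size)
    x = rng.uniform(lower, upper, size=shape)
    p_best = x.copy()
    p_cost = np.array([cost(xi) for xi in x])
    for t in range(iterations):
        g_best = p_best[np.argmin(p_cost)]
        m_best = p_best.mean(axis=0)                          # mean best position
        beta = beta_start - (beta_start - beta_end) * t / iterations
        phi = rng.random(shape)
        attractor = phi * p_best + (1.0 - phi) * g_best       # local attractor
        u = 1.0 - rng.random(shape)                           # u in (0, 1], avoids log(1/0)
        sign = np.where(rng.random(shape) < 0.5, -1.0, 1.0)
        x = attractor + sign * beta * np.abs(m_best - x) * np.log(1.0 / u)
        x = np.clip(x, lower, upper)
        f = np.array([cost(xi) for xi in x])
        improved = f < p_cost
        p_best[improved], p_cost[improved] = x[improved], f[improved]
    i = np.argmin(p_cost)
    return p_best[i], p_cost[i]

sphere = lambda p: float(np.sum(p ** 2))   # hypothetical test cost
x_best, f_best = qpso(sphere, [-5, -5, -5], [5, 5, 5])
```

Unlike classical PSO, there is no velocity term: positions are drawn directly from the sampling distribution, so β is the main parameter controlling exploration.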

3.5. Quantum-Inspired Non-Dominated Sorting Genetic Algorithm 2

Quantum-Inspired NSGA-II (QNSGA-II) extends the classical multi-objective optimization algorithm NSGA-II by incorporating principles from quantum computing to enhance both solution diversity and convergence efficiency. Whereas traditional NSGA-II processes solutions as real-valued vectors, QNSGA-II encodes the population in quantum registers, facilitating parallel exploration of multiple potential solutions. Within the QNSGA-II framework, each individual in the population is represented as a quantum chromosome consisting of a register of qubits, defined as

$$Q = \left[q_1, q_2, \ldots, q_L\right],$$

where each qubit $q_j$ encodes a probabilistic decision variable that evolves over generations. The state of the quantum register at any time is determined by a vector of probability amplitudes, which can be updated through quantum-inspired operators. To guide the optimization process, QNSGA-II employs quantum rotation gates that adjust the probability distribution associated with each qubit. Given a qubit state represented by the amplitude vector $[\alpha_j, \beta_j]^{T}$, its evolution is governed by the update rule

$$\begin{bmatrix}\alpha_j' \\ \beta_j'\end{bmatrix} = U(\Delta\theta_j)\begin{bmatrix}\alpha_j \\ \beta_j\end{bmatrix}, \qquad U(\Delta\theta_j) = \begin{bmatrix}\cos\Delta\theta_j & -\sin\Delta\theta_j \\ \sin\Delta\theta_j & \cos\Delta\theta_j\end{bmatrix},$$

where $U(\Delta\theta_j)$ is a quantum rotation matrix that dynamically modifies the probability amplitudes based on the dominance relationships and the crowding distance in the Pareto front [30].

The core operations in QNSGA-II follow the standard selection, crossover, and mutation steps of NSGA-II but incorporate quantum update rules. The quantum rotation mechanism allows the algorithm to steer the probability distributions toward promising solutions while maintaining a diverse set of potential candidates in the Pareto front.
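The dominance and crowding-distance information that drives the rotation is the same as in classical NSGA-II. The sketch below shows only those two classical building blocks, fast non-dominated sorting and crowding distance, for minimization; the quantum encoding and the mapping from these quantities to rotation angles in [30] are not reproduced, and the objective values are hypothetical.

```python
import numpy as np

def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives minimized)."""
    a, b = np.asarray(a), np.asarray(b)
    return bool(np.all(a <= b) and np.any(a < b))

def non_dominated_sort(F):
    """Fast non-dominated sorting: list of fronts (lists of indices), best first."""
    n = len(F)
    dominated_set = [[] for _ in range(n)]   # indices that solution i dominates
    dom_count = [0] * n                      # number of solutions dominating i
    fronts = [[]]
    for i in range(n):
        for j in range(n):
            if dominates(F[i], F[j]):
                dominated_set[i].append(j)
            elif dominates(F[j], F[i]):
                dom_count[i] += 1
        if dom_count[i] == 0:
            fronts[0].append(i)
    k = 0
    while fronts[k]:
        next_front = []
        for i in fronts[k]:
            for j in dominated_set[i]:
                dom_count[j] -= 1
                if dom_count[j] == 0:
                    next_front.append(j)
        fronts.append(next_front)
        k += 1
    return fronts[:-1]                       # drop the trailing empty front

def crowding_distance(F):
    """Crowding distance of each solution in one front; boundary solutions get inf."""
    F = np.asarray(F, float)
    n, m = F.shape
    dist = np.zeros(n)
    for obj in range(m):
        order = np.argsort(F[:, obj])
        dist[order[0]] = dist[order[-1]] = np.inf
        span = F[order[-1], obj] - F[order[0], obj]
        if span == 0:
            continue
        for r in range(1, n - 1):
            dist[order[r]] += (F[order[r + 1], obj] - F[order[r - 1], obj]) / span
    return dist

# Hypothetical two-objective values (e.g., displacement error, velocity error)
F = np.array([[1, 4], [2, 2], [4, 1], [3, 3], [5, 5]], dtype=float)
fronts = non_dominated_sort(F)
cd = crowding_distance(F[fronts[0]])
```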

3.6. Quantum-Inspired Differential Evolution

Quantum-Inspired Differential Evolution (QDE) extends the classical Differential Evolution (DE) algorithm with quantum-inspired techniques to improve optimization efficiency. Unlike traditional DE, which uses real-valued solution vectors, QDE encodes candidate solutions as quantum probability distributions, leading to better exploration and exploitation during the optimization process.

In QDE, each decision variable is represented not as a single numerical value but as a probability density function (PDF) influenced by quantum-inspired operators. A solution vector $X$ is described as

$$X = \left[x_1, x_2, \ldots, x_n\right],$$

where each variable $x_j$ follows a probability distribution that evolves throughout the optimization process. The quantum representation of each variable is expressed in terms of its probability density:

$$P(x_j) = \left|\psi_j(x_j)\right|^2,$$

where $\psi_j$ is the quantum wave function associated with the decision variable $x_j$ and $|\psi_j(x_j)|^2$ is the probability density of sampling a particular value. Instead of relying on fixed numerical values, QDE dynamically updates these probability distributions based on fitness evaluations [31].
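Because QDE builds on classical DE, the sketch below shows the classical DE/rand/1/bin scheme (mutation, binomial crossover, greedy selection) on a hypothetical quadratic cost. The quantum-inspired probability-density encoding of [31] is not reproduced; F = 0.5 and CR = 0.9 are common default choices, not the values used in this study.

```python
import numpy as np

def differential_evolution(cost, lower, upper, pop_size=30, generations=200,
                           F=0.5, CR=0.9, seed=0):
    """Minimize `cost` over a box with classical DE/rand/1/bin."""
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    dim = lower.size
    pop = rng.uniform(lower, upper, size=(pop_size, dim))
    fit = np.array([cost(p) for p in pop])
    for _ in range(generations):
        for i in range(pop_size):
            # Mutation: base vector plus scaled difference of two others (all distinct from i)
            others = [j for j in range(pop_size) if j != i]
            a, b, c = pop[rng.choice(others, size=3, replace=False)]
            mutant = np.clip(a + F * (b - c), lower, upper)
            # Binomial crossover; at least one gene always comes from the mutant
            mask = rng.random(dim) < CR
            mask[rng.integers(dim)] = True
            trial = np.where(mask, mutant, pop[i])
            # Greedy one-to-one selection
            f_trial = cost(trial)
            if f_trial <= fit[i]:
                pop[i], fit[i] = trial, f_trial
    best = np.argmin(fit)
    return pop[best], fit[best]

sphere = lambda p: float(np.sum(p ** 2))   # hypothetical test cost
x_best, f_best = differential_evolution(sphere, [-5, -5, -5], [5, 5, 5])
```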

3.7. Quantum-Inspired Simulated Annealing

Classical Simulated Annealing (SA) is an optimization algorithm inspired by the annealing process in metallurgy, where a system is slowly cooled to reach a stable, low-energy state. Quantum-Inspired Simulated Annealing (QSA) extends this concept by incorporating quantum probability principles, allowing for an adaptive and flexible exploration of the solution space.

As in the quantum-inspired models described previously, candidate solutions in QSA are encoded using quantum probability amplitudes rather than deterministic numerical values. However, unlike QNSGA-II and QDE, which rely on evolutionary mechanisms, QSA introduces a temperature-dependent adaptation that dynamically influences quantum state transitions.

To model this behavior, QSA replaces classical probability distributions with quantum state transitions controlled by temperature-dependent quantum rotation matrices. The evolution of each quantum-encoded solution follows the update rule

$$|\psi_{k+1}\rangle = U(T_k)\,|\psi_k\rangle,$$

where $k$ is the iteration index and $U(T_k)$ represents a temperature-dependent quantum rotation matrix that adjusts probability amplitudes according to the annealing schedule [32]. As the temperature $T$ decreases, the probability distributions contract, allowing for a gradual refinement of solutions while maintaining global search capabilities.

A distinguishing feature of QSA is its acceptance criterion, which integrates classical annealing principles with quantum interference effects. Instead of relying solely on Boltzmann probabilities, QSA introduces a hybrid acceptance function that combines the traditional Boltzmann factor $\exp(-\Delta E/T)$, where $\Delta E$ is the change in cost between the candidate and current solutions, with a quantum correction function that dynamically adjusts acceptance probabilities [32]. This mechanism enhances the ability to escape local minima while maintaining an efficient convergence rate.
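For reference, the classical annealing loop that QSA extends is sketched below with the Metropolis (Boltzmann) acceptance rule, geometric cooling, and a proposal width that shrinks with temperature. The quantum rotation and quantum correction term of [32] are not reproduced, and the cooling rate, step size, and test cost are assumptions.

```python
import numpy as np

def simulated_annealing(cost, x0, lower, upper, T0=1.0, cooling=0.995,
                        n_iter=5000, step=0.5, seed=0):
    """Minimize `cost` with classical SA (Metropolis acceptance, geometric cooling)."""
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    x = np.asarray(x0, float)
    fx = cost(x)
    best_x, best_f = x.copy(), fx
    T = T0
    for _ in range(n_iter):
        # Gaussian neighbour whose width shrinks as the temperature falls
        width = step * np.sqrt(T / T0)
        cand = np.clip(x + rng.normal(0.0, width, size=x.size), lower, upper)
        f_cand = cost(cand)
        delta = f_cand - fx
        # Metropolis rule: accept improvements, accept worse moves with prob exp(-delta/T)
        if delta <= 0 or rng.random() < np.exp(-delta / T):
            x, fx = cand, f_cand
            if fx < best_f:
                best_x, best_f = x.copy(), fx
        T *= cooling                       # geometric cooling schedule (assumption)
    return best_x, best_f

sphere = lambda p: float(np.sum(p ** 2))   # hypothetical test cost
x_best, f_best = simulated_annealing(sphere, [4.0, -3.0, 2.0], [-5.0] * 3, [5.0] * 3)
```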

Table 2 summarizes the hyperparameters and cost-function formulations used in each optimization model, enabling a clear comparison of their configurations and approaches.

5. Discussion

5.1. Algorithm Performance and Parameter Identification Insights

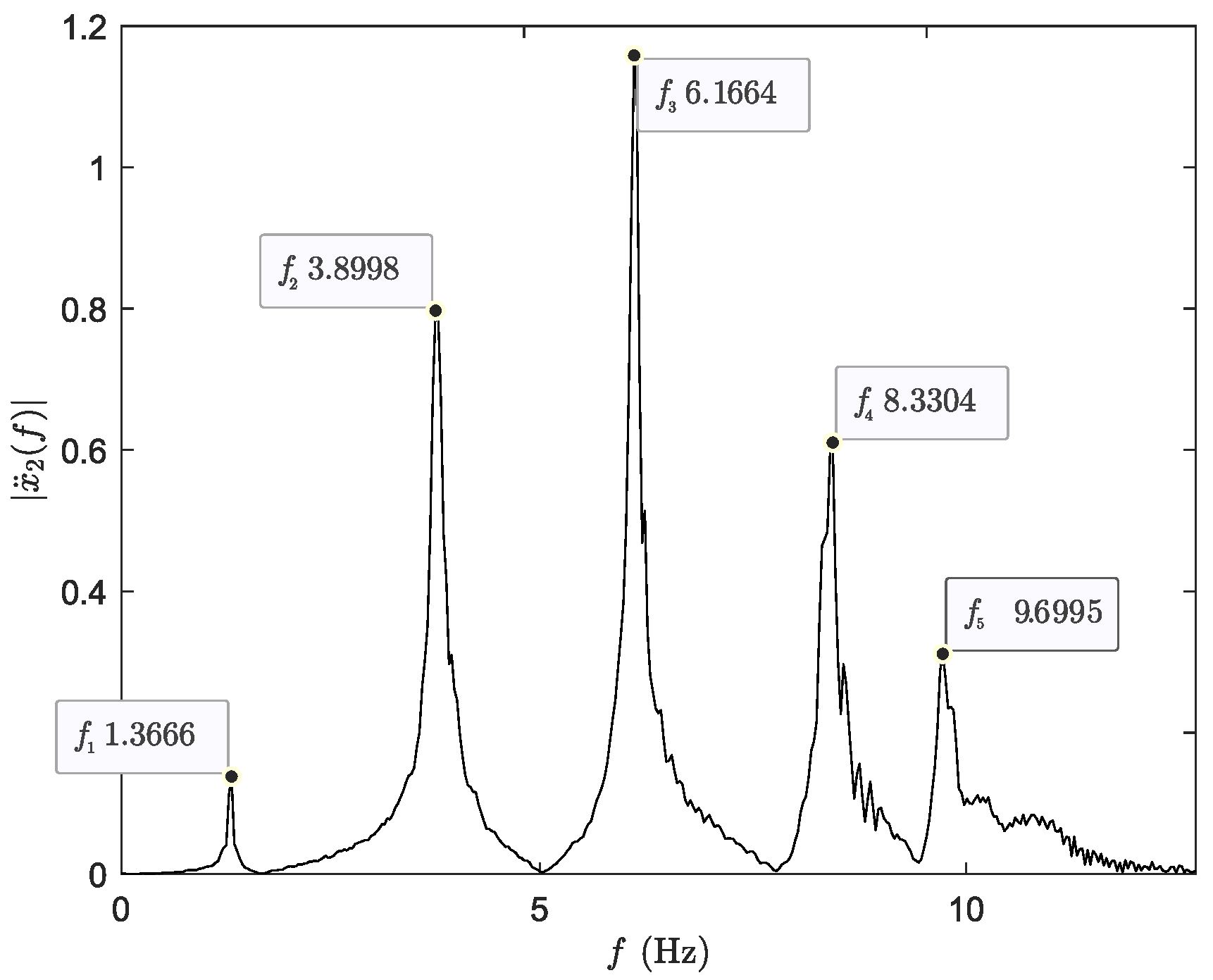

Identifying structural parameters in civil structures, such as natural frequencies and damping ratios, is essential for the design, maintenance, and safety assessment of buildings and bridges. Accurate estimation of these parameters enables early detection of structural degradation, reduces the risk of failure under dynamic loads, and supports optimized designs in terms of strength, efficiency, and cost-effectiveness. As shown in Table 3, the correct identification of stiffness and damping values contributes directly to structural health monitoring and model calibration.

In this work, a five-story linear mass–spring–damper model was used as a controlled benchmark to evaluate the performance of various optimization algorithms. Although simplified, this model captures the dominant modal dynamics and facilitates repeatable testing. Nevertheless, it omits key features of real structures, including geometric and material nonlinearities, stiffness degradation, soil–structure interaction, and non-viscous damping mechanisms. These simplifications limit direct applicability to full-scale buildings. To enhance generalizability, future work should incorporate high-fidelity finite element models and field data from instrumented structures. Additionally, adapting the objective function to account for model uncertainties and nonlinear behavior would increase the relevance of this approach for practical applications.
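A minimal sketch of such a benchmark follows. It assumes a shear-building idealization with lumped floor masses and story springs and dampers, so that K and C are tridiagonal and the equation of motion is M ẍ + C ẋ + K x = −M 1 ẍ_g for displacements relative to the ground. The response is integrated with the Newmark average-acceleration method (γ = 1/2, β = 1/4). The function names, masses, story stiffness and damping values, and the sinusoidal ground acceleration are hypothetical placeholders, not the identified parameters or the recorded excitation.

```python
import numpy as np

def chain_matrix(story_values):
    """Tridiagonal stiffness (or damping) matrix of a shear building; index 0 = first story."""
    s = np.asarray(story_values, float)
    n = s.size
    A = np.zeros((n, n))
    for i in range(n):
        A[i, i] = s[i] + (s[i + 1] if i + 1 < n else 0.0)
        if i + 1 < n:
            A[i, i + 1] = A[i + 1, i] = -s[i + 1]
    return A

def shear_building_response(m, k, c, ground_acc, dt):
    """Floor displacements and velocities relative to the ground for
    M x'' + C x' + K x = -M 1 a_g, via Newmark average acceleration."""
    M = np.diag(np.asarray(m, float))
    K, C = chain_matrix(k), chain_matrix(c)
    n, n_steps = M.shape[0], len(ground_acc)
    P = -np.outer(ground_acc, M @ np.ones(n))    # effective seismic forces, one row per step
    x, v, a = (np.zeros((n_steps, n)) for _ in range(3))
    a[0] = np.linalg.solve(M, P[0])              # structure starts at rest
    K_hat = K + 2.0 / dt * C + 4.0 / dt**2 * M   # effective stiffness (gamma=1/2, beta=1/4)
    for i in range(n_steps - 1):
        p_hat = (P[i + 1] + M @ (4.0 / dt**2 * x[i] + 4.0 / dt * v[i] + a[i])
                 + C @ (2.0 / dt * x[i] + v[i]))
        x[i + 1] = np.linalg.solve(K_hat, p_hat)
        v[i + 1] = 2.0 / dt * (x[i + 1] - x[i]) - v[i]
        a[i + 1] = 4.0 / dt**2 * (x[i + 1] - x[i]) - 4.0 / dt * v[i] - a[i]
    return x, v

# Hypothetical five-story example: unit masses, uniform story stiffness and damping
dt = 0.005
t = np.arange(2000) * dt
ground_acc = 0.5 * np.sin(2 * np.pi * 1.5 * t)
x, v = shear_building_response([1.0] * 5, [1200.0] * 5, [2.0] * 5, ground_acc, dt)
```

In an identification loop, the ten story values (five stiffnesses, five damping coefficients) would be the decision variables, and the simulated x and v would be compared with the measured floor responses.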

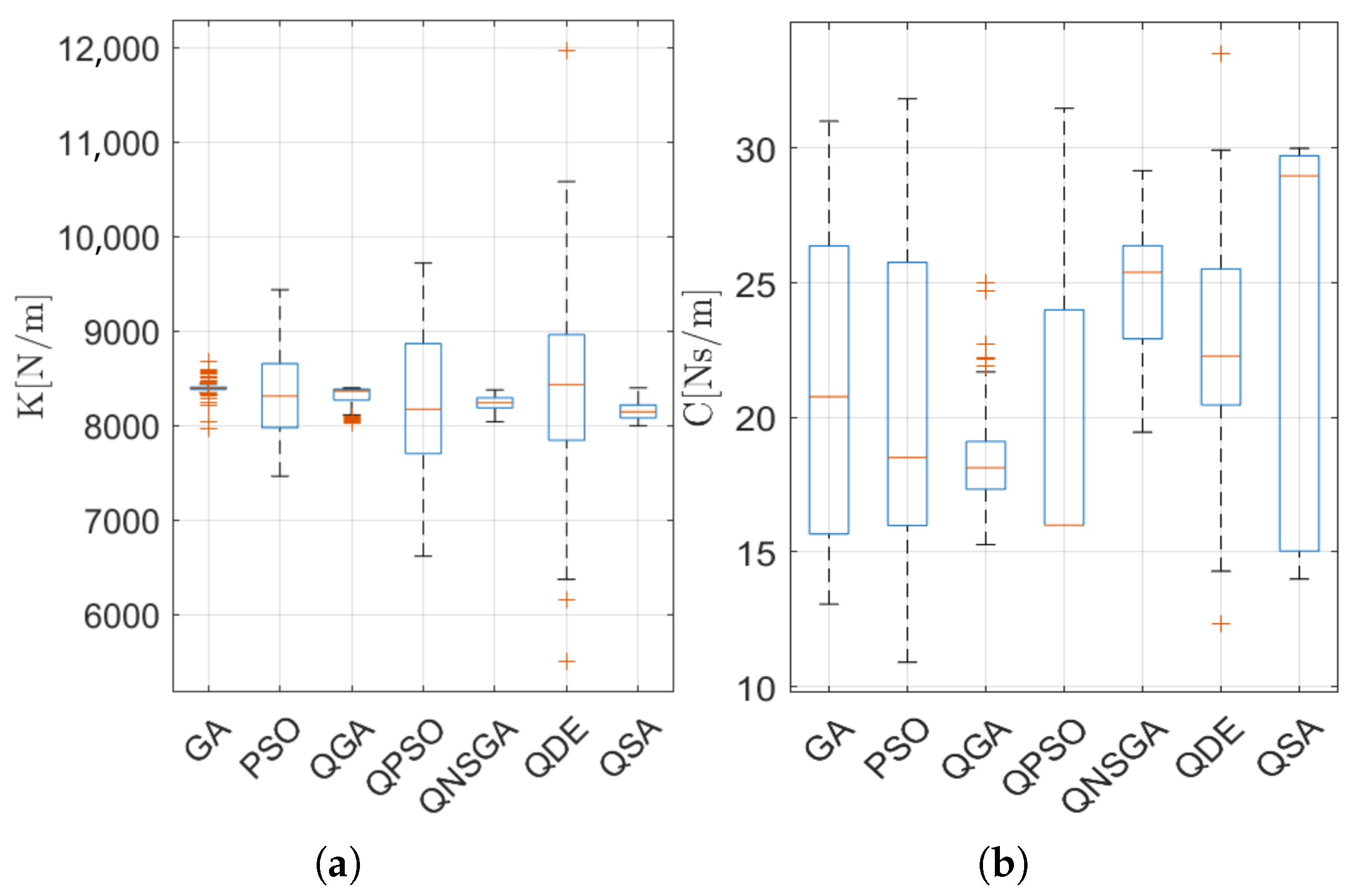

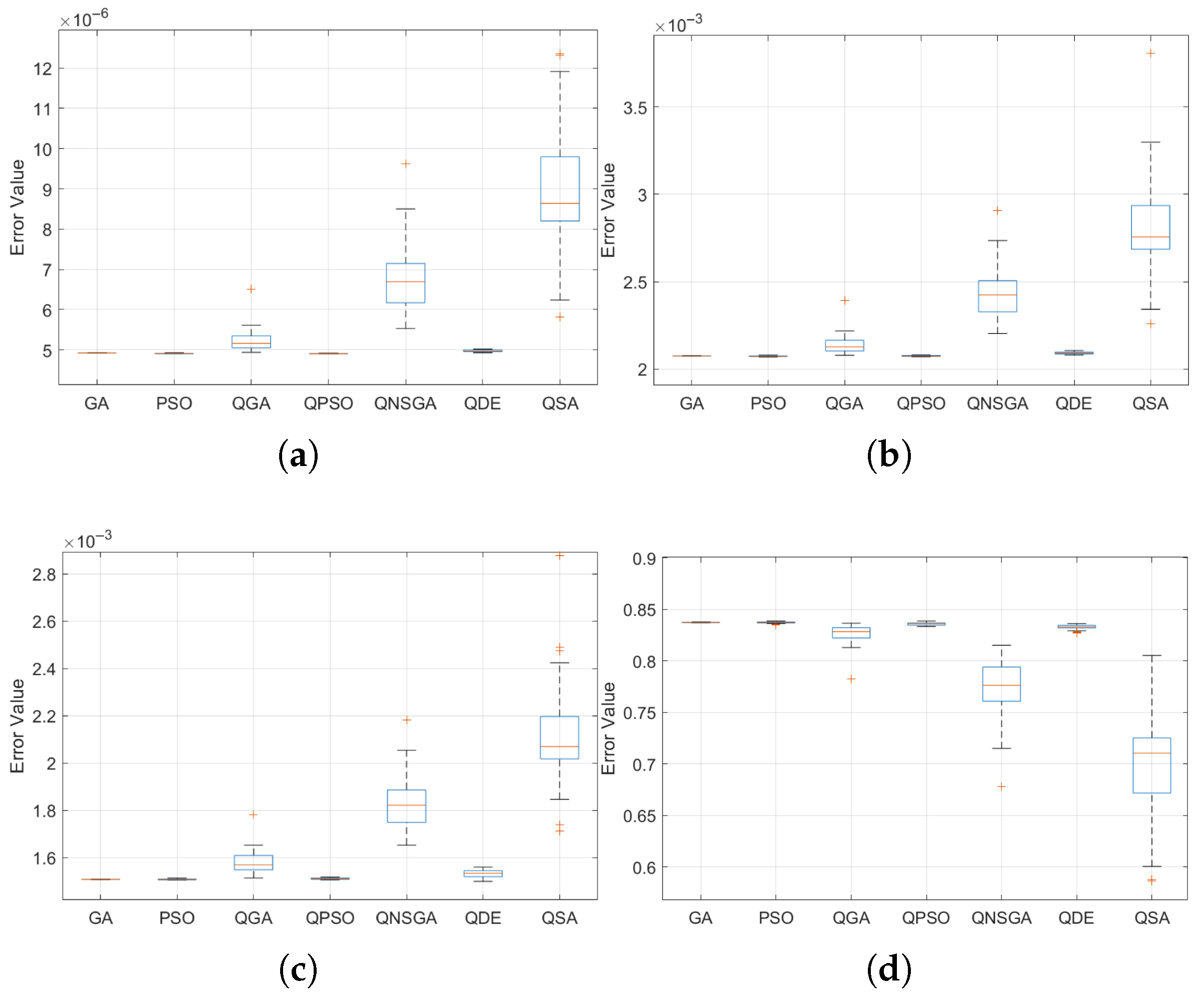

The performance results revealed interesting differences among algorithms. GA exhibited the highest average ranking (26.4%) according to the Borda count in Table 9, while QSA, despite achieving the lowest MSE and RMSE values, had the lowest ranking probability (23.2%). This divergence points to the distinction between accuracy and robustness. While QSA can converge to highly accurate solutions, its variability across runs reduces its overall reliability. In contrast, models such as QNSGA-II and QDE delivered more consistent results across executions, even if their mean errors were slightly higher. This reinforces the importance of evaluating both dimensions when assessing optimization performance: accuracy in individual runs and robustness across repeated trials.
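The ranking aggregation can be sketched as follows. The exact Borda scheme and bootstrap settings used in this study are not reproduced; the sketch assumes that each criterion awards n − 1 points to the best algorithm down to 0 for the worst, and that the bootstrap resamples runs with replacement and records each algorithm's mean rank, from which the mean, standard deviation, and coefficient of variation follow. Ties are broken by column order, which is a simplification, and the function names are hypothetical.

```python
import numpy as np

def ranks_per_row(errors):
    """Rank algorithms within each row (0 = lowest error); ties broken by column order."""
    return np.argsort(np.argsort(errors, axis=1, kind="stable"), axis=1, kind="stable")

def borda_scores(errors):
    """errors: (n_criteria, n_algorithms), lower is better. The best algorithm in a
    row earns n_algorithms - 1 points, the worst earns 0; points are summed."""
    errors = np.asarray(errors, float)
    return ((errors.shape[1] - 1) - ranks_per_row(errors)).sum(axis=0)

def bootstrap_mean_rank(run_errors, n_boot=1000, seed=0):
    """run_errors: (n_runs, n_algorithms) values of one metric. Resamples runs with
    replacement and returns mean, standard deviation and coefficient of variation
    of each algorithm's average rank (1 = best)."""
    rng = np.random.default_rng(seed)
    run_errors = np.asarray(run_errors, float)
    n_runs = run_errors.shape[0]
    mean_ranks = np.empty((n_boot, run_errors.shape[1]))
    for b in range(n_boot):
        sample = run_errors[rng.integers(0, n_runs, size=n_runs)]
        mean_ranks[b] = (ranks_per_row(sample) + 1).mean(axis=0)
    mu = mean_ranks.mean(axis=0)
    sd = mean_ranks.std(axis=0, ddof=1)
    return mu, sd, sd / mu

# Two hypothetical criteria (rows) for three algorithms (columns)
scores = borda_scores([[0.1, 0.2, 0.3], [0.3, 0.1, 0.2]])
```

A low coefficient of variation of the bootstrapped mean rank is what distinguishes a consistently ranked algorithm from one that wins only in some runs.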

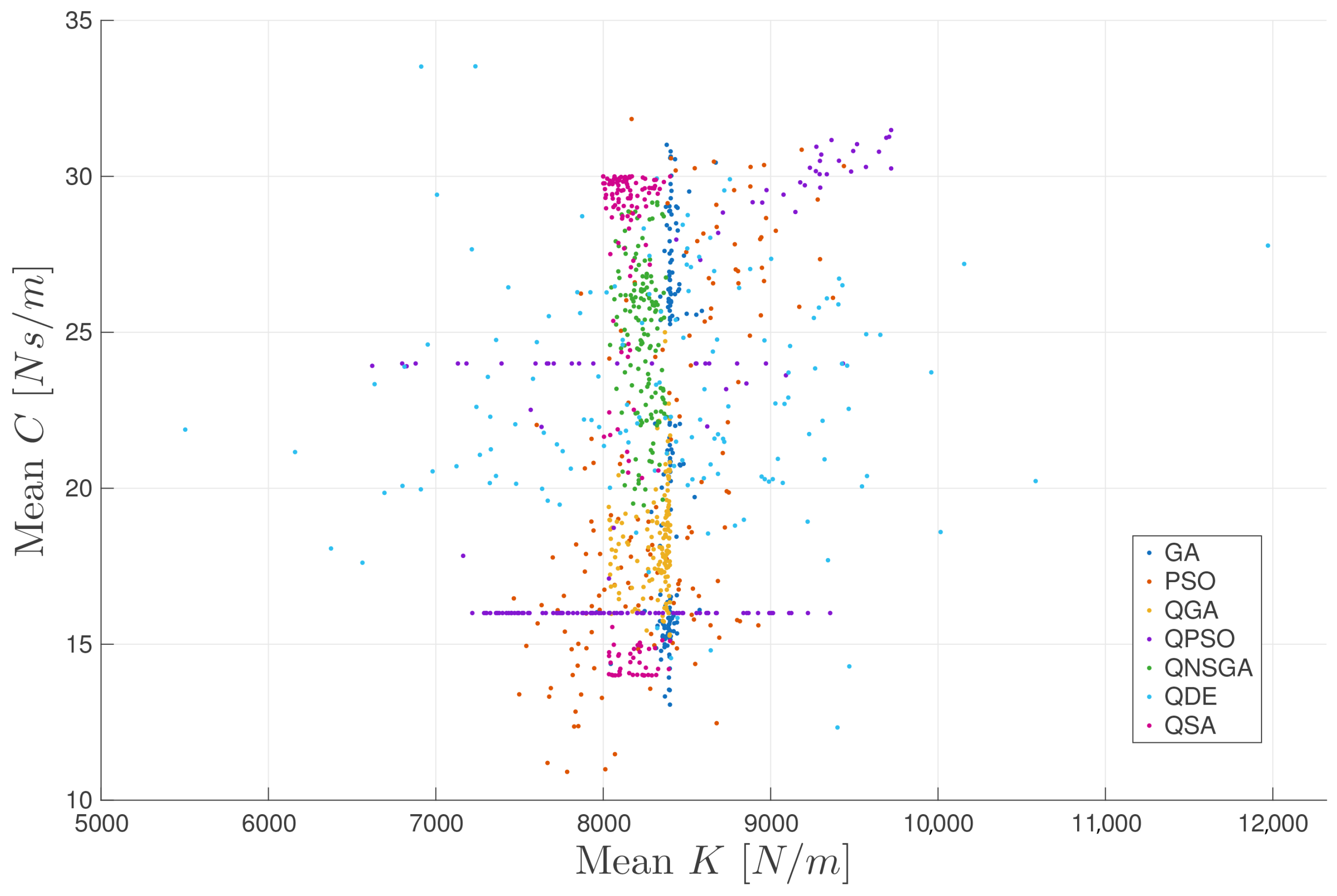

A detailed analysis of the identified stiffness (K) and damping (C) coefficients revealed distinct algorithmic sensitivities toward each parameter according to

Figure 7. Stiffness, which governs the natural frequencies of the structure, tends to dominate the system’s dynamic response and provides clearer optimization gradients, as shown in

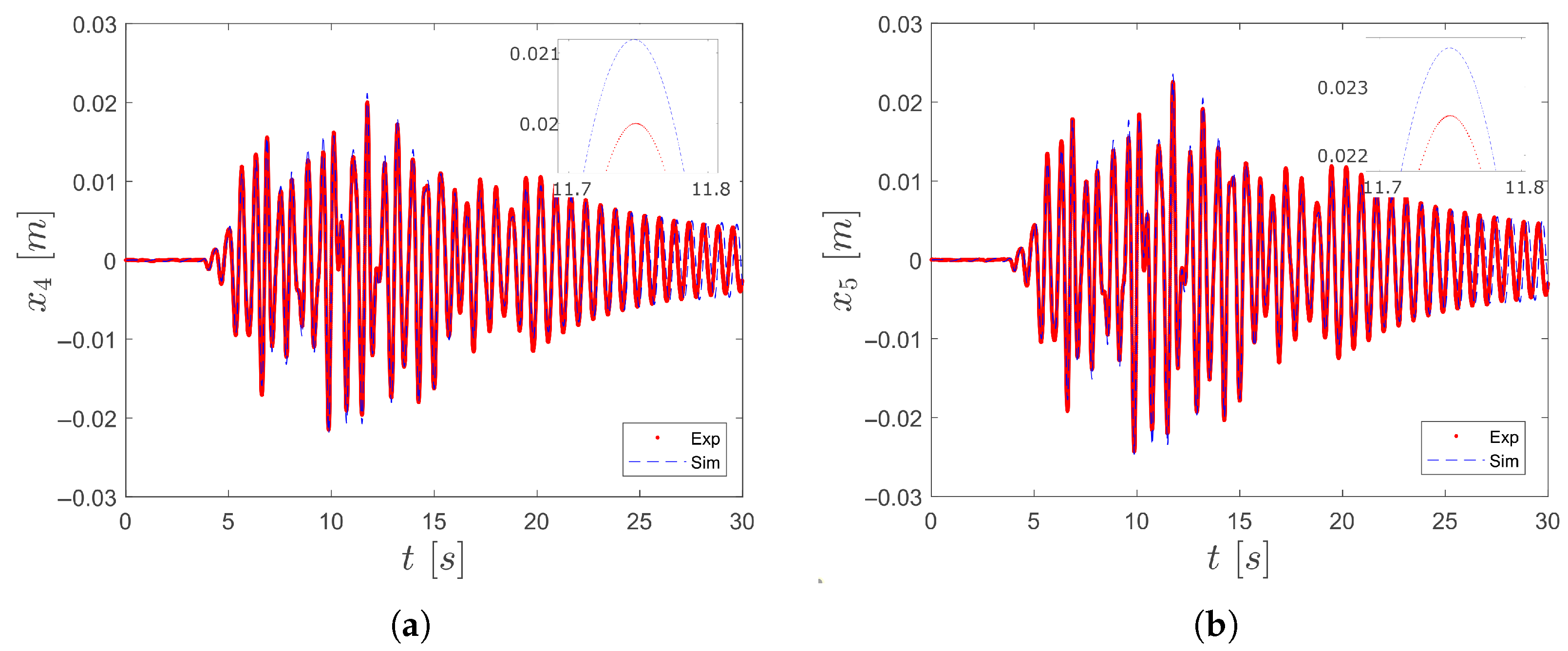

Figure 5. In contrast, damping primarily influences amplitude attenuation and is more challenging to estimate, particularly when velocity is derived from filtered displacement signals. Algorithms with broader exploration capabilities, such as QDE and QSA, are better suited to capture subtle damping effects, whereas others may prioritize convergence speed at the expense of sensitivity. These findings highlight the importance of designing multi-objective cost functions and validation strategies that evaluate stiffness and damping in a balanced manner.

Although the optimization strategies employed were not explicitly adapted or redesigned for the inverse problem, the analysis incorporated key characteristics specific to structural parameter identification. For instance, the differing sensitivities in the estimation of stiffness and damping coefficients revealed underlying issues such as parameter coupling and differential observability (see Figure 6). Damping estimation, in particular, was influenced by noise and the need to reconstruct velocity from filtered displacement, making it more vulnerable to variability across optimization runs. Rather than modifying the internal logic of each algorithm, the proposed evaluation framework captures these behaviors through multi-criteria assessment and statistically robust comparisons. This design enables the extraction of problem-specific insights from general-purpose algorithms and contributes to bridging method-driven exploration with problem-driven interpretation.

While a formal Pareto front was not constructed due to the scalar nature of the objective function, the trade-off between displacement and velocity errors was embedded directly into the cost design using fixed weights (1000 and 100, respectively). This approach reflects engineering priorities and enables the optimization process to balance precision in displacement tracking with the sensitivity required for accurate velocity estimation. QNSGA-II in particular, which explores diverse solutions across objective dimensions, implicitly samples the trade-off space even when only the weighted sum is evaluated. Although the individual error components were not retained to visualize a Pareto front, the selection of solutions and the comparative performance analysis provide meaningful insight into this multi-objective behavior.
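A minimal sketch of a scalar cost of this kind follows. The weights 1000 and 100 come from the text; using the mean squared error for each term is an assumption, and the function name is hypothetical. The function also returns the two error components, which is the information that would need to be retained to plot a Pareto front of displacement error versus velocity error.

```python
import numpy as np

W_DISP, W_VEL = 1000.0, 100.0   # displacement and velocity weights reported in the text

def weighted_cost(x_meas, v_meas, x_sim, v_sim):
    """Return the scalar cost and its two components (MSE per channel is assumed)."""
    e_disp = np.mean((np.asarray(x_meas, float) - np.asarray(x_sim, float)) ** 2)
    e_vel = np.mean((np.asarray(v_meas, float) - np.asarray(v_sim, float)) ** 2)
    return W_DISP * e_disp + W_VEL * e_vel, e_disp, e_vel

J, e_disp, e_vel = weighted_cost(np.zeros(4), np.zeros(4), np.full(4, 0.1), np.full(4, 0.2))
```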

5.2. Methodological Contributions and Validation Framework

While the optimization algorithms applied in this study are not novel, the methodological contribution lies in the systematic integration of quantum-inspired metaheuristics within a unified framework for structural parameter identification. Unlike prior studies limited to isolated comparisons, the proposed approach evaluates algorithm performance across several dimensions—accuracy, robustness, and sensitivity to structural parameters—under a consistent benchmark. Furthermore, the inclusion of both parametric and non-parametric statistical analyses, including Shapiro–Wilk tests, ANOVA with post hoc corrections, Bootstrap resampling, and Borda count aggregation, enhances the rigor and reproducibility of the evaluation. This framework not only supports quantitative performance comparison but also serves as a transferable protocol for assessing optimization strategies in engineering problems characterized by uncertainty and multi-criteria behavior.

To ensure the statistical validity of the results, this study incorporated a rigorous validation framework. Normality of residuals was verified using the Shapiro–Wilk test (see Table 5), which confirmed that MSE, RMSE, and MAE were normally distributed, while R² occasionally deviated from normality. Homogeneity of variance across floors was assessed with the Levene and Bartlett tests, satisfying the assumptions for ANOVA. Based on these conditions, one-way ANOVA with Tukey–Bonferroni post hoc tests was used to identify significant differences among algorithms. To complement this, bootstrap resampling (1000 iterations) was performed to compute mean rankings, standard deviations, and coefficients of variation. The Borda count method was applied as a non-parametric aggregation strategy, providing a robust comparative framework. Although non-parametric alternatives such as the Kruskal–Wallis test were not applied here, they are worth exploring in future studies involving more irregular or field-acquired data.
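The testing sequence described above can be reproduced with SciPy as in the sketch below. The three groups of 30 synthetic MSE values are hypothetical stand-ins for the algorithms' run results. `scipy.stats.tukey_hsd` requires SciPy 1.8 or later, and the Bonferroni correction is applied manually by multiplying each pairwise t-test p-value by the number of comparisons (capped at 1).

```python
import numpy as np
from itertools import combinations
from scipy import stats

rng = np.random.default_rng(0)
# Hypothetical MSE values: 30 runs for each of three algorithms (synthetic data)
samples = {
    "A": rng.normal(1.00, 0.10, 30),
    "B": rng.normal(1.05, 0.10, 30),
    "C": rng.normal(1.30, 0.10, 30),
}
groups = list(samples.values())

# 1) Normality of each group
shapiro_p = {name: stats.shapiro(g).pvalue for name, g in samples.items()}
# 2) Homogeneity of variance across groups
levene_p = stats.levene(*groups).pvalue
bartlett_p = stats.bartlett(*groups).pvalue
# 3) One-way ANOVA
anova = stats.f_oneway(*groups)
# 4) Tukey HSD post hoc comparisons (pvalue is a matrix indexed by group order)
tukey = stats.tukey_hsd(*groups)
# 5) Pairwise t-tests with Bonferroni correction
pairs = list(combinations(samples, 2))
bonferroni_p = {
    (a, b): min(1.0, stats.ttest_ind(samples[a], samples[b]).pvalue * len(pairs))
    for a, b in pairs
}
```

The non-parametric alternative mentioned above is available as `stats.kruskal(*groups)` and could replace the ANOVA step when normality is rejected.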

5.3. Limitations and Future Research

In terms of computational performance, quantum-inspired algorithms, especially QSA, QDE, and QNSGA-II, required substantially more runtime than classical methods. While GA and PSO completed within 15 min, the quantum-inspired models often exceeded one hour because of their larger populations and more complex search dynamics. These differences, observed on a high-performance computing platform (64-core AMD EPYC CPU and Tesla M4 GPU), currently limit the use of quantum-inspired methods in real-time monitoring. However, they remain well suited to offline assessment and post-seismic evaluation.

From a practical standpoint, quantum-inspired algorithms—despite requiring longer execution times—offer highly accurate and stable solutions that are particularly suitable for non-real-time applications, such as post-seismic structural assessments, calibration of digital twins, and structural health diagnostics in critical infrastructure. In contrast, classical methods like GA and PSO may be preferred for real-time scenarios due to their faster convergence. This differentiation highlights the importance of aligning algorithm selection with the temporal and computational constraints of the target engineering application.

While quantum-inspired algorithms did not consistently outperform classical methods across all error metrics, particularly RMSE and MAE, their performance was more stable across repeated trials. This was evidenced by lower coefficients of variation and tighter bootstrap ranking distributions. In scenarios involving noisy or incomplete data, such consistency can be as valuable as absolute accuracy. Therefore, rather than claiming global superiority, the present study emphasizes the complementary strengths of quantum-inspired methods: robustness, exploratory capability, and performance under uncertainty. The evaluation framework adopted here makes these trade-offs explicit, allowing a nuanced understanding of how different optimization strategies behave in structural parameter identification.

Consequently, the study underlines the enhanced accuracy and robustness of quantum-inspired algorithms for structural parameter identification while acknowledging that their applicability is bounded. Algorithms such as QNSGA-II and QDE require significant computational resources, making them unsuitable for real-time applications where rapid decision-making is essential. Their use is most appropriate for offline assessments and model updates, particularly where accuracy is prioritized over speed, such as post-earthquake evaluations or long-term structural health monitoring. The high computational cost is justified in these high-stakes situations because these methods thoroughly explore complex, high-dimensional, and non-convex solution spaces, providing more reliable results than traditional heuristic approaches.

Beyond the current benchmark model, the proposed framework is applicable to a wide range of civil engineering problems involving parameter identification, such as model updating of bridges, high-rise buildings, and structural systems subjected to environmental degradation or retrofitting. The modular nature of the algorithmic structure and validation protocol allows for straightforward adaptation to problems with different boundary conditions, sensor configurations, or degrees of nonlinearity. This versatility supports its deployment in both academic research and practical scenarios involving seismic diagnostics, fatigue assessment, and long-term infrastructure monitoring.

To guide future work, a prioritized research roadmap is proposed. The most immediate goal is to reduce computational demands through adaptive hyperparameter tuning, surrogate modeling, or hybrid classical–quantum strategies. These enhancements would improve feasibility in practical applications and open the door to near-real-time analysis. In a second phase, extending the methodology to nonlinear multi-degree-of-freedom models using finite element approaches and experimental validation will enable more realistic deployment. Finally, long-term objectives include the integration of reinforcement learning for dynamic optimization control and cloud-based infrastructures for scalable implementation in large civil structures. Collaboration with industry stakeholders will be critical in translating these developments into robust monitoring systems.

6. Conclusions

A multi-objective optimization framework integrating both classical (GA, PSO) and quantum-inspired (QGA, QPSO, QNSGA-II, QDE, QSA) metaheuristic algorithms was implemented to address the problem of structural parameter identification in civil engineering. The methodology was applied to a five-story building prototype subjected to seismic excitation, enabling the estimation of stiffness and damping coefficients with high resolution.

One of the most salient observations was that quantum-inspired algorithms generally outperformed classical methods in minimizing the discrepancy between experimental and simulated responses. This superior performance can be attributed to the increased exploration capability and probabilistic search spaces inherent in quantum formulations, which help to avoid premature convergence and enable a more comprehensive traversal of the solution domain. As a result, quantum models achieve lower error metrics, indicating better alignment with the system’s true dynamic behavior.

A second key finding was the differential behavior in algorithm robustness across multiple runs. While QSA achieved high accuracy in individual executions, its performance ranking was less consistent, whereas QNSGA-II and QDE maintained greater stability despite slightly higher average errors. This phenomenon is explained by the stochastic variability of each algorithm: QSA’s search process is sensitive to initial conditions due to its annealing-inspired dynamics, while QNSGA-II and QDE incorporate mechanisms that promote convergence toward Pareto-optimal fronts with better diversity preservation. This reinforces the importance of analyzing both accuracy and robustness as independent but complementary dimensions of algorithmic performance.

The statistical validation framework proved effective in confirming the significance and reliability of the results. Through the use of Shapiro–Wilk, Levene, and Bartlett tests, the assumptions for parametric analysis were rigorously evaluated. ANOVA with Tukey–Bonferroni corrections revealed statistically significant differences among models. Additionally, Bootstrap resampling and Borda count analysis confirmed that quantum-inspired models exhibited more consistent rankings and lower coefficients of variation. These findings highlight that robust validation is essential when comparing metaheuristics, particularly in inverse problems that are sensitive to noise and model simplification.

From a computational perspective, quantum-inspired models presented significantly higher runtimes compared to classical algorithms. This is explained by their reliance on larger populations, more complex update rules, and iterative refinement schemes that explore a wider solution space. While GA and PSO completed optimization in under 15 min, models such as QNSGA-II and QSA required upwards of one hour, even using high-performance computing environments. Such computational cost currently limits their applicability in real-time structural monitoring, although they remain suitable for offline analyses and post-event assessments.

Another relevant observation concerned the variability in the estimation of the stiffness (K) and damping (C) coefficients. Stiffness values were generally more stable and accurately identified across methods, whereas damping coefficients showed higher sensitivity and dispersion. This is because stiffness directly governs the natural frequencies, which are readily captured through spectral analysis, while damping affects amplitude attenuation and is harder to infer from noisy or filtered velocity data. The results suggest that stiffness contributes more strongly to the cost function gradient, guiding the optimization more effectively, while damping estimation remains a more delicate task requiring additional regularization or hybrid measurement strategies.

A prioritized roadmap for future research is proposed based on these findings. The most immediate priority is to reduce the computational burden of quantum-inspired methods through techniques such as adaptive hyperparameter tuning, surrogate modeling, and hybrid classical–quantum formulations. These strategies are likely to improve feasibility for near-real-time applications. In the medium term, extending the methodology to nonlinear, multi-degree-of-freedom models and validating it with experimental or in situ data will enhance generalizability. Long-term goals include the integration of reinforcement learning to autonomously adapt search strategies and the implementation of cloud-based or distributed computing platforms to enable scalable deployment in large infrastructure systems. These directions are technically justified by the current limitations observed in model complexity, execution time, and deployment capacity.