Abstract

In open-network environments of smart buildings and urban infrastructure, detecting abnormal traffic from security and energy monitoring systems is critical for operational safety and decision reliability. Malware that exploits building automation protocols can be developed to simulate attacks that falsify or modify chiller controller commands, thereby endangering the entire network infrastructure. Intrusion detection systems rely on abundant labeled abnormal traffic data to detect attack patterns and improve network system reliability. However, transmitting such data faces two major challenges. First, single-feature representations fail to capture comprehensive traffic features, limiting the information available to artificial intelligence (AI)-based detection models. Second, unconcealed abnormal traffic is easily intercepted by firewalls or intrusion detection systems, hindering cross-departmental sharing. Existing methods struggle to balance feature integrity and transmission stealth, often sacrificing one for the other or relying on easily detectable spatial-domain steganography. To address these gaps, we propose a multi-channel imaging hiding method that reconstructs abnormal traffic into multi-channel images. Three mappings generate grayscale images that depict traffic state transitions, dynamic trends, and internal similarity, respectively. These images are combined to enhance feature representation and embedded into frequency-domain adversarial examples, enabling evasion of security devices while preserving traffic integrity. Experimental results demonstrate that our method captures richer information than single-representation approaches, achieving a PSNR of 44.5 dB (a 6.0 dB improvement over existing methods) and an SSIM of 0.97. The high-fidelity reconstructions enabled by these gains facilitate the secure and efficient sharing of abnormal traffic data, thereby enhancing AI-driven security in smart buildings.

1. Introduction

Integrating the internet and cloud has transformed the way smart buildings process data [1], enhancing perception, understanding, and responsiveness to complex operations. Integration plays a crucial role in tasks such as unauthorized access detection, equipment behavior analysis, and vulnerability identification in building automation systems [2]. Data has become the oil of the information era. By leveraging real-time, multi-location Internet of Things (IoT) sensor data from building components, we can uncover the value of streams captured by sensors embedded in walls, elevators, and energy meters [3,4]. This enables deep learning (DL) in automated tasks such as classifying building traffic patterns, detecting anomalies in fire alarm communications, and semantically segmenting occupancy-related network behaviors, improving the efficiency and accuracy of smart building traffic data processing [5].

As AI-enabled smart buildings become more prevalent, securing and ensuring the reliability of building network traffic becomes increasingly important [6]. It is critical for the data backbone that supports access control, energy monitoring, and fire safety, as abnormal traffic can disrupt building decision-making and put the safety of occupants at risk.

Modern building automation systems increasingly rely on AI-driven analytics for energy optimization and predictive maintenance. For example, we can develop targeted malware that exploits building automation protocols with AI to simulate falsifying or modifying attacks on the chiller controller, thereby endangering the entire network infrastructure [7]. However, the transmission of critical operational data over public or converged networks amplifies vulnerabilities [8]. Intelligent buildings incorporate multiple heterogeneous networks, such as building automation, energy management, and security monitoring. The heterogeneous integration of communication technologies exacerbates these vulnerabilities by expanding the attack surface. AI-based security systems are not invulnerable to attack. Adversarial perturbations can deceive DL-powered intrusion detection models, leading to misclassification of malicious traffic as normal and compromising the reliability of network monitoring systems [9]. These evolving threats underscore the urgent need for robust, adaptive security frameworks.

AI models, including traffic classification models and intrusion detection systems (IDS), rely on abundant labeled abnormal traffic data to learn patterns, enabling automated, high-precision, real-time detection. Lacking high-quality samples harms generalization, leading to missed detections or false alarms against new variant attacks and weakening overall defense. Acquiring abnormal traffic data is crucial for training robust AI detectors, and the quality of data acquisition directly affects AI security effectiveness [10].

Abnormal building traffic data often contains sensitive information such as device vulnerabilities and attack paths, and direct transmission may trigger security policies or be maliciously intercepted. Existing encryption technologies can protect content but cannot hide the transmission behavior itself [11]. Sharing abnormal traffic data on open networks is therefore challenging. Security devices such as firewalls, IDS, and antivirus software have predefined rules for known abnormal traffic, such as ports, payload characteristics, and behavioral patterns [12]. When abnormal traffic triggers these rules during transmission, it is intercepted, truncated, or even isolated, which makes it difficult to share real abnormal traffic samples for model training and verification. The result is a data island effect, where key abnormal traffic data cannot be transmitted safely, hindering the upgrade of AI-based IDS models. Abnormal traffic may also involve attack details or sensitive information, so its transmission can pose compliance risks, making sharing even more difficult [13,14].

To address the challenges of sharing abnormal traffic data, this paper proposes a secure, undetectable traffic transmission framework based on steganography. Steganography is the art of concealing secrets within everyday media such as audio, images, or text to enable covert communication [15]. Because distributing critical information over public networks increases the risk of cyberattacks, steganography embeds secret messages within pixels to protect sensitive assets and maintain intellectual property authenticity. The abundance of image data makes images a suitable platform for generating and transmitting adversarial examples (AEs).

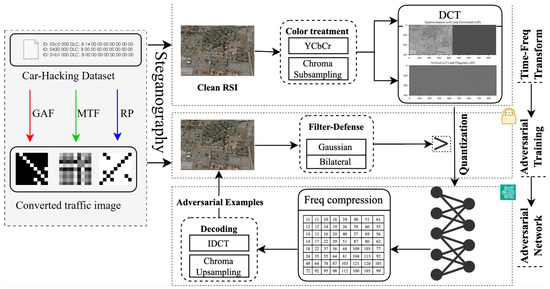

Figure 1 shows the proposed method for creating container images, which then embeds abnormal traffic into frequency-domain adversarial perturbations through steganography to create visually imperceptible stego images. Clean examples follow natural distribution, while adversarial examples (AEs) deviate from the original distribution by adding subtle perturbations [16]. We embed abnormal traffic in AEs through steganography [17]. Perturbations in AEs can exceed AI model recognition boundaries, making them less detectable by traditional IDS tools. When abnormal traffic is transformed into pixel perturbations or frequency-domain data in containers like images, its original characteristics are masked, bypassing firewall rules based on traffic features. This approach not only employs AEs to evade AI detection but also conceals covert traffic transmission with steganography, enabling cross-network and cross-entity sharing of abnormal data. The integrated protection scheme, which blends camouflage in the frequency domain with steganographic embedding, protects against interception during cross-network or cross-departmental transmission. This approach ensures the accuracy of the extracted traffic, which is crucial for training robust building security models and addressing the sample island limitation [18]. The main contributions of this work are threefold:

Figure 1.

The framework of the proposed method. The method presents frequency-domain adversarial steganography to embed abnormal traffic into ordinary images, achieving content concealment and avoiding transmission behavior detection, providing a secure and compliant cross-domain data sharing solution.

- We propose a hidden transmission method for network abnormal traffic based on multi-channel image reconstruction, which improves the limited information-carrying capacity in current abnormal traffic transmission. The method begins by extracting one-dimensional feature sequences from abnormal traffic. These sequences are then transformed into two-dimensional grayscale images using three distinct mapping algorithms. The multi-channel traffic images are steganographically embedded within the frequency domain.

- We propose an adversarial steganography method that embeds traffic images in the frequency domain to evade security systems. Compared to traditional steganography in the spatial domain, frequency-domain embedding can disperse information features and enhance concealment.

- Experiments show that our framework is effective and combines high- and low-frequency traffic image information to protect against high-fidelity adversarial attacks (AAT). This, in turn, enables secure and undetectable sharing of abnormal traffic data across open networks.

The rest of this paper is structured as follows. Section 2 reviews the current literature on abnormal traffic detection and adversarial examples in security. Section 3 introduces our proposed method and explains how we integrate frequency quantization and image enhancement techniques to produce adversarial steganography samples. Section 4 presents the experimental results. Finally, we summarize the paper and discuss future directions.

2. Literature Review

Detecting Abnormal Traffic within Smart Building Environments. The BACnet protocol is the core communication protocol for critical equipment such as HVAC (heating, ventilation, and air conditioning) and fire alarm systems in smart buildings. Real BACnet traffic datasets are scarce because of confidentiality, which is a key limitation for developing IDS for HVAC systems in smart buildings. To overcome this, Seyed et al. [19] propose a framework that injects real collected data into a scenario-based virtual controller simulator to generate BACnet traffic. Tsion et al. [20] propose countermeasures based on network and physical security and develop an intrusion detection system based on tree-based algorithms tailored to the characteristics of BACnet devices in industrial control networks. Desta et al. [21] use long short-term memory (LSTM) networks to detect anomalies in automotive control data. Kang et al. [22] build a classifier with a deep belief network and test it on simulated datasets. Traditional detection methods based on rules or single-feature analysis struggle to detect cross-system collaborative attacks, whereas our embedding preserves the multidimensional features of abnormal traffic.

DL has undergone swift development. There have been concerns raised about the complexity and inscrutable aspects of DL-based models, including their susceptibility and lack of a rationale behind their decision-making process. Sharma et al. [23] find that removing low-frequency adversarial perturbations can make AEs easier to detect. Duan et al. [16] propose a novel attack method called AdvDrop, which optimizes a quantization table to generate AEs. However, the visual quality of the generated AEs is often poor. Luo et al. [24] propose a low-frequency constrained method that adds adversarial perturbations to the high-frequency components of an image, resulting in better visual effects. However, this method is optimization-based and requires more iterations compared to gradient-based attack methods. Li et al. [25] introduced a low-frequency AAT. Since it only requires one transformed example per iteration, it may lead to overfitting on the substitute model. Du et al. [26] develop a fast C&W algorithm for image recognition. The rapid C&W algorithm’s adversarial noise mainly concentrates near the target area, resulting in positive outcomes in image recognition attacks. DL-based models are vulnerable when faced with AEs caused by adding imperceptible perturbations. Despite various strategies proposed to address AEs, the issue of enhancing defense against unknown attacks remains unresolved. To address this, Gong et al. [27] employ image reconstruction to train classifiers, eliminating any biases. AEs often mislead DL, leading to high confidence but inaccurate classifications and hindering the utilization of DL. To address this, Zhang et al. [9] employ adversarial training (AT) based on an adaptive frequency-domain transform to strengthen deep models against AEs.

Our Solution against Secure Traffic Recognition. Existing research on network anomaly detection often focuses on isolated aspects, such as temporal modeling with LSTMs or statistical features like frequency and entropy. However, these methods exhibit critical limitations: they rely on single-mode data representations that fail to capture the multidimensional characteristics of abnormal traffic, lack mechanisms for secure cross-domain data sharing without triggering alerts, sacrifice visual quality for adversarial effectiveness, and require computationally expensive optimization processes. Most techniques address either detection accuracy or data hiding separately, without integrating both objectives into a unified framework.

To address these gaps, this paper proposes a novel multi-channel imaging hiding method that transforms abnormal traffic into enriched visual representations. The framework in Figure 1 uses an information fusion approach that minimizes noticeable perturbations during adversarial steganography, enhancing image robustness against potential attacks. By fusing temporal and structural features into a unified image representation and embedding it into frequency-based AEs, our approach simultaneously enhances feature integrity for accurate detection and enables stealthy transmission that evades both conventional security systems and AI-based monitors. The proposed framework advances information fusion for a traffic data secret-sharing mechanism, ensuring statistically undetectable pixel distributions.

3. Proposed Method

3.1. Threat Model

The threat model for this work focuses on passive adversaries in smart building networks, including external eavesdroppers capable of monitoring inter-domain traffic and internal security devices such as IDS and firewalls equipped with rule-based or AI-driven detection capabilities. Adversaries may perform deep packet inspection, traffic feature analysis, or steganalysis to identify abnormal traffic patterns, but they lack knowledge of specific steganographic keys, frequency-domain perturbation parameters, or multi-channel fusion strategies. The model aims to protect the integrity and stealth of abnormal traffic during cross-departmental sharing while ensuring that the covert transmission process does not trigger false alarms or disrupt legitimate building automation systems such as BACnet-based control loops. It assumes that effective communication security is in place and ignores active attacks such as data manipulation or poisoning.

3.2. Traffic Image Generation

The traffic image fusion system combines images generated from traffic data. Existing methods directly map network traffic data into images. However, storing one-dimensional data to generate two-dimensional images often produces images that lack practical significance, making it challenging to extract the full characteristics of traffic data and resulting in insufficient information extraction. This is because the generated traffic images are fundamentally different from natural images. The spatial structures of directly converted traffic images, such as edges, color blocks, and textures, lack real physical meaning, making it challenging to leverage their built-in prior knowledge for effective feature extraction and learning.

While RGB is the most commonly used color space, it may not be the best choice for traffic data representation, because traffic visualizations do not align with the red, green, and blue channels as natural images do. We instead convert one-dimensional data into two-dimensional images with three mappings: Markov transition fields (MTF), recurrence plots (RP), and Gramian angular fields (GAF). Each mapping extracts different information and enhances the detail in traffic images. The core idea of MTF is to convert one-dimensional sequence data into a two-dimensional image based on the transition probabilities of a Markov chain, so that the transition patterns of the data become visible. We divide the data into $Q$ quantile bins such that each bin contains the same number of data points and each data point falls into exactly one bin.

Let the original one-dimensional traffic feature sequence be $X = \{x_1, x_2, \ldots, x_n\}$. To convert it into an MTF, we first partition the values of $X$ into $Q$ discrete quantile bins. Each bin $q_j$ (where $j \in \{1, \ldots, Q\}$) contains an equal number of data points. The MTF matrix $M$ is then constructed as a $Q \times Q$ matrix according to Equation (1):

$$M_{ij} = P\left(x_{t+1} \in q_j \mid x_t \in q_i\right), \quad i, j \in \{1, \ldots, Q\} \tag{1}$$

where each element $M_{ij}$ represents the transition probability of a value in quantile bin $q_i$ at time $t$ being followed by a value in quantile bin $q_j$ at time $t+1$. This probability is empirically estimated from the frequency of transitions observed across the sequence $X$. The size of the matrix, which is also the size of the generated image, is $Q \times Q$.
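The MTF construction described above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' implementation: the function name `markov_transition_matrix`, the choice of 8 bins, and the synthetic Gaussian series are all assumptions made for the example.

```python
import numpy as np

def markov_transition_matrix(x, n_bins=8):
    """Estimate the Q x Q transition matrix between quantile bins of a 1-D series.

    n_bins plays the role of Q; the value 8 is a hypothetical default.
    """
    x = np.asarray(x, dtype=float)
    # Interior quantile edges split the values into n_bins equal-count bins.
    edges = np.quantile(x, np.linspace(0, 1, n_bins + 1)[1:-1])
    bins = np.searchsorted(edges, x, side="right")  # bin index in 0..n_bins-1
    counts = np.zeros((n_bins, n_bins))
    # Count transitions from the bin at time t to the bin at time t+1.
    np.add.at(counts, (bins[:-1], bins[1:]), 1)
    row_sums = counts.sum(axis=1, keepdims=True)
    # Normalise each row to probabilities; rows without transitions stay zero.
    M = np.divide(counts, row_sums, out=np.zeros_like(counts), where=row_sums > 0)
    return M, bins

# Synthetic stand-in for a traffic feature sequence (assumption for the demo).
rng = np.random.default_rng(0)
series = rng.normal(size=256)
M, bins = markov_transition_matrix(series, n_bins=8)
image = np.round(255 * M).astype(np.uint8)  # grayscale rendering of M
```

Each row of `M` is an empirical probability distribution over the next bin, so the matrix can be rendered directly as a grayscale image.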

RPs are constructed through phase space reconstruction, where a one-dimensional time series is embedded into an $m$-dimensional space with a time delay of $\tau$. This transformation creates state vectors $\vec{s}_i = (x_i, x_{i+\tau}, \ldots, x_{i+(m-1)\tau})$ that capture the system's dynamics, with $\tau$ controlling the temporal resolution of the embedded trajectory.
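As a rough illustration of the phase space reconstruction, the sketch below builds delay-embedded state vectors and their pairwise distance matrix. The function name `recurrence_plot`, the values `m=3` and `tau=2`, the optional threshold, and the sine test signal are assumptions for the example, not settings taken from the paper.

```python
import numpy as np

def recurrence_plot(x, m=3, tau=1, threshold=None):
    """Delay-embed a 1-D series and return its recurrence (distance) matrix."""
    x = np.asarray(x, dtype=float)
    n_vectors = len(x) - (m - 1) * tau
    if n_vectors <= 0:
        raise ValueError("series too short for the chosen m and tau")
    # Row i holds the state vector (x[i], x[i+tau], ..., x[i+(m-1)*tau]).
    idx = np.arange(n_vectors)[:, None] + tau * np.arange(m)[None, :]
    states = x[idx]
    # Euclidean distance between every pair of state vectors.
    dists = np.linalg.norm(states[:, None, :] - states[None, :, :], axis=-1)
    if threshold is None:
        return dists  # unthresholded, grey-level variant
    return (dists <= threshold).astype(np.uint8)  # binary variant

x = np.sin(np.linspace(0, 4 * np.pi, 64))
dist = recurrence_plot(x, m=3, tau=2)
binary = recurrence_plot(x, m=3, tau=2, threshold=0.2)
```

The unthresholded variant keeps the full distance information, which may be preferable when the result is used as a grayscale image channel.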

While MTF emphasizes state transition patterns and RP highlights inherent similarities, neither accounts for temporal dynamics, frequency distribution, or abrupt change patterns. Single-representation images have low information entropy, causing DL models to miss critical attack features during feature extraction, such as concurrent DDoS connection spikes or abnormal R2L login sequences. As a result, training accuracy and generalization may decline, hindering adaptation to the evolving landscape of internet attacks.

GAF leverages the angular relationships among data points to reveal the dynamics and structure of the input data. It calculates the cosines of angles between data points in the sequence and maps these values to a two-dimensional image, capturing features and patterns. The conversion first rescales the traffic data to $[-1, 1]$ or $[0, 1]$ to obtain $\tilde{x}_i$. It then generates polar coordinates with the timestamp as the radius and the arccosine $\phi_i = \arccos(\tilde{x}_i)$ as the angular component. After transforming to the polar system, the Gram matrix is constructed from the cosine or sine of the inter-point angles according to Equation (2):

$$\mathrm{GASF}_{ij} = \cos(\phi_i + \phi_j), \qquad \mathrm{GADF}_{ij} = \sin(\phi_i - \phi_j) \tag{2}$$

where $\phi_i$ represents the angle of the polar coordinate for data point $i$. GASF and GADF represent the Gramian angular summation field and the Gramian angular difference field, respectively. The traffic data can thus be transformed into an image where each matrix element is displayed in color. GASF focuses on capturing overall trends, while GADF focuses on local changes. As a result, GASF is better suited for binary classification tasks.
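The GAF mapping can be sketched as follows, assuming min-max rescaling to $[-1, 1]$ and the standard summation and difference definitions. The function name `gramian_angular_fields` and the toy input series are assumptions for illustration.

```python
import numpy as np

def gramian_angular_fields(x):
    """Return (GASF, GADF) matrices for a 1-D series rescaled to [-1, 1]."""
    x = np.asarray(x, dtype=float)
    span = x.max() - x.min()
    if span == 0:
        raise ValueError("constant series cannot be rescaled")
    # Min-max rescaling to [-1, 1]; clip guards against rounding overshoot.
    x_tilde = np.clip(2 * (x - x.min()) / span - 1, -1.0, 1.0)
    phi = np.arccos(x_tilde)  # angular component of the polar encoding
    gasf = np.cos(phi[:, None] + phi[None, :])  # summation field
    gadf = np.sin(phi[:, None] - phi[None, :])  # difference field
    return gasf, gadf

series = [1.0, 2.0, 4.0, 3.0, 5.0]
gasf, gadf = gramian_angular_fields(series)
```

GASF is symmetric and GADF is antisymmetric, so the two fields carry complementary information about the same sequence.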

3.3. Traffic Steganography

The multi-channel traffic image is then converted to a JPEG-encoded image. To reduce transmission bandwidth and storage space requirements during image transmission, various image compression techniques are employed. JPEG has become the dominant format for image transmission [28], owing to its high compression efficiency and widespread applicability [29]. However, while JPEG provides efficient transmission, its complex compression algorithm can result in quality degradation and data loss when decompressed. These issues are crucial for secure image processing, as even minor image quality degradation can affect image decoding and analysis accuracy.

To ensure secure and efficient covert transmission of the generated multi-channel traffic images, we use an adaptive steganographic framework that minimizes an additive distortion function. Specifically, we adopt the J-UNIWARD (JPEG Universal Wavelet Relative Distortion) algorithm [30], widely regarded as one of the most secure distortion measures for JPEG-domain steganography. J-UNIWARD computes embedding distortion from changes in spatial-domain wavelet coefficients, enabling the embedding to adapt to local content and favor complex, textured regions that better conceal perturbations.

The human eye is more sensitive to brightness than to color, so reducing the chrominance resolution has little visible impact. We therefore transform an image from the RGB to the YCbCr color space, keeping the luminance $Y$ at full resolution with levels 0–255 and subsampling the chrominance components $C_b$ and $C_r$. Chroma subsampling at 4:2:0 reduces storage by 50% compared to the original image.
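The storage saving from chroma subsampling can be checked with a short sketch. It assumes the full-range conversion coefficients used by JFIF-style JPEG and 4:2:0 subsampling implemented as 2×2 block averaging; the function names and the random 64×64 test image are hypothetical.

```python
import numpy as np

def rgb_to_ycbcr(rgb):
    """Full-range RGB -> YCbCr conversion (JFIF-style coefficients)."""
    rgb = np.asarray(rgb, dtype=float)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr

def subsample_420(channel):
    """Halve both dimensions by averaging each 2x2 block (even sizes only)."""
    h, w = channel.shape
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    return channel.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

rng = np.random.default_rng(0)
rgb = rng.integers(0, 256, size=(64, 64, 3))
y, cb, cr = rgb_to_ycbcr(rgb)
cb_s, cr_s = subsample_420(cb), subsample_420(cr)
# Fraction of samples kept relative to the three full-resolution channels.
kept = (y.size + cb_s.size + cr_s.size) / rgb.size
```

With the luminance kept at full resolution and each chrominance channel reduced to a quarter of its samples, `kept` evaluates to one half, which corresponds to the 50% saving stated above.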

Encoding traffic data as images enables steganographic embedding within AEs. This integration exploits the invisibility of adversarial perturbations to further conceal the traffic data and reduce suspicion. As AEs already contain subtle, human-imperceptible modifications, embedding traffic images within them further reduces the chance that hidden information is noticed. By masking traffic features within these perturbations, the fused data is less likely to be detected by security mechanisms, enabling secure transmission of sensitive information while preserving the integrity of both the AE and the embedded data for subsequent analysis.

3.4. Frequency Compression-Based Adversarial Examples

The core challenge in concealing mixed-traffic images for covert transmission is to balance two requirements: preserving the embedded traffic features and maintaining the visual imperceptibility of the stego image, which is crucial for seamless integration and evasion. Traditional spatial-domain steganography and frequency-domain modification methods face trade-offs: altering low-frequency coefficients can degrade overall image quality and trigger detection in smart building monitoring, while changing high-frequency coefficients reduces robustness. Minor transmission noise or compression may corrupt the embedded traffic data, leading to feature loss when anomalies are extracted for training building security models. For mixed-traffic images representing multi-dimensional smart building features, any loss or distortion of embedded information undermines their value for training security models, exacerbating the sample-island problem.

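By fusing temporal and structural features into a unified image representation and embedding it in frequency-based AEs, our approach enhances feature integrity for accurate detection and enables stealthy transmission. Given a DL model $f$ and an original input $x$ with true label $y$, an adversarial attack seeks a perturbation $\delta$ such that

$$f(x + \delta) \neq y, \quad \delta \in \mathcal{S}, \quad \mathcal{S} = \{\delta : \|\delta\|_\infty \leq \epsilon\}$$

where $\mathcal{S}$ is the allowable perturbation space and $\epsilon$ is the upper bound on perturbations to ensure imperceptibility. For each iteration $t$, the perturbation is updated as follows:

$$\delta_{t+1} = \Pi_{\mathcal{S}}\left(\delta_t + \alpha \cdot \operatorname{sign}\left(\nabla_{\delta} \mathcal{L}(f(x + \delta_t), y)\right)\right)$$

where $\Pi_{\mathcal{S}}$ is the projection operation constraining the perturbation within the $\epsilon$-ball under the $\ell_\infty$ norm, $\alpha$ is the step size, and $\mathcal{L}$ is the loss function. The final adversarial example is computed as $x_{adv} = x + \delta_T$ after $T$ iterations.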

The initial value of the AE is usually determined by a random perturbation near the original sample $x$. Random initialization helps avoid local optima and thereby improves the attack success rate:

$$x_{adv}^{0} = x + u, \quad u \sim \mathcal{U}(-\epsilon, \epsilon)$$

where $u$ is a random perturbation following a uniform distribution over $[-\epsilon, \epsilon]$. The initial sample $x_{adv}^{0}$ must first be projected by $\Pi$ to ensure that it remains a legitimate sample. For the $t$-th iteration ($t = 0, 1, \ldots, T-1$), we compute the gradient of the loss function with respect to the current sample $x_{adv}^{t}$, denoted as $g_t = \nabla_{x} \mathcal{L}(f(x_{adv}^{t}), y)$. We then update the sample along the gradient direction with step size $\alpha$ and restrict the perturbation range through the projection:

$$x_{adv}^{t+1} = \Pi_{x, \epsilon}\left(x_{adv}^{t} + \alpha \cdot \operatorname{sign}(g_t)\right)$$

where the projection $\Pi_{x, \epsilon}$ constrains the perturbation range to prevent the updated sample from exceeding the pre-set boundaries.
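The iterative procedure (random start, signed gradient step, projection) can be illustrated on a toy model. The sketch below assumes an $\ell_\infty$ budget and replaces the DL model with a two-class linear softmax classifier so that the gradient is available in closed form; the weights `W`, the input `x`, and the values of `eps`, `alpha`, and `steps` are hypothetical.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def loss_and_grad(W, b, x, y):
    """Cross-entropy loss of a linear softmax model and its gradient w.r.t. x."""
    p = softmax(W @ x + b)
    loss = -np.log(p[y] + 1e-12)
    p[y] -= 1.0  # p - onehot(y)
    return loss, W.T @ p

def pgd_attack(W, b, x, y, eps=0.1, alpha=0.02, steps=20, rng=None):
    rng = np.random.default_rng() if rng is None else rng
    # Random start inside the eps-ball, then clip to the valid range [0, 1].
    x_adv = np.clip(x + rng.uniform(-eps, eps, size=x.shape), 0.0, 1.0)
    for _ in range(steps):
        _, g = loss_and_grad(W, b, x_adv, y)
        x_adv = x_adv + alpha * np.sign(g)        # signed gradient ascent step
        x_adv = np.clip(x_adv, x - eps, x + eps)  # project onto the eps-ball
        x_adv = np.clip(x_adv, 0.0, 1.0)          # keep a legitimate sample
    return x_adv

# Toy two-class model and input (illustrative values only).
W = np.array([[1.0, -2.0, 0.5, 3.0], [-1.0, 1.0, 1.5, -0.5]])
b = np.zeros(2)
x = np.full(4, 0.5)
y, eps = 0, 0.1
x_adv = pgd_attack(W, b, x, y, eps=eps, rng=np.random.default_rng(0))
```

In this toy case the clean input is classified as class 0, while the perturbed input, which differs by at most `eps` per coordinate, is classified as class 1.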

We further optimize the frequency-domain transformation and modification strategy. We divide an image into $8 \times 8$ blocks and transform each block from the spatial domain to the frequency domain. We choose the discrete cosine transform (DCT), which represents a finite sequence of data points as a sum of cosine functions of different frequencies. After the DCT, most of the image information is concentrated in a few low-frequency coefficients, while texture and edge details are distributed in the mid-to-high-frequency coefficients. The DCT can be expressed as follows:

$$F(u, v) = \frac{1}{4} C(u) C(v) \sum_{i=0}^{7} \sum_{j=0}^{7} f(i, j) \cos\left[\frac{(2i + 1) u \pi}{16}\right] \cos\left[\frac{(2j + 1) v \pi}{16}\right] \tag{9}$$

where $C(u)$ and $C(v)$ are equal to $1/\sqrt{2}$ when $u$ and $v$ are equal to 0, respectively, and equal to 1 for any other values. Equation (9) computes the $(u, v)$-th entry of $F$; $f(i, j)$ is the value at image coordinates $(i, j)$. J-UNIWARD embeds traffic features into the quantized DCT coefficients of the AE cover image. The objective of embedding is to minimize the sum of relative changes in wavelet coefficients between cover and stego images, preserving statistical undetectability.

Although the DCT itself is lossless and invertible, information loss occurs during the subsequent quantization step. After the transformation to the frequency domain, the attack is therefore applied during the quantization phase, because this is where information is modified. This modification may lead to misclassifications by classification models.
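The contrast between the invertible DCT and the lossy quantization step can be seen in a small sketch. It assumes 8×8 blocks with the orthonormal DCT-II and, for simplicity, a single uniform quantization step `q_step = 16` rather than a real JPEG quantization table; the block contents are random stand-ins.

```python
import numpy as np

def dct_matrix(n=8):
    """Orthonormal DCT-II basis: D[u, i] = c(u) * cos((2i + 1) * u * pi / (2n))."""
    k = np.arange(n)
    D = np.cos((2 * k[None, :] + 1) * k[:, None] * np.pi / (2 * n))
    D *= np.sqrt(2.0 / n)
    D[0, :] /= np.sqrt(2.0)
    return D

D = dct_matrix(8)
rng = np.random.default_rng(0)
block = rng.integers(0, 256, size=(8, 8)).astype(float) - 128.0  # level shift

coeffs = D @ block @ D.T    # forward 2-D DCT of the block
restored = D.T @ coeffs @ D  # inverse DCT recovers the block exactly

q_step = 16.0                           # hypothetical uniform quantization step
quantized = np.round(coeffs / q_step)   # the lossy step
lossy = D.T @ (quantized * q_step) @ D  # reconstruction after dequantization
max_coeff_error = np.max(np.abs(quantized * q_step - coeffs))
```

Since all information loss is confined to the rounding of `coeffs`, modifying how coefficients are quantized is a natural place to inject a perturbation, which is the idea exploited above.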

For the adversarial loss , we design the loss function [31] as follows:

where represents an AE generated by attacking a clean sample, and represents the confidence score of the AE for the target model f on classes other than the target class k. and represent the confidence scores of the AE for the target model f on the original label class y and the target label class , respectively.

Abnormal traffic is concealed by generating AEs. Without active training, these samples may be misclassified as real attacks by other IDS, triggering false alarms or defensive actions that disrupt operations and maintenance. Incorporating AEs into training enhances the robustness of the internal security detection module, enabling it to distinguish malicious concealed traffic from benign business data. This not only ensures the reliability of the concealed transmission link but also prevents unnecessary resources or operational interruptions due to false reports.

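The AT can be expressed as the following min-max optimization problem:

$$\min_{\theta} \; \mathbb{E}_{(x, y) \sim \mathcal{D}} \left[ \max_{\|\delta\| \leq \epsilon} \mathcal{L}\left(f_{\theta}(x + \delta), y\right) \right]$$

where $\mathcal{D}$ is the data distribution and the inner maximization finds the worst-case perturbation $\delta$ within an $\epsilon$-ball that maximizes the loss $\mathcal{L}$. The outer minimization optimizes the model parameters $\theta$ to minimize the expected loss under these worst-case perturbations. The robust model learns to perform well not just on clean examples, but on adversarially perturbed versions of them, thereby improving its robustness against attacks.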
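The min-max structure can be made concrete with a deliberately simple stand-in for the DL model. For a linear logistic classifier with labels in $\{-1, +1\}$ under an $\ell_\infty$ budget, the inner maximization has the closed form $\delta = -\epsilon \, y \, \operatorname{sign}(w)$, so no iterative attack is needed; deep models would instead approximate it with an iterative attack. The toy data, learning rate, and function names are assumptions for illustration.

```python
import numpy as np

def robust_loss(w, b, X, y, eps):
    """Mean logistic loss under the worst-case l_inf perturbation of size eps."""
    margins = y * (X @ w + b) - eps * np.abs(w).sum()
    return float(np.mean(np.logaddexp(0.0, -margins)))

def adversarial_train(X, y, eps=0.1, lr=0.1, epochs=200):
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        # Inner maximization: closed-form worst-case inputs for a linear model.
        X_adv = X - eps * y[:, None] * np.sign(w)[None, :]
        margins = y * (X_adv @ w + b)
        # Outer minimization: gradient step on the loss at the worst-case inputs.
        coeff = -y / (1.0 + np.exp(margins))
        w -= lr * (X_adv.T @ coeff) / len(y)
        b -= lr * coeff.mean()
    return w, b

# Toy, linearly separable data (illustrative values only).
X = np.array([[1.0, 1.0], [1.2, 0.8], [0.8, 1.2],
              [-1.0, -1.0], [-1.2, -0.8], [-0.8, -1.2]])
y = np.array([1.0, 1.0, 1.0, -1.0, -1.0, -1.0])
eps = 0.1
w, b = adversarial_train(X, y, eps=eps)
robust_margins = y * (X @ w + b) - eps * np.abs(w).sum()
```

A positive entry in `robust_margins` means the corresponding sample stays correctly classified for every perturbation within the budget.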

4. Experimental Results

In this section, we will examine the intersection of adversarial attack and defense for data analysis. First, we provide an overview of the experimental setup and evaluate attack performance, generalization, and attack distribution. We also assess the quality of generated AEs by calculating various perceptual similarities.

4.1. Experimental Settings

4.1.1. Experimental Details

Our experiments are conducted on a Linux server equipped with an Intel Xeon Gold 5218R CPU and a cluster of RTX 2080 Ti GPUs, using the PyTorch 2.4 framework. The binning policy in Equation (1) is . The parameters , are provided for RP. The normalization method maps values to via min-max scaling. The resolution of the resulting image is fixed at pixels for all methods. The three resulting grayscale images from MTF, RP, and GAF are concatenated along the channel dimension to form a multi-channel RGB-like image.
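The channel concatenation step can be sketched as follows, assuming the three mappings have already been brought to a common resolution. The 64×64 size, the helper names `to_uint8` and `fuse_channels`, and the random input arrays are placeholders for illustration, not the settings used in the experiments.

```python
import numpy as np

def to_uint8(img):
    """Min-max scale an array to the 0..255 grayscale range."""
    img = np.asarray(img, dtype=float)
    span = img.max() - img.min()
    if span == 0:
        return np.zeros(img.shape, dtype=np.uint8)
    return np.round(255 * (img - img.min()) / span).astype(np.uint8)

def fuse_channels(mtf, rp, gaf):
    """Stack three equally sized grayscale maps into one H x W x 3 image."""
    if not (mtf.shape == rp.shape == gaf.shape):
        raise ValueError("all three maps must share the same resolution")
    return np.stack([to_uint8(mtf), to_uint8(rp), to_uint8(gaf)], axis=-1)

# Random stand-ins for the MTF, RP, and GAF images of one traffic sample.
rng = np.random.default_rng(0)
mtf_img, rp_img, gaf_img = (rng.random((64, 64)) for _ in range(3))
fused = fuse_channels(mtf_img, rp_img, gaf_img)
```

Scaling each channel independently keeps one mapping with a wide value range from dominating the others in the fused image.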

4.1.2. Dataset

We test our multi-channel imaging and steganographic framework on real BACnet traffic, including both normal operations and attack scenarios [19]. The dataset has clearly labeled types such as Normal, Covert Channel Attack (CCA), and Falsifying Attack. BACnet protocol is the core communication protocol for critical equipment such as HVAC and fire alarm systems in smart buildings [19]. The dataset is widely recognized for its diverse and realistic attack scenarios, making it highly suitable for evaluating the generalization capability of intrusion detection methods in contemporary network environments, including smart building infrastructures, such as the simulation of the control unit of the air handling device and Covert Channel Attacks in chiller controllers.

The cybersecurity of smart buildings extends beyond equipment controlled by the BACnet protocol and involves complex data interaction scenarios across systems and protocols. In building networks, multiple protocols often run at the same time, forming a multi-protocol hybrid environment. Two additional representative traffic datasets are therefore adopted to evaluate the proposed method's performance in both industrial control and general network anomaly scenarios. We use the real car hacking attack dataset Car-Hacking [32], which can be divided into five categories: normal, denial of service (DoS) attack, fuzzy attack, gear spoofing attack, and RPM gauge spoofing attack. Modern intelligent buildings are tightly integrated with the smart city infrastructure. To ensure a comprehensive evaluation and facilitate comparison with prior work, we also employ the widely used NSL-KDD dataset, in addition to the Car-Hacking dataset [33]. NSL-KDD is a classic benchmark dataset for IDS. There are five different types of traffic in the NSL-KDD dataset: normal, denial of service (DoS), probe, user to root (U2R), and remote to local (R2L).

If the chosen images are related to intelligent buildings, such as surveillance footage or equipment diagrams, they would trigger priority security investigations due to the correlation between transmission content and abnormal traffic, violating the original design goal of covert transmission. In cross-department intelligent building data sharing, remote sensing imagery of ground objects can support park planning communication, while agricultural disease imagery can support cafeteria supply chain monitoring. Both meet the criteria for reasonable transmission content. Embedding abnormal traffic in these contexts aligns with the actual requirement of employing regular data to conceal sensitive information.

The container images in our experiment are sourced from classification datasets, including the RSSCN7 and Corn pathology datasets [34]. Image classification is a fundamental image-understanding task that has been studied extensively in the context of AEs. The diversity of scene images in RSSCN7 presents significant challenges, as they are captured in different seasons and weather conditions and sampled at different scales. Images for each category are sampled at four different scales, with 100 images for each scale. Each of the seven classes therefore contains 400 images, for a total of 2800. The Corn pathology dataset covers maize leaf diseases and is designed to help researchers identify and classify different types of maize leaf diseases, which have varying degrees of impact on maize growth and yield. Compared to single-color or structurally simple images, the natural visual features of the two datasets are reduced, which increases the chance of adversarial samples not being identified, supporting the implementation of the double concealment mechanism.

4.1.3. Evaluation Metrics

In IDS classification tasks, common evaluation metrics such as Accuracy, Precision, Recall, and F1-score are appropriate for binary and multi-class problems. Prediction results fall into four categories: (1) TP (True Positive): positives correctly predicted as positive; (2) FP (False Positive): negatives incorrectly predicted as positive; (3) FN (False Negative): positives incorrectly predicted as negative; (4) TN (True Negative): negatives correctly predicted as negative.

The Accuracy (Acc) is the proportion of correctly predicted samples to the total number of samples:

$$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN}$$

The Precision (Pre) is the proportion of true positive examples to predicted positive examples:

$$\mathrm{Pre} = \frac{TP}{TP + FP}$$

The Recall (Rec) is the proportion of correctly predicted positive examples to all actual positive examples:

$$\mathrm{Rec} = \frac{TP}{TP + FN}$$

The F1-score (F1) is the harmonic mean of Recall and Precision, which balances the two:

$$\mathrm{F1} = \frac{2 \cdot \mathrm{Pre} \cdot \mathrm{Rec}}{\mathrm{Pre} + \mathrm{Rec}}$$
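The four metrics defined above can be computed directly from the confusion counts. The following is a minimal sketch: the function name `classification_metrics` and the example counts are hypothetical and chosen only for illustration; they are not results from the experiments.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Compute Acc, Pre, Rec and F1 from confusion-matrix counts.

    Ratios with an empty denominator are reported as 0.0 (an assumption
    of this sketch, not a convention stated in the paper).
    """
    total = tp + fp + fn + tn
    acc = (tp + tn) / total if total else 0.0
    pre = tp / (tp + fp) if (tp + fp) else 0.0
    rec = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * pre * rec / (pre + rec) if (pre + rec) else 0.0
    return {"Acc": acc, "Pre": pre, "Rec": rec, "F1": f1}


# Hypothetical counts, for illustration only.
metrics = classification_metrics(tp=90, fp=10, fn=5, tn=95)
print(metrics)
```

For multi-class problems, the same function can be applied per class in a one-vs-rest fashion and the results averaged; which averaging scheme the experiments use is not stated here.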

To evaluate data integrity in our steganographic transmission, we report the bit error rate (BER), the fraction of hidden abnormal traffic bits that are recovered incorrectly from the steganographic image after embedding and extraction. Bits per pixel (BPP) is a core metric for embedding capacity in steganography: it gives the average number of secret bits carried per pixel. Visual quality preservation is evaluated with the peak signal-to-noise ratio (PSNR) and the structural similarity index measure (SSIM) [35]. We also report the undetectability of our method against the modern SRNet steganalyzer [36].
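As a rough illustration of how BER, BPP, and PSNR are commonly computed, the sketch below uses NumPy. The function names, the 8-bit peak value of 255, and the toy inputs are assumptions made for this example; SSIM is omitted because its windowed definition [35] is longer than a short sketch allows.

```python
import numpy as np


def bit_error_rate(sent_bits, recovered_bits) -> float:
    """Fraction of payload bits that differ after extraction."""
    sent = np.asarray(sent_bits, dtype=np.uint8)
    recovered = np.asarray(recovered_bits, dtype=np.uint8)
    return float(np.mean(sent != recovered))


def bits_per_pixel(n_payload_bits: int, height: int, width: int) -> float:
    """Average number of secret bits carried by each pixel."""
    return n_payload_bits / (height * width)


def psnr(cover, stego, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB (8-bit images assumed by default)."""
    cover = np.asarray(cover, dtype=np.float64)
    stego = np.asarray(stego, dtype=np.float64)
    mse = np.mean((cover - stego) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


# Toy inputs, for illustration only.
ber = bit_error_rate([1, 0, 1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 0, 0, 1, 1])
bpp = bits_per_pixel(n_payload_bits=8192, height=64, width=64)
quality = psnr(np.zeros((4, 4), dtype=np.uint8), np.ones((4, 4), dtype=np.uint8))
print(ber, bpp, round(quality, 2))
```

A lower BER and a higher PSNR both indicate better preservation, of the payload and of the cover image respectively.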

4.2. Evaluation of Our Fusion Method

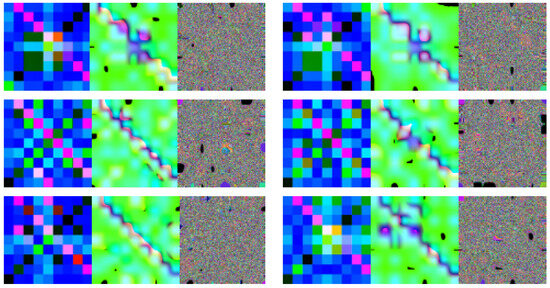

When transforming data into images, we use various color space models, including MTF, HSV, and LAB, to represent traffic images. The resulting images showcase the richness and detail of the underlying information. Examples can be seen in Figure 2.

Figure 2.

Images in different color spaces (every three images represent one network traffic sample, shown from left to right in the MTF, HSV, and LAB color spaces, respectively).

Table 1 shows that the fused color channel combination achieves a classification accuracy of 0.99, outperforming both the HSV and LAB combinations. This improvement can be attributed to its enhanced color representation and greater informational richness.

Table 1.

Performance comparison of different feature representation methods across datasets. Fused channels provide comprehensive color information, enabling the model to learn discriminative features more effectively for classification tasks.

4.3. Generalization Evaluation

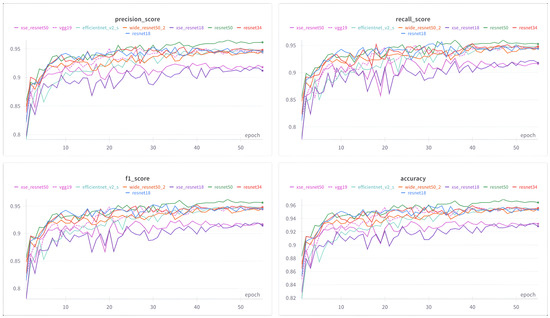

We evaluate recognition performance on the RSSCN7 dataset with a ResNet50 model pre-trained on ImageNet [37]. ResNet50, known for its deep architecture and strong feature extraction, is widely used in image processing. As shown in Figure 3, its F1-score, which balances Recall and Precision, improves consistently as the number of training epochs increases. Starting from the pre-trained weights also accelerates the convergence of image classification.

Figure 3.

Convergence curves and performance metrics for network intrusion classification model on the RSSCN7 Dataset.

Table 2 presents the recognition results of eight deep learning models, namely ResNet18, ResNet34, ResNet50, Xse_ResNet18, Xse_ResNet50, WideResNet50, EfficientNet, and VGG19, on two image datasets: Corn Pathology and RSSCN7. Most models achieve high accuracy on the clean Corn Pathology dataset, underscoring the effectiveness of DL in corn disease diagnosis. ResNet50 achieves the highest accuracy and F1-score on this dataset. On the RSSCN7 dataset, ResNet34 performs strongly, with competitive scores across multiple metrics. ResNet18 delivers consistent results on both datasets, slightly outperforming both ResNet50 and ResNet34. The modified Xse_ResNet architectures do not yield significant performance gains. EfficientNet performs robustly on both datasets but slightly trails the ResNet-based and WideResNet models.

Table 2.

Recognition performance of traffic images with eight backbones.

Table 3 shows that models trained only on clean images fail to recognize AEs effectively. Adversarial training (AT) improves recognition accuracy for both adversarial and clean samples: it highlights the key features of images, sharpens the detection of subtle variations, and increases the model's sensitivity to adversarial perturbations. Recognition accuracy improves most when adversarial and clean samples are reliably separated. We compare a standard model trained on clean images (PGD/0) with an AT model (PGD/1) on clean and adversarial inputs. By incorporating AEs during training, the PGD/1 model learns to withstand perturbations, classify AEs accurately, and focus on critical image features.

Table 3.

Adversarial training on the recognition performance of clean and adversarial examples. /0 denotes a standard model and /1 denotes a model fortified with adversarial training.
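To make the idea of PGD-based adversarial training concrete, the following sketch applies it to a small logistic-regression classifier in NumPy rather than to the deep backbones used in the experiments. The model, the perturbation budget `epsilon`, the step size, the number of steps, and the synthetic data are all assumptions chosen for illustration; the paper's actual attack settings are not given in this section.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def bce_loss(w, b, x, y) -> float:
    """Mean binary cross-entropy of a logistic model on inputs x."""
    p = sigmoid(x @ w + b)
    tiny = 1e-12  # avoids log(0)
    return float(-np.mean(y * np.log(p + tiny) + (1 - y) * np.log(1 - p + tiny)))


def input_gradient(w, b, x, y):
    """Gradient of each sample's loss with respect to its input, shape (n, d)."""
    p = sigmoid(x @ w + b)
    return (p - y)[:, None] * w[None, :]


def pgd_attack(w, b, x, y, epsilon=0.1, step=0.02, n_steps=10):
    """Projected gradient descent inside an L-infinity ball of radius epsilon."""
    x_adv = x.copy()
    for _ in range(n_steps):
        x_adv = x_adv + step * np.sign(input_gradient(w, b, x_adv, y))
        x_adv = np.clip(x_adv, x - epsilon, x + epsilon)  # projection step
    return x_adv


def adversarial_training_step(w, b, x, y, lr=0.1, **attack_kwargs):
    """One gradient step on the weights, using adversarial inputs only."""
    x_adv = pgd_attack(w, b, x, y, **attack_kwargs)
    p = sigmoid(x_adv @ w + b)
    grad_w = (p - y) @ x_adv / len(y)
    grad_b = float(np.mean(p - y))
    return w - lr * grad_w, b - lr * grad_b


# Synthetic data, for illustration only.
rng = np.random.default_rng(0)
x = rng.normal(size=(32, 8))
w = rng.normal(size=8)
b = 0.0
y = (x @ w > 0).astype(np.float64)  # labels the clean model gets right

x_adv = pgd_attack(w, b, x, y)
clean_loss = bce_loss(w, b, x, y)
adv_loss = bce_loss(w, b, x_adv, y)
w_new, b_new = adversarial_training_step(w, b, x, y)
print(clean_loss, adv_loss)
```

Repeating `adversarial_training_step` over many batches corresponds to the /1 setting in Table 3, while training on `x` alone corresponds to /0. With a single step (`n_steps=1`, `step=epsilon`) the attack reduces to FGSM.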

We further evaluate and compare several intrusion detection methods on the Car-Hacking dataset in Table 4. The reported results are from our replication of these methods with their canonical inputs, not our fused images. We focus on models that also perform well on NSL-KDD. We include classic algorithms such as KNN (K-Nearest Neighbor) and SVM (Support Vector Machine), which are widely employed in intrusion detection due to their strong classification capability and good generalization. We also include deep learning models that have shown outstanding performance on NSL-KDD, such as XYF-K, SAIDuCANT, and SSAE (Stacked Sparse Autoencoder) [38]. These models demonstrate strong network intrusion detection performance, and their robust feature extraction and learning can effectively handle complex attack patterns and data distributions. The experimental results show that the proposed method performs well across all evaluation metrics, with the majority of samples correctly classified.

Table 4.

Experimental results of anomaly traffic detection comparison on the Car-Hacking dataset.

As shown in Table 5, the proposed method outperforms traditional 4-bit LSB steganography on all datasets. On the BACnet dataset, our method achieves a PSNR of 44.5 dB (a 6.0 dB improvement over LSB) and an SSIM of 0.97, and reduces the BER from 15.24% to 3.47%. Similar improvements are observed on the NSL-KDD and Car-Hacking datasets, demonstrating the effectiveness of our J-UNIWARD-based approach for secure traffic data hiding.

Table 5.

Performance comparison of steganography methods for different network traffic datasets on the RSSCN7 dataset.
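For context on the baseline in Table 5, the sketch below shows one simple form of 4-bit spatial-domain LSB embedding: the four least significant bits of each cover pixel are overwritten with a 4-bit payload value. This illustrates the baseline only, not the proposed J-UNIWARD-based method. The function names, the sequential pixel order, and the random toy data are assumptions, and the channel is assumed lossless.

```python
import numpy as np


def lsb_embed(cover, payload_values, n_bits: int = 4):
    """Overwrite the n_bits least significant bits of the first pixels."""
    cover = np.asarray(cover, dtype=np.uint8)
    payload = np.asarray(payload_values, dtype=np.uint8)
    flat = cover.flatten()  # flatten() returns a copy, so cover is untouched
    if payload.size > flat.size:
        raise ValueError("payload does not fit into the cover image")
    if payload.size and int(payload.max()) >= (1 << n_bits):
        raise ValueError("payload values exceed n_bits")
    keep_mask = np.uint8((0xFF << n_bits) & 0xFF)  # 0xF0 for n_bits = 4
    flat[: payload.size] = (flat[: payload.size] & keep_mask) | payload
    return flat.reshape(cover.shape)


def lsb_extract(stego, n_values: int, n_bits: int = 4):
    """Read back the n_bits least significant bits of the first pixels."""
    flat = np.asarray(stego, dtype=np.uint8).ravel()
    return flat[:n_values] & np.uint8((1 << n_bits) - 1)


# Random toy data, for illustration only.
rng = np.random.default_rng(1)
cover = rng.integers(0, 256, size=(8, 8), dtype=np.uint8)
payload = rng.integers(0, 16, size=64, dtype=np.uint8)

stego = lsb_embed(cover, payload)
recovered = lsb_extract(stego, payload.size)
max_change = int(np.max(np.abs(stego.astype(int) - cover.astype(int))))
print(max_change)
```

Because each pixel may change by up to 15 gray levels, this kind of embedding leaves comparatively large spatial-domain distortion, which is consistent with the lower PSNR reported for the LSB baseline.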

4.4. Few-Shot Experiments

The rapid advancement of DL has spurred numerous frameworks that accelerate progress in image recognition and related fields. This growth is driven by large-scale datasets that enable effective training and optimization. In traffic analysis for smart buildings, however, data collection is often costly and time-consuming, yielding small datasets. It is therefore crucial to evaluate model performance in few-shot learning scenarios. To test generalization under low-data conditions, we conduct few-shot experiments with our framework and compare it to several classic DL networks: ResNet34, ResNet50, VGG19, and EfficientNet. Models are trained using 50, 100, and 200 samples, and the results are summarized in Table 6. The classical CNN models show a significant decline in performance, particularly when the sample size is reduced to 50. In contrast, our method maintains strong recognition in the few-shot regime.

Table 6.

Performance comparison (standard deviation over 5 runs) of a small number of samples on the Car-Hacking dataset.

4.5. Visualization Evaluation

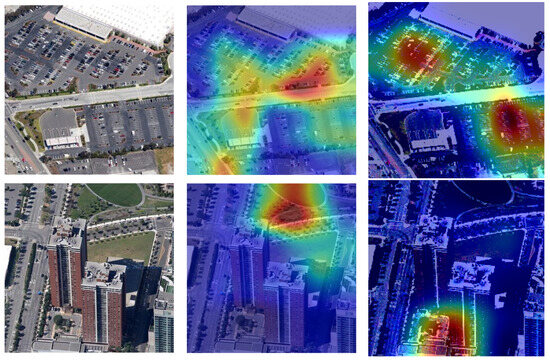

We apply Grad-CAM [39] to identify the discriminative regions of different models. We randomly select two images from the RSSCN7 dataset and compute Grad-CAM visualizations from the final convolutional layer of a ResNet50 model on 224 × 224 input images. Figure 4 shows the heatmaps of clean samples, with attention distributed over different parts of the images. In a parking scene, areas with high vehicle density tend to receive strong activation in the heatmap, whereas open or vegetated regions tend to receive weaker activation. In the heatmaps of AEs generated by the FGSM attack (right column), a noticeable shift in the attention regions can be observed. This indicates that, although an AE appears nearly identical to the original, the attention of DL-based models changes when processing such inputs. This redistribution of focus may lead to misclassification. For instance, on the RSSCN7 dataset, recognition accuracy decreased under these conditions.

Figure 4.

The heatmap of clean samples and adversarial samples.
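The core Grad-CAM computation behind such heatmaps is short enough to sketch in NumPy. The function below assumes that the activations and the gradients of the class score at the chosen convolutional layer have already been extracted from the network (for example, with framework hooks); the function name and the tiny 2 × 2 example are hypothetical.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM map from layer activations and class-score gradients.

    Both inputs have shape (K, H, W): K feature maps of size H x W.
    """
    a = np.asarray(activations, dtype=np.float64)
    g = np.asarray(gradients, dtype=np.float64)
    weights = g.mean(axis=(1, 2))              # global-average-pooled gradients
    cam = np.einsum("k,khw->hw", weights, a)   # weighted sum of feature maps
    cam = np.maximum(cam, 0.0)                 # ReLU keeps positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam     # normalise to [0, 1]


# Tiny hand-made example with K = 2 feature maps, for illustration only.
activations = np.array([[[1.0, 0.0], [0.0, 0.0]],
                        [[0.0, 0.0], [0.0, 2.0]]])
gradients = np.array([np.ones((2, 2)), -np.ones((2, 2))])
heatmap = grad_cam(activations, gradients)
print(heatmap)
```

In practice the resulting low-resolution map is upsampled to the input size (224 × 224 here) and overlaid on the image. Comparing the maps of a clean image and its AE makes the attention shift described above visible.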

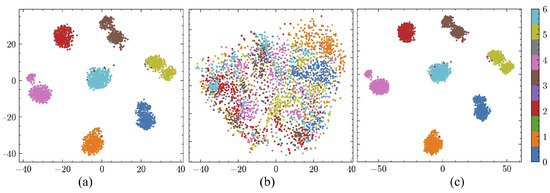

Figure 5 compares three 2D t-SNE projections, generated with perplexity = 30, learning rate = 200, 1000 iterations, and a fixed random seed of 42. The clean samples (left) form well-separated, compact clusters with distinct boundaries, demonstrating effective feature discrimination. Conversely, the AEs (middle) display complete intermixing of classes, with uniform color distribution and no discernible cluster structure. Adversarial perturbations disrupt the high-dimensional feature extraction process, causing similar samples to lose cohesion and dissimilar samples to overlap. This intermixing highlights the model's vulnerability to AEs, as the feature space loses its semantic coherence. The robust samples (right) show partially recovered cluster structures with improved separation, indicating that defense mechanisms can mitigate the impact of adversarial perturbations.

Figure 5.

The clustering visualization of the RSSCN7 dataset. (a) The clustering of clean examples, (b) the clustering of adversarial examples, and (c) the clustering of robust examples.

4.6. Security Evaluation

Table 7 presents the results of undetectability of our method against the modern SRNet steganalyzer. The experiments compare three configurations: Stego Only (applying only the J-UNIWARD embedding), AE Only (applying only the adversarial perturbation), and our full method (AE+Stego). The results demonstrate that our complete approach (AE+Stego) achieves near-optimal security performance with the highest average detection error and lowest statistical detectability across diverse image domains and payload sizes. This confirms that integrating adversarial perturbations optimized for the frequency domain enhances the stealth of the steganographic payload, making it substantially more resistant to detection.

Table 7.

Steganalysis ablation study evaluating undetectability against SRNet. Performance is measured by the average detection error rate across various payload sizes.
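The exact definition of the detection error rate is not restated here. A common convention in the steganalysis literature, assuming equal numbers of cover and stego images, is the average of the false-alarm rate and the missed-detection rate. The sketch below follows that convention, with a hypothetical function name and made-up detector decisions.

```python
def detection_error(cover_flagged, stego_flagged) -> float:
    """Average of false-alarm and missed-detection rates.

    cover_flagged: detector decisions on cover images (True = flagged as stego).
    stego_flagged: detector decisions on stego images (True = flagged as stego).
    """
    p_fa = sum(bool(f) for f in cover_flagged) / len(cover_flagged)
    p_md = sum(not f for f in stego_flagged) / len(stego_flagged)
    return 0.5 * (p_fa + p_md)


# Made-up decisions, for illustration only.
p_e = detection_error(
    cover_flagged=[False, True, False, False],
    stego_flagged=[True, False, False, True],
)
print(p_e)
```

Under this convention, a value near 0.5 means the steganalyzer does no better than random guessing, so higher values indicate better undetectability.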

4.7. Ablation Evaluation

Table 8 evaluates the classification performance on the NSL-KDD dataset after individually removing each channel from the fused multi-channel input. The results indicate that the removal of any single channel leads to a statistically significant decrease in performance compared to the full model. For example, excluding the RP channel, which captures structural similarities and periodicity, resulted in the most noticeable decline in detecting R2L and U2R attacks, accompanied by a drop in F1-score. Similarly, removing the GAF channel, responsible for encoding temporal correlations, impaired performance, with the most substantial impact on the detection of Probe and DoS attacks. The absence of the MTF channel, which models state transition probabilities, reduced overall accuracy. These results confirm that each channel contributes unique and complementary information pertaining to abnormal traffic, and that integration is critical to the robust and high-performance detection capability achieved by our comprehensive framework.

Table 8.

Ablation study on the contribution of individual channels in the multi-channel fusion method.
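To illustrate what each of the three channels encodes, the following NumPy sketch builds an MTF, a GAF, and an RP image from a single one-dimensional series and stacks them into a three-channel image. It is a simplified sketch under several assumptions that are not taken from the paper: the summation form of the GAF, quantile binning with four bins for the MTF, a fixed distance threshold for the RP, and the channel order (MTF, GAF, RP).

```python
import numpy as np


def rescale(x, lo=-1.0, hi=1.0):
    """Linearly rescale a series to [lo, hi]."""
    x = np.asarray(x, dtype=np.float64)
    span = x.max() - x.min()
    if span == 0:
        return np.full_like(x, (lo + hi) / 2)
    return lo + (x - x.min()) * (hi - lo) / span


def gramian_angular_field(x):
    """Summation GAF: cos(phi_i + phi_j), encoding temporal correlations."""
    phi = np.arccos(np.clip(rescale(x), -1.0, 1.0))
    return np.cos(phi[:, None] + phi[None, :])


def markov_transition_field(x, n_bins=4):
    """MTF: transition probability between the quantile bins of x_i and x_j."""
    x = np.asarray(x, dtype=np.float64)
    edges = np.quantile(x, np.linspace(0, 1, n_bins + 1)[1:-1])
    q = np.digitize(x, edges)                        # bin index per time step
    counts = np.zeros((n_bins, n_bins))
    np.add.at(counts, (q[:-1], q[1:]), 1.0)          # consecutive transitions
    row_sums = counts.sum(axis=1, keepdims=True)
    probs = np.divide(counts, row_sums, out=np.zeros_like(counts),
                      where=row_sums > 0)
    return probs[q[:, None], q[None, :]]


def recurrence_plot(x, threshold=0.1):
    """RP: 1 where two time steps have similar values, else 0."""
    s = rescale(x, 0.0, 1.0)
    return (np.abs(s[:, None] - s[None, :]) <= threshold).astype(np.float64)


def fuse_channels(x):
    """Stack MTF, GAF and RP into one uint8 image of shape (n, n, 3)."""
    mtf = markov_transition_field(x)                 # values in [0, 1]
    gaf = (gramian_angular_field(x) + 1.0) / 2.0     # [-1, 1] -> [0, 1]
    rp = recurrence_plot(x)                          # values in {0, 1}
    stacked = np.stack([mtf, gaf, rp], axis=-1)
    return np.round(stacked * 255).astype(np.uint8)


# Synthetic series standing in for one traffic feature sequence.
series = np.sin(np.linspace(0, 4 * np.pi, 32))
image = fuse_channels(series)
print(image.shape, image.dtype)
```

Dropping one slice of the last axis before training reproduces the kind of single-channel removal evaluated in Table 8.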

We also identify two primary factors contributing to these errors: (1) the inherent challenge in capturing and representing extremely sparse and brief anomalous events within the fixed-length segments used for image reconstruction, which can lead to a loss of critical temporal detail. (2) This analysis underscores that while our multi-channel representation enhances feature integrity for most traffic types, attacks with very low signal-to-noise ratios remain challenging.

5. Conclusions

The increasing sophistication of smart building networks, which combine critical systems such as access control, energy monitoring, and HVAC management, creates a pressing demand for robust anomaly detection and secure data exchange. However, current approaches struggle with two fundamental weaknesses. Single-mode traffic analysis often overlooks the complex nature of multi-faceted attacks, and traditional secure transmission methods like encryption or VPNs inadvertently highlight the very data they aim to protect, rendering it vulnerable to interception. To address these limitations, we propose a novel framework that enables secure and undetectable traffic transmission. Our solution employs a two-stage approach. First, it transforms anomalous traffic patterns into comprehensive visual representations through complementary mappings to prevent feature loss. Second, it embeds these representations into ordinary cover images using a frequency-domain steganographic technique that maintains the statistical properties of the carrier image, so that no visible traces are left. Experimental evaluations confirm the effectiveness of the proposed framework. It maintains a low BER for data recovery while simultaneously evading detection by standard security appliances. This dual capability facilitates the reliable and covert sharing of critical operational intelligence across smart building infrastructures.

In future work, we will explore the framework’s application to encrypted traffic and pursue real-time optimizations for large-scale networks. Furthermore, as a complementary direction beyond the cover-based steganography methods used in this work, we will investigate carrier-free steganography mechanisms based on deep learning to achieve enhanced secrecy.

Author Contributions

Z.Q., Conceptualization; Supervision; Validation; Writing—original draft; Writing—review and editing. F.L., Writing—review and editing; Validation; Investigation, Funding Acquisition. M.H., Writing—original draft; Data curation; Resources; Validation. D.Z., Writing—original draft; Investigation; Software. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported in part by the China Southern Power Grid’s major network-level scientific and technological project (Research and Application of Multi-dimensional Active Defense Technology for Digital Grid), project number 037800KC24040002 (GDKJXM20240428).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The study described in this paper is based on public datasets, which have been already referenced before in the manuscript. This information is repeated here: The traffic dataset is described and available in [20,32,33]. The image dataset is described and available in [34].

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could appear to influence the work reported in this paper.

References

1. Díaz-Rodríguez, N.; Del Ser, J.; Coeckelbergh, M.; López de Prado, M.; Herrera-Viedma, E.; Herrera, F. Connecting the dots in trustworthy Artificial Intelligence: From AI principles, ethics, and key requirements to responsible AI systems and regulation. Inf. Fusion 2023, 99, 101896.

2. He, X.; Dong, H.; Yang, W.; Li, W. Multi-source information fusion technology and its application in smart distribution power system. Sustainability 2023, 15, 6170.

3. Alhomayani, F.; Mahoor, M.H. Deep learning methods for fingerprint-based indoor positioning: A review. J. Locat. Based Serv. 2020, 14, 129–200.

4. Verma, A.; Prakash, S.; Srivastava, V.; Kumar, A.; Mukhopadhyay, S.C. Sensing, controlling, and IoT infrastructure in smart building: A review. IEEE Sensors J. 2019, 19, 9036–9046.

5. Bansal, M.; Chana, I.; Clarke, S. A Survey on IoT Big Data: Current Status, 13 V’s Challenges, and Future Directions. ACM Comput. Surv. 2021, 6, 53.

6. Zhuang, X.; Tang, Z.; Lin, S.; Ding, Z. Prediction and Optimization of the Restoration Quality of University Outdoor Spaces: A Data-Driven Study Using Image Semantic Segmentation and Explainable Machine Learning. Buildings 2025, 15, 2936.

7. Chaudhari, P.; Xiao, Y.; Cheng, M.M.C.; Li, T. Fundamentals, algorithms, and technologies of occupancy detection for smart buildings using IOT sensors. Sensors 2024, 24, 2123.

8. Wen, W.; Fan, J.; Zhang, Y.; Fang, Y. APCAS: Autonomous Privacy Control and Authentication Sharing in Social Networks. IEEE Trans. Comput. Soc. Syst. 2023, 10, 3169–3180.

9. Zhang, D.; Liang, Y.; Huang, Q.; Huang, X.; Liao, P.; Yang, M.; Zeng, L. FreqAT: An Adversarial Training Based on Adaptive Frequency-Domain Transform. In Advanced Data Mining and Applications; Sheng, Q.Z., Dobbie, G., Jiang, J., Zhang, X., Zhang, W.E., Manolopoulos, Y., Wu, J., Mansoor, W., Ma, C., Eds.; Springer: Singapore, 2025; pp. 287–301.

10. Manivannan, D. Recent endeavors in machine learning-powered intrusion detection systems for the internet of things. J. Netw. Comput. Appl. 2024, 229, 103925.

11. Ruhil, S.; Bhadauria, S.; Kushwaha, J.P. Encrypted Network Traffic Classification using Deep Learning. In Proceedings of the 2024 15th International Conference on Computing Communication and Networking Technologies (ICCCNT), Kamand, India, 24–28 June 2024; pp. 1–6.

12. Huotari, M.; Malhi, A.; Främling, K. Machine learning applications for smart building energy utilization: A survey. Arch. Comput. Methods Eng. 2024, 31, 2537–2556.

13. Zhao, L.; Fan, X.; Hawbani, A.; Xu, L.; Yu, K.; Liu, Z.; Alfarraj, O. Generative abnormal data detection for enhancing cellular vehicle-to-everything-based road safety. IEEE Trans. Green Commun. Netw. 2024, 8, 1466–1478.

14. Zhang, D.; Shafiq, M.; Srivastava, G.; Gadekallu, T.R.; Wang, L.; Gu, Z. STBCIoT: Securing the Transmission of Biometric Images in Customer IoT. IEEE Internet Things J. 2024, 11, 16279–16288.

15. Peng, Y.; Hu, D.; Wang, Y.; Chen, K.; Pei, G.; Zhang, W. StegaDDPM: Generative Image Steganography based on Denoising Diffusion Probabilistic Model. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 7143–7151.

16. Duan, R.; Chen, Y.; Niu, D.; Yang, Y.; Qin, A.K.; He, Y. Advdrop: Adversarial attack to dnns by dropping information. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 7506–7515.

17. Baluja, S. Hiding Images within Images. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 1685–1697.

18. Zhang, S.; Lin, Y.; Yu, J.; Zhang, J.; Xuan, Q.; Xu, D.; Wang, J.; Wang, M. HFAD: Homomorphic Filtering Adversarial Defense Against Adversarial Attacks in Automatic Modulation Classification. IEEE Trans. Cogn. Commun. Netw. 2024, 10, 880–892.

19. Moosavi, S.A.; Asgari, M.; Kamel, S.R. Developing a comprehensive BACnet attack dataset: A step towards improved cybersecurity in building automation systems. Data Brief 2024, 57, 111192.

20. Yimer, T.; Toutsop, O.; Kornegay, K. Secure BACnet Device Communication and Verification for MS/TP Networks. In Proceedings of the 2024 IEEE World AI IoT Congress (AIIoT), Seattle, WA, USA, 29–31 May 2024; pp. 0068–0076.

21. Desta, A.K.; Ohira, S.; Arai, I.; Fujikawa, K. MLIDS: Handling raw high-dimensional CAN bus data using long short-term memory networks for intrusion detection in in-vehicle networks. In Proceedings of the 2020 30th International Telecommunication Networks and Applications Conference (ITNAC), Melbourne, VIC, Australia, 25–27 November 2020; pp. 1–7.

22. Kang, M.J.; Kang, J.W. Intrusion detection system using deep neural network for in-vehicle network security. PLoS ONE 2016, 11, e0155781.

23. Sharma, Y.; Ding, G.W.; Brubaker, M. On the effectiveness of low frequency perturbations. arXiv 2019, arXiv:1903.00073.

24. Luo, C.; Lin, Q.; Xie, W.; Wu, B.; Xie, J.; Shen, L. Frequency-driven imperceptible adversarial attack on semantic similarity. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 15294–15303.

25. Li, T.; Li, M.; Yang, Y.; Deng, C. Frequency domain regularization for iterative adversarial attacks. Pattern Recognit. 2023, 134, 109075.

26. Du, C.; Huo, C.; Zhang, L.; Chen, B.; Yuan, Y. Fast C&W: A Fast Adversarial Attack Algorithm to Fool SAR Target Recognition with Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4010005.

27. Cheng, G.; Sun, X.; Li, K.; Guo, L.; Han, J. Perturbation-Seeking Generative Adversarial Networks: A Defense Framework for Remote Sensing Image Scene Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5605111.

28. Shin, R.; Song, D. Jpeg-resistant adversarial images. In Proceedings of the NIPS 2017 Workshop on Machine Learning and Computer Security, Long Beach, CA, USA, 8 December 2017; Volume 1, p. 8.

29. Wang, Y.; Zou, D.; Yi, J.; Bailey, J.; Ma, X.; Gu, Q. Improving adversarial robustness requires revisiting misclassified examples. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 27–30 April 2020.

30. Su, A.; Ma, S.; Zhao, X. Fast and secure steganography based on J-UNIWARD. IEEE Signal Process. Lett. 2020, 27, 221–225.

31. Carlini, N.; Wagner, D. Towards evaluating the robustness of neural networks. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2017; pp. 39–57.

32. Song, H.M.; Woo, J.; Kim, H.K. In-vehicle network intrusion detection using deep convolutional neural network. Veh. Commun. 2020, 21, 100198.

33. Meena, G.; Choudhary, R.R. A review paper on IDS classification using KDD 99 and NSL KDD dataset in WEKA. In Proceedings of the 2017 International Conference on Computer, Communications and Electronics (Comptelix), Jaipur, India, 1–2 July 2017; pp. 553–558.

34. Singh, D.; Jain, N.; Jain, P.; Kayal, P.; Kumawat, S.; Batra, N. PlantDoc: A Dataset for Visual Plant Disease Detection. In Proceedings of the 7th ACM IKDD CoDS and 25th COMAD, Hyderabad, India, 5–7 January 2020.

35. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

36. Boroumand, M.; Chen, M.; Fridrich, J. Deep Residual Network for Steganalysis of Digital Images. IEEE Trans. Inf. Forensics Secur. 2019, 14, 1181–1193.

37. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

38. Olufowobi, H.; Young, C.; Zambreno, J.; Bloom, G. SAIDuCANT: Specification-based automotive intrusion detection using controller area network (CAN) timing. IEEE Trans. Veh. Technol. 2019, 69, 1484–1494.

39. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).