Bridge Structural Health Monitoring: A Multi-Dimensional Taxonomy and Evaluation of Anomaly Detection Methods

Abstract

1. Introduction

1.1. Motivation for Conducting a Systematic Literature Review on SHM Anomaly Detection

1.2. Existing Literature Reviews on Abnormal Data Detection in SHM of Bridges

1.3. Contributions

- Multi-Dimensional Taxonomy: A novel four-dimensional taxonomy is proposed, encompassing real-time capability, multivariate support, analysis domain (time, frequency, time-frequency), and detection methodology (distance-based, predictive, and image processing). It enables a holistic and operationally relevant classification of anomaly detection methods.

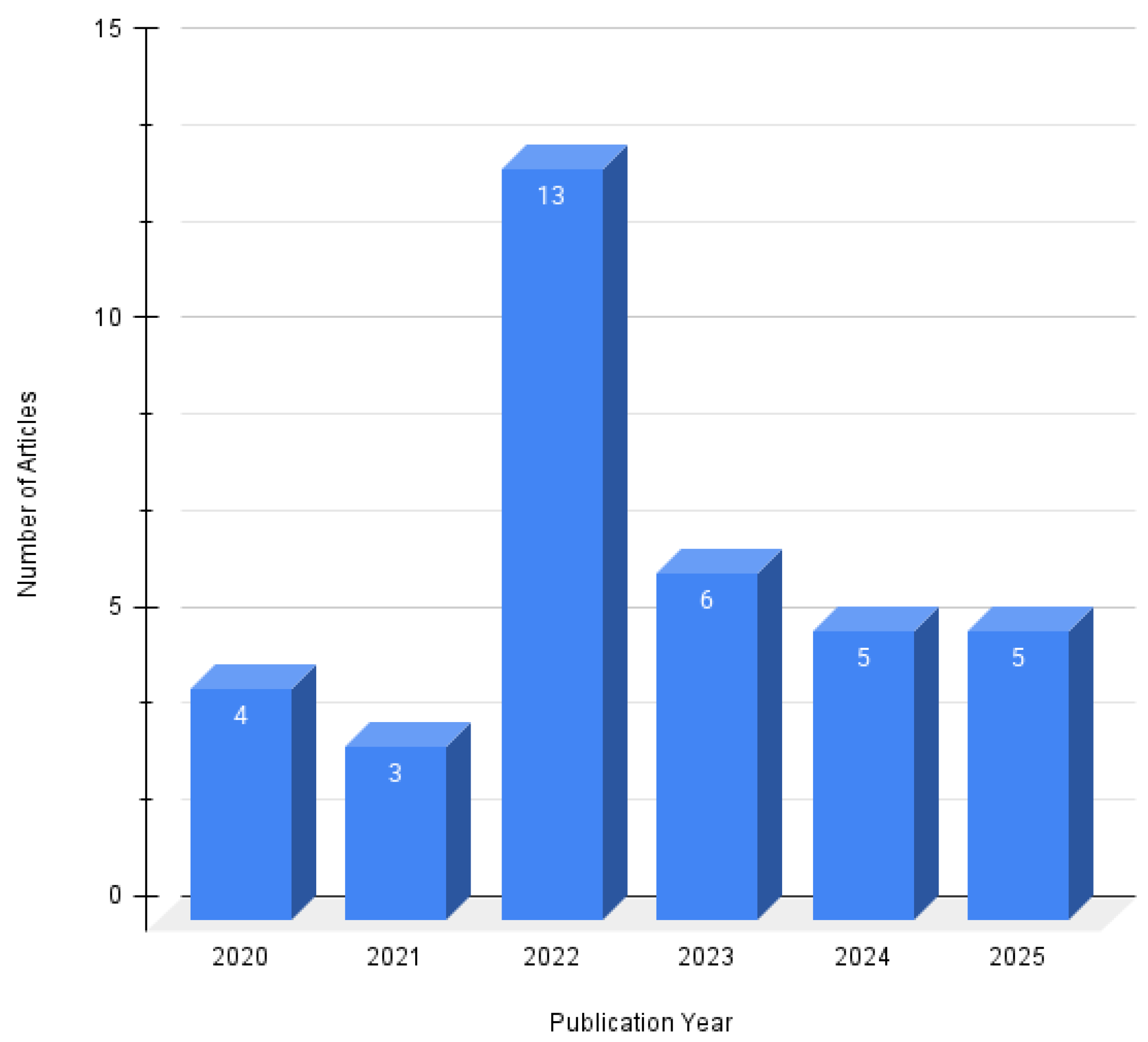

- Comparative Analysis: The study systematically evaluates 36 peer-reviewed articles published between 2020 and 2025 using standardized performance metrics (accuracy, precision, recall, and F1-score), offering a detailed synthesis of strengths, limitations, and deployment feasibility across different detection paradigms.

- Identification of Underexplored Areas: The review highlights critical gaps in current research, including limited adoption of real-time processing (only 11 studies), sparse use of multivariate sensor fusion (only 8 studies), and under-utilization of frequency and time-frequency domain analysis.

- Deployment-Oriented Insights: Robustness, scalability, interpretability, and data dependency of each detection method are assessed, providing actionable guidance for real-world SHM system integration.

- Future Research Directions: The study outlines pathways for advancing SHM anomaly detection, including hybrid frameworks, lightweight and explainable AI models, multi-modal sensor fusion, domain adaptation, and the need for benchmark datasets and standardized evaluation protocols.

- RQ1: How frequently are different abnormal data detection techniques used in SHM studies, and which method dominates current research?

- RQ2: How do various abnormal data detection methods perform in terms of real-time capability, analysis domain, and multivariate analysis?

- RQ3: How do anomaly detection methods for bridge SHM compare in terms of detection performance across different fault types and study contexts?

- RQ4: What are the key challenges in abnormal data detection, and how can emerging advancements improve detection accuracy in future research?

1.4. Overview of the Systematic Literature Review Approach

2. Research Methodology

2.1. Categories Definition

2.1.1. Abnormal Data Detection Methods

Distance-Based Methods

Predictive Methods

Image Processing Methods

Embedded Role of Statistical Methods in SHM Anomaly Detection Frameworks

2.1.2. Real-Time Capability

2.1.3. Domain Analysis

2.1.4. Multivariate Analysis

2.2. Review Protocol Development

2.2.1. Selection and Rejection Criteria

2.2.2. Search Process

3. Classification Results

3.1. Abnormal Data Detection Methods

3.2. Integration of Real-Time Processing in Structural Health Monitoring Frameworks

3.2.1. Recent Advances in Real-Time Anomaly Detection for Bridge SHM

3.2.2. Real-Time SHM: Bottlenecks and Deployment Hurdles

3.3. Analysis Domain Investigations

3.4. Multivariate Analysis Capability Investigations

- Multivariate time series models: Studies such as [17,30,40] employ multivariate time series models to analyze structural response data captured from multiple sensors over time. These methods are particularly effective in capturing temporal dependencies and cross-sensor correlations, which are critical for detecting subtle anomalies under dynamic structural conditions.

- Multivariate feature-based learning via CNN: Studies including [41,43,54,57] utilize CNNs to process structured multivariate input features. These features typically consist of time-domain and frequency-domain indicators extracted from raw monitoring signals. The CNN architecture allows for joint learning across these input dimensions, enabling the model to detect a variety of abnormal patterns.

- Multivariate machine learning approaches: The study by [60] presents a comprehensive machine learning-based framework that integrates multivariate analysis for anomaly detection. It processes diverse signal descriptors (such as strain, displacement, vibration, and environmental parameters) from multiple sensor types. By applying classification models that capture inter-dependencies among these signals, the framework enhances detection accuracy and robustness. This approach is particularly effective under varying environmental and loading conditions, offering improved generalization while maintaining computational efficiency suitable for real-time SHM applications.
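
To make the cross-sensor correlation argument above concrete, the following minimal Python sketch flags a reading that looks normal on each channel individually but breaks the usual relationship between two channels. It uses the Mahalanobis distance, a generic multivariate distance measure chosen here purely for illustration; it is not the method of any of the cited studies. The sensor values, the function names (`fit_bivariate`, `mahalanobis`), and the threshold of 3.0 are assumptions.

```python
import math

def fit_bivariate(samples):
    """Estimate the mean and the 2x2 sample covariance of (x, y) pairs."""
    n = len(samples)
    mx = sum(x for x, _ in samples) / n
    my = sum(y for _, y in samples) / n
    sxx = sum((x - mx) ** 2 for x, _ in samples) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in samples) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in samples) / (n - 1)
    return (mx, my), (sxx, sxy, syy)

def mahalanobis(point, mean, cov):
    """Mahalanobis distance of a 2-D point, using the closed-form 2x2 inverse."""
    sxx, sxy, syy = cov
    det = sxx * syy - sxy ** 2  # must be non-zero (channels not perfectly collinear)
    dx, dy = point[0] - mean[0], point[1] - mean[1]
    d2 = (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det
    return math.sqrt(d2)

# Hypothetical reference readings from two neighbouring sensors that move together.
reference = [(float(i), float(i) + (0.2 if i % 2 else -0.2)) for i in range(10)]
mean, cov = fit_bivariate(reference)

THRESHOLD = 3.0                # assumed cut-off; would need tuning on real data
consistent = (8.0, 8.1)        # both channels agree
inconsistent = (8.0, 2.0)      # each value is within range, but the pair is not

for name, reading in [("consistent", consistent), ("inconsistent", inconsistent)]:
    d = mahalanobis(reading, mean, cov)
    print(f"{name}: distance={d:.2f}, anomaly={d > THRESHOLD}")
```

A per-channel range check would accept both readings, since 8.0 and 2.0 each fall inside the reference range of their channel. Only the joint view exposes the second reading as inconsistent.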

4. Analysis of Abnormal Data Detection Methods

4.1. Distance-Based Methods

4.2. Predictive Methods

4.2.1. Bayesian Methods

4.2.2. Regression Methods

4.2.3. Neural Network Methods

4.3. Image Processing Methods

4.3.1. Two-Dimensional Image Input Classes

4.3.2. Hybrid Input Classes

5. Comparative Evaluation of Various Anomaly Detection Methods

5.1. Robustness

5.2. Scalability

5.3. Real-World Deployment

5.4. Interpretability

5.5. Data Dependency

6. Challenges and Future Research Directions

6.1. Key Challenges in Abnormal Data Detection for SHM Systems

- Computational Complexity and Real-Time Limitations: Deep learning-based image processing techniques have shown excellent accuracy in detecting complex anomalies in SHM systems [32,38,57,59,60]. These methods often use large neural networks such as CNNs, which require significant computing power. As a result, running these models on low-power devices such as edge systems or embedded hardware is very challenging. This limits their use in real-time applications, where fast responses are necessary to prevent serious damage or failure. Studies such as [13,16] have tried to reduce the processing delay by using faster algorithms, but the trade-off between speed and detection accuracy remains a major issue. Designing lightweight models that run efficiently on limited hardware without sacrificing performance therefore remains an open challenge.

- Lack of Interpretability: Neural networks have demonstrated strong capabilities in capturing complex patterns within SHM datasets [14,32]. However, their decision-making processes are often opaque, functioning as “black-box” models with limited interpretability. When an anomaly is detected, it is unclear which input features contributed to the decision or how the internal representations led to the output. This lack of transparency poses challenges in safety-critical contexts such as bridge monitoring, where understanding the rationale behind alerts is essential. In SHM applications, particularly during emergency evaluations or maintenance planning, practitioners require interpretable outputs to support timely and reliable decision-making. Consequently, despite their high detection accuracy, the limited explainability of neural networks remains a major limitation, hindering their broader adoption in operational SHM systems.

- Under-utilization of Multivariate and Domain Analysis: Many SHM studies rely on data from a single type of sensor, such as acceleration or strain. However, combining data from different types of sensors such as temperature, displacement, and humidity can provide a more complete picture of a bridge’s condition. Still, only a small number of studies have used this approach in their detection systems [17,57,60]. In addition, most studies analyze data only in the time domain. Time-domain methods are simple and fast, but they can miss important features that show up only when data is transformed into other forms. Frequency-domain and time–frequency-domain techniques, such as Fourier Transform or Wavelet Transform, can uncover hidden or subtle faults that are not obvious in raw signals. These methods are very useful, especially for detecting early or small-scale changes in structures. However, they are not widely applied in current research [46,58]. As a result, valuable insights may be lost, and some types of damage may go undetected.

- Class Imbalance and Fault Diversity: Many SHM studies rely on datasets that contain a large number of samples for common fault types, but very few instances of rare yet critical faults [32,39,48]. This imbalance skews model learning, leading to poor detection of minority classes. Models trained on frequent faults often misclassify or miss rare anomalies, which undermines reliability, especially in emergency scenarios. A few reviewed studies have addressed class imbalance using techniques such as synthetic oversampling, cost-sensitive learning, or data augmentation. For instance, Qu et al. [55] used attention mechanisms and small sample augmentation to boost minority class recall. Similarly, Du et al. [37] designed a CNN framework that explicitly handles imbalance under limited data, and Mao et al. [49] applied GANs and autoencoders to improve detection in skewed datasets. However, these strategies are not applied consistently across the literature. Most studies also report aggregated accuracy and F1 scores without fault-type breakdowns, which limits visibility into fault-specific performance and masks any gains in rare fault detection. Fault diversity is another concern. Many models target specific fault types and fail to detect others such as bias, drift, gain errors, or environmental noise [15,31]. This narrow focus reduces generalization across bridge types and conditions. Addressing both imbalance and fault diversity is key to building robust, transferable SHM systems.

- Data Quality and Labeling Constraints: Training supervised learning models requires large amounts of labeled data. However, in SHM systems, especially for rare or unusual anomalies, labeled data is often very limited [37,49]. This makes it hard for the models to learn effectively and detect these uncommon but important faults. Another challenge is that labeling data by hand is time-consuming, costly, and can sometimes introduce errors. It also requires expert knowledge, which is not always available. As a result, many datasets remain partially labeled or entirely unlabeled. To solve this issue, more research is focusing on unsupervised and semi-supervised learning approaches. These methods can learn patterns from unlabeled data or from just a small number of labeled samples. This makes them more practical for SHM where obtaining labeled data is difficult or expensive [37,49].
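
The class imbalance point above, that aggregated metrics can hide poor detection of rare faults, can be illustrated with a small Python sketch. The labels below are hand-made and hypothetical (90 normal windows, 8 bias faults, 2 drift faults), and the helper names are assumptions introduced for illustration; the sketch does not reproduce results from any reviewed study.

```python
from collections import Counter

def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def per_class_recall(y_true, y_pred):
    """Recall per true label: correctly predicted samples / all samples of that label."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / totals[label] for label in totals}

def macro_recall(y_true, y_pred):
    """Unweighted mean of the per-class recalls, so rare classes count equally."""
    recalls = per_class_recall(y_true, y_pred)
    return sum(recalls.values()) / len(recalls)

# Hypothetical ground truth: an imbalanced set of labelled data windows.
y_true = ["normal"] * 90 + ["bias"] * 8 + ["drift"] * 2
# Hypothetical classifier that handles frequent classes but never predicts "drift".
y_pred = ["normal"] * 90 + ["bias"] * 8 + ["normal"] * 2

print("accuracy:", accuracy(y_true, y_pred))
print("per-class recall:", per_class_recall(y_true, y_pred))
print("macro recall:", macro_recall(y_true, y_pred))
```

In this constructed case the overall accuracy stays high even though every drift fault is missed, which only the per-class breakdown and the macro-averaged recall reveal.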

6.2. Future Research Directions

- Multi-modal and Adaptive Frameworks: No single method can handle all types of anomalies in SHM data. Statistical, distance-based, predictive, and image-based techniques each offer unique advantages. Distance-based methods are simple and interpretable. However, they often fail with high-dimensional or large-scale datasets. Deep learning models can detect complex patterns, but they require labeled data and high computational resources. Hybrid methods combine multiple techniques to balance these trade-offs. For example, integrating CNNs with handcrafted statistical features improves detection accuracy. It also keeps the model lightweight and easier to interpret [32,39]. Sensor fusion further enhances performance. It merges data from different sources such as strain, vibration, and temperature sensors. This fusion captures complementary features and reduces false positives. Transfer learning is also essential. It helps models adapt to new bridges or sensor layouts. Domain adaptation techniques allow anomaly detectors to generalize without retraining [59]. This reduces deployment time and improves scalability. Future SHM systems should be both multi-modal and adaptive. They must select or combine methods based on data type, resource constraints, and latency requirements. This adaptability ensures robust and efficient anomaly detection in diverse bridge environments.

- Lightweight and Explainable Models: While many deep learning models (CNNs, LSTMs, etc.) exhibit strong performance in detecting anomalies, they often require substantial computational resources. Consequently, it is difficult to deploy these models on embedded or low-power devices commonly used in field-based bridge monitoring. To address this, researchers have explored model compression techniques such as pruning (removing redundant neurons or layers) and quantization (reducing the numerical precision of weights and activations). These methods reduce model size and computational load without significantly compromising accuracy [52,54]. Beyond model compression, another challenge is interpretability. In safety-critical applications like bridge SHM, a lack of transparency can hinder trust and adoption. It is important for engineers to understand why a model flagged a particular anomaly and which features contributed most to the decision. Therefore, explainability is essential, not only for validation and debugging but also for regulatory compliance and operational confidence. Techniques such as saliency maps, layer-wise relevance propagation, and SHAP (SHapley Additive exPlanations) are increasingly being used to visualize and interpret model behavior [69,70]. Ensemble machine learning models offer further practical advantages in SHM. These models combine multiple learners (such as decision trees, support vector machines, or neural networks) to improve robustness and generalization [75]. Ensemble approaches are particularly valuable in SHM, where sensor data can be noisy, heterogeneous, and context-dependent. By employing diverse model architectures and learning strategies, ensemble methods can mitigate overfitting, improve fault tolerance, and provide more stable outputs. Moreover, some ensemble models, like Random Forests or Gradient Boosting Machines, offer built-in feature importance metrics, which contribute to interpretability and help engineers understand which sensor inputs are most influential.

- Multi-modal and Multivariate Fusion: In many bridge SHM systems, researchers rely on a single type of sensor data. Common examples include acceleration or strain signals (as shown in the tables of Section 4). While these can detect certain faults, they often miss more complex issues. Using multiple sensor types together, known as multi-modal fusion, offers a deeper view of the bridge’s condition. For instance, combining acceleration, strain, temperature, and displacement data allows for richer insights. This approach helps uncover hidden or subtle anomalies that may not appear in single-sensor readings. To manage the resulting volume of data, multivariate analysis techniques are useful. Methods like principal component analysis (PCA) and independent component analysis (ICA) reduce data dimensionality. They also highlight the features most relevant for fault detection. Studies show that combining sensor fusion with multivariate analysis improves detection accuracy. It also enhances reliability in identifying structural problems [17,30]. Despite its promise, this approach is not yet common in SHM research. There is a need for better algorithms that can handle diverse data sources. Researchers must also develop user-friendly frameworks that support real-time analysis of multi-modal and multivariate data.

- Domain Adaptation and Transfer Learning: In many SHM projects, models are trained using data from one specific bridge. These models often perform well on the original structure. However, when applied to a different bridge, their accuracy usually drops. This happens because the new data may contain unfamiliar patterns, features, or noise levels. The model has not seen this type of data before, so it struggles to make correct predictions. Transfer learning offers a solution to this problem. It allows a model trained on one bridge to be reused on another bridge. This process requires little or no extra training. As a result, it saves time and reduces the need for large labeled datasets in each new deployment. Several recent studies have shown that this method works well in SHM applications [57,58,59]. Another useful method is domain adaptation. It helps the model understand both the original and new data. This is achieved by aligning the data distributions between the two bridges. When the distributions are similar, the model can perform better on both. Together, transfer learning and domain adaptation make SHM systems more flexible. They help models generalize across different environments. This reduces the need to collect and label new data for every bridge. It also makes it easier to deploy AI-based monitoring systems in real-world conditions.

- Robust Detection under Noise and Uncertainty: In real-world SHM systems, sensor data often contains noise or missing information due to weather, communication problems, or sensor faults. This makes it hard for models to correctly detect true anomalies. If a model is too sensitive, it may raise false alarms. If it is not sensitive enough, it may miss important faults. To handle this, future SHM systems should use probabilistic models that can deal with uncertainty in the data. For example, Bayesian models can estimate how confident the system is when it labels a data point as an anomaly [17]. They can also update their decisions as new data arrives, making them more flexible. Other approaches, such as Gaussian process models, can provide not just predictions but also a measure of uncertainty in those predictions [16]. This helps engineers better understand whether a warning is strong evidence of failure or just a weak signal. Similarly, newer methods try to combine uncertainty estimation with deep learning models to improve reliability under noisy conditions [52]. Using these ideas, future frameworks can become more robust and trustworthy, even when the input data is incomplete or unreliable.

- Benchmark Datasets and Standardized Evaluation: One of the key challenges in SHM research is the lack of open and well-annotated datasets. Most studies rely on private datasets collected from specific bridges, which are not shared with the research community. This makes it hard to compare different methods fairly and slows down progress in the field. Publicly available datasets that cover various bridge types, fault categories, and environmental conditions would allow researchers to develop, test, and improve their methods on common ground [39]. In addition to datasets, there is also a need for standardized evaluation metrics. Right now, different studies use different ways to measure accuracy, precision, recall, and other performance indicators. This makes it difficult to judge which method is actually better or more reliable. For example, some works report only accuracy, while others use more detailed metrics such as F1-score or processing time [40]. Having a common set of benchmarks and evaluation criteria would help the community perform consistent comparisons and drive progress toward more dependable SHM systems.
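
The quantization step mentioned under Lightweight and Explainable Models above (reducing the numerical precision of weights) can be sketched in a few lines of Python. This is a minimal illustration of symmetric per-tensor 8-bit quantization under stated assumptions: the weight values are hypothetical, the function names are introduced here for illustration, and production toolchains add calibration and per-channel scaling that are not shown.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the signed 8-bit range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float values from the integer codes."""
    return [v * scale for v in q]

# Hypothetical weights of one small layer.
weights = [0.82, -0.41, 0.05, -1.27, 0.33, 0.0]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))

print("integer codes:", q)
print("scale:", scale)
print("max reconstruction error:", max_err)
```

Each weight is stored as one 8-bit integer plus a single shared scale, and the reconstruction error per weight is bounded by half a quantization step. Whether that error is acceptable for a given detector has to be checked on its own validation data.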

7. Answers to Formulated Research Questions and Limitations of the Research

7.1. Answers to Formulated Research Questions

7.2. Limitations of the Research

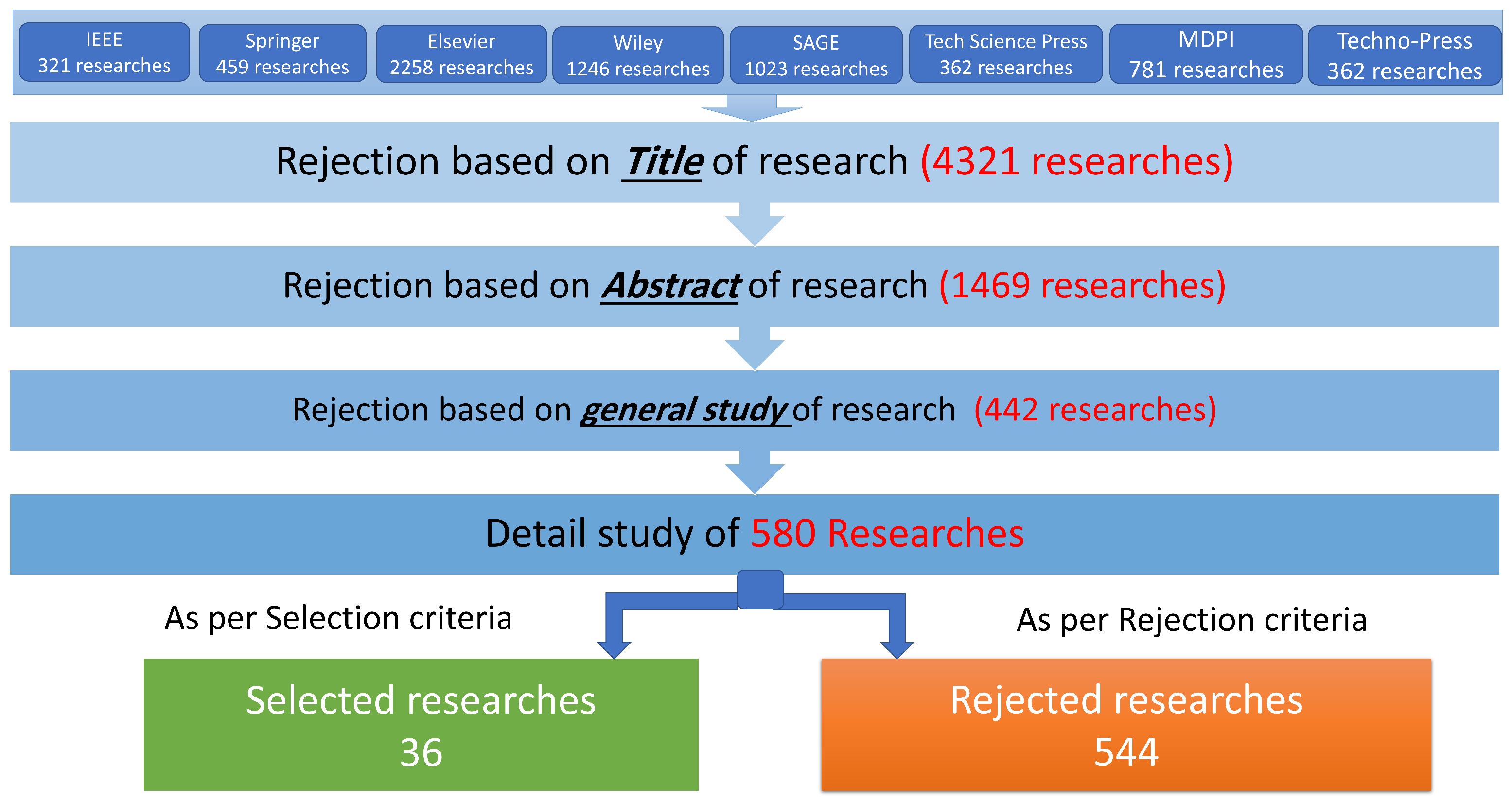

- Search Process: We utilized defined search terms across selected databases and applied systematic filtering. Nevertheless, thousands of results made exhaustive screening infeasible. Additionally, article exclusion based solely on titles may have omitted relevant studies with non-explicit titles.

- Database Selection: While our study considered eight highly regarded databases (IEEE, Springer, Elsevier, SAGE, MDPI, Wiley, Tech Science Press, Techno-Press), we acknowledge the possibility of overlooking pertinent work indexed elsewhere. Nonetheless, due to the breadth and prestige of the selected repositories, we believe the findings of this SLR remain representative and impactful.

- Scope and Selection Limitations: The number of selected papers may seem small compared to the growing research in SHM and AI. However, this review focused on quality rather than quantity. Many studies were excluded because they lacked strong validation or repeated similar ideas. We also limited our scope to bridge-specific SHM to keep the review focused. Future reviews can expand the coverage by including more sources and using broader criteria. This will help capture newer trends and emerging techniques. Despite these limitations, the selected corpus of studies offers a comprehensive and credible foundation for evaluating abnormal data detection in SHM systems. Acknowledging these constraints also highlights valuable opportunities for further meta-analytical exploration and deeper cross-database synthesis in future research.

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Varghese, A.M.; Pradhan, R.P. Transportation infrastructure and economic growth: Does there exist causality and spillover? A Systematic Review and Research Agenda. Transp. Res. Procedia 2025, 82, 2618–2632.

- Faris, N.; Zayed, T.; Fares, A. Review of Condition Rating and Deterioration Modeling Approaches for Concrete Bridges. Buildings 2025, 15, 219.

- Azhar, A.S.; Kudus, S.A.; Jamadin, A.; Mustaffa, N.K.; Sugiura, K. Recent vibration-based structural health monitoring on steel bridges: Systematic literature review. Ain Shams Eng. J. 2024, 15, 102501.

- Gharehbaghi, V.R.; Noroozinejad Farsangi, E.; Noori, M.; Yang, T.; Li, S.; Nguyen, A.; Málaga-Chuquitaype, C.; Gardoni, P.; Mirjalili, S. A critical review on structural health monitoring: Definitions, methods, and perspectives. Arch. Comput. Methods Eng. 2022, 29, 2209–2235.

- He, Z.; Li, W.; Salehi, H.; Zhang, H.; Zhou, H.; Jiao, P. Integrated structural health monitoring in bridge engineering. Autom. Constr. 2022, 136, 104168.

- Brighenti, F.; Caspani, V.F.; Costa, G.; Giordano, P.F.; Limongelli, M.P.; Zonta, D. Bridge management systems: A review on current practice in a digitizing world. Eng. Struct. 2024, 321, 118971.

- Deng, Y.; Zhao, Y.; Ju, H.; Yi, T.H.; Li, A. Abnormal data detection for structural health monitoring: State-of-the-art review. Dev. Built Environ. 2024, 17, 100337.

- Sonbul, O.S.; Rashid, M. Algorithms and Techniques for the Structural Health Monitoring of Bridges: Systematic Literature Review. Sensors 2023, 23, 4230.

- Rashid, M.; Sonbul, O.S. Towards the Structural Health Monitoring of Bridges Using Wireless Sensor Networks: A Systematic Study. Sensors 2023, 23, 8468.

- Qu, C.; Zhang, H.; Zhang, R.; Zou, S.; Huang, L.; Li, H. Multiclass Anomaly Detection of Bridge Monitoring Data with Data Migration between Different Bridges for Balancing Data. Appl. Sci. 2023, 13, 7635.

- Choi, K.; Yi, J.; Park, C.; Yoon, S. Deep Learning for Anomaly Detection in Time-Series Data: Review, Analysis, and Guidelines. IEEE Access 2021, 9, 120043–120065.

- Mejri, N.; Lopez-Fuentes, L.; Roy, K.; Chernakov, P.; Ghorbel, E.; Aouada, D. Unsupervised anomaly detection in time-series: An extensive evaluation and analysis of state-of-the-art methods. Expert Syst. Appl. 2024, 256, 124922. Available online: https://www.sciencedirect.com/science/article/pii/S0957417424017895 (accessed on 28 September 2025).

- Zhang, Y.M.; Wang, H.; Wan, H.P.; Mao, J.X.; Xu, Y.C. Anomaly detection of structural health monitoring data using the maximum likelihood estimation-based Bayesian dynamic linear model. Struct. Health Monit. 2021, 20, 2936–2952.

- Zhang, Y.; Lei, Y. Data Anomaly Detection of Bridge Structures Using Convolutional Neural Network Based on Structural Vibration Signals. Symmetry 2021, 13, 1186.

- Zhang, J.; Zhang, J.; Wu, Z. Long-Short Term Memory Network-Based Monitoring Data Anomaly Detection of a Long-Span Suspension Bridge. Sensors 2022, 22, 6045.

- Zhu, Y.C.; Zheng, Y.W.; Xiong, W.; Li, J.X.; Cai, C.S.; Jiang, C. Online Bridge Structural Condition Assessment Based on the Gaussian Process: A Representative Data Selection and Performance Warning Strategy. Struct. Control Health Monit. 2024, 2024, 5579734.

- Xu, X.; Forde, M.C.; Ren, Y.; Huang, Q.; Liu, B. Multi-index probabilistic anomaly detection for large span bridges using Bayesian estimation and evidential reasoning. Struct. Health Monit. 2023, 22, 948–965.

- Gao, K.; Chen, Z.D.; Weng, S.; Zhu, H.P.; Wu, L.Y. Detection of multi-type data anomaly for structural health monitoring using pattern recognition neural network. Smart Struct. Syst. 2022, 29, 129–140.

- Fan, Z.; Tang, X.; Chen, Y.; Ren, Y.; Deng, C.; Wang, Z.; Peng, Y.; Shi, C.; Huang, Q. Review of anomaly detection in large span bridges: Available methods, recent advancements and future trends. Adv. Bridge Eng. 2024, 5, 2.

- Ayadi, A.; Ghorbel, O.; Obeid, A.M.; Abid, M. Outlier detection approaches for wireless sensor networks: A survey. Comput. Netw. 2017, 129, 319–333.

- Makhoul, N. Review of data quality indicators and metrics, and suggestions for indicators and metrics for structural health monitoring. Adv. Bridge Eng. 2022, 3, 17.

- Shahrivar, F.; Sidiq, A.; Mahmoodian, M.; Jayasinghe, S.; Sun, Z.; Setunge, S. AI-based bridge maintenance management: A comprehensive review. Artif. Intell. Rev. 2025, 58, 135.

- Kitchenham, B. Procedures for Performing Systematic Reviews; Keele University: Keele, UK, 2004; p. 33.

- Rashid, M.; Anwar, M.W.; Khan, A.M. Toward the tools selection in model based system engineering for embedded systems—A systematic literature review. J. Syst. Softw. 2015, 106, 150–163.

- Rashid, M.; Imran, M.; Jafri, A.R.; Al-Somani, T.F. Flexible architectures for cryptographic algorithms—A systematic literature review. J. Circuits Syst. Comput. 2019, 28, 1930003.

- Imran, M.; Bashir, F.; Jafri, A.R.; Rashid, M.; ul Islam, M.N. A systematic review of scalable hardware architectures for pattern matching in network security. Comput. Electr. Eng. 2021, 92, 107169.

- Tasadduq, I.A.; Rashid, M. Toward Intelligent Underwater Acoustic Systems: Systematic Insights into Channel Estimation and Modulation Methods. Electronics 2025, 14, 2953.

- Chandola, V.; Banerjee, A.; Kumar, V. Anomaly Detection: A Survey. ACM Comput. Surv. (CSUR) 2009, 41, 1–58.

- Knorr, E.M.; Ng, R.T.; Tucakov, V. Distance-based outliers: Algorithms and applications. VLDB J. 2000, 8, 237–253.

- Lei, Z.; Zhu, L.; Fang, Y.; Li, X.; Liu, B. Anomaly detection of bridge health monitoring data based on KNN algorithm. J. Intell. Fuzzy Syst. 2020, 39, 5243–5252.

- Jeong, S.; Jin, S.S.; Sim, S.H. Modal Property-Based Data Anomaly Detection Method for Autonomous Stay-Cable Monitoring System in Cable-Stayed Bridges. Struct. Control Health Monit. 2024, 2024, 8565150.

- Zhang, H.; Lin, J.; Hua, J.; Gao, F.; Tong, T. Data Anomaly Detection for Bridge SHM Based on CNN Combined with Statistic Features. J. Nondestruct. Eval. 2022, 41, 28.

- Yuen, K.V.; Ortiz, G.A. Outlier detection and robust regression for correlated data. Comput. Methods Appl. Mech. Eng. 2017, 313, 632–646.

- Zhang, X.; Zhou, W. Structural Vibration Data Anomaly Detection Based on Multiple Feature Information Using CNN-LSTM Model. Struct. Control Health Monit. 2023, 2023, 3906180.

- Shajihan, A.; Wang, S.; Zhai, G.; Spencer, B. CNN based data anomaly detection using multi-channel imagery for structural health monitoring. Smart Struct. Syst. 2022, 29, 181–193.

- Chou, J.Y.; Fu, Y.; Huang, S.K.; Chang, C.M. SHM data anomaly classification using machine learning strategies: A comparative study. Smart Struct. Syst. 2022, 29, 77–91.

- Du, Y.; Li, L.; Hou, R.; Wang, X.; Tian, W.; Xia, Y. Convolutional Neural Network-based Data Anomaly Detection Considering Class Imbalance with Limited Data. Smart Struct. Syst. 2022, 29, 63–75.

- Liu, G.; Niu, Y.; Zhao, W.; Duan, Y.; Shu, J. Data anomaly detection for structural health monitoring using a combination network of GANomaly and CNN. Smart Struct. Syst. 2022, 29, 53–62.

- Zhang, Y.; Tang, Z.; Yang, R. Data anomaly detection for structural health monitoring by multi-view representation based on local binary patterns. Measurement 2022, 202, 111804.

- Yang, K.; Ding, Y.; Jiang, H.; Zhao, H.; Luo, G. A two-stage data cleansing method for bridge global positioning system monitoring data based on bi-direction long and short term memory anomaly identification and conditional generative adversarial networks data repair. Struct. Control Health Monit. 2022, 29, e2993.

- Son, H.; Jang, Y.; Kim, S.E.; Kim, D.; Park, J.W. Deep Learning-Based Anomaly Detection to Classify Inaccurate Data and Damaged Condition of a Cable-Stayed Bridge. IEEE Access 2021, 9, 3100419.

- Qu, B.; Liao, P.; Huang, Y. Outlier Detection and Forecasting for Bridge Health Monitoring Based on Time Series Intervention Analysis. Struct. Durab. Health Monit. 2022, 16, 323–341.

- Kim, S.Y.; Mukhiddinov, M. Data Anomaly Detection for Structural Health Monitoring Based on a Convolutional Neural Network. Sensors 2023, 23, 8525.

- Ni, F.; Zhang, J.; Noori, M.N. Deep learning for data anomaly detection and data compression of a long-span suspension bridge. Comput.-Aided Civ. Infrastruct. Eng. 2020, 35, 685–700.

- Yang, J.; Liu, D.; Zhao, L.; Yang, X.; Li, R.; Jiang, S.; Li, J. Improved stochastic configuration network for bridge damage and anomaly detection using long-term monitoring data. Inf. Sci. 2025, 700, 121831.

- Deng, Y.; Ju, H.; Zhong, G.; Li, A.; Ding, Y. A general data quality evaluation framework for dynamic response monitoring of long-span bridges. Mech. Syst. Signal Process. 2023, 200, 110514.

- Deng, Y.; Ju, H.; Zhong, G.; Li, A. Data quality evaluation for bridge structural health monitoring based on deep learning and frequency-domain information. Struct. Health Monit. 2023, 22, 2925–2947.

- Zhao, M.; Sadhu, A.; Capretz, M. Multiclass anomaly detection in imbalanced structural health monitoring data using convolutional neural network. J. Infrastruct. Preserv. Resil. 2022, 3, 10.

- Mao, J.; Wang, H.; Spencer, B.F., Jr. Toward data anomaly detection for automated structural health monitoring: Exploiting generative adversarial nets and autoencoders. Struct. Health Monit. 2021, 20, 1609–1626.

- Jian, X.; Zhong, H.; Xia, Y.; Sun, L. Faulty data detection and classification for bridge structural health monitoring via statistical and deep-learning approach. Struct. Control Health Monit. 2021, 28, e2824.

- Lei, X.; Xia, Y.; Wang, A.; Jian, X.; Zhong, H.; Sun, L. Mutual information based anomaly detection of monitoring data with attention mechanism and residual learning. Mech. Syst. Signal Process. 2023, 182, 109607.

- Xu, J.; Dang, D.; Qian, M.; Liu, X.; Han, Q. A novel and robust data anomaly detection framework using LAL-AdaBoost for structural health monitoring. J. Civ. Struct. Health Monit. 2022, 12, 305–321.

- Hao, C.; Gong, Y.; Liu, B.; Pan, Z.; Sun, W.; Li, Y.; Zhuo, Y.; Ma, Y.; Zhang, L. Data anomaly detection for structural health monitoring using the Mixture of Bridge Experts. Structures 2025, 71, 108039.

- Wang, L.; Kang, J.; Zhang, W.; Hu, J.; Wang, K.; Wang, D.; Yu, Z. Online diagnosis for bridge monitoring data via a machine learning-based anomaly detection method. Measurement 2025, 245, 116587.

- Qu, C.X.; Yang, Y.T.; Zhang, H.M.; Yi, T.H.; Li, H.N. Two-stage anomaly detection for imbalanced bridge data by attention mechanism optimisation and small sample augmentation. Eng. Struct. 2025, 327, 119613.

- Zhang, Y.; Wang, X.; Wu, W.; Xia, Y. Anomaly detection of sensor faults and extreme events by anomaly locating strategies and convolutional autoencoders. Struct. Health Monit. 2025.

- Pan, Q.; Bao, Y.; Li, H. Transfer learning-based data anomaly detection for structural health monitoring. Struct. Health Monit. 2023, 22, 3077–3091.

- Qu, C.X.; Zhang, H.M.; Yi, T.H.; Pang, Z.Y.; Li, H.N. Anomaly detection of massive bridge monitoring data through multiple transfer learning with adaptively setting hyperparameters. Eng. Struct. 2024, 314, 118404.

- Wang, X.; Wu, W.; Du, Y.; Cao, J.; Chen, Q.; Xia, Y. Wireless IoT Monitoring System in Hong Kong–Zhuhai–Macao Bridge and Edge Computing for Anomaly Detection. IEEE Internet Things J. 2024, 11, 4763–4774.

- Kang, J.; Wang, L.; Zhang, W.; Hu, J.; Chen, X.; Wang, D.; Yu, Z. Effective alerting for bridge monitoring via a machine learning-based anomaly detection method. Struct. Health Monit. 2024.

- Arif, M.; Rashid, M. A Literature Review on Model Conversion, Inference, and Learning Strategies in EdgeML with TinyML Deployment. Comput. Mater. Contin. 2025, 83, 13–64. [Google Scholar] [CrossRef]

- Beale, C.; Niezrecki, C.; Inalpolat, M. An adaptive wavelet packet denoising algorithm for enhanced active acoustic damage detection from wind turbine blades. Mech. Syst. Signal Process. 2020, 142, 106754. [Google Scholar] [CrossRef]

- Nikkhoo, A.; Karegar, H.; Mohammadi, R.K.; Hajirasouliha, I. An acceleration-based approach for crack localisation in beams subjected to moving oscillators. J. Vib. Control 2021, 27, 489–501. [Google Scholar] [CrossRef]

- Hou, Z.; Hera, A.; Noori, M. Wavelet-based techniques for structural health monitoring. In Health Assessment of Engineered Structures: Bridges, Buildings and Other Infrastructures; World Scientific: Singapore, 2013; pp. 179–202. [Google Scholar]

- Moghaddass, R.; Sheng, S. An anomaly detection framework for dynamic systems using a Bayesian hierarchical framework. Appl. Energy 2019, 240, 561–582. [Google Scholar] [CrossRef]

- Wan, H.P.; Ni, Y.Q. Bayesian Modeling Approach for Forecast of Structural Stress Response Using Structural Health Monitoring Data. J. Struct. Eng. 2018, 144, 04018130. [Google Scholar] [CrossRef]

- Pang, J.; Liu, D.; Peng, Y.; Peng, X. Anomaly detection based on uncertainty fusion for univariate monitoring series. Measurement 2017, 95, 280–292. [Google Scholar] [CrossRef]

- Kim, C.; Lee, J.; Kim, R.; Park, Y.; Kang, J. DeepNAP: Deep neural anomaly pre-detection in a semiconductor fab. Inf. Sci. 2018, 457–458, 1–11. [Google Scholar] [CrossRef]

- Gramegna, A.; Giudici, P. SHAP and LIME: An Evaluation of Discriminative Power in Credit Risk. Front. Artif. Intell. 2021, 4, 752558. [Google Scholar] [CrossRef]

- Mane, D.; Magar, A.; Khode, O.; Koli, S.; Bhat, K.; Korade, P. Unlocking Machine Learning Model Decisions: A Comparative Analysis of LIME and SHAP for Enhanced Interpretability. J. Electr. Syst. 2024, 20, 1252–1267. [Google Scholar] [CrossRef]

- German, S.; Brilakis, I.; DesRoches, R. Rapid entropy-based detection and properties measurement of concrete spalling with machine vision for post-earthquake safety assessments. Adv. Eng. Inform. 2012, 26, 846–858. [Google Scholar] [CrossRef]

- Kabir, S. Imaging-based detection of AAR induced map-crack damage in concrete structure. NDT E Int. 2010, 43, 461–469. [Google Scholar] [CrossRef]

- Mumuni, A.; Mumuni, F. Data augmentation: A comprehensive survey of modern approaches. Array 2022, 16, 100258. [Google Scholar] [CrossRef]

- Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60. [Google Scholar] [CrossRef]

- Kazemi, F.; Asgarkhani, N.; Jankowski, R. Optimization-based stacked machine-learning method for seismic probability and risk assessment of reinforced concrete shear walls. Expert Syst. Appl. 2024, 255, 124897. [Google Scholar] [CrossRef]

| Ref. | Year | Focus | Limitations |

|---|---|---|---|

| [20] | 2017 | Outlier detection in wireless sensor networks using statistical methods | Lacks AI, real-time analysis, multivariate support, and domain-level assessment |

| [11] | 2021 | DL-based anomaly detection in time-series with benchmark and training analysis | No statistical-DL integration; overlooks computational efficiency and real-time deployment |

| [21] | 2022 | Focuses on SHM data quality indicators and a generic evaluation framework | Lacks AI-driven enhancements and detailed performance analysis across different methods |

| [7] | 2024 | Focuses on taxonomy and evaluation of anomaly detection methods for SHM | Lacks emphasis on various models and AI-driven approaches for enhanced accuracy |

| [19] | 2024 | Focuses on anomaly detection in large-span bridges through structural metrics | Lacks AI integration, detailed performance analysis, and uncertainty management strategies |

| [22] | 2025 | Explores AI applications in bridge maintenance, highlighting efficiency and sustainability | Lacks coverage of multi-sensor fusion and real-time AI deployment in large-scale monitoring |

| Criterion | Rationale |

|---|---|

| Subject Relevance | Ensures that selected studies directly address anomaly detection in bridge SHM and contribute to the research questions. |

| Publication Date (2020–2025) | Focuses the review on recent advancements and excludes outdated methodologies that may not reflect current trends. |

| Publisher | Limits selection to reputable sources indexed in eight major scientific databases (IEEE, Springer, Elsevier, SAGE, MDPI, Wiley, Tech Science Press, Techno-Press) to ensure quality. |

| Impactful Contributions | Prioritizes studies that demonstrate practical relevance and deployment potential for abnormal data detection in bridge SHM. |

| Results-Oriented | Filters out studies lacking empirical validation or rigorous experimentation, ensuring reliability of reported findings. |

| Avoid Repetition | Prevents duplication by selecting only one representative study in cases of overlapping research within the same context. |

| Search Terms | Operator | IEEE | Springer | Elsevier | SAGE | Wiley | MDPI | Tech Science Press | Techno-Press |

|---|---|---|---|---|---|---|---|---|---|

| ‘Bridges’ ‘SHM’ ‘Anomaly detection’ | AND | 12 | 16 | 115 | 94 | 63 | 23 | 8 | 55 |

| | OR | 113 | 129 | 498 | 523 | 289 | 135 | 92 | 256 |

| ‘Bridges’ ‘SHM’ ‘Data cleansing’ | AND | 19 | 33 | 132 | 124 | 74 | 38 | 11 | 69 |

| | OR | 73 | 94 | 387 | 412 | 245 | 112 | 45 | 254 |

| ‘Bridges’ ‘SHM’ ‘Abnormal Data detection’ | AND | 48 | 52 | 195 | 210 | 149 | 72 | 23 | 126 |

| | OR | 152 | 164 | 487 | 475 | 321 | 174 | 111 | 295 |

| ‘Bridges’ ‘SHM’ ‘Abnormal Data detection’ | AND | 69 | 83 | 314 | 264 | 212 | 93 | 22 | 164 |

| | OR | 312 | 364 | 952 | 865 | 658 | 352 | 163 | 648 |

| ‘Bridges’ ‘SHM’ ‘Outlier detection’ | AND | 18 | 26 | 216 | 169 | 87 | 36 | 8 | 54 |

| | OR | 132 | 185 | 543 | 532 | 404 | 198 | 59 | 354 |

| ‘Bridges’ ‘SHM’ ‘Data quality management’ | AND | 54 | 74 | 227 | 214 | 162 | 72 | 34 | 147 |

| | OR | 127 | 236 | 678 | 632 | 545 | 258 | 67 | 497 |

| ‘Bridges’ ‘SHM’ ‘Anomalous data detection’ | AND | 28 | 52 | 207 | 182 | 132 | 65 | 14 | 106 |

| | OR | 263 | 325 | 529 | 654 | 552 | 365 | 125 | 516 |

| Detection Methods | No. of Studies | Cited References |

|---|---|---|

| Distance-Based | 2 | [30,31] |

| Predictive | 12 | [13,14,15,16,17,32,40,41,42,43,44,45] |

| Image Processing | 22 | [18,34,35,36,37,38,39,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60] |

| Real-Time Capability | No. of Studies | Associated References |

|---|---|---|

| Yes | 11 | [13,15,16,18,40,42,43,53,54,59,60] |

| No | 25 | [14,17,30,31,32,34,35,36,37,38,39,41,44,45,46,47,48,49,50,51,52,55,56,57,58] |

| Ref. | Methods | Accuracy (%) | Latency (s) | Hardware | Key Highlights |

|---|---|---|---|---|---|

| [13] | BDLM | 98.96 | 0.024 | NG | Subspace detection with adaptive thresholding |

| [15] | LSTM | >93 | NG | NG | Correlation coefficient with double thresholds method |

| [16] | GPR | 96 | 0.10 | CPU | Representative data selection with operational variations |

| [18] | PRNN | 96.4 | NG | NG | Feature extraction from long time-series data |

| [43] | CNN | 97.6 | NG | NG | Layer-wise training with gradual refinement |

| [40] | Bi-LSTM + cGAN | 95 to 98 | NG | NG | GPS data cleansing across multi-sensor datasets |

| [42] | SARIMA | 95 | NG | NG | Forecasting outlier effects for early warnings |

| [53] | Multi Models | 99.35 | 0.145 | NG | Combination of MobileViT and the BST |

| [54] | 2D time-series | 98 | NG | Server | Multi-channel data conversion |

| [59] | Transfer Learning | 98 | 0.3 | Edge | Edge-based domain-adaptive anomaly detection |

| [60] | Neural Network | >95 | NG | NG | Real-time alerting |

| Analysis Domain | No. of Studies | Associated References |

|---|---|---|

| Time | 25 | [13,14,15,17,18,30,32,34,35,36,38,39,40,41,42,43,44,48,49,51,55,56,57,58,60] |

| Frequency | 14 | [16,31,32,34,35,38,44,45,47,50,54,57,58,59] |

| Time-Frequency | 5 | [36,37,46,52,53] |

| Multivariate Usage | No. of Studies | References and Technique Category |

|---|---|---|

| Yes | 8 | [17,30,40]: Multivariate Time Series Models; [41,43,54,57]: CNN Feature-Based Learning; [60]: Multivariate Machine Learning Framework |

| No | 28 | [13,14,15,16,18,31,32,34,35,36,37,38,39,42,44,45,46,47,48,49,50,51,52,53,55,56,58,59] |

| Ref. | Dataset | Bridge Type | Sensor Data Type | Anomaly Type | Methodology |

|---|---|---|---|---|---|

| [31] | Real bridge (60,000) | Cable-stayed | Acceleration | Low-quality, abnormal behavior | Uses MCD for distance-based anomaly detection |

| [30] | Simulation (40,000) | General | Temperature, deflection, train, humidity, displacement | Outlier | Applies KNN by measuring distances to identify anomalies |
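
The KNN row above describes flagging anomalies by measuring distances between samples. A minimal sketch of that general idea follows, assuming Euclidean distance, a score equal to the distance to the k-th nearest neighbour, and a fixed threshold. The function names, the value of `k`, the threshold, and the sample readings are all hypothetical illustrations and are not taken from [30].

```python
import math

def knn_scores(points, k=3):
    """For each point, return the distance to its k-th nearest neighbour."""
    scores = []
    for i, p in enumerate(points):
        # Distances from p to every other point, smallest first.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(dists[k - 1])
    return scores

def flag_anomalies(points, k=3, threshold=1.0):
    """Flag points whose k-th neighbour distance exceeds the threshold."""
    return [score > threshold for score in knn_scores(points, k)]

# Made-up (temperature, deflection) readings; the last one is far from the rest.
readings = [(20.0, 1.0), (20.1, 1.1), (19.9, 0.9), (20.2, 1.0), (35.0, 4.0)]
flags = flag_anomalies(readings, k=3, threshold=1.0)
print(flags)
```

In practice the features would normally be scaled before distances are computed, since the threshold is otherwise dominated by whichever sensor has the largest numeric range.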

| Ref. | Dataset | Bridge Type | Sensor Data Type | Anomaly Type | Accuracy | Methodology |

|---|---|---|---|---|---|---|

| [13] | Real bridge (144) | Long-span cable-stayed | Acceleration, strain | Spikes, baseline shift | 98.96% | BDLM with subspace detection |

| [17] | Real bridge (245) | Large span | Anemometers, temperature sensors, anchor load cells, connected pipe | Sensor fault | – | PDFs with certainty index |

| Ref. | Dataset | Bridge | Sensor data | Anomaly Type | Methodology |

|---|---|---|---|---|---|

| [42] | Real bridge (105) | Oblique arch | Strain | Outlier | SARIMA model for anomaly detection |

| [16] | Real bridge (7048) | Long-span cable-stayed | Stress | Noise | Gaussian process regression with data selection |

| Ref. | Dataset | Bridge | Sensor Data | Anomaly Type | Arch. | Methodology | P (%) | R (%) | F1 | A (%) |

|---|---|---|---|---|---|---|---|---|---|---|

| [14] | Real bridge (28,272) | Long-span cable-stayed | Acceleration | Miss, minor, outlier, square, trend, drift | CNN | Subspace-enhanced features | 86.65 | 92.96 | 0.89 | 95 |

| [15] | Real bridge (4320) | Long-span cable-stayed | Acceleration | Outlier, minor, missing, trend, drift, break | LSTM | Dual-threshold with point-wise comparison | >90 | >92 | – | >93 |

| [40] | Real bridge (86,400) | Suspension bridge | GPS | Miss, outlier, drift, trend | Bi-LSTM | Data cleaning by integrating multi-modals | 88.9 | 95.40 | 0.92 | 98.26 |

| [41] | Real bridge (691,200) | Long-span cable-stayed | Cable tension | Outlier | LSTM | Anomaly scores from reconstruction | 95.6 | 92.01 | 0.938 | 99.98 |

| [43] | Real bridge (54,720) | Cable-stayed | Acceleration | Missing, minor, outlier, square, trend, drift | CNN | feature learning from acceleration | >70 | >85 | >0.77 | 97.6 |

| [44] | Real bridge (14,400) | Long-span cable-stayed | Acceleration | Abnormal data | CNN | data compression | 97.93 | 97.13 | 0.975 | 99.15 |

| [45] | Real bridge (3000) | Twin-box girder | Acceleration | Outlier | SCN | Random Node Removal | >95 | >96 | >0.96 | >99 |

| [32] | Real bridge (54,720) | – | Acceleration | Missing, minor, outlier, square, trend, drift | CNN | CNN with statistical features | >72 | >85 | – | 94.26 |
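
The precision (P), recall (R), and F1 columns above are linked by the standard definition F1 = 2PR / (P + R), with P and R expressed as fractions. As a hedged sanity check, the sketch below recomputes F1 for the rows that report exact values. The helper name and the 0.01 tolerance for rounding are assumptions made here for illustration.

```python
def f1_from_percent(precision_pct, recall_pct):
    """Harmonic mean of precision and recall, both given in percent."""
    p, r = precision_pct / 100.0, recall_pct / 100.0
    return 2 * p * r / (p + r)

# (precision %, recall %, reported F1) copied from rows with exact values.
rows = {
    "[14]": (86.65, 92.96, 0.89),
    "[40]": (88.9, 95.40, 0.92),
    "[41]": (95.6, 92.01, 0.938),
    "[44]": (97.93, 97.13, 0.975),
}

TOLERANCE = 0.01  # assumed allowance for rounding in the published values

for ref, (p, r, reported) in rows.items():
    recomputed = f1_from_percent(p, r)
    status = "consistent" if abs(recomputed - reported) <= TOLERANCE else "check"
    print(f"{ref}: recomputed F1 = {recomputed:.3f}, reported = {reported}, {status}")
```

Rows that give only lower bounds (for example ">90") cannot be checked this way, because a bound on P and R does not fix a single F1 value.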

| Ref. | Dataset | Bridge | Sensor Data | Anomaly Type | Arch. | Methodology | P (%) | R (%) | F1 | A (%) |

|---|---|---|---|---|---|---|---|---|---|---|

| [47] | Real bridge (7200) | – | Acceleration | FDC, drift, square, missing, trend | CNN | GAF and FFT | >84 | >92 | 0.94 | >96 |

| [46] | Real bridge (7200) | Long-span cable | Acceleration | TFC, drift, square, trend, missing, minor | CNN | CWT | >84 | >94 | 0.97 | 97.1 |

| [35] | Real bridge (54,720) | Long-span cable | Acceleration | trend, drift, minor, missing, outlier, square | CNN | FFT | >92 | >92 | - | >96 |

| [36] | Real bridge (54,720) | Long-span cable | Acceleration | trend, drift, minor, missing, outlier, square | Ensemble network | FFT | - | - | - | 97 |

| [37] | Real bridge (54,720) | Long-span cable | Acceleration | missing, minor, outlier, square, trend, drift | CNN | Grayscale | 95 | 95 | - | 98.3 |

| [38] | Real bridge (54,720) | Long-span cable | Acceleration | missing, minor, outlier, square, trend, drift | CNN | GAF and FFT | - | - | 0.94 | 98.2 |

| [48] | Real bridge (54,720) | Long-span cable | Acceleration | missing, minor, outlier, square, trend, drift | CNN | Grayscale | >85 | >73 | >0.82 | 97.74 |

| [49] | Real bridge (22,320) | Long-span cable | Acceleration | trend, shift, spikes, constant, trend, drift | GAN and autoencoders | GAF | - | - | - | >94 |

| [53] | Real bridge (142,848) | long-span railway and cable | Acceleration | missing, drift, amplitude, constant | CNN | Transition Field | >85.48 | >68.18 | 0.76 | 98.94 |

| [55] | Real bridge (20,160) | Large-span cable | Acceleration | local gain, outlier, drift, missing | CNN, GAN | Adversarial Network | - | >95 | - | 99.1 |

| [57] | Real bridge (675,432) | Long-span | Acceleration, strain, displacement, humidity, temperature | missing, outlier, mutation, missing, trend, square | CNN | RGB format | 93.76 | >89.9 | 0.94 | 93.28 |

| [58] | Real bridge (8250) | Large-span cable | Acceleration | local gain, drift, missing, noise, outlier | CNN | Grayscale | 95 | 95 | 0.95 | 96.8 |

| [59] | Real bridge (54,720) | Long-span | Acceleration | missing, minor, outlier, square, trend, drift | CNN | Grayscale | 78.66 | 85.5 | - | 95 |

| Ref. | Dataset | Bridge | Sensor Data | Anomaly Type | Arch. | Methodology | P (%) | R (%) | F1 | A (%) |

|---|---|---|---|---|---|---|---|---|---|---|

| [39] | Real bridge (54,720) | Long-span cable | Acceleration | missing, minor, outlier, drift, square, trend | N/A | Multi-view binary patterns; random forest | - | - | - | 97.5 |

| [50] | Real bridge (180,000) | Large-span arch & cable | Acceleration | missing, minor, outlier, noise, biased | CNN | Relative FDH | 88.29 | 81.54 | 0.84 | 99.39 |

| [18] | Real bridge (54,720) | Long-span cable | Acceleration | missing, minor, outlier, drift, square, trend | FFN | Feature extraction FFN | 90.5 | 88.07 | - | 97 |

| [51] | Real bridge (21,600) | Long-span cable | Acceleration | missing, minor, outlier, drift, normal, biased | CNN | Mutual information correlation analysis | >97 | >97 | >0.97 | 99.45 |

| [52] | Real bridge (72,000) | Long-span cable | Acceleration | missing, minor, outlier, drift, square, trend | Ensemble | Active learning and AdaBoost algorithm | 90.67 | 94.24 | 0.92 | 97.95 |

| [34] | Real bridge (2160) | Long-span suspension | Acceleration | outlier, square, missing, minor | CNN-LSTM | Model integration | >96 | >96 | >0.96 | 97.87 |

| [54] | Real bridge – | Box girder | Strain, displacement, vibration | Noise | - | Data conversion | 90.62 | 97.55 | 0.93 | 99.36 |

| [56] | Real bridge (54,720) | Long-span, footbridge | Acceleration | missing, minor, outlier, drift, square, trend | Autoencoders | Encoder-decoder modeling | >99 | >99 | >0.99 | >99 |

| [60] | Real bridge (51,840) | Box girder | Strain, displacement, vibration, temperature, humidity | Outlier | Encoder–decoder | Data conversion | 92.1 | 92.4 | 0.92 | 94.9 |

| Method | Robustness | Scalability | Deployment | Interpretability | Data Dependency |

|---|---|---|---|---|---|

| Distance-Based | Moderate; threshold-sensitive | Low in high-D; scalable for small sets | High; edge-suitable | High; intuitive, limited depth | Low; minimal labels, feature-sensitive |

| Regression Models | Moderate; data-sensitive | High; efficient at scale | High; lightweight | High; traceable math | Moderate; needs calibrated input |

| Bayesian Models | High; uncertainty-aware | Moderate; low-mid D efficient | Moderate; tunable for embedded use | High; probabilistic traceability | Moderate; priors help sparse data |

| Neural Networks | High; noise-resilient | High; multivariate scalable | Moderate; fast inference, costly training | Low; black-box limits clarity | High; needs large labeled sets |

| 2D Image-Based | High; noise-tolerant | High; GPU-accelerated | Low; costly and preprocessing-heavy | Low; obscures raw signals | High; label diversity critical |

| Hybrid Inputs | High; method-integrated | High; efficient transforms | High; edge-suitable | Moderate–High; structured inputs aid traceability | Moderate; transfer learning helps |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sonbul, O.S.; Rashid, M. Bridge Structural Health Monitoring: A Multi-Dimensional Taxonomy and Evaluation of Anomaly Detection Methods. Buildings 2025, 15, 3603. https://doi.org/10.3390/buildings15193603
