Systematic Parameter Optimization for LoRA-Based Architectural Massing Generation Using Diffusion Models

Abstract

1. Introduction

1.1. Research Background and Objectives

1.2. Research Scope and Method

2. Theoretical Background and Literature Review

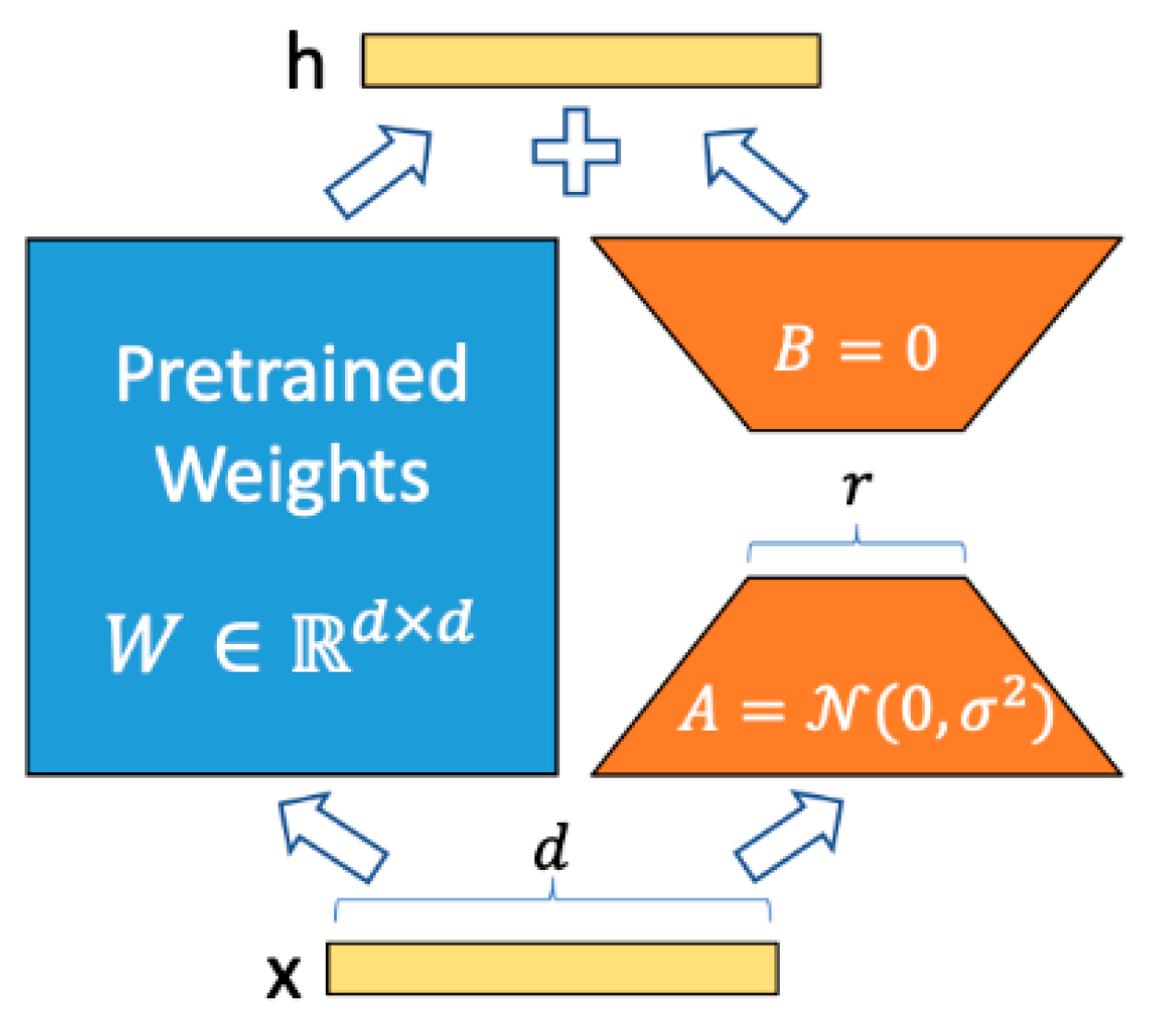

2.1. Fine-Tuning for Architectural Knowledge

2.2. Diffusion Models in Architectural Image Generation

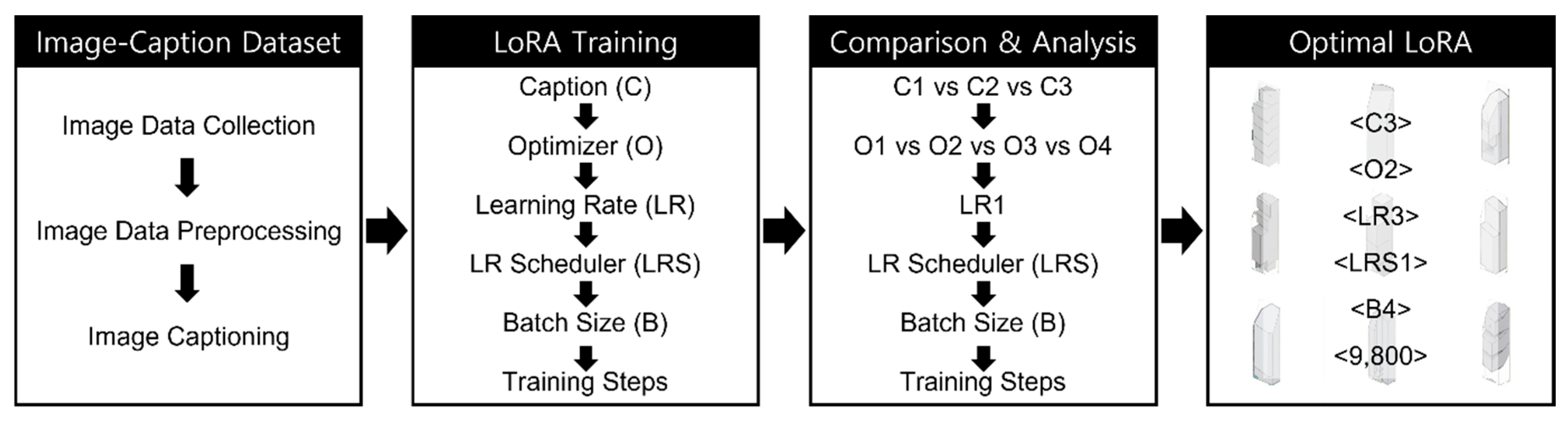

3. Methodology for Architectural Knowledge Learning Using LoRA Technique

3.1. LoRA Training Method

3.1.1. Training Environment and Tool

3.1.2. Training Procedure

3.2. Building of Architectural Mass Image-Caption Dataset

3.2.1. Image Data Collection and Preprocessing

3.2.2. Image Captioning

3.3. Setting of Hyperparameters

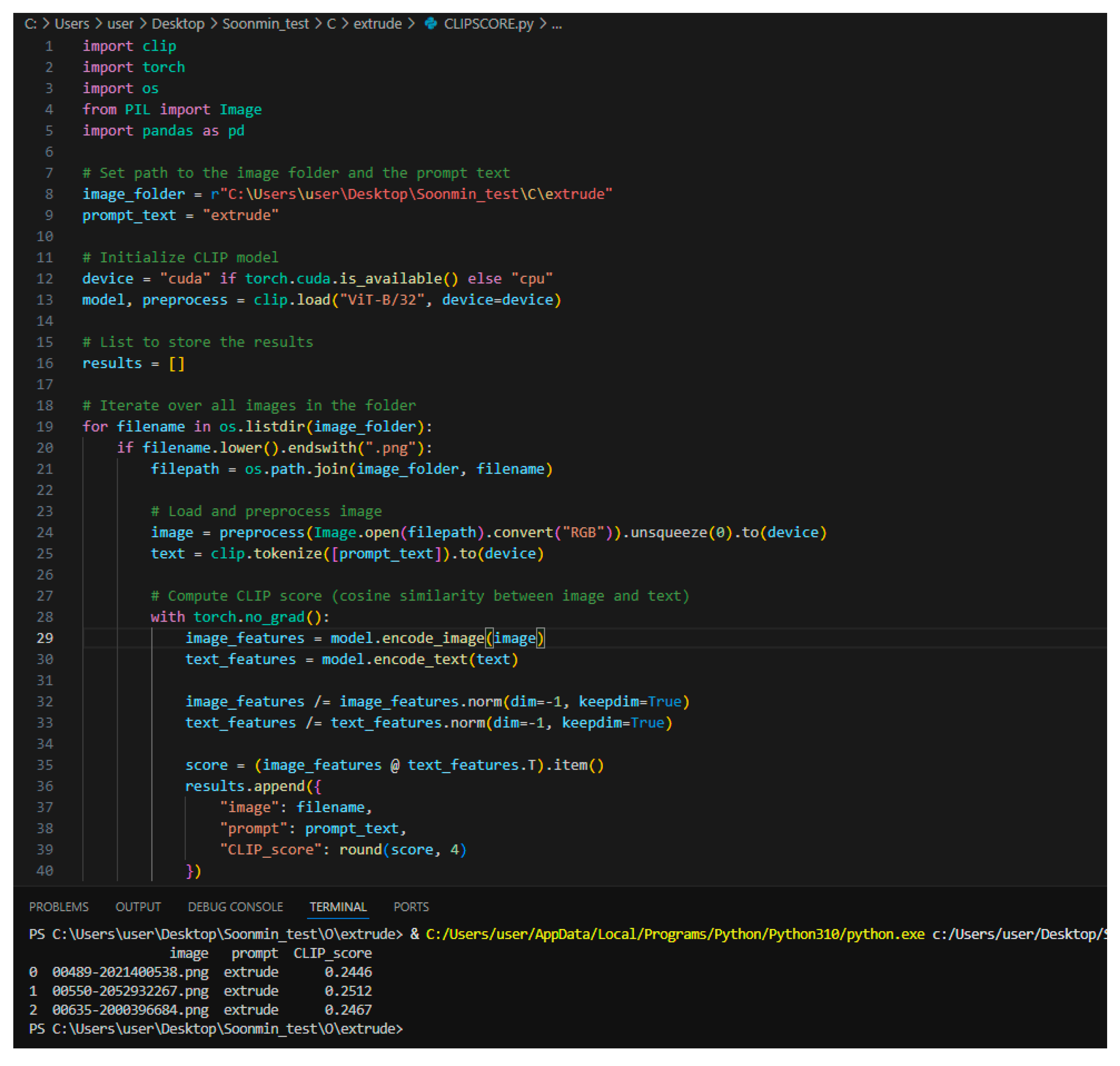

3.4. Evaluation Criteria and Metrics

4. Generation and Validation of LoRA Model Performance

4.1. Comparative Analysis Based on Architectural Mass Image-Caption Dataset

4.2. Comparative Analysis Based on LoRA Training Parameter Settings

4.2.1. Optimizer

4.2.2. Learning Rate

4.2.3. LR Scheduler

4.2.4. Batch Size

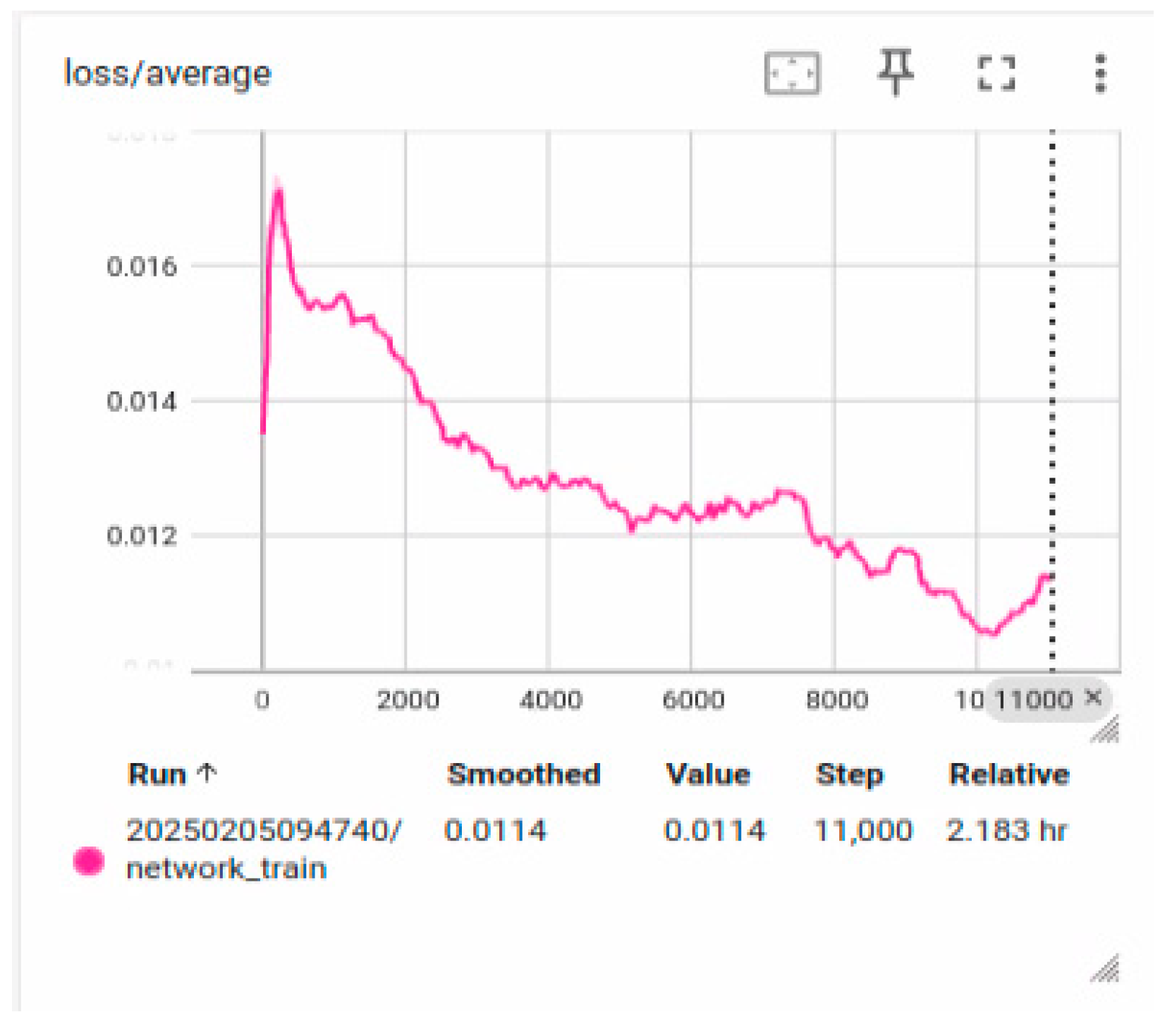

4.2.5. Training Steps

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


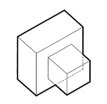

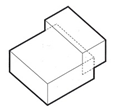

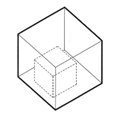

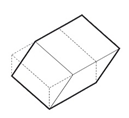

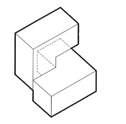

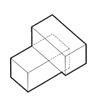

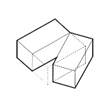

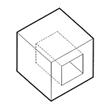

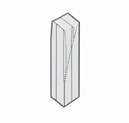

| Expand | Extrude | Inflate | Branch | Merge |
|---|---|---|---|---|
| Nest | Bend | Skew | Twist | Interlock |
| Intersect | Lift | Lodge | Overlap | Rotate |
| Shift | Carve | Compress | Fracture | Grade |
| Notch | Pinch | Shear | Taper | Embed |
| Extract | Inscribe | Puncture | Split | Reflect + Expand |
| Pack + Inflate | Array + Stack + Rotate | Array + Taper | Join + Array + Pinch | Join + Split |

| | Expand | Extrude |
|---|---|---|
| ChatGPT-4o | | |
| Common (both images) | 2. Medium: illustration; 3. Environment: Abstract/Conceptual; 4. Lighting: Standard technical drawing lighting with shading to indicate depth; 5. Color: Monochromatic (shades of grey with some darker shading/edges); 6. Mood: Neutral, technical | |
| Feature | 1. Subject: 3D geometric shape (expansion of a smaller rectangular prism from a larger rectangular base with an internal hollow section) | 1. Subject: 3D geometric shape (extrusion of a smaller rectangular prism from a larger stepped rectangular base with an internal hollow section) |
| | 7. Composition: Isometric view, showing the expansion of a smaller rectangular prism from a larger rectangular base with an internal hollow section, with dashed lines to indicate the hidden edges and shading to emphasize depth and dimension | 7. Composition: Isometric view, showing the extrusion of a smaller rectangular prism from a larger stepped rectangular base with an internal hollow section, with dashed lines to indicate the hidden edges and shading to emphasize depth and dimension |

| Image | Caption Level: No | Caption Level: Simple | Caption Level: Detailed |
|---|---|---|---|
| | - | expand | expand, expansion of a smaller rectangular prism from a larger rectangular base with an internal hollow section, illustration, conceptual, standard technical drawing lighting, monochromatic, neutral mood, isometric view |
| | - | extrude | extrude, extrusion of a smaller rectangular prism from a larger rectangular base with an internal hollow section, illustration, conceptual, standard technical drawing lighting, monochromatic, neutral mood, isometric view |
| | - | reflect_expand | reflect_expand, combination of reflecting and expanding rectangular prisms forming a complex, interlocked structure, illustration, conceptual, standard technical drawing lighting, monochromatic, neutral mood, isometric view |
| | - | join_split | join_split, a joined and split rectangular prism forming a zigzag pattern, extended further, illustration, conceptual, standard technical drawing lighting, monochromatic, neutral mood, isometric view |
| | - | array, stack_rotate | array, stack_rotate, combination of stacking and rotating rectangular prisms forming a cubic, interlocked structure, illustration, conceptual, standard technical drawing lighting, monochromatic, neutral mood, isometric view |
| | - | join, array_pinch | join, array_pinch, combination of joined and pinched rectangular prisms forming a complex, interlocked structure, illustration, conceptual, standard technical drawing lighting, monochromatic, neutral mood, isometric view |
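The three caption levels in the table follow a regular pattern: the simple caption is a trigger tag built from the operation verb(s), and the detailed caption appends a shape description and a fixed set of style tags. A minimal Python sketch of that assembly is given below. The function names (`verbs_to_tag`, `build_caption`) are hypothetical, the tag-joining rule is inferred only from the six sample rows, and the empty string for the "No" level is an assumption about how the "-" entries were realized.

```python
# Style tags shared by every detailed caption in the sample rows.
COMMON_TAGS = (
    "illustration, conceptual, standard technical drawing lighting, "
    "monochromatic, neutral mood, isometric view"
)


def verbs_to_tag(verbs):
    """Build the trigger tag from one or more operation verbs.

    Inferred pattern (not confirmed by the source): the last two verbs are
    joined with an underscore, and any earlier verbs are comma-separated.
    """
    verbs = [v.strip().lower() for v in verbs]
    if len(verbs) <= 2:
        return "_".join(verbs)
    return ", ".join(verbs[:-2] + ["_".join(verbs[-2:])])


def build_caption(verbs, subject, level):
    """Return the caption text for one image at the given caption level."""
    tag = verbs_to_tag(verbs)
    if level == "no":
        return ""  # assumption: "no caption" means an empty caption
    if level == "simple":
        return tag
    if level == "detailed":
        return f"{tag}, {subject}, {COMMON_TAGS}"
    raise ValueError(f"unknown caption level: {level!r}")


EXPAND_SUBJECT = (
    "expansion of a smaller rectangular prism from a larger rectangular "
    "base with an internal hollow section"
)
print(build_caption(["Expand"], EXPAND_SUBJECT, "detailed"))
print(verbs_to_tag(["Array", "Stack", "Rotate"]))
```

Keeping the style tags in one constant means only the verb tag and the shape description vary between images, which matches the split between "Common" and "Feature" caption elements.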

| Hyperparameter | LoRA Experiment | Value |
|---|---|---|
| Caption | C1 | No Caption |
| | C2 | Simple Caption |
| | C3 | Detailed Caption |
| Optimizer | O1 | AdamW |
| | O2 | Adafactor |
| | O3 | Prodigy |
| | O4 | Lion |
| Learning Rate | LR1 | 0.0001 |
| | LR2 | 0.0002 |
| | LR3 | 0.0003 |
| | LR4 | 0.0004 |
| LR Scheduler | LRS1 | constant |
| | LRS2 | cosine |
| | LRS3 | linear |
| | LRS4 | adafactor |
| Batch Size | B1 | 1 |
| | B2 | 2 |
| | B3 | 4 |
| | B4 | 8 |
| Training Steps | Analysis of Loss Graph | 5500 |
| | | 9800 |
| | | 11,000 |
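The experiment IDs in the hyperparameter table suggest a one-factor-at-a-time design, in which each run changes a single setting while the others stay fixed. The sketch below enumerates such a grid in Python. The one-factor-at-a-time reading is an assumption, the names `FACTORS`, `ILLUSTRATIVE_BASELINE` and `one_factor_runs` are hypothetical, and the baseline values are borrowed from the training-configuration tables purely for illustration, since this section does not state the baseline used for each comparison.

```python
# Levels per factor, copied from the hyperparameter table.
FACTORS = {
    "caption": {"C1": "No Caption", "C2": "Simple Caption", "C3": "Detailed Caption"},
    "optimizer": {"O1": "AdamW", "O2": "Adafactor", "O3": "Prodigy", "O4": "Lion"},
    "learning_rate": {"LR1": 0.0001, "LR2": 0.0002, "LR3": 0.0003, "LR4": 0.0004},
    "lr_scheduler": {"LRS1": "constant", "LRS2": "cosine", "LRS3": "linear", "LRS4": "adafactor"},
    "batch_size": {"B1": 1, "B2": 2, "B3": 4, "B4": 8},
}

# Illustrative baseline only (an assumption, not the paper's stated baseline).
ILLUSTRATIVE_BASELINE = {
    "caption": "Detailed Caption",
    "optimizer": "Adafactor",
    "learning_rate": 0.0003,
    "lr_scheduler": "constant",
    "batch_size": 4,
}


def one_factor_runs(baseline, factors=FACTORS):
    """Map each experiment ID to a full config that differs from the
    baseline in at most one factor."""
    runs = {}
    for factor, levels in factors.items():
        for run_id, value in levels.items():
            config = dict(baseline)  # copy so the baseline is never mutated
            config[factor] = value
            runs[run_id] = config
    return runs


RUNS = one_factor_runs(ILLUSTRATIVE_BASELINE)
print(len(RUNS))   # number of experiment IDs in the table
print(RUNS["O3"])  # baseline with only the optimizer swapped
```

Under this reading the table describes 19 training runs (3 + 4 + 4 + 4 + 4) before the training-step analysis, rather than the 768 runs a full factorial grid would need.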

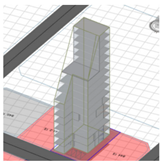

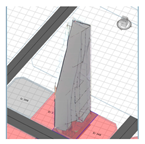

| Prompt | img2img | Inpaint |
|---|---|---|
| Extrude, Branch, Twist | | |

| | No. Images | Optimizer | Learning Rate | LR Scheduler | Batch Size | Epoch | Repeats |
|---|---|---|---|---|---|---|---|
| Value | 220 | Adafactor | 0.0003 | constant | 4 | 5 | 20 |

| Prompt | C1 | C2 | C3 |
|---|---|---|---|
| extrude (CLIP Score) [LPIPS Score] | (0.2467) [0.350381] | (0.2446) [0.346895] | (0.2512) [0.353704] |
| | (0.2127) [0.647027] | (0.2127) [0.650432] | (0.2247) [0.647987] |
| branch (CLIP Score) [LPIPS Score] | (0.1979) [0.326616] | (0.2107) [0.333401] | (0.2159) [0.326094] |
| | (0.1812) [0.688126] | (0.1849) [0.677643] | (0.1856) [0.675901] |
| twist (CLIP Score) [LPIPS Score] | (0.2038) [0.214463] | (0.2044) [0.191077] | (0.2228) [0.197385] |
| | (0.1890) [0.670984] | (0.1914) [0.675118] | (0.1939) [0.684472] |
| Average CLIP Score | 0.2052 | 0.2081 | 0.2157 |
| Average LPIPS Score | 0.482933 | 0.479108 | 0.481524 |
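The "Average CLIP Score" row in the caption comparison appears to be the plain mean of the six per-image CLIP scores in each column (three prompts, two images each). A small Python check of that reading is sketched below, using only the values printed in the table. The variable names are hypothetical, and treating the row as an unweighted mean is an assumption rather than something the section states.

```python
from statistics import mean

# Per-image CLIP scores from the caption comparison table, listed in the
# order extrude (2 images), branch (2 images), twist (2 images).
CLIP_SCORES = {
    "C1": [0.2467, 0.2127, 0.1979, 0.1812, 0.2038, 0.1890],
    "C2": [0.2446, 0.2127, 0.2107, 0.1849, 0.2044, 0.1914],
    "C3": [0.2512, 0.2247, 0.2159, 0.1856, 0.2228, 0.1939],
}

# Unweighted mean per caption condition, rounded to the table's precision.
AVERAGES = {run_id: round(mean(scores), 4) for run_id, scores in CLIP_SCORES.items()}

# Caption condition with the highest average text-image alignment.
BEST = max(AVERAGES, key=AVERAGES.get)

print(AVERAGES)
print(BEST)
```

On these values the detailed-caption condition (C3) has the highest average CLIP score, so caption detail and text-image alignment rise together across the three conditions.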

| Prompt | O1 | O2 | O3 | O4 |
|---|---|---|---|---|
| extrude (CLIP Score) [LPIPS Score] | (0.2435) [0.343182] | (0.2555) [0.349378] | (0.2433) [0.354583] | (0.2481) [0.363083] |
| | (0.2100) [0.651672] | (0.2177) [0.645908] | (0.2016) [0.648482] | (0.2166) [0.651206] |
| branch (CLIP Score) [LPIPS Score] | (0.1766) [0.343366] | (0.2135) [0.333571] | (0.1943) [0.337466] | (0.2280) [0.344603] |
| | (0.1905) [0.674829] | (0.1920) [0.677852] | (0.1792) [0.678657] | (0.1913) [0.673498] |
| twist (CLIP Score) [LPIPS Score] | (0.1901) [0.214797] | (0.2165) [0.214462] | (0.1961) [0.212614] | (0.2188) [0.219594] |
| | (0.1813) [0.675989] | (0.1888) [0.676852] | (0.1857) [0.686007] | (0.1950) [0.675635] |
| Average CLIP Score | 0.1987 | 0.2140 | 0.2000 | 0.2163 |
| Average LPIPS Score | 0.483972 | 0.483004 | 0.486301 | 0.487936 |

| Prompt | LR1 | LR2 | LR3 | LR4 |
|---|---|---|---|---|
| extrude (CLIP Score) [LPIPS Score] | (0.2341) [0.350163] | (0.2287) [0.350193] | (0.2380) [0.351559] | (0.2447) [0.342769] |
| | (0.2201) [0.642323] | (0.2130) [0.634285] | (0.2137) [0.642483] | (0.2067) [0.642551] |
| branch (CLIP Score) [LPIPS Score] | (0.2037) [0.347179] | (0.2141) [0.340617] | (0.2074) [0.333245] | (0.2026) [0.333787] |
| | (0.1895) [0.667573] | (0.1745) [0.661532] | (0.1916) [0.676919] | (0.1873) [0.670242] |
| twist (CLIP Score) [LPIPS Score] | (0.2170) [0.199774] | (0.1947) [0.207238] | (0.2163) [0.211201] | (0.2095) [0.210177] |
| | (0.1827) [0.681593] | (0.1909) [0.676819] | (0.1916) [0.6712] | (0.1921) [0.678881] |
| Average CLIP Score | 0.2078 | 0.2026 | 0.2098 | 0.2072 |
| Average LPIPS Score | 0.481434 | 0.478447 | 0.481103 | 0.479749 |

| Prompt | LRS1 | LRS2 | LRS3 | LRS4 |
|---|---|---|---|---|
| extrude (CLIP Score) [LPIPS Score] | (0.2562) [0.347824] | (0.2496) [0.360708] | (0.2446) [0.34897] | (0.2518) [0.360331] |
| | (0.2149) [0.643275] | (0.2118) [0.640745] | (0.2231) [0.643537] | (0.2107) [0.634892] |
| branch (CLIP Score) [LPIPS Score] | (0.1937) [0.331238] | (0.1964) [0.336442] | (0.1931) [0.35139] | (0.2167) [0.342915] |
| | (0.1978) [0.667002] | (0.1891) [0.677781] | (0.1934) [0.670332] | (0.1884) [0.671802] |
| twist (CLIP Score) [LPIPS Score] | (0.2201) [0.198131] | (0.2096) [0.202021] | (0.2120) [0.168319] | (0.2141) [0.212691] |
| | (0.1935) [0.680731] | (0.1927) [0.675404] | (0.1807) [0.682539] | (0.1843) [0.683273] |
| Average CLIP Score | 0.2127 | 0.2082 | 0.2078 | 0.2110 |
| Average LPIPS Score | 0.478034 | 0.482184 | 0.477515 | 0.484317 |

| Prompt | B1 | B2 | B3 | B4 |
|---|---|---|---|---|
| extrude (CLIP Score) [LPIPS Score] | (0.2448) [0.350233] | (0.2574) [0.359554] | (0.2485) [0.350928] | (0.2472) [0.356032] |
| | (0.2164) [0.635002] | (0.2101) [0.649085] | (0.2118) [0.648677] | (0.2094) [0.633884] |
| branch (CLIP Score) [LPIPS Score] | (0.1988) [0.325867] | (0.2167) [0.336992] | (0.2173) [0.331515] | (0.2092) [0.342192] |
| | (0.1850) [0.675247] | (0.1875) [0.66697] | (0.1873) [0.68546] | (0.1766) [0.661859] |
| twist (CLIP Score) [LPIPS Score] | (0.2134) [0.191182] | (0.2116) [0.192647] | (0.2173) [0.206828] | (0.2183) [0.204495] |
| | (0.1931) [0.676824] | (0.1821) [0.675579] | (0.1918) [0.673779] | (0.1886) [0.671848] |
| Average CLIP Score | 0.2086 | 0.2109 | 0.2123 | 0.2082 |
| Average LPIPS Score | 0.475726 | 0.480138 | 0.482865 | 0.478385 |

| | No. Images | Optimizer | Learning Rate | LR Scheduler | Batch Size | Epoch | Repeats |
|---|---|---|---|---|---|---|---|
| Value | 220 | Adafactor | 0.0003 | constant | 4 | 10 | 20 |

| | No. Images | Optimizer | Learning Rate | LR Scheduler | Batch Size | Epoch | Repeats |
|---|---|---|---|---|---|---|---|
| Value | 280 | Adafactor | 0.0003 | constant | 4 | 10 | 14 |
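The three training configurations (220 images with 5 and 10 epochs, and 280 images with 10 epochs) can be related to the step counts listed under "Training Steps" in the hyperparameter table. The Python sketch below assumes that total steps equal images × repeats × epochs ÷ batch size, a common convention in LoRA training scripts that this section does not state explicitly. The function and variable names are hypothetical.

```python
def total_steps(num_images, repeats, epochs, batch_size):
    """Optimizer steps under the assumed rule
    images x repeats x epochs / batch size."""
    samples_per_epoch = num_images * repeats
    # Ceiling division: a final partial batch still costs one step.
    steps_per_epoch = -(-samples_per_epoch // batch_size)
    return steps_per_epoch * epochs


# Values copied from the three training-configuration tables.
CONFIGS = [
    {"num_images": 220, "repeats": 20, "epochs": 5, "batch_size": 4},
    {"num_images": 220, "repeats": 20, "epochs": 10, "batch_size": 4},
    {"num_images": 280, "repeats": 14, "epochs": 10, "batch_size": 4},
]

STEPS = [total_steps(**config) for config in CONFIGS]
print(STEPS)  # [5500, 11000, 9800]
```

Under this assumption the three configurations reproduce the 5500, 11,000 and 9800 training steps listed in the hyperparameter table, which suggests that the step count was varied through the epoch count, the dataset size and the repeat count rather than set directly.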

| Prompt | Without LoRA | The Optimal LoRA Model |
|---|---|---|
| extrude | | |
| branch | | |
| twist | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hong, S.M.; Choo, S. Systematic Parameter Optimization for LoRA-Based Architectural Massing Generation Using Diffusion Models. Buildings 2025, 15, 3477. https://doi.org/10.3390/buildings15193477
