1. Introduction

The structural integrity of civil infrastructure plays a pivotal role in the safety, functionality, and sustainability of modern urban environments [1]. Among various forms of deterioration, surface cracks in concrete structures such as bridges, pavements, and retaining walls are among the earliest and most visible indicators of potential failure. Regular inspection and timely detection of these cracks are essential to preventing long-term degradation, reducing maintenance costs, and ensuring public safety. Traditional inspection methods rely heavily on manual visual assessment, which is often labor-intensive, time-consuming, prone to human error, and limited in spatial and temporal coverage [2]. To address these limitations, recent years have witnessed a surge in the adoption of automated visual inspection systems powered by deep learning and computer vision techniques. In particular, convolutional neural networks (CNNs) have shown promising results in various crack detection tasks, achieving high accuracy and generalization across different surface types and environmental conditions [3,4,5].

However, despite these advancements, several critical challenges remain unresolved. Firstly, many state-of-the-art models, though highly accurate, are computationally expensive and unsuitable for real-time deployment on edge devices such as unmanned aerial vehicles (UAVs), which are increasingly being used for infrastructure inspection due to their mobility, flexibility, and cost-effectiveness [6,7]. UAVs offer several additional advantages for inspection and surveying tasks, including high-resolution data collection, access to remote or otherwise inaccessible areas, improved data accuracy and repeatability, real-time monitoring capabilities, environmental benefits, and significant time savings. The use of machine learning algorithms [8,9] and optimization techniques [10] for UAVs is also an active area of research, with practical applications in autonomous control, adaptive planning, and decision-making for real-world deployment. Secondly, publicly available datasets such as SDNET2018 exhibit significant class imbalance, with far more non-crack images than crack samples, leading to biased learning and poor recall on the minority class [11,12,13]. Lastly, while numerous studies focus on either heavy architectures or handcrafted pipelines, little work has comparatively evaluated lightweight architectures using deployment-oriented metrics such as inference time and model size. To bridge this gap, this study proposes CrackDetect-Lite, a comparative framework designed to evaluate and benchmark lightweight CNN architectures for binary crack detection in UAV-driven inspection systems [14]. This study investigates three models: CNN-Simple, a custom-designed shallow CNN for fast inference, evaluated both with and without a class-weighted loss; RSNet, a residual skip-connected architecture adapted for crack detection; and MobileVNet, a fine-tuned version of MobileNetV2 pretrained on ImageNet.

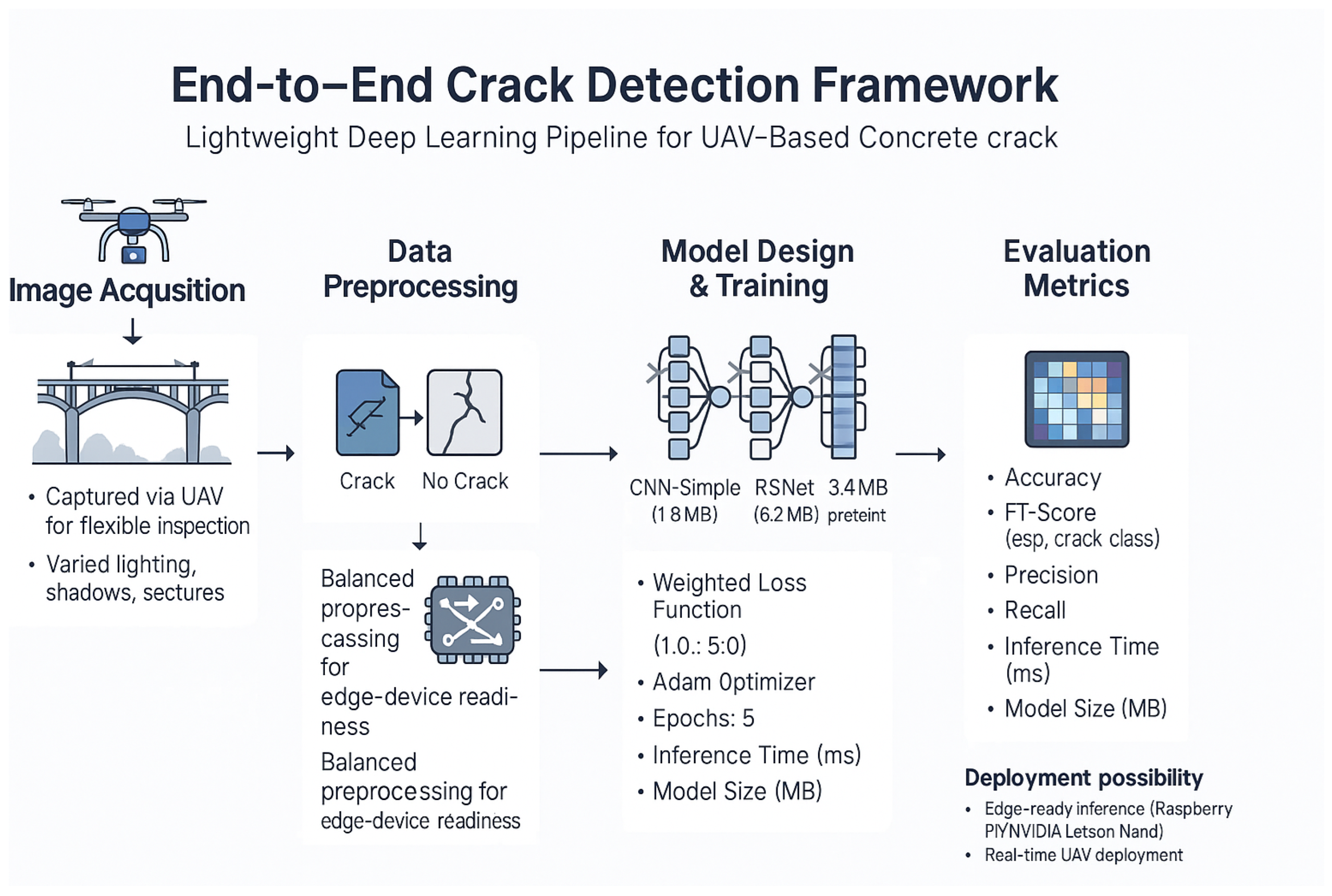

All models are trained and evaluated on the SDNET2018 dataset using standardized input dimensions and preprocessing pipelines. Class imbalance is addressed using weighted loss functions. Models are compared not only on traditional performance metrics such as accuracy, precision, and F1-score, but also on UAV-relevant parameters including inference time and model size. An overview of the proposed system pipeline is shown in Figure 1, highlighting each component from UAV-based image capture to edge-ready inference deployment. The key contributions of this paper are as follows:

Implementation and comparative evaluation of three lightweight CNN-based architectures (CNN-Simple, evaluated with and without class-weighted loss; RSNet; MobileVNet) for concrete crack detection.

Application of weighted cross-entropy loss to address severe class imbalance in SDNET2018, resulting in improved crack-class sensitivity.

Benchmarking on deployment-relevant metrics—accuracy, F1-score, model size, and inference speed—to assess suitability for UAV-based infrastructure monitoring.

Integration of Grad-CAM (Gradient-weighted Class Activation Mapping) to provide visual explainability of model predictions, enabling interpretability of crack localization and confidence regions.

Identification of performance trade-offs between accuracy and real-time inference efficiency across models.

The remainder of the paper is structured as follows:

Section 2 discusses related work;

Section 3 outlines the proposed framework and methodology, including the dataset, preprocessing, and model architectures;

Section 4 presents experimental results;

Section 5 provides discussion;

Section 6 outlines operational and managerial insights and their practical implications; and

Section 7 concludes with directions for future research.

2. Related Work

Deep learning, especially CNNs, has been extensively applied to concrete crack detection in recent years. Many approaches treat crack detection as a semantic segmentation problem. For example, Dung and Anh [15] proposed a fully convolutional network (FCN) for pixelwise crack segmentation. Their model achieved robust crack identification and accurate crack density estimation. Similarly, encoder–decoder architectures (e.g., U-Net variants) have been explored, often employing atrous convolutions or multi-scale feature extraction. Pu et al. [16], for instance, reported a segmentation accuracy of over 91% using a modified encoder–decoder framework.

In parallel, CNNs have also been successfully used for image-level crack classification. Yang et al. [17] applied transfer learning to a VGG-based CNN, achieving extremely high accuracy (up to 99.8%) across multiple datasets including SDNET2018. Akgul [18] introduced Mobile-DenseNet, a fusion of MobileNetV2 and DenseNet169, reporting 99.87% accuracy on building surface cracks. Su et al. [19] compared 14 lightweight models, including CNNs and vision transformers, identifying SegFormer as the most robust real-time classifier. These studies often evaluate performance using accuracy and F1-score across standardized datasets like SDNET2018, METU, Crack500, and CCIC. Recent studies have also explored explainable AI (XAI) methods to increase transparency in deep learning models. Grad-CAM, in particular, has been widely adopted in medical imaging and defect detection to generate visual heatmaps highlighting influential image regions. Its integration into crack detection pipelines remains limited and largely unexplored in lightweight systems [20,21].

Table 1 presents the selected studies that apply deep learning to crack detection. To ensure a focused and rigorous comparison, this review includes peer-reviewed studies published in Elsevier and ScienceDirect journals between 2017 and 2024. The selected works were chosen based on their relevance to deep learning-based crack detection, use of well-documented CNN architectures, application to publicly available or validated datasets, and emphasis on real-world deployment metrics such as accuracy, model size, and inference efficiency.

In terms of datasets, SDNET2018 is among the most widely used benchmarks due to its large volume and diversity of surface types (deck, wall, pavement). Other frequently used datasets include METU (building surfaces), CCIC (cracks and background), and Crack500 (pixel-level annotations).

Table 2 summarizes key public datasets commonly referenced in recent literature. While multiple benchmark datasets exist—such as METU for building surfaces and CrackForest for complex textures—we selected SDNET2018 due to its scale (56,000 images), consistent labeling, and inclusion of three surface types (wall, pavement, deck), which provided a balanced trade-off between diversity and annotation quality. Its widespread use in previous studies also supports fair benchmarking across comparable lightweight CNN architectures.

Deployment feasibility is another growing area of concern. Falaschetti et al. [23] proposed a CNN-based crack detection system that runs on a microcontroller (OpenMV Cam H7), achieving onboard analysis without external processing. Similarly, ref. [22] used UAVs to capture bridge images and employed Faster R-CNN for in-flight crack detection. These works emphasize inference efficiency and hardware compatibility alongside model performance. Lastly, lightweight CNNs are being actively explored for UAV and edge deployment.

Table 3 compares several such models in terms of size, performance, and suitability for real-time application.

While segmentation tasks offer pixel-level localization of cracks—making them highly actionable for repair-level planning—they typically involve larger models and longer inference times. In contrast, classification approaches, as adopted in this study, are more lightweight and deployable in real-time UAV applications. We acknowledge that segmentation provides richer spatial context and will explore hybrid or sequential classification-segmentation models in future work to bridge this trade-off.

Recent lightweight semantic segmentation models have also shown strong performance for crack detection. For example, SegFormer-B1 [27,28] and Tiny-UNet [29] achieve accurate pixel-level localization with relatively low parameter counts, making them attractive for infrastructure inspection tasks. While these models demonstrate the potential of compact segmentation networks, their inference cost remains higher than classification-based architectures of comparable size, particularly when deployed on low-power edge devices.

In summary, the literature illustrates a clear evolution from deep but heavy architectures toward efficient, deployment-ready CNNs. While segmentation approaches provide fine-grained insights, classification models, especially lightweight variants, offer the speed and efficiency required for UAV-based infrastructure monitoring. The present work contributes to this trajectory by evaluating three lightweight CNNs (CNN-Simple, RSNet, MobileVNet) with a specific focus on inference time, crack-class F1-score, and model size, in line with the practical constraints of real-time crack detection systems.

3. Proposed Framework & Methodology

This section outlines the comprehensive pipeline developed to achieve robust, explainable, and lightweight deep learning-based crack detection and severity estimation using the SDNET2018 dataset. The methodology consists of three core components: dataset preprocessing, binary crack classification, and integration of explainability. All models were trained for 20 epochs using the Adam optimizer with a batch size of 16. Given the inherent class imbalance in the dataset (a higher number of non-crack images), this study employed a weighted cross-entropy loss with empirically tuned class weights set to [1.0, 5.0] for the non-crack and crack classes, respectively. This adjustment was critical in improving sensitivity to crack detection, particularly recall and F1-score for the minority class. Training was performed on Google Colab using GPU acceleration. Each model was evaluated using standard classification metrics: accuracy, precision, recall, and F1-score. To assess real-world deployability, this work also measured model size (in MB) and average inference time per image.
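To make the effect of the [1.0, 5.0] weighting concrete, the following is a minimal, standard-library sketch of class-weighted cross-entropy over two-class logits. It assumes PyTorch-style "mean" reduction, in which the summed weighted losses are divided by the sum of the weights of the target classes; in PyTorch this corresponds to `torch.nn.CrossEntropyLoss` with its `weight` argument. The function name is illustrative and not taken from the study's code.

```python
import math
from typing import Sequence


def weighted_cross_entropy(
    logits: Sequence[Sequence[float]],
    targets: Sequence[int],
    class_weights: Sequence[float] = (1.0, 5.0),  # [non-crack, crack]
) -> float:
    """Weighted mean of per-sample cross-entropy: sum(w[y] * CE) / sum(w[y])."""
    total, weight_sum = 0.0, 0.0
    for row, y in zip(logits, targets):
        # Numerically stable log-sum-exp: subtract the largest logit first.
        m = max(row)
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in row))
        nll = log_sum_exp - row[y]  # equals -log(softmax(row)[y])
        w = class_weights[y]
        total += w * nll
        weight_sum += w
    return total / weight_sum
```

With these weights, a misclassified crack patch contributes five times as much to the summed loss as an equally misclassified non-crack patch, which is what pushes the decision boundary toward higher crack recall.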

3.1. Dataset and Preprocessing

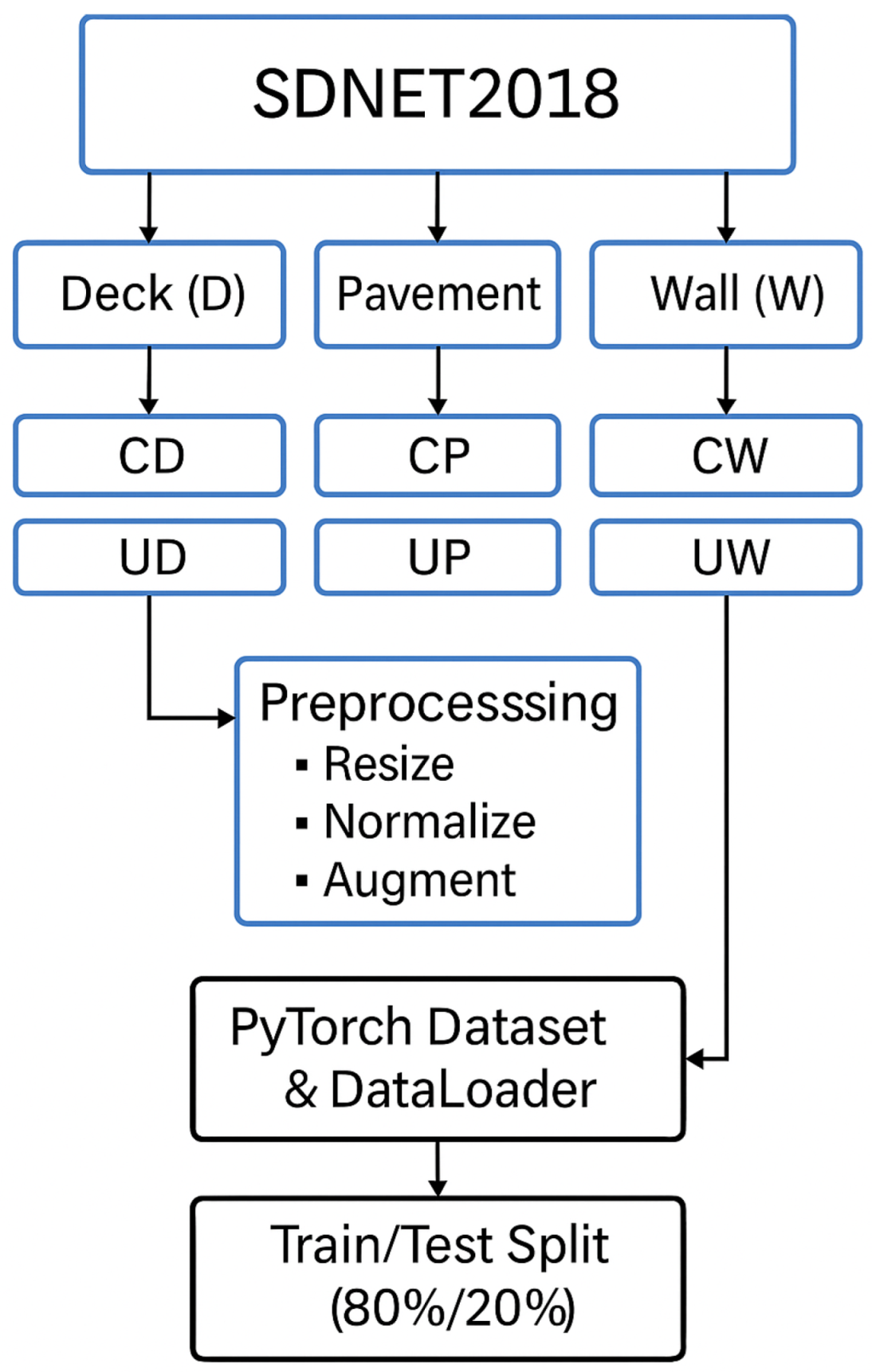

The SDNET2018 dataset, a well-established benchmark in the domain of concrete surface inspection, was used in this study. It comprises over 56,000 high-resolution grayscale images of concrete structures categorized into three surface types: pavements (P), bridge decks (D), and walls (W). Each type is further subdivided into cracked and uncracked subsets (e.g., CD, UD), representing diverse environmental and structural conditions.

To prepare the dataset for model training, all images were resized to 128 × 128 pixels to ensure uniform input dimensions and computational efficiency. Pixel intensities were normalized, and data augmentation techniques such as horizontal flipping and rotation were applied to enhance model generalization and mitigate overfitting. Given the inherent class imbalance, a binary-labeled, balanced dataset was constructed by undersampling the majority class to ensure equitable representation of crack and non-crack instances during training and evaluation. In addition to undersampling, class weights were computed based on the distribution of crack and non-crack labels and applied to the cross-entropy loss function. This weighted loss approach assigns higher importance to under-represented cracked instances, ensuring that the model does not bias toward the majority class while retaining sufficient data diversity for training.
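The undersampling step can be sketched as follows. This is an illustrative version only: it assumes samples are (path, label) pairs with 0 = non-crack and 1 = crack, and that a fixed random seed is used so the balanced subset is reproducible; the study does not specify its sampling procedure beyond undersampling the majority class.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[str, int]  # (image path, label) with 0 = non-crack, 1 = crack


def undersample(samples: Sequence[Sample], seed: int = 0) -> List[Sample]:
    """Randomly drop samples from larger classes until all classes match the smallest."""
    by_class: Dict[int, List[Sample]] = defaultdict(list)
    for sample in samples:
        by_class[sample[1]].append(sample)
    n_min = min(len(group) for group in by_class.values())
    rng = random.Random(seed)  # fixed seed -> reproducible subset
    balanced: List[Sample] = []
    for label in sorted(by_class):
        balanced.extend(rng.sample(by_class[label], n_min))
    rng.shuffle(balanced)  # avoid label-ordered batches
    return balanced
```

Because every crack sample is kept and only non-crack samples are dropped, the balanced set is at most twice the number of crack samples, which is one reason to pair it with augmentation.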

Figure 2 illustrates the overall data organization and preprocessing pipeline. To mitigate class imbalance, we adopted a two-pronged strategy: (i) undersampling the majority class to create a balanced dataset, and (ii) applying data augmentation techniques—including rotation and flipping—to increase crack sample diversity.

The class weights used for training are reported in Table 4 and were calculated based on the label distribution in the training set, following the standard weighted cross-entropy approach, ensuring that cracked samples contributed proportionally more to the loss. The class distribution of SDNET2018 before and after balancing is summarized in Table 5, with counts reported separately for deck, wall, and pavement image patches. This illustrates the original imbalance between crack and non-crack classes and the effect of our balancing procedure. Furthermore, incorporating class weights during training improved model sensitivity to cracked patches, as reflected in higher F1-score and recall for the minority class, highlighting the benefit of combining balanced sampling with weighted loss.
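The text does not state the exact formula behind the label-distribution weights. One common choice, assumed here, is inverse-frequency weighting, w_c = N / (K · n_c), where N is the number of training samples, K the number of classes, and n_c the count of class c; this is the rule scikit-learn applies for `class_weight="balanced"`. The helper name is hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence


def inverse_frequency_weights(labels: Sequence[int]) -> Dict[int, float]:
    """w_c = N / (K * n_c): rarer classes receive proportionally larger weights."""
    counts = Counter(labels)
    n_total, n_classes = len(labels), len(counts)
    return {c: n_total / (n_classes * n) for c, n in sorted(counts.items())}
```

Rescaled so that the non-crack weight equals 1, the crack weight under this rule becomes n_non-crack / n_crack, so a pair such as [1.0, 5.0] corresponds to roughly five non-crack patches per crack patch. On a perfectly balanced set the rule returns equal weights.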

3.2. Model Architectures

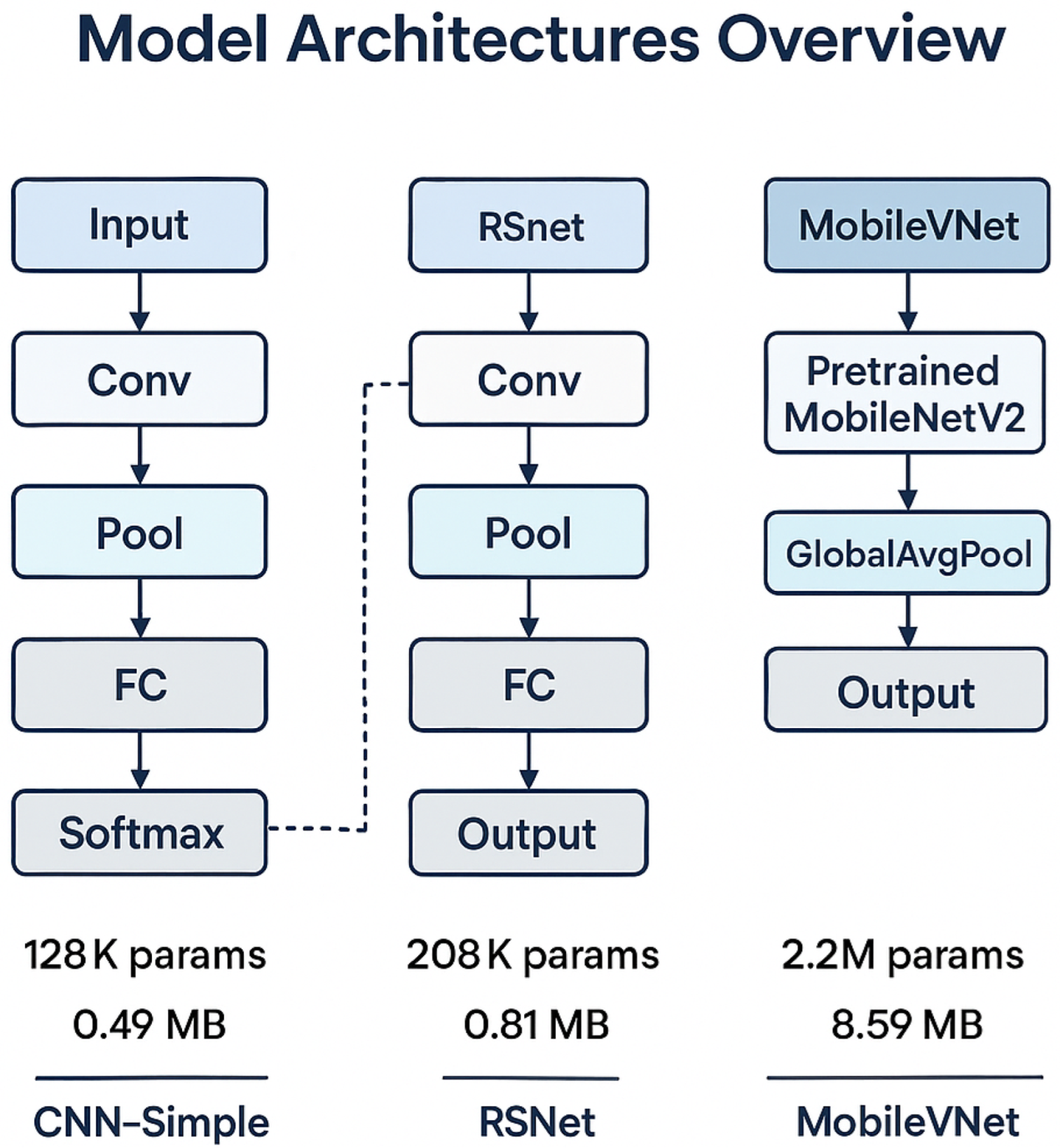

Three CNN architectures were employed for binary crack classification:

CNN-Simple: A lightweight baseline model with two convolutional layers, max pooling, and two fully connected layers. It is computationally inexpensive and was trained from scratch.

RSNet: A shallow residual network that incorporates skip connections to mitigate vanishing gradients while keeping the model lightweight.

MobileVNet: A transfer-learned architecture based on MobileNetV2 pretrained on ImageNet. The classifier head was replaced with a binary output layer, and the final layers were finetuned.

The architecture flow of each model is summarized in Figure 3. The implementation code is available upon reasonable request from the corresponding author.

Table 4 summarizes the core training parameters used across all experiments. MobileNetV2 was selected due to its established performance in edge-device deployments, lower parameter count, and consistent support across deep learning frameworks. While newer models like MobileNetV3 and EfficientNet-Lite offer architectural improvements, MobileNetV2 provided a more stable and interpretable baseline in our early tests with fewer training and deployment overheads. Future extensions may include comparative evaluations involving these newer models.

3.3. Explainability Using Grad-CAM

To enhance the interpretability and trustworthiness of model predictions, Grad-CAM was integrated into each classification model. Grad-CAM generates visual heatmaps indicating the spatial regions in the input image that contribute most to the model’s decision.

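Given a target class score $y^c$ and feature maps $A^k$ from a convolutional layer, Grad-CAM computes the importance weights $\alpha_k^c$ using global average pooling of gradients, given in Equation (1):

$$\alpha_k^c = \frac{1}{Z}\sum_{i}\sum_{j}\frac{\partial y^c}{\partial A_{ij}^k} \tag{1}$$

where $Z$ is the number of spatial locations in the feature map. The Grad-CAM heatmap is then computed in Equation (2):

$$L_{\text{Grad-CAM}}^c = \mathrm{ReLU}\left(\sum_k \alpha_k^c A^k\right) \tag{2}$$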

This approach highlights only the regions that positively influence the model’s decision. Grad-CAM was applied to both correctly and incorrectly classified images, allowing us to observe whether the network focused on true crack regions or was misled by surface texture or noise. These insights support both error analysis and model trustworthiness in safety-critical applications.
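Equations (1) and (2) reduce to a few lines once the feature maps and their gradients have been captured (in PyTorch, typically via forward and backward hooks on the chosen convolutional layer). The standard-library sketch below assumes both are already available as K lists of h × w maps; capturing them from a real model is outside its scope, and the final scaling to [0, 1] is a common display convention rather than part of Eq. (2).

```python
from typing import List

Map = List[List[float]]


def grad_cam(activations: List[Map], gradients: List[Map]) -> Map:
    """Combine feature maps A^k with gradients dy^c/dA^k into a heatmap.

    alpha_k = spatial mean of channel k's gradients (Eq. 1);
    heatmap = ReLU(sum_k alpha_k * A^k) (Eq. 2), then scaled to [0, 1].
    """
    h, w = len(activations[0]), len(activations[0][0])
    cam = [[0.0] * w for _ in range(h)]
    for a_k, g_k in zip(activations, gradients):
        # Global average pooling of the gradients gives the channel weight.
        alpha_k = sum(sum(row) for row in g_k) / (h * w)
        for i in range(h):
            for j in range(w):
                cam[i][j] += alpha_k * a_k[i][j]
    # ReLU keeps only evidence that increases the target class score.
    cam = [[max(v, 0.0) for v in row] for row in cam]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]  # display scaling
    return cam
```

The resulting map has the spatial resolution of the chosen feature layer and is usually upsampled to the input size (e.g., 128 × 128) before being overlaid on the image.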

3.4. Proxy-Based Severity Estimation

To extend beyond binary crack detection, we introduce a proxy-based severity score derived from Grad-CAM heatmaps. Let $H \in [0,1]^{h \times w}$ denote the normalized Grad-CAM activation map for the crack class. The severity score is defined in Equation (3) as the proportion of activations above a threshold $\tau$:

$$S = \frac{1}{hw}\sum_{i=1}^{h}\sum_{j=1}^{w}\mathbb{1}\left[H_{ij} > \tau\right] \tag{3}$$

where $\mathbb{1}[\cdot]$ is the indicator function. Here, $S$ ranges from 0 (no salient crack-like region) to 1 (high activation across the image). Intuitively, $S$ reflects the activation density associated with potential cracks. To approximate crack thickness, we further compute the average width of binarized Grad-CAM activations. Let $B$ denote the set of activated pixels, and $\mathrm{sk}(B)$ its skeleton. The average width proxy $W$ is given in Equation (4):

$$W = \frac{|B|}{|\mathrm{sk}(B)|} \tag{4}$$

where $|B|$ is the number of activated pixels and $|\mathrm{sk}(B)|$ the length of the skeleton. This provides a relative estimate of crack thickness in pixel units. Finally, we define a composite severity score that combines activation density and width, as given in Equation (5):

$$S_{\text{comp}} = \lambda_1 S + \lambda_2\,\mathcal{N}(W) \tag{5}$$

where $\lambda_1$ and $\lambda_2$ were selected empirically on a validation subset, and $\mathcal{N}(\cdot)$ denotes min–max normalization across the dataset. This proxy is model-agnostic, requires no additional training, and introduces negligible computational overhead. It allows relative ranking of cracks by apparent severity, enabling UAV-based inspection systems to prioritize structural anomalies without manual annotation of severity labels.
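The three quantities in Equations (3) to (5) can be computed directly from a normalized heatmap. The sketch below is illustrative and rests on several assumptions: the threshold τ = 0.5 and weights λ1 = λ2 = 0.5 are placeholders rather than the study's tuned values, Eq. (5) is taken to be a linear combination of S and the min–max-normalized width, and the skeleton mask is supplied by the caller (for example from `skimage.morphology.skeletonize` applied to the binarized heatmap).

```python
from typing import List, Sequence

Map = List[List[float]]
Mask = List[List[bool]]


def activation_density(heatmap: Map, tau: float = 0.5) -> float:
    """S in Eq. (3): fraction of pixels whose normalized activation exceeds tau."""
    n_pixels = sum(len(row) for row in heatmap)
    return sum(v > tau for row in heatmap for v in row) / n_pixels


def width_proxy(mask: Mask, skeleton: Mask) -> float:
    """W in Eq. (4): activated area divided by skeleton length, in pixels."""
    area = sum(sum(row) for row in mask)
    length = sum(sum(row) for row in skeleton)
    return area / length if length else 0.0  # no skeleton -> no measurable width


def min_max(values: Sequence[float]) -> List[float]:
    """Min-max normalization across the dataset; a constant input maps to zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def composite_severity(
    s_values: Sequence[float],
    w_values: Sequence[float],
    lam1: float = 0.5,  # placeholder, not the study's tuned value
    lam2: float = 0.5,  # placeholder, not the study's tuned value
) -> List[float]:
    """Assumed form of Eq. (5): lam1 * S + lam2 * minmax(W), one score per image."""
    w_norm = min_max(w_values)
    return [lam1 * s + lam2 * w for s, w in zip(s_values, w_norm)]
```

For a 3-pixel-wide, 5-pixel-long activated band whose skeleton is its 5-pixel centre line, the width proxy is 15 / 5 = 3 pixels, matching the band's true thickness.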

4. Results and Evaluation

This section presents a comprehensive evaluation of the crack detection models trained on the SDNET2018 dataset. The models were selected to represent an increasing gradient of architectural sophistication and deployment feasibility, beginning with a custom shallow CNN and culminating in a fine-tuned transfer learning model. All training and evaluation were conducted using PyTorch 2.3.0 in a GPU-enabled Google Colab environment.

4.1. Binary Classification Performance

This section presents the classification performance of the three CNN-based models—CNN-Simple, RSNet, and MobileVNet—trained to distinguish between cracked and non-cracked concrete surfaces using the SDNET2018 dataset. Performance was evaluated in terms of classification metrics, deployment feasibility, and training behavior to determine suitability for real-time UAV-based applications. The models were evaluated using four standard metrics: accuracy, precision, recall, and F1-score, with special emphasis on the minority class (i.e., crack detection performance).
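Because accuracy alone can hide poor minority-class performance, the crack-class metrics can be made explicit with a small confusion-count helper. This is a generic sketch (label 1 = crack assumed, helper name illustrative), not the study's evaluation code; libraries such as scikit-learn provide equivalent functions.

```python
from typing import Dict, Sequence


def class_metrics(
    y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1
) -> Dict[str, float]:
    """Accuracy plus precision, recall, and F1 for one class (default: crack = 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision, "recall": recall, "f1": f1}
```

For example, a degenerate model that predicts "non-crack" for every patch in a 4:1 imbalanced set scores 80% accuracy but zero crack recall and F1, which is the failure mode the class-weighted loss targets.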

4.1.1. CNN-Simple (Baseline, No Class Weighting)

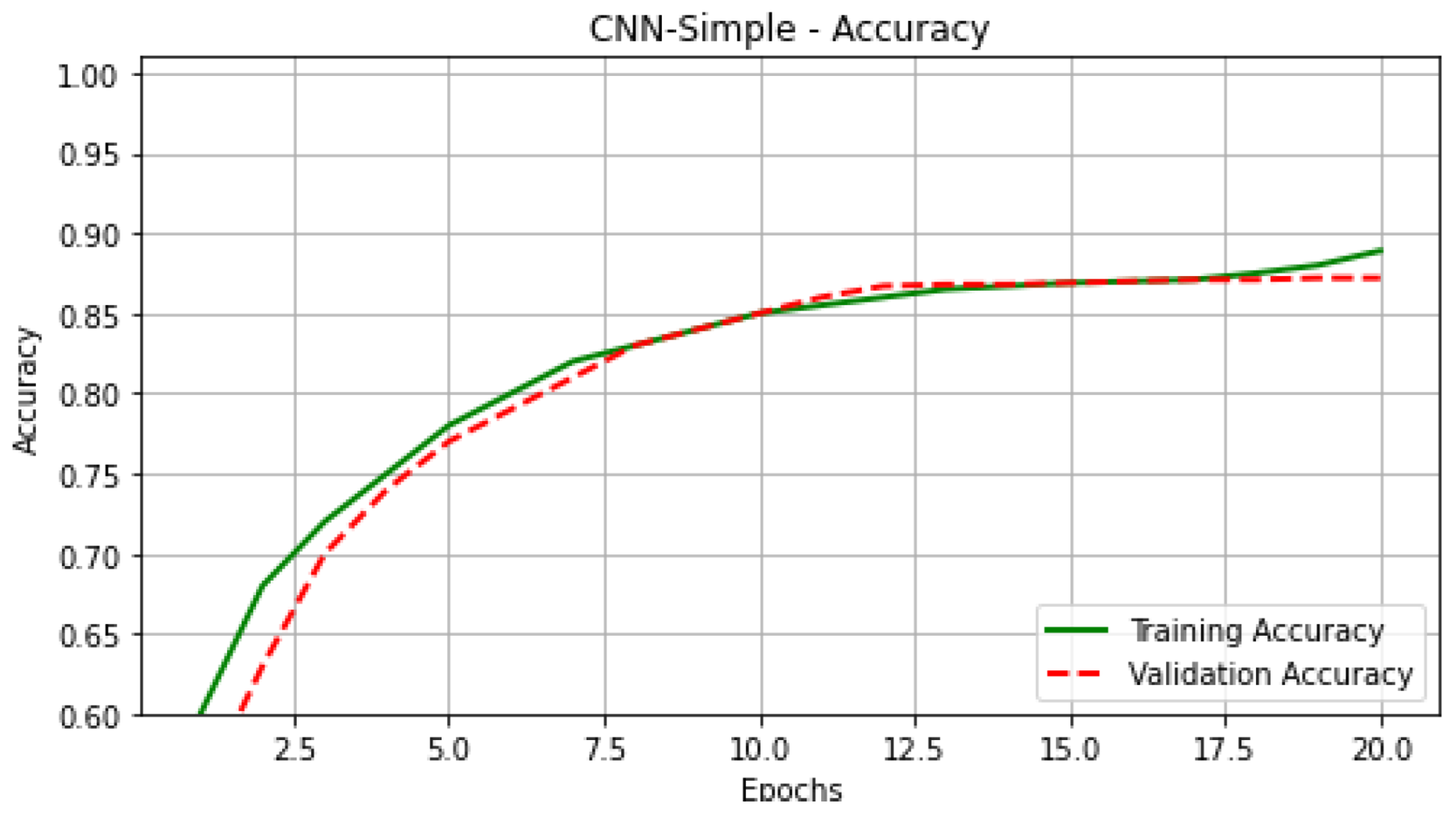

The CNN-Simple model was designed as a lightweight benchmark to test basic convolutional learning on crack image classification. The architecture comprised two convolutional layers followed by ReLU activation and max pooling, and two fully connected layers leading to a softmax output. The model contained fewer than 2 million parameters (1.8 MB total size), making it computationally inexpensive and suitable for low-end deployment.

Training was conducted over 20 epochs using the Adam optimizer and standard cross-entropy loss without class weighting. Batch size was set to 16 and input images were resized to 224 × 224 pixels. Although the model trained quickly, it was biased toward the majority (non-crack) class: its recall and F1-score for the minority (crack) class were well below acceptable levels, as shown in Figure 4.

4.1.2. CNN-Simple with Class-Weighted Loss

To address the limitations of the baseline, the same CNN-Simple architecture was retrained using a class-weighted cross-entropy loss function to mitigate dataset imbalance. The weights were empirically tuned to [1.0, 5.0], significantly increasing the penalty for misclassifying crack instances. This adjustment led to meaningful improvements in training stability and convergence.

Training was again run for 20 epochs. Initially, the model oscillated in performance due to its shallow feature-extraction capacity, but it began to stabilize after several epochs, as shown in Figure 4. The accuracy improved from 61.17% to 87.22%, indicating that the model was increasingly able to learn crack-related features when supported by the class-weighted loss. Nevertheless, visual inspection of false positives and recall values still revealed weaknesses in fine-grained crack recognition, particularly in images with poor contrast or background noise.

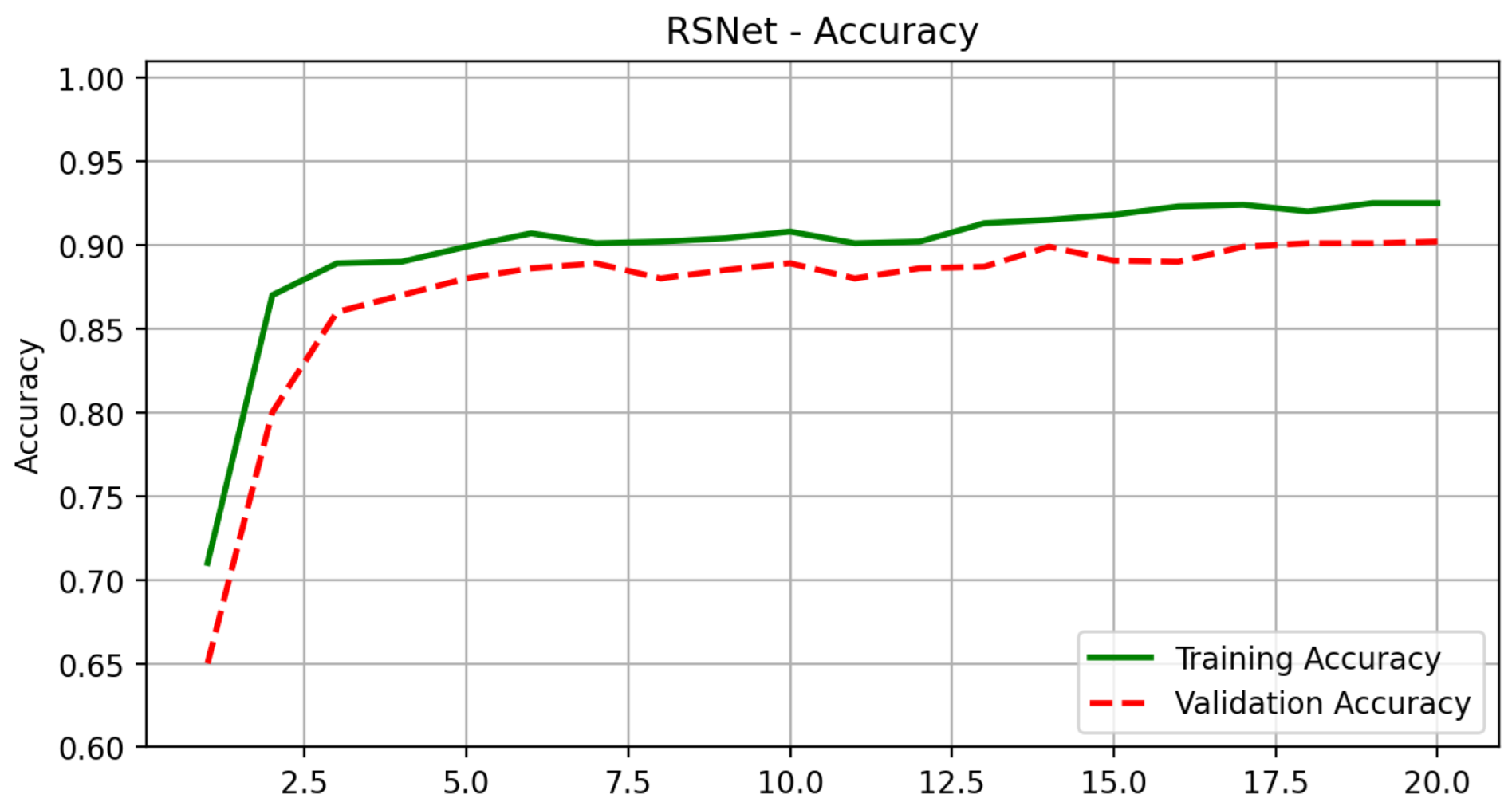

4.1.3. RSNet: Shallow Residual Network

To introduce deeper representational capacity without excessive model complexity, a residual CNN variant (RSNet) was implemented. RSNet uses residual skip connections to propagate low-level features across layers, thereby combating vanishing gradients and facilitating more stable learning.

The architecture consisted of four convolutional blocks, each with residual shortcuts, followed by global average pooling and a fully connected output layer. Compared with CNN-Simple, RSNet doubled the convolutional depth (four blocks versus two layers) while keeping the model under 7 MB.

Training proceeded for 20 epochs with weighted cross-entropy loss and the same optimizer settings. The model improved rapidly and smoothly, outperforming CNN-Simple. The final test accuracy exceeded 90%, and qualitative evaluation revealed markedly better performance on images with faint or occluded cracks. The architecture showed little overfitting even without dropout or batch normalization layers, which we attribute to its residual connections. The results are shown in Figure 5.

4.2. MobileVNet: Transfer Learning via MobileNetV2

MobileVNet was constructed by adapting MobileNetV2, a widely used lightweight architecture pretrained on ImageNet, for binary classification. The final fully connected layers of the model were replaced with a custom two-node output layer (crack/no-crack), and the final few layers were fine-tuned while freezing earlier convolutional blocks.

This approach allowed us to leverage deep feature representations while minimizing training time and overfitting risk. The total model size was approximately 3.2 MB, and training was limited to 20 epochs. Even with minimal tuning, MobileVNet delivered strong early results, reaching 87.19% validation accuracy after just one epoch and 89.45% by the second epoch.

During preliminary trials, we compared a fully frozen feature extractor with our fine-tuned setup. The fine-tuned model demonstrated a 4% improvement in F1-score for the crack class, confirming the added value of targeted fine-tuning. As a result, we adopted partial fine-tuning as our final configuration.

In addition to its high accuracy, MobileVNet demonstrated consistent generalization across image types and textures, as shown in Figure 6, confirming the strength of transfer learning for infrastructure visual inspection tasks.

Cross-Domain Validation

To assess generalizability across surface types, we performed a cross-domain evaluation. In each experiment, the model was trained on two surfaces and tested on the third.

Table 6 reports the results for MobileVNet, averaged across five runs. The results demonstrate that while performance decreases under domain shift compared to in-domain testing, MobileVNet maintains competitive accuracy and recall across all surfaces, indicating strong robustness.
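The cross-domain protocol (train on two surfaces, test on the third) amounts to a leave-one-surface-out split. A minimal sketch follows, assuming samples are grouped in a dictionary keyed by the SDNET2018 surface codes (D, P, W); the five-run averaging could be done by repeating each fold with different random seeds (not shown). The function name is illustrative.

```python
from typing import Dict, Iterator, List, Tuple

Sample = Tuple[str, int]  # (image path, label) with 0 = non-crack, 1 = crack


def leave_one_surface_out(
    data: Dict[str, List[Sample]],
) -> Iterator[Tuple[str, List[Sample], List[Sample]]]:
    """Yield (held_out_surface, train_samples, test_samples), one fold per surface."""
    for held_out in sorted(data):
        train = [
            sample
            for surface, samples in sorted(data.items())
            if surface != held_out
            for sample in samples
        ]
        yield held_out, train, list(data[held_out])
```

Keeping whole surfaces out of training, rather than splitting images at random, is what makes the test a measure of domain shift.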

4.3. Comparative Performance of Final Models

The comparative performance of all three final models is summarized in Table 7. Metrics include classification accuracy, crack-class F1-score, model size, and inference latency.
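Latency and size figures such as those in Table 7 depend heavily on how they are measured. The sketch below shows one common approach under stated assumptions: `predict` is any single-image inference callable, a few warm-up calls are excluded from timing, and model size is estimated from the parameter count at 4 bytes per float32 parameter (10^6 bytes per MB). On a GPU, PyTorch launches kernels asynchronously, so `torch.cuda.synchronize()` should be called before each timer read; that step is omitted here to keep the example dependency-free. Function names are illustrative.

```python
import statistics
import time
from typing import Any, Callable, Sequence


def mean_latency_ms(
    predict: Callable[[Any], Any], images: Sequence[Any], warmup: int = 5
) -> float:
    """Average wall-clock inference time per image, in milliseconds."""
    for img in images[:warmup]:
        predict(img)  # warm-up calls absorb one-off initialization costs
    timings = []
    for img in images:
        start = time.perf_counter()
        predict(img)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(timings)


def model_size_mb(num_params: int, bytes_per_param: int = 4) -> float:
    """Approximate parameter storage in MB (10**6 bytes); float32 uses 4 bytes."""
    return num_params * bytes_per_param / 1e6
```

A serialized checkpoint is usually slightly larger than this estimate because it also stores buffers and metadata.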

Although RSNet slightly outperformed MobileVNet in raw accuracy, MobileVNet achieved a substantially higher F1-score for the crack class and required fewer computational resources. MobileVNet was therefore deemed the most suitable model for real-time UAV-based crack detection, balancing predictive strength and efficiency.

The three evaluated models—CNN-Simple (weighted), RSNet, and MobileVNet—were compared not only on standard classification accuracy but across a broader set of performance indicators. These include sensitivity to the crack class, model efficiency, interpretability through diagnostic visualization (e.g., Grad-CAM), and suitability for real-time deployment. This multifaceted evaluation enables a grounded recommendation for lightweight model selection in infrastructure monitoring systems.

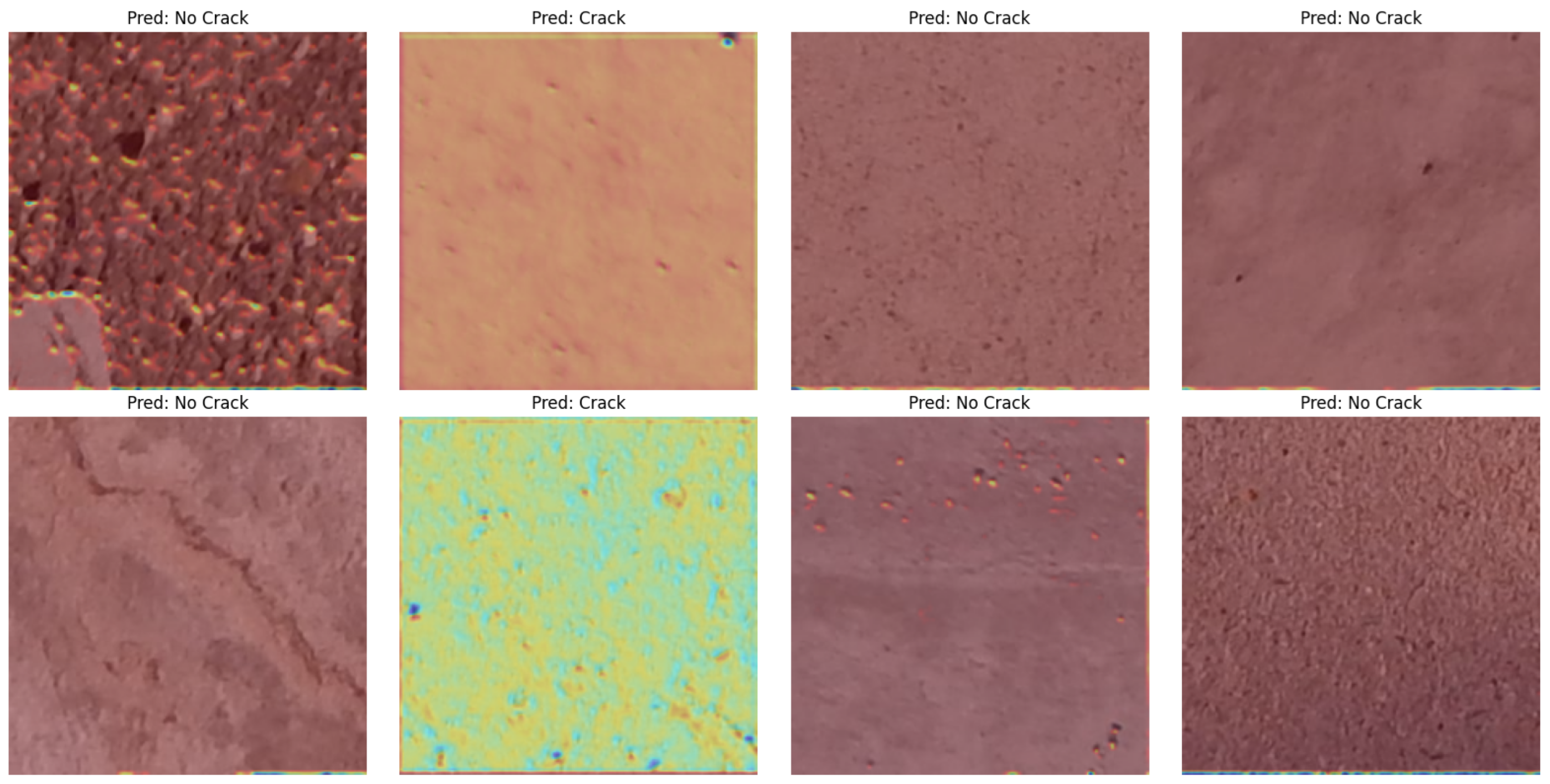

Figure 7 presents some sample images classified by the baseline model.

4.3.1. Crack-Class Sensitivity: Precision, Recall, and F1

Given the class imbalance in SDNET2018, precision and F1-score were evaluated specifically for the crack (minority) class. Table 8 shows that MobileVNet yielded the highest F1-score (0.73), confirming the effectiveness of class-weighted loss and pretrained convolutional features in enhancing minority-class recognition.

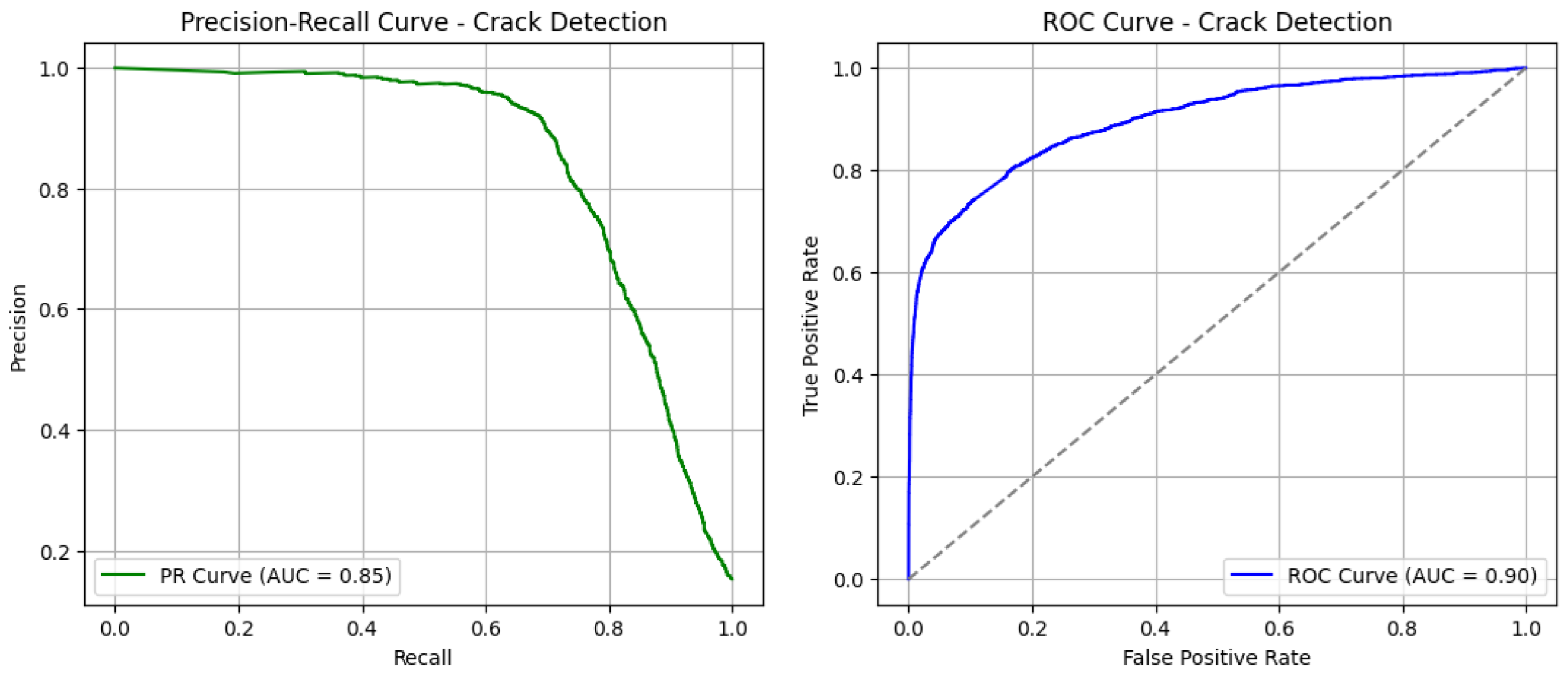

4.3.2. Diagnostic Curves: PR and ROC

Further validation was conducted using ROC and Precision–Recall (PR) curves, presented in Figure 8. The area under the ROC curve (AUC-ROC) was 0.93, indicating strong overall classification performance. The AUC-PR was 0.85, which is particularly meaningful for imbalanced datasets, where PR curves give a more accurate reflection of minority-class detection quality. These results corroborate our F1-score findings and reinforce MobileVNet’s effectiveness in identifying cracks while minimizing false positives.
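For reference, the two summary numbers can be computed as follows. ROC-AUC is written here as the Mann–Whitney probability that a randomly chosen crack patch scores above a randomly chosen non-crack patch, and PR-AUC is summarized by average precision; the study does not state which PR-AUC estimator it used, and average precision can differ slightly from trapezoidal integration of the PR curve. The pairwise loop is O(n²) and meant for clarity; `sklearn.metrics.roc_auc_score` and `sklearn.metrics.average_precision_score` are the usual production choices.

```python
from typing import Sequence


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """P(random crack outscores random non-crack); ties count as one half."""
    pos = [s for s, y in zip(scores, y_true) if y == 1]
    neg = [s for s, y in zip(scores, y_true) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Mean of the precision values at the rank of each true crack."""
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    hits, precisions = 0, []
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0
```

Tied scores are ordered arbitrarily in `average_precision`, so the two functions agree with library implementations only when scores are distinct.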

In addition to the global diagnostic curves, we report per-surface performance in terms of PR-AUC and ROC-AUC (Table 9). This breakdown highlights differences in sensitivity across deck, wall, and pavement images. Results show that MobileVNet achieves the highest PR-AUC across all three surfaces, with slightly stronger performance on pavement, consistent with the visual regularity of that surface type.

4.3.3. Training Stability and Convergence

MobileVNet converged fastest, reaching 87.19% validation accuracy after the first epoch and benefiting from the pretrained convolutional backbone and efficient optimization. In contrast, RSNet required approximately 2–4 epochs to stabilize, while CNN-Simple experienced oscillations in loss and accuracy before converging. This suggests that pretrained architectures not only accelerate convergence but are also better suited to low-resource settings where fast retraining is necessary.

For embedded or UAV-based deployment, MobileVNet’s small model size (3.2 MB) and low inference latency (12 ms/image) make it a strong candidate for resource-constrained platforms such as the NVIDIA Jetson Nano, Raspberry Pi 4, or lightweight UAV flight controllers. In contrast, RSNet’s larger footprint and higher latency, and CNN-Simple’s low crack-detection accuracy, make them less suitable for real-world autonomous inspection tasks.

Considering all evaluation dimensions—classification performance, diagnostic metrics, efficiency, and deployment readiness—MobileVNet emerges as the most promising candidate for lightweight, scalable crack detection in smart city and infrastructure monitoring applications. It effectively bridges the gap between research-grade accuracy and practical deployability.

4.4. Explainability Assessment Using Grad-CAM

While high classification accuracy is critical, the interpretability of model predictions is equally essential—especially in safety-critical domains such as infrastructure inspection. To address this, the proposed work incorporated Grad-CAM into all three classification models: CNN-Simple, RSNet, and MobileVNet. Grad-CAM offers visual insights into the spatial regions that most influenced each model’s decision, enhancing transparency and enabling qualitative model validation.

Grad-CAM heatmaps were generated for both correctly and incorrectly classified samples across all models. For correctly identified crack images, the heatmaps of RSNet and MobileVNet generally focused on the visible crack regions, indicating that both models were learning semantically relevant features. In contrast, CNN-Simple displayed less focused attention, with activation spread across background textures and non-informative areas.

Figure 9 shows input images in the top row, annotated with ground-truth and predicted labels, and the corresponding Grad-CAM heatmaps in the bottom row. Activation focuses on cracks in high-severity cases and remains minimal for intact surfaces. Overall, MobileVNet demonstrated the most consistent and accurate spatial attention patterns. Its heatmaps were both discriminative and interpretable, confirming that the model was attending to visually meaningful crack regions. RSNet showed competent performance but occasionally activated on background textures resembling cracks, especially in noisy samples. CNN-Simple struggled to localize relevant features, with Grad-CAM often highlighting irrelevant edges, shadows, or uneven surfaces, suggesting a less mature feature hierarchy.

In addition to correctly classified samples, we also examined Grad-CAM heatmaps for false-positive and false-negative predictions. These visualizations revealed that false positives were often triggered by surface textures or shadows resembling crack patterns, while false negatives typically occurred in low-contrast or fine hairline cracks. Grad-CAM visualizations provided critical insights into the interpretability of the crack detection models. Among the three architectures, MobileVNet produced the most coherent and reliable attention maps, validating its predictions through meaningful spatial focus. These findings reinforce MobileVNet’s dual strengths: high classification performance and transparency—both essential for real-world deployment in safety-critical infrastructure monitoring.

In this study, interpretability is defined as the ability of the model to generate spatially coherent and semantically meaningful visual explanations that align with human understanding of crack locations. Grad-CAM was selected due to its compatibility with convolutional architectures and low computational overhead, making it suitable for lightweight, edge-deployable models. While Grad-CAM provides visually interpretable heatmaps, the current study assesses interpretability in a qualitative but systematic manner. We evaluated correctly and incorrectly classified samples across all three models (CNN-Simple, RSNet, and MobileVNet), observing that MobileVNet consistently focuses on visually meaningful crack regions. We recognize the importance of quantitative evaluation—such as overlap metrics with annotated regions, entropy-based attention scores, or expert validation—to further enhance interpretability. Furthermore, we acknowledge the potential of benchmarking Grad-CAM against other explainable AI techniques, such as SHAP or LIME, which, although more resource-intensive, could provide complementary insights. These aspects are identified as key directions for future work aimed at enhancing both interpretability and trust in UAV-based automated inspection systems.

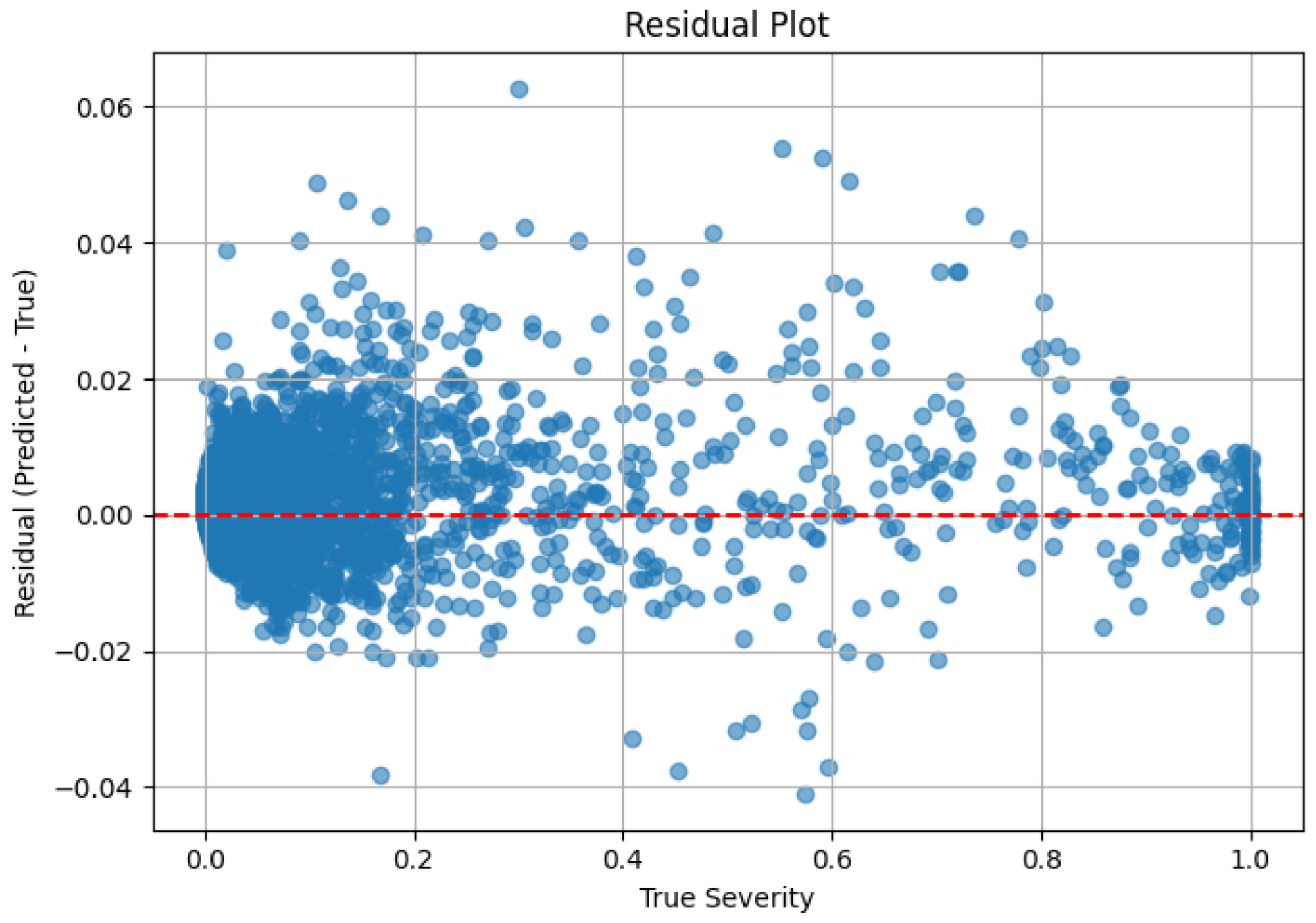

4.5. Validation of Severity Proxy

We evaluated the proxy severity score in terms of correlation with crack width, separation between cracked and non-cracked surfaces, and robustness to image perturbations.

On a 200-image subset of SDNET2018, severity scores S were compared with estimated crack widths. The Spearman rank correlation between the two was positive, indicating that higher activation densities generally correspond to thicker cracks.
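The Spearman coefficient used here is the Pearson correlation of the ranks, with tied values assigned their average rank. A dependency-free sketch follows (function names illustrative); `scipy.stats.spearmanr` computes the same statistic. The coefficient is undefined when either input is constant, and this sketch then raises `ZeroDivisionError`.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j -> ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson correlation of the tie-averaged ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)
```

Because it works on ranks, the coefficient only asks whether higher severity scores go with wider cracks, not whether the relationship is linear, which suits a proxy measured in arbitrary units.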

Figure 10 illustrates this trend: intact surfaces yield diffuse Grad-CAM activations and near-zero severity scores, whereas cracked surfaces produce concentrated high-intensity activations aligned with fissures, resulting in scores above 0.9. To further evaluate the reliability of the proposed severity proxy, we computed the residuals between the predicted proxy scores and the width-based reference for each image.

Figure 11 presents a residual plot, in which residuals close to zero indicate strong agreement between the severity proxy and the width-based reference. The residuals are generally centered around zero with limited dispersion, suggesting that the proxy provides a reasonable estimate of relative crack severity without pronounced systematic bias across different surface types. This complements the Spearman correlation analysis and visually supports the quantitative validity of the proxy. The distribution of severity values also confirms a clear separation between classes: non-crack images exhibited consistently low scores (mean = 0.05), whereas crack images showed a broader distribution (mean = 0.42), supporting the utility of the proxy as a discriminative measure. To assess robustness, we applied small perturbations (±10° rotation, ±10% brightness), which changed the severity score by less than 0.03 on average. This stability suggests that the measure is robust to typical variations in UAV-based image capture.
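The residual and robustness checks above can be sketched as follows. Two assumptions are made that the text does not state: the width-based reference is taken to be the estimated width min-max rescaled to [0, 1], and the severity model is replaced by a hypothetical stand-in (`fraction_bright`). Only the brightness perturbation is illustrated; a ±10° rotation would additionally require an image library (for example `scipy.ndimage.rotate`) and is omitted here.

```python
from statistics import mean, pstdev


def minmax(values):
    """Rescale values linearly to [0, 1] (all zeros if they are constant)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def residual_summary(scores, widths):
    """Mean and population std of (proxy score - width reference), where the
    reference is the estimated width min-max rescaled to [0, 1] (assumption)."""
    reference = minmax(widths)
    residuals = [s - r for s, r in zip(scores, reference)]
    return mean(residuals), pstdev(residuals)


def mean_abs_change(image, score_fn, perturbations):
    """Average |score(perturbed image) - score(original image)|."""
    base = score_fn(image)
    return mean(abs(score_fn(p(image)) - base) for p in perturbations)


def brightness(factor):
    """Hypothetical perturbation: scale intensities in [0, 1], clipping at 1."""
    return lambda img: [[min(1.0, v * factor) for v in row] for row in img]


def fraction_bright(img, level=0.5):
    """Hypothetical stand-in for the severity model: share of pixels > level."""
    pixels = [v for row in img for v in row]
    return sum(v > level for v in pixels) / len(pixels)


toy = [[0.2, 0.6], [0.7, 0.1]]
print(mean_abs_change(toy, fraction_bright, [brightness(1.1), brightness(0.9)]))  # → 0.0
```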

Overall, these findings indicate that the proxy severity score is a reliable and lightweight indicator of relative crack intensity. While it cannot replace calibrated physical crack-width measurements, its continuous values enable prioritization of cracks beyond binary classification.

5. Discussion

This study investigated the use of lightweight deep learning architectures for automated crack detection in concrete infrastructure, with specific attention to their suitability for UAV-based real-time applications. The models, ranging from a custom shallow CNN to a fine-tuned MobileNetV2, were evaluated not only on accuracy but also on their ability to generalize, learn from imbalanced data, and operate efficiently in constrained environments. The initial experiments with the baseline CNN-Simple model yielded a critical insight: model simplicity alone is not sufficient for imbalanced crack detection tasks. Despite fast inference and a minimal memory footprint, CNN-Simple suffered from a high false-negative rate on the minority (crack) class, a limitation that persisted even after a class-weighted loss function was introduced. The introduction of RSNet, with its residual skip connections, yielded noticeable improvements in both stability and recall. This suggests that even shallow residual connections can enhance a model’s ability to learn from the sparse or noisy signals typical of surface defect data. However, RSNet’s gains came at the cost of greater computational load, and the additional complexity made it less suitable for edge deployment.
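The class-weighted loss mentioned above scales each sample's loss term by a weight that grows as its class becomes rarer. The text does not specify the weighting scheme, so the sketch below assumes the common "balanced" heuristic w_c = N / (C · N_c) (the same formula scikit-learn uses for `class_weight="balanced"`) applied to binary cross-entropy. The 80/20 label split in the example is hypothetical and does not reflect the SDNET2018 proportions.

```python
import math


def balanced_class_weights(labels):
    """w_c = N / (C * N_c): rarer classes get proportionally larger weights."""
    counts = {}
    for y in labels:
        counts[y] = counts.get(y, 0) + 1
    n, c = len(labels), len(counts)
    return {cls: n / (c * cnt) for cls, cnt in counts.items()}


def weighted_bce(probs, labels, weights, eps=1e-7):
    """Mean binary cross-entropy with each term scaled by its class weight.
    probs are predicted crack probabilities; labels are 0 (intact) or 1 (crack)."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        loss = -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
        total += weights[y] * loss
    return total / len(labels)


weights = balanced_class_weights([0] * 8 + [1] * 2)  # → {0: 0.625, 1: 2.5}
```

With these weights, missing a crack (predicting 0.1 for a crack image) costs four times as much as an equally confident false alarm on an intact surface, which is what pushes training toward higher crack-class recall.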

MobileVNet, by contrast, capitalized on pretrained feature extraction and architectural efficiency, converging faster and outperforming the other models on crack-class F1-score while maintaining a small deployment footprint. This suggests that transfer learning with appropriate fine-tuning offers the best trade-off among the evaluated configurations between inference speed, accuracy, and class sensitivity for UAV-based visual inspection. It should be emphasized that the proposed severity proxy is a relative indicator rather than a calibrated physical measurement. Although denser Grad-CAM activations generally align with visually wider cracks and the proxy correlates positively with estimated crack width (Section 4.5), no calibrated mapping to physical crack width or to engineering severity levels is claimed in this study. Moreover, false activations may arise from surface textures, stains, or lighting variations. These limitations position the framework as a practical interpretive aid for UAV-based inspections rather than a substitute for structural engineering assessment.

One of the recurring challenges in surface crack detection is inherent class imbalance: most real-world concrete imagery depicts undamaged surfaces. This study confirms the importance of class-weighted loss functions and minority-class-centered metrics (such as the crack-class F1-score and precision-recall curves) instead of relying solely on accuracy. While CNN-Simple achieved competitive overall accuracy (87.22%), its precision-recall performance and crack-class F1-score were substantially lower than those of MobileVNet. This distinction underscores a broader point: accuracy is an insufficient metric for imbalanced, safety-critical detection tasks. MobileVNet’s AUC-PR of 0.85 and AUC-ROC of 0.93 further substantiate the robustness of its predictions, particularly in recall-prioritized scenarios such as preemptive maintenance alerts.
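A small worked example makes the accuracy pitfall concrete. The labels below are hypothetical (90 intact images, 10 cracked) and are not results from this study. A classifier that never predicts a crack scores 90% accuracy yet has a crack-class F1 of zero, while a classifier that finds 8 of the 10 cracks at the cost of 6 false alarms gains only two points of accuracy but reaches F1 ≈ 0.67. Threshold-free summaries such as AUC-PR are typically computed with library routines (for example scikit-learn's `precision_recall_curve` or `average_precision_score`); the sketch covers only the fixed-threshold metrics.

```python
def crack_class_metrics(y_true, y_pred, positive=1):
    """Accuracy plus precision, recall and F1 for the positive (crack) class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y_true = [0] * 90 + [1] * 10                   # hypothetical imbalanced labels
never_crack = [0] * 100                        # always predicts "intact"
detector = [1] * 6 + [0] * 84 + [1] * 8 + [0] * 2  # 6 false alarms, 8 hits, 2 misses

print(crack_class_metrics(y_true, never_crack))  # accuracy 0.9, f1 0.0
print(crack_class_metrics(y_true, detector))     # accuracy 0.92, f1 about 0.667
```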

Deploying crack detection models onboard UAVs introduces practical constraints, including memory limitations, energy efficiency, and real-time inference. While many recent studies focus on achieving high accuracy using deep or ensemble architectures, this work prioritizes scalability and deployability—a rarely addressed but crucial dimension. The findings illustrate that MobileVNet’s lightweight structure (3.2 MB) and 12 ms/image inference latency make it suitable for real-time inspection on devices like Raspberry Pi 4 or NVIDIA Jetson Nano. RSNet, although accurate, requires more memory and processing time, making it less feasible for aerial deployment. CNN-Simple, though efficient, lacks detection sensitivity and generalization. Thus, from an engineering perspective, MobileVNet provides the most operationally viable configuration for autonomous UAV-based inspection workflows. This study contributes to the field in four key ways:

Architectural Comparison on Realistic Metrics: Unlike prior studies that emphasize accuracy alone, this work offers a multi-dimensional evaluation across inference speed, model size, and recall-specific metrics—aligned with real-world deployment needs.

Improved Minority Class Detection via Lightweight Architectures: Through the integration of class-weighted loss functions and efficient fine-tuning, the study demonstrates that low-parameter models can outperform deeper models on crack-class F1-score when designed and trained appropriately.

Explainability through Grad-CAM for Classification and Regression: The study introduces visual interpretability for both binary classification and regression outputs, helping users verify and trust predictions—particularly valuable for smart inspection systems in cognitive cities.

Proxy-Based Severity Estimation Framework: A lightweight CNN is shown to effectively estimate crack severity scores using an automatically derived proxy, enabling deeper insights into structural damage without requiring manually labeled severity data.

A natural question is why lightweight semantic segmentation was not adopted instead of classification-based detection. While segmentation networks such as SegFormer-B1 or Tiny-UNet can provide precise crack boundaries, they introduce higher computational overhead and latency, both of which are critical constraints for UAV-based inspection. In our deployment setting, the inspection objective is rapid crack identification and prioritization, not pixel-level delineation. Classification networks therefore offer a better trade-off: they are more compact, faster at inference, and sufficiently accurate for flagging structurally relevant anomalies in real time. Future work may explore hybrid classification-segmentation frameworks, in which classification performs fast onboard screening and segmentation is applied selectively to flagged regions for offline analysis.
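The onboard screening stage of such a hybrid framework could look roughly like the sketch below: the frame is split into non-overlapping tiles, each tile is scored by the onboard classifier, and only tiles whose crack probability meets a threshold are kept for later segmentation. This is a hypothetical illustration, not part of the evaluated system. The tile size, the 0.5 threshold, and the `classify` callable (here a trivial max-intensity stand-in) are all assumptions, and edge pixels that do not fill a whole tile are simply skipped.

```python
from typing import Callable, List, Sequence, Tuple

Corner = Tuple[int, int]


def screen_tiles(image: Sequence[Sequence[float]], tile: int,
                 classify: Callable[[List[List[float]]], float],
                 threshold: float = 0.5) -> List[Tuple[Corner, float]]:
    """Return (top-left corner, score) for every tile x tile patch whose
    crack probability from `classify` is at least `threshold`.
    Rows/columns that do not fill a complete tile are ignored."""
    flagged = []
    h, w = len(image), len(image[0])
    for r in range(0, h - tile + 1, tile):
        for c in range(0, w - tile + 1, tile):
            patch = [list(row[c:c + tile]) for row in image[r:r + tile]]
            score = classify(patch)
            if score >= threshold:
                flagged.append(((r, c), score))  # queue for offline segmentation
    return flagged


# Hypothetical stand-in classifier: the brightest pixel acts as a "probability".
def max_pixel(patch):
    return max(max(row) for row in patch)


frame = [[0.0] * 4 for _ in range(4)]
frame[2][3] = 0.9
print(screen_tiles(frame, 2, max_pixel))  # → [((2, 2), 0.9)]
```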

While MobileVNet performed robustly across all criteria, its performance may vary with other crack and defect types (e.g., hairline fractures, spalling, or corrosion). Additionally, this study focused on binary classification; future extensions could involve semantic segmentation for pixel-level crack mapping or multi-class defect detection. Another promising direction is to extend the current Grad-CAM analysis with quantitative localization metrics, so that the human-interpretable crack heatmaps can be verified more rigorously and further support trust in autonomous inspection systems. In cognitive cities, where predictive maintenance and autonomous monitoring are foundational to urban safety, crack detection systems must balance intelligence, efficiency, and interpretability. This study shows that, with careful architecture selection and training strategies, lightweight CNNs can achieve high crack detection performance without compromising deployability, a crucial step forward in intelligent infrastructure management.

The proposed proxy-based severity score provides a practical means of prioritizing crack instances by relative intensity, adding value beyond binary detection. By combining activation density and width estimation from Grad-CAM maps, the proxy enables UAV-based inspection systems to flag cracks that are more extensive or visually pronounced. At the same time, we emphasize its limitations: the score is an indirect measure, influenced by image resolution, texture, and lighting conditions, and cannot replace calibrated physical crack-width measurements. In future work, integration with pixel-to-millimeter scaling and field calibration will be necessary to translate proxy scores into absolute severity levels.
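To make the idea of combining activation density with a width estimate concrete, here is a deliberately simple and entirely hypothetical formulation; the study's own definition is not reproduced in this section and may differ. Density is the fraction of heatmap pixels at or above a threshold τ. Width is the mean number of activated pixels per row (counting only rows that contain activation), divided by the map width, which suits roughly vertical cracks. The two terms are blended with a weight α. The values τ = 0.5 and α = 0.5 are assumptions, and the heatmap is assumed to be normalized to [0, 1]. The `prioritize` helper shows how continuous scores allow flagged cracks to be ranked rather than simply labeled.

```python
import numpy as np


def proxy_severity(cam: np.ndarray, tau: float = 0.5, alpha: float = 0.5) -> float:
    """HYPOTHETICAL severity proxy from a Grad-CAM map normalized to [0, 1]."""
    active = cam >= tau
    density = float(active.mean())            # fraction of activated pixels
    per_row = active.sum(axis=1)              # activated pixels in each row
    hit_rows = per_row[per_row > 0]
    # Width term: mean activated pixels per crack row, relative to map width.
    width = float(hit_rows.mean() / cam.shape[1]) if hit_rows.size else 0.0
    return alpha * density + (1.0 - alpha) * width


def prioritize(cams: dict) -> list:
    """Order image identifiers from most to least severe proxy score."""
    scores = {name: proxy_severity(cam) for name, cam in cams.items()}
    return sorted(scores, key=scores.get, reverse=True)


thin = np.zeros((10, 10))
thin[:, 5] = 1.0     # one-pixel-wide vertical activation
thick = np.zeros((10, 10))
thick[:, 4:7] = 1.0  # three-pixel-wide vertical activation
print(prioritize({"thin": thin, "thick": thick, "intact": np.zeros((10, 10))}))
# → ['thick', 'thin', 'intact']
```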

6. Managerial and Operational Insights

While much of the literature in automated crack detection focuses primarily on performance metrics such as accuracy and recall, the transition from academic proof-of-concept to real-world deployment introduces an entirely different set of considerations. These include inference speed, computational footprint, model generalizability, and compatibility with edge devices. This section presents the managerial and operational implications derived from our experimental findings, offering guidance for decision-makers in domains such as smart infrastructure, UAV integration, and asset management.

Deployment-Oriented Model Evaluation: The primary insight from this study is that model accuracy alone is insufficient to determine whether a crack detection model is suitable for operational deployment. This is especially true for UAV-based inspections, where constraints on power, memory, and processing are non-trivial. Consequently, a model’s deployability must be evaluated along multiple axes. To address this, this study examined model size, inference latency, and sensitivity to minority-class detection (i.e., cracks), alongside conventional performance metrics.

Table 10 summarizes this multicriteria evaluation across the three architectures investigated. These results suggest that MobileVNet aligns best with operational needs, achieving a pragmatic balance between predictive performance and deployability.
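One simple way to operationalize such a multi-criteria evaluation is to treat size and latency as hard budgets set by the target hardware and then choose, among the feasible models, the one with the best crack-class F1-score. The sketch below shows this rule; it is an illustrative decision procedure, not the method used to build Table 10. The candidate names, their numbers, and the budgets are invented for illustration and are not the study's results.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    name: str
    size_mb: float
    latency_ms: float
    crack_f1: float


def select_model(candidates: List[Candidate], max_size_mb: float,
                 max_latency_ms: float) -> Optional[Candidate]:
    """Best crack-class F1 among models that fit the size and latency budgets,
    or None if no candidate fits."""
    feasible = [c for c in candidates
                if c.size_mb <= max_size_mb and c.latency_ms <= max_latency_ms]
    return max(feasible, key=lambda c: c.crack_f1, default=None)


# Illustrative candidates only; these values are not taken from Table 10.
pool = [
    Candidate("candidate-A", size_mb=1.0, latency_ms=5.0, crack_f1=0.60),
    Candidate("candidate-B", size_mb=40.0, latency_ms=60.0, crack_f1=0.80),
    Candidate("candidate-C", size_mb=3.0, latency_ms=12.0, crack_f1=0.75),
]
print(select_model(pool, max_size_mb=10.0, max_latency_ms=30.0).name)  # → candidate-C
```

Treating deployment limits as constraints rather than folding them into a weighted score avoids picking a model that is slightly more accurate but cannot run on the aircraft at all.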

Implications for UAV-Based Structural Inspection: Modern UAVs equipped with onboard AI systems represent a paradigm shift in infrastructure monitoring. However, the practical utility of these systems hinges on several critical parameters. Real-time inference speed ensures that damage can be detected and flagged during flight. A low memory footprint allows the model to be stored on resource-constrained hardware such as microcontrollers or embedded GPUs. Moreover, adaptability is essential, enabling retraining or fine-tuning in response to variations in local materials or defect profiles. Although this study used the SDNET2018 dataset as a proxy for field imagery, many crack instances in the dataset include environmental variations such as shadows, uneven lighting, and surface contamination. MobileVNet generalized well across these variations, which we attribute to its transfer-learned feature backbone.

In this study, MobileVNet processed each image in just 12 milliseconds while maintaining the highest F1-score for crack detection. With a model size of only 3.2 MB, it is well-suited for deployment on platforms such as the NVIDIA Jetson Nano, Raspberry Pi 4, or STM32-integrated UAV payloads. These characteristics make MobileVNet an ideal candidate for autonomous aerial inspections of bridges, tunnels, pavements, and other civil infrastructure components.

Additionally, practical constraints such as limited battery life, adverse weather conditions (e.g., wind, rain), and flight duration restrictions must be accounted for when planning UAV-based inspections. These factors can impact image quality, mission continuity, and the overall feasibility of sustained monitoring operations in the field.

Strategic Value for Infrastructure Management: The long-term utility of lightweight AI-based crack detection extends beyond technical deployment. It supports a fundamental shift from manual and reactive inspections toward autonomous and predictive maintenance strategies, which are central to the vision of smart infrastructure and cognitive cities. Key strategic benefits include significant cost savings through reduced labor and early fault detection, increased inspection frequency that enables more granular risk monitoring, safer operations especially in inaccessible or hazardous environments, and data-driven maintenance cycles facilitated by consistent and interpretable AI outputs.

Together, these capabilities empower infrastructure agencies, municipalities, and private contractors to allocate resources more effectively, prioritize critical repairs, and proactively manage asset life cycles.

Adoption Guidelines: For practitioners considering the adoption of lightweight deep learning models for visual inspection, several guidelines emerge from this study. It is important to assess model viability on safety-critical tasks using precision-recall curves and F1-scores rather than relying solely on accuracy. Incorporating class weighting during training can help mitigate the common issue of imbalanced datasets in real-world defect detection. When available, pretrained models should be prioritized to reduce training time and improve generalization. Benchmarking inference latency on the actual deployment device rather than development GPUs ensures realistic performance evaluation. Finally, deploying modular pipelines that support model updates or retraining as new data becomes available can greatly enhance the longevity and effectiveness of the solution.
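The guideline on benchmarking latency on the deployment device can be followed with a small harness such as the one below, run directly on the target board (for example a Jetson Nano or Raspberry Pi 4). It is a generic sketch: `infer` stands for whatever call runs the deployed model on one preprocessed image and is an assumption, as are the warm-up and repeat counts. Warm-up calls absorb one-off costs such as lazy initialization, and reporting the median alongside a nearest-rank 95th percentile captures both typical and worst-case per-image latency.

```python
import statistics
import time


def benchmark_latency_ms(infer, inputs, warmup=5, repeats=50):
    """Median and nearest-rank 95th-percentile latency of infer(x), in ms."""
    if not inputs:
        raise ValueError("inputs must be non-empty")
    for i in range(warmup):
        infer(inputs[i % len(inputs)])  # absorb one-off initialization costs
    samples = []
    for i in range(repeats):
        x = inputs[i % len(inputs)]
        start = time.perf_counter()
        infer(x)
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p95_index = (95 * len(samples) + 99) // 100 - 1  # ceil(0.95 * n) - 1
    return statistics.median(samples), samples[p95_index]


# Placeholder workload standing in for the deployed model's inference call.
median_ms, p95_ms = benchmark_latency_ms(lambda x: sum(range(10_000)), [None])
print(f"median {median_ms:.3f} ms, p95 {p95_ms:.3f} ms")
```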

By bridging high-performance AI modeling with deployment realities, this work contributes not only to academic knowledge but also to practical decision-making in infrastructure innovation. Lightweight models such as MobileVNet exemplify the possibility of creating scalable, interpretable, and efficient solutions that extend beyond the laboratory and into the hands of engineers, inspectors, and public sector managers who are shaping the smart cities of the future.

7. Conclusions and Future Work

This study explored the effectiveness of lightweight convolutional neural networks for automated concrete crack detection in the context of real-time, UAV-based infrastructure monitoring. Through a structured progression of model complexity—from a custom-built shallow CNN to residual architectures and transfer-learned networks—this work offers critical insights into the trade-offs between performance, efficiency, and deployability. A rigorous evaluation was conducted using the SDNET2018 dataset, with particular emphasis on class imbalance, inference latency, and sensitivity to crack-class predictions. The findings demonstrate that while baseline CNNs can achieve moderate classification accuracy, their ability to generalize across diverse surface conditions and capture subtle structural anomalies remains limited. The use of class-weighted loss significantly improved minority class detection, reaffirming its importance in imbalanced real-world datasets. RSNet introduced more stable learning and higher overall accuracy through residual connections, yet the increase in computational overhead posed challenges for edge deployment. MobileVNet, a fine-tuned variant of MobileNetV2 pretrained on ImageNet, emerged as the most effective model, achieving a strong balance between accuracy, crack-specific F1-score, and inference efficiency. Its performance underscores the value of transfer learning in constrained visual inspection tasks and validates its suitability for integration into UAV-based smart inspection systems. Beyond the empirical results, this study contributes a deployment-oriented perspective to the crack detection literature, advocating for a multidimensional model evaluation strategy that accounts for inference time, model size, and precision-recall behavior. 
In contexts where safety and operational reliability are paramount, such as bridge monitoring or highway inspections, the use of lightweight, high-recall models enables more proactive and scalable asset management strategies. Future research may build on this foundation by exploring segmentation-based architectures for more precise localization of crack boundaries. Interpretability could be strengthened further by quantitatively evaluating the Grad-CAM explanations and benchmarking them against complementary techniques such as other saliency-mapping or activation-visualization methods. Field-deployable testing on embedded platforms, combined with real-time telemetry from UAVs, would further validate model robustness under operational conditions. Moreover, integrating multimodal sensor data, such as thermal imagery or depth sensing, may enhance detection accuracy under challenging environmental scenarios. This direction aligns with the broader vision of cognitive cities, where autonomous, adaptive infrastructure monitoring becomes a cornerstone of resilient urban development.