1. Introduction

Assessing earthquake-induced damage to buildings is a critical aspect of both seismic risk mitigation and post-earthquake recovery management [1,2]. After a major earthquake, evaluating and categorizing structural damage patterns is essential for emergency response, effective resource allocation, coordination, and long-term urban planning [3,4,5,6,7]. The catastrophic Kahramanmaraş earthquakes (Mw 7.7 and 7.6), which occurred on 6 February 2023 in southeastern Türkiye, provide an opportunity to advance damage assessment methodologies using large datasets that combine detailed building-level observations with comprehensive multi-station seismic records [2,8,9,10]. This earthquake sequence stands as one of the most devastating seismic events in Türkiye’s recent history, resulting in structural damage across 11 provinces and affecting millions of people [11]. Occurring along the same fault system, the sequence generated complex ground motions marked by pronounced spatial variability in seismic intensity, duration, and frequency content [12,13,14]. This seismic scenario offers valuable insights into the damage mechanisms triggered by sequential strong ground motions and serves as an important calibration dataset for advanced predictive models.

Traditional approaches to seismic damage estimation have primarily relied on empirical fragility curves, intensity-based correlations, and simplified ground motion parameters, such as peak ground acceleration (PGA) or spectral acceleration at fundamental periods [15,16,17]. However, these methods often overlook the complex, multidimensional interactions among ground motion characteristics, site conditions, local geology, and structural response [18,19,20]. The limitations of single-parameter approaches become particularly evident during complex earthquake sequences involving multiple events, heterogeneous site conditions, and diverse structural typologies [21].

Recent advancements in machine learning (ML) algorithms and artificial intelligence techniques have enabled seismic damage assessments based on extensive multidimensional datasets and the identification of nonlinear patterns that classical statistical methods cannot capture [22,23,24]. These ML algorithms integrate multiple ground motion parameters, site characteristics, and structural attributes to develop more robust predictive models [25,26]. Nevertheless, many ML-based damage assessments remain constrained by limited dataset sizes, oversimplified feature representations, or reliance on single-station seismic records [27,28,29].

The use of multi-station seismic data represents a major advancement in seismic damage estimation. Studies have reported that ground motion characteristics can vary substantially across short distances, owing to directivity effects, basin amplification, topographic influences, and local site conditions [30,31,32]. These effects have also been investigated in terms of spectral demands on industrial facilities during the Kahramanmaraş earthquakes [33]. Such spatial gradients are not captured by single-station techniques, potentially resulting in biased damage estimates [34]. In contrast, multi-station analysis, which integrates data from seismic instruments installed near affected structures, potentially enables a more comprehensive characterization of the local seismic environment [35,36]. Damage evaluation relies on multiple parameters that reflect distinct dimensions of seismic intensity and structural demand [37]. Among such parameters, PGA has traditionally been the most widely used, owing to its computational simplicity and accessibility; however, it only captures peak acceleration amplitude and neglects duration and frequency content [38]. Meanwhile, distance-based parameters, such as Joyner–Boore distance (Rjb), rupture distance (Rrup), epicentral distance (Repi), and hypocentral distance (Rhyp), offer supplementary insight into source-to-site geometry and attenuation characteristics [39].

Site conditions, commonly represented by the mean shear wave velocity in the upper 30 m (Vs30), play a central role in ground motion amplification and structural response [40,41]. Soil classifications based on Vs30 measurements offer a standardized framework for incorporating site effects into damage assessment models [42,43]. Integrating these heterogeneous site parameters into ML models facilitates the development of more comprehensive damage prediction frameworks.

Despite notable advances in seismic engineering and ML, critical gaps persist in earthquake damage assessment research. First, many earlier studies have relied on relatively small datasets (covering hundreds to thousands of buildings), limiting the development of robust models capable of generalizing across varied seismic conditions and structural typologies [44,45]. Second, most ML-based approaches have used reduced feature descriptions or single-site ground motion records, potentially overlooking meaningful spatial variability in seismic demand [46,47].

Third, only a few studies have undertaken systematic comparisons of ML algorithms for seismic damage classification, while most have focused on individual models rather than conducting comprehensive evaluations [23,48]. Fourth, ensemble learning methods, which have demonstrated considerable promise in other domains, have received limited attention in seismic damage assessment applications [49,50]. Recent ML applications for seismic damage assessment have demonstrated promising results across various structural systems and prediction frameworks. Ref. [51] developed ML algorithms for structural damage prediction of reinforced concrete frames under both single and multiple seismic events, highlighting the capability of ML approaches to capture cumulative damage effects. Similarly, Ref. [52] proposed a real-time seismic damage prediction framework based on ML for earthquake early warning systems, demonstrating the potential for immediate damage assessment during seismic events. Physics-informed neural networks have also demonstrated significant potential in seismic demand prediction by integrating physical laws with ML algorithms [53]. Additionally, analytical approaches incorporating curvature distribution models and matrix-based predictive frameworks have shown promising results for structural resilience assessment and damage prediction [54,55].

Hariri-Ardebili and Sattar (2025) [56] conducted a comprehensive data-driven analysis of post-earthquake reconnaissance findings from the 2023 Türkiye earthquake sequence, employing AutoML approaches on 242 buildings with detailed structural parameters including age, height, and column/wall indices. Their study demonstrated the effectiveness of building-specific vulnerability assessment through SHAP interpretability analysis. Complementary to this approach, ensemble learning methods have shown considerable promise for large-scale seismic damage assessment. Ref. [57] developed a hybrid stacked ensemble model combining multiple machine learning algorithms for rapid damage classification using both building features and seismic data as input parameters. Similarly, Ref. [58] presented an explainable ensemble learning framework using bootstrap aggregating to predict structural damage in masonry buildings during seismic events, emphasizing the importance of model interpretability in damage assessment applications. Furthermore, Ref. [45] investigated multi-station seismic parameter approaches for regional building damage prediction, demonstrating the potential of comprehensive ground motion characterization from multiple recording stations for large-scale damage assessment scenarios.

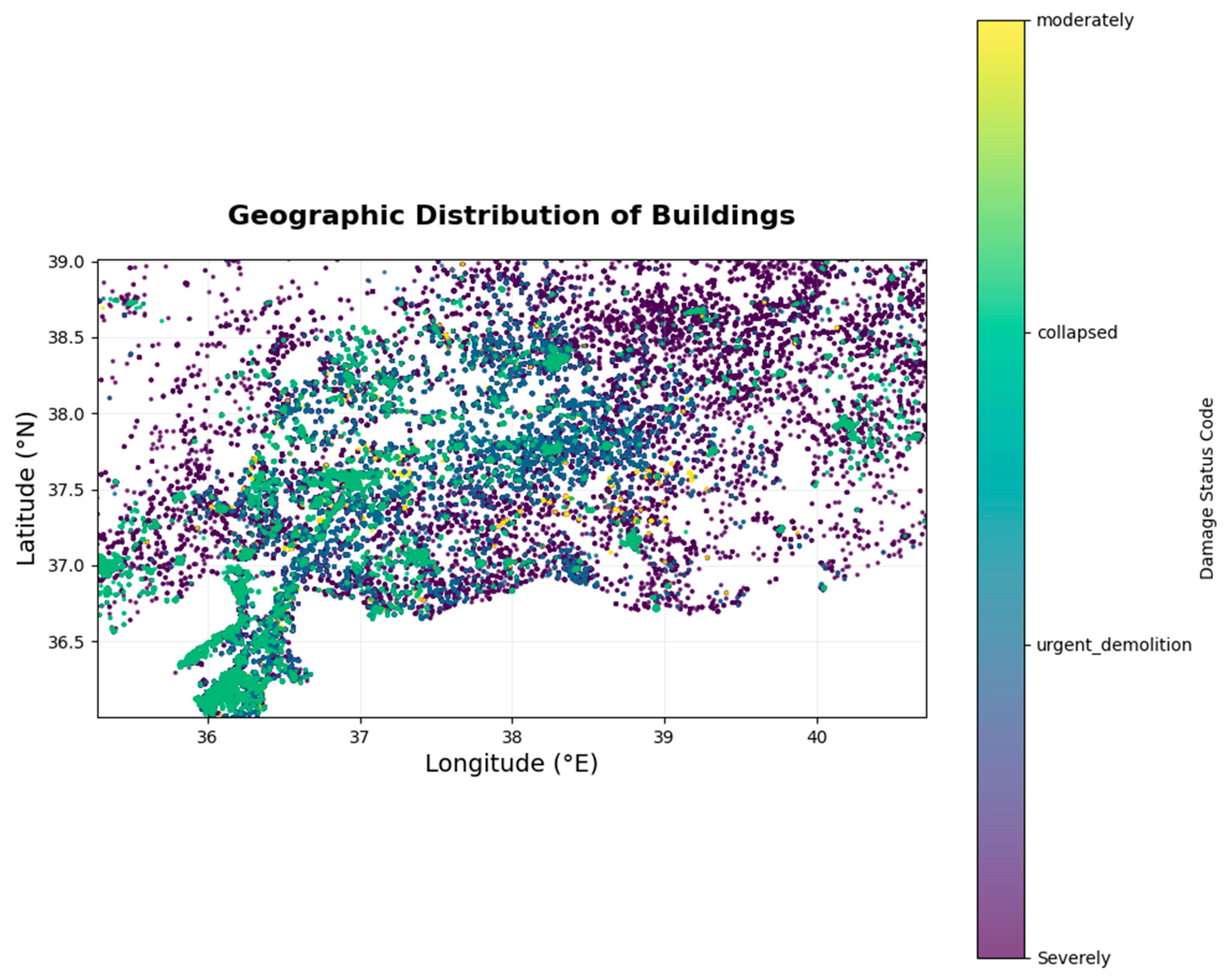

This study addresses these gaps by examining the largest recorded dataset of building damage from a single earthquake series in Türkiye, comprising 304,299 buildings affected by the 2023 Kahramanmaraş earthquakes. The unprecedented scale of this dataset enables the development of robust statistical models and supports comprehensive comparisons of ML algorithms.

The primary objective of this study is to develop an integrated ML framework comparing 10 distinct algorithms for multiclass building damage classification, using the extensive seismic dataset of the 2023 Kahramanmaraş earthquake sequence. We incorporate ground motion data from the six closest seismic stations to each structure to capture spatial variation in seismic demand and perform a comprehensive feature importance analysis to identify the most influential parameters for seismic damage classification. This investigation makes several notable contributions to the field of seismic damage assessment:

The ML-based analysis of 304,299 buildings, representing the largest damage dataset from a single earthquake, enables robust statistical inference and model validation.

The integration of 66 seismic parameters from multiple recording stations enables a detailed characterization of local ground motion conditions.

The comprehensive evaluation of 10 ML algorithms, conducted using standardized datasets, preprocessing procedures, and evaluation metrics, enables objective performance assessment.

The study develops sophisticated feature sets that incorporate multidirectional PGA values, multiple distance metrics, soil velocity measurements, and site classification parameters.

Ensemble methods achieve 79.6% classification accuracy and demonstrate practical improvements over individual algorithms.

A detailed evaluation based on multiple metrics, including accuracy, precision, recall, F1-score, and cross-validation, provides a thorough assessment of model performance.

The acquired insights are directly applicable to earthquake damage assessment, emergency response protocols, and seismic risk mitigation strategies.

The remainder of this paper is structured as follows:

Section 2 presents the comprehensive methodology including data collection, preprocessing procedures, feature engineering approaches, and machine learning algorithm implementations;

Section 3 provides detailed results including exploratory data analysis, model performance comparisons, and ensemble learning outcomes;

Section 4 discusses the implications of findings, comparisons with the existing literature, and practical applications;

Section 5 concludes with key findings, limitations, and recommendations for future research directions.

4. Results and Analysis

4.1. Model Performance Overview

Our comparison of 11 models, comprising 10 individual ML algorithms and the voting ensemble (Table 6, Figure 7), revealed notable differences in performance. The results highlighted the effectiveness of ensemble and tree-based algorithms in addressing earthquake damage classification.

4.2. Algorithm Performance Analysis

The experimental results presented in Table 5 and Figure 7 indicate the performance levels of each evaluated algorithm. As indicated, random forest achieved the highest accuracy (79.65%) with exceptionally high stability (CV_Std = 0.0016), benefiting from its ensemble nature, which combines multiple decision trees with feature randomization to ensure robust classification and resistance to overfitting and class imbalance. The voting ensemble followed closely with 79.62% accuracy by effectively combining random forest, extra trees, and decision tree models through hard voting, which outperformed soft voting and demonstrated the advantages of model diversity in ensemble learning. Extra trees achieved 79.54% accuracy through its highly randomized split selection strategy, enabling fast training and solid generalization performance.

The mid-performing group included decision trees, which achieved 78.70% accuracy and performed reliably as a standalone model, despite high variance, and KNN, which attained 77.46% accuracy, demonstrating the effectiveness of instance-based learning while remaining sensitive to feature scaling and dimensionality. Gradient boosting algorithms demonstrated mixed performance: XGBoost achieved 75.62% accuracy, and LightGBM attained 74.30% accuracy—both lower than typically expected for models of this class. Despite their consistent cross-validation stability, these results suggest a potential need for hyperparameter tuning.

Linear models delivered modest yet stable performance, with logistic regression (67.31%) and LDA (67.25%) yielding nearly identical outcomes. Their lower accuracy likely reflects the limitations imposed by linear decision boundaries in a problem of this complexity. The poorest performers were QDA, with 41.71% accuracy, and naive Bayes, with 35.35% accuracy. The former likely struggled with insufficient data per class and overfitting in high-dimensional space, while the latter was hampered by strong feature correlations that violated its conditional independence assumption. These findings emphasize the importance of selecting algorithms based on both data characteristics and underlying theoretical assumptions.

4.3. Cross-Validation vs. Test Performance Analysis

As depicted in Figure 8, the cross-validation results closely align with test performance across all model categories. Linear models exhibit the smallest CV–test gaps (below 0.1%), though their predictive capacity remains limited. Gradient boosting algorithms follow with gaps under 0.3%, then individual tree models (<0.4%), tree-based ensembles (<0.5%), and instance-based algorithms (<0.6%). In contrast, probabilistic models exhibit the largest discrepancies, exceeding 1.0%, suggesting potential overfitting issues.
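The CV–test gap discussed above can be illustrated with a minimal sketch. The fold accuracies below are hypothetical placeholders rather than values from this study, and the use of the population standard deviation is an assumption, since the text does not state how CV_Std was computed.

```python
from statistics import mean, pstdev


def cv_test_gap(cv_scores, test_score):
    """Return (cv_mean, cv_std, gap), where gap = |cv_mean - test_score|."""
    cv_mean = mean(cv_scores)
    # Population standard deviation over the folds (an assumption; the
    # sample standard deviation would be an equally plausible choice).
    cv_std = pstdev(cv_scores)
    return cv_mean, cv_std, abs(cv_mean - test_score)


# Hypothetical five-fold accuracies, for illustration only.
folds = [0.795, 0.797, 0.794, 0.798, 0.796]
cv_mean, cv_std, gap = cv_test_gap(folds, test_score=0.7965)
print(f"CV mean={cv_mean:.4f}  CV std={cv_std:.4f}  gap={gap * 100:.2f} percentage points")
```

A small gap together with a small fold-to-fold standard deviation is what the text refers to as stable behavior; a gap on the order of one percentage point or more would correspond to the probabilistic models described above.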

4.4. Confusion Matrix Analysis

The confusion matrix analysis (Figure 9) reflects the classification performance of the tested algorithms across all damage categories, with detailed evaluation metrics summarized in Table 7. The matrix displays a clear diagonal pattern, indicating accurate predictions, with some misclassification observed between neighboring damage levels.

The severely damaged class was classified with high accuracy, likely due to its large sample size, while the collapsed class exhibited conservative predictions, characterized by high precision but relatively low recall. Although class imbalance had a notable effect on performance, the model preserved essential safety detection capabilities in identifying severe damage.
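To make the notion of "conservative predictions" concrete, the following sketch derives per-class precision, recall, and F1 from a confusion matrix. The class names and counts are hypothetical and chosen only to reproduce the qualitative pattern described above (high precision with low recall for the collapsed class); they are not the values in Figure 9 or Table 7.

```python
def per_class_metrics(cm, labels):
    """Compute (precision, recall, f1) per class.

    cm[i][j] is the number of samples with true class i predicted as class j.
    """
    n = len(labels)
    metrics = {}
    for k, name in enumerate(labels):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, true class differs
        fn = sum(cm[k]) - tp                       # true k, predicted as another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics[name] = (precision, recall, f1)
    return metrics


# Hypothetical three-class example (rows = true class, columns = predicted class).
labels = ["slight", "severe", "collapsed"]
cm = [
    [60, 40, 0],
    [10, 188, 2],
    [0, 30, 20],
]
m = per_class_metrics(cm, labels)
for name, (p, r, f1) in m.items():
    print(f"{name:>9}: precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

In this toy matrix the model rarely predicts "collapsed", so almost every such prediction is correct (high precision), but most truly collapsed buildings are assigned to the neighboring class (low recall).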

4.5. Feature Importance Analysis

The random forest feature importance analysis (Figure 10) indicates that distance-based parameters overwhelmingly dominate the prediction model, with the top six features corresponding to proximity measurements to various seismic stations. Station1_Distance_km is identified as the most influential predictor, with an importance score of approximately 0.12, followed closely by distances to Stations 2 through 6. This pattern suggests that, within this model, spatial proximity to recording stations is the strongest predictor of building damage severity. The remaining top 10 features consist of station identification codes and Joyner–Boore distance metrics (Rjb), while ground motion parameters and site characteristics display lower importance, highlighting the strong predictive power of geometric distance relationships for earthquake damage assessment in this dataset. The prominence of geometric distance parameters (Station_Distance_km) from the nearest stations may reflect a greater ability to capture local ground motion variations compared to traditional seismological distance measures. While epicentral distance (Repi) provides general proximity to earthquake sources, the simple geometric distances to recording stations appear to better represent the actual ground motion intensity experienced by buildings, possibly due to complex wave propagation effects and site-specific amplification patterns in the heterogeneous geological environment of the study region.
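As a rough illustration of how station-distance features of this kind might be constructed, the sketch below ranks stations by great-circle distance from a building and keeps the k closest. Only the name Station1_Distance_km appears in the text; the haversine formula, the StationN_ID naming, the station codes, and the coordinates are assumptions made for the example and are not confirmed as the procedure used in this study.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in degrees."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def nearest_station_features(building, stations, k=6):
    """Build distance and ID features for the k stations closest to a building.

    building: (lat, lon); stations: {station_id: (lat, lon)}.
    """
    ranked = sorted(
        (haversine_km(building[0], building[1], lat, lon), sid)
        for sid, (lat, lon) in stations.items()
    )
    feats = {}
    for rank, (dist, sid) in enumerate(ranked[:k], start=1):
        feats[f"Station{rank}_Distance_km"] = dist
        feats[f"Station{rank}_ID"] = sid  # hypothetical feature name
    return feats


# Hypothetical station codes and coordinates, for illustration only.
stations = {"STA_A": (37.0, 37.0), "STA_B": (37.5, 37.0), "STA_C": (38.0, 37.0)}
feats = nearest_station_features((37.1, 37.0), stations, k=2)
print(feats)
```

Because these features encode where a building sits relative to the network, a tree-based model can use them to localize damage clusters, which is one possible reading of their high importance scores.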

4.6. ROC Curve Analysis

The ROC curve analysis (Figure 11) reflects differing degrees of discriminatory power across algorithmic approaches, with corresponding area under the curve (AUC) values presented in Table 8.
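For a multiclass problem, AUC is commonly reported per class in a one-vs-rest fashion; whether Table 8 follows this convention is an assumption here. The sketch below uses the pairwise-ranking interpretation of AUC (the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as one half). The scores and class names are hypothetical.

```python
def auc_one_vs_rest(scores, labels, positive):
    """AUC for one class against all others via pairwise comparison.

    scores: predicted probability (or score) for the positive class.
    labels: true class labels, same length as scores.
    """
    pos = [s for s, y in zip(scores, labels) if y == positive]
    neg = [s for s, y in zip(scores, labels) if y != positive]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    # Each positive/negative pair contributes 1 if ranked correctly, 0.5 if tied.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical scores for the "collapsed" class, for illustration only.
scores = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]
labels = ["collapsed", "collapsed", "collapsed", "other", "other", "other"]
auc = auc_one_vs_rest(scores, labels, positive="collapsed")
print(f"one-vs-rest AUC = {auc:.4f}")
```

The pairwise loop is quadratic in the number of samples, so for a dataset of this size a rank-based implementation would be preferable in practice; the sketch is meant only to show what the metric measures.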

4.7. Model Complexity vs. Performance Analysis

The relationship between model complexity and performance (Figure 12) identifies optimal zones in which accuracy peaks without incurring substantial computational cost. As shown in Table 9, different complexity levels yield distinct trade-offs among accuracy, training time, and interpretability.

Model complexity is determined by evaluating the computational resources and parameters required for each algorithm. For tree-based models such as random forest and decision tree, complexity is calculated based on the number of trees, tree depth, and nodes per tree. Linear models like logistic regression and linear discriminant analysis have complexity determined by the number of features and output classes. Instance-based algorithms like K-Nearest Neighbors depend on the training dataset size and feature dimensionality. Probabilistic models such as naive Bayes have relatively simple complexity based on feature-class combinations. Ensemble methods combine the complexities of their constituent models.

For this study, with 304,299 samples and 73 features, complexity values (Figure 12, Table 9) range from simple models with minimal parameters to complex ensemble methods requiring substantial computational resources.

4.8. Learning Curves Analysis

The learning curves of the random forest model (Figure 13) display training scores consistently above 0.95 across all dataset sizes, while validation scores steadily increase from approximately 0.74 to 0.79 as more training data are introduced. The validation curve rises smoothly toward a plateau, although a gap of at least 0.16 between the training and validation scores persists, indicating that the model fits the training data more closely than unseen data. The observed performance plateau beyond approximately 100,000 samples suggests that the dataset is sufficiently large for effective model training.

4.9. Ensemble Learning Performance

The ensemble learning strategy integrated the complementary strengths of random forest’s robust feature selection, extra trees’ enhanced variance reduction, and decision tree’s interpretable decision boundaries. The hard voting ensemble achieved 79.62% accuracy, outperforming soft voting (79.24%) and surpassing the average individual model performance by 1.2%. It also exhibited high consistency, with a cross-validation standard deviation of only 0.0016, underscoring the effectiveness of combining diverse algorithms to enhance predictive accuracy.
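The difference between hard and soft voting can be sketched in a few lines. In hard voting each model casts one vote for its predicted class; in soft voting the per-class probabilities are averaged before the final class is chosen. The class names and probabilities below are hypothetical and serve only to show a case in which the two schemes disagree.

```python
from collections import Counter


def hard_vote(predicted_labels):
    """Majority vote over the labels predicted by each model.

    Ties are resolved in favor of the label seen first here; other
    implementations may break ties differently.
    """
    return Counter(predicted_labels).most_common(1)[0][0]


def soft_vote(probabilities, classes):
    """Average per-class probabilities across models, then take the argmax."""
    n_models = len(probabilities)
    avg = [sum(p[i] for p in probabilities) / n_models for i in range(len(classes))]
    return classes[max(range(len(classes)), key=avg.__getitem__)]


# Hypothetical outputs of three models for one building, for illustration only.
classes = ["moderate", "severe"]
probs = [
    [0.45, 0.55],  # e.g. random forest
    [0.40, 0.60],  # e.g. extra trees
    [0.95, 0.05],  # e.g. a single decision tree with an overconfident leaf
]
votes = [classes[max(range(len(classes)), key=p.__getitem__)] for p in probs]
hard = hard_vote(votes)
soft = soft_vote(probs, classes)
print(f"hard voting -> {hard}, soft voting -> {soft}")
```

Here two of three models vote "severe", so hard voting returns "severe", whereas the single overconfident probability pulls the soft-voting average toward "moderate". Sensitivity to poorly calibrated probabilities of this kind is one possible reason hard voting could outperform soft voting, although the text does not state the cause.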

4.10. Computational Performance

The computational efficiency analysis (Table 10) displays substantial variation in training and prediction times across algorithms.

The results demonstrate that tree-based ensemble methods achieve the best performance in earthquake damage classification, with random forest and ensemble models providing the best trade-off among accuracy, stability, and computational efficiency. The dominance of distance-based features underscores the critical role of spatial location, relative to both the recording stations and the rupture, in shaping damage patterns.

5. Discussion

5.1. Algorithm Performance and Methodological Insights

Extensive testing of the 10 ML methods indicates that tree-based ensemble methods perform optimally in classifying earthquake damage, with random forest (79.65%) and voting ensemble (79.62%) achieving the highest accuracy. This reflects their ability to capture complex nonlinear relationships in earthquake damage, where small differences in ground motion parameters can result in substantially different damage outcomes. Unlike linear models that assume monotonic relationships, tree-based methods inherently identify critical damage thresholds through recursive partitioning without requiring prior assumptions about functional form.

The ensemble’s performance is consistent with the principle that combining multiple models can stabilize predictions relative to individual algorithms. Although the voting ensemble (79.62%) did not exceed the best single model, random forest (79.65%), its accuracy is effectively equivalent, and it remains practically valuable for scaling to full damage assessment applications. The ensemble integrates random forest (bagging with random features), extra trees (enhanced split randomization), and decision tree (interpretable boundaries) to offer complementary strengths that reduce overall prediction variance.

5.2. Implications of Feature Importance and Seismic Engineering

The dominance of distance-based parameters in the importance rankings is consistent with fundamental seismic engineering principles. The presence of distances to multiple stations, not just the nearest, among the top predictors indicates that a multi-station approach is more informative than traditional single-station methods. This finding suggests that spatial averaging of ground motion characteristics offers a considerable advantage in improving damage prediction accuracy and supports the development of more sophisticated seismic characterization methods.

Interestingly, the relatively reduced importance of PGA parameters compared to distance-based indicators contradicts conventional engineering practice and may suggest that proximity to the fault rupture is more influential than specific acceleration values within the observed range. This implies that simplified damage assessment methods based on distance metrics may be unexpectedly effective for regional-scale applications, potentially reducing computational demands in emergency response contexts.

5.3. Class Performance and Emergency Response Applications

The variation in performance across damage classes is attributed to both class imbalance issues and the characteristics of post-earthquake surveys. The favorable performance for severely damaged buildings (F1 = 0.891) indicates reliable identification of structures requiring urgent action, while the conservative classification of collapsed buildings (high precision, low recall) reflects adequate caution. These outcomes directly inform emergency response protocols and facilitate more efficient resource allocation and triage procedures in disaster scenarios.

The high recall rate for severely damaged buildings (94.1%) ensures that most structures requiring immediate intervention are identified, allowing for effective deployment of disaster response resources. By distinguishing among damage types, the proposed model supports the use of more advanced resource allocation strategies than those relying solely on simple damaged/undamaged classifications, thereby equipping emergency managers with actionable intelligence to coordinate response efforts.

5.4. Comparison with the Literature and Practical Applications

Compared to earlier studies on earthquake damage assessment, the 79.65% accuracy achieved in this study reflects a marked improvement. The use of a dataset containing 304,299 buildings also represents an unprecedented scale in this domain. The systematic evaluation of multiple algorithms under strict validation protocols enables objective performance comparison, an aspect previously limited by smaller datasets. Most prior studies have focused on a single algorithm or relatively small datasets, reducing their generalizability and limiting their practical utility. This performance aligns well with recent methodological advances, where Hariri-Ardebili and Sattar (2025) [56] achieved comparable accuracy using AutoML on building-specific structural parameters for 242 buildings from the same earthquake sequence. While their approach excelled in detailed vulnerability analysis with comprehensive structural inventories, our multi-station seismic approach demonstrates that similar accuracy can be achieved across substantially larger building populations using readily available seismic parameters. This comparison highlights complementary methodological paradigms serving different operational needs, with ensemble learning methods showing particular promise, as demonstrated by recent studies [57,58] and validated through our comprehensive algorithm evaluation.

The proposed method demonstrates immediate applicability in emergency response networks by enabling rapid post-earthquake damage assessment within hours, using only building coordinates and seismometer data. This capability can substantially enhance situational awareness and support more effective resource deployment during the critical post-earthquake window. Beyond emergency scenarios, the method is also applicable to future risk prediction, supporting scenario-based earthquake modeling, the development of building codes, and the prioritization of seismic retrofitting efforts.

5.5. Limitations and Future Directions

Several limitations constrain the scope and applicability of this study. The geographical specificity of the training data restricts the transferability of the model to regions with differing seismic characteristics or building typologies. Common seismic input metrics, such as PGA and distance, fail to fully capture the complexity of ground motion, including effects related to duration and frequency content. Moreover, the limited availability of detailed building inventories prevents the model from incorporating structural variations that substantially influence damage patterns. Additionally, the rapid visual inspection methodology, while standardized and conducted by trained engineers, may introduce observational uncertainties compared to detailed structural engineering evaluations. These assessments, although practical for large-scale surveys, may miss subtle structural damage or misclassify damage severity in complex structural configurations where visual indicators may not fully represent actual structural integrity.

The damage classification process, while conducted by trained engineers and technical staff following standardized protocols established by the Ministry of Environment, Urbanization and Climate Change, is subject to additional sources of potential bias and assessment uncertainty. Field-based damage evaluation can be influenced by subjective interpretation of damage severity boundaries, time constraints during large-scale emergency assessments, accessibility limitations in heavily damaged areas, variations in assessment team experience, and potential inconsistencies in applying standardized criteria across different teams and regions. Environmental conditions during post-earthquake assessments may also affect evaluation accuracy. These potential inaccuracies in ground truth labels could introduce noise in machine learning model training and validation processes, though the large dataset size (304,299 buildings) helps mitigate the impact of individual assessment variations on overall model performance.

The study focuses exclusively on immediate post-earthquake damage states without considering progressive damage evolution, aftershock effects, or cumulative structural deterioration over time. The temporal dynamics of damage progression and the influence of multiple seismic events on structural performance remain outside the scope of the current analysis.

Furthermore, while the ensemble methods demonstrate superior predictive accuracy, their computational complexity and inherently reduced interpretability present challenges for operational deployment in time-critical emergency response scenarios. The “black-box” nature of sophisticated ensemble models may limit their acceptance by emergency management agencies and regulatory bodies that require transparent decision-making processes for critical infrastructure applications.

To enhance generalizability, future research should expand the geographical coverage, integrate robust building inventories to support improved structural characterization, and develop physics-informed ML frameworks that merge empirical data with established seismic engineering principles. Combining real-time seismic data streams with autonomous damage assessment systems also presents a promising direction for operational deployment.