Artificial Intelligence in Human–Robot Collaboration in the Construction Industry: A Scoping Review

Abstract

1. Introduction

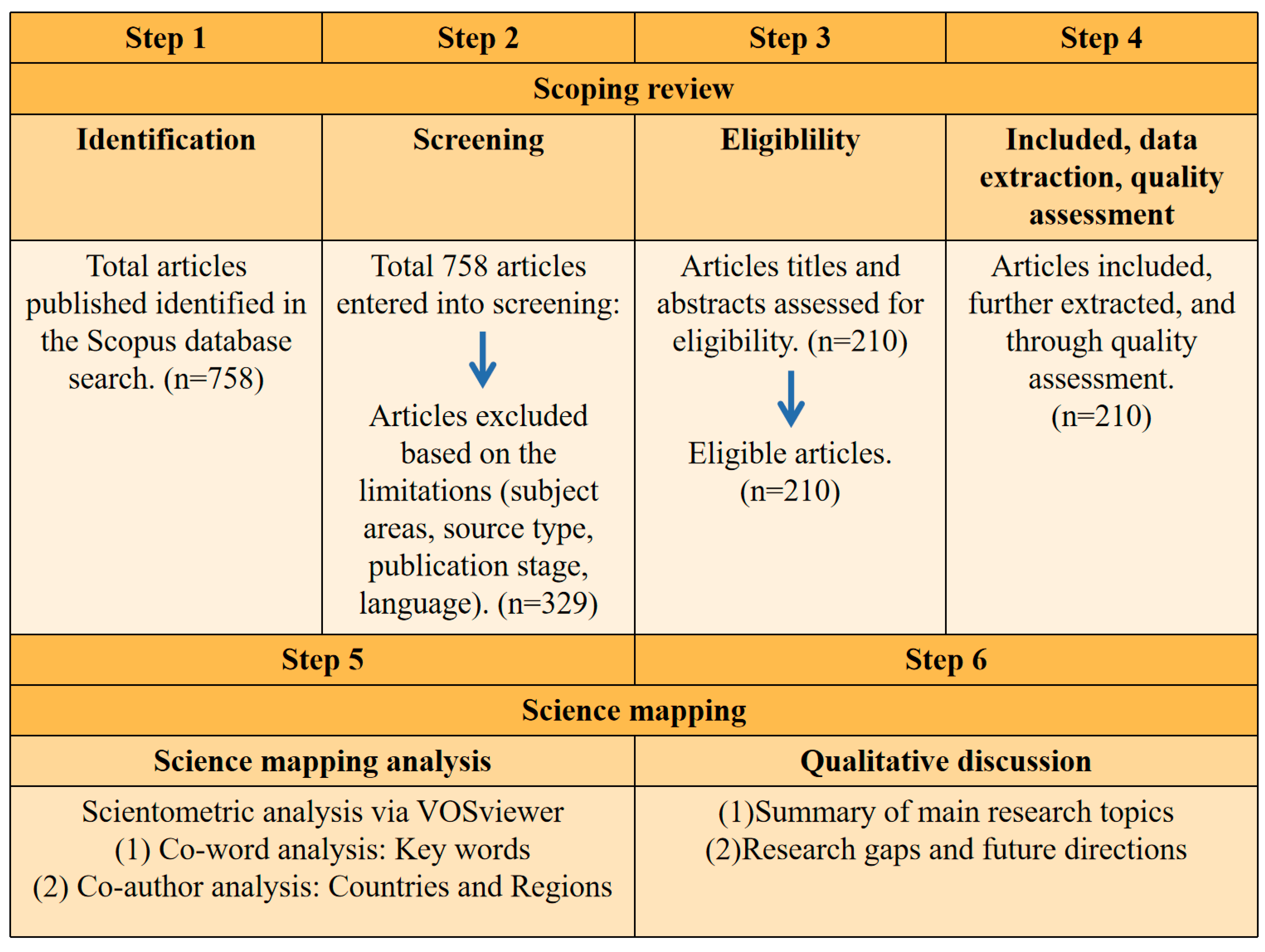

2. Research Methods

2.1. Search Strategy

2.2. Literature Screening

2.3. Selection Process

2.4. Included, Data Extraction, and Quality Assessment

2.5. Scientometric Analysis

2.6. Qualitative Discussion

3. Results

3.1. Annual Publication Trend of Articles

3.2. Keyword Co-Occurrence Analysis

- Cluster 1 (AI techniques in robotic systems): The development of AI techniques (e.g., DL, ML, and NLP) is an important driver of robotic systems. DL can effectively improve the performance of service robots [37]. ML and other AI techniques are crucial for robot gesture recognition, which facilitates communication between humans and robots [38]. Natural communication and language understanding play a significant role in the development of robots and human–machine communication [39]. For example, large language models (LLMs) are being used for voice communication to perform general tasks [40].

- Cluster 2 (Extended reality (XR) in HRC applications): Augmented reality (AR) and mixed reality (MR) are closely related technologies in the context of HRC. Employees can use glasses with MR and AR capabilities to respond appropriately to scanned components and perform necessary maintenance [41]. A classification visualization scheme based on MR and AR technology can reduce the cognitive burden on robots [42]. Integrating virtual reality (VR) and AR systems across perception, decision-making, and control can achieve real-time process optimization [43].

- Cluster 3 (HRC in construction safety): Safety is a key research concern of HRC. Mohammadi Amin et al. [44] developed a visual perception system that helps robots recognize human behavior and improves safety under dynamic changes. Different HRI models also increase the safety of autonomous vehicles and have a positive impact on road safety [45].

- Cluster 4 (Human perceptions in HRC applications): Trust between humans and robots is key to ensuring the safety of HRC. Summarizing trust factors and advanced trust models helps humans build trust in robots [46]. Participants’ trust in autonomous systems was reported to decrease significantly when real-world consequences were involved [47]. Zhang and Yang [48] found that robots with a physical human-like appearance were perceived as having lower levels of anthropomorphism and intelligence. Furthermore, the design of interaction functions on robots did not significantly increase these perceptions.
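The clusters above are derived from keyword co-occurrence, i.e., how often two keywords appear together in the same article. As a minimal sketch of that counting step only, the snippet below tallies unordered keyword pairs. The function name `keyword_cooccurrence` and the sample keyword lists are hypothetical and invented for illustration; they are not data from the reviewed articles, and dedicated scientometric tools additionally apply normalization and clustering that are not shown here.

```python
from collections import Counter
from itertools import combinations


def keyword_cooccurrence(records):
    """Count how often each unordered keyword pair appears in the same record."""
    pair_counts = Counter()
    for keywords in records:
        # Normalise case and drop duplicate keywords within one record.
        unique = sorted({k.strip().lower() for k in keywords})
        for a, b in combinations(unique, 2):
            pair_counts[(a, b)] += 1
    return pair_counts


# Hypothetical keyword lists, invented for illustration only.
records = [
    ["deep learning", "gesture recognition", "safety"],
    ["Deep Learning", "safety", "trust"],
    ["mixed reality", "augmented reality", "safety"],
]
counts = keyword_cooccurrence(records)
print(counts[("deep learning", "safety")])
```

Pairs are stored in alphabetical order, so a lookup must use the same ordering, e.g., `("deep learning", "safety")` rather than `("safety", "deep learning")`.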

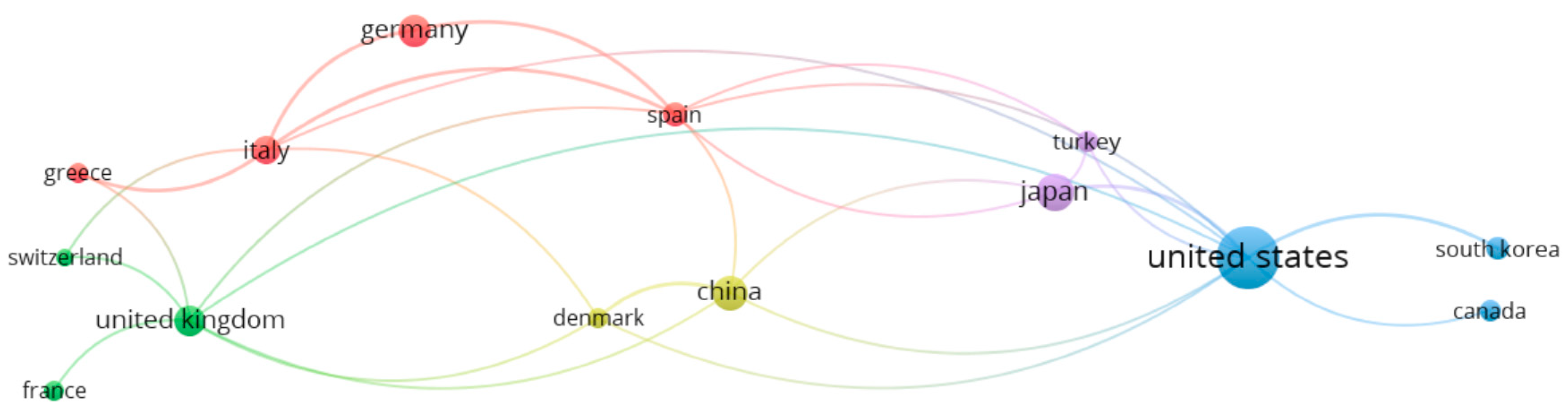

3.3. Countries/Regions Co-Occurrence Analysis

4. Discussion

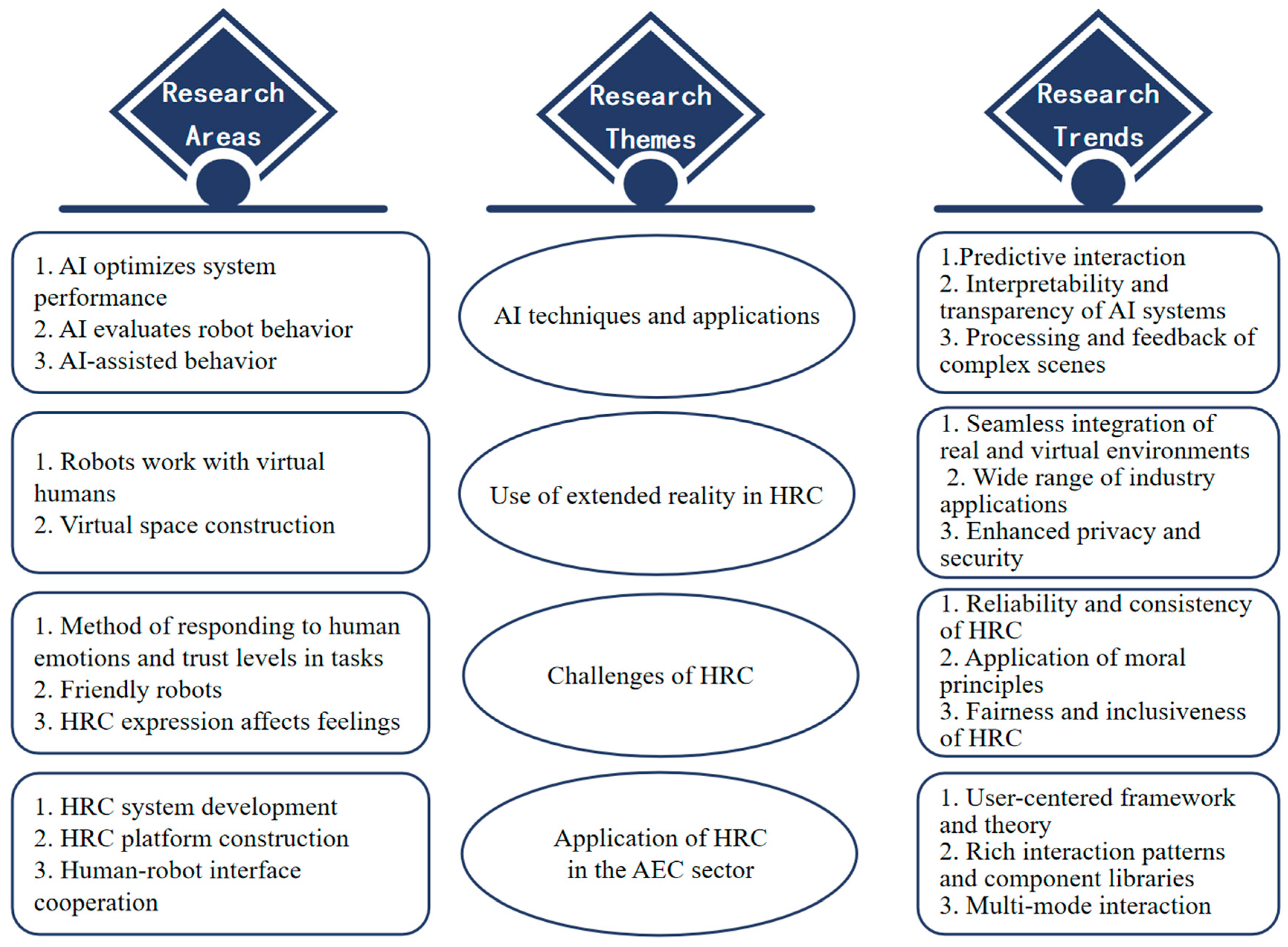

4.1. Mainstream Topics of Articles

4.1.1. AI Techniques and Applications

- 1. Machine learning (ML): ML is a major branch of AI techniques. It enables computers to make predictions or decisions about new data by learning patterns and features from data using algorithms. The most popular type of ML algorithm discussed in the articles is the neural network (NN). This computational technique, inspired by biological nervous systems, mimics how the human brain processes information. Of the 16 articles discussing ML, seven dealt with different types of NN, including traditional NNs and artificial neural networks (ANNs). For example, Zhang et al. [49] collected facial motions through a facial motion unit analyser to provide input data to a feedforward NN. Supervised ML algorithms (e.g., random forest (RF), support vector machine (SVM), and K-nearest neighbor (KNN)), reinforcement learning, and transfer learning were applied in previous HRC studies to develop HRI models or frameworks. For example, Sugiyama et al. [50] used an RF-based model to estimate the response obligation in human-computer multi-party dialogue. The experiment shows that, compared with traditional methods, this model reduces the false response rate to 17.3%, a decrease of 21% from the baseline, and increases the F1 score by 9.2%. Wang et al. [51] used KNN to build a real-time framework for human intention estimation and cooperative motion planning of robotic manipulators. Liu et al. [16] proposed a physiological HRC framework to enhance safety during construction tasks based on ML algorithms.

- 2. Deep learning (DL): DL is a subfield of ML that relies primarily on the structure of NNs. DL models can automatically learn complex representations from large datasets without the need to manually extract features. The most frequently discussed DL model in the included articles was the convolutional neural network (CNN). Other topics such as image semantic analysis, agent models, and active learning were also discussed. CNNs have strong learning and data processing abilities; therefore, they are mostly used to solve problems in complex situations or to improve interaction in HRC. For example, CNNs were used to improve the accuracy and real-time performance of gesture recognition in complex environments [52]. That study employed stacked convolutional layers and an improved bottleneck block for feature extraction. Compared to traditional models, its efficiency increased by 54%, and in the 3D space capture experiment, the success rate reached 98.5%. CNNs were also used to support HRI frameworks and improve the perception level of HRC [44]. Garcia et al. [53] developed a CNN-based HRC framework to sense and predict the collaborative workspace and human behavior captured from RGB camera data for decision-making in an assembly process. Among other DL models, Toda and Kubota [54] used spiking neural networks (SNNs) with high energy efficiency, combined with time series location data of people and objects, to estimate human behavior. Guinot et al. [55] used a variant of the long short-term memory (LSTM) recurrent neural network (RNN) model to analyze and learn subtle cues in human movement. The experiment showed success rates of 93% for the wiping task, 90% for the grasping and placing task, and 79% for the combined task. In industrial assembly-line settings, worker distraction could be reduced by 40%. Image semantic analysis enriched with knowledge-level spatial information was used to assist robots in making navigation choices [56] or in verbal and gesture interactions [57].

- 3. Natural language processing (NLP): NLP aims to enable computers to understand, interpret, and generate human language, covering techniques from basic string processing to complex semantic understanding and generation. In the included articles, NLP is mainly used as a foundational method to form a system or environment. Examples include natural, humanized conversation environments driven by NLP that embed dialogue strategies into the generation of human–machine dialogue [58], and agile integrated approaches to HMI and knowledge management [59]. The Transformer architecture is also widely applied in NLP. Schirmer et al. [60] integrated a Transformer-based NLP model for safety hazard identification during assembly steps through a decision-making process involving human safety experts. In recent years, LLMs have developed rapidly. Ranasinghe et al. [40] developed an LLM-based voice interaction framework that helps robots collaborate. Research based on GPT-4o has proposed a dual-agent LLM framework; compared with the traditional single-agent framework, its error detection rate increased by 93.5% and its task completion rate increased by 141.7%.

- 4. Fuzzy techniques: Fuzzy logic is a logic system that deals with uncertainty and fuzzy concepts. It is widely used in natural language description, in modeling uncertainty in human decision-making, and in modeling complex systems. For example, fuzzy logic was combined with robotics to propose a damping controller that improves the safety of HRC [61]. Under a sudden load increase, the speed overshoot decreased by 42%, and the power fluctuation range was reduced to ±2.3 W. However, a delay remains in high-speed tasks. Jiang and Wang [62] used a fuzzy logic controller to design a human decision-making model that captures the psychological effects of risk in tasks shared between humans and robots.
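To make the idea of a fuzzy damping controller more concrete, the following is a minimal sketch of a zero-order Sugeno-style fuzzy mapping from speed to a damping gain. It is not the controller of [61]: the input variable, the three linguistic terms, the membership breakpoints, and the gain constants are all assumptions chosen for illustration.

```python
def tri(x, a, b, c):
    """Triangular membership function with feet at a and c and peak at b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def fuzzy_damping(speed):
    """Map a speed (m/s, clamped to 0..1) to a damping gain (N*s/m)."""
    s = min(max(speed, 0.0), 1.0)
    # Membership degrees for three hypothetical linguistic terms.
    mu = {
        "slow": tri(s, -0.5, 0.0, 0.5),
        "medium": tri(s, 0.0, 0.5, 1.0),
        "fast": tri(s, 0.5, 1.0, 1.5),
    }
    # Illustrative rule consequents: faster motion -> more damping.
    gain = {"slow": 10.0, "medium": 30.0, "fast": 60.0}
    # Weighted-average defuzzification; the memberships always sum to 1
    # on the clamped range, so the denominator is never zero.
    total = sum(mu.values())
    return sum(mu[k] * gain[k] for k in mu) / total


for v in (0.0, 0.25, 0.5, 1.0):
    print(v, fuzzy_damping(v))
```

With these assumed terms, the gain rises smoothly between the rule outputs instead of switching abruptly, which is the property that makes fuzzy rules attractive for damping in shared human–robot tasks.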

4.1.2. The Use of Extended Reality (XR) in HRC

- 1. Augmented reality (AR): By superimposing virtual information onto the visual presentation of the real world, AR creates a rich and deep interactive environment for users. In the included articles, AR was combined with AI and other technologies for HRC applications [63] and to enhance the flexibility and adaptability of operating systems [64].

- 2. Virtual reality (VR): VR creates a completely virtual, computer-generated environment in which users are fully immersed via a head-mounted display (HMD). In the included articles, VR was mainly used to assist in the recognition of realistic robots [65] and to improve the operational capacity and efficiency of robots [66].

- 3. Mixed reality (MR): MR combines VR and AR: it not only superimposes virtual images on the user’s real environment but also allows them to interact with the real world. In the included articles, MR is mainly applied to assisted robot interaction [67] and the construction and verification of hybrid map frameworks [42]. An MR environment that integrates virtual and real elements can achieve bidirectional interactive control between physical robots and virtual avatars, ensuring the safety and traceability of multimodal interaction [43].

- 4. Symmetric reality (SR): SR is a relatively new concept in the field of XR. It explores a more balanced form of HRC that is dominated by neither the real world nor the virtual world, emphasizing balance and symmetry between the two realms. Among the included articles, only one study addresses SR, focusing on the analysis of task definition. In the SR application, the robot is seen as a virtual agent with the same status in the physical environment. As such, HRC in SR is a process of equivalent interaction between the virtual and physical environments [68].

4.1.3. Challenges of HRC

- 1. Trust: Trust is a key challenge affecting technology acceptance and the effectiveness of cooperation. Research on trust accounts for more than half of the 27 articles on the challenges of HRC. Trust in HRC is multifaceted, covering everything from design and emotion to generational differences and methodological considerations. In terms of design, the degree of trust that users form based on perception may affect the shape design of humanoid robots [48]. St-Onge et al. [69] designed, through experiments, a reverse interface that lets robots act as real controllers in HRC. For emotions, Guo et al. [70] explored how human trust in robots can be improved by designing robots to actively reason about and respond to human emotions and trust levels in shared tasks. Robb et al. [71] reported on different generations’ perceptions of robotics and AI, pointing out the subtle nature of trust across age groups. Parron et al. [72] developed a database to calculate and analyze the relationship between robot performance factors and human trust levels. Overall, research has shown that trust is a key component of the acceptance and effective use of AI and HRC systems, and further studies are needed.

- 2. Safety: Addressing safety issues protects workers’ lives and welfare, supports the implementation of technology, and safeguards project progress. Technological advances are important in ensuring the safety of modern production systems, with a focus on control safety measures and physical robotic assistance to protect human workers in complex manufacturing environments. Islam and Lughmani [73] investigated strategies to improve the safety of cyber-physical production systems (CPPS), highlighting the potential risks involved. Cen et al. [74] proposed a framework for mobile robotic arms designed to assist human workers, addressing a range of safety concerns by providing robotic support that can adapt to dynamic industrial environments. Beyond that, attempts to give robots human-like senses have proven effective. Perception modules based on vision and touch can measure the distance between humans and robots and adjust the speed of robots accordingly. Mohammadi Amin et al. [44] developed a vision-based system to detect intentional and accidental interactions between humans and robots, which can improve safety and the robot’s perception of human intentions. Islam et al. [75] developed a flexible connection decision-making framework for cyber-physical systems that can respond promptly to dynamic changes and withstand sudden dangers.

- 3. Ethics: Ethical issues are not only about the application of technology but also about social norms, principles, and values. Morality and ethics can affect the public’s trust in and acceptance of HRC. Consequently, several articles have explored various dimensions of ethics. For example, Komatsu [76] revealed the moral responsibility of AI developers and the public’s expectations of AI for ethical decision-making by comparing how people with different responsibilities in a task judge moral error. Lewis and Minior [77] discussed multiple forms of ethical deliberation based on ontology, which have played a role in studying the ethical and social implications of HRC. Metzler and Lewis [78] highlighted the role of personal morality and religious beliefs in shaping the social integration of robots. At the same time, many ethical challenges associated with AI remain. For instance, Dajani et al. [79] pointed out that in HRC, machines might obtain supervisory rights, leading to excessive collection of personal data and threatening privacy rights and autonomy. Algorithmic bias may be embedded in robot learning systems, leading to discriminatory decisions, and existing legal frameworks struggle to define liability clearly [80].

- 4. Fairness: The decisions, behaviors, and interactions of AI systems need to be fair to all users and free from bias and discrimination. Claure et al. [81] encouraged researchers to use existing theories, practices, and formalized equity indicators to promote fairness in HRC and transparency in AI. Londono et al. [80] proposed a framework for classifying the sources of bias and the resulting types of discrimination. They also studied scenarios of unfair outcomes and strategies for mitigating these situations.
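The Safety item above notes that perception modules can measure the human–robot distance and adjust the robot's speed. A minimal sketch of one such rule is given below, assuming a simple linear ramp between a stop distance and a full-speed distance. The function name `scaled_speed` and all threshold values are hypothetical; they are not taken from the cited studies or from any safety standard, and a real system would derive them from a risk assessment.

```python
def scaled_speed(distance_m, stop_dist=0.5, full_dist=2.0, max_speed=1.0):
    """Return an allowed robot speed (m/s) for a measured human-robot distance (m).

    All default thresholds are illustrative assumptions.
    """
    if distance_m <= stop_dist:
        # Human is too close: stop the robot.
        return 0.0
    if distance_m >= full_dist:
        # Human is far away: allow full speed.
        return max_speed
    # In between, ramp the speed linearly with distance.
    fraction = (distance_m - stop_dist) / (full_dist - stop_dist)
    return max_speed * fraction


for d in (0.3, 1.25, 3.0):
    print(d, scaled_speed(d))
```

A linear ramp is only one possible choice; it is used here because it keeps the speed continuous at both thresholds.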

4.1.4. Application of Human–Robot Collaboration (HRC) in Architecture, Engineering, and Construction (AEC) Sector

- 1. System: In HRC, the system refers to the overall computing environment where users interact, which can include hardware, software, user interfaces, and underlying supporting technologies. In the included articles, HRC-related systems show high diversity and depth, reflecting the interdisciplinary integration of this field. For example, Podpora et al. [82] designed a custom Internet of Things (IoT) subsystem to obtain information before the actual interaction occurs. Othman and Yang [83] outlined an HRC system to address the collaborative interaction requirements of humans and robots in intelligent manufacturing. Jaroonsorn et al. [84] proposed a robot control system for collaborative object-handling tasks. Liang et al. [85] proposed a digital twin (DT) framework that can simulate and optimize human–machine collaboration on building construction sites in real time. The digital twin-based system proposed by Lee et al. [86] enabled dynamic robots to rapidly perform adaptive task assignments in construction environments. Ryu et al. [87] combined bionics and dynamics to develop a humanoid robot system that enables robots to carry out more accurate path planning in complex built environments.

- 2. Framework: A framework typically refers to a guiding collection of theories, methods, and tools used to design, implement, and evaluate interactions between users and computer systems. The included articles can be categorized by the following types of frameworks. (1) Operation and control frameworks. Wang and Zhu [88] proposed a vision-based framework to capture and interpret the gestures of construction workers. (2) Cognitive and learning frameworks. Shukla et al. [89] developed a robot framework that uses graph data to encapsulate knowledge structure and realizes new task learning through knowledge transfer. (3) Theory and application development frameworks. Breazeal et al. [90] proposed a theoretical framework based on joint intention theory and cooperative discourse theory that allows humans to use speech, gesture, and expression cues to cooperate with humanoid robots to complete joint tasks. Peltason and Wrede [91] combined insights from dialogue modeling with software engineering requirements for robotic systems to propose a generalized framework that can be applied to new scenarios. Scibilia et al. [92] developed an ANN framework that helps robots predict human intentions during collaborative operations.

- 3. Platform: A platform usually refers to the hardware and/or software environment that supports the running of software applications. Platforms typically provide a set of tools, services, and standards that enable developers and users to build, run, and interact with the environment. In the included articles, platforms can be divided into the following types. (1) Robot platform: robotic solutions for assisting HRC and personal care [93]. (2) Digital cloud platform: a platform that communicates through a 5G network, transmits mechanical parameters and construction instructions of unmanned bulldozers, and achieves ultra-low-delay earth-moving monitoring [94]. (3) HRI platform: a platform that enables HRC in industrial tasks [58]. (4) Database: a resource for constructing a conversational corpus [95].

- 4. Interface: An HRC interface is a medium between a user and a computer or machine that facilitates effective communication and interaction. Some of the included articles focused on new HRC operating interfaces, such as user interfaces for autonomous vehicles [96] and intelligent human–machine interfaces that recognize human gestures and hand/arm movements [97]. Construction workers can use a manual interface to control the force that guides the robot when installing glass [98]. Other studies focus on technical aspects, such as fusing information on and around video windows [99].

- 5. Index: In the field of HRC, it is very important to evaluate the quality of robotic systems and interactions. Evaluation metrics help researchers and developers quantify and evaluate robot performance, the effectiveness of interactions, and user experience and satisfaction. The articles include research related to metric development [100,101], collation [102], and overview [103]. Park et al. [104] extended the TAM-IDT model to improve the understanding of architects’ intention to use HRC and characterized the roles of perceived usefulness and perceived ease of use.

- 6. Solution: Some of the included articles focused on technologies, methods, systems, or procedures designed to solve specific problems or needs, which are collectively referred to as solutions. These solutions include but are not limited to (1) HRC technologies and methods [41]; (2) robot control and intent expression (e.g., knowledge transfer and robot control [105]); (3) interactive perception and cognition (e.g., joint attention and non-verbal cues [106]); and (4) communication and comprehension (e.g., a communication-based discourse understanding model [107]). In the construction sector, collaborative robots are widely used to replace workers performing dangerous tasks. Xu et al. [108] used semi-autonomous robots for high-altitude drilling and ceiling drywall installation in project management.

4.2. Research Gaps of AI in HRC in Construction

4.2.1. Problems Related to AI Applications

- How can domain knowledge and human expertise be systematically integrated into AI models to compensate for limited training data?

- How can AI-driven robots achieve context-aware decision-making without constant human intervention?

- How can meta-learning strategies generalize robustness across diverse HRC scenarios without task-specific retraining?

- What standardized frameworks or modular designs would enable scalable, interoperable AI systems for HRC across industries?

4.2.2. Limitations of Extended Reality (XR)

- How can real-time risk-monitoring systems be designed to align with the flexibility of industrial XR applications?

- What hardware innovations (e.g., edge computing, neuromorphic chips) could overcome GPU bottlenecks in AR/VR devices?

- How should interdisciplinary guidelines (e.g., ethics-by-design for virtual agents) be structured to preempt emergent risks?

4.2.3. New Challenges for HRC

- What methodologies (e.g., synthetic data augmentation, active learning) can optimize sample efficiency while ensuring model generalizability across diverse HRC scenarios?

- How can modular design principles enable frameworks to evolve with emerging technologies (e.g., quantum computing, embodied AI)?

- What cross-cultural ethical benchmarks (e.g., region-specific value hierarchies) should guide HRC design to mitigate religious or ideological biases?

4.2.4. Research Gaps in HRC

- How can adaptive control algorithms be designed to dynamically balance speed and precision in human–robot collaborative environments?

- How should knowledge representation frameworks (e.g., ontologies, graph databases) be standardized to enable interoperability across diverse robotic systems?

- Where can domain-specific, ethically sourced datasets (e.g., construction sites, healthcare) be systematically curated to reflect real-world HRC complexities?

4.3. Future Research Directions for AI in HRC in Construction

- Improve future robots’ understanding of human intentions and needs through AI technologies (e.g., NLP and improved perception systems).

- Equip robots with more advanced learning algorithms to enable them to learn from experience and optimize their performance by allowing automatic adjustment of behavioral strategies in response to changing tasks and new operating environments.

- Integrate advanced security protocols and ethical decision-making frameworks for supervising robot behavior.

- Develop an interdisciplinary framework for HRC systems to help create humane, safer, and ethical cooperative robots that adapt to the rapidly changing construction environment.

- Implement strict ethical standards and review mechanisms to establish and improve the transparency, reliability, and consistency of HRC systems.

- Use XR technologies to simulate construction tasks for planning and training, problem identification, and optimization of HRC strategies.

- Use SR to promote the integration of physical and virtual space, and develop intelligent building systems that allow users to perceive and operate virtual elements in physical space, such as viewing building models through AR to facilitate planning and layout.

- Use wearable devices to monitor the user’s physiological state and the robot system in real time, so that the robot’s operation adapts to the user’s current health state and stress level.

- Integrate different types of technologies such as IoT, big data, and cloud computing to enable functions such as task management, data analytics, and resource optimization.

5. Conclusions

5.1. Contribution to Theory

5.2. Contribution to Practice

5.3. Limitations of the Study

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhang, M.; Xu, R.; Wu, H.; Pan, J.; Luo, X. Human–robot collaboration for on-site construction. Autom. Constr. 2023, 150, 104812.

- Bauer, A.; Wollherr, D.; Buss, M. Human–robot collaboration: A survey. Int. J. Humanoid Robot. 2008, 5, 47–66.

- Wei, H.H.; Zhang, Y.; Sun, X.; Chen, J.; Li, S. Intelligent robots and human-robot collaboration in the construction industry: A review. J. Intell. Constr. 2023, 1, 1–12.

- ec.europa.eu. (n.d.) Accidents at Work Statistics. Available online: https://ec.europa.eu/eurostat/statistics-explained/index.php?title=Accidents_at_work_statistics#Incidence_rates (accessed on 1 January 2020).

- Tomori, M.; Ogunseiju, O.; Nnaji, C. A Review of Human-robotics interactions in the construction industry. In Proceedings of the Construction Research Congress, Des Moines, IA, USA, 20–23 March 2024; pp. 903–912.

- Ahsan, S.; El-Hamalawi, A.; Bouchlaghem, D.; Ahmad, S. Mobile technologies for improved collaboration on construction sites. Archit. Eng. Des. Manag. 2007, 3, 257–272.

- Simmons, A.B.; Chappell, S.G. Artificial intelligence-definition and practice. IEEE J. Ocean. Eng. 1988, 13, 14–42.

- Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep learning for computer vision: A brief review. Comput. Intell. Neurosci. 2018, 2018, 7068349.

- Mirzaei, K.; Arashpour, M.; Asadi, E.; Masoumi, H.; Bai, Y.; Behnood, A. 3D point cloud data processing with machine learning for construction and infrastructure applications: A comprehensive review. Adv. Eng. Inform. 2022, 51, 101501.

- Fernandes, L.L.D.A.; Costa, D.B. A conceptual model for measuring the maturity of an Intelligent Construction Environment. Archit. Eng. Des. Manag. 2024, 20, 1403–1426.

- Li, Y.; Antwi-Afari, M.F.; Anwer, S.; Mehmood, I.; Umer, W.; Mohandes, S.R.; Wuni, I.Y.; Abdul-Rahman, M.; Li, H. Artificial intelligence in net-zero carbon emissions for sustainable building projects: A systematic literature and science mapping review. Buildings 2024, 14, 2752.

- Wang, J.; Antwi-Afari, M.F.; Tezel, A.; Antwi-Afari, P.; Kasim, T. Artificial Intelligence in Cloud Computing technology in the Construction industry: A bibliometric and systematic review. J. Inf. Technol. Constr. 2024, 29, 480–502.

- Zhang, X.; Antwi-Afari, M.F.; Zhang, Y.; Xing, X. The impact of artificial intelligence on organizational justice and project performance: A systematic literature and science mapping review. Buildings 2024, 14, 259.

- Ohri, K.; Kumar, M. Review on self-supervised image recognition using deep neural networks. Knowl.-Based Syst. 2021, 224, 107090.

- Hamilton, K.; Nayak, A.; Božić, B.; Longo, L. Is neuro-symbolic AI meeting its promises in natural language processing? A structured review. Semantic Web 2024, 15, 1265–1306.

- Liu, Y.; Habibnezhad, M.; Jebelli, H. Brainwave-driven human-robot collaboration in construction. Autom. Constr. 2021, 124, 103556.

- Attalla, A.; Attalla, O.; Moussa, A.; Shafique, D.; Raean, S.B.; Hegazy, T. Construction robotics: Review of intelligent features. Int. J. Intell. Robot. Appl. 2023, 7, 535–555.

- Ma, X.; Mao, C.; Liu, G. Can robots replace human beings? Assessment on the developmental potential of construction robot. J. Build. Eng. 2022, 56, 104727.

- Feng, C.; Xiao, Y.; Willette, A.; McGee, W.; Kamat, V.R. Vision guided autonomous robotic assembly and as-built scanning on unstructured construction sites. Autom. Constr. 2015, 59, 128–138.

- Zhang, R.; Lv, Q.; Li, J.; Bao, J.; Liu, T.; Liu, S. A reinforcement learning method for human-robot collaboration in assembly tasks. Robot. Comput.-Integr. Manuf. 2022, 73, 102227.

- Fu, Y.; Chen, J.; Lu, W. Human-robot collaboration for modular construction manufacturing: Review of academic research. Autom. Constr. 2024, 158, 105196.

- Rodrigues, P.B.; Singh, R.; Oytun, M.; Adami, P.; Woods, P.J.; Becerik-Gerber, B.; Soibelman, L.; Copur-Gencturk, Y.; Lucas, G.M. A multidimensional taxonomy for human-robot interaction in construction. Autom. Constr. 2023, 150, 104845.

- Marinelli, M. From industry 4.0 to construction 5.0: Exploring the path towards human–robot collaboration in construction. Systems 2023, 11, 152.

- Borboni, A.; Reddy, K.V.V.; Elamvazuthi, I.; AL-Quraishi, M.S.; Natarajan, E.; Azhar Ali, S.S. The expanding role of artificial intelligence in collaborative robots for industrial applications: A systematic review of recent works. Machines 2023, 11, 111.

- Pan, Y.; Zhang, L. Roles of artificial intelligence in construction engineering and management: A critical review and future trends. Autom. Constr. 2021, 122, 103517.

- Regona, M.; Yigitcanlar, T.; Xia, B.; Li, R.Y.M. Opportunities and adoption challenges of AI in the construction industry: A PRISMA review. J. Open Innov. Technol. Mark. Complex. 2022, 8, 45.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. BMJ 2009, 339, b2535.

- Katoch, R. IoT research in supply chain management and logistics: A bibliometric analysis using vosviewer software. Mater. Today Proc. 2022, 56, 2505–2515.

- Chadegani, A.A.; Salehi, H.; Yunus, M.M.; Farhadi, H.; Fooladi, M.; Farhadi, M.; Ebrahim, N.A. A comparison between two main academic literature collections: Web of Science and Scopus databases. arXiv 2013, arXiv:1305.0377.

- Zhang, F.; Xu, B.; Zeng, X.; Ding, K. Gesture-driven interaction service system for complex operations in digital twin manufacturing cells. J. Eng. Des. 2024.

- Lee, S.; Lee, S.; Park, H. Integration of Tracking, Re-Identification, and Gesture Recognition for Facilitating Human–Robot Interaction. Sensors 2024, 24, 4850. [Google Scholar] [CrossRef]

- Schewior, J.; Grefen, R.; Verde, R.; Ergardt, A.; Zhao, Y.; Kullmann, W.H. Speech-Controlled Robot Enabling Cognitive Training and Stimulation in Dementia Prevention for Severely Disabled People. Curr. Dir. Biomed. Eng. 2024, 10, 543–546. [Google Scholar] [CrossRef]

- Harrison, R.; Jones, B.; Gardner, P.; Lawton, R. Quality assessment with diverse studies (QuADS): An appraisal tool for methodological and reporting quality in systematic reviews of mixed-or multi-method studies. BMC Health Serv. Res. 2021, 21, 1–20. [Google Scholar] [CrossRef]

- Jiang, Q.; Antwi-Afari, M.F.; Fadaie, S.; Mi, H.Y.; Anwer, S.; Liu, J. Self-powered wearable Internet of Things sensors for human-machine interfaces: A systematic literature review and science mapping analysis. Nano Energy 2024, 131, 110252. [Google Scholar] [CrossRef]

- Van Eck, N.J.; Waltman, L. Visualizing bibliometric networks. In Measuring Scholarly Impact: Methods and Practice; Springer International Publishing: Cham, Switzerland, 2014; pp. 285–320. [Google Scholar] [CrossRef]

- Lu, M.; Antwi-Afari, M.F. A scientometric analysis and critical review of digital twin applications in project operation and maintenance. Eng. Constr. Archit. Manag. 2024. [Google Scholar] [CrossRef]

- Ma, Q.; Ma, T.; Lu, C.; Cheng, B.; Xie, S.; Gong, L.; Fu, Z.; Liu, C. A cloud-based quadruped service robot with multi-scene adaptability and various forms of human-robot interaction. IFAC-PapersOnLine 2020, 53, 134–139. [Google Scholar] [CrossRef]

- Chang, J.Y.; Tejero-de-Pablos, A.; Harada, T. Improved optical flow for gesture-based human-robot interaction. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 7983–7989. [Google Scholar] [CrossRef]

- Bamdale, R.; Sahay, S.; Khandekar, V. Natural human robot interaction using artificial intelligence: A survey. In Proceedings of the 2019 9th Annual Information Technology, Electromechanical Engineering and Microelectronics Conference (IEMECON), Jaipur, India, 13–15 March 2019; pp. 297–302. [Google Scholar] [CrossRef]

- Ranasinghe, N.; Mohammed, W.M.; Stefanidis, K.; Martinez Lastra, J.L. Large Language Models in Human-Robot Collaboration With Cognitive Validation Against Context-Induced Hallucinations. IEEE Access 2025, 13, 77418–77430. [Google Scholar] [CrossRef]

- Mueller, R.; Vette, M.; Masiak, T.; Duppe, B.; Schulz, A. Intelligent real time inspection of rivet quality supported by human-robot-collaboration. SAE Int. J. Adv. Curr. Pract. Mobil. 2019, 2, 811–817. [Google Scholar] [CrossRef]

- Das, A.; Kol, P.; Lundberg, C.; Doelling, K.; Sevil, H.E.; Lewis, F. A rapid situational awareness development framework for heterogeneous manned-unmanned teams. In Proceedings of the NAECON 2018-IEEE National Aerospace and Electronics Conference, Dayton, OH, USA, 23–26 July 2018; pp. 417–424. [Google Scholar] [CrossRef]

- Xie, J.; Liu, Y.; Wang, X.; Fang, S.; Liu, S. A new XR-based human-robot collaboration assembly system based on industrial metaverse. J. Manuf. Syst. 2024, 74, 949–964. [Google Scholar] [CrossRef]

- Mohammadi Amin, F.; Rezayati, M.; van de Venn, H.W.; Karimpour, H. A mixed-perception approach for safe human–robot collaboration in industrial automation. Sensors 2020, 20, 6347. [Google Scholar] [CrossRef]

- Mokhtarzadeh, A.A.; Yangqing, Z.J. Human-robot interaction and self-driving cars safety integration of dispositif networks. In Proceedings of the 2018 IEEE International Conference on Intelligence and Safety for Robotics (ISR), Shenyang, China, 24–27 August 2018; pp. 494–499. [Google Scholar] [CrossRef]

- Li, J.; Zhu, E.; Lin, W.; Yang, S.X.; Yang, S. A novel digital twins-driven mutual trust framework for human–robot collaborations. J. Manuf. Syst. 2025, 80, 948–962. [Google Scholar] [CrossRef]

- Pedersen, B.K.M.K.; Andersen, K.E.; Köslich, S.; Weigelin, B.C.; Kuusinen, K. Simulations and self-driving cars: A study of trust and consequences. In Proceedings of the Companion of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, New York, NY, USA, 5–8 March 2018; pp. 205–206. [Google Scholar] [CrossRef]

- Zhang, A.; Yang, Q. To be human-like or machine-like? An empirical research on user trust in AI applications in service industry. In Proceedings of the 2022 8th International Conference on Automation, Robotics and Applications (ICARA), Prague, Czech Republic, 18–20 February 2022; pp. 9–15. [Google Scholar] [CrossRef]

- Zhang, L.; Jiang, M.; Farid, D.; Hossain, M.A. Intelligent facial emotion recognition and semantic-based topic detection for a humanoid robot. Expert Syst. Appl. 2013, 40, 5160–5168. [Google Scholar] [CrossRef]

- Sugiyama, T.; Funakoshi, K.; Nakano, M.; Komatani, K. Estimating response obligation in multi-party human-robot dialogues. In Proceedings of the 2015 IEEE-RAS 15th International Conference on Humanoid Robots (Humanoids), Seoul, Republic of Korea, 3–5 November 2015; pp. 166–172. [Google Scholar] [CrossRef]

- Wang, Y.; Sheng, Y.; Wang, J.; Zhang, W. Human intention estimation with tactile sensors in human-robot collaboration. In Proceedings of the Dynamic Systems and Control Conference, Tysons, VA, USA, 11–13 October 2017; Volume 58288, p. V002T04A007. [Google Scholar] [CrossRef]

- Bose, S.R.; Kumar, V.S.; Sreekar, C. In-situ enhanced anchor-free deep CNN framework for a high-speed human-machine interaction. Eng. Appl. Artif. Intell. 2023, 126, 106980. [Google Scholar] [CrossRef]

- Garcia, P.P.; Santos, T.G.; Machado, M.A.; Mendes, N. Deep learning framework for controlling work sequence in collaborative human–robot assembly processes. Sensors 2023, 23, 553. [Google Scholar] [CrossRef]

- Toda, Y.; Kubota, N. Attention Allocation for Multi-modal Perception of Human-friendly Robot Partners. IFAC Proc. Vol. 2013, 46, 324–329. [Google Scholar] [CrossRef]

- Guinot, L.; Ando, K.; Takahashi, S.; Iwata, H. Analysis of implicit robot control methods for joint task execution. ROBOMECH J. 2023, 10, 12. [Google Scholar] [CrossRef]

- Halilovic, A.; Lindner, F. Visuo-textual explanations of a robot’s navigational choices. In Proceedings of the 2023 ACM/IEEE International Conference on Human-Robot Interaction, Stockholm, Sweden, 13–16 March 2023; pp. 531–535. [Google Scholar] [CrossRef]

- Matuszek, C.; Pronobis, A.; Zettlemoyer, L.; Fox, D. Combining world and interaction models for human-robot collaborations. In Proceedings of the Workshops at the 27th AAAI Conference on Artificial Intelligence (AAAI 2013), Bellevue, WA, USA, 14–18 July 2013; Volume 1415. [Google Scholar] [CrossRef]

- Li, C.; Park, J.; Kim, H.; Chrysostomou, D. How can I help you? An intelligent virtual assistant for industrial robots. In Proceedings of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, Boulder, CO, USA, 8–11 March 2021; pp. 220–224. [Google Scholar] [CrossRef]

- Preece, A.; Braines, D. Conversational services for multi-agency situational understanding. In Proceedings of the 2017 AAAI Fall Symposium, Arlington, VA, USA, 9–11 November 2017; Volume FS-17-01, pp. 179–186, ISBN 978-157735794-0. [Google Scholar]

- Schirmer, F.; Kranz, P.; Manjunath, M.; Raja, J.J.; Rose, C.G.; Kaupp, T.; Daun, M. Towards a conceptual safety planning framework for human-robot collaboration. In Proceedings of the 42nd International Conference on Conceptual Modeling: ER Forum, 7th Symposium on Conceptual Modeling Education, SCME 2023, Lisbon, Portugal, 17 November 2023; Volume 3618. [Google Scholar]

- Van Toan, N.; Kim, J.J.; Kim, K.G.; Lee, W.; Kang, S. Application of fuzzy logic to damping controller for safe human-robot interaction. In Proceedings of the 2017 14th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Jeju, Republic of Korea, 28 June–1 July 2017; pp. 109–113. [Google Scholar] [CrossRef]

- Jiang, L.; Wang, Y. A personalized computational model for human-like automated decision-making. IEEE Trans. Autom. Sci. Eng. 2021, 19, 850–863. [Google Scholar] [CrossRef]

- Chu, C.H.; Liu, Y.L. Augmented reality user interface design and experimental evaluation for human-robot collaborative assembly. J. Manuf. Syst. 2023, 68, 313–324. [Google Scholar] [CrossRef]

- Umbrico, A.; Orlandini, A.; Cesta, A.; Faroni, M.; Beschi, M.; Pedrocchi, N.; Scala, A.; Tavormina, P.; Koukas, S.; Zalonis, A.; et al. Design of advanced human–robot collaborative cells for personalized human–robot collaborations. Appl. Sci. 2022, 12, 6839. [Google Scholar] [CrossRef]

- Aschenbrenner, D.; Van Tol, D.; Rusak, Z.; Werker, C. Using virtual reality for scenario-based responsible research and innovation approach for human robot co-production. In Proceedings of the 2020 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), Utrecht, The Netherlands, 14–18 December 2020; pp. 146–150. [Google Scholar] [CrossRef]

- Rahman, S.M. An IoT-based common platform integrating robots and virtual characters for high performance and cybersecurity. In Proceedings of the 2019 SoutheastCon, Huntsville, AL, USA, 11–14 April 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Park, K.B.; Choi, S.H.; Moon, H.; Lee, J.Y.; Ghasemi, Y.; Jeong, H. Indirect robot manipulation using eye gazing and head movement for future of work in mixed reality. In Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Christchurch, New Zealand, 12–16 March 2022; pp. 483–484. [Google Scholar] [CrossRef]

- Zhang, Z.; Wang, X. Machine intelligence matters: Rethink human-robot collaboration based on symmetrical reality. In Proceedings of the 2020 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Recife, Brazil, 9–13 November 2020; pp. 225–228. [Google Scholar] [CrossRef]

- St-Onge, D.; Reeves, N.; Petkova, N. Robot-human interaction: A human speaker experiment. In Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; pp. 30–38. [Google Scholar] [CrossRef]

- Guo, T.; Obidat, O.; Rodriguez, L.; Parron, J.; Wang, W. Reasoning the trust of humans in robots through physiological biometrics in human-robot collaborative contexts. In Proceedings of the 2022 IEEE MIT Undergraduate Research Technology Conference (URTC), Cambridge, MA, USA, 30 September–2 October 2022; pp. 1–6. [Google Scholar] [CrossRef]

- Robb, D.A.; Ahmad, M.I.; Tiseo, C.; Aracri, S.; McConnell, A.C.; Page, V.; Dondrup, C.; Chiyah Garcia, F.J.; Nguyen, H.N.; Pairet, È.; et al. Robots in the danger zone: Exploring public perception through engagement. In Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, UK, 23–26 March 2020; pp. 93–102. [Google Scholar] [CrossRef]

- Parron, J.; Li, R.; Wang, W.; Zhou, M. Characterization of Human Trust in Robot through Multimodal Physical and Physiological Biometrics in Human-Robot Partnerships. In Proceedings of the 2024 IEEE 20th International Conference on Automation Science and Engineering (CASE), Bari, Italy, 28 August–1 September 2024; pp. 2901–2906. [Google Scholar] [CrossRef]

- Islam, S.O.B.; Lughmani, W.A. A connective framework for social collaborative robotic system. Machines 2022, 10, 1086. [Google Scholar] [CrossRef]

- Cen, D.; Sibona, F.; Indri, M. A framework for safe and intuitive human-robot interaction for assistant robotics. In Proceedings of the 2022 IEEE 27th International Conference on Emerging Technologies and Factory Automation (ETFA), Stuttgart, Germany, 6–9 September 2022. [Google Scholar] [CrossRef]

- Islam, S.O.B.; Lughmani, W.A.; Qureshi, W.S.; Khalid, A. A connective framework to minimize the anxiety of collaborative Cyber-Physical System. Int. J. Comput. Integr. Manuf. 2024, 37, 454–472. [Google Scholar] [CrossRef]

- Komatsu, T. Owners of artificial intelligence systems are more easily blamed compared with system designers in moral dilemma tasks. In Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; pp. 169–170. [Google Scholar] [CrossRef]

- Lewis, L.; Minor, D. Considerations of moral ontology and reasoning in human-robot interaction. In Proceedings of the 2008 AAAI Workshop, Chicago, IL, USA, 13–14 July 2008; Volume WS-08-05, pp. 11–14. [Google Scholar]

- Metzler, T.; Lewis, L. Ethical views, religious views, and acceptance of robotic applications: A pilot study. In Proceedings of the 2008 AAAI Workshop, Chicago, IL, USA, 13–14 July 2008; Volume WS-08-05, pp. 15–22. [Google Scholar]

- Dajani, L.; Ferraro, A.; Maruyama, F.; Cheng, Y. Human Rights and Ethics Guiding Human-Machine Teaming. In Proceedings of the 2024 IEEE Global Humanitarian Technology Conference (GHTC), Radnor, PA, USA, 23–26 October 2024; pp. 254–261. [Google Scholar] [CrossRef]

- Londoño, L.; Hurtado, J.V.; Hertz, N.; Kellmeyer, P.; Voeneky, S.; Valada, A. Fairness and Bias in Robot Learning. Proc. IEEE 2024, 112, 305–330. [Google Scholar] [CrossRef]

- Claure, H.; Chang, M.L.; Kim, S.; Omeiza, D.; Brandao, M.; Lee, M.K.; Jung, M. Fairness and transparency in human-robot interaction. In Proceedings of the 2022 17th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Sapporo, Japan, 7–10 March 2022; pp. 1244–1246. [Google Scholar] [CrossRef]

- Podpora, M.; Gardecki, A.; Beniak, R.; Klin, B.; Vicario, J.L.; Kawala-Sterniuk, A. Human interaction smart subsystem—Extending speech-based human-robot interaction systems with an implementation of external smart sensors. Sensors 2020, 20, 2376. [Google Scholar] [CrossRef]

- Othman, U.; Yang, E. An overview of human-robot collaboration in smart manufacturing. In Proceedings of the 2022 27th International Conference on Automation and Computing (ICAC), Bristol, UK, 1–3 September 2022; pp. 1–6. [Google Scholar] [CrossRef]

- Jaroonsorn, P.; Neranon, P.; Dechwayukul, C.; Smithmaitrie, P. Performance comparison of compliance control based on PI and FLC for safe human-robot cooperative object carrying. In Proceedings of the 2019 First International Symposium on Instrumentation, Control, Artificial Intelligence, and Robotics (ICA-SYMP), Bangkok, Thailand, 16–18 January 2019; pp. 13–16. [Google Scholar] [CrossRef]

- Liang, C.J.; McGee, W.; Menassa, C.C.; Kamat, V.R. Real-time state synchronization between physical construction robots and process-level digital twins. Constr. Robot. 2022, 6, 57–73. [Google Scholar] [CrossRef]

- Lee, D.; Lee, S.; Masoud, N.; Krishnan, M.S.; Li, V.C. Digital twin-driven deep reinforcement learning for adaptive task allocation in robotic construction. Adv. Eng. Inform. 2022, 53, 101710. [Google Scholar] [CrossRef]

- Ryu, S.H.; Kang, Y.; Kim, S.J.; Lee, K.; You, B.J.; Doh, N.L. Humanoid path planning from HRI perspective: A scalable approach via waypoints with a time index. IEEE Trans. Cybern. 2012, 43, 217–229. [Google Scholar] [CrossRef] [PubMed]

- Wang, X.; Zhu, Z. Vision-based framework for automatic interpretation of construction workers’ hand gestures. Autom. Constr. 2021, 130, 103872. [Google Scholar] [CrossRef]

- Shukla, N.; Xiong, C.; Zhu, S.-C. A unified framework for human-robot knowledge transfer. In Proceedings of the 2015 AAAI Fall Symposium, Arlington, VA, USA, 12–14 November 2015; Volume FS-15. [Google Scholar]

- Breazeal, C.; Brooks, A.; Chilongo, D.; Gray, J.; Hoffman, G.; Kidd, C.; Lee, H.; Lieberman, J.; Lockerd, A. Working collaboratively with humanoid robots. In Proceedings of the 4th IEEE/RAS International Conference on Humanoid Robots, Santa Monica, CA, USA, 10–12 November 2004; Volume 1, pp. 253–272. [Google Scholar] [CrossRef]

- Peltason, J.; Wrede, B. Modeling human-robot interaction based on generic interaction patterns. In Proceedings of the 2010 AAAI Fall Symposium, Arlington, VA, USA, 11–13 November 2010; Volume FS-10-05, pp. 80–85. [Google Scholar]

- Scibilia, A.; Pedrocchi, N.; Fortuna, L. A Nonlinear Modeling Framework for Force Estimation in Human-Robot Interaction. IEEE Access 2024, 12, 97257–97268. [Google Scholar] [CrossRef]

- Mišeikis, J.; Caroni, P.; Duchamp, P.; Gasser, A.; Marko, R.; Mišeikienė, N.; Zwilling, F.; De Castelbajac, C.; Eicher, L.; Früh, M.; et al. Lio-a personal robot assistant for human-robot interaction and care applications. IEEE Robot. Autom. Lett. 2020, 5, 5339–5346. [Google Scholar] [CrossRef]

- You, K.; Ding, L.; Zhou, C.; Dou, Q.; Wang, X.; Hu, B. 5G-based earthwork monitoring system for an unmanned bulldozer. Autom. Constr. 2021, 131, 103891. [Google Scholar] [CrossRef]

- Cruz-Sandoval, D.; Eyssel, F.; Favela, J.; Sandoval, E.B. Towards a conversational corpus for human-robot conversations. In Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; pp. 99–100. [Google Scholar] [CrossRef]

- Eom, H.; Lee, S.H. Mode confusion of human–machine interfaces for automated vehicles. J. Comput. Des. Eng. 2022, 9, 1995–2009. [Google Scholar] [CrossRef]

- Alsayegh, O.A.; Brzakovic, D.P. Guidance of video data acquisition by myoelectric signals for smart human-robot interfaces. In Proceedings of the 1998 IEEE International Conference on Robotics and Automation (Cat. No. 98CH36146), Leuven, Belgium, 20 May 1998; Volume 4, pp. 3179–3185. [Google Scholar] [CrossRef]

- Gil, M.S.; Kang, M.S.; Lee, S.; Lee, H.D.; Shin, K.S.; Lee, J.Y.; Han, C.S. Installation of heavy duty glass using an intuitive manipulation device. Autom. Constr. 2013, 35, 579–586. [Google Scholar] [CrossRef]

- Baker, M.; Casey, R.; Keyes, B.; Yanco, H.A. Improved interfaces for human-robot interaction in urban search and rescue. In Proceedings of the 2004 IEEE International Conference on Systems, Man and Cybernetics (IEEE Cat. No. 04CH37583), The Hague, The Netherlands, 10–13 October 2004; Volume 3, pp. 2960–2965. [Google Scholar] [CrossRef]

- Marathe, A.R.; Lance, B.J.; McDowell, K.; Nothwang, W.D.; Metcalfe, J.S. Confidence metrics improve human-autonomy integration. In Proceedings of the 2014 ACM/IEEE International Conference on Human-Robot Interaction, Bielefeld, Germany, 3–6 March 2014; pp. 240–241. [Google Scholar] [CrossRef]

- Bensch, S.; Jevtic, A.; Hellström, T. On interaction quality in human-robot interaction. In Proceedings of the 9th International Conference on Agents and Artificial Intelligence (ICAART 2017), Porto, Portugal, 24–26 February 2017; Volume 1, pp. 182–189. [Google Scholar] [CrossRef]

- Hoffman, G. Evaluating fluency in human–robot collaboration. IEEE Trans. Hum.-Mach. Syst. 2019, 49, 209–218. [Google Scholar] [CrossRef]

- Burghart, C.; Mikut, R.; Holzapfel, H. Cognition-oriented building blocks of future benchmark scenarios for humanoid home robots. In Proceedings of the IEEE International Conference on Humanoid Robots, Vancouver, BC, Canada, 22–23 July 2007; pp. 1–10. [Google Scholar]

- Park, S.; Yu, H.; Menassa, C.C.; Kamat, V.R. A comprehensive evaluation of factors influencing acceptance of robotic assistants in field construction work. J. Manag. Eng. 2023, 39, 04023010. [Google Scholar] [CrossRef]

- Roveda, L.; Veerappan, P.; Maccarini, M.; Bucca, G.; Ajoudani, A.; Piga, D. A human-centric framework for robotic task learning and optimization. J. Manuf. Syst. 2023, 67, 68–79. [Google Scholar] [CrossRef]

- Yucel, Z.; Salah, A.A.; Mericli, C.; Mericli, T.; Valenti, R.; Gevers, T. Joint Attention by Gaze Interpolation and Saliency. IEEE Trans. Cybern. 2013, 43, 829–842. [Google Scholar] [CrossRef]

- Ono, T.; Imai, M.; Nakatsu, R. Reading a robot’s mind: A model of utterance understanding based on the theory of mind mechanism. Adv. Robot. 2000, 14, 311–326. [Google Scholar] [CrossRef]

- Xu, X.; Holgate, T.; Coban, P.; García de Soto, B. Implementation of a robotic system for overhead drilling operations: A case study of the Jaibot in the UAE. Int. J. Autom. Digit. Transform. 2022, 1, 37–58. [Google Scholar] [CrossRef]

- Peng, H.; Shi, N.; Wang, G. Remote sensing traffic scene retrieval based on learning control algorithm for robot multimodal sensing information fusion and human-machine interaction and collaboration. Front. Neurorobot. 2023, 17, 1267231. [Google Scholar] [CrossRef] [PubMed]

- Ding, Q.; Zhang, E.; Liu, Z.; Yao, X.; Pan, G. Text-Guided Object Detection Accuracy Enhancement Method Based on Improved YOLO-World. Electronics 2024, 14, 133. [Google Scholar] [CrossRef]

- Uğuzlar, U.; Cansu, E.; Contarlı, E.C.; Sezer, V. Autonomous human following robot based on follow the gap method. In Proceedings of the 2023 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Tomar, Portugal, 26–27 April 2023; pp. 139–144. [Google Scholar] [CrossRef]

- Li, X.; Koren, Y.; Epureanu, B.I. Complementary learning-team machines to enlighten and exploit human expertise. CIRP Ann. 2022, 71, 417–420. [Google Scholar] [CrossRef]

- Ye, Y.; You, H.; Du, J. Improved trust in human-robot collaboration with ChatGPT. IEEE Access 2023, 11, 55748–55754. [Google Scholar] [CrossRef]

- Gualtieri, L.; Fraboni, F.; Brendel, H.; Pietrantoni, L.; Vidoni, R.; Dallasega, P. Updating design guidelines for cognitive ergonomics in human-centred collaborative robotics applications: An expert survey. Appl. Ergon. 2024, 117, 104246. [Google Scholar] [CrossRef] [PubMed]

- Val-Calvo, M.; Álvarez-Sánchez, J.R.; Ferrández-Vicente, J.M.; Fernández, E. Affective robot story-telling human-robot interaction: Exploratory real-time emotion estimation analysis using facial expressions and physiological signals. IEEE Access 2020, 8, 134051–134066. [Google Scholar] [CrossRef]

- Castro, A.; Silva, F.; Santos, V. Trends of human-robot collaboration in industry contexts: Handover, learning, and metrics. Sensors 2021, 21, 4113. [Google Scholar] [CrossRef]

- Li, C.; Chrysostomou, D.; Zhang, X.; Yang, H. IRWoZ: Constructing an industrial robot wizard-of-OZ dialoguing dataset. IEEE Access 2023, 11, 28236–28251. [Google Scholar] [CrossRef]

- Gkournelos, C.; Konstantinou, C.; Angelakis, P.; Tzavara, E.; Makris, S. Praxis: A framework for AI-driven human action recognition in assembly. J. Intell. Manuf. 2023, 35, 3697–3711. [Google Scholar] [CrossRef]

- Sidner, C.L.; Lee, C. Engagement rules for human-robot collaborative interactions. In Proceedings of the 2003 IEEE International Conference on Systems, Man and Cybernetics (SMC’03): System Security and Assurance (Cat. No. 03CH37483), Washington, DC, USA, 8 October 2003; Volume 4, pp. 3957–3962. [Google Scholar] [CrossRef]

- Al-Hamouz, S.O.; El-Omari, N.K.T.; Al-Naimat, A.M. An ISO compliant safety system for human workers in human-robot interaction work environment. In Proceedings of the 2019 12th International Conference on Developments in eSystems Engineering (DeSE), Kazan, Russia, 7–10 October 2019. [Google Scholar] [CrossRef]

| Keywords | Cluster | Occurrences | Average Publication Year | Links | Average Citations | Average Normalized Citations | Total Link Strength |
|---|---|---|---|---|---|---|---|
| Artificial intelligence | 1 | 32 | 2020 | 13 | 17.25 | 1.41 | 51 |
| Human–robot interaction | 3 | 55 | 2018 | 15 | 19.44 | 1.08 | 45 |
| Intelligent robots | 4 | 15 | 2023 | 14 | 24.33 | 1.43 | 38 |
| Collaborative robots | 4 | 12 | 2024 | 9 | 2.83 | 0.58 | 23 |
| Industrial robots | 4 | 8 | 2024 | 11 | 0.38 | 0.15 | 23 |
| Robot learning | 1 | 5 | 2025 | 12 | 2 | 0.50 | 19 |
| Machine learning | 1 | 14 | 2020 | 10 | 5 | 0.41 | 19 |
| Large language model | 1 | 6 | 2024 | 12 | 6.33 | 1.40 | 17 |
| Robotics | 1 | 11 | 2019 | 8 | 8.73 | 1.37 | 17 |
| Virtual reality | 2 | 7 | 2023 | 8 | 11.43 | 1.15 | 15 |
| Smart manufacturing | 1 | 6 | 2024 | 11 | 9.83 | 1.95 | 14 |
| Trust | 3 | 7 | 2021 | 7 | 3.29 | 0.79 | 13 |
| Social robots | 1 | 6 | 2019 | 8 | 9.33 | 0.46 | 13 |
| Augmented reality | 2 | 7 | 2022 | 7 | 15.71 | 1.95 | 10 |
| Computer vision | 1 | 5 | 2024 | 7 | 3 | 0.56 | 7 |

| Country | Documents | Citations | Normalized Citations | Total Link Strength |
|---|---|---|---|---|
| United States | 66 | 1397 | 73.59 | 11 |
| Italy | 13 | 65 | 12.57 | 9 |
| Spain | 9 | 124 | 8.66 | 9 |
| United Kingdom | 15 | 160 | 10.15 | 7 |
| China | 20 | 80 | 20.39 | 6 |
| Japan | 23 | 273 | 14.26 | 5 |
| Denmark | 6 | 41 | 6.17 | 5 |
| Germany | 17 | 170 | 10.07 | 4 |
| Greece | 6 | 55 | 7.76 | 3 |
| Turkey | 7 | 68 | 3.90 | 3 |
| Switzerland | 5 | 204 | 11.15 | 2 |
| South Korea | 8 | 92 | 9.06 | 2 |
| France | 6 | 79 | 3.27 | 1 |
| Canada | 7 | 50 | 5.11 | 1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Peng, B.; Antwi-Afari, M.F.; Manzoor, B.; Boateng, E.; Antwi Afari, E.N.; Wu, Z. Artificial Intelligence in Human–Robot Collaboration in the Construction Industry: A Scoping Review. Buildings 2025, 15, 3060. https://doi.org/10.3390/buildings15173060
