1. Introduction

In the architecture, engineering, and construction (AEC) industry, project performance is commonly evaluated during the construction phase, with limited emphasis on the design phase [1]. However, the design phase plays a crucial role in determining a project’s success, as early decisions significantly influence cost, quality, and time outcomes [2,3]. Despite this, performance measurement systems tend to prioritize post-design stages, leaving a methodological gap in how design processes are evaluated [4]. Several studies have developed performance measurement frameworks, benchmarks, and metrics focused on construction execution. Notably, the works by Mellado [5] and Chan and Chan [6] emphasize construction-phase indicators, while other researchers have proposed general approaches to project evaluation. Yet comprehensive systems that assess design performance, particularly in high-rise building projects, remain underdeveloped. High-rise building design is constrained by vertical transportation cores, amplified wind loads, progressive floor-cycle schedules, dense trade interfaces, and strict safety codes. These factors create tight feedback loops in which late design conflicts cascade into costly rework and critical-path delays; hence, early, quantitative performance feedback is essential for timely corrective action.

A few exceptions exist: Hapanova and Al-Jibouri [4,7] highlighted the importance of key performance indicators (KPIs) for stakeholder management in early project phases. Similarly, Belsvik [8] and Kristensen et al. [9] explored metrics for managing the building design process. These contributions point to the relevance of performance evaluation in design but also underscore the lack of standardized systems tailored to high-rise projects. In particular, no validated, indicator-based dashboard currently exists to continuously track cost, schedule, quality, planning, and people-related performance during the design of tall buildings, despite evidence that design decisions determine up to 80% of life-cycle cost.

This study aims to address this gap by developing a performance evaluation system specifically for the design phase of high-rise building projects. The system is based on a set of validated performance indicators, calculation protocols, and a user-friendly data collection and visualization platform. By focusing on early-phase performance monitoring, the proposed framework is intended to improve project outcomes and support informed decision-making among AEC professionals. This study addresses two research questions: (1) which KPIs do experts perceive as critical for monitoring the design phase of high-rise building projects, and (2) how accurately does the proposed KPI dashboard detect cost and time deviations of ≥10% during a simulated tall-building design? Theoretically, this study extends the performance management literature by operationalizing 21 rigorously validated KPIs for the design stage and empirically testing their aggregation in a simulation. Practically, it delivers an open-format Excel dashboard that enables design teams to detect planning, cost, time, quality, and people-related issues.

2. Background

The literature on performance measurement in construction projects emphasizes the predominance of indicators related to the execution phase, particularly cost, time, and quality outcomes. Neely et al. [10] and Takim et al. [11] reviewed various performance measurement systems, noting that most are result-oriented and lack applicability to earlier project phases. These systems are typically retrospective, offering limited support for proactive management during the design process.

More recent studies have attempted to address this limitation. Hapanova and Al-Jibouri [4,7] proposed KPIs for evaluating the stakeholder engagement process before project execution. Their findings underscore the need for process-based indicators that enable real-time monitoring and improvement. Kristensen et al. [9] developed a framework for managing building design performance, proposing communication, collaboration, and task efficiency metrics. Similarly, Belsvik et al. [8] emphasized the importance of integrating performance evaluation tools into virtual design and construction (VDC) environments. Herrera et al. [12] proposed KPIs for three moments (during the design process, at the end of design, and during construction) to evaluate design performance.

In high-rise buildings, limited research exists on performance evaluation systems tailored to the complexities and requirements of vertical design processes. Studies such as those by Yana and Yoctún [13] and Morales et al. [14] examine design inefficiencies using BIM and VDC methodologies but stop short of proposing systematic evaluation frameworks. Aravena et al. [15] identify specific design phases in high-rise projects (preliminary, basic, and detailed), suggesting a potential structure for indicator application.

Furthermore, the development of KPIs and their calculation protocols remains fragmented. Parmeter [16] offers general guidance for indicator construction, but such general models are rarely adapted to the AEC industry. Governmental manuals provide additional data collection and validation frameworks but are seldom applied to project design in construction.

Table 1 summarizes indicators reported in peer-reviewed studies. Collectively, these works confirm three trends: a shift toward process-oriented metrics, the adoption of Lean or BIM approaches, and the use of cloud-based common data environments (CDEs). Given well-documented challenges (data availability and validity, decentralized measurement, and ad hoc indicator selection), the KPI list in Table 1 is justified through a two-source logic:

Evidence-based anchoring: retain indicators consistently reported across peer-reviewed design-phase studies (e.g., PPC, AT, TP, DL, RFI, rework/cost/time deviations) and Lean-BIM/VDC syntheses [17,18].

Implementability filters: prioritize KPIs that can be automated or semi-automated from BIM/CDE/LPS data structures to mitigate collection burden and enhance auditability, following CDE good practices and usage-level evidence from cross-project studies [19,20].

This logic aligns indicator choice with (a) demonstrated relevance in recent literature and (b) the practical realities of data pipelines in BIM/VDC environments.

Table 1.

Design performance indicators.


| Indicator | Description | References |
|---|---|---|
| Percentage of Plan Completed (PPC) | Percentage of completed tasks in relation to those assigned. | [8,9,13,21,22] |
| Anticipated Tasks (AT) | Percentage of tasks planned for the following week that were included in the previous week’s anticipation plan. | [8,22,23] |
| Tasks Prepared (TP) | Ratio between the tasks that CAN be executed and the total planned tasks. | [8,22,24] |
| Rework Cost (RC) | Percentage of rework costs relative to the total design cost of the project. | [25,26,27] |
| Cost Deviation (CD) | Ratio between actual total design cost and the planned cost. | [2,28,29,30,31] |
| Design Development Efficiency (DDE) | Ratio between design income and the cost of design development. | [1,32] |
| Coordination Efficiency (CE) | Ratio between coordination hours cost and hours spent responding to RFIs. | [1,32] |
| Decision Latency (DL) | Average time from a decision request until the client or stakeholder makes the decision. | [2,8,21,30] |
| Punctuality (P) | Timeliness of the design team in delivering key outputs such as drawings, models, or reports. | [9,25,33] |
| Rework Time (RT) | Time spent on rework or defect correction. | [25,34,35] |
| Schedule Deviation (SD) | Deviation of the actual schedule from the planned schedule. | [1,28,31] |
| Number of Non-Conformity Causes (NNCC) | The number of root causes leading to missed or delayed submissions or decisions. | [8,36,37] |
| Conflicts (C) | The number of design conflicts resulting in rework. | [13,25,38,39] |
| Requests for Information (RFI) | Number of RFIs received weekly. | [8,9,12,21,40] |
| BIM Modeling Percentage (BMP) | BIM modeling progress percentage per discipline. | [13,21] |
| Changes (CH) | Number of client-initiated change orders. | [9,32] |
| Meeting Evaluation (ME) | Qualitative survey conducted by stakeholders to assess the effectiveness of meetings. | [8,21,41] |
| Client Satisfaction (CS) | Qualitative survey administered to the client to evaluate satisfaction with the design. | [9,21,25,31] |
| Collaboration (COL) | Qualitative survey to assess collaboration within the design team. | [2,9,30,42] |
| Number of Complaints (NC) | Number of complaints received from the client regarding the design’s effectiveness. | [25,35,43] |
| Commitment (COM) | Qualitative survey conducted by stakeholders to assess the design team’s commitment. | [29,31] |
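Several Table 1 indicators reduce to simple ratios. As an illustration only (not the study's calculation protocol, which is defined in the indicator sheets), the sketch below assumes that CD is reported as actual/planned design cost, SD as a relative deviation of durations in working days, and RC as a percentage of total design cost. The 10% flag threshold is a hypothetical default echoing the deviation size named in the research questions, and all function names and figures are hypothetical.

```python
# Illustrative ratio calculations for three Table 1 indicators.
# Assumptions (hypothetical): CD = actual / planned design cost,
# SD = (actual - planned) / planned duration in working days,
# RC = rework cost as a percentage of total design cost.


def cost_deviation_ratio(actual_cost: float, planned_cost: float) -> float:
    """CD: actual total design cost divided by planned cost (1.0 = on budget)."""
    if planned_cost <= 0:
        raise ValueError("planned_cost must be positive")
    return actual_cost / planned_cost


def schedule_deviation(actual_days: float, planned_days: float) -> float:
    """SD as a relative deviation; positive values mean the design is late."""
    if planned_days <= 0:
        raise ValueError("planned_days must be positive")
    return (actual_days - planned_days) / planned_days


def rework_cost_pct(rework_cost: float, total_design_cost: float) -> float:
    """RC: rework cost as a percentage of the total design cost."""
    if total_design_cost <= 0:
        raise ValueError("total_design_cost must be positive")
    return 100.0 * rework_cost / total_design_cost


def exceeds_threshold(relative_deviation: float, threshold: float = 0.10) -> bool:
    """Flag deviations of at least `threshold` (assumed 10%) in either direction."""
    return abs(relative_deviation) >= threshold


# Hypothetical figures for one reporting period.
cd = cost_deviation_ratio(actual_cost=115_000, planned_cost=100_000)
sd = schedule_deviation(actual_days=125, planned_days=120)
rc = rework_cost_pct(rework_cost=8_000, total_design_cost=100_000)

print(f"CD ratio {cd:.2f}, flagged: {exceeds_threshold(cd - 1.0)}")
print(f"SD {sd:.1%}, flagged: {exceeds_threshold(sd)}")
print(f"RC {rc:.1f}%")
```

Keeping CD as a ratio (as Table 1 defines it) and converting to a relative deviation only at the flagging step keeps the stored value faithful to the indicator definition.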

Three streams of recent work are directly pertinent to a design-phase KPI system:

Lean–BIM interactions in design: A systematic literature review (SLR) of 61 papers details how Lean methods (e.g., Last Planner System (LPS), set-based design (SBD), target value design (TVD), Big Room) and BIM functionalities interact in the design phase, reporting reductions in rework and improved information flow when both are applied jointly. The review also stresses that digitizing Lean methods is necessary to realize these gains consistently [17].

Automation of planning/control and process metrics: An SLR of 112 studies shows how automating Last Planner System stages strengthens adherence to Lean principles (e.g., transparency, variability reduction) and surfaces process-oriented metrics (PPC, constraint readiness, look-ahead reliability) that complement traditional results KPIs [18].

BIM/VDC data environments and measurable impact: Empirical and mixed-methods studies link common data environments (CDEs) aligned with ISO 19650 to more standardized, accessible, and auditable performance data; they also identify persistent pain points (data validity, decentralized measures) that a KPI system must address by design [19]. In parallel, cross-project modeling from Taiwan quantifies that specific BIM uses and their usage levels (e.g., 4D, cost estimation, analytical uses) significantly influence project performance, reinforcing that indicator design should be sensitive to how BIM is actually implemented [20].

Additionally, a recent BIM + AI scheduling framework (case study: large infrastructure) operationalizes a three-layer architecture (data, AI analysis, application) and reports both benefits (risk identification, real-time adjustments) and limitations (skill matching, UI complexity), which are directly informative for integrating a design KPI dashboard into VDC stacks [44]. Prior approaches cluster into result-oriented systems (simple to implement; retrospective; limited diagnostic power) and process-oriented systems (richer diagnosis via workflow, rework, decision-latency, and readiness indicators; higher data-collection burden). Recent reviews strengthen the case for process orientation by (i) demonstrating Lean–BIM synergies in design and (ii) showing that automation can reduce the manual overhead traditionally associated with process metrics [17,18]. Concurrently, CDE studies emphasize that information governance (standard schemas, traceability) is a prerequisite to make either archetype reliable at scale [19].

Where CDEs follow ISO 19650 information container governance, KPI definitions and calculation scripts can migrate with minimal change; standardized metadata and audit trails facilitate benchmarking [19]. However, the effectiveness and thresholds of certain KPIs depend on local BIM usage patterns and policy settings (e.g., mandated 4D/cost uses, owner acceptance criteria). Cross-project evidence from Taiwan shows that performance gains vary with usage level of specific BIM uses, cautioning against one-size-fits-all thresholds and suggesting localization during deployment [20].

A pragmatic integration route is a three-layer pipeline: a data layer (CDE + BIM authoring exports + LPS logs), an analysis layer (indicator computation, outlier checks, imputation), and an application layer (dashboarding for design reviews). This mirrors recent BIM + AI/VDC frameworks and leverages CDEs for standardized, centralized data, while acknowledging current limitations (skills, UI complexity) that call for lightweight, role-appropriate visualizations [19,44].
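The three-layer pipeline can be sketched in a few lines of Python, using PPC as the example indicator. The record fields (`week`, `task_id`, `committed`, `completed`), the 80% PPC target, and all function names below are hypothetical assumptions chosen for illustration; a real deployment would read these fields from CDE and LPS exports rather than from in-memory objects.

```python
from dataclasses import dataclass


# Data layer: one hypothetical row of a weekly Last Planner System (LPS) log.
@dataclass
class LpsEntry:
    week: int
    task_id: str
    committed: bool  # task was in the weekly work plan
    completed: bool  # task was finished as committed


# Analysis layer: PPC = completed commitments / total commitments, per week.
def weekly_ppc(entries: list[LpsEntry]) -> dict[int, float]:
    committed: dict[int, int] = {}
    done: dict[int, int] = {}
    for e in entries:
        if not e.committed:
            continue  # uncommitted tasks do not count toward PPC
        committed[e.week] = committed.get(e.week, 0) + 1
        if e.completed:
            done[e.week] = done.get(e.week, 0) + 1
    return {week: done.get(week, 0) / n for week, n in sorted(committed.items())}


# Application layer: a plain-text stand-in for a dashboard panel.
def render(ppc_by_week: dict[int, float], target: float = 0.80) -> list[str]:
    lines = []
    for week, ppc in ppc_by_week.items():
        note = "  <- below target" if ppc < target else ""
        lines.append(f"Week {week}: PPC {ppc:.0%}{note}")
    return lines


log = [
    LpsEntry(1, "A-01", True, True),
    LpsEntry(1, "A-02", True, True),
    LpsEntry(1, "S-01", True, False),
    LpsEntry(1, "M-01", True, True),
    LpsEntry(1, "M-02", False, True),  # not committed, ignored by PPC
    LpsEntry(2, "A-03", True, True),
    LpsEntry(2, "S-02", True, True),
]
for line in render(weekly_ppc(log)):
    print(line)
```

Keeping each layer as a separate function mirrors the separation of concerns in the text: the data layer can be swapped for a CDE export without touching the indicator logic or the presentation.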

Recent research supports design-phase process-oriented KPIs, documents Lean–BIM synergies, shows the value of automation in planning/control for reliable metrics, and underlines the role of CDE-governed data for trustworthy measurement. Cross-project evidence confirms that how BIM is used materially affects measured performance, which has implications for KPI operationalization [17,18,19,20]. Despite these advances, there remains a lack of validated, design-phase KPI systems tailored to the complexities of high-rise projects that (i) are implementable within typical BIM/VDC + CDE workflows, (ii) explicitly account for usage-level variability in BIM practices across contexts, and (iii) provide lightweight, auditable dashboards usable by multidisciplinary design teams. This study addresses that gap by operationalizing the Table 1 indicators for design management and embedding them in an integration architecture aligned with current BIM/VDC and CDE practices [19,20,44].

This review highlights the opportunity for a dedicated evaluation system that defines and validates relevant indicators for the design phase and operationalizes their use through accessible tools. This study aims to bridge the methodological and operational gaps in evaluating design performance in high-rise building projects by integrating expert feedback and literature-based indicators into a practical system.

3. Materials and Methods

This research was structured into three stages: (1) identification of key performance indicators for the design phase of high-rise building projects; (2) development of standardized calculation protocols and indicator sheets; and (3) construction and validation of the performance evaluation system through simulation and expert feedback (Figure 1). A mixed-methods approach was adopted, combining systematic literature review, quantitative survey analysis, qualitative expert interviews, and prototype testing.

3.1. Identification of Performance Indicators

The identification of relevant performance indicators for the design phase of high-rise building projects was carried out through a systematic and mixed approach. Initially, a comprehensive literature review was conducted in databases such as Scopus and Web of Science, using keywords including “key performance indicators,” “design phase,” and “high-rise buildings.” This search yielded a preliminary list of 69 indicators reported in previous studies related to project management in the architecture, engineering, and construction (AEC) industry. These indicators were filtered based on their frequency of use, explicit relevance to the design phase (excluding construction or operational indicators), and clarity in terms of definition and applicability. As a result, 21 indicators were preselected for further validation (Table 1).

To ensure the practical relevance of these indicators, a structured survey was administered to eight experienced professionals in the Chilean AEC sector, all of whom had direct involvement in the design of high-rise buildings (Table 2). The survey collected information regarding participants’ professional backgrounds, previous use of performance indicators, and their assessment of the importance of each indicator using a 5-point Likert scale.

The responses were analyzed using the Relative Importance Index (RII), Equation (1), which provides a normalized score for each indicator based on the experts’ ratings. All 21 indicators achieved RII scores greater than 0.7, justifying their inclusion in the final set. The highest-rated indicators were cost deviation (RII = 1.00), schedule deviation (RII = 0.95), BIM modeling percentage (RII = 0.95), and rework cost (RII = 0.93). These results indicate that experts place strong emphasis on cost control, schedule compliance, and technological integration during the design phase. The validated set of indicators formed the basis for the subsequent development of calculation protocols and the performance evaluation system.
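Equation (1) itself is not reproduced in this text. The sketch below assumes the commonly used form RII = ΣW / (A × N), where W are the individual Likert ratings (1 to 5), A = 5 is the highest possible rating, and N is the number of respondents. Under that assumption, with N = 8 an RII of 0.95 corresponds to a rating sum of 38 out of 40. The ratings shown are hypothetical, not the survey data.

```python
def relative_importance_index(ratings: list[int], max_rating: int = 5) -> float:
    """RII = sum(W) / (A * N), assuming integer Likert ratings from 1 to A."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 1 or r > max_rating for r in ratings):
        raise ValueError("ratings must lie between 1 and max_rating")
    return sum(ratings) / (max_rating * len(ratings))


# Hypothetical ratings from eight experts (not the survey data).
survey = {
    "CD": [5, 5, 5, 5, 5, 5, 5, 5],
    "SD": [5, 5, 5, 5, 5, 5, 4, 4],
    "ME": [4, 3, 4, 4, 3, 4, 3, 4],
}

# Rank indicators by RII and mark those above the 0.70 retention level.
ranking = sorted(
    ((code, relative_importance_index(r)) for code, r in survey.items()),
    key=lambda item: item[1],
    reverse=True,
)
for code, rii in ranking:
    status = "retain" if rii > 0.70 else "review"
    print(f"{code}: RII = {rii:.2f} ({status})")
```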

3.2. Development of Calculation Protocols

Once the final set of performance indicators was validated, the next step consisted of developing standardized calculation protocols to ensure consistent application, measurement, and interpretation. The objective of this stage was to define clearly how each indicator should be calculated, what data would be required, and how such data should be collected and validated in real project environments.

The process began with a comprehensive literature review focused on technical manuals, guidelines, and handbooks related to performance measurement and key performance indicator (KPI) construction. Sources included academic literature indexed in Scopus and Web of Science, in addition to government publications and reference books such as that by Parmeter [16]. Despite the various materials consulted, this review revealed a general lack of comprehensive protocols tailored to the design phase of construction projects. Therefore, a “snowball” search technique was also applied to identify further relevant documents through citation tracking of core references.

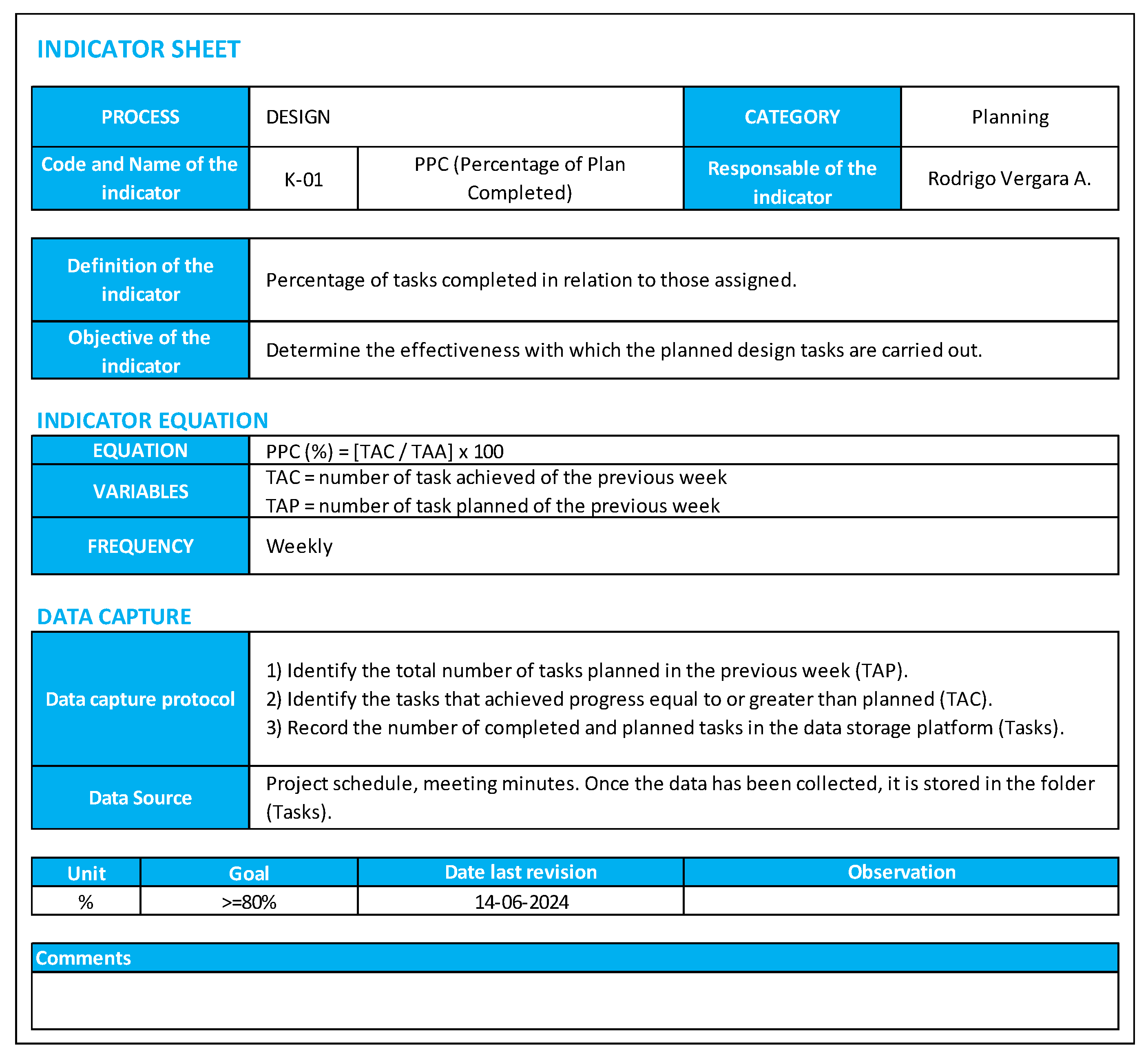

From this synthesis, a standardized structure for each indicator sheet was formulated. Each sheet includes the following components: (1) indicator name and category (planning, cost, time, quality, or people); (2) operational definition and intended objective; (3) calculation formula and explanation of all variables; (4) frequency of measurement (weekly, monthly, or by design phase); (5) data collection procedure; (6) primary data sources; (7) measurement units; and (8) target or benchmark value, where available. In cases where no empirical data was found to support specific benchmark values, those fields were left open for organizational adaptation.

In its final form, after the expert review described below, the standardized KPI sheet used throughout this study consists of (i) name and category; (ii) operational definition and purpose; (iii) formula and variables; (iv) unit; (v) measurement frequency; (vi) data collection procedure; (vii) primary data sources; (viii) responsible role; and (ix) edge-case rules (e.g., division-by-zero handling) and target/benchmark logic when available. A compiled set of the final 21 sheets is provided in Supplementary Material File S1.
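The sheet structure maps naturally onto a simple record type. The Python sketch below is a hypothetical encoding of the nine components listed above; the field names, the allowed-value sets, and the example field contents (unit, data sources, role) are assumptions, while the category and monthly frequency of Decision Latency follow the text. Leaving `target` empty mirrors the practice of leaving benchmarks open for organizational calibration.

```python
from dataclasses import dataclass
from typing import Optional

CATEGORIES = {"planning", "cost", "time", "quality", "people"}
FREQUENCIES = {"weekly", "monthly", "per design phase"}


@dataclass(frozen=True)
class KpiSheet:
    """Hypothetical encoding of one standardized indicator sheet."""
    name: str
    category: str
    definition: str
    formula: str
    unit: str
    frequency: str
    collection_procedure: str
    data_sources: tuple[str, ...]
    responsible_role: str
    edge_case_rules: str
    target: Optional[float] = None  # None = left open for organizational calibration

    def __post_init__(self) -> None:
        # Reject values outside the categories and frequencies used in the study.
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category}")
        if self.frequency not in FREQUENCIES:
            raise ValueError(f"unknown frequency: {self.frequency}")


decision_latency = KpiSheet(
    name="Decision Latency (DL)",
    category="time",
    definition="Average time from a decision request until the client or stakeholder decides.",
    formula="sum(decision_date - request_date) / number_of_decisions",
    unit="days",
    frequency="monthly",
    collection_procedure="Log each decision request and its resolution date.",
    data_sources=("decision log", "meeting minutes"),
    responsible_role="design manager",
    edge_case_rules="Months without resolved requests are reported as not applicable.",
)
print(decision_latency.frequency, decision_latency.target)
```

Validating category and frequency at construction time is one way to catch sheet-entry errors before they reach the calculation layer.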

To validate the content and structure of the sheet, semi-structured interviews were conducted with six professionals with over 10 years of experience in project design and management, including academic and industry representatives. Each expert reviewed a subset of three to four indicator sheets. During these sessions—conducted remotely or in person—participants were first introduced to the objective and structure of the sheets and then asked to provide feedback on clarity, completeness, practicality, and alignment with their professional experience.

The expert review process yielded valuable observations, mostly related to improving precision in variable definitions, adjusting the level of detail in data collection protocols, and modifying measurement frequency in specific cases. For example, the “decision latency” indicator was originally proposed as a weekly measure but was revised to a monthly frequency based on the typical pace of decision-making cycles in design coordination. Likewise, experts suggested eliminating fixed targets for some indicators (e.g., coordination efficiency) because of variability across project types and organizational practices.

The feedback from experts (Table 3) was systematically incorporated into the final version of the indicator sheets. These revised documents ensure that each KPI is clearly defined, methodologically sound, and applicable to real project contexts. The set of finalized indicator sheets became the foundational input for the next stage: the construction of the performance evaluation system. To assess content validity, the authors computed the Content Validity Coefficient (CVC) following Hernández-Nieto’s approach based on expert judgements. Each indicator received a Likert rating; for item $x$ with mean rating $M_x$ and maximum scale value $V_{\max}$, the item-level coefficient is $\mathrm{CVC}_i = M_x / V_{\max}$. A small-sample error term is estimated as $Pe_i = (1/j)^j$, where $j$ is the number of experts, yielding the bias-corrected coefficient $\mathrm{CVC}_{ic} = \mathrm{CVC}_i - Pe_i$. The resulting value was CVC = 0.84, indicating adequate content validity for the present stage of development [45].
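The CVC computation is short enough to script. This sketch assumes Hernández-Nieto’s formulation, with item-level $\mathrm{CVC}_i = M_x / V_{\max}$ and error term $Pe_i = (1/j)^j$ for $j$ experts; averaging the corrected item values into one overall coefficient is an assumption about how the overall value is aggregated. Function names and ratings are hypothetical.

```python
from statistics import mean


def item_cvc(ratings: list[int], v_max: int = 5) -> float:
    """Bias-corrected item CVC: M_x / V_max - (1/j)**j for j expert ratings."""
    j = len(ratings)
    if j == 0:
        raise ValueError("at least one expert rating is required")
    cvc_i = mean(ratings) / v_max
    pe_i = (1 / j) ** j  # error term; shrinks quickly as j grows
    return cvc_i - pe_i


def overall_cvc(item_ratings: list[list[int]], v_max: int = 5) -> float:
    """Assumed aggregation: mean of the corrected item-level coefficients."""
    return mean(item_cvc(r, v_max) for r in item_ratings)


# Hypothetical ratings: three indicators, each rated by six experts.
ratings = [
    [5, 4, 4, 5, 4, 4],
    [4, 4, 5, 4, 4, 4],
    [5, 5, 4, 4, 4, 5],
]
for r in ratings:
    print(f"item CVC = {item_cvc(r):.3f}")
print(f"overall CVC = {overall_cvc(ratings):.3f}")
```

With six experts the error term is about 0.00002, so the correction is negligible at this panel size.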

3.3. System Construction and Validation

Following the development and validation of the indicator sheets, the third stage involved the construction and evaluation of a functional system capable of assessing design performance using the established set of KPIs. The system was designed to be intuitive, flexible, and implementable using widely accessible tools. Microsoft Excel was selected as the development platform due to its ubiquity in professional environments and its capacity for dynamic data management and visualization.

The structure of the system consists of three integrated layers: (1) data input interfaces, (2) automated data processing and calculation tables, and (3) interactive dashboards for results visualization. The data input sheets were designed to be user-friendly, allowing manual entry or automated importation of project data according to the measurement frequency defined in each indicator sheet (weekly, monthly, or per design phase).

The processing layer employs formulas, pivot tables, and logical functions to calculate performance scores for each indicator in real time. This intermediate layer ensures that results are computed consistently and remain traceable to the original data. Indicators are grouped by category (planning, cost, time, quality, and people) and cross-tabulated to allow both individual and aggregated performance analysis.
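A minimal sketch of the category-level aggregation follows. It assumes that each indicator has already been normalized to a 0–1 score (1 = on target) and that indicators are equally weighted within a category; neither the normalization, the equal weighting, nor the 0.70 flag threshold is specified in this section, so all three are hypothetical. The category mapping follows the grouping of the 21 indicators used in this study.

```python
from statistics import mean

# Indicator-to-category mapping used in this study (21 indicators).
CATEGORY_OF = {
    **dict.fromkeys(["PPC", "AT", "TP", "CH"], "planning"),
    **dict.fromkeys(["RC", "CD", "DDE", "CE"], "cost"),
    **dict.fromkeys(["DL", "P", "RT", "SD"], "time"),
    **dict.fromkeys(["NNCC", "BMP"], "quality"),
    **dict.fromkeys(["RFI", "ME", "CS", "COL", "NC", "COM", "C"], "people"),
}


def category_scores(indicator_scores: dict[str, float]) -> dict[str, float]:
    """Equal-weight mean of normalized indicator scores within each category."""
    grouped: dict[str, list[float]] = {}
    for code, score in indicator_scores.items():
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"{code}: expected a normalized score in [0, 1]")
        grouped.setdefault(CATEGORY_OF[code], []).append(score)
    return {category: mean(values) for category, values in grouped.items()}


def underperforming(scores: dict[str, float], threshold: float = 0.70) -> list[str]:
    """Categories whose aggregated score falls below the (hypothetical) threshold."""
    return sorted(category for category, score in scores.items() if score < threshold)


# Hypothetical normalized scores for one reporting period.
period = {"PPC": 0.60, "AT": 0.50, "CD": 0.90, "SD": 0.55, "BMP": 0.80, "CS": 0.85}
scores = category_scores(period)
print(scores)
print("flagged:", underperforming(scores))
```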

Dashboards were developed to support visual interpretation of results. These dashboards include bar graphs, trend lines, category heatmaps, and indicator gauges, with filtering options by period or design phase. A summary panel provides overall performance scores per category and flags underperforming areas based on pre-set thresholds. To ensure data accuracy within the Excel implementation, we applied (i) schema and range checks at ingestion (units, permissible values); (ii) traceability from dashboard outputs to source entries; (iii) outlier flags using simple dispersion rules with analyst adjudication; (iv) pre-specified handling of missing data (imputation/exclusion); and (v) a lightweight audit trail of transformations. For future real project deployments, we plan random spot-checks against source documents. These measures align with CDE-oriented information governance that supports standardized, centralized and timely access to accurate data.
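The ingestion checks can be expressed as small, auditable functions. The sketch below is one possible reading, not the Excel implementation: the permissible ranges are hypothetical, the “simple dispersion rule” is assumed to be a median absolute deviation (MAD) rule with k = 3, and the two missing-data policies (exclusion and carry-forward imputation) are illustrative options. Flags are returned for analyst adjudication rather than used to delete data.

```python
from statistics import median
from typing import Optional

# Hypothetical permissible ranges per indicator: (lower, upper); None = unbounded.
PERMISSIBLE = {"PPC": (0.0, 100.0), "RFI": (0, None), "CS": (1, 5)}


def in_range(code: str, value: float) -> bool:
    """Schema/range check applied at ingestion."""
    lower, upper = PERMISSIBLE[code]
    return (lower is None or value >= lower) and (upper is None or value <= upper)


def handle_missing(series: list[Optional[float]], policy: str = "exclude") -> list[float]:
    """Pre-specified missing-data handling: exclusion or carry-forward imputation."""
    if policy == "exclude":
        return [v for v in series if v is not None]
    if policy == "carry_forward":
        filled, last = [], None
        for v in series:
            v = last if v is None else v
            if v is not None:  # leading gaps cannot be imputed and are dropped
                filled.append(v)
            last = v
        return filled
    raise ValueError(f"unknown policy: {policy}")


def outlier_flags(values: list[float], k: float = 3.0) -> list[bool]:
    """Median/MAD dispersion rule; flags go to an analyst, nothing is deleted."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # Degenerate case: flag anything that differs from the median.
        return [v != med for v in values]
    return [abs(v - med) / mad > k for v in values]


weekly_rfis = [4, 5, None, 6, 5, 30]  # hypothetical weekly RFI counts
clean = handle_missing(weekly_rfis)
print(clean, outlier_flags(clean))
print(handle_missing(weekly_rfis, "carry_forward"))
print(in_range("PPC", 105.0), in_range("RFI", 3))
```

A median-based rule is used here because a single extreme week would inflate a mean/standard-deviation rule and could mask itself.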

We first executed an AI-based simulation of a 20-story residential project in Viña del Mar, Chile, as a preliminary dry run to exercise the end-to-end workflow (data entry, KPI calculations, and dashboards) under realistic yet controlled conditions. This staged approach allowed us to validate the computation logic, stress-test usability and scalability, and correct issues before the tool reaches end users in real projects. The rationale is consistent with BIM–AI three-layer frameworks (data → analysis → application) that emphasize pre-deployment testing and usability refinement, and with CDE-governed practices that require standardized, auditable data flows for dependable measurement. Moreover, empirical BIM usage studies underscore the need to control data quality and consult project records for accuracy, supporting a simulation-first step to verify logic prior to field adoption. The simulated project was generated with an AI-based language model (ChatGPT 4o), and the scenario included realistic assumptions regarding project timeline, stakeholder roles, coordination processes, and typical delays. The system was populated with the simulated data and evaluated across three dimensions: (1) ability to compute all indicators accurately, (2) clarity and usability of dashboards, and (3) responsiveness to data changes (Table 4).

The simulated application revealed both strengths and areas for improvement. The system calculated all indicators and displayed results dynamically, enabling users to identify trends and performance issues across phases. In the simulated scenario, planning and time management showed suboptimal scores, while quality and stakeholder coordination performed better. These insights illustrate the system’s diagnostic capability under simulated conditions.

Subsequently, the system was presented to four professionals with experience in architectural design and project management. Participants were guided through the tool in remote sessions and asked to assess its clarity, usefulness, and potential for adoption in real projects. Feedback was collected through Likert-scale questionnaires and open-ended questions.

Experts (Table 5) evaluated the system positively, especially highlighting the dashboards’ readability and the coherence of indicator definitions. Recommendations were made to improve navigation and onboarding, which led to implementing a sidebar menu and a quick-start guide explaining system functions, indicator meanings, and example use cases.

4. Results and Discussion

The findings of this study are presented in three sections aligned with the methodological stages: (1) final selection of indicators, (2) validation of indicator sheets, and (3) performance of the evaluation system in a simulated project. Expert feedback and key performance patterns are also discussed.

4.1. Final Indicator Set and Validation Results

The survey conducted with eight professionals from the Chilean AEC industry confirmed the relevance and applicability of the 21 preselected performance indicators for evaluating the design phase of high-rise building projects. Each indicator was evaluated using a five-point Likert scale, and its Relative Importance Index (RII) was calculated to prioritize its perceived importance. All indicators achieved an RII score above 0.70, indicating broad expert agreement on their relevance.

The highest-rated indicators were cost deviation (RII = 1.00), schedule deviation (RII = 0.95), BIM modeling percentage (RII = 0.95), and rework cost (RII = 0.93). These results align with recurring challenges in high-rise building projects, where budget control, time compliance, and digital coordination are essential for successful design outcomes. Indicators such as the number of RFIs, percentage of plan completed (PPC), and client satisfaction also received high evaluations, reflecting the importance of information management and stakeholder engagement in the design phase.

For clarity, the 21 indicators are mapped to their groups as follows: planning—percentage of plan completed (PPC), anticipated tasks (AT), tasks prepared (TP), and changes (CH); cost—rework cost (RC), cost deviation (CD), design development efficiency (DDE), and coordination efficiency (CE); time—decision latency (DL), punctuality (P), rework time (RT), and schedule deviation (SD); quality—number of non-conformity causes (NNCC) and BIM modeling percentage (BMP); people—requests for information (RFI), meeting evaluation (ME), client satisfaction (CS), collaboration (COL), number of complaints (NC), commitment (COM), and conflicts (C).

This classification was positively received by experts, who noted that it provides a comprehensive yet manageable framework for monitoring design performance. The complete results are presented in Table 6, which shows the RII scores for each indicator.

The validation process confirmed that the selected indicators not only reflect current priorities in design management but also possess sufficient clarity and measurability to be incorporated into a standardized evaluation system. This foundation enabled the next phase of the study: the definition of calculation protocols and integration into a functional assessment tool.

4.2. Expert Feedback on Indicator Sheets

Following the development of the indicator sheets, a structured expert validation process was conducted to assess their clarity, applicability, and methodological soundness. This step was essential to ensure that the proposed performance indicators were not only theoretically consistent but also aligned with the practical needs of professionals involved in the design phase of high-rise building projects.

A total of six professionals with more than 10 years of experience in architecture, structural engineering, and project management participated in the validation. These experts were selected for their direct involvement in design coordination and their familiarity with performance-based evaluation in project contexts. The feedback process was conducted through semi-structured interviews, either online or in person, during which each expert was asked to review three to four indicator sheets individually.

During these sessions, participants evaluated various aspects of each indicator, including the clarity of definitions, the relevance of objectives, the feasibility of the proposed data collection methods, and the appropriateness of the frequency and units of measurement. Experts were encouraged to suggest modifications and to identify any ambiguities or inconsistencies that might affect practical implementation.

The feedback received was constructive and led to several key refinements. For instance, experts proposed rewording some technical terms to make them more universally understandable, particularly for multidisciplinary teams. Measurement frequencies were also adjusted; for example, the “decision latency” indicator was initially proposed for weekly measurement but was modified to a monthly interval to better match the typical rhythm of client decision-making processes.

Figure 2 shows an example of the standardized indicator sheet template, which includes sections for process information, indicator definition and objective, calculation equation with variables and frequency, and data capture protocol and source, as well as fields for unit, target, revision date, observations, and additional comments.

Experts also highlighted the importance of distinguishing between strategic and operational indicators. While all indicators were considered relevant, some were more suitable for executive-level monitoring (e.g., cost deviation, client satisfaction), whereas others were better suited for operational control within design teams (e.g., number of RFIs, tasks prepared). As a result, an internal classification was proposed to guide future system use depending on the level of management.

Overall, the experts rated the indicator sheets positively in terms of usability, completeness, and alignment with real-world practices. Their contributions were instrumental in refining the structure and content of the sheets, which now include clearer variable definitions, standardized formulas, and flexible data sources. This feedback phase strengthened the validity and practicality of the indicators and supported their integration into the performance evaluation system.

To complement the quantitative prioritization (RII) and to strengthen the practical alignment of the indicator set, we conducted a rapid thematic analysis of the six semi-structured interviews described above. Using an inductive approach, one analyst performed open coding on the interview notes, grouped codes into categories, and refined them into three cross-cutting themes. A brief codebook and theme definitions were then audited by a co-author for conceptual coherence. We did not estimate inter-coder reliability; this is acknowledged as a limitation and will be addressed in a future multi-site study.

T1—clarity and operational definitions: Experts emphasized unambiguous definitions and calculable formulas, including variable scope, units, and treatment of edge cases (e.g., zero denominators, zero-activity weeks). Illustrative excerpt (paraphrased): “Define precisely what counts as a prepared task and state how ‘no work this week’ is handled in the denominator.” Consequent edits strengthened the operational definition, variables, and data-source fields in the indicator sheets.

T2—measurement frequency and edge-case rules: Experts recommended aligning frequencies with decision rhythms and avoiding fixed targets that vary by context. Illustrative excerpts (paraphrased): “Decision Latency fits a monthly cadence consistent with client approval cycles,” and “Coordination Efficiency targets depend on project type—avoid a universal threshold.” The sheets now specify frequency, edge-case rules, and, where appropriate, the absence of fixed targets with guidance for project-level calibration.

T3—use context: strategic vs. operational indicators: Experts distinguished indicators suitable for executive dashboards (e.g., cost deviation, client satisfaction) from those suited to day-to-day coordination (e.g., RFIs, PPC, tasks prepared). Illustrative excerpt (paraphrased): “Keep CD/CS for periodic executive reviews; use RFI/PPC in weekly design meetings.” We therefore provide an internal use-context tag (strategic/operational) to guide deployment and reporting granularity.

The analysis followed inductive coding by one analyst with a subsequent audit of theme definitions; no inter-coder agreement was computed. Given the single-country sample and small n, findings are intended to refine the instrument rather than to generalize. Future work will extend interviews across multiple countries and estimate inter-coder reliability. The finalized indicator sheets incorporate (i) strengthened operational definitions and variable lists; (ii) explicit frequency and edge-case rules (e.g., monthly decision latency); (iii) guidance on context-dependent targets (e.g., coordination efficiency); and (iv) use-context tags to support both strategic summaries and operational reviews. The updated template is shown in Figure 2, and all finalized sheets are compiled in Supplementary File S1.

4.3. Evaluation System Performance in Simulation

To verify the practical functioning of the performance evaluation system, a simulation was conducted based on a hypothetical design project. The selected case involved a 20-story residential building located in Viña del Mar, Chile. This simulated scenario allowed the researchers to populate the evaluation system with realistic but controlled data; test the full workflow of data input, calculation, and visualization; and assess the tool’s effectiveness in identifying performance trends and issues.

The system was implemented in Microsoft Excel and integrated the 21 previously validated performance indicators. It had separate modules for data input, automatic calculation through embedded formulas and pivot tables, and dashboards displaying visualizations by category (planning, cost, time, quality, and people). For each indicator, results were presented by design phase (preliminary, basic, and detailed) and by weekly and monthly intervals.
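The pivot-table step rolls weekly inputs up by phase and by month. The sketch below approximates that step outside Excel under two stated assumptions. First, the record layout is hypothetical. Second, the sketch pools counts, summing completed and planned tasks before dividing, instead of averaging weekly percentages. The paper does not say which aggregation the workbook uses. Pooling is chosen here so that weeks with more planned tasks carry proportionally more weight.

```python
from collections import defaultdict
from typing import Callable, Hashable, Optional

Record = dict  # hypothetical weekly input row

def ppc_by(records: list[Record],
           key: Callable[[Record], Hashable]) -> dict[Hashable, Optional[float]]:
    """Pooled PPC (%) per group; groups with no planned tasks map to None."""
    totals: dict = defaultdict(lambda: [0, 0])  # group -> [completed, planned]
    for rec in records:
        group = totals[key(rec)]
        group[0] += rec["completed"]
        group[1] += rec["planned"]
    return {k: (100.0 * c / p if p else None) for k, (c, p) in totals.items()}

weekly = [
    {"phase": "preliminary", "month": "2024-03", "completed": 8, "planned": 10},
    {"phase": "preliminary", "month": "2024-03", "completed": 6, "planned": 10},
    {"phase": "basic",       "month": "2024-04", "completed": 9, "planned": 12},
]
print(ppc_by(weekly, key=lambda r: r["phase"]))  # {'preliminary': 70.0, 'basic': 75.0}
print(ppc_by(weekly, key=lambda r: r["month"]))  # {'2024-03': 70.0, '2024-04': 75.0}
```

Grouping logic is passed in through `key`, so the same function serves the phase view and the month view, in the same way that one pivot table can be regrouped by different fields.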

The input data were generated with a large language model (ChatGPT, GPT-4o model) from plausible project conditions, common coordination challenges, and realistic time and cost estimates. This approach emulated typical performance variations without relying on confidential data from real projects.

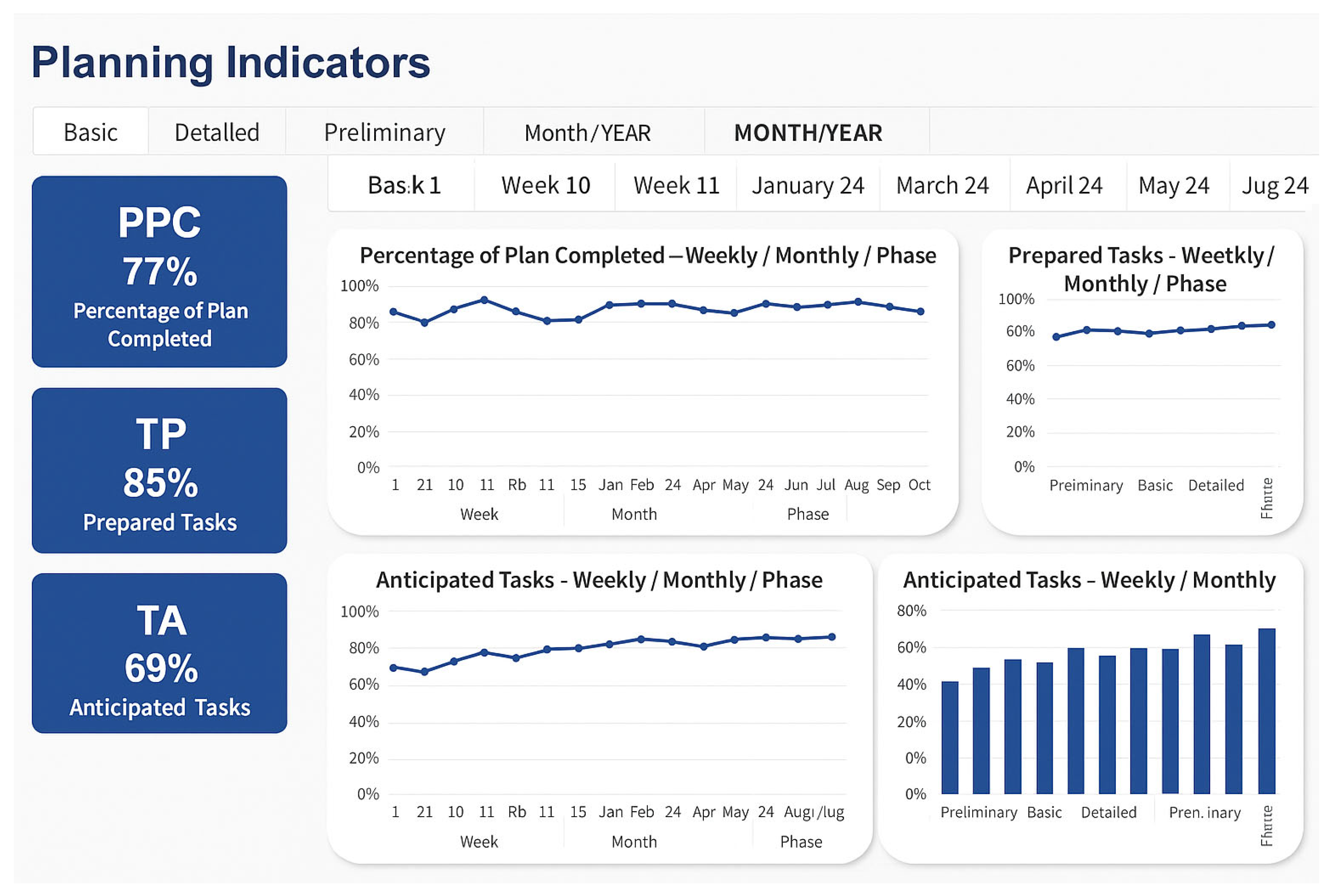

Figure 3 presents the Planning Indicators dashboard, which displays the percentage of plan completed (PPC), prepared tasks (TP), and anticipated tasks (TA), along with their trends over time by week, month, and project phase, providing a comprehensive visualization of planning performance.
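A short sketch can illustrate the weekly series and trend lines on the planning dashboard. It makes three assumptions. PPC is the ratio of completed to planned tasks in a week. A week with no planned tasks is reported as undefined (None) rather than as 0% or 100%, which is one way to make the "no work this week" case explicit. The trend is a three-week trailing mean over defined weeks only. The window length and the function names are hypothetical.

```python
from typing import Optional, Sequence

def ppc(completed: int, planned: int) -> Optional[float]:
    """Weekly PPC in %; None marks a zero-activity week (assumed edge-case rule)."""
    if completed < 0 or planned < 0 or completed > planned:
        raise ValueError("need 0 <= completed <= planned")
    return None if planned == 0 else 100.0 * completed / planned

def rolling_trend(values: Sequence[Optional[float]],
                  window: int = 3) -> list[Optional[float]]:
    """Trailing mean over the last `window` weeks, skipping undefined weeks."""
    trend: list[Optional[float]] = []
    for i in range(len(values)):
        defined = [v for v in values[max(0, i - window + 1): i + 1] if v is not None]
        trend.append(sum(defined) / len(defined) if defined else None)
    return trend

weeks = [(8, 10), (0, 0), (6, 10), (9, 10)]
series = [ppc(c, p) for c, p in weeks]   # [80.0, None, 60.0, 90.0]
print(rolling_trend(series))             # [80.0, 80.0, 70.0, 75.0]
```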

The system successfully processed all input data and calculated each indicator as expected. The dashboards provided intuitive and dynamic visual outputs, including bar charts, trend lines, and category performance summaries. Key performance issues were clearly identified through visual signals and filters, allowing the user to isolate underperforming areas.
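A three-level threshold rule gives a rough approximation of the visual signals that isolate underperforming indicators. The sketch below is hypothetical. The status labels, the tolerance band, and the direction flag are illustrative assumptions, not the workbook's actual conditional-formatting rules. The direction flag is needed because a high PPC is desirable while a high cost deviation is not.

```python
from enum import Enum

class Status(Enum):
    GREEN = "meets target"
    AMBER = "near target"
    RED = "off target"

def flag(value: float, target: float, tolerance: float,
         higher_is_better: bool = True) -> Status:
    """Classify an indicator value against its target.

    `tolerance` is an assumed band, in the indicator's own unit, within which
    a miss is shown as AMBER instead of RED. Use higher_is_better=False for
    indicators such as cost deviation, where lower values are preferable.
    """
    if tolerance < 0:
        raise ValueError("tolerance must be non-negative")
    gap = value - target if higher_is_better else target - value
    if gap >= 0:
        return Status.GREEN
    return Status.AMBER if gap >= -tolerance else Status.RED

print(flag(72.0, target=80.0, tolerance=5.0))                        # Status.RED
print(flag(4.0, target=5.0, tolerance=2.0, higher_is_better=False))  # Status.GREEN
```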

In the simulated case, the system revealed deficiencies in several indicators associated with planning and time management. The percentage of plan completed (PPC), tasks prepared, and schedule deviation indicators fell outside their expected thresholds, suggesting insufficient task readiness and weak schedule adherence. Similarly, high values of cost deviation and rework cost pointed to poor financial control during the design phase.
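Both cost signals can be written as simple ratios. The definitions below are assumptions for illustration, since the finalized formulas in Supplementary File S1 are not reproduced here. Cost deviation is taken as the percentage difference between actual and budgeted design cost. Rework cost is taken as the share of total design cost spent on redoing work already delivered. The monetary figures in the example are hypothetical.

```python
def cost_deviation_pct(actual_cost: float, budgeted_cost: float) -> float:
    """Percent deviation from the design budget; positive means overspending."""
    if budgeted_cost <= 0:
        raise ValueError("budgeted cost must be positive")
    return 100.0 * (actual_cost - budgeted_cost) / budgeted_cost

def rework_cost_pct(rework_cost: float, total_design_cost: float) -> float:
    """Share (%) of design spending absorbed by redoing delivered work."""
    if total_design_cost <= 0:
        raise ValueError("total design cost must be positive")
    if not 0 <= rework_cost <= total_design_cost:
        raise ValueError("rework cost must lie between 0 and total design cost")
    return 100.0 * rework_cost / total_design_cost

print(cost_deviation_pct(115_000, 100_000))  # 15.0
print(rework_cost_pct(11_500, 115_000))      # 10.0
```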

In contrast, performance in quality and stakeholder engagement appeared more satisfactory. Indicators such as BIM modeling percentage, number of RFIs, collaboration, and client satisfaction remained within acceptable ranges. In the simulated scenario, these results are consistent with effective digital coordination and interpersonal dynamics.

These results demonstrated the system’s diagnostic capability and its potential to guide design teams in performance monitoring and decision-making. Moreover, the layered dashboard design allowed users to interpret results at different levels of detail—aggregated by phase, filtered by category, or focused on individual indicators—enhancing the flexibility and applicability of the system.

Overall, the simulation validated the system’s ability to compute and communicate performance information in a clear, coherent, and decision-oriented manner. This test provided critical insights into the system’s usability and laid the groundwork for the next validation step: expert evaluation of system usability and relevance.

4.4. Expert Assessment of the System

After the simulation test, the performance evaluation system was presented to a group of four professionals with substantial experience in the design and coordination of architectural and engineering projects. These experts were invited to assess the system’s usability, visual clarity, functionality, and potential for adoption in real-world design management contexts.

The evaluation process was carried out through remote sessions, during which each expert was guided through the system’s interface, including data input modules, automated calculation layers, and dashboard visualizations. Experts were then asked to complete a structured feedback form consisting of Likert-scale items and open-ended questions. The evaluation criteria covered two main dimensions: (1) the functionality of the system, including navigation, clarity of results, and indicator organization; and (2) its practical usefulness for design professionals in the AEC industry.

Experts generally rated the system as functional and accessible. The Excel-based platform was considered intuitive, particularly due to its clear interface and logical categorization of indicators. The visual structure of the dashboards was praised for its simplicity and coherence, enabling fast interpretation of results even for users with limited experience in data analysis.

In terms of practical applicability, experts acknowledged the system’s value for design teams that already work with a performance-based mindset or are seeking to adopt one. They highlighted that the system could be especially useful for periodic reviews, early detection of coordination issues, and structured reporting of design progress to clients or project managers.

Some recommendations were made to further enhance usability. For example, experts suggested incorporating a fixed navigation menu to facilitate transitions between sections, as well as a start-up guide to explain the system’s structure, indicator definitions, and expected use cases. These suggestions were implemented, improving the user experience and lowering the entry barrier for future adopters.

The experts also emphasized the importance of keeping the system’s configuration flexible, so that thresholds, units, and measurement frequencies can be customized to project-specific requirements. They regarded this adaptability as a key strength of the tool, increasing its potential for integration into various organizational contexts.

Overall, the expert assessment indicated that the system meets basic expectations of clarity, relevance, and usability. The experts’ feedback contributed significantly to refining the final version, ensuring that it is methodologically sound and ready for professional implementation. The system thus offers a robust and adaptable solution for performance monitoring in the design phase of high-rise building projects.
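Configuration flexibility can be pictured as organization-wide defaults that each project overrides selectively, so that nothing is hard-coded into the calculations. The sketch below is hypothetical. The indicator keys, the allowed settings, and every numeric default are placeholders, not recommended targets. Coordination efficiency has no default target, in line with the experts' advice against a universal threshold.

```python
from copy import deepcopy

# Hypothetical defaults; the numbers are placeholders, not recommended targets.
DEFAULTS: dict[str, dict] = {
    "PPC": {"unit": "%", "target": 80.0, "frequency": "weekly"},
    "Decision latency": {"unit": "days", "target": 10.0, "frequency": "monthly"},
    "Coordination efficiency": {"unit": "%", "target": None, "frequency": "monthly"},
}

ALLOWED_KEYS = {"unit", "target", "frequency"}

def project_config(overrides: dict[str, dict],
                   defaults: dict[str, dict] = DEFAULTS) -> dict[str, dict]:
    """Return defaults with project-specific overrides applied; defaults are not mutated."""
    config = deepcopy(defaults)
    for indicator, settings in overrides.items():
        if indicator not in config:
            raise KeyError(f"unknown indicator: {indicator}")
        unknown = set(settings) - ALLOWED_KEYS
        if unknown:
            raise KeyError(f"unsupported settings for {indicator}: {sorted(unknown)}")
        config[indicator].update(settings)
    return config

tower = project_config({"PPC": {"target": 75.0},
                        "Coordination efficiency": {"target": 60.0}})
print(tower["PPC"])  # {'unit': '%', 'target': 75.0, 'frequency': 'weekly'}
```

The deep copy leaves the shared defaults untouched, so several projects can be configured side by side without interfering with one another.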

4.5. Benchmarking Against Existing Approaches

To position the proposed system within current practice, we conducted qualitative benchmarking against representative approaches reported in the Lean/BIM/VDC and BIM + AI literature. The comparison focuses on criteria relevant to design-phase use: (i) scope and phase coverage, (ii) data sources and acquisition, (iii) level of automation, (iv) auditability/governance, (v) configurability/localization, and (vi) usability.

Scope and phase coverage: Recent syntheses show increasing interaction between Lean methods and BIM during design; however, practical optimization of the design phase remains comparatively underdeveloped, reinforcing the value of lightweight, design-oriented KPI systems. Digitizing Lean methods is highlighted as necessary to realize consistent benefits in practice [17].

Data sources and acquisition: Enterprise solutions often integrate directly with common data environments (CDEs) and BIM tools. In well-structured CDEs aligned with ISO 19650-1 [46], information management is standardized and centralized, enabling timely access to accurate data and more reliable performance measurement. Our current implementation remains Excel-based with structured imports/exports, which keeps it deployable where enterprise integrations are not yet available [19].

Level of automation: BIM + AI frameworks typically follow a three-layer architecture (data → analysis → application) to enable real-time ingestion, predictive modeling, and decision support; these works also describe concrete analysis and application functions (risk warnings, early alerts, visual decision tools). Our approach prioritizes low entry cost while remaining compatible with incremental automation [44].

Auditability and governance: CDE-based studies stress that performance measurement improves when data are governed through agreed information containers and managed processes; our design emphasizes traceability from dashboard outputs back to source entries, which can later be migrated into CDE-governed workflows [19].

Configurability and localization: Evidence from cross-project analyses indicates that measured performance depends on which BIM uses are adopted and at what level; therefore, thresholds should be calibrated to context rather than hard-coded. Our indicator sheets include edge-case rules and project-level calibration guidance [20].

Usability: BIM-AI scheduling case work documents both benefits (risk identification, real-time adjustments) and adoption challenges (skills, interface complexity), supporting a staged, pilot-first rollout for new tools. Our Excel-based interface and quick-start guide target a lower learning curve at initial adoption [44].

The proposed system is a lightweight, auditable design-phase solution that prioritizes clarity, traceability, and configurability over deep automation. Broader multi-phase predictive features and direct system-to-system integrations remain future extensions, consistent with BIM-AI roadmaps that call for stress-testing and scalability assessment before wider deployment.
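Traceability from dashboard outputs back to source entries can be sketched by letting each computed value carry the identifiers of the input rows it came from. The record types, the row-identifier scheme, and the choice of PPC as the example are all hypothetical. In a later CDE-governed workflow, the identifiers could point to information containers rather than spreadsheet rows.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class SourceEntry:
    entry_id: str   # hypothetical row identifier in the input module
    week: int
    completed: int
    planned: int

@dataclass(frozen=True)
class TracedResult:
    indicator: str
    value: Optional[float]
    source_ids: tuple[str, ...]  # input rows that produced this value

def traced_ppc(entries: list[SourceEntry]) -> TracedResult:
    """Pooled PPC that keeps a reference to every input row it used."""
    completed = sum(e.completed for e in entries)
    planned = sum(e.planned for e in entries)
    value = 100.0 * completed / planned if planned else None
    return TracedResult("PPC", value, tuple(e.entry_id for e in entries))

result = traced_ppc([SourceEntry("IN-0001", 1, 8, 10), SourceEntry("IN-0002", 2, 6, 10)])
print(result.value, result.source_ids)  # 70.0 ('IN-0001', 'IN-0002')
```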

5. Conclusions

This study proposed and validated a performance evaluation system tailored to the design phase of high-rise building projects, addressing a significant gap in project management literature and practice within the architecture, engineering, and construction (AEC) industry. While performance monitoring has traditionally focused on the construction stage, the early design phase is critical to project success, particularly in complex projects such as high-rise buildings where design decisions strongly influence downstream cost, quality, and time outcomes.

The research followed a structured methodology consisting of three stages: (1) identification of 21 key performance indicators through literature review and expert validation; (2) development of standardized indicator sheets with clear calculation protocols, measurement frequencies, and data sources; and (3) construction of a functional evaluation system in Microsoft Excel, followed by simulation-based testing and expert assessment. The final set of indicators was grouped into five strategic categories (planning, cost, time, quality, and people), providing a comprehensive framework for multidimensional performance analysis. In the simulated case, the system demonstrated its diagnostic capacity by identifying weaknesses in planning and time management while also recognizing strengths in quality control and stakeholder collaboration. Experts affirmed the system’s practicality, clarity, and potential for adoption in real-world projects, especially when complemented with onboarding resources such as a navigation panel and user guide.

Theoretically, the research advances design-phase performance management by shifting attention from execution-only outcomes to process-oriented monitoring during design and by offering a replicable, indicator-based model grounded in explicit operational definitions, measurement frequencies, and data sources. Content validity was evidenced at the instrument level with a CVC of 0.84, providing a reasoned basis for subsequent empirical testing. Practically, the system offers a lightweight, auditable, and traceable tool that can be integrated into existing workflows without requiring enterprise integrations at the outset. It supports routine design reviews, early detection of coordination issues, and decision-making based on transparent computations that can be traced back to their sources. The Excel implementation lowers the barrier to initial adoption while remaining compatible with future automation and integration.

Several limitations must be acknowledged. Validation combined expert judgement and a simulated project; no real project data were analyzed at this stage. The expert samples were small and drawn from a single country (Chile), which constrains external validity. Psychometric analyses such as factor analysis or Cronbach’s alpha were not conducted because the instrument comprises single-item KPIs and the available samples are not suited to those techniques; instead, we prioritized content validity. Correlation analyses among KPIs were not performed because any correlations would be artefacts of the simulated data and fall outside the present scope.

Future work will progress from simulation to longitudinal, multi-project case studies with real data to verify results over time, expand samples across countries and organizations to strengthen generalizability and calibrate context-specific thresholds, and enhance data pipelines through integration with BIM/CDE and project management platforms to enable automated, real-time monitoring. Additional benchmarking against alternative systems and exploration of multi-phase predictive features will also be pursued, alongside stronger qualitative protocols (multi-site interviewing with inter-coder reliability).

Finally, enterprise adaptation will entail costs related to initial configuration of indicator sheets and thresholds, training and onboarding, data preparation and possible integrations, and ongoing operation for data quality checks and periodic reviews. While the Excel-based approach minimizes upfront investment, it may require manual effort until integration is established. Anticipated challenges include information governance and access rights, data consistency across disciplines, localization of thresholds to markets and project types, change management risks (including potential KPI gaming), required skills and bandwidth, and varying levels of digital maturity. Addressing these issues through stewardship, phased roll-out, targeted training, and progressive integration offers a clear pathway from the validated foundation presented here to real-world deployment.