SC-YOLO: A Real-Time CSP-Based YOLOv11n Variant Optimized with Sophia for Accurate PPE Detection on Construction Sites

Abstract

1. Introduction

2. Related Works

2.1. Evolution of Detection Models for Construction Safety

2.2. Specialized Detection Strategies and Backbone Architectures

2.3. Optimization Approaches in Object Detection

2.4. Comparative Analysis and Research Gap

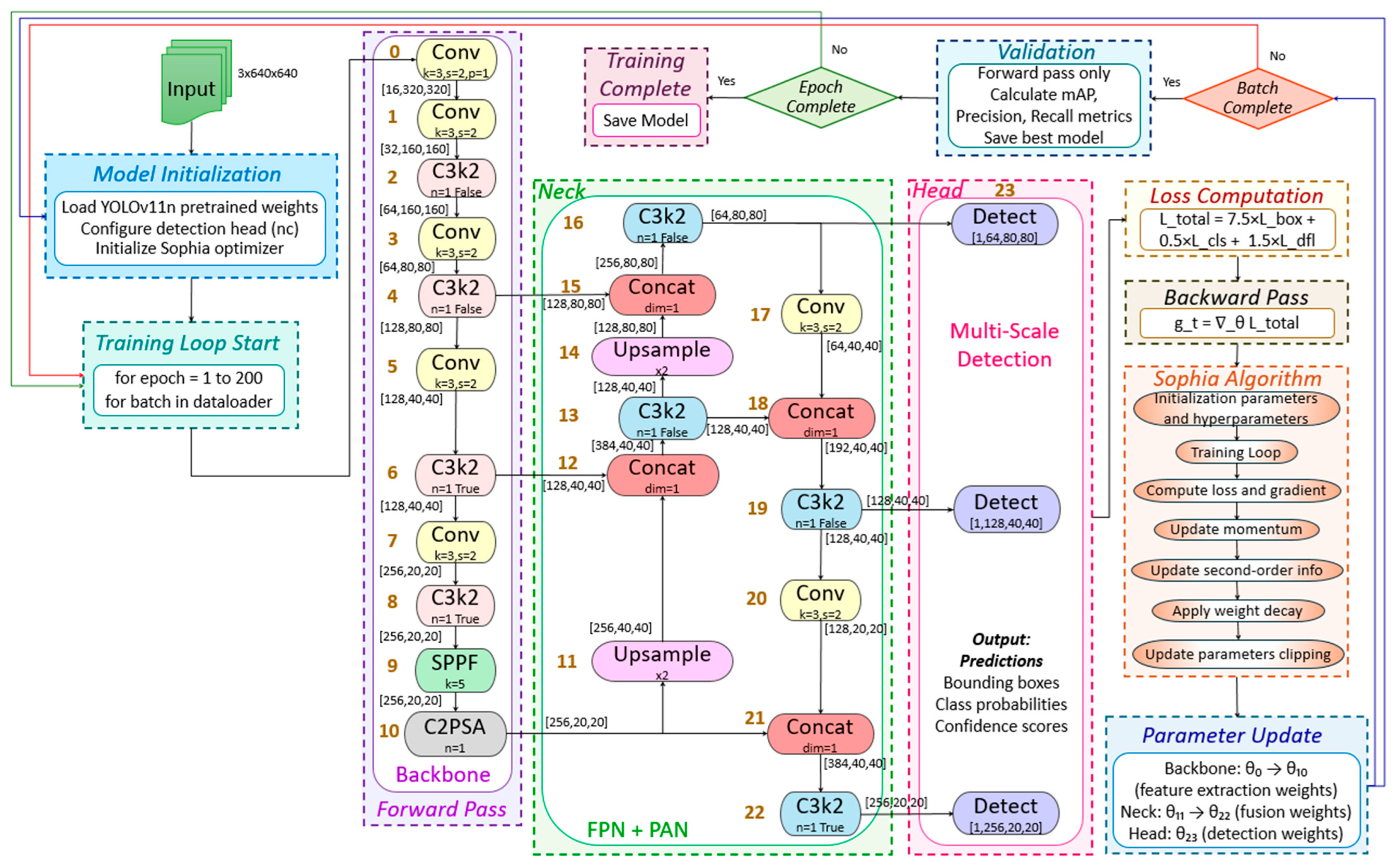

3. Methodology

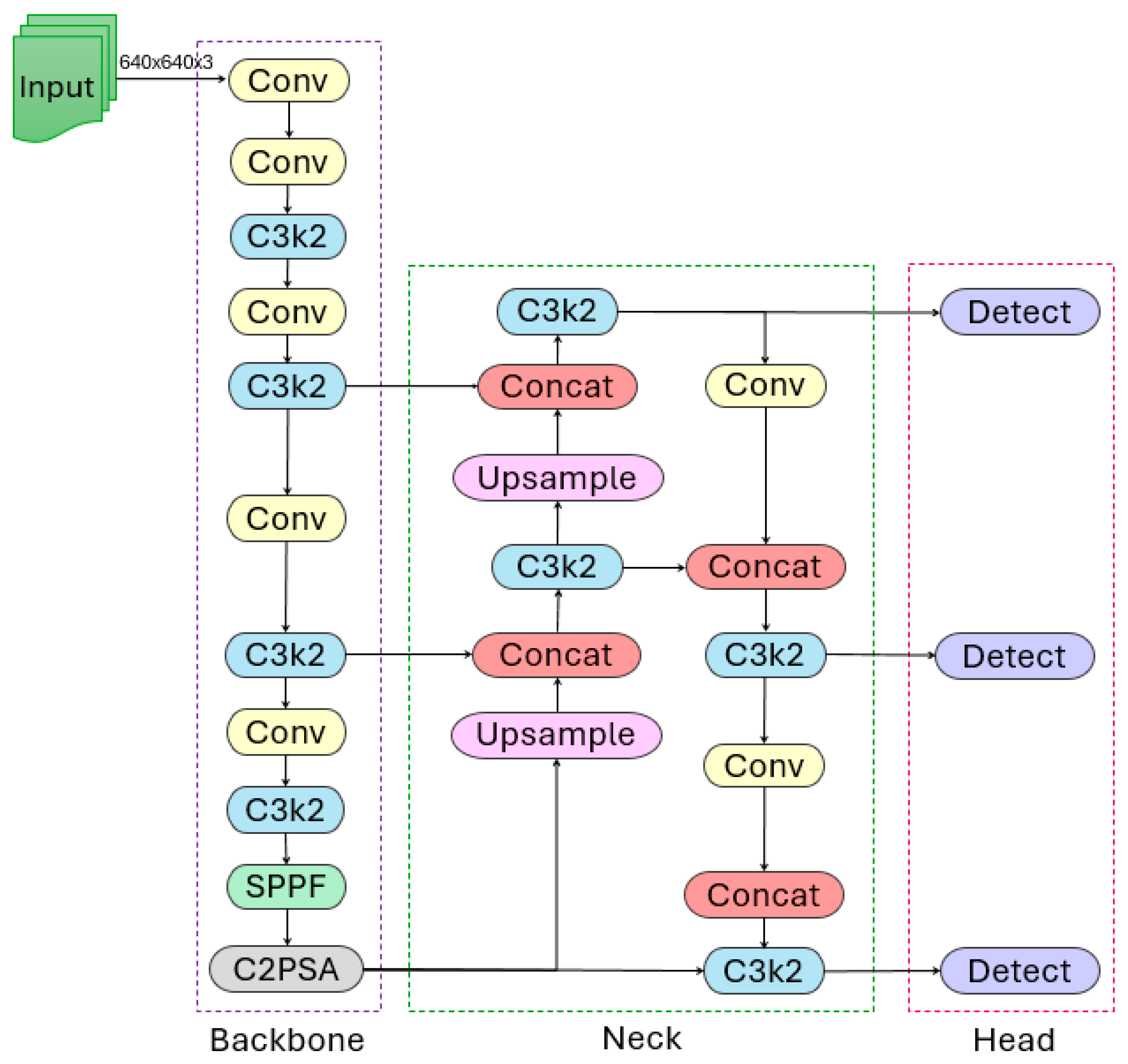

3.1. YOLOv11 Architecture Enhancement Framework

3.2. Backbone Architectures

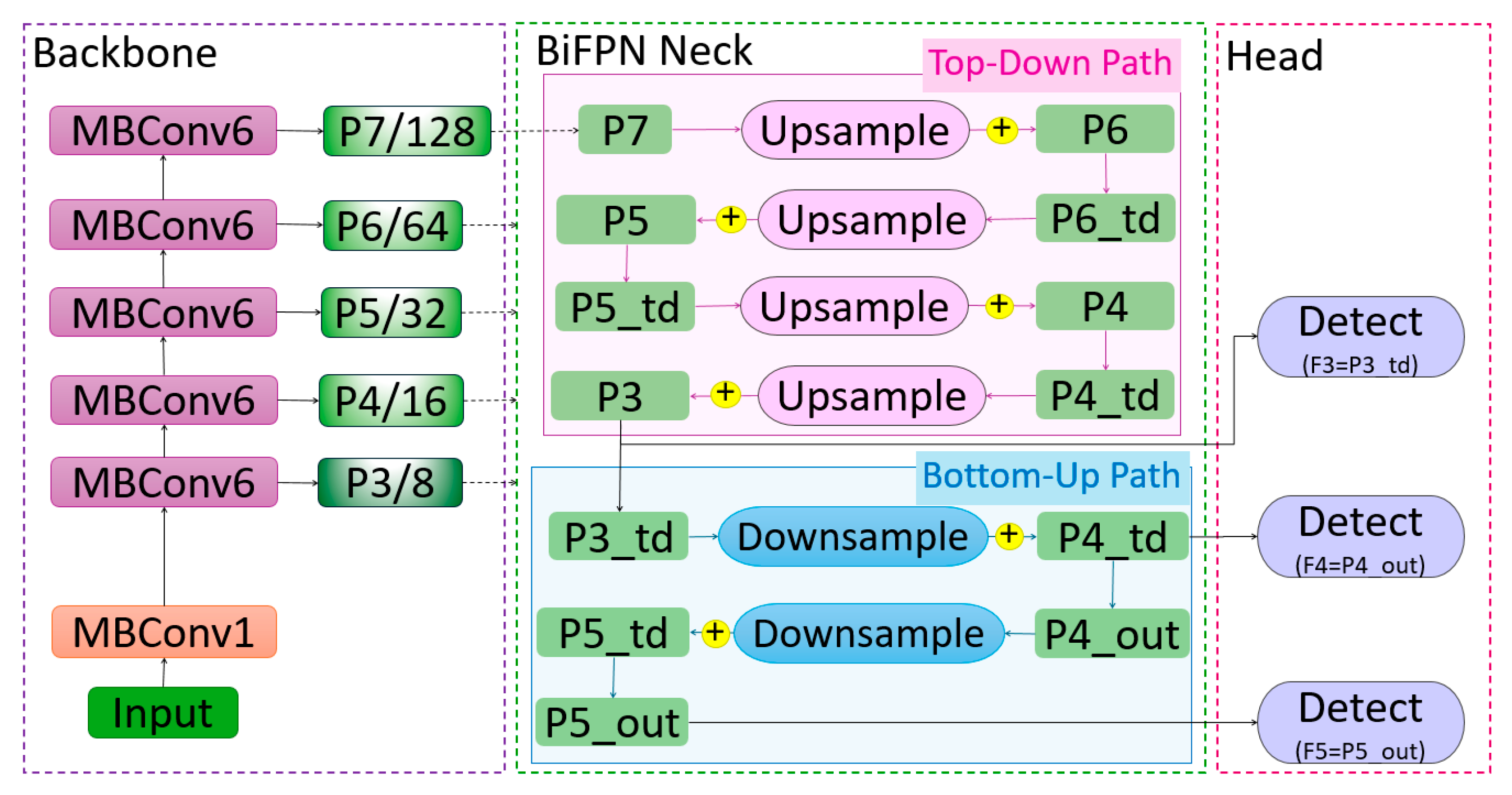

3.2.1. EfficientNet Backbone (Efficient-YOLO)

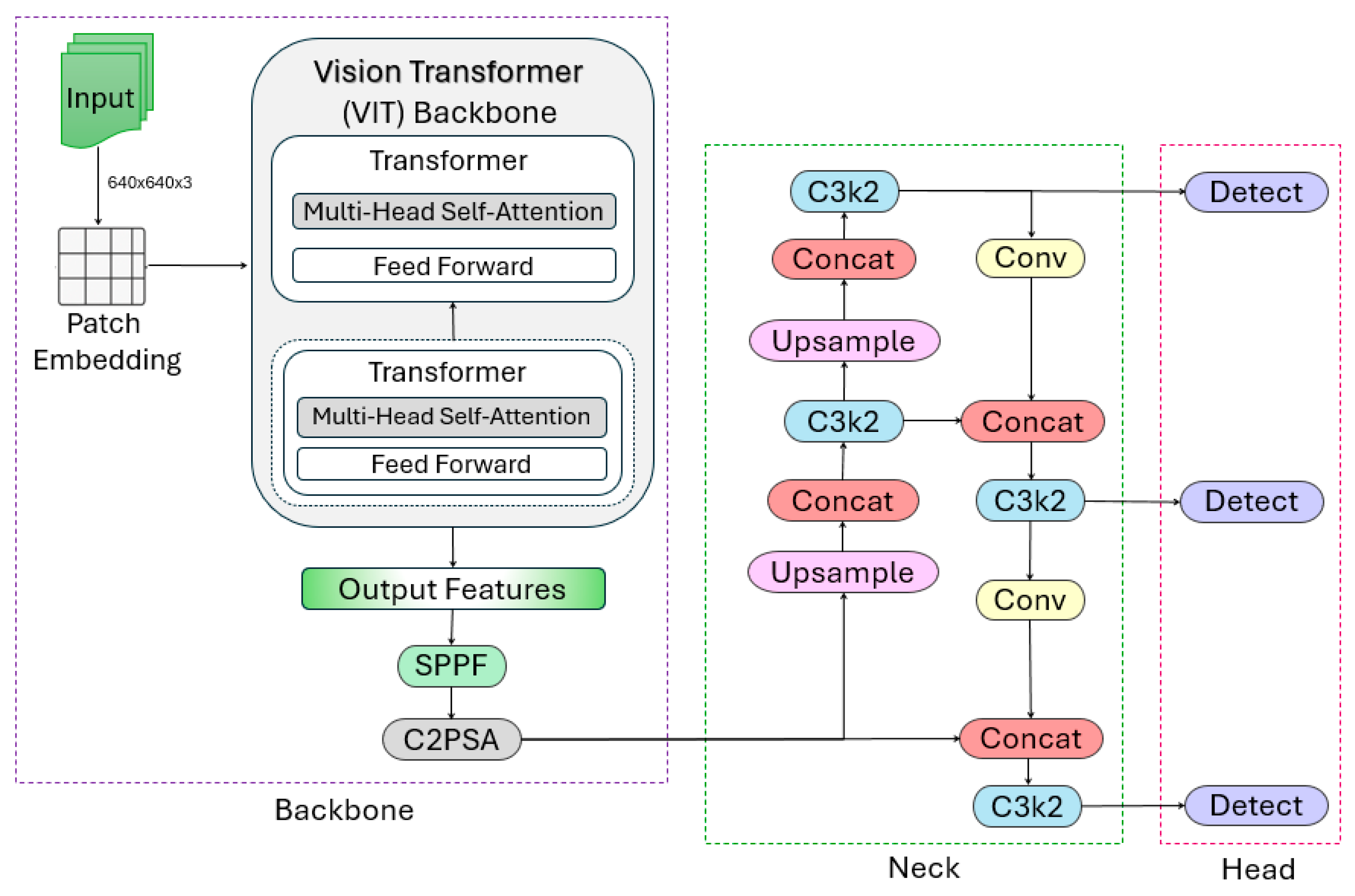

3.2.2. Self-Supervised Vision Transformer Backbone (DINOv2-YOLO)

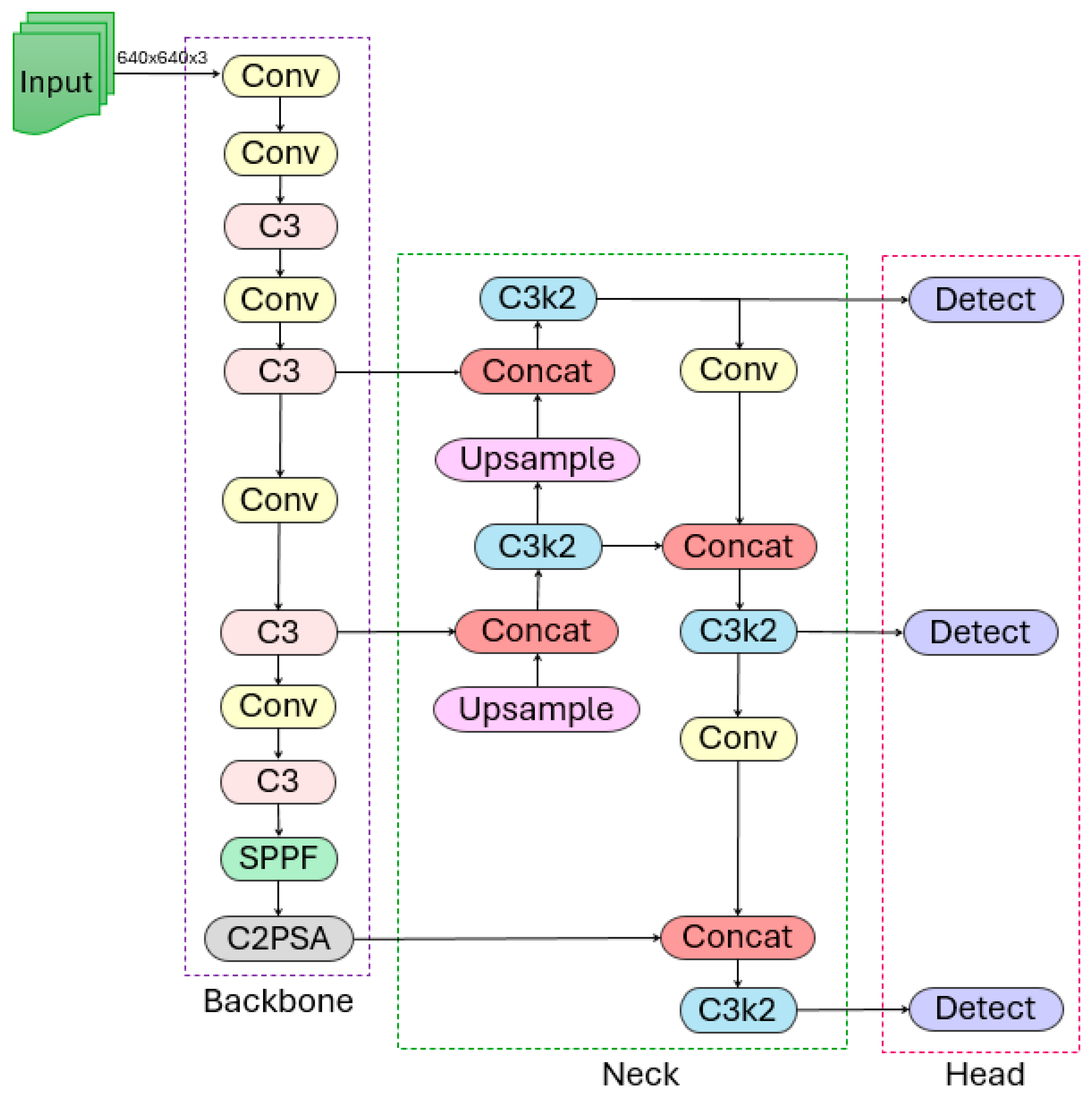

3.2.3. CSPDarknet Backbone (CSP-YOLO)

3.2.4. Proposed CSPDarknet with Sophia (SC-YOLO)

3.3. Sophia: Second-Order Clipped Stochastic Optimization

3.3.1. Motivation

3.3.2. Hessian Estimators

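| Algorithm 1: Hutchinson |
|---|
| 1: Input: parameter $\theta$. |
| 2: Compute mini-batch loss $L(\theta)$. |
| 3: Draw $u$ from $\mathcal{N}(0, I_d)$. |
| 4: Return $u \odot \nabla \langle \nabla L(\theta), u \rangle$. |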
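Algorithm 1 is Hutchinson's estimator as used in Sophia [14]. For a Gaussian probe $u \sim \mathcal{N}(0, I)$, the vector $u \odot (\nabla^2 L(\theta)\,u)$ has the Hessian diagonal as its expectation. The NumPy sketch below is illustrative only. It assumes a user-supplied gradient function `grad_fn` (a hypothetical name) and replaces the autodiff Hessian-vector product with a central finite difference of the gradient. It then checks the estimator on a toy quadratic whose Hessian is known.

```python
import numpy as np


def hutchinson_diag(grad_fn, theta, rng, fd_eps=1e-4):
    """One Hutchinson sample of diag(H) at theta: u * (H u) with u ~ N(0, I).

    Assumption: H u is approximated by a central finite difference of the
    gradient instead of the autodiff Hessian-vector product used by Sophia.
    """
    u = rng.standard_normal(theta.shape)
    # H u ≈ (∇L(θ + εu) - ∇L(θ - εu)) / (2ε)
    hvp = (grad_fn(theta + fd_eps * u) - grad_fn(theta - fd_eps * u)) / (2.0 * fd_eps)
    # Elementwise product; its expectation is diag(H) when hvp is exact.
    return u * hvp


# Usage on a toy quadratic L(θ) = ½ θᵀAθ, whose Hessian is A.
A = np.array([[2.0, 0.5, 0.0], [0.5, 1.0, 0.2], [0.0, 0.2, 3.0]])


def grad_fn(theta):
    return A @ theta


rng = np.random.default_rng(0)
theta = np.zeros(3)
estimate = np.mean([hutchinson_diag(grad_fn, theta, rng) for _ in range(20000)], axis=0)
print(estimate)  # approaches diag(A) = [2, 1, 3] as the number of samples grows
```

A single sample is noisy and can even be negative. This is one reason Sophia smooths the estimates with an exponential moving average and floors the denominator of its update.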

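| Algorithm 2: Gauss–Newton–Bartlett |
|---|
| 1: Input: parameter $\theta$. |
| 2: Draw a mini-batch of input $\{x_b\}_{b=1}^{B}$. |
| 3: Compute logits on the mini-batch: $\{f(\theta, x_b)\}_{b=1}^{B}$. |
| 4: Sample $\hat{y}_b \sim \mathrm{softmax}(f(\theta, x_b))$, $\forall b \in [B]$. |
| 5: Calculate $\hat{g} = \nabla \left( \frac{1}{B} \sum_{b=1}^{B} \ell_{\mathrm{ce}}(f(\theta, x_b), \hat{y}_b) \right)$. |
| 6: Return $B \cdot \hat{g} \odot \hat{g}$. |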
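The Gauss–Newton–Bartlett estimator in Algorithm 2 replaces the true labels with labels sampled from the model's own softmax output. As a result, $B\,\hat{g} \odot \hat{g}$ is, in expectation, the diagonal of the Gauss–Newton matrix of the mean cross-entropy loss. Below is a NumPy sketch for a hypothetical linear softmax classifier (logits $Wx$, no bias), not a YOLO detection head. The names `gnb_diag`, `W` and `X` are illustrative. The sketch compares a Monte Carlo average of the estimator with the closed-form Gauss–Newton diagonal of this toy model, $\frac{1}{B}\sum_b p_{bk}(1-p_{bk})\,x_{bj}^2$.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def gnb_diag(W, X, rng):
    """Gauss-Newton-Bartlett estimate of diag(H) for a linear softmax classifier.

    Assumptions: logits f(W, x) = W x with W of shape (K, D), a mini-batch X of
    shape (B, D), and a mean cross-entropy loss.
    """
    B, K = X.shape[0], W.shape[0]
    probs = softmax(X @ W.T)  # (B, K) predicted class probabilities
    # Sample ŷ_b ~ softmax(f(W, x_b)) by inverse-CDF sampling; the labels come
    # from the model itself, not from the ground truth.
    u = rng.random((B, 1))
    y_hat = np.minimum((probs.cumsum(axis=1) < u).sum(axis=1), K - 1)
    onehot = np.eye(K)[y_hat]
    # ĝ: gradient of (1/B) Σ_b CE(f(W, x_b), ŷ_b) with respect to W, shape (K, D)
    g_hat = (probs - onehot).T @ X / B
    return B * g_hat * g_hat


W = np.array([[0.2, -0.1], [0.5, 0.3], [-0.4, 0.7]])  # K = 3 classes, D = 2 features
X = np.array([[1.0, -0.5], [0.3, 0.8], [-1.2, 0.4], [0.6, 1.0]])  # B = 4 inputs
rng = np.random.default_rng(0)
mc = np.mean([gnb_diag(W, X, rng) for _ in range(20000)], axis=0)

# Closed-form Gauss-Newton diagonal for this toy model: (1/B) Σ_b p_bk (1 - p_bk) x_bj²
P = softmax(X @ W.T)
expected = (P * (1 - P)).T @ (X ** 2) / X.shape[0]
print(np.round(mc, 3))
print(np.round(expected, 3))
```

Unlike Hutchinson samples, every Gauss–Newton–Bartlett sample is non-negative, because it is a squared gradient.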

3.3.3. Complete Algorithm

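| Algorithm 3: Sophia |
|---|
| 1: Input: $\theta_1$, learning rate $\{\eta_t\}_{t=1}^{T}$, hyperparameters $\lambda, \beta_1, \beta_2, \gamma, \epsilon$, and estimator choice Estimator ∈ {Hutchinson, Gauss–Newton–Bartlett} |
| 2: Set $m_0 = 0$, $v_0 = 0$, $h_{1-k} = 0$ |
| 3: For $t = 1$ to $T$ do |
| 4: Compute mini-batch loss $L_t(\theta_t)$ |
| 5: Compute $g_t = \nabla L_t(\theta_t)$ |
| 6: $m_t = \beta_1 m_{t-1} + (1 - \beta_1) g_t$ |
| 7: If $t \bmod k = 1$ then |
| 8: Compute $\hat{h}_t = \mathrm{Estimator}(\theta_t)$ |
| 9: $h_t = \beta_2 h_{t-k} + (1 - \beta_2) \hat{h}_t$ |
| 10: Else |
| 11: $h_t = h_{t-1}$ |
| 12: $\theta_t = \theta_t - \eta_t \lambda \theta_t$ // weight decay |
| 13: $\theta_{t+1} = \theta_t - \eta_t \cdot \mathrm{clip}\left(m_t / \max\{\gamma \cdot h_t, \epsilon\}, 1\right)$ |
| 14: End For |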
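Algorithm 3 combines three elements: an exponential moving average of gradients, a diagonal Hessian estimate refreshed every $k$ steps, and element-wise clipping of the preconditioned update. The following NumPy sketch shows one way to code that loop for a single parameter array. It is not the SC-YOLO training code. The class name `SophiaSketch`, the default hyperparameters, and the update form $\theta \leftarrow \theta - \eta\,\mathrm{clip}(m/\max\{\gamma h, \epsilon\}, 1)$ are assumptions. The toy problem passes the exact Hessian diagonal as its estimator.

```python
import numpy as np


class SophiaSketch:
    """Minimal NumPy sketch of the Sophia update in Algorithm 3 (illustrative only).

    Assumptions: one parameter array, a caller-supplied diagonal-Hessian
    estimator (Hutchinson, Gauss-Newton-Bartlett, or exact in a toy problem),
    and the update clip(m / max(gamma * h, eps), 1). Default values are
    placeholders, not the SC-YOLO training settings.
    """

    def __init__(self, theta, lr=1e-3, betas=(0.9, 0.99), gamma=0.01,
                 eps=1e-12, weight_decay=0.0, k=10):
        self.theta = np.array(theta, dtype=float)
        self.lr, self.gamma, self.eps = lr, gamma, eps
        self.weight_decay, self.k = weight_decay, k
        self.beta1, self.beta2 = betas
        self.m = np.zeros_like(self.theta)  # EMA of gradients
        self.h = np.zeros_like(self.theta)  # EMA of diagonal Hessian estimates
        self.t = 0

    def step(self, grad, estimator):
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        if (self.t - 1) % self.k == 0:  # refresh h at t = 1, k + 1, 2k + 1, ...
            self.h = self.beta2 * self.h + (1 - self.beta2) * estimator(self.theta)
        # Otherwise h keeps its previous value.
        self.theta = self.theta - self.lr * self.weight_decay * self.theta  # decoupled weight decay
        ratio = self.m / np.maximum(self.gamma * self.h, self.eps)
        # Element-wise clipping bounds every coordinate's move by lr.
        self.theta = self.theta - self.lr * np.clip(ratio, -1.0, 1.0)
        return self.theta


# Toy usage: L(θ) = ½ Σ a_i θ_i² with a badly scaled diagonal Hessian.
a = np.array([1.0, 10.0, 100.0])
opt = SophiaSketch(np.ones(3), lr=0.01)
loss0 = 0.5 * np.sum(a * opt.theta ** 2)
max_move = 0.0
for _ in range(400):
    prev = opt.theta.copy()
    opt.step(a * opt.theta, lambda theta: a)  # exact diagonal Hessian as the estimator
    max_move = max(max_move, float(np.abs(opt.theta - prev).max()))
loss = 0.5 * np.sum(a * opt.theta ** 2)
print(loss0, loss, max_move)
```

In this toy problem both $m$ and $h$ scale with $a_i$, so the three coordinates move in lockstep despite a 100× spread in curvature. The clipping also guarantees that no coordinate moves by more than the learning rate in a single step.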

3.3.4. Theoretical Properties

3.3.5. Practical Considerations

3.4. Experimental Datasets

3.4.1. VOC2007-1 Dataset

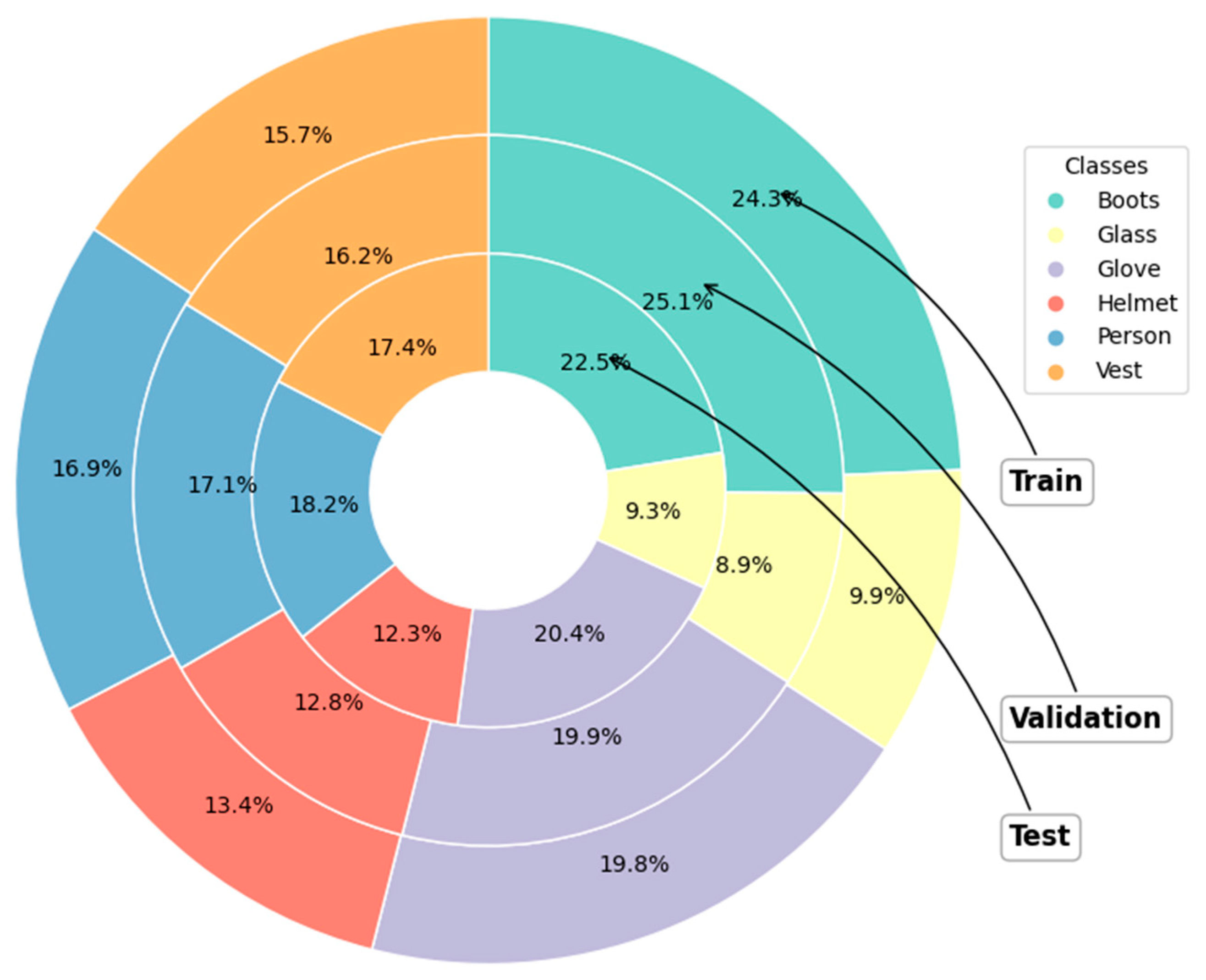

3.4.2. ML-31005 Dataset

3.5. Implementation Details

3.5.1. Computing Infrastructure

3.5.2. Training Protocol

3.5.3. Evaluation Metrics

3.6. Experimental Design

3.7. Ablation Study Design

4. Results and Discussion

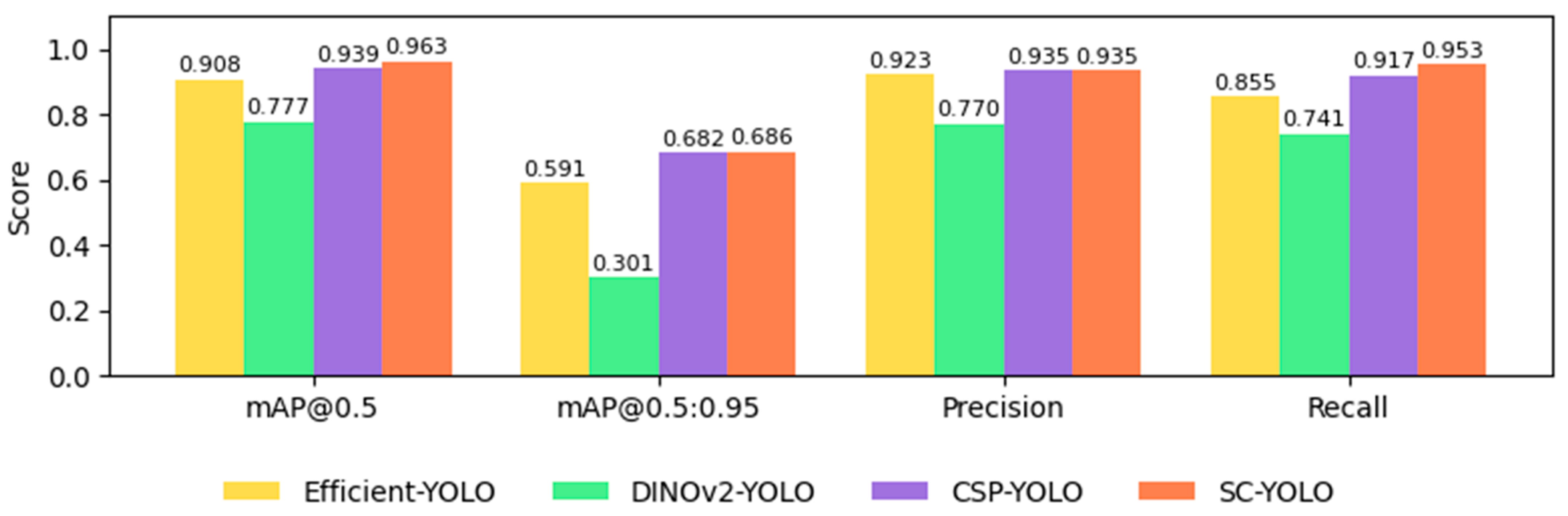

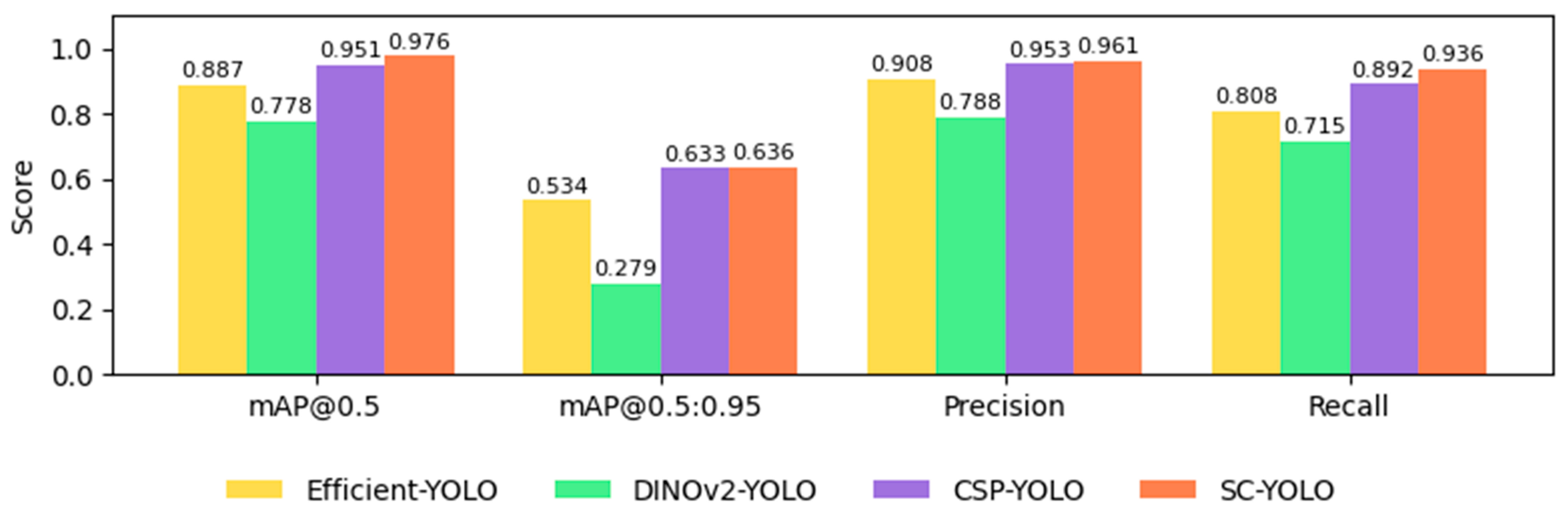

4.1. Model Architecture Comparison

4.2. Overall Detection Performance

4.3. Class-Wise Performance Analysis

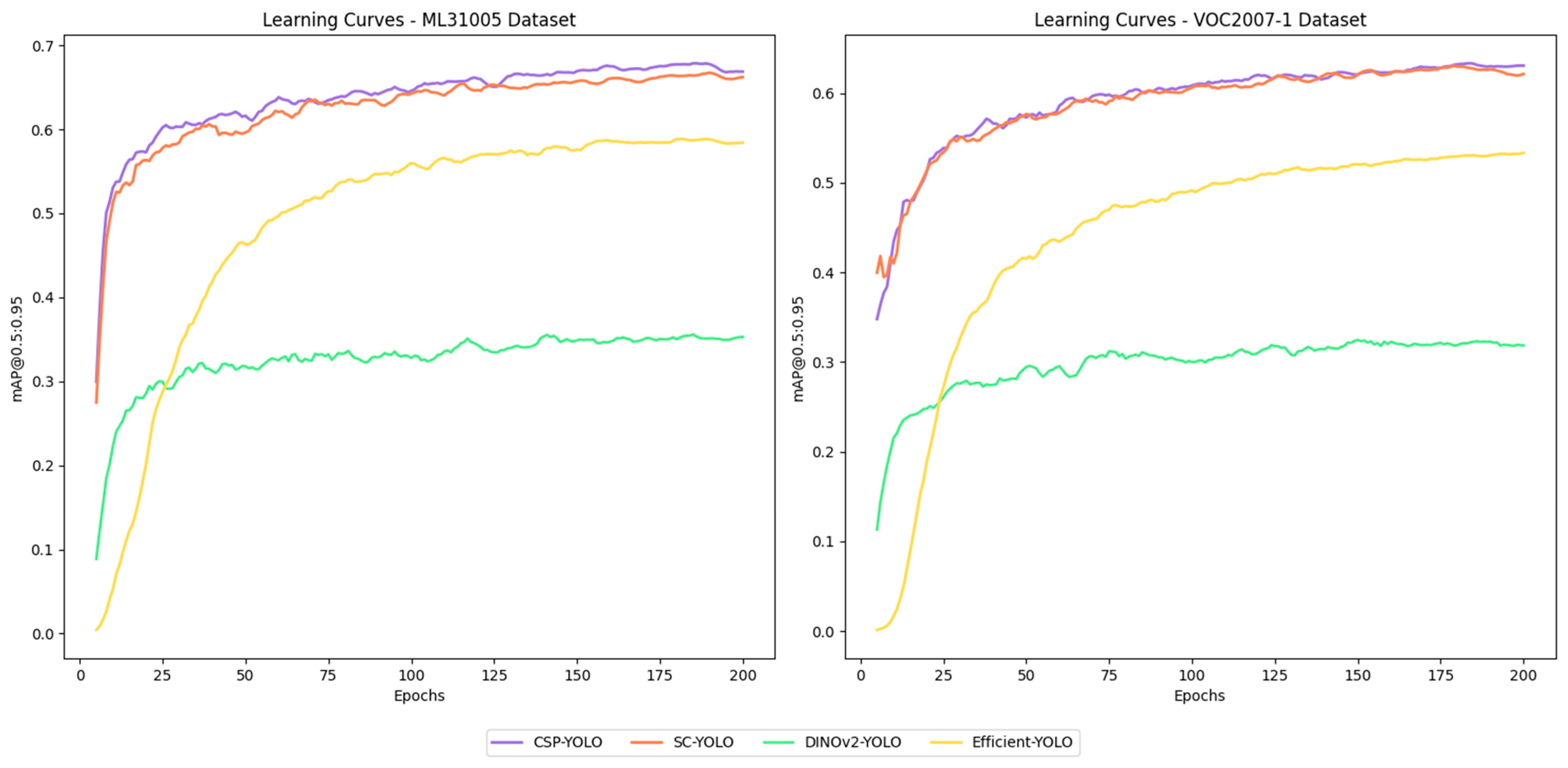

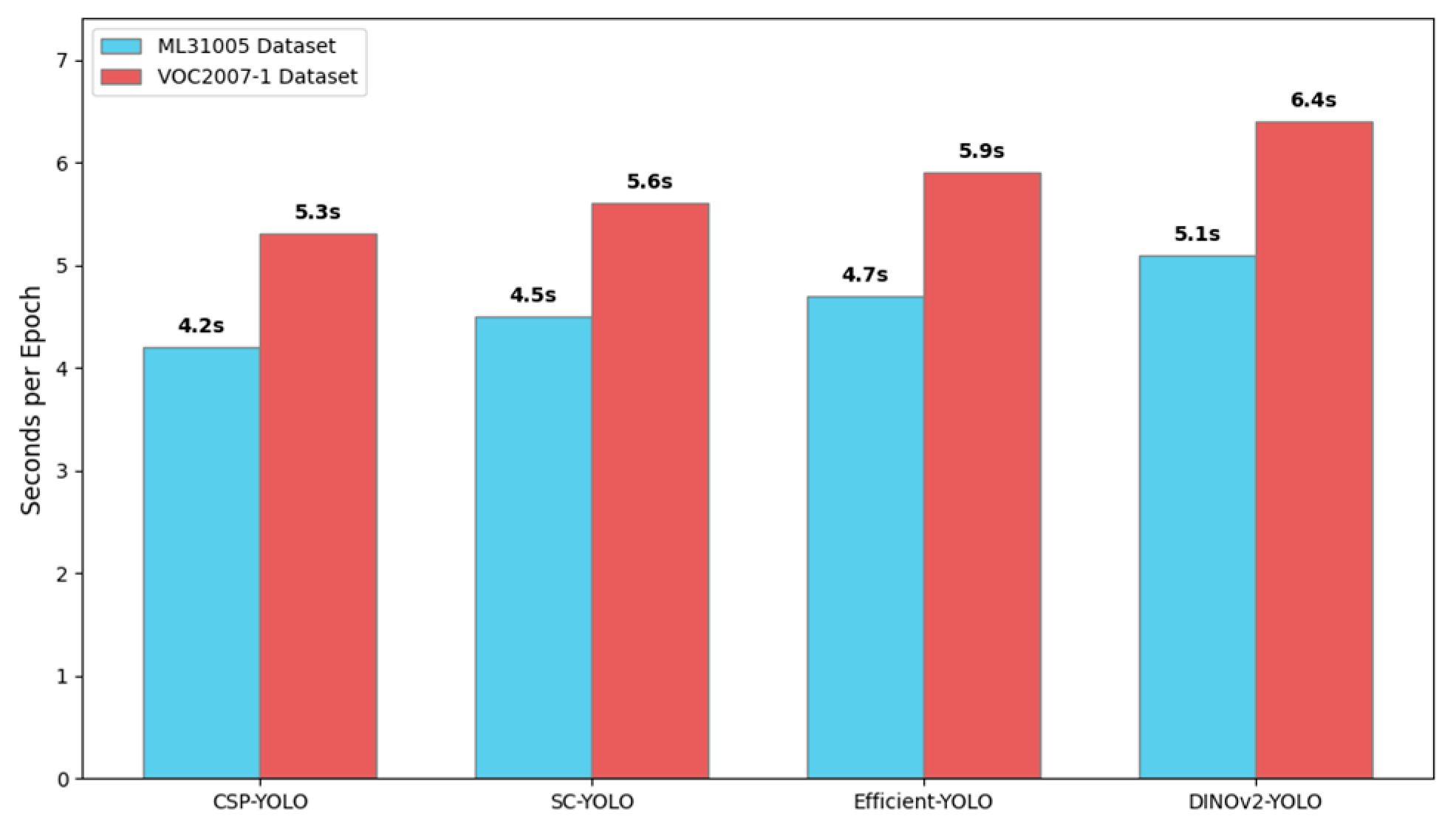

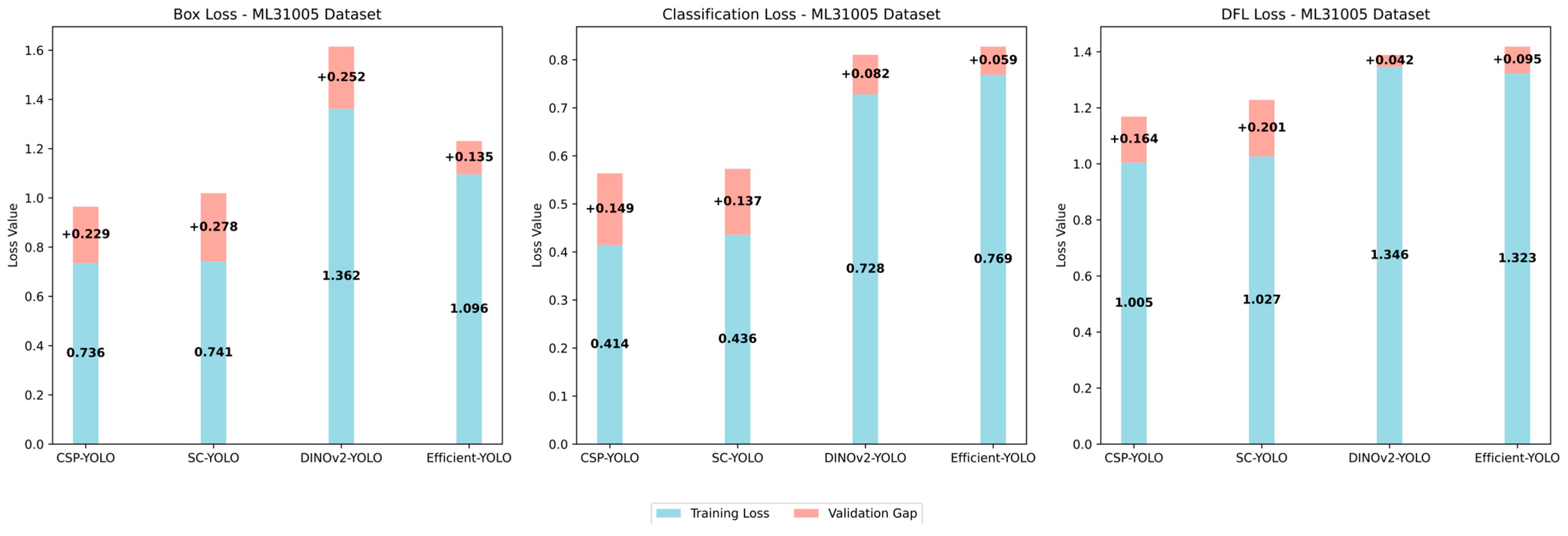

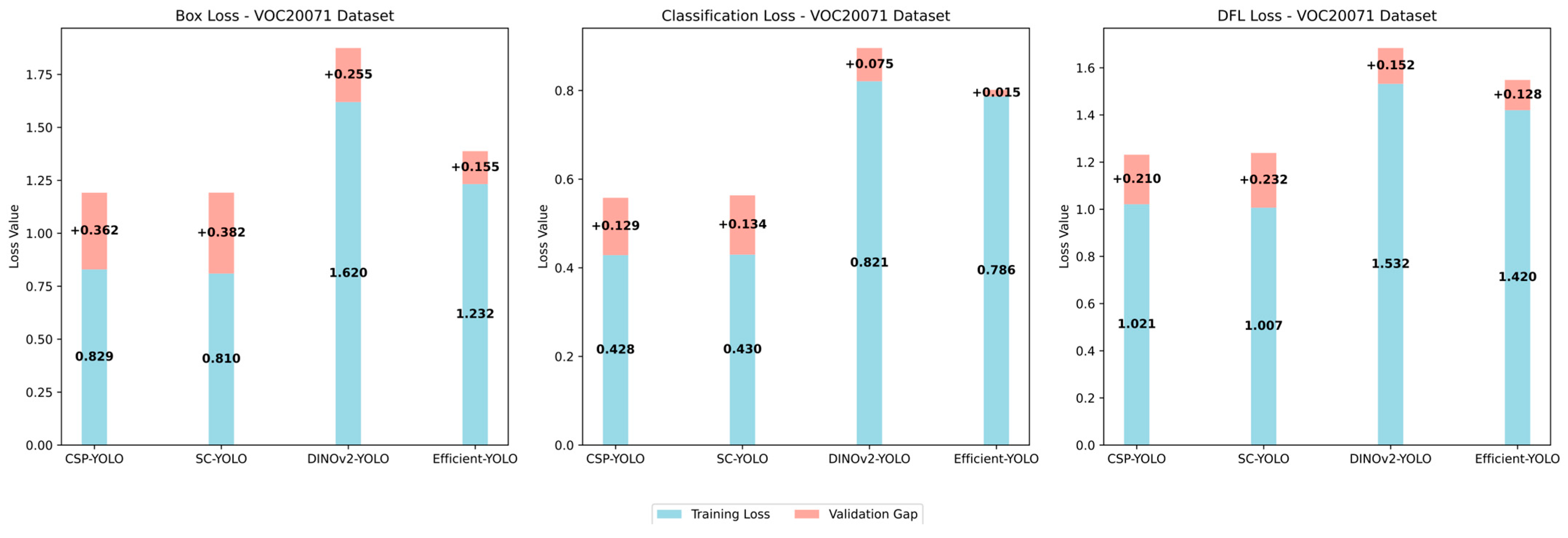

4.4. Training Dynamics and Efficiency

4.5. Generalization and Deployment Considerations

5. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Li, J.; Zhao, X.; Zhou, G.; Zhang, M. Standardized use inspection of workers’ personal protective equipment based on deep learning. Saf. Sci. 2022, 150, 105689.
2. Kumar, S.; Gupta, H.; Yadav, D.; Ansari, I.A.; Verma, O.P. YOLOv4 algorithm for the real-time detection of fire and personal protective equipments at construction sites. Multimed. Tools Appl. 2022, 81, 22163–22183.
3. Al-Azani, S.; Luqman, H.; Alfarraj, M.; Sidig, A.A.I.; Khan, A.H.; Al-Hammed, D. Real-Time Monitoring of Personal Protective Equipment Compliance in Surveillance Cameras. IEEE Access 2024, 12, 121882–121895.
4. Riaz, M.; He, J.; Xie, K.; Alsagri, H.S.; Moqurrab, S.A.; Alhakbani, H.A.A.; Obidallah, W.J. Enhancing Workplace Safety: PPE_Swin—A Robust Swin Transformer Approach for Automated Personal Protective Equipment Detection. Electronics 2023, 12, 4675.
5. Xie, B.; He, S.; Cao, X. Target detection for forward looking sonar image based on deep learning. In Proceedings of the 2022 41st Chinese Control Conference (CCC), Hefei, China, 25–27 July 2022; pp. 7191–7196.
6. Zhang, L.; Wang, J.; Wang, Y.; Sun, H.; Zhao, X. Automatic construction site hazard identification integrating construction scene graphs with BERT based domain knowledge. Autom. Constr. 2022, 142, 104535.
7. Song, Y.; Hong, S.; Hu, C.; He, P.; Tao, L.; Tie, Z.; Ding, C. MEB-YOLO: An Efficient Vehicle Detection Method in Complex Traffic Road Scenes. Comput. Mater. Contin. 2023, 75, 3.
8. He, L.; Zhou, Y.; Liu, L.; Ma, J. Research and Application of YOLOv11-Based Object Segmentation in Intelligent Recognition at Construction Sites. Buildings 2024, 14, 3777.
9. Zhao, J.; Miao, S.; Kang, R.; Cao, L.; Zhang, L.; Ren, Y. Insulator Defect Detection Algorithm Based on Improved YOLOv11n. Sensors 2025, 25, 1327.
10. Musarat, M.A.; Khan, A.M.; Alaloul, W.S.; Blas, N.; Ayub, S. Automated monitoring innovations for efficient and safe construction practices. Results Eng. 2024, 22, 102057.
11. Rasouli, S.; Alipouri, Y.; Chamanzad, S. Smart Personal Protective Equipment (PPE) for construction safety: A literature review. Saf. Sci. 2024, 170, 106368.
12. Abdel-Basset, M.; Mohamed, R.; Chang, V. A Multi-Criteria Decision-Making Framework to Evaluate the Impact of Industry 5.0 Technologies: Case Study, Lessons Learned, Challenges and Future Directions. Inf. Syst. Front. 2024, 27, 791–821.
13. Zeibak-Shini, R.; Malka, H.; Kima, O.; Shohet, I.M. Analytical Hierarchy Process for Construction Safety Management and Resource Allocation. Appl. Sci. 2024, 14, 9265.
14. Liu, H.; Li, Z.; Hall, D.; Liang, P.; Ma, T. Sophia: A scalable stochastic second-order optimizer for language model pre-training. arXiv 2023, arXiv:2305.14342.
15. Nath, N.D.; Behzadan, A.H.; Paal, S.G. Deep learning for site safety: Real-time detection of personal protective equipment. Autom. Constr. 2020, 112, 103085.
16. Wu, J.; Cai, N.; Chen, W.; Wang, H.; Wang, G. Automatic detection of hardhats worn by construction personnel: A deep learning approach and benchmark dataset. Autom. Constr. 2019, 106, 102894.
17. Chang, R.; Zhang, B.; Zhu, Q.; Zhao, S.; Yan, K.; Yang, Y. FFA-YOLOv7: Improved YOLOv7 Based on Feature Fusion and Attention Mechanism for Wearing Violation Detection in Substation Construction Safety. J. Electr. Comput. Eng. 2023, 2023, 9772652.
18. Zhang, L.; Sun, Z.; Tao, H.; Wang, M.; Yi, W. Research on Mine-Personnel Helmet Detection Based on Multi-Strategy-Improved YOLOv11. Sensors 2024, 25, 170.
19. Ban, Y.J.; Lee, S.; Park, J.; Kim, J.E.; Kang, H.S.; Han, S. Dinov2_Mask R-CNN: Self-supervised Instance Segmentation of Diabetic Foot Ulcers. In Diabetic Foot Ulcers Grand Challenge; Springer Nature Switzerland: Cham, Switzerland, 2024; pp. 17–28.
20. Paramonov, K.; Zhong, J.X.; Michieli, U.; Moon, J.; Ozay, M. Swiss dino: Efficient and versatile vision framework for on-device personal object search. In Proceedings of the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Abu Dhabi, United Arab Emirates, 14–18 October 2024; pp. 2564–2571.
21. Ferdous, M.; Ahsan, S.M.M. PPE detector: A YOLO-based architecture to detect personal protective equipment (PPE) for construction sites. PeerJ Comput. Sci. 2022, 8, e999.
22. Zhao, L.; Tohti, T.; Hamdulla, A. BDC-YOLOv5: A helmet detection model employs improved YOLOv5. Signal Image Video Process. 2023, 17, 4435–4445.
23. Li, H.; Wu, D.; Zhang, W.; Xiao, C. YOLO-PL: Helmet wearing detection algorithm based on improved YOLOv4. Digit. Signal Process. 2024, 144, 104283.
24. Nguyen, N.T.; Tran, Q.; Dao, C.H.; Nguyen, D.A.; Tran, D.H. Automatic detection of personal protective equipment in construction sites using metaheuristic optimized YOLOv5. Arab. J. Sci. Eng. 2024, 49, 13519–13537.
25. Yang, X.; Wang, J.; Dong, M. SDCB-YOLO: A High-Precision Model for Detecting Safety Helmets and Reflective Clothing in Complex Environments. Appl. Sci. 2024, 14, 7267.
26. Song, X.; Zhang, T.; Yi, W. An improved YOLOv8 safety helmet wearing detection network. Sci. Rep. 2024, 14, 17550.
27. Alkhammash, E.H. Multi-Classification Using YOLOv11 and Hybrid YOLO11n-MobileNet Models: A Fire Classes Case Study. Fire 2025, 8, 17.
28. Kim, D.; Xiong, S. Enhancing Worker Safety: Real-Time Automated Detection of Personal Protective Equipment to Prevent Falls from Heights at Construction Sites Using Improved YOLOv8 and Edge Devices. J. Constr. Eng. Manag. 2025, 151, 04024187.
29. Di, B.; Xiang, L.; Daoqing, Y.; Kaimin, P. MARA-YOLO: An efficient method for multiclass personal protective equipment detection. IEEE Access 2024, 12, 24866–24878.
30. Zhang, H.; Mu, C.; Ma, X.; Guo, X.; Hu, C. MEAG-YOLO: A Novel Approach for the Accurate Detection of Personal Protective Equipment in Substations. Appl. Sci. 2024, 14, 4766.
31. Chen, H.; Li, Y.; Wen, H.; Hu, X. YOLOv5s-gnConv: Detecting personal protective equipment for workers at height. Front. Public Health 2023, 11, 1225478.
32. Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.
33. Sun, Z.; Cui, Y.; Han, Y.; Jiang, K. Substation high-voltage switchgear detection based on improved EfficientNet-YOLOv5s model. IEEE Access 2024, 12, 60015–60027.
34. Li, R.; Wu, J.; Cao, L. Ship target detection of unmanned surface vehicle base on efficientdet. Syst. Sci. Control Eng. 2022, 10, 264–271.
35. Huang, G.; Zhou, Y.; Hu, X.; Zhang, C.; Zhao, L.; Gan, W. DINO-Mix enhancing visual place recognition with foundational vision model and feature mixing. Sci. Rep. 2024, 14, 22100.
36. Zhang, K.; Yuan, B.; Cui, J.; Liu, Y.; Zhao, L.; Zhao, H.; Chen, S. Lightweight tea bud detection method based on improved YOLOv5. Sci. Rep. 2024, 14, 31168.
37. Onososen, A.O.; Musonda, I.; Onatayo, D.; Saka, A.B.; Adekunle, S.A.; Onatayo, E. Drowsiness Detection of Construction Workers: Accident Prevention Leveraging Yolov8 Deep Learning and Computer Vision Techniques. Buildings 2025, 15, 500.
38. Ji, X.; Gong, F.; Yuan, X.; Wang, N. A high-performance framework for personal protective equipment detection on the offshore drilling platform. Complex Intell. Syst. 2023, 9, 5637–5652.
39. Yipeng, L.; Junwu, W. Personal protective equipment detection for construction workers: A novel dataset and enhanced YOLOv5 approach. IEEE Access 2024, 12, 47338–47358.
40. Han, D.; Ying, C.; Tian, Z.; Dong, Y.; Chen, L.; Wu, X.; Jiang, Z. YOLOv8s-SNC: An Improved Safety-Helmet-Wearing Detection Algorithm Based on YOLOv8. Buildings 2024, 14, 3883.
41. Park, S.; Kim, J.; Wang, S.; Kim, J. Effectiveness of Image Augmentation Techniques on Non-Protective Personal Equipment Detection Using YOLOv8. Appl. Sci. 2025, 15, 2631.
42. Alkhammash, E.H. A Comparative Analysis of YOLOv9, YOLOv10, YOLOv11 for Smoke and Fire Detection. Fire 2025, 8, 26.
43. Liu, J.; Zhao, J.; Cao, Y.; Wang, Y.; Dong, C.; Guo, C. Road manhole cover defect detection via multi-scale edge enhancement and feature aggregation pyramid. Sci. Rep. 2025, 15, 10346.
44. Yang, L.; Chen, G.; Liu, J.; Guo, J. Wear State Detection of Conveyor Belt in Underground Mine Based on Retinex-YOLOv8-EfficientNet-NAM. IEEE Access 2024, 12, 25309–25324.
45. Li, K.; Zhu, J.; Li, N. Lightweight automatic identification and location detection model of farmland pests. Wirel. Commun. Mob. Comput. 2021, 2021, 9937038.
46. Fan, J.; Cui, L.; Fei, S. Waste detection system based on data augmentation and YOLO_EC. Sensors 2023, 23, 3646.
47. Rabbani, N.; Bartoli, A. Can surgical computer vision benefit from large-scale visual foundation models? Int. J. Comput. Assist. Radiol. Surg. 2024, 19, 1157–1163.
48. Chen, F.; Giuffrida, M.V.; Tsaftaris, S.A. Adapting vision foundation models for plant phenotyping. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 604–613.
49. Käppeler, M.; Petek, K.; Vödisch, N.; Burgard, W.; Valada, A. Few-shot panoptic segmentation with foundation models. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; pp. 7718–7724.
50. Luo, Z.; Feng, T.; Li, G. Robust vision-based traffic anomaly detection: DINOv2 driven gated recurrent unit network. Adv. Transdiscip. Eng. 2024, 27, 261–268.
51. Ge, Y.; Meng, L. A Powerful Object Detection Network for Industrial Anomaly Detection. In Proceedings of the 2024 6th International Conference on Industrial Artificial Intelligence (IAI), Shenyang, China, 21–24 August 2024; pp. 1–6.
52. Qin, Y.; Kou, Z.; Han, C.; Wang, Y. Intelligent Gangue Sorting System Based on Dual-Energy X-ray and Improved YOLOv5 Algorithm. Appl. Sci. 2023, 14, 98.
53. Jeon, Y.D.; Kang, M.J.; Kuh, S.U.; Cha, H.Y.; Kim, M.S.; You, J.Y.; Yoon, D.K. Deep learning model based on you only look once algorithm for detection and visualization of fracture areas in three-dimensional skeletal images. Diagnostics 2023, 14, 11.
54. Zhou, J.; Xu, D.; Min, X.; Wu, D. An Improved Underwater Target Detection Algorithm Based on YOLOX. In Proceedings of the OCEANS 2024-Singapore, Singapore, 15–18 April 2024; pp. 1–7.
55. Deng, X.; Qi, L.; Liu, Z.; Liang, S.; Gong, K.; Qiu, G. Weed target detection at seedling stage in paddy fields based on YOLOX. PLoS ONE 2023, 18, e0294709.
56. Yue, X.; Li, H.; Meng, L. An ultralightweight object detection network for empty-dish recycling robots. IEEE Trans. Instrum. Meas. 2023, 72, 1–12.
57. Ultralytics. YOLOv8 Documentation. Available online: https://docs.ultralytics.com/models/yolov8/ (accessed on 25 July 2025).
58. Ultralytics. YOLOv5: A State-of-the-Art Real-Time Object Detection System. Available online: https://github.com/ultralytics/yolov5 (accessed on 25 July 2025).
59. Xiong, R.; Tang, P. Pose guided anchoring for detecting proper use of personal protective equipment. Autom. Constr. 2021, 130, 103828. Available online: https://github.com/ruoxinx/PPE-Detection-Pose (accessed on 18 March 2025).
60. LukeHowardUTS. ML 31005 Dataset. Available online: https://universe.roboflow.com/lukehowarduts/ml-31005 (accessed on 18 March 2025).
61. Xu, X.; Wu, X. Target recognition algorithm for UAV aerial images based on improved YOLO-X. In Proceedings of the 2023 IEEE 5th International Conference on Civil Aviation Safety and Information Technology (ICCASIT), Dali, China, 11–13 October 2023; pp. 83–87.
62. Lin, M.; Ma, L.; Yu, B. An efficient and light-weight detector for wine bottle defects. In Proceedings of the 2020 16th International Conference on Control, Automation, Robotics and Vision (ICARCV), Shenzhen, China, 13–15 December 2020; pp. 957–962.
63. Zheng, L.; Long, L.; Zhu, C.; Jia, M.; Chen, P.; Tie, J. A lightweight cotton field weed detection model enhanced with EfficientNet and attention mechanisms. Agronomy 2024, 14, 2649.

| Study | Model | YOLO Base | Key Enhancements | Optimizer | PPE Classes | Dataset | mAP@0.5 | Notable Contribution |
|---|---|---|---|---|---|---|---|---|
| Kumar et al. [2] | - | YOLOv4 | CSPDarknet53 + SPP + PANet | Standard | Fire, Person_With_Helmet, Person, Safety Vest, Fire Extinguisher, Safety Glass | Custom | 76.86% | Real-time multi-class PPE and fire detection |
| Chang et al. [17] | FFA-YOLOv7 | YOLOv7 | Feature Fusion + Attention | Standard | Ladder, Insulator, Helmet (with and without), Safety Belt (with and without) | Custom substation dataset | 98.16% | Feature fusion pathway combining shallow positional features with deep semantic features |
| Zhao et al. [22] | BDC-YOLOv5 | YOLOv5x | BiFPN, CBAM Attention, Extra Head | SGD | Helmet | SHWD | 94.50% | BiFPN + CBAM + 160 × 160 head for dense scenes |
| Ji et al. [38] | RFA-YOLO | YOLOv4 | Residual Feature Augmentation | SGD | Person, Helmet, Workwear | Offshore Platform | 88.41% | Hybrid detection-classification with position features |
| Chen et al. [31] | YOLOv5s-gnConv | YOLOv5s | gnConv (Gated Convolution) | Standard | Helmet, Safety Harness | Custom | 92.96% | Higher-order spatial interactions through gated convolution |
| Yipeng and Junwu [39] | AL-YOLOv5 | YOLOv5 | Coordinate Attention + SEIoU Loss | Standard | Hardhat, Person, Reflective Clothes, Other Clothes | Custom | 93.8% | Solving overlapping detection frames |
| Di et al. [29] | MARA-YOLO | YOLOv8-s | MobileOne-S0, AS-Block, R-C2F, RASFF | Adam | Hardhat, Mask, No_Head_PPE, Gloves, No_Gloves, Safety Vest, No_Safety Vest, No_PPEs, Safety Cone | KSE-PPE | 74.7% | MobileOne and receptive field fusion for multi-class detection |
| Yang et al. [25] | SDCB-YOLO | YOLOv8n | SE Attention, DIOU Loss, CARAFE, BiFPN | Standard | Safe, Unsafe, No_Helmet, No_Jacket | Custom | 97.1% | Lightweight upsampling and attention for cluttered scenes |
| Song et al. [26] | - | YOLOv8 | DWR Attention, ASPP, NWD Loss | Anchor-free + NWD | Helmet, No_Helmet | SHWD | 92.0% | Small, distant helmet detection in complex scenes |
| Li et al. [23] | YOLO-PL | YOLOv4 | DCSPX, E-PAN, L-VoVN, MP, Swish | CSPDarknet53 | Helmet | SHWD, SHD, MHD | 94.23% | Lightweight variant for small-helmet detection |
| Nguyen et al. [24] | - | YOLOv5s | Four-scale Detection | Seahorse Optimization | Gloves, Hardhat, Mask, Safety Vest, Shoes, No_Gloves, No_Hardhat, No_Mask, No_Safety Vest, No_Shoes | Custom | 66.4% | Metaheuristic optimization for small/missing PPE |
| Zhang et al. [30] | MEAG-YOLO | YOLOv8n | MSCA, EC2f, ASFF, GhostConv, PAN | Standard | Helmet, Person, Badge, Gloves, Operating Bar, Wrong Gloves | Substation | 96.5% | Multi-attention and fusion modules for efficient detection |
| Han et al. [40] | YOLOv8s-SNC | YOLOv8s | SPD-Conv Module + SEResNeXt Detection Head + C2f-CA Module + Small Object Detection Layer (4P) | SGD | Helmet, No_Helmet | SHWD + EWHD | 92.6% | Enhanced small-object detection via SPD-Conv (reduced information loss), an SEResNeXt head (stronger feature extraction), and a dedicated small-target layer for complex construction sites |
| He et al. [8] | YOLOv11-Seg | YOLOv11 | C3K2 Module + C2PSA (Cross-Stage Partial Self-Attention) + DWConv (Depthwise Convolution) + CSPDarknet Backbone | AdamW | Bulldozer, Concrete Mixer, Crane, Excavator, Hanging Head, Loader, Other Vehicle, Pile Driving, Pump Truck, Roller, Static Crane, Truck, Worker | SODA + MOCS | 80.8% | Real-time multi-object segmentation for construction sites, robust in dynamic scenarios at 1080p resolution |
| Park et al. [41] | - | YOLOv8 | ViT, Swin, and PVT | Standard | No_Helmet, No_Mask, No_Gloves, No_Vest, No_Shoes | Custom | 73.12% (PVT) | Brightness and scale augmentation for PPE absence detection |
| Kim and Xiong [28] | - | YOLOv8s | CA module, GhostConv, Transfer Learning, Merge-NMS | SGD | Helmet, No_Helmet, Harness, No_Harness, Lanyard | Custom | 92.52% | Edge-based detection of fall-prevention PPE |
| This study (2025) | SC-YOLO | YOLOv11n | CSPDarknet + Sophia Optimizer | Sophia (Second-order) | Boots, Glass, Gloves, Helmet, Person, Vest | VOC2007-1, ML-31005 | 96.3–97.6% | Second-order optimization for robust small-object detection |

| Backbone | Key Features | Optimization Method | Strengths | Limitations | Primary Applications |
|---|---|---|---|---|---|
| EfficientNet | Compound scaling, MBConv blocks | Standard (SGD) | Balanced efficiency–accuracy trade-off, parameter efficiency | Moderate feature representation power | Resource-constrained detection |
| DINOv2 | Self-supervised learning, Transformer architecture | Standard (SGD) | Long-range dependencies, strong semantic representation | High computational cost, localization challenges | Semantic segmentation, context-rich scenarios |
| CSPDarknet | Cross-stage partial connections, feature reuse | Standard (SGD) | Efficient gradient flow, feature integrity | Standard optimization limitations | Real-time detection, edge deployment |
| SC-YOLO (This study) | CSPDarknet backbone, curvature-aware updates | Sophia (second-order) | Enhanced small-object detection, faster convergence | Marginally increased computation | Construction PPE monitoring |
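The backbone comparison credits CSPDarknet's efficient gradient flow to cross-stage partial connections. The NumPy sketch below illustrates that idea in rough form; it does not reproduce the YOLOv11n implementation. It splits the channels of a feature map in two and sends only one half through a stack of layers. The untouched half is then concatenated back before a transition layer. The 1×1-convolution-only layers, the SiLU activations, and names such as `csp_block` are hypothetical simplifications.

```python
import numpy as np


def conv1x1(x, w):
    """1x1 convolution as channel mixing: x is (C_in, H, W), w is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def silu(x):
    return x / (1.0 + np.exp(-x))


def csp_block(x, inner_weights, w_transition):
    """Toy cross-stage partial block: split channels, transform one part, merge.

    Hypothetical simplification: the transformed path is a stack of residual
    1x1 layers; real CSPDarknet/YOLO blocks use 3x3 convs, batch norm, etc.
    """
    c = x.shape[0]
    shortcut, path = x[: c // 2], x[c // 2:]  # partial split along channels
    for w in inner_weights:  # only this half goes through the stage
        path = path + silu(conv1x1(path, w))
    merged = np.concatenate([shortcut, path], axis=0)  # cross-stage merge
    return silu(conv1x1(merged, w_transition))  # transition layer


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16, 16))  # 8 channels, 16 x 16 feature map
inner = [0.1 * rng.standard_normal((4, 4)) for _ in range(2)]
w_t = 0.1 * rng.standard_normal((8, 8))
y = csp_block(x, inner, w_t)
print(y.shape)  # (8, 16, 16)
```

The bypassed half reaches the transition layer unchanged. As a result, the stage's computation and its internal gradient path cover only half of the channels.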

| Dataset | Images | Instances | Classes | Conditions | PPE Types | Adaptability for Construction Use |
|---|---|---|---|---|---|---|
| VOC2007-1 | 900 | 7223 | 3 | Mixed environments (indoor/outdoor), diverse lighting | Hardhat, Vest, Worker | Moderate |
| ML-31005 | 527 | 3591 | 6 | Outdoor daylight, indoor artificial lighting | Boots, Glass, Gloves, Helmet, Person, Vest | High |

| Class | Train Images | Train Instances | Validation Images | Validation Instances | Test Images | Test Instances | Total Images | Total Instances |
|---|---|---|---|---|---|---|---|---|
| All | 625 | 4965 | 183 | 1374 | 92 | 884 | 900 | 7223 |
| Hardhat | 569 | 1803 | 167 | 514 | 82 | 309 | 818 | 2626 |
| Vest | 307 | 908 | 84 | 212 | 49 | 170 | 440 | 1290 |
| Worker | 625 | 2254 | 183 | 648 | 92 | 405 | 900 | 3307 |
| Set Distribution | | 68.7% | | 19.0% | | 12.3% | | 100% |

| Class | Train Images | Train Instances | Validation Images | Validation Instances | Test Images | Test Instances | Total Images | Total Instances |
|---|---|---|---|---|---|---|---|---|
| All | 369 | 2472 | 80 | 549 | 78 | 570 | 527 | 3591 |
| Boots | 290 | 601 | 68 | 138 | 65 | 128 | 423 | 867 |
| Glass | 232 | 244 | 48 | 49 | 50 | 53 | 330 | 346 |
| Glove | 262 | 489 | 58 | 109 | 60 | 116 | 380 | 714 |
| Helmet | 276 | 331 | 55 | 70 | 60 | 70 | 391 | 471 |
| Person | 347 | 419 | 74 | 94 | 74 | 104 | 495 | 617 |
| Vest | 326 | 388 | 73 | 89 | 74 | 99 | 473 | 576 |
| Set Distribution | | 68.8% | | 15.3% | | 15.9% | | 100% |
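The set-distribution rows match shares of annotated instances rather than images. For example, 2472 of the 3591 ML-31005 instances fall in the training split (68.8%), whereas 369 of 527 images would give 70.0%. The short Python check below recomputes those shares from the instance counts in the two split tables. Computed on its own, the VOC2007-1 test share rounds to 12.2%. The table's 12.3% appears to be rounded so that the three shares total 100%.

```python
# Instance counts per split, copied from the two split tables.
splits = {
    "VOC2007-1": {"train": 4965, "validation": 1374, "test": 884},
    "ML-31005": {"train": 2472, "validation": 549, "test": 570},
}


def split_shares(counts):
    """Percentage of instances in each split, rounded to one decimal place."""
    total = sum(counts.values())
    return {name: round(100.0 * n / total, 1) for name, n in counts.items()}


for dataset, counts in splits.items():
    print(dataset, sum(counts.values()), split_shares(counts))
```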

| Model | Class | Images | Instances | Precision | Recall | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|---|---|---|---|---|
| Efficient-YOLO | All | 183 | 1374 | 0.887 | 0.808 | 0.887 | 0.534 |
| Efficient-YOLO | Hardhat | 167 | 514 | 0.915 | 0.794 | 0.879 | 0.495 |
| Efficient-YOLO | Vest | 84 | 212 | 0.822 | 0.811 | 0.871 | 0.539 |
| Efficient-YOLO | Worker | 183 | 648 | 0.924 | 0.819 | 0.912 | 0.567 |
| DINOv2-YOLO | All | 183 | 1374 | 0.788 | 0.715 | 0.778 | 0.279 |
| DINOv2-YOLO | Hardhat | 167 | 514 | 0.727 | 0.675 | 0.661 | 0.212 |
| DINOv2-YOLO | Vest | 84 | 212 | 0.802 | 0.689 | 0.789 | 0.277 |
| DINOv2-YOLO | Worker | 183 | 648 | 0.835 | 0.781 | 0.883 | 0.348 |
| CSP-YOLO | All | 183 | 1374 | 0.953 | 0.892 | 0.951 | 0.633 |
| CSP-YOLO | Hardhat | 167 | 514 | 0.974 | 0.876 | 0.939 | 0.567 |
| CSP-YOLO | Vest | 84 | 212 | 0.945 | 0.890 | 0.955 | 0.640 |
| CSP-YOLO | Worker | 183 | 648 | 0.940 | 0.910 | 0.960 | 0.692 |
| SC-YOLO | All | 183 | 1374 | 0.961 | 0.936 | 0.976 | 0.636 |
| SC-YOLO | Hardhat | 167 | 514 | 0.976 | 0.924 | 0.968 | 0.563 |
| SC-YOLO | Vest | 84 | 212 | 0.955 | 0.927 | 0.973 | 0.647 |
| SC-YOLO | Worker | 183 | 648 | 0.953 | 0.959 | 0.986 | 0.694 |

| Model | Class | Images | Instances | Precision | Recall | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|---|---|---|---|---|
| Efficient-YOLO | All | 80 | 549 | 0.923 | 0.855 | 0.908 | 0.591 |
| Efficient-YOLO | Boots | 68 | 138 | 0.977 | 0.870 | 0.908 | 0.639 |
| Efficient-YOLO | Glass | 48 | 49 | 0.869 | 0.898 | 0.889 | 0.441 |
| Efficient-YOLO | Glove | 58 | 109 | 0.895 | 0.860 | 0.882 | 0.495 |
| Efficient-YOLO | Helmet | 55 | 70 | 0.963 | 0.750 | 0.887 | 0.591 |
| Efficient-YOLO | Person | 74 | 94 | 0.898 | 0.843 | 0.913 | 0.677 |
| Efficient-YOLO | Vest | 73 | 89 | 0.934 | 0.910 | 0.967 | 0.702 |
| DINOv2-YOLO | All | 80 | 549 | 0.770 | 0.741 | 0.777 | 0.301 |
| DINOv2-YOLO | Boots | 68 | 138 | 0.863 | 0.797 | 0.878 | 0.290 |
| DINOv2-YOLO | Glass | 48 | 49 | 0.412 | 0.408 | 0.325 | 0.0639 |
| DINOv2-YOLO | Glove | 58 | 109 | 0.776 | 0.761 | 0.780 | 0.210 |
| DINOv2-YOLO | Helmet | 55 | 70 | 0.864 | 0.816 | 0.874 | 0.354 |
| DINOv2-YOLO | Person | 74 | 94 | 0.783 | 0.787 | 0.862 | 0.374 |
| DINOv2-YOLO | Vest | 73 | 89 | 0.918 | 0.876 | 0.946 | 0.517 |
| CSP-YOLO | All | 80 | 549 | 0.935 | 0.917 | 0.939 | 0.682 |
| CSP-YOLO | Boots | 68 | 138 | 0.960 | 0.906 | 0.942 | 0.734 |
| CSP-YOLO | Glass | 48 | 49 | 0.833 | 0.916 | 0.853 | 0.485 |
| CSP-YOLO | Glove | 58 | 109 | 0.934 | 0.890 | 0.944 | 0.619 |
| CSP-YOLO | Helmet | 55 | 70 | 0.946 | 0.943 | 0.958 | 0.705 |
| CSP-YOLO | Person | 74 | 94 | 0.956 | 0.925 | 0.946 | 0.756 |
| CSP-YOLO | Vest | 73 | 89 | 0.982 | 0.921 | 0.990 | 0.790 |
| SC-YOLO | All | 80 | 549 | 0.935 | 0.953 | 0.963 | 0.686 |
| SC-YOLO | Boots | 68 | 138 | 0.972 | 0.933 | 0.956 | 0.746 |
| SC-YOLO | Glass | 48 | 49 | 0.832 | 0.988 | 0.930 | 0.467 |
| SC-YOLO | Glove | 58 | 109 | 0.967 | 0.917 | 0.954 | 0.613 |
| SC-YOLO | Helmet | 55 | 70 | 0.981 | 0.971 | 0.974 | 0.712 |
| SC-YOLO | Person | 74 | 94 | 0.928 | 0.909 | 0.951 | 0.778 |
| SC-YOLO | Vest | 73 | 89 | 0.930 | 0.995 | 0.997 | 0.799 |
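The results tables report precision, recall, mAP@0.5 and mAP@0.5:0.95. The sketch below illustrates how these averages are usually formed. It is not the exact evaluator used in this study, whose details, such as the precision–recall interpolation, may differ. It computes all-point interpolated AP for one class using VOC-style greedy matching, then averages AP over the ten IoU thresholds 0.50:0.05:0.95. The toy boxes are invented for illustration. The last lines reproduce the SC-YOLO "All" mAP@0.5 on VOC2007-1 as the unweighted mean of its three per-class values.

```python
import numpy as np


def box_iou(box, boxes):
    """IoU of one (x1, y1, x2, y2) box against an (N, 4) array of boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)

    def area(b):
        return (b[..., 2] - b[..., 0]) * (b[..., 3] - b[..., 1])

    return inter / (area(box) + area(boxes) - inter)


def average_precision(pred_boxes, scores, gt_boxes, iou_thr):
    """All-point interpolated AP for one class in one image (VOC-style greedy matching)."""
    order = np.argsort(-scores)  # highest confidence first
    matched = np.zeros(len(gt_boxes), dtype=bool)
    tp = np.zeros(len(order))
    for rank, i in enumerate(order):
        ious = box_iou(pred_boxes[i], gt_boxes)
        j = int(np.argmax(ious))
        if ious[j] >= iou_thr and not matched[j]:
            matched[j] = True  # each ground-truth box can be matched only once
            tp[rank] = 1.0
    cum_tp = np.cumsum(tp)
    recall = cum_tp / len(gt_boxes)
    precision = cum_tp / np.arange(1, len(order) + 1)
    # Precision envelope (monotonically non-increasing), integrated over recall.
    r = np.concatenate([[0.0], recall, [1.0]])
    p = np.concatenate([[1.0], precision, [0.0]])
    p = np.maximum.accumulate(p[::-1])[::-1]
    return float(np.sum((r[1:] - r[:-1]) * p[1:]))


def map_50_95(pred_boxes, scores, gt_boxes):
    """Mean AP over the ten IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = np.linspace(0.5, 0.95, 10)
    return float(np.mean([average_precision(pred_boxes, scores, gt_boxes, t) for t in thresholds]))


# Toy example: two ground-truth boxes and three detections for one class.
gt = np.array([[0, 0, 10, 10], [20, 20, 30, 30]], dtype=float)
preds = np.array([[0, 0, 10, 10], [21, 21, 31, 31], [50, 50, 60, 60]], dtype=float)
conf = np.array([0.9, 0.8, 0.3])
ap50 = average_precision(preds, conf, gt, 0.5)  # second detection has IoU 81/119 ≈ 0.68
map5095 = map_50_95(preds, conf, gt)  # AP is 1.0 up to IoU 0.65 and 0.5 from 0.70 on
print(ap50, map5095)  # 1.0 0.7

# VOC2007-1 "All" mAP@0.5 for SC-YOLO as the unweighted mean of the per-class values.
class_mean = float(np.mean([0.968, 0.973, 0.986]))  # Hardhat, Vest, Worker
print(round(class_mean, 3))  # 0.976
```

mAP@0.5:0.95 rewards tight localization: in the toy example, the slightly shifted second detection still counts at IoU 0.5 but is rejected at stricter thresholds. As a result, mAP@0.5:0.95 is consistently lower than mAP@0.5 in both results tables.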

| Model | Set | VOC2007-1 Box Loss | VOC2007-1 Cls Loss | VOC2007-1 DFL Loss | ML-31005 Box Loss | ML-31005 Cls Loss | ML-31005 DFL Loss |
|---|---|---|---|---|---|---|---|
| Efficient-YOLO | Train | 1.232 | 0.786 | 1.420 | 1.096 | 0.769 | 1.323 |
| Efficient-YOLO | Validation | 1.387 | 0.801 | 1.548 | 1.231 | 0.827 | 1.418 |
| DINOv2-YOLO | Train | 1.620 | 0.821 | 1.532 | 1.362 | 0.728 | 1.346 |
| DINOv2-YOLO | Validation | 1.874 | 0.896 | 1.684 | 1.615 | 0.810 | 1.389 |
| CSP-YOLO | Train | 0.829 | 0.428 | 1.021 | 0.736 | 0.414 | 1.005 |
| CSP-YOLO | Validation | 1.191 | 0.558 | 1.231 | 0.964 | 0.564 | 1.169 |
| SC-YOLO | Train | 0.810 | 0.430 | 1.007 | 0.741 | 0.436 | 1.027 |
| SC-YOLO | Validation | 1.192 | 0.563 | 1.239 | 1.019 | 0.573 | 1.228 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Saeheaw, T. SC-YOLO: A Real-Time CSP-Based YOLOv11n Variant Optimized with Sophia for Accurate PPE Detection on Construction Sites. Buildings 2025, 15, 2854. https://doi.org/10.3390/buildings15162854
