Development and Demonstration of the Operational Sustainability Index (OPSi): A Multidimensional Metric for Building Performance Evaluation

Abstract

1. Introduction

2. Methodology

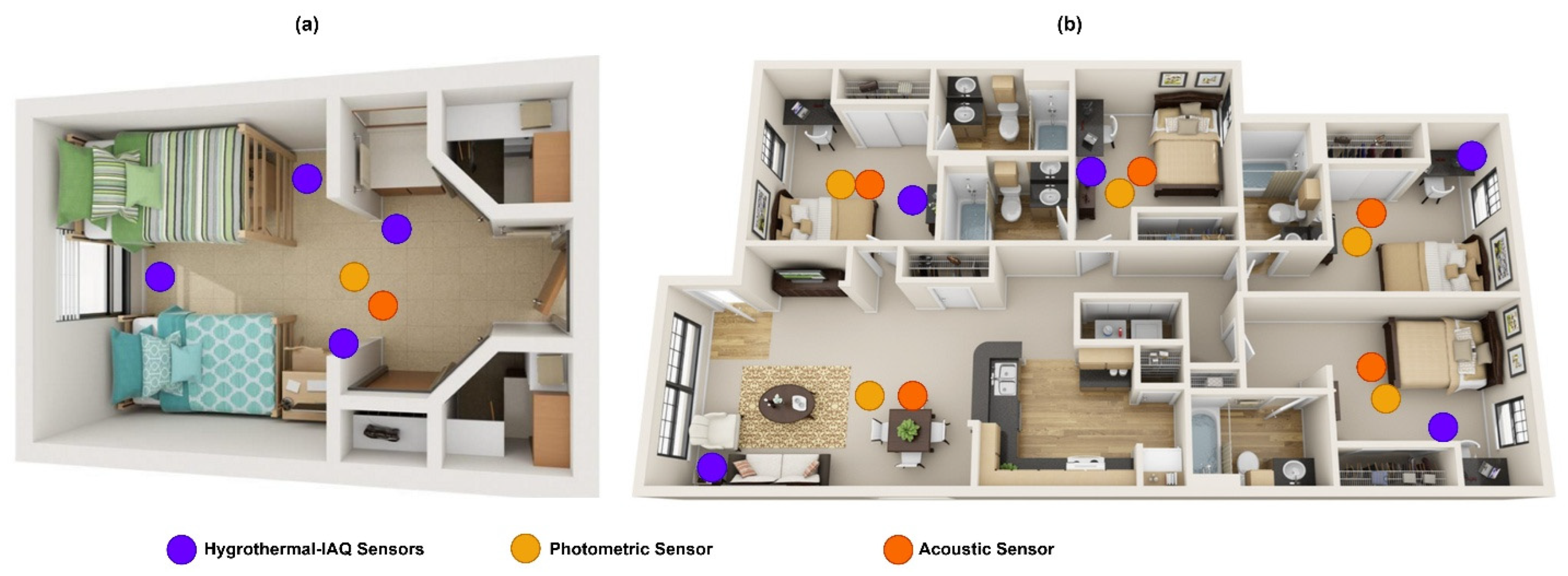

2.1. Data Collection

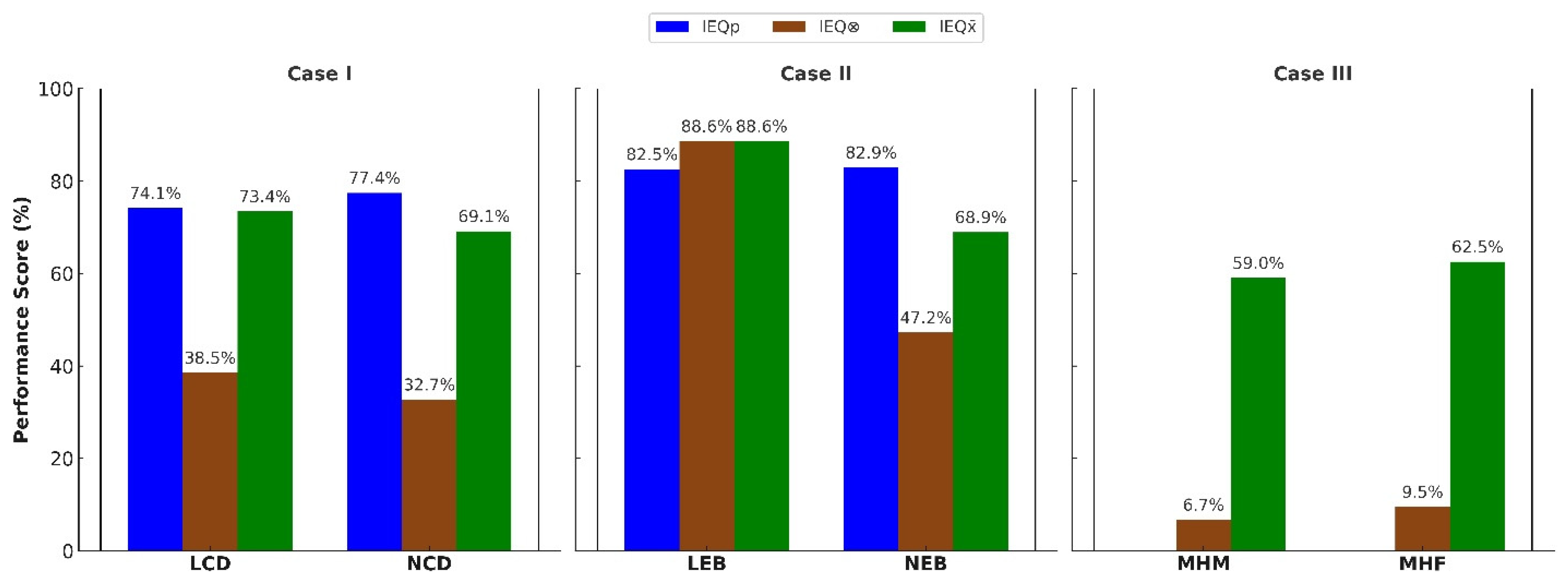

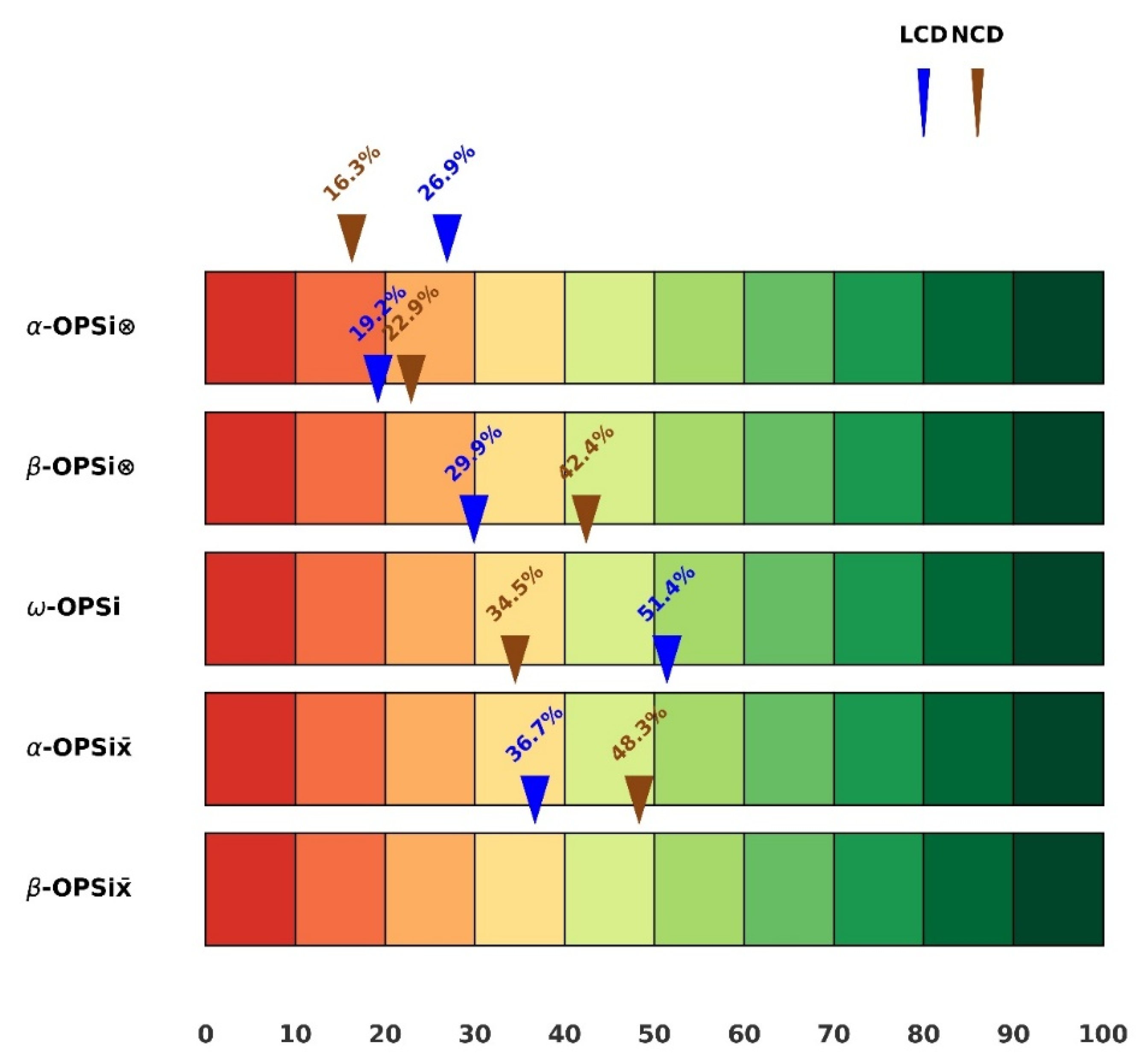

- A LEED Silver-certified dormitory (LCD) constructed in 2015 with a total floor area of 110,000 ft²;

- A non-certified dormitory (NCD) constructed in 2008 with 70,000 ft².

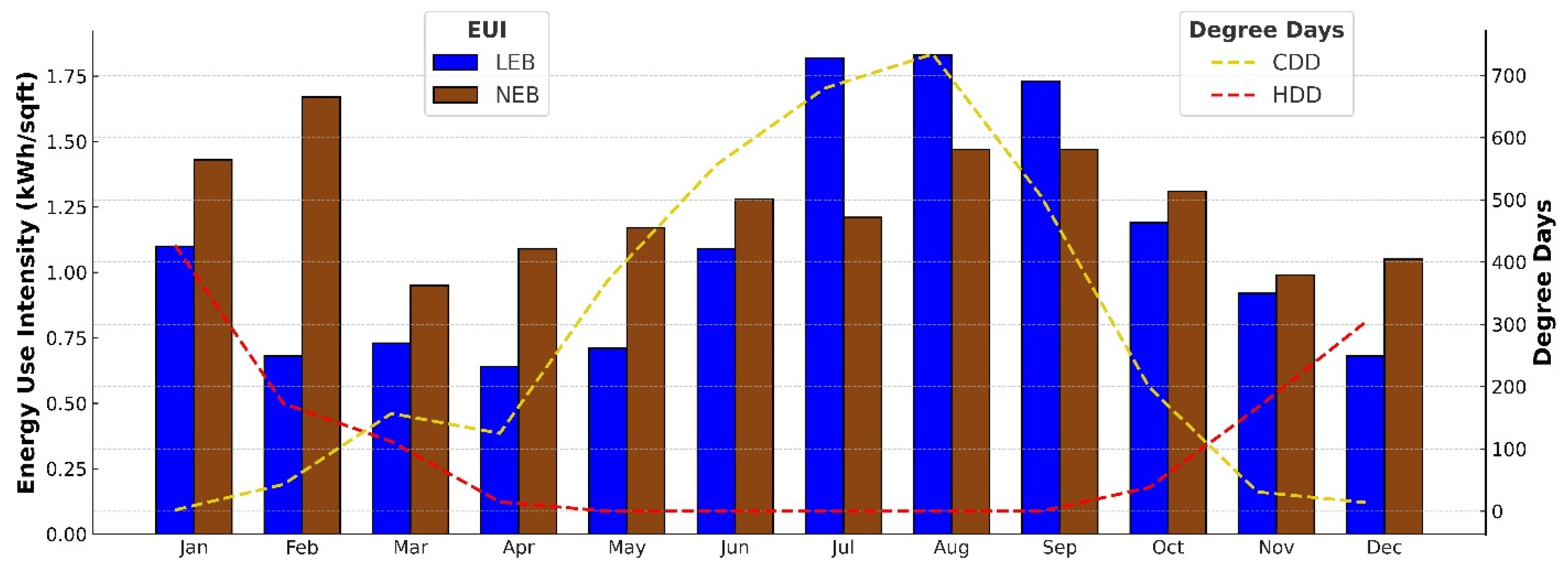

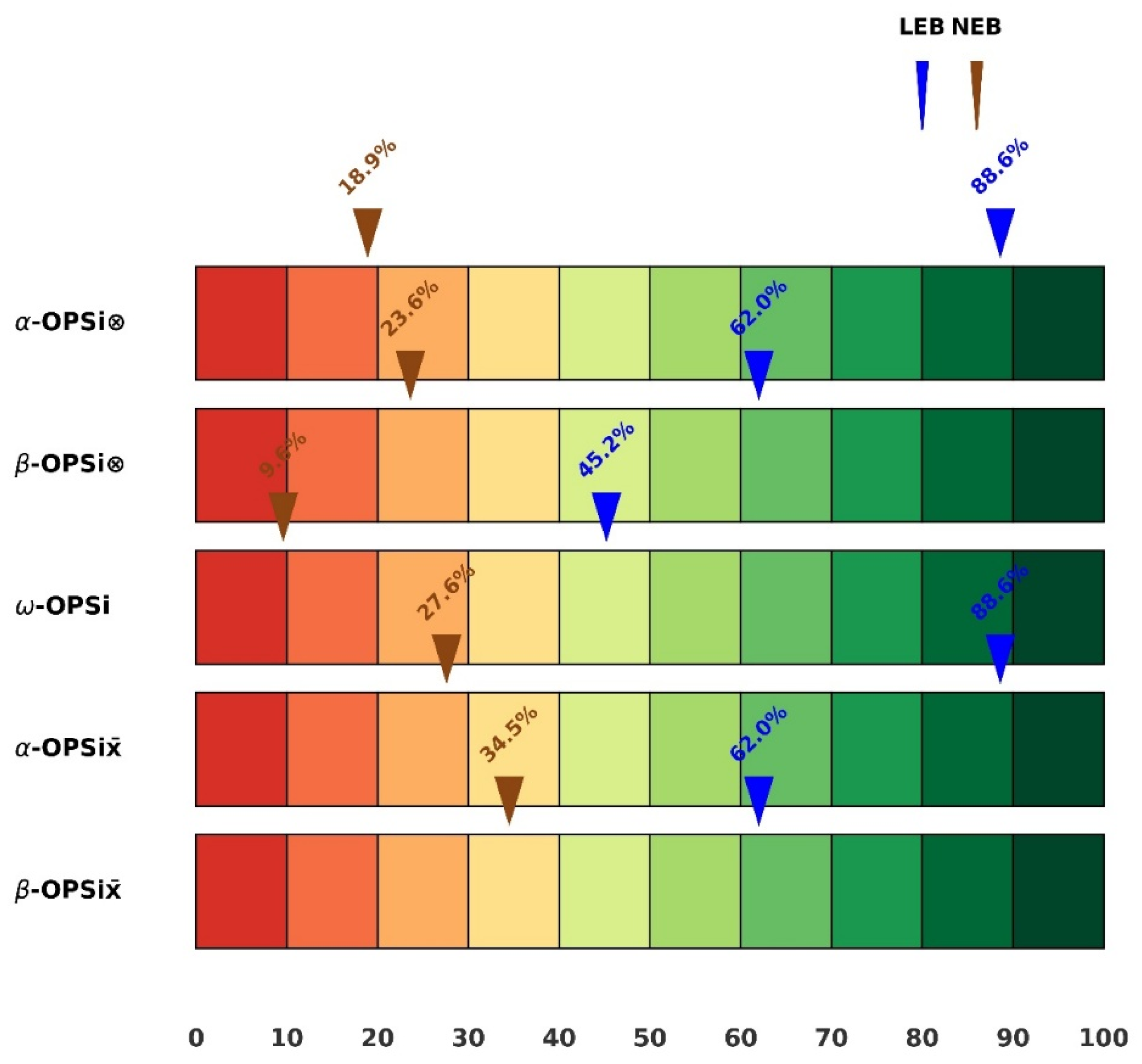

- A LEED-certified event building (LEB) completed in 2014 (single-floor, 2100 ft²);

- A non-certified event building (NEB) built in the 1980s (two-story, 60,000 ft²), comprising a conference room and a lobby.

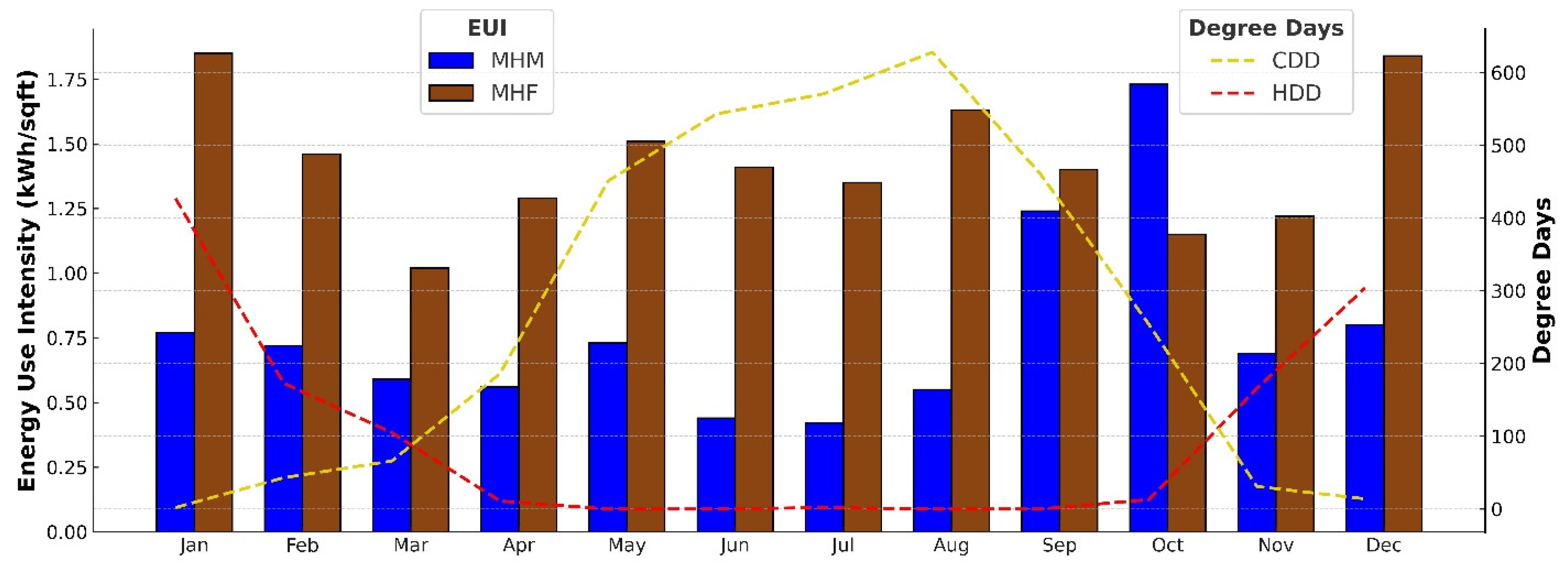

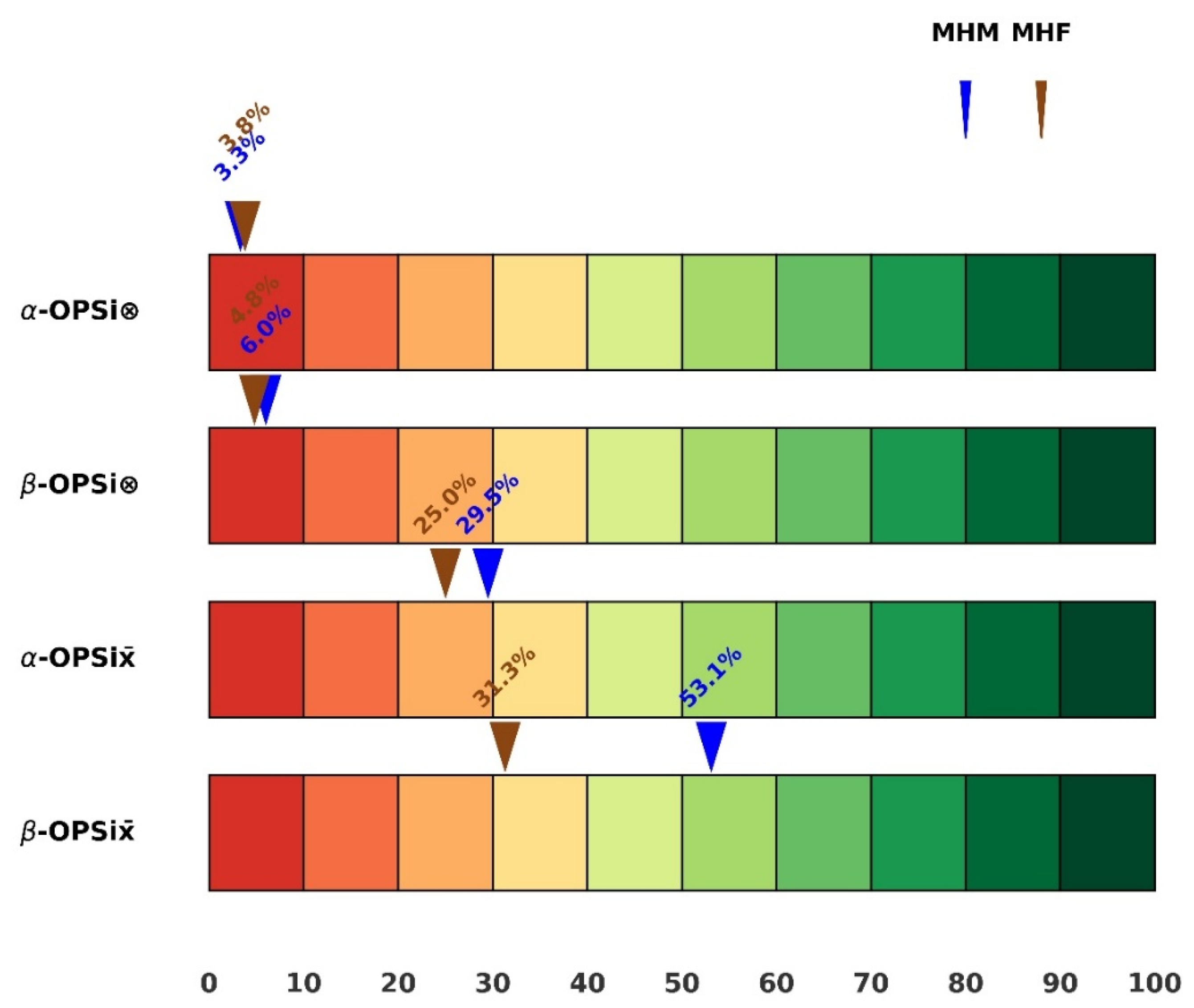

- A male-occupied unit (MHM) with four bedrooms (1100 ft²);

- A female-occupied unit (MHF) with four bedrooms (1300 ft²).

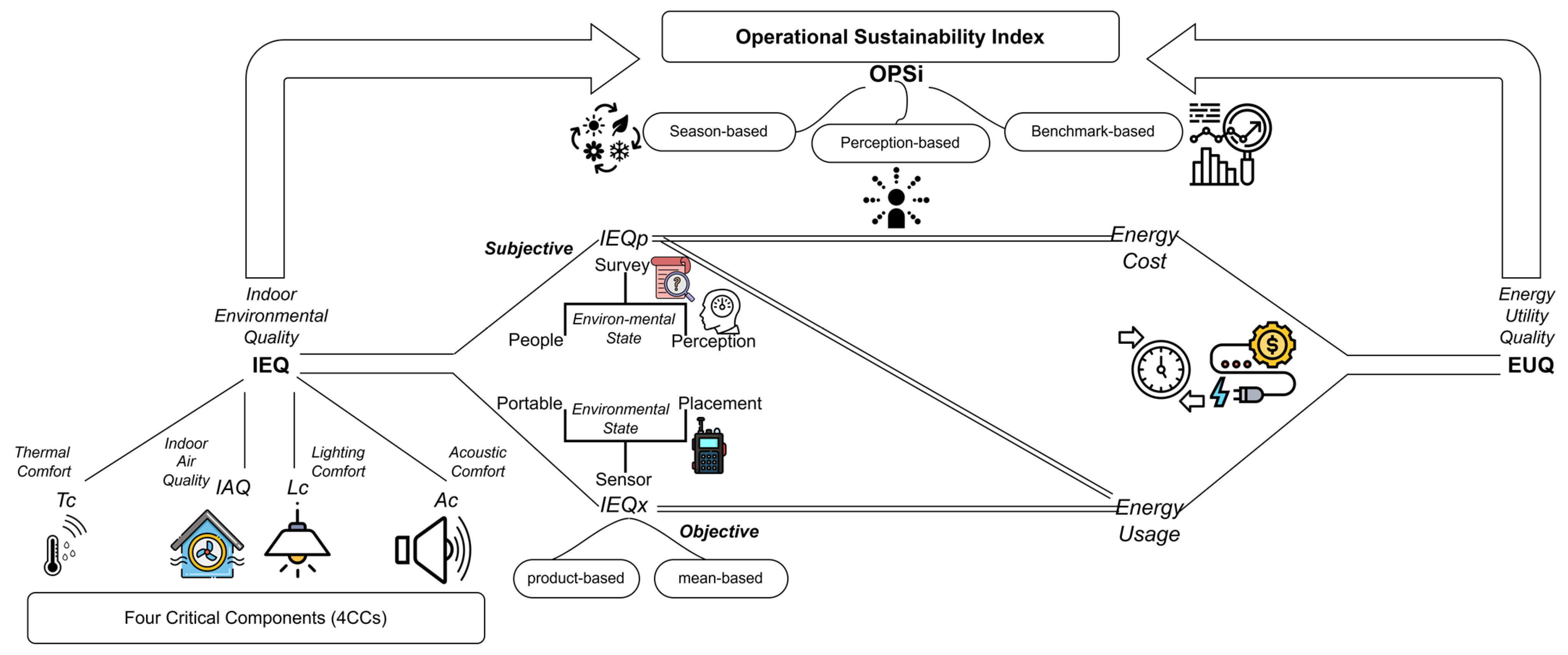

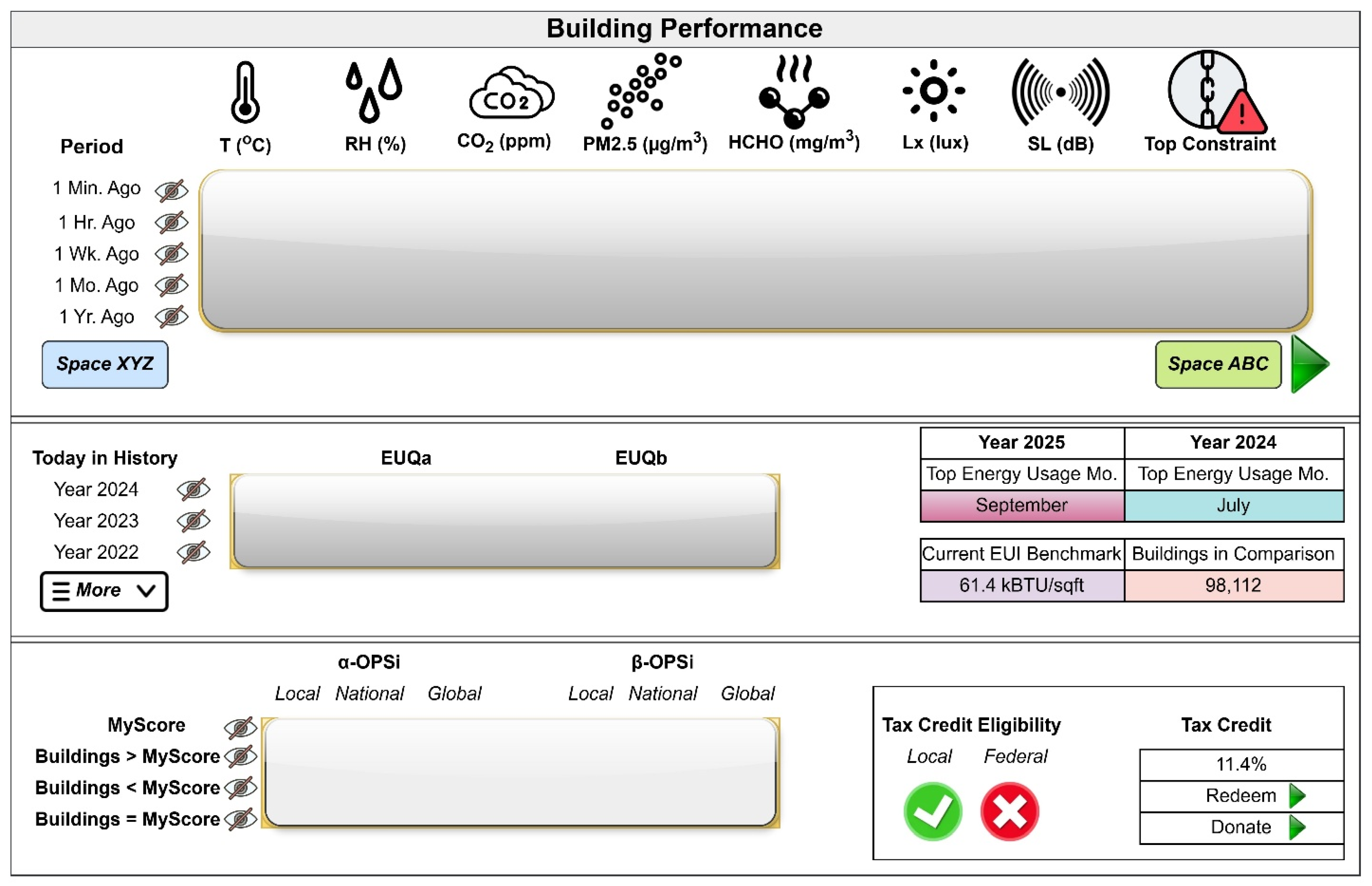

2.2. Development of the Operational Sustainability Index

2.2.1. Indoor Environmental Quality Models

- The subjective dimension (IEQp), derived from the overall mean response value (MRV) of the survey questions on the four critical components (4CCs: thermal comfort, indoor air quality, acoustic comfort, and lighting comfort);

- The objective dimension (IEQx), calculated using a protocol summarized in Equations (2)–(13), adapted from Mujan et al. [48].

- For Case I, 0.5 clo was applied for dorm rooms and 0.54 clo for corridors during the cooling season (April–May), and 0.9 clo during the heating season (January–February), in line with field-observation literature [51]; the mean seasonal air temperature was adopted as the operative temperature. Metabolic rates of 1.0 met and 1.2 met were used for dorm rooms and corridors, respectively, reflecting activities such as eating, reading, sitting, and sleeping in dorm rooms and brief standing and sitting in corridors [52].

- In Case II, an average metabolic rate of 1.1 met was assumed for both the heating and cooling seasons, reflecting typical activities in event buildings such as sitting and relaxed standing, consistent with ASHRAE Standard 55 [50]. Clothing insulation values of 0.57 clo and 0.9 clo were used for the cooling (March–April) and heating (November) seasons, respectively, based on existing recommendations [53,54].

- For Case III, which was measured during the transitional month of October, a clothing insulation of 0.75 clo was used, with metabolic rates of 1.0 met for bedrooms and 1.5 met for living rooms.
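Clothing insulation (clo) and metabolic rate (met) values such as these are the personal inputs of the PMV/PPD thermal comfort model described in ASHRAE Standard 55 [52] and ISO 7730, which tools such as the CBE Thermal Comfort Tool [50] evaluate. The sketch below is a minimal Python transcription of the ISO 7730 Fanger PMV/PPD procedure, offered for illustration rather than as the authors' implementation. It assumes that mean radiant temperature equals air temperature (consistent with adopting the mean seasonal air temperature as the operative temperature) and no external work; the function name and the example conditions are hypothetical. The CBE tool also applies ASHRAE 55 adjustments (e.g., relative air speed, dynamic clothing), so its results may differ slightly.

```python
import math


def pmv_ppd(ta, tr, vel, rh, met, clo, wme=0.0):
    """Fanger PMV/PPD following the ISO 7730 (Annex D) calculation procedure.

    ta, tr: air and mean radiant temperature (deg C); vel: relative air
    speed (m/s); rh: relative humidity (%); met: metabolic rate (met);
    clo: clothing insulation (clo); wme: external work (met), usually 0.
    """
    pa = rh * 10 * math.exp(16.6536 - 4030.183 / (ta + 235))  # water vapour pressure, Pa
    icl = 0.155 * clo                  # clothing insulation, m2.K/W
    m = met * 58.15                    # metabolic rate, W/m2
    mw = m - wme * 58.15               # internal heat production, W/m2
    fcl = 1 + 1.29 * icl if icl <= 0.078 else 1.05 + 0.645 * icl  # clothing area factor
    hcf = 12.1 * math.sqrt(vel)        # forced-convection coefficient
    taa, tra = ta + 273, tr + 273

    # Iteratively solve the heat balance for the clothing surface temperature.
    p1 = icl * fcl
    p2, p3, p4 = p1 * 3.96, p1 * 100, p1 * taa
    p5 = 308.7 - 0.028 * mw + p2 * (tra / 100) ** 4
    tcla = taa + (35.5 - ta) / (3.5 * icl + 0.1)
    xn, xf = tcla / 100, tcla / 50
    hc = hcf
    for _ in range(150):
        if abs(xn - xf) <= 0.00015:
            break
        xf = (xf + xn) / 2
        hcn = 2.38 * abs(100 * xf - taa) ** 0.25   # natural-convection coefficient
        hc = max(hcf, hcn)
        xn = (p5 + p4 * hc - p2 * xf ** 4) / (100 + p3 * hc)
    else:
        raise RuntimeError("clothing surface temperature did not converge")
    tcl = 100 * xn - 273

    # Heat-loss components (W/m2).
    hl1 = 3.05e-3 * (5733 - 6.99 * mw - pa)            # skin diffusion
    hl2 = 0.42 * (mw - 58.15) if mw > 58.15 else 0.0   # sweating
    hl3 = 1.7e-5 * m * (5867 - pa)                     # latent respiration
    hl4 = 0.0014 * m * (34 - ta)                       # dry respiration
    hl5 = 3.96 * fcl * (xn ** 4 - (tra / 100) ** 4)    # radiation
    hl6 = fcl * hc * (tcl - ta)                        # convection
    ts = 0.303 * math.exp(-0.036 * m) + 0.028          # sensation transfer coefficient
    pmv = ts * (mw - hl1 - hl2 - hl3 - hl4 - hl5 - hl6)
    ppd = 100.0 - 95.0 * math.exp(-0.03353 * pmv ** 4 - 0.2179 * pmv ** 2)
    return pmv, ppd


if __name__ == "__main__":
    # Hypothetical cooling-season dorm-room conditions (not measured data).
    pmv, ppd = pmv_ppd(ta=24.0, tr=24.0, vel=0.1, rh=55.0, met=1.0, clo=0.5)
    print(f"PMV = {pmv:.2f}, PPD = {ppd:.1f}%")
```

PPD reaches its minimum of 5% at PMV = 0, so even a thermally neutral space is expected to leave a small share of occupants dissatisfied.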

- Mean-based combination (IEQ);

- Product-based combination (IEQ⮾).
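The two combination rules can be sketched as follows. The exact equations are not reproduced in this section, so the sketch rests on stated assumptions: IEQp and IEQx are both expressed as fractions in [0, 1], the mean-based rule is their arithmetic mean, and the product-based rule multiplies them. It also assumes, hypothetically, that IEQp is obtained by linearly rescaling an MRV from a 1 to 5 satisfaction scale. All function names are illustrative.

```python
def _check_unit(value, name):
    """Reject scores outside the assumed [0, 1] range."""
    if not 0.0 <= value <= 1.0:
        raise ValueError(f"{name} must lie in [0, 1]")


def mrv_to_fraction(mrv, scale_min=1.0, scale_max=5.0):
    """Hypothetical: linearly rescale a mean response value (MRV) to [0, 1]."""
    if not scale_min <= mrv <= scale_max:
        raise ValueError("MRV lies outside the response scale")
    return (mrv - scale_min) / (scale_max - scale_min)


def ieq_mean(ieq_p, ieq_x):
    """Mean-based combination (assumed form): arithmetic mean of both dimensions."""
    _check_unit(ieq_p, "IEQp")
    _check_unit(ieq_x, "IEQx")
    return (ieq_p + ieq_x) / 2


def ieq_product(ieq_p, ieq_x):
    """Product-based combination (assumed form): a weak dimension drags the result down."""
    _check_unit(ieq_p, "IEQp")
    _check_unit(ieq_x, "IEQx")
    return ieq_p * ieq_x


if __name__ == "__main__":
    ieq_p = mrv_to_fraction(4.0)  # hypothetical MRV of 4.0 on a 1-5 scale
    ieq_x = 0.70                  # hypothetical objective score
    print(ieq_mean(ieq_p, ieq_x), ieq_product(ieq_p, ieq_x))
```

The design difference is the point of offering both variants: with fractional inputs, the product never exceeds the mean, and it penalizes an imbalance between perceived and measured quality much more strongly than averaging does.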

2.2.2. Energy Utility Quality Models

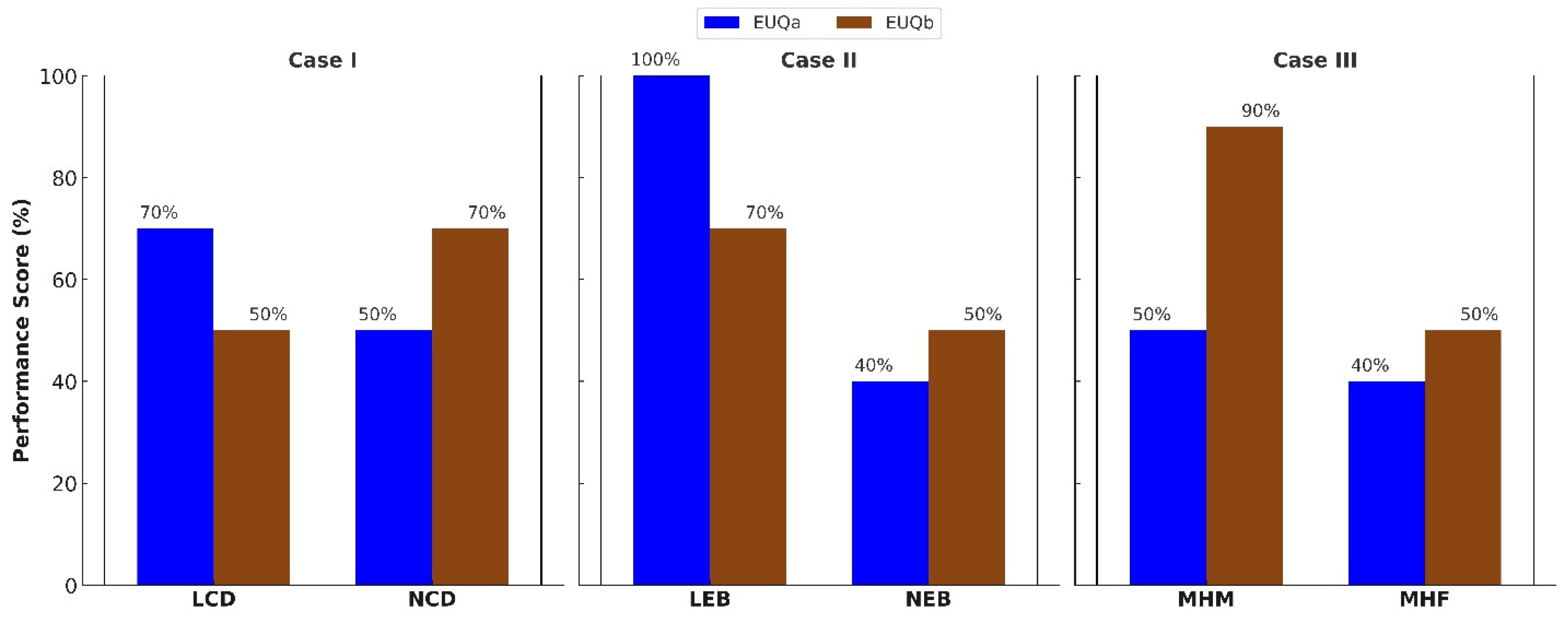

- EUQa: a seasonal comparison metric;

- EUQb: a benchmark-based deviation metric.

- Case I reference benchmark = 57.9 kBTU/sqft (or 16.97 kWh/sqft);

- Case II reference benchmark = 56.2 kBTU/sqft (or 16.47 kWh/sqft);

- Case III reference benchmark = 59.6 kBTU/sqft (or 17.47 kWh/sqft).

These benchmarks are drawn from the Commercial Buildings Energy Consumption Survey (CBECS) database for buildings with comparable functions. Positive EUQb values indicate that the building consumes more energy than the benchmark (relative inefficiency), whereas negative values indicate relative efficiency; deviations within ±9.9% of the benchmark are treated as quasi-neutral.
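The benchmark comparison amounts to a percentage deviation from the CBECS reference value. The sketch below assumes the deviation underlying EUQb is computed as (EUI − benchmark) / benchmark × 100, which matches the stated sign convention but is not quoted from the paper; it also checks the kWh equivalents using 1 kWh ≈ 3.412 kBTU. Function and constant names are hypothetical.

```python
KBTU_PER_KWH = 3.412  # approximate conversion: 1 kWh is about 3.412 kBTU

# CBECS-derived reference benchmarks quoted in the text (kBTU/sqft).
BENCHMARKS_KBTU = {"Case I": 57.9, "Case II": 56.2, "Case III": 59.6}


def kbtu_to_kwh(kbtu):
    """Convert an energy use intensity from kBTU/sqft to kWh/sqft."""
    return kbtu / KBTU_PER_KWH


def benchmark_deviation(eui_kbtu, case):
    """Percentage deviation of a building's EUI from its case benchmark (assumed form).

    Positive: consumes more than the benchmark (relative inefficiency).
    Negative: consumes less than the benchmark (relative efficiency).
    """
    ref = BENCHMARKS_KBTU[case]
    return (eui_kbtu - ref) / ref * 100.0


def is_quasi_neutral(deviation_pct, band=9.9):
    """True when the deviation lies within the quasi-neutral range (±9.9%)."""
    return abs(deviation_pct) <= band


if __name__ == "__main__":
    for case, ref in BENCHMARKS_KBTU.items():
        print(case, ref, "kBTU/sqft =", round(kbtu_to_kwh(ref), 2), "kWh/sqft")
    dev = benchmark_deviation(50.0, "Case I")  # hypothetical EUI, not measured data
    print(f"{dev:.1f}%", "quasi-neutral" if is_quasi_neutral(dev) else "outside band")
```

For a hypothetical Case I building using 50.0 kBTU/sqft, `benchmark_deviation(50.0, "Case I")` gives about −13.6%, which lies outside the quasi-neutral range on the efficient side.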

2.2.3. Variants of OPSi

- Super-Optimal: 95% < Performance ≤ 100%;

- Optimal: 75% < Performance ≤ 95%;

- Suboptimal: 0% ≤ Performance ≤ 75%.
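Because the class limits are half-open intervals, a score of exactly 95% falls in the Optimal class and a score of exactly 75% in the Suboptimal class. The mapping can be sketched in Python as follows, assuming the OPSi variant has already been computed as a percentage; `opsi_class` is a hypothetical helper name.

```python
def opsi_class(performance_pct):
    """Map an OPSi performance score (%) to the three classes defined above."""
    if not 0.0 <= performance_pct <= 100.0:
        raise ValueError("performance must lie in [0, 100]")
    if performance_pct > 95.0:   # 95% < Performance <= 100%
        return "Super-Optimal"
    if performance_pct > 75.0:   # 75% < Performance <= 95%
        return "Optimal"
    return "Suboptimal"          # 0% <= Performance <= 75%


if __name__ == "__main__":
    for score in (100.0, 95.0, 80.0, 75.0, 40.0):
        print(score, opsi_class(score))
```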

3. Results

3.1. Indoor Environmental Quality

3.2. Energy Performance

3.3. Operational Sustainability Index

4. Discussion

4.1. Discussion of Results

4.2. Implications for Policy and Practice

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Case Building/Space | Thermal Comfort (Tc) | Indoor Air Quality (IAQ) | Acoustic Comfort (Ac) | Lighting Comfort (Lc) |
|---|---|---|---|---|
| LCD—Room 1 | 40.0% | 74.1% | 89.0% | 79.1% |
| LCD—Room 2 | 66.7% | 56.6% | 52.8% | 75.2% |
| LCD—Corridor | 82.2% | 88.9% | 93.4% | 82.7% |
| NCD—Room 1 | 84.4% | 59.7% | 71.2% | 89.6% |
| NCD—Room 2 | 87.1% | 60.6% | 29.4% | 82.5% |
| NCD—Corridor | 69.2% | 68.1% | 67.8% | 59.2% |
| LEB—Auditorium | 87.2% | 93.7% | 82.0% | 91.5% |
| NEB—Lobby | 76.9% | 91.3% | 37.4% | 48.3% |
| NEB—Conference Room | 85.1% | 87.6% | 65.4% | 59.1% |
| MHM—Bedroom 1 | 75.0% | 47.4% | 100% | 29.8% |
| MHM—Bedroom 2 | 48.0% | 59.2% | 86.4% | 36.6% |
| MHM—Bedroom 3 | 44.0% | 50.1% | 100% | 31.0% |
| MHM—Bedroom 4 | 34.0% | 42.7% | 57.2% | 40.9% |
| MHM—Living Room | 89.0% | 68.6% | 93.2% | 41.4% |
| MHF—Bedroom 1 | 86.0% | 58.5% | 74.0% | 29.1% |
| MHF—Bedroom 2 | 87.0% | 52.2% | 87.0% | 37.5% |
| MHF—Bedroom 3 | 81.0% | 55.8% | 61.0% | 31.7% |
| MHF—Bedroom 4 | 70.0% | 57.4% | 90.6% | 36.0% |
| MHF—Living Room | 84.0% | 61.0% | 74.4% | 36.1% |

References

1. Xu, A.; Zhu, Y.; Wang, Z. Carbon emission evaluation of eight different prefabricated components during the materialization stage. J. Build. Eng. 2024, 89, 109262.

2. Khan, F.A.; Ullah, Z.; Aashan, M.; Ahmad, F.; Saad, M.; Azhar, M. Life cycle assessment and energy efficiency of building façade materials: A case study of an educational building in Pakistan. J. Eng. 2025, 2025, e70047.

3. Akbarnezhad, A.; Xiao, J. Estimation and minimization of embodied carbon of buildings: A review. Buildings 2017, 7, 5.

4. Fenner, A.E.; Kibert, C.J.; Woo, J.; Morque, S.; Razkenari, M.; Hakim, H.; Lu, X. The carbon footprint of buildings: A review of methodologies and applications. Renew. Sustain. Energy Rev. 2018, 94, 1142–1152.

5. Labaran, Y.H.; Mathur, V.S.; Muhammad, S.U.; Musa, A.A. Carbon footprint management: A review of construction industry. Clean. Eng. Technol. 2022, 9, 100531.

6. Leite Ribeiro, L.M.; Piccinini Scolaro, T.; Ghisi, E. LEED Certification in Building Energy Efficiency: A Review of Its Performance Efficacy and Global Applicability. Sustainability 2025, 17, 1876.

7. Weigert, K.; Koolbeck, M. Building Urban Futures, September 2018. Available online: https://globalaffairs.org/sites/default/files/2020-11/report_City-Carbon%20Actions-Anchored-in-Building-Codes-and-Standards_2018-09-25.pdf (accessed on 23 March 2025).

8. Awolesi, O.; Reams, M. Green building development in the US capitals: A focused comparative analysis with Baton Rouge. Urban. Sustain. Soc. 2024, 1, 133–168.

9. NIBS. Green Building Standards and Certification Systems. Available online: https://www.wbdg.org/resources/green-building-standards-and-certification-systems (accessed on 13 April 2025).

10. Uğur, L.O.; Leblebici, N. An examination of the LEED green building certification system in terms of construction costs. Renew. Sustain. Energy Rev. 2018, 81, 1476–1483.

11. Mazutis, D.; Sweet, L. The business of accelerating sustainable urban development: A systematic review and synthesis. J. Clean. Prod. 2022, 357, 131871.

12. Amiri, A.; Ottelin, J.; Sorvari, J. Are LEED-Certified Buildings Energy-Efficient in Practice? Sustainability 2019, 11, 1672.

13. Bernardi, E.; Carlucci, S.; Cornaro, C.; Bohne, R.A. An analysis of the most adopted rating systems for assessing the environmental impact of buildings. Sustainability 2017, 9, 1226.

14. Zhang, Y.; Wang, H.; Gao, W.; Wang, F.; Zhou, N.; Kammen, D.M.; Ying, X. A survey of the status and challenges of green building development in various countries. Sustainability 2019, 11, 5385.

15. USGBC. LEED Certification for Existing Buildings and Spaces. Available online: https://www.usgbc.org/leed/rating-systems/existing-buildings (accessed on 6 June 2025).

16. EnergyStar.gov. Green Buildings and ENERGY STAR. Available online: https://www.energystar.gov/buildings/about-us/green-buildings-and-energy-star (accessed on 13 April 2025).

17. Vine, E.; Barnes, B.; Ritschard, R. Implementing home energy rating systems. Energy 1988, 13, 401–411.

18. Clay, K.; Severnini, E.; Sun, X. Does LEED certification save energy? Evidence from retrofitted federal buildings. J. Environ. Econ. Manag. 2023, 121, 102866.

19. No, S.; Won, C. Comparative analysis of energy consumption between green building certified and non-certified buildings in Korea. Energies 2020, 13, 1049.

20. Issa, M.H.; Attalla, M.; Rankin, J.H.; Christian, A.J. Energy consumption in conventional, energy-retrofitted and green LEED Toronto schools. Constr. Manag. Econ. 2011, 29, 383–395.

21. Newsham, G.R.; Mancini, S.; Birt, B.J. Do LEED-certified buildings save energy? Yes, but…. Energy Build. 2009, 41, 897–905.

22. Vosoughkhosravi, S.; Dixon-Grasso, L.; Jafari, A. The impact of LEED certification on energy performance and occupant satisfaction: A case study of residential college buildings. J. Build. Eng. 2022, 59, 105097.

23. Heinzerling, D.; Schiavon, S.; Webster, T.; Arens, E. Indoor environmental quality assessment models: A literature review and a proposed weighting and classification scheme. Build. Environ. 2013, 70, 210–222.

24. Zhang, D.; Mui, K.-W.; Wong, L.-T. Ten Questions Concerning Indoor Environmental Quality (IEQ) Models: The Development and Applications. Appl. Sci. 2023, 13, 3343.

25. Leccese, F.; Rocca, M.; Salvadori, G.; Belloni, E.; Buratti, C. A multicriteria method to identify and rank IEQ criticalities: Measurements and applications for existing school buildings. Energy Built Environ. 2023, 6, 387–401.

26. Quesada-Molina, F.; Astudillo-Cordero, S. Indoor Environmental Quality Assessment Model (IEQ) for Houses. Sustainability 2023, 15, 1276.

27. Roumi, S.; Zhang, F.; Stewart, R.A.; Santamouris, M. Commercial building indoor environmental quality models: A critical review. Energy Build. 2022, 263, 112033.

28. Leccese, F.; Rocca, M.; Salvadori, G.; Belloni, E.; Buratti, C. Towards a holistic approach to indoor environmental quality assessment: Weighting schemes to combine effects of multiple environmental factors. Energy Build. 2021, 245, 111056.

29. Piasecki, M.; Kostyrko, K.; Pykacz, S. Indoor environmental quality assessment: Part 1: Choice of the indoor environmental quality sub-component models. J. Build. Phys. 2017, 41, 264–289.

30. Tang, H.; Ding, Y.; Singer, B. Interactions and comprehensive effect of indoor environmental quality factors on occupant satisfaction. Build. Environ. 2020, 167, 106462.

31. Tang, H.; Liu, X.; Geng, Y.; Lin, B.; Ding, Y. Assessing the perception of overall indoor environmental quality: Model validation and interpretation. Energy Build. 2022, 259, 111870.

32. Wang, L.; Zheng, D. Integrated analysis of energy, indoor environment, and occupant satisfaction in green buildings using real-time monitoring data and on-site investigation. Build. Environ. 2020, 182, 107014.

33. Jain, N.; Burman, E.; Robertson, C.; Stamp, S.; Shrubsole, C.; Aletta, F.; Barrett, E.; Oberman, T.; Kang, J.; Raynham, P.; et al. Building performance evaluation: Balancing energy and indoor environmental quality in a UK school building. Build. Serv. Eng. Res. Technol. 2019, 41, 343–360.

34. Huh, S.-Y.; Woo, J.; Lim, S.; Lee, Y.-G.; Kim, C.S. What do customers want from improved residential electricity services? Evidence from a choice experiment. Energy Policy 2015, 85, 410–420.

35. Agha-Hossein, M.M.; El-Jouzi, S.; Elmualim, A.A.; Ellis, J.; Williams, M. Post-occupancy studies of an office environment: Energy performance and occupants’ satisfaction. Build. Environ. 2013, 69, 121–130.

36. Muianga, E.A.D.; KnatzKowaltowski, D.C.C.; Silva, V.G.d.; Granja, A.D.; Moreira, D.d.C.; Ruschel, R.C. Housing transformations and their impacts on the well-being of dwellers. Ambiente Construído 2022, 22, 255–274.

37. Al-Obaidi, K.M.; Hossain, M.; Alduais, N.A.; Al-Duais, H.S.; Omrany, H.; Ghaffarianhoseini, A. A review of using IoT for energy efficient buildings and cities: A built environment perspective. Energies 2022, 15, 5991.

38. Norouziasl, S.; Jafari, A.; Zhu, Y. Modeling and simulation of energy-related human-building interaction: A systematic review. J. Build. Eng. 2021, 44, 102928.

39. Roumi, S.; Zhang, F.; Stewart, R.A.; Santamouris, M. Indoor environment quality effects on occupant satisfaction and energy consumption: Empirical evidence from subtropical offices. Energy Build. 2024, 303, 113784.

40. Geng, Y.; Lin, B.; Zhu, Y. Comparative study on indoor environmental quality of green office buildings with different levels of energy use intensity. Build. Environ. 2020, 168, 106482.

41. Zhou, Y.; Cai, J.; Xu, Y. Indoor environmental quality and energy use evaluation of a three-star green office building in China with field study. J. Build. Phys. 2021, 45, 209–235.

42. Sun, Y.; Kojima, S.; Nakaohkubo, K.; Zhao, J.; Ni, S. Analysis and Evaluation of Indoor Environment, Occupant Satisfaction, and Energy Consumption in General Hospital in China. Buildings 2023, 13, 1675.

43. Perera, I.; Hewage, K.; Rana, A.; Sadiq, R. Combining Energy Performance and Indoor Environmental Quality (IEQ) in Buildings: A Systematic Review on Common IEQ Guidelines and Energy Codes in North America. Energies 2025, 18, 1740.

44. Awolesi, O. A Multi-method Approach to Evaluating Indoor Environmental Quality and Energy Performance in Residential College Buildings in a Humid Subtropical Region. Energy Built Environ. 2025, in press.

45. Kibert, C.J. Sustainable Construction: Green Building Design and Delivery; John Wiley & Sons: Hoboken, NJ, USA, 2016.

46. Wilkinson, S.J. Sustainable construction issues. In Developing Property Sustainably; Routledge: London, UK, 2015; pp. 147–176.

47. Wunderground. Baton Rouge, LA Weather History. Available online: https://www.wunderground.com/history/daily/us/la/baton-rouge/KBTR (accessed on 30 March 2025).

48. Mujan, I.; Licina, D.; Kljajić, M.; Čulić, A.; Anđelković, A.S. Development of indoor environmental quality index using a low-cost monitoring platform. J. Clean. Prod. 2021, 312, 127846.

49. Jia, L.-R.; Han, J.; Chen, X.; Li, Q.-Y.; Lee, C.-C.; Fung, Y.-H. Interaction between thermal comfort, indoor air quality and ventilation energy consumption of educational buildings: A comprehensive review. Buildings 2021, 11, 591.

50. Tartarini, F.; Schiavon, S.; Cheung, T.; Hoyt, T. CBE Thermal Comfort Tool: Online tool for thermal comfort calculations and visualizations. SoftwareX 2020, 12, 100563.

51. Zhang, Z.; Zhang, Y.; Khan, A. Thermal comfort of people in a super high-rise building with central air-conditioning system in the hot-humid area of China. Energy Build. 2020, 209, 109727.

52. ANSI/ASHRAE Standard 55; Thermal Environmental Conditions for Human Occupancy. ASHRAE: Peachtree Corners, GA, USA, 2017.

53. Schiavon, S.; Lee, K.H. Dynamic predictive clothing insulation models based on outdoor air and indoor operative temperatures. Build. Environ. 2013, 59, 250–260.

54. Nam, I.; Yang, J.; Lee, D.; Park, E.; Sohn, J.-R. A study on the thermal comfort and clothing insulation characteristics of preschool children in Korea. Build. Environ. 2015, 92, 724–733.

55. USEPA. IRIS Toxicological Review of Formaldehyde (Inhalation). 2024. Available online: https://iris.epa.gov/static/pdfs/0419_summary.pdf (accessed on 2 October 2024).

56. WHO. World Health Organization Guidelines for Indoor Air Quality: Selected Pollutants; World Health Organization: Geneva, Switzerland, 2010.

57. WHO. WHO Global Air Quality Guidelines: Particulate Matter (PM2.5 and PM10), Ozone, Nitrogen Dioxide, Sulfur Dioxide and Carbon Monoxide; World Health Organization: Geneva, Switzerland, 2021.

58. Mui, K.W.; Wong, L.T. Acceptable Illumination Levels for Office Occupants. Archit. Sci. Rev. 2006, 49, 116–119.

59. Wong, L.T.; Mui, K.W.; Hui, P.S. A multivariate-logistic model for acceptance of indoor environmental quality (IEQ) in offices. Build. Environ. 2008, 43, 1–6.

60. Hannah, L.; Page, W.H. A review of AS/NZS 2107:2016 Acoustics—Recommended design sound levels & reverberation times for building interiors. New Zealand Acoust. 2017, 30, 4–20.

61. Ncube, M.; Riffat, S. Developing an indoor environment quality tool for assessment of mechanically ventilated office buildings in the UK—A preliminary study. Build. Environ. 2012, 53, 26–33.

62. Archtoolbox. Architectural Acoustics—Acceptable Room Sound Levels. Available online: https://www.archtoolbox.com/room-sound-levels/ (accessed on 9 February 2023).

63. Sailor, D.J. Relating residential and commercial sector electricity loads to climate—Evaluating state level sensitivities and vulnerabilities. Energy 2001, 26, 645–657.

64. Mukherjee, S.; Nateghi, R. Climate sensitivity of end-use electricity consumption in the built environment: An application to the state of Florida, United States. Energy 2017, 128, 688–700.

65. Gesangyangji, G.; Holloway, T.; Vimont, D.J.; Acker, S.J. Future changes in state-level population-weighted degree days in the US. Environ. Res. Lett. 2024, 19, 034029.

66. EIA. U.S. States State Profiles and Energy Estimates. 2024. Available online: https://www.eia.gov/state/search/#?2=199&5=126&r=false (accessed on 19 March 2025).

67. Lee, K.; Lim, H.; Hwang, J.; Lee, D. Development of building benchmarking index for improving gross-floor-area-based energy use intensity. Energy Build. 2025, 328, 115103.

68. Scofield, J.; Brodnitz, S.; Cornell, J.; Liang, T.; Scofield, T. Energy and Greenhouse Gas Savings for LEED-Certified U.S. Office Buildings. Energies 2021, 14, 749.

69. Turner, C.; Frankel, M. Energy Performance of LEED for New Construction Buildings. Available online: https://newbuildings.org/resource/energy-performance-leed-new-construction-buildings/ (accessed on 7 July 2024).

70. Piccolo, A.; Marino, C.; Nucara, A.; Pietrafesa, M. Energy performance of an electrochromic switchable glazing: Experimental and computational assessments. Energy Build. 2018, 165, 390–398.

71. Cannavale, A.; Ayr, U.; Fiorito, F.; Martellotta, F. Smart electrochromic windows to enhance building energy efficiency and visual comfort. Energies 2020, 13, 1449.

72. Hong, X.; Shi, F.; Wang, S.; Yang, X.; Yang, Y. Multi-objective optimization of thermochromic glazing based on daylight and energy performance evaluation. Build. Simul. 2021, 14, 1685–1695.

73. Riffat, S.; Ahmad, M.I.; Shakir, A. Energy-Efficient Lighting Technologies for Building Applications. In Sustainable Energy Technologies and Low Carbon Buildings; Springer: Berlin/Heidelberg, Germany, 2024; pp. 185–218.

| | Occupants | Facility Managers ᵃ |
|---|---|---|
| Case I | Q1. How satisfied are you with the temperature in the building? Q2. How satisfied are you with the artificial lighting (human-made lighting sources controlled by residents or managers) in the building? Q3. How satisfied are you with the natural lighting in the building? Q4. How satisfied are you with the air quality and ventilation in the building? Q5. How satisfied are you with the noise levels in the building? Q6. How satisfied are you with your overall experience in the building? | Q1. How satisfied are you with the energy usage of the dormitory during the heating season compared to the cooling season? Q2. How satisfied are you with the energy cost (USD) of the dormitory during the heating season compared to the cooling season? |
| Case I Participants | LCD: 39; NCD: 31 | LCD: 5; NCD: 5 |
| Case II | Q1. How satisfied are you with the temperature in this building? Q2. How satisfied are you with the lighting in this building? Q3. How satisfied are you with the air quality and ventilation in this building? Q4. How satisfied are you with the noise level in this building? Q5. How satisfied are you with your overall experience in this building? | Q1. How satisfied are you with the energy usage of the event building during the heating season compared to the cooling season? Q2. How satisfied are you with the energy cost (USD) of the event building during the heating season compared to the cooling season? |
| Case II Participants | LEB: 38; NEB: 27 | LEB: 3; NEB: 3 |

| EUQa Measure | EUQa Score | EUQb Measure | EUQb Score | Explanation |
|---|---|---|---|---|
| ≥+20.0% | 10 | ≥+55.0% | 10 | Highly Inefficient |
| +15.0% to +19.9% | 20 | +40.0% to +54.9% | 20 | Very Inefficient |
| +10.0% to +14.9% | 30 | +25.0% to +39.9% | 30 | Inefficient |
| +5.0% to +9.9% | 40 | +10.0% to +24.9% | 40 | Slightly Inefficient |
| −4.9% to +4.9% | 50 | −9.9% to +9.9% | 50 | Quasi-Neutral |
| −5.0% to −9.9% | 60 | −10.0% to −19.9% | 60 | Slightly Efficient |
| −10.0% to −14.9% | 70 | −20.0% to −29.9% | 70 | Efficient |
| −15.0% to −19.9% | 80 | −30.0% to −39.9% | 80 | Very Efficient |
| −20.0% to −24.9% | 90 | −40.0% to −49.9% | 90 | Highly Efficient |
| ≤−25.0% | 100 | ≤−50.0% | 100 | Extremely Efficient |
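The scoring table can be applied as a simple band lookup. In this sketch, which is an assumption rather than the paper's own code, a percentage deviation is rounded to one decimal place (matching the precision of the band limits) and compared against each band's lower bound from the most inefficient band downward; EUQa and EUQb share the same scores and labels but use different band widths. The names `EUQA_BANDS`, `EUQB_BANDS`, and `euq_score` are hypothetical.

```python
# (lower bound of the band in %, score), ordered from least to most efficient.
EUQA_BANDS = [(20.0, 10), (15.0, 20), (10.0, 30), (5.0, 40), (-4.9, 50),
              (-9.9, 60), (-14.9, 70), (-19.9, 80), (-24.9, 90)]
EUQB_BANDS = [(55.0, 10), (40.0, 20), (25.0, 30), (10.0, 40), (-9.9, 50),
              (-19.9, 60), (-29.9, 70), (-39.9, 80), (-49.9, 90)]
LABELS = {10: "Highly Inefficient", 20: "Very Inefficient", 30: "Inefficient",
          40: "Slightly Inefficient", 50: "Quasi-Neutral", 60: "Slightly Efficient",
          70: "Efficient", 80: "Very Efficient", 90: "Highly Efficient",
          100: "Extremely Efficient"}


def euq_score(deviation_pct, bands):
    """Return (score, label) for a percentage deviation using the given bands."""
    d = round(deviation_pct, 1)  # band limits in the table are stated to one decimal
    for lower, score in bands:
        if d >= lower:
            return score, LABELS[score]
    return 100, LABELS[100]      # at or below the last band: Extremely Efficient


if __name__ == "__main__":
    for dev in (22.0, 4.9, -13.6, -52.0):
        print(dev, euq_score(dev, EUQA_BANDS), euq_score(dev, EUQB_BANDS))
```

For example, a deviation of −13.6% scores 60 (Slightly Efficient) on the EUQb bands but 70 (Efficient) on the narrower EUQa bands.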

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Awolesi, O.; Reams, M. Development and Demonstration of the Operational Sustainability Index (OPSi): A Multidimensional Metric for Building Performance Evaluation. Buildings 2025, 15, 2111. https://doi.org/10.3390/buildings15122111
