Optimization of the ANN Model for Energy Consumption Prediction of Direct-Fired Absorption Chillers for a Short-Term

Abstract

1. Introduction

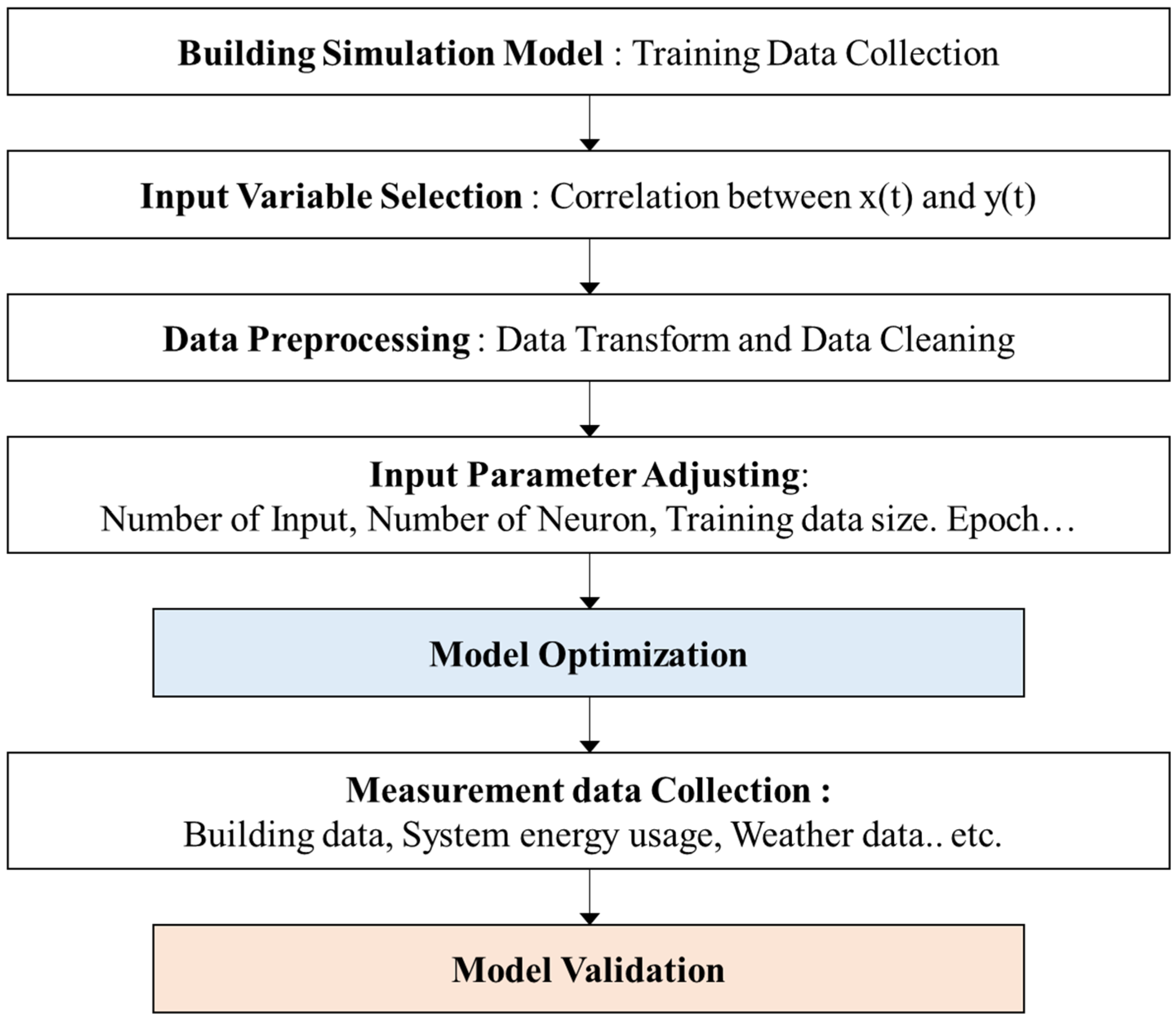

2. Optimization and Validation of an ANN-Based Prediction Model

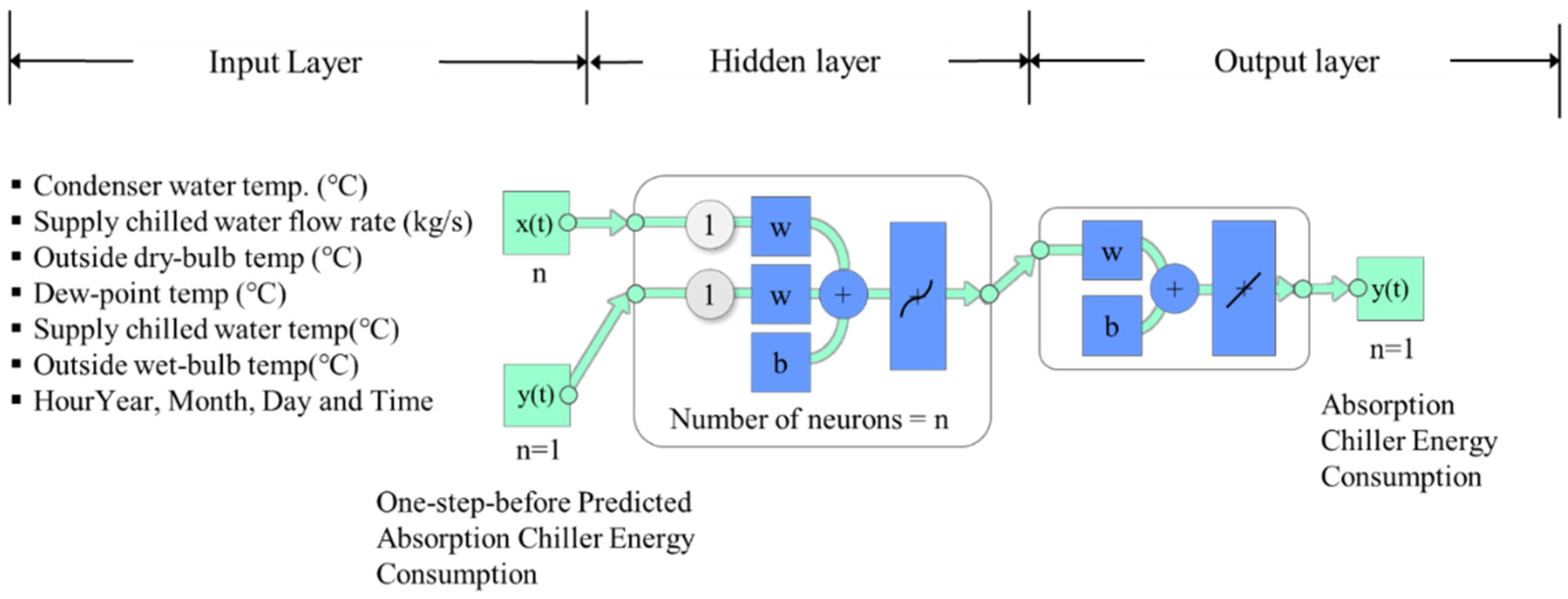

2.1. ANN Model

2.2. Assessment of the Prediction Model

3. The Optimization of the ANN-Based Model Using the Simulation Data

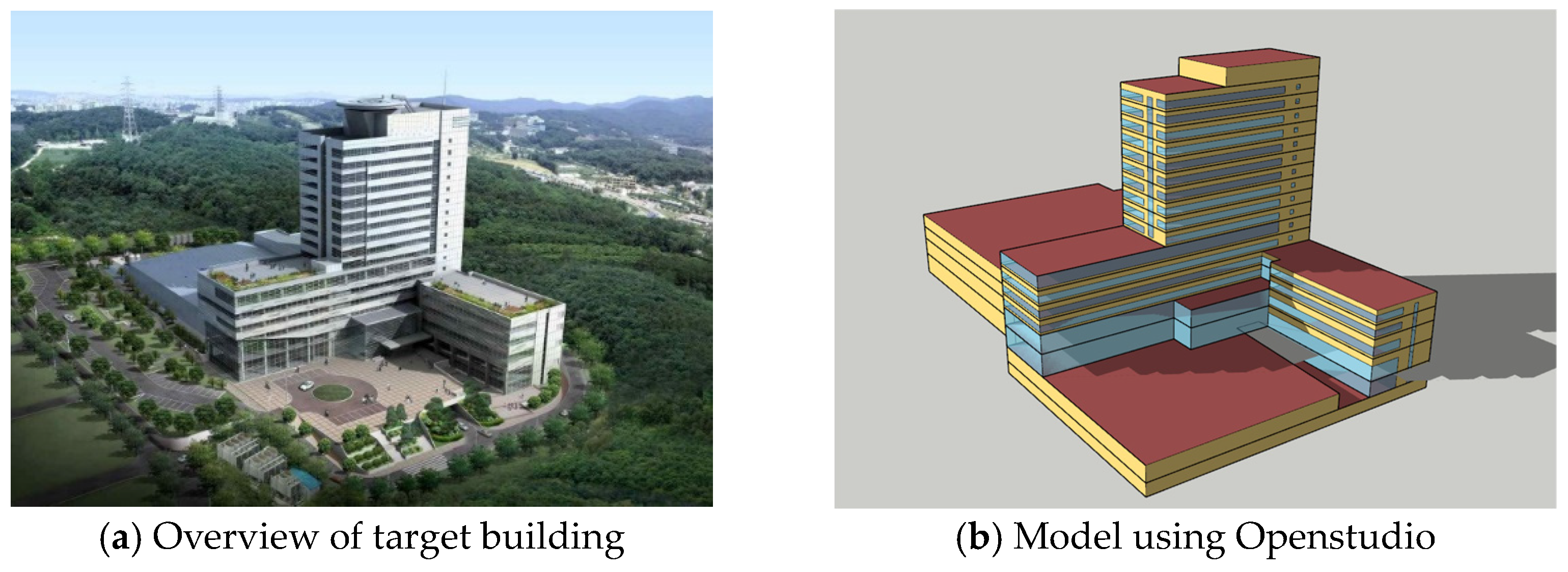

3.1. Energy Simulation for Generating Data

3.2. The Optimization Process for Improving the Predictive Performance of the ANN Model

3.2.1. Input Variables

3.2.2. Input Parameters

3.3. The Result and Discussion

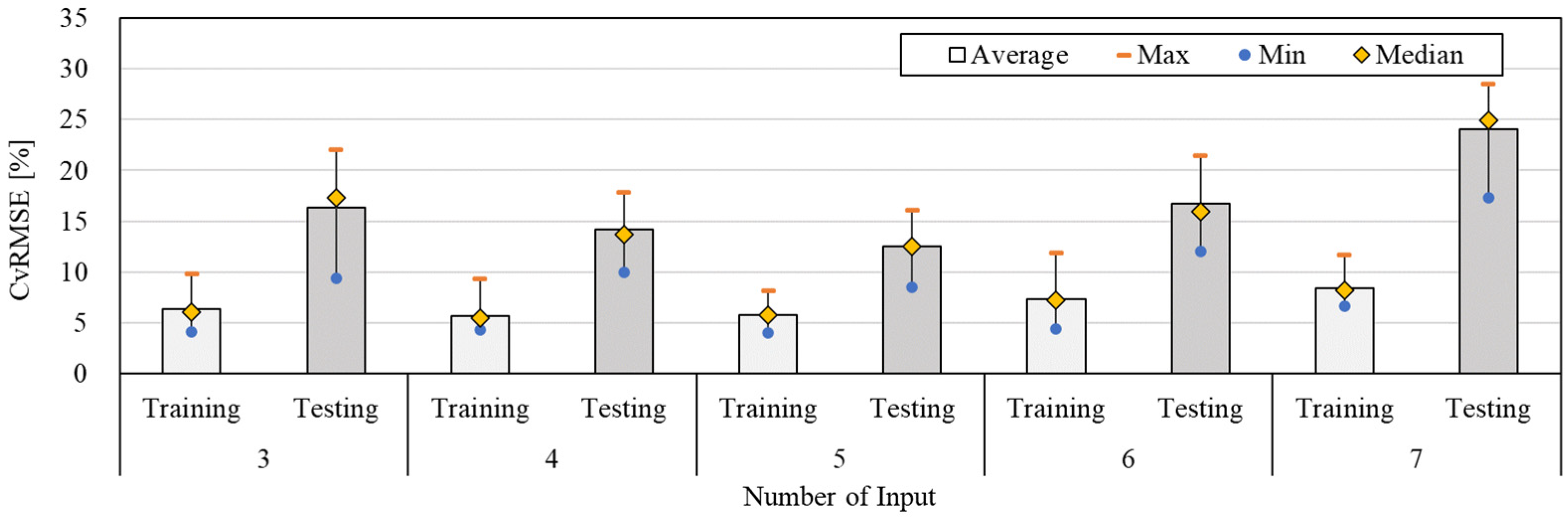

3.3.1. Predictive Performance by the Number of Input Variable Changes

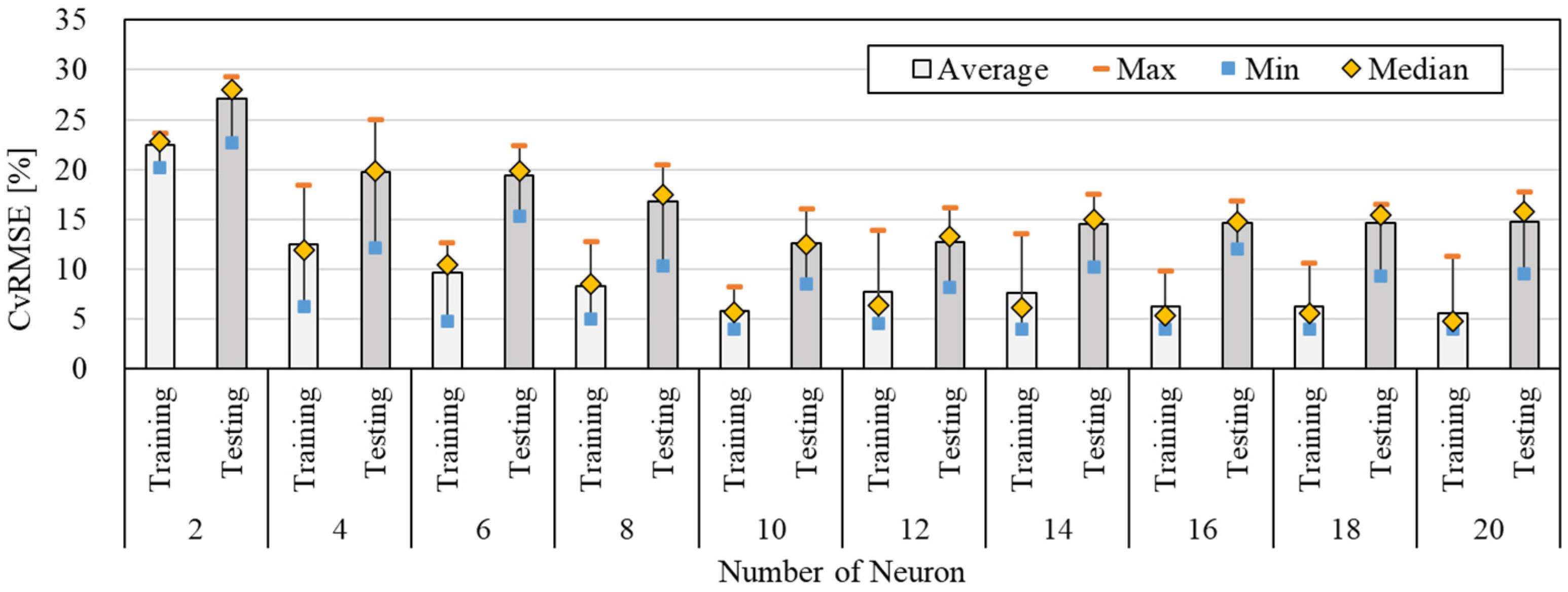

3.3.2. Predictive Performance by the Number of Neurons Changes

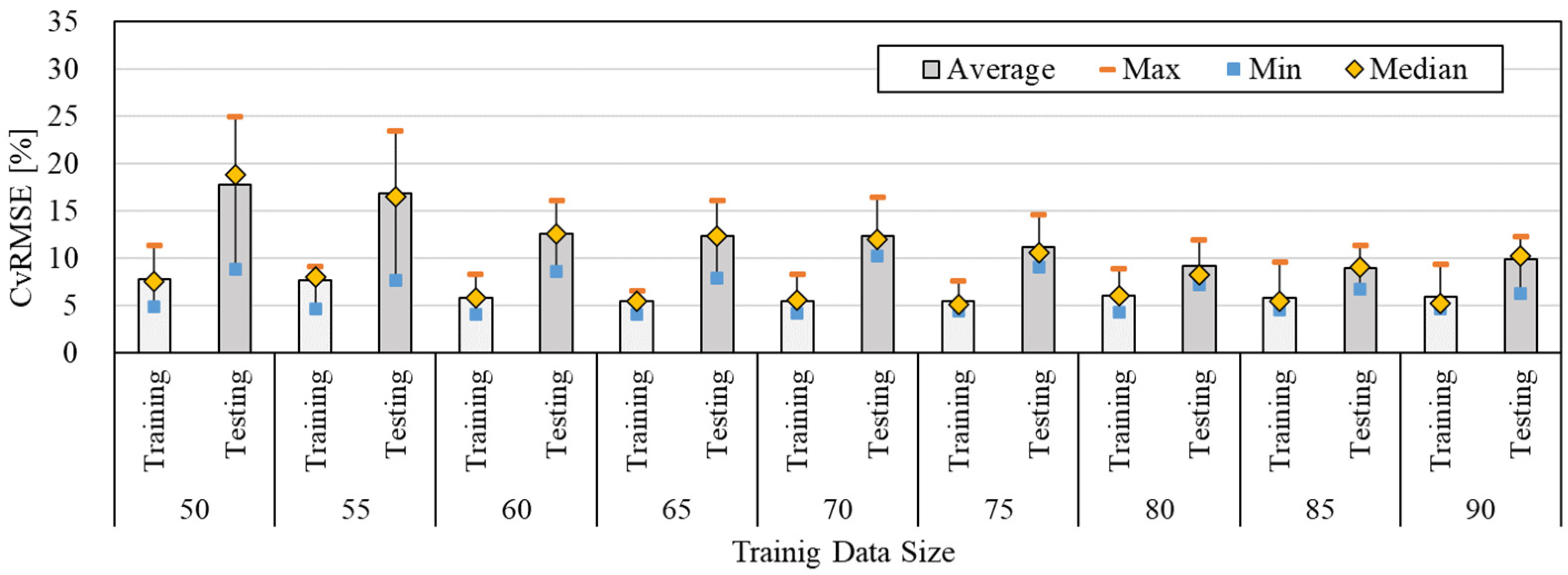

3.3.3. Predictive Performance by the Size Changes of the Training Data

4. The Validation of the ANN Model for a Short Term with Measured Data

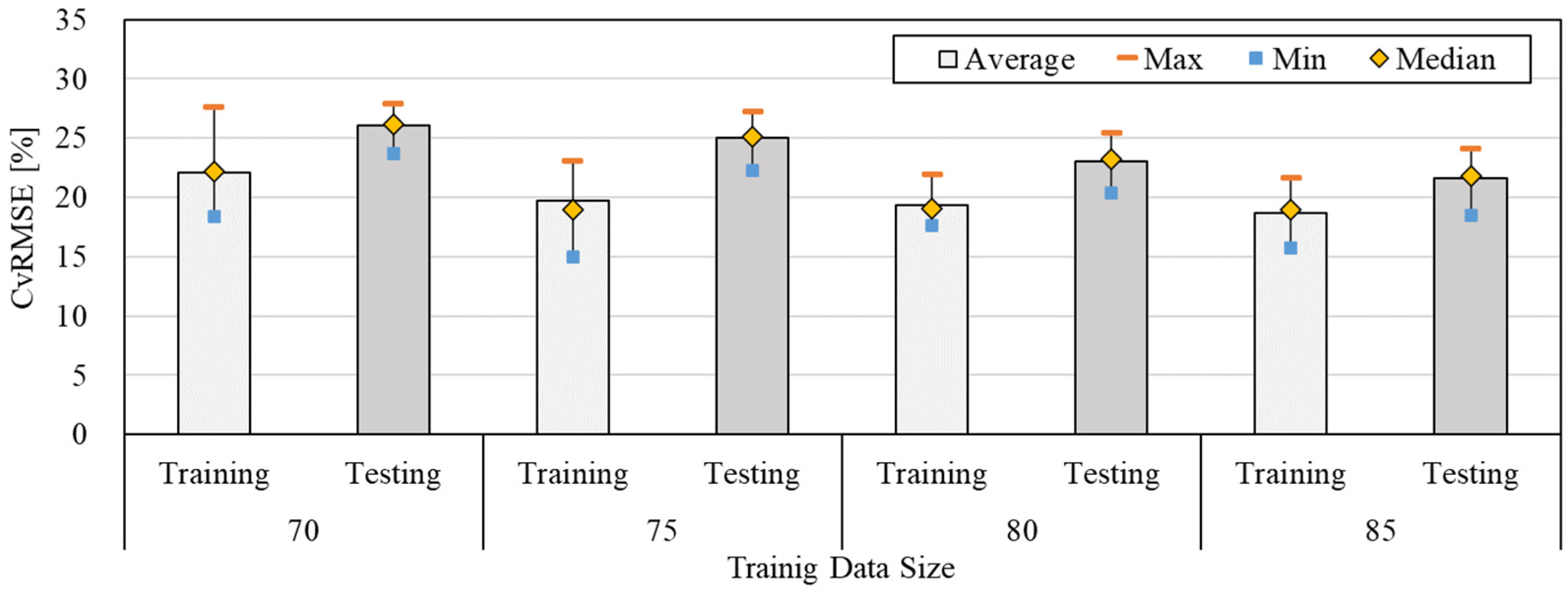

4.1. The Validation of the ANN Model with Measured Data

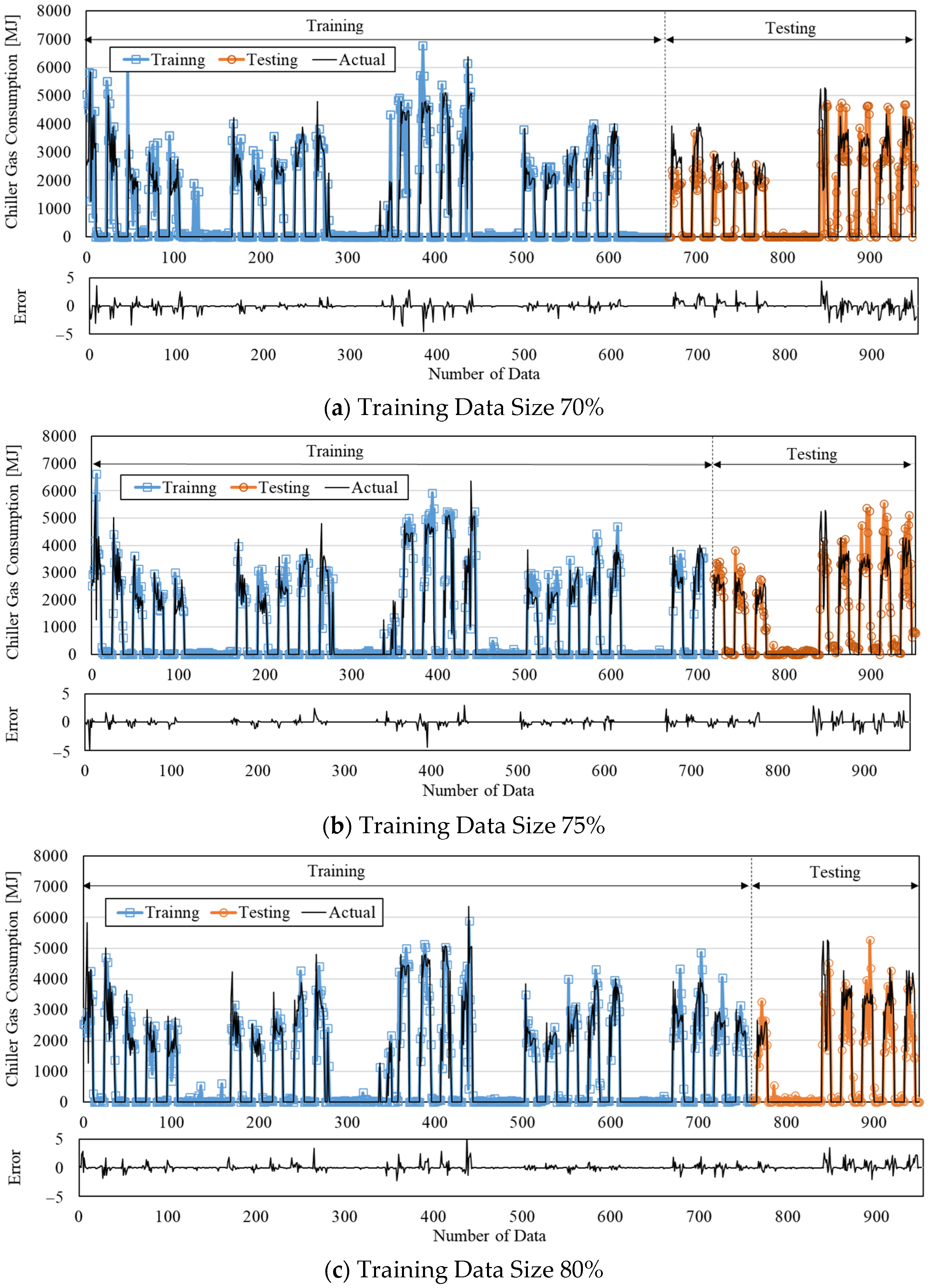

4.2. The Prediction Result of the Energy Consumption for a Short Term by Using Measured Data

5. Conclusions

- Predictive performance was investigated by varying the number of input variables. The average testing error was lowest with five input variables and highest with all seven, so selecting input variables according to their correlation with the prediction target matters more than simply increasing their number.

- Increasing the number of neurons did not by itself improve the predictive performance of the ANN model: the average testing error was lowest with 10 neurons and did not improve further up to 20. Finding a suitable number of neurons is therefore key to ensuring prediction accuracy.

- The ANN model showed acceptable predictive performance when 80 to 85% of the data were used for training.

- The training data size plays a significant role in the predictive performance of the ANN model.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- IEA. Global Status Report For Buildings and Construction 2019; IEA: Paris, France, 2019.

- Dai, B.; Qi, H.; Liu, S.; Zhong, Z.; Li, H.; Song, M.; Ma, M.; Sun, Z. Environmental and economical analyses of transcritical CO2 heat pump combined with direct dedicated mechanical subcooling (DMS) for space heating in China. Energy Convers. Manag. 2019, 198, 111317.

- Dai, B.; Wang, Q.; Liu, S.; Wang, D.; Yu, L.; Li, X.; Wang, Y. Novel configuration of dual-temperature condensation and dual-temperature evaporation high-temperature heat pump system: Carbon footprint, energy consumption, and financial assessment. Energy Convers. Manag. 2023, 292, 117360.

- US Department of Energy. An Assessment of Energy Technologies and Research Opportunities. Quadrennial Technology Review. 2015. Available online: https://www.energy.gov/quadrennial-technology-review-2015 (accessed on 11 July 2023).

- Kim, J.-H.; Seong, N.-C.; Choi, W. Cooling load forecasting via predictive optimization of a nonlinear autoregressive exogenous (NARX) neural network model. Sustainability 2019, 11, 6535.

- Regulations on the Promotion of Rational Use of Energy in Public Institutions. Ministry of Trade, Industry and Energy. 2017. Available online: http://www.Motie.Go.Kr/ (accessed on 16 August 2023).

- Regulations for the Operation of Energy Management System Installation and Confirmation. Available online: https://www.Energy.Or.Kr/ (accessed on 22 August 2023).

- Amasyali, K.; El-Gohary, N.M. A review of data-driven building energy consumption prediction studies. Renew. Sustain. Energy Rev. 2018, 81, 1192–1205.

- Mena, R.; Rodríguez, F.; Castilla, M.; Arahal, M.R. A prediction model based on neural networks for the energy consumption of a bioclimatic building. Energy Build. 2014, 82, 142–155.

- Yuan, Y.; Chen, Z.; Wang, Z.; Sun, Y.; Chen, Y. Attention mechanism-based transfer learning model for day-ahead energy demand forecasting of shopping mall buildings. Energy 2023, 270, 126878.

- Yun, K.; Luck, R.; Mago, P.J.; Cho, H. Building hourly thermal load prediction using an indexed ARX model. Energy Build. 2012, 54, 225–233.

- Shi, G.; Liu, D.; Wei, Q. Energy consumption prediction of office buildings based on echo state networks. Neurocomputing 2016, 216, 478–488.

- Zhou, X.; Lin, W.; Kumar, R.; Cui, P.; Ma, Z. A data-driven strategy using long short term memory models and reinforcement learning to predict building electricity consumption. Appl. Energy 2022, 306, 118078.

- Xiao, Z.; Gang, W.; Yuan, J.; Chen, Z.; Li, J.; Wang, X.; Feng, X. Impacts of data preprocessing and selection on energy consumption prediction model of HVAC systems based on deep learning. Energy Build. 2022, 258, 111832.

- Wang, Z.; Wang, Y.; Srinivasan, R.S. A novel ensemble learning approach to support building energy use prediction. Energy Build. 2018, 159, 109–122.

- Sun, Y.; Haghighat, F.; Fung, B.C.M. A review of the-state-of-the-art in data-driven approaches for building energy prediction. Energy Build. 2020, 221, 110022.

- Olu-Ajayi, R.; Alaka, H.; Owolabi, H.; Akanbi, L.; Ganiyu, S. Data-driven tools for building energy consumption prediction: A review. Energies 2023, 16, 2574.

- Seong, N.; Hong, G. Comparative Evaluation of Building Cooling Load Prediction Models with Multi-Layer Neural Network Learning Algorithms. KIEAE J. 2022, 22, 35–41.

- Seong, N.-C.; Hong, G. An Analysis of the Effect of the Data Preprocess on the Performance of Building Load Prediction Model Using Multilayer Neural Networks. J. Korean Inst. Archit. Sustain. Environ. Build. Syst. 2022, 16, 273–284.

- Lee, C.-W.; Seong, N.-C.; Choi, W.-C. Performance Improvement and Comparative Evaluation of the Chiller Energy Consumption Forecasting Model Using Python. J. Korean Inst. Archit. Sustain. Environ. Build. Syst. 2021, 15, 252–264.

- Junlong, Q.; Shin, J.-W.; Ko, J.-L.; Shin, S.-K. A Study on Energy Consumption Prediction from Building Energy Management System Data with Missing Values Using SSIM and VLSW Algorithms. Trans. Korean Inst. Electr. Eng. 2021, 70, 1540–1547.

- Yoon, Y.-R.; Shin, S.-H.; Moon, H.-J. Analysis of Building Energy Consumption Patterns according to Building Types Using Clustering Methods. J. Korean Soc. Living Environ. Syst. 2017, 24, 232–237.

- Gu, S.; Lee, H.; Yoon, J.; Kim, D. Investigation on Electric Energy Consumption Patterns of Residential Buildings in Four Cities through the Data Mining. J. Korean Sol. Energy Soc. 2022, 42, 127–139.

- Di Nunno, F.; Granata, F. Groundwater level prediction in Apulia region (southern Italy) using NARX neural network. Environ. Res. 2020, 190, 110062.

- Koschwitz, D.; Frisch, J.; van Treeck, C. Data-driven heating and cooling load predictions for non-residential buildings based on support vector machine regression and NARX recurrent neural network: A comparative study on district scale. Energy 2018, 165, 134–142.

- Kim, J.-H.; Seong, N.-C.; Choi, W.-C. Comparative evaluation of predicting energy consumption of absorption heat pump with multilayer shallow neural network training algorithms. Buildings 2022, 12, 13.

- Kim, J.; Hong, Y.; Seong, N.; Kim, D.D. Assessment of ANN algorithms for the concentration prediction of indoor air pollutants in child daycare centers. Energies 2022, 15, 2654.

- American Society of Heating, Refrigerating and Air Conditioning Engineers. ASHRAE Guideline 14-2002, Measurement of Energy and Demand Savings—Measurement of Energy, Demand and Water Savings. 2002. Available online: http://www.eeperformance.org/uploads/8/6/5/0/8650231/ashrae_guideline_14-2002_measurement_of_energy_and_demand_saving.pdf (accessed on 20 July 2023).

- M&V Guidelines: Measurement and Verification for Performance-Based Contracts, Federal Energy Management Program. Available online: https://www.Energy.Gov/eere/femp/downloads/mv-guidelines-measurement-and-verification-performance-based-contracts-version (accessed on 11 August 2023).

- Efficiency Valuation Organization. International Performance Measurement and Verification Protocol, Concepts and Options for Determining Energy and Water Savings; EVO: North Georgia, GA, USA, 2016; Volume 3.

- Seong, N.-C.; Kim, J.-H.; Choi, W. Adjustment of multiple variables for optimal control of building energy performance via a genetic algorithm. Buildings 2020, 10, 195.

| Calibration Type | Index | ASHRAE Guideline 14 [22] | FEMP [23] | IP-MVP [24] |
|---|---|---|---|---|
| Monthly | MBE_monthly | ±5% | ±5% | ±20% |
| Monthly | CvRMSE_monthly | 15% | 15% | - |
| Hourly | MBE_hourly | ±10% | ±10% | ±5% |
| Hourly | CvRMSE_hourly | 30% | 30% | 20% |
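The two indices in the table above can be computed from paired measured and predicted series. The following is a minimal sketch, assuming the commonly used definitions (MBE as the summed residual over the summed measurement, CvRMSE as the RMSE over the mean measurement, both in percent, with a plain `n` in the denominator); the exact formulas used in this study are not reproduced in this section, and the function names and sample values are hypothetical.

```python
import math

# Hourly limits copied from the ASHRAE Guideline 14 column of the table above (percent).
HOURLY_LIMITS = {"mbe": 10.0, "cvrmse": 30.0}


def _check(measured, predicted):
    if len(measured) != len(predicted) or len(measured) == 0:
        raise ValueError("measured and predicted must be non-empty and equally long")


def mbe_percent(measured, predicted):
    """Mean bias error: summed residuals relative to summed measurements."""
    _check(measured, predicted)
    residual_sum = sum(m - p for m, p in zip(measured, predicted))
    return 100.0 * residual_sum / sum(measured)


def cvrmse_percent(measured, predicted):
    """Root-mean-square error relative to the mean measured value."""
    _check(measured, predicted)
    n = len(measured)
    mse = sum((m - p) ** 2 for m, p in zip(measured, predicted)) / n
    return 100.0 * math.sqrt(mse) / (sum(measured) / n)


def meets_hourly_limits(measured, predicted, limits=HOURLY_LIMITS):
    """True when both indices fall inside the given hourly tolerances."""
    return (abs(mbe_percent(measured, predicted)) <= limits["mbe"]
            and cvrmse_percent(measured, predicted) <= limits["cvrmse"])


# Made-up hourly values, for illustration only (not data from the study).
measured = [10.0, 12.0, 14.0, 16.0]
predicted = [11.0, 11.0, 15.0, 15.0]
print(mbe_percent(measured, predicted), cvrmse_percent(measured, predicted))
```

In this made-up example the over- and under-predictions cancel, so the MBE is zero while the CvRMSE is not, which is why the two indices are usually reported together.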

| Component | Features |
|---|---|
| Site Location | Latitude: 37.27° N, Longitude: 126.99° E |
| Weather Data | TRY Suwon |
| Load Convergence Tolerance Value | Delta 0.04 W (default) |
| Temperature Convergence Tolerance Value | Delta 0.4 °C (default) |
| Heat Balance Algorithm | CTF (Conduction Transfer Function) |
| Simulated Hours | 8760 [h] |
| Timestep | Hourly |
| Internal Gain | Lighting 6 [W/m²], People 20 [m²/person], Plug and Process 8 [W/m²] |
| Envelope Summary | Wall 0.36 [W/m²·K], Roof 0.20 [W/m²·K], Window 2.40 [W/m²·K], SHGC 0.497 |
| Operation Schedule | 7:00~18:00 |

| Input Variables [x(t)] | Condenser Water Temp. (°C) | Supply Chilled Water Flow Rate (kg/s) | Outside Dry-Bulb Temp. (°C) | Dew-Point Temp. (°C) | Supply Chilled Water Temp. (°C) | Outside Wet-Bulb Temp. (°C) | Hour |
|---|---|---|---|---|---|---|---|
| Rank | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| Spearman correlation | 0.72 | 0.65 | 0.54 | 0.44 | −0.38 | 0.31 | 0.25 |
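The ranking in the table above follows the absolute value of the Spearman coefficient, which is why the negatively correlated supply chilled water temperature sits in fifth place. A minimal sketch of picking the top-k input variables from these coefficients is shown below; the dictionary and function names are hypothetical, and only the coefficient values come from the table.

```python
# Spearman coefficients copied from the table above.
SPEARMAN = {
    "Condenser Water Temp.": 0.72,
    "Supply Chilled Water Flow Rate": 0.65,
    "Outside Dry-Bulb Temp.": 0.54,
    "Dew-Point Temp.": 0.44,
    "Supply Chilled Water Temp.": -0.38,
    "Outside Wet-Bulb Temp.": 0.31,
    "Hour": 0.25,
}


def top_k_inputs(correlations, k):
    """Return the k variable names with the largest absolute correlation."""
    ranked = sorted(correlations, key=lambda name: abs(correlations[name]), reverse=True)
    return ranked[:k]


# Candidate input sets for 3 to 7 input variables.
for k in range(3, 8):
    print(k, top_k_inputs(SPEARMAN, k))
```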

| Parameter Type | Parameter | Value |
|---|---|---|
| Fixed | Number of Hidden Layers | 3 |
| Fixed | Epochs | 500 |
| Variable | Number of Inputs | 3~7 |
| Variable | Number of Neurons | 2~20 |
| Variable | Training Data Size | 50~90% |
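The parameter ranges above can be enumerated as a set of runs. The sketch below varies one factor at a time around a baseline, which is an assumption about the procedure rather than a confirmed description of it. The step sizes (2 for neurons, 5% for training size) follow the rows of the results tables, and the baseline of 5 inputs, 10 neurons, and 60% training data is inferred from the identical training rows that those three settings share; all names are hypothetical.

```python
# Fixed and variable parameters from the table above.
FIXED = {"hidden_layers": 3, "epochs": 500}
GRIDS = {
    "n_inputs": list(range(3, 8)),            # 3~7
    "n_neurons": list(range(2, 21, 2)),       # 2~20 in steps of 2
    "train_percent": list(range(50, 91, 5)),  # 50~90% in steps of 5
}
# Hypothetical baseline (assumption, inferred from the results tables).
BASELINE = {"n_inputs": 5, "n_neurons": 10, "train_percent": 60}


def one_factor_sweeps(baseline=BASELINE, grids=GRIDS, fixed=FIXED):
    """List (factor, config) pairs, changing one factor at a time."""
    runs = []
    for factor, values in grids.items():
        for value in values:
            config = {**fixed, **baseline, factor: value}
            runs.append((factor, config))
    return runs


runs = one_factor_sweeps()
print(len(runs), "configurations")
```

A full factorial grid over the same ranges would need 5 × 10 × 9 = 450 runs, compared with 24 for the one-factor-at-a-time sweep.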

| Number of Inputs | Period | Average | Maximum | Minimum | SD |
|---|---|---|---|---|---|
| 3 | Training | 6.35 | 9.88 | 4.18 | 1.67 |
| 3 | Testing | 16.30 | 22.05 | 9.44 | 4.30 |
| 4 | Training | 5.69 | 9.37 | 4.34 | 1.49 |
| 4 | Testing | 14.23 | 17.84 | 9.99 | 2.60 |
| 5 | Training | 5.77 | 8.21 | 4.03 | 1.40 |
| 5 | Testing | 13.25 | 19.35 | 8.56 | 3.49 |
| 6 | Training | 7.38 | 11.92 | 4.46 | 1.94 |
| 6 | Testing | 16.74 | 21.43 | 12.05 | 3.21 |
| 7 | Training | 8.43 | 11.65 | 6.72 | 1.45 |
| 7 | Testing | 24.04 | 28.45 | 17.32 | 3.92 |

| Number of Neurons | Period | Average | Maximum | Minimum | SD |
|---|---|---|---|---|---|
| 2 | Training | 22.44 | 23.65 | 20.23 | 1.21 |
| 2 | Testing | 27.14 | 29.33 | 22.68 | 2.41 |
| 4 | Training | 12.47 | 18.43 | 6.22 | 3.36 |
| 4 | Testing | 19.77 | 25.01 | 12.15 | 3.79 |
| 6 | Training | 9.70 | 12.66 | 4.83 | 2.59 |
| 6 | Testing | 19.45 | 22.40 | 15.36 | 2.22 |
| 8 | Training | 8.28 | 12.76 | 5.00 | 2.36 |
| 8 | Testing | 16.80 | 20.50 | 10.37 | 3.73 |
| 10 | Training | 5.77 | 8.21 | 4.03 | 1.40 |
| 10 | Testing | 12.55 | 16.05 | 8.56 | 2.44 |
| 12 | Training | 7.70 | 13.95 | 4.58 | 3.10 |
| 12 | Testing | 12.66 | 16.14 | 8.20 | 2.84 |
| 14 | Training | 7.62 | 13.59 | 4.02 | 3.86 |
| 14 | Testing | 14.52 | 17.55 | 10.27 | 2.33 |
| 16 | Training | 6.22 | 9.81 | 3.98 | 1.94 |
| 16 | Testing | 14.59 | 16.82 | 12.05 | 1.42 |
| 18 | Training | 6.22 | 10.64 | 4.01 | 2.39 |
| 18 | Testing | 14.66 | 16.50 | 9.28 | 2.27 |
| 20 | Training | 5.61 | 11.33 | 3.96 | 2.19 |
| 20 | Testing | 14.74 | 17.72 | 9.57 | 2.84 |
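The neuron table above illustrates why the setting is better chosen from the testing period than from the training period. The sketch below uses the Average column only and assumes the tabulated metric is an error measure where lower is better (the metric is not named in this section); variable names are hypothetical.

```python
# Average values by number of neurons, copied from the table above.
TRAINING_AVG = {2: 22.44, 4: 12.47, 6: 9.70, 8: 8.28, 10: 5.77,
                12: 7.70, 14: 7.62, 16: 6.22, 18: 6.22, 20: 5.61}
TESTING_AVG = {2: 27.14, 4: 19.77, 6: 19.45, 8: 16.80, 10: 12.55,
               12: 12.66, 14: 14.52, 16: 14.59, 18: 14.66, 20: 14.74}


def best_setting(avg_by_setting):
    """Return the setting with the lowest average error."""
    return min(avg_by_setting, key=avg_by_setting.get)


best_by_training = best_setting(TRAINING_AVG)
best_by_testing = best_setting(TESTING_AVG)

# Gap between testing and training averages, a rough indicator of overfitting.
gap = {n: round(TESTING_AVG[n] - TRAINING_AVG[n], 2) for n in TESTING_AVG}

print(best_by_training, best_by_testing, gap[best_by_training], gap[best_by_testing])
```

Selecting on the training average would favour 20 neurons, whereas the testing average is lowest at 10 neurons, which is consistent with the observation that adding neurons did not improve predictive performance.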

| Training Data Size [%] | Period | Average | Max | Min | SD |
|---|---|---|---|---|---|
| 50 | Training | 7.74 | 11.25 | 4.82 | 1.93 |
| 50 | Testing | 17.69 | 24.88 | 8.80 | 5.78 |
| 55 | Training | 7.60 | 9.08 | 4.63 | 1.38 |
| 55 | Testing | 16.77 | 23.43 | 7.59 | 4.70 |
| 60 | Training | 5.77 | 8.21 | 4.03 | 1.40 |
| 60 | Testing | 12.55 | 16.05 | 8.56 | 2.44 |
| 65 | Training | 5.36 | 6.53 | 3.98 | 0.83 |
| 65 | Testing | 12.29 | 16.04 | 7.82 | 2.78 |
| 70 | Training | 5.45 | 8.24 | 4.11 | 1.33 |
| 70 | Testing | 12.26 | 16.42 | 10.23 | 1.88 |
| 75 | Training | 5.37 | 7.59 | 4.32 | 1.05 |
| 75 | Testing | 11.06 | 14.54 | 9.06 | 1.83 |
| 80 | Training | 6.04 | 8.84 | 4.28 | 1.53 |
| 80 | Testing | 9.08 | 11.85 | 7.14 | 1.81 |
| 85 | Training | 5.76 | 9.58 | 4.48 | 1.45 |
| 85 | Testing | 8.93 | 11.23 | 6.69 | 1.45 |
| 90 | Training | 5.87 | 9.30 | 4.65 | 1.46 |
| 90 | Testing | 9.79 | 12.20 | 6.17 | 2.10 |
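The training data size in the table above is the share of the data set assigned to training, with the remainder used for testing. A minimal sketch of such a split is given below, assuming the samples are kept in time order and cut at a single point; whether the study split the data this way is not stated in this section, and the function name and sample count are hypothetical.

```python
def split_by_percent(samples, train_percent):
    """Split an ordered sequence into (training, testing) at train_percent."""
    if not 0 < train_percent < 100:
        raise ValueError("train_percent must be between 0 and 100 (exclusive)")
    # Integer arithmetic avoids floating-point rounding at the cut point.
    cut = len(samples) * train_percent // 100
    return samples[:cut], samples[cut:]


# 200 placeholder hourly samples, for illustration only.
samples = list(range(200))
for percent in range(50, 91, 5):
    train, test = split_by_percent(samples, percent)
    print(percent, len(train), len(test))
```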

| Training Data Size [%] | Period | Average | Max | Min | SD |
|---|---|---|---|---|---|
| 70 | Training | 22.11 | 27.52 | 18.41 | 2.48 |
| 70 | Testing | 26.06 | 27.85 | 23.68 | 1.53 |
| 75 | Training | 19.73 | 22.96 | 14.95 | 2.64 |
| 75 | Testing | 24.87 | 26.91 | 22.27 | 1.62 |
| 80 | Training | 19.34 | 21.81 | 17.65 | 1.46 |
| 80 | Testing | 22.77 | 25.34 | 20.41 | 1.57 |
| 85 | Training | 18.68 | 21.62 | 15.74 | 1.72 |
| 85 | Testing | 19.99 | 22.02 | 17.50 | 1.36 |

| Training Data Size | Period | Prediction [GJ] | Actual [GJ] | Error Rate [%] |
|---|---|---|---|---|
| 70% | Training Period | 678.83 | 639.39 | 5.81% |
| 70% | Testing Period | 341.46 | 358.83 | 5.09% |
| 70% | Total Period | 1020.29 | 998.22 | 2.16% |
| 75% | Training Period | 737.33 | 713.21 | 3.27% |
| 75% | Testing Period | 279.42 | 285.01 | 2.00% |
| 75% | Total Period | 1016.75 | 998.22 | 1.82% |
| 80% | Training Period | 754.86 | 772.42 | 2.33% |
| 80% | Testing Period | 229.17 | 225.80 | 1.47% |
| 80% | Total Period | 984.03 | 998.22 | 1.44% |
| 85% | Training Period | 812.06 | 799.14 | 1.59% |
| 85% | Testing Period | 197.38 | 199.08 | 0.86% |
| 85% | Total Period | 1009.44 | 998.22 | 1.11% |
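The error rates in the table above can be reproduced from the prediction and actual columns. The sketch below assumes the error rate is the absolute difference divided by the predicted value; this definition is inferred from the tabulated numbers rather than stated in this section, and dividing by the actual value instead would give a noticeably different result (about 6.2% for the first row). Names are hypothetical.

```python
# (training size %, period, prediction GJ, actual GJ, reported error rate %)
ROWS = [
    (70, "Training", 678.83, 639.39, 5.81),
    (70, "Testing", 341.46, 358.83, 5.09),
    (70, "Total", 1020.29, 998.22, 2.16),
    (75, "Training", 737.33, 713.21, 3.27),
    (75, "Testing", 279.42, 285.01, 2.00),
    (75, "Total", 1016.75, 998.22, 1.82),
    (80, "Training", 754.86, 772.42, 2.33),
    (80, "Testing", 229.17, 225.80, 1.47),
    (80, "Total", 984.03, 998.22, 1.44),
    (85, "Training", 812.06, 799.14, 1.59),
    (85, "Testing", 197.38, 199.08, 0.86),
    (85, "Total", 1009.44, 998.22, 1.11),
]


def error_rate_percent(prediction, actual):
    """Absolute difference relative to the predicted value (assumed definition)."""
    return 100.0 * abs(prediction - actual) / prediction


for size, period, prediction, actual, reported in ROWS:
    computed = error_rate_percent(prediction, actual)
    print(f"{size}% {period:<8} computed {computed:.2f}% reported {reported:.2f}%")
```

For every training data size, the actual energy of the training and testing periods adds up to the same total of 998.22 GJ, so the rows differ only in where the data set is divided.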

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hong, G.; Seong, N. Optimization of the ANN Model for Energy Consumption Prediction of Direct-Fired Absorption Chillers for a Short-Term. Buildings 2023, 13, 2526. https://doi.org/10.3390/buildings13102526
