Investigation of Edge Computing in Computer Vision-Based Construction Resource Detection

Abstract

1. Introduction

2. Materials and Methods

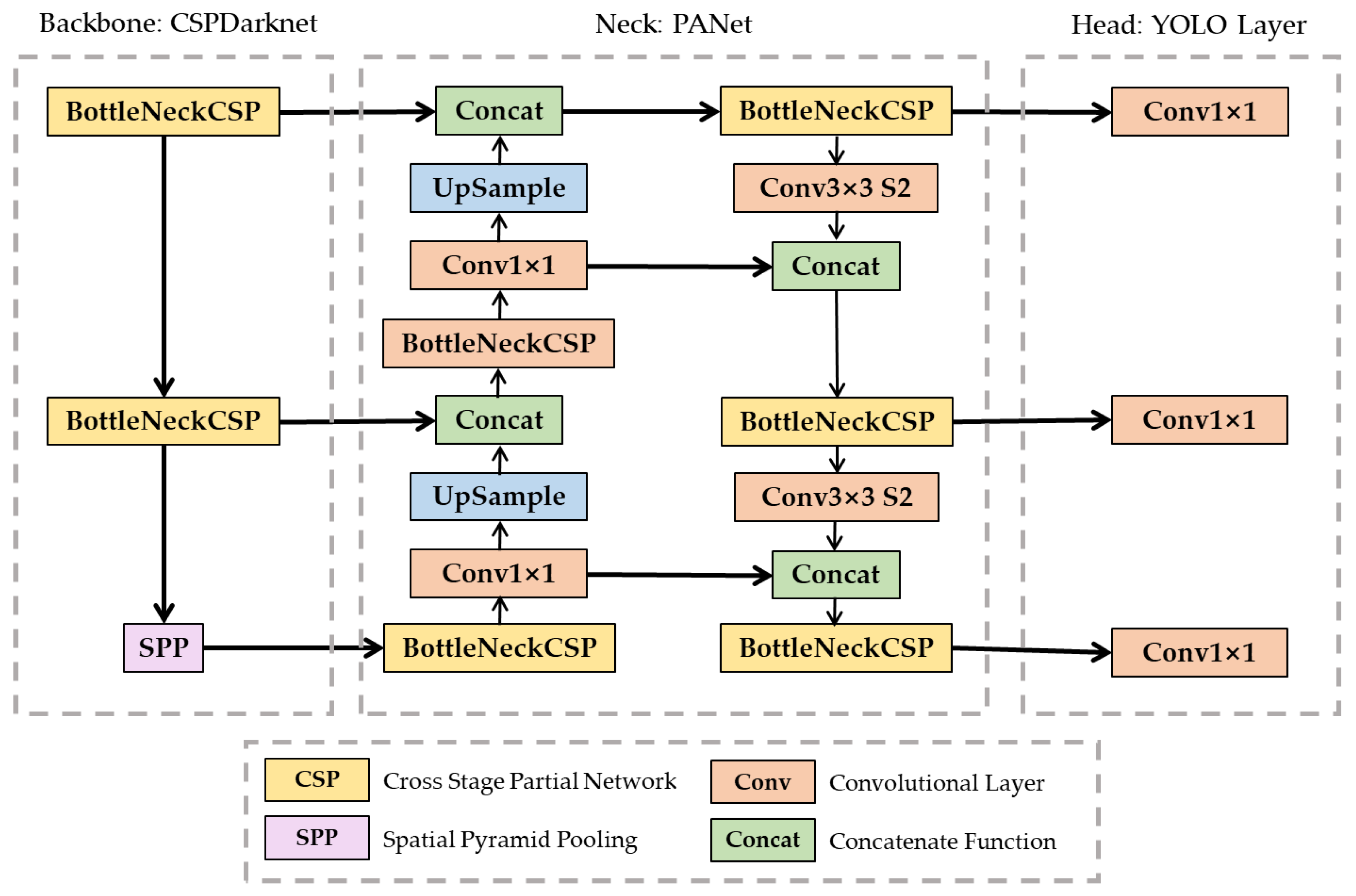

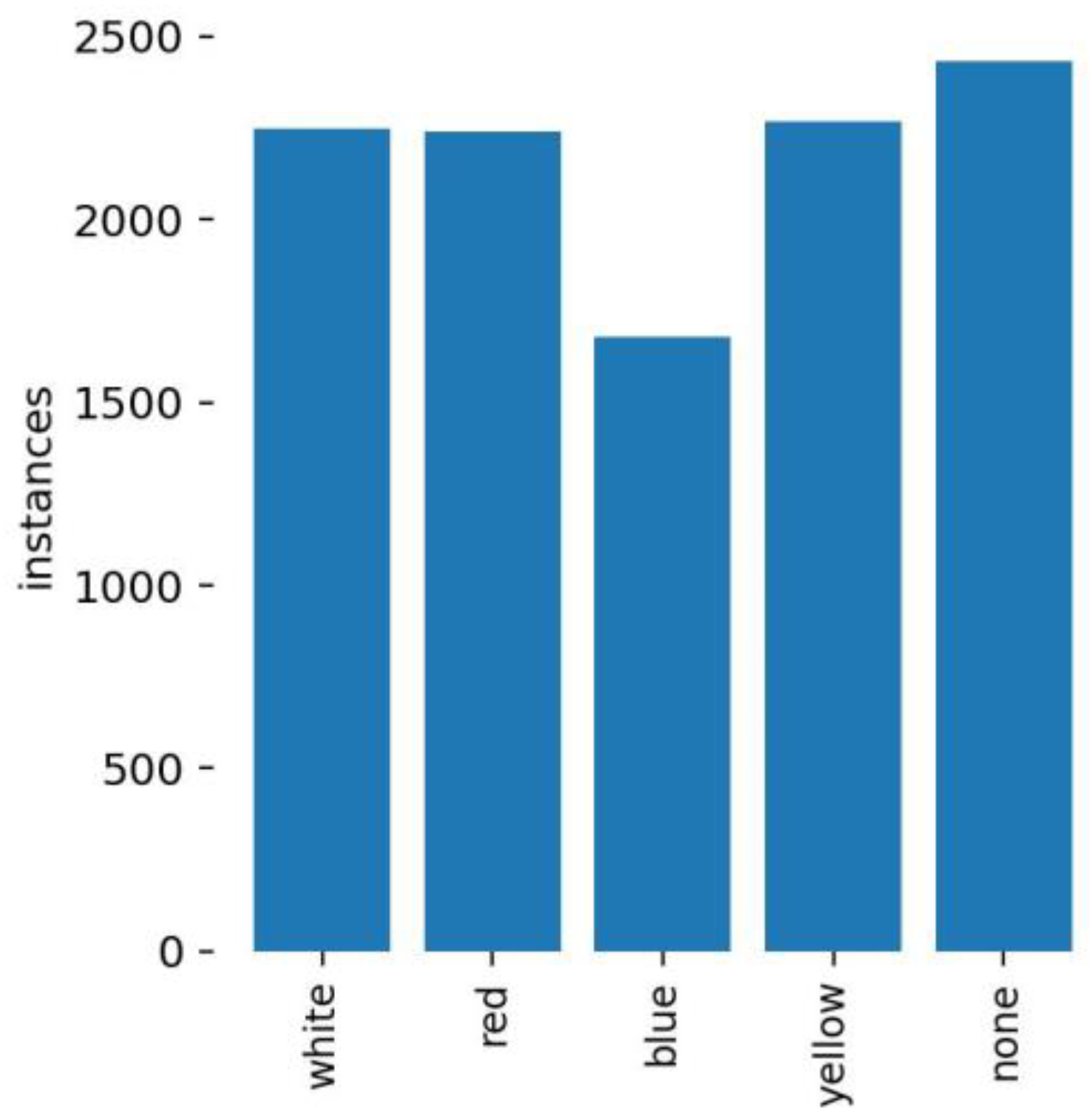

2.1. Hardhat Detection

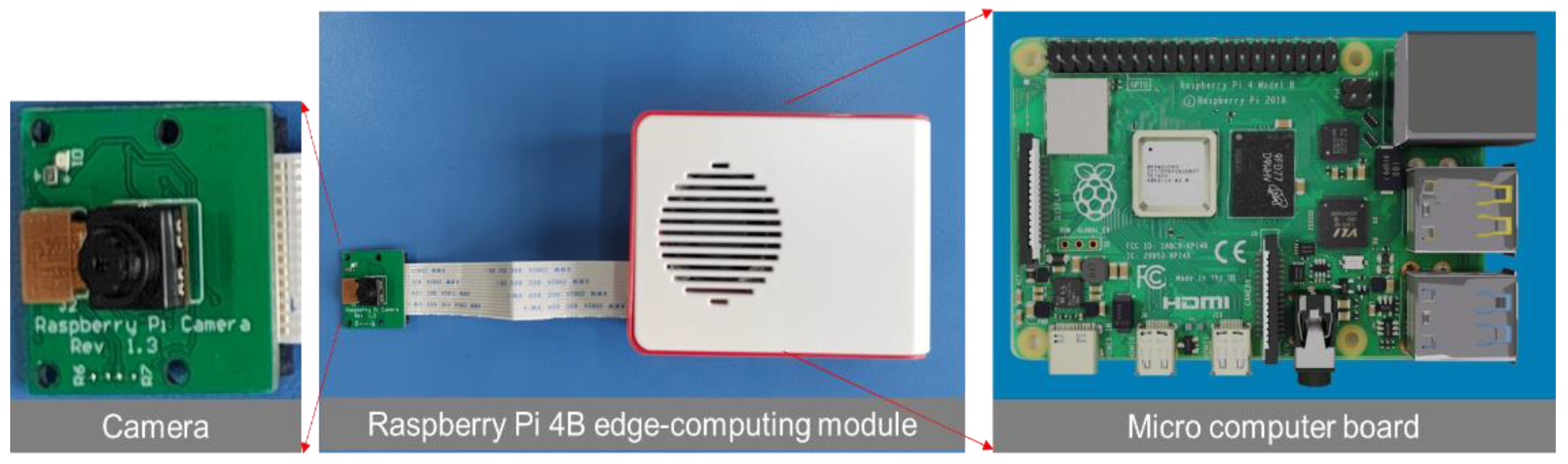

2.2. Edge Computing Device Selection

2.3. Detection Model Embedding

2.4. Evaluation Criteria and Strategies

3. Results

3.1. Training and Validation of the YOLO-v5 Model

3.2. Comparison of Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Xiao, B.; Kang, S.-C. Development of an Image Data Set of Construction Machines for Deep Learning Object Detection. J. Comput. Civ. Eng. 2021, 35, 05020005.

2. Huang, Y.; Hammad, A.; Zhu, Z. Providing Proximity Alerts to Workers on Construction Sites Using Bluetooth Low Energy RTLS. Autom. Constr. 2021, 132, 103928.

3. Sherafat, B.; Rashidi, A.; Lee, Y.-C.; Ahn, C.R. A Hybrid Kinematic-Acoustic System for Automated Activity Detection of Construction Equipment. Sensors 2019, 19, 4286.

4. Xiao, B.; Lin, Q.; Chen, Y. A Vision-Based Method for Automatic Tracking of Construction Machines at Nighttime Based on Deep Learning Illumination Enhancement. Autom. Constr. 2021, 127, 103721.

5. Kim, J. Visual Analytics for Operation-Level Construction Monitoring and Documentation: State-of-the-Art Technologies, Research Challenges, and Future Directions. Front. Built Environ. 2020, 6, 575738.

6. Chen, C.; Zhu, Z.; Hammad, A.; Akbarzadeh, M. Automatic Identification of Idling Reasons in Excavation Operations Based on Excavator–Truck Relationships. J. Comput. Civ. Eng. 2021, 35, 04021015.

7. Xiao, B.; Chen, C.; Yin, X. Recent Advancements of Robotics in Construction. Autom. Constr. 2022, 144, 104591.

8. Park, M.-W.; Elsafty, N.; Zhu, Z. Hardhat-Wearing Detection for Enhancing On-Site Safety of Construction Workers. J. Constr. Eng. Manag. 2015, 141, 04015024.

9. Mneymneh, B.E.; Abbas, M.; Khoury, H. Automated Hardhat Detection for Construction Safety Applications. Procedia Eng. 2017, 196, 895–902.

10. Wu, J.; Cai, N.; Chen, W.; Wang, H.; Wang, G. Automatic Detection of Hardhats Worn by Construction Personnel: A Deep Learning Approach and Benchmark Dataset. Autom. Constr. 2019, 106, 102894.

11. Nath, N.D.; Behzadan, A.H.; Paal, S.G. Deep Learning for Site Safety: Real-Time Detection of Personal Protective Equipment. Autom. Constr. 2020, 112, 103085.

12. Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; Kwon, Y.; Fang, J.; Michael, K.; Lorna; Abhiram, A.; Nadar, J.; et al. Ultralytics/yolov5: v6.1–TensorRT, TensorFlow Edge TPU and OpenVINO Export and Inference. 2022. Available online: https://zenodo.org/record/6222936#.Y5FoK31BxPY (accessed on 31 October 2022).

13. Ding, L.; Fang, W.; Luo, H.; Love, P.E.D.; Zhong, B.; Ouyang, X. A Deep Hybrid Learning Model to Detect Unsafe Behavior: Integrating Convolution Neural Networks and Long Short-Term Memory. Autom. Constr. 2018, 86, 118–124.

14. Long Short-Term Memory | Neural Computation | MIT Press, (n.d.). Available online: https://direct.mit.edu/neco/article-abstract/9/8/1735/6109/Long-Short-Term-Memory?redirectedFrom=fulltext (accessed on 20 September 2022).

15. Luo, X.; Li, H.; Cao, D.; Yu, Y.; Yang, X.; Huang, T. Towards Efficient and Objective Work Sampling: Recognizing Workers’ Activities in Site Surveillance Videos with Two-Stream Convolutional Networks. Autom. Constr. 2018, 94, 360–370.

16. Luo, X. Capturing and Understanding Workers’ Activities in Far-Field Surveillance Videos with Deep Action Recognition and Bayesian Nonparametric Learning. Comput.-Aided Civ. Infrastruct. Eng. 2019, 34, 333–351.

17. Kim, J.; Chi, S. Action Recognition of Earthmoving Excavators Based on Sequential Pattern Analysis of Visual Features and Operation Cycles. Autom. Constr. 2019, 104, 255–264.

18. Chen, C.; Zhu, Z.; Hammad, A.M. Automated Excavators Activity Recognition and Productivity Analysis From Construction Site Surveillance Videos. Autom. Constr. 2020, 110, 103045.

19. Wang, T.; Zhang, G.; Liu, A.; Bhuiyan, M.Z.A.; Jin, Q. A Secure IoT Service Architecture With an Efficient Balance Dynamics Based on Cloud and Edge Computing. IEEE Internet Things J. 2019, 6, 4831–4843.

20. Cao, K.; Liu, Y.; Meng, G.; Sun, Q. An Overview on Edge Computing Research. IEEE Access 2020, 8, 85714–85728.

21. Hossain, M.S.; Muhammad, G. Emotion Recognition Using Secure Edge and Cloud Computing. Inf. Sci. 2019, 504, 589–601.

22. Satyanarayanan, M. The Emergence of Edge Computing. Computer 2017, 50, 30–39.

23. Projects | Computer coding for kids and teens | Raspberry Pi, (n.d.). Available online: https://projects.raspberrypi.org/en/projects/raspberry-pi-setting-up/1 (accessed on 28 November 2022).

24. Katsamenis, I.; Karolou, E.E.; Davradou, A.; Protopapadakis, E.; Doulamis, A.; Doulamis, N.; Kalogeras, D. TraCon: A Novel Dataset for Real-Time Traffic Cones Detection Using Deep Learning. arXiv 2022, arXiv:2205.11830v1.

25. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

26. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

27. Heartexlabs/labelImg. 2022. Available online: https://github.com/heartexlabs/labelImg (accessed on 9 October 2022).

28. Arabi, S.; Haghighat, A.; Sharma, A. A Deep-Learning-Based Computer Vision Solution For Construction Vehicle Detection. Comput.-Aided Civ. Infrastruct. Eng. 2020, 35, 753–767.

29. Cortex-A72, (n.d.). Available online: https://developer.arm.com/Processors/Cortex-A72 (accessed on 28 November 2022).

30. Broadcom VideoCore VI, (n.d.). Available online: https://www.cpu-monkey.com/en/igpu-broadcom_videocore_vi-221 (accessed on 28 November 2022).

31. GeForce GTX 16 Series Graphics Card | NVIDIA, (n.d.). Available online: https://www.nvidia.com/en-us/geforce/graphics-cards/16-series/ (accessed on 28 November 2022).

32. Intel Core i5-9300H Processor 8M Cache up to 4.10 GHz Product Specifications, (n.d.). Available online: https://ark.intel.com/content/www/us/en/ark/products/191075/intel-core-i59300h-processor-8m-cache-up-to-4-10-ghz.html (accessed on 28 November 2022).

33. Openvinotoolkit/Openvino. 2022. Available online: https://github.com/openvinotoolkit/openvino (accessed on 13 October 2022).

34. Xuehui, A.; Li, Z.; Zuguang, L.; Chengzhi, W.; Pengfei, L.; Zhiwei, L. Dataset and Benchmark for Detecting Moving Objects In Construction Sites. Autom. Constr. 2021, 122, 103482.

35. Duan, R.; Deng, H.; Tian, M.; Deng, Y.; Lin, J. SODA: A Large-Scale Open Site Object Detection Dataset for Deep Learning in Construction. Autom. Constr. 2022, 142, 104499.

| Method | Applicable Situation | Network Bandwidth Pressure | Real Time | Calculation Mode |
|---|---|---|---|---|
| Cloud computing | Global | More | High | Large-scale centralized processing |
| Edge computing | Local | Less | Low | Small-scale intelligent analysis |

| | OMEN by HP Laptop 15-dc1xxx | Raspberry Pi 4B |
|---|---|---|
| CPU | Intel(R) Core(TM) i5-9300H CPU @ 2.40 GHz [32] | 1.5 GHz 64-bit quad-core ARM Cortex-A72 [29] |
| GPU | NVIDIA GeForce GTX 1660Ti [31] | Broadcom VideoCore VI @ 500 MHz [30] |
| Memory | 16 GB | 4 GB |
| Wi-Fi network | 5 GHz double | 5 GHz double |
| Size | 36 × 26.3 × 2.5 cm | 85 × 56 mm |

| Image Number | Image Size | Local Computer Time (s) | Local Computer Accuracy | Raspberry Pi Time (s) | Raspberry Pi Accuracy |
|---|---|---|---|---|---|
| 1 | 5408 × 3680 | 1.465 | 12/13 | 6.57 | 12/13 |
| 2 | 4096 × 3072 | 1.271 | 4/4 | 6.47 | 4/4 |
| 3 | 4096 × 3072 | 1.524 | 5/10 | 5.39 | 5/10 |
| 4 | 5408 × 3680 | 1.497 | 9/13 | 6.12 | 9/13 |
| 5 | 5408 × 3680 | 1.619 | 3/3 | 6.40 | 3/3 |
| 6 | 5408 × 3680 | 1.606 | 4/8 | 6.40 | 4/8 |
| 7 | 5408 × 3680 | 1.551 | 5/10 | 6.41 | 5/10 |
| 8 | 4096 × 3072 | 1.304 | 1/2 | 5.13 | 1/2 |
| Average | | 1.505 | 73.07% | 6.11 | 73.07% |
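The per-image timings in the table above can be compared directly by dividing each Raspberry Pi time by the matching local-computer time. The snippet below is a minimal sketch of that arithmetic. The function name `slowdown_factors` and the variable names are illustrative and are not taken from the paper; only the timing values come from the table.

```python
# Per-image inference times in seconds, copied from the table above.
laptop_times_s = [1.465, 1.271, 1.524, 1.497, 1.619, 1.606, 1.551, 1.304]
pi_times_s = [6.57, 6.47, 5.39, 6.12, 6.40, 6.40, 6.41, 5.13]


def slowdown_factors(baseline, device):
    """Return device_time / baseline_time for each paired measurement."""
    if len(baseline) != len(device):
        raise ValueError("timing lists must have the same length")
    return [d / b for b, d in zip(baseline, device)]


factors = slowdown_factors(laptop_times_s, pi_times_s)

for image_number, factor in enumerate(factors, start=1):
    print(f"image {image_number}: Raspberry Pi takes {factor:.2f}x the laptop time")

print(f"range: {min(factors):.2f}x to {max(factors):.2f}x")
```

With these values, the Raspberry Pi takes roughly 3.5 to 5.1 times as long as the laptop on the same image, while the accuracy columns are identical on both devices.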

| Video Frames | Video Length | Local Computer Time | Local Computer Accuracy (%) | Raspberry Pi Time | Raspberry Pi Accuracy (%) |
|---|---|---|---|---|---|
| 34,802 | 23 min and 12 s | 33 min and 12 s | 78.06 | 3 h and 14 min | 78.06 |
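The video results above are easier to relate to real-time operation when converted to frames per second. The following is a minimal sketch of that conversion. It assumes the reported times are the total processing times for the whole video, which the table does not state explicitly. The helper names `to_seconds` and `throughput_fps` are illustrative, not from the paper.

```python
def to_seconds(hours=0, minutes=0, seconds=0):
    """Convert an h/min/s duration to seconds."""
    return hours * 3600 + minutes * 60 + seconds


def throughput_fps(frames, elapsed_s):
    """Average number of frames handled per second over the whole run."""
    return frames / elapsed_s


# Values copied from the table above.
FRAMES = 34_802
video_s = to_seconds(minutes=23, seconds=12)   # video length
laptop_s = to_seconds(minutes=33, seconds=12)  # local computer processing time
pi_s = to_seconds(hours=3, minutes=14)         # Raspberry Pi processing time

source_fps = throughput_fps(FRAMES, video_s)
laptop_fps = throughput_fps(FRAMES, laptop_s)
pi_fps = throughput_fps(FRAMES, pi_s)

print(f"source video: {source_fps:.1f} fps")
print(f"laptop:       {laptop_fps:.1f} fps ({laptop_s / video_s:.2f}x video length)")
print(f"Raspberry Pi: {pi_fps:.1f} fps ({pi_s / video_s:.2f}x video length)")
```

Under that assumption the source video runs at about 25 fps, the laptop processes about 17.5 fps, and the Raspberry Pi about 3.0 fps. Neither device keeps pace with the video frame rate, and the Raspberry Pi needs roughly 8.4 times the video length.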

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, C.; Gu, H.; Lian, S.; Zhao, Y.; Xiao, B. Investigation of Edge Computing in Computer Vision-Based Construction Resource Detection. Buildings 2022, 12, 2167. https://doi.org/10.3390/buildings12122167
