1. Introduction

In modern life, the market for new materials has become highly competitive. As a result, clean steel has become increasingly important in the steel industry and plays a vital role in defending steel products against newer competing materials [1,2,3,4,5]. To produce steel with satisfactory cleanliness and low contents of impurities such as sulfur, phosphorus, non-metallic inclusions, hydrogen and nitrogen, it is necessary to precisely control the composition and temperature of liquid steel [6,7]. Steelmakers are urged to improve operating conditions throughout the steelmaking process, for example through deoxidant and alloy additions, secondary metallurgy treatments and casting strategies, to obtain high-purity steel [8]. In practice, vacuum tank degassing (VTD) is widely used as a secondary steelmaking process to produce steel with low contents of carbon, hydrogen and nitrogen [9,10,11].

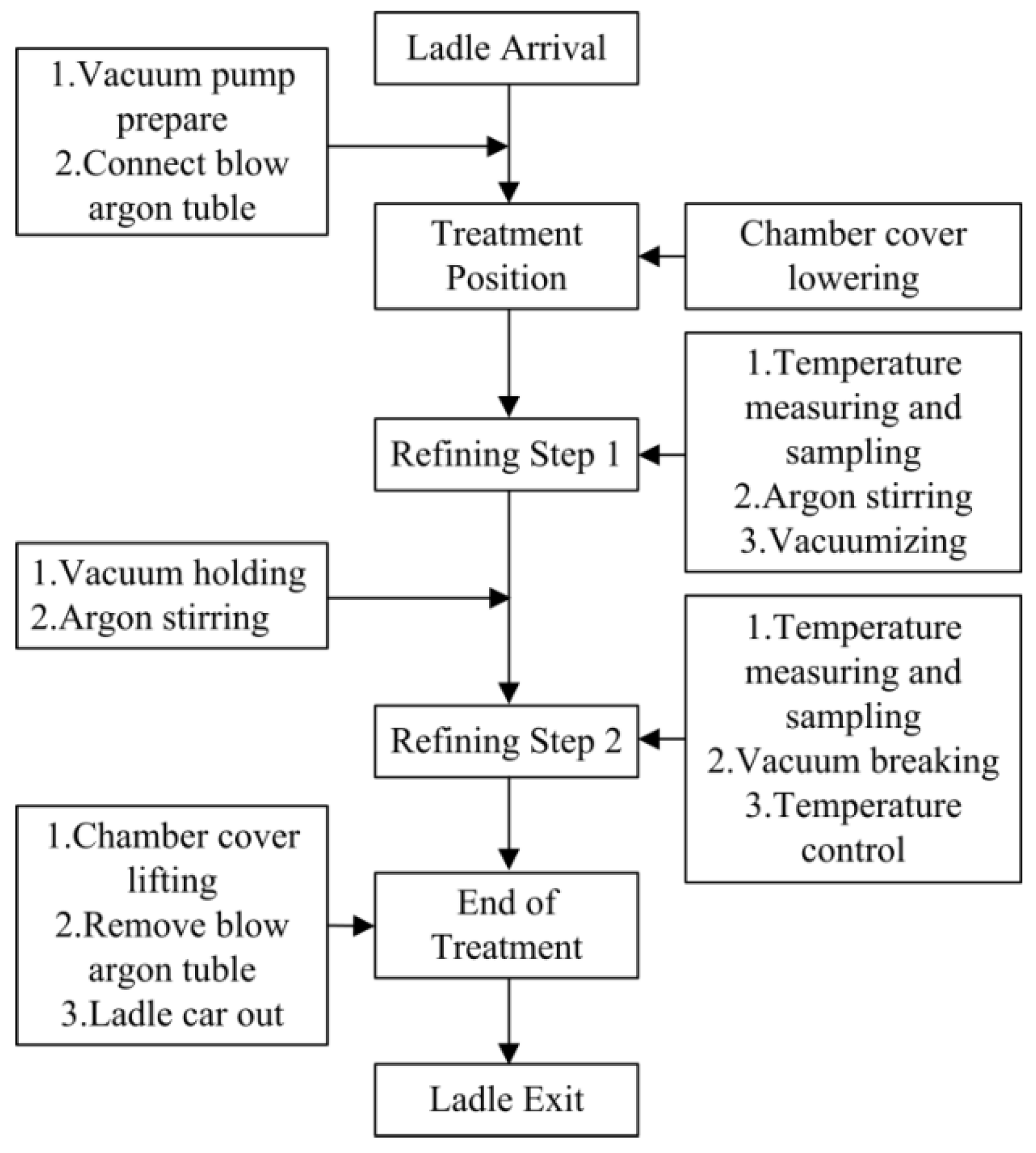

The main purpose of the VTD refining process is to obtain qualified liquid steel with the desired composition and temperature. One way to improve temperature control of liquid steel in VTD is to predict the temperature accurately. The degassing process in VTD, the most critical step in the production of clean steel, has been investigated in a number of studies using various approaches, with the goal of better understanding the effects of process parameters and thereby further improving energy efficiency. Several mathematical models of VTD refining have been developed [12,13,14]. These models were formulated as systems of differential equations describing the chemical and physical changes occurring in the ladle. VTD is a typical nonlinear system, and some of its mechanisms are still not well understood, so it is very difficult, or even impossible, to establish a standard mathematical model that encompasses all of the dynamics of a VTD process. The existing models are local models of dehydrogenation [13] or denitrogenation [14]: they describe only some of the process properties and are time-consuming to compute, so it is almost impossible to predict the temperature of liquid steel with models of this kind.

An artificial neural network (ANN) is an information processing mechanism [15] that defines a mathematical relationship between process inputs and outputs by “learning” directly from historical data. ANNs have been widely applied in steelmaking. Gajic et al. [16], for instance, constructed an energy consumption model of an electric arc furnace (EAF) based on feedforward ANNs. Temperature prediction models for the EAF have also been established using neural networks [17,18]. Rajesh et al. [19] employed feedforward neural networks to predict the intermediate stopping temperature and end-blow oxygen in the LD converter steelmaking process. Wang et al. [20] developed a liquid steel temperature prediction model for a ladle furnace using general regression neural networks as the predictor in their ensemble method. Our previous work [21] addressed the end-temperature classification of VTD using classification and regression trees (CARTs) and extracted operating rules for decision making in the process. The main feature that makes neural networks suitable for predicting the end temperature of liquid steel in VTD is that they are nonlinear regression algorithms capable of modeling high-dimensional systems. These black-box models offer an alternative to traditional knowledge representation for solving prediction problems in industrial production processes.

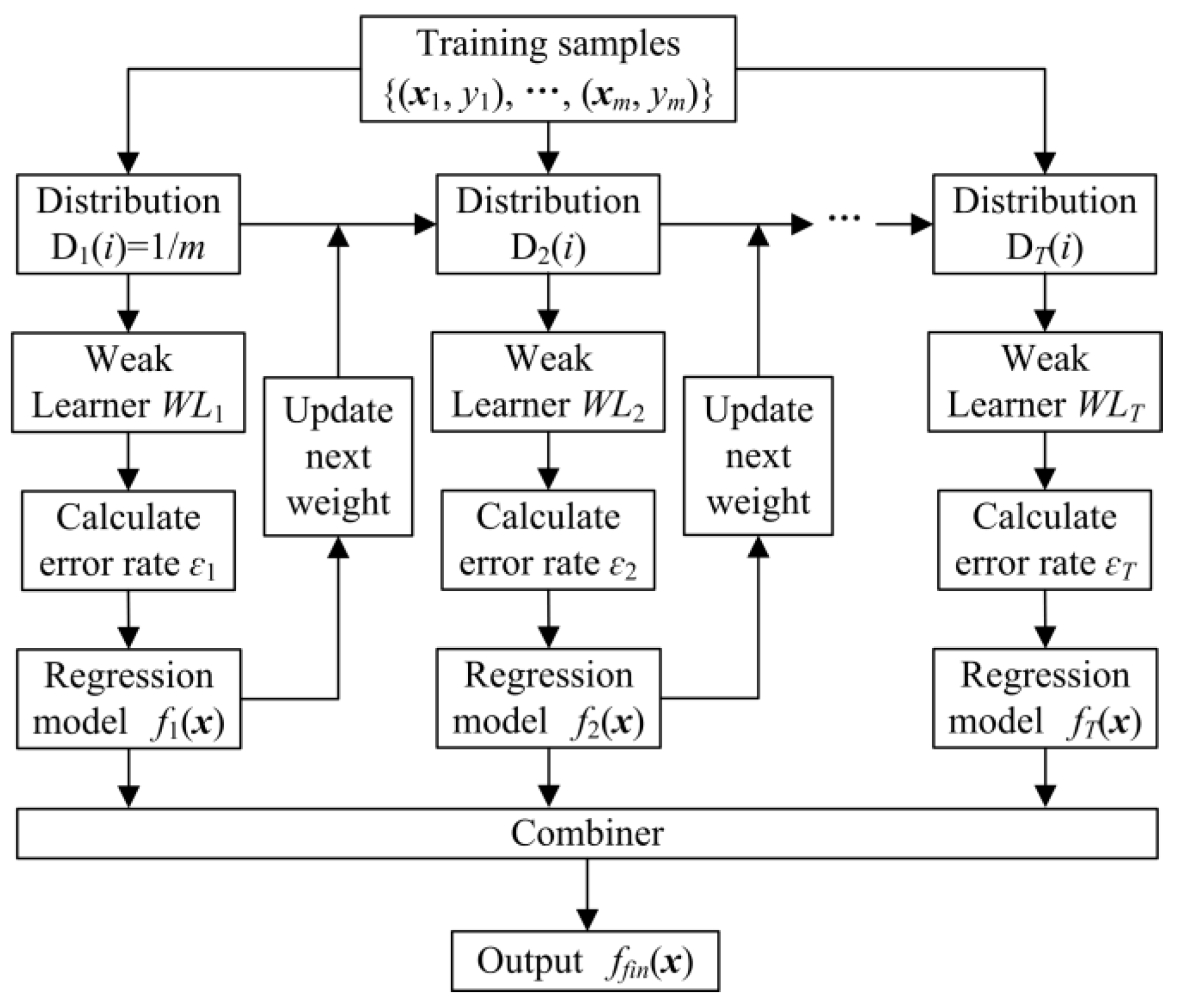

Nowadays, ensemble techniques such as Bagging [22] and Boosting [23] are broadly used because they often achieve substantially better performance in classification and regression problems than a single classifier or regressor. The AdaBoost (Adaptive Boosting) algorithm is one of the most prevalent boosting methods and was originally developed for binary classification problems [23]. As extensions of AdaBoost, AdaBoost.M1 and AdaBoost.M2 were proposed to handle multi-class problems. The main idea of AdaBoost is weighted voting: each weak classifier receives a weight determined by its training error, and the weighted votes are aggregated into a combined classifier that is stronger than any single weak classifier.
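The weighted-voting idea can be made concrete with a minimal sketch that combines the labels predicted by several weak classifiers for one sample, weighting each vote by log(1/β) with β = ε/(1 − ε), as in AdaBoost.M1. The function names are hypothetical and the error values in the usage line are invented for illustration; this is not the implementation used in this work.

```python
import math
from collections import defaultdict


def vote_weight(error):
    """AdaBoost.M1-style vote weight log(1/beta), with beta = error / (1 - error).

    Assumes 0 < error < 0.5, as AdaBoost.M1 requires of each weak classifier.
    """
    beta = error / (1.0 - error)
    return math.log(1.0 / beta)


def weighted_vote(predictions, errors):
    """Combine one sample's labels from several weak classifiers.

    predictions[t] is the label from classifier t; errors[t] is its weighted training error.
    """
    scores = defaultdict(float)
    for label, err in zip(predictions, errors):
        scores[label] += vote_weight(err)  # accurate classifiers cast heavier votes
    return max(scores, key=scores.get)


# One accurate classifier (error 0.05) outvotes two weaker ones that agree with each other.
print(weighted_vote(["a", "b", "b"], [0.05, 0.3, 0.35]))
```

The example shows that the aggregated decision is not a simple majority: a classifier with a low training error can override several less reliable ones.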

For regression problems, AdaBoost.R extends AdaBoost.M2 by transforming the regression samples into a classification label space. Drucker [24] improved AdaBoost.R into AdaBoost.R2, which uses a loss function to calculate the error rate of a weak regressor. Solomatine and Shrestha [25] proposed AdaBoost.RT (R and T stand for regression and threshold), which introduces a constant threshold ϕ to demarcate samples as correct or incorrect predictions: a sample whose prediction has an absolute relative error less than ϕ is marked as a correct prediction; otherwise, it is regarded as incorrect. According to Shrestha and Solomatine’s experiments [26], the committee machine is stable when ϕ is between 0 and 0.4. To select the threshold value more accurately, Tian and Mao [27] presented a modified AdaBoost.RT algorithm with a self-adaptive mechanism that adjusts the threshold according to the change trend of the prediction error at each iteration. This approach performed well in predicting the temperature of liquid steel in a ladle furnace, but the initial value ϕ₀ still needs to be fixed manually. Moreover, Zhang and Yang [28] proposed a robust AdaBoost.RT that uses the standard deviation of the approximation errors to determine the threshold; in this approach, the absolute error is used to demarcate samples as either well or poorly predicted. The method performed well on regression problems from the UCI Machine Learning Repository [29]. In our study, the value of ϕ is modified dynamically and self-adjustably, instead of being held constant, to improve the original AdaBoost.RT algorithm.
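The constant-threshold scheme of the original AdaBoost.RT can be sketched as follows. This is a minimal sketch under several assumptions: the weak learner is a hypothetical one-dimensional weighted least-squares line (`fit_weighted_line`), whereas this work uses an ELM; the weak learner is trained on the sample weights directly rather than on a resampled training set; the error rate is clamped away from zero so that log(1/β) stays finite; and `power` is the exponent n in β_t = ε_tⁿ. The default `phi=0.1` is an arbitrary value inside the 0 to 0.4 range quoted above.

```python
import math


def fit_weighted_line(xs, ys, weights):
    """Hypothetical weak regressor: weighted least-squares line y = a + b * x."""
    sw = sum(weights)
    mx = sum(w * x for w, x in zip(weights, xs)) / sw
    my = sum(w * y for w, y in zip(weights, ys)) / sw
    sxx = sum(w * (x - mx) ** 2 for w, x in zip(weights, xs))
    sxy = sum(w * (x - mx) * (y - my) for w, x, y in zip(weights, xs, ys))
    b = sxy / sxx if sxx > 0 else 0.0
    a = my - b * mx
    return lambda x: a + b * x


def adaboost_rt(xs, ys, phi=0.1, n_iter=10, power=2, fit=fit_weighted_line):
    """AdaBoost.RT with a constant threshold phi; assumes every target y is nonzero."""
    m = len(xs)
    dist = [1.0 / m] * m                                   # D_1(i) = 1/m
    models, alphas = [], []
    for _ in range(n_iter):
        f = fit(xs, ys, dist)
        are = [abs((f(x) - y) / y) for x, y in zip(xs, ys)]  # absolute relative errors
        eps = sum(d for d, e in zip(dist, are) if e > phi)   # weight of "incorrect" samples
        eps = min(max(eps, 1e-10), 1.0)                      # keep log(1/beta) finite
        beta = eps ** power                                  # beta_t = eps_t ** n
        # Correct samples (ARE <= phi) are down-weighted by beta; incorrect ones keep theirs.
        dist = [d * beta if e <= phi else d for d, e in zip(dist, are)]
        z = sum(dist)
        dist = [d / z for d in dist]                         # renormalize to a distribution
        models.append(f)
        alphas.append(math.log(1.0 / beta))

    total = sum(alphas)

    def predict(x):
        """Weighted average of the weak learners, weights log(1/beta_t)."""
        if total == 0.0:  # every learner had eps = 1: fall back to a plain average
            return sum(g(x) for g in models) / len(models)
        return sum(a * g(x) for a, g in zip(alphas, models)) / total

    return predict


xs = [float(i) for i in range(1, 11)]
model = adaboost_rt(xs, [2.0 + 3.0 * x for x in xs])
print(model(4.0))  # close to 14 on this noise-free line
```

Because ϕ is fixed, the split into correct and incorrect samples depends entirely on this one user-chosen value, which is the sensitivity the self-adaptive threshold is meant to remove.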

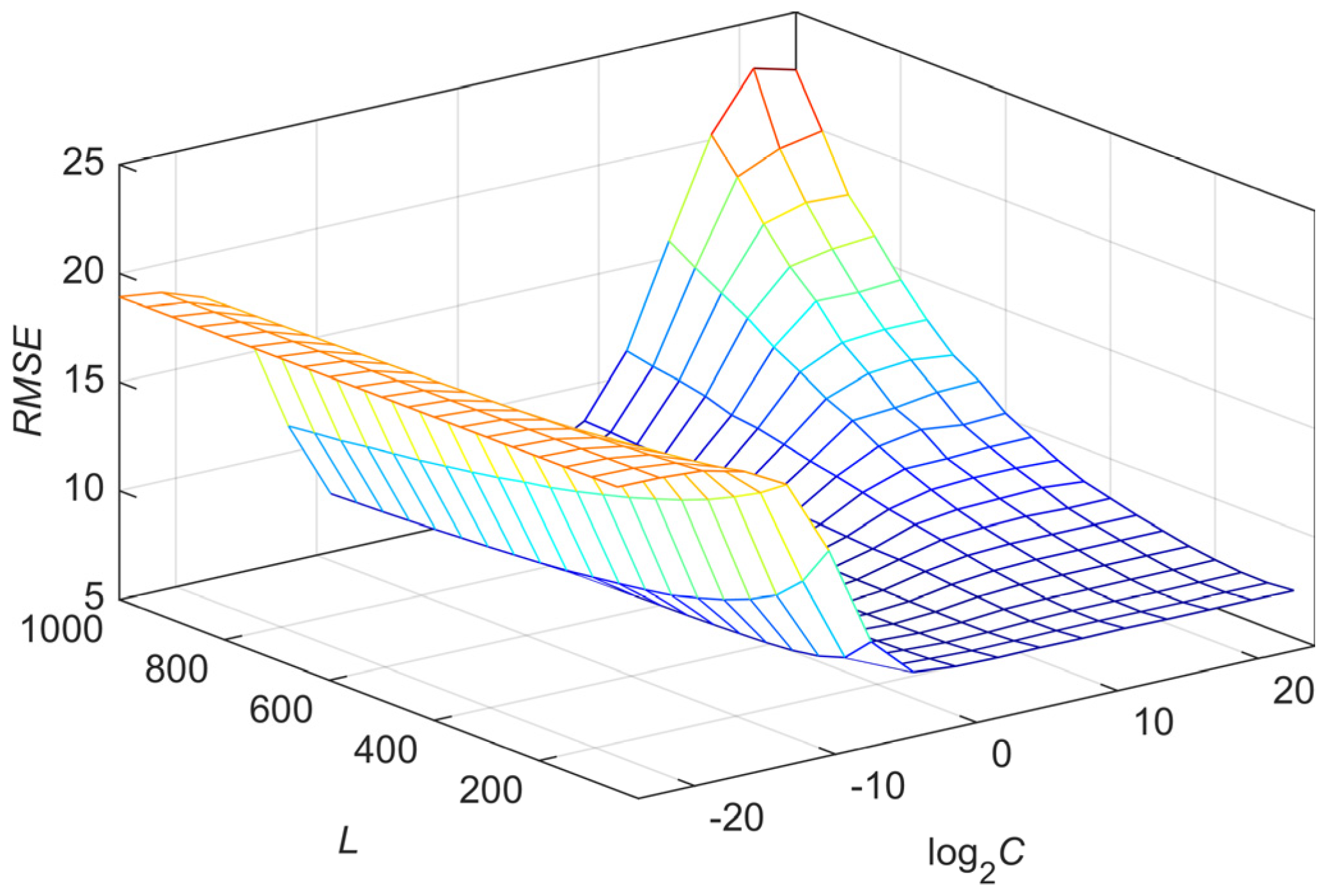

In the present work, to assess the relative error of samples, the coefficient of variation (σ/μ) of the predictions at each iteration is used in place of the constant threshold ϕ of the original AdaBoost.RT algorithm. An adjustable relative factor λ is introduced to stabilize the threshold value. The credible threshold for the absolute relative error is therefore λσ/μ in our study. This threshold is applied to demarcate samples as either correct or incorrect predictions, and its value self-adjusts to the prediction performance on the training samples. The rest of this work is organized as follows. First, the impacts of the ladle conditions and process parameters on the end temperature of liquid steel are studied by analyzing the process flow and energy balance of the VTD system. Subsequently, an extreme learning machine (ELM) network is presented for the regression problem, and a modified AdaBoost.RT algorithm that embeds statistical theory is introduced to dynamically self-adjust the threshold value. Then, an ensemble model that combines ELM with the self-adaptive AdaBoost.RT algorithm is established to model the end temperature of liquid steel. In Section 3, the proposed hybrid ensemble prediction model is validated on actual production data from a steelmaking workshop at Baosteel. The application of the ensemble model, including a sensitivity analysis of the process parameters, is presented in Section 4. Finally, conclusions are drawn in Section 5.
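The per-iteration use of the threshold λσ/μ can be illustrated for a single boosting iteration. This sketch assumes that μ and σ are the mean and population standard deviation of the current weak learner's predictions on the training samples and that λ is supplied by the user; the function names are hypothetical, the sample temperatures are invented, and how λ is tuned in this work is not reproduced here.

```python
import statistics


def self_adaptive_threshold(predictions, lam):
    """Threshold lam * sigma / mu for one boosting iteration.

    Assumption: mu and sigma are the mean and population standard deviation of the
    current weak learner's predictions on the training samples.
    """
    mu = statistics.fmean(predictions)
    sigma = statistics.pstdev(predictions)
    return lam * sigma / abs(mu)


def demarcate(predictions, targets, weights, lam):
    """Mark each sample as a correct or incorrect prediction with the adaptive threshold.

    Returns (threshold, correct_flags, weighted_error_rate).
    """
    phi_t = self_adaptive_threshold(predictions, lam)
    are = [abs((p - y) / y) for p, y in zip(predictions, targets)]  # absolute relative errors
    correct = [e <= phi_t for e in are]
    eps = sum(w for w, ok in zip(weights, correct) if not ok)     # weight of incorrect samples
    return phi_t, correct, eps


# Illustrative end temperatures (°C), equal sample weights, lambda = 1.
print(demarcate([1590.0, 1600.0, 1610.0], [1592.0, 1600.0, 1620.0], [1 / 3] * 3, lam=1.0))
```

Because σ and μ are recomputed from each weak learner's predictions, the threshold changes from iteration to iteration instead of staying fixed at a manually chosen ϕ, and λ scales it up or down.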

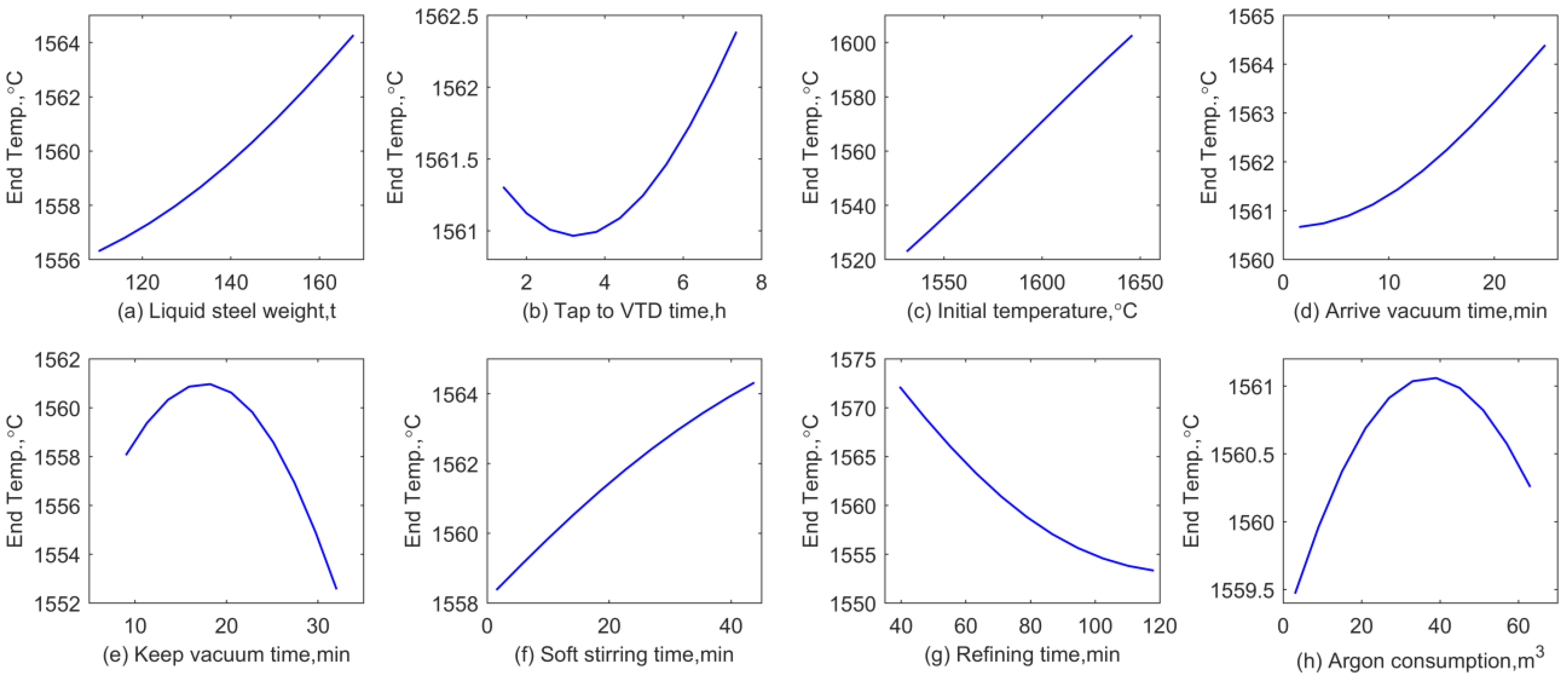

4. Application

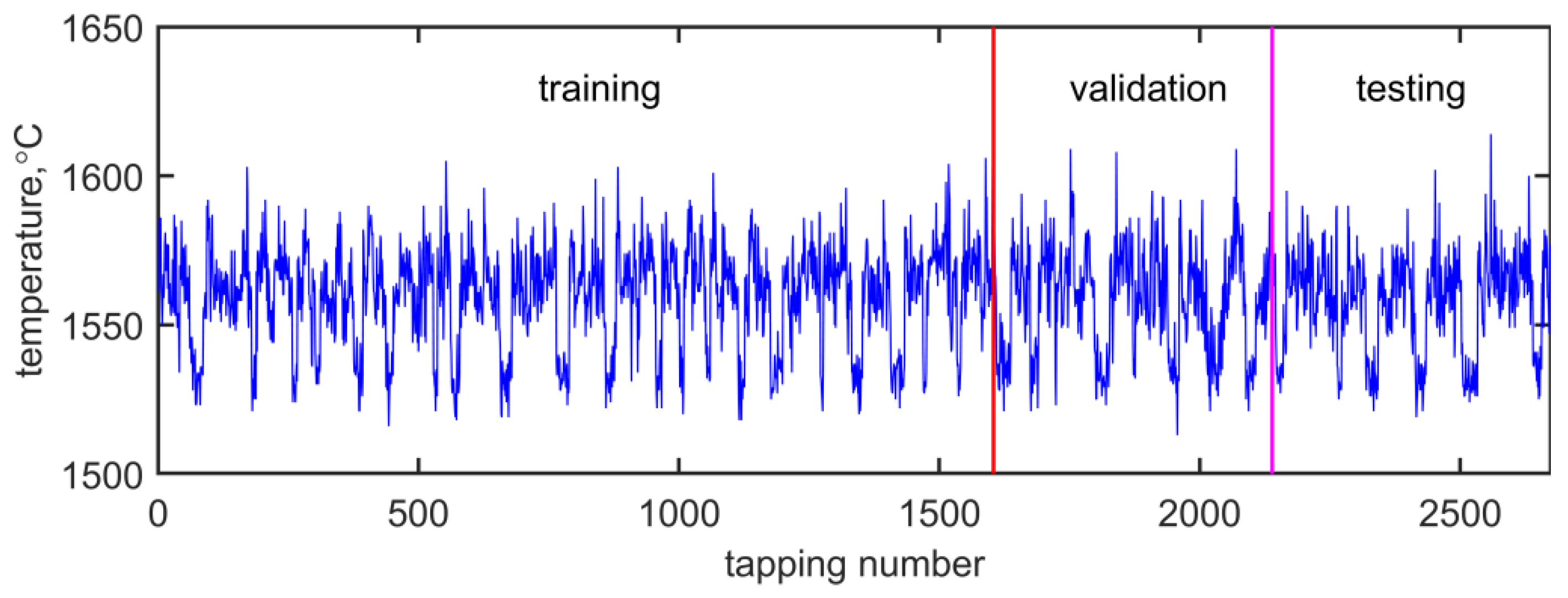

The end temperature of liquid steel during the vacuum degassing process depends on many parameters, such as the initial temperature of liquid steel in VTD, the refining process conditions of vacuum degassing (vacuum arrival time, refining time, vacuum holding time, soft stirring time and argon consumption), liquid steel status (liquid steel weight) and other process parameters (tap-to-VTD time).

The single-factor method was applied to determine the influence of each factor on the end temperature of liquid steel. When analyzing the influence of factor i on the target, factor i is varied as an equal-difference (arithmetic) sequence over its range, while all other input parameters are fixed at their average values. These data are then normalized and fed into the trained model as a sample matrix to obtain the corresponding output. This sensitivity analysis was carried out to understand the effect of each parameter on the end temperature. The results are shown in Figure 7.
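The single-factor procedure can be sketched in a few lines. The sketch assumes min-max normalization to [0, 1] using the training-data range, and `model` stands for any trained predictor that maps one normalized input row to an end temperature; the normalization scheme, the function names and the toy data are assumptions for illustration.

```python
def single_factor_matrix(data, i, n_points=10):
    """Sample matrix for factor i: factor i sweeps an equal-difference sequence over its
    observed range, and every other factor is held at its mean (requires n_points >= 2)."""
    cols = list(zip(*data))
    lo, hi = min(cols[i]), max(cols[i])
    means = [sum(c) / len(c) for c in cols]
    step = (hi - lo) / (n_points - 1)
    rows = []
    for k in range(n_points):
        row = list(means)
        row[i] = lo + k * step
        rows.append(row)
    return rows


def min_max_normalize(rows, data):
    """Scale each column to [0, 1] with the training-data range (assumed normalization)."""
    cols = list(zip(*data))
    lows = [min(c) for c in cols]
    spans = [(max(c) - min(c)) or 1.0 for c in cols]  # guard against constant columns
    return [[(v - lo) / s for v, lo, s in zip(row, lows, spans)] for row in rows]


def single_factor_response(model, data, i, n_points=10):
    """Return (raw values of factor i, model outputs) for a single-factor sensitivity curve."""
    rows = single_factor_matrix(data, i, n_points)
    outputs = [model(x) for x in min_max_normalize(rows, data)]
    return [row[i] for row in rows], outputs


# Toy data with two factors and a stand-in linear "model" on normalized inputs.
data = [[1.0, 10.0], [3.0, 20.0], [5.0, 30.0]]
print(single_factor_response(lambda x: x[0] + x[1], data, 0, n_points=3))
```

Plotting the returned pairs for each factor in turn yields one sensitivity curve per panel, in the manner of Figure 7.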

Liquid steel is the main carrier of heat in the ladle furnace. The greater the weight of liquid steel, the more total heat it contains and the smaller the heat loss in the VTD system relative to that heat content. As a result, the end temperature increases with the weight of liquid steel (see Figure 7a for the effect of liquid steel weight on the end temperature).

The tap-to-VTD time is the time from tapping until the ladle furnace arrives at the VTD, and its effect on the end temperature is depicted in Figure 7b. The relationship between the tap-to-VTD time and the end temperature follows a quadratic curve. However, the absolute influence is small: the maximum temperature difference is less than 2 °C.

The initial temperature represents the initial state of liquid steel in the ladle furnace. Figure 7c shows the influence of the initial temperature on the end temperature: the higher the initial temperature, the higher the end temperature.

The vacuum arrival time is the time required for the pressure inside the VTD chamber to drop from atmospheric pressure to a very low operating pressure (i.e., 67 Pa). The effect of the vacuum arrival time on the end temperature is depicted in Figure 7d. The vacuum holding time (Figure 7e), like the soft stirring time (Figure 7f), governs the main vacuum degassing process. The main purpose of soft stirring is to move inclusions into the slag layer to improve the purity of the refined steel; its other purpose is to precisely control the temperature for subsequent casting. Soft stirring makes the temperature of the liquid steel in the ladle furnace uniform and reduces the temperature drop during the vacuum degassing process. The refining time represents the residence time of the ladle furnace in the vacuum chamber. A longer residence time leads to more heat loss and a larger temperature drop. Thus, the longer the refining time, the lower the end temperature, as shown in Figure 7g.

Argon gas is blown into the ladle furnace to stir the liquid steel and to refine it under vacuum with inert gas protection. As shown in Figure 7h, the relationship between argon gas consumption and the end temperature of liquid steel follows a quadratic curve.

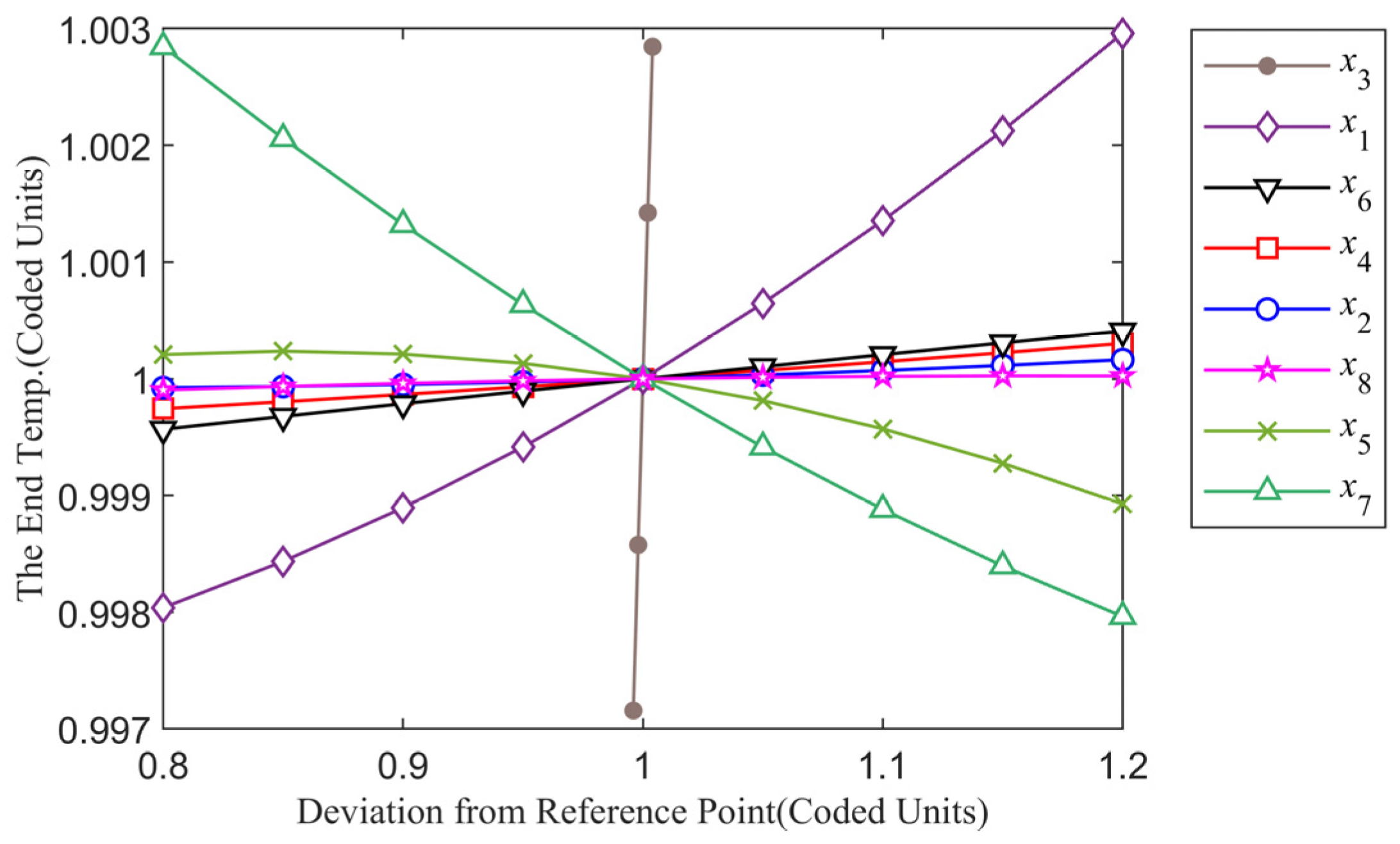

To check the significance of the effects of the operating parameters on the end temperature, a sensitivity analysis of the factors was carried out on the data obtained, as shown in Figure 8.

Figure 8 shows a perturbation plot illustrating the influence of each independent variable on the end temperature. The end temperature increases with the initial temperature (x3) more drastically than with any other operating parameter, and the relationship between the two is approximately linear; in other words, a change in the initial temperature produces an approximately proportional change in the end temperature. The end temperature increases as the weight of liquid steel increases within the design range, and this effect is greater to the right of the design center point than to the left. The end temperature decreases as the refining time increases within the design range. This phenomenon is due to heat dissipation during the vacuum degassing process, which is faster in the early stage than in the later stage relative to the design center point. The vacuum holding time is the fourth most influential of the eight variables, and its effect on the end temperature is greater to the right of the design center point than to the left. Compared with these four operating factors, the other four parameters have no obvious effect on the end temperature.

To obtain a polynomial equation for calculating the end temperature, stepwise regression was performed on the operating parameters and their quadratic terms, which were constructed according to the sensitivity analysis of the individual parameters. Equation (7) is the stepwise regression result based on the training data set. Equation (7) shows that the ladle material is the most important of the three discrete factors and that the end temperature decreases as the numerical code of the ladle material increases.
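The term-selection idea behind stepwise regression can be illustrated with forward selection, the entry half of stepwise regression: at each step, the candidate term (a linear or quadratic factor) with the largest partial F-statistic enters the model, until no remaining candidate exceeds an F-to-enter threshold. This minimal sketch omits the removal step of full stepwise regression, assumes the candidate columns are not collinear, uses a hypothetical threshold of 4.0, and runs on synthetic data; it does not reproduce Equation (7).

```python
def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [bi] for row, bi in zip(a, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n + 1):
                m[r][k] -= f * m[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def ols(columns, y):
    """Least-squares fit of y on an intercept plus the given columns; returns (coefs, SSE)."""
    X = [[1.0] + [col[i] for col in columns] for i in range(len(y))]
    p = len(X[0])
    xtx = [[sum(row[a] * row[b] for row in X) for b in range(p)] for a in range(p)]
    xty = [sum(row[a] * yi for row, yi in zip(X, y)) for a in range(p)]
    coefs = solve(xtx, xty)  # normal equations X'X beta = X'y
    sse = sum((yi - sum(c * v for c, v in zip(coefs, row))) ** 2 for row, yi in zip(X, y))
    return coefs, sse


def forward_selection(candidates, y, f_enter=4.0):
    """Add, one at a time, the candidate term with the largest partial F-statistic
    until none exceeds f_enter. candidates maps a term name to its column."""
    n = len(y)
    selected = []
    _, sse = ols([], y)
    while True:
        best = None
        for name, col in candidates.items():
            df = n - len(selected) - 2  # residual degrees of freedom after adding this term
            if name in selected or df <= 0:
                continue
            _, sse_new = ols([candidates[s] for s in selected] + [col], y)
            f = (sse - sse_new) / (sse_new / df) if sse_new > 0 else float("inf")
            if best is None or f > best[0]:
                best = (f, name, sse_new)
        if best is None or best[0] < f_enter:
            break
        selected.append(best[1])
        sse = best[2]
    coefs, _ = ols([candidates[s] for s in selected], y)
    return selected, coefs  # coefs[0] is the intercept, then one per selected term


# Synthetic data: y = 3 + 2x + 0.5x^2 plus small fixed noise.
xs = [float(i) for i in range(1, 9)]
noise = [0.1, -0.2, 0.15, -0.1, 0.05, -0.15, 0.2, -0.05]
y = [3 + 2 * x + 0.5 * x * x + e for x, e in zip(xs, noise)]
print(forward_selection({"x": xs, "x^2": [x * x for x in xs]}, y))
```

In the setting described above, the candidate dictionary would hold the operating parameters and their quadratic terms, and the selected terms with their coefficients would form a polynomial equation of the kind reported as Equation (7).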

Further, the polynomial equation was applied to the validation and test sets of the ladle furnaces in the VTD system to verify its effectiveness.

Table 7 shows the predictive performance of Equation (7) on the different production data sets. In the last row of Table 7, R² is the coefficient of determination, which represents the proportion of the variance in the response variable that is predictable from the independent variables. An R² value of 0.8409 on the test data set indicates a high correlation between the actual and predicted end temperatures. Both the training R² and the validation R² are larger than 0.85, indicating that the equation has good generalization ability. The adequate precision in Table 7 reflects the effectiveness of the polynomial equation for predicting the end temperature of liquid steel in the VTD system.
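For reference, the coefficient of determination is R² = 1 − SS_res/SS_tot, where SS_res is the sum of squared residuals and SS_tot is the total sum of squares about the mean of the actual values. A minimal sketch with invented temperatures (the function name is illustrative):

```python
def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(actual) / len(actual)
    ss_tot = sum((a - mean) ** 2 for a in actual)                  # total variation
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))  # unexplained variation
    return 1.0 - ss_res / ss_tot


# Invented end temperatures (°C): SS_tot = 125 and SS_res = 7, so R² is about 0.944.
print(r_squared([1600.0, 1605.0, 1610.0, 1615.0], [1602.0, 1604.0, 1609.0, 1614.0]))
```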

Since control is the ultimate goal, and VTD control usually means controlling the temperature and composition of liquid steel, the current prediction model is well suited to control purposes. A self-adaptive threshold was created to replace the constant threshold of the original AdaBoost.RT algorithm, and in the prediction application, the ELM predictor and the self-adaptive AdaBoost.RT algorithm are adopted to predict the end temperature. Another contribution of this study is the development of an explicit formula for calculating the end temperature. Because it is not easy to select the nonlinear terms of the independent variables, black-box modeling techniques still form the main basis of VTD control today. For this reason, the current work addresses the VTD control problem through a polynomial equation derived from the black-box SAE-ELM model of the VTD using stepwise regression. The novelty of this study is therefore a new strategy for the VTD problem: the discrete ladle status is first transformed into numerical codes; then, self-adaptive AdaBoost.RT ensemble ELMs perform the prediction task; finally, a polynomial equation is established from the SAE-ELM black-box model through stepwise regression to address the end-temperature control problem.

From the viewpoint of developing VTD models, the proposed strategy yields a novel model that takes advantage of VTD black-box models to generate features. The main motivation for the current study is that VTD operation is still a serious problem in practice. Although a great deal of research on the modeling and control of VTD systems has been conducted over the past few decades, the experience of skilled operators is still the main driver of smooth operation. In addition, the paramount importance of clean steel to the national economy means that research on VTD system modeling and control will remain very active in the foreseeable future. Hence, the proposed model is significant and offers a modest improvement to the VTD system.