Factors Affecting Training and Physical Performance in Recreational Endurance Runners

Abstract

1. Introduction

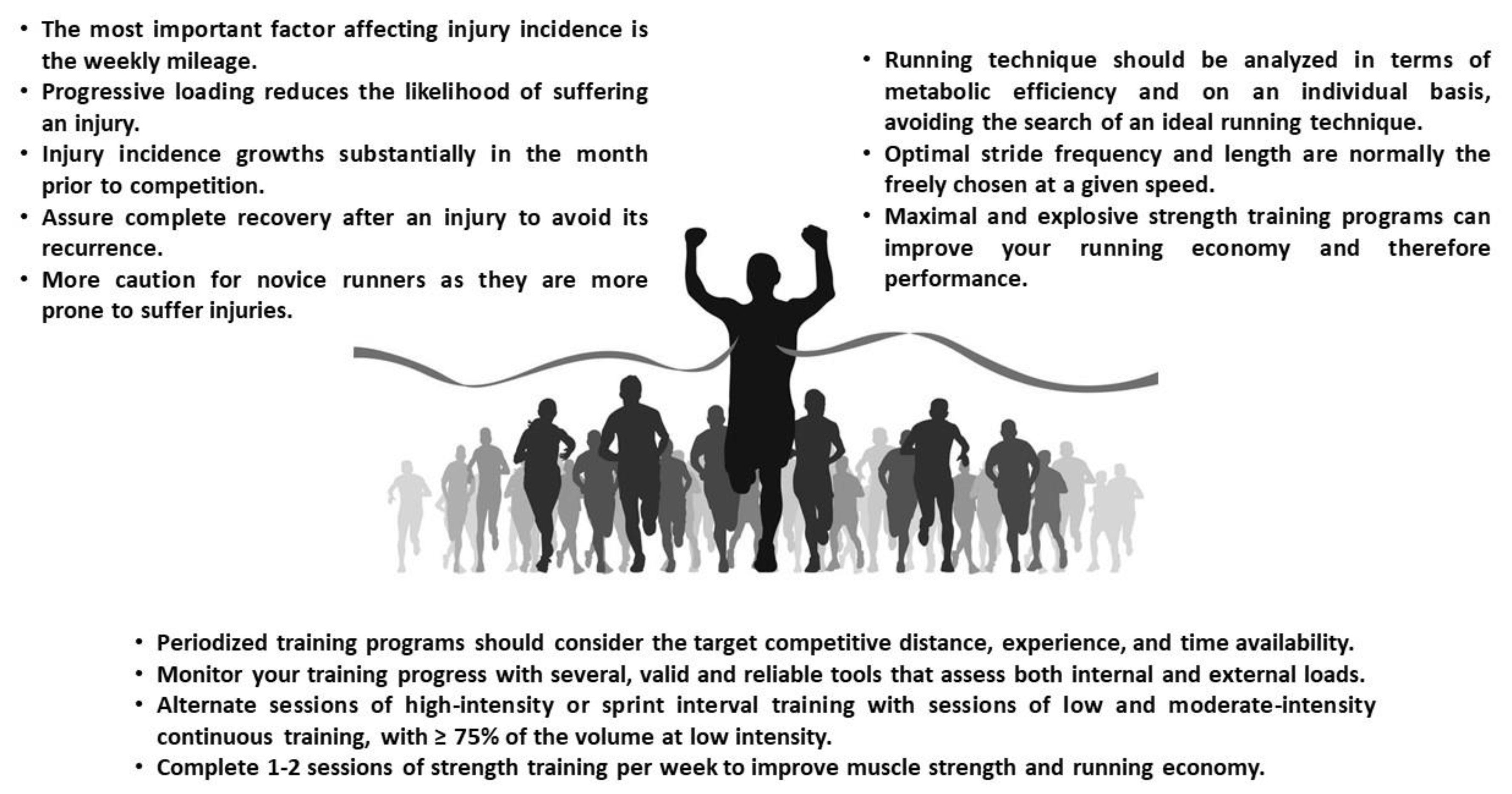

2. Training Characteristics of Recreational Endurance Runners

2.1. Running Training Methods

2.2. Strength Training

2.3. Training Intensity Distribution

2.4. Training Periodization

3. Training Monitoring

4. Performance Predictions

5. Running Technique

6. Factors Associated with Running-Related Injuries

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Bramble, D.M.; Lieberman, D.E. Endurance running and the evolution of Homo. Nature 2004, 432, 345–352. [Google Scholar] [CrossRef] [PubMed]

- Lieberman, D.E. Human Locomotion and Heat Loss: An Evolutionary Perspective. In Comprehensive Physiology; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2015; Volume 5, pp. 99–117. [Google Scholar]

- Raichlen, D.A.; Foster, A.D.; Gerdeman, G.L.; Seillier, A.; Giuffrida, A. Wired to run: Exercise-induced endocannabinoid signaling in humans and cursorial mammals with implications for the “runner’s high”. J. Exp. Biol. 2012, 215, 1331–1336. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Andersen, J.J. The State of Running 2019 | RunRepeat. Available online: https://runrepeat.com/state-of-running (accessed on 15 December 2019).

- Scheerder, J.; Breedveld, K.; Borgers, J. Who Is Doing a Run with the Running Boom? In Running across Europe; Palgrave Macmillan UK: London, UK, 2015; pp. 1–27. [Google Scholar]

- Hespanhol Junior, L.C.; Pillay, J.D.; van Mechelen, W.; Verhagen, E. Meta-Analyses of the Effects of Habitual Running on Indices of Health in Physically Inactive Adults. Sports Med. 2015, 45, 1455–1468. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Hollander, K.; Baumann, A.; Zech, A.; Verhagen, E. Prospective monitoring of health problems among recreational runners preparing for a half marathon. BMJ Open Sport Exerc. Med. 2018, 4, e000308. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Seiler, S. What is best practice for training intensity and duration distribution in endurance athletes? Int. J. Sports Physiol. Perform. 2010, 5, 276–291. [Google Scholar] [CrossRef] [PubMed]

- Vezzoli, A.; Pugliese, L.; Marzorati, M.; Serpiello, F.R.; La Torre, A.; Porcelli, S. Time-course changes of oxidative stress response to high-intensity discontinuous training versus moderate-intensity continuous training in masters runners. PLoS ONE 2014, 9, e87506. [Google Scholar] [CrossRef] [Green Version]

- Bangsbo, J.; Gunnarsson, T.P.; Wendell, J.; Nybo, L.; Thomassen, M. Reduced volume and increased training intensity elevate muscle Na+-K+ pump α2-subunit expression as well as short- and long-term work capacity in humans. J. Appl. Physiol. 2009, 107, 1771–1780. [Google Scholar] [CrossRef]

- González-Mohíno, F.; González-Ravé, J.M.; Juárez, D.; Fernández, F.A.; Barragán Castellanos, R.; Newton, R.U. Effects of Continuous and Interval Training on Running Economy, Maximal Aerobic Speed and Gait Kinematics in Recreational Runners. J. Strength Cond. Res. 2016, 30, 1059–1066. [Google Scholar] [CrossRef]

- Gunnarsson, T.P.; Bangsbo, J. The 10-20-30 training concept improves performance and health profile in moderately trained runners. J. Appl. Physiol. 2012, 113, 16–24. [Google Scholar] [CrossRef] [Green Version]

- Gliemann, L.; Gunnarsson, T.P.; Hellsten, Y.; Bangsbo, J. 10-20-30 training increases performance and lowers blood pressure and VEGF in runners. Scand. J. Med. Sci. Sport. 2015, 25, e479–e489. [Google Scholar] [CrossRef]

- Denadai, B.S.; Ortiz, M.J.; Greco, C.C.; De Mello, M.T. Interval training at 95% and 100% of the velocity at VO2max: Effects on aerobic physiological indexes and running performance. Appl. Physiol. Nutr. Metab. 2006, 31, 737–743. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Esfarjani, F.; Laursen, P.B. Manipulating high-intensity interval training: Effects on VO2max, the lactate threshold and 3000 m running performance in moderately trained males. J. Sci. Med. Sport 2007, 10, 27–35. [Google Scholar] [CrossRef] [PubMed]

- Smith, T.P.; Coombes, J.S.; Geraghty, D.P. Optimising high-intensity treadmill training using the running speed at maximal O2 uptake and the time for which this can be maintained. Eur. J. Appl. Physiol. 2003, 89, 337–343. [Google Scholar] [CrossRef] [PubMed]

- Zaton, M.; Michalik, K. Effects of Interval Training-Based Glycolytic Capacity on Physical Fitness in Recreational Long-Distance Runners. Hum. Mov. 2015, 16, 71–77. [Google Scholar]

- Faelli, E.; Ferrando, V.; Bisio, A.; Ferrando, M.; La Torre, A.; Panasci, M.; Ruggeri, P. Effects of Two High-intensity Interval Training Concepts in Recreational Runners. Int. J. Sports Med. 2019, 40, 639–644. [Google Scholar] [CrossRef] [PubMed]

- Hottenrott, K.; Ludyga, S.; Schulze, S. Effects of high intensity training and continuous endurance training on aerobic capacity and body composition in recreationally active runners. J. Sports Sci. Med. 2012, 11, 483–488. [Google Scholar] [PubMed]

- Saunders, P.U.; Pyne, D.B.; Telford, R.D.; Hawley, J.A. Factors affecting running economy in trained distance runners. Sports Med. 2004, 34, 465–485. [Google Scholar] [CrossRef]

- Blagrove, R.C.; Howatson, G.; Hayes, P.R. Effects of Strength Training on the Physiological Determinants of Middle- and Long-Distance Running Performance: A Systematic Review. Sports Med. 2018, 48, 1117–1149. [Google Scholar] [CrossRef] [Green Version]

- Trowell, D.; Vicenzino, B.; Saunders, N.; Fox, A.; Bonacci, J. Effect of Strength Training on Biomechanical and Neuromuscular Variables in Distance Runners: A Systematic Review and Meta-Analysis. Sports Med. 2019, 40, 639–644. [Google Scholar] [CrossRef]

- Alcaraz-Ibañez, M.; Rodríguez-Pérez, M. Effects of resistance training on performance in previously trained endurance runners: A systematic review. J. Sports Sci. 2018, 36, 613–629. [Google Scholar] [CrossRef]

- Johnston, R.E.; Quinn, T.J.; Kertzer, R.; Vroman, N.B. Strength training in female distance runners: Impact on running economy. J. Strength Cond. Res. 1997, 11, 224–229. [Google Scholar] [CrossRef]

- Albracht, K.; Arampatzis, A. Exercise-induced changes in triceps surae tendon stiffness and muscle strength affect running economy in humans. Eur. J. Appl. Physiol. 2013, 113, 1605–1615. [Google Scholar] [CrossRef]

- Piacentini, M.F.; De Ioannon, G.; Comotto, S.; Spedicato, A.; Vernillo, G.; La Torre, A. Concurrent strength and endurance training effects on running economy in master endurance runners. J. Strength Cond. Res. 2013, 27, 2295–2303. [Google Scholar] [CrossRef] [PubMed]

- Festa, L.; Tarperi, C.; Skroce, K.; Boccia, G.; Lippi, G.; Torre, A.L.A.; Schena, F. Effects of flywheel strength training on the running economy of recreational endurance runners. J. Strength Cond. Res. 2019, 33, 684–690. [Google Scholar] [CrossRef] [PubMed]

- Turner, A.M.; Owings, M.; Schwane, J.A. Improvement in running economy after 6 weeks of plyometric training. J. Strength Cond. Res. 2003, 17, 60–67. [Google Scholar] [PubMed] [Green Version]

- Beattie, K.; Kenny, I.C.; Lyons, M.; Carson, B.P. The effect of strength training on performance in endurance athletes. Sports Med. 2014, 44, 845–865. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Pellegrino, J.; Ruby, B.C.; Dumke, C.L. Effect of plyometrics on the energy cost of running and MHC and titin isoforms. Med. Sci. Sports Exerc. 2016, 48, 49–56. [Google Scholar] [CrossRef]

- Ferrauti, A.; Bergermann, M.; Fernandez-Fernandez, J. Effects of a concurrent strength and endurance training on running performance and running economy in recreational marathon runners. J. Strength Cond. Res. 2010, 24, 2770–2778. [Google Scholar] [CrossRef] [Green Version]

- Trappe, S.; Harber, M.; Creer, A.; Gallagher, P.; Slivka, D.; Minchev, K.; Whitsett, D. Single muscle fiber adaptations with marathon training. J. Appl. Physiol. 2006, 101, 721–727. [Google Scholar] [CrossRef] [Green Version]

- Schoenfeld, B.J.; Ogborn, D.; Krieger, J.W. Effects of Resistance Training Frequency on Measures of Muscle Hypertrophy: A Systematic Review and Meta-Analysis. Sports Med. 2016, 46, 1689–1697. [Google Scholar] [CrossRef]

- Wilson, J.M.; Marin, P.J.; Rhea, M.R.; Wilson, S.M.C.; Loenneke, J.P.; Anderson, J.C. Concurrent training: A meta-analysis examining interference of aerobic and resistance exercises. J. Strength Cond. Res. 2012, 26, 2293–2307. [Google Scholar] [CrossRef] [PubMed]

- Seiler, K.S.; Kjerland, G.Ø. Quantifying training intensity distribution in elite endurance athletes: Is there evidence for an “optimal” distribution? Scand. J. Med. Sci. Sports 2006, 16, 49–56. [Google Scholar] [CrossRef] [PubMed]

- Fiskerstrand, Å.; Seiler, K.S. Training and performance characteristics among Norwegian International Rowers 1970–2001. Scand. J. Med. Sci. Sports 2004, 14, 303–310. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Esteve-Lanao, J.; Foster, C.; Seiler, S.; Lucia, A. Impact of training intensity distribution on performance in endurance athletes. J. Strength Cond. Res. 2007, 21, 943–949. [Google Scholar]

- Boullosa, D.A.; Abreu, L.; Varela-Sanz, A.; Mujika, I. Do olympic athletes train as in the paleolithic era? Sports Med. 2013, 43, 909–917. [Google Scholar] [CrossRef]

- Holmberg, H.-C. Träningslära Längd; CEWE-förlaget, Ed.; Bjästa: Örnsköldsvik, Sweden, 1996. [Google Scholar]

- Muñoz, I.; Seiler, S.; Bautista, J.; España, J.; Larumbe, E.; Esteve-Lanao, J. Does polarized training improve performance in recreational runners? Int. J. Sports Physiol. Perform. 2014, 9, 265–272. [Google Scholar] [CrossRef]

- Zinner, C.; Schäfer Olstad, D.; Sperlich, B. Mesocycles with different training intensity distribution in recreational runners. Med. Sci. Sports Exerc. 2018, 50, 1641–1648. [Google Scholar] [CrossRef]

- Pérez, A.; Ramos-Campo, D.J.; Freitas, T.T.; Rubio-Arias, J.; Marín-Cascales, E.; Alcaraz, P.E. Effect of two different intensity distribution training programmes on aerobic and body composition variables in ultra-endurance runners. Eur. J. Sport Sci. 2019, 19, 636–644. [Google Scholar] [CrossRef]

- Issurin, V.B. New horizons for the methodology and physiology of training periodization. Sports Med. 2010, 40, 189–206. [Google Scholar] [CrossRef]

- Bradbury, D.G.; Landers, G.J.; Benjanuvatra, N.; Goods, P.S.R. Comparison of Linear and Reverse Linear Periodized Programs with Equated Volume and Intensity for Endurance Running Performance. J. Strength Cond. Res. 2018, 1. [Google Scholar] [CrossRef]

- García-Pinillos, F.; Soto-Hermoso, V.M.; Latorre-Román, P.A. How does high-intensity intermittent training affect recreational endurance runners? Acute and chronic adaptations: A systematic review. J. Sport Health Sci. 2017, 6, 54–67. [Google Scholar] [CrossRef] [PubMed]

- Boullosa, D.; Esteve-Lanao, J.; Seiler, S. Potential Confounding Effects of Intensity on Training Response. Med. Sci. Sports Exerc. 2019, 51, 1973–1974. [Google Scholar] [CrossRef] [PubMed]

- Tuimil, J.L.; Boullosa, D.A.; Fernández-Del-Olmo, M.Á.; Rodríguez, F.A. Effect of equated continuous and interval running programs on endurance performance and jump capacity. J. Strength Cond. Res. 2011, 25, 2205–2211. [Google Scholar] [CrossRef] [PubMed]

- Varela-Sanz, A.; Tuimil, J.L.; Abreu, L.; Boullosa, D.A. Does concurrent training intensity distribution matter? J. Strength Cond. Res. 2017, 31, 181–195. [Google Scholar] [CrossRef]

- Hautala, A.; Martinmaki, K.; Kiviniemi, A.; Kinnunen, H.; Virtanen, P.; Jaatinen, J.; Tulppo, M. Effects of habitual physical activity on response to endurance training. J. Sports Sci. 2012, 30, 563–569. [Google Scholar] [CrossRef]

- Bassett, D.R.; Howley, E.T. Limiting factors for maximum oxygen uptake and determinants of endurance performance. Med. Sci. Sports Exerc. 2000, 32, 70. [Google Scholar] [CrossRef]

- Billat, L.V.; Koralsztein, J.P. Significance of the velocity at VO2max and time to exhaustion at this velocity. Sports Med. 1996, 22, 90–108. [Google Scholar] [CrossRef]

- Semin, K.; Stahlnecker IV, A.C.; Heelan, K.; Brown, G.A.; Shaw, B.S.; Shaw, I. Discrepancy between training, competition and laboratory measures of maximum heart rate in NCAA division 2 distance runners. J. Sports Sci. Med. 2008. [Google Scholar]

- Cottin, F.; Médigue, C.; Lopes, P.; Leprêtre, P.M.; Heubert, R.; Billat, V. Ventilatory thresholds assessment from heart rate variability during an incremental exhaustive running test. Int. J. Sports Med. 2007, 28, 287–294. [Google Scholar] [CrossRef]

- Berthon, P.; Fellmann, N.; Bedu, M.; Beaune, B.; Dabonneville, M.; Coudert, J.; Chamoux, A. A 5-min running field test as a measurement of maximal aerobic velocity. Eur. J. Appl. Physiol. Occup. Physiol. 1997, 75, 233–238. [Google Scholar] [CrossRef]

- Dabonneville, M.; Berthon, P.; Vaslin, P.; Fellmann, N. The 5 min running field test: Test and retest reliability on trained men and women. Eur. J. Appl. Physiol. 2003, 88, 353–360. [Google Scholar] [CrossRef] [PubMed]

- Galbraith, A.; Hopker, J.; Lelliott, S.; Diddams, L.; Passfield, L. A single-visit field test of critical speed. Int. J. Sports Physiol. Perform. 2014, 9, 931–935. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Etxegarai, U.; Insunza, A.; Larruskain, J.; Santos-Concejero, J.; Gil, S.M.; Portillo, E.; Irazusta, J. Prediction of performance by heart rate-derived parameters in recreational runners. J. Sports Sci. 2018, 36, 2129–2137. [Google Scholar] [CrossRef] [PubMed]

- Garcia-Tabar, I.; Izquierdo, M.; Gorostiaga, E.M. On-field prediction vs monitoring of aerobic capacity markers using submaximal lactate and heart rate measures. Scand. J. Med. Sci. Sports 2017, 27, 462–473. [Google Scholar] [CrossRef] [PubMed]

- Garcia-Tabar, I.; Llodio, I.; Sánchez-Medina, L.; Asiain, X.; Ibáñez, J.; Gorostiaga, E.M. Validity of a single lactate measure to predict fixed lactate thresholds in athletes. J. Sports Sci. 2017, 35, 385–392. [Google Scholar] [CrossRef] [PubMed]

- Buchheit, M.; Chivot, A.; Parouty, J.; Mercier, D.; Al Haddad, H.; Laursen, P.B.; Ahmaidi, S. Monitoring endurance running performance using cardiac parasympathetic function. Eur. J. Appl. Physiol. 2010, 108, 1153–1167. [Google Scholar] [CrossRef] [PubMed]

- Achten, J.; Jeukendrup, A.E. Heart rate monitoring: Applications and limitations. Sports Med. 2003, 33, 517–538. [Google Scholar] [CrossRef]

- Llodio, I.; Gorostiaga, E.M.; Garcia-Tabar, I.; Granados, C.; Sánchez-Medina, L. Estimation of the Maximal Lactate Steady State in Endurance Runners. Int. J. Sports Med. 2016, 37, 539–546. [Google Scholar] [CrossRef] [Green Version]

- Miller, J.R.; Van Hooren, B.; Bishop, C.; Buckley, J.D.; Willy, R.W.; Fuller, J.T. A Systematic Review and Meta-Analysis of Crossover Studies Comparing Physiological, Perceptual and Performance Measures Between Treadmill and Overground Running. Sports Med. 2019, 49, 763–782. [Google Scholar] [CrossRef]

- Kenneally, M.; Casado, A.; Santos-Concejero, J. The effect of periodization and training intensity distribution on middle-and long-distance running performance: A systematic review. Int. J. Sports Physiol. Perform. 2018, 13, 1114–1121. [Google Scholar] [CrossRef]

- Foster, C.; Rodriguez-Marroyo, J.A.; De Koning, J.J. Monitoring training loads: The past, the present, and the future. Int. J. Sports Physiol. Perform. 2017, 12, S2-2–S2-8. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Impellizzeri, F.M.; Marcora, S.M.; Coutts, A.J. Internal and External Training Load: 15 Years On. Int. J. Sports Physiol. Perform. 2019, 14, 270–273. [Google Scholar] [CrossRef] [PubMed]

- Van Hooren, B.; Goudsmit, J.; Restrepo, J.; Vos, S. Real-time feedback by wearables in running: Current approaches, challenges and suggestions for improvements. J. Sports Sci. 2019, 38, 214–230. [Google Scholar] [CrossRef] [PubMed]

- Boullosa, D.A.; Foster, C. “Evolutionary” based periodization in a recreational runner. Sport Perform. Sci. Rep. 2018, 1, 36. [Google Scholar]

- Vesterinen, V.; Nummela, A.; Heikura, I.; Laine, T.; Hynynen, E.; Botella, J.; Häkkinen, K. Individual Endurance Training Prescription with Heart Rate Variability. Med. Sci. Sports Exerc. 2016, 48, 1347–1354. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Kiviniemi, A.M.; Hautala, A.J.; Kinnunen, H.; Tulppo, M.P. Endurance training guided individually by daily heart rate variability measurements. Eur. J. Appl. Physiol. 2007, 101, 743–751. [Google Scholar] [CrossRef] [PubMed]

- Hautala, A.J.; Kiviniemi, A.M.; Tulppo, M.P. Individual responses to aerobic exercise: The role of the autonomic nervous system. Neurosci. Biobehav. Rev. 2009, 33, 107–115. [Google Scholar] [CrossRef]

- Vesterinen, V.; Häkkinen, K.; Laine, T.; Hynynen, E.; Mikkola, J.; Nummela, A. Predictors of individual adaptation to high-volume or high-intensity endurance training in recreational endurance runners. Scand. J. Med. Sci. Sports 2016, 26, 885–893. [Google Scholar] [CrossRef]

- da Silva, D.F.; Ferraro, Z.M.; Adamo, K.B.; Machado, F.A. Endurance Running Training Individually Guided by HRV in Untrained Women. J. Strength Cond. Res. 2019, 33, 736–746. [Google Scholar] [CrossRef]

- Altini, M.; Amft, O. HRV4Training: Large-scale longitudinal training load analysis in unconstrained free-living settings using a smartphone application. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS, Orlando, FL, USA, 16–20 August 2016. [Google Scholar]

- Coquart, J.B.J.; Alberty, M.; Bosquet, L. Validity of a nomogram to predict long distance running performance. J. Strength Cond. Res. 2009, 23, 2119–2123. [Google Scholar] [CrossRef]

- Coe, P.; Martin, D. Training Distance Runners; Human Kinetics Publishers Inc.: Champaign, IL, USA, 1991. [Google Scholar]

- Deason, J.; Powers, S.K.; Lawler, J.; Ayers, D.; Stuart, M.K. Physiological correlates to 800 meter running performance. J. Sports Med. Phys. Fit. 1991, 31, 499–504. [Google Scholar]

- Noakes, T.D.; Myburgh, K.H.; Schall, R. Peak treadmill running velocity during the VO2max test predicts running performance. J. Sports Sci. 1990, 8, 35–45. [Google Scholar] [CrossRef] [PubMed]

- Farrel, P.A.; Wilmor, J.H.; Coyl, E.F.; Billin, J.E.; Costil, D.L. Plasma lactate accumulation and distance running performance. Med. Sci. Sports Exerc. 1993, 25, 1091–1097. [Google Scholar] [CrossRef] [PubMed]

- Slovic, P. Empirical study of training and performance in the marathon. Res. Q. Am. Alliance Health Phys. Educ. Recreat. 1977, 48, 769777. [Google Scholar] [CrossRef]

- Mercier, D.; Leger, L.; Desjardins, M. Nomogramme pour prédire la performance, le VO2max et l’endurance relative en course de fond. Méd. Sport 1984, 58, 181–187. [Google Scholar]

- Tabben, M.; Bosquet, L.; Coquart, J.B. Effect of performance level on the prediction of middle-distance-running performances using a nomogram. Int. J. Sports Physiol. Perform. 2016, 11, 623–626. [Google Scholar] [CrossRef]

- Coquart, J.B.J.; Bosquet, L. Precision in the prediction of middle distance-running performances using either a nomogram or the modeling of the distance-time relationship. J. Strength Cond. Res. 2010, 24, 2920–2926. [Google Scholar] [CrossRef]

- Scott, B.K.; Houmard, J.A. Peak running velocity is highly related to distance running performance. Int. J. Sports Med. 1994, 15, 504–507. [Google Scholar] [CrossRef]

- Houmard, J.A.; Craib, M.W.; O’Brien, K.F.; Smith, L.L.; Israel, R.G.; Wheeler, W.S. Peak running velocity, submaximal energy expenditure, VO2max, and 8 km distance running performance. J. Sports Med. Phys. Fit. 1991, 31, 345–350. [Google Scholar]

- Scrimgeour, A.G.; Noakes, T.D.; Adams, B.; Myburgh, K. The influence of weekly training distance on fractional utilization of maximum aerobic capacity in marathon and ultramarathon runners. Eur. J. Appl. Physiol. Occup. Physiol. 1986, 55, 202–209. [Google Scholar] [CrossRef]

- Grant, S.; Craig, I.; Wilson, J.; Aitchison, T. The relationship between 3 km running performance and selected physiological variables. J. Sports Sci. 1997, 15, 403–410. [Google Scholar] [CrossRef] [PubMed]

- Yoshida, T.; Udo, M.; Iwai, K.; Yamaguchi, T. Physiological characteristics related to endurance running performance in female distance runners. J. Sports Sci. 1993, 11, 57–62. [Google Scholar] [CrossRef] [PubMed]

- Daniels, J.; Daniels, N. Running economy of elite male and elite female runners. Med. Sci. Sports Exerc. 1992, 24, 483–489. [Google Scholar] [CrossRef] [PubMed]

- Morgan, D.W.; Baldini, F.D.; Martin, P.E.; Kohrt, W.M. Ten kilometer performance and predicted velocity at VO2max among well-trained male runners. Med. Sci. Sports Exerc. 1989, 21, 78–83. [Google Scholar] [CrossRef]

- Gómez-Molina, J.; Ogueta-Alday, A.; Camara, J.; Stickley, C.; Rodríguez-Marroyo, J.A.; García-López, J. Predictive variables of half-marathon performance for male runners. J. Sports Sci. Med. 2017, 16, 187–194. [Google Scholar]

- Bragada, J.A.; Santos, P.J.; Maia, J.A.; Colaço, P.J.; Lopes, V.P.; Barbosa, T.M. Longitudinal study in 3,000 m male runners: Relationship between performance and selected physiological parameters. J. Sports Sci. Med. 2010, 9, 439–444. [Google Scholar]

- Nicholson, R.M.; Sleivert, G.G. Indices of lactate threshold and their relationship with 10-km running velocity. Med. Sci. Sports Exerc. 2001, 33, 339–342. [Google Scholar] [CrossRef]

- Roecker, K.; Schotte, O.; Niess, A.M.; Horstmann, T.; Dickhuth, H.H. Predicting competition performance in long-distance running by means of a treadmill test. Med. Sci. Sports Exerc. 1998, 30, 1552–1557. [Google Scholar] [CrossRef]

- Fohrenbach, R.; Mader, A.; Hollmann, W. Determination of endurance capacity and prediction of exercise intensities for training and competition in marathon runners. Int. J. Sports Med. 1987, 8, 11–18. [Google Scholar] [CrossRef] [Green Version]

- Brandon, L.J. Physiological Factors Associated with Middle Distance Running Performance. Sports Med. 1995, 19, 268–277. [Google Scholar] [CrossRef]

- Knechtle, B.; Tanda, G. Effects of training and anthropometric factors on marathon and 100 km ultramarathon race performance. Open Access J. Sports Med. 2015, 6, 129. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Knechtle, B.; Barandun, U.; Knechtle, P.; Zingg, M.A.; Rosemann, T.; Rüst, C.A. Prediction of half-marathon race time in recreational female and male runners. Springerplus 2014, 3, 248. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Berg, K.; Latin, R.W.; Coffey, C. Relationship of somatotype and physical characteristics to distance running performance in middle age runners. J. Sports Med. Phys. Fit. 1998, 38, 253–257. [Google Scholar]

- Bale, P.; Rowell, S.; Colley, E. Anthropometric and training characteristics of female marathon runners as determinants of distance running performance. J. Sports Sci. 1985, 3, 115–126. [Google Scholar] [CrossRef]

- Salinero, J.J.; Soriano, M.L.; Lara, B.; Gallo-Salazar, C.; Areces, F.; Ruiz-Vicente, D.; Abián-Vicén, J.; González-Millán, C.; Del Coso, J. Predicting race time in male amateur marathon runners. J. Sports Med. Phys. Fit. 2017, 57, 1169–1177. [Google Scholar]

- Péronnet, F. Maratón; Inde: Barcelona, Spain, 2001. [Google Scholar]

- Larumbe, E.; Perez-Llantada, M.C.; Lopez de la Llave, A.; Buceta, J.M. Development and preliminary psychometric characteristics of the PODIUM questionnaire for recreational marathon runners. Cuad. Psicol. Deporte 2015, 15, 41–52. [Google Scholar] [CrossRef] [Green Version]

- Esteve-Lanao, J.; Del Rosso, S.; Larumbe-Zabala, E.; Cardona, C.; Alcocer-Gamboa, A.; Boullosa, D.A. Predicting Recreational Runners’ Marathon Performance Time During Their Training Preparation. J. Strength Cond. Res. 2019, 1, 1–7. [Google Scholar] [CrossRef]

- Altini, M.; Amft, O. Estimating Running Performance Combining Non-invasive Physiological Measurements and Training Patterns in Free-Living. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS, Honolulu, HI, USA, 18–21 July 2018; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2018; Volume 2018-July, pp. 2845–2848. [Google Scholar]

- Tartaruga, M.P.; Brisswalter, J.; Peyré-Tartaruga, L.A.; Ávila, A.O.V.; Alberton, C.L.; Coertjens, M.; Cadore, E.L.; Tiggemann, C.L.; Silva, E.M.; Kruel, L.F.M. The relationship between running economy and biomechanical variables in distance runners. Res. Q. Exerc. Sport 2012, 83, 367–375. [Google Scholar] [CrossRef]

- Moore, I.S. Is There an Economical Running Technique? A Review of Modifiable Biomechanical Factors Affecting Running Economy. Sports Med. 2016, 46, 793–807. [Google Scholar] [CrossRef] [Green Version]

- Moore, I.S.; Jones, A.M.; Dixon, S.J. Mechanisms for improved running economy in beginner runners. Med. Sci. Sports Exerc. 2012, 44, 1756–1763. [Google Scholar] [CrossRef] [Green Version]

- Williams, K.R.; Cavanagh, P.R. Relationship between distance running mechanics, running economy, and performance. J. Appl. Physiol. 1987, 63, 1236–1245. [Google Scholar] [CrossRef] [PubMed]

- Barton, C.J.; Bonanno, D.R.; Carr, J.; Neal, B.S.; Malliaras, P.; Franklyn-Miller, A.; Menz, H.B. Running retraining to treat lower limb injuries: A mixed-methods study of current evidence synthesised with expert opinion. Br. J. Sports Med. 2016, 50, 513–526. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Messier, S.P.; Cirillo, K.J. Effects of a verbal and visual feedback system on running technique, perceived exertion and running economy in female novice runners. J. Sports Sci. 1989, 7, 113–126. [Google Scholar] [CrossRef] [PubMed]

- Crowell, H.P.; Milnert, C.E.; Hamill, J.; Davis, I.S. Reducing impact loading during running with the use of real-time visual feedback. J. Orthop. Sports Phys. Ther. 2010, 40, 206–213. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Okwumabua, T.M.; Meyers, A.W.; Schleser, R.; Cooke, C.J. Cognitive strategies and running performance: An exploratory study. Cognit. Ther. Res. 1983, 7, 363–369. [Google Scholar] [CrossRef]

- McDonnell, J.; Willson, J.D.; Zwetsloot, K.A.; Houmard, J.; DeVita, P. Gait biomechanics of skipping are substantially different than those of running. J. Biomech. 2017, 64, 180–185. [Google Scholar] [CrossRef] [PubMed]

- McFarlane, B. A basic and advanced technical model for speed. Natl. Strength Cond. Assoc. J. 1993, 15, 57. [Google Scholar] [CrossRef]

- Johnson, S.T.; Golden, G.M.; Mercer, J.A.; Mangus, B.C.; Hoffman, M.A. Ground-reaction forces during form skipping and running. J. Sport Rehabil. 2005, 14, 4. [Google Scholar] [CrossRef]

- Warne, J.P.; Warrington, G.D. Four-week habituation to simulated barefoot running improves running economy when compared with shod running. Scand. J. Med. Sci. Sports 2014, 24, 563–568. [Google Scholar] [CrossRef]

- Shih, H.T.; Teng, H.L.; Gray, C.; Poggemiller, M.; Tracy, I.; Lee, S.P. Four weeks of training with simple postural instructions changes trunk posture and foot strike pattern in recreational runners. Phys. Ther. Sport 2019, 35, 89–96. [Google Scholar] [CrossRef]

- Romanov, N.S.; Robson, J. Dr. Nicholas Romanov’s Pose Method of Running: A New Paradigm of Running; Dr. Romanov’s Sport Education; PoseTech: Coral Gables, FL, USA, 2002; ISBN 9780972553766. [Google Scholar]

- Dunn, M.D.; Claxton, D.B.; Fletcher, G.; Wheat, J.S.; Binney, D.M. Effects of running retraining on biomechanical factors associated with lower limb injury. Hum. Mov. Sci. 2018, 58, 21–31. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Dallam, G.M.; Wilber, R.L.; Jadelis, K.; Fletcher, G.; Romanov, N. Effect of a global alteration of running technique on kinematics and economy. J. Sports Sci. 2005, 23, 757–764. [Google Scholar] [CrossRef] [PubMed]

- Wei, R.X.; Au, I.P.H.; Lau, F.O.Y.; Zhang, J.H.; Chan, Z.Y.S.; MacPhail, A.J.C.; Mangubat, A.L.; Pun, G.; Cheung, R.T.H. Running biomechanics before and after Pose® method gait retraining in distance runners. Sports Biomech. 2019. [Google Scholar] [CrossRef] [PubMed]

- Paavolainen, L.; Häkkinen, K.; Hämäläinen, I.; Nummela, A.; Rusko, H. Explosive-strength training improves 5-km running time by improving running economy and muscle power. J. Appl. Physiol. 1999, 86, 1527–1533. [Google Scholar] [CrossRef]

- Danielsson, T.; Carlsson, J.; Schreyer, H.; Ahnesjo, J.; Ten Siethoff, L.; Ragnarsson, T.; Tugetam, A.; Bergman, P.; Parkkali, S.; Joosten, R.; et al. Predictive variables for half-Ironman triathlon performance. Sports Med. Arthrosc. 2013, 20, 1147–1154. [Google Scholar]

- Karsten, B.; Stevens, L.; Colpus, M.; Larumbe-Zabala, E.; Naclerio, F. The effects of sport-specific maximal strength and conditioning training on critical velocity, anaerobic running distance, and 5-km race performance. Int. J. Sports Physiol. Perform. 2016, 11, 80–85. [Google Scholar] [CrossRef] [Green Version]

- Finatto, P.; Da Silva, E.S.; Okamura, A.B.; Almada, B.P.; Oliveira, H.B.; Peyré-Tartaruga, L.A. Pilates training improves 5-km run performance by changing metabolic cost and muscle activity in trained runners. PLoS ONE 2018, 13, e0194057. [Google Scholar]

- Agresta, C.E.; Peacock, J.; Housner, J.; Zernicke, R.F.; Zendler, J.D. Experience does not influence injury-related joint kinematics and kinetics in distance runners. Gait Posture 2018, 61, 13–18. [Google Scholar] [CrossRef]

- da Rosa, R.G.; Oliveira, H.B.; Gomeñuka, N.A.; Masiero, M.P.B.; da Silva, E.S.; Zanardi, A.P.J.; de Carvalho, A.R.; Schons, P.; Peyré-Tartaruga, L.A. Landing-Takeoff Asymmetries Applied to Running Mechanics: A New Perspective for Performance. Front. Physiol. 2019, 10, 415. [Google Scholar] [CrossRef]

- Cavagna, G.A.; Legramandi, M.A.; Peyré-Tartaruga, L.A. The landing-take-off asymmetry of human running is enhanced in old age. J. Exp. Biol. 2008, 211, 1571–1578.

- Cavagna, G.A.; Legramandi, M.A.; Peyré-Tartaruga, L.A. Old men running: Mechanical work and elastic bounce. Proc. R. Soc. B Biol. Sci. 2008, 275, 411–418.

- Hafer, J.F.; Brown, A.M.; deMille, P.; Hillstrom, H.J.; Garber, C.E. The effect of a cadence retraining protocol on running biomechanics and efficiency: A pilot study. J. Sports Sci. 2015, 33, 724–731.

- Hafer, J.F.; Silvernail, J.F.; Hillstrom, H.J.; Boyer, K.A. Changes in coordination and its variability with an increase in running cadence. J. Sports Sci. 2016, 34, 1388–1395.

- Heiderscheit, B.C.; Chumanov, E.S.; Michalski, M.P.; Wille, C.M.; Ryan, M.B. Effects of step rate manipulation on joint mechanics during running. Med. Sci. Sports Exerc. 2011, 43, 296.

- Lenhart, R.L.; Thelen, D.G.; Wille, C.M.; Chumanov, E.S.; Heiderscheit, B.C. Increasing running step rate reduces patellofemoral joint forces. Med. Sci. Sports Exerc. 2014, 46, 557.

- Cavanagh, P.R.; Williams, K.R. The effect of stride length variation on oxygen uptake during distance running. Med. Sci. Sports Exerc. 1982, 14, 30–35.

- Hunter, I.; Smith, G.A. Preferred and optimal stride frequency, stiffness and economy: Changes with fatigue during a 1-h high-intensity run. Eur. J. Appl. Physiol. 2007, 100, 653–661.

- Stearne, S.M.; Alderson, J.A.; Green, B.A.; Donnelly, C.J.; Rubenson, J. Joint kinetics in rearfoot versus forefoot running: Implications of switching technique. Med. Sci. Sports Exerc. 2014, 46, 1578–1587.

- Boyer, E.R.; Derrick, T.R. Lower extremity joint loads in habitual rearfoot and mid/forefoot strike runners with normal and shortened stride lengths. J. Sports Sci. 2018, 36, 499–505.

- Roper, J.L.; Doerfler, D.; Kravitz, L.; Dufek, J.S.; Mermier, C. Gait Retraining from Rearfoot Strike to Forefoot Strike does not change Running Economy. Int. J. Sports Med. 2017, 38, 1076–1082.

- Anderson, L.; Barton, C.; Bonanno, D. The effect of foot strike pattern during running on biomechanics, injury and performance: A systematic review and meta-analysis. J. Sci. Med. Sport 2017, 20, e54.

- Högberg, P. How do stride length and stride frequency influence the energy-output during running? Arbeitsphysiologie 1952, 14, 437–441.

- Cavagna, G.A.; Mantovani, M.; Willems, P.A.; Musch, G. The resonant step frequency in human running. Pflugers Arch. Eur. J. Physiol. 1997, 434, 678–684.

- Del Coso, J.; Fernández, D.; Abián-Vicen, J.; Salinero, J.J.; González-Millán, C.; Areces, F.; Ruiz, D.; Gallo, C.; Calleja-González, J.; Pérez-González, B.; et al. Running Pace Decrease during a Marathon Is Positively Related to Blood Markers of Muscle Damage. PLoS ONE 2013, 8, e57602.

- Brughelli, M.; Cronin, J.; Chaouachi, A. Effects of Running Velocity on Running Kinetics and Kinematics. J. Strength Cond. Res. 2011, 25, 933–939.

- van Gent, R.N.; Siem, D.; van Middelkoop, M.; van Os, A.G.; Bierma-Zeinstra, S.M.A.; Koes, B.W.; Taunton, J.E. Incidence and determinants of lower extremity running injuries in long distance runners: A systematic review. Br. J. Sports Med. 2007, 41, 469–480.

- Videbæk, S.; Bueno, A.M.; Nielsen, R.O.; Rasmussen, S. Incidence of Running-Related Injuries Per 1000 h of running in Different Types of Runners: A Systematic Review and Meta-Analysis. Sports Med. 2015, 45, 1017–1026.

- van Mechelen, W. Running Injuries. Sports Med. 1992, 14, 320–335.

- Kluitenberg, B.; van Middelkoop, M.; Diercks, R.; van der Worp, H. What are the Differences in Injury Proportions Between Different Populations of Runners? A Systematic Review and Meta-Analysis. Sports Med. 2015, 45, 1143–1161.

- Knechtle, B.; Nikolaidis, P.T. Physiology and pathophysiology in ultra-marathon running. Front. Physiol. 2018, 9, 634.

- Van Middelkoop, M.; Kolkman, J.; Van Ochten, J.; Bierma-Zeinstra, S.M.A.; Koes, B. Prevalence and incidence of lower extremity injuries in male marathon runners. Scand. J. Med. Sci. Sports 2007, 18, 140–144.

- Buist, I.; Bredeweg, S.W.; Bessem, B.; van Mechelen, W.; Lemmink, K.A.P.M.; Diercks, R.L. Incidence and risk factors of running-related injuries during preparation for a 4-mile recreational running event. Br. J. Sports Med. 2010, 44, 598–604.

- Hottenrott, K.; Ludyga, S.; Schulze, S.; Gronwald, T.; Jäger, F.S. Does a run/walk strategy decrease cardiac stress during a marathon in non-elite runners? J. Sci. Med. Sport 2016, 19, 64–68.

- Hulme, A.; Nielsen, R.O.; Timpka, T.; Verhagen, E.; Finch, C. Risk and Protective Factors for Middle- and Long-Distance Running-Related Injury. Sports Med. 2017, 47, 869–886.

- Saragiotto, B.T.; Yamato, T.P.; Hespanhol Junior, L.C.; Rainbow, M.J.; Davis, I.S.; Lopes, A.D. What are the Main Risk Factors for Running-Related Injuries? Sports Med. 2014, 44, 1153–1163.

- Gabbett, T.J. The training—Injury prevention paradox: Should athletes be training smarter and harder? Br. J. Sports Med. 2016, 50, 273–280.

- Lopes, A.D.; Hespanhol, L.C.; Yeung, S.S.; Costa, L.O.P. What are the Main Running-Related Musculoskeletal Injuries? Sports Med. 2012, 42, 891–905.

- Taunton, J.E.; Ryan, M.B.; Clement, D.B.; McKenzie, D.C.; Lloyd-Smith, D.R.; Zumbo, B.D. A retrospective case-control analysis of 2002 running injuries. Br. J. Sports Med. 2002, 36, 95–101.

- Knobloch, K.; Yoon, U.; Vogt, P.M. Acute and overuse injuries correlated to hours of training in master running athletes. Foot Ankle Int. 2008, 29, 671–676.

- Gallo, R.A.; Plakke, M.; Silvis, M.L. Common leg injuries of long-distance runners: Anatomical and biomechanical approach. Sports Health 2012, 4, 485–495.

- Hreljac, A. Impact and Overuse Injuries in Runners. Med. Sci. Sports Exerc. 2004, 845–849.

- Walter, S.D.; Hart, L.E.; McIntosh, J.M.; Sutton, J.R. The Ontario cohort study of running-related injuries. Arch. Intern. Med. 1989, 149, 2561–2564.

- van der Worp, M.P.; ten Haaf, D.S.M.; van Cingel, R.; de Wijer, A.; Nijhuis-van der Sanden, M.W.G.; Staal, J.B. Injuries in runners; a systematic review on risk factors and sex differences. PLoS ONE 2015, 10, e0114937.

- Satterthwaite, P.; Norton, R.; Larmer, P.; Robinson, E. Risk factors for injuries and other health problems sustained in a marathon. Br. J. Sports Med. 1999, 33, 22–26.

- Theisen, D.; Malisoux, L.; Genin, J.; Delattre, N.; Seil, R.; Urhausen, A. Influence of midsole hardness of standard cushioned shoes on running-related injury risk. Br. J. Sports Med. 2014, 48, 371–376.

- Malisoux, L.; Delattre, N.; Urhausen, A.; Theisen, D. Shoe cushioning, body mass and running biomechanics as risk factors for running injury: A study protocol for a randomised controlled trial. BMJ Open 2017, 7, e017379.

- Malisoux, L.; Delattre, N.; Urhausen, A.; Theisen, D. Shoe Cushioning Influences the Running Injury Risk According to Body Mass: A Randomized Controlled Trial Involving 848 Recreational Runners. Am. J. Sports Med. 2019, 48, 473–480.

- Jenkins, J.; Beazell, J. Flexibility for Runners. Clin. Sports Med. 2010, 29, 365–377.

- Yeung, S.S.; Yeung, E.W.; Gillespie, L.D. Interventions for preventing lower limb soft-tissue running injuries. Cochrane Database Syst. Rev. 2011.

- Baxter, C.; Mc Naughton, L.R.; Sparks, A.; Norton, L.; Bentley, D. Impact of stretching on the performance and injury risk of long-distance runners. Res. Sports Med. 2017, 25, 78–90.

- Tenforde, A.S.; Sayres, L.C.; McCurdy, M.L.; Collado, H.; Sainani, K.L.; Fredericson, M. Overuse injuries in high school runners: Lifetime prevalence and prevention strategies. PM R 2011, 3, 125–131.

- Pons, V.; Riera, J.; Capó, X.; Martorell, M.; Sureda, A.; Tur, J.A.; Drobnic, F.; Pons, A. Calorie restriction regime enhances physical performance of trained athletes. J. Int. Soc. Sports Nutr. 2018, 15, 12.

- Maughan, R.J.; Burke, L.M.; Dvorak, J.; Larson-Meyer, D.E.; Peeling, P.; Phillips, S.M.; Rawson, E.S.; Walsh, N.P.; Garthe, I.; Geyer, H.; et al. IOC consensus statement: Dietary supplements and the high-performance athlete. Br. J. Sports Med. 2018, 52, 439–455.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Boullosa, D.; Esteve-Lanao, J.; Casado, A.; Peyré-Tartaruga, L.A.; Gomes da Rosa, R.; Del Coso, J. Factors Affecting Training and Physical Performance in Recreational Endurance Runners. Sports 2020, 8, 35. https://doi.org/10.3390/sports8030035