1. Introduction

Cesarean section (C-section) is currently the most commonly performed surgery worldwide and accounts for approximately one third of all deliveries in the United States [1]. Moreover, this rate does not appear to be declining despite efforts to reduce both primary and repeat C-sections [2]. The overall C-section rate in the United States increased from 20.7% in 1996 to 32.9% in 2009, a relative increase of approximately 60%. Between 2009 and 2019, the rate declined slightly to 31.7%, but it rose again in 2020 (31.8%) and 2021 (32.1%) [3].

C-section itself is a significant risk factor for hospital readmission in the postpartum period; one study found that C-section was associated with a 2.69-fold higher likelihood of readmission compared with vaginal delivery [4]. In addition, women who had a complicated C-section, defined as reoperation, intraoperative organ injury, or surgical site complications, have been found to have a significantly higher overall readmission rate than those who had an uncomplicated surgery [5].

Many risk factors and comorbidities are known to be associated with higher rates of postpartum readmission in general, including maternal medical conditions, multifetal pregnancy, prolonged labor, postpartum hemorrhage, fever, advanced maternal age, substance use disorders, and social determinants of health, as well as birth conditions such as C-section or operative birth [6]. Risk-stratification tools for postpartum complications such as readmission could therefore support proactive management of high-risk patients, with the aim of improving outcomes after C-section.

Machine learning (ML) and artificial intelligence are powerful tools that may improve the understanding of predictive metrics across medicine [7]. While numerous specialties have demonstrated potential uses of ML in predictive modeling and risk stratification, such literature in obstetrics and gynecology is scarce. One study by Firouzbakht et al. applied several ML models to predict postpartum readmission in Iranian women; however, the authors did not report model performance using AUC or confusion matrices, nor did they compare the ML models with non-ML models [4]. Other studies in obstetrics and gynecology have used ML and statistical models to predict postpartum hemorrhage and readmission for hypertensive disorders of pregnancy, but research in this area remains limited [8,9]. This study aims to develop ML algorithms capable of predicting 30-day readmission in patients undergoing C-section using a large-scale, nationwide dataset. It also seeks to identify significant preoperative and perioperative predictors of readmission that could inform clinical decision-making and optimize patient care.

2. Materials and Methods

2.1. Study Design and Data Source

This is a retrospective cohort analysis using data from the American College of Surgeons National Surgical Quality Improvement Program (ACS NSQIP) Participant Use File (PUF) for 2012–2022. NSQIP is a nationally validated, risk-adjusted, outcomes-based program designed to measure the quality of surgical care [10]. It includes data from over 700 participating hospitals across the United States. The PUF is available to researchers at participating institutions under a standard data use agreement, and this study was conducted in compliance with the ACS NSQIP data use policies.

2.2. Study Population

The study population included all women who underwent a C-section during the study period, identified using Current Procedural Terminology (CPT) codes 59510, 59514, 59620, and 59622. Inclusion criteria were age 18 years or older, a primary or repeat C-section, and availability of preoperative, perioperative, and postoperative data in the NSQIP database. Patients with missing key demographic or clinical variables were excluded.
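The selection logic above can be sketched as follows. This is a minimal Python illustration written for this explanation, not the authors' R code; the record layout and the field names (`cpt`, `age`, `bmi`, `race`) are hypothetical stand-ins for the corresponding NSQIP PUF variables.

```python
# Hypothetical sketch: field names ("cpt", "age", ...) are illustrative,
# not the actual NSQIP PUF variable names.
CSECTION_CPT = {"59510", "59514", "59620", "59622"}


def select_cohort(records, key_fields):
    """Keep adult C-section cases with complete data in the key fields."""
    cohort = []
    for rec in records:
        if str(rec.get("cpt")) not in CSECTION_CPT:
            continue  # not one of the C-section CPT codes
        age = rec.get("age")
        if age is None or age < 18:
            continue  # inclusion criterion: age 18 years or older
        if any(rec.get(field) is None for field in key_fields):
            continue  # exclusion criterion: missing key variables
        cohort.append(rec)
    return cohort


records = [
    {"cpt": "59510", "age": 31, "bmi": 29.4, "race": "White"},
    {"cpt": "59400", "age": 28, "bmi": 25.0, "race": "Asian"},  # code not in the list
    {"cpt": "59514", "age": 17, "bmi": 22.1, "race": "Black"},  # under 18
    {"cpt": "59622", "age": 35, "bmi": None, "race": "White"},  # missing BMI
]
cohort = select_cohort(records, key_fields=["bmi", "race"])
print(len(cohort))  # 1
```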

2.3. Data Collection

Demographic, preoperative, and postoperative variables were extracted from the NSQIP database, including patient age, BMI, race, comorbid conditions, operative details, preoperative baseline conditions (Table 1), and postoperative characteristics (Table 2).

The primary outcome was unplanned readmission to the hospital within 30 days of discharge following C-section. Postoperative complications were defined as organ/surgical site infection, wound infection, dehiscence, pneumonia, reintubation, pulmonary embolism, failure to wean from the ventilator, urinary tract infection, bleeding requiring transfusion (within 72 h), deep vein thrombosis, sepsis, and return to the operating room; total hospital length of stay was also recorded as a postoperative variable.

2.4. Machine Learning Model Development

Two ML models, Random Forest (RF) and Extreme Gradient Boosting (XGBoost), were developed and compared with logistic regression (LR) [11,12]. These models were chosen for their ability to handle large, complex datasets with numerous predictor variables. Models were first built using both preoperative and postoperative variables, and the best-performing algorithm was then used to build a final model based only on preoperative variables. The RF and XGBoost models that include postoperative variables provide a comprehensive retrospective analysis of risk factors leading up to readmission, whereas the preoperative-only model is intended to support bedside prediction at or before discharge.

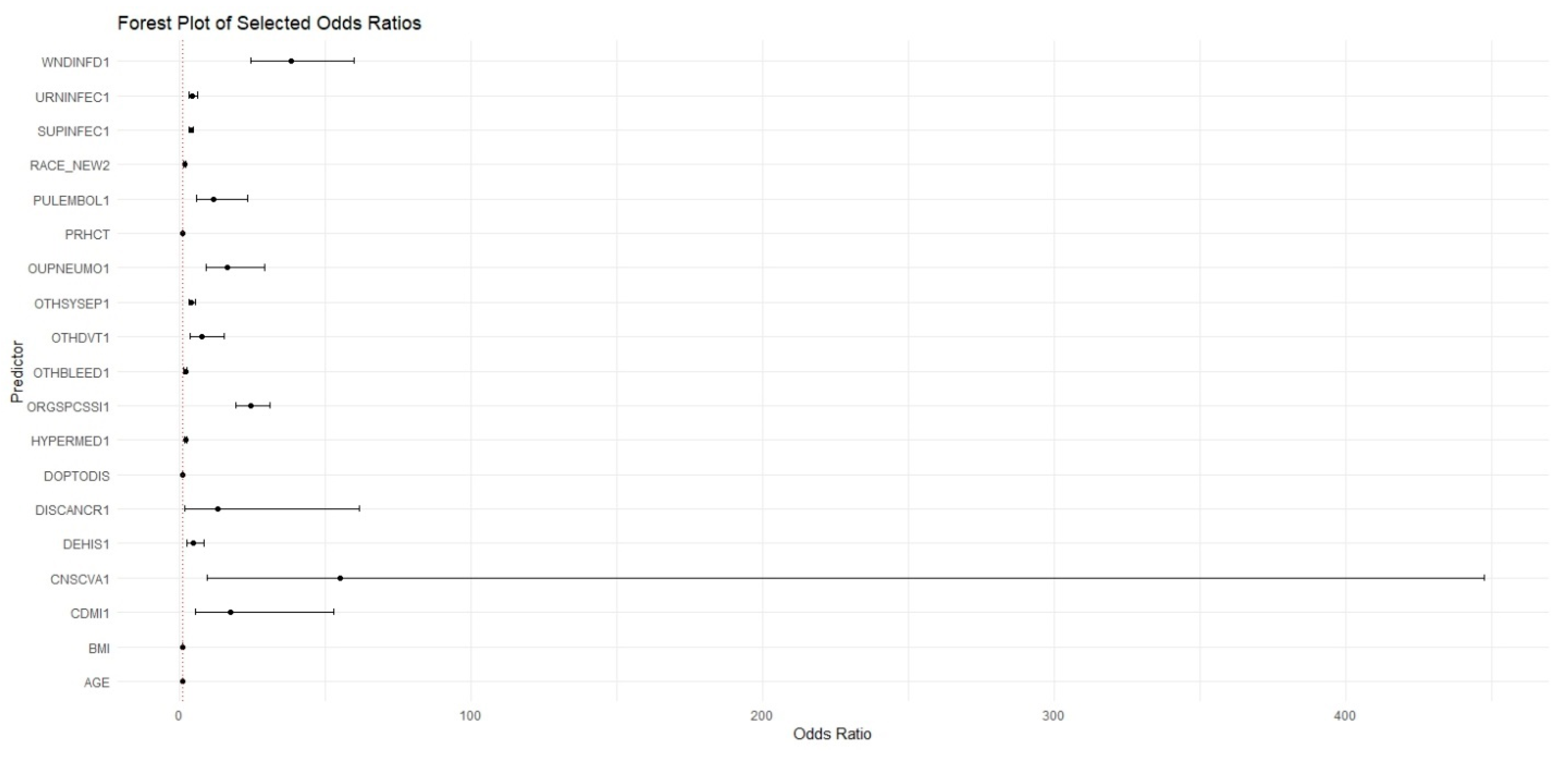

A multivariable LR model was developed as a baseline for comparison. The model was implemented using the glm function in R with a binomial family to predict the binary outcome of 30-day readmission. To ensure a direct and fair comparison, the LR model included the exact same set of predictor variables as the RF and XGBoost models.
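For readers less familiar with glm, the sketch below shows what a binomial (logit-link) model estimates. It is a hypothetical Python illustration, not the study's code: R's glm maximizes the same log-likelihood using iteratively reweighted least squares, whereas this sketch uses plain batch gradient ascent for brevity, so it converges more slowly, and its learning rate and epoch count are arbitrary assumptions.

```python
import math


def predict_proba(beta, xi):
    """Inverse logit of the linear predictor: predicted readmission probability."""
    eta = beta[0] + sum(b * x for b, x in zip(beta[1:], xi))
    return 1.0 / (1.0 + math.exp(-eta))


def fit_logistic(X, y, lr=0.1, epochs=2000):
    """Maximum-likelihood logistic regression (binomial family, logit link)
    fitted by batch gradient ascent; beta[0] is the intercept."""
    n, p = len(X), len(X[0])
    beta = [0.0] * (p + 1)
    for _ in range(epochs):
        grad = [0.0] * (p + 1)
        for xi, yi in zip(X, y):
            resid = yi - predict_proba(beta, xi)  # score w.r.t. the linear predictor
            grad[0] += resid
            for j, xij in enumerate(xi):
                grad[j + 1] += resid * xij
        beta = [b + lr * g / n for b, g in zip(beta, grad)]
    return beta


# Toy data: one standardized predictor, positively associated with the outcome.
X = [[-2.0], [-1.0], [-0.5], [0.0], [0.5], [1.0], [2.0], [0.2]]
y = [0, 0, 1, 0, 1, 1, 1, 0]
beta = fit_logistic(X, y)
print([round(b, 2) for b in beta])
```

The fitted slope is positive here because, in the toy data, higher predictor values co-occur with the outcome; exponentiating a coefficient gives the corresponding odds ratio, as with glm output.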

Prior to model development, data preprocessing steps were applied to ensure model compatibility and reproducibility. Categorical variables were one-hot encoded using the dummyVars function from the caret package. Continuous variables, including laboratory values and vital signs, were centered and scaled so that all predictors were on a comparable scale. Records with missing data in key predictive fields were excluded from the analysis. These preprocessing steps were applied identically across all models to ensure fair comparisons.
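A minimal Python sketch of the two transformations, assuming one categorical and one continuous column with toy values (not study data). It produces one indicator column per level, which is caret's dummyVars default (fullRank = FALSE), and centers and scales by the sample mean and standard deviation; the function names are hypothetical.

```python
import statistics


def one_hot(column):
    """One 0/1 indicator column per observed level (levels sorted alphabetically)."""
    levels = sorted(set(column))
    rows = [[1 if value == level else 0 for level in levels] for value in column]
    return levels, rows


def center_scale(column):
    """Subtract the mean and divide by the sample standard deviation."""
    mean = statistics.mean(column)
    sd = statistics.stdev(column)
    return [(value - mean) / sd for value in column]


race = ["White", "Black", "Asian", "White"]  # toy categorical predictor
bmi = [24.0, 31.0, 27.5, 35.5]               # toy continuous predictor
levels, race_dummies = one_hot(race)
bmi_scaled = center_scale(bmi)
print(levels)        # ['Asian', 'Black', 'White']
print(race_dummies)  # [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 0, 1]]
```

In a train/test setting, the levels, mean, and standard deviation would typically be learned on the training data and then applied unchanged to the test data, which is how caret's dummyVars and preProcess objects are normally used together with predict().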

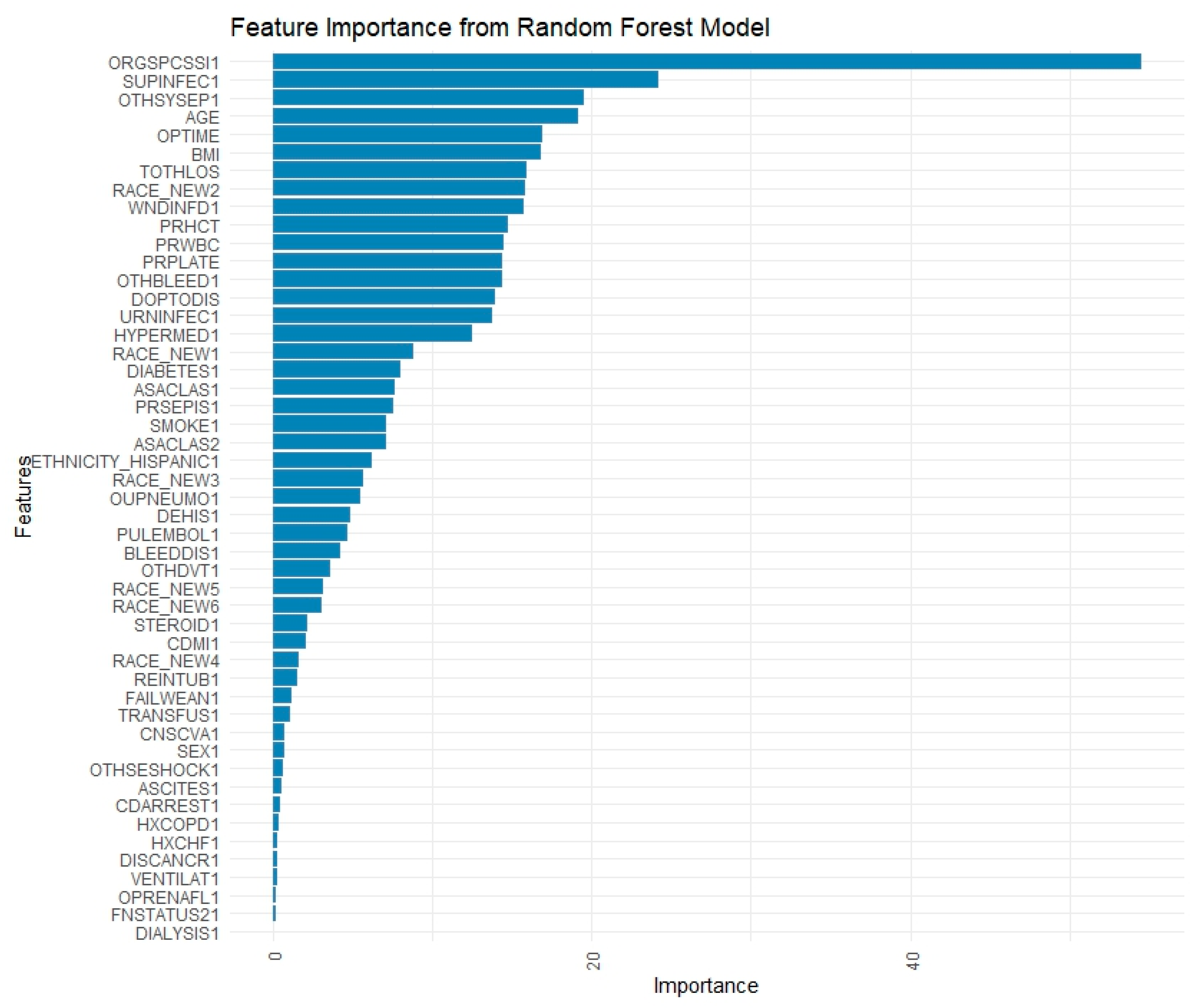

The RF model was implemented using the “ranger” and “caret” packages in R. Hyperparameters were optimized by grid search with 10-fold cross-validation. The tuned hyperparameters were the number of variables randomly sampled at each split (mtry), tested at the square root of the number of predictors, 50% of the predictors, and the total number of predictors minus one; the node-splitting rule (splitrule), either “gini” or “extratrees”; and the minimum node size (min.node.size), tested at 1, 5, and 10. The final model was selected based on the highest mean area under the receiver operating characteristic (ROC) curve (AUC) across cross-validation folds. Variable importance was assessed using the impurity-based method and visualized with bar plots. Classification performance on the held-out testing set was evaluated using confusion matrices to report sensitivity, specificity, precision, and F1 score, as well as ROC analysis to compute the AUC. Additionally, classification thresholds were optimized based on Youden’s index, and precision-recall trade-offs were assessed over a range of thresholds to evaluate how robust classification performance was to the choice of threshold.
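The tuning grid described above yields 3 × 2 × 3 = 18 candidate settings when the three mtry values are distinct. The sketch below enumerates the grid and picks the setting with the highest mean cross-validated AUC. It is a Python illustration rather than the caret/ranger code used in the study; rounding the square root down to an integer and the `cv_auc` callback (which would train on nine folds and score the held-out fold) are assumptions of this sketch.

```python
import itertools
import math


def rf_grid(n_predictors):
    """Candidate settings: 3 mtry values x 2 split rules x 3 minimum node sizes."""
    mtry_values = sorted({
        max(1, int(math.sqrt(n_predictors))),  # square root of p (rounded down: assumption)
        max(1, n_predictors // 2),             # 50% of the predictors
        max(1, n_predictors - 1),              # all predictors minus one
    })
    return [
        {"mtry": m, "splitrule": s, "min.node.size": k}
        for m, s, k in itertools.product(mtry_values, ["gini", "extratrees"], [1, 5, 10])
    ]


def select_best(grid, cv_auc, folds=10):
    """Return the setting with the highest mean AUC over the CV folds.

    cv_auc(params, fold) is a hypothetical callback returning the AUC on one held-out fold.
    """
    def mean_auc(params):
        return sum(cv_auc(params, fold) for fold in range(folds)) / folds

    return max(grid, key=mean_auc)


grid = rf_grid(40)
print(len(grid), sorted({g["mtry"] for g in grid}))  # 18 [6, 20, 39]
```

With 40 predictors (an arbitrary example), the candidate mtry values are 6, 20, and 39.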

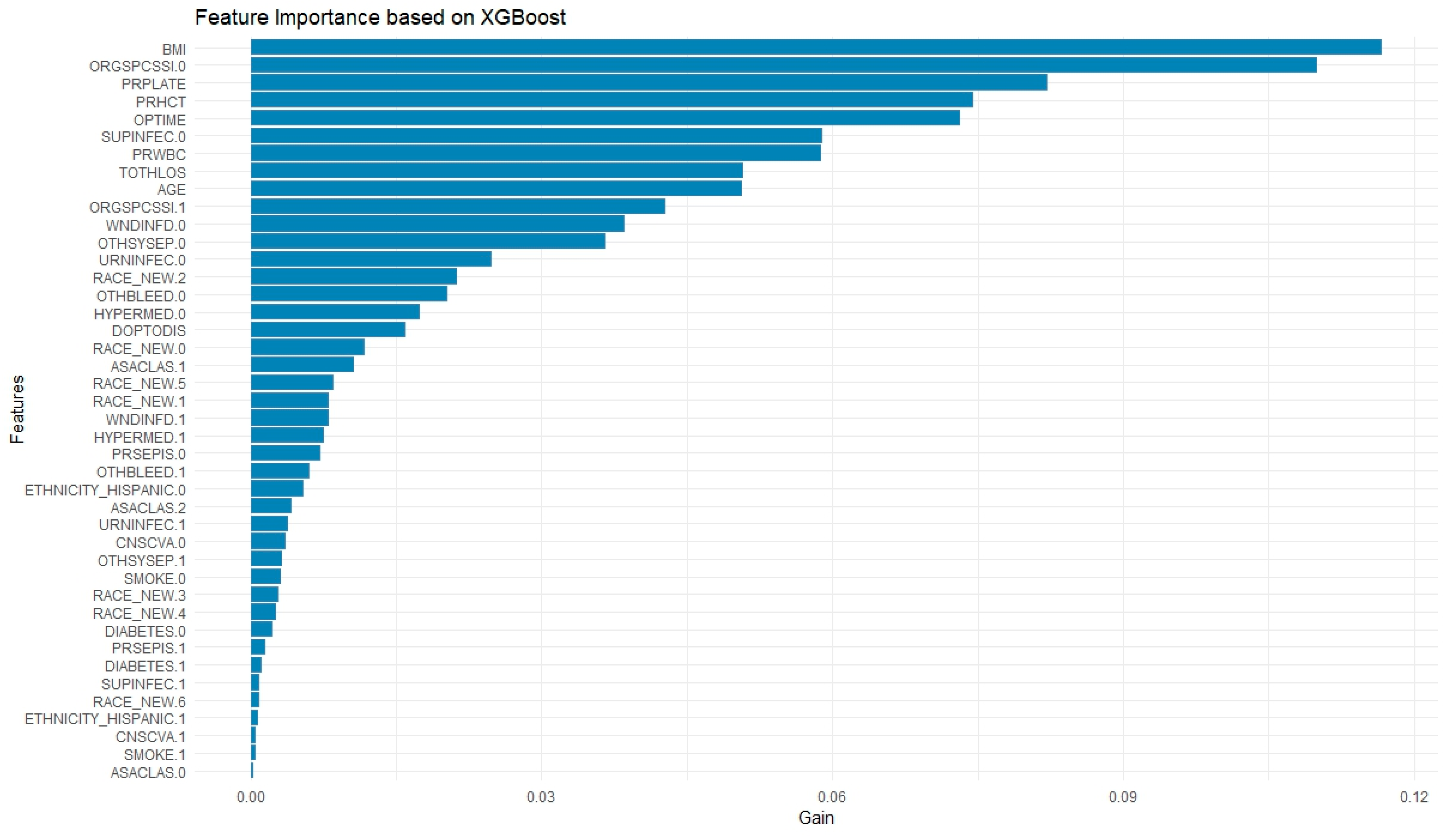

The XGBoost model was constructed using the “xgboost” and “caret” packages. Data were converted to a numeric matrix, as required by the xgboost training function. The following hyperparameters were tuned or specified: the learning rate (eta) was fixed at 0.1 to promote stable convergence; the maximum tree depth (max_depth) was tested from 3 to 6, with 4 ultimately selected to limit overfitting while retaining sufficient model complexity; and both the row and column subsampling ratios (subsample and colsample_bytree) were set to 0.8 to introduce stochasticity. To address class imbalance, the scale_pos_weight parameter was set to the ratio of negative to positive instances in the training data. Training was run for up to 100 boosting rounds (nrounds), with early stopping if the AUC did not improve for 10 consecutive rounds. Model performance was assessed using ROC curves and AUC, and precision, recall, and F1 score were derived from confusion matrices. Threshold optimization was again performed using Youden’s index to select the classification threshold.
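Two of the XGBoost settings above reduce to simple arithmetic and control flow. The sketch computes scale_pos_weight from training labels and reproduces the early-stopping rule on a precomputed list of per-round validation AUCs; in practice, xgboost's own early_stopping_rounds argument performs this internally. The Python function names, the 10,000-patient label vector, and the AUC sequence are hypothetical.

```python
def scale_pos_weight(labels):
    """Ratio of negative (0) to positive (1) instances in the training labels."""
    n_pos = sum(labels)
    return (len(labels) - n_pos) / n_pos


def best_round_with_early_stopping(val_auc_by_round, max_rounds=100, patience=10):
    """Return (best_round, best_auc), stopping once the AUC has not improved
    for `patience` consecutive rounds or `max_rounds` is reached."""
    best_round, best_auc = 0, float("-inf")
    for rnd, auc in enumerate(val_auc_by_round[:max_rounds], start=1):
        if auc > best_auc:
            best_round, best_auc = rnd, auc
        elif rnd - best_round >= patience:
            break  # no improvement in the last `patience` rounds
    return best_round, best_auc


train_labels = [1] * 239 + [0] * 9761      # hypothetical: 2.39% positive
val_auc = [0.60, 0.65, 0.70] + [0.69] * 20  # hypothetical per-round AUCs
print(round(scale_pos_weight(train_labels), 2))  # 40.84
print(best_round_with_early_stopping(val_auc))   # (3, 0.7)
```

At a 2.39% readmission rate, scale_pos_weight is roughly 97.61 / 2.39 ≈ 40.8, so each readmitted patient carries about 41 times the weight of a non-readmitted patient in the training loss.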

Feature importance for RF was calculated using the impurity-based criterion, specifically the mean decrease in Gini index, which measures the total reduction in node impurity attributable to each variable across all trees. Feature importance in XGBoost was computed using the default “gain” metric, which reflects the improvement in the training objective (log loss for a binary logistic objective) contributed by splits on each feature across all trees.
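The impurity-based importance for RF can be illustrated with a small Gini calculation. The sketch is a simplified Python illustration, not ranger's implementation: it scores each split by the sample-weighted drop in Gini impurity and sums these drops per feature over the listed splits (dividing by the number of trees to obtain a mean would not change the ranking). The feature names and split contents are toy values.

```python
from collections import Counter


def gini(labels):
    """Gini impurity 1 - sum_k p_k^2 of a node's class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def impurity_decrease(parent, left, right):
    """Sample-weighted drop in Gini impurity from splitting parent into left/right."""
    return (len(parent) * gini(parent)
            - len(left) * gini(left)
            - len(right) * gini(right))


def impurity_importance(splits):
    """Sum impurity decreases per feature over (feature, parent, left, right)
    tuples collected from every split in every tree."""
    totals = {}
    for feature, parent, left, right in splits:
        totals[feature] = totals.get(feature, 0.0) + impurity_decrease(parent, left, right)
    return totals


# Toy node: 8 non-readmitted (0) and 2 readmitted (1) patients.
parent = [0] * 8 + [1] * 2
splits = [
    ("sepsis", parent, [0] * 7, [0, 1, 1]),         # isolates most readmissions
    ("age", parent, [0] * 4 + [1], [0] * 4 + [1]),  # no improvement in purity
]
importance = impurity_importance(splits)
print(importance)
```

Here the hypothetical sepsis split removes about 1.87 units of weighted impurity while the age split removes none, so sepsis would rank higher.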

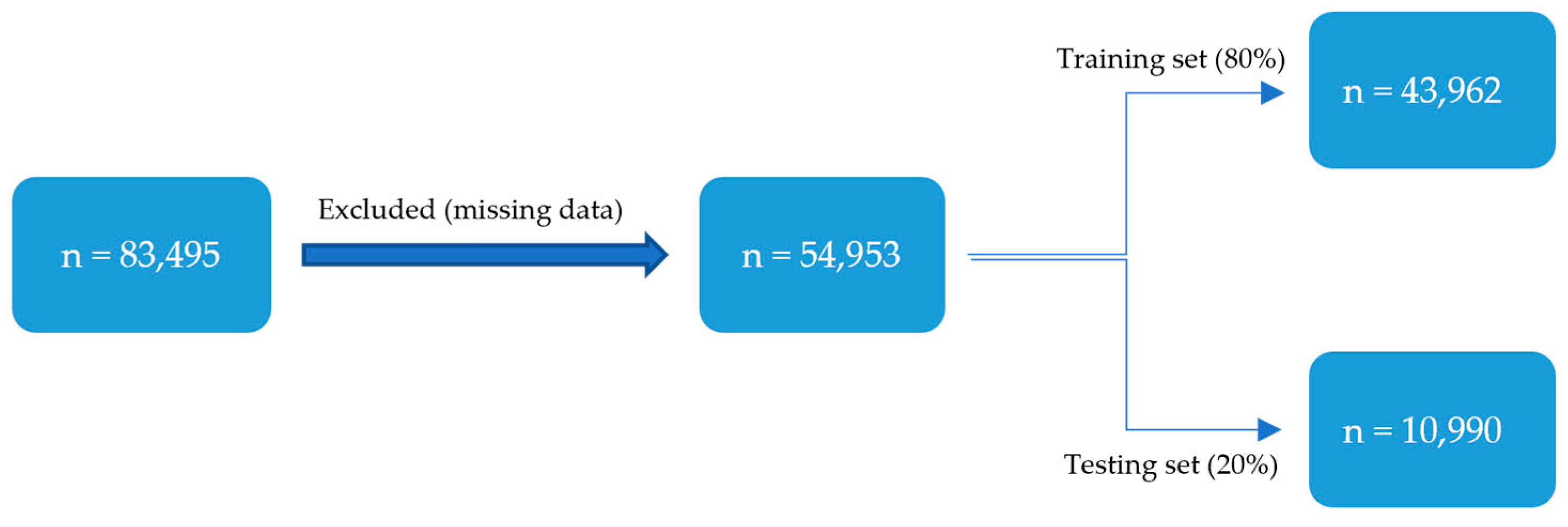

The dataset was randomly split into training (80%) and testing (20%) sets. The split was stratified by readmission status to preserve the class distribution (2.39% readmitted) in both subsets. The training set was used for all model development, including hyperparameter tuning via 10-fold cross-validation, while the testing set was reserved for a single final evaluation of the optimized models. Model performance was evaluated using confusion matrices (sensitivity, specificity) and the AUC of the ROC curve. The optimal threshold for converting predicted probabilities into binary predictions was determined using Youden’s J statistic (J = sensitivity + specificity − 1), which identifies the point on the ROC curve with the greatest vertical distance above the line of no discrimination and thus balances the true positive rate against the false positive rate. DeLong’s method was used to compute confidence intervals (CIs) for the training and testing AUCs. Models were compared using McNemar’s test, for which the continuity-corrected χ² statistic and the corresponding two-sided p-value were calculated. A p-value ≤ 0.05 was considered statistically significant. RStudio 2022.12.0 was used for statistical analysis.
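The evaluation steps described above can be sketched in Python as follows. These are illustrative standard-library implementations written for this explanation, not the study's R code: a per-class stratified split, the empirical AUC with DeLong's variance estimate and a normal-approximation CI (clipping to [0, 1] is an assumption of this sketch), Youden-optimal threshold selection, and the continuity-corrected McNemar test on paired classifier errors. The toy scores and labels are made up.

```python
import math
import random
from statistics import NormalDist


def stratified_split(labels, test_frac=0.2, seed=42):
    """Return (train_idx, test_idx), sampling test_frac of each outcome class separately."""
    rng = random.Random(seed)
    train, test = [], []
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        k = round(test_frac * len(idx))
        test += idx[:k]
        train += idx[k:]
    return sorted(train), sorted(test)


def auc_delong_ci(scores, labels, level=0.95):
    """Empirical AUC with DeLong's variance and a normal-approximation CI.
    Requires at least two positives and two negatives."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]

    def psi(a, b):  # 1 if the positive outranks the negative, 0.5 for ties
        return 1.0 if a > b else (0.5 if a == b else 0.0)

    v10 = [sum(psi(x, z) for z in neg) / len(neg) for x in pos]  # per-positive components
    v01 = [sum(psi(x, z) for x in pos) / len(pos) for z in neg]  # per-negative components
    auc = sum(v10) / len(pos)
    var = (sum((v - auc) ** 2 for v in v10) / ((len(pos) - 1) * len(pos))
           + sum((v - auc) ** 2 for v in v01) / ((len(neg) - 1) * len(neg)))
    half = NormalDist().inv_cdf(0.5 + level / 2) * math.sqrt(var)
    return auc, (max(0.0, auc - half), min(1.0, auc + half))


def youden_threshold(scores, labels):
    """Threshold t (predict 1 if score >= t) maximizing J = sensitivity + specificity - 1."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    best_t, best_j = None, -1.0
    for t in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        tn = sum(1 for s, y in zip(scores, labels) if s < t and y == 0)
        j = tp / n_pos + tn / n_neg - 1
        if j > best_j:
            best_t, best_j = t, j
    return best_t, best_j


def mcnemar_cc(pred_a, pred_b, labels):
    """Continuity-corrected McNemar chi-square (1 df) and p-value for two classifiers."""
    b = sum(1 for a, m, y in zip(pred_a, pred_b, labels) if a == y and m != y)  # only A correct
    c = sum(1 for a, m, y in zip(pred_a, pred_b, labels) if a != y and m == y)  # only B correct
    if b + c == 0:
        return 0.0, 1.0
    chi2 = max(0, abs(b - c) - 1) ** 2 / (b + c)
    return chi2, math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df


labels = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
scores = [0.10, 0.20, 0.30, 0.35, 0.40, 0.80, 0.45, 0.60, 0.70, 0.90]
auc, ci = auc_delong_ci(scores, labels)
t, j = youden_threshold(scores, labels)
pred_model = [1 if s >= t else 0 for s in scores]
pred_none = [0] * len(labels)  # trivial "no one is readmitted" comparator
chi2, p = mcnemar_cc(pred_model, pred_none, labels)
print(round(auc, 3), t, round(chi2, 2))  # 0.875 0.45 0.8
```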

4. Discussion

To our knowledge, this is the first study to use ML to predict 30-day readmission after C-section in the US using a large national database. We demonstrated that ML algorithms trained on readily available preoperative clinical data could predict readmission following C-section with a sensitivity of up to 83.14%. Many studies have described C-section characteristics and complications associated with readmission after C-section delivery; however, ML tools, which can model nonlinear relationships and interactions among many predictors, have not been widely used in this area.

A similar study by Firouzbakht et al. recently built several models to predict postpartum readmission in Iranian women [4]. In their multivariable analysis, the method of labor pain onset (e.g., labor pain timing based on the mode of delivery), mode of delivery, and intrapartum complications were the strongest predictors of readmission following childbirth [4]. They also built an RF model, whose variable importance plot ranked labor pain onset, gravidity, and birth weight as the most predictive features. However, the study did not report model performance using AUC or confusion-matrix metrics (e.g., sensitivity, specificity) and did not compare the ML models with the non-ML statistical models they built, which limits the interpretability of their results.

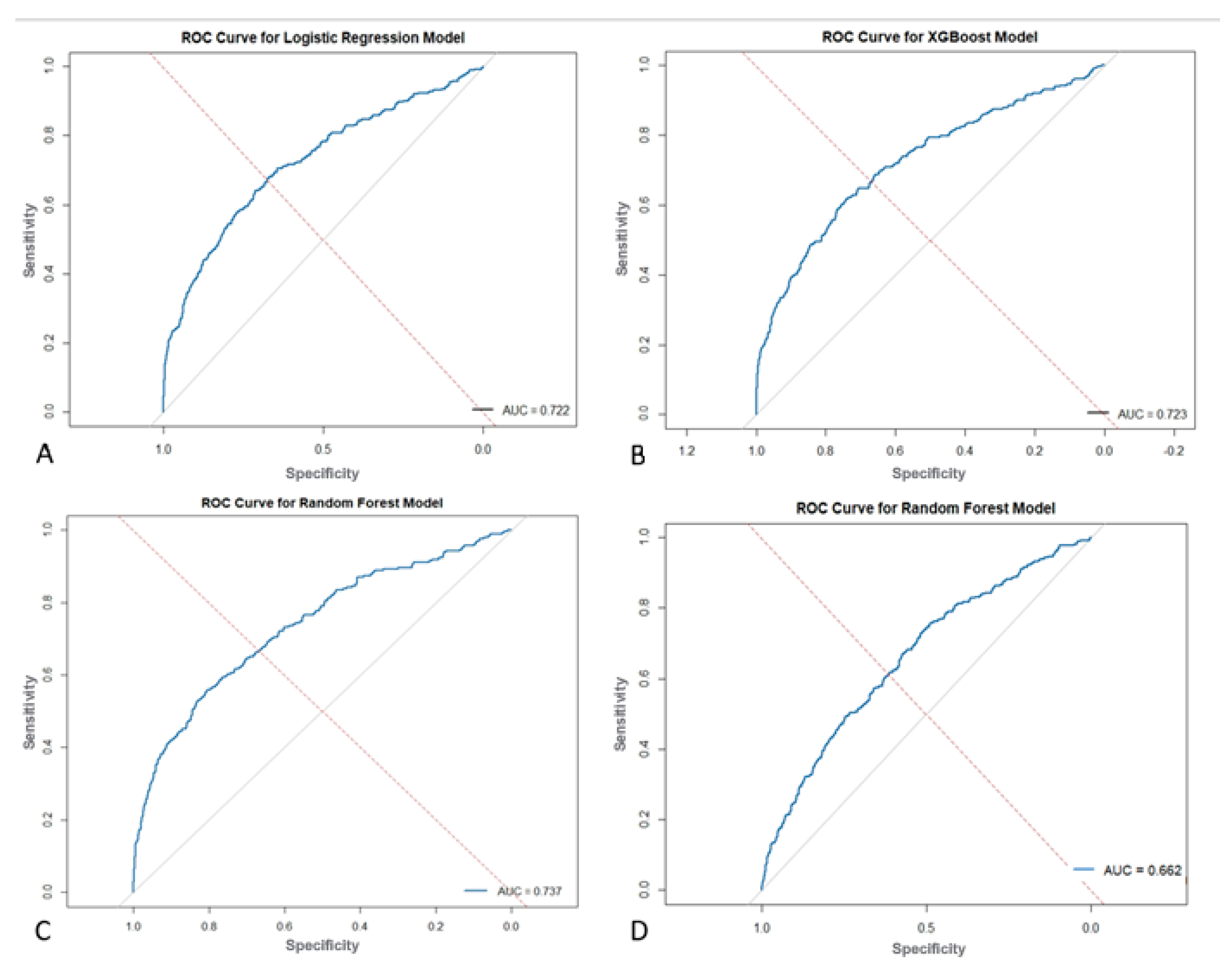

The present study found that ML models outperformed a standard statistical model (LR) in predicting 30-day readmission, both with combined pre-, intra-, and postoperative variables and with preoperative variables only. With all variables combined, the RF model outperformed the XGBoost and LR models in discrimination (AUC = 0.737 vs. 0.723 vs. 0.722), with a sensitivity of 72.03%. Operation time and postoperative complications, mainly surgical site infections and sepsis, were strong predictors of readmission in these ML models. A separate RF model built with only preoperative variables achieved moderate discrimination (AUC = 0.662) and a sensitivity of 83.14%, indicating that it identified most true readmissions in the cohort. This preoperative-only model illustrates how ML can be used in the preoperative setting to risk-stratify patients before C-section using readily available data. Such use would not only contribute to a more comprehensive pre-surgical evaluation but would also allow proactive postsurgical planning and appropriate management for patients at high risk of readmission.

Our findings align with several studies showing that pregnancy complications and maternal comorbidities increase the rate of readmission following childbirth [13,14,15]. One study identified sepsis, severe maternal morbidity, and preeclampsia before birth as the most common risk factors for early postpartum readmission (defined as within 6 days postpartum) [16]. The same study found a notable 2-fold higher risk of readmission among patients with preterm birth at <34 weeks’ gestation or a major mental health condition [16]. Postpartum readmission following C-section not only presents significant clinical challenges but also imposes a substantial economic burden on the healthcare system. Readmissions often involve extended hospital stays, greater resource utilization, and higher healthcare costs for both the individual patient and the hospital. To illustrate the scale of readmission costs more broadly, among 11.8 million US Medicare beneficiaries discharged from a hospital in 2003–2004, more than 2.3 million (19.6%) were rehospitalized within 30 days, at an estimated cost of over $17 billion [6]. Patients, healthcare systems, and providers therefore share an interest in avoiding unnecessary readmissions while ensuring patient safety. Identifying patients at risk of immediate return to the hospital after birth could help target those who need additional preparation for discharge and thus reduce readmissions [16].

The clinical relevance of ML models lies in their ability to identify patients at risk of readmission through complex modeling and to help tailor discharge and follow-up care, with the potential to improve patient satisfaction and outcomes and to reduce healthcare costs. With a low event rate (2.39%), models with higher specificity (e.g., LR) yield fewer overall classification errors at the pre-specified operating points, whereas RF and XGBoost achieve higher sensitivity at the cost of more false positives. This trade-off is also reflected in the positive and negative predictive values (PPV/NPV) and can be tuned by choosing alternative thresholds. We acknowledge that the low specificity (34.47%) of the preoperative model is a significant trade-off for its high sensitivity, as it translates into a high false-positive rate. Therefore, its intended clinical application is not as a standalone diagnostic predictor but as a screening tool to flag patients for low-intensity interventions. For example, a high-risk flag could trigger enhanced discharge education or an automated follow-up call, where the cost of a false positive is minimal compared with the clinical and financial burden of an unforeseen readmission. ML models have been used in other specialties, notably neurosurgery and thoracic surgery, to predict postsurgical readmission, all with an AUC > 0.70 [7,17]. ML can be incorporated into pre-surgical evaluation to identify risk factors and predictors of readmission following delivery, thereby supporting shared decision-making, resource allocation, and quality improvement.
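The PPV/NPV trade-off can be made concrete with Bayes' rule. The sketch below plugs in the preoperative model's reported sensitivity (83.14%) and specificity (34.47%) and assumes that the test-set prevalence equals the overall 2.39% readmission rate; the resulting values are back-of-the-envelope estimates, not results reported in this study, and the function name is hypothetical.

```python
def screening_metrics(sensitivity, specificity, prevalence):
    """Expected PPV, NPV, and fraction of patients flagged, via Bayes' rule."""
    tp = sensitivity * prevalence              # readmissions correctly flagged
    fn = (1 - sensitivity) * prevalence        # readmissions missed
    tn = specificity * (1 - prevalence)        # non-readmissions correctly cleared
    fp = (1 - specificity) * (1 - prevalence)  # false alarms
    return {"ppv": tp / (tp + fp), "npv": tn / (tn + fn), "flagged": tp + fp}


# Reported preoperative-model operating point; prevalence assumed equal to 2.39%.
metrics = screening_metrics(sensitivity=0.8314, specificity=0.3447, prevalence=0.0239)
print({name: round(value, 3) for name, value in metrics.items()})
```

Under these assumptions, the PPV is about 3.0%, the NPV about 98.8%, and roughly two-thirds of patients would be flagged, which illustrates why the model is positioned as a trigger for low-intensity interventions rather than as a diagnostic test.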

Strengths and Limitations

This study uses a large, nationwide database that provides access to a diverse population of patients across many US institutions, which strengthens the robustness of the findings and yields a broad sample of patients undergoing C-section. The use of real-world clinical data also enhances the study’s relevance to clinical practice. Using ML algorithms to predict 30-day readmission after C-section is a novel approach compared with traditional statistical methods, and this study demonstrates the potential of ML to improve outcomes in healthcare. Internal validation on a held-out testing set provides an estimate of model reliability within the dataset.

However, this study has several limitations that should be considered when interpreting the findings. Its retrospective design precludes establishing causality for the observed associations. Hospitals that do not participate in NSQIP are not represented; smaller or rural hospitals, which may have different resources, staffing, and care patterns that affect readmission rates, may therefore be underrepresented. The multi-center structure of the data also poses a limitation: although NSQIP spans over 700 hospitals, our data were split by patient rather than by hospital site, which precluded hospital-stratified validation. Socioeconomic and behavioral health data, which may influence readmission in many populations, were not available in the database. Similarly, social determinants of health, patient support networks, and outpatient follow-up are often not captured in NSQIP clinical and perioperative data. These factors can strongly influence readmission risk, limiting the model’s applicability in some real-world settings. Regarding the preoperative-only model, the RF showed promise in flagging potential readmissions because of its relatively high sensitivity (83.14%), yet its low specificity (34.47%) means that it misclassifies many patients who will not be readmitted. This could contribute to alert fatigue among clinicians and must be carefully considered before any real-world implementation. Nevertheless, many published risk scores, including the Wells score for pulmonary embolism, have high sensitivity and low specificity yet are reliably used to detect certain conditions [18]. The notable gap between training (AUC 0.957) and testing (AUC 0.737) performance in the RF model suggests a degree of overfitting, in which the model learned patterns specific to the training data that did not fully generalize. Although 10-fold cross-validation, grid search-based hyperparameter tuning, early stopping (for XGBoost), and threshold optimization were used to limit overfitting and promote robustness, the complexity of the model likely contributed to this discrepancy. The testing set results, obtained on data not used for training, should therefore be considered the primary indicator of real-world performance. Another limitation is the class imbalance in the outcome variable, with only 2.39% of patients experiencing 30-day readmission. Although we accounted for this in the XGBoost model by using the scale_pos_weight parameter to upweight readmitted patients during training, we did not apply additional balancing techniques such as oversampling, undersampling, or synthetic data generation. This choice preserved the observed class distribution so that the predictive tool reflects real clinical prevalence.

Our internal validation relies on a single stratified 80/20 train-test split. Although this is standard practice, a single split is subject to partitioning variance, and model performance could differ slightly with a different random split. Moreover, while this split provides a consistent estimate of internal performance, the absence of external or temporal validation limits the generalizability of our findings. Future work will include external datasets or time-split validation to better assess real-world performance.

Future research should aim to improve predictive ML models by integrating additional variables, such as patient socioeconomic status, mental health factors, and geographic variation, and by validating them in external cohorts. In addition, we hope to use these ML models to develop a risk calculator that can be used at the bedside or integrated into electronic health records (EHRs) to automatically assign each C-section patient a percentage risk of readmission.