Enhancing Radiotherapy Workflow for Head and Neck Cancer with Artificial Intelligence: A Systematic Review

Abstract

1. Introduction

2. Materials and Methods

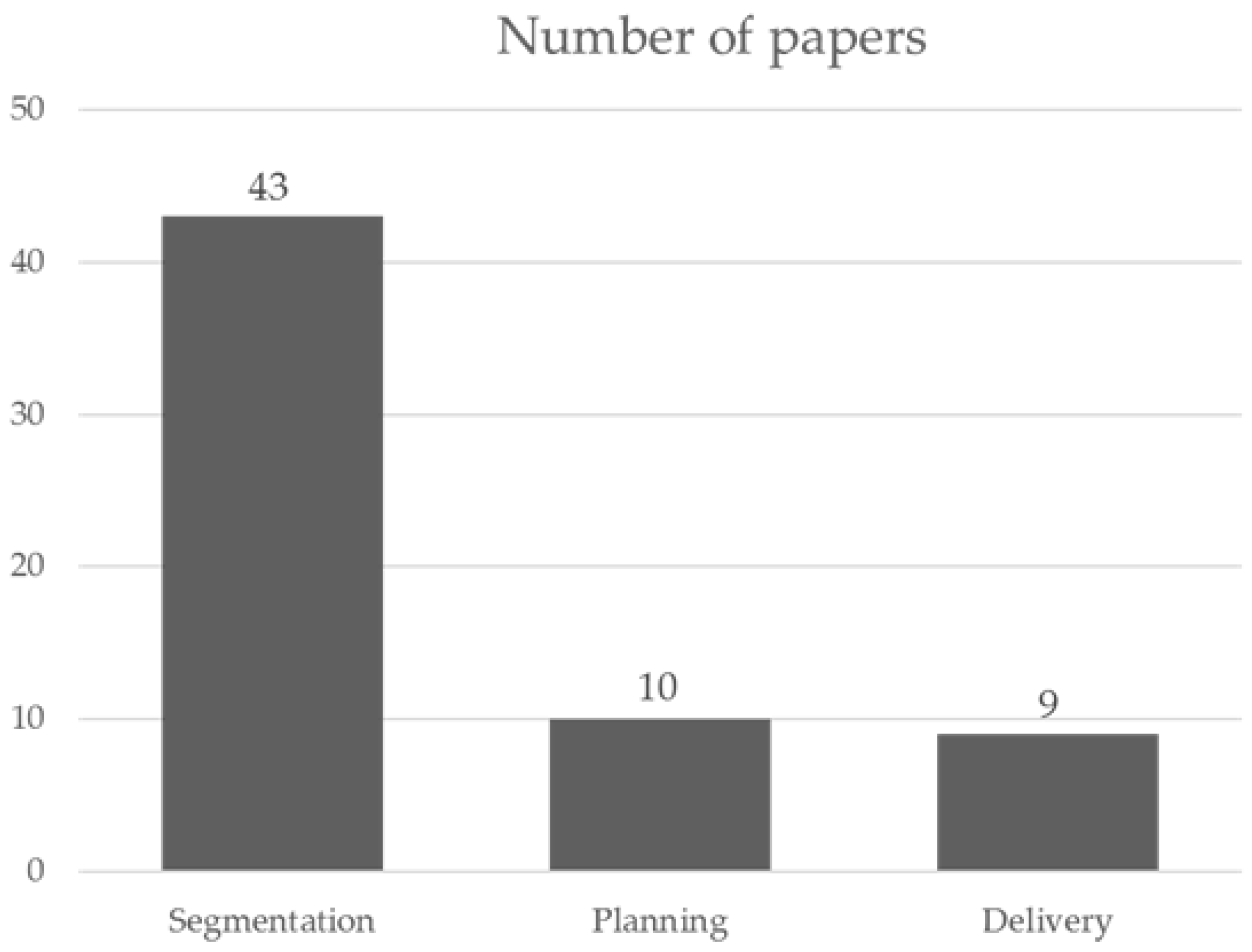

3. Results

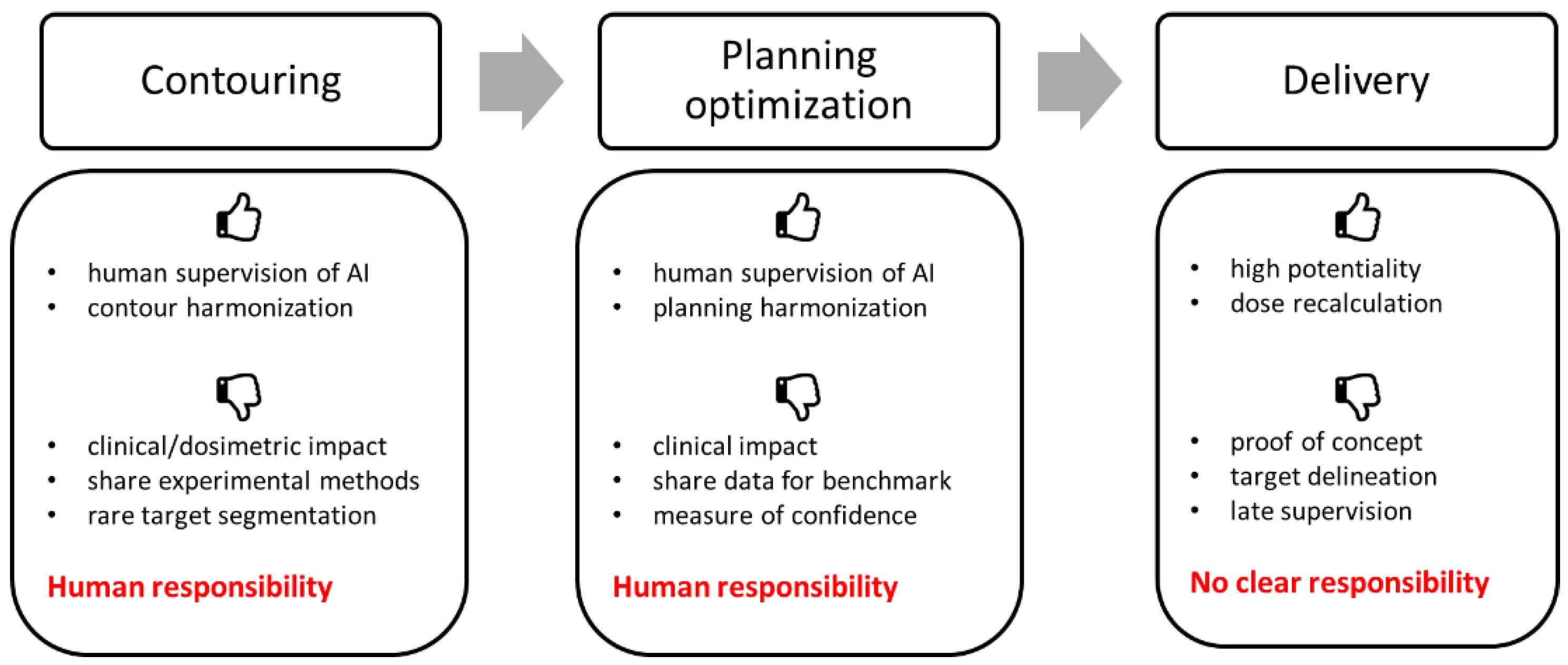

3.1. AI in HNC Target and Organs at Risk Segmentation

Future Prospects

3.2. AI in HNC RT Planning Optimization

Future Prospects

3.3. AI in HNC RT Delivery

Future Prospects

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Franzese, C.; Dei, D.; Lambri, N.; Teriaca, M.A.; Badalamenti, M.; Crespi, L.; Tomatis, S.; Loiacono, D.; Mancosu, P.; Scorsetti, M. Enhancing Radiotherapy Workflow for Head and Neck Cancer with Artificial Intelligence: A Systematic Review. J. Pers. Med. 2023, 13, 946. https://doi.org/10.3390/jpm13060946
