Automatic Diagnosis of Infectious Keratitis Based on Slit Lamp Images Analysis

Abstract

1. Introduction

2. Methods

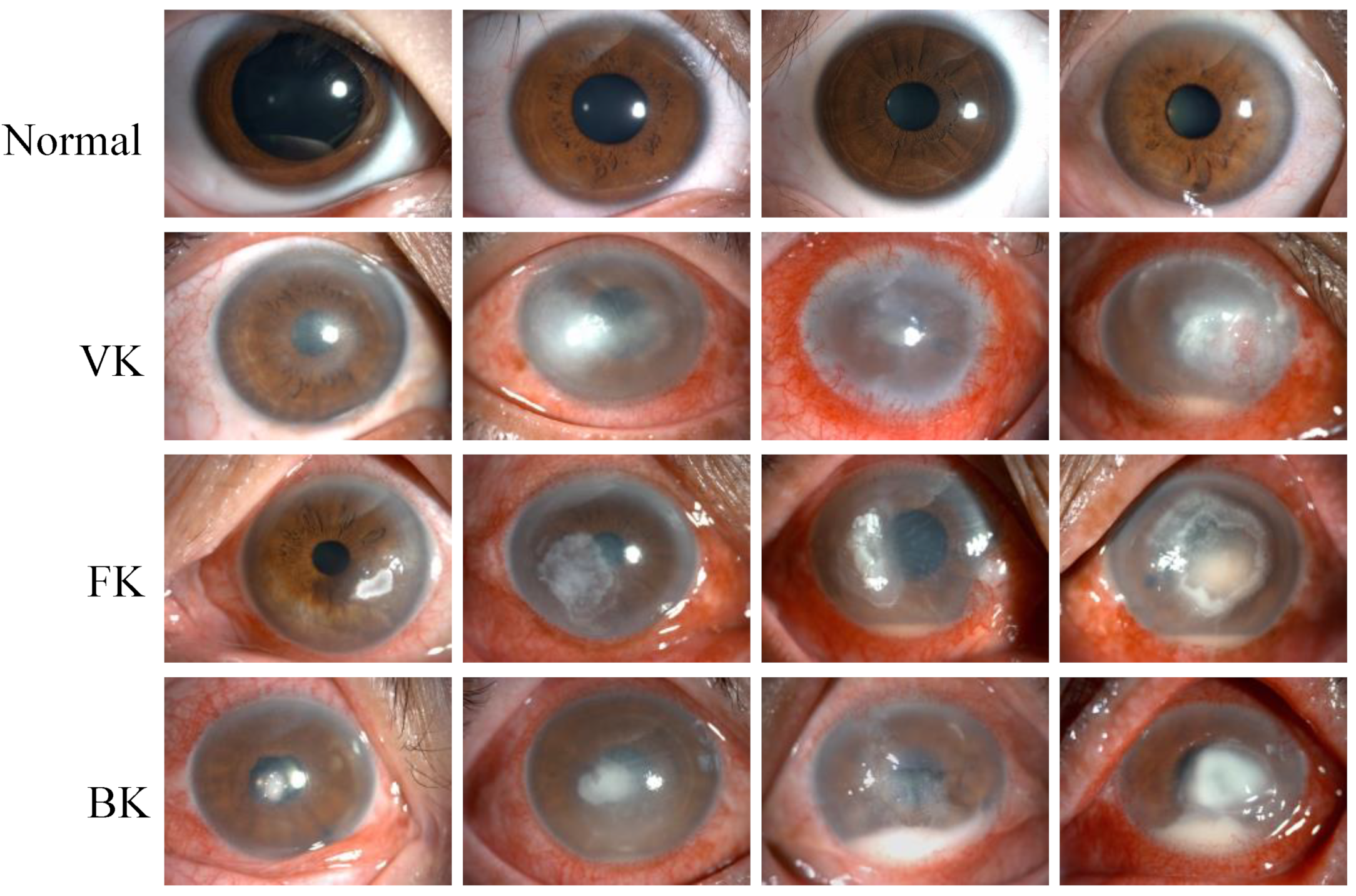

2.1. Image Dataset

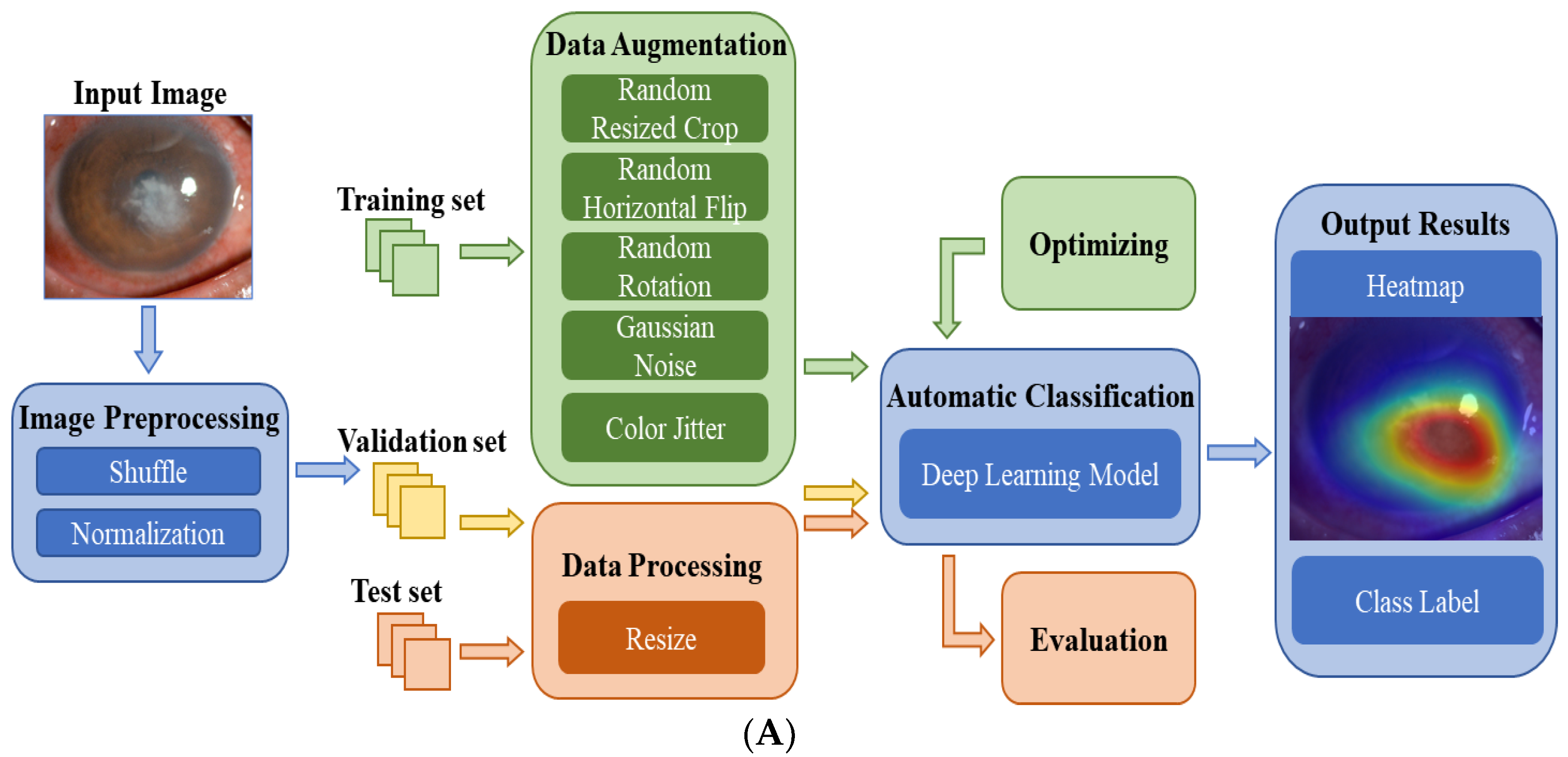

2.2. Data Preparation

2.3. Deep Learning Model

2.4. Performance Assessment

3. Results

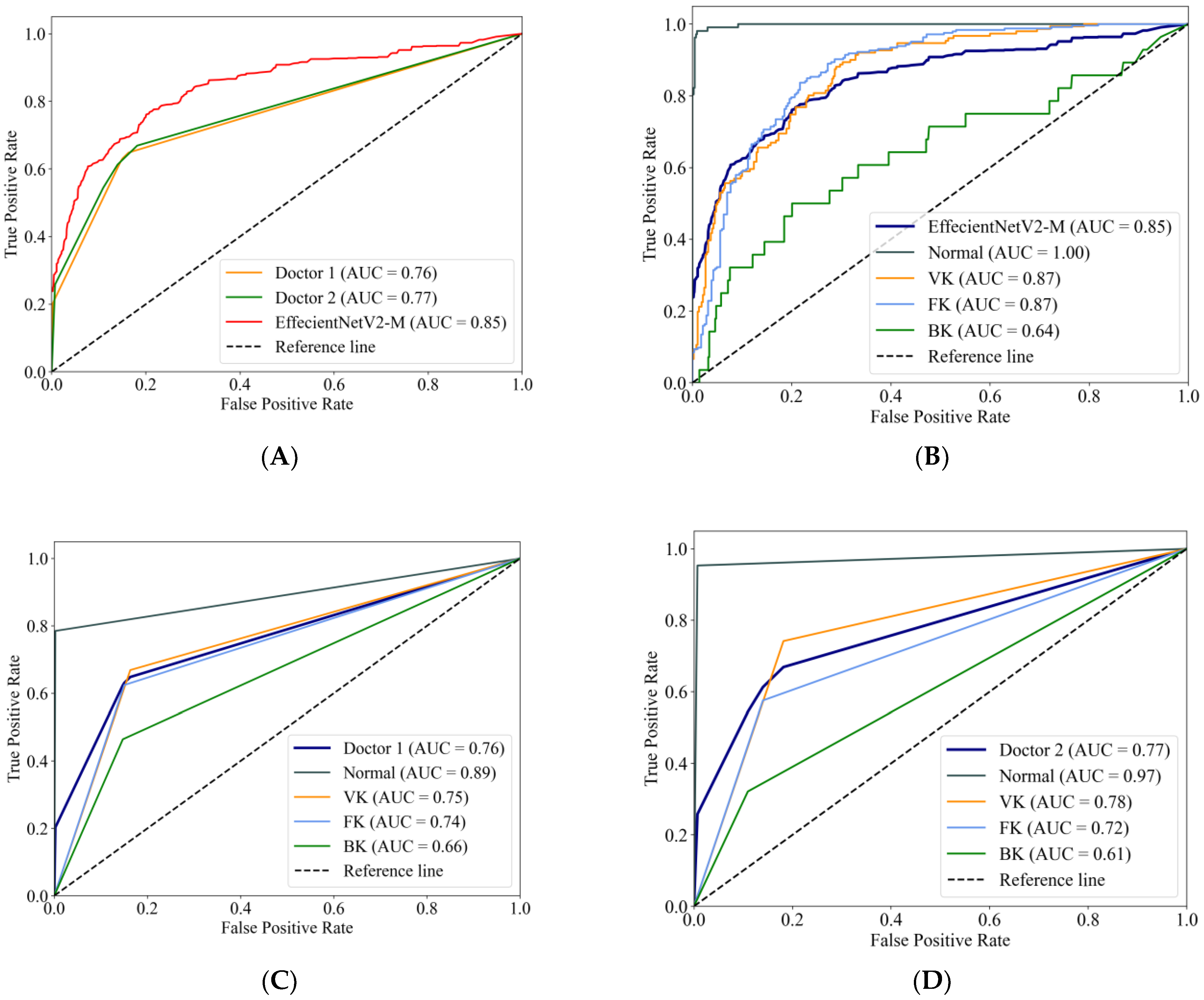

3.1. Performance of the DL Models

3.2. Comparison with the Ophthalmologists

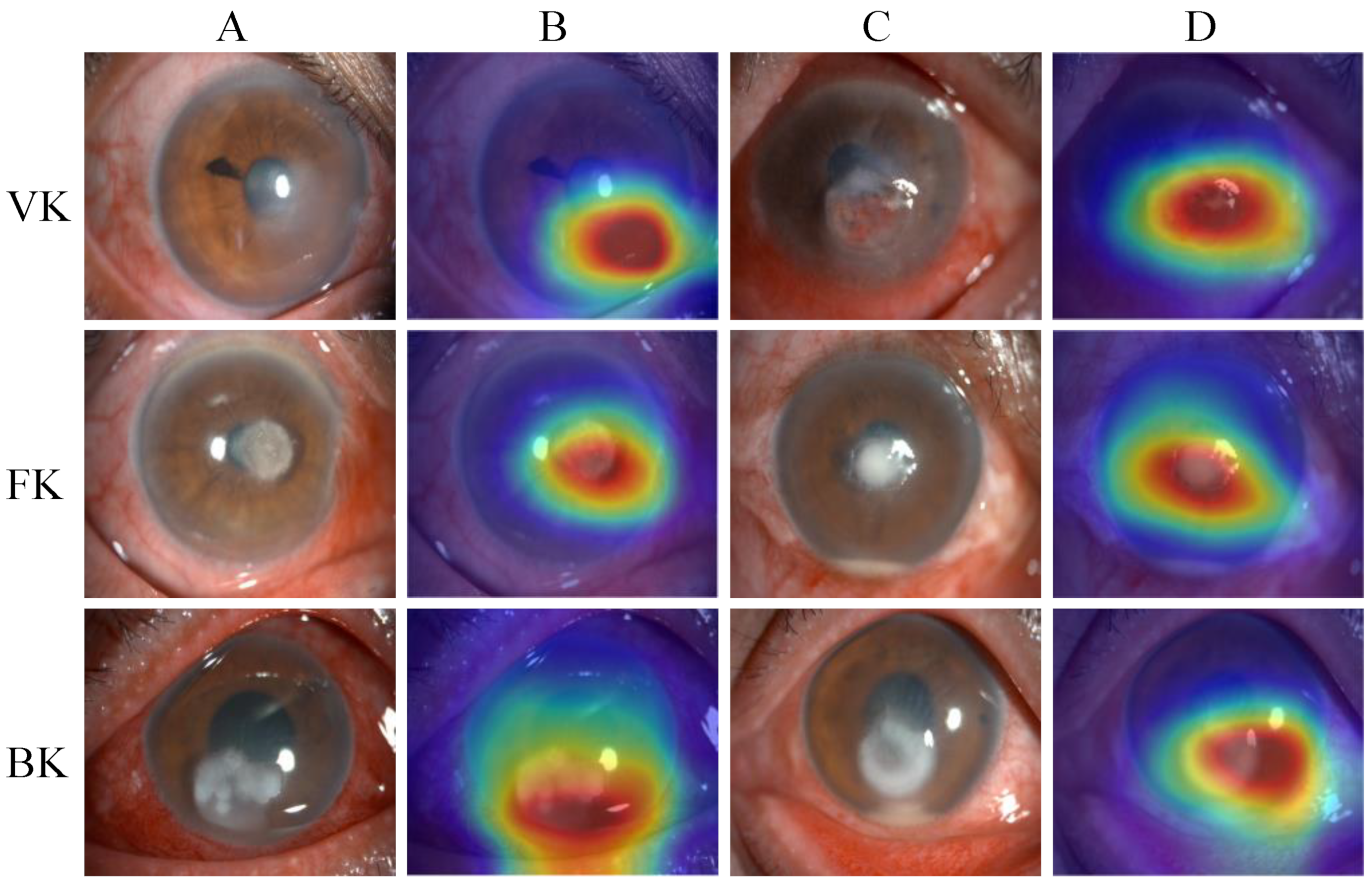

3.3. Heatmaps

3.4. Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Flaxman, S.R.; Bourne, R.R.A.; Resnikoff, S.; Ackland, P.; Braithwaite, T.; Cicinelli, M.V.; Das, A.; Jonas, J.B.; Keeffe, J.; Kempen, J.H.; et al. Global causes of blindness and distance vision impairment 1990–2020: A systematic review and meta-analysis. Lancet Glob. Health 2017, 5, E1221–E1234.

- Ung, L.; Bispo, P.J.M.; Shanbhag, S.S.; Gilmore, M.S.; Chodosh, J. The persistent dilemma of microbial keratitis: Global burden, diagnosis and antimicrobial resistance. Surv. Ophthalmol. 2019, 64, 255–271.

- Gopinathan, U.; Garg, P.; Fernandes, M.; Sharma, S.; Athmanathan, S.; Rao, G.N. The epidemiological features and laboratory results of fungal keratitis: A 10-year review at a referral eye care center in South India. Cornea 2002, 21, 555–559.

- Clemens, L.E.; Jaynes, J.; Lim, E.; Kolar, S.S.; Reins, R.Y.; Baidouri, H.; Hanlon, S.; McDermott, A.M.; Woodburn, K.W. Designed Host Defense Peptides for the Treatment of Bacterial Keratitis. Investig. Ophthalmol. Vis. Sci. 2017, 58, 6273–6281.

- Leck, A.; Burton, M. Distinguishing fungal and bacterial keratitis on clinical signs. Community Eye Health 2015, 28, 6–7.

- Mader, T.H.; Stulting, R.D. Viral keratitis. Infect. Dis. Clin. N. Am. 1992, 6, 831–849.

- Watson, S.; Cabrera-Aguas, M.; Khoo, P. Common eye infections. Aust. Prescr. 2018, 41, 67–72.

- Green, M.; Apel, A.; Stapleton, F. Risk factors and causative organisms in microbial keratitis. Cornea 2008, 27, 22–27.

- Shalchi, Z.; Gurbaxani, A.; Baker, M.; Nash, J. Antibiotic Resistance in Microbial Keratitis: Ten-Year Experience of Corneal Scrapes in the United Kingdom. Ophthalmology 2011, 118, 2161–2165.

- Brunner, M.; Somerville, T.; Corless, C.E.; Myneni, J.; Rajhbeharrysingh, T.; Tiew, S.; Neal, T.; Kaye, S.B. Use of a corneal impression membrane and PCR for the detection of herpes simplex virus type-1. J. Med. Microbiol. 2019, 68, 1324–1329.

- Geetha, D.K.; Sivaraman, B.; Rammohan, R.; Venkatapathy, N.; Ramatchandirane, P.S. A SYBR Green based multiplex Real-Time PCR assay for rapid detection and differentiation of ocular bacterial pathogens. J. Microbiol. Methods 2020, 171, 105875.

- Kulandai, L.T.; Lakshmipathy, D.; Sargunam, J. Novel Duplex Polymerase Chain Reaction for the Rapid Detection of Pythium insidiosum Directly from Corneal Specimens of Patients with Ocular Pythiosis. Cornea 2020, 39, 775–778.

- Wang, Y.E.; Tepelus, T.C.; Vickers, L.A.; Baghdasaryan, E.; Gui, W.; Huang, P.; Irvine, J.A.; Sadda, S.; Hsu, H.Y.; Lee, O.L. Role of in vivo confocal microscopy in the diagnosis of infectious keratitis. Int. Ophthalmol. 2019, 39, 2865–2874.

- Dalmon, C.; Porco, T.C.; Lietman, T.M.; Prajna, N.V.; Prajna, L.; Das, M.R.; Kumar, J.A.; Mascarenhas, J.; Margolis, T.P.; Whitcher, J.P.; et al. The Clinical Differentiation of Bacterial and Fungal Keratitis: A Photographic Survey. Investig. Ophthalmol. Vis. Sci. 2012, 53, 1787–1791.

- Chan, H.P.; Samala, R.K.; Hadjiiski, L.M.; Zhou, C. Deep Learning in Medical Image Analysis. In Deep Learning in Medical Image Analysis: Challenges and Applications. Advances in Experimental Medicine and Biology; Lee, G., Fujita, H., Eds.; Springer: Cham, Switzerland, 2020; Volume 1213, pp. 3–21.

- Marques, G.; Agarwal, D.; de la Torre Díez, I. Automated medical diagnosis of COVID-19 through EfficientNet convolutional neural network. Appl. Soft. Comput. 2020, 96, 106691.

- Resnikoff, S.; Felch, W.; Gauthier, T.M.; Spivey, B. The number of ophthalmologists in practice and training worldwide: A growing gap despite more than 200,000 practitioners. Br. J. Ophthalmol. 2012, 96, 783–787.

- Gupta, N.; Tandon, R.; Gupta, S.K.; Sreenivas, V.; Vashist, P. Burden of corneal blindness in India. Indian J. Commun. Med. 2013, 38, 198–206.

- Ting, D.S.W.; Cheung, C.Y.L.; Lim, G.; Tan, G.S.W.; Quang, N.D.; Gan, A.; Hamzah, H.; Garcia-Franco, R.; Yeo, I.Y.S.; Lee, S.Y.; et al. Development and Validation of a Deep Learning System for Diabetic Retinopathy and Related Eye Diseases Using Retinal Images from Multiethnic Populations with Diabetes. J. Am. Med. Assoc. 2017, 318, 2211–2223.

- Hood, D.C.; De Moraes, C.G. Efficacy of a Deep Learning System for Detecting Glaucomatous Optic Neuropathy Based on Color Fundus Photographs. Ophthalmology 2018, 125, 1207–1208.

- Li, Z.W.; Guo, C.; Nie, D.Y.; Lin, D.R.; Zhu, Y.; Chen, C.; Wu, X.H.; Xu, F.B.; Jin, C.J.; Zhang, X.Y.; et al. Deep learning for detecting retinal detachment and discerning macular status using ultra-widefield fundus images. Commun. Biol. 2020, 3, 15.

- Yan, Y.; Jin, K.; Gao, Z.; Huang, X.; Wang, F.; Wang, Y.; Ye, J. Attention-based deep learning system for automated diagnoses of age-related macular degeneration in optical coherence tomography images. Med. Phys. 2021, 48, 4926–4934.

- Gao, Z.; Jin, K.; Yan, Y.; Liu, X.; Shi, Y.; Ge, Y.; Pan, X.; Lu, Y.; Wu, J.; Wang, Y.; et al. End-to-end diabetic retinopathy grading based on fundus fluorescein angiography images using deep learning. Graefes Arch. Clin. Exp. Ophthalmol. 2022, 260, 1663–1673.

- Cao, J.; You, K.; Jin, K.; Lou, L.; Wang, Y.; Chen, M.; Pan, X.; Shao, J.; Su, Z.; Wu, J.; et al. Prediction of response to anti-vascular endothelial growth factor treatment in diabetic macular oedema using an optical coherence tomography-based machine learning method. Acta Ophthalmol. 2021, 99, e19–e27.

- Gu, H.; Guo, Y.W.; Gu, L.; Wei, A.J.; Xie, S.R.; Ye, Z.Q.; Xu, J.J.; Zhou, X.T.; Lu, Y.; Liu, X.Q.; et al. Deep learning for identifying corneal diseases from ocular surface slit-lamp photographs. Sci. Rep. 2020, 10, 17851.

- Tran, T.; Pham, T.; Carneiro, G.; Palmer, L.; Reid, I. A Bayesian Data Augmentation Approach for Learning Deep Models. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Liu, W.; Jia, Y.Q.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. AAAI Conf. Artif. Intell. 2017, 31.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Tan, M.X.; Le, Q.V. EfficientNetV2: Smaller Models and Faster Training. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 7102–7110.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

- Kuo, M.T.; Hsu, B.W.Y.; Yin, Y.K.; Fang, P.C.; Lai, H.Y.; Chen, A.; Yu, M.S.; Tseng, V.S. A deep learning approach in diagnosing fungal keratitis based on corneal photographs. Sci. Rep. 2020, 10, 14424.

- Ghosh, A.K.; Thammasudjarit, R.; Jongkhajornpong, P.; Attia, J.; Thakkinstian, A. Deep Learning for Discrimination Between Fungal Keratitis and Bacterial Keratitis: DeepKeratitis. Cornea 2022, 41, 616–622.

- Li, Z.W.; Jiang, J.W.; Chen, K.; Chen, Q.Q.; Zheng, Q.X.; Liu, X.T.; Weng, H.F.; Wu, S.J.; Chen, W. Preventing corneal blindness caused by keratitis using artificial intelligence. Nat. Commun. 2021, 12, 3738.

- Xu, Y.S.; Kong, M.; Xie, W.J.; Duan, R.P.; Fang, Z.Q.; Lin, Y.X.; Zhu, Q.; Tang, S.L.; Wu, F.; Yao, Y.F. Deep Sequential Feature Learning in Clinical Image Classification of Infectious Keratitis. Engineering 2021, 7, 1002–1010.

- Redd, T.K.; Prajna, N.V.; Srinivasan, M.; Lalitha, P.; Krishnan, T.; Rajaraman, R.; Venugopal, A.; Lujan, B.; Acharya, N.; Seitzman, G.D.; et al. Expert Performance in Visual Differentiation of Bacterial and Fungal Keratitis. Ophthalmology 2022, 129, 227–230.

- Yahya, G.; Ebada, A.; Khalaf, E.M.; Mansour, B.; Nouh, N.A.; Mosbah, R.A.; Saber, S.; Moustafa, M.; Negm, S.; El-Sokkary, M.M.A.; et al. Soil-Associated Bacillus Species: A Reservoir of Bioactive Compounds with Potential Therapeutic Activity against Human Pathogens. Microorganisms 2021, 9, 1131.

- El-Telbany, M.; Mohamed, A.A.; Yahya, G.; Abdelghafar, A.; Abdel-Halim, M.S.; Saber, S.; Alfaleh, M.A.; Mohamed, A.H.; Abdelrahman, F.; Fathey, H.A.; et al. Combination of Meropenem and Zinc Oxide Nanoparticles; Antimicrobial Synergism, Exaggerated Antibiofilm Activity, and Efficient Therapeutic Strategy against Bacterial Keratitis. Antibiotics 2022, 11, 1374.

| Dataset (n) | Normal | VK | FK | BK | Total |
|---|---|---|---|---|---|
| Training | 425 | 583 | 753 | 164 | 1925 |
| Validation | 60 | 79 | 127 | 35 | 301 |
| Test | 107 | 151 | 245 | 28 | 531 |
| Total | 592 | 813 | 1125 | 227 | 2757 |

VK, viral keratitis; FK, fungal keratitis; BK, bacterial keratitis. Values are numbers of slit lamp images.
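The split and class proportions implied by the dataset table can be recomputed directly from its counts. The short Python sketch below copies the counts verbatim; the function names are illustrative, and the percentages are derived here rather than reported by the authors.

```python
# Per-split, per-class image counts copied from the dataset table above.
CLASSES = ["Normal", "VK", "FK", "BK"]
SPLITS = {
    "Training": [425, 583, 753, 164],
    "Validation": [60, 79, 127, 35],
    "Test": [107, 151, 245, 28],
}


def split_fractions(splits):
    """Return each split's share of all images."""
    grand_total = sum(sum(counts) for counts in splits.values())
    return {name: sum(counts) / grand_total for name, counts in splits.items()}


def class_fractions(splits, classes):
    """Return each class's share of all images, pooled over the splits."""
    # zip(*...) turns the per-split rows into per-class columns.
    per_class = [sum(column) for column in zip(*splits.values())]
    grand_total = sum(per_class)
    return {c: n / grand_total for c, n in zip(classes, per_class)}


if __name__ == "__main__":
    for name, frac in split_fractions(SPLITS).items():
        print(f"{name}: {frac:.1%}")
    for cls, frac in class_fractions(SPLITS, CLASSES).items():
        print(f"{cls}: {frac:.1%}")
```

On these counts it gives roughly a 69.8% / 10.9% / 19.3% training/validation/test split, and shows that BK is the smallest class (about 8.2% of all images) while FK is the largest (about 40.8%).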

| Model | Accuracy | Recall | Specificity |
|---|---|---|---|
| ResNet34 | 0.635 | 0.554 | 0.861 |
| DenseNet121 | 0.637 | 0.637 | 0.875 |
| ViT-Base | 0.697 | 0.598 | 0.888 |
| VGG16 | 0.708 | 0.583 | 0.890 |
| InceptionV4 | 0.716 | 0.640 | 0.897 |
| EfficientNetV2-M | 0.735 | 0.680 | 0.904 |
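The performance table does not state how recall and specificity were averaged over the four classes. A common convention, assumed here, is to score each class one-vs-rest and take the unweighted (macro) mean, while accuracy is the fraction of all images classified correctly. The sketch below implements that assumption in plain Python; the function name `multiclass_metrics` and the toy confusion matrix are hypothetical and are not data from the study.

```python
from typing import List, Tuple

Matrix = List[List[int]]  # rows = true class, columns = predicted class


def multiclass_metrics(cm: Matrix) -> Tuple[float, float, float]:
    """Overall accuracy plus macro-averaged one-vs-rest recall and specificity.

    Assumption: per-class scores are averaged without class weights; a class
    with no positives (or no negatives) contributes 0.0 to the mean.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(k))
    recalls, specificities = [], []
    for c in range(k):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # true class c, predicted otherwise
        fp = sum(cm[r][c] for r in range(k)) - tp  # predicted c, true class otherwise
        tn = total - tp - fn - fp                  # everything not involving class c
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)
        specificities.append(tn / (tn + fp) if tn + fp else 0.0)
    return correct / total, sum(recalls) / k, sum(specificities) / k


# Hypothetical 4-class confusion matrix (Normal, VK, FK, BK), for illustration only.
TOY_CM: Matrix = [
    [8, 1, 1, 0],
    [0, 9, 1, 0],
    [1, 1, 7, 1],
    [0, 0, 2, 8],
]

if __name__ == "__main__":
    accuracy, recall, specificity = multiclass_metrics(TOY_CM)
    print(f"accuracy={accuracy:.3f} recall={recall:.3f} specificity={specificity:.3f}")
```

Because each one-vs-rest problem pools three classes on the negative side, true negatives dominate and specificity tends to sit well above recall, the same pattern seen for every model in the table.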

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, S.; Sun, Y.; Li, J.; Xu, P.; Xu, M.; Zhou, Y.; Wang, Y.; Wang, S.; Ye, J. Automatic Diagnosis of Infectious Keratitis Based on Slit Lamp Images Analysis. J. Pers. Med. 2023, 13, 519. https://doi.org/10.3390/jpm13030519
