Privacy and Trust in eHealth: A Fuzzy Linguistic Solution for Calculating the Merit of Service

Abstract

1. Introduction

2. Definitions

- Attitude is an opinion based on beliefs. It represents our feelings about something and the way a person expresses beliefs and values [10];

- Belief is the mental acceptance that something exists or is true without proof. Beliefs can be rational, irrational or dogmatic [14];

- eHealth is the transfer and exchange of health information between health service consumers (subject of care), health professionals, researchers and stakeholders using information and communication networks, and the delivery of digital health services [15];

- Harm is a potential direct or indirect damage, injury or negative impact of a real or potential economic, physical or social (e.g., reputational) action [11];

- Perception refers to the way a person notices something using his or her senses, or the way a person interprets, understands or thinks about something. It is a subjective process that influences how we process, remember, interpret, understand and act on reality [16]. Perception occurs in the mind and, therefore, the perceptions of different people can vary.

3. Methods

4. Related Research

5. Solution to Calculate the Merit of eHealth Services

5.1. Conceptual Model for the eHealth Ecosystem

5.2. Privacy and Trust Challenges in eHealth Ecosystems

5.3. Privacy and Trust Models for eHealth

5.4. A Method for Calculating the Value of Merit of eHealth Services

5.5. Information Sources and Quantification of Privacy and Trust

5.6. Case Study

6. Discussions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Privacy Needs/Questions | Meaning in a Privacy Policy Document | Requirements Expressed by Law (General Data Protection Regulation, EU GDPR) |
|---|---|---|
| PHI used only for purposes defined by the service provider | How and why a service provider collects and uses PHI | Limited to what is necessary in relation to the purpose. Explicit purpose |
| PHI not disclosed to third parties | What PHI is shared with third parties, and how | Personal policies. Transparency |
| Regulatory compliance | Level of regulatory compliance | Lawful processing. Demonstrate regulatory compliance |
| What is the content of a personal privacy policy? | Edit and deletion | Erasure, right to be forgotten, right to object to processing, explicit purpose |
| What are the service provider’s characteristics? | Type of organisation, address | |
| Encryption | Communication privacy | Encryption |
| How PHI is stored for future use | Data retention (stored as long as needed to perform the requested service/indefinitely) | Retention no longer than necessary for purpose |
| User access to audit trail | What data is shared/transparency | Lawful processing and transparency |
| User access to own PHI | User access, rights to view records | Access to collected PHI. Right to erasure and right to object to processing |
| How personal privacy needs are supported | User choice/control (consent, opt in/opt out, purpose) | Accept personal privacy policies/explicit consent |
| Does PHI belong to the customer? | Ownership of data | The individual owns the rights to their data |
| Does a registered office and address exist? | Contact information | |
| Privacy guarantees | Third-party seals or certificates | |
| Transparency | Transparency | Right to be informed |

Appendix B. Trust Attributes for eHealth

- General trust of the health website;

- Personality;

- Privacy concerns;

- Subjective belief of suffering a loss;

- Beliefs in ability, integrity and benevolence.

- Website design and presence, website design for easy access and enjoyment;

- System usability, perceived as easy to use;

- Technical functionality;

- Website quality (being able to fulfil the seekers’ needs);

- Perceived information quality and usefulness;

- Quality (familiarity) that allows better understanding;

- Simple language used;

- Professional appearance of the health website;

- Integrity of the health portal policies with respect to privacy, security, editorial, and advertising.

- Credibility and impartiality;

- Reputation;

- Ability to perform promises made;

- Accountability of misuse;

- Familiarity;

- Branding, brand name and ownership;

- System quality (functionality flexibility), quality of systems, stability;

- Professional expertise;

- Similarity with other systems, ability, benevolence, integrity of the health portal with the same brand;

- Transparency, oversight;

- Privacy, security;

- Privacy and security policies, strategies implemented;

- Regulatory compliance.

- Quality of links;

- Information quality and content (accuracy of content, completeness, relevance, understandable, professional, unbiased, reliable, adequacy and up-to-date), source expertise, scientific references;

- Information source credibility, relevant and good information, usefulness, accuracy, professional appearance of a health website;

- Information credibility;

- Information impartiality.

- Personal interactions;

- Personal experiences;

- Past (prior) experiences;

- Presence of third-party seals (e.g., HONcode, Doctor Trusted™, TrustE).

References

- Kantsperger, R.; Kunz, W.H. Consumer trust in service companies: A multiple mediating analysis. Manag. Serv. Qual. 2010, 20, 4–25. [Google Scholar] [CrossRef]

- Gupta, P.; Dubey, A. E-Commerce-Study of Privacy, Trust and Security from Consumer’s Perspective. Int. J. Comput. Sci. Mob. Comput. 2016, 5, 224–232. [Google Scholar]

- Tan, Y.-H.; Thoen, W. Toward a Generic Model of Trust for Electronic Commerce. Int. J. Electron. Commer. 2000, 5, 61–74. [Google Scholar]

- Tavani, H.T.; Moor, J.H. Privacy Protection, Control of Information, and Privacy-Enhancing Technologies. ACM SIGCAS Comput. Soc. 2001, 31, 6–11. [Google Scholar] [CrossRef]

- Fairfield, J.A.T.; Engel, C. Privacy as a public good. Duke Law J. 2015, 65, 3. Available online: https://scholarship.law.duke.edu/cgi/viewcontent.cgi?article=3824&context=dlj (accessed on 11 April 2022).

- Li, J. Privacy policies for health social networking sites. J. Am. Med. Inform. Assoc. 2013, 20, 704–707. [Google Scholar] [CrossRef]

- Corbitt, B.J.; Thanasankit, T.; Yi, H. Trust and e-commerce: A study of consumer perceptions. Electron. Commer. Res. Appl. 2003, 2, 203–215. [Google Scholar] [CrossRef]

- Gerber, N.; Reinheimer, B.; Volkamer, M. Investigating People’s Privacy Risk Perception. Proc. Priv. Enhancing Technol. 2019, 3, 267–288. [Google Scholar] [CrossRef]

- Yan, Z.; Holtmanns, S. Trust Modeling and Management: From Social Trust to Digital Trust. In Computer Security, Privacy and Politics; Current Issues, Challenges and Solutions; IGI Global: Hershey, PA, USA, 2007. [Google Scholar] [CrossRef]

- Weitzner, D.J.; Abelson, H.; Berners-Lee, T.; Feigenbaum, J.; Hendler, J.; Sussman, G.J. Information Accountability; Computer Science and Artificial Intelligence Laboratory Technical Report, MIT-CSAIL-TR-2007-034; Massachusetts Institute of Technology: Cambridge, MA, USA, 2007. [Google Scholar]

- Richards, N.; Hartzog, W. Taking Trust Seriously in Privacy Law. Stanf. Tech. Law Rev. 2016, 19, 431. [Google Scholar] [CrossRef]

- Pravettoni, G.; Triberti, S. P5 eHealth: An Agenda for the Health Technologies of the Future; Springer Open: Berlin/Heidelberg, Germany, 2020. [Google Scholar] [CrossRef]

- Ruotsalainen, P.; Blobel, B. Health Information Systems in the Digital Health Ecosystem—Problems and Solutions for Ethics, Trust and Privacy. Int. J. Environ. Res. Public Health 2020, 17, 3006. [Google Scholar] [CrossRef]

- Kini, A.; Choobineh, J. Trust in Electronic Commerce: Definition and Theoretical Considerations. In Proceedings of the Thirty-First Hawaii International Conference on System Sciences, Kohala Coast, HI, USA, 9 January 1998; Volume 4, pp. 51–61. [Google Scholar] [CrossRef]

- World Health Organization. Available online: https://who.int/trade/glossary/story021/en/ (accessed on 11 April 2022).

- LEXICO. Available online: https://www.lexico.com/definition/perception (accessed on 11 April 2022).

- Jøsang, A.; Ismail, R.; Boyd, C. A Survey of Trust and Reputation Systems for Online Service Provision. Decis. Support Syst. 2007, 43, 618–644. [Google Scholar] [CrossRef]

- Liu, X.; Datta, A.; Lim, E.-P. Computational Trust Models and Machine Learning; Chapman & Hall/CRC Machine Learning & Pattern Recognition Series, 2015 Taylor and Francis Group; CRC Press: New York, NY, USA, 2015; ISBN 978-1-4822-2666-9. [Google Scholar]

- Bhatia, J.; Breaux, T.D. Empirical Measurement of Perceived Privacy Risk. ACM Trans. Comput.-Hum. Interact. 2018, 25, 1–47. [Google Scholar] [CrossRef]

- Chang, L.Y.; Lee, H. Understanding perceived privacy: A privacy boundary management model. In Proceedings of the 19th Pacific Asia Conference on Information Systems (PACIS 2015), Singapore, 5–9 July 2015. [Google Scholar]

- Solove, D.J. A Taxonomy of Privacy. Univ. Pa. Law Rev. 2006, 154, 2. [Google Scholar] [CrossRef]

- Smith, H.J.; Dinev, T.; Xu, H. Information privacy research: An interdisciplinary review. MIS Q. 2011, 35, 989–1015. [Google Scholar] [CrossRef]

- Margulis, S.T. Privacy as a Social Issue and Behavioral Concept. J. Soc. Issues 2003, 59, 243–261. [Google Scholar] [CrossRef]

- Kasper, D.V.S. Privacy as a Social Good. Soc. Thought Res. 2007, 28, 165–189. [Google Scholar] [CrossRef]

- Lilien, L.; Bhargava, B. Trading Privacy for Trust in Online Interactions; Center for Education and Research in Information Assurance and Security (CERIAS), Purdue University: West Lafayette, IN, USA, 2009. [Google Scholar] [CrossRef]

- Kehr, F.; Kowatsch, T.; Wentzel, D.; Fleisch, E. Blissfully Ignorant: The Effects of General Privacy Concerns, General Institutional Trust, and Affect in the Privacy Calculus. Inf. Syst. J. 2015, 25, 607–635. [Google Scholar] [CrossRef]

- Zwick, D. Models of Privacy in the Digital Age: Implications for Marketing and E-Commerce; University of Rhode Island: Kingston, RI, USA, 1999; Available online: https://www.researchgate.net/profile/Nikhilesh-Dholakia/publication/236784823_Models_of_privacy_in_the_digital_age_Implications_for_marketing_and_e-commerce/links/0a85e5348ac5589862000000/Models-of-privacy-in-the-digital-age-Implications-for-marketing-and-e-commerce.pdf?origin=publication_detail (accessed on 11 April 2022).

- Ritter, J.; Mayer, A. Regulating Data as Property: A New Construct for Moving Forward. Duke Law Technol. Rev. 2018, 16, 220–277. Available online: https://scholarship.law.duke.edu/dltr/vol16/iss1/7 (accessed on 11 April 2022).

- Goodwin, C. Privacy: Recognition of consumer right. J. Public Policy Mark. 1991, 10, 149–166. [Google Scholar] [CrossRef]

- Dinev, T.; Xu, H.; Smith, J.H.; Hart, P. Information privacy and correlates: An empirical attempt to bridge and distinguish privacy-related concepts. Eur. J. Inf. Syst. 2013, 22, 295–316. [Google Scholar] [CrossRef]

- Fernández-Alemán, J.L.; Señor, I.C.; Pedro Lozoya, Á.O.; Toval, A. Security and privacy in electronic health records. J. Biomed. Inform. 2013, 46, 541–562. [Google Scholar] [CrossRef] [PubMed]

- Vega, L.C.; Montague, E.; DeHart, T. Trust between patients and health websites: A review of the literature and derived outcomes from empirical studies. Health Technol. 2011, 1, 71–80. [Google Scholar] [CrossRef]

- Li, Y. A model of individual multi-level information privacy beliefs. Electron. Commer. Res. Appl. 2014, 13, 32–44. [Google Scholar] [CrossRef]

- Kruthoff, S. Privacy Calculus in the Context of the General Data Protection Regulation and Healthcare: A Quantitative Study. Bachelor’s Thesis, University of Twente, Enschede, The Netherlands, 2018. [Google Scholar]

- Mitchell, V.-M. Consumer perceived risk: Conceptualizations and models. Eur. J. Mark. 1999, 33, 163–195. [Google Scholar] [CrossRef]

- Chen, C.C.; Dhillon, G. Interpreting Dimensions of Consumer Trust in E-Commerce. Inf. Technol. Manag. 2003, 4, 303–318. [Google Scholar] [CrossRef]

- Schoorman, F.D.; Mayer, R.C.; Davis, J.H. An integrative model of organizational trust: Past, present, and future. Acad. Manag. Rev. 2007, 32, 344–354. [Google Scholar] [CrossRef]

- Pennanen, K. The Initial Stages of Consumer Trust Building in E-Commerce; University of Vaasa: Vaasa, Finland, 2009; ISBN 978-952-476-257-1. Available online: https://www.uwasa.fi/materiaali/pdf/isbn_978-952-476-257-1.pdf (accessed on 11 April 2022).

- Beldad, A.; de Jong, M.; Steehouder, M. How shall I trust the faceless and the intangible? A literature review on the antecedents of online trust. Comput. Hum. Behav. 2010, 26, 857–869. [Google Scholar] [CrossRef]

- Wang, S.W.; Ngamsiriudom, W.; Hsieh, C.-H. Trust disposition, trust antecedents, trust, and behavioral intention. Serv. Ind. J. 2015, 35, 555–572. [Google Scholar] [CrossRef]

- Grabner-Kräuter, S.; Kaluscha, E.A. Consumer Trust in Electronic Commerce: Conceptualization and Classification of Trust Building Measures; Chapter in ‘Trust and New Technologies’; Kautonen, T., Karjaluoto, H., Eds.; Edward Elgar Publishing: Cheltenham, UK, 2008; pp. 3–22. [Google Scholar]

- Castelfranchi, C. Trust Mediation in Knowledge Management and Sharing. In Lecture Notes in Computer Science; Trust Management. iTrust 2004; Jensen, C., Poslad, S., Dimitrakos, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; Volume 2995. [Google Scholar] [CrossRef]

- Wiedmann, K.-P.; Hennigs, N.; Varelmann, D.; Reeh, M.O. Determinants of Consumers’ Perceived Trust in IT-Ecosystems. J. Theor. Appl. Electron. Commer. Res. 2010, 5, 137–154. [Google Scholar] [CrossRef]

- Mayer, R.C.; Davis, J.H.; Schoorman, F.D. An Integrative Model of Organizational Trust. Acad. Manag. Rev. 1995, 20, 709–734. Available online: http://www.jstor.org/stable/258792 (accessed on 11 April 2022). [CrossRef]

- Medic, A. P2P Appliance Calculation Method for Trust between Nodes within a P2P Network. Int. J. Comput. Sci. Issues 2012, 9, 125–130. [Google Scholar]

- Sabater, J.; Sierra, C. Review on computational trust and reputation models. Artif. Intell. Rev. 2005, 24, 33–60. [Google Scholar] [CrossRef]

- Braga, D.D.S.; Niemann, M.; Hellingrath, B.; Neto, F.B.D.L. Survey on Computational Trust and Reputation Models. ACM Comput. Surv. 2019, 51, 1–40. [Google Scholar] [CrossRef]

- Liu, X.; Datta, A.; Rzadca, K. Stereo Trust: A Group Based Personalized Trust Model. In Proceedings of the 18th ACM Conference on Information and Knowledge Management (CIKM’09), Hong Kong, China, 2–6 November 2009. ACM978-1-60558-512-3/09/1. [Google Scholar] [CrossRef]

- Kim, Y. Trust in health information websites: A systematic literature review on the antecedents of trust. Health Inform. J. 2016, 22, 355–369. [Google Scholar] [CrossRef]

- Vu, L.-H.; Aberer, K. Effective Usage of Computational Trust Models in Rational Environments. ACM Trans. Auton. Adapt. Syst. 2011, 6, 1–25. [Google Scholar] [CrossRef]

- Nafi, K.W.; Kar, T.S.; Hossain Md, A.; Hashem, M.M.A. A Fuzzy logic based Certain Trust Model for e-commerce. In Proceedings of the 2013 International Conference on Informatics, Electronics and Vision (ICIEV), Dhaka, Bangladesh, 17–18 May 2013; pp. 1–6. [Google Scholar] [CrossRef]

- Jøsang, A. Computational Trust. In Subjective Logic. Artificial Intelligence: Foundations, Theory, and Algorithms; Springer: Cham, Switzerland, 2016. [Google Scholar] [CrossRef]

- Ries, S. Certain Trust: A Model for Users and Agents. In Proceedings of the 2007 ACM Symposium on Applied Computing, Seoul, Korea, 11–15 March 2007. [Google Scholar]

- Skopik, F. Dynamic Trust in Mixed Service-Oriented Systems Models, Algorithms, and Applications. Ph.D. Thesis, Technischen Universität Wien, Fakultät für Informatik, Austria, 2010. [Google Scholar]

- Nefti, S.; Meziane, F.; Kasiran, K. A Fuzzy Trust Model for E-Commerce. In Proceedings of the Seventh IEEE International Conference on E-Commerce Technology (CEC’05), Munich, Germany, 19–22 July 2005. ISBN 0-7695-2277-7. [Google Scholar] [CrossRef]

- Truong, N.B.; Lee, G.M. A Reputation and Knowledge Based Trust Service Platform for Trustworthy Social Internet of Things. In Proceedings of the 19th International Conference on Innovations in Clouds, Internet and Networks (ICIN 2016), Paris, France, 1–3 March 2016. [Google Scholar]

- Nguyen, H.-T.; Md Dawal, S.Z.; Nukman, Y.; Aoyama, H.; Case, K. An Integrated Approach of Fuzzy Linguistic Preference Based AHP and Fuzzy COPRAS for Machine Tool Evaluation. PLoS ONE 2015, 10, e0133599. [Google Scholar] [CrossRef]

- Keshwani, D.R.; Jones, D.D.; Meyer, G.E.; Brand, R.M. Rule-based Mamdani-type fuzzy modeling of skin permeability. Appl. Soft Comput. 2008, 8, 285–294. [Google Scholar] [CrossRef]

- Afshari, A.R.; Nikolić, M.; Ćoćkalo, D. Applications of Fuzzy Decision Making for Personnel Selection Problem-A Review. J. Eng. Manag. Compet. (JEMC) 2014, 4, 68–77. [Google Scholar] [CrossRef]

- Mahalle, P.N.; Thakre, P.A.; Prasad, N.R.; Prasad, R. A fuzzy approach to trust based access control in internet of things. In Proceedings of the 3rd International Conference on Wireless Communication, Vehicular Technology, Information Theory and Aerospace & Electronic Systems Technology (Wireless ViTAE) 2013, Atlantic City, NJ, USA, 24–27 June 2013. [Google Scholar] [CrossRef]

- Lin, C.-T.; Chen, Y.-T. Bid/no-bid decision-making–A fuzzy linguistic approach. Int. J. Proj. Manag. 2004, 22, 585–593. [Google Scholar] [CrossRef]

- Herrera, F.; Herrera-Viedma, E. Linguistic decision analysis: Steps for solving decision problems under linguistic information. Fuzzy Sets Syst. 2000, 115, 67–82. [Google Scholar] [CrossRef]

- Mishaand, S.; Prakash, M. Study of fuzzy logic in medical data analytics, supporting medical diagnoses. Int. J. Pure Appl. Math. 2018, 119, 16321–16342. [Google Scholar]

- Gürsel, G. Healthcare, uncertainty, and fuzzy logic. Digit. Med. 2016, 2, 101–112. [Google Scholar] [CrossRef]

- de Medeiros, I.B.; Machado, M.A.S.; Damasceno, W.J.; Caldeira, A.M.; Santos, R.C.; da Silva Filho, J.B. A Fuzzy Inference System to Support Medical Diagnosis in Real Time. Procedia Comput. Sci. 2017, 122, 167–173. [Google Scholar] [CrossRef]

- Hameed, K.; Bajwa, I.S.; Ramzan, S.; Anwar, W.; Khan, A. An Intelligent IoT Based Healthcare System Using Fuzzy Neural Networks. Hindawi Sci. Program. 2020, 2020, 8836927. [Google Scholar] [CrossRef]

- Athanasiou, G.; Anastassopoulos, G.C.; Tiritidou, E.; Lymberopoulos, D. A Trust Model for Ubiquitous Healthcare Environment on the Basis of Adaptable Fuzzy-Probabilistic Inference System. IEEE J. Biomed. Health Inform. 2018, 22, 1288–1298. [Google Scholar] [CrossRef] [PubMed]

- Huckvale, K.; Prieto, J.T.; Tilney, M.; Benghozi, P.-J.; Car, J. Unaddressed privacy risks in accredited health and wellness apps: A cross-sectional systematic assessment. BMC Med. 2015, 13, 214. [Google Scholar] [CrossRef]

- Bellekens, X.; Seeam, P.; Franssen, Q.; Hamilton, A.; Nieradzinska, K.; Seeam, A. Pervasive eHealth services a security and privacy risk awareness survey. In Proceedings of the 2016 International Conference On Cyber Situational Awareness, Data Analytics And Assessment (CyberSA), London, UK, 13–14 June 2016; pp. 1–4. [Google Scholar] [CrossRef]

- Krasnova, H.; Günther, G.; Spiekermann, S.; Koroleva, K. Privacy concerns and identity in online social networks. IDIS 2009, 2, 39–63. [Google Scholar] [CrossRef]

- Abe, A.; Simpson, A. Formal Models for Privacy. In Proceedings of the Workshop Proceedings of the EDBT/ICDT 2016 Joint Conference, Bordeaux, France, 15 March 2016; ISSN 1613-0073. Available online: CEUR-WS.org (accessed on 11 April 2022).

- Baldwin, A.; Jensen, C.D. Enhanced Accountability for Electronic Processes; iTrust 2004, LNCS 2995; Jensen, C., Poslad, S., Dimitrakos, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 319–332. [Google Scholar]

- Culnan, M.J.; Bies, R.J. Consumer Privacy: Balancing Economic and Justice Considerations. J. Soc. Issues 2003, 59, 323–342. [Google Scholar] [CrossRef]

- Lanier, C.D., Jr.; Saini, A. Understanding Consumer Privacy: A Review and Future Directions. Acad. Mark. Sci. Rev. 2008, 12, 2. Available online: https://www.amsreview.org/articles/lanier02-200 (accessed on 11 April 2022).

- Wetzels, M.; Ayoola, I.; Bogers, S.; Peters, P.; Chen, W.; Feijs, L. Consume: A privacy-preserving authorization and authentication service for connecting with health and wellbeing APIs. Pervasive Mob. Comput. 2017, 43, 20–26. [Google Scholar] [CrossRef]

- Wanigasekera, C.P.; Feigenbaum, J. Trusted Systems Protecting Sensitive Information through Technological Solutions. Sensitive Information in a Wired World Course (CS457) Newhaven, Yale University, 12 December 2003, 16p. Available online: http://zoo.cs.yale.edu/classes/cs457/backup (accessed on 11 April 2022).

- Papageorgiou, A.; Strigkos, M.; Politou, E.; Alepis, E.; Solanas, A.; Patsakis, C. Security and Privacy Analysis of Mobile Health Applications: The Alarming State of Practice. IEEE Access 2018, 6, 9390–9403. [Google Scholar] [CrossRef]

- Kosa, T.A.; El-Khatib, K.; Marsh, S. Measuring Privacy. J. Internet Serv. Inf. Secur. 2011, 1, 60–73. [Google Scholar]

- Škrinjarić, B. Perceived quality of privacy protection regulations and online privacy concern. Econ. Res.-Ekon. Istraživanja 2019, 32, 982–1000. [Google Scholar] [CrossRef]

- McKnight, D.H. Trust in Information Technology. In The Blackwell Encyclopedia of Management; Management Information Systems; Davis, G.B., Ed.; Blackwell: Malden, MA, USA, 2005; Volume 7, pp. 329–333. [Google Scholar]

- McKnight, D.H.; Choudhury, V.; Kacmar, C. Developing and Validating Trust Measures for e-Commerce: An Integrative Typology. Inf. Syst. Res. 2002, 13, 334–359. [Google Scholar] [CrossRef]

- Coltman, T.; Devinney, T.M.; Midgley, D.F.; Venaik, S. Formative versus reflective measurement models: Two applications of formative measurement. J. Bus. Res. 2008, 61, 1250–1262. [Google Scholar] [CrossRef]

- Herrera, F.; Martinez, L.; Sanchez, P.J. Integration of Heterogeneous Information in Decision-Making Problems. Available online: https://sinbad2.ujaen.es/sites/default/files/publications/Herrera2000b_IPMU.pdf (accessed on 11 April 2022).

- Andayani, S.; Hartati, S.; Wardoyo, R.; Mardapi, D. Decision-Making Model for Student Assessment by Unifying Numerical and Linguistic Data. Int. J. Electr. Comput. Eng. 2017, 7, 363–373. [Google Scholar] [CrossRef]

- Delgado, M.; Herrera, F.; Herrera-Viedma, E.; Martínez, L. Combining numerical and linguistic information in group decision making. J. Inf. Sci. 1998, 107, 177–194. [Google Scholar] [CrossRef]

- Zhang, X.; Ma, W.; Chen, L. New Similarity of Triangular Fuzzy Numbers and its applications. Hindawi Sci. World J. 2014, 2014, 215047. [Google Scholar] [CrossRef]

- Luo, M.; Cheng, Z. The Distance between Fuzzy Sets in Fuzzy Metric Space. In Proceedings of the 12th International Conference on Fuzzy Systems and Knowledge Discovery 2015 (FSKD’15), Zhangjiajie, China, 15–17 August 2015; pp. 190–194. [Google Scholar] [CrossRef]

- Abdul-Rahman, A.; Hailes, S. Supporting Trust in Virtual Communities. In Proceedings of the 33rd Annual Hawaii International Conference on System Sciences, Maui, HI, USA, 4–7 January 2000; Volume 1, pp. 1–9. [Google Scholar] [CrossRef]

- Jøsang, A.; Presti, S.L. Analysing the Relationship between Risk and Trust. In Lecture Notes in Computer Science; Trust Management. iTrust 2004.; Jensen, C., Poslad, S., Dimitrakos, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; Volume 2995. [Google Scholar] [CrossRef]

- Pinyol, I.; Sabater-Mir, J. Computational trust and reputation models for open multi-agent systems: A review. Artif. Intell. Rev. 2013, 40, 1–25. [Google Scholar] [CrossRef]

- Wang, G.; Chen, S.; Zhou, Z.; Liu, J. Modelling and Analyzing Trust Conformity in E-Commerce Based on Fuzzy Logic. Wseas Trans. Syst. 2015, 14, 1–10. [Google Scholar]

- Meziane, F.; Nefti, S. Evaluating E-Commerce Trust Using Fuzzy Logic. Int. J. Intell. Inf. Technol. 2007, 3, 25–39. [Google Scholar] [CrossRef]

- Guo, G.; Zhang, J.; Thalmann, D.; Basu, A.; Yorke-Smith, N. From Ratings to Trust: An Empirical Study of Implicit Trust in Recommender Systems. In Proceedings of the 29th Annual ACM Symposium on Applied Computing (SAC’14), Gyeongju, Korea, 24–28 March 2014; pp. 248–253. [Google Scholar] [CrossRef]

- Patel, G.N. Evaluation of Consumer Buying Behaviour for Specific Food Commodity Using FUZZY AHP Approach. In Proceedings of the International Symposium on the Analytic Hierarchy Process, London, UK, 4–7 August 2016. [Google Scholar] [CrossRef]

- Egger, F.N. From Interactions to Transactions: Designing the Trust Experience for Business-to-Consumer Electronic Commerce; Eindhoven University of Technology: Eindhoven, The Netherlands, 2003; ISBN 90-386-1778-X. [Google Scholar]

- Manchala, D. E-Commerce Trust Metrics and Models. IEEE Internet Comput. 2000, 4, 36–44. [Google Scholar] [CrossRef]

- Kim, Y.A.; Ahmad, M.A.; Srivastava, J.; Kim, S.H. Role of Computational Trust Models in Service Science. 5 January 2009; Journal of Management Information Systems, Forthcoming, KAIST College of Business Working Paper Series No. 2009-002, 36 pages. Available online: https://ssrn.com/abstract (accessed on 11 April 2022).

- Wilson, S.; Schaub, F.; Dara, A.A.; Liu, F.; Cherivirala, S.; Leon, P.G.; Andersen, M.S.; Zimmeck, S.; Sathyendra, K.M.; Russell, N.C.; et al. The Creation and Analysis of a Website Privacy Policy Corpus. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7–12 August 2016. [Google Scholar] [CrossRef]

- Mazmudar, M.; Goldberg, I. Mitigator: Privacy policy compliance using trusted hardware. Proc. Priv. Enhancing Technol. 2020, 2020, 204–221. [Google Scholar] [CrossRef]

- Pollach, I. What’s wrong with online privacy policies? Commun. ACM 2007, 50, 103–108. [Google Scholar] [CrossRef]

- Iwaya, L.H.; Fischer-Hubner, S.; Åhlfeldt, R.-M.; Martucci, L.A. mHealth: A Privacy Threat Analysis for Public Health Surveillance Systems. In Proceedings of the 2018 IEEE 31st International Symposium on Computer-Based Medical Systems (CBMS), Karlstad, Sweden, 18–21 June 2018; pp. 42–47. [Google Scholar] [CrossRef]

- Oltramari, A.; Piraviperumal, D.; Schaub, F.; Wilson, S.; Cherivirala, S.; Norton, T.; Russell, N.C.; Story, P.; Reidenberg, J.; Sadeh, N. PrivOnto: A Semantic Framework for the Analysis of Privacy Policies. Semant. Web 2017, 9, 185–203. [Google Scholar] [CrossRef]

- Harkous, H.; Fawaz, K.; Lebret, R.; Schaub, F.; Shin, K.G.; Aberer, K. Polisis: Automated Analysis and Presentation of Privacy Policies Using Deep Learning. In Proceedings of the 27th USENIX Security Symposium, Baltimore, MD, USA, 15–17 August 2018. [Google Scholar] [CrossRef]

- Costante, E.; Sun, Y.; Petkovic, M.; den Hartog, J. A Machine Learning Solution to Assess Privacy Policy Completeness. In Proceedings of the 2012 ACM Workshop on Privacy in the Electronic Society (WPES ‘12), Raleigh, NC, USA, 15 October 2012; pp. 91–96. [Google Scholar] [CrossRef]

- Beke, F.; Eggers, F.; Verhoef, P.C. Consumer Informational Privacy: Current Knowledge and Research Directions. Found. Trends Mark. 2018, 11, 1–71. [Google Scholar] [CrossRef]

- Hussin, A.R.C.; Macaulay, L.; Keeling, K. The Importance Ranking of Trust Attributes in e-Commerce Website. In Proceedings of the 11th Pacific-Asia Conference on Information Systems, Auckland, New Zealand, 4–6 July 2007. [Google Scholar]

- Söllner, M.; Leimeister, J.M. What We Really Know About Antecedents of Trust: A Critical Review of the Empirical Information Systems Literature on Trust. In Psychology of Trust: New Research; Gefen, D., Ed.; Nova Science Publishers: Hauppauge, NY, USA, 2013; pp. 127–155. ISBN 978-1-62808-552-5. [Google Scholar]

- Roca, J.C.; García, J.J.; de la Vega, J. The importance of perceived trust, security and privacy in online trading systems. Inf. Manag. Comput. Secur. 2009, 17, 96–113. [Google Scholar] [CrossRef]

- Arifin, D.M. Antecedents of Trust in B2B Buying Process: A Literature Review. In Proceedings of the 5th IBA Bachelor Thesis Conference, Enschede, The Netherlands, 2 July 2015. [Google Scholar]

- Tamimi, N.; Sebastianelli, R. Understanding eTrust. J. Inf. Priv. Secur. 2007, 3, 3–17. [Google Scholar] [CrossRef]

- Ruotsalainen, P.; Blobel, B. How a Service User Knows the Level of Privacy and to Whom Trust in pHealth Systems? Stud. Health Technol. Inf. 2021, 285, 39–48. [Google Scholar] [CrossRef]

- Sillence, E.; Briggs, P.; Fishwick, L.; Harris, P. Trust and mistrust of Online Health Sites. In Proceedings of the 2004 Conference on Human Factors in Computing Systems, CHI 2004, Vienna, Austria, 24–29 April 2004. [Google Scholar] [CrossRef]

- Li, X.; Hess, T.J.; Valacich, J.S. Why do we trust new technology? A study of initial trust formation with organizational information systems. J. Strateg. Inf. Syst. 2008, 17, 39–71. [Google Scholar] [CrossRef]

- Seigneur, J.-M.; Jensen, C.D. Trading Privacy for Trust, Trust Management. In Proceedings of the Second International Conference, iTrust 2004, Oxford, UK, 29 March–1 April 2004; Volume 2995, pp. 93–107. [Google Scholar] [CrossRef]

- Jøsang, A.; Ismail, R. The Beta Reputation System. In Proceedings of the 15th Bled Conference on Electronic Commerce, Bled, Slovenia, 17–19 June 2002. [Google Scholar]

- Wang, Y.; Lin, E.-P. The Evaluation of Situational Transaction Trust in E-Service Environments. In Proceedings of the 2008 IEEE International Conference on e-Business Engineering, Xi’an, China, 22–24 October 2008; pp. 265–272. [Google Scholar] [CrossRef]

- Matt, P.-A.; Morge, A.; Toni, F. Combining statistics and arguments to compute trust. In Proceedings of the 9th International Conference on Autonomous Agents and Multiagent Systems (AAMAS 2010), Toronto, ON, Canada, 10–14 May 2010; Volume 1–3. [Google Scholar] [CrossRef]

- Fuchs, C. Toward an alternative concept of privacy. J. Commun. Ethics Soc. 2011, 9, 220–237. [Google Scholar] [CrossRef]

- Acquisti, A.; Grossklags, J. Privacy and Rationality in Individual Decision Making. IEEE Secur. Priv. 2005, 3, 26–33. Available online: https://ssrn.com/abstract=3305365 (accessed on 11 April 2022). [CrossRef]

- Lankton, N.K.; McKnight, D.H.; Tripp, J. Technology, Humanness, and Trust: Rethinking Trust in Technology. J. Assoc. Inf. Syst. 2015, 16, 880–918. [Google Scholar] [CrossRef]

- EU-GDPR. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A02016R0679-2016950&qid=1532348683434 (accessed on 11 April 2022).

- Luo, W.; Najdawi, M. Trust-building Measures: A Review of Consumer Health Portals. Commun. ACM 2004, 47, 109–113. [Google Scholar] [CrossRef]

- Adjekum, A.; Blasimme, A.; Vayena, E. Elements of Trust in Digital Health Systems: Scoping Review. J. Med. Internet Res. 2018, 20, e11254. [Google Scholar] [CrossRef]

- Boon-itt, S. Quality of health websites and their influence on perceived usefulness, trust and intention to use: An analysis from Thailand. J. Innov. Entrep. 2019, 8, 4. [Google Scholar] [CrossRef]

- Geissbuhler, A.; Safran, C.; Buchan, I.; Bellazzi, R.; Labkoff, S.; Eilenberg, K.; Leese, A.; Richardson, C.; Mantas, J.; Murray, P.; et al. Trustworthy reuse of health data: A transnational perspective. Int. J. Med. Inf. 2013, 82, 1–9. [Google Scholar] [CrossRef]

- Esmaeilzadeh, P. The Impacts of the Perceived Transparency of Privacy Policies and Trust in Providers for Building Trust in Health Information Exchange: Empirical Study. JMIR Med. Inf. 2019, 7, e14050. [Google Scholar] [CrossRef]

- Vega, J.A. Determiners of Consumer Trust towards Electronic Commerce: An Application to Puerto Rico. Esic Mark. Econ. Bus. J. 2015, 46, 125–147. [Google Scholar] [CrossRef]

| Highly sensitive health-related data (e.g., diseases, symptoms, social behavior, and psychological features) are collected, used and shared |

| Healthcare-specific laws regulate the collection, use, retention and disclosure of PHI |

| To use services, the user must disclose sensitive PHI |

| Misuse of PHI can cause serious discrimination and harm |

| Service provided is often information, knowledge or recommendations without quality guarantee or return policy |

| The service provider can be a regulated or non-regulated healthcare service provider, wellness-service provider or a computer application |

| Service user can be a patient, and there exists a fiducial patient–doctor relationship |

| Direct measurements, experiences, interactions and observations |

| Service provider’s privacy policy document |

| Content of privacy certificate or seal for the medical quality of information, content of certificate for legal compliance (structural assurance), and audit trail (transparency) |

| Past experiences, transaction history, previous expertise |

| Information available on service provider’s website |

| Provider’s promises and manifestations |

| Others’ recommendations and ratings, expected quality of services |

| Information of service provider’s properties and information system |

| Vendor’s type or profile (similarity information) |

| Name | Meaning of Attribute | Value = 2 | Value = 1 | Value = 0 |
|---|---|---|---|---|
| P1 | PHI disclosed to third parties | No data disclosed to third parties | Only anonymous data is disclosed | Yes, or no information |
| P2 | Regulatory compliance | Compliance certified by experts, third-party privacy seals | Demonstrated regulatory compliance available | Manifesto or no information |
| P3 | PHI retention | Kept no longer than necessary for purposes of collection | Stored in encrypted form for further use | No retention time expressed |
| P4 | Use of PHI | Used only for presented purposes | Used for other named purposes | Purposes defined by the vendor |
| P5 | User access to collected PHI | Direct access via network | Vendor-made document of collected PHI is available on request | No access or no information available |
| P6 | Transparency | Customer has access to audit trail | No user access to audit trail | No audit trail or no information |
| P7 | Ownership of the PHI | PHI belongs to DS (user) | Shared ownership of PHI | Ownership of PHI remains at vendor or no information |
| P8 | Support of SerU’s privacy needs | SerU’s own privacy policy supported | Informed consent supported | No support of DS’ privacy policies or no information |
| P9 | Presence of organisation | Name, registered office address, e-mail address and contact address of privacy officer available | Name, physical address, e-mail address available | Only name and e-mail address available |
| P10 | Communication privacy | End-to-end encryption for collected PHI | HTTPS is supported | Raw data collected or no information |

| Name | Attribute | Meaning | Sources |
|---|---|---|---|
| T1 | Perceived credibility | How SerP keeps promises, type of organisation, external seals, ownership of organisation | Previous experiences, website information |
| T2 | Reputation | General attitude of society | Websites, other sources |
| T3 | Perceived competence and professionalism of the service provider | Type of organisation, qualification of employees/experts, similarity with other organisations | Website information, external information |
| T4 | Perceived quality and professionalism of health information | General information quality and level of professionalism, quality of links and scientific references | Own experience, third-party ratings, others’ proposals, website information |
| T5 | Past experiences | Overall quality of past experiences | Personal past experiences |
| T6 | Regulatory compliance | Type and ownership of organisation. Experiences of how the SerP keeps its promises | Websites, oral information, social networks and media. Previous experiences |
| T7 | Website functionality and ease of use | Easy to use, usability, understandability, look of the website, functionality | Direct experiences |
| T8 | Perceived quality of the information system | Functionality, helpfulness, structural assurance, reliability (system operates properly) | Own experiences, others’ recommendations |

| P1 = 0 | P2 = 0 | P3 = 0 | P4 = 1 | P5 = 0 | P6 = 0 | P7 = 0 | P8 = 0 | P9 = 1 | P10 = 1 |
|---|---|---|---|---|---|---|---|---|---|
| T1 = M | T2 = MH | T3 = ML | T4 = M | T5 = H | T6 = L | T7 = H | T8 = M | EXPHI = M | |
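The ratings above can be turned into aggregate figures with a few lines of code. The following is a minimal sketch, not the authors' implementation. It assumes a five-level scale of evenly spaced triangular fuzzy numbers; only the L and M entries of that scale, (0.0, 0.17, 0.33) and (0.33, 0.5, 0.67), appear in the case-study table, so the ML, MH and H entries are extrapolated here. The names `SCALE` and `mean_tfn` are hypothetical. Under these assumptions, the component-wise mean of T1 to T8 comes out close to the Trust value (0.375, 0.54, 0.71) reported in the case-study table.

```python
# Assumed five-level linguistic scale as triangular fuzzy numbers (low, mid, high).
# L and M match the values shown in the case-study table; ML, MH and H are
# extrapolated with the same spacing of 1/6 and are an assumption of this sketch.
SCALE = {
    "L": (0 / 6, 1 / 6, 2 / 6),
    "ML": (1 / 6, 2 / 6, 3 / 6),
    "M": (2 / 6, 3 / 6, 4 / 6),
    "MH": (3 / 6, 4 / 6, 5 / 6),
    "H": (4 / 6, 5 / 6, 6 / 6),
}

# Linguistic trust ratings and crisp privacy ratings taken from the case study.
trust_ratings = {"T1": "M", "T2": "MH", "T3": "ML", "T4": "M",
                 "T5": "H", "T6": "L", "T7": "H", "T8": "M"}
privacy_ratings = {"P1": 0, "P2": 0, "P3": 0, "P4": 1, "P5": 0,
                   "P6": 0, "P7": 0, "P8": 0, "P9": 1, "P10": 1}


def mean_tfn(labels):
    """Component-wise arithmetic mean of the fuzzy numbers behind the labels."""
    tfns = [SCALE[label] for label in labels]
    return tuple(sum(t[i] for t in tfns) / len(tfns) for i in range(3))


trust = mean_tfn(trust_ratings.values())

# Each privacy attribute scores 0, 1 or 2, so the maximum total is 2 per attribute.
privacy_score = sum(privacy_ratings.values()) / (2 * len(privacy_ratings))

print(tuple(round(x, 3) for x in trust))  # (0.375, 0.542, 0.708)
print(privacy_score)
```

The normalised privacy score of 3/20 falls inside the support of the L value (0.0, 0.17, 0.33) listed for Privacy in the case-study table, which is consistent with the low privacy rating reported there.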

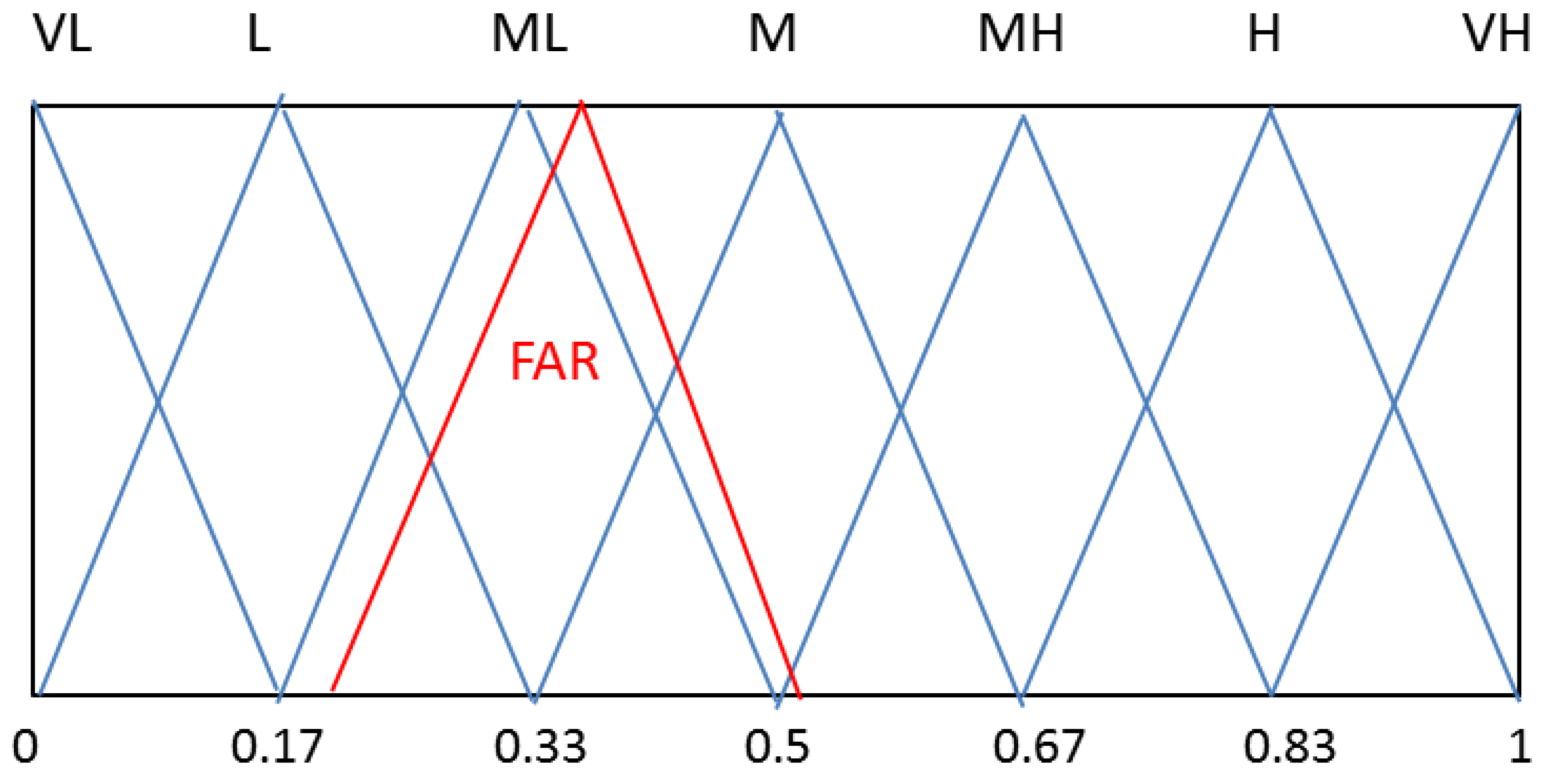

| Factor | Fuzzy Value | Fuzzy Weight |
|---|---|---|
| Privacy | L (0.0, 0.17, 0.33) | VH (0.8, 1, 1) |
| Trust | (0.375, 0.54, 0.71) | H (0.6, 0.8, 1) |
| EXPHI | M (0.33, 0.5, 0.67) | M (0.4, 0.6, 0.8) |
| FAR | (0.198, 0.376, 0.562) | |
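As a plausibility check on the FAR row, the sketch below combines the three factors with a component-wise weighted average of triangular fuzzy numbers: each component of the result is the sum of weight times value divided by the sum of weights for that component. This aggregation rule is an assumption of the sketch, and the function name `fuzzy_weighted_average` is hypothetical. With the tabulated values and weights it gives a result within rounding distance of the reported FAR.

```python
# Fuzzy values and fuzzy weights copied from the table, as (low, mid, high).
factors = {
    "Privacy": ((0.0, 0.17, 0.33), (0.8, 1.0, 1.0)),
    "Trust": ((0.375, 0.54, 0.71), (0.6, 0.8, 1.0)),
    "EXPHI": ((0.33, 0.5, 0.67), (0.4, 0.6, 0.8)),
}


def fuzzy_weighted_average(pairs):
    """Component-wise weighted average of triangular fuzzy numbers.

    `pairs` is an iterable of (value, weight) tuples, each a 3-tuple.
    This rule is an assumption of the sketch, not a confirmed formula.
    """
    pairs = list(pairs)
    return tuple(
        sum(w[i] * v[i] for v, w in pairs) / sum(w[i] for _, w in pairs)
        for i in range(3)
    )


far = fuzzy_weighted_average(factors.values())
print(tuple(round(x, 3) for x in far))
```

The lower and middle components round to 0.198 and 0.376. The upper component differs from the tabulated 0.562 only in the third decimal, which is plausibly due to the two-decimal rounding of the inputs shown in the table.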

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ruotsalainen, P.; Blobel, B.; Pohjolainen, S. Privacy and Trust in eHealth: A Fuzzy Linguistic Solution for Calculating the Merit of Service. J. Pers. Med. 2022, 12, 657. https://doi.org/10.3390/jpm12050657
