Texture-Based Neural Network Model for Biometric Dental Applications

Abstract

1. Introduction

2. Materials and Methods

2.1. Teeth Collection, Scanning, and Classification

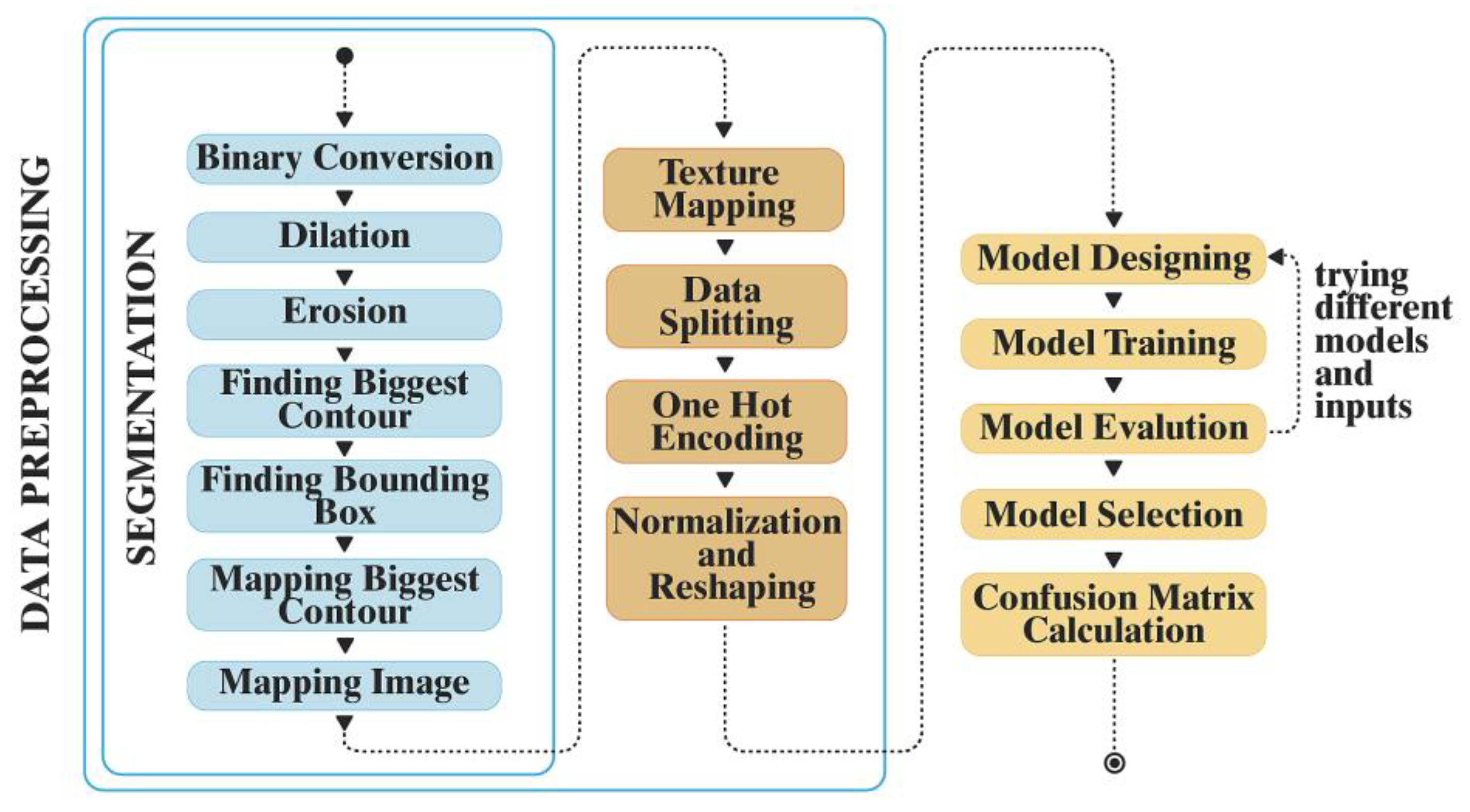

2.2. Preprocessing

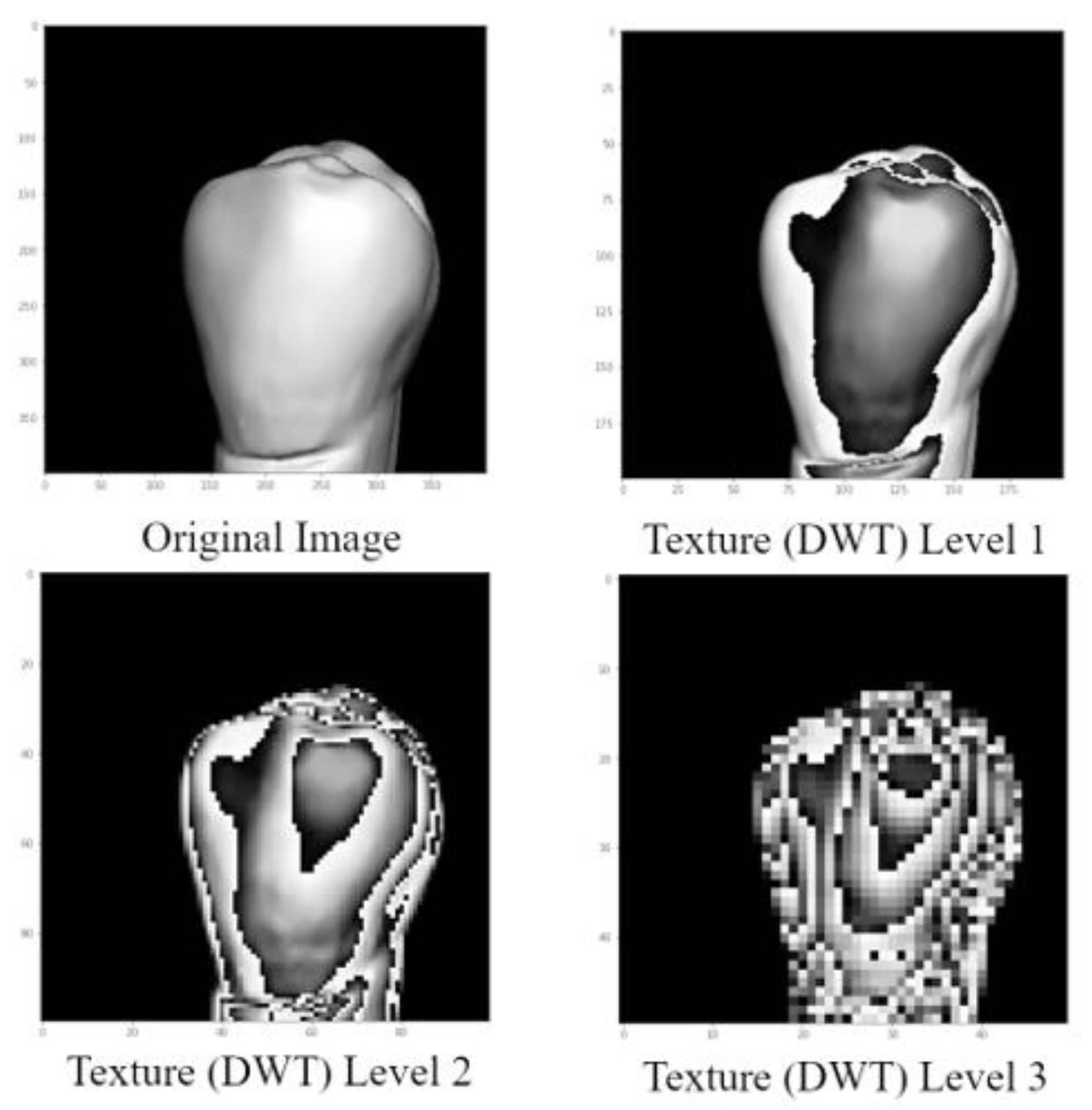

2.3. Extracting Textural Features Using DWT

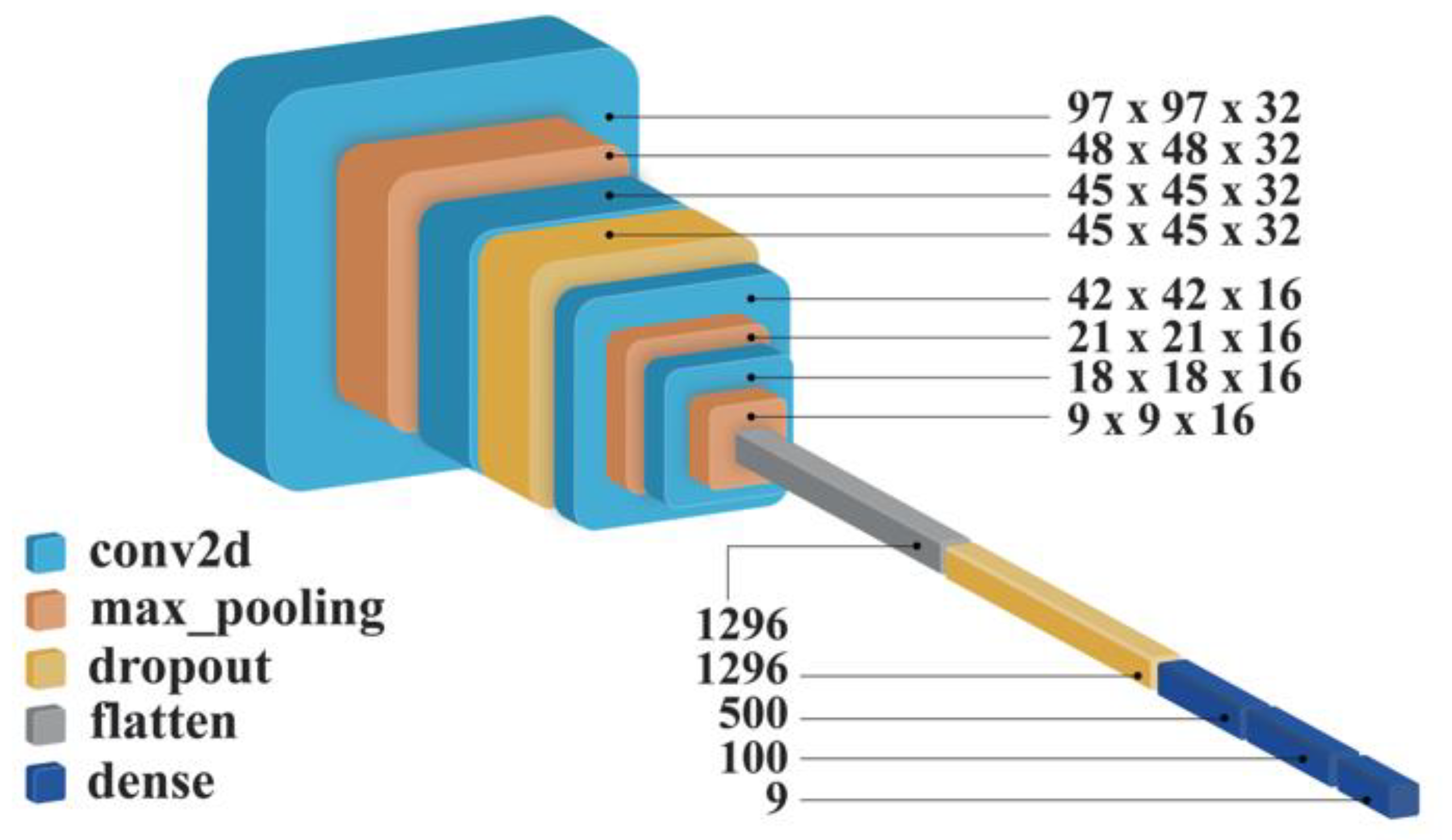

2.4. Deep Convolutional Neural Networks for Classification

3. Results

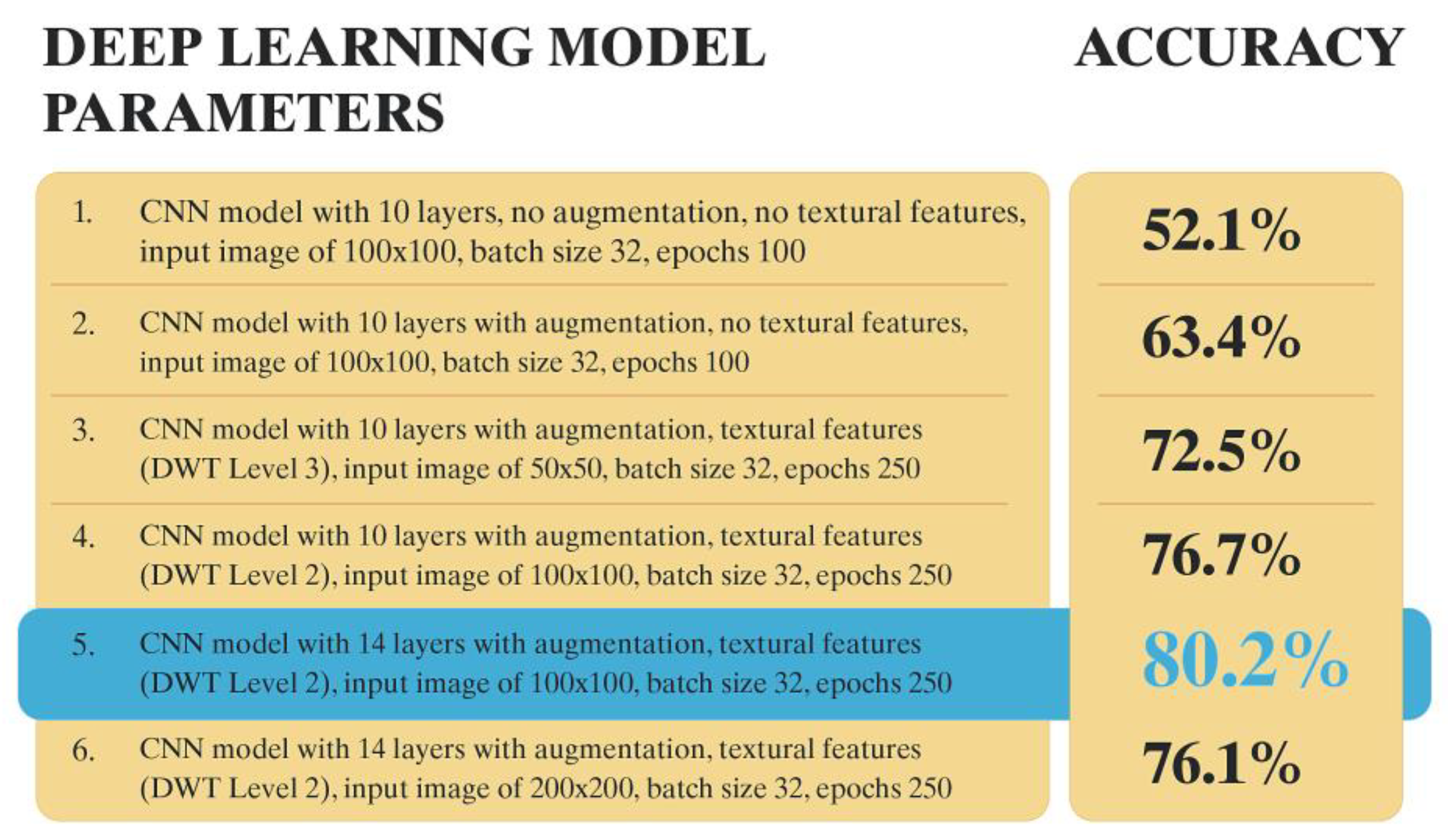

3.1. Experimental Results and Improvement Steps

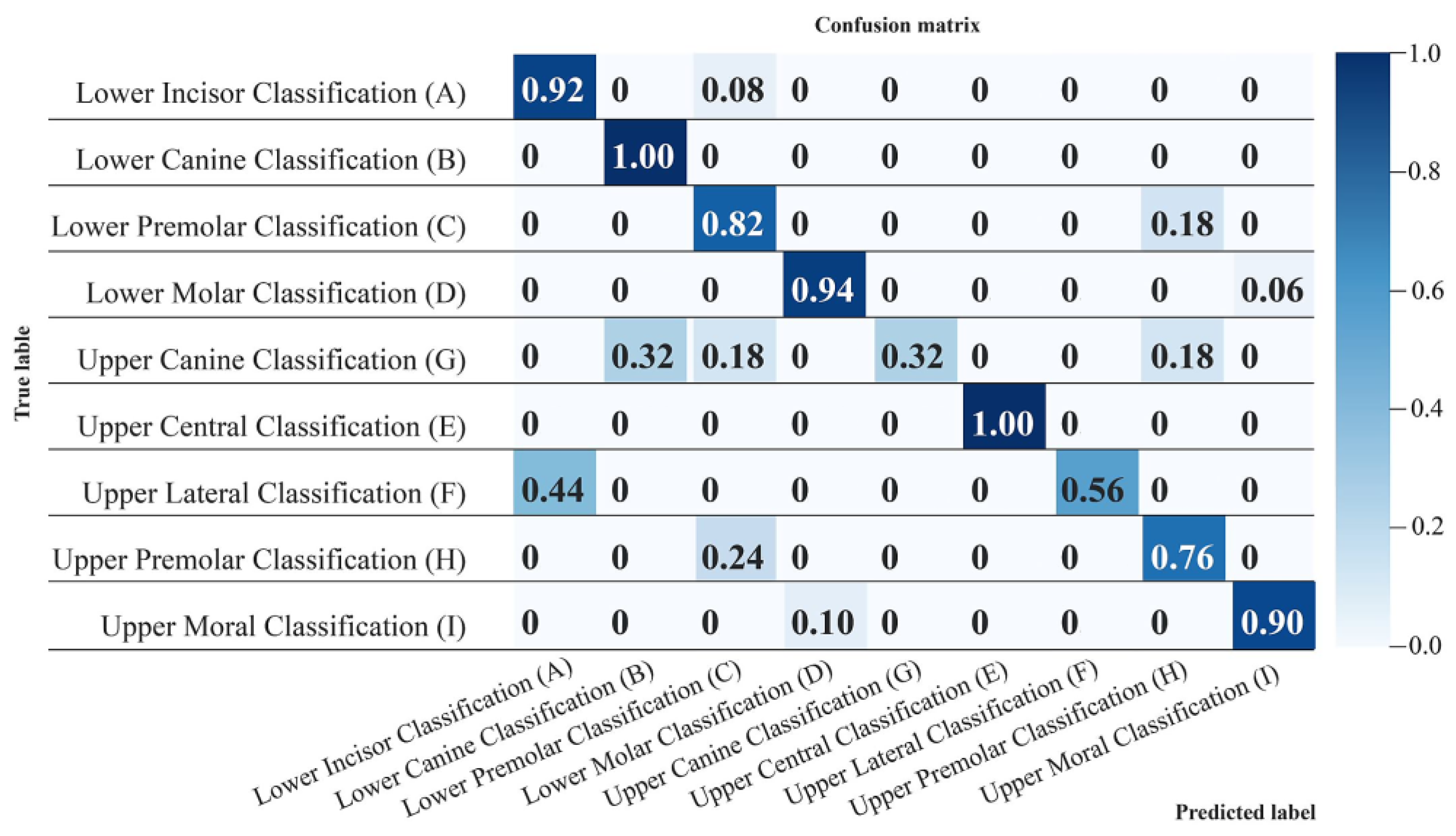

3.2. Confusion Matrix

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

- The impact of data augmentation is clear from a comparison of configurations 1 and 2. All other parameters are identical; the only difference is data augmentation, which improves accuracy by around 11.3%.

- The role of textural features is evident from a comparison of configurations 2 and 4: the accuracy of configuration 4 is 13.3% higher than that of configuration 2.

- The DWT level also plays a crucial role. Configuration 3 uses DWT level 3, whereas configuration 4 uses DWT level 2, and the accuracy of configuration 4 is 4.2% higher than that of configuration 3. Note that configuration 4 performs better even though its image size is larger. We conclude that DWT level 2 performs better in this case.

- Another important factor is the number of layers, which is the only difference between configurations 4 and 5. As can be seen from the table, the accuracy of configuration 5 is 3.6% higher than that of configuration 4, which is attributed to its 4 extra layers. Hence, additional layers also improve accuracy.

- A final observation from these data is the negative effect of image size. Comparing the accuracies of configurations 5 and 6 shows that a larger image size adversely affects the performance of the classifier.
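To make the role of the DWT level in the comparison above more concrete, the following is a minimal sketch of a multi-level 2D wavelet decomposition. It is not the authors' implementation: the Haar wavelet, the pure-Python list-of-lists image, the function names (`haar_step`, `haar_dwt2`, `haar_wavedec2`, `mean_energy`), and the use of mean sub-band energy as a texture feature are all assumptions chosen for illustration. It also assumes image dimensions that stay even at every level.

```python
import math

SQRT2 = math.sqrt(2.0)


def haar_step(values):
    """One 1D Haar analysis step: scaled pairwise sums (low-pass) and differences (high-pass)."""
    pairs = range(0, len(values) - 1, 2)
    low = [(values[i] + values[i + 1]) / SQRT2 for i in pairs]
    high = [(values[i] - values[i + 1]) / SQRT2 for i in pairs]
    return low, high


def transpose(matrix):
    """Swap rows and columns of a list-of-lists matrix."""
    return [list(column) for column in zip(*matrix)]


def filter_rows(matrix):
    """Apply haar_step to every row and return (low-pass half, high-pass half)."""
    lows, highs = [], []
    for row in matrix:
        low, high = haar_step(row)
        lows.append(low)
        highs.append(high)
    return lows, highs


def haar_dwt2(image):
    """Single-level 2D Haar DWT of an image with even dimensions.

    Returns (LL, LH, HL, HH). In this sketch the first letter names the filter
    applied along each row and the second the filter applied along each column.
    """
    row_low, row_high = filter_rows(image)
    ll, lh = (transpose(band) for band in filter_rows(transpose(row_low)))
    hl, hh = (transpose(band) for band in filter_rows(transpose(row_high)))
    return ll, lh, hl, hh


def haar_wavedec2(image, level):
    """Decompose the approximation band repeatedly, `level` times."""
    approx, details = image, []
    for _ in range(level):
        approx, lh, hl, hh = haar_dwt2(approx)
        details.append((lh, hl, hh))
    return approx, details


def mean_energy(band):
    """Mean squared coefficient of a sub-band (an assumed texture descriptor)."""
    values = [v for row in band for v in row]
    return sum(v * v for v in values) / len(values)


# Synthetic 8x8 "image" (a diagonal gradient), used only for illustration.
image = [[float(r + c) for c in range(8)] for r in range(8)]
for level in (2, 3):
    approx, details = haar_wavedec2(image, level)
    features = [mean_energy(band) for bands in details for band in bands]
    print(level, len(approx), len(approx[0]), len(features))
```

Each additional level halves the approximation band in both directions (here from 2×2 at level 2 to 1×1 at level 3) while adding three more detail sub-bands, so the level trades spatial resolution against the number of texture descriptors.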

| Teeth Classes | Precision | Recall | F-Measure | Accuracy |
|---|---|---|---|---|
| Lower Incisor Classification (A) | 0.9 | 0.8 | 0.9 | 0.9 |
| Lower Canine Classification (B) | 0.9 | 0.8 | 1 | 1 |
| Lower Premolar Classification (C) | 0.8 | 0.8 | 0.8 | 0.8 |
| Lower Molar Classification (D) | 1 | 0.8 | 0.9 | 0.9 |
| Upper Canine Classification (G) | 0.3 | 0.3 | 0.4 | 0.3 |
| Upper Central Classification (E) | 0.9 | 1 | 1 | 1 |
| Upper Lateral Classification (F) | 0.5 | 1 | 0.6 | 0.6 |
| Upper Premolar Classification (H) | 0.7 | 0.7 | 0.8 | 0.8 |
| Upper Molar Classification (I) | 0.9 | 0.8 | 0.9 | 0.9 |
| Average | 0.8 | 0.8 | 0.8 | 0.8 |
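As a reminder of how metrics of this kind are commonly derived, the sketch below computes per-class precision, recall, and F-measure from a confusion matrix using the standard definitions: precision = TP / (TP + FP), recall = TP / (TP + FN), and F-measure as their harmonic mean. The 3×3 matrix is hypothetical and does not reproduce the values in the table; the function names are illustrative. Because this section does not state how the per-class accuracy column was defined, only overall accuracy is computed here.

```python
def per_class_metrics(confusion):
    """Precision, recall, and F-measure per class.

    confusion[i][j] is the number of samples whose true class is i
    and whose predicted class is j.
    """
    n = len(confusion)
    results = []
    for k in range(n):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                         # true k, predicted otherwise
        fp = sum(confusion[i][k] for i in range(n)) - tp    # predicted k, true otherwise
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f_measure = 2 * precision * recall / denom if denom else 0.0
        results.append(
            {"precision": precision, "recall": recall, "f_measure": f_measure}
        )
    return results


def overall_accuracy(confusion):
    """Fraction of all samples that lie on the diagonal."""
    correct = sum(confusion[k][k] for k in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


# Hypothetical confusion matrix for three classes (not data from the paper).
example = [
    [8, 1, 1],
    [2, 7, 1],
    [0, 2, 8],
]

for label, m in enumerate(per_class_metrics(example)):
    print(label, round(m["precision"], 2), round(m["recall"], 2), round(m["f_measure"], 2))
print("accuracy", round(overall_accuracy(example), 2))
```

Macro-averaging (the unweighted mean over classes) is one common way to obtain a summary row such as "Average", although the averaging scheme used for the table is not stated in this section.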

| Label | Tooth Class Name | Number of Images |
|---|---|---|
| 0 | Lower Anterior (A) | 64 |
| 1 | Lower Canine (B) | 87 |
| 2 | Lower Premolar (C) | 77 |
| 3 | Lower Molar (D) | 71 |
| 4 | Upper Central (E) | 75 |
| 5 | Upper Lateral (F) | 49 |
| 6 | Upper Canine (G) | 34 |
| 7 | Upper Premolar (H) | 80 |
| 8 | Upper Molar (I) | 63 |
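The class distribution in this table can be tallied with a few lines of Python. This is only an illustrative sketch: the counts are copied from the table, while the variable names and the imbalance ratio (largest class divided by smallest class) are assumptions introduced here, not quantities reported by the authors.

```python
# Image counts per tooth class, copied from the table above.
counts = {
    "Lower Anterior (A)": 64,
    "Lower Canine (B)": 87,
    "Lower Premolar (C)": 77,
    "Lower Molar (D)": 71,
    "Upper Central (E)": 75,
    "Upper Lateral (F)": 49,
    "Upper Canine (G)": 34,
    "Upper Premolar (H)": 80,
    "Upper Molar (I)": 63,
}

total = sum(counts.values())
shares = {name: n / total for name, n in counts.items()}

smallest = min(counts, key=counts.get)
largest = max(counts, key=counts.get)
# Illustrative summary statistic: how many times larger the biggest class is.
imbalance_ratio = counts[largest] / counts[smallest]

for name, n in counts.items():
    print(f"{name:20s} {n:3d} {shares[name]:6.1%}")
print("total images:", total)
print("smallest class:", smallest)
print("largest class:", largest)
print("imbalance ratio:", round(imbalance_ratio, 2))
```

The counts sum to 600 images, with Upper Canine (G) the smallest class and Lower Canine (B) the largest.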

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Saleh, O.; Nozaki, K.; Matsumura, M.; Yanaka, W.; Miura, H.; Fueki, K. Texture-Based Neural Network Model for Biometric Dental Applications. J. Pers. Med. 2022, 12, 1954. https://doi.org/10.3390/jpm12121954
