Abstract

Full completion of cognitive screening tests can be problematic in the context of a stroke. Our aim was to examine the completion of various brief cognitive screens and explore reasons for untestability. Data were collected from consecutive stroke admissions (May 2016–August 2018). The cognitive assessment was attempted during the first week of admission. Patients were classified as partially untestable (≥1 test item was incomplete) or fully untestable (where assessment was not attempted, and/or no questions were answered). We assessed univariate and multivariate associations of test completion with: age (years), sex, stroke severity (National Institutes of Health Stroke Scale (NIHSS)), stroke classification, pre-morbid disability (modified Rankin Scale (mRS)), previous stroke and previous dementia diagnosis. Of 703 patients admitted (mean age: 69.4), 119 (17%) were classified as fully untestable and 58 (8%) were partially untestable. The 4 ‘A’s Test (4AT) had 100% completion and the clock-draw task had the lowest completion (533/703, 76%). Independent associations with fully untestable status were a higher NIHSS score (odds ratio (OR): 1.18, 95% CI: 1.11–1.26), higher pre-morbid mRS (OR: 1.28, 95% CI: 1.02–1.60) and pre-stroke dementia (OR: 3.35, 95% CI: 1.53–7.32). Overall, a quarter of patients were classified as untestable on the cognitive assessment, with test incompletion related to stroke and non-stroke factors. Clinicians and researchers would benefit from guidance on how to make the best use of incomplete test data.

1. Introduction

Cognitive screening following a stroke is recommended in international clinical guidelines [1,2] and routinely performed in acute stroke settings in many countries. However, completion of a cognitive test battery in a medically unwell person with recent neurological insult is challenging. Previous research has demonstrated that around 20% of stroke patients cannot fully complete many of the cognitive screening tests commonly used in stroke practice, for example the Montreal Cognitive Assessment (MoCA) [3] and the Mini-Mental State Examination (MMSE) [4]. Test non-completion is reported in both acute stroke [5] and rehabilitation settings [6] (Table 1). However, published data appear conflicting and other centres have reported that a lengthy neuropsychological battery can be performed in the acute setting [7].

Table 1.

Previous studies addressing feasibility of cognitive assessments post-stroke.

Feasibility of completing a cognitive assessment is multifactorial; some aspects may relate to the stroke (extent of damage, presence of aphasia, limb weakness) and others may relate to the nature of the testing (timing and length of assessment, complexity). Differences in patient characteristics and approaches to assessment may explain the apparently contradictory findings in the literature. Patients included in studies of cognitive tests are often not representative of a typical stroke unit. For example, studies may favour the inclusion of those with minor strokes, no (or little) pre-stroke disability and those who are able to provide informed consent, whilst patients with severe aphasia or an existing diagnosis of dementia are often excluded [17]. This selection bias leads to underestimation of the true proportion of untestable patients.

An incomplete cognitive test has clinical implications. Inexperienced assessors may erroneously ascribe an incomplete test to cognitive impairment, when in the context of stroke, non-completion may relate to physical impairments. Ultimately, test non-completion could risk false positive and false negative diagnosis of cognitive problems with attendant harm. An understanding of the extent of test non-completion and knowledge of factors relating to untestability could potentially avoid this. The issue of test incompletion also complicates stroke research and audit. Often patients with incomplete assessments are excluded from analyses (since a total score cannot be calculated). This practice biases results, underestimates levels of cognitive impairment and could also lead to erroneous results [11]. Various approaches to incorporate incomplete tests have been proposed but there is no consensus on the best method [16].

There are different ways to address these feasibility issues, but the approach taken will depend on the aspect of feasibility of greatest relevance. For example, one may decide to choose a test specifically designed for stroke (e.g., Oxford Cognitive Screen (OCS) [18]). This approach recognises that many traditional cognitive tests were designed for memory clinic populations and are not suited to the specific challenges encountered in acute stroke settings. Stroke-specific, multi-domain tests have been described and may be less biased by physical, communication and visuospatial impairments. Another approach may be to choose a shorter cognitive screen. This approach may be particularly suited to the acute medical setting, where clinicians have limited time and other investigations may be prioritised in the first few days. Shorter tests may also be attractive to patients as there will be a reduced test burden. Short cognitive screens have been largely ignored in research conducted in the stroke setting. Stroke care is continuously evolving and differs internationally, but there is currently a paucity of feasibility research on cognitive tests in an acute, National Health Service (NHS) context. Our research aimed to address these two gaps.

Our primary aim was to describe the test completion (feasibility) of some of the shortest cognitive screens (deliverable in under five minutes) in an unselected group admitted to our hyper-acute stroke unit. Our secondary aims were to explore the reasons assessors gave for labelling a patient as untestable and to describe factors associated with being untestable.

2. Methods

We conducted an observational, cross-sectional study, using routinely collected data from an urban UK teaching hospital. The study was approved by the West of Scotland Research Ethics Committee (ws/16/0001) on 4 February 2016. We followed Standards of Reporting of Neurological Disorders (STROND) guidance [19] for the design, conduct and reporting of the study.

2.1. Setting and Population

We collected anonymised, routine, clinical data from consecutive admissions to our hyper-acute stroke unit (HASU). The unit admits all suspected stroke and transient ischaemic attack (TIA) patients with no exclusions in relation to age, disability or comorbidity. The unit offers level two (high dependency) clinical care and only patients requiring multi-organ support would be admitted to a higher-level care facility. Recruitment occurred during four timepoints: May 2016–February 2017; April–June 2017; October–December 2017; and July–August 2018. For the purposes of this study, we made no exclusions around stroke severity or stroke-related impairments. Written informed consent was not required for assessment.

2.2. Clinical and Demographic Assessment

Clinical and demographic data were collected for each patient by five trained researchers (four postgraduate students in psychology/neuroscience and one undergraduate medical student). Data collected were a mix of prospective assessment and retrospective derivation from medical case notes. Stroke severity was determined by the National Institutes of Health Stroke Scale (NIHSS) [20] on admission. Medical history, including any pre-stroke diagnosis of dementia, was recorded using medical notes and primary care summary data. Pre-stroke functioning was established using the modified Rankin Scale (mRS) [21,22,23]. Bamford stroke classification was completed for both ischaemic and haemorrhagic patients.

2.3. Cognitive Assessment

The cognitive assessment consisted of 13 questions, covering 8 different cognitive screening tests: the 10-point Abbreviated Mental Test Score (AMTS) [24] and its shorter version AMT-4 [25], General Practitioner Assessment of Cognition (GPCOG) (patient section) [26], Mini-Cog [27], six item cognitive impairment test (6-CIT) [28], National Institute of Neurological Disorders and Stroke-Canadian Stroke Network (NINDS-CSN) 5-min MoCA [29], abbreviated MoCA [30], and the 4 ‘A’s Test (4AT) (Available online: www.the4AT.com) (Table 2). Each of these individual tests can be administered in under 5 min (and so is suitable for use in acute clinical practice). They cover a variety of cognitive domains and have some supporting validation work in primary and geriatric care [31].

Table 2.

Short cognitive tests ordered by number of items.

Assessment was attempted during the first week of admission. Patients were only approached once for assessment, unless the patient requested for the assessment to be done at a later time-point, the assessment was interrupted by another clinical investigation (e.g., scan) or if the patient requested the assessment to be done over two sessions. Patients were not approached at all (and categorised fully untestable) if the parent clinical team reported that the patient was too unwell to undergo assessment or if the assessor felt that any form of direct testing would not be possible. In these patients, who could not be directly assessed, we checked if a cognitive assessment was documented by the parent clinical team since admission.

2.4. Defining the Test Completion Outcomes

Patients were classified as fully untestable when no part of the assessment was attempted (decision made by the researcher in consultation with the parent clinical team) and/or when no questions were answered when testing was attempted. Patients were classified as partially untestable when at least one item in a test could not be completed or was not attempted (as decided by the patient, parent clinical team or researcher). A list of potential categories for the reasons for untestability was created by the authors based on clinical experience, previous literature and initial scoping of free text responses. The free text reasons documented by the individual assessor for each patient classified as untestable were later collated into categories (e.g., aphasia and dysarthria both captured under speech problems) by the lead author (E.Elliott) in discussion with the stroke consultant (T.Quinn). Where more than one reason was listed, we chose the primary factor deemed to have the greatest impact on assessment (e.g., a patient documented as both acutely confused and dysarthric was categorised under confusion). Cases where a test item was attempted but poorly completed, for example, a patient with limb weakness who attempted the clock-draw with their weak or non-dominant hand, were classed as testable.
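The definitions above can be expressed as a simple decision rule. The following is a minimal sketch only; the function name, argument names and label strings are hypothetical and were not part of the study's data collection tools.

```python
from typing import Sequence


def classify_testability(attempted: bool, item_answered: Sequence[bool]) -> str:
    """Classify one patient using the definitions in Section 2.4.

    attempted      -- whether any part of the assessment was attempted
    item_answered  -- one flag per question; True if the patient attempted it
                      (a poorly completed item still counts as attempted)
    """
    # Fully untestable: assessment not attempted and/or no question answered.
    if not attempted or not any(item_answered):
        return "fully untestable"
    # Partially untestable: at least one item not completed or not attempted.
    if not all(item_answered):
        return "partially untestable"
    return "testable"
```

For example, a patient who attempts every question except the clock-draw would be labelled partially untestable, whereas a patient who draws a poor clock with a weak hand would remain testable.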

2.5. Statistical Analysis

We described patients as fully or partially untestable using the definitions above. We looked at the completion rate of each question in our assessment and then calculated completion rates for the different tests. Patients who ended up with a non-stroke diagnosis were kept in the analyses as we were interested in the feasibility of tests within all patients admitted with a suspected stroke and we retained admission NIHSS for these patients where it was completed.

We assessed univariable and multivariable associations with outcomes of interest using logistic regression. Variables were chosen based on previous literature [10,11] and plausible associations with feasibility. The following 12 covariates were used in both univariate and multivariate analyses: age (years), sex, NIHSS, Bamford stroke classification (TIA, partial anterior circulation stroke—PACS, total anterior circulation stroke—TACS, posterior circulation stroke—POCS, lacunar stroke—LACS, non-stroke (used as reference group)), pre-morbid mRS, presence of intracerebral haemorrhage (ICH), previous diagnosis of dementia and previous TIA/stroke. We did not include delirium in the model since our only measure was the 4AT scale and all untestable patients would have ended up with a label of delirium. Associations were described as odds ratios (OR) with corresponding 95% confidence intervals. We used the rule of 10 outcome events per predictor variable to determine the number of covariates we could include in the model and so required 120 “cases” for the model.
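The sample size reasoning above is simple arithmetic, sketched below. One assumption is ours rather than stated in the text: that the count of 12 arises from entering the six-level stroke classification as five indicator terms against the non-stroke reference group, with every other variable contributing one term.

```python
# Number of model terms contributed by each predictor.
# ASSUMPTION: the Bamford classification has 6 levels (TIA, PACS, TACS, POCS,
# LACS, non-stroke) and is dummy coded against non-stroke, giving 6 - 1 terms.
model_terms = {
    "age": 1,
    "sex": 1,
    "NIHSS": 1,
    "Bamford classification": 6 - 1,
    "pre-morbid mRS": 1,
    "ICH": 1,
    "previous dementia": 1,
    "previous TIA/stroke": 1,
}

EVENTS_PER_TERM = 10  # the "rule of 10" described in the text

n_terms = sum(model_terms.values())
required_events = n_terms * EVENTS_PER_TERM

print(n_terms, required_events)
```

Under this assumption the sketch reproduces the 12 covariates and 120 required "cases" given in the text.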

Analyses were run twice to account for how partially untestable patients are treated differently in the literature; in the first analysis they were treated as testable and in the second treated as untestable (grouped with the fully untestable patients). All data analyses were performed using the statistical software package SPSS (version 25 IBM, Armonk, NY, USA).
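The two outcome codings can be sketched as follows. The function name and status labels are hypothetical, and the counts are the full-sample figures reported in this paper rather than the numbers entering each regression model (which depend on missing covariate data).

```python
def binary_outcome(status: str, partial_as_untestable: bool) -> int:
    """Return 1 (untestable) or 0 (testable) under the chosen coding."""
    if status == "fully untestable":
        return 1
    if status == "partially untestable":
        # Analysis 1 treats this group as testable, analysis 2 as untestable.
        return 1 if partial_as_untestable else 0
    if status == "testable":
        return 0
    raise ValueError(f"unknown status: {status!r}")


# Full-sample counts: 703 patients, 119 fully and 58 partially untestable.
counts = {
    "fully untestable": 119,
    "partially untestable": 58,
    "testable": 703 - 119 - 58,
}


def n_events(partial_as_untestable: bool) -> int:
    """Number of patients coded as untestable under the chosen coding."""
    return sum(
        n * binary_outcome(status, partial_as_untestable)
        for status, n in counts.items()
    )
```

Calling `n_events(False)` and `n_events(True)` shows how the number of outcome events changes between the first and second analysis.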

3. Results

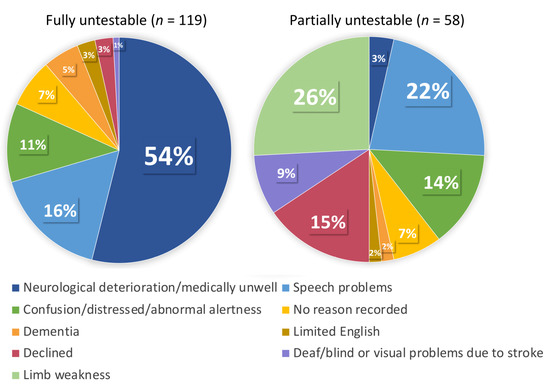

The full sample included 703 patients (mean age 69.4 ± 13.7, 382 (54%) males, median NIHSS 2 (interquartile range, IQR 1–5)) (Table 3 for full patient characteristics). Of these, 119 (17%) were classified as fully untestable on all tests. Reasons for fully untestable fell under eight categories but for more than half of the group this was due to neurological deterioration (e.g., patients who were unresponsive, very unwell, palliative) (62/119, 54%). A further 58 (8%) patients in the full sample were separately classified as partially untestable (did not attempt ≥1 question); reasons fell under nine categories, with limb weakness 15 (26%) and speech problems 13 (22%) being most prevalent (full breakdown of reasons detailed in Figure 1). A large proportion of patients in the fully untestable group (n = 50, 42%) had a TACS, compared to only 3 (5%) in the partially untestable group.

Table 3.

Characteristics of the sample.

Figure 1.

Reasons for fully/partially untestable.

Patients who ended up with a non-stroke diagnosis (n = 109) were a diverse group (diagnoses included migraine, subarachnoid haemorrhage and vasovagal events). Of these, 20 (18%) were untestable in some way. Only 12 patients (2% of the full sample) declined the cognitive assessment; three declined the full assessment and nine declined certain questions. The characteristics of these 12 patients were: 7 (58%) males, mean age of 74.3 (SD = 13.9), median NIHSS of 3 (IQR 2–5), diagnoses: 1 non-stroke, 3 TIAs, 3 PACS, 3 POCS and 2 LACS.

We looked at the completion of each individual question within our full cognitive assessment (Table S1). Clock-draw had the lowest completion rate (533/703 (76%)), whilst age had the highest (583/703 (83%)). For 25/58 (43%) patients in the partially untestable group, clock-draw was the only task that they did not attempt. The completion rate of each individual test is given in Table 2; the 4AT was the only test which could be scored in full for all patients.

In the univariate analyses: higher age, TACS, ICH, higher NIHSS, higher pre-morbid mRS and a previous diagnosis of dementia were associated with being untestable, whilst a lacunar stroke was associated with being testable (Table 4). In the first multivariable regression analysis (n = 680), independent associations with fully untestable status were: higher NIHSS score (OR: 1.18, 95% CI: 1.11–1.26), higher pre-morbid mRS (OR: 1.28, 95% CI: 1.02–1.60) and pre-stroke dementia (OR: 3.35, 95% CI: 1.53–7.32). A lacunar stroke classification was associated with being testable (OR: 0.19, 95% CI: 0.06–0.65). In the second analysis (where the partially untestable group was combined with the fully untestable), the above variables remained significant. In addition, the following associations were found for being untestable: older age (OR: 1.04, 95% CI: 1.02–1.06) and presence of ICH (OR: 3.44, 95% CI: 1.13–10.44); whilst a TIA classification was associated with being testable (OR: 0.45, 95% CI: 0.20–0.997) (Table 4).
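As an aid to interpretation, an odds ratio reported per one-point increase can be rescaled to a larger difference by raising it to the corresponding power. The sketch below is illustrative only: it assumes the usual logistic regression property that the predictor acts linearly on the log-odds scale, and the five-point difference is our own example rather than an analysis from this study.

```python
def scale_odds_ratio(or_per_unit: float, ci_low: float, ci_high: float,
                     units: float) -> tuple:
    """Rescale a per-unit odds ratio and its confidence limits to `units` units.

    On the log-odds scale the effect of `units` units is units * log(OR),
    so the odds ratio and both confidence limits are raised to the power `units`.
    """
    return tuple(round(value ** units, 2) for value in (or_per_unit, ci_low, ci_high))


# NIHSS estimate for fully untestable status, rescaled to a 5-point difference.
nihss_5_points = scale_odds_ratio(1.18, 1.11, 1.26, units=5)
print(nihss_5_points)
```

Under these assumptions, a difference of five NIHSS points corresponds to a little over a doubling of the odds of being fully untestable.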

Table 4.

Feasibility associations.

4. Discussion

In an unselected sample of 703 patients admitted to our HASU, a quarter were classified as partially or fully untestable on brief cognitive screening tests. In those patients classified as partially untestable, the clock-draw was the most problematic, so tests including this item had the lowest completion rate. The 4AT was the only test which could be scored in full for all patients as it includes a score for being untestable. Factors associated with being fully untestable were previous diagnosis of dementia, higher pre-morbid mRS and higher NIHSS on admission, whilst a diagnosis of lacunar stroke was associated with being testable.

4.1. Research in Context

Our findings are generally in keeping with the limited literature on test feasibility. The associations of non-completion with stroke severity and dementia have face validity and the reasons given for a label of untestable were similar to those described in previous studies (for example limb weakness [5,11,16], aphasia [5,10,11], pre-morbid functional status [5] and reduced consciousness [11]), although reporting reasons for cognitive test non-completion in research is the exception rather than the norm. These findings highlight that non-completion is driven by both stroke specific and non-stroke related factors. Our finding that the clock-draw was the most problematic test is also in keeping with previous research findings for a stroke population; Lees et al. [16] found the lowest rates of completion on test items that required copying or drawing. Although tasks which assess visuospatial abilities, such as the clock-drawing test, can be challenging for stroke patients, they provide useful information on a key cognitive domain and can predict longer-term outcomes [32].

We decided to focus on the shortest cognitive tests available, in the hopes that they would be more practical for both the patient and clinician. Our results showed that the rates of completion for these short tests were similar to the completion rates for longer multi-domain cognitive tests previously studied (MoCA, MMSE). This should not be interpreted as meaning that the shortest tests are just as likely to be incomplete as more detailed tests. We did not include or directly compare longer tests with our short screens and our unselected population is not comparable with the patients tested in previous studies. There is a concern that shorter cognitive tests are inferior to longer, more detailed tests. Previous work has suggested that there is a trade-off between duration of administration and diagnostic accuracy [33] in the context of dementia. A focus on length of assessment alone (number of questions, administration time) is perhaps too simplistic, and test content is likely to be more important. For example, a long test could assess one area of cognition in depth yet neglect other domains.

4.2. Strengths and Weaknesses of the Research

A major strength of our study is that we had access to an unbiased, real-world sample, including patients who are often excluded from research (for example those with severe aphasia and dementia). While using clinical data has these benefits, we also have to acknowledge that, due to the ‘messy reality’ of acute clinical practice, data are often missing. Our approach allowed us to retrospectively derive missing data from various sources including inpatient medical records, primary care data and consultation with the parent clinical team. Retrospective scoring can introduce some inaccuracy, for example, when calculating NIHSS based on the symptoms documented in medical case notes, rather than carrying it out directly with the patient.

There were some potentially interesting aspects of feasibility/applicability where we did not record data. We did not record the total number of patients who had limb weakness from their stroke and attempted the clock-draw using their weak or non-dominant hand (classed as testable). Data on this subgroup would be useful as many will lose points or score zero for poorly completed drawing tasks. We also did not record if an assessment had to be completed over two sessions or if any part of the assessment was interrupted.

Although we operationalised our concept of partially and fully untestable there is still subjectivity in the interpretation. It is essentially a judgement call by the clinician whether patients with aphasia, limb weakness and visual problems can complete a task (if the patient does not decline themselves). The same patient could therefore be classified differently purely based on who assessed them. This is particularly relevant in our study, where differing assessors performed the cognitive testing. This could be considered both a strength and weakness as it provides further real-world validity (some people might be better at encouraging patients to complete an assessment than others).

Finally, a limitation of determining feasibility of different questions and their resulting tests is the order in which the questions are asked. We acknowledge asking questions in the same order for each patient introduces some bias and is an issue because some patients will struggle to focus for longer periods of time or are easily fatigued.

4.3. Recommendations for Future Research and Practice

The strict administration and scoring criteria required for cognitive tests can be problematic for the stroke setting. Clinicians and researchers can therefore expect to encounter a number of stroke patients who will be untestable on certain tasks, or patients who are testable, but whose stroke-related impairments result in a misleading test score. While in clinical practice an assessment can be put into context, in research it is more important that a priori rules are set for dealing with incomplete tests. The importance of doing this is highlighted by the fact that our analyses showed different results depending on how partially untestable patients were classified. Numerous approaches exist to deal with missing data [16], but to maximise the utility of the data collected, we recommend, where possible, that researchers make full use of incomplete participant data, rather than applying a complete-case analysis approach.
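To illustrate why an a priori rule matters, the sketch below contrasts two possible ways of totalling a test with unattempted items. Both functions are hypothetical examples of pre-specified rules and are not the methods used in this study or endorsed by it; `None` marks an item that was not attempted.

```python
from typing import Optional, Sequence


def total_complete_case(item_scores: Sequence[Optional[int]]) -> Optional[int]:
    """Complete-case rule: no total is produced if any item is missing."""
    if any(score is None for score in item_scores):
        return None  # patient would be dropped from the analysis
    return sum(item_scores)


def total_missing_as_zero(item_scores: Sequence[Optional[int]]) -> int:
    """Alternative rule: unattempted items are scored as zero (incorrect)."""
    return sum(0 if score is None else score for score in item_scores)


# Hypothetical five-item test in which the fourth item was not attempted.
example = [1, 1, 0, None, 1]
print(total_complete_case(example), total_missing_as_zero(example))
```

The first rule discards the patient entirely, whereas the second retains them but may understate ability when the item was missed for a physical rather than a cognitive reason, which is why the rule should be chosen and reported in advance.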

Tests which incorporate scoring for untestable patients, such as the 4AT, are helpful. Although the 4AT is primarily a delirium screen, the same approach could be applied to general cognitive tests. Guidance documents exist for scoring other stroke scales such as the NIHSS in patients who are comatose, confused, etc., so these types of resources could be made available for challenging cases in cognitive assessment.
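The way the 4AT accommodates untestable patients can be sketched as below. This reflects our understanding of the published 4AT item scoring and is a simplified illustration, not a substitute for the official instrument and guidance (www.the4AT.com); the function and argument names are hypothetical, and `None` denotes an item on which the patient was untestable.

```python
from typing import Optional


def four_at_score(alertness_abnormal: bool,
                  amt4_mistakes: Optional[int],
                  months_backwards_correct: Optional[int],
                  acute_change: bool) -> int:
    """Total 4AT score (0-12) with untestable items scored rather than omitted."""
    score = 4 if alertness_abnormal else 0

    # AMT4: 0 mistakes -> 0, 1 mistake -> 1, 2 or more mistakes or untestable -> 2.
    if amt4_mistakes is None or amt4_mistakes >= 2:
        score += 2
    else:
        score += amt4_mistakes

    # Attention (months backwards): 7 or more correct -> 0, fewer than 7
    # (including a patient who will not start) -> 1, untestable -> 2.
    if months_backwards_correct is None:
        score += 2
    elif months_backwards_correct < 7:
        score += 1

    score += 4 if acute_change else 0
    return score
```

Under this scheme, a patient who is untestable on both cognitive items scores 4 even with normal alertness and no documented acute change, which is consistent with the decision in Section 2.5 not to include delirium in the regression model.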

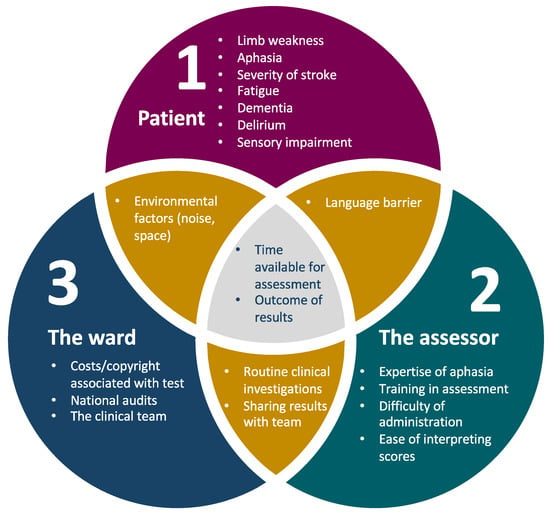

Test completion rates are just one measure of feasibility. Feasibility covers a range of factors relating to the patient, assessor and the ward setting (Figure 2), so future research studies should include data addressing these other perspectives. To date, there has been little data published on the clinician’s experience of cognitive assessment, environmental factors affecting assessment on the ward (noise, space, interruptions) and practical aspects, such as how assessors have misinterpreted administration/scoring instructions. With the increased use of computerised versions of cognitive tests in the future, feasibility issues from the assessor’s side are likely to improve; for example, automatic scoring saves time and reduces scoring errors and subjectivity. Future research should also make use of routinely collected clinical data, such as that collected by the Sentinel Stroke National Audit Programme (SSNAP) and the Scottish stroke care audit in the United Kingdom. One could argue that any study using a researcher to administer a scale, rather than a clinical member of staff, is not truly addressing broader feasibility and implementation issues.

Figure 2.

Factors affecting feasibility of cognitive assessment in acute stroke. Factors listed are illustrative but not exhaustive.

5. Conclusions

In a real-world sample, a quarter of patients in our HASU were classified as fully or partially untestable on brief cognitive screening tests. Clinicians and researchers should make a priori plans on how to address incomplete assessments. Feasibility is a multi-faceted term, and factors from both the clinician's and the patient's point of view should be considered.

Supplementary Materials

The following are available online at https://www.mdpi.com/2075-4418/9/3/95/s1, Table S1: Questions attempted by the partially untestable group (n = 58).

Author Contributions

Conceptualisation, T.J.Q.; data collection, E.E., B.A.D., M.T.-R., R.C.S., G.C.; formal analysis, E.E.; writing-original draft, E.E.; writing-reviewing & editing, all authors; supervision, T.J.Q.

Funding

This research was funded by the Stroke Association, grant number PPA 2015/01_CSO.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Quinn, T.J.; Elliott, E.; Langhorne, P. Cognitive and Mood Assessment Tools for Use in Stroke. Stroke 2018, 49, 483–490.

- Intercollegiate Stroke Working Party. National Clinical Guideline for Stroke, 5th ed.; Royal College of Physicians: London, UK, 2016.

- Nasreddine, Z.S.; Phillips, N.A.; Bedirian, V.; Charbonneau, S.; Whitehead, V.; Collin, I.; Cummings, J.L.; Chertkow, H. The Montreal Cognitive Assessment, MoCA: A brief screening tool for mild cognitive impairment. J. Am. Geriatr. Soc. 2005, 53, 695–699.

- Folstein, M.F.; Folstein, S.E.; McHugh, P.R. “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J. Psychiatr Res. 1975, 12, 189–198.

- Pasi, M.; Salvadori, E.; Poggesi, A.; Inzitari, D.; Pantoni, L. Factors predicting the Montreal cognitive assessment (MoCA) applicability and performances in a stroke unit. J. Neurol 2013, 260, 1518–1526.

- Benaim, C.; Barnay, J.L.; Wauquiez, G.; Bonnin-Koang, H.Y.; Anquetil, C.; Perennou, D.; Piscicelli, C.; Lucas-Pineau, B.; Muja, L.; le Stunff, E.; et al. The Cognitive Assessment scale for Stroke Patients (CASP) vs. MMSE and MoCA in non-aphasic hemispheric stroke patients. Ann. Phys. Rehabil. Med. 2015, 58, 78–85.

- van Zandvoort, M.J.E.; Kessels, R.P.C.; Nys, G.M.S.; de Haan, E.H.F.; Kappelle, L.J. Early neuropsychological evaluation in patients with ischaemic stroke provides valid information. Clin. Neurol. Neurosurg. 2005, 107, 385–392.

- Alderman, S.; Vahidy, F.; Bursaw, A.; Savitz, S. Abstract TP337: Feasibility of Cognitive Testing in Patients with Acute Ischemic Stroke. Stroke 2013, 44, ATP337.

- Collas, D. Detecting Cognitive Impairment in Acute Stroke Using the Oxford Cognitive Screen: Characteristics of Left and Right Hemisphere Strokes, with More Severe Impairment in AF Strokes (P1.190). Neurology 2016, 86, P1.190.

- Horstmann, S.; Rizos, T.; Rauch, G.; Arden, C.; Veltkamp, R. Feasibility of the Montreal Cognitive Assessment in acute stroke patients. Eur. J. Neurol. 2014, 21, 1387–1393.

- Pendlebury, S.T.; Klaus, S.P.; Thomson, R.J.; Mehta, Z.; Wharton, R.M.; Rothwell, P.M. Methodological Factors in Determining Risk of Dementia After Transient Ischemic Attack and Stroke: (III) Applicability of Cognitive Tests. Stroke 2015, 46, 3067–3073.

- Barnay, J.L.; Wauquiez, G.; Bonnin-Koang, H.Y.; Anquetil, C.; Perennou, D.; Piscicelli, C.; Lucas-Pineau, B.; Muja, L.; le Stunff, E.; de Boissezon, X.; et al. Feasibility of the cognitive assessment scale for stroke patients (CASP) vs. MMSE and MoCA in aphasic left hemispheric stroke patients. Ann. Phys. Rehabil. Med. 2014, 57, 422–435.

- Cumming, T.B.; Bernhardt, J.; Linden, T. The montreal cognitive assessment: Short cognitive evaluation in a large stroke trial. Stroke 2011, 42, 2642–2644.

- Kwa, V.I.H.; Limburg, M.; Voogel, A.J.; Teunisse, S.; Derix, M.M.A.; Hijdra, A. Feasibility of cognitive screening of patients with ischaemic stroke using the CAMCOG A hospital-based study. J. Neurol. 1996, 243, 405–409.

- Mancuso, M.; Demeyere, N.; Abbruzzese, L.; Damora, A.; Varalta, V.; Pirrotta, F.; Antonucci, G.; Matano, A.; Caputo, M.; Caruso, M.G.; et al. Using the Oxford Cognitive Screen to Detect Cognitive Impairment in Stroke Patients: A Comparison with the Mini-Mental State Examination. Front. Neurol. 2018, 9, 101.

- Lees, R.A.; Hendry Ba, K.; Broomfield, N.; Stott, D.; Larner, A.J.; Quinn, T.J. Cognitive assessment in stroke: Feasibility and test properties using differing approaches to scoring of incomplete items. Int. J. Geriatr. Psychiatry 2017, 32, 1072–1078.

- Pendlebury, S.T.; Chen, P.J.; Bull, L.; Silver, L.; Mehta, Z.; Rothwell, P.M. Methodological factors in determining rates of dementia in transient ischemic attack and stroke: (I) impact of baseline selection bias. Stroke 2015, 46, 641–646.

- Demeyere, N.; Riddoch, M.J.; Slavkova, E.D.; Bickerton, W.L.; Humphreys, G.W. The Oxford Cognitive Screen (OCS): Validation of a stroke-specific short cognitive screening tool. Psychol. Assess. 2015, 27, 883–894.

- Bennett, D.A.; Brayne, C.; Feigin, V.L.; Barker-Collo, S.; Brainin, M.; Davis, D.; Gallo, V.; Jette, N.; Karch, A.; Kurtzke, J.F.; et al. Development of the standards of reporting of neurological disorders (STROND) checklist: A guideline for the reporting of incidence and prevalence studies in neuroepidemiology. Eur. J. Epidemiol. 2015, 30, 569–576.

- Brott, T.; Adams, H.P., Jr.; Olinger, C.P.; Marler, J.R.; Barsan, W.G.; Biller, J.; Spilker, J.; Holleran, R.; Eberle, R.; Hertzberg, V.; et al. Measurements of acute cerebral infarction: A clinical examination scale. Stroke 1989, 20, 864–870.

- Rankin, J. Cerebral vascular accidents in patients over the age of 60. I. General considerations. Scott. Med. J. 1957, 2, 127–136.

- van Swieten, J.C.; Koudstaal, P.J.; Visser, M.C.; Schouten, H.J.; van Gijn, J. Interobserver agreement for the assessment of handicap in stroke patients. Stroke 1988, 19, 604–607.

- Fearon, P.; McArthur, K.S.; Garrity, K.; Graham, L.J.; McGroarty, G.; Vincent, S.; Quinn, T.J. Prestroke modified rankin stroke scale has moderate interobserver reliability and validity in an acute stroke setting. Stroke 2012, 43, 3184–3188.

- Hodkinson, H.M. Evaluation of a mental test score for assessment of mental impairment in the elderly. Age Ageing 1972, 1, 233–238.

- Swain, D.G.; Nightingale, P.G. Evaluation of a shortened version of the Abbreviated Mental Test in a series of elderly patients. Clin. Rehabil. 1997, 11, 243–248.

- Brodaty, H.; Pond, D.; Kemp, N.M.; Luscombe, G.; Harding, L.; Berman, K.; Huppert, F.A. The GPCOG: A new screening test for dementia designed for general practice. J. Am. Geriatr. Soc. 2002, 50, 530–534.

- Borson, S.; Scanlan, J.M.; Chen, P.; Ganguli, M. The Mini-Cog as a screen for dementia: Validation in a population-based sample. J. Am. Geriatr. Soc. 2003, 51, 1451–1454.

- Brooke, P.; Bullock, R. Validation of a 6 item cognitive impairment test with a view to primary care usage. Int. J. Geriatr. Psychiatry 1999, 14, 936–940.

- Hachinski, V.; Iadecola, C.; Petersen, R.C.; Breteler, M.M.; Nyenhuis, D.L.; Black, S.E.; Powers, W.J.; DeCarli, C.; Merino, J.G.; Kalaria, R.N.; et al. National Institute of Neurological Disorders and Stroke-Canadian Stroke Network vascular cognitive impairment harmonization standards. Stroke 2006, 37, 2220–2241.

- Panenková, E.; Kopecek, M.; Lukavsky, J. Item analysis and possibility to abbreviate the Montreal Cognitive Assessment. Ceska Slov. Psychiatr. 2016, 112, 63–69.

- Ismail, Z.; Rajji, T.K.; Shulman, K.I. Brief cognitive screening instruments: An update. Int. J. Geriatr. Psychiatry 2010, 25, 111–120.

- Champod, A.S.; Gubitz, G.J.; Phillips, S.J.; Christian, C.; Reidy, Y.; Radu, L.M.; Darvesh, S.; Reid, J.M.; Kintzel, F.; Eskes, G.A. Clock Drawing Test in acute stroke and its relationship with long-term functional and cognitive outcomes. Clin. Neuropsychol. 2019, 33, 817–830.

- Larner, A.J. Speed versus accuracy in cognitive assessment when using CSIs. Prog. Neurol. Psychiatry 2015, 19, 21–24.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).