Abstract

Background/Objectives: Breast cancer (BC) has emerged as a significant threat among female malignancies, causing approximately 670,000 deaths in 2022. The capacity to identify BC has advanced over the past two decades because of deep learning (DL), machine learning (ML), and artificial intelligence. Early detection of BC is crucial; yet conventional diagnostic techniques, including MRI, mammography, and biopsy, can be costly, time-intensive, limited in sensitivity, prone to error, and dependent on skilled physicians. This narrative review examines six novel imaging approaches for BC diagnosis. Methods: Optical coherence tomography (OCT) surpasses existing approaches by providing non-invasive, high-resolution imaging. Raman Spectroscopy (RS) offers detailed chemical and structural insights into cancer tissue that traditional approaches cannot provide. Photoacoustic Imaging (PAI) combines strong optical contrast with ultrasonic resolution and deep tissue penetration. Hyperspectral Imaging (HSI) acquires spatial and spectral data, facilitating non-invasive tissue classification with superior accuracy compared to grayscale imaging. Contrast-Enhanced Spectral Mammography (CESM) utilizes contrast agents and dual-energy acquisition to improve the visualization of blood vessels, enhance patient comfort, and surpass standard mammography in sensitivity. Multispectral Imaging (MSI) enhances tissue classification by employing many wavelength bands, resulting in high-dimensional images that surpass the ultrasound approach. The imaging techniques examined in this review are useful for diagnosing tumors, staging them, and guiding surgery. They do not interfere with morphological or immunohistochemical analysis, which remains the gold standard for diagnosing breast cancer and determining molecular characteristics. Results: These imaging modalities provide enhanced sensitivity, specificity, and diagnostic accuracy.
Notwithstanding their considerable potential, the majority of these procedures are not employed in standard clinical practice. Conclusions: Validation, standardization, and large-scale clinical trials are essential for the real-world application of these approaches. The analyzed studies demonstrated that the novel modalities displayed enhanced diagnostic efficacy, with reported sensitivities and specificities often exceeding those of traditional imaging methods. The results indicate that they may assist in early detection and surgical decision-making; however, for widespread adoption, they must be standardized, cost-reduced, and subjected to extensive clinical trials. This study offers a concise summary of each methodology, encompassing the methods and findings, while also addressing the limitations encountered in the imaging techniques and proposing solutions to mitigate these issues for future applications.

1. Introduction

Breast cancer (BC) is among the most prevalent forms of cancer affecting women globally [1]. Its incidence is rising more rapidly in developing nations than in developed ones [2]. According to the World Health Organization’s mortality statistics, there were 2.3 million new cases and 670,000 deaths in 2022, with an annual increase rate of 1% to 5% [3]. In 2020, the International Agency for Research on Cancer, part of the World Health Organization, declared that BC had become the most prevalent cancer worldwide [4].

The staging system for breast cancer is the globally acknowledged Tumor, Node, Metastasis (TNM) classification system, endorsed by guidelines such as NCCN and ESMO. The tumor stage is determined by three primary factors: T (size and location of the primary tumor), N (regional lymph node involvement), and M (absence or presence of metastasis in other areas). Non-invasive breast cancer, referred to as carcinoma in situ (DCIS or LCIS), should not be conflated with invasive carcinoma, which involves the proliferation of tumor cells beyond the basement membrane. The nodal status (N0-N3) and the presence of metastases (M0 or M1) can be utilized to further stratify invasive breast cancers. The accuracy of staging is crucial as it directly influences prognosis and guides the selection of appropriate treatments, including surgery, systemic therapy, and radiotherapy [5,6]. Morphological examination is still the main method of malignant tumor diagnosis. Radiologists, pathologists, and surgeons find it harder to make a diagnosis because there are so many BC patients. They need to know the type and stage of the tumor in order to plan the best treatment. In the past, imaging techniques like mammography, ultrasound, and MRI as well as histopathological analysis of biopsy specimens have been used to diagnose diseases. These methods are still the best way to confirm that a disease is cancerous. However, these traditional methods often face challenges stemming from observer variability, significant workloads, and the complexities involved in detecting early or subtle lesions. Digital pathology, machine learning, and deep learning algorithms are some of the new technologies that have come together in the last few years. These technologies are now a powerful tool that can help doctors find and classify breast cancer more accurately, quickly, and fairly [7].

Optical coherence tomography (OCT) is an imaging technique that produces high-resolution images with near-infrared light, resulting in detailed images comparable to ultrasound images [8]. This procedure is less unpleasant as it does not involve the insertion of devices into the body or any incisions to the skin [9]. OCT generates images that are crisper and sharper than those produced by conventional BC imaging modalities such as mammography and ultrasound. It was first developed to facilitate the management of BC via a non-destructive high-resolution imaging technique for the examination of tumor morphology [10].

The manual diagnosis of BC from mammography images is time-consuming and requires significant effort to identify and classify the type of cancer present [11]. The likelihood of survival is higher when BC is detected at an early stage and diminishes at advanced stages. Women over 40 should attend regular breast screenings, as diagnoses often occur at advanced stages due to neglect of self-examinations and clinical assessments [12]. Numerous robust models such as ResNet, GoogLeNet, and VGG have been developed over the past decade. Deep learning (DL) is a prevalent technique in image segmentation and object recognition, significantly aiding in the detection of cancer in images [13]. BC can be detected using many technologies, including X-ray scans, ultrasound imaging, Computed Tomography scans, and thermography [14]. Currently, several emerging approaches, including OCT, Raman Spectroscopy (RS), Photoacoustic Imaging (PAI), Hyperspectral Imaging (HSI), Contrast-Enhanced Spectral Mammography (CESM), and Multispectral Imaging (MSI), demonstrate enhanced efficiency in the identification of BC.

In recent years, RS has attracted significant interest in cancer research and surgical procedures. It has been utilized to investigate and identify tumors in the brain, breast, lung, skin, uterus, and digestive tract, both in laboratory samples and during surgical procedures [15]. RS is extremely sensitive to minor molecular and structural alterations in tissues, making it effective for diagnosing biochemical changes in those tissues. RS is non-destructive and supplies information regarding biomolecular alterations, and it does not require the tissue to be labeled or stained before detection [16].

Photoacoustic spectrum imaging is a developing, novel, and non-invasive technique utilized for cancer detection. It identifies specific molecules in tissues based on their light absorption properties and can indicate their composition and physical attributes [17]. When a tissue sample is pulsed or modulated with light at specified wavelengths, the absorbed light energy induces fast heating, leading to small tissue expansion that generates measurable pressure waves, thus yielding information about the sample [18]. This approach enables real-time mapping of tumor oxygenation. It detects internal light-absorbing chromophores such as hemoglobin and generates a three-dimensional parametric map of tissue oxygen saturation [19].

In HSI, digital imaging is integrated with spectroscopy. Each pixel of the image records the reflected intensity over a distinct range of wavelengths, referred to as a spectrum [20]. HSI simultaneously captures both spatial and spectral information. Narrowly spaced wavelength bands are captured, leading to the construction of a detailed 3D dataset that illustrates the unique spectral fingerprints of distinct tissues [21]. HSI offers numerous advantages since it is non-invasive, non-contact, rapid, and does not require contrast agents or expose individuals to radiation [22].

In 2001, Lewin et al. proposed CESM, which enhances mammography through the use of intravenous contrast enhancement [23]. CESM distinguishes itself as a comprehensive method relative to other techniques, since it concurrently provides mammogram-like images alongside contrast-enhanced images that yield data on the neo-angiogenesis of lesions [24]. It has established itself as a unique and therapeutically valuable technique for early breast evaluation [25].

MSI is a spectral imaging technique that captures reflection or radiation data from an object using a defined and limited number of spectral bands, encompassing visible and near-infrared light. The reflectance or radiance of the target in each band is recorded and contributes to the spectral information database [26]. The methodology typically relies on spectral bands in the ultraviolet, visible, and infrared ranges to obtain spatial and spectral data from the objects being assessed [27].

There are many well-known problems with traditional diagnostic and morphological methods for breast cancer, even though they are widely used. Mammography and ultrasound are less accurate in women with dense breasts, and they can give false-positive results, which can lead to unnecessary biopsies and anxiety for the patient. Also, mammography exposes the patient to ionizing radiation. MRI is very sensitive and costly, takes a long time, and is not available in all clinical settings. Histopathological diagnosis remains the gold standard; however, this method is invasive due to biopsy sampling, carries a risk of sampling error, and is subject to inter-observer variability among pathologists. All these problems show how important it is to create and test new imaging methods that can either add to or improve existing ones and improve early diagnosis, diagnostic accuracy, and patient outcomes. This study discusses six distinct imaging modalities utilized for the detection of BC. The document comprises various sections, including methodology and a concise overview of each approach, detailing its history, principles, advantages, limitations, and applications. Each strategy is accompanied by case studies that detail the methodology, dataset utilized, and results obtained. The limitations section outlines the constraints of each technique, while the solutions part provides remedies for these limitations. Ultrasound, mammography, CT, and MRI are essential diagnostic modalities in oncology for identifying the primary tumor and assessing disease dissemination. However, the diagnosis of breast cancer and the determination of its molecular subtype are feasible solely following the morphological and immunohistochemical analysis of tumor tissue. The new imaging techniques discussed in this review, such as OCT, RS, PAI, HSI, CESM, and MSI, can be seen as useful diagnostic tools that can be used with morphological tools but not instead of them. 
They might help with early diagnosis, characterizing lesions, and evaluating surgical margins, but they cannot replace the diagnostic pathway that is already in place. This review adds to the literature by providing in-depth analyses of six novel breast imaging modalities that are not yet widely utilized in clinical practice but possess significant potential for early detection, lesion characterization, and intraoperative guidance. It discusses the pros and cons of each method in terms of how easy it is to use in a clinical setting, how comfortable it is for patients, how easy it is to get to, and how well it fits into the workflow. It identifies the evidence gaps, meaning that there are different types of studies, no standardization of endpoints, and not enough large-scale clinical validation, which shows that the current evidence base is weak. It also discusses where each of the modalities would fit best into current clinical regimens and what needs to be done to make them more widely used, while emphasizing the necessity to standardize and implement multicenter trials, as well as to integrate AI and radiomics, in order to maximize the clinical utility of these technologies. The paper aims to bridge the gap between promising research-level performance and actual clinical application, thereby offering a more comprehensive framework for future studies and clinical translation.

2. Literature Search Strategy

Relevant studies on BC were obtained by a comprehensive search of several scientific databases. Examples include ScienceDirect, ResearchGate, and Google Scholar, among others. It employs diverse combinations of keywords and various medical terminologies pertinent to BC, its diagnosis, treatments, and the array of imaging techniques utilized for detection, to identify the most relevant and suitable research publications.

Inclusion and Exclusion Criteria for Review Article

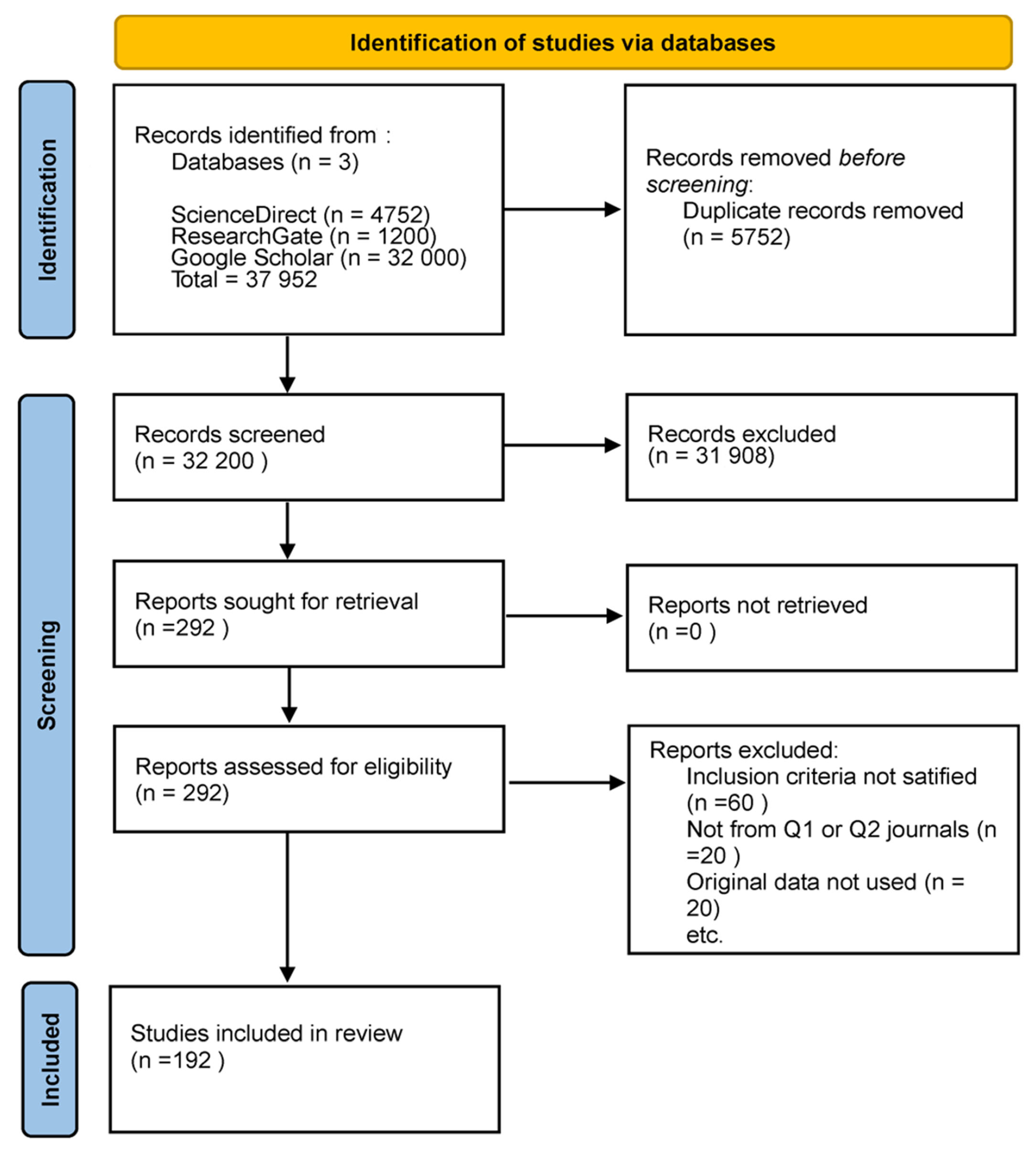

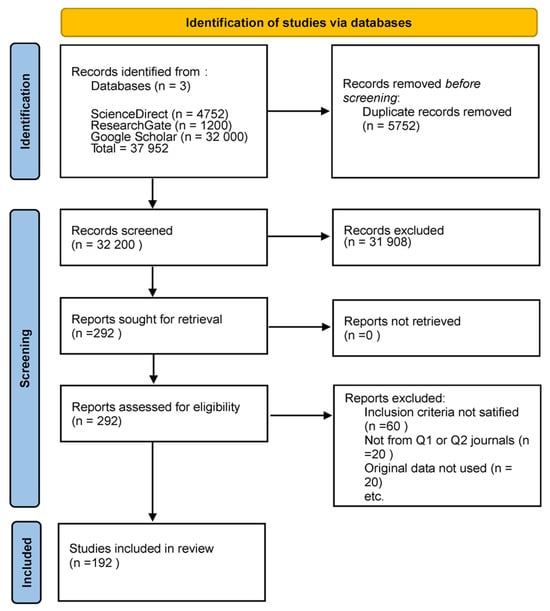

This review exclusively comprised original research studies involving human subjects and incorporating one or more of the emerging imaging methodologies: OCT, RS, PAI, HSI, CESM, or MSI. This review was conducted in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines. A comprehensive search was performed in PubMed, Scopus, Web of Science, and IEEE Xplore for articles published between January 2019 and December 2024 in peer-reviewed journals, primarily those classified as Q1 or Q2, with an H-index of 75 or greater. Studies were required to demonstrate adherence to ethical standards, including institutional approval for human subject research. Case reports, editorials, review articles, meta-analyses, and conference abstracts were excluded, as were studies that lacked methodological detail, did not provide original imaging data, or used exceedingly small sample sizes. Nonetheless, in certain cases, studies falling below these thresholds were included because of their methodological contribution or the limited evidence available for a specific modality. This flexibility allowed a comprehensive overview while maintaining methodological rigor. Figure 1 shows the PRISMA flow diagram of the literature identification, screening, and selection process, detailing the number of retrieved records, the reasons for exclusion during screening and eligibility assessment, and the final sample of studies incorporated in this review.

Figure 1.

PRISMA flow diagram of the literature search and selection process. The figure shows the total number of studies retrieved from databases, the number excluded after screening and eligibility assessment, and the final number of studies included in this narrative review.

While the inclusion criteria primarily focused on human-based clinical studies, specific preclinical and technical studies were also consulted as necessary. The rationale for incorporating these studies is that certain imaging modalities examined in this review have not been fully translated into clinical practice, and preclinical studies may represent the sole evidence regarding a device’s performance, reconstruction limitations, or functionality. Direct harmonization of endpoints across the included studies was not feasible due to significant design heterogeneity. Some studies reported results on a per-patient basis, while others analyzed outcomes on a per-patch or per-lesion basis. In vivo and ex vivo studies differed in their settings, with reference standards ranging from histopathology to radiologic or surgical confirmation. Furthermore, the diagnostic thresholds and performance metrics had not been standardized across modalities. Rather than enforcing artificial congruence, we have presented the metrics as reported in their respective contexts to uphold methodological integrity. This diversity highlights a significant gap in the field, specifically the urgent need for standardized endpoints, harmonized protocols, and uniform reporting criteria to facilitate more meaningful cross-comparison and meta-analysis in future research.

3. Overview of Imaging Techniques

This section provides a concise overview of each imaging technology employed in the detection of BC, covering the history, principles, advantages, limitations, and uses of each modality. In consolidating the results across modalities, per-patient metrics, including sensitivity, specificity, re-excision rates, and time-to-diagnosis, were prioritized because they represent the most clinically relevant indicators of diagnostic efficacy. For clinical applications, results have been aggregated and compared whenever feasible. However, the majority of the included studies reported outcomes at alternative levels of analysis, such as per-lesion, per-patch, or region-of-interest (ROI). These data have not been excluded, as they often provide the sole evidence for new modalities; instead, each measure is reported together with the level of analysis on which it is based. This ensures that exploratory results are not confounded with per-patient clinical outcomes. The heterogeneity in reporting is explicitly acknowledged as a limitation of the current body of evidence. This stratified presentation of results aims to balance methodological rigor and completeness, providing the reader with a clear understanding of the clinical applicability and the developmental stage of each modality at present. It also underscores the necessity for future research to adopt standardized per-patient endpoints and consistent reporting practices, enabling meaningful comparisons across studies and bolstering confidence in clinical assertions.
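To illustrate why the unit of analysis matters, the following minimal sketch computes sensitivity and specificity from the same toy data at the per-lesion and per-patient levels. The data, the function names, and the aggregation rule (a patient counts as positive if any lesion is positive) are assumptions made for illustration only; individual studies may aggregate differently.

```python
from collections import defaultdict


def sensitivity_specificity(pairs):
    """Return (sensitivity, specificity) from (truth, prediction) boolean pairs."""
    pairs = list(pairs)
    tp = sum(1 for t, p in pairs if t and p)
    fn = sum(1 for t, p in pairs if t and not p)
    tn = sum(1 for t, p in pairs if not t and not p)
    fp = sum(1 for t, p in pairs if not t and p)
    sens = tp / (tp + fn) if (tp + fn) else float("nan")
    spec = tn / (tn + fp) if (tn + fp) else float("nan")
    return sens, spec


def collapse_to_patients(lesions):
    """Collapse (patient_id, truth, prediction) rows to one row per patient.

    Assumed rule: a patient is positive if ANY of their lesions is positive.
    """
    truth = defaultdict(bool)
    pred = defaultdict(bool)
    for pid, t, p in lesions:
        truth[pid] |= t
        pred[pid] |= p
    return [(truth[pid], pred[pid]) for pid in truth]


# Hypothetical toy data: (patient_id, malignant on histopathology, flagged by imaging)
lesions = [
    ("A", True, True),
    ("A", True, False),
    ("B", True, False),
    ("C", False, False),
    ("C", False, True),
    ("D", False, False),
]

lesion_sens, lesion_spec = sensitivity_specificity((t, p) for _, t, p in lesions)
patient_sens, patient_spec = sensitivity_specificity(collapse_to_patients(lesions))

print(f"per-lesion : sensitivity={lesion_sens:.2f}, specificity={lesion_spec:.2f}")
print(f"per-patient: sensitivity={patient_sens:.2f}, specificity={patient_spec:.2f}")
```

The two levels yield different values from identical observations, which is why metrics reported at different levels should not be pooled directly.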

3.1. OCT

OCT was initially developed in 1990 and disseminated by researchers at the Massachusetts Institute of Technology in Cambridge, Massachusetts, USA. It was originally employed in ophthalmology for both scientific and clinical purposes due to its applicability in various medical contexts [28]. OCT generates high-resolution cross-sectional two-dimensional pictures of interior tissue microstructures. It was implemented in clinical practice and commercialized in 1996 [29].

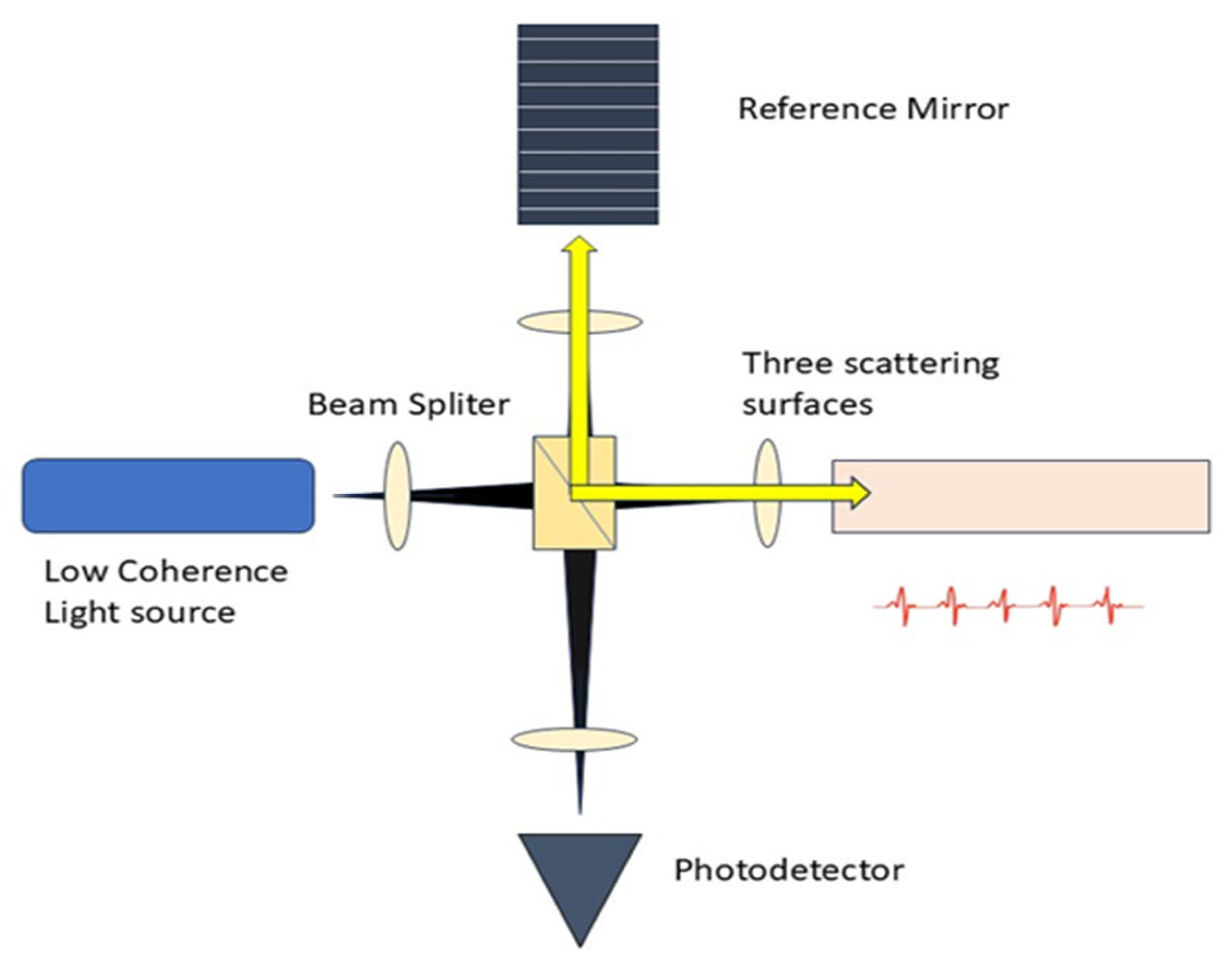

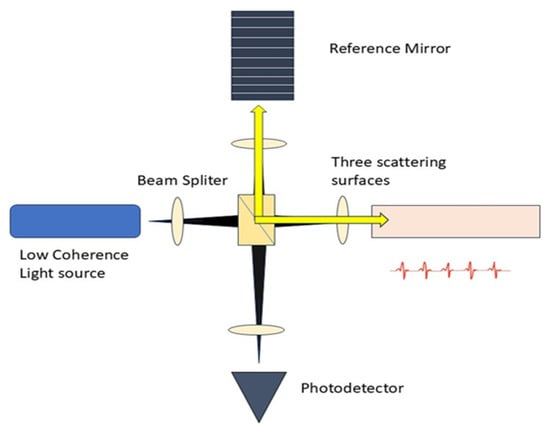

The core concept of OCT involves utilizing low-coherence optical interferometry to detect backscattered near-infrared light, thereby reconstructing the depth profile of biological tissue samples. The initially low resolution of OCT devices has been substantially enhanced, allowing smaller and more subtle changes to be discerned in the images [30]. OCT gathers the backscattered and back-reflected light signals from weakly coherent light waves that impinge on various tissue depths, as seen in Figure 2. A substantial amount of information is produced through horizontal scanning, resulting in the creation of a cross-sectional image. The 3D stereoscopic image of the sample is ultimately generated using longitudinal scanning [31].

Figure 2.

Schematic Diagram of OCT. The setup consists of a low-coherence light source, a beam splitter, reference mirror, and scattering surfaces.
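As a worked illustration of how low coherence translates into depth resolution, the sketch below evaluates the standard textbook expression for the axial resolution of a source with a Gaussian spectrum, Δz = (2 ln 2 / π) · λ0² / (n · Δλ). The center wavelength, bandwidth, and refractive index used here are illustrative assumptions, not values taken from the reviewed studies.

```python
import math


def oct_axial_resolution_um(center_wavelength_nm, bandwidth_nm, refractive_index=1.0):
    """Axial resolution in micrometers for a Gaussian-spectrum source.

    dz = (2 ln 2 / pi) * lambda0^2 / (n * d_lambda), where d_lambda is the
    full width at half maximum of the source spectrum.
    """
    dz_nm = (2.0 * math.log(2.0) / math.pi) * center_wavelength_nm ** 2 / (
        refractive_index * bandwidth_nm
    )
    return dz_nm / 1000.0


# Assumed example source: 1300 nm center wavelength, 100 nm bandwidth
res_air = oct_axial_resolution_um(1300.0, 100.0)
# Assumed tissue refractive index of about 1.4
res_tissue = oct_axial_resolution_um(1300.0, 100.0, refractive_index=1.4)

print(f"axial resolution in air:    {res_air:.2f} um")
print(f"axial resolution in tissue: {res_tissue:.2f} um")
```

Under these assumptions the resolution is on the order of a few micrometers, and a broader source bandwidth gives a finer axial resolution.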

OCT can conduct real-time, non-invasive, and non-contact measurements in reflection to facilitate three-dimensional sample viewing. It requires high acquisition speed or a variable standoff distance between the objective and the sample, particularly when the sample topology is irregular. Limited light penetration constitutes a significant challenge in OCT [32,33]. OCT is extensively utilized in both scientific and clinical settings, including ophthalmology, dermatology, and gastroenterology, among others [34]. It offers a distinctive benefit in lumpectomy, where it can be adapted for intraoperative clinical use.

3.2. RS

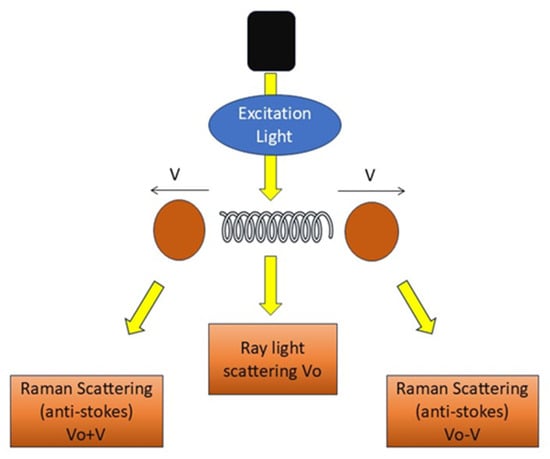

The Raman effect was discovered in 1928 by Chandrasekhara Venkata Raman and Kariamanickham Srinivasa Krishnan. The integration of an optical microscope with a Raman spectrometer in the 1970s utilized laser excitation, enabling the recording of spectra from laboratory specimens for the first time [35]. RS gained prominence in the late 1980s due to advancements in the creation and commercial accessibility of Raman microprobes [36].

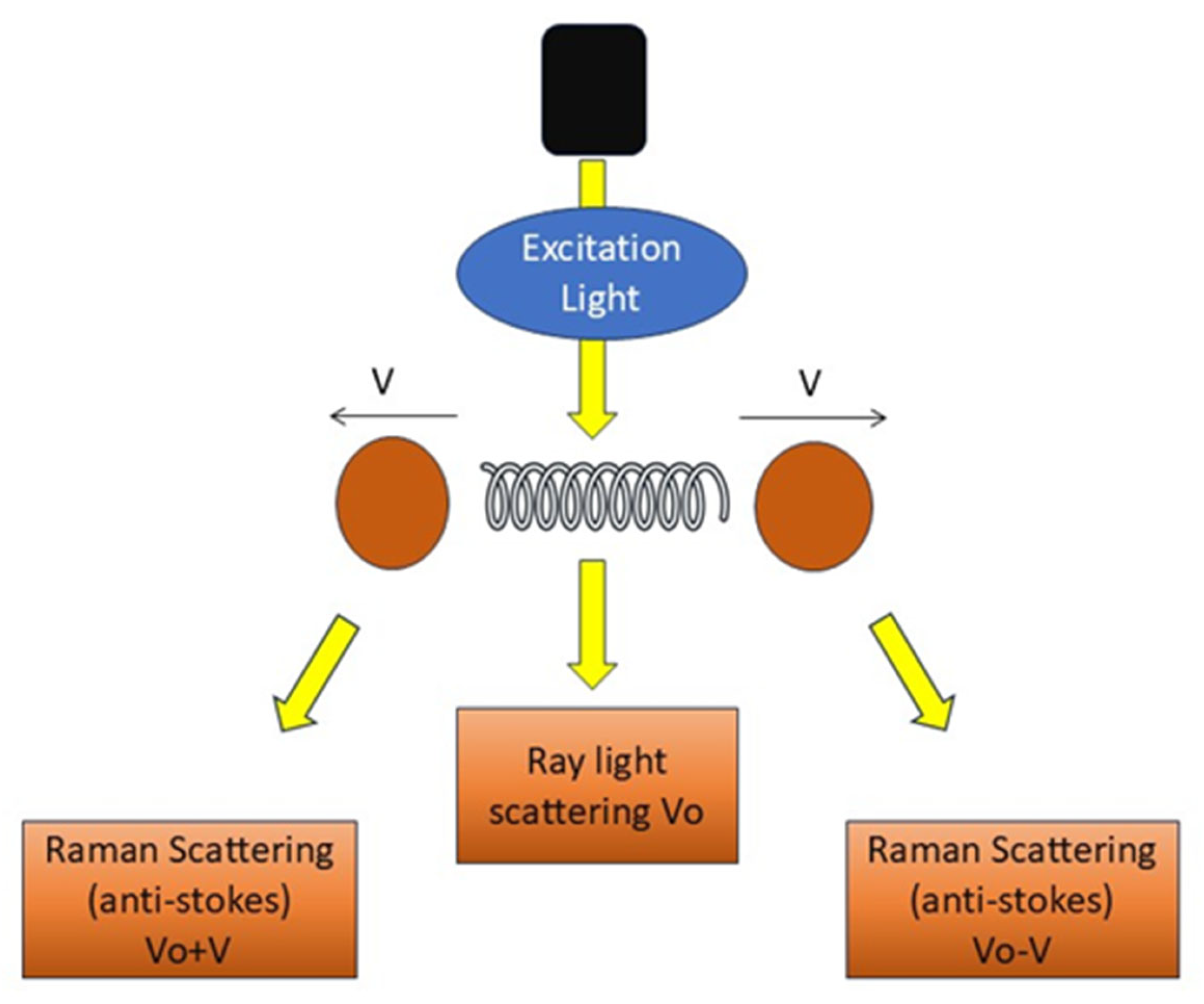

RS employs light effects that directly mirror the structures and chemical states of molecules within a sample, as depicted in Figure 3, facilitating the direct viewing of the chemical responses of biomolecules in living cells and tissues [37]. It offers label-free and comprehensive quantitative data of biological material in situ without disturbance. It generates substantial information with excellent precision. Histochemical approaches employed to advance biological sciences are surpassed by RS [38].

Figure 3.

Principle of RS. Schematic representation of RS showing excitation light interacting with vibrating molecules.
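Raman spectra are conventionally plotted against the Raman shift in wavenumbers, which is the difference between the reciprocal excitation and scattered wavelengths. The following minimal sketch shows this conversion; the 785 nm excitation and 850 nm scattered wavelengths are arbitrary example values, and the function names are hypothetical.

```python
def raman_shift_cm1(excitation_nm, scattered_nm):
    """Raman shift in cm^-1 from excitation and scattered wavelengths in nm."""
    # 1e7 converts reciprocal nanometers to reciprocal centimeters
    return 1e7 / excitation_nm - 1e7 / scattered_nm


def scattered_wavelength_nm(excitation_nm, shift_cm1):
    """Inverse conversion: scattered (Stokes) wavelength in nm for a given shift."""
    return 1e7 / (1e7 / excitation_nm - shift_cm1)


# Assumed example: 785 nm laser, photon detected at 850 nm
shift = raman_shift_cm1(785.0, 850.0)
roundtrip_nm = scattered_wavelength_nm(785.0, shift)

print(f"Raman shift: {shift:.1f} cm^-1")
print(f"Round-trip scattered wavelength: {roundtrip_nm:.1f} nm")
```

Because the shift depends only on the molecular vibration and not on the laser wavelength, spectra acquired with different excitation sources can be compared on the same axis.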

RS demonstrates a significant ability to enhance the precision of cancer diagnosis and surgical interventions [39]. RS offers the benefits of being non-destructive, enabling standoff detection, facilitating optical excitation, and possessing potential for portability [40]. Spectral aberrations or elevated fluorescence hinder accurate identification [41]. It possesses two critical deficiencies: limited repeatability and diminished signal intensity [42]. Raman signal-reporting labels may occasionally yield false-positive outcomes [43]. RS applications exhibit great accuracy in the detection of breast, lung, and various other cancers [44]. RS is employed in interdisciplinary applications, such as integrating optics, computer, and microbiology for imaging complicated samples in three dimensions [45]. RS fingerprints are utilized to non-invasively distinguish organoid phenotypes in both fixed and living salivary gland organoids [46].

3.3. PAI

The origin of photoacoustic imaging, also known as optoacoustic imaging, traces back to Bell’s serendipitous observation in 1880 that chopped (rapidly interrupted) sunlight striking a selenium cell produced audible sound. Since the 1980s, numerous advancements in light delivery technology have enhanced PAI [47].

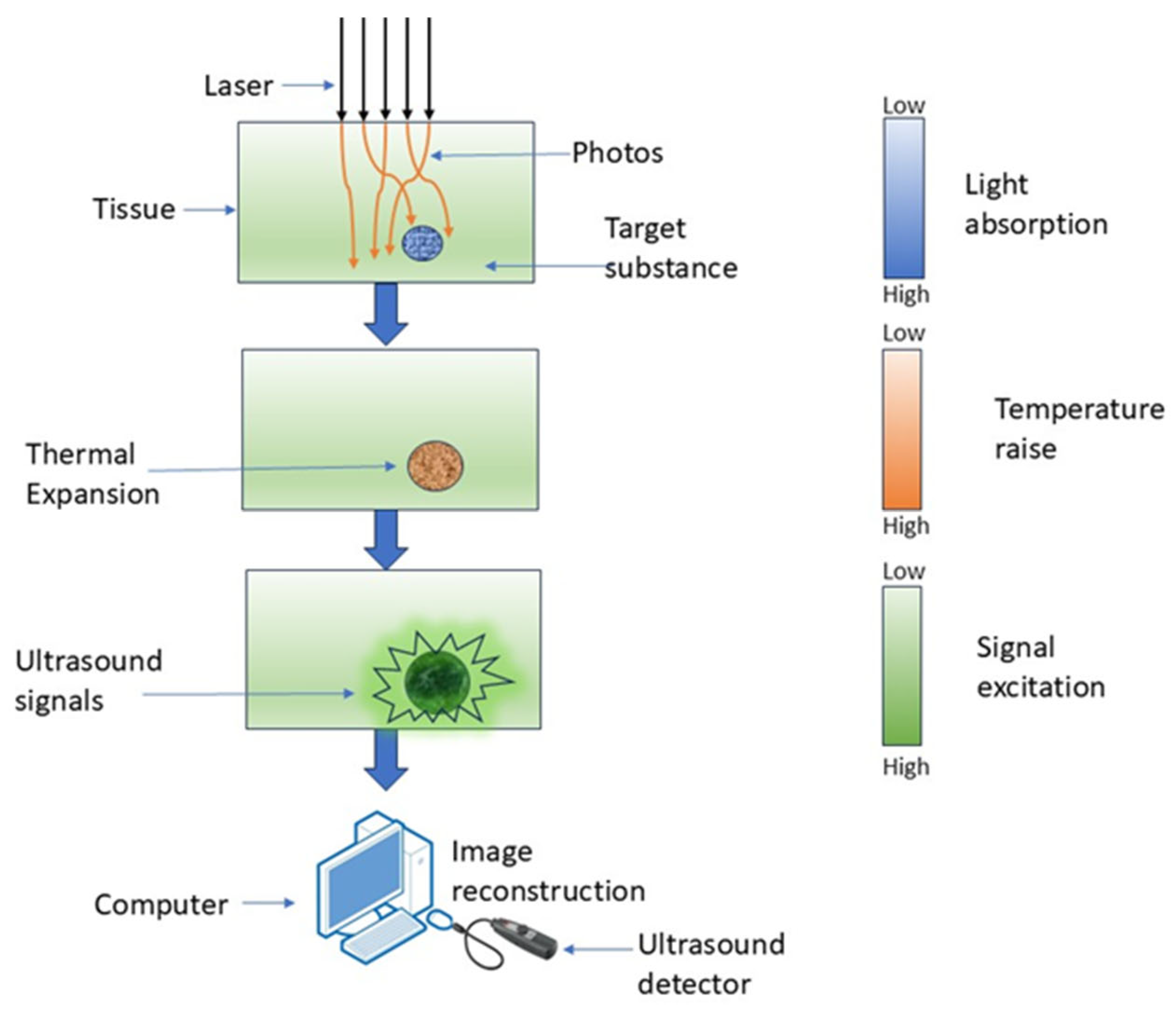

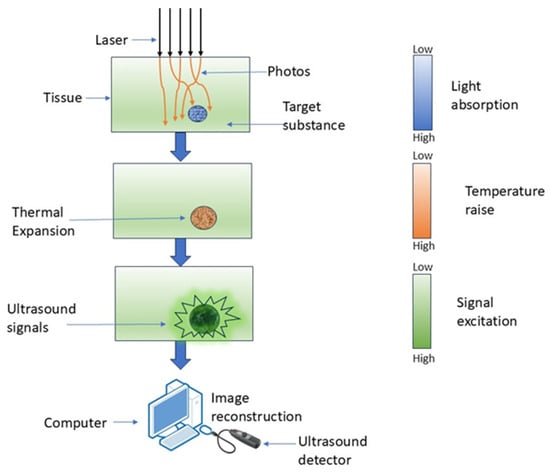

PAI is a hybrid imaging technique wherein optical excitation induces thermal effects in tissue, leading to elastic expansion and the production of an acoustic signal [48]. It employs laser-induced photoacoustic signals to produce highly detailed pictures by detecting the acoustic waves generated from the absorption of pulsed laser light by tissue chromophores [49]. PAI non-invasively retrieves functional tissue parameters at depths of several centimeters using the photoacoustic effect, as depicted in Figure 4 [50].

Figure 4.

Working of PAI, where laser light is absorbed by tissue, causing localized thermal expansion and generation of ultrasound signals.
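The oxygen saturation maps mentioned earlier are commonly obtained by linear spectral unmixing of photoacoustic signals acquired at two or more wavelengths. The sketch below shows the two-wavelength case under strong simplifying assumptions: the signal is taken to be proportional to the absorption coefficient with equal fluence at both wavelengths, and the extinction coefficients are placeholder numbers chosen for illustration, not tabulated values.

```python
# Placeholder extinction coefficients (arbitrary units), NOT tabulated values.
# Each entry is (oxyhemoglobin, deoxyhemoglobin) at one assumed wavelength.
EXTINCTION = {
    750: (0.5, 1.4),
    850: (1.1, 0.8),
}


def simulate_signals(c_oxy, c_deoxy):
    """Forward model: signal(lambda) = eps_oxy * C_oxy + eps_deoxy * C_deoxy."""
    return {wl: e_oxy * c_oxy + e_deoxy * c_deoxy for wl, (e_oxy, e_deoxy) in EXTINCTION.items()}


def unmix_so2(signals):
    """Solve the 2x2 linear system by Cramer's rule and return (C_oxy, C_deoxy, sO2)."""
    (a, b), (c, d) = EXTINCTION[750], EXTINCTION[850]
    s1, s2 = signals[750], signals[850]
    det = a * d - b * c
    c_oxy = (s1 * d - b * s2) / det
    c_deoxy = (a * s2 - s1 * c) / det
    return c_oxy, c_deoxy, c_oxy / (c_oxy + c_deoxy)


# Simulate a voxel with 70% oxygen saturation and recover it from the signals
signals = simulate_signals(c_oxy=0.7, c_deoxy=0.3)
c_oxy_est, c_deoxy_est, so2_est = unmix_so2(signals)

print(f"estimated sO2: {so2_est:.3f}")
```

In practice, wavelength-dependent fluence attenuation in tissue complicates this inversion, which is one reason quantitative accuracy decreases with depth.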

PAI has numerous benefits in high-resolution brain imaging. It combines the advantages of high optical contrast with ultrasound-level resolution at depth, while also facilitating the quantitative assessment of parameters such as oxygen saturation, hemoglobin concentration, and water concentration using multi-wavelength photoacoustic data [51]. A trade-off exists between imaging resolution and penetration depth in PAI [52]. The bandwidth of the detected photoacoustic waves is limited [53]. PAI can be utilized in superficial tumors to improve radiation dose administration, patient stratification, assessment of therapeutic response, and monitoring of radiation-related adverse effects [54].

3.4. HSI

In 1983, the NASA Jet Propulsion Laboratory captured the first hyperspectral image using the world’s first airborne imaging spectrometer [55]. In 1997, HSI gained prominence in clinical research when Freeman et al. employed it to enhance physicians’ capacity to identify unknown issues in clinical methodologies by providing a perspective beyond the naked eye [56]. In 2006, HSI facilitated in vivo observation of cutaneous edema by enabling the visualization of the water percentage in each pixel, together with oxyhemoglobin and deoxyhemoglobin levels [57]. Brain cancer was identified utilizing HSI in 2018, yielding effective results in the detection of high-grade tumors [58].

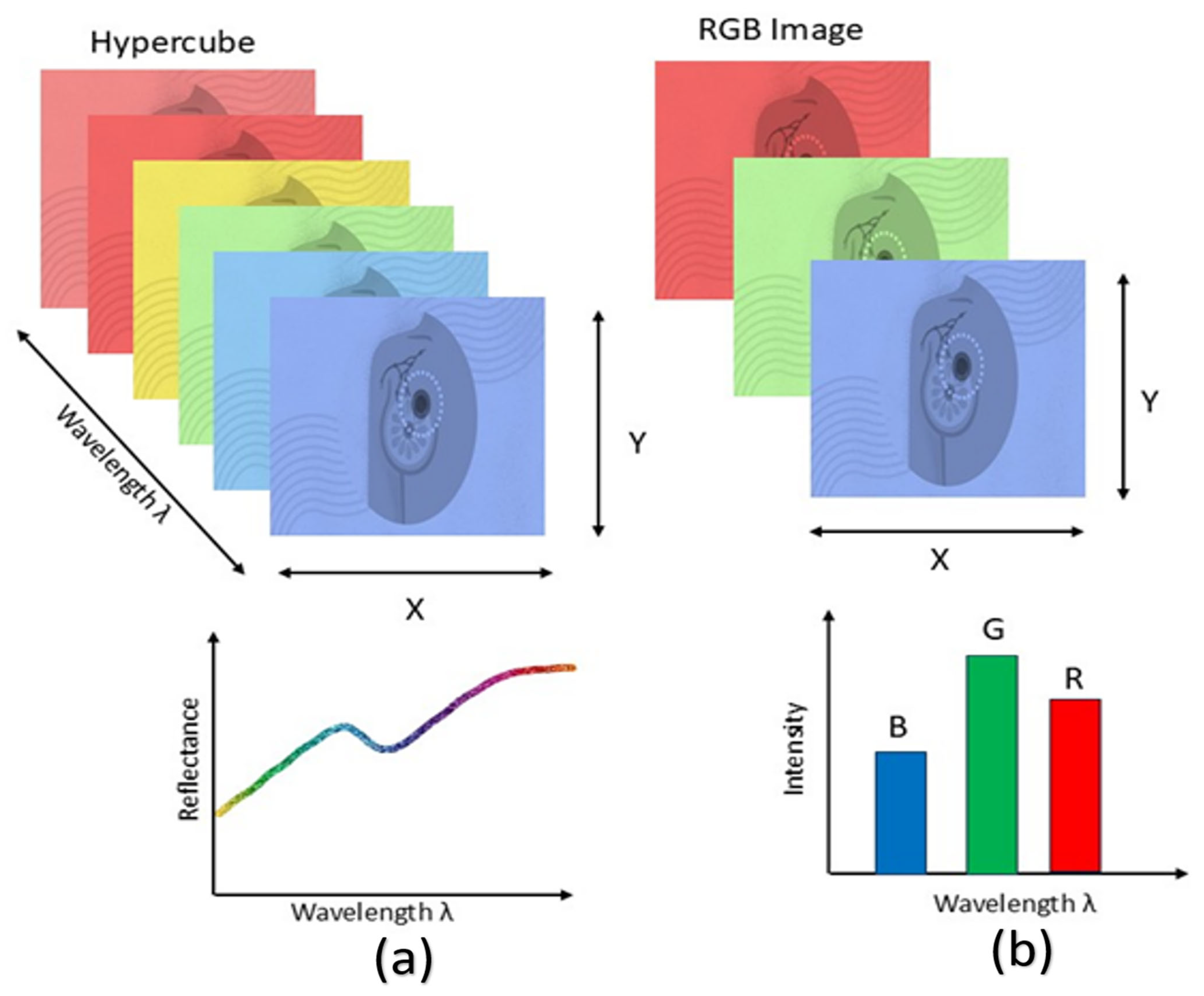

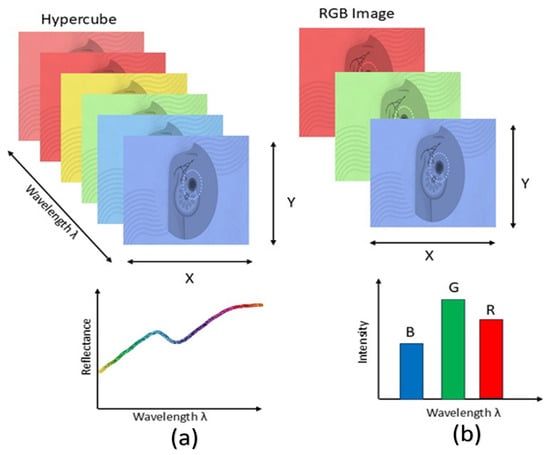

When light in the near-infrared wavelength range interacts with biological tissue, it scatters due to tissue heterogeneity and is absorbed by constituents such as hemoglobin, melanin, and water. The fluctuations in fluorescence, tissue absorption, and scattering characteristics during disease alter the spectrum data captured by the device, which conveys pathological information [59]. A 3D hyperspectral cube can be constructed from the generated image data, incorporating spatial information in two dimensions and spectral information in one dimension. The reflectance is related to the absorption and scattering characteristics of cancerous tissue [60]. Figure 5 illustrates the comparison between HSI and RGB imaging.

Figure 5.

Comparison of HSI and RGB imaging. HSI (a) captures information across a wide range of wavelengths, forming a hypercube that provides detailed spectral signatures for each pixel. In contrast, conventional RGB imaging (b) captures only three broad wavelength bands, limiting the amount of spectral information available.
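The hypercube described above can be represented as a three-dimensional array with two spatial axes and one spectral axis. The following sketch uses synthetic random data to show the typical ways such a cube is sliced; the array sizes and the way three broad bands are averaged to mimic an RGB-like image are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic hypercube: 64 x 64 pixels, 100 narrow spectral bands (assumed sizes)
height, width, n_bands = 64, 64, 100
cube = rng.random((height, width, n_bands))

# Spectral signature of a single pixel: one value per band
pixel_spectrum = cube[10, 20, :]

# Spatial image at a single wavelength band
band_image = cube[:, :, 42]

# RGB-like image: average the spectrum over three broad, contiguous ranges
rgb_like = np.stack(
    [cube[:, :, lo:hi].mean(axis=2) for lo, hi in [(0, 33), (33, 66), (66, 100)]],
    axis=2,
)

# Pixel-by-band matrix, the usual input layout for a per-pixel tissue classifier
pixels = cube.reshape(-1, n_bands)

# Mean spectrum over a region of interest
roi_mean = cube[30:40, 30:40, :].mean(axis=(0, 1))

print(pixel_spectrum.shape, band_image.shape, rgb_like.shape, pixels.shape, roi_mean.shape)
```

Collapsing 100 bands into three broad ones discards most of the per-pixel spectral detail, which is the information HSI-based classification relies on.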

HSI is a non-invasive imaging method in comparison to alternatives, using a wideband light source to assess optical tissue properties across a broad range of electromagnetic bands [61]. HSI cameras have high adaptability and compatibility with current medical devices, including endoscopes, otoscopes, and laparoscopes [62]. HSI covers a broad spectral range encompassing the visible, near-infrared, mid-infrared, and far-infrared regions, with numerous contiguous wavebands. It can also discern items that are difficult to distinguish in natural RGB photos [63].

Hyperspectral cameras remain passive imaging devices. They require an external light source to record objects and depict the intensity of the spectrum, so the acquired images are significantly influenced by the ambient lighting conditions. HSI systems are inoperative in dark or low-light conditions [64]. Higher resolutions lead to longer acquisition times, creating a trade-off between image quality and acquisition duration [65]. HSI is an effective technique for medical applications. Besides cancer diagnosis and neurosurgery, HSI has been utilized in other forms of image-guided surgery, including abdominal surgeries, cholecystectomy, and renal procedures. HSI is also utilized in domains such as remote sensing and food quality assessment [66]. Due to the richness of the information it provides, it can be widely utilized across multiple sectors, including agricultural monitoring, geological exploration, and environmental surveillance [67].

3.5. CESM

The origins of CESM date to 1985, with the advent of digital subtraction angiography for the breast. The objective was to differentiate benign from malignant breast tumors to avoid surgical biopsy [68,69]. The inaugural study on temporal subtraction was published by Jong et al. in 2003, originating from a group in Toronto [70]. In the same year, Lewin et al. introduced CESM utilizing a dual-energy technique, which served as an alternative to the temporal technique [71]. In 2011, the FDA authorized CESM as a medical method for detecting BC [72].

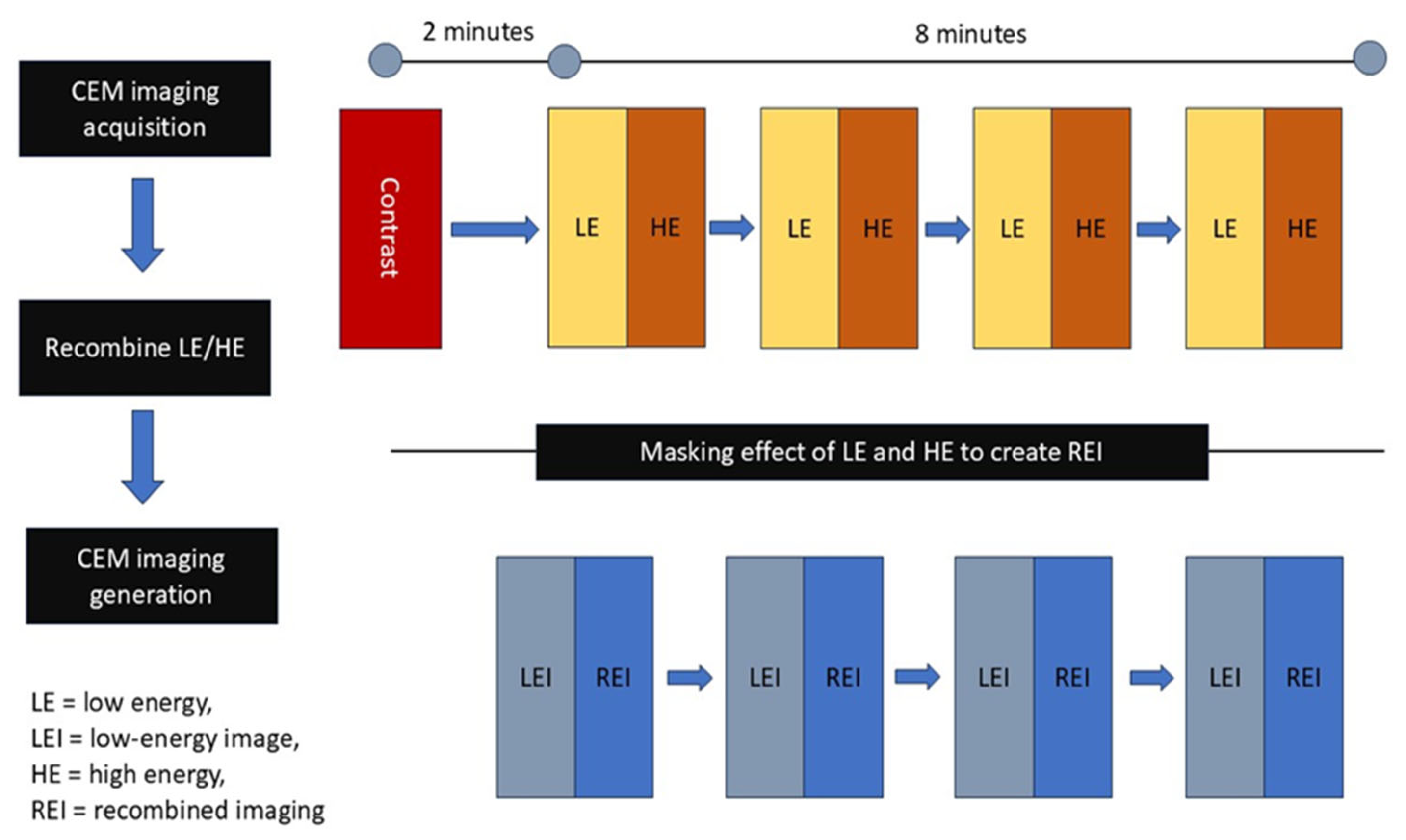

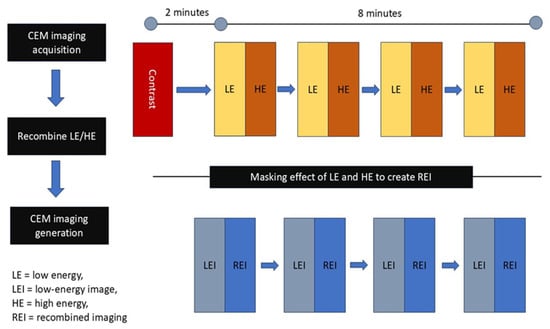

CESM relies on the contrast enhancement created by newly developed proliferating tumor vasculature and the elevated permeability in tumor regions [73]. It provides both the morphological examination of standard digital mammography and an assessment of tumor neovascularity [74]. It integrates mammography principles with the administration of an intravenous iodinated contrast medium, enabling a contrast-enhanced assessment of the breast similar to MR imaging; this highlights regions that take up the contrast medium, indicative of neo-angiogenesis in neoplasms [75]. Figure 6 illustrates the operating principle of CESM.

Figure 6.

Principle of CESM. The CESM process involves acquisition of low-energy and high-energy images after contrast injection, followed by recombination to generate recombined images.
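The recombination step can be illustrated with an idealized weighted logarithmic subtraction, in which the weight is chosen so that the background tissue signal cancels and only the iodine signal remains. The sketch below is a simplified model under stated assumptions (monoenergetic beams, Beer-Lambert attenuation, placeholder attenuation coefficients) and is not the recombination algorithm of any commercial system.

```python
import numpy as np

rng = np.random.default_rng(1)

# Placeholder attenuation coefficients in arbitrary units (assumed, not measured)
MU_TISSUE_LE, MU_TISSUE_HE = 0.8, 0.5   # tissue at low and high energy
MU_IODINE_LE, MU_IODINE_HE = 2.0, 5.0   # iodine below and above its K-edge

# Synthetic breast: varying tissue thickness plus one iodine-enhancing region
thickness = rng.uniform(3.0, 6.0, size=(32, 32))
iodine = np.zeros((32, 32))
iodine[12:20, 12:20] = 0.1

# Beer-Lambert forward model for the two exposures
low_energy = np.exp(-(MU_TISSUE_LE * thickness + MU_IODINE_LE * iodine))
high_energy = np.exp(-(MU_TISSUE_HE * thickness + MU_IODINE_HE * iodine))

# Weight chosen so that the tissue term cancels in the log-subtraction
weight = MU_TISSUE_HE / MU_TISSUE_LE
recombined = np.log(high_energy) - weight * np.log(low_energy)

# After cancellation the image depends only on iodine content
iodine_gain = weight * MU_IODINE_LE - MU_IODINE_HE
print("background mean:", recombined[iodine == 0].mean())
print("lesion mean:    ", recombined[iodine > 0].mean())
```

In this idealized setting the recombined image is independent of tissue thickness and proportional to iodine content; real systems must additionally handle polychromatic spectra, scatter, and noise.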

CESM exhibits diagnostic sensitivity comparable to breast MRI, and its specificity may be superior; it is also an option for individuals in whom breast MRI is contraindicated. It is as dependable as MRI in assessing the efficacy of neoadjuvant chemotherapy [76]. CESM is not influenced by hormonal status. It can therefore offer significant supplementary information for identifying lesions in individuals exhibiting pronounced background parenchymal enhancement, in whom it is difficult to distinguish a lesion from the background [77]. CESM may be constrained by two factors. Firstly, the administration of iodinated contrast medium entails risks of adverse reactions, which may include hypersensitivity reactions and contrast-induced nephropathy. Secondly, CESM involves a greater radiation dose compared to full-field digital mammography due to the dual-energy exposure for each projection [78]. CESM demonstrates superior reliability in diagnosing BC in dense breast tissue compared to traditional mammography [79]. CESM is valuable in high-risk screening and in the interpretation of ambiguous findings, in addition to its role in assessing tumor response to neoadjuvant treatment [80].

3.6. MSI

Multispectral satellite remote sensing dates back to the launch of the first Landsat satellite in 1972. MSI has been crucial in various satellite and aircraft remote sensing systems. In biomedical imaging, it has been utilized for over a decade to improve quantitative accuracy, facilitate multicolor immunohistochemistry analysis, and mitigate the effects of contrast-reducing tissue autofluorescence prevalent in formalin-fixed, paraffin-embedded specimens [81,82].

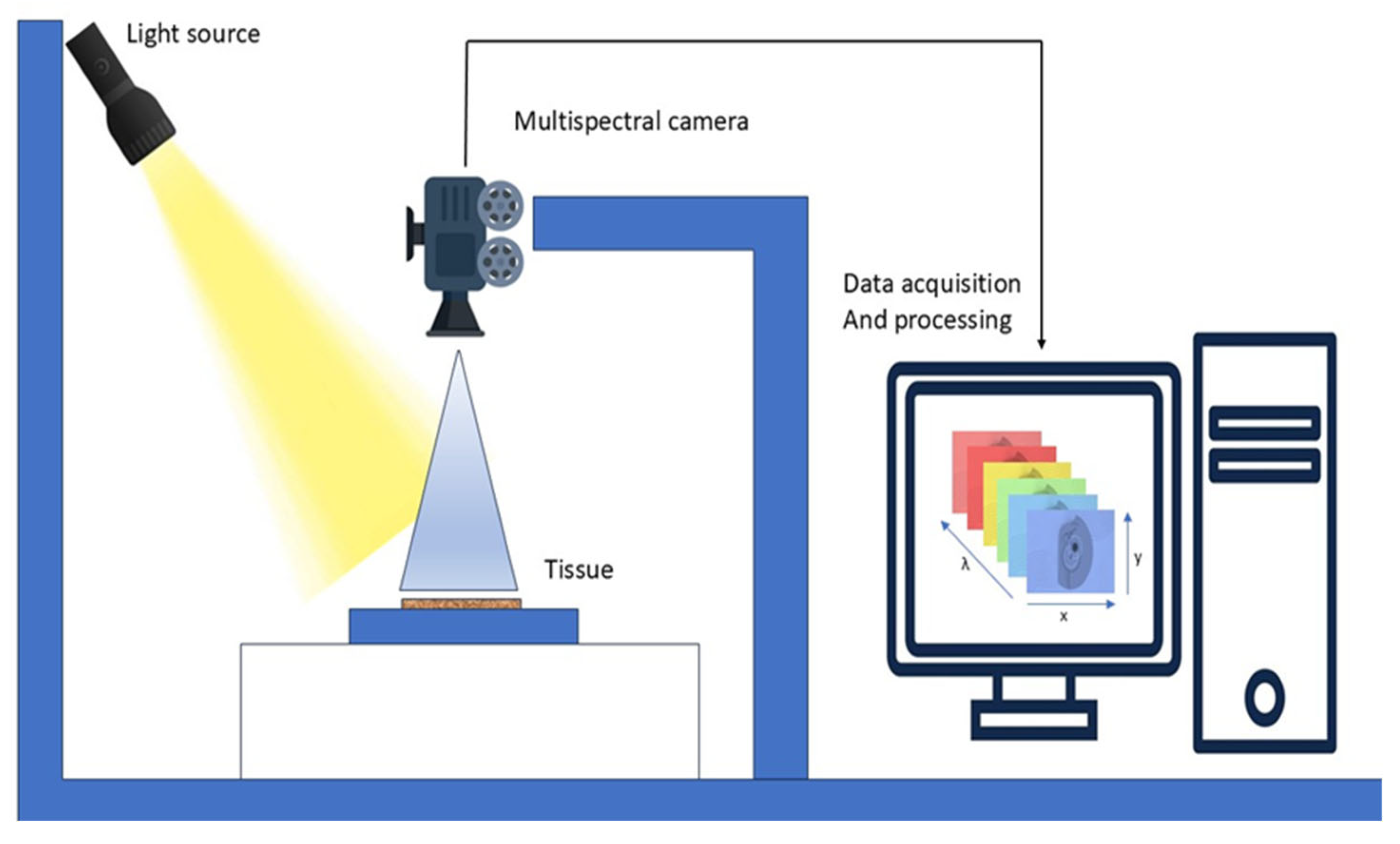

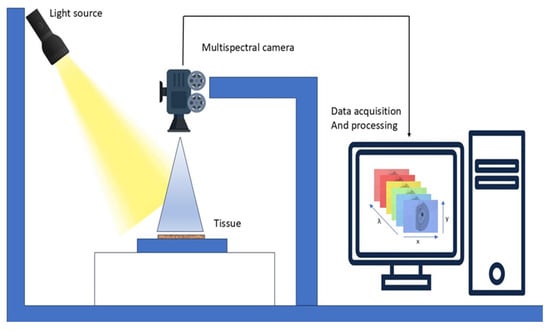

MSI, also called multiband analysis, involves collecting a set of images of the same area at multiple wavelengths, as illustrated in Figure 7. It combines spectroscopy and imaging techniques to obtain spectral images composed of multiple 2-D images. Utilizing the x, y, and lambda axes, MSI constructs a three-dimensional cube. MSI apparatus employs charge-coupled devices, optical filters, and various LEDs across the ultraviolet, visible, and infrared spectral ranges to measure reflectance and acquire images [83].

Figure 7.

Working of MSI. A light source illuminates the tissue sample, and a multispectral camera captures reflected light across multiple wavelengths.

MSI integrates enhanced subsets of HSI, making it more efficient [84]. A significant benefit of MSI in cultural heritage is that it does not necessitate the extraction of samples from the object. MSI facilitates the comparison and contrast of various inks and pigments, while also revealing latent features and insights into the current condition of an object [85]. MSI devices have significant potential for the identification and diagnostic evaluation of melanocytic skin lesions by revealing absorption data invisible to the human eye [86].

Current technologies use conventional ML algorithms that encounter numerous obstacles in managing intricate skin lesion circumstances, hence constraining the clinical application of MSI technology [87,88]. Due to the high dimensionality of MSI, processing is slow, and identifying the ideal spectral range is challenging. Various investigations have shown that MSI enhances conventional RGB imaging in the classification of cancer cells [89]. MSI has facilitated significant advancements across many domains like environmental monitoring, astronomy, agricultural sciences, biological imaging, medical diagnostics, food quality control, aerospace, defense, and biomedicine [90]. It is also applicable in forestry and hydrology [91].

4. Reported Studies on Imaging Techniques

This section presents case studies conducted by researchers on distinct imaging techniques, illustrating the methodologies employed, the datasets utilized, and the outcomes achieved in BC detection. Although it is tempting to compare performance metrics directly across modalities, such comparisons would be unreliable. The underlying studies are highly heterogeneous, differing in patient cohorts, study design, imaging context (in vivo vs. ex vivo), reference standards (histopathology, radiology, or surgical confirmation), and even the unit of analysis (per-patient, per-lesion, or per-patch). Attempting to normalize these outcomes across such varied conditions would risk misrepresenting the actual performance of the techniques and creating misleading equivalence. Instead, we present the reported metrics in aggregate to provide a broad synthesis of the evidence base while preserving the methodological context of each study. This approach highlights the promise of emerging imaging methods while also drawing attention to the current lack of harmonized reporting standards. Future studies should adopt standardized endpoints, consistent reference standards, and multicenter validation to enable meaningful cross-modality comparisons and meta-analysis.

4.1. OCT

In the past decade, there have been significant advancements in the application of OCT imaging techniques for the identification of BC. The following case studies demonstrate the clinical and experimental applications of OCT and various techniques, including FF-OCT, CP-OCT, and PS-OCT. These include DL classification models, intraoperative margin intervals, and the integration of imaging and histology, as outlined in Table 1.

Table 1.

Case Studies on BC Detection Using OCT.

The research conducted by Dhiman et al. used OCT images from 48 patients aged 35 to 60 years, featuring a mixture of healthy and malignant breast tissues, collected from the All-India Institute of Medical Sciences in New Delhi. The ensemble model is constructed by ranking and selecting classifiers using the Technique for Order of Preference by Similarity to Ideal Solution, with optimal weights obtained by the Crow Search Algorithm. The results demonstrate that the suggested ensemble classifier exhibited superior performance metrics, with precision, recall, accuracy, F1-score, Kappa, and MCC values of 92.1%, 92.1%, 92.3%, 0.921, 0.846, and 0.846, respectively [92].
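Metrics such as precision, recall, accuracy, F1-score, Kappa, and MCC recur throughout the studies in this section. As a reading aid, the sketch below shows how they follow from the four counts of a binary confusion matrix. The counts are invented and are not taken from any cited study, and the function name `binary_metrics` is hypothetical.

```python
import math


def binary_metrics(tp, fp, fn, tn):
    """Compute common classification metrics from confusion-matrix counts."""
    n = tp + fp + fn + tn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)            # also called sensitivity
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / n
    f1 = 2 * precision * recall / (precision + recall)
    # Cohen's kappa: observed agreement corrected for chance agreement.
    p_chance = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    kappa = (accuracy - p_chance) / (1 - p_chance)
    # Matthews correlation coefficient.
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / mcc_den
    return {
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "accuracy": accuracy,
        "f1": f1,
        "kappa": kappa,
        "mcc": mcc,
    }


# Invented counts: 45 true positives, 5 false positives,
# 5 false negatives, 45 true negatives.
metrics = binary_metrics(tp=45, fp=5, fn=5, tn=45)
```

The sketch assumes every denominator is non-zero. A production implementation would need to handle empty classes, and it would also need to state whether counts are per patient, per lesion, or per patch, which is one of the sources of heterogeneity noted above.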

Zhang et al. researched 224 D-FFOCT slides of breast tissues derived from 13,497 patches belonging to 129 patients. The Swin Transformer DL model was employed to differentiate between normal and cancerous tissues. The model demonstrated a diagnostic accuracy of 97.62%, a sensitivity of 96.88%, and a specificity of 100%. The intraoperative evaluation technique has been improved, allowing tissue sample assessment to be done in 3 min, markedly less than traditional histopathological approaches [93].

Sanderson et al. utilized data from 16 patients who underwent breast-conserving surgery at Fiona Stanley Hospital in Western Australia, examining 139 in vivo OCT scans and correlating them with ex vivo histology from cavity shavings obtained during the procedure. The spectral domain OCT system was connected to a portable OCT probe. This approach achieved a co-registration rate of 78%, with 109 out of 139 in vivo OCT scans successfully matched to corresponding ex vivo histology [94].

Yang et al. investigated FF-OCT and Dynamic Cell Imaging (DCI) as non-destructive optical imaging modalities that can be rapidly implemented intraoperatively for diagnosis during BC surgery. The analysis covered a dataset of 314 tissue specimens, including 173 breast biopsies and 141 lymph nodes, collected from 158 patients. The results demonstrated diagnostic potential for both modalities. DCI exhibited higher sensitivity and specificity, reaching 88.6% and 95.1%, respectively, compared with FF-OCT, which demonstrated a sensitivity of 85.6% and a specificity of 85.4% in breast tissue evaluation [95].

Simon et al. investigated the application of Dynamic Full-Field OCT for the rapid treatment of BC in a clinical setting, utilizing a dataset including 217 samples from 152 patients, which included 144 breast lesions and 61 lymph nodes. This imaging technique combines high-resolution FF-OCT with DCI to provide structural and metabolic contrast without the need for staining or tissue preparation. The outcomes indicated a sensitivity of 77%, specificity of 64%, positive predictive value of 74%, and negative predictive value of 75% [96].

Gubarkova et al. used the cross-polarization OCT technique for imaging intraoperative ex vivo human BC specimens and conducted a qualitative and quantitative evaluation of 3D CP-OCT data through a depth-resolved methodology for estimating the attenuation coefficient and analyzing cross-polarization channels. A study was conducted on 68 excised human breast specimens, comprising both tumorous and adjacent non-tumorous tissues. The research indicates a diagnosis accuracy ranging from 91% to 99%, sensitivity from 96% to 98%, and specificity from 87% to 99% [97].

Faragalla et al. examined the concept of OCT on preserved and unprocessed breast tissue specimens. A total of 175 tissue samples from 40 breast specimens were scanned using a spectral-domain OCT device. This approach identified that 30% of residual malignancy and invasive carcinoma samples could be differentiated from normal fibroglandular tissue based on heterogeneous texture, uneven margins, and diminished penetration depth. The findings supported the efficacy of OCT in producing an accurate image in BC [98].

Levy et al. conducted a study utilizing a substantial cohort of 585 Wide Field OCT (WF-OCT) margin scans from 151 subjects for training and validation, alongside a distinct and independent test set of 155 margin scans from 29 patients with pathology-verified results. The researchers paired WF-OCT with a compact Convolutional Neural Network (CNN) model derived from the VGG architecture, tailored for real-time, on-device margin diagnosis during BC surgery. The CNN model demonstrated commendable performance, with an AUROC of 0.976, a sensitivity of 93%, and a specificity of 98%. It identified 96.8% of pathology-positive margins, suggesting its efficacy in minimizing reoperations and providing intraoperative decision support [99].

This study by Sun et al. explored the use of paired OCT intensity images and polarization-sensitive OCT images from various human breast tissues to train a DL model. A total of 22,072 PS-OCT images were accurately aligned with the actual images, yielding SSIM scores of 0.8531 for degree of polarization uniformity (DOPU) and 0.6659 for phase retardation. The synthetic PS-OCT attained area under the curve (AUC) values of 0.979 for DOPU and 0.952 for phase retardation in cancer and normal classification, surpassing the natural PS-OCT, which recorded AUC values of 0.975 for DOPU and 0.956 for phase retardation [100].

Basu et al. proposed an advanced full-field polarization-sensitive OCT ensemble model for breast classification, utilizing 220 samples scanned via FF-PS-OCT simulation to extract phase information. The multi-level ensemble classifier achieved a precision of 94.8%, a recall of 92.5%, an F-score of 93.7%, and a Matthews correlation coefficient (MCC) of 82.3% on the testing dataset [101].

4.2. RS

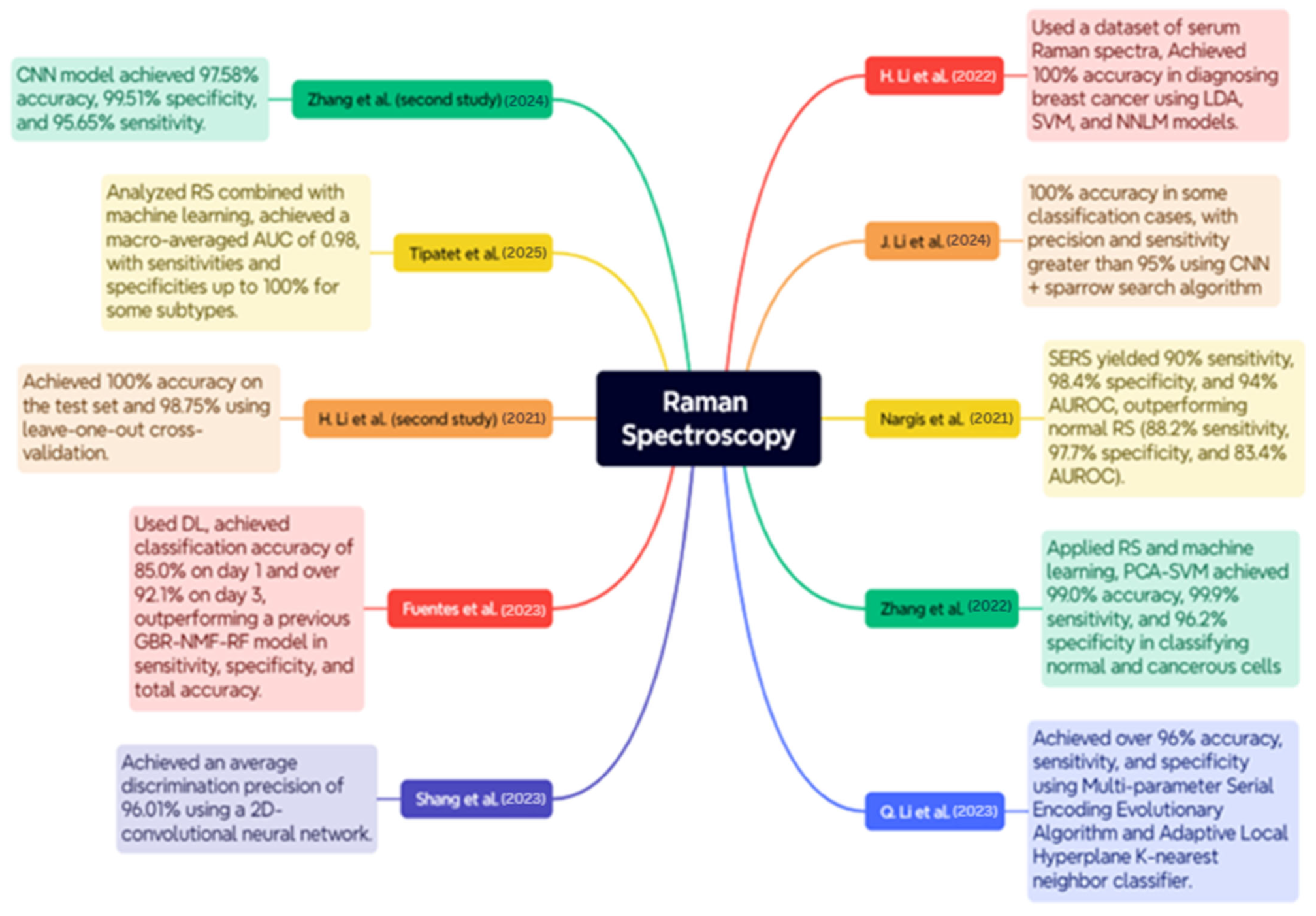

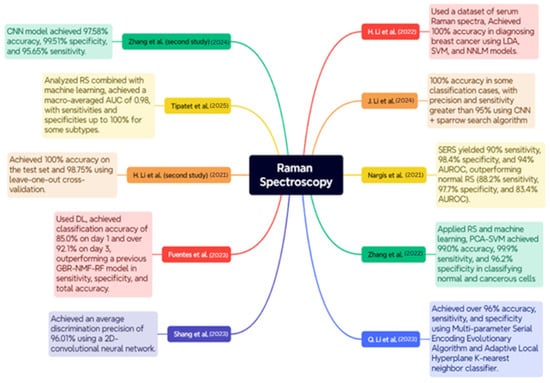

RS is a sensitive, non-invasive optical technique utilized for the diagnosis and classification of BC, as well as for conducting molecular analyses of the disease. The case studies below encompass a wide range of RS applications, including serum and tissue analysis, polarization, and surface enhancement, integrated with ML and DL models to enhance diagnostic accuracy. An overview of the case studies is depicted in Figure 8.

Figure 8.

Case Studies on RS for BC Diagnosis [102,103,104,105,106,107,108,109,110,111].

The study conducted by H. Li et al. utilized a dataset consisting of blood Raman spectra from 171 patients diagnosed with invasive ductal carcinoma and 100 healthy participants. The pre-processing stages included baseline correction by airPLS and a smoothing procedure utilizing Savitzky–Golay. The results demonstrated exceptional classification accuracy for models such as LDA, SVM, and NNLM, achieving 100% accuracy in BC diagnosis [102].

The research conducted by J. Li et al. employed multiple datasets generated by a Monte Carlo sampling method to enhance the model’s validity. The method combined Random Search and CNNs optimized by the Sparrow Search Algorithm. The results indicated that the CNN achieved a 100% accuracy rate in certain classification scenarios, with precision and sensitivity above 95%, demonstrating enhanced diagnostic capabilities and effective feature visualization with Grad-CAM [103].

Nargis et al. conducted a study comparing surface-enhanced Raman spectroscopy (SERS) with conventional RS for classifying BC using serum samples from 17 BC patients and 12 healthy individuals. The results indicated that SERS outperformed conventional RS, achieving 90% sensitivity, 98.4% specificity, and 94% AUROC, in contrast to 88.2%, 97.7%, and 83.4% for sensitivity, specificity, and AUROC, respectively, in conventional RS. The findings support SERS as a non-invasive means of differentiating between BC stages and healthy samples in blood serum [104].

Zhang et al. employed RS and ML to differentiate between BC subtypes utilizing six cultured cell lines of both malignant and normal breast cells: SUM149, MDA-MB-231, MCF-7, ZR-75-1, BT474, and MCF-10A; approximately 4500 Raman spectra were measured in total. The methodologies employed were Principal Component Analysis (PCA), Discriminant Function Analysis, and Support Vector Machine (SVM) analysis. The PCA-SVM achieved 99.0% accuracy, 99.9% sensitivity, and 96.2% specificity in distinguishing between normal and malignant cells, whereas the classification of BC subtype reached 93.9% accuracy [105].

Q. Li et al. employed a feature fusion methodology for feature identification with RS, incorporating adaptive hyperparameter optimization, as a binary classification tool. The dataset samples were obtained from 16 patients at Peking University. The experiment employed the Multi-parameter Serial Encoding Evolutionary Algorithm along with the Adaptive Local Hyperplane K-nearest neighbor (ALHK) classifier. The model exhibited accuracy, sensitivity, and specificity of 96%, surpassing ALHK with manual hyperparameter tuning [106].

Shang et al. employed polarized RS to identify structural and compositional changes in breast tissue associated with cancer. The dataset comprised breast tissue samples from patients at Jiangsu Cancer Hospital, collected with ethical approval and informed consent. The study involved analyzing Raman spectra at various polarization angles to obtain structural information. A 2D-CNN was trained on this spectral data. The average discrimination precision achieved was 96.01%, outperforming traditional methods such as KNN and 1D-CNN [107].

Fuentes et al. employed RS-based DL techniques to identify post-irradiation biochemical changes in tumor tissue. A total of 3054 Raman spectra were acquired on day 1 and 6708 Raman spectra on day 3 following the irradiation of breast tumor xenografts. This method employed Random Sampling and CNNs to train on and classify irradiated and non-irradiated data. The CNN achieved a classification accuracy of 85.0% on day 1 and over 92.1% on day 3, but exhibited poor performance under leave-one-out validation schemes. The CNN demonstrated superior sensitivity, specificity, and overall accuracy compared to a previous GBR-NMF-RF model, making it a promising candidate for early, label-free monitoring of tumor response to radiation [108].

The research conducted by H. Li et al. focused on employing RS for the diagnosis of BC using frozen tissue sections from 22 individuals. The dataset included samples of healthy control, solid papillary cancer, mucinous carcinoma, ductal carcinoma in situ, and invasive ductal carcinoma. Raman spectra were acquired using a confocal Raman microscope with a 633 nm HeNe laser. The researchers employed Principal Component Analysis and Linear Discriminant Analysis to identify the tissue type. The classification model achieved 100% accuracy on the test set and 98.75% using leave-one-out cross-validation. These findings demonstrate the efficacy of combining multivariate analysis with RS to diagnose BC reliably in a label-free manner [109].
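Leave-one-out cross-validation, reported in several of the RS studies above, holds out each sample once and trains on all the others. The sketch below illustrates the procedure only. It substitutes a simple nearest-centroid classifier for the PCA and LDA models used in the cited work, and the two-feature "spectra" are invented for illustration.

```python
def centroid(rows):
    """Mean feature vector of a list of equal-length rows."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def nearest_centroid_predict(train_x, train_y, sample):
    """Assign `sample` to the class with the closest centroid (squared Euclidean)."""
    best_label, best_dist = None, float("inf")
    for label in sorted(set(train_y)):
        members = [x for x, y in zip(train_x, train_y) if y == label]
        c = centroid(members)
        dist = sum((a - b) ** 2 for a, b in zip(sample, c))
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


def leave_one_out_accuracy(xs, ys):
    """Hold out each sample once, train on the rest, and score the held-out one."""
    correct = 0
    for i in range(len(xs)):
        train_x = xs[:i] + xs[i + 1:]
        train_y = ys[:i] + ys[i + 1:]
        if nearest_centroid_predict(train_x, train_y, xs[i]) == ys[i]:
            correct += 1
    return correct / len(xs)


# Made-up two-feature samples (e.g. two band intensities), for illustration only.
features = [[1.0, 1.1], [0.9, 1.0], [1.2, 0.9], [3.0, 3.1], [2.9, 3.2], [3.1, 2.8]]
labels = ["normal", "normal", "normal", "tumor", "tumor", "tumor"]
loo_accuracy = leave_one_out_accuracy(features, labels)
```

When several spectra come from the same patient, holding out one spectrum at a time can leave spectra from that patient in the training set; holding out one patient at a time is the stricter variant.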

Tipatet et al. conducted an analysis of the combination of RS and ML for the diagnosis and classification of BC subtypes. The dataset comprises blood plasma samples from 12 BC patients and 12 healthy volunteers sourced from the Biobank and BC Tissue Bank. The study successfully categorized the four major types of BC: Luminal A, Luminal B, HER2-positive, and Triple Negative, achieving a high level of accuracy in classification. The model achieved a macro-averaged AUC of 0.98, with sensitivity and specificity of 90% and 95% for certain subtypes, indicating that liquid biopsy and RS may be highly applicable for non-invasively subtyping early BC [110].

Zhang et al. employed RS in combination with ML to differentiate between normal and late-stage early malignant breast tissues in a mouse model. A dataset comprising images of 20 female mice implanted with 4T1 BC cells, which simulate human stage IV BC, was utilized. A 785 nm laser was employed for Raman measurements, which were analyzed using Random Forest, SVM, and CNN methodologies. The CNN model exhibited superior performance in classification, achieving accuracy, specificity, and sensitivity rates of 97.58%, 99.51%, and 95.65%, respectively [111].

4.3. PAI

PAI has developed into a hybrid, non-invasive imaging approach that delivers essential structural and functional information regarding BC tissue by integrating optical and ultrasonic imaging modalities. The following case studies outline the clinical and experimental applications of PAI in BC detection, subtype differentiation, and tumor evaluation, with major findings summarized in Table 2.

Table 2.

Case Studies on BC Detection Using PAI.

A study by Tong et al. used a panoramic photoacoustic computed tomography system to clinically evaluate breast tissue in 39 patients. The optimum classifier achieved an area under the Receiver Operating Characteristic curve (AUC-ROC) of 0.89 for differentiating between normal and diseased tissue, comparable to conventional imaging [112].
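The AUC-ROC values reported in this section can be read as the probability that a randomly chosen diseased case receives a higher classifier score than a randomly chosen normal case. A minimal sketch of that interpretation follows; the scores are hypothetical and are not data from any cited study.

```python
def roc_auc(scores_pos, scores_neg):
    """AUC as the probability that a positive case scores above a negative one.

    Ties count as half a win (the Mann-Whitney U formulation).
    """
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Hypothetical classifier outputs for four diseased and four normal cases.
diseased = [0.9, 0.8, 0.7, 0.4]
normal = [0.6, 0.3, 0.2, 0.1]
auc = roc_auc(diseased, normal)
```

Here 15 of the 16 diseased-normal pairs are ranked correctly, giving an AUC of 0.9375. The pairwise loop is quadratic in the number of cases and is meant only to make the definition concrete.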

G. Li et al. investigated preoperative dual-modal photoacoustic ultrasound imaging for early-stage BC involving 324 patients. The model comprising three independent clinical factors achieved an area under the curve of 0.775 in the training cohort and 0.783 in the test cohort. The comparison indicated that the nomogram exhibited optimal performance, achieving an AUC of 0.906 on the training set and 0.868 on the testing set [113].

Huang et al. introduced a PA radiomics technique to categorize breast nodules among 317 patients with lesions classified as BI-RADS 3-5. Radiomic characteristics were combined with clinical factors by univariate and multivariate logistic regression analysis. The optimal model was MODC, which incorporated clinicopathological, ultrasonography, and SO2 values from PAI, with an AUC ranging from 0.815 to 0.950 and a 95% confidence interval [114].

Li et al. conducted in vivo multispectral photoacoustic imaging to distinguish BC molecular subtypes in mice. Fifty xenografts were acquired by implanting human BC cells into the mammary glands of nude mice. PA spectra were acquired from each tumor over a range of wavelengths and analyzed using partial least squares discriminant analysis. The murine model provided significant molecular insights into contemporary approaches. The algorithm demonstrated an accuracy of 72%, with a sensitivity of 66% and a specificity of 78% when utilizing the ideal spectra, surpassing the full range spectra by 6%, 4%, and 8%, respectively [115].

Rodrigues et al. designed a sterile photoacoustic sensor to monitor the in vivo progression of breast tumors and implemented an ML technique for cancer diagnosis, measuring PA spectra at various time points and performing feature extraction. The breast tumor xenografts were produced in five athymic nude mice. An SVM-based classifier was developed to differentiate each tumor stage from the baseline. SVM-RBF, SVM-Polynomial, and SVM-Linear exhibited accuracies of 95.2%, 99.5%, and 80.3%, respectively [116].

Huang et al. conducted a study utilizing photoacoustic radiomics to predict the Ki-67 proliferation index in 359 BC patients. Multivariate logistic regression was employed to obtain significant clinical variables. The system developed a nomogram for high-Ki-67 status utilizing tumor PA image features, a 6 mm peritumoral margin, and clinical data, achieving an AUC of 0.899 on the test set [117].

Guoqiu Li et al. analyzed the characteristics of deep networks using PA data for breast tumor diagnosis in a patient population of 334, comparing CNNs based on ResNet50 with and without an attention mechanism. The PA input model combined with attention screening achieved the optimal performance, evidenced by a training AUC of 0.917 and a testing AUC of 0.870. The sensitivity, specificity, and accuracy were 78.6%, 87.2%, and 83.6%, respectively, in the test set [118].

Zhang et al. investigated a novel multispectral photoacoustic imaging approach to differentiate between healthy and malignant breast tissues. Formalin-fixed paraffin-embedded blocks of healthy and invasive ductal carcinoma human breast tissue were acquired from PrecisionMed, Inc. (Carlsbad, CA, USA). The model employed spectral analysis incorporating PCA and cosine similarity. The imaging device covered a wavelength range of 680 nm to 2000 nm to identify tissue-specific absorption characteristics. The mean correlation between healthy tissue and cancer tissue was determined to be between 0.801 and 0.967, and between 0.762 and 0.954, respectively [119].

The study led by Wang et al. examines the application of PAI in evaluating breast intraductal lesions, utilizing a dataset of 45 patients, of whom two were inadequately imaged, twenty-six exhibited non-intraductal malignancies, and seventeen presented with breast intraductal neoplasms, breast fibromas, or breast adenosis. At a PA score threshold of 2.5, the method demonstrated a sensitivity of 90% and a specificity of 87.5% in differentiating intraductal lesions from benign ones. The results suggest that the PAI is a highly promising non-invasive technique for the accurate diagnosis and BI-RADS classification of breast intraductal lesions [120].

Using PAI and radiomics, Guoqiu Li et al. devised a new approach for BC diagnosis to ascertain the pathophysiology of suspicious lesions categorized as BI-RADS 4-5. The research team utilized data from 119 female patients, along with pertinent ultrasound and PAI data. The authors performed radiomics analysis on PAI images, extracting 1125 features, which were subsequently reduced to six significant features by LASSO regression. The average AUC of the resulting composite model was 0.925 during training and 0.926 during testing, far surpassing that of either BI-RADS or PAI radiomics individually [121].
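As background on how LASSO regression can reduce a large radiomic feature set to a handful of features, the standard linear form of the LASSO objective is given below. This is the textbook formulation, stated under the assumption of a squared-error loss; the cited study may have used a logistic or otherwise modified variant, and the symbols are introduced here for illustration only.

$$
\hat{\beta} = \arg\min_{\beta \in \mathbb{R}^{p}} \; \frac{1}{2n} \lVert y - X\beta \rVert_2^2 + \lambda \lVert \beta \rVert_1
$$

Here $X$ is the $n \times p$ matrix of radiomic features ($p = 1125$ in the study above), $y$ is the vector of outcome labels, and $\lambda \ge 0$ controls the strength of the $\ell_1$ penalty. Because the penalty drives many coefficients $\hat{\beta}_j$ exactly to zero, the features with non-zero coefficients form the selected subset, and a larger $\lambda$ yields a smaller subset.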

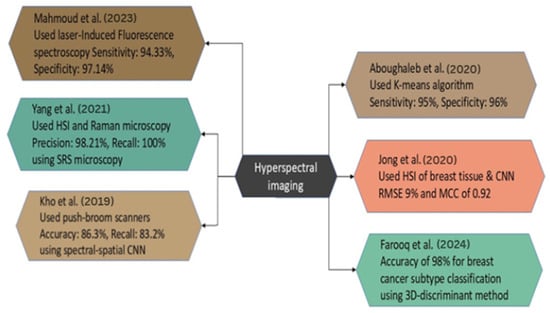

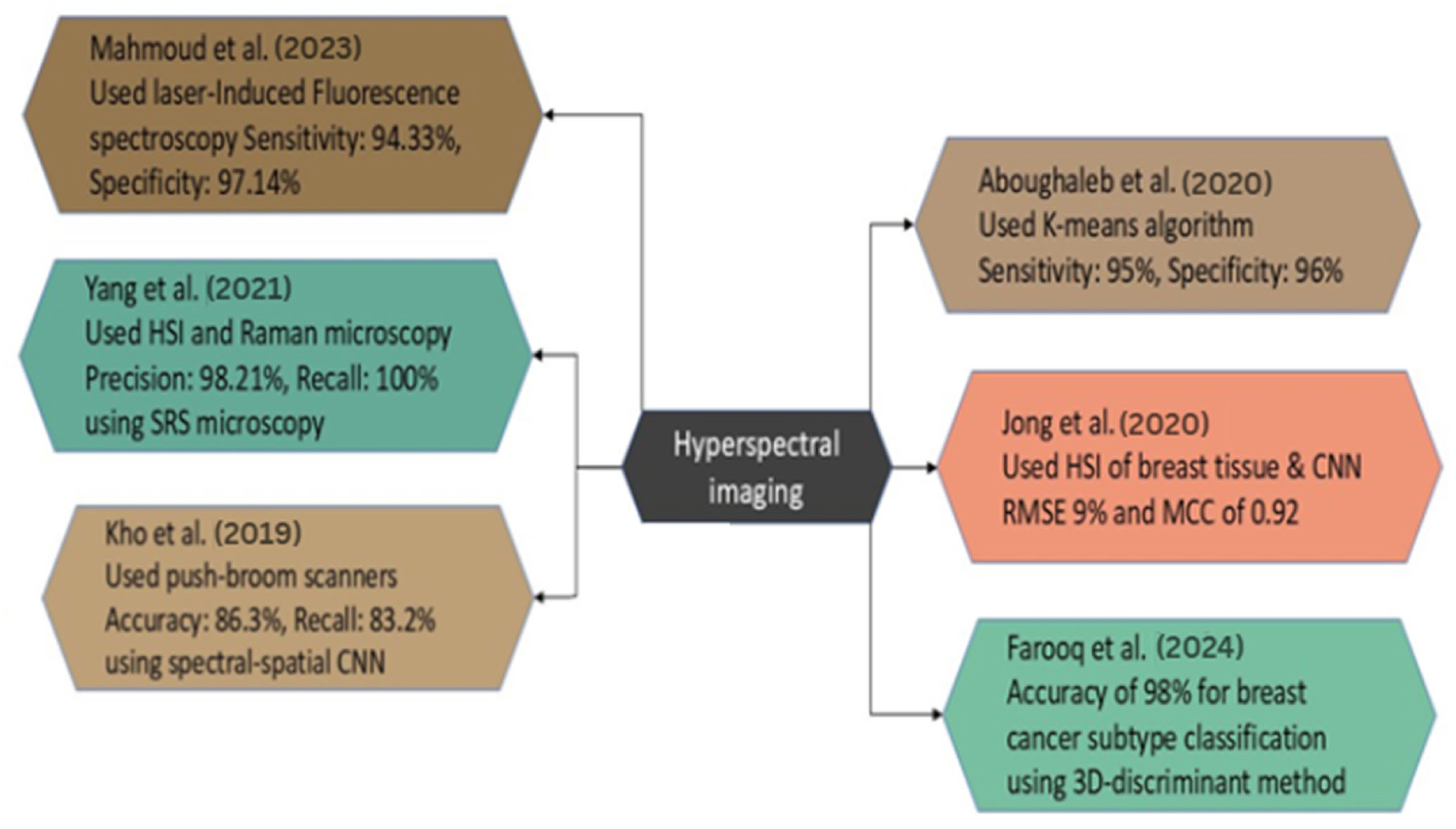

4.4. HSI

HSI represents a novel methodology for the identification of BC. It distinguishes between normal and malignant regions by analyzing the spectrum specific to each tissue type. Recent case studies have employed HSI alongside image processing and ML methods, achieving high accuracy, sensitivity, and reliability. The studies examined are summarized in Figure 9 to offer a comparative overview of methodologies and outcomes.

Aboughaleb et al. conducted a study focused on ex vivo BC detection with hyperspectral imaging. The scientists analyzed resected breast tissue samples from 10 patients diagnosed with BC, utilizing ethical preparation and imaging with a custom-built hyperspectral camera system across a spectral range of 420–620 nm. The methodology employed in HSI involved the acquisition of hyperspectral data with advanced image processing techniques, including normalization, noise reduction through a moving average filter, and spectral grouping utilizing the K-means algorithm. This facilitated the classification of tissues by distinguishing between normal and malignant tissue signals. The results demonstrated high efficacy, with a sensitivity of 95% and a specificity of 96% in detecting tumor margins [122].
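The processing chain described above (normalization, moving-average noise reduction, and K-means spectral grouping) can be sketched as follows. This is an illustrative reconstruction under stated assumptions, not the authors' implementation: min-max scaling stands in for the unspecified normalization, the window size and initialization rule are arbitrary choices, and the five-band spectra are invented.

```python
def min_max_normalize(spectrum):
    """Scale one spectrum to the range [0, 1]."""
    lo, hi = min(spectrum), max(spectrum)
    if hi == lo:
        return [0.0 for _ in spectrum]
    return [(v - lo) / (hi - lo) for v in spectrum]


def moving_average(spectrum, window=3):
    """Smooth with a centred moving average (the window shrinks at the edges)."""
    half = window // 2
    out = []
    for i in range(len(spectrum)):
        segment = spectrum[max(0, i - half): i + half + 1]
        out.append(sum(segment) / len(segment))
    return out


def kmeans(points, k=2, iterations=20):
    """Plain k-means; centroids start at evenly spaced samples for repeatability."""
    step = (len(points) - 1) // max(k - 1, 1)
    centroids = [list(points[i * step]) for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: nearest centroid by squared Euclidean distance.
        labels = [
            min(range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


# Invented five-band spectra: three rising and three falling profiles.
raw_spectra = [
    [10, 12, 30, 50, 55],
    [11, 13, 32, 49, 56],
    [9, 12, 29, 52, 54],
    [60, 50, 30, 14, 10],
    [58, 52, 31, 12, 11],
    [61, 49, 28, 15, 9],
]
preprocessed = [moving_average(min_max_normalize(s)) for s in raw_spectra]
cluster_labels = kmeans(preprocessed, k=2)
```

K-means is unsupervised, so the resulting cluster indices carry no tissue meaning by themselves; mapping a cluster to "normal" or "malignant" requires a reference such as histopathology.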

Jong et al. analyzed HSI data acquired from breast tissue slices and lumpectomy specimens from female patients at the Netherlands Cancer Institute–Antoni van Leeuwenhoek Hospital. The authors presented domain adaptation approaches together with spectral and spectral-spatial CNN models to classify tissue types and evaluate tumor margins. To improve classification accuracy across datasets, the methodology employed a domain adaptation loss function. The models predicted tissue percentages with a root mean square error (RMSE) of approximately 9% and an MCC of 0.92, suggesting a high level of accuracy in distinguishing tumor tissue from healthy tissue within a very short time during surgery [123].

Farooq et al. performed a study on the identification of BC subtypes using HSI of the BT474 and SKBR3 cell lines, with a dataset of 2048 spectra within the fingerprint region. The primary instrument employed was Fourier-transform infrared spectroscopy, with data pre-processed using a Savitzky–Golay filter and extended multiplicative signal correction. The primary analytical methodology was a 3D-discriminant approach, which significantly outperformed conventional unfolding procedures. The new 3D framework exhibited an accuracy of over 98% in classifying BC subtypes, a significant improvement over the 85% accuracy of conventional methods, highlighting its potential for personalized treatments. This approach enhanced sensitivity and specificity to 98% and 94%, respectively [124].

Kho et al. generated hyperspectral images of breast tissue, employing two push-broom HSI scanners to capture images in the visible and near-infrared bands. The wavelength range of 450 to 1650 nm yielded optimal classification performance. The authors employed two classification methodologies: a spectral classification algorithm and a deep CNN that integrates spectral and spatial information. The investigation revealed that the highest classification performance was achieved using the complete spectrum of wavelengths and the fiber-optic spectral-spatial method. The recall for U-Net was 86.3%, while LDA exhibited a recall of 83.2%, with both demonstrating identical recall rates for healthy tissues [125].

Halicek et al. examined hyperspectral imaging data from 102 head and neck cancer patients undergoing surgical resection, with 256 measurement sites across tumor margins. To achieve this, the researchers employed convolutional neural networks combined with spectral-spatial feature extraction, enabling automated tissue classification directly from hyperspectral data validated against histopathology. The classification model developed using this approach achieved notable metrics: 91% sensitivity, 83% specificity, an MCC of 0.69, and an AUC of 0.89, demonstrating strong performance in distinguishing between cancerous and healthy tissue during intraoperative margin assessment [126].

Figure 9.

Case Studies on HSI for BC Diagnosis [122,123,124,125,127,128].

The study by Yang et al. examined breast tumor malignancy through the measurement of tissue calcifications using hyperspectral stimulated Raman scattering (SRS) microscopy. The study utilized specimens from fresh breast biopsies acquired from 23 female patients, comprising 11 benign and 12 malignant cases. Among 211 patients with identified calcification locations, SRS was employed for imaging. The researchers achieved a precision of 98.21% and a recall of 100% in classifying benign and malignant cases by optimizing a combination of the chemical and geometrical characteristics of microcalcifications [127].

In the study of Mahmoud et al., an innovative label-free approach for detecting BC was developed utilizing HSI and laser-induced fluorescence spectroscopy. The study included 10 samples of BC, demonstrating that fluorescence distribution serves as an efficient marker for tumor identification. The average sensitivity was 94.33%, and the average specificity was 97.14% across all samples utilizing image segmentation and K-means clustering methods. This non-invasive technology possesses significant potential to improve the accuracy of diagnosis and surgical planning in BC detection [128].

4.5. CESM

CESM is an effective imaging technique for detecting BC, as it reveals significant lesions through the use of iodinated contrast agents that provide superior image quality. Recent investigations have employed DL, radiomics, and advanced image processing pipelines to improve diagnostic accuracy. The clinical and research characteristics of CESM are delineated in the subsequent case studies and are presented in Table 3.

Table 3.

Studies on BC Detection Using CESM.

Jailin et al. used data from 1673 individuals, comprising a total of 7443 contrast-enhanced mammography images collected from various hospitals and imaging centers. The research employed a DL methodology based on YOLO for the identification and classification of breast lesions on CESM. The optimized model achieved an AUROC of 0.964 in BC classification and detected 90% of tumors at a false positive rate of 0.128 per image. The results indicate that the developed AI model surpasses existing studies and closely approximates the accuracy of radiologists in lesion detection [129].

Bouzarjomehri et al. used the CDD-CESM dataset, comprising 326 patients, to develop a DL framework for BC lesion classification. The framework used an attention-based pipeline that enhanced the accuracy of lesion detection and recognition. The classification pipeline achieved an accuracy of 94.74%, an F1-score of 97.67%, a specificity of 93.75%, and a sensitivity of 95.45%. In the modality comparison, the model trained on DM images had slightly higher accuracy, around 98.85%, compared with 97.47% for CM [130].

In a multicenter study, Mao et al. used preoperative CESM images from 1239 patients with pathologically confirmed diagnoses, divided into training, testing, and validation sets with both internal and external evaluation. Three prevalent CNN architectures, namely DenseNet121, Xception, and ResNet50, served as backbone frameworks, each combined with the convolutional block attention module (CBAM) for classification. The CBAM-augmented Xception model achieved the best performance, with an AUC-ROC of 0.970, sensitivity of 84.8%, specificity of 100%, and accuracy of 89.1% on the external test set [131].

Chen et al. proposed a multiprocess detection and classification system (MDCS) for the detection and automated classification of breast lesions, using a large multicenter dataset of 1903 CESM scans from female patients. On the pooled external and prospective testing sets, respectively, the system achieved average free-response receiver operating characteristic scores of 0.953 and 0.963 for detection and AUC values of 0.909 and 0.912 for classification. The MDCS was also efficient, with an average reading time of 5 s compared with 3.2 min for radiologists, and it significantly enhanced radiologists’ performance when used as an assistive tool [132].

Miller et al. conducted a retrospective cohort study covering 2014 to 2020, reporting on 159 suspicious breast findings referred to the institution for CESM and tissue sampling. The study employed logistic regression and penalized linear discriminant analysis to characterize the radially distributed mammographic density and contrast enhancement. Density histograms alone outperformed a random classifier, with an accuracy of 62.37%, while concatenating density histograms with contrast histograms yielded a notable improvement, achieving 71.25% accuracy. Furthermore, incorporating demographic and clinical patient data, such as age, race, previous history of BC, menopausal status, and breast density, yielded an improved AUC-ROC of 0.81 [133].

Moffa et al. compared CESM with conventional tests, specifically digital mammography and breast ultrasonography, for diagnosing women with dense breasts. The study involved 51 patients with 65 breast lesions, collected between March 2021 and February 2022. In the CESM procedure, a non-ionic iodinated contrast agent was intravenously administered via power injection at a dose of 1.5 mL/kg. Each view was then acquired as a pair of low-energy and high-energy exposures, which were processed using specialized software to produce recombined images. The data demonstrated that CESM had high diagnostic capability, with a sensitivity of 93.5%, specificity ranging from 79.4% to 82.4%, and a significantly enhanced accuracy of 86.1% to 87.7% [134].

Lin et al. conducted a retrospective study to develop a radiomics nomogram for distinguishing benign from malignant tumors smaller than 1 cm in the breast utilizing CESM. This dataset comprises 139 patients with lesions smaller than 1 cm, including 39 malignant and 100 benign lesions, which are further categorized into training and validation cohorts. Independent predicting factors were determined by ANOVA and multivariate logistic regression. The data revealed that the radiomics nomogram outperformed Rad-score, BI-RADS category, and age, achieving a 0.940 AUC with a 95% confidence interval in the validation cohort, surpassing both Rad-score alone and the clinico-radiological model [135].

Zheng et al. performed a study involving 1912 patients with a discrepant breast lesion identified using CESM. Researchers established a completely automated pipeline utilizing RefineNet and Xception with Pyramid pooling for the segmentation and categorization of breast tissue. It employs a channel fusion technique and pyramid scene parsing networks to utilize the features produced by low-energy and recombined CEM. The system’s performance yielded favorable results, with the Dice similarity coefficients recorded at 0.888 ± 0.101, 0.820 ± 0.148, and 0.837 ± 0.132, respectively. For classification, the AUC values were 0.947, 0.940, and 0.891 in the external and prospective test sets, respectively, indicating a high level of accuracy and potential clinical utility [136].
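The Dice similarity coefficient used above to score segmentation measures the overlap between a predicted mask and a reference mask as twice the number of shared pixels divided by the total number of pixels in both masks. A minimal sketch with invented 3 x 4 binary masks follows; the function name `dice_coefficient` is hypothetical.

```python
def dice_coefficient(pred_mask, true_mask):
    """Dice = 2 * overlap / (size of prediction + size of reference)."""
    intersection = 0
    pred_total = 0
    true_total = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            intersection += 1 if (p and t) else 0
            pred_total += 1 if p else 0
            true_total += 1 if t else 0
    if pred_total + true_total == 0:
        return 1.0  # both masks empty: treated here as perfect agreement
    return 2.0 * intersection / (pred_total + true_total)


# Invented binary masks (1 = lesion pixel), for illustration only.
predicted = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 1, 0],
]
reference = [
    [0, 1, 1, 0],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
]
dice = dice_coefficient(predicted, reference)
```

In this example each mask contains five lesion pixels and four of them coincide, so the coefficient is 2 x 4 / (5 + 5) = 0.8.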

Gouda et al. studied a cohort of women diagnosed with BC and suspected multifocal or multicentric disease. The dataset comprised 60 patients, and the authors used a retrospective design to examine those who underwent CESM and contrast-enhanced MRI before surgery. CESM exhibited a sensitivity of 97% and an accuracy of 95%, closely comparable to MRI, which had a sensitivity of 99% and an accuracy of 94%. The specificity of CESM (67%) was considerably higher than that of MRI (33%), suggesting that CESM may serve as a useful alternative in the preoperative assessment of BC [137].

Song et al. proposed a hybrid DL framework to improve BC detection with CESM. The framework comprises two modules: a generative adversarial network (GAN)-based module that fuses the two CESM imaging modalities, and a Res2Net-based classification module. The dataset comprised 760 CESM images from 95 patients aged 21 to 74. The results indicated that the complementary features of both modalities were preserved in the fused images, and the classification model performed well: 94.784% accuracy, 95.016% precision, 95.912% recall, 94.5% specificity, 95.5% F1-score, and 0.947 AUC [138].

Pediconi et al. examined the diagnostic efficacy of CESM with a high-concentration iodinated contrast medium (HCCM) at 400 mg/mL. The study involved 205 patients who underwent CESM at two Italian imaging centers between March 2021 and February 2022. The findings showed high diagnostic accuracy, with sensitivity ranging from 96% to 97%, specificity from 84% to 87.5%, and overall accuracy between 93% and 95%. The AUC ranged from 0.90 to 0.92, indicating that CESM combined with HCCM performs well in detecting and distinguishing aggressive breast tumors [139].

Sun et al. examined the factors that may affect the performance of radiomics models in classifying breast lesions on CESM. The dataset comprised 157 women with 161 breast lesions, drawn retrospectively from contrast-enhanced mammograms acquired between November 2018 and February 2020. Radiomic features were extracted from the CESM images, and two models were evaluated, both of which performed well. The least absolute shrinkage and selection operator (LASSO) model yielded an average AUC of 0.926 ± 0.047, an accuracy of 89.5% ± 6.1%, a sensitivity of 89.1% ± 8.5%, and a specificity of 90.8% ± 9.6%. The Random Forest model yielded an average AUC of 0.915 ± 0.055, an accuracy of 88% ± 6.8%, a sensitivity of 87.8% ± 9.7%, and a specificity of 88.6% ± 10.8% [140].

In a separate study, Song et al. created a DL-based classification model called MVMM-Net to enhance the identification of BC using CESM. The dataset comprised 760 CESM images from 95 patients. The procedure consisted of three steps: preprocessing to integrate the multiview and multimodal information of CESM, deep feature extraction using a Res2Net50 network, and final classification with MVMM-Net. The model achieved an accuracy of 96.591%, sensitivity of 96.396%, specificity of 96.350%, precision of 96.833%, F1-score of 0.966, and AUC of 0.966 [141].

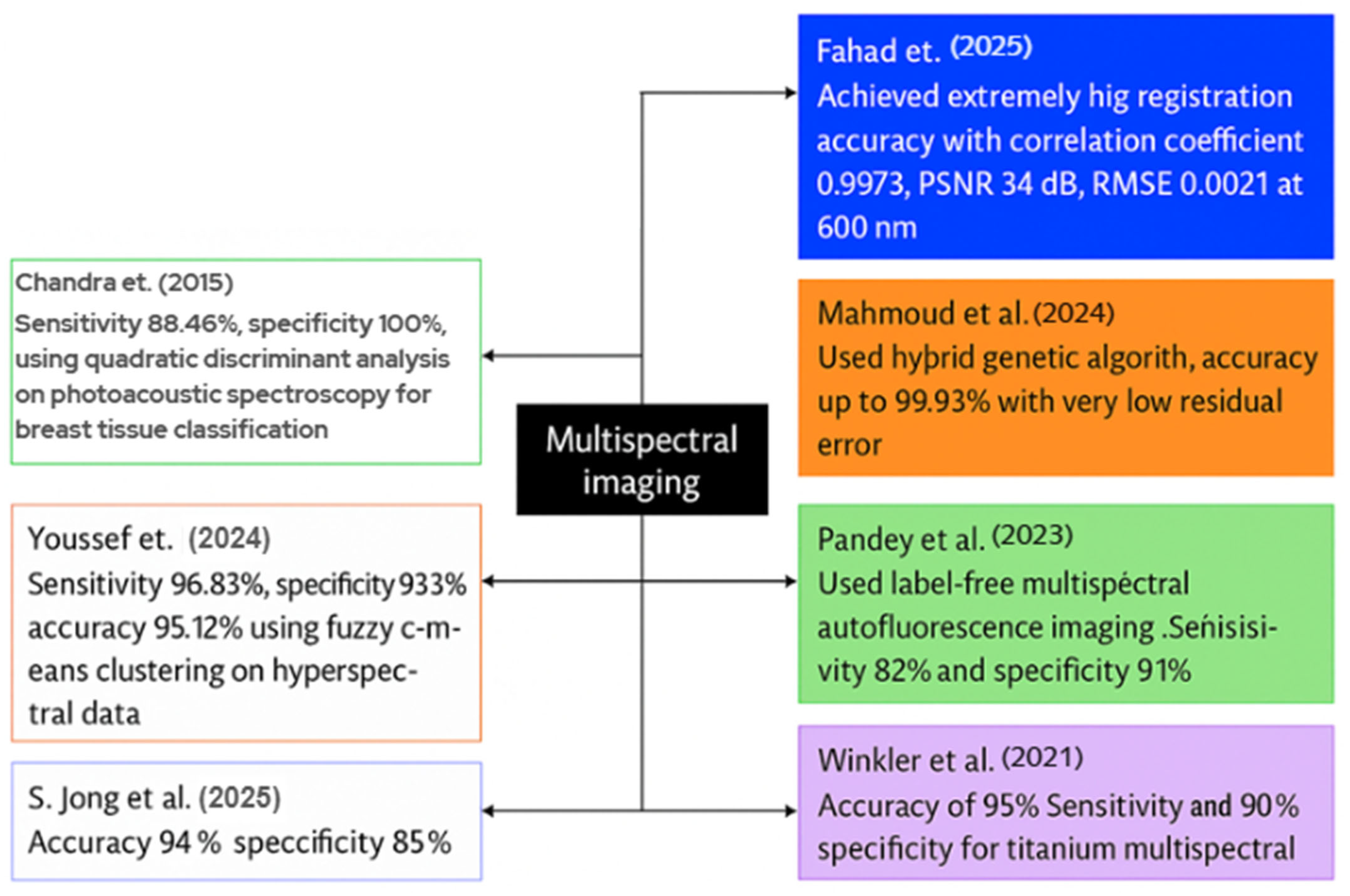

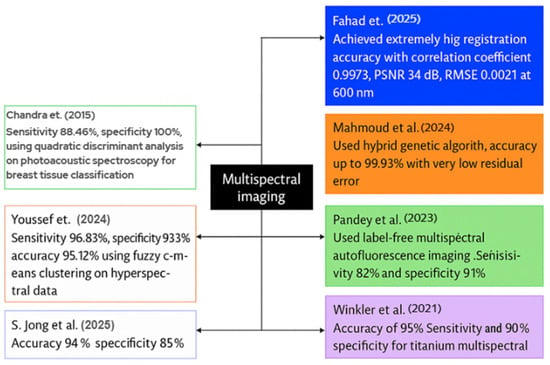

4.6. MSI

MSI enhances BC diagnostic accuracy by providing comprehensive spectral information on tissues. Recent studies report high accuracy, contrast, and diagnostic reliability achieved by combining imaging with ML and optimization techniques. This subsection presents various MSI techniques employed for BC detection. Figure 10 provides a graphical overview of recent MSI studies in the detection of BC.

Figure 10. Visual representation of recent MSI case studies in BC detection [142,143,144,145,146,147,148].

Fahad et al. introduced a deep-learning system for the registration and enhancement of multispectral breast transillumination images. The team captured transmission images of human participants using a smartphone camera at four LED illumination wavelengths: 600 nm, 620 nm, 670 nm, and 760 nm. The model employed a hybrid vision-transformer and LSTM network to align and integrate successive multispectral frames. The reported correlation coefficient and RMSE were 0.9973 and 0.0211 at 600 nm, 0.9893 and 0.043 at 620 nm, 0.995 and 0.0248 at 670 nm, and 0.982 and 0.0388 at 760 nm [142].
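
Correlation coefficient and RMSE, the two figures of merit quoted above, can both be computed directly from pixel intensities. The sketch below assumes that the comparison is between a registered frame and a reference frame with intensities scaled to the range 0 to 1; the source does not state this, and the function names and sample values are hypothetical.

```python
import math


def pearson_correlation(a, b):
    """Pearson correlation coefficient between two equal-length intensity sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    covariance = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return covariance / math.sqrt(var_a * var_b)


def rmse(a, b):
    """Root-mean-square error between two equal-length intensity sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


# Hypothetical normalized pixel intensities (illustrative values only).
reference_frame = [0.10, 0.40, 0.55, 0.80, 0.95]
registered_frame = [0.12, 0.38, 0.57, 0.79, 0.97]

r = pearson_correlation(reference_frame, registered_frame)
error = rmse(reference_frame, registered_frame)
print(f"correlation = {r:.4f}, RMSE = {error:.4f}")
```

A correlation close to 1 together with a small RMSE indicates that the two frames agree both in structure and in absolute intensity; correlation alone would not detect a uniform brightness offset.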

Mahmoud et al. designed a metaheuristic framework to optimize the number of frames required for high contrast in multispectral breast imaging. Two healthy adult female volunteers participated, and the researchers acquired a ten-second video clip under LED illumination at four wavelengths: 600 nm, 620 nm, 670 nm, and 760 nm. A hybrid of a genetic algorithm and constriction-factor particle swarm optimization was employed to align and average the frames at each wavelength. Registration accuracy reached 99.93% with minimal residual error, leading to significant enhancements in image contrast [143].

Pandey et al. examined label-free multispectral autofluorescence imaging for the diagnosis of BC. The authors obtained 172 fresh lumpectomy tissue blocks from 115 patients and analyzed their autofluorescence with an ex vivo multispectral scanner. No dyes were introduced; instead, endogenous fluorophores were excited to produce multispectral fluorescence emission data. In validation, the system exhibited a sensitivity of 82%, specificity of 91%, positive predictive value of 84%, and negative predictive value of 89% in distinguishing malignant from benign regions [144].
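
Sensitivity, specificity, positive predictive value, negative predictive value, and accuracy recur throughout the studies summarized here, and all five derive from the same four confusion-matrix counts. The sketch below shows the standard definitions; the function name and the counts are hypothetical and are not taken from any of the cited studies.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Standard diagnostic metrics from confusion-matrix counts.

    tp/fn: malignant cases classified correctly/incorrectly.
    tn/fp: benign cases classified correctly/incorrectly.
    """
    def ratio(numerator, denominator):
        # Return None instead of raising when a class is absent.
        return numerator / denominator if denominator else None

    return {
        "sensitivity": ratio(tp, tp + fn),   # share of malignant cases detected
        "specificity": ratio(tn, tn + fp),   # share of benign cases cleared
        "ppv": ratio(tp, tp + fp),           # share of positive calls that are malignant
        "npv": ratio(tn, tn + fn),           # share of negative calls that are benign
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
    }


# Hypothetical counts for 100 regions (illustrative values only).
metrics = diagnostic_metrics(tp=45, fp=10, tn=40, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Unlike sensitivity and specificity, the two predictive values depend on how common malignancy is in the sample, so they are not directly comparable between cohorts with different proportions of malignant cases.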

Winkler et al. conducted a study of 20 patients, all of whom received multispectral MRI immediately before and after the placement of titanium marker clips. The multispectral approach acquired dual-echo data instead of a traditional single-echo image, with the aim of using the signal variance between the two echo times to improve the visibility of the metal clips. With the 2-bin MSI model, clip detection achieved a sensitivity of 90%, a specificity of 100%, and an accuracy of 95%, in contrast to the insufficient detection rate observed with typical single-echo scans [145].

To differentiate between normal and malignant breast tissue, S. Jong et al. employed MSI and spectral unmixing techniques. The group obtained fresh tissue samples from 189 patients who underwent lumpectomy, using 151 samples for training and 38 for testing. A linear unmixing algorithm was used to extract pure spectral fingerprints of healthy and malignant tissue from the training set, and a K-nearest-neighbor classifier was then trained on the resulting unmixing features. The findings indicated a sensitivity of 94% and a specificity of 85% in pixel-level tumor detection on the independent test set [146].
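
The combination of linear unmixing and a K-nearest-neighbor classifier described above can be illustrated with a toy example. The sketch assumes only two endmember spectra and unconstrained least squares, which is a simplification and not necessarily the formulation used by the authors; all spectra, abundances, labels, and function names are hypothetical.

```python
import math
from collections import Counter


def unmix_two_endmembers(pixel, healthy, tumor):
    """Least-squares abundances (a_h, a_t) such that pixel ~ a_h*healthy + a_t*tumor."""
    hh = sum(h * h for h in healthy)
    tt = sum(t * t for t in tumor)
    ht = sum(h * t for h, t in zip(healthy, tumor))
    ph = sum(p * h for p, h in zip(pixel, healthy))
    pt = sum(p * t for p, t in zip(pixel, tumor))
    det = hh * tt - ht * ht
    if abs(det) < 1e-12:
        raise ValueError("endmember spectra are linearly dependent")
    # Solve the 2x2 normal equations by Cramer's rule.
    return (ph * tt - pt * ht) / det, (pt * hh - ph * ht) / det


def knn_predict(features, train_features, train_labels, k=3):
    """Majority vote among the k training points closest in Euclidean distance."""
    ranked = sorted(
        (math.dist(features, f), label)
        for f, label in zip(train_features, train_labels)
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical four-band endmember spectra (illustrative values only).
healthy_spectrum = [0.8, 0.6, 0.3, 0.2]
tumor_spectrum = [0.3, 0.4, 0.7, 0.9]

# A pixel mixed as 25% healthy and 75% tumor.
pixel = [0.25 * h + 0.75 * t for h, t in zip(healthy_spectrum, tumor_spectrum)]
abundances = unmix_two_endmembers(pixel, healthy_spectrum, tumor_spectrum)

# Hypothetical labeled abundance pairs standing in for a training set.
train_features = [(0.9, 0.1), (0.8, 0.2), (0.7, 0.3), (0.2, 0.8), (0.1, 0.9), (0.3, 0.7)]
train_labels = ["healthy", "healthy", "healthy", "tumor", "tumor", "tumor"]

label = knn_predict(abundances, train_features, train_labels, k=3)
print(abundances, label)
```

Unmixing reduces each pixel's full spectrum to a small number of abundance values, so the classifier operates on a compact feature vector in which distances have a direct physical meaning.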

Youssef et al. developed a visible-near-infrared MSI setup to facilitate the automated identification of BC in tissue samples. The authors scanned the excised breast specimens ex vivo across a broad range of wavelengths and applied fuzzy c-means clustering to the hyperspectral cube to delineate the tissue regions. The system performed well in classifying tumor versus normal tissue regions, with a sensitivity of 96.83%, a specificity of 93.39%, and an accuracy of 95.12% on the test dataset of tissue samples [147].
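
Fuzzy c-means differs from hard clustering in that each pixel receives a degree of membership in every cluster rather than a single label. The sketch below applies the standard update rules to one-dimensional intensities, which is a simplification of clustering a full spectral cube; the data, initial centers, and function names are hypothetical and do not reproduce the authors' implementation.

```python
def fcm_memberships(values, centers, m=2.0, eps=1e-9):
    """Membership of each value in each cluster; every row sums to 1."""
    exponent = 2.0 / (m - 1.0)
    rows = []
    for x in values:
        # eps avoids division by zero when a value coincides with a center.
        dists = [abs(x - c) + eps for c in centers]
        rows.append([
            1.0 / sum((dj / dk) ** exponent for dk in dists)
            for dj in dists
        ])
    return rows


def fuzzy_c_means_1d(values, initial_centers, m=2.0, iterations=50):
    """Alternate membership and center updates for a fixed number of iterations."""
    centers = list(initial_centers)
    for _ in range(iterations):
        memberships = fcm_memberships(values, centers, m)
        for j in range(len(centers)):
            weights = [row[j] ** m for row in memberships]
            centers[j] = sum(w * x for w, x in zip(weights, values)) / sum(weights)
    return centers, fcm_memberships(values, centers, m)


# Hypothetical normalized intensities forming two groups (illustrative values only).
intensities = [0.10, 0.12, 0.15, 0.80, 0.85, 0.90]
centers, memberships = fuzzy_c_means_1d(intensities, initial_centers=[0.0, 1.0])
print([round(c, 3) for c in centers])
print([round(row[0], 3) for row in memberships])
```

The fuzzifier `m` controls how soft the partition is: values close to 1 approach hard assignment, while larger values spread membership more evenly across clusters.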

Chandra et al. introduced a photoacoustic spectroscopy method for the label-free identification and classification of breast cancer tissue. A pulsed 281 nm beam from a Nd:YAG laser system illuminated fresh ex vivo breast tissue samples, comprising 28 normal and 28 malignant specimens. The photoacoustic spectra were captured in the time domain, converted into frequency-domain patterns, and examined by quadratic discriminant analysis. The investigation revealed significant differences between tissue types, with the classification model achieving 100% specificity and 88.46% sensitivity within the frequency range of 406.25–625.31 kHz, indicating its potential for clinical breast tumor diagnosis [148].

Khouj et al. reviewed a dataset of hyperspectral images of breast tissue specimens from 10 patients, consisting of tissue sections on slides that included both hematoxylin and eosin (H&E) stained and unstained samples. To characterize the tissues, the researchers employed a snapshot hyperspectral imaging system operating in the visible spectrum (461–641 nm), which enabled the identification of spectral differences between normal and ductal carcinoma in situ (DCIS) tissues. The classification model applied the K-means unsupervised clustering algorithm to the hyperspectral data cubes, and the analysis indicated that wavelengths near 550 nm discriminated most effectively between tissue types. The method attained 85.45% sensitivity, 94.64% specificity, a true negative rate of 95.8%, and a false positive rate of 4.2%, suggesting a strong ability to distinguish DCIS from healthy tissue in histologic assessment [149].

5. Barriers to Clinical Adoption

This section examines the main problems that researchers have encountered with each technique. These limitations are the primary obstacles to employing these approaches for real-time BC detection.

5.1. OCT

5.1.1. Constraints of Physical Imaging Resolution and Penetration

OCT is a useful tool for examining subsurface tissue, but it has inherent limitations. A major concern is its shallow penetration: Gubarkova et al. found that OCT can scan to a maximum depth of approximately 1.5 mm. Only a limited tissue volume is therefore examined, and regions of coagulation and hemorrhage further alter the characteristics of the OCT signal and optical attenuation. Thouvenin et al. stated that the low sensitivity of the faster cameras employed by OCT restricts the penetration depth to 50 μm. This implies that cancer cells situated deeper within the tissue may go undetected. Acquisition time is also longer with FF-OCT because of the camera’s frame-rate limit. The integration of FF-OCT with structured illumination microscopy showed inadequate robustness against aberrations and multiple scattering, with limited penetration into scattering samples beyond 30 to 50 μm [150].

5.1.2. Speed and Real-Time Processing

BC clinical research emphasizes the critical importance of identifying tumor margins so that appropriate treatment can be given. Because BC resection can affect women’s appearance and quality of life, the diagnosis must be as accurate as possible. To guide treatment during surgery, excised tissue is stained with Hematoxylin and Eosin (H&E) and examined by a pathologist under optical microscopy to identify the presence of tumor tissue. This process of resection and H&E staining analysis may prolong the surgical procedure and complicate the correct identification of tumor margins [151]. During BC surgery, surgeons need to know immediately whether the cancer has been excised. Levy et al. discussed a significant issue: when OCT scans a surgical site, such as a lumpectomy margin, it generates thousands of small images, all of which a computer must check rapidly while the surgery is in progress. Conventional DL models such as VGG or ResNet, with their millions of parameters, are too large and slow for real-time use. Their experiments evaluated 155 margins, corresponding to 1.9 million image patches, in around 25 min, an average of 10.51 s per surgical margin with a standard deviation of 6.48 s. If the model is too slow, it may delay surgery and put the patient’s well-being at risk. Consequently, speed is crucial in cancer detection models.
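
The throughput implied by these figures can be checked with simple arithmetic. The sketch below uses only the numbers quoted above; because the total time is given only as "around 25 min", the derived rates are approximate, and the variable names are introduced here for illustration.

```python
# Figures quoted in the text (the total time is stated only as "around 25 min").
margins = 155
patches = 1_900_000
total_seconds = 25 * 60
mean_seconds_per_margin = 10.51

# Derived quantities.
patches_per_second = patches / total_seconds
patches_per_margin = patches / margins
seconds_per_margin_from_total = total_seconds / margins
total_minutes_from_mean = margins * mean_seconds_per_margin / 60

print(f"patches per second:          {patches_per_second:.0f}")
print(f"patches per margin:          {patches_per_margin:.0f}")
print(f"seconds per margin (total):  {seconds_per_margin_from_total:.2f}")
print(f"total minutes (from mean):   {total_minutes_from_mean:.1f}")
```

Dividing 25 min by 155 margins gives roughly 9.7 s per margin, while the reported mean of 10.51 s corresponds to a total of about 27 min, so the two figures agree to within the rounding implied by "around". Either way, the classifier must process on the order of a thousand patches per second.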

5.1.3. Speckle Noise

Speckle is a common phenomenon in coherent imaging systems. It forms when a coherent source and a coherent or noncoherent detector are used to investigate a medium that is rough relative to the wavelength. Speckle appears as a granular structure overlaid on the acquired image and is a primary constraint in detecting low-contrast lesions; as a form of noise, it can disrupt and influence the physician’s assessment [152]. It arises from random interference among numerous reflected photons arriving from multiple directions. In OCT images, speckle serves both as a source of noise and as a carrier of information. The grainy texture it imparts diminishes the signal-to-noise ratio (SNR) and reduces the accuracy of interpretation. The primary cause of speckle noise is the use of spatially coherent light [153]. Speckle noise degrades visual quality and the efficacy of automated analysis, and it hides minor yet critical morphological characteristics [154].

5.2. RS

5.2.1. Fails to Extract Discriminative Features