Criteria and Protocol: Assessing Generative AI Efficacy in Perceiving EULAR 2019 Lupus Classification

Abstract

1. Introduction

1.1. Prospective Roles for AI in Medical Record Extraction

1.2. Potential Applications for Rheumatology

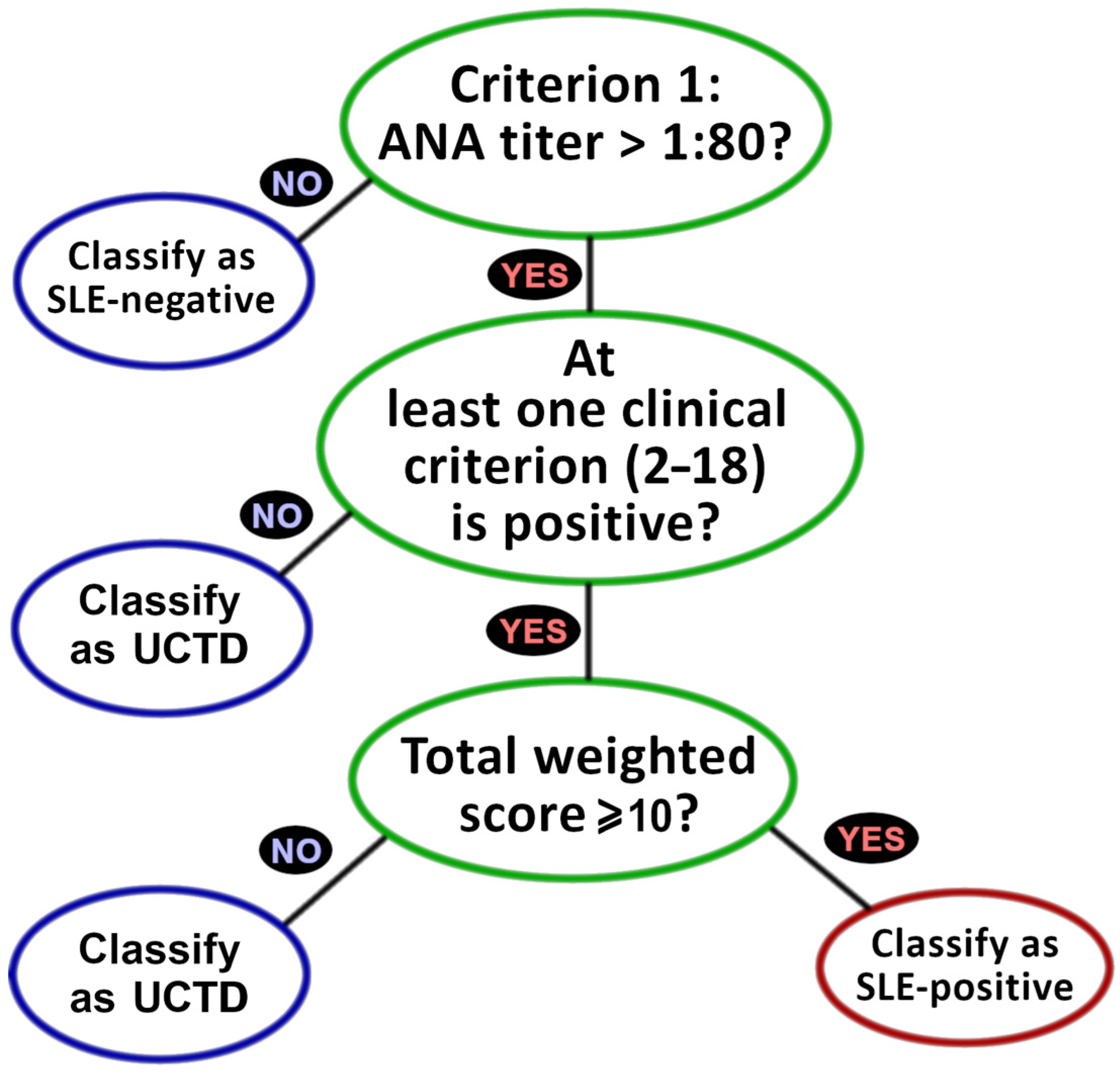

1.3. Applications to SLE Classification Criteria

2. Materials and Methods

2.1. Available Medical Records

- A total of 39 individuals whose pre-classification case histories [24] (hereafter called ‘pre-SC’) covered at least one year and ended with a clinical evaluation for SLE under either the ACR 1997 or the EULAR 2019 criteria.

- A total of 39 individuals with confirmed SLE [25] (hereafter called ‘post-SC’) whose records covered at least one year, each beginning at an unspecified interval after the prior SLE classification.

2.2. EULAR 2019 Criteria

2.3. Search Parameters and Prompt Specifications

2.4. Criterial Assessment Procedure

2.5. Statistical Analysis

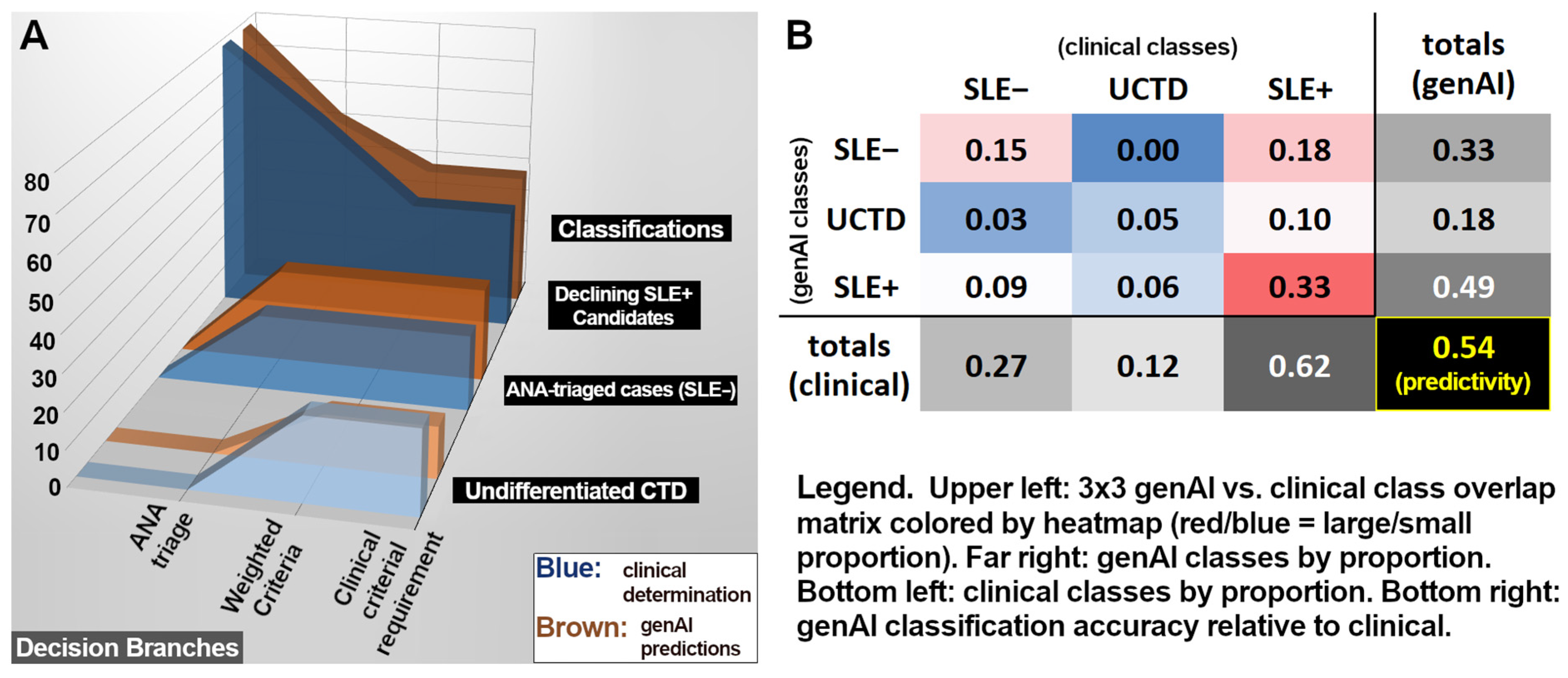

3. Results

3.1. ANA Results

3.2. Criteria Differentiating UCTD Versus SLE+

4. Discussion

4.1. GenAI Progress Toward ANA Determination

4.2. GenAI Progress Toward Criterial Discrimination Between UCTD and SLE+

4.3. Sampling Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Term | Abbreviation |
|---|---|
| acute cutaneous lupus | ACL |
| American College of Rheumatology | ACR |
| anti-dsDNA/anti-Smith antibodies | ADS |
| artificial intelligence | AI |
| autoimmune hemolysis | AIH |
| antinuclear antibody | ANA |
| ANA negative | ANA− |
| ANA positive | ANA+ |
| anti-phospholipid antibodies | APL |
| Amazon Web Services | AWS |
| confidence interval | CI |
| low C3 AND low C4 | C3+4 |
| low C3 OR low C4 | C3/4 |
| European Alliance of Associations for Rheumatology | EULAR |
| false negative | FN |
| false positive | FP |
| generative artificial intelligence | genAI |
| institutional review board | IRB |
| large language model | LLM |
| lupus nephritis class II/V | LN25 |
| lupus nephritis class III/IV | LN34 |
| medical record | MR |
| natural language processing | NLP |
| negative predictive value | NPV |
| non-scarring alopecia | NSA |
| post-SLE classification | post-SC |
| pleural/pericardial effusion | PPE |
| positive predictive value | PPV |
| pre-SLE classification | pre-SC |
| systemic lupus erythematosus | SLE |
| SLE negative | SLE− |
| SLE positive | SLE+ |
| subacute cutaneous/discoid lupus | SCD |
| thrombocytopenia | Thromb. |
| true negative | TN |
| true positive | TP |
| undifferentiated connective tissue disorder | UCTD |

Appendix A

| | Clinical Determination | | | genAI Predictions | | | | |
|---|---|---|---|---|---|---|---|---|
| Criteria | Pos. | Neg. | Unspec. | Sens. | Spec. | PPV | NPV | Consistency |
| 1. ANA | 57 # | 21 @ | 0 | 0.75 | 0.57 | 0.83 | 0.46 | 1 |
| 2. Fever | 1 | 14 | 63 | 0 | 1 | n/a | 0.93 | 1 |
| 3. Leukopenia | 1 | 14 | 63 | 0 | 0.93 | 0 | 0.93 | 1 |
| 4. Thromb. | 0 | 15 | 63 | n/a | 1 | n/a | 1 | 0.99 |
| 5. AIH | 0 | 15 | 63 | n/a | 1 | n/a | 1 | 1 |
| 6. Delirium | 0 | 15 | 63 | n/a | 1 | n/a | 1 | 1 |
| 7. Psychosis | 0 | 15 | 63 | n/a | 1 | n/a | 1 | 1 |
| 8. Seizure | 0 | 15 | 63 | n/a | 0.8 | 0 | 1 | 1 |
| 9. NSA | 4 | 11 | 63 | 0.5 | 0.91 | 0.67 | 0.83 | 1 |
| 10. Oral ulcers | 5 | 10 | 63 | 0.2 | 0.8 | 0.33 | 0.67 | 1 |
| 11. SCD | 0 | 15 | 63 | n/a | 1 | n/a | 1 | 1 |
| 12. ACL | 0 | 15 | 63 | n/a | 1 | n/a | 1 | 1 |
| 13. PPE | 0 | 15 | 63 | n/a | 1 | n/a | 1 | 1 |
| 14. Acute pericarditis | 0 | 15 | 63 | n/a | 1 | n/a | 1 | 1 |
| 15. Joint involvement | 2 | 13 | 63 | 1 | 0.15 | 0.15 | 1 | 1 |
| 16. Proteinuria | 0 | 15 | 63 | n/a | 0.53 | 0 | 1 | 0.99 |
| 17. LN25 | 0 | 15 | 63 | n/a | 1 | n/a | 1 | 1 |
| 18. LN34 | 0 | 15 | 63 | n/a | 1 | n/a | 1 | 1 |
| 19. APL | 0 | 15 | 63 | n/a | 0.67 | 0 | 1 | 1 |
| 20. Low C3/4 | 0 | 15 | 63 | n/a | 1 | n/a | 1 | 0.97 |
| 21. Low C3+4 | 0 | 15 | 63 | n/a | 1 | n/a | 1 | 1 |
| 22. ADS | 0 | 15 | 63 | n/a | 0.87 | 0 | 1 | 0.99 |
| Total (±95% CI) | 70 | 323 | 1323 | 0.69 ±0.11 | 0.87 ±0.04 | 0.54 ±0.10 | 0.93 ±0.03 | 1 ±0.00 |
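As a worked check on the Total row, the following minimal Python sketch pools sensitivity over the six criteria that have at least one clinical positive. It rests on two assumptions that are not stated in this appendix: the true-positive counts are back-calculated from each row as positives × sensitivity, and the ± term is taken to be a normal-approximation (Wald) half-width. The names `ROWS`, `pooled_sensitivity` and `wald_half_width` are illustrative only.

```python
import math

# (clinical positives, reported sensitivity), copied from the table rows
# that have at least one clinical positive.
ROWS = {
    "ANA": (57, 0.75),
    "Fever": (1, 0.0),
    "Leukopenia": (1, 0.0),
    "NSA": (4, 0.5),
    "Oral ulcers": (5, 0.2),
    "Joint involvement": (2, 1.0),
}


def pooled_sensitivity(rows):
    """Return (true positives, clinical positives, pooled sensitivity)."""
    # Assumption: TP per criterion is recovered by rounding positives * sensitivity.
    tp = sum(round(pos * sens) for pos, sens in rows.values())
    n = sum(pos for pos, _ in rows.values())
    return tp, n, tp / n


def wald_half_width(p, n, z=1.96):
    """Half-width of a normal-approximation 95% interval for a proportion."""
    return z * math.sqrt(p * (1.0 - p) / n)


tp, n, sens = pooled_sensitivity(ROWS)
half = wald_half_width(sens, n)
print(f"{tp}/{n} = {sens:.2f} ± {half:.2f}")  # 48/70 = 0.69 ± 0.11
```

Under these assumptions the pooled value and half-width agree with the 0.69 ±0.11 reported for sensitivity. The same arithmetic cannot be repeated for PPV or NPV from this table alone, because the counts of genAI-predicted positives and negatives are not listed.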

Appendix B

| | Counts | | Influence [Wilson 95% Confidence Interval] | |
|---|---|---|---|---|
| Criteria | SLE+ | UCTD | SLE+ (Equation (1a)) | UCTD (Equation (1b)) |
| 2. Fever | 5 | 1 | 0.227 [0.092, 0.506] | 0.200 [0.010, 0.913] |
| 3. Leukopenia | 19 | 1 | 1.295 [0.801, 1.767] | 0.300 [0.015, 1.368] |
| 4. Thromb. | 5 | 0 | 0.455 [0.184, 1.012] | 0.000 [0.000, 0.000] |
| 5. AIH | 3 | 0 | 0.273 [0.072, 0.788] | 0.000 [0.000, 0.000] |
| 6. Delirium | 2 | 0 | 0.091 [0.016, 0.334] | 0.000 [0.000, 0.000] |
| 7. Psychosis | 1 | 0 | 0.068 [0.006, 0.405] | 0.000 [0.000, 0.000] |
| 8. Seizure | 1 | 0 | 0.045 [0.004, 0.270] | 0.000 [0.000, 0.000] |
| 9. NSA | 10 | 5 | 0.455 [0.240, 0.764] | 1.000 [0.402, 1.598] |
| 10. Oral ulcers | 19 | 4 | 0.864 [0.534, 1.178] | 0.800 [0.274, 1.452] |
| 11. SCD | 2 | 0 | 0.182 [0.032, 0.668] | 0.000 [0.000, 0.000] |
| 12. ACL | 5 | 1 | 0.682 [0.276, 1.518] | 0.600 [0.030, 2.736] |
| 13. PPE | 2 | 0 | 0.227 [0.040, 0.835] | 0.000 [0.000, 0.000] |
| 14. Acute pericarditis | 2 | 0 | 0.273 [0.048, 1.002] | 0.000 [0.000, 0.000] |
| 15. Joint involvement | 40 | 8 | 5.455 [4.644, 5.826] | 4.800 [2.652, 5.790] |
| 16. Proteinuria | 9 | 5 | 0.818 [0.412, 1.432] | 2.000 [0.804, 3.196] |
| 17. LN25 | 0 | 0 | 0.000 [0.000, 0.000] | 0.000 [0.000, 0.000] |
| 18. LN34 | 1 | 0 | 0.227 [0.020, 1.350] | 0.000 [0.000, 0.000] |
| 19. APL | 9 | 1 | 0.409 [0.206, 0.716] | 0.200 [0.010, 0.912] |
| 20. Low C3/4 | 14 | 0 | 0.955 [0.573, 1.431] | 0.000 [0.000, 0.000] |
| 21. Low C3+4 | 4 | 0 | 0.364 [0.120, 0.904] | 0.000 [0.000, 0.000] |
| 22. ADS | 23 | 0 | 3.136 [2.214, 4.038] | 0.000 [0.000, 0.000] |
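Equations (1a) and (1b) are not reproduced in this appendix, so the Python sketch below is only a reading of the numbers, not the authors' stated method. The tabulated point estimates are consistent with influence = weight × count / N, using the criterion weights from the criteria table and group sizes of N = 44 (SLE+) and N = 10 (UCTD). Both group sizes are inferred from the values and should be treated as assumptions. The interval function is the textbook Wilson score interval scaled by the weight; it gives bounds close to, but not identical with, the tabulated ones, whose exact variant is not stated here.

```python
import math

N_SLE_POS = 44  # assumption: inferred from the tabulated point estimates
N_UCTD = 10     # assumption: inferred from the tabulated point estimates


def influence(weight, count, n):
    """Weighted frequency of a criterion within a group (assumed form)."""
    return weight * count / n


def wilson_interval(count, n, z=1.96):
    """Textbook Wilson score interval for a binomial proportion."""
    p = count / n
    z2 = z * z
    centre = (p + z2 / (2 * n)) / (1 + z2 / n)
    half = z * math.sqrt(p * (1 - p) / n + z2 / (4 * n * n)) / (1 + z2 / n)
    return max(0.0, centre - half), min(1.0, centre + half)


def weighted_interval(weight, count, n):
    """Wilson bounds on the proportion, scaled by the criterion weight."""
    lo, hi = wilson_interval(count, n)
    return weight * lo, weight * hi


# (weight, SLE+ count, UCTD count) for three rows of the table above.
examples = {
    "Leukopenia": (3, 19, 1),
    "Joint involvement": (6, 40, 8),
    "ADS": (6, 23, 0),
}

for name, (w, k_sle, k_uctd) in examples.items():
    sle = influence(w, k_sle, N_SLE_POS)
    uctd = influence(w, k_uctd, N_UCTD)
    print(f"{name}: SLE+ {sle:.3f}, UCTD {uctd:.3f}")
```

With these inputs the point estimates round to the tabulated 1.295 and 0.300 for leukopenia, 5.455 and 4.800 for joint involvement, and 3.136 and 0.000 for ADS.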

Appendix C

- Study Inclusion and Exclusion Criteria

References

- Joo, J.Y. Fragmented care and chronic illness patient outcomes: A systematic review. Nurs. Open 2023, 10, 3460–3473.

- Wong, Z.S.-Y.; Gong, Y.; Ushiro, S. A pathway from fragmentation to interoperability through standards-based enterprise architecture to enhance patient safety. npj Digit. Med. 2025, 8, 41.

- Walunas, T.L.; Jackson, K.L.; Chung, A.H.; Mancera-Cuevas, K.A.; Erickson, D.L.; Ramsey-Goldman, R.; Kho, A. Disease Outcomes and Care Fragmentation Among Patients with Systemic Lupus Erythematosus. Arthritis Care Res. 2017, 69, 1369–1376.

- Khairat, S.; Morelli, J.; Boynton, M.H.; Bice, T.; Gold, J.A.; Carson, S.S. Investigation of Information Overload in Electronic Health Records: Protocol for Usability Study. JMIR Res. Protoc. 2025, 14, e66127.

- Asgari, E.; Kaur, J.; Nuredini, G.; Balloch, J.; Taylor, A.M.; Sebire, N.; Robinson, R.; Peters, C.; Sridharan, S.; Pimenta, D. Impact of Electronic Health Record Use on Cognitive Load and Burnout Among Clinicians: Narrative Review. JMIR Med. Inform. 2024, 12, e55499.

- Cahill, M.; Cleary, B.J.; Cullinan, S. The influence of electronic health record design on usability and medication safety: Systematic review. BMC Health Serv. Res. 2025, 25, 31.

- Nijor, S.; Rallis, G.B.; Lad, N.; Gokcen, E. Patient Safety Issues from Information Overload in Electronic Medical Records. J. Patient Saf. 2022, 18, e999–e1003.

- Reddy, S. Generative AI in healthcare: An implementation science informed translational path on application, integration and governance. Implement. Sci. 2024, 19, 27.

- Sequí-Sabater, J.M.; Benavent, D. Artificial intelligence in rheumatology research: What is it good for? RMD Open 2025, 11, e004309.

- Templin, T.; Perez, M.W.; Sylvia, S.; Leek, J.; Sinnott-Armstrong, N.; Silva, J.N.A. Addressing 6 challenges in generative AI for digital health: A scoping review. PLoS Digit. Health 2024, 3, e0000503.

- Chustecki, M. Benefits and Risks of AI in Health Care: Narrative Review. Interact. J. Med. Res. 2024, 13, e53616.

- Hassan, R.; Faruqui, H.; Alquraa, R.; Eissa, A.; Alshaiki, F.; Cheikh, M. Classification Criteria and Clinical Practice Guidelines for Rheumatic Diseases. In Skills in Rheumatology; Springer: Singapore, 2021; pp. 521–566.

- June, R.R.; Aggarwal, R. The use and abuse of diagnostic/classification criteria. Best Pract. Res. Clin. Rheumatol. 2014, 28, 921–934.

- Aletaha, D.; Neogi, T.; Silman, A.J.; Funovits, J.; Felson, D.T.; Bingham, C.O., 3rd; Birnbaum, N.S.; Burmester, G.R.; Bykerk, V.P.; Cohen, M.D.; et al. 2010 Rheumatoid arthritis classification criteria: An American College of Rheumatology/European League Against Rheumatism collaborative initiative. Ann. Rheum. Dis. 2010, 69, 1580–1588.

- Van den Hoogen, F.; Khanna, D.; Fransen, J.; Johnson, S.R.; Baron, M.; Tyndall, A.; Matucci-Cerinic, M.; Naden, R.P.; Medsger, T.A., Jr.; Carreira, P.E.; et al. 2013 classification criteria for systemic sclerosis: An American college of rheumatology/European league against rheumatism collaborative initiative. Ann. Rheum. Dis. 2013, 72, 1747–1755.

- Neogi, T.; Jansen, T.L.T.A.; Dalbeth, N.; Fransen, J.; Schumacher, H.R.; Berendsen, D.; Brown, M.; Choi, H.; Edwards, N.L.; Janssens, H.J.E.M.; et al. 2015 Gout Classification Criteria: An American College of Rheumatology/European League Against Rheumatism collaborative initiative. Arthritis Rheumatol. 2015, 67, 2557–2568.

- Wolfe, F.; Clauw, D.J.; Fitzcharles, M.-A.; Goldenberg, D.L.; Katz, R.S.; Mease, P.; Russell, A.S.; Russell, I.J.; Winfield, J.B.; Yunus, M.B. The American College of rheumatology preliminary diagnostic criteria for fibromyalgia and measurement of symptom severity. Arthritis Care Res. 2010, 62, 600–610.

- Taylor, W.; Gladman, D.; Helliwell, P.; Marchesoni, A.; Mease, P.; Mielants, H.; CASPAR Study Group. Classification criteria for psoriatic arthritis: Development of new criteria from a large international study. Arthritis Care Res. 2006, 54, 2665–2673.

- Lundberg, I.E.; Tjärnlund, A.; Bottai, M.; Werth, V.P.; Pilkington, C.; de Visser, M.; Alfredsson, L.; Amato, A.A.; Barohn, R.J.; Liang, M.H.; et al. 2017 European League Against Rheumatism/American College of Rheumatology Classification Criteria for Adult and Juvenile Idiopathic Inflammatory Myopathies and Their Major Subgroups. Arthritis Rheumatol. 2017, 69, 2271–2282.

- Aringer, M.; Costenbader, K.; Daikh, D.; Brinks, R.; Mosca, M.; Ramsey-Goldman, R.; Smolen, J.S.; Wofsy, D.; Boumpas, D.T.; Kamen, D.L.; et al. 2019 European League Against Rheumatism/American College of Rheumatology Classification Criteria for Systemic Lupus Erythematosus. Arthritis Rheumatol. 2019, 71, 1400–1412.

- Nair, S.; Lushington, G.H.; Purushothaman, M.; Rubin, B.; Jupe, E.; Gattam, S. Prediction of Lupus Classification Criteria via Generative AI Medical Record Profiling. BioTech 2025, 14, 15.

- Claude, version 3.0; Anthropic: San Francisco, CA, USA, 2024.

- Hochberg, M.C. Updating the American College of Rheumatology revised criteria for the classification of systemic lupus erythematosus. Arthritis Rheum. 1997, 40, 1725.

- Jupe, E.; Nadipelli, V.; Lushington, G.; Crawley, J.; Rubin, B.; Nair, S.; Nair, S.; Purushothaman, M.; Munroe, M.; Walker, C.; et al. Expediting lupus classification of at-risk individuals using novel technology: Outcomes of a pilot study. J. Rheumatol. 2025, 52 (Suppl. 1), 214.

- Jupe, E.R.; Purushothaman, M.; Wang, B.; Lushington, G.; Nair, S.; Nadipelli, V.R.; Rubin, B.; Munroe, M.E.; Crawley, J.; Nair, S.; et al. Impact of a digital platform and flare risk blood biomarker index on lupus: A study protocol design for evaluating self efficacy and disease management. Contemp. Clin. Trials Commun. 2025, 45, 101471.

- Belval, E.; Delteil, T.; Schade, M.; Radhakrishna, S. Amazon Textract, version 1.9.2; Amazon Web Services: Seattle, WA, USA, 2025.

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.-T.; Rocktäschel, T.; et al. Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474.

- Chen, B.; Zhang, Z.; Langrené, N.; Zhu, S. Unleashing the potential of prompt engineering for large language models. Patterns 2025, 6, 101260.

- Claude, version 3.5; Anthropic: San Francisco, CA, USA, 2025.

- Johnson, W.B.; Lindenstrauss, J. Extensions of Lipschitz mappings into a Hilbert space. Contemp. Math. 1984, 26, 189–206.

- Sauro, J.; Lewis, J.R. Comparison of Wald, Adj-Wald, exact, and Wilson intervals calculator. In Proceedings of the Human Factors and Ergonomics Society, 49th Annual Meeting (HFES 2005), Orlando, FL, USA, 26–30 September 2005; pp. 2100–2104.

- Bommineni, V.L.; Bhagwagar, S.; Balcarcel, D.; Davatzikos, C.; Boyer, D. Performance of ChatGPT on the MCAT: The Road to Personalized and Equitable Premedical Learning. medRxiv 2023.

- Takita, H.; Kabata, D.; Walston, S.L.; Tatekawa, H.; Saito, K.; Tsujimoto, Y.; Miki, Y.; Ueda, D. A systematic review and meta-analysis of diagnostic performance comparison between generative AI and physicians. npj Digit. Med. 2025, 8, 175.

- Ali, Y. Rheumatologic Tests: A Primer for Family Physicians. Am. Fam. Physician 2018, 98, 164–170.

- Solomon, D.H.; Kavanaugh, A.J.; Schur, P.H.; American College of Rheumatology Ad Hoc Committee on Immunologic Testing Guidelines. Evidence-based guidelines for the use of immunologic tests: Antinuclear antibody testing. Arthritis Care Res. 2002, 47, 434–444.

- Verizhnikova, Z.; Aleksandrova, E.; Novikov, A.; Panafidina, T.; Seredavkina, N.; Roggenbuck, D.; Nasonov, E. AB1027 Diagnostic Accuracy of Automated Determination of Antinuclear Antibodies (ANA) by Indirect Reaction of Immunofluorescence on Human Hep-2 Cells (IIF-HEP-2) and Enzyme-Linked Immunosorbent Assay (ELISA) for Diagnosis of Systemic Lupus Erythematosus (SLE). Ann. Rheum. Dis. 2014, 73, 1140.

- Chen, Y.; Esmaeilzadeh, P. Generative AI in Medical Practice: In-Depth Exploration of Privacy and Security Challenges. J. Med. Internet Res. 2024, 26, e53008.

- Xu, R.; Wang, Z. Generative artificial intelligence in healthcare from the perspective of digital media: Applications, opportunities and challenges. Heliyon 2024, 10, e32364.

- Bhuyan, S.S.; Sateesh, V.; Mukul, N.; Galvankar, A.; Mahmood, A.; Nauman, M.; Rai, A.; Bordoloi, K.; Basu, U.; Samuel, J. Generative Artificial Intelligence Use in Healthcare: Opportunities for Clinical Excellence and Administrative Efficiency. J. Med. Syst. 2025, 49, 10.

- Loni, M.; Poursalim, F.; Asadi, M.; Gharehbaghi, A. A review on generative AI models for synthetic medical text, time series, and longitudinal data. npj Digit. Med. 2025, 8, 281.

- Spillias, S.; Ollerhead, K.M.; Andreotta, M.; Annand-Jones, R.; Boschetti, F.; Duggan, J.; Karcher, D.B.; Paris, C.; Shellock, R.J.; Trebilco, R. Evaluating generative AI for qualitative data extraction in community-based fisheries management literature. Environ. Evid. 2025, 14, 9.

- Safdar, M.; Xie, J.; Mircea, A.; Zhao, Y.F. Human–Artificial Intelligence Teaming for Scientific Information Extraction from Data-Driven Additive Manufacturing Literature Using Large Language Models. J. Comput. Inf. Sci. Eng. 2025, 25, 074501.

- Li, Y.; Datta, S.; Rastegar-Mojarad, M.; Lee, K.; Paek, H.; Glasgow, J.; Liston, C.; He, L.; Wang, X.; Xu, Y. Enhancing systematic literature reviews with generative artificial intelligence: Development, applications, and performance evaluation. J. Am. Med. Inform. Assoc. 2025, 32, 616–625.

- Shahid, F.; Hsu, M.-H.; Chang, Y.-C.; Jian, W.-S. Using Generative AI to Extract Structured Information from Free Text Pathology Reports. J. Med. Syst. 2025, 49, 36.

- Hasan, S.S.; Fury, M.S.; Woo, J.J.; Kunze, K.N.; Ramkumar, P.N. Ethical Application of Generative Artificial Intelligence in Medicine. Arthrosc. J. Arthrosc. Relat. Surg. 2024, 41, 874–885.

- Tran, M.; Balasooriya, C.; Jonnagaddala, J.; Leung, G.K.-K.; Mahboobani, N.; Ramani, S.; Rhee, J.; Schuwirth, L.; Najafzadeh-Tabrizi, N.S.; Semmler, C.; et al. Situating governance and regulatory concerns for generative artificial intelligence and large language models in medical education. npj Digit. Med. 2025, 8, 315.

- Ning, Y.; Teixayavong, S.; Shang, Y.; Savulescu, J.; Nagaraj, V.; Miao, D.; Mertens, M.; Ting, D.S.W.; Ong, J.C.L.; Liu, M.; et al. Generative artificial intelligence and ethical considerations in health care: A scoping review and ethics checklist. Lancet Digit. Health 2024, 6, e848–e856.

- Yim, D.; Khuntia, J.; Parameswaran, V.; Meyers, A. Preliminary Evidence of the Use of Generative AI in Health Care Clinical Services: Systematic Narrative Review. JMIR Med. Inform. 2024, 12, e52073.

- Sjöwall, C.; Parodis, I. Clinical Heterogeneity, Unmet Needs and Long-Term Outcomes in Patients with Systemic Lupus Erythematosus. J. Clin. Med. 2022, 11, 6869.

- Dai, X.; Fan, Y.; Zhao, X. Systemic lupus erythematosus: Updated insights on the pathogenesis, diagnosis, prevention and therapeutics. Signal Transduct. Target. Ther. 2025, 10, 102.

- Karlson, E.W.; Sanchez-Guerrero, J.; Wright, E.A.; Lew, R.A.; Daltroy, L.H.; Katz, J.N.; Liang, M.H. A connective tissue disease screening questionnaire for population studies. Ann. Epidemiol. 1995, 5, 297–302.

- Karlson, E.W.; Costenbader, K.H.; McAlindon, T.E.; Massarotti, E.M.; Fitzgerald, L.M.; Jajoo, R.; Husni, E.; Wright, E.A.; Pankey, H.; Fraser, P.A. High sensitivity, specificity and predictive value of the Connective Tissue Disease Screening Questionnaire among urban African-American women. Lupus 2005, 14, 832–836.

- Petri, M.; Orbai, A.; Alarcón, G.S.; Gordon, C.; Merrill, J.T.; Fortin, P.R.; Bruce, I.N.; Isenberg, D.; Wallace, D.J.; Nived, O.; et al. Derivation and validation of the Systemic Lupus International Collaborating Clinics classification criteria for systemic lupus erythematosus. Arthritis Rheum. 2012, 64, 2677–2686.

| Criteria (Abbreviation) | Role (Clin-Dets) | Data Type | Weight |
|---|---|---|---|
| 1. Antinuclear antibodies (ANA) | Required (78) | quantitative test | - |
| 2. Fever | Clinical (15) | quantitative test | 2 |
| 3. Leukopenia | Clinical (15) | quantitative test | 3 |
| 4. Thrombocytopenia (Thromb.) | Clinical (15) | quantitative test | 4 |
| 5. Autoimmune hemolysis (AIH) | Clinical (15) | quantitative test | 4 |
| 6. Delirium | Clinical (15) | qualitative exam | 2 |
| 7. Psychosis | Clinical (15) | qualitative exam | 3 |
| 8. Seizure | Clinical (15) | qualitative exam | 2 |
| 9. Non-scarring alopecia (NSA) | Clinical (15) | qualitative exam | 2 |
| 10. Oral ulcers | Clinical (15) | qualitative exam | 2 |
| 11. Subacute cutan./discoid lupus (SCD) | Clinical (15) | qualitative exam | 4 |
| 12. Acute cutaneous lupus (ACL) | Clinical (15) | quantitative test | 6 |
| 13. Pleural/pericardial effusion (PPE) | Clinical (15) | qualitative imaging | 5 |
| 14. Acute pericarditis | Clinical (15) | qualitative or imaging | 6 |
| 15. Joint involvement | Clinical (15) | qualitative exam | 6 |
| 16. Proteinuria | Clinical (15) | quantitative test | 4 |
| 17. Lupus nephritis class II/V (LN25) | Clinical (15) | separate classification | 8 |
| 18. Lupus nephritis class III/IV (LN34) | Clinical (15) | separate classification | 10 |
| 19. Anti-phospholipid antibodies (APL) | Immunolog. (15) | quantitative test | 2 |
| 20. Low C3 OR low C4 (C3/4) | Immunolog. (15) | quantitative test | 3 |
| 21. Low C3 AND low C4 (C3+4) | Immunolog. (15) | quantitative test | 4 |
| 22. Anti-dsDNA/anti-Smith antibodies (ADS) | Immunolog. (15) | quantitative test | 6 |
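To illustrate how weights of this kind combine into a classification, here is a minimal Python sketch of the EULAR/ACR 2019 scoring rule. The weights are copied from the table above as given. The domain grouping, the rule that only the highest-weighted criterion in each domain counts, the requirement for at least one clinical criterion, and the threshold of 10 points come from the published 2019 criteria rather than from this section. The function name `eular_2019_score` and the set-based input are illustrative assumptions, not the authors' pipeline.

```python
# Weights copied from the criteria table (abbreviations as in the table).
WEIGHTS = {
    "Fever": 2, "Leukopenia": 3, "Thromb.": 4, "AIH": 4,
    "Delirium": 2, "Psychosis": 3, "Seizure": 2,
    "NSA": 2, "Oral ulcers": 2, "SCD": 4, "ACL": 6,
    "PPE": 5, "Acute pericarditis": 6, "Joint involvement": 6,
    "Proteinuria": 4, "LN25": 8, "LN34": 10,
    "APL": 2, "C3/4": 3, "C3+4": 4, "ADS": 6,
}

# Domain grouping per the published 2019 criteria (not listed in this section).
CLINICAL_DOMAINS = {
    "constitutional": ["Fever"],
    "hematologic": ["Leukopenia", "Thromb.", "AIH"],
    "neuropsychiatric": ["Delirium", "Psychosis", "Seizure"],
    "mucocutaneous": ["NSA", "Oral ulcers", "SCD", "ACL"],
    "serosal": ["PPE", "Acute pericarditis"],
    "musculoskeletal": ["Joint involvement"],
    "renal": ["Proteinuria", "LN25", "LN34"],
}
IMMUNOLOGIC_DOMAINS = {
    "antiphospholipid": ["APL"],
    "complement": ["C3/4", "C3+4"],
    "sle_specific_antibodies": ["ADS"],
}
THRESHOLD = 10


def eular_2019_score(ana_positive, present):
    """Return (score, classified) for a set of positive criteria."""
    unknown = set(present) - set(WEIGHTS)
    if unknown:
        raise ValueError(f"unknown criteria: {sorted(unknown)}")
    if not ana_positive:
        # ANA is the entry criterion; without it no score is assigned.
        return 0, False
    score = 0
    has_clinical = False
    for domains, is_clinical in ((CLINICAL_DOMAINS, True), (IMMUNOLOGIC_DOMAINS, False)):
        for members in domains.values():
            hits = [WEIGHTS[c] for c in members if c in present]
            if hits:
                score += max(hits)  # only the highest weight per domain counts
                has_clinical = has_clinical or is_clinical
    return score, has_clinical and score >= THRESHOLD


print(eular_2019_score(True, {"Joint involvement", "ADS"}))           # (12, True)
print(eular_2019_score(True, {"Leukopenia", "Thromb.", "Oral ulcers"}))  # (6, False)
```

In the second call, leukopenia (3) and thrombocytopenia (4) share the hematologic domain, so only the 4 is counted before the 2 for oral ulcers is added.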

| | Clinical Determination | | | genAI Predictions | | | |
|---|---|---|---|---|---|---|---|
| Key Criteria | Pos. | Neg. | Unspecified | Sens. | Spec. | PPV | NPV |
| 1. ANA | 57 # | 21 @ | 0 | 0.75 | 0.57 | 0.83 | 0.46 |
| 3. Leukopenia | 1 | 14 | 63 | 0 | 0.93 | 0 | 0.93 |
| 9. NSA | 4 | 11 | 63 | 0.5 | 0.91 | 0.67 | 0.83 |
| 10. Oral ulcers | 5 | 10 | 63 | 0.2 | 0.8 | 0.33 | 0.67 |
| 15. Joint involvement | 2 | 13 | 63 | 1 | 0.15 | 0.15 | 1 |
| 16. Proteinuria | 0 | 15 | 63 | n/a | 0.53 | n/a | 1 |
| 20. Low C3/4 | 0 | 15 | 63 | n/a | 1 | n/a | 1 |
| 22. ADS | 0 | 15 | 63 | n/a | 0.87 | n/a | 1 |
| Total (all 22 criteria) (±95% CI) | 70 | 323 | 1323 | 0.69 ±0.11 | 0.87 ±0.04 | 0.54 ±0.10 | 0.93 ±0.03 |

| | Total | Numerical (e.g., Titer > 1:80 or Titer < 1:80) | Phrase (e.g., “ANA Positive” or “ANA Negative”) | Not Reported in MR |
|---|---|---|---|---|
| TP | 43 | 32 | 11 | 0 |
| FN | 14 | 0 | 0 | 14 |
| FP | 9 | 6 | 3 | 0 |
| TN | 12 | 1 | 7 | 4 |
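The Total column above fixes the ANA confusion matrix (TP = 43, FN = 14, FP = 9, TN = 12). The short Python sketch below applies the standard definitions of sensitivity, specificity, PPV and NPV to those counts. The `Confusion` class is a hypothetical helper written for this illustration, and returning `None` for an empty denominator is an assumed convention mirroring the "n/a" entries in the performance tables.

```python
from dataclasses import dataclass
from typing import Optional


def _ratio(num: int, den: int) -> Optional[float]:
    """Return num/den, or None when the denominator is empty (reported as n/a)."""
    return num / den if den else None


@dataclass(frozen=True)
class Confusion:
    tp: int
    fn: int
    fp: int
    tn: int

    def sensitivity(self) -> Optional[float]:
        return _ratio(self.tp, self.tp + self.fn)

    def specificity(self) -> Optional[float]:
        return _ratio(self.tn, self.tn + self.fp)

    def ppv(self) -> Optional[float]:
        return _ratio(self.tp, self.tp + self.fp)

    def npv(self) -> Optional[float]:
        return _ratio(self.tn, self.tn + self.fn)


ana = Confusion(tp=43, fn=14, fp=9, tn=12)
for name in ("sensitivity", "specificity", "ppv", "npv"):
    print(f"{name}: {getattr(ana, name)():.2f}")
# sensitivity: 0.75
# specificity: 0.57
# ppv: 0.83
# npv: 0.46
```

These values match the ANA row of the performance tables. All 14 false negatives fall in the "Not Reported in MR" column, so on these counts every missed ANA-positive case corresponds to a record with no ANA result for the model to read.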

| | Criterial genAI Positives | | Influence [Wilson 95% Confidence Interval] | |
|---|---|---|---|---|
| Criteria | SLE+ | UCTD | SLE+ (Equation (1a)) | UCTD (Equation (1b)) |
| 3. Leukopenia | 19 | 1 | 1.295 [0.801, 1.767] | 0.300 [0.015, 1.368] |
| 9. NSA | 10 | 5 | 0.455 [0.240, 0.764] | 1.000 [0.402, 1.598] |
| 10. Oral ulcers | 19 | 4 | 0.864 [0.534, 1.178] | 0.800 [0.274, 1.452] |
| 15. Joint involvement | 40 | 8 | 5.455 [4.644, 5.826] | 4.800 [2.652, 5.790] |
| 16. Proteinuria | 9 | 5 | 0.818 [0.412, 1.432] | 2.000 [0.804, 3.196] |
| 20. Low C3/4 | 14 | 0 | 0.955 [0.573, 1.431] | 0.000 [0.000, 0.000] |
| 22. ADS | 23 | 0 | 3.136 [2.214, 4.038] | 0.000 [0.000, 0.000] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lushington, G.H.; Nair, S.; Jupe, E.R.; Rubin, B.; Purushothaman, M. Criteria and Protocol: Assessing Generative AI Efficacy in Perceiving EULAR 2019 Lupus Classification. Diagnostics 2025, 15, 2409. https://doi.org/10.3390/diagnostics15182409