Abstract

Background: Segmenting nerve fibres in histological images is a tricky job because of how much the tissue looks can change. Modern neural network architectures, including U-Net and transformers, demonstrate varying degrees of effectiveness in this area. The aim of this study is to conduct a comparative analysis of the SegFormer, VGG-UNet, and FabE-Net models in terms of segmentation quality and speed. Methods: The training sample consisted of more than 75,000 pairs of images of different tissues (original slice and corresponding mask), scaled from 1024 × 1024 to 224 × 224 pixels to optimise computations. Three neural network architectures were used: the classic VGG-UNet, FabE-Net with attention and global context perception blocks, and the SegFormer transformer model. For an objective assessment of the quality of the models, expert validation was carried out with the participation of four independent pathologists, who evaluated the quality of segmentation according to specified criteria. Quality metrics (precision, recall, F1-score, accuracy) were calculated as averages based on the assessments of all experts, which made it possible to take into account variability in interpretation and increase the reliability of the results. Results: SegFormer achieved stable stabilisation of the loss function faster than the other models—by the 20–30th epoch, compared to 45–60 epochs for VGG-UNet and FabE-Net. Despite taking longer to train per epoch, SegFormer produced the best segmentation quality, with the following metrics: precision 0.84, recall 0.99, F1-score 0.91 and accuracy 0.89. It also annotated a complete histological section in the fastest time. Visual analysis revealed that, compared to other models, which tended to produce incomplete or excessive segmentation, SegFormer more accurately and completely highlights nerve structures. Conclusions: Using attention mechanisms in SegFormer compensates for morphological variability in tissues, resulting in faster and higher-quality segmentation. Image scaling does not impair training quality while significantly accelerating computational processes. These results confirm the potential of SegFormer for practical use in digital pathology, while also highlighting the need for high-precision, immunohistochemistry-informed labelling to improve segmentation accuracy.

1. Introduction

Modern medicine is increasingly oriented towards standardisation, acceleration, and enhanced accuracy in diagnostics, with digital pathology playing a pivotal role in this transformation. This approach entails digitising histological specimens and subsequently processing them using computer algorithms, enabling pathologists to identify structures, perform morphometric analyses, and, in some cases, detect patterns that may not be apparent to the human eye.

Conventional image analysis techniques—such as thresholding, morphological operations, and texture analysis—have generally shown limited effectiveness when applied to histological data. The main challenges include high variability in staining, the presence of artefacts, and the intricate morphology of biological structures.

The first notable study to propose an alternative methodology was conducted by Sjöström et al. (1999) [1]. The authors employed a partially fully connected neural network (NN) with two hidden layers (28–32 and 4–5 neurons, respectively) to automate cell counting in histological sections. The input layer processed image fragments of 24 × 24 or 48 × 48 pixels. The results demonstrated that the NN achieved accuracy comparable to manual counting (R2 ≈ 0.85–0.94) while offering a six-fold increase in processing speed (~25 s versus ~3 min for an expert). This work marked a key milestone in the adoption of neural networks within digital pathology.

At the turn of the 2000s, fully connected architectures were progressively replaced by convolutional neural networks (CNNs). One of the earliest studies in this area was conducted by Malon et al. [2], who employed a CNN similar to LeNet-5 to analyse histological images of biopsy specimens from breast and gastric cancer patients, despite the limited amount of training data available. The model demonstrated high accuracy in recognising colonic crypts (area under the curve, AUC ≈ 1) and achieved efficiency comparable to that of pathologists in identifying mitotic figures (κ = 0.40 versus 0.45–0.64 for physicians), while also yielding good results in segmenting ring-shaped cells.

These and subsequent studies have significantly advanced the field: as of 2025, more than 150 publications have been devoted to the application of CNNs in histological image processing.

CNNs are a specialised class of neural networks (NNs) designed for processing structured data, such as images. Their defining feature is the use of convolutional layers, which apply sets of filters (convolution kernels) to the input data, scanning the image and detecting local patterns. At the initial stages, the network identifies simple features such as edges, textures, and geometric shapes (e.g., lines and circles). In deeper layers, the CNN integrates these elementary features into more complex representations, including cell nuclei, tissue-specific morphological characteristics, and even entire histological patterns.

The effectiveness of CNNs in feature extraction can be attributed to two principal mechanisms:

- Hierarchical learning: The network automatically constructs a hierarchy of features, beginning with low-level characteristics such as edges and textures, and progressing to high-level representations such as object shapes and their spatial relationships. This process is analogous to the functional principles of the human visual cortex.

- Transformation invariance: Through pooling operations, a CNN becomes robust to minor shifts, scaling, and distortions in images, while preserving the essential features [3].

CNN training is performed via backpropagation, in which the filter weights are iteratively adjusted to minimise the discrepancy between the network’s predictions and the ground-truth data. During the validation stage, the trained model applies the learned patterns to previously unseen images, thereby demonstrating its capacity for generalisation. Variations among CNN architectures (e.g., LeNet-5, ResNet, and U-Net) are determined by factors such as network depth, the types of layers used (convolutional, pooling, and fully connected), and the manner in which these layers are interconnected. This flexibility enables the architecture to be tailored to specific tasks, ranging from cell segmentation to tumour classification.

2. Materials and Methods

For the study, 64 histological sections stained with haematoxylin and eosin were selected. To enhance the model’s accuracy and its capacity to differentiate between various tissue types, the dataset included a diverse range of histological materials: 14 prostate tissue sections, 18 sections of the aorta and pulmonary artery, 5 sections of clitoral and vulvar tissue, 16 myocardial sections with epicardial tissue, 6 colon sections, and 5 liver tissue sections.

The study was conducted in accordance with the Declaration of Helsinki and was approved by the Ethics Committee of the Almazov National Medical Research Centre (Protocol No. 10-22 dated 3 October 2022). Written informed consent was obtained from all participants whose data could be potentially identifiable.

All samples were digitised using an Aperio AT2 histological scanner (Leica Biosystems, Vista, CA, USA) at 20× magnification, corresponding to approximately 300–400× magnification under an optical microscope. The resulting digital images were processed using Aperio ImageScope software v. 12.4 (Leica Biosystems Imaging, Vista, CA, USA), in which nerve fibres and ganglia were manually annotated. The annotation results were stored in XML files containing the precise coordinates of each identified object.

The next stage of data processing involved the implementation of two distinct approaches. For 60% of the samples, only regions containing nerve fibres and adjacent tissues were extracted, yielding fragments measuring 1024 × 1024 pixels. For the remaining 40%, the entire histological sections were tiled into non-overlapping squares of 1024 × 1024 pixels. For each selected area, a corresponding binary mask was generated, in which a value of 0 (black) denoted background tissue and a value of 1 (white) indicated the presence of nerve structures.

This processing resulted in a comprehensive training dataset comprising more than 75,000 image–mask pairs (original histological fragment and corresponding mask). For validation, 10% of the images were randomly selected to provide an unbiased estimate of model performance during training. In addition, model testing was performed on a specialised dataset containing seven myocardial scans, from which 5600 labelled images were generated to ensure independent evaluation on previously unseen histological material. To optimise computational efficiency, all images were resized from 1024 × 1024 pixels to 224 × 224 pixels. This conversion was performed for the following reasons:

- To significantly accelerate data processing.

- To reduce the computational load during the model training stage.

- To prepare the model for more effective application to real-world data.

- To ensure compliance with standard input dimensions used in most modern CNN architectures.

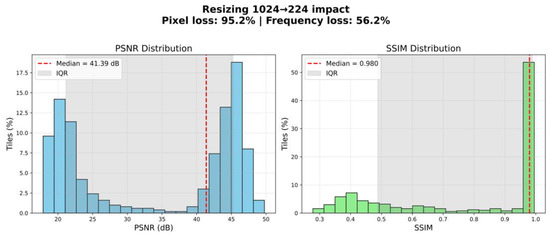

It should be noted that the scaling process preserved the key morphological characteristics of the tissues, as confirmed by visual quality control of the images following resizing. To verify that resizing did not compromise morphological integrity, we performed a quantitative evaluation of information loss. Specifically, we compared original and resized–upscaled tiles using peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM). The analysis demonstrated a median PSNR of 41.39 dB (IQR: 21.14–45.24) and a median SSIM of 0.980 (IQR: 0.488–0.989). Although resizing theoretically implied a 95.2% reduction in pixel count and an estimated 56.3% loss of frequency content, the preserved structural similarity remained high (Figure 1).

Figure 1.

Estimating information loss during image resizing.

These findings indicate that the scaling process retained the essential morphological features of the tissues. In particular, large anatomical structures such as nerve fibres remained clearly distinguishable, ensuring the suitability of the resized dataset for the intended segmentation task. Thus, resizing to 224 × 224 pixels represents a justified balance between computational feasibility and preservation of biologically relevant information.

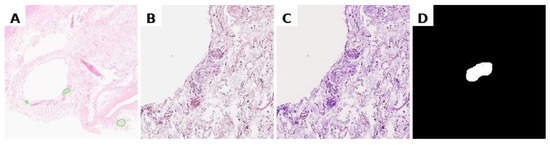

To minimise the impact of staining variability in histological preparations, image normalisation was performed using the Macenko method, which standardises tissue colour representation while preserving morphological features. The procedure involved: (1) converting the images to optical density space; (2) extracting the stain matrix using singular value decomposition (SVD); and (3) normalising stain concentrations relative to a reference sample (Figure 2). Binary masks were generated from polygonal annotations on whole-slide images using a custom Python script with OpenSlide. For each region of interest, the annotated area was cropped with a margin, and the corresponding binary mask was created by filling the annotation polygon (values 0/255). A full implementation of this method is briefly described in Supplementary Material (“Script for preprocessing svs-files to create mask–original image pairs.html”).

Figure 2.

Preprocessing data for training. (A) marking of the svs file in Aperio ImageScope, (B) obtaining a histological image tile with a nerve at specified coordinates, resizing to 224 × 224 pixels; (C) normalisation of the histological image; (D) binary segmentation mask.

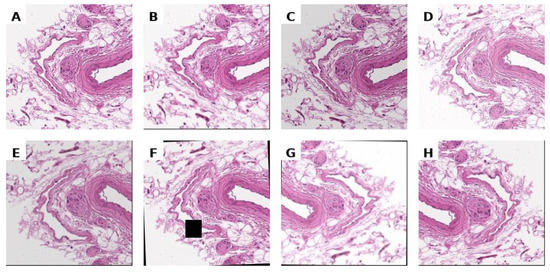

To increase the diversity of the training dataset and mitigate overfitting, we applied real-time data augmentation using the Albumentations library. The augmentation pipeline comprised: (1) geometric transformations, including random horizontal and vertical reflections as well as small-angle rotations up to ±15°; (2) photometric modifications, specifically random brightness and contrast adjustments; and (3) morphological distortions via elastic deformations and coarse dropout (random patch removal). All transformations were applied stochastically during training, ensuring that each input image was potentially unique across epochs. This strategy improved model robustness to variability in tissue morphology, staining, and acquisition artefacts without requiring duplication of the original samples. Examples of images after the application of augmentation are shown in Figure 3.

Figure 3.

Unnormalized histological image (A) and its arbitrary augmentations (B–H).

Three neural network architectures were employed for the segmentation of nerve fibres in histological images: VGG-UNet, FabE-Net, and SegFormer. The models were trained on a graphics processing unit (GPU) with 15 GB of RAM, using interactive environments provided by Kaggle and Google Colab (Python 3.13). The computational framework was primarily based on PyTorch 2.8 and TensorFlow 2.20 for deep learning. Data preprocessing and augmentation utilised Albumentations 0.0.10, OpenCV 4.12, scikit-image 0.25, and PIL 11.3. Additional numerical and utility operations were implemented with NumPy 2.3, scikit-learn 1.7, tqdm 4.67, and the Python standard library (os). Whole-slide image handling was performed with OpenSlide 3.4. Visualization and plotting relied on Matplotlib 3.10 and Seaborn 0.13.

2.1. The Model VGG-UNet

The VGG-UNet model is based on a modified U-Net architecture incorporating a VGG-type encoder. It accepts a 224 × 224 × 3 colour image as input and outputs a 224 × 224 × 1 binary mask representing the predicted location of nerve fibres.

The encoder is implemented using a pre-trained VGG-16 convolutional network comprising five blocks, each containing 2–3 Conv2D layers with ReLU activation, followed by a MaxPooling2D layer for progressive spatial downsampling. The encoder’s weights are initialised with parameters pre-trained on ImageNet, which accelerates training and enhances segmentation performance when working with limited datasets.

The decoder mirrors the encoder’s structure and consists of the following components: transposed convolutions (Conv2DTranspose) for resolution restoration; feature concatenation (skip connections) with the corresponding encoder layers; two consecutive convolutional layers (Conv2D) with BatchNormalization and LeakyReLU activation; and Dropout layers for regularisation.

The final layer of the model is a 1 × 1 convolution with sigmoid activation, which projects the output onto a probability map for the nerve fibre/ganglion class (the model architecture diagram is provided in Supplementary Material, file Architecture of VGG-UNet.png).

The primary loss function was a composite metric designed to account not only for the degree of mask overlap but also for the impact of false positive predictions—an aspect of particular importance when analysing histological images containing fine structures. The overall loss function comprised the following components:

Binary Cross-Entropy (BCE): Measures the local pixel-wise difference between the predicted mask and the ground-truth mask (formulas for all loss functions are available in the Formulas.html in Supplementary Material).

IoU Loss (Jaccard loss): Evaluates the quality of the intersection between the predicted and true regions.

False Positive Penalty: Introduces an additional penalty for false positive pixels, i.e., background tissue incorrectly classified as nerve fibre. The penalty is scaled by a factor of α = 2.5.

The composite loss function used in training is expressed as follows:

The model was trained for 50 epochs using the Adam optimiser with a batch size of 8 and a learning rate of 0.0001. For each epoch, the number of training and validation steps was determined by the size of the respective datasets. Data augmentation was applied exclusively to the training set, while the validation set was used in its original, non-augmented form. A summary script for retraining the model is provided in Supplementary Material (Script for retraining the VGG-UNet model.html), and the original model is available in the same Supplementary Material as VGG-UNet.keras.

2.2. The Model FabE-Net

The FabE-Net model is also based on a modified U-Net architecture, but it incorporates an EfficientNetV2-S encoder and an enhanced decoder. A pre-trained EfficientNetV2-S model (trained on ImageNet) was used as the backbone encoder, from which feature maps at multiple scales—corresponding to downsampling factors of 2, 4, 8, 16, and 32—were extracted.

Between the encoder and decoder, a series of contextual perception enhancement modules were introduced, including: (1) a Global Context Block, which aggregates global contextual information using attention mechanisms, and (2) an Atrous Spatial Pyramid Pooling (ASPP) module with dilation rates of 1, 6, 12, and 18 to capture features across multiple spatial scales.

The decoder consists of four hierarchically organised spatial resolution recovery blocks, each combining upsampled features with corresponding encoder features via skip connections. Each decoder block is augmented with a Convolutional Block Attention Module (CBAM), which sequentially applies channel attention and spatial attention to adaptively select informative features. In the final stage, a convolutional block followed by bilinear interpolation is applied to restore the output to the original image size. The model architecture diagram is provided in Supplementary Material (Architecture of FabE-Net.png).

To optimise training, a composite loss function was employed, combining the following components:

- Focal Tversky Loss: A modification of the Tversky function designed to emphasise difficult-to-classify pixels, with additional scaling for the positive (foreground) class.

- Balanced Dice Loss: A balanced variant of the Dice loss that accounts for the relative weight of the foreground class.

- Foreground Focal Loss: A modified binary cross-entropy incorporating a focusing factor and weight amplification for positive-class pixels.

- False Positive Penalty: A soft penalty applied to false positive segmentations.

The total loss function was defined as a weighted combination of these components:

The model was trained using the AdamW optimiser with an initial learning rate of 0.0001 and a batch size of 8, over 50 epochs. The Dice coefficient, Intersection over Union (IoU), and Recall were used as evaluation metrics to assess the completeness of target object segmentation. A summary script for retraining the model is provided in Supplementary Material (Script for retraining the FabE-Net model.html), and the original model is available in the same Supplementary Material as FabE-Net.pth.

2.3. The Model SegFormer

The SegFormer semantic segmentation model is based on a hierarchical transformer architecture and is designed for precise object extraction in images. In this study, the SegFormer-B2 variant was used, pre-trained on the ADE20K dataset and subsequently fine-tuned for the binary segmentation task.

The encoder is a multi-level transformer based on the Mix-Transformer (MiT), which integrates local and global attention mechanisms to enable effective feature extraction across multiple levels of detail. It comprises several stages, each reducing the spatial resolution of feature maps through patching and convolution operations. The early stages of the model focus on processing local image patches, while the deeper stages construct a global contextual representation, enabling the capture of relationships between spatially distant regions. At each successive stage, the number of channels increases, allowing the model to generate progressively more abstract and semantically rich feature representations.

The decoder is a lightweight and computationally efficient module that aggregates multi-level features from the encoder to produce the final segmentation map. A distinctive feature of the SegFormer decoder is the absence of complex upsampling blocks with trainable parameters; instead, it employs simple concatenation and convolution operations, thereby reducing computational complexity and accelerating training.

In the output layer, the model generates logits for each class (in this study, two classes: background and object), which are subsequently converted into probabilities via the softmax function. The model architecture diagram is provided in Supplementary Material (Architecture of SegFormer.png).

Model training was guided by a composite loss function consisting of:

The model was trained using the SegTrainer class, built upon the Trainer extension from the Hugging Face Transformers library. This custom class redefined the loss function computation to integrate Dice Loss and implemented quality metric calculations. Optimisation was performed using the Adam algorithm with weight decay (AdamW) and an initial learning rate of 0.0001. Training was conducted for 25 epochs with a batch size of 8.

A summary script for model retraining is provided in Supplementary Material (Script for retraining the SegFormer model.html), and the original model is available in the same Supplementary Material within the SegFormer folder. A comparative summary of the architectural features of the models and hyperparameters is presented in Table 1 and Table 2.

Table 1.

Architectural features of the models.

Table 2.

Training Hyperparameters.

3. Results

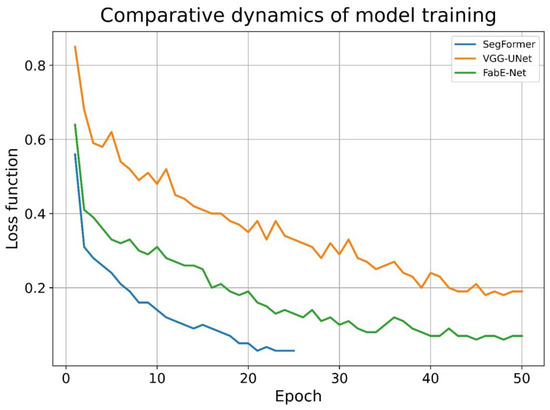

During model training, the convergence rate of gradient descent varied across architectures. For the SegFormer model, stable convergence was achieved between the 20th and 30th epochs, whereas the VGG-UNet and FabE-Net models required substantially more iterations, approximately 45 to 60 epochs. Notably, the SegFormer model exhibited the fastest loss function decay, while the VGG-UNet model demonstrated the slowest (Figure 4).

Figure 4.

The dynamics of the decrease in the loss function value in the models under study.

In addition to differences in convergence dynamics, the models also varied substantially in training time. Using identical computing hardware (CPU, 15 GB RAM), the time required for one epoch—comprising approximately 75,000 image pairs—depended on the architecture: for VGG-UNet, training one epoch took an average of ~20 min; for FabE-Net, 35–40 min; and for SegFormer, 90–100 min.

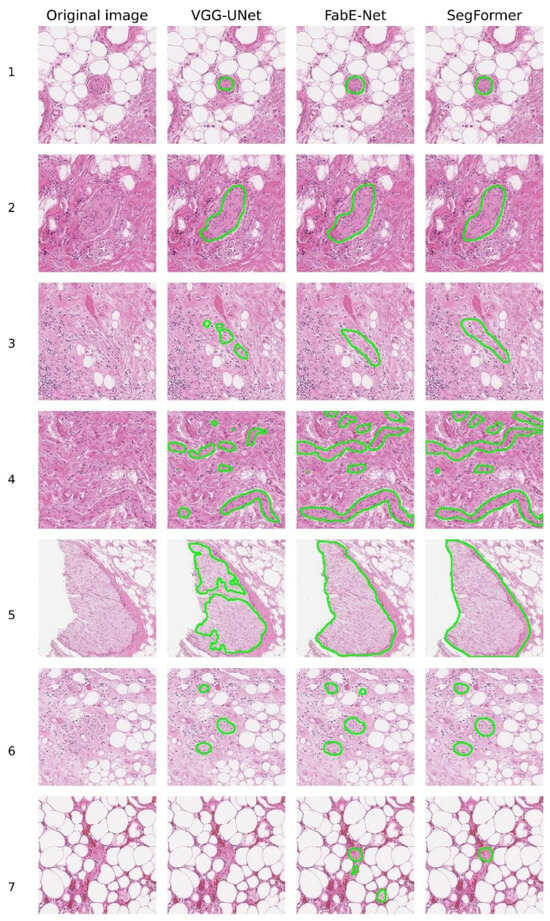

The results of histological image segmentation produced by the different neural network architectures are shown in Figure 5. Qualitative (visual) analysis revealed that the VGG-UNet model often produced incomplete coverage of nerve structures, particularly noticeable in rows 3, 4, and 5. In addition, in some cases (e.g., rows 4 and 7), false-negative segmentation occurred, where nerve fibres were not detected and highlighted by the model.

Figure 5.

Examples of nerve fibre segmentation by different NNs.

When comparing the FabE-Net and SegFormer models, it was found that the FabE-Net model is characterised by a more pronounced tendency towards segmentation errors. Among the most frequently observed errors are excessive segmentation, i.e., the selection of a wider area than the actual location of the nerve fibre (e.g., line 2), as well as false positives, where there was no nerve, but the model mistakenly classified other tissues as nerve structures. A typical example is line 7, where FabE-Net recognised macrophages and adipose tissue as nerve tissue.

The image shown in line 4 deserves special attention, as it proved difficult to interpret by all the models under consideration (Figure 5). In this case, all three architectures (VGG-UNet, FabE-Net, and SegFormer) misinterpreted the area of fibrous tissue located in the centre-left region of the image as a nerve fibre. It is also worth noting the time spent on labelling nerve fibres on a complete histological section in svs format with a size of ~600 MB. For the VGG-UNet model, complete marking of the section took about 45 min, for the FabE-Net model—approximately 20 min, while the SegFormer model completed the marking in about 13 min.

To evaluate the effectiveness of the segmentation models, they were retrained on a specialised dataset containing seven myocardial scans, followed by the generation of 5600 labelled images. From this array, 100 images were randomly selected for expert validation so that 60% of the images contained labelled nerve fibres and 40% did not. It is important to note that the selected data was not used during the model training phase.

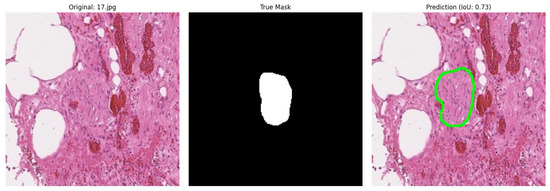

During the automatic analysis of the correspondence between expert annotation and model predictions, a significant problem was discovered: in cases where the model correctly identified nerve fibres according to experts, the quantitative metrics (IoU, Dice, etc.) showed low values. This contradiction was explained by the incomplete coincidence of predictions with the original annotation (Figure 6).

Figure 6.

Example of incorrect metric calculation (IoU) during model validation.

To objectively resolve this issue, a group of four independent pathologists who had not participated in the initial marking was brought in. The experts were presented with a series of marked images for evaluation according to the following criteria: Optimal segmentation (complete and accurate nerve isolation); Suboptimal segmentation (partial isolation of the nerve); False positive segmentation; False negative segmentation; True negative segmentation.

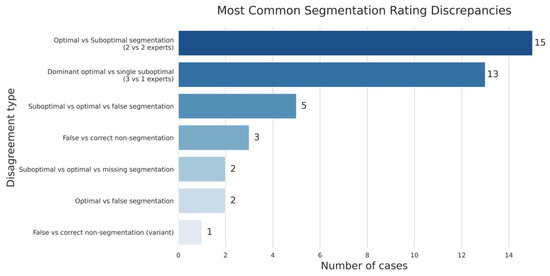

The results of the expert validation revealed significant discrepancies in the assessment of segmentation quality. Only in 55% of cases was there complete agreement among all experts, while in 45% of cases their opinions differed. The most frequent source of disagreement (28 out of 45 cases, or 62% of discrepancies) was the subjective boundary between optimal and suboptimal segmentation of nerve fibres.

Of particular note is the fact that in 17% of cases, the experts demonstrated fundamentally different interpretations–some specialists identified nerve structures in the image, while others denied their presence (Figure 7). This indicates the existence of diagnostically challenging cases where even experienced pathologists cannot reach a consensus on the presence of nerve fibres on histological sections without additional immunohistochemical staining.

Figure 7.

The spectrum of disagreement in expert assessment of nerve fibre segmentation.

To assess inter-expert reliability of the annotations, we calculated agreement metrics across 100 images scored by four independent raters on a 5-point ordinal scale. For each image, individual ratings were tabulated into category frequency distributions, which were then used to compute Fleiss’ κ as a measure of overall categorical agreement and Krippendorff’s α (ordinal) to account for the ordered nature of the scale. Bootstrap resampling (2000 iterations) was employed to estimate 95% confidence intervals. In addition, we quantified the mean observed agreement across all items and the proportion of images with high within-item agreement (Pi ≥ 0.80). The analysis demonstrated substantial agreement between experts: Fleiss’ κ was 0.657 [95% CI: 0.573–0.735], Krippendorff’s α (ordinal) was 0.928 [95% CI: 0.897–0.952], the mean observed agreement was 0.768 and 61% of images showed high within-item agreement (Pi ≥ 0.80).

To objectively evaluate the performance of various deep learning architectures, a comprehensive analysis of three models was conducted: VGG-UNet, FabE-Net, and SegFormer. The comparison was based on standard segmentation quality metrics, including precision, recall, F1-score, and accuracy, which were calculated as averages based on expert group assessments. The number of true positives was also calculated as the average opinion of the experts.

The study revealed a significant dependence of the evaluation results on the selected segmentation interpretation criteria. When using the most stringent criteria, where only cases of optimal segmentation were considered true positives, the SegFormer model showed the best results, demonstrating satisfactory performance. At the same time, the VGG-UNet and FabE-Net models showed unsatisfactory results under these conditions, indicating their limited effectiveness with this approach to evaluation.

However, the situation changed dramatically when milder evaluation criteria were applied, when both optimal and suboptimal segmentation were considered true positives. In this case, all models showed a significant improvement in metrics, but the degree of this improvement varied considerably. The greatest increase in performance was observed in the VGG-UNet model, which indicates its tendency towards partial rather than complete segmentation of nerve fibres. This conclusion was further confirmed by visual analysis of the model’s results. In contrast, SegFormer showed the smallest increase in metrics when the evaluation criteria were changed, indicating its ability to provide complete segmentation more often. The FabE-Net model occupied an intermediate position, demonstrating good segmentation quality indicators overall, but at the same time, slightly more often than SegFormer, providing partial segmentation of nerve structures (Table 3).

Table 3.

Training metrics of the models under investigation depending on the evaluation strategy.

4. Discussion

The segmentation of nerves in histological images is an important area of pathomorphological research with a wide range of practical applications, from the routine detection of perineural tumour invasion, which in some cases is considered a significant independent prognostic factor [4], to fundamental studies of organ innervation in various pathological conditions and after denervation interventions [5,6]. This method is also used to reconstruct anatomically accurate three-dimensional models of nerve fibres [7,8], which currently involves significant difficulties associated with the need for laborious manual or semi-automatic segmentation and complex comparison of the location of fibres between different levels of histological sections.

Such technologies are particularly useful in the analysis of large experimental datasets, including studies aimed at developing new approaches to the treatment of pulmonary arterial hypertension (PAH)—a severe progressive disease characterised by remodelling of the pulmonary arteries, increased pressure in the pulmonary circulation, development of chronic pulmonary heart disease and high mortality [9,10]. Since there is currently no complete cure for PAH, and existing methods of treatment either affect only individual links in the pathogenesis or involve heart and lung transplantation [11,12,13], the phenomenon of hyperactivation of the sympathetic nervous system (SNS) in PAH is of particular interest [14,15].

The development of pulmonary artery denervation methods aimed at removing periarterial nerve structures in order to reduce pressure in the pulmonary circulation is a promising direction for treatment [16,17,18]. Endovascular access with preliminary mapping of nerve structures appears to be the most promising [19]. Experimental work in this area includes detailed mapping of pulmonary artery nerve structures in animal models and humans [20,21], but there are no comprehensive studies in humans in normal conditions and with PAH.

A promising approach to solving this problem is the automation of the identification of periarterial nerve structures using artificial intelligence methods, which is particularly relevant when using large datasets obtained both by standard staining methods and by immunohistochemistry.

U-Net-like architectures traditionally occupy a central place in medical imaging, especially when solving problems of histological image segmentation. Their efficiency, resistance to data variability, and wide testing in medical research make them the architectures of choice for such tasks [22,23,24].

With the development of visual transformer architectures (Vision Transformers), their capabilities have been actively studied in medical segmentation tasks. MiT (Mix Transformer) family networks have shown high efficiency in the analysis of histopathological images [25,26]. The self-attention mechanism in transformers allows modelling the global context, identifying dependencies between distant areas of the image and overcoming the local limitations of convolutional networks [27]. This ability to form a holistic view of an image is especially important for histopathology, where spatial relationships between structures and the context of morphological features are critical [28]. However, the use of transformers in this field is still rare, and the results remain controversial. In particular, Yıldız S. et al. showed that combining U-Net-like architectures with Vision Transformer components can yield worse results compared to the classic U-Net [29]. At the same time, a study by Tsiporenko, I. et al. demonstrated that modified transformer architectures, such as SWIN-Supernet, can significantly improve the quality of histological image segmentation compared to U-Net [30].

Our research confirms the hypothesis that attention mechanisms play a key role in achieving high segmentation results. Thus, the FabE-Net architecture, which uses a series of blocks to improve contextual perception, has shown an advantage over the classic U-Net, emphasising the importance of attention layers and multi-level feature processing.

In addition, the SegFormer model also demonstrated superiority over U-Net-like architectures not only in terms of segmentation quality but also in terms of computational efficiency. Thanks to fewer parameters, SegFormer requires fewer resources and reduces the processing time of a complete histological image by 3–3.5 times.

The data obtained allow us to hypothesise that in the tasks of segmenting histopathological images, attention mechanisms play a role not only in integrating the global context but also in compensating for the high morphological variability of tissues. In conditions where the structure and location of cellular elements can vary significantly even within a single pathology, the ability of the model to adaptively redistribute the weight of features depending on their contextual significance becomes a critical factor in segmentation accuracy.

To speed up the segmentation process, we scaled the original images from 1024 × 1024 to 224 × 224 pixels, which significantly reduced the computational load and accelerated processing without losing key morphological features, as confirmed by visual quality control. This approach optimises the performance of models on real data and meets the input requirements of most modern CNN architectures. To our knowledge, such systematic use of reducing the resolution of histological slide tiles in the context of deep learning for segmentation has not yet been described in the literature, which makes the proposed method innovative.

All models considered in the study—including SegFormer, FabE-Net, and VGG-UNet—are available in the Supplementary Material to the article. They can be freely used for retraining and adaptation to new segmentation tasks, as well as partially applied as components for building model ensembles to further improve segmentation quality and reliability of results.

A comparative analysis of three architectures—VGG-UNet, FabE-Net, and SegFormer—showed a clear advantage of the SegFormer model in the task of segmenting nerve structures in histological images. Under strict evaluation criteria, which considered only cases of optimal segmentation as true positives, SegFormer demonstrated the best results across all key metrics (precision, recall, F1-score, accuracy), significantly outperforming VGG-UNet and FabE-Net. Unlike competing architectures, SegFormer performed partial segmentation less frequently and more often provided complete and accurate extraction of target structures, as confirmed by the minimal increase in metrics when switching from strict to more lenient evaluation criteria. This result indicates the model’s high resistance to interpretation variations and its ability to form masks that are more consistent with experts.

The data obtained also revealed a key problem in the segmentation of histological images: the high dependence of the results on the quality of the initial labelling. Expert validation showed that even among experienced pathologists, the level of complete agreement on optimal segmentation is only 55%, with 17% of cases showing fundamental differences in the interpretation of the presence of nerve structures. This emphasises that errors and variability at the annotation stage can significantly affect training samples and, as a result, the final quality of deep learning models. It is important to emphasise that the partial agreement among pathologists reflects not only the inherent subjectivity of histological interpretation but also the methodological limitations of annotation practices. While our dataset relied on expert labelling, the observed discrepancies highlight the potential benefits of consensus-based approaches, where annotations are harmonised across multiple pathologists to minimise individual bias.

It is important to note that even experienced pathologists do not always agree when evaluating the same histological structures. For example, one study noted that the percentage of agreement varied from weak to moderate, especially when analysing micrometastases and small clusters of tumour cells [31]. Significant discrepancies were also observed when counting mitoses in breast tissue—even at the level of individual mitotic figures, where two-thirds agreement among experts was often not achieved [32]. The data indicate that subjective interpretation characteristics, as well as the quality of micro-preparations—including sections, staining, and visualisation—have a particularly critical impact on the accuracy of diagnosing fine structures such as small nerve fibres.

It is also important to note that standard segmentation metrics do not always capture the clinical acceptability of results. For nerve fibres in particular, the precise delineation of boundaries is often limited by preparation artefacts (e.g., tissue tears), endoneurial oedema, or partial loss of the specimen beyond the glass slide. These factors frequently explain cases of partial disagreement among experts, where quantitative metrics produced relatively low scores despite the segmentation being judged as clinically meaningful. Future evaluation frameworks should therefore combine conventional quantitative metrics with consensus-based expert scoring systems to provide a more accurate assessment of clinical relevance.

One promising way to improve the accuracy and consistency of annotation is to use immunohistochemical (IHC) staining to create annotations. The proposed approach can be implemented as follows:

- Two consecutive sections of the same tissue area are digitised—the first with immunohistochemical staining (IHC) for markers specific to the structures of interest (e.g., S100 nerve fibres), the second with the standard haematoxylin-eosin (H&E) method.

- Precise registration (alignment) of the images of the two sections is performed, taking into account microdeformations of the tissue.

- Information about the location of structures identified on the IHC slide is transferred to the corresponding H&E slide.

- The resulting mask is used as a reference label for training segmentation models.

This method minimises subjective errors by annotators and creates the most accurate and reproducible training samples possible. Given the demonstrated superiority of SegFormer over other architectures studied, the integration of these models based on visual transformer architecture with IHC-annotated datasets appears particularly promising. This is likely to lead to the formation of a new standard for the quality of histological image segmentation, increased reproducibility of results, and simplified clinical interpretation, which is particularly important for the development of reliable decision support systems in pathomorphology.

Most previously published studies addressed narrower segmentation tasks, such as identifying myelin, axons, or specific cortical cells, which differ conceptually from our approach focused on nerve fibre segmentation in heterogeneous histological material. Therefore, direct comparison is not entirely appropriate. Our SegFormer model nevertheless demonstrated strong performance (Precision = 0.84, Recall = 0.99, F1 = 0.91, Accuracy = 0.89 for optimal + suboptimal segmentation), clearly surpassing the VGG-UNet and FabE-Net baselines.

By contrast, A. Rasool et al. reported only accuracy values (98–94%), without external expert validation and with signs of annotation inconsistencies [33]. D. Tovbis et al. showed excellent precision, recall, and F1 (~0.91) in fascicle detection, though segmentation was only a secondary step and the dataset was relatively simple [34]. D. Ono et al. achieved high AP and IoU in sural nerve biopsies, but the material was homogeneous and did not involve complex tissue background [35]. Finally, M. M. Fraz demonstrated strong results with FABnet (IoU = 78.4, Dice = 87.9, Accuracy = 96.3), yet their task was restricted to oral cavity tissue, limiting generalizability [36]. Compared to these works, our results highlight the robustness of SegFormer in more challenging and diverse histological settings, supported by evaluation across multiple complementary metrics rather than accuracy alone.

5. Conclusions

Attention mechanisms in NN probably play a key role in compensating for the high morphological variability of tissues, ensuring more accurate and consistent segmentation of structures in histological images. In our study, the SegFormer model demonstrated superiority in both nerve fibre segmentation quality and processing speed, significantly outperforming the VGG-UNet and FabE-Net architectures.

The results also highlight the significant impact of annotation variability and subjectivity on the model training process. In this regard, the use of immunohistochemically informed annotation appears to be a promising direction for improving segmentation accuracy and reproducibility.

In addition, we have shown that scaling input images from 1024 × 1024 to 224 × 224 pixels significantly speeds up both the training and mask prediction stages without compromising segmentation quality. This approach can serve as an effective tool for optimising computational resources and is recommended for implementation when developing and training your own histological image segmentation models.

Study Limitations

A key limitation of this study is the variability in expert annotations. Even among experienced pathologists, complete agreement on the presence or absence of nerve structures was not consistently achieved, and in some cases, fundamental differences in interpretation were observed. This variability inevitably influences the quality of the training data and, consequently, the performance of deep learning models. Future work should consider strategies to mitigate this limitation, including the use of consensus annotations from multiple experts and the integration of immunohistochemistry-based labelling, which may provide more objective reference standards. Although the dataset contained more than 75,000 image–mask pairs, it was ultimately derived from only 64 histological sections obtained from a limited range of tissue types. This restriction may limit the diversity of morphological patterns represented in the training data and raises the possibility of dataset-specific biases. Consequently, the current models should be interpreted with caution when applied to histological images obtained under different staining protocols, tissue origins, or imaging systems. Future work will therefore require external validation on independent datasets encompassing a wider variety of stains, tissue types, and acquisition modalities to confirm the generalizability and robustness of the proposed approach.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/diagnostics15182408/s1, Script for preprocessing svs-files to create mask–original image pairs.html.

Author Contributions

Conceptualization, I.M.; methodology, I.M.; software, I.M.; validation, I.M., L.M., A.P., E.K. and G.B.; formal analysis I.M., E.K., G.B. and A.P.; investigation, I.M.; resources, I.M., E.K. and T.M.; data curation, I.M.; writing—original draft preparation, I.M. and E.K.; writing—review and editing, I.M., A.S., D.K., T.M. and L.M.; visualisation, I.M. and G.B.; supervision, L.M., A.S. and D.K.; project administration, L.M., A.S. and D.K.; funding acquisition, L.M., A.S. and D.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was financially supported by the Ministry of Science and Higher Education of the Russian Federation in the framework of a scientific project under agreement No. 075-15-2022-1110.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics Committee of the Almazov National Medical Research Centre (Protocol No. 10-22 dated 3 October 2022).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The original contributions presented in this study are included in the article/Supplementary Material. Further inquiries can be directed to the corresponding author.

Acknowledgments

During the preparation of this manuscript, the authors used ChatGPT (GPT-5, OpenAI, San Francisco, CA, USA) to correct grammatical errors and improve the clarity and quality of the English language. In addition, ChatGPT was employed to generate supporting HTML code used for Supplementary Materials (Formulas.html), which are provided as a Supplementary file to the article. All scientific content, interpretations, and conclusions were developed independently by the authors. It is drawn up in accordance with the position of the “GAMER statement”.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Sjöström, P.J.; Frydel, B.R.; Wahlberg, L.U. Artificial neural network-aided image analysis system for cell counting. Cytometry 1999, 36, 18–26. [Google Scholar] [CrossRef]

- Malon, C.; Miller, M.; Christopher, H.B.; Cosatto, E.; Graf, H.P. Identifying histological elements with convolutional neural networks. In Proceedings of the 5th International Conference on Soft Computing as Transdisciplinary Science and Technology (CSTST ‘08), Cergy-Pontoise, France, 28–31 October 2008; Association for Computing Machinery: New York, NY, USA, 2008; pp. 450–456. [Google Scholar] [CrossRef]

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53. [Google Scholar] [CrossRef]

- Qin, L.; Heng, Y.; Deng, S.; Gu, J.; Mao, F.; Xue, Y.; Jiang, Z.; Wang, J.; Cheng, D.; Wu, K.; et al. Perineural invasion affects prognosis of patients undergoing colorectal cancer surgery: A propensity score matching analysis. BMC Cancer 2023, 23, 452. [Google Scholar] [CrossRef]

- Haider, S.A.; Sharif, R.; Sharif, F. Multi-Organ Denervation: The Past, Present and Future. J. Clin. Med. 2025, 14, 2746. [Google Scholar] [CrossRef]

- Sakaoka, A.; Takami, A.; Onimura, Y.; Hagiwara, H.; Terao, H.; Kumagai, F.; Matsumura, K. Acute changes in histopathology and intravascular imaging after catheter-based renal denervation in a porcine model. Catheter. Cardiovasc. Interv. Off. J. Soc. Card. Angiogr. Interv. 2017, 90, 631–638. [Google Scholar] [CrossRef]

- Das, S.; Gordián-Vélez, W.J.; Ledebur, H.C.; Mourkioti, F.; Rompolas, P.; Chen, H.I.; Serruya, M.D.; Cullen, D.K. Innervation: The missing link for biofabricated tissues and organs. NPJ Regen. Med. 2020, 5, 11. [Google Scholar] [CrossRef] [PubMed]

- Zhong, Y.; Wang, L.; Dong, J.; Zhang, Y.; Luo, P.; Qi, J.; Liu, X.; Xian, C.J. Three-dimensional Reconstruction of Peripheral Nerve Internal Fascicular Groups. Sci. Rep. 2015, 5, 17168. [Google Scholar] [CrossRef]

- Guignabert, C.; Dorfmuller, P. Pathology and pathobiology of pulmonary hypertension. Semin. Respir. Crit. Care Med. 2013, 34, 551–559. [Google Scholar] [CrossRef] [PubMed]

- Sahay, S. Evaluation and classification of pulmonary arterial hypertension. J. Thorac. Dis. 2019, 11 (Suppl. 14), S1789–S1799. [Google Scholar] [CrossRef] [PubMed]

- Vazquez, Z.G.S.; Klinger, J.R. Guidelines for the Treatment of Pulmonary Arterial Hypertension. Lung 2020, 198, 581–596. [Google Scholar] [CrossRef] [PubMed]

- Humbert, M.; Guignabert, C.; Bonnet, S.; Dorfmüller, P.; Klinger, J.R.; Nicolls, M.R.; Olschewski, A.J.; Pullamsetti, S.S.; Schermuly, R.T.; Stenmark, K.R.; et al. Pathology and pathobiology of pulmonary hypertension: State of the art and research perspectives. Eur. Respir. J. 2019, 53, 1801887. [Google Scholar] [CrossRef]

- Coons, J.C.; Pogue, K.; Kolodziej, A.R.; Hirsch, G.A.; George, M.P. Pulmonary Arterial Hypertension: A Pharmacotherapeutic Update. Curr. Cardiol. Rep. 2019, 21, 141. [Google Scholar] [CrossRef]

- Ameri, P.; Bertero, E.; Meliota, G.; Cheli, M.; Canepa, M.; Brunelli, C.; Balbi, M. Neurohormonal activation and pharmacological inhibition in pulmonary arterial hypertension and related right ventricular failure. Heart Fail. Rev. 2016, 21, 539–547. [Google Scholar] [CrossRef]

- Vaillancourt, M.; Chia, P.; Sarji, S.; Nguyen, J.; Hoftman, N.; Ruffenach, G.; Eghbali, M.; Mahajan, A.; Umar, S. Autonomic nervous system involvement in pulmonary arterial hypertension. Respir. Res. 2017, 18, 201. [Google Scholar] [CrossRef]

- Xie, Y.; Liu, N.; Xiao, Z.; Yang, F.; Zeng, Y.; Yang, Z.; Xia, Y.; Chen, Z.; Xiao, Y. The progress of pulmonary artery denervation. Cardiol. J. 2022, 29, 381–387. [Google Scholar] [CrossRef]

- Garcia-Lunar, I.; Pereda, D.; Santiago, E.; Solanes, N.; Nuche, J.; Ascaso, M.; Bobí, J.; Sierra, F.; Dantas, A.P.; Galán, C.; et al. Effect of pulmonary artery denervation in postcapillary pulmonary hypertension: Results of a randomized controlled translational study. Basic Res. Cardiol. 2019, 114, 5. [Google Scholar] [CrossRef] [PubMed]

- Chen, S.L.; Zhang, H.; Xie, D.J.; Zhang, J.; Zhou, L.; Rothman, A.M.; Stone, G.W. Hemodynamic, functional, and clinical responses to pulmonary artery denervation in patients with pulmonary arterial hypertension of different causes: Phase II results from the Pulmonary Artery Denervation-1 study. Circ. Cardiovasc. Interv. 2015, 8, e002837. [Google Scholar] [CrossRef] [PubMed]

- Goncharova, N.S.; Moiseeva, O.M.; Condori Leandro, H.I.; Zlobina, I.S.; Berezina, A.V.; Malikov, K.N.; Tashkhanov, D.M.; Lebedev, D.S.; Mikhaylov, E.N. Electrical Stimulation-Guided Approach to Pulmonary Artery Catheter Ablation in Patients with Idiopathic Pulmonary Arterial Hypertension: A Pilot Feasibility Study with a 12-Month Follow-Up. BioMed Res. Int. 2020, 2020, 8919515. [Google Scholar] [CrossRef]

- Condori Leandro, H.I.; Lebedev, D.S.; Mikhaylov, E.N. Discrimination of ventricular tachycardia and localization of its exit site using surface electrocardiography. J. Geriatr. Cardiol. JGC 2019, 16, 362–377. [Google Scholar] [CrossRef]

- Vakhrushev, A.D.; Condori Leandro, H.I.; Goncharova, N.S.; Korobchenko, L.E.; Mitrofanova, L.B.; Makarov, I.A.; Andreeva, E.M.; Lebedev, D.S.; Mikhaylov, E.N. Laser renal denervation: A comprehensive evaluation of microstructural renal artery lesions. Anat. Rec. 2023, 306, 2378–2387. [Google Scholar] [CrossRef] [PubMed]

- Copurkaya, C.; Meric, E.; Patlar Akbulut, F.; Catal, C. A multi-pretraining U-Net architecture for semantic segmentation. Signal Image Video Process. 2025, 19, 669. [Google Scholar] [CrossRef]

- Li, J.; Li, X. MIU-Net: MIX-Attention and Inception U-Net for Histopathology Image Nuclei Segmentation. Appl. Sci. 2023, 13, 4842. [Google Scholar] [CrossRef]

- Dostovalova, A.M.; Gorshenin, A.K.; Starichkova, J.V.; Arzamasov, K.M. Comparative analysis of modifications of U-Net neural network architectures in the problem of medical image segmentation. Digit. Diagn. 2024, 5, 833–853. [Google Scholar] [CrossRef]

- Imran, M.; Islam Tiwana, M.; Mohsan, M.M.; Alghamdi, N.S.; Akram, M.U. Transformer-based framework for multi-class segmentation of skin cancer from histopathology images. Front. Med. 2024, 11, 1380405. [Google Scholar] [CrossRef] [PubMed]

- Hörst, F.; Rempe, M.; Heine, L.; Seibold, C.; Keyl, J.; Baldini, G.; Egger, J.; Kleesiek, J. CellViT: Vision Transformers for precise cell segmentation and classification. Med. Image Anal. 2024, 94, 103143. [Google Scholar] [CrossRef] [PubMed]

- Li, X.; Pang, S.; Zhang, R.; Zhu, J.; Fu, X.; Tian, Y.; Gao, J. ATTransUNet: An enhanced hybrid transformer architecture for ultrasound and histopathology image segmentation. Comput. Biol. Med. 2023, 152, 106365. [Google Scholar] [CrossRef]

- Zhao, Y.; Wang, S.; Zhang, Y.; Ren, Y.; Zhai, X.; Wu, W.; Pang, S. ConTNet: Cross attention convolution and transformer for aneurysm image segmentation. In Proceedings of the IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Istanbul, Turkiye, 5–8 December 2023; pp. 3618–3625. [Google Scholar] [CrossRef]

- Yıldız, S.; Memiş, A.; Varlı, S. Segmentation of Cell Nuclei in Histology Images with Vision Transformer Based U-Net Models. In Proceedings of the 32nd Signal Processing and Communications Applications Conference (SIU), Mersin, Turkiye, 15–18 May 2024; pp. 1–4. [Google Scholar] [CrossRef]

- Tsiporenko, I.; Chizhov, P.; Fishman, D. Going Beyond U-Net: Assessing Vision Transformers for Semantic Segmentation in Microscopy Image Analysis. In Computer Vision—ECCV 2024 Workshops, ECCV 2024, Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Del Bue, A., Canton, C., Pont-Tuset, J., Tommasi, T., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2025; Volume 15638. [Google Scholar] [CrossRef]

- van der Zaag, E.S.; Welling, L.; Peters, H.M.; van de Vijver, M.J.; Bemelman, W.A.; Buskens, C.J. Categorization of occult tumour cells in lymph nodes in patients with colon cancer not reliable enough. Ned. Tijdschr. Voor Geneeskd. 2011, 155, A2697. [Google Scholar]

- Malon, C.; Brachtel, E.; Cosatto, E.; Graf, H.P.; Kurata, A.; Kuroda, M.; Meyer, J.S.; Saito, A.; Wu, S.; Yagi, Y. Mitotic figure recognition: Agreement among pathologists and computerized detector. Anal. Cell. Pathol. 2012, 35, 97–100. [Google Scholar] [CrossRef]

- Rasool, A.; Fraz, M.M.; Javed, S. Multiscale Unified Network for Simultaneous Segmentation of Nerves and Micro-vessels in Histology Images. In Proceedings of the International Conference on Digital Futures and Transformative Technologies (ICoDT2), Islamabad, Pakistan, 20–21 May 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Tovbis, D.; Agur, A.; Mogk, J.P.M.; Zariffa, J. Automatic three-dimensional reconstruction of fascicles in peripheral nerves from histological images. PLoS ONE 2020, 15, e0233028. [Google Scholar] [CrossRef]

- Ono, D.; Kawai, H.; Kuwahara, H.; Yokota, T. Automated whole slide morphometry of sural nerve biopsy using machine learning. Neuropathol. Appl. Neurobiol. 2024, 50, e12967. [Google Scholar] [CrossRef] [PubMed]

- Fraz, M.M.; Khurram, S.A.; Graham, S.; Shaban, M.; Hassan, M.; Loya, A.; Rajpoot, N.M. FABnet: Feature attention-based network for simultaneous segmentation of microvessels and nerves in routine histology images of oral cancer. Neural Comput. Appl. 2020, 32, 9915–9928. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).